Vision-Based Autonomous Landing of a Quadrotor on the Perturbed Deck of an Unmanned Surface Vehicle

Abstract

1. Introduction

2. State of the Art

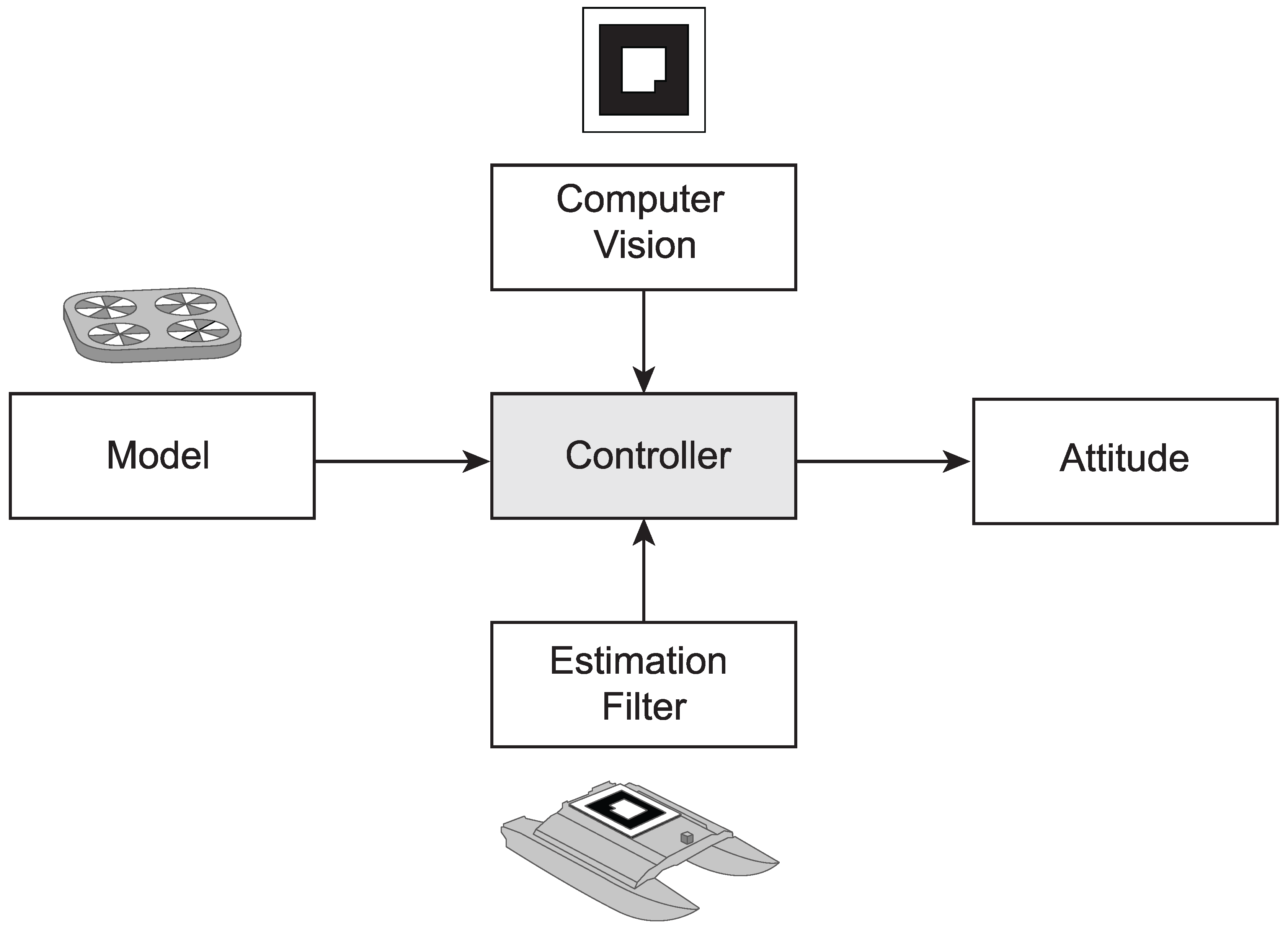

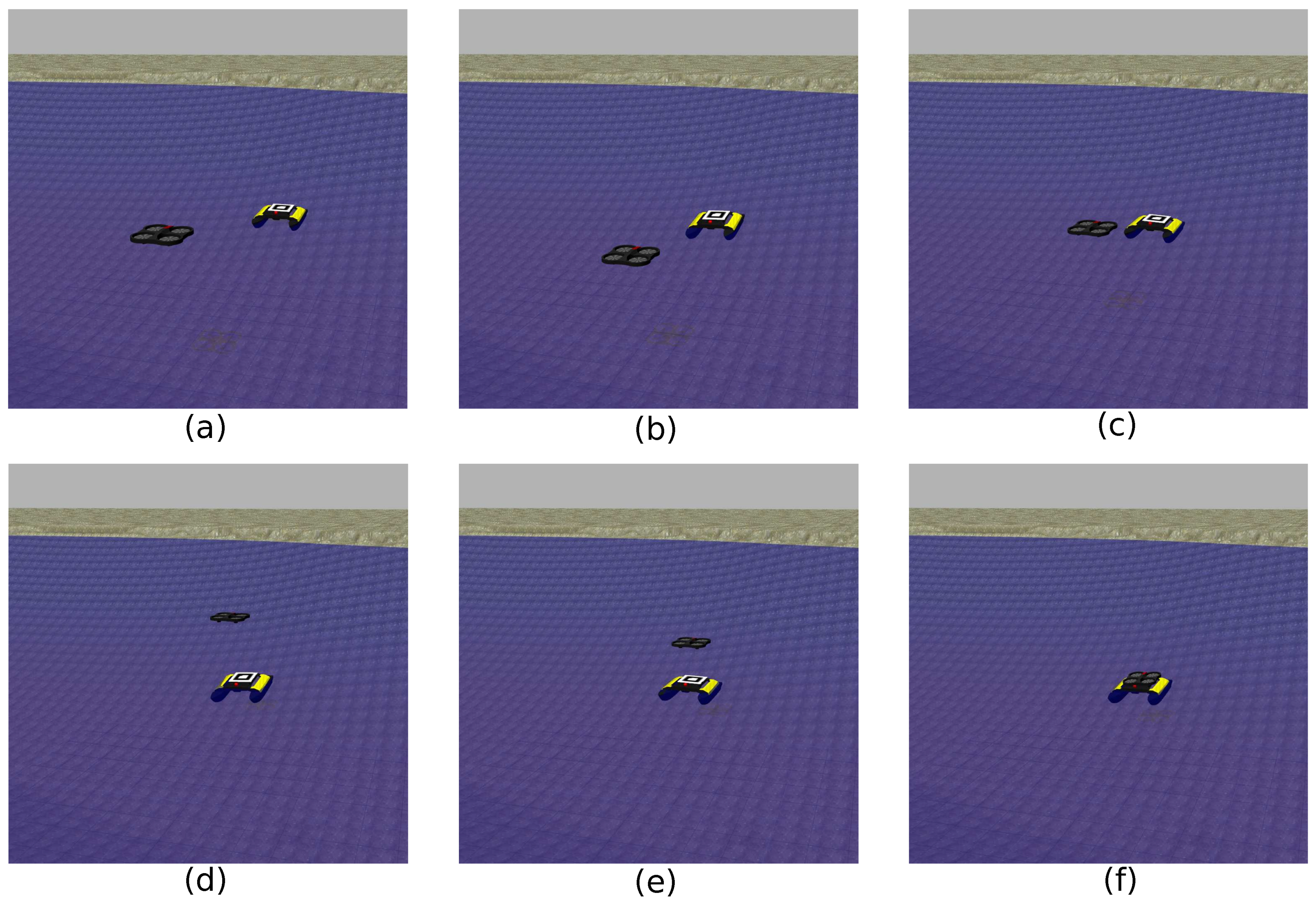

3. Methods

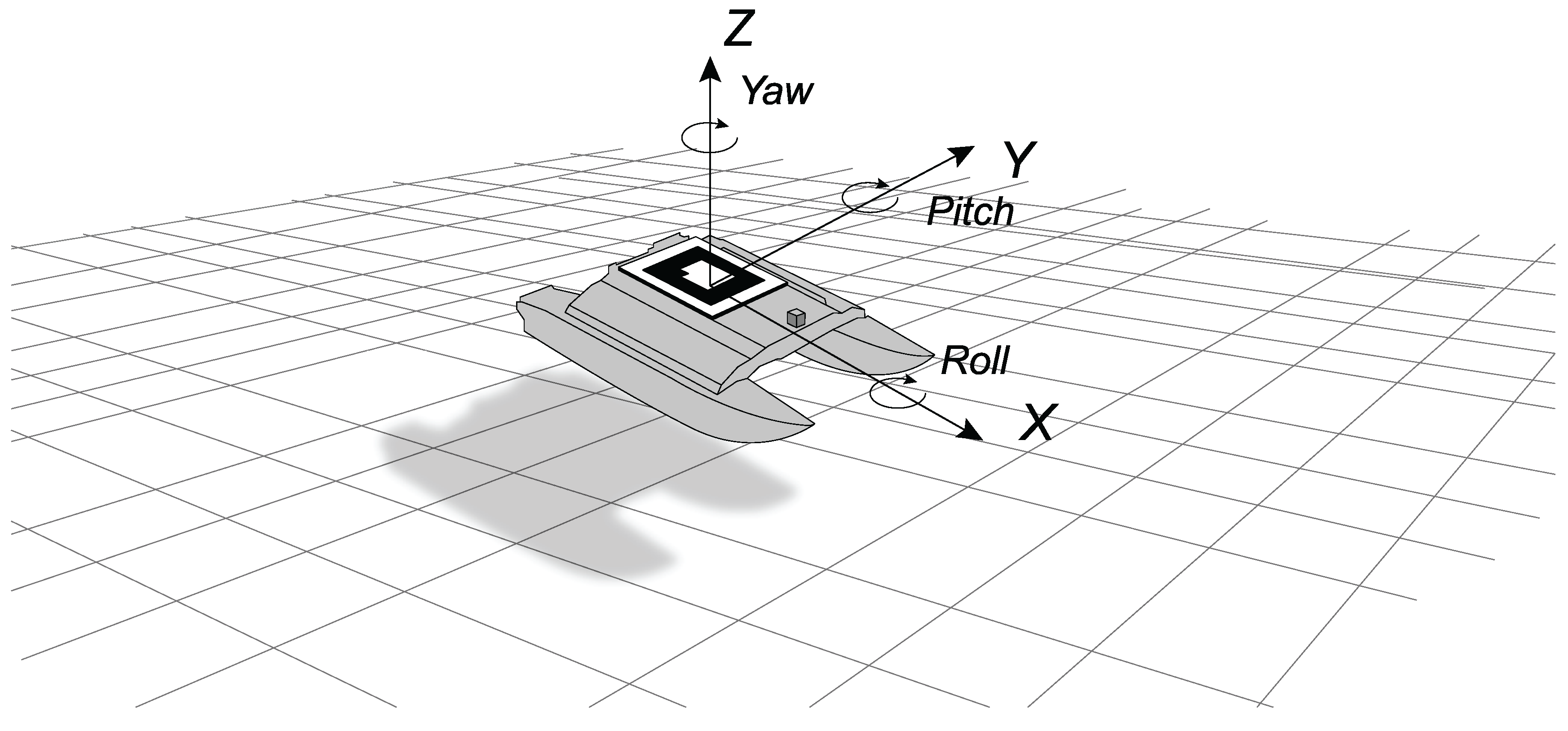

3.1. Quad-Copter Model

- Dimensions: 53 cm × 52 cm (hull included);

- Weight: 420 g;

- IMU, including gyroscope, accelerometer, magnetometer, altimeter and pressure sensor;

- Front-camera with a high-definition (HD) resolution (1280 × 720), a field of view (FOV) of and video streamed at 30 frames per second (fps);

- Bottom-camera with a Quarter Video Graphics Array (QVGA) resolution (320 × 240), a FOV of and video streamed at 60 fps;

- Central processing unit running an embedded version of the Linux operating system;

3.2. Augmented Reality

3.3. Controller

3.4. Pose Estimation

- the filter estimates the USV’s pose at 50 Hz, and each estimate is stored in a hash table keyed by its time stamp;

- when the UAV loses track of the target, the hash table is queried and two records are retrieved: the most recently inserted one (the latest estimate produced by the filter) and the one whose key is the time stamp of the last recorded observation;

- the deck’s current position relative to its position at the last observation is calculated from these two records using the geometric relationship between them;

- the controller commands are updated to include the new relative position.
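The recovery procedure above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a planar deck pose (x, y, yaw), integer nanosecond time stamps as hash-table keys so that look-ups are exact, and the hypothetical names `Pose2D`, `PoseHistory` and `displacement_since`. The "geometric relationship" is assumed here to be the rigid-body transform that expresses the deck's displacement in the deck frame at the last observation.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Pose2D:
    """Hypothetical planar deck pose in the world frame."""
    x: float    # metres
    y: float    # metres
    yaw: float  # radians


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


class PoseHistory:
    """Hypothetical hash table of filter estimates keyed by time stamp (ns)."""

    def __init__(self) -> None:
        self._table: Dict[int, Pose2D] = {}
        self._latest_stamp: Optional[int] = None

    def insert(self, stamp_ns: int, pose: Pose2D) -> None:
        """Store one filter estimate (called at the filter rate, e.g. 50 Hz)."""
        self._table[stamp_ns] = pose
        self._latest_stamp = stamp_ns

    def displacement_since(self, last_obs_ns: int) -> Tuple[float, float, float]:
        """Deck motion since the last observation, in the old deck frame."""
        if self._latest_stamp is None:
            raise LookupError("no filter estimates stored yet")
        old = self._table[last_obs_ns]          # estimate at the last observation
        new = self._table[self._latest_stamp]   # most recent estimate
        dx, dy = new.x - old.x, new.y - old.y
        c, s = math.cos(old.yaw), math.sin(old.yaw)
        # Rotate the world-frame difference into the old deck frame (R^T * d).
        return (c * dx + s * dy, -s * dx + c * dy, wrap_angle(new.yaw - old.yaw))


# Usage: two estimates 20 ms apart (50 Hz), then a look-up after losing the target.
history = PoseHistory()
history.insert(0, Pose2D(0.0, 0.0, 0.0))
history.insert(20_000_000, Pose2D(0.4, 0.1, 0.05))
print(history.displacement_since(0))
```

A real deployment would also need to bound the table size (for example, by evicting records older than a few seconds) and to handle an observation whose time stamp does not coincide exactly with a filter update, which this sketch does not attempt.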

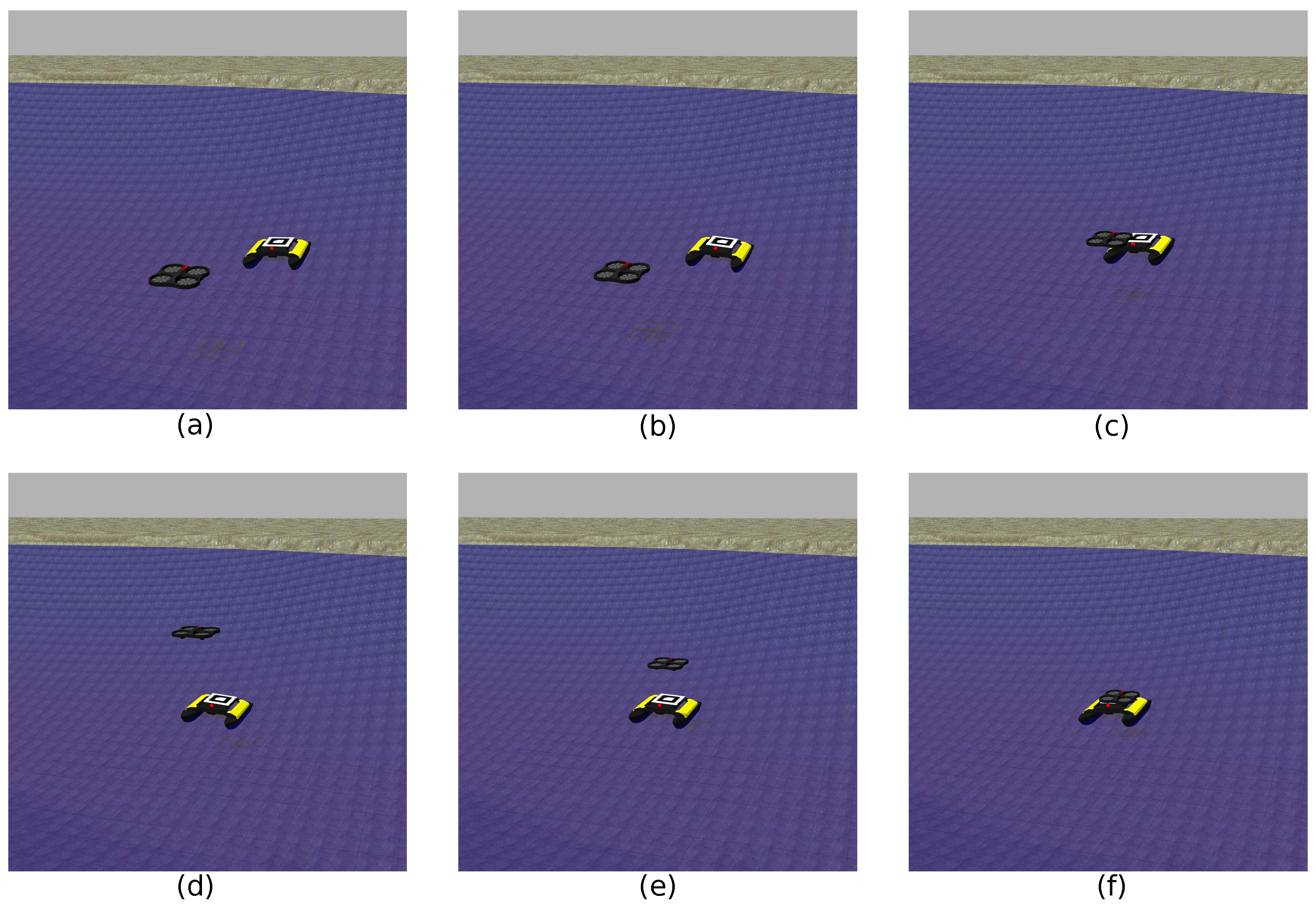

3.5. Methodology

Algorithm 1. Landing Algorithm.

4. Results and Discussion

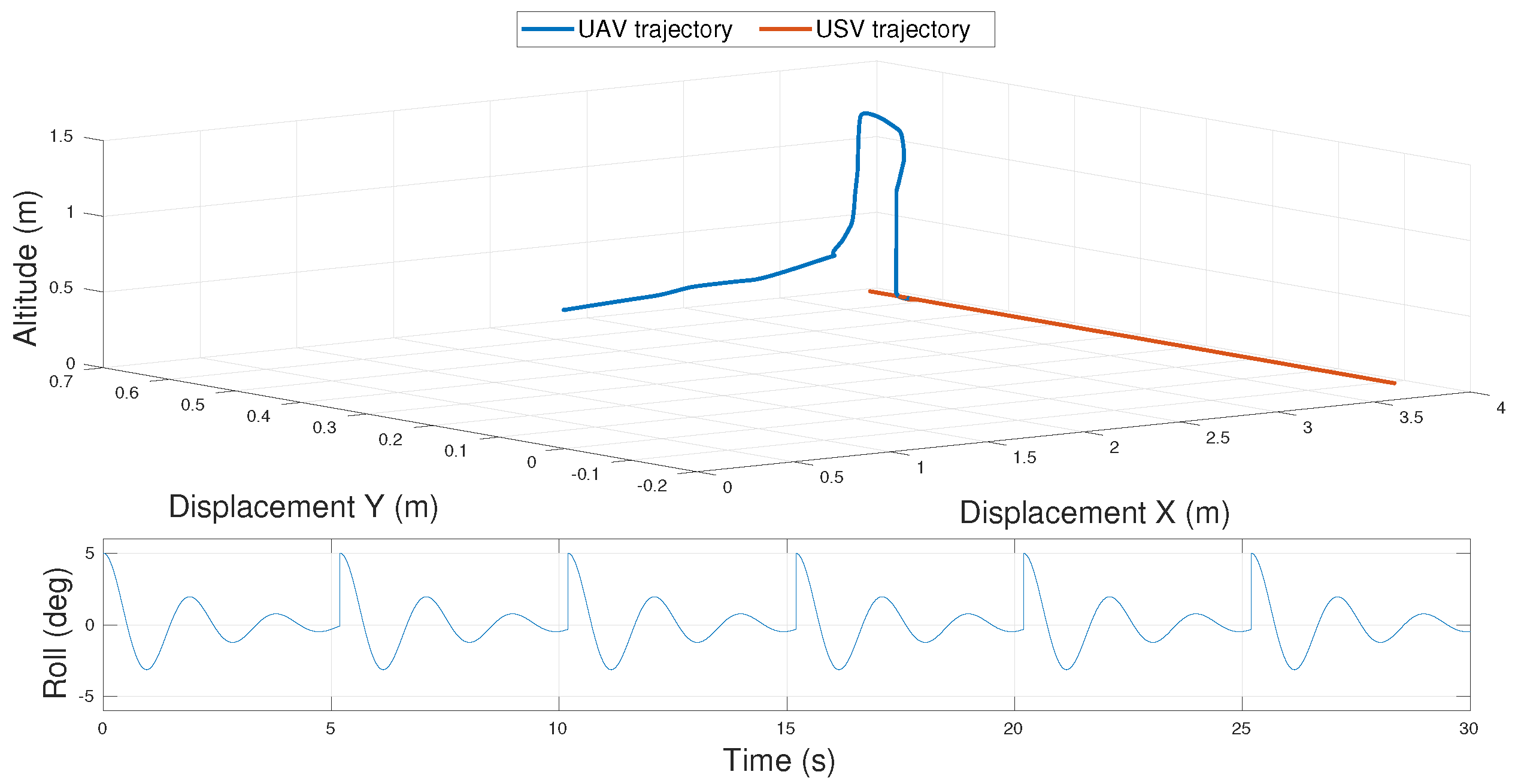

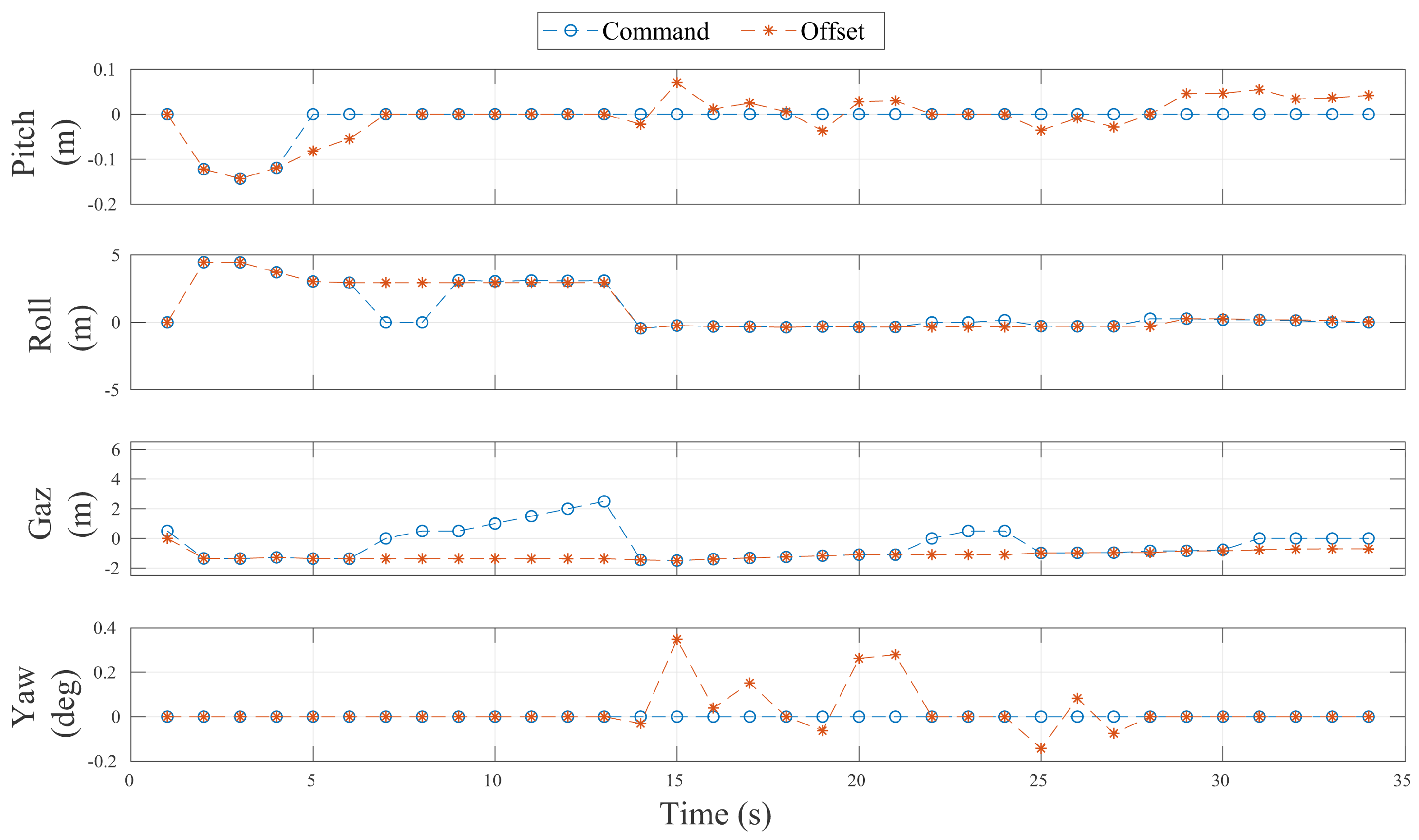

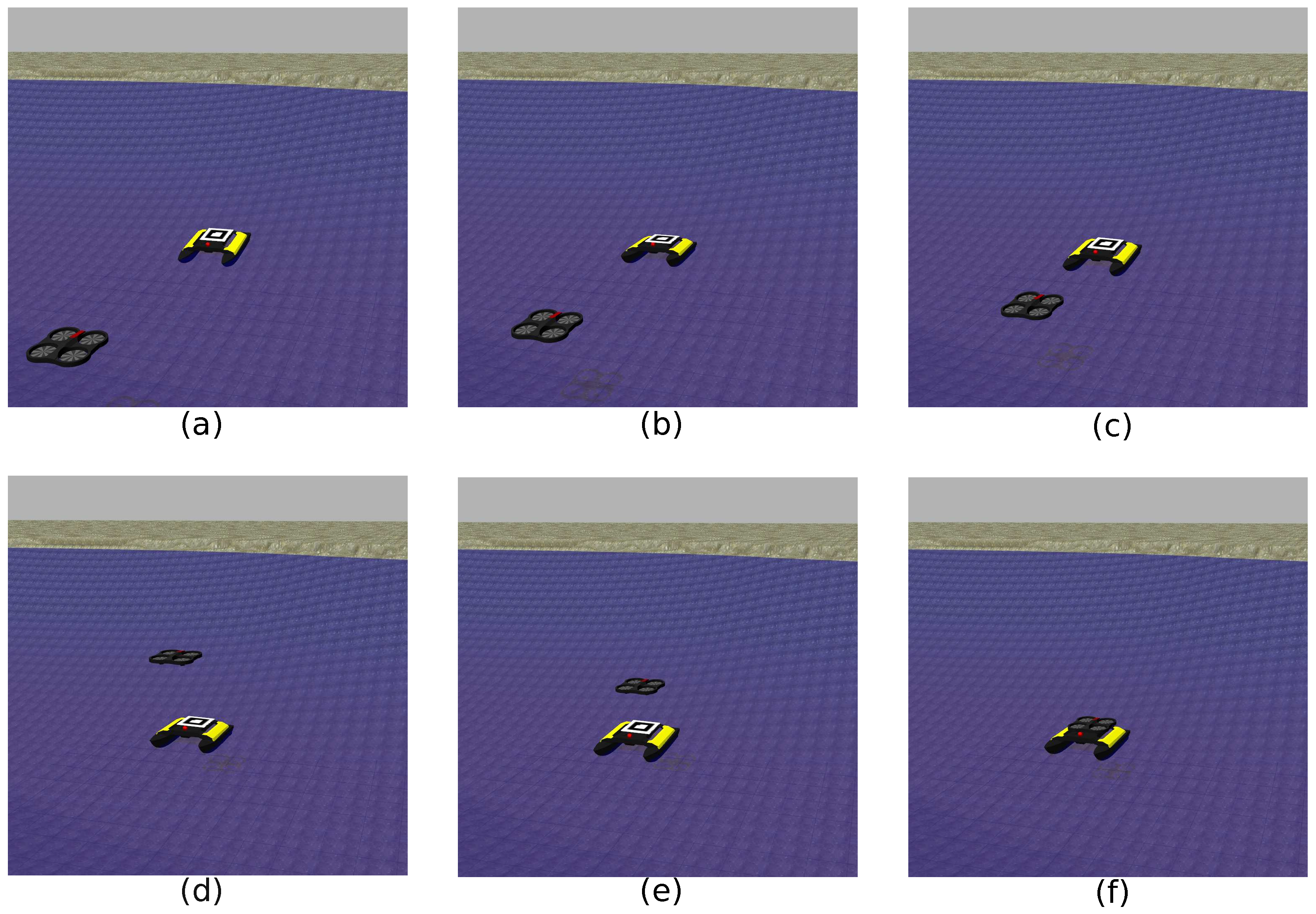

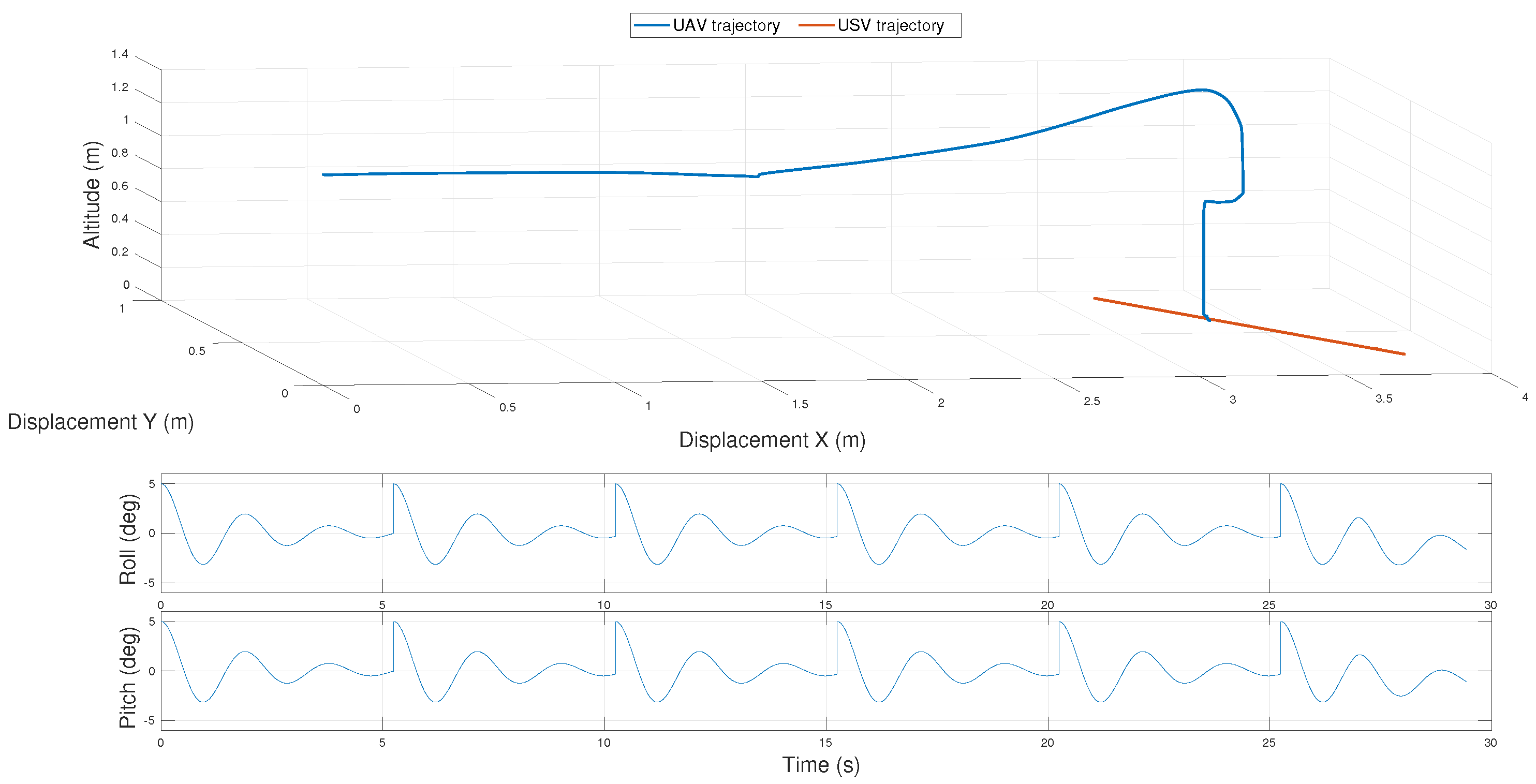

4.1. Rolling Platform

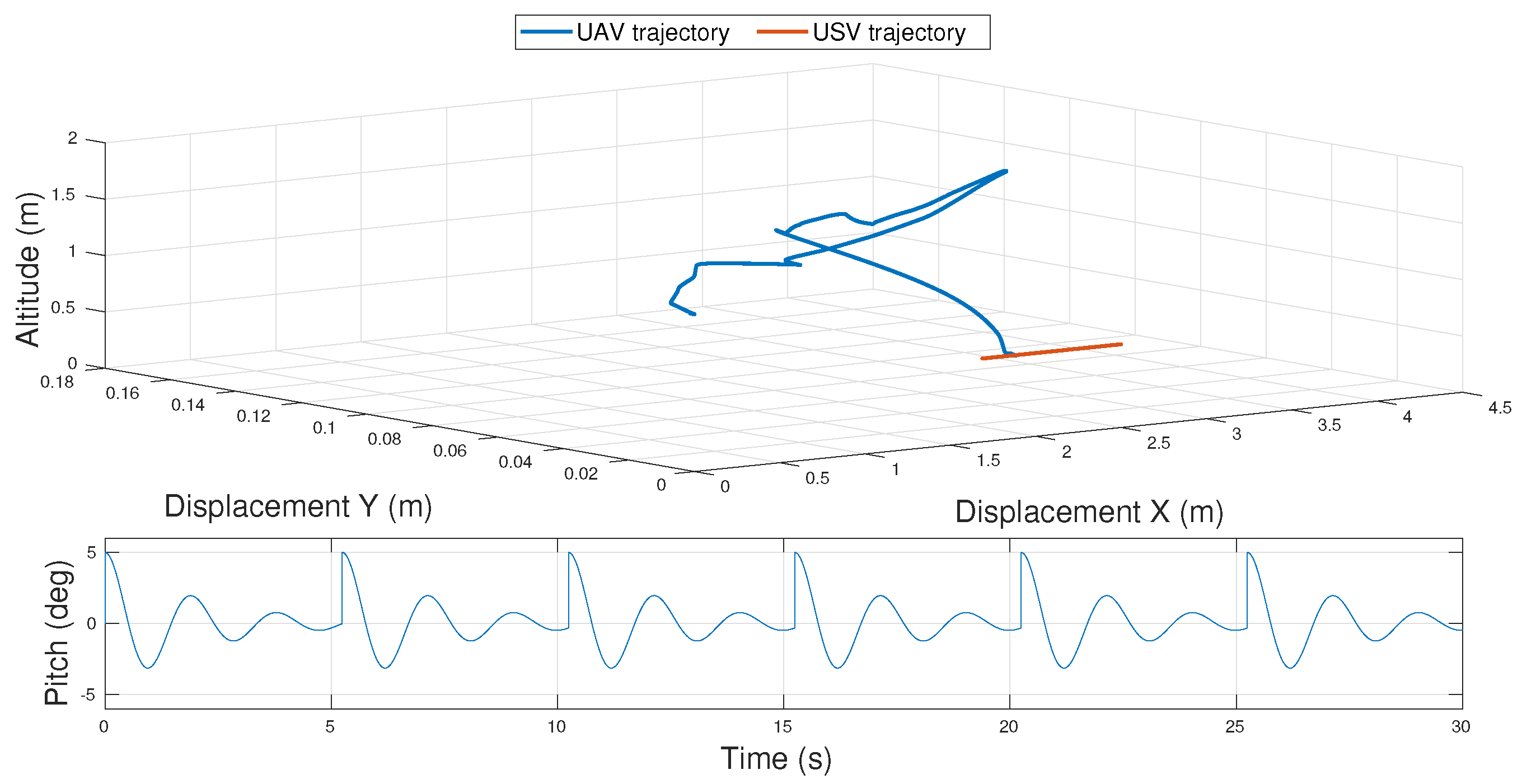

4.2. Pitching Platform

4.3. Rolling and Pitching Platform

5. Conclusions and Future Directions

Author Contributions

Conflicts of Interest

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| K_direct | 5.0 | K_rp | 0.3 |
| droneMass (kg) | 0.525 | max_yaw (rad/s) | 1.0 |
| xy_damping_factor | 0.65 | max_gaz_rise (m/s) | 1.0 |
| max_gaz_drop (m/s) | −0.1 | max_rp | 1.0 |
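The parameter table includes limits on yaw rate, vertical speed and roll/pitch. One plausible way such limits are applied is to saturate each command before it is sent to the vehicle; the Python sketch below illustrates only that saturation step. The interpretation of `max_rp` as a normalised roll/pitch command and the hypothetical `Command`/`saturate` names are assumptions, and the gains `K_direct` and `K_rp` are deliberately not modelled because the text does not state how they enter the control law.

```python
from dataclasses import dataclass

# Limits copied from the parameter table; their exact semantics are assumed.
MAX_RP = 1.0         # assumed normalised roll/pitch command limit
MAX_YAW = 1.0        # rad/s
MAX_GAZ_RISE = 1.0   # m/s, fastest allowed climb
MAX_GAZ_DROP = -0.1  # m/s, fastest allowed descent


@dataclass(frozen=True)
class Command:
    """Hypothetical velocity/attitude command sent to the quadrotor."""
    roll: float
    pitch: float
    yaw_rate: float
    gaz: float  # vertical speed, positive upwards


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def saturate(cmd: Command) -> Command:
    """Apply the per-axis limits; the vertical limit is asymmetric."""
    return Command(
        roll=clamp(cmd.roll, -MAX_RP, MAX_RP),
        pitch=clamp(cmd.pitch, -MAX_RP, MAX_RP),
        yaw_rate=clamp(cmd.yaw_rate, -MAX_YAW, MAX_YAW),
        gaz=clamp(cmd.gaz, MAX_GAZ_DROP, MAX_GAZ_RISE),
    )


print(saturate(Command(roll=2.0, pitch=-3.0, yaw_rate=0.5, gaz=-1.0)))
```

The asymmetry in the table is worth noting: the descent rate is capped at 0.1 m/s, ten times smaller than the 1.0 m/s climb limit, so any aggressive downward request is reduced to a slow descent.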

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Polvara, R.; Sharma, S.; Wan, J.; Manning, A.; Sutton, R. Vision-Based Autonomous Landing of a Quadrotor on the Perturbed Deck of an Unmanned Surface Vehicle. Drones 2018, 2, 15. https://doi.org/10.3390/drones2020015