1. Introduction

As von Neumann [1] pointed out: “It is very likely that on the basis of philosophy that every error has to be caught, explained, and corrected, a system of the complexity of the living organism would not last for a millisecond. Such a system is so integrated that it can operate across errors. An error in it does not in general, indicate a degenerate tendency. The system is sufficiently flexible and well organized that as soon as an error shows up in any part of it, the system automatically senses whether this error matters or not. If it doesn’t matter, the system continues to operate without paying any attention to it… This is completely different philosophy from the philosophy which proclaims that the end of the world is at hand as soon as the first error has occurred.”

All living organisms have developed various degrees of intelligence to manage the business of sensing, modeling, analyzing, predicting, and taking action to maintain system stability and respond to disturbances within the system or in its interactions with the environment. The system has an identity as a unit, a sense of “self,” along with knowledge about its constituent components and their interactions with the environment. The knowledge representations of the information gathered from the various senses through embedded, embodied, extended, and enactive (4E) cognition are normalized to create a global view, which the system analyzes in order to take appropriate action.

While calling this behavior the result of “consciousness” is a risky business, it is appropriate to ask if we could identify the key ingredients that make systems autopoietic and cognitive and if we can infuse them into digital automata to also make them sentient, resilient, and intelligent to a certain degree. As many IT professionals well know, the complexity of troubleshooting when a distributed mission-critical application fails because of local disturbances caused by external or internal factors keeps them awake at night. The application developers and IT operators managing storage, networking, and computer resources collaborate to fix the problem. In addition, could we integrate the knowledge we glean from sub-symbolic computations with the best practices we know from experience, that deal with external disturbances, to predict and mitigate risks in real-time, while maintaining stability, as living organisms do?

Fortunately, three developments are paving the way toward infusing autopoietic and cognitive behaviors into current digital information processing systems:

Our understanding of the limitations of current computing models based on the von Neumann stored program control implementation of the universal Turing machine;

New insights into how biological systems use information processing structures, combining the mammalian neocortex with the reptilian cortical columns, to exhibit autopoietic and cognitive behaviors;

The general theory of information (GTI), which allows us to model information structures in living organisms and also to design a new class of autopoietic and cognitive machines.

In Section 2, we discuss the limitations of current information processing systems in implementing autopoietic and cognitive behaviors. In Section 3, we present a model derived from GTI [2] to discuss both autopoietic and cognitive behaviors using genes and neurons. In Section 4, we discuss the design of new digital automata, called autopoietic machines [3,4,5], with autopoietic and cognitive behaviors using digital genes and digital neurons. In Section 5, we conclude with some observations on current attempts to implement autopoietic machines.

2. Limitations of Current Computing Models to Implement Autopoietic and Cognitive Behaviors

Questioning the Church–Turing thesis (CTT) by pointing out its limitations may be somewhat controversial [6,7,8]. The limitation discussed here, however, concerns only one narrow aspect of the CTT boundaries: the finite nature of the computing resources available for computations at any particular time. All algorithms that are Turing computable fall within the boundaries of the CTT, which states that “a function on the natural numbers is computable by a human being following an algorithm, ignoring resource limitations, if and only if it is computable by a Turing machine”.

Current business services demand non-stop operation, with adjustments to their performance made in real-time to meet rapid fluctuations in demand while utilizing finite available resources. The speed with which the quality of service has to be adjusted to meet these demands is becoming faster than the time it takes to orchestrate the myriad infrastructure components (such as virtual machine (VM) images, network plumbing, application configurations, middleware, etc.) distributed across multiple locations and operated by different agents with different profit motives. It takes time and effort to reconfigure distributed plumbing, which results in increased cost and complexity. The Church–Turing thesis’s boundaries are challenged when rapid non-deterministic fluctuations drive the demand for resource readjustment in real-time without interrupting the service transactions while they are in progress. Autopoietic and cognitive behaviors address this issue.
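The tension between fluctuating demand and finite resources can be made concrete with a small sketch. The code below is only an illustration under stated assumptions and is not taken from the paper: the function names `plan_replicas` and `regulate`, the per-replica throughput, and the replica ceiling are all hypothetical. It shows a regulation step that sizes a pool of service replicas to the observed demand and reports the shortfall once the finite pool is exhausted.

```python
import math


def plan_replicas(demand_rps, per_replica_rps, max_replicas, min_replicas=1):
    """Return the replica count for the demand, clamped to the finite pool.

    All parameters are hypothetical illustration values, not from the paper.
    """
    needed = math.ceil(demand_rps / per_replica_rps)
    return max(min_replicas, min(max_replicas, needed))


def regulate(demand_trace, per_replica_rps=100.0, max_replicas=8):
    """Walk a demand trace and record (demand, replicas, shortfall) per step."""
    history = []
    for demand in demand_trace:
        replicas = plan_replicas(demand, per_replica_rps, max_replicas)
        capacity = replicas * per_replica_rps
        # Shortfall is the demand that the finite pool cannot serve.
        shortfall = max(0.0, demand - capacity)
        history.append((demand, replicas, shortfall))
    return history
```

A fixed rule of this kind only reacts after the demand has been observed. The argument of this section is that such readjustment must additionally happen without interrupting transactions in progress, which a simple planner like this one does not address.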

A more foundational issue is discussed in the book Computation and its Limits [9]: “The key property of general-purpose computers is that they are general purpose. We can use them to deterministically model any physical system, of which they are not themselves a part, to an arbitrary degree of accuracy. Their logical limits arise when we try to get them to model a part of the world that includes themselves.”

A non-functional requirement is a requirement that specifies criteria that can be used to judge the operation of a system, rather than specific behaviors. This should be contrasted with functional requirements, which define specific behavior or functions. The plan for implementing functional requirements is detailed in the system design (the computed). The plan for implementing non-functional requirements is detailed in the system architecture. These requirements include availability, reliability, performance, security, scalability, and efficiency at run-time (the computer). The meta-knowledge of the intent of the computed, the association of a specific algorithm execution to a specific device, and the temporal evolution of information processing and exception handling when the computation deviates from the intent (be it because of software behavior, hardware behavior, or their interaction with the environment) are outside the software and hardware design and are expressed in non-functional requirements. Mark Burgin calls this infware [10], which contains the description and specification of the meta-knowledge that can be implemented using hardware and software to enforce the intent with appropriate actions. According to GTI, the infware provides a path to address the issue of including the computer and the computed in the information processing model of the world. In the next section, we discuss GTI and a path for describing the autopoietic and cognitive behaviors.

3. The Genome and General Theory of Information

Our understanding of how biological intelligence works comes from the study of genomics [11] and neuroscience [12,13,14]. We summarize here a few relevant observations from these studies:

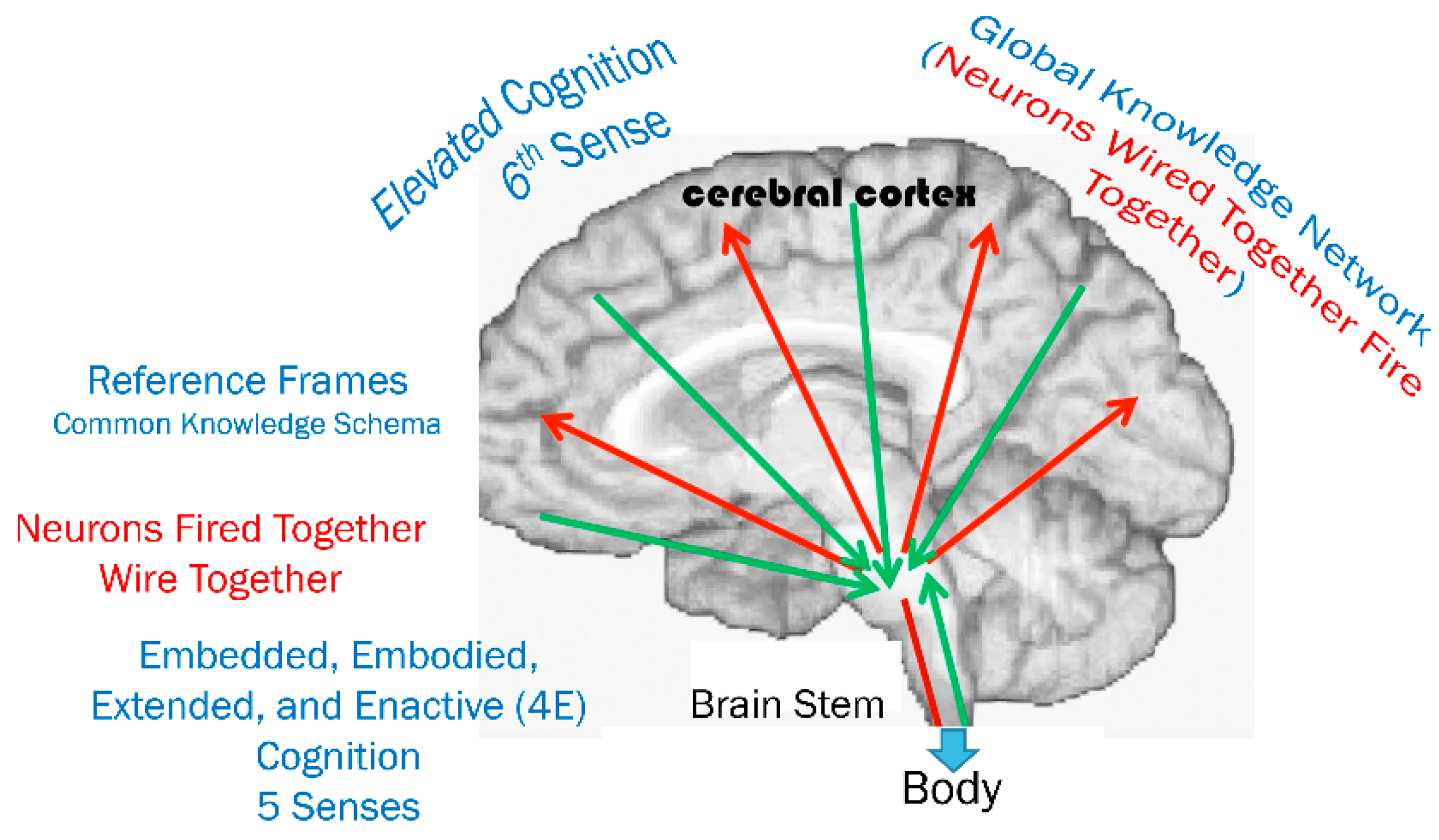

Consciousness in humans comes from a long series of computations. When we see images, what we are aware of is not the real images but subjective feelings that result from several levels of computations. The neocortex and cortical columns provide various layers of cognitive processing using neural networks (sub-symbolic computing);

Knowledge representation of the “Self” is created through mind maps of the body’s interior and is used as a reference to all other maps created from the five senses through cortical columns;

The neocortex uses the brain maps to create a model of the “self” and its interactions with the real world to predict and mitigate risk using both the body and the brain (the sixth sense or elevated cognition);

Autopoiesis arises from the networks of genes regulated by the knowledge encoded in the genome in the form of chromosomes and DNA [11].

In essence, consciousness is the interaction between the body and the brain with a model of the “self” which provides stability and interactions with the outside using the five senses and 4E cognition.

Figure 1 summarizes these observations.

The genome, in the physical world, is knowledge coded in the executable form of deoxyribonucleic acid (DNA) and executed by ribonucleic acid (RNA). DNA and RNA use the knowledge of the physical and chemical processes to discover resources in an environment using the cognitive apparatuses of genes and neurons. They build and evolve hardware utilizing various embedded, embodied, enacted, elevated, and extended (5E) cognitive (sentient, resilient, intelligent, and efficient) processes to manage both the self and the environment. The genome encapsulates both autopoietic and cognitive behaviors. The autopoietic behaviors are capable of regenerating, reproducing, and maintaining the system by itself through the production, transformation, and destruction of its components and the networks of processes in these components. The cognitive behaviors are capable of sensing, predicting, and regulating the stability of the system in the face of both deterministic and non-deterministic fluctuations in the interactions among the internal components or their interactions with the environment.
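The distinction between the two kinds of behavior can be illustrated with a toy software analogue. The sketch below is an assumption-laden illustration and not the structural machine model of the paper: the classes `Component` and `Supervisor`, the `critical` flag, and the log format are all hypothetical. The `sense` method stands in for the cognitive side (detecting a deviation), while `regulate` stands in for the autopoietic side (regenerating a failed component), and it tolerates failures that do not matter, in the spirit of the von Neumann remark quoted in the Introduction.

```python
from dataclasses import dataclass, field


@dataclass
class Component:
    name: str
    critical: bool          # hypothetical flag: does a failure here matter?
    healthy: bool = True
    generation: int = 0     # how many times this component was regenerated


@dataclass
class Supervisor:
    components: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def add(self, name, critical):
        self.components[name] = Component(name, critical)

    def sense(self):
        """Cognitive step: find the components that deviate from health."""
        return [c for c in self.components.values() if not c.healthy]

    def regulate(self):
        """Autopoietic step: regenerate failures that matter, tolerate the rest."""
        for failed in self.sense():
            if failed.critical:
                # Destroy the failed instance and produce a fresh one.
                self.components[failed.name] = Component(
                    failed.name, True, generation=failed.generation + 1
                )
                self.log.append(("regenerated", failed.name))
            else:
                self.log.append(("tolerated", failed.name))
```

In use, a caller would register components with `add`, mark one as unhealthy, and call `regulate`; a critical component comes back healthy with its `generation` incremented, while a non-critical one is merely recorded as tolerated.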

The Burgin–Mikkilineni (BM) thesis [15] uses this information to provide a structural machine model that uses knowledge structures to model the autopoietic and cognitive behaviors encoded in the genome. “A genome in the language of General Theory of Information (GTI) [2] encapsulates “knowledge structures [3]” coded in the form of DNA and executed using the “structural machines [3,4,5]” in the form of genes and neurons which use physical and chemical processes (dealing with the conversion of matter and energy). The information accumulated through biological evolution is encoded into knowledge to create the genome which contains the knowledge network defining the function, structure, and autopoietic and cognitive processes to build and evolve the system while managing both deterministic and non-deterministic fluctuations in the interactions among the internal components or their interactions with the environment”.

The ontological BM thesis states that the autopoietic and cognitive behavior of artificial systems must function on three levels of systemic information processing and be based on triadic automata. The axiological BM thesis states that efficient autopoietic and cognitive behavior has to employ structural machines. In the next section, we discuss the digital genome and the autopoietic and cognitive behaviors in digital automata.

4. Infusing Autopoietic and Cognitive Behaviors into Digital Automata

The digital genome specifies the execution of knowledge networks using both symbolic and sub-symbolic computing structures. The knowledge network is a super-symbolic network composed of symbolic and sub-symbolic networks that execute the functions defined in their components. This structure defines system behavior and evolution, maintaining the system’s stability in the face of fluctuations in both internal and external interactions.

Figure 2 shows a structural machine implementation with the knowledge structures discussed in GTI. The advantage of this architecture is that it decouples the management of the application workload (algorithms and data) from the infrastructure-as-a-service (IaaS) and platform-as-a-service (PaaS) resources that are used and from who provides them. The digital genome encodes knowledge at various levels (4E cognition) to manage functional knowledge nodes using super-symbolic computation, which in turn manages both symbolic and sub-symbolic computations downstream.
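To make the layering of the preceding paragraphs more tangible, the following sketch wires a sub-symbolic node and a symbolic node into a small network that a coordinating layer evaluates. It is a hedged illustration only, not the architecture of Figure 2: the classes `KnowledgeNode` and `KnowledgeNetwork`, the functions `load_score` and `policy`, and the thresholds are hypothetical, and `load_score` is a simple stand-in for what would be a learned model.

```python
from typing import Callable, Dict, Sequence


class KnowledgeNode:
    """A named computation with declared inputs (hypothetical structure)."""

    def __init__(self, name: str, kind: str,
                 compute: Callable[..., object],
                 inputs: Sequence[str] = ()):
        self.name = name
        self.kind = kind            # "symbolic" or "sub-symbolic"
        self.compute = compute
        self.inputs = tuple(inputs)


class KnowledgeNetwork:
    """Coordinating layer that resolves each node from its upstream inputs."""

    def __init__(self):
        self.nodes: Dict[str, KnowledgeNode] = {}

    def add(self, node: KnowledgeNode) -> None:
        self.nodes[node.name] = node

    def evaluate(self, name: str, observations: dict):
        # Raw observations are leaves; everything else is computed.
        if name in observations:
            return observations[name]
        node = self.nodes[name]
        args = [self.evaluate(dep, observations) for dep in node.inputs]
        return node.compute(*args)


def load_score(latencies_ms):
    # Stand-in for a learned model: fraction of samples slower than 200 ms.
    return sum(1 for x in latencies_ms if x > 200) / len(latencies_ms)


def policy(score):
    # Symbolic rule encoding an assumed best practice.
    return "scale_out" if score > 0.5 else "hold"


network = KnowledgeNetwork()
network.add(KnowledgeNode("load_score", "sub-symbolic", load_score, ["latencies_ms"]))
network.add(KnowledgeNode("policy", "symbolic", policy, ["load_score"]))
```

Calling `network.evaluate("policy", {"latencies_ms": [...]})` resolves the sub-symbolic score first and then applies the symbolic rule to it. Neither node knows which resources will carry out the resulting action, which is the decoupling the paragraph above describes.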

A detailed discussion of the application of structural machines and knowledge networks to improve cognitive capabilities using deep memory, and deep reasoning with knowledge based on experience and history, is provided in [16,17] and the video https://youtu.be/0QTCxDZpYiI (accessed on 16 March 2022). The video and the paper discuss the structural machine and knowledge structure framework where a model encoded in the cognitive layer is populated with data from both ontological models and deep learning.

5. Conclusions

GTI, the structural machines, and the knowledge structures show the path to designing and implementing autopoietic and cognitive digital automata. Currently, efforts are underway to use the new class of digital automata in:

Building self-managing application workloads, which utilize distributed IaaS and PaaS from multiple vendors, and maintaining stability and service level agreements in the face of rapid fluctuations in the demand for, and the availability of, resources;

Building applications that integrate sub-symbolic and symbolic computing to predict system-level risk and take appropriate action to mitigate it using elevated cognition managing the 4E cognition downstream.

In conclusion, we assert that GTI provides a path to autopoietic and cognitive behaviors. The science of information processing structures is still in its infancy but the foundation on which it is being built is proven to be theoretically sound.