Digital Image Quality Prediction System

Abstract

1. Introduction

2. Materials and Methods

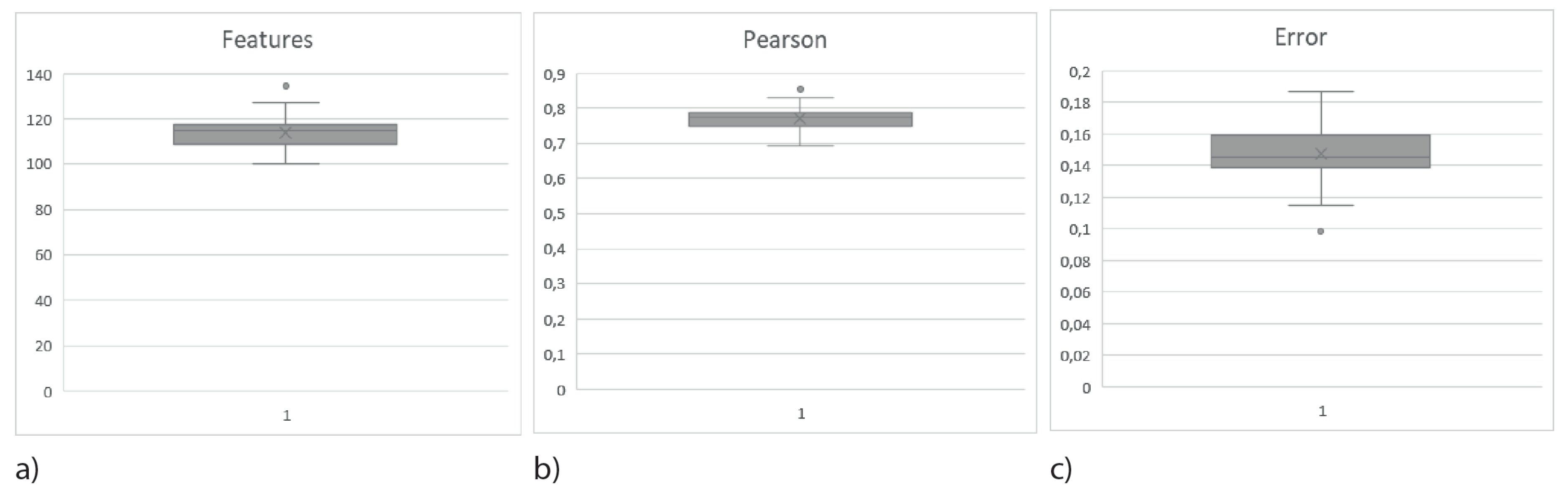

3. Results

4. Conclusions

Acknowledgments



© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rodriguez-Fernandez, N.; Santos, I.; Torrente-Patiño, A.; Carballal, A. Digital Image Quality Prediction System. Proceedings 2020, 54, 15. https://doi.org/10.3390/proceedings2020054015
