Thermodynamic Computing: An Intellectual and Technological Frontier

Abstract

1. Introduction

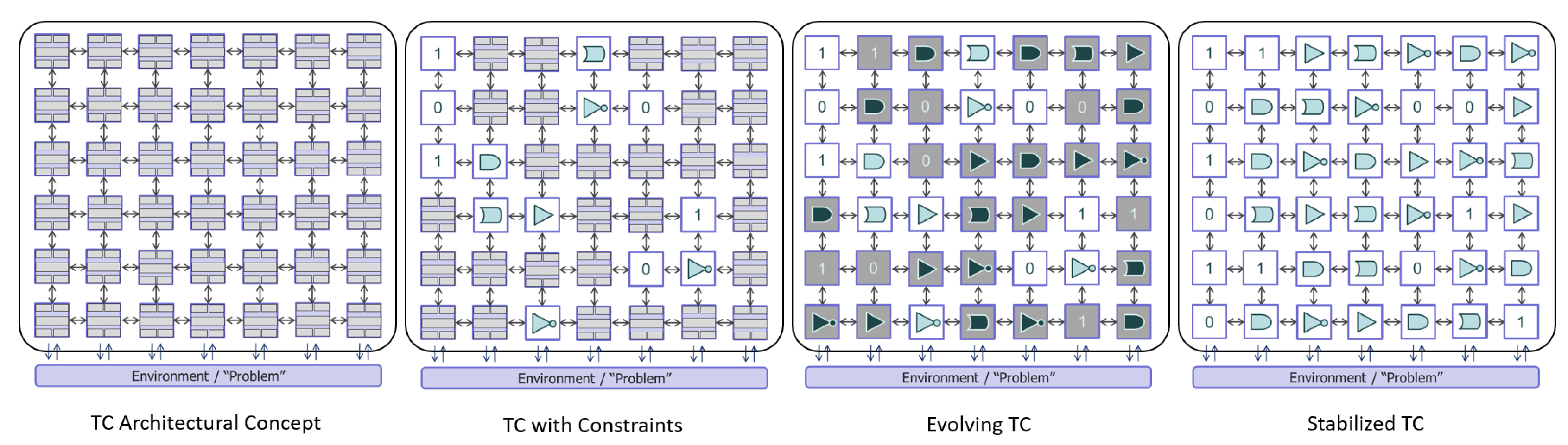

2. Motivation

- Thermodynamics is universal. There is no domain of science, engineering or everyday life in which thermodynamics is not a primary concern. As far as we know, ideas from thermodynamics are the only ones that are universally applicable.

- Thermodynamics is the problem in computing today. As described in Section 2, challenges of power consumption, device fluctuations, fabrication costs, and organization are fundamentally thermodynamic.

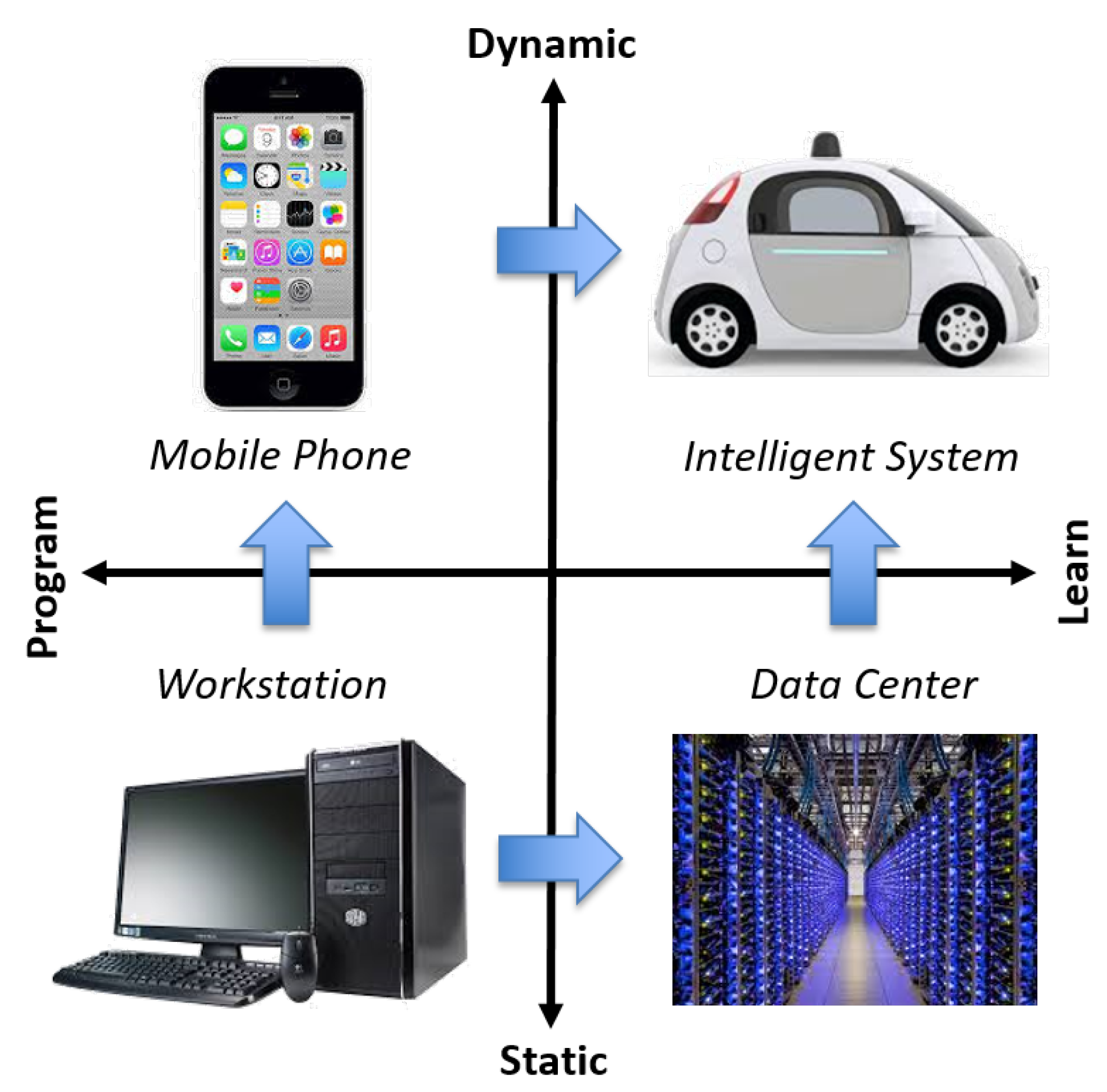

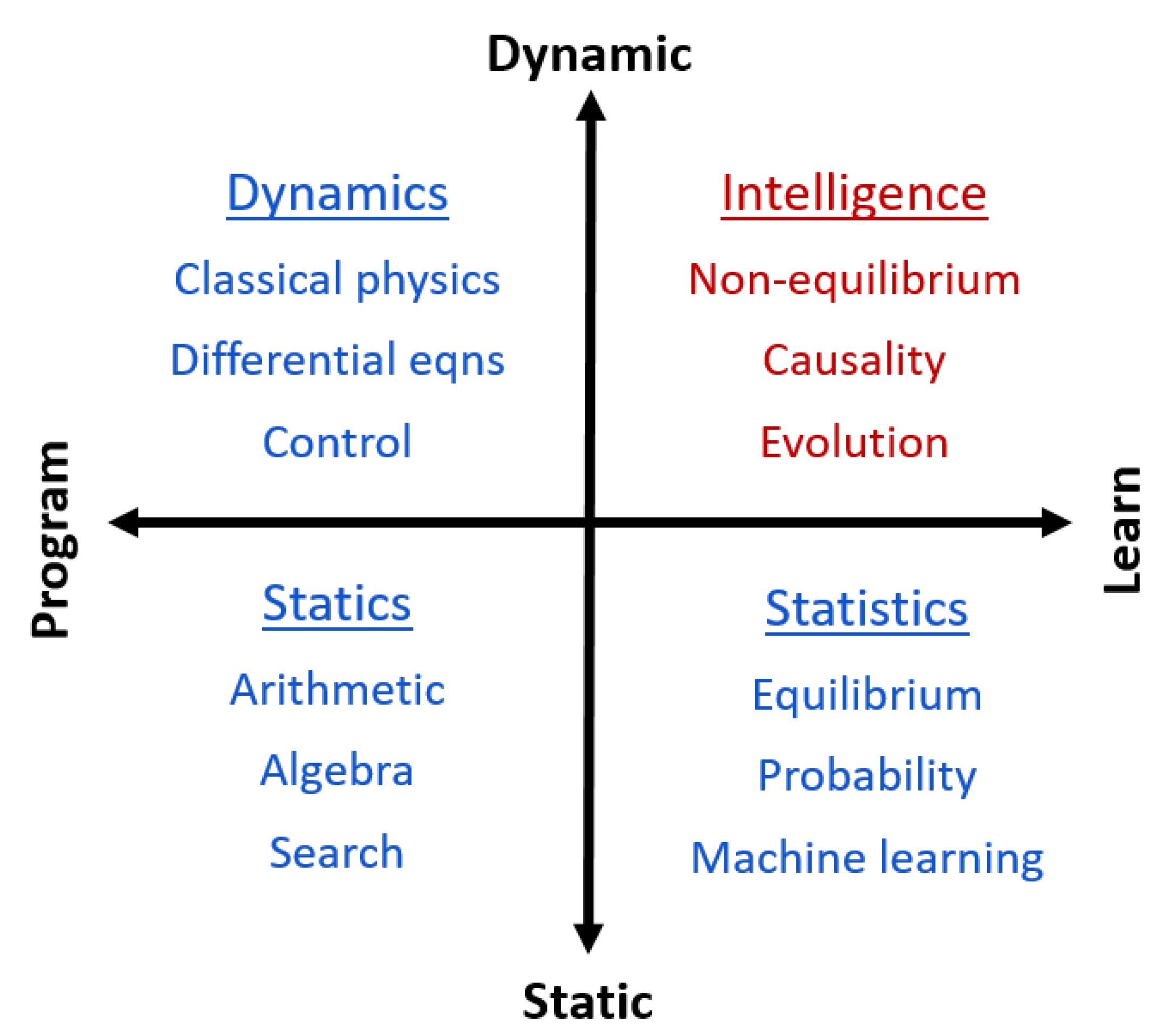

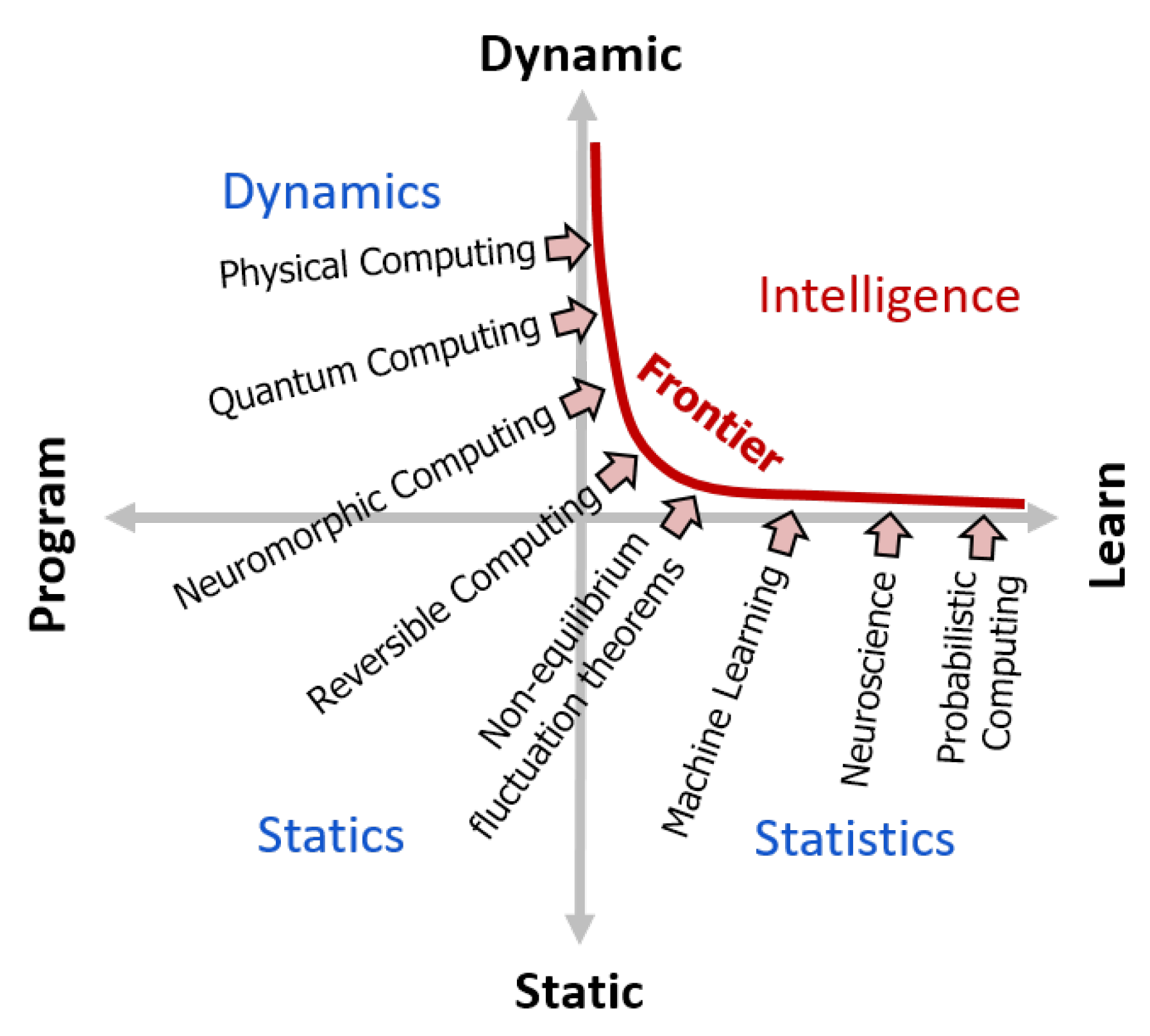

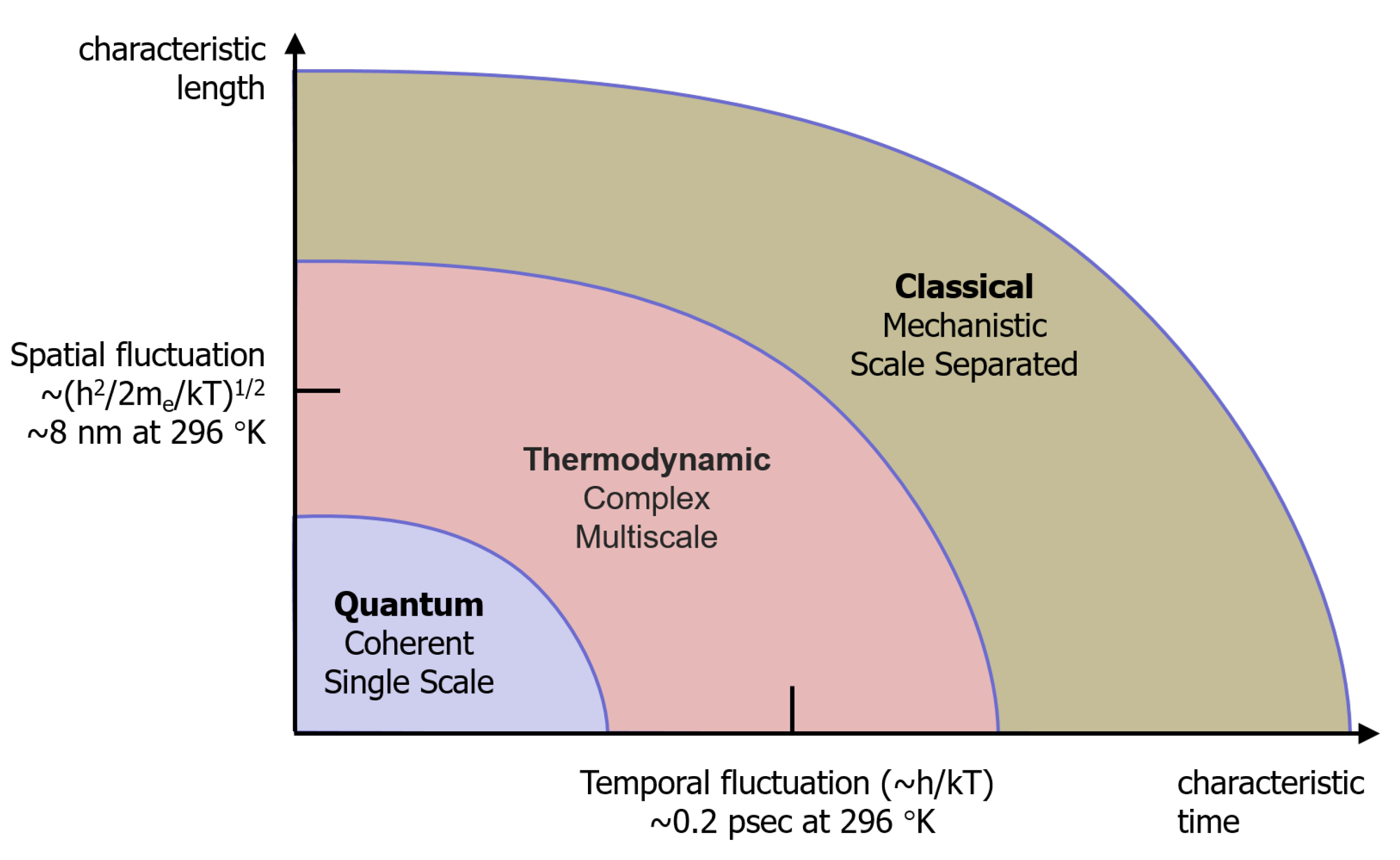

- Thermodynamics is temporal. The second law of thermodynamics is the only explanation for the existence of time. If our objective is to build technologies in the dynamic-learned-intelligence domain of Figures 1, 2 and 3, then incorporating this understanding should be a primary concern.

- Thermodynamics is efficient. All systems spontaneously evolve to achieve thermodynamic efficiency. This is particularly evident in living systems, but also in human-built technologies and organizations, which are almost always under pressure to improve their efficiency. The most likely explanation for this observation, of course, is that thermodynamics drives their evolution.

- Thermodynamics is ancillary to the current computing paradigm. Thermodynamics is currently viewed as a practical constraint in the implementation of a programmed state machine and memory technology, rather than as a fundamental concept. In computing systems today, physics ends at the level of the device and is replaced by bits, gates and algorithms thereafter. We suppose that physics should extend throughout the computing system, as it evidently does in all natural systems.

- Electronic systems are well-suited for thermodynamic evolution. The energy, time and length scales of today’s basic computational elements (e.g., transistors) approach those of biological elements (e.g., proteins). Instead of struggling to prevent the spontaneous evolution of systems of these devices (i.e., to eliminate fluctuations), we should learn how to guide their evolution toward the solution of problems that we specify at high levels.
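To make the energy scale in the points above concrete, the following minimal sketch computes the thermal energy kT and the Landauer bound kT ln 2 at an assumed room temperature of 300 K. The function names, the 300 K figure, and the helper that expresses an energy in units of kT are illustrative assumptions and do not come from the paper; no device switching energies are asserted here.

```python
import math

# Exact SI defining constants (2019 redefinition).
BOLTZMANN_J_PER_K = 1.380649e-23
ELEMENTARY_CHARGE_C = 1.602176634e-19  # used only to convert joules to eV


def thermal_energy(temperature_k: float) -> float:
    """Return the thermal energy kT in joules at the given temperature."""
    return BOLTZMANN_J_PER_K * temperature_k


def landauer_bound(temperature_k: float) -> float:
    """Return kT ln 2, the minimum heat dissipated when erasing one bit."""
    return thermal_energy(temperature_k) * math.log(2)


def energy_in_kt(energy_j: float, temperature_k: float) -> float:
    """Express an energy (hypothetical, caller-supplied) in units of kT."""
    return energy_j / thermal_energy(temperature_k)


if __name__ == "__main__":
    room_temperature_k = 300.0  # assumed operating temperature
    kt = thermal_energy(room_temperature_k)
    bound = landauer_bound(room_temperature_k)
    print(f"kT at 300 K:       {kt:.3e} J ({kt / ELEMENTARY_CHARGE_C:.4f} eV)")
    print(f"kT ln 2 at 300 K:  {bound:.3e} J ({bound / ELEMENTARY_CHARGE_C:.4f} eV)")
```

An element whose characteristic energy is only a small multiple of kT, as reported by `energy_in_kt`, is one for which thermal fluctuations cannot be ignored, which is the regime the last point above refers to.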

3. Vision

4. Conclusions

Funding

Acknowledgments

Conflicts of Interest

References

- Gent, E. To make smartphones sustainable, we need to rethink thermodynamics. New Sci. 2020. Available online: https://www.newscientist.com/article/mg24532733-300-to-make-smartphones-sustainable-we-need-to-rethink-thermodynamics/ (accessed on 11 March 2020).

- Landauer, R. Irreversibility and heat generation in the computing process. IBM J. Res. Dev. 1961, 5, 183–191.

- Schrödinger, E. What is Life?: The Physical Aspect of the Living Cell; Cambridge University Press: Cambridge, UK, 1944.

- Verlinde, E. On the Origin of Gravity and the Laws of Newton. J. High Energy Phys. 2011, 2011, 29.

- Hylton, T.; Conte, T.; DeBenedictis, E.; Ganesh, N.; Still, S.; Strachan, J.P.; Williams, R.S.; Alemi, A.; Altenberg, L.; Crooks, G.; et al. Thermodynamic Computing: Report Based on a CCC Workshop Held on January 3–5, 2019; Technical Report; Computing Community Consortium: Washington, DC, USA, 2019.

- Hylton, T. Thermodynamic Neural Network. Entropy 2020, 22, 256.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Hylton, T. Thermodynamic Computing: An Intellectual and Technological Frontier. Proceedings 2020, 47, 23. https://doi.org/10.3390/proceedings2020047023