A Visuo-Haptic Framework for Object Recognition Inspired by Human Tactile Perception

Abstract

1. Introduction

2. State of the Art

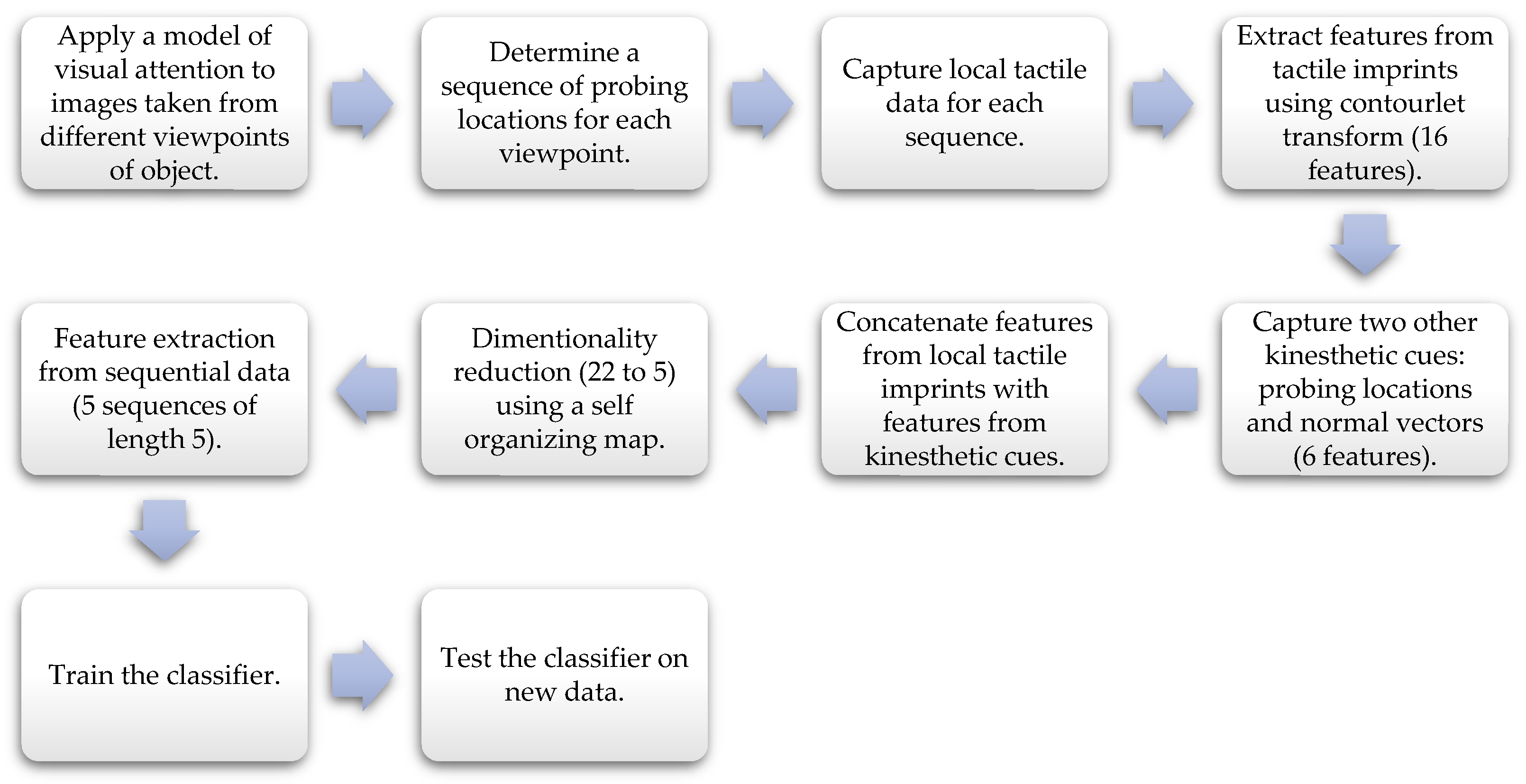

3. Framework

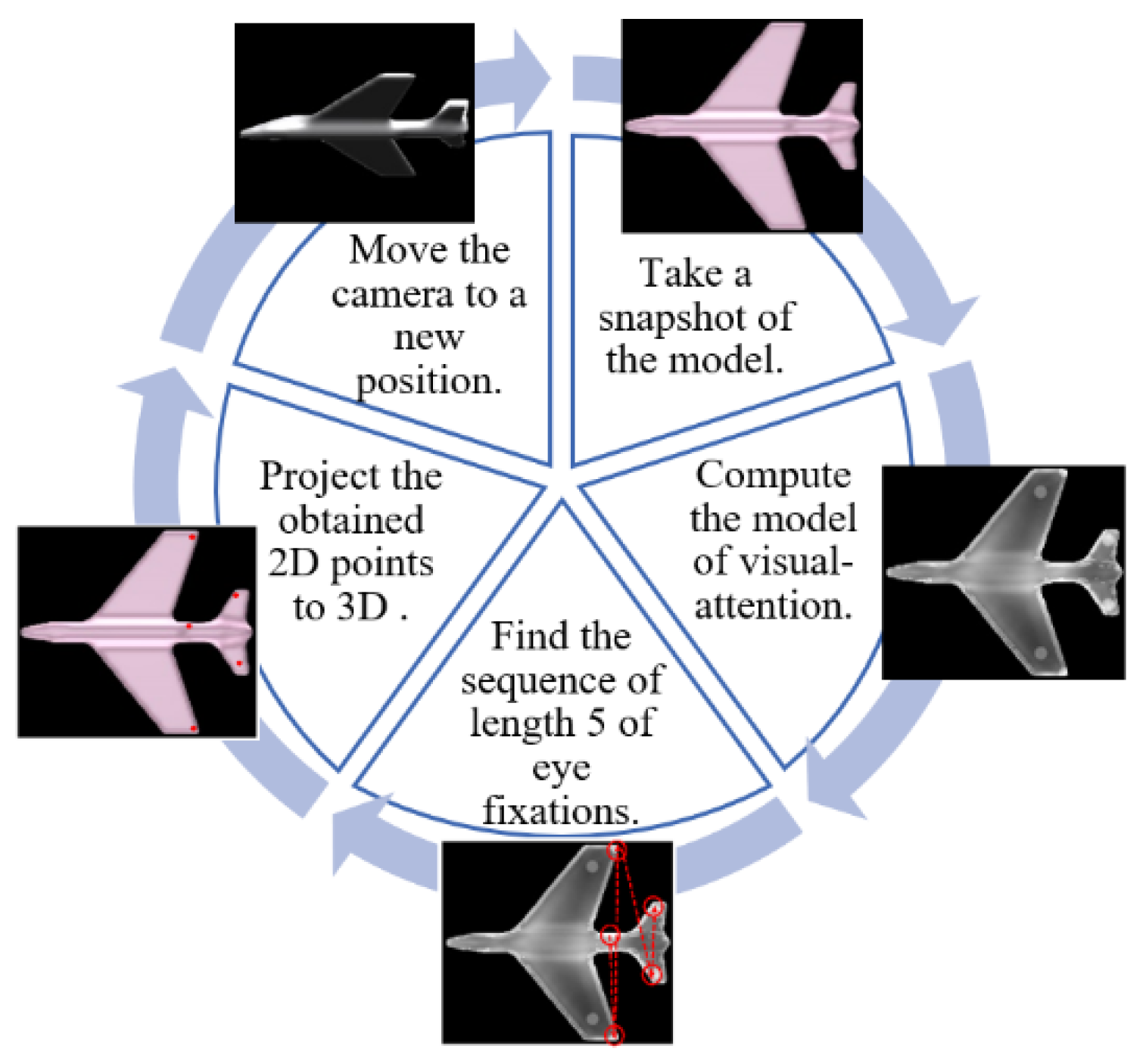

3.1. Sequences of Eye Fixations

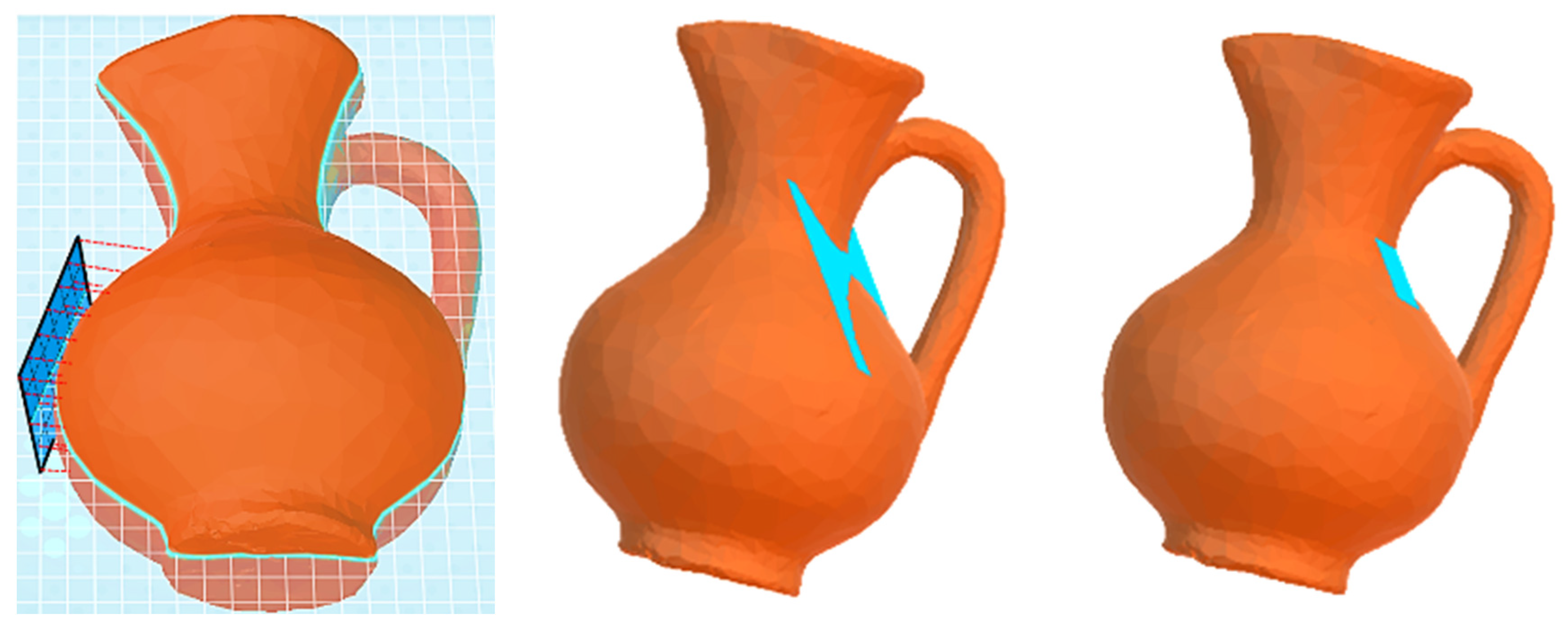

3.2. Tactile Data Collection Using Adaptive Sensor Size

3.3. Feature Extraction from Tactile Imprints

3.4. Kinesthetic Cues

3.5. Data Processing

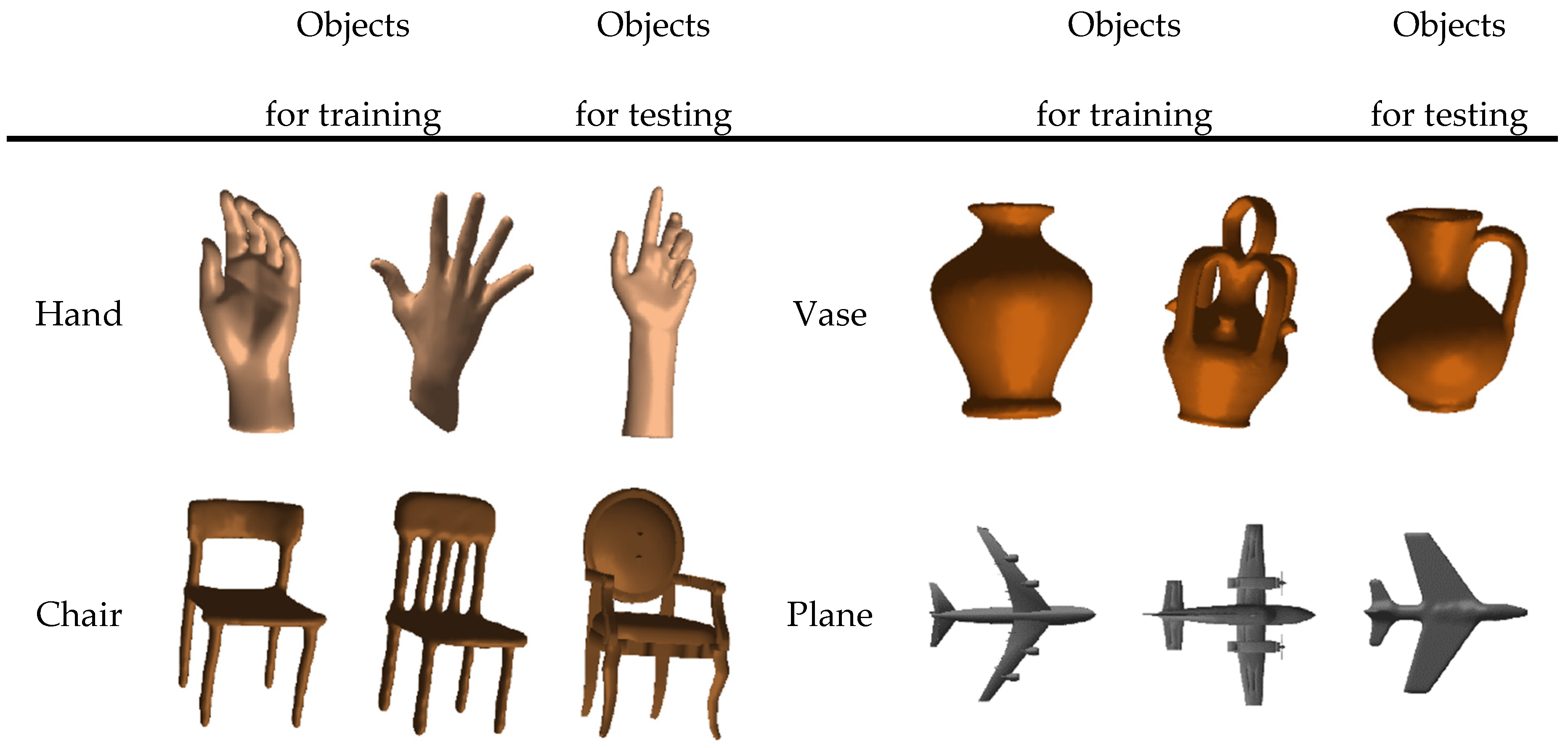

4. Experiments and Results

5. Conclusions

Funding

Conflicts of Interest


| Classifier | Guiding the Tactile Sensor by a Sequence of Five Eye Fixations | Using a Random Movement of the Tactile Sensor |
|---|---|---|
| K-nearest neighbors (kNN) | 85.58% | 84.94% |
| Support vector machine (SVM) | 93.45% | 86.14% |
| Decision trees | 73.56% | 71.63% |
| Quadratic discrimination | 91.89% | 84.28% |
| Naïve Bayes | 56.68% | 57.16% |
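
The comparison in the table above can be summarized as a per-classifier difference in accuracy between the two acquisition strategies. The short Python sketch below only redoes that arithmetic on the reported numbers; the names `results` and `accuracy_gain` are illustrative assumptions and do not come from the paper.

```python
# Accuracies (%) copied from the table above, stored as
# (guided by five eye fixations, random sensor movement).
results = {
    "kNN": (85.58, 84.94),
    "SVM": (93.45, 86.14),
    "Decision trees": (73.56, 71.63),
    "Quadratic discrimination": (91.89, 84.28),
    "Naive Bayes": (56.68, 57.16),
}


def accuracy_gain(table):
    """Return guided-minus-random accuracy, in percentage points, per classifier."""
    return {name: round(guided - rand, 2) for name, (guided, rand) in table.items()}


gains = accuracy_gain(results)

# Classifier with the highest accuracy under fixation-guided acquisition.
best = max(results, key=lambda name: results[name][0])

for name, gain in gains.items():
    print(f"{name}: {gain:+.2f} percentage points")
print(f"Highest guided accuracy: {best}")
```

On these numbers, guidance by eye fixations improves every classifier except Naïve Bayes, with the largest differences for quadratic discrimination (7.61 points) and SVM (7.31 points).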

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rouhafzay, G.; Cretu, A.-M. A Visuo-Haptic Framework for Object Recognition Inspired by Human Tactile Perception. Proceedings 2019, 4, 47. https://doi.org/10.3390/ecsa-5-05754
