1. Introduction

Recent changes in demographic structure have increased research efforts on technical support systems for ambulant and residential care. The atomization of households [1], coinciding with a prolonged life expectancy [2], has shifted an increased care demand to third parties: according to the German Federal Statistical Office, the ratio between ambulant care personnel supply and demand will halve between 2009 and 2030 while the number of single households will increase sixfold in relation to the population numbers [3]. Furthermore, increased life expectancy and improved medical care are causing a rise in the proportion of the population living with chronic diseases. Lastly, there is a general trend towards outpatient care by hospitals. According to the Avalere Health analysis of the American Hospital Association Annual Survey [4], the percentage of outpatient (vs. inpatient) revenue for community hospitals in the United States increased from 28% to about 46% between 1994 and 2014.

Previous research on technical support systems in ambulant care environments has shown the feasibility of using simple, low-cost sensors to detect, measure and react to several care-related observations, such as falls [5], changes in gait [6] and performance during Activities of Daily Living (ADLs) [7].

The above-mentioned applications require different types and numbers of sensors but they share a common obstacle—the perceived intrusion of privacy. Especially complex sensors, such as cameras and microphones, are rarely accepted in living spaces. At the same time, some applications depend on the identification or at least separation of individuals.

The focus of this work thus lies on the use of binary sensors such as light barriers and motion sensors for the separation of residents for the long-term collection of activity data. These sensors have relatively little power consumption, are easy to retrofit and can be installed unobtrusively. The amount of information provided by an individual binary sensor is small but a network of such sensors may help collect sufficient data to differentiate between multiple residents.

It has been shown that a tracking algorithm can track an individual in a network of binary sensors with sufficient accuracy over short periods of time [8]. However, unless an identifying or body-worn sensor such as an RFID tag or biometric sensor is added, the resulting tracking data usually does not contain sufficient information to connect tracks into a motion or behavior profile and to detect individual changes in activity over time.

We hypothesize that tracks of binary signals provide sufficient information to separate two residents over long periods of time by grouping the data into “motion profiles”, i.e., sets of tracks describing residents’ activity over several days or weeks. To test this hypothesis, we use three methods of unsupervised learning (fuzzy clustering, constrained clustering and constrained evidential fuzzy clustering) to combine tracking data lacking identification into clusters representing each resident’s motion profile. To achieve this, we identify data and metadata that can be used to distinguish tracks of all residents and guests, such as activity in unique locations, at unique times of the day or relative gait speed.

It should be noted that clustering of activity data helps to separate the activity of multiple residents but it does not identify them because none of the sensors provides any identifying information. However, once the residents’ activities are separated, identifying data on any of the residents’ activities can then be generalised to the whole cluster. We show an example of such identifying data in the second part of our evaluation (Section 5.2).

The remainder of this article is structured as follows: Section 2 introduces work on similar methods of separation or identification of residents in smart home environments. Section 3 describes the data and procedures used. Section 4 presents the two datasets used in the evaluation. Section 5 describes the results of the evaluation and subsequent steps taken. Section 6 summarizes the findings and describes possible directions to take from here.

2. State of the Art

Previous work on separation and identification of residents in smart homes covers a wide range of technologies and algorithms. Wearable and body-worn sensors require continuous participation of the residents and are therefore often perceived as a burden. High-resolution sensors such as cameras and microphone arrays are commonly rejected for reasons of privacy. We will therefore limit this section to approaches relying on low-resolution ambient sensors only.

Ivanov et al. [9] present a thorough overview of existing presence detection technologies and the kind of information they are able to provide. The list ranges from binary passive-infrared (PIR) sensors, which merely detect movement or physical activity, to video cameras, which can be used to count, identify and locate individuals. The authors suggest that low-price sensors are too low in resolution for person identification. While higher-resolution sensor technologies allow identification, they are commonly more expensive and pose a psychological barrier as their use is often considered an invasion of privacy.

Wilson & Atkeson [10] use a particle filter to track multiple residents’ location and activities in a home equipped with motion sensors, pressure mats and contact switches. The main goal of their work is room-level tracking and rudimentary activity recognition. Besides keeping track of presence count and motion paths, this approach also allows identification of residents through motion models. Similar to the sensor graph used in the algorithms presented here, this approach maintains a room transition probability matrix to calculate likely motion paths.

Mokhtari et al. [11] built an ambient sensor system to identify residents of a smart home based on PIR motion sensors and an array of ultrasound sensors. The “Bluesound” system is installed in doorways and uses motion sensors to detect a person’s walking direction and an ultrasound sensor array to detect the person’s height. A linear discriminant analysis can distinguish between residents with an accuracy of at least 97.4% if the height difference is at least 4 cm and as little as 59.5% if the height difference is 1 cm.

Yun and Lee [12] developed a “data collection module” to detect direction, distance, speed and identity of a passing individual based on the raw data of three PIR sensor modules. Using three modules with four PIR sensors each, mounted in a hallway on two opposite walls and the ceiling, the authors achieve a 95% classification accuracy. Fang et al. [13] use a similar system (one module composed of up to eight PIR sensors with diverse detection regions) to identify up to 10 individuals using one trained Hidden Markov Model (HMM) for each individual and assigning the identity of the HMM with the highest likelihood of having produced the observation.

Crandall and Cook [14] use the binary signal of PIR motion sensors, contact switches and other smart home sensors to train Markov models on labelled data of multiple residents. Each model generates a resident identifier based on a fixed-length sequence of sensor events. The models achieve an identification accuracy of up to 90%. The data is recorded in the living lab at Washington State University’s Center for Advanced Studies in Adaptive Systems, which is also the source of the data in our first evaluation.

Zeng et al. [15] show that two residents can be distinguished with 92% accuracy through WiFi signal analysis based on differences in gait. The “WiWho” system is trained on Channel State Information from a WiFi signal and is optimized to separate individuals based on stepping and walking parameters.

All identification approaches described here have one thing in common—they rely on labelled data to train a supervised learning system. Supervised learning brings certain advantages—the correctness of the system can be verified immediately after learning and the residents are not just separated but identified. However, the recording of training data is an elaborate task, which usually takes several hours, if not days, during which the system cannot be used. Furthermore, if these systems are to be used in hundreds or thousands of households, the labelling of said data will be a costly requirement.

3. Approach

Common smart home sensors, such as motion sensors, contact switches and light barriers usually provide a binary signal (activity—no activity) that contains no identifying information. We hypothesize, however, that tracks of such binary signals may provide sufficient information to separate the data into clusters that represent each resident’s activity.

3.1. Data Preprocessing

The idea of identification based on activity data relies on the assumption that people can be discriminated from one another based on the features and metadata of their activities. People might be distinguishable by their daily routine, such as presence and absence during specific times of the day. Other factors might be the presence in specific areas of the house, such as separate bedrooms or a home office, and motion parameters such as gait speed. Furthermore, metadata such as temporal overlap of tracks may provide additional information to improve the identification procedure.

To generate the required data, we first separate the data of multiple residents collected in living spaces equipped with ambient sensors with a multi-target tracking algorithm. This algorithm separates the stream of sensor events into tracks based on spatial adjacency of the triggered sensors (“tracking on a graph”). The result is a database of tracks consisting of sensor signals and associated timestamps signifying human motion. The tracks vary in length, both temporally and in the number of sensor events. The length of tracks as well as their accuracy depend on the number of people present, their spatial proximity, the range of each sensor and the number of sensors installed. The premises and the sensor setups used are presented in Section 4. The tracking algorithm is described in Müller et al. [8].

For the clustering and classification procedures, for each track we extract

day of the week,

time of day, represented as sine and cosine so as to encode the cyclical nature of time,

a binary value for each sensor in the graph representing whether it was triggered during the track or not, and

relative gait speed, calculated by the median transition time between all pairs of adjacent sensors.
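The feature list above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `track_features`, the event format (time-sorted pairs of timestamp and sensor ID) and the `adjacent` set of sensor pairs are hypothetical and not taken from the original implementation. The sketch keeps one median transition time per pair of adjacent sensors.

```python
import math
from datetime import datetime
from statistics import median


def track_features(events, sensors, adjacent):
    """Extract per-track features.

    events:   list of (datetime, sensor_id), sorted by time (assumed format)
    sensors:  ordered list of all sensor ids in the graph
    adjacent: set of frozenset({a, b}) for spatially adjacent sensors
    """
    start = events[0][0]
    # Map the time of day onto the unit circle so that 23:59 and 00:00 are close.
    seconds = start.hour * 3600 + start.minute * 60 + start.second
    angle = 2.0 * math.pi * seconds / 86400.0

    triggered = {sensor for _, sensor in events}

    # Collect transition times between consecutive events on adjacent sensors.
    per_pair = {}
    for (t1, s1), (t2, s2) in zip(events, events[1:]):
        pair = frozenset((s1, s2))
        if pair in adjacent:
            per_pair.setdefault(pair, []).append((t2 - t1).total_seconds())

    return {
        "day_of_week": start.weekday(),  # 0 = Monday
        "time_sin": math.sin(angle),
        "time_cos": math.cos(angle),
        "sensors": [1 if s in triggered else 0 for s in sensors],
        "transition_s": {pair: median(v) for pair, v in per_pair.items()},
    }
```

A shorter median transition time between the same two sensors corresponds to a higher relative gait speed; no distances between sensors are needed for this comparison.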

3.2. Resident Discrimination

3.2.1. Supervised

In order to verify our hypothesis that the data produced by the tracking algorithm lends itself to a separation of two or more residents, we first label the tracks produced from the WSU living lab data with IDs 1 and 2 for Resident A and B and run the labelled data through a classification algorithm. If a supervised learning method shows that the two residents can be separated with sufficient accuracy based on the track data, we can continue to find an unsupervised method, so that the system can run without human interference, learning periods and manual labelling. If, however, the classifier shows poor performance, it is unlikely that an unsupervised algorithm will be able to perform satisfactorily.

As the classifier, we chose a C4.5 decision tree [16] as implemented in the Waikato Environment for Knowledge Analysis (WEKA) [17]. We train the decision tree with ten-fold cross validation with default parameters (confidence threshold for pruning = 0.25, minimum number of instances per leaf = 2) on a database of 11,000 sensor events. Gait speed was not considered in this evaluation.

97.98% of tracks were assigned to the correct resident, with a weighted precision and recall of 0.98, averaged over the two resident classes. The classifier also gives insight into the separation criteria of the tracks. “Merit” is based on the order of selection of classification criteria of the algorithm, which in turn is based on the information gain of said criterion at each branching step. It is computed by summing the rank (1 for the criterion with the highest information gain, 2 for the second, etc.) of this criterion over all folds and dividing by the number of folds (in our case 10). The average merit for the criteria is: location = 0.688, time of day = 0.118 and day of week = 0.021. This shows us that (a) unsupervised learning might be successful and (b) location plays a significant role in the classification, while the role of the day-of-the-week feature is negligible.

3.2.2. Unsupervised

The classification procedure shows that the activity of the two residents is generally distinguishable. However, as described in Section 2, we reject the idea of a system that has the collection and labeling of data as a requirement. Instead, we seek an unsupervised learning method that is able to separate the tracks of two or more residents without a tedious setup process.

Fuzzy Clustering

A simple clustering algorithm such as K-Means [18] could be applied. However, we assume that there is an intra-individual variation of activity characteristics that might cause clusters to overlap. For example, gait speed might be slower in the morning than at noon and the daily rhythm might vary by several minutes.

In order to accommodate these possible variations, we apply Fuzzy C-Means [19], a fuzzy clustering algorithm. This algorithm will not return a strict separation of the data into clusters but a matrix of cluster membership probabilities. The closer a data point is to the cluster center, the higher its membership probability.

The fuzzy clustering approach also allows us to further filter the data based on the membership probabilities—we can choose to not include data in further analysis where the maximum membership probability is less than a certain percentage. In a two-cluster scenario, this number could be 60 or 70%.
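The following is a minimal, dependency-free sketch of Fuzzy C-Means with the membership filter described above. It is not the implementation used in this work: the function names `fuzzy_c_means` and `filter_by_mmv`, the random initialisation and the fixed iteration count are assumptions made for illustration.

```python
import math
import random


def fuzzy_c_means(points, c=2, m=2.0, iters=100, seed=0):
    """Return (centers, memberships) for a list of equal-length tuples.

    m is the fuzzifier; memberships[i][k] is the degree to which
    point i belongs to cluster k (each row sums to 1).
    """
    rng = random.Random(seed)
    n, dim = len(points), len(points[0])
    # Random initial memberships, each row normalised to sum to 1.
    u = []
    for _ in range(n):
        row = [rng.random() + 1e-9 for _ in range(c)]
        total = sum(row)
        u.append([v / total for v in row])

    centers = []
    for _ in range(iters):
        # Cluster centers are means weighted by membership ** m.
        centers = []
        for k in range(c):
            w = [u[i][k] ** m for i in range(n)]
            total = sum(w)
            centers.append(tuple(
                sum(w[i] * points[i][d] for i in range(n)) / total
                for d in range(dim)))
        # Memberships decrease with the distance to each center.
        for i in range(n):
            dist = [max(math.dist(points[i], ctr), 1e-12) for ctr in centers]
            inv = [d ** (-2.0 / (m - 1.0)) for d in dist]
            total = sum(inv)
            u[i] = [v / total for v in inv]
    return centers, u


def filter_by_mmv(memberships, threshold):
    """Indices of points whose maximum membership value reaches the threshold."""
    return [i for i, row in enumerate(memberships) if max(row) >= threshold]
```

With two clusters, a threshold of 0.5 keeps every point, since the larger of two memberships that sum to 1 is never below 0.5. Raising the threshold trades coverage for purity.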

Constrained Clustering

In the fuzzy clustering approach, we use day of week, time of day, location and time difference (i.e., gait speed) between sensors as features. However, this approach ignores important meta-information in the dataset: tracks that overlap in time cannot originate from the same person. Since this might be useful information in the process, we also employed COP K-Means clustering [20], which allows us to include this information in the clustering decision.

Besides minimising the distance of objects to a cluster center, constrained clustering techniques also take into account a set of constraints that describe the relationship between data points. In the case of COP K-Means, these are must-link and cannot-link constraints. Each of these sets is given as a two-column matrix, each row describing a pair of data points that either belong (must-link) or do not belong (cannot-link) to the same cluster [20].
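As an illustration of how cannot-link constraints could be derived from temporal overlap, the sketch below builds the list of track index pairs and checks a hard assignment against it. The names `overlap_cannot_links` and `violations` and the representation of a track as a `(start, end)` pair are assumptions for this example, not part of the original implementation.

```python
from itertools import combinations


def overlap_cannot_links(tracks):
    """tracks: list of (start, end) times.

    Returns index pairs (i, j), i < j, of tracks that overlap in time
    and therefore cannot stem from the same person.
    """
    pairs = []
    for (i, (s1, e1)), (j, (s2, e2)) in combinations(enumerate(tracks), 2):
        if s1 < e2 and s2 < e1:  # the two intervals intersect
            pairs.append((i, j))
    return pairs


def violations(assignment, cannot_link):
    """Cannot-link pairs that a hard cluster assignment puts into one cluster."""
    return [(i, j) for i, j in cannot_link if assignment[i] == assignment[j]]
```

COP K-Means rejects any assignment for which `violations` is non-empty, which is why a single erroneous constraint can make the clustering fail.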

Constrained Fuzzy Clustering

To combine the advantages of fuzzy and constrained clustering, we lastly use an implementation of Constrained Evidential C-Means (CECM) [21]. In evidential clustering, the cluster membership of a data point is described by a belief function. CECM is a variant that takes pairwise must-link and cannot-link constraints into account. The constraints are integrated into the cost function, whereby, unlike in COP K-Means, constraints may contradict each other. Each constraint that is not satisfied by a cluster association reduces the value of the belief function for this association.

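Equation (1) shows the cost of violating constraints:

$$J_{\mathrm{CONST}} = \frac{1}{|\mathcal{M}| + |\mathcal{C}|} \left[ \sum_{(x_i, x_j) \in \mathcal{M}} pl_{i \times j}(\neg\theta) + \sum_{(x_i, x_j) \in \mathcal{C}} pl_{i \times j}(\theta) \right] \qquad (1)$$

$\mathcal{M}$ and $\mathcal{C}$ are the sets of must-link and cannot-link constraints and $pl_{i \times j}(\theta)$ and $pl_{i \times j}(\neg\theta)$ describe the plausibilities of objects $x_i$ and $x_j$ belonging and not belonging to the same class. Equation (2) shows the update to the original Evidential C-Means cost function $J_{\mathrm{ECM}}$, where $M$ is the cluster association matrix and $V$ describes the cluster centers:

$$J_{\mathrm{CECM}}(M, V) = (1 - \xi)\, J_{\mathrm{ECM}}(M, V) + \xi\, J_{\mathrm{CONST}} \qquad (2)$$

$\xi$ controls the weight balance between the constraint violations and the cluster center distance.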
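To make the role of the weight parameter concrete, the sketch below computes a constraint penalty and blends it with a given clustering cost. It assumes the formulation of the CECM authors [21] as we understand it: the penalty is the mean, over all constraints, of the plausibility that a constraint is violated, and the total cost is a convex combination of the two terms. The function names, the dictionary inputs and the parameter name `xi` are hypothetical, and the plausibilities are taken as given rather than derived from belief functions.

```python
def constraint_cost(pl_same, pl_diff, must_link, cannot_link):
    """Mean plausibility of violating a constraint.

    pl_same[(i, j)]: plausibility that i and j share a class
    pl_diff[(i, j)]: plausibility that i and j do not share a class
    """
    count = len(must_link) + len(cannot_link)
    if count == 0:
        return 0.0
    # A must-link pair is violated if the objects are in different classes,
    # a cannot-link pair if they are in the same class.
    total = sum(pl_diff[p] for p in must_link)
    total += sum(pl_same[p] for p in cannot_link)
    return total / count


def combined_cost(j_ecm, j_const, xi):
    """Blend the clustering cost and the constraint penalty; xi lies in [0, 1]."""
    return (1.0 - xi) * j_ecm + xi * j_const
```

With `xi = 0` the constraints are ignored entirely, while values close to 1 let the constraints dominate the distance to the cluster centers. Because violations only raise the cost, contradictory constraints do not make the optimisation fail.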

4. Evaluation

The procedures described in Section 3 are tested on two datasets, one recorded in a living lab and one recorded in the home of an elderly couple situated in an assisted living facility. Both datasets encompass regular daily activities; we do not filter or differentiate by the type of activity residents are engaging in, as our aim is to track and separate them across any activity and location throughout daily life.

4.1. Dataset 1: Living Lab

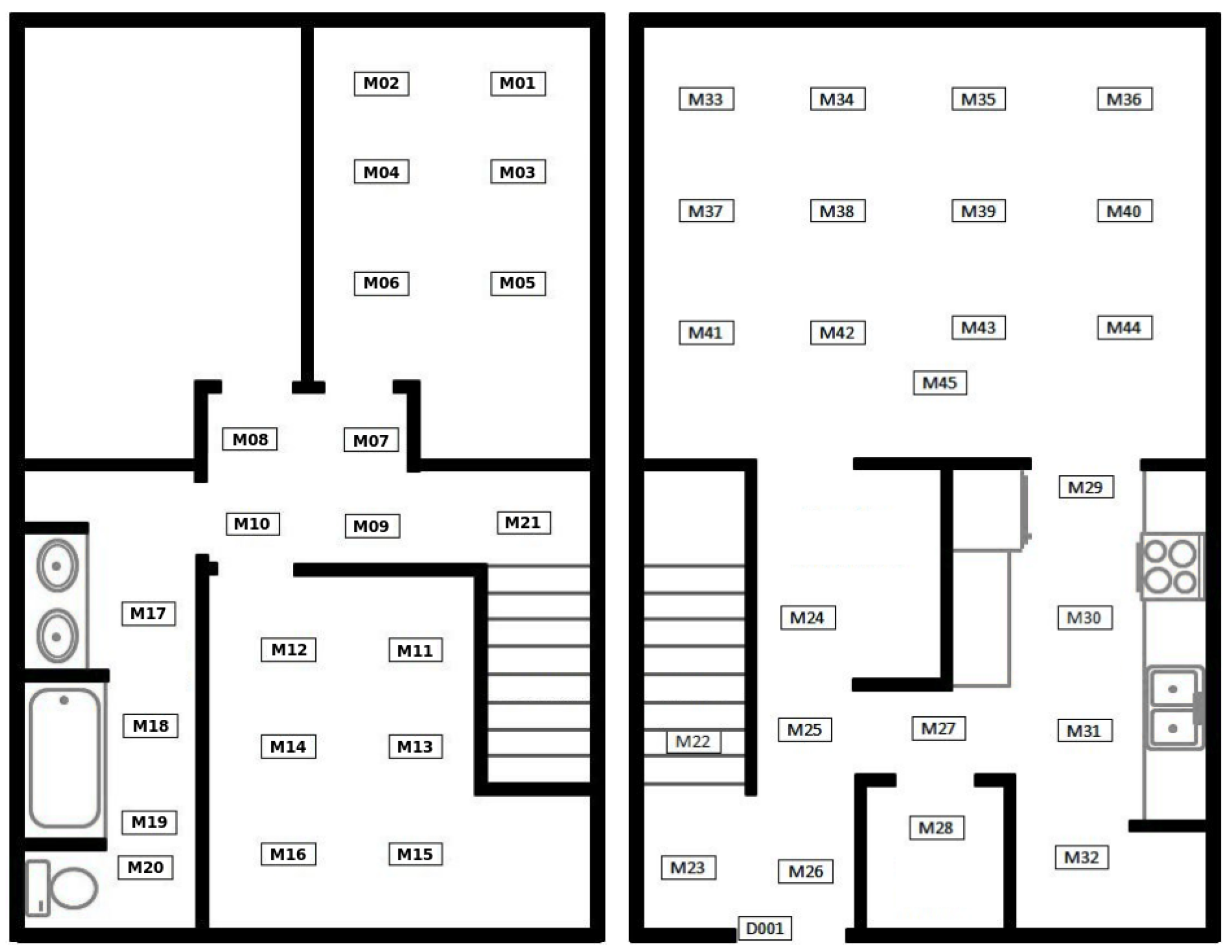

The data used for this evaluation was recorded at Washington State University’s Center for Advanced Studies in Adaptive Systems (CASAS) [22]. The laboratory is a 4-room, 2-story apartment and was inhabited by two volunteer students for approximately 8 months. The laboratory is equipped with various sensor technologies but for this evaluation we consider only the data recorded by the 45 motion sensors, as they already provide very thorough coverage and precision with low amounts of noise. Each sensor is mounted on the ceiling and points downwards to cover an area of approximately 1.5 × 1.5 m. The layout of the laboratory and the sensor placement are depicted in Figure 1.

Due to our specific interest in multi-target tracking, we focus on data for which both residents were present and active. The data was retroactively annotated with the identity of the resident based on video recordings. We chose the first 20 time frames for which the following conditions were met:

The time frame is at least 20 min long or contains at least 300 sensor events,

none of the residents remained in one room for the whole time frame, and

neither resident is sleeping (i.e., inactive) for more than 20% of the time.

The resulting time frames cover 6985 sensor events, where the tracks range from 10 to 338 events and last between 24 min and 8 h and 50 min.

4.2. Dataset 2: Field Trial

The flat equipped for the field trial is part of a retirement complex and inhabited by a couple (75 and 82 years) with separate bedrooms.

The floor plan of the flat with the location of the sensors and actuators is shown in Figure 2. The flat is about 80 m² in size. In total, we are using 38 sensors and actuators installed in the flat: 10 passive-infrared motion sensors (M), 11 contact sensors on doors and windows (D), 11 light switches (L) and 6 roller shutter actuators (R). The data contains 24 h of data or 4083 events spread across 230 tracks, during which both residents were present at all times. For privacy reasons, no video recording was possible. Therefore, the tracks were labelled by hand using a blueprint of the flat and the timestamps of sensor events.

5. Results

The tracking algorithm used in the data preprocessing step can be inaccurate and produce tracks that contain data from multiple residents (referred to here as “noise”). Therefore, we first test all procedures on a subset of the data which has been verified to be error-free. Additionally, we will test the procedures also on the whole dataset which contains tracking errors. That way, we can determine the correctness of an individual procedure and also determine its utility under realistic conditions.

5.1. Evaluation 1: Living Lab

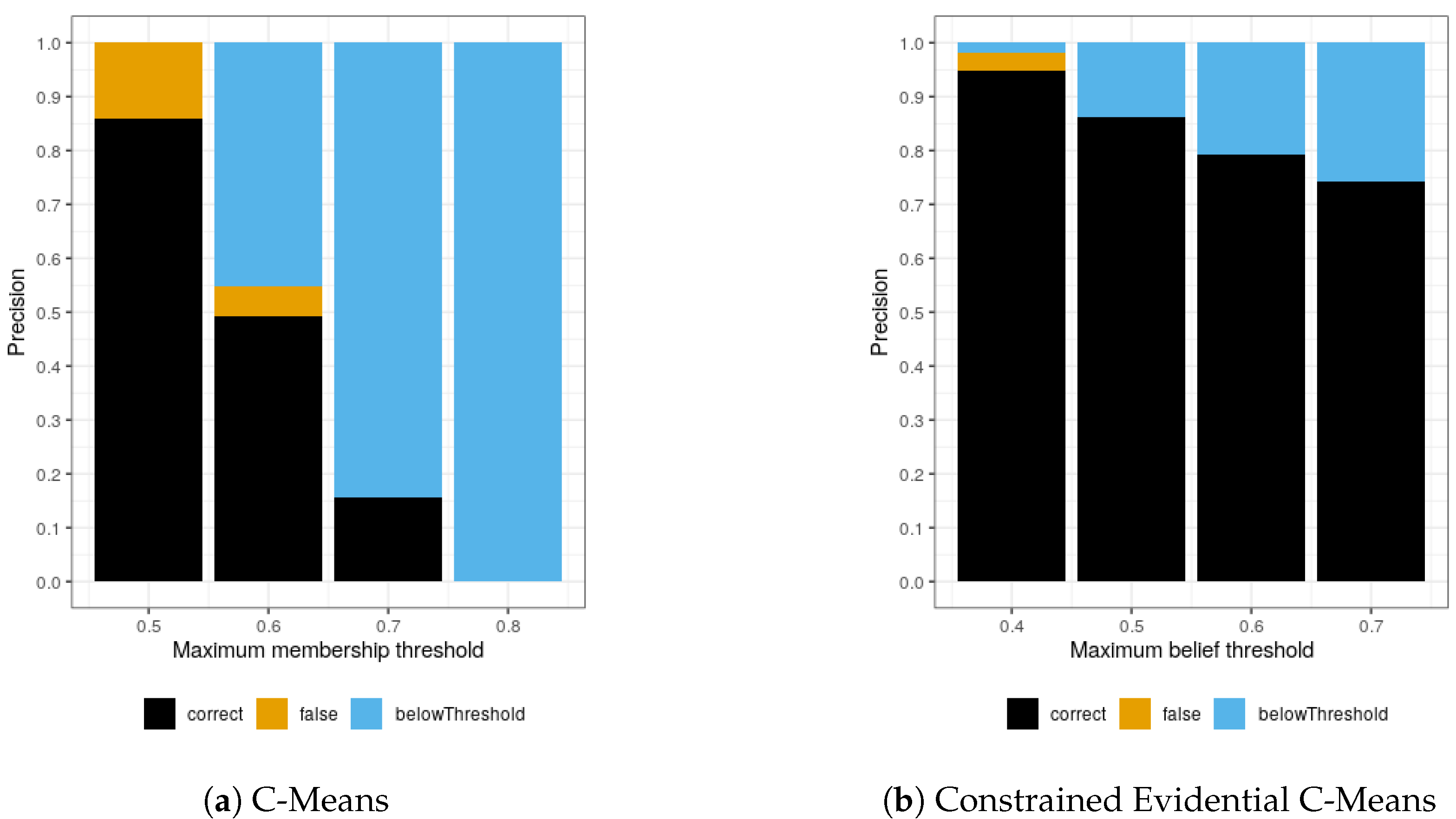

5.1.1. Fuzzy Clustering

After some preliminary tests, the fuzzifier value was set to 2. While the fuzzifier may be subject to further analysis, tests showed that a higher value merely stretched the results across larger filtering values, thus adding no benefit to the analysis but for more fine-grained control over the filtering values.

The first column of Figure 3a shows that 85.9% of tracks are correctly associated with one another using Fuzzy C-Means membership values (Rand Index = 0.858). At a filter value of 0.5, no filtering happens in a two-cluster scenario. When filtering data points where the maximum membership value (MMV) is less than 0.6, 45% of data is filtered but of the remaining data 89.7% is correctly associated. When filtering MMVs of less than 0.7, 84.5% of data is filtered but 100% of the remaining data is associated correctly.

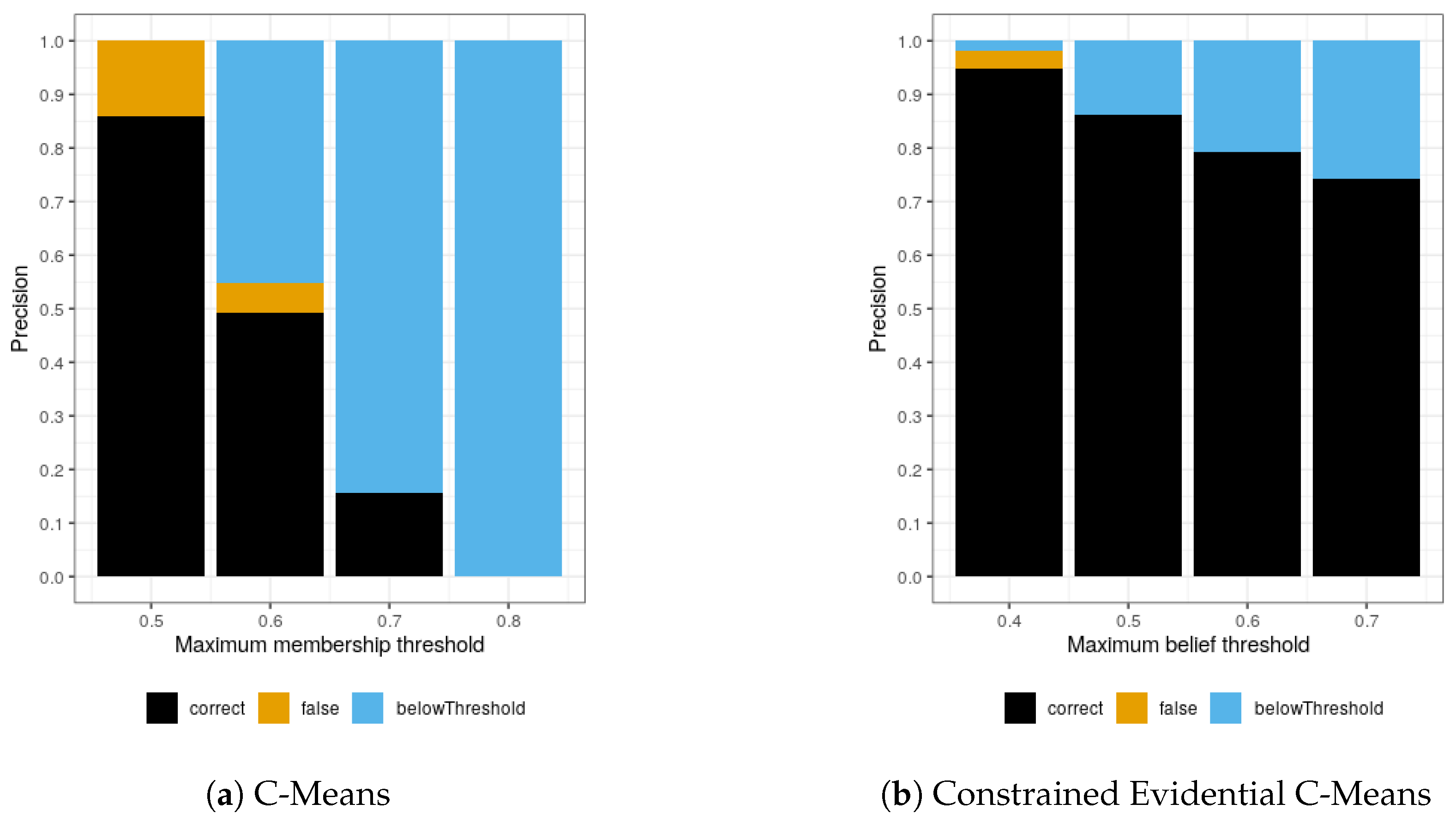

The performance drastically decreases with the introduction of noisy data. Figure 4a shows a correct association of 60.7% (Rand Index = 0.68). No data points have an MMV as high as 0.6.

5.1.2. Constrained Clustering

COP K-Means produced a 91.5% precise clustering (Rand Index = 0.92). Due to the nature of k-means clustering, no further filtering can be performed. The precision drops to 58.0% (Rand Index = 0.67) with the noisy dataset.
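The Rand Index is reported alongside the share of correctly associated tracks throughout this section. The text does not state which variant is used, so the sketch below shows only the standard pairwise definition as an assumption: the fraction of point pairs on which the ground truth and the clustering agree about "same cluster" versus "different cluster". The function name `rand_index` is illustrative, and the values it yields need not match the figures reported here.

```python
from itertools import combinations


def rand_index(truth, predicted):
    """Fraction of point pairs on which two labelings agree.

    A pair counts as an agreement if both labelings put the two points
    into the same cluster, or both put them into different clusters.
    """
    pairs = list(combinations(range(len(truth)), 2))
    agree = sum(
        (truth[i] == truth[j]) == (predicted[i] == predicted[j])
        for i, j in pairs
    )
    return agree / len(pairs)
```

The measure is invariant to the naming of clusters, which matters here because clustering separates residents without identifying them.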

5.1.3. Constrained Fuzzy Clustering

Figure 3b shows that filtering MMVs below 0.4 produces a 96.5% correct clustering and a Rand Index of 0.97. When filtering below 0.5 MMV or more, no errors remain. At this point (after removing outliers and filtering), we are left with 70% of the original data.

As with the fuzzy clustering, the precision drops significantly (error rate of 34.6%, Rand Index = 0.71) when the data is noisy (see Figure 4b).

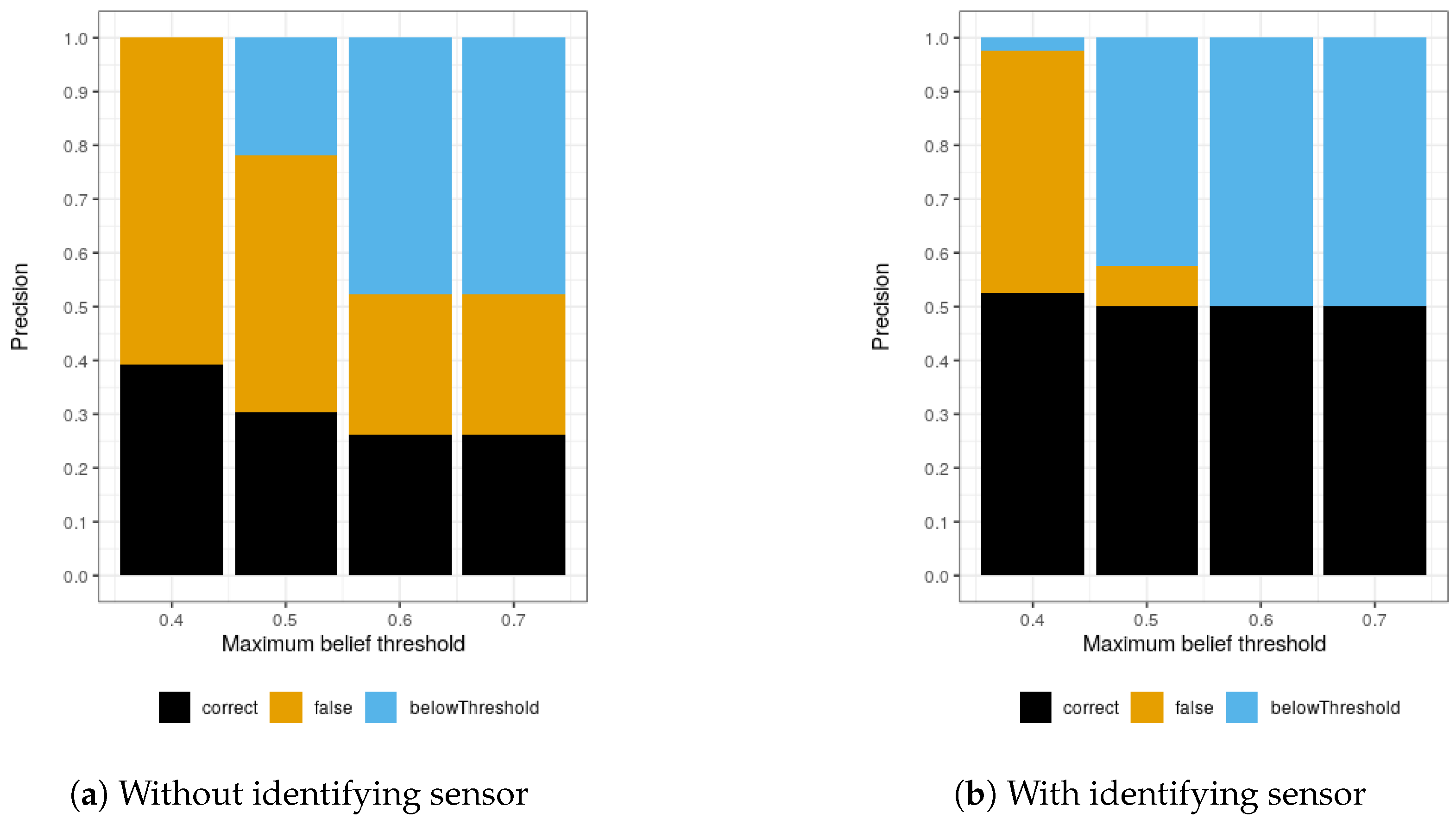

5.2. Evaluation 2: Identifying Sensor Areas

In reference to previous work [10] in which a single identifying sensor in a central location is added to the system, we introduce a second set of constraints. Based on our knowledge of residents having their own bedrooms, we associate one sensor with each resident: a sensor that we know is mostly triggered by one of the residents, in our case a motion sensor in the bedroom. These constraints rely on the fact that certain locations in a home can be attributed to the activity of a single individual with high probability, such as separate bedrooms or a home office. While this data cannot guarantee the identity of the individual, it might help us create more precise clusters, which then improve the clustering of all tracks, even those that do not pass those sensors. These constraints are added to the list of cannot-link constraints constructed from temporal overlap of tracks.
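One way to turn identifying sensor areas into cannot-link constraints is sketched below. The function name `identifying_cannot_links`, the sensor IDs and the decision to skip tracks that trigger both identifying sensors are assumptions made for this example; the source does not specify how such ambiguous tracks are handled.

```python
def identifying_cannot_links(track_sensors, id_sensors):
    """Cannot-link pairs between tracks that pass different identifying sensors.

    track_sensors: list of sets of sensor ids, one set per track
    id_sensors:    one (hypothetical) identifying sensor id per resident
    """
    owner = []
    for sensors in track_sensors:
        hits = [s for s in id_sensors if s in sensors]
        # Tracks touching no or several identifying sensors stay unassigned.
        owner.append(hits[0] if len(hits) == 1 else None)

    return [
        (i, j)
        for i in range(len(owner))
        for j in range(i + 1, len(owner))
        if owner[i] is not None
        and owner[j] is not None
        and owner[i] != owner[j]
    ]
```

The resulting pairs can simply be appended to the cannot-link list derived from temporal overlap, so that both sources of constraints enter the clustering in the same form.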

As described in Section 3, CECM does not consider the constraints axiomatic but instead adds an error metric to the cost function based on the number of violated constraints. Because of this, the “identifying sensors” do not have to be triggered by one resident only; it does not break the metric if someone else triggers the sensor occasionally.

Figure 5 shows that adding one identifying sensor region for each resident has a significant impact on the clustering precision. While the error rate remains high (12.5%) when filtering MMVs up to 0.5 (Rand Index = 0.85), 95.5% are correctly assigned above that. The error completely disappears when filtering maximum beliefs of 0.7 or less. At this point, we are left with 17% of the original data.

5.3. Evaluation 3: Field Trial

To confirm the results of the first evaluation we installed a similar but more realistic setup in the home of an elderly couple. Here, too, the residents have separate bedrooms. However, during the annotation process, it became apparent that, while the two residents sleep in their separate bedrooms, both inhabited either bedroom during the day. This suggests that an identification process using location will not work as efficiently here as in the first evaluation.

We ran CECM on the whole dataset twice, once using temporal overlap constraints only, once including the identifying sensors.

Figure 6a confirms the observation from the labelling procedure—location is a less identifying feature in this household than in the living lab, therefore the clustering precision is a lot lower. Even when filtering data points with a maximum cluster membership belief of less than 0.7, there are as many correctly as incorrectly clustered tracks.

Figure 6b shows that adding one identifying sensor region for each resident has a significant impact on the clustering precision. While the rate of false cluster assignments is still high when filtering low membership beliefs, 86.9% are correctly assigned when filtering MMVs of under 0.5 (Rand Index = 0.87). The error completely disappears when filtering maximum beliefs of 0.6 or less. At this point, we are left with 26% of the original data.

6. Discussion

Previous research on identification and separation of residents in smart homes has relied on body-worn sensors, biometric sensors or supervised learning techniques. To enable a less costly and time-consuming procedure, we explored several clustering techniques in order to separate activity data of multiple residents in an unsupervised manner.

Our experiments showed that, for the datasets used, location was the primary predictor for the identity of a resident. Day of week proved to be useless as a predictor in our datasets. Gait speed also proved difficult. First, there was great variation of gait speed in all datasets. Second, for n sensors there are, on average, 3n pairs of adjacent sensors. Thus, for our dataset with 45 sensors, gait speed alone adds 135 new features. This increased feature space requires more data to estimate accurately than the few days of data we use.

The first evaluation has shown that two residents can be well separated based on binary activity sensor data if they show significant differences in their whereabouts during presence. Both fuzzy and constrained fuzzy clustering successfully separated tracks of two residents based on location mostly. COP K-Means, a constrained k-Means algorithm, proved unsuitable for the task. First, the algorithm performs a “hard” clustering, which means it does not leave room for further filtering. Second, it treats the must-link and cannot-link constraints as irrevocable—if any two constraints are contradictory, the clustering will simply fail. Although contradicting constraints might be rare in our dataset, errors introduced by the tracking algorithm might cause this procedure to fail.

The evaluation further showed that noise in the tracking data (errors in data association during the tracking procedure) significantly reduces the precision of both clustering techniques. It is likely that false associations of sensor events to residents during the tracking pre-processing cause the clustering algorithms to dismiss location as an important clustering feature, thus causing poor results.

To compensate for the noise, we finally introduced an identifying sensor area for each resident to be used to introduce cannot-link constraints for constrained clustering. The CECM algorithm does not treat the constraints as axiomatic but rather as an extra parameter in the cost function, whereby each violated constraint increases the cost. As a result, we are able to correctly associate 96% and 100% of two datasets of two residents after filtering with a maximum cluster belief of less than 0.6. It should be noted, however, that the data used in the evaluation stems from two-person households. Those are—beside one-person households—the ones most commonly found in the target demographic of assistive technologies. It must be assumed that the clustering error rate would increase with increasing number of people present.

The approach suggested in this article does not solve the identification problem. Identification requires either a body-worn sensor or supervised learning. However, our results show that, at least for a subset of the data, it is sufficient to provide vague identifying information concerning a small area of the monitored space to correctly associate large amounts of data.

Future work will compare these results to ambient sensors combined with body-worn sensors in order to establish whether a clustering can be constrained using short periods of data tagged by a body-worn sensor. The good results using “identifying sensor” constraints must further be compared to the performance given an actual identifying sensor, whether this is a centrally located RFID reader or a biometric sensor.

Ultimately, the tracking and identification precision required from a smart home system depends on the application. To determine the changes in gait speed of an ambulant care patient, it is sufficient to identify them once a day while the cost of false identification is high. To enable personalised automation, such as light switching or heating, the resident must be permanently identified but the consequences of a wrong identification are less critical. In safety-critical applications, a biometric or body-worn solution is preferable. For all other cases, we have shown a way to solve the problem without additional hardware or complicated setup.