Abstract

With recent advances in remote sensing and computing, automatic and accurate road extraction is becoming feasible for practical use. Accurate extraction of road information from satellite data has become one of the most popular topics in both the remote sensing and transportation fields, with applications such as fast map updating and construction supervision. However, because remote sensing data usually carry a huge volume of information, an efficient method for refining that data is important in these applications. We apply a deep convolutional network to image segmentation as a solution for extracting road networks from high-resolution images. To take advantage of deep learning, we study methods of generating representative training and testing datasets, and develop semi-supervised learning techniques to enlarge the dataset. We also study extraction from satellite images affected by color distortion, in order to make the method robust across more application fields. GF-2 satellite data are used for the experiments, because GF-2 images may show optical distortion in small areas. The experiments show that the proposed solution successfully identifies road networks in complex situations, with a total accuracy of more than 80% in discriminable areas.

1. Introduction

In remote sensing applications, road network extraction plays an important role in traffic management, navigation, map updating, and city planning. Remote sensing images have the unique advantage of providing large-scale information, which makes them well suited to analyzing road networks efficiently [1,2]. Accurate road information from high spatial-resolution images has become urgently required in recent years, and great efforts have been made over the past few decades to extract and update road networks [3,4,5,6,7,8].

Road networks have a standard geometrical morphology, but it is generally not easy to extract them precisely from remote sensing images. The reason is that roads in real settings are usually partly covered by many kinds of ground objects, such as vehicles, trees, and shadows, so the shape and color of roads vary from area to area. Different methods have been developed in recent years. State-of-the-art methods can be divided into three typical categories: (1) outline or border extraction; (2) object detection/classification; and (3) image segmentation [9,10,11]. This paper focuses on the third category, in which the image scene is divided into road area and background area. If desired, the road areas can be further segmented, for example, according to the paving material. Segmentation aims to label each pixel with its probability of belonging to each class, which is challenging work. Recently, with the growth of computing power (GPUs) and the big data concept, deep convolutional neural networks (DCNNs) have been widely used in image studies [12,13], and DCNN methods are widely regarded as having revolutionized computer vision. On the other hand, in the current era of big data, a wide variety of images can easily be obtained from the Internet for free, which supports the use of DCNNs, but these images contain huge amounts of information, including both valuable and useless content for our specific research. Consequently, the first problem to be solved is how to effectively refine and enhance the datasets to make them more suitable for our study.

GaoFen-2 (GF-2) is one of China’s civilian optical satellites, with a spatial resolution better than 1 m. Owing to this resolution, GF-2 images can be used in various fields, including road updating. However, we chose GF-2 not for its high spatial resolution, but because it has an obvious problem in radiance calibration, which has been found in previous studies. We aim to develop road extraction methods that remain feasible in cases of color distortion, which may help to expand the application areas of GF-2 images.

Inspired by big data theory and deep learning techniques, this paper proposes a solution for extracting road networks from GF-2 images. First, a semi-supervised method is applied to generate labeled data: the benchmark roads are produced automatically and then manually revised according to the road design and construction specifications of the transportation industry. Data with color distortion are also treated as one of the road types. Following that, a DCNN model with deep layers is trained to learn the various road characteristics, so that it can distinguish multiple road types in complex situations.

2. The Proposed Methods

2.1. The Problem and Task Description

As mentioned above, we use GF-2 data in this paper. The GF-2 satellite, launched on 19 August 2014, is the first optical remote sensing satellite in China with a spatial resolution better than 1 m for panchromatic imagery and 4 m for multispectral imagery. Since it began imaging and transmitting data, GF-2 has supported various studies, such as civil land observation, land and resource monitoring, and map updating. Table 1 shows the detailed parameters of the GF-2 satellite [14].

Table 1.

Parameters of GF-2.

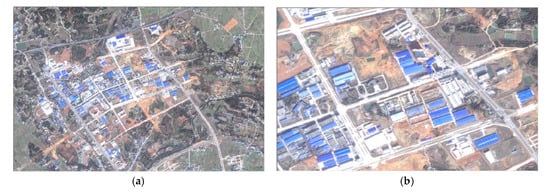

A disadvantage of GF-2 is that its images are sometimes affected by optical distortion. This is a common problem for many high-resolution optical satellites, but in GF-2 the radiometric distortion is particularly noticeable when identifying road networks. Figure 1 shows a typical example, in which the road surface shows abnormal spectral reflectivity. Roads paved with asphalt and with concrete normally appear in different colors. In Figure 1, however, we cannot differentiate them, because the color of the asphalt road has turned from dark grey to bright white. The problem is more likely to occur when nearby objects have certain reflectances that affect the satellite sensors.

Figure 1.

The optical distortion on road networks of a GF-2 image: (a) A village area with abnormally whitened road networks; (b) magnified detail of (a).

This paper handles this problem mainly in the following ways. First, inspired by the basic idea of big data, we use large-scale data with varied information, in order to cover as many different conditions as possible. Second, we use DCNN methods with very deep layers to learn abstract features from these conditions. Moreover, data enhancement and perturbations are applied to generate enough data and avoid overfitting.

2.2. Producing Dataset by Semi-Supervised Method

For deep learning algorithms, a pixel-level labelled dataset is crucial for model training, but for remote sensing data, manually drawing regions with clear boundaries is very time-consuming. With the development of web-map services, rich information about roads can now be obtained from the Internet for free. For example, OpenStreetMap (OSM), one of the most popular web maps, is widely used to provide road information, including the road level, road name, and road type. Combining OSM road vectors with the satellite images clearly reduces the time needed to produce road labels. More importantly, it also helps to improve the accuracy of labels produced by different people. The main workload is registering the road network and the satellite images to a common coordinate system, which can be done with typical geographic information system (GIS) software. After that, the label accuracy needs to be improved further, because in some areas the map may be outdated. One can use open social platforms or the APIs of free map servers to search for place and road names within the assigned coordinate ranges, which yields suspected locations. Finally, we inspect these locations and manually draw the final road regions. The paving material of the road surface can be set according to the road color. For a specific case like Figure 1, the road surface is labeled as an “other case”, in order to distinguish it from normal situations.
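As a rough illustration of how registered road vectors could be turned into pixel labels, the sketch below buffers a centerline by half the road width and marks the covered pixels. It assumes the road vector has already been converted to pixel coordinates; the function names, the buffering rule, and the nested-list mask are illustrative assumptions, not the procedure used in this paper.

```python
import math


def point_segment_distance(px, py, ax, ay, bx, by):
    """Distance from point (px, py) to the segment from (ax, ay) to (bx, by)."""
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        return math.hypot(px - ax, py - ay)
    # Projection parameter of the point onto the segment, clamped to [0, 1].
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def rasterize_centerline(polyline, width_px, height, width):
    """Return a height x width 0/1 mask of pixels within width_px / 2 of the polyline.

    polyline is a list of (x, y) pixel coordinates (hypothetical input format).
    """
    mask = [[0] * width for _ in range(height)]
    half = width_px / 2.0
    for (ax, ay), (bx, by) in zip(polyline, polyline[1:]):
        # Only scan the bounding box of the buffered segment.
        x0 = max(0, math.floor(min(ax, bx) - half))
        x1 = min(width - 1, math.ceil(max(ax, bx) + half))
        y0 = max(0, math.floor(min(ay, by) - half))
        y1 = min(height - 1, math.ceil(max(ay, by) + half))
        for y in range(y0, y1 + 1):
            for x in range(x0, x1 + 1):
                if point_segment_distance(x, y, ax, ay, bx, by) <= half:
                    mask[y][x] = 1
    return mask
```

A mask produced this way would still need the manual inspection described above, since the vector data may be outdated or misregistered.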

After labeling the images, preprocessing and data cleaning steps are required to produce the training datasets for building the model. Because an original image scene usually covers hundreds of square kilometers, the computer cannot handle such a large amount of data at one time. To generate the train/test datasets, each original satellite image is therefore first clipped into smaller images, with a size chosen according to the specific computer settings. The subsequent data cleaning removes the images that contain no or few road segments. This step is crucial because images containing roads usually make up only a small proportion of the data: if most training images have no roads in them, the DCNN will tend to classify most pixels as background.
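The clipping and cleaning steps can be sketched as follows. This is a minimal illustration that uses nested lists in place of image arrays; the function name and the `min_road_fraction` threshold are assumptions introduced here, since the text does not state how "few road segments" is measured.

```python
def clip_tiles(image, labels, tile_size=256, min_road_fraction=0.01):
    """Clip an image/label pair into square tiles and drop tiles with too few road pixels.

    image and labels are equally sized 2-D grids (lists of rows); labels holds
    1 for road pixels and 0 for background. Partial tiles at the right and
    bottom edges are skipped.
    """
    height, width = len(labels), len(labels[0])
    kept = []
    for top in range(0, height - tile_size + 1, tile_size):
        for left in range(0, width - tile_size + 1, tile_size):
            label_tile = [row[left:left + tile_size]
                          for row in labels[top:top + tile_size]]
            road_fraction = sum(map(sum, label_tile)) / (tile_size * tile_size)
            if road_fraction < min_road_fraction:
                continue  # data cleaning: too little road content to be useful
            image_tile = [row[left:left + tile_size]
                          for row in image[top:top + tile_size]]
            kept.append((top, left, image_tile, label_tile))
    return kept
```

Each kept entry records the tile origin, so that predictions on tiles can later be placed back into the full scene.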

2.3. Road Segmentation by DCNN

Different DCNN methods can be used, such as U-Net, DeepLab, and SegNet [15,16,17]. In this paper, we chose DeepLab, since it can be applied with very deep layers. To match such a deep network, data enhancement is a necessary process. Usually, image rotation and mirroring are applied to multiply the size of the training dataset. We can also randomly set the clipping origin used in Section 2.2; from the computer’s point of view, this procedure produces different object shapes.
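The rotation, mirroring, and random-origin ideas can be sketched as below. This is an illustration on nested lists rather than the training pipeline used in the experiments; the helper names are hypothetical, and restricting rotations to multiples of 90 degrees is an assumption made to keep tiles square.

```python
import random


def rotate90(tile):
    """Rotate a 2-D grid 90 degrees clockwise."""
    return [list(row) for row in zip(*tile[::-1])]


def mirror(tile):
    """Flip a 2-D grid left to right."""
    return [row[::-1] for row in tile]


def augment(tile):
    """Return 8 variants of a square tile: 4 rotations, each with and without mirroring."""
    variants = []
    current = [list(row) for row in tile]
    for _ in range(4):
        variants.append(current)
        variants.append(mirror(current))
        current = rotate90(current)
    return variants


def random_origin(tile_size, rng=random):
    """Pick a random (top, left) clipping offset in [0, tile_size) for each axis."""
    return rng.randrange(tile_size), rng.randrange(tile_size)
```

The same transform has to be applied to an image tile and to its label tile, otherwise the pixel labels no longer line up with the image.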

2.4. Post-Processing and Refining

Many researchers aim to find an end-to-end approach to image segmentation, but for practical road verification from complex satellite data, it is desirable to add a post-processing stage, for example, using morphological algorithms to join broken network structures and to filter out spots and burrs. To make the extracted road regions more realistic, the extracted road segments are processed by several morphological algorithms that fill holes, smooth edges, and connect road segments, finally yielding the coarse center lines of the road segments.
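Two of the clean-up operations mentioned above, hole filling and spot removal, can be illustrated with a simple connected-component pass. The text does not specify which morphological operators are used, so this sketch substitutes plain 4-connected region analysis; the function names and the `min_size` threshold are assumptions.

```python
from collections import deque

NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def _flood(grid, start, value, seen):
    """Collect the 4-connected region of cells equal to value that contains start."""
    height, width = len(grid), len(grid[0])
    region, queue = [start], deque([start])
    seen.add(start)
    while queue:
        y, x = queue.popleft()
        for dy, dx in NEIGHBORS:
            ny, nx = y + dy, x + dx
            if (0 <= ny < height and 0 <= nx < width
                    and (ny, nx) not in seen and grid[ny][nx] == value):
                seen.add((ny, nx))
                region.append((ny, nx))
                queue.append((ny, nx))
    return region


def clean_mask(mask, min_size=50):
    """Fill enclosed holes in a 0/1 road mask, then remove road blobs below min_size pixels."""
    height, width = len(mask), len(mask[0])
    out = [list(row) for row in mask]
    # 1) Fill holes: background regions that never touch the image border.
    seen = set()
    for y in range(height):
        for x in range(width):
            if out[y][x] == 0 and (y, x) not in seen:
                region = _flood(out, (y, x), 0, seen)
                if not any(r in (0, height - 1) or c in (0, width - 1)
                           for r, c in region):
                    for r, c in region:
                        out[r][c] = 1
    # 2) Remove spots: road regions smaller than min_size pixels.
    seen = set()
    for y in range(height):
        for x in range(width):
            if out[y][x] == 1 and (y, x) not in seen:
                region = _flood(out, (y, x), 1, seen)
                if len(region) < min_size:
                    for r, c in region:
                        out[r][c] = 0
    return out
```

Edge smoothing, gap bridging, and center line extraction are not covered by this sketch.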

The coarse center lines are then refined in four steps:

- (1) Order the initial center lines from a given start pixel, and divide the lines into several parts at the branch points;

- (2) For each segment of the lines, obtain a group of straight-line approximations at a given interval [18], which is 50 in this paper;

- (3) Apply a grouping strategy, inspired by [19], to improve the results iteratively. Put simply, for every three neighboring line segments, if the segments share the same direction up to a tolerance τ, they are regarded as the same line, and a new line approximation is made for all the points in these segments;

- (4) Iteratively check the current lines through (3) until no further modification is made.
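Steps (2) to (4) can be sketched as follows for a single ordered center line. This is a simplified illustration under stated assumptions: each straight-line approximation is taken as the chord between the first and last point of its span (the fitting method of [18] is not reproduced), the interval is counted in points, and the default tolerance of 5 degrees for τ is a placeholder value.

```python
import math


def split_polyline(points, interval=50):
    """Step (2): cover an ordered point chain with chords spanning `interval` points each.

    Each segment is a (start_index, end_index) pair into points.
    """
    last = len(points) - 1
    return [(start, min(start + interval, last))
            for start in range(0, last, interval)]


def direction(points, segment):
    """Undirected chord angle of a segment, in [0, pi)."""
    (x0, y0), (x1, y1) = points[segment[0]], points[segment[1]]
    return math.atan2(y1 - y0, x1 - x0) % math.pi


def angle_diff(a, b):
    """Smallest difference between two undirected angles."""
    d = abs(a - b) % math.pi
    return min(d, math.pi - d)


def merge_pass(points, segments, tau):
    """Step (3): merge each run of three neighbors that share a direction within tau."""
    merged, i = [], 0
    while i < len(segments):
        if i + 2 < len(segments):
            angles = [direction(points, s) for s in segments[i:i + 3]]
            if all(angle_diff(angles[0], a) <= tau for a in angles[1:]):
                # New approximation over all points of the three segments.
                merged.append((segments[i][0], segments[i + 2][1]))
                i += 3
                continue
        merged.append(segments[i])
        i += 1
    return merged


def simplify(points, interval=50, tau=math.radians(5)):
    """Step (4): repeat the merge pass until the set of lines stops changing."""
    segments = split_polyline(points, interval)
    while True:
        merged = merge_pass(points, segments, tau)
        if len(merged) == len(segments):
            return [(points[a], points[b]) for a, b in merged]
        segments = merged
```

For an L-shaped chain of 301 points, this returns two lines that meet at the corner, since only runs of three like-directed neighbors are merged.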

3. Results and Discussion

In our experiments, GF-2 data from different locations and seasons are used. The multispectral bands are fused with the panchromatic band to produce images with a spatial resolution of 1 m. Each original image scene is divided into 256 × 256 pixel images, producing thousands of images for training and testing. Each label consists of three parts: centerline, width, and material. The material classes are asphalt, concrete, and others. The label details have been checked manually to guarantee the authenticity of the data.

Figure 2 shows the results for a rural area. There is optical distortion at this location: the roof in the center of the scene is excessively bright, while the vegetation in the top right corner has turned grey. Nevertheless, as shown in Figure 2b, the proposed method extracts the road segments successfully.

Figure 2.

Road extraction result: (a) Input image; (b) Output results.

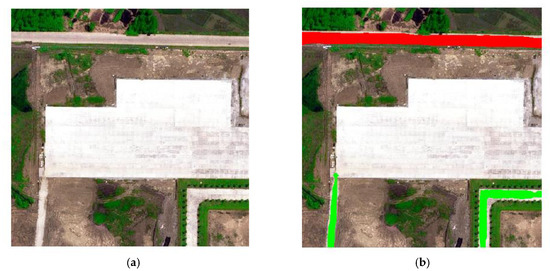

Figure 3 gives the classification results when different materials appear in the same image. There are three road segments with two different pavements, asphalt and concrete. Asphalt is shown in red and concrete in green. There is a parking lot in the center of this image, paved with concrete. The parking lot is not extracted, implying that the proposed method judges objects not only by color but also by shape. In this image, the asphalt road is not uniformly dark, owing to the optical distortion, but the road segment is still extracted correctly.

Figure 3.

Road extraction result: (a) Input image; (b) Output results.

4. Discussion

There is still much further work needed for improvement: (1) the method should be tested continually on various areas before wide usage; (2) accurate and smooth approximations for curved lines should be studied further.

5. Conclusions

This paper applies DCNN to road detection in remote sensing images. A semi-supervised labelling method is studied to make the approach applicable to a wide range of areas. The DCNN is used as a tool for feature extraction, and precise road boundaries are obtained by post-processing, so that the final results are more consistent with the real situation. That is, a much straighter and smoother road region can be provided, which is a further step towards practical application. According to the experiments, a total correctness of approximately 80% can be obtained with the proposed method.

Author Contributions

W.X. and Y.-Z.Z. conceived this work, conducted the analyses, and wrote the paper; the other authors provided theoretical guidance for this work and the paper. All authors have read and agreed to the published version of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ziems, M.; Gerke, M.; Heipke, C. Automatic road extraction from remote sensing imagery incorporating prior information and colour segmentation. In Proceedings of the PIA07 – Photogrammetric Image Analysis, Munich, Germany, 19–21 September 2007.

- Wang, W.; Nan, Y.; Zhang, Y.; Wang, F.; Cao, T.; Eklund, P. A review of road extraction from remote sensing images. J. Traffic Transp. Eng. 2016, 3, 271–282.

- Stoica, R.; Descombes, X.; Zerubia, J. A Gibbs Point Process for Road Extraction from Remotely Sensed Images. Int. J. Comput. Vis. 2004, 57, 121–136.

- Baumgartner, A.; Steger, C.; Mayer, H.; Eckstein, W.; Ebner, H. Automatic Road Extraction Based on Multi-Scale, Grouping, and Context. Photogramm. Eng. Remote Sens. 1999, 65, 777–785.

- Gruen, A.; Li, H. Road extraction from aerial and satellite images by dynamic programming. ISPRS J. Photogramm. Remote Sens. 1995, 50, 11–20.

- Heipke, C.; Mayer, H.; Wiedemann, C.; Jamet, O. Evaluation of Automatic Road Extraction. Int. Arch. Photogramm. Remote Sens. 1997, 32, 47–56.

- Mena, J.B. State of the art on automatic road extraction for GIS update: A novel classification. Pattern Recognit. Lett. 2003, 24, 3037–3058.

- Mena, J.B.; Malpica, J.A. An automatic method for road extraction in rural and semi-urban areas starting from high resolution satellite imagery. Pattern Recognit. Lett. 2005, 26, 1201–1220.

- Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. 2016, 5967–5976.

- Rathore, M.M.U.; Paul, A.; Ahmad, A.; Chen, B.-W.; Huang, B.; Ji, W. Real-Time Big Data Analytical Architecture for Remote Sensing Application. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 8, 4610–4621.

- Chi, M.; Plaza, A.; Benediktsson, J.A.; Sun, Z.; Shen, J.; Zhu, Y. Big Data for Remote Sensing: Challenges and Opportunities. Proc. IEEE 2016, 104, 2207–2219.

- Mnih, V.; Hinton, G.E. Learning to Detect Roads in High-Resolution Aerial Images. In Computer Vision ECCV 2010. Number 6316 in Lecture Notes in Computer Science; Daniilidis, K., Maragos, P., Paragios, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 210–223.

- Li, P.; Zhang, Y.; Wang, C.; Li, J.; Cheng, M.; Luo, L.; Yu, Y. Road network extraction via deep learning and line integral convolution. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1599–1602.

- Pan, T. Technical Characteristics of GaoFen-2 Satellite; China Aerospace: Beijing, China, 2015; pp. 3–9.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention – MICCAI; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 40, 834–848.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Wang, N.; Wu, H.; Nerry, F.; Li, C.; Li, Z.-L. Temperature and Emissivity Retrievals From Hyperspectral Thermal Infrared Data Using Linear Spectral Emissivity Constraint. IEEE Trans. Geosci. Remote Sens. 2011, 49, 1291–1303.

- Von Gioi, R.G.; Jakubowicz, J.; Morel, J.; Randall, G. LSD: A Line Segment Detector. Image Process. Line 2012, 2, 35–55.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).