Real-time Recognition of Interleaved Activities Based on Ensemble Classifier of Long Short-Term Memory with Fuzzy Temporal Windows †

Abstract

1. Introduction

2. Methodology

- A fuzzy temporal representation of long-term and short-term activations, which define temporal sequences.

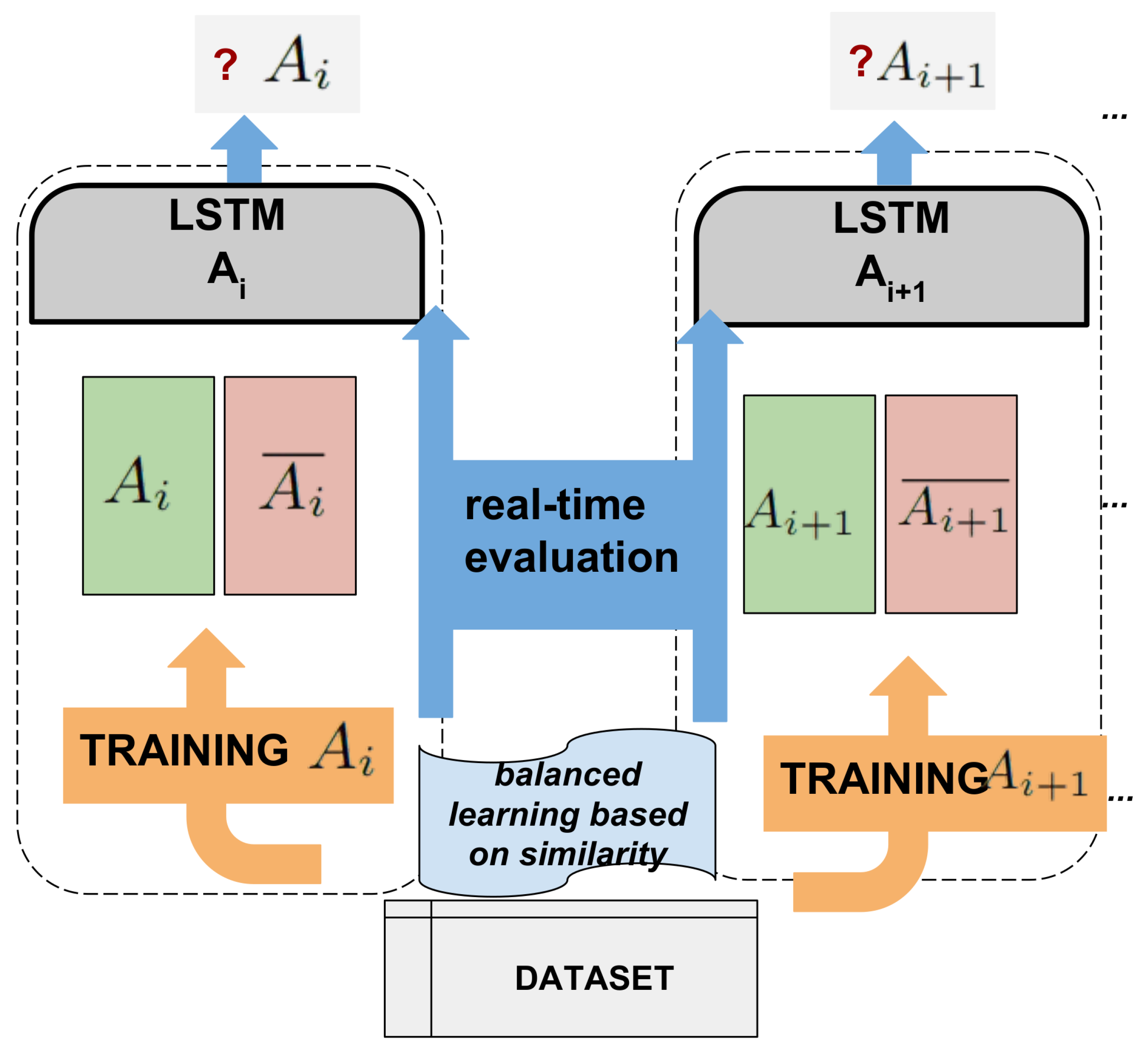

- An ensemble of activity-based classifiers, each built on a suitable sequence classifier: Long Short-Term Memory (LSTM) networks [19].

- Balanced learning for each activity-based classifier, to avoid the class-imbalance problem that affects daily activity datasets [12,13]. The balancing is optimized by the similarity relation between activities, which: (i) selects the appropriate samples from the training dataset according to their similarity with the activity to learn; and (ii) filters the relevant sensors to take into account in the learning process.

2.1. Representation of Binary Sensors and Activities

2.2. Segmentation of Dataset in Time-Slots

2.3. Sensor Features Defined by Fuzzy Temporal Windows

2.4. Sequence Features of FTW

2.5. Ensemble of Classifiers for Activities

2.5.1. Balancing Learning With Similarity Relation Between Activities and Filtering of Relevant Sensors

- A fixed percentage of samples corresponding to the activity to learn.

- A fixed percentage of samples corresponding to no activity (Idle).

- A dynamic percentage of samples from all the other activities in the balanced training dataset of the activity, calculated by weighting the normalized similarity degree of each activity with the percentage assigned to the other activities.
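The balancing rule can be illustrated with a minimal Python sketch. It assumes that the share left after the target and Idle quotas is split among the remaining activities in proportion to their similarity with the target, normalized to sum to one. The function name, activity names, similarity values and the two fixed percentages are hypothetical placeholders, not the settings used in the paper; only the default of 5000 training samples comes from Section 3.

```python
from typing import Dict


def balanced_sample_counts(
    target: str,
    similarity: Dict[str, float],
    n_samples: int = 5000,
    p_target: float = 0.4,  # placeholder, not the paper's value
    p_idle: float = 0.2,  # placeholder, not the paper's value
) -> Dict[str, int]:
    """Samples to draw per activity for the classifier of `target` (hypothetical helper).

    Assumption: the remaining share (1 - p_target - p_idle) is distributed among the
    other activities in proportion to their normalized similarity with `target`.
    """
    if not 0.0 <= p_target + p_idle <= 1.0:
        raise ValueError("p_target + p_idle must lie in [0, 1]")
    p_others = 1.0 - p_target - p_idle
    # Keep only non-target activities; Idle has its own fixed quota.
    others = {a: s for a, s in similarity.items() if a not in (target, "Idle")}
    total = sum(others.values())
    counts = {target: round(n_samples * p_target), "Idle": round(n_samples * p_idle)}
    for activity, sim in others.items():
        # Normalized similarity degree; fall back to a uniform split if all are zero.
        weight = sim / total if total > 0 else 1.0 / len(others)
        counts[activity] = round(n_samples * p_others * weight)
    return counts


if __name__ == "__main__":
    print(balanced_sample_counts("Eat", {"Cook": 0.6, "WashDishes": 0.3, "Sleep": 0.1}))
```

Activities more similar to the target receive more samples, which concentrates the training set on the cases the classifier is most likely to confuse. Because each count is rounded independently, the total can differ from `n_samples` by a few units for other inputs.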

3. Experimental Setup

- Number of FTWs=.

- Incremental FTWs defined by the Fibonacci sequence [22].

- For balancing the training dataset of each activity:
    - Number of training samples = 5000.
    - Percentage of samples from the target activity.
    - Percentage of samples from the idle activity.
    - Percentage of samples corresponding to the non-target activities.

- For each LSTM activity-based classifier: learning rate = 0.003, number of neurons = 64, number of layers = 3.
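A fuzzy temporal window can be sketched as a trapezoidal membership function over the time elapsed since a sensor activation, measured in time-slots. The Python sketch below assumes a hypothetical layout in which window k takes four consecutive edges from the sequence 0, 0, 1, 2, 3, 5, 8, ..., so that windows further in the past grow with Fibonacci increments and cover longer-term activations. It also assumes that the degree of a window is the maximum membership over the sensor's past activations. The function names and both choices are illustrative assumptions, not the authors' exact definitions.

```python
from typing import List, Sequence, Tuple

# A trapezoid (a, b, c, d) over elapsed time-slots: membership rises on [a, b],
# equals 1 on [b, c] and falls to 0 on [c, d].
Trapezoid = Tuple[int, int, int, int]


def trapezoid_membership(x: float, w: Trapezoid) -> float:
    """Membership degree of elapsed time x in the trapezoidal window w."""
    a, b, c, d = w
    if b <= x <= c:
        return 1.0
    if a < x < b:
        return (x - a) / (b - a)
    if c < x < d:
        return (d - x) / (d - c)
    return 0.0


def fibonacci_ftws(n_windows: int) -> List[Trapezoid]:
    """Hypothetical layout: window k uses edges k..k+3 of 0, 0, 1, 2, 3, 5, 8, ...

    After the seed values 0, 0, 1, 2, each edge is the sum of the two previous
    ones, so 1, 2, 3, 5, 8, ... follow the Fibonacci sequence.
    """
    edges = [0, 0, 1, 2]
    while len(edges) < n_windows + 3:
        edges.append(edges[-1] + edges[-2])
    return [(edges[k], edges[k + 1], edges[k + 2], edges[k + 3]) for k in range(n_windows)]


def ftw_features(active_slots: Sequence[int], now: int, windows: Sequence[Trapezoid]) -> List[float]:
    """One degree per window for a single sensor at time-slot `now`.

    Assumption: a window's degree is the maximum membership of the elapsed
    times (now - slot) of the sensor's past activations.
    """
    elapsed = [now - t for t in active_slots if t <= now]
    return [max((trapezoid_membership(e, w) for e in elapsed), default=0.0) for w in windows]


if __name__ == "__main__":
    windows = fibonacci_ftws(4)
    print(windows)  # [(0, 0, 1, 2), (0, 1, 2, 3), (1, 2, 3, 5), (2, 3, 5, 8)]
    # Sensor active 6 and 1 time-slots before the current slot 10.
    print(ftw_features([4, 9], now=10, windows=windows))
```

For this example the four windows give degrees of 1, 1, 0 and about 0.67: the two short-term windows capture the recent activation, while only the widest window still reflects the older one.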

- F1-coverage (F1-sc), which reflects the balance between precision and recall computed over the predicted and ground-truth time-slots. Although this metric is well known in activity recognition [23], it has a key shortcoming for time-interval analysis: false positives of an activity that lie far from any of its activation intervals are counted the same as false positives close to the end of an activity. The latter are common at the end of activities, more so than in interleaved activities.

- F1-interval-intersection (F1-ii), which evaluates the time intervals of each activity based on: (i) precision, computed over the predicted time intervals that intersect a ground-truth time interval; and (ii) recall, computed over the ground-truth time intervals that intersect a predicted time interval.
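Both metrics can be sketched in Python for a single activity, given per-slot 0/1 labels. Following the description above, F1-ii counts a predicted interval as correct when it intersects a ground-truth interval, and a ground-truth interval as recovered when some predicted interval intersects it. The `margin` parameter is an assumption about what the "slot margin" in the results tables means: a tolerance of that many time-slots applied when matching slots or intervals. All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Interval = Tuple[int, int]  # inclusive (start, end) time-slot indices


def to_intervals(labels: Sequence[int]) -> List[Interval]:
    """Collapse per-slot 0/1 labels of one activity into maximal active runs."""
    intervals: List[Interval] = []
    start = None
    for i, active in enumerate(labels):
        if active and start is None:
            start = i
        elif not active and start is not None:
            intervals.append((start, i - 1))
            start = None
    if start is not None:
        intervals.append((start, len(labels) - 1))
    return intervals


def _f1(precision: float, recall: float) -> float:
    return 2 * precision * recall / (precision + recall) if precision + recall > 0 else 0.0


def f1_interval_intersection(pred: Sequence[int], truth: Sequence[int], margin: int = 0) -> float:
    """F1-ii: interval-level precision and recall based on intersection."""
    p_int, t_int = to_intervals(pred), to_intervals(truth)

    def intersects(x: Interval, y: Interval) -> bool:
        # Widen x by `margin` slots on each side (assumed meaning of "slot margin").
        return x[0] - margin <= y[1] and y[0] <= x[1] + margin

    precision = sum(any(intersects(p, t) for t in t_int) for p in p_int) / len(p_int) if p_int else 0.0
    recall = sum(any(intersects(t, p) for p in p_int) for t in t_int) / len(t_int) if t_int else 0.0
    return _f1(precision, recall)


def f1_slot(pred: Sequence[int], truth: Sequence[int], margin: int = 0) -> float:
    """Slot-level F1: a positive slot matches if the other sequence is positive within `margin` slots."""
    if len(pred) != len(truth):
        raise ValueError("pred and truth must have the same length")
    n = len(truth)

    def near(seq: Sequence[int], i: int) -> bool:
        return any(seq[j] for j in range(max(0, i - margin), min(n, i + margin + 1)))

    pos_pred = [i for i in range(n) if pred[i]]
    pos_true = [i for i in range(n) if truth[i]]
    precision = sum(near(truth, i) for i in pos_pred) / len(pos_pred) if pos_pred else 0.0
    recall = sum(near(pred, i) for i in pos_true) / len(pos_true) if pos_true else 0.0
    return _f1(precision, recall)


if __name__ == "__main__":
    truth = [0, 0, 1, 1, 1, 0, 0, 0, 1, 1, 0]
    pred = [0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 1]
    for m in (0, 1):
        print(m, f1_interval_intersection(pred, truth, m), f1_slot(pred, truth, m))
```

On this toy sequence the second predicted interval starts one slot after the second ground-truth interval ends, so it only counts as a hit once a one-slot margin is allowed, which raises F1-ii from 0.5 to 1.0 while the slot-level score rises less.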

4. Results

4.1. Discussion

5. Conclusions and Ongoing Works

Author Contributions

Acknowledgments

Conflicts of Interest

Abbreviations

| FTW | Fuzzy Temporal Window |
|---|---|
| LSTM | Long Short-Term Memory |

References

- Kon, B.; Lam, A.; Chan, J. Evolution of Smart Homes for the Elderly. In Proceedings of the 26th International Conference on World Wide Web Companion, Perth, Australia, 3–7 April 2017; pp. 1095–1101.

- Bilodeau, J.; Fortin-Simard, D.; Gaboury, S.; Bouchard, B.; Bouzouane, A. Assistance in Smart Homes: Combining Passive RFID Localization and Load Signatures of Electrical Devices. In Proceedings of the 2014 IEEE International Conference on Bioinformatics and Biomedicine, Belfast, UK, 2–5 November 2014; pp. 19–26.

- Ordonez, F.; Roggen, D. Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors 2016, 16, 115–139.

- Okeyo, G.; Chen, L.; Wang, H.; Sterritt, R. Dynamic sensor data segmentation for real-time knowledge-driven activity recognition. Pervasive Mob. Comput. 2014, 10, 115–172.

- Orr, C.; Nugent, C.; Wang, H.; Zheng, H. A Multi-Agent Approach to Facilitate the Identification of Interleaved Activities. DH’18. In Proceedings of the 2018 International Conference on Digital Health, Dublin, Ireland, 14–15 June 2018; pp. 126–130.

- Krishnan, N.C.; Cook, D.J. Activity recognition on streaming sensor data. Pervasive Mob. Comput. 2014, 10, 138–154.

- Singla, G.; Cook, D.J.; Schmitter-Edgecombe, M. Tracking activities in complex settings using smart environment technologies. Int. J. Biosci. Psychiatry Technol. 2009, 1, 25.

- Yan, S.; Liao, Y.; Feng, X.; Liu, Y. Real time activity recognition on streaming sensor data for smart environments. In Proceedings of the International Conference on Progress in Informatics and Computing (PIC), Shanghai, China, 23–25 December 2016; pp. 51–55.

- Cicirelli, F.; Fortino, G.; Giordano, A.; Guerrieri, A.; Spezzano, G.; Vinci, A. On the Design of Smart Homes: A Framework for Activity Recognition in Home Environment. J. Med. Syst. 2016, 40, 200.

- Banos, O.; Galvez, J.M.; Damas, M.; Pomares, H.; Rojas, I. Window size impact in human activity recognition. Sensors 2014, 14, 6474–6499.

- Espinilla, M.; Medina, J.; Hallberg, J.; Nugent, C. A new approach based on temporal sub-windows for online sensor-based activity recognition. J. Ambient Intell. Hum. Comput. 2018, 1–13.

- Ordonez, F.J.; de Toledo, P.; Sanchis, A. Activity recognition using hybrid generative discriminative models on home environments using binary sensors. Sensors 2013, 13, 5460–5477.

- Van Kasteren, T.; Noulas, A.; Englebienne, G.; Krose, B. Accurate activity recognition in a home setting. In Proceedings of the 10th International Conference on Ubiquitous Computing, Seoul, Korea, 21–24 September 2008; pp. 1–9.

- Chawla, N.V.; Bowyer, K.W.; Hall, L.O.; Kegelmeyer, W.P. SMOTE: Synthetic minority over-sampling technique. J. Artif. Intel. Res. 2002, 16, 321–357.

- Fortin-Simard, D.; Bilodeau, J.; Gaboury, S.; Bouchard, B.; Bouzouane, A. Human Activity Recognition in Smart Homes: Combining Passive RFID and Load Signatures of Electrical Devices. In Proceedings of the IEEE Symposium on Intelligent Agents (IA), Orlando, FL, USA, 9–12 December 2014; pp. 22–29.

- Ye, C.; Sun, Y.; Wang, S.; Yan, H.; Mehmood, R. ERER: An event-driven approach for real-time activity recognition. In Proceedings of the 2015 International Conference on Identification, Information, and Knowledge in the Internet of Things, Beijing, China, 22–23 October 2015; pp. 288–293.

- Riboni, D.; Sztyler, T.; Civitarese, G.; Stuckenschmidt, H. Unsupervised recognition of interleaved activities of daily living through ontological and probabilistic reasoning. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 1–12.

- Medina, J.; Zhang, S.; Nugent, C.; Espinilla, M. Ensemble classifier of Long Short-Term Memory with Fuzzy Temporal Windows on binary sensors for Activity Recognition. Expert Syst. Appl. 2018, 114, 441–453.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Medina, J.; Espinilla, M.; Nugent, C. Real-time fuzzy linguistic analysis of anomalies from medical monitoring devices on data streams. In Proceedings of the 10th EAI International Conference on Pervasive Computing Technologies for Healthcare, Cancun, Mexico, 16–19 May 2016; ICST (Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering): Gent, Belgium, 2016; pp. 300–303.

- Medina, J.; Espinilla, M.; Zafra, D.; Martínez, L.; Nugent, C. Fuzzy Fog Computing: A Linguistic Approach for Knowledge Inference in Wearable Devices. In Proceedings of the International Conference on Ubiquitous Computing and Ambient Intelligence, Philadelphia, PA, USA, 7–10 November 2017; Springer: Cham, Switzerland, 2017; pp. 473–485.

- Stakhov, A. The golden section and modern harmony mathematics. In Applications of Fibonacci Numbers; Springer: Dordrecht, The Netherlands, 1998; pp. 393–399.

- Van Kasteren, T.L.M.; Englebienne, G.; Krose, B.J. An activity monitoring system for elderly care using generative and discriminative models. Pers. Ubiquitous Comput. 2010, 14, 489–498.

| Margin | Time-slot | F1-ii | | | F1-sc | | |
|---|---|---|---|---|---|---|---|
| 0 slot margin | 30 s | 84.54 | 80.75 | 79.13 | 70.95 | 70.78 | 66.98 |
| | 60 s | 85.96 | 85.87 | 86.35 | 73.03 | 73.89 | 72.52 |
| | 90 s | 81.50 | 87.60 | 90.47 | 68.52 | 75.99 | 75.48 |
| 1 slot margin | 30 s | 88.30 | 85.46 | 87.34 | 73.97 | 72.91 | 69.56 |
| | 60 s | 88.09 | 89.26 | 91.05 | 74.31 | 77.16 | 74.77 |
| | 90 s | 84.23 | 91.96 | 95.61 | 70.86 | 77.94 | 77.58 |

| F1-ii | Time-slot = 30 s | | | Time-slot = 60 s | | | Time-slot = 90 s | | |
|---|---|---|---|---|---|---|---|---|---|
| t1 | 90.06 | 86.17 | 92.83 | 91.16 | 94.87 | 98.29 | 91.19 | 92.43 | 100.00 |
| t2 | 91.35 | 84.26 | 90.40 | 85.69 | 87.37 | 90.41 | 85.61 | 93.39 | 93.37 |
| t3 | 82.58 | 85.05 | 88.95 | 86.21 | 90.87 | 90.23 | 78.29 | 93.74 | 91.04 |
| t4 | 82.00 | 71.05 | 71.67 | 81.28 | 77.74 | 75.65 | 75.65 | 86.04 | 86.72 |
| t5 | 91.78 | 89.07 | 89.92 | 95.08 | 95.85 | 95.07 | 87.07 | 91.84 | 96.58 |
| t6 | 88.91 | 92.32 | 83.02 | 90.84 | 96.27 | 95.62 | 90.18 | 93.93 | 100.00 |
| t7 | 93.30 | 85.91 | 89.26 | 85.28 | 87.64 | 89.50 | 85.15 | 90.36 | 98.15 |
| t8 | 86.45 | 89.83 | 92.72 | 89.19 | 83.44 | 93.59 | 80.68 | 94.00 | 98.99 |
| t9 | 88.30 | 85.46 | 87.34 | 88.09 | 89.26 | 91.05 | 84.23 | 91.96 | 95.61 |

| F1-sc | Time-slot = 30 s | | | Time-slot = 60 s | | | Time-slot = 90 s | | |
|---|---|---|---|---|---|---|---|---|---|
| t1 | 81.50 | 76.64 | 77.82 | 80.87 | 84.60 | 80.94 | 75.17 | 81.12 | 81.40 |
| t2 | 76.74 | 67.88 | 64.55 | 73.99 | 75.72 | 74.56 | 75.85 | 76.89 | 72.87 |
| t3 | 65.43 | 68.03 | 66.28 | 67.85 | 77.21 | 70.75 | 68.94 | 80.11 | 79.23 |
| t4 | 63.07 | 50.49 | 50.20 | 60.47 | 61.56 | 46.15 | 58.42 | 61.73 | 46.15 |
| t5 | 85.96 | 88.73 | 85.97 | 85.51 | 86.01 | 84.23 | 76.99 | 86.90 | 87.48 |
| t6 | 70.67 | 75.63 | 58.03 | 75.28 | 80.41 | 74.60 | 67.11 | 73.99 | 76.96 |
| t7 | 77.40 | 72.31 | 68.66 | 75.49 | 75.84 | 76.55 | 76.21 | 75.67 | 83.51 |
| t8 | 70.99 | 83.56 | 84.94 | 75.03 | 75.93 | 90.39 | 68.15 | 87.13 | 92.99 |
| t9 | 73.97 | 72.91 | 69.56 | 74.31 | 77.16 | 74.77 | 70.86 | 77.94 | 77.58 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Quero, J.M.; Orr, C.; Zang, S.; Nugent, C.; Salguero, A.; Espinilla, M. Real-time Recognition of Interleaved Activities Based on Ensemble Classifier of Long Short-Term Memory with Fuzzy Temporal Windows. Proceedings 2018, 2, 1225. https://doi.org/10.3390/proceedings2191225
