Meaning Generation for Animals, Humans and Artificial Agents. An Evolutionary Perspective on the Philosophy of Information †

Abstract

1. Introduction

2. Meaning Generation in Animals, Humans and Artificial Agents. The Meaning Generator System

3. Philosophy of Information and the MGS

- Meaningfulness. The MGS considers both meaningful and meaningless information. PI treats semantic information as well-formed, meaningful and truthful data [5].

- Meaning generation. The function of meaning generation is at the core of the MGS approach. PI does not prioritize that aspect in semantic information.

- Evolution. The MGS is an evolutionary approach to meaning generation. Current PI does not explicitly consider evolution as an active part of semantic information.

- Veridicality thesis. PI considers that semantic information has to encapsulate truthfulness. The MGS does not use truth as a component of meaningful information.

- Ontological status of information. PI addresses that question in terms of ontological category. The MGS proposes an option with potential local constraints in a pre-biotic world.

Conflicts of Interest

References

- Menant, C. Information and meaning. Entropy 2003, 5, 193–204. Available online: http://philpapers.org/rec/MENIAM-2 (accessed on 18 July 2017).

- Menant, C. Computation on Information, Meaning and Representations. Proposal for an Evolutionary Approach. In Information and Computation. Essays on Scientific and Philosophical Understanding of Foundations of Information and Computation; Dodig-Crnkovic, G., Burgin, M., Eds.; World Scientific: Singapore, 2011; pp. 255–286. Available online: https://philpapers.org/rec/MENCOI (accessed on 18 July 2017).

- Menant, C. Turing Test, Chinese Room Argument, Symbol Grounding Problem. Meanings in Artificial Agents. In APA Newsletter Fall 2013, 2013. Available online: https://philpapers.org/rec/MENTTC-2 (accessed on 18 July 2017).

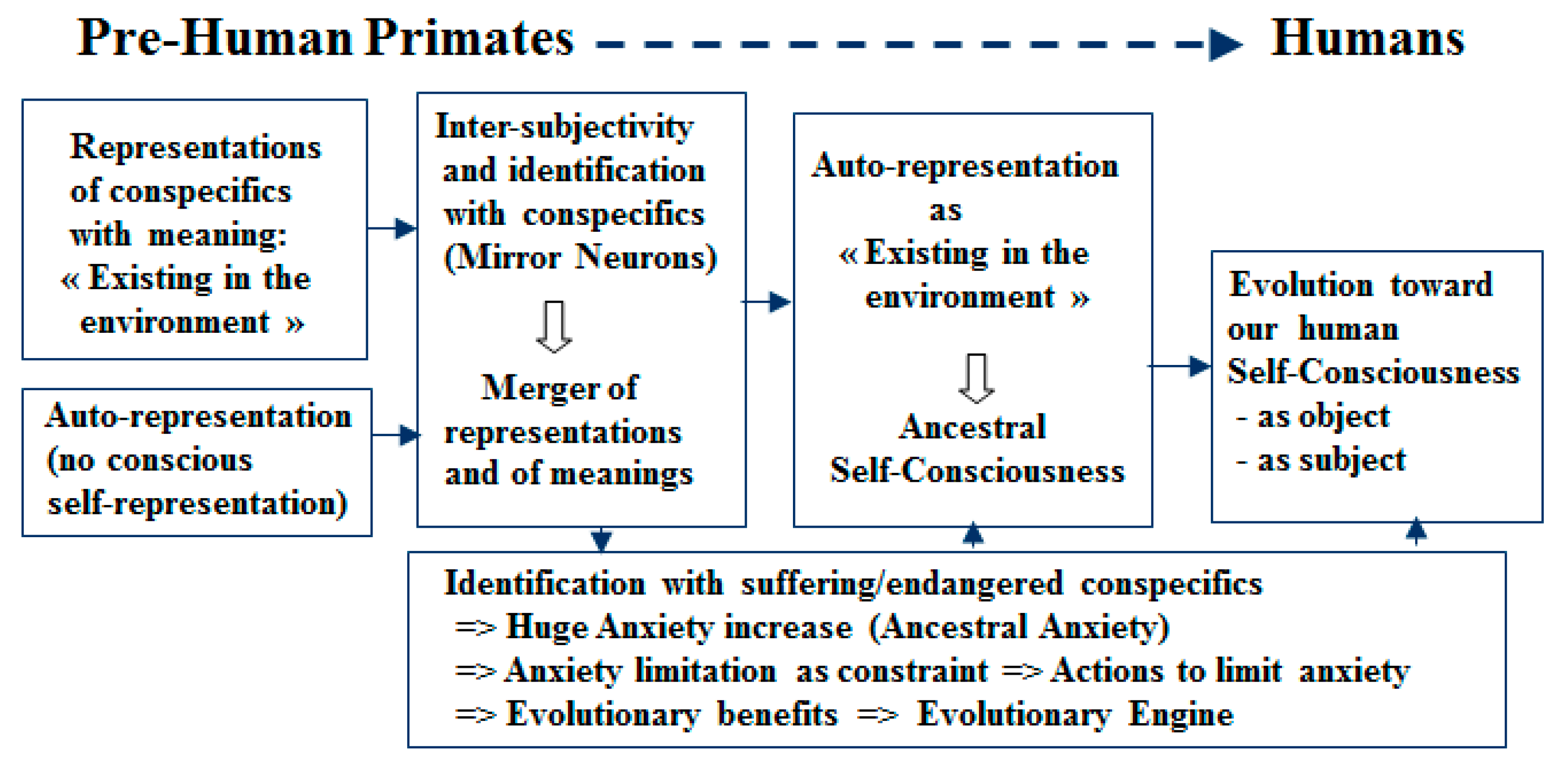

- Menant, C. Proposal for an Evolutionary Nature of Self-Consciousness. 2014. Available online: https://philpapers.org/rec/MENPFA-3 (accessed on 18 July 2017).

- Floridi, L. The Philosophy of Information; Oxford University Press: Oxford, UK, 2012.

- Taddeo, M.; Floridi, L. Solving the symbol grounding problem: A critical review of fifteen years of research. J. Exp. Theor. Artif. Intell. 2005, 17, 419–445. Available online: https://philpapers.org/rec/TADSTS (accessed on 18 July 2017).

- Taddeo, M.; Floridi, L. A Praxical Solution of the Symbol Grounding Problem. Minds Mach. 2007, 17, 369–389.

- Menant, C. Biosemiotics, Aboutness of Meaning and Bio-intentionality. Proposal for an Evolutionary Approach. In Proceedings of the Gatherings in Biosemiotics 2015, Copenhagen, Denmark, 30 June–4 July 2015. Available online: https://philpapers.org/rec/MENBAM-2 (accessed on 18 July 2017).

- Burgin, M. Is information some kind of data? In Proceedings of the Third Conference on the Foundations of Information Science (FIS 2005), Paris, France, 4–7 July 2005; pp. 1–31. Available online: http://www.mdpi.net/fis2005/F.08.paper.pdf (accessed on 18 July 2017).

- Dodig Crnkovic, G.; Hofkirchner, W. Floridi’s open problems in philosophy of information, ten years later. Information 2011, 2, 327–359.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Menant, C. Meaning Generation for Animals, Humans and Artificial Agents. An Evolutionary Perspective on the Philosophy of Information. Proceedings 2017, 1, 94. https://doi.org/10.3390/IS4SI-2017-03942