1. Introduction

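Consider a fractional Brownian motion (fBm) $B^H = \{B^H_t,\ t \ge 0\}$ with Hurst index $H \in (0,1)$. That is, $B^H$ is a centered Gaussian process with covariance function of the form

$$\mathsf{E}\, B^H_t B^H_s = \frac{1}{2}\left(t^{2H} + s^{2H} - |t-s|^{2H}\right). \tag{1}$$

Let $\Delta_k = B^H_k - B^H_{k-1}$ be the $k$th increment of fBm taken in subsequent integer points $k \ge 1$. Then, we obtain from (1) that

$$\mathsf{E}\, \Delta_k \Delta_l = \frac{1}{2}\left(|k-l+1|^{2H} + |k-l-1|^{2H} - 2|k-l|^{2H}\right). \tag{2}$$

Due to the stationarity of the increments, this covariance depends on $k$ and $l$ only through the lag $|k-l|$.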

These subsequent increments form a process called fractional Gaussian noise (fGn). The main properties of this relatively simple discrete-time Gaussian process are stationarity and the presence of what we call memory. The length of memory is infinite; however, its intensity depends on the Hurst index $H$, which in turn should be the object of statistical estimation. For the properties of fGn and statistical estimation, see, e.g., [1,2,3,4,5,6,7,8,9] (this list is by no means exhaustive). These properties have made fractional Gaussian noise extremely popular in applications, in particular, in physics [10,11], hydrology [12], information theory [13], signal detection [14], related permutation entropy [15,16], and many other fields. Additionally, fGn serves as a model for chain polymers exhibiting long-range interactions among monomers along the chain; see, e.g., [17].
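The dependence of the memory intensity on the Hurst index can be illustrated numerically. The following is a minimal sketch, assuming the standard closed form of the fGn autocovariance at integer lag $k$, namely $\frac{1}{2}\left(|k+1|^{2H} + |k-1|^{2H} - 2|k|^{2H}\right)$; the function name `fgn_autocovariance` is ours and is used for illustration only.

```python
def fgn_autocovariance(lag, hurst):
    """Autocovariance of unit-step fGn at an integer lag.

    Assumes the standard closed form
    0.5 * (|k+1|^{2H} + |k-1|^{2H} - 2|k|^{2H}).
    """
    k = abs(lag)
    p = 2.0 * hurst
    return 0.5 * ((k + 1) ** p + abs(k - 1) ** p - 2.0 * k ** p)


if __name__ == "__main__":
    # For H = 0.5 all lags k >= 1 are uncorrelated; for H > 0.5 the
    # correlations are positive and decay slowly with the lag.
    for hurst in (0.5, 0.6, 0.75, 0.9):
        values = [fgn_autocovariance(k, hurst) for k in range(6)]
        print(hurst, [round(v, 4) for v in values])
```

For $H = 1/2$ the covariances at all nonzero lags vanish, which corresponds to the increments of a standard Wiener process; for larger $H$ the covariances stay positive and decay slowly with the lag.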

However, considerable analytical and computational difficulties arise in problems that involve the covariance matrices of fBm and fGn and their determinants. The reason is the large number of distinct fractional powers appearing in the covariance function and the covariance matrices, which in turn stem from the fractional Hurst index; see (2). For a more in-depth description of related problems, see [18,19].

In particular, the paper [19] contains three open problems related to the covariance matrices of fBm and fGn. To formulate these problems, let us define, for

, the triangular array

by the following relation:

The sequence

exists and is unique, as (3) represents the Cholesky decomposition of the covariance matrix of fBm. Similarly to (3), we can define the Cholesky decomposition of the covariance matrix of fGn as follows:

The properties of the sequences

and

for

were investigated in [19]. In particular, the positivity of both sequences was established, along with the monotonicity of

with respect to $k$ for a fixed $j$. In their study of this problem, the authors of [19] found a connection between the projection coefficients, that is, the coefficients of the one-sided projection of any value of a stationary Gaussian process onto finitely many subsequent elements, and the Cholesky decomposition of the covariance matrix of the process. More precisely, according to the theorem of normal correlation, there exists a real-valued sequence

such that

Due to the stationarity of fractional Gaussian noise, we can consider the projection directed both to the “past” and to the “future”, and in the first case, consider the result as a solution to the prediction problem. For any

, the coefficients

can be computed as a solution to the following linear system of equations

The properties of the coefficients

were further investigated in [18], where recurrence relations for them were obtained; see (11) and (12) below.
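The linear system for the projection coefficients can be solved numerically for small $n$. The sketch below is only an illustration under stated assumptions: the element of fGn is projected onto its $n$ nearest neighbors on one side, the Gram matrix has entries equal to the fGn autocovariance at lag $|i-j|$, and the right-hand side consists of the autocovariances at lags $1, \dots, n$. The names `fgn_autocovariance`, `solve_linear_system`, and `one_sided_coefficients` are ours, and the indexing convention may differ from the one used in (4).

```python
def fgn_autocovariance(lag, hurst):
    """Standard closed form of the fGn autocovariance at an integer lag."""
    k = abs(lag)
    p = 2.0 * hurst
    return 0.5 * ((k + 1) ** p + abs(k - 1) ** p - 2.0 * k ** p)


def solve_linear_system(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def one_sided_coefficients(n, hurst):
    """Coefficients of the projection onto the n nearest one-sided neighbors.

    Entry j-1 of the result multiplies the neighbor at lag j (assumed convention).
    """
    gram = [[fgn_autocovariance(i - j, hurst) for j in range(n)] for i in range(n)]
    rhs = [fgn_autocovariance(j, hurst) for j in range(1, n + 1)]
    return solve_linear_system(gram, rhs)


if __name__ == "__main__":
    for n in (2, 5, 10):
        coeffs = one_sided_coefficients(n, 0.75)
        print(n, "smallest coefficient:", min(coeffs))
```

Printing the smallest coefficient for several values of $n$ and $H$ gives a quick numerical check of the sign of the coefficients; it is not a substitute for the analytical results.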

Moreover, the following open problems were posed in [19] as conjectures.

Conjecture 1. For all , .

Conjecture 2. For all , .

Conjecture 3. The coefficients for , , are strictly positive.

It was shown in [19] that Conjecture 3 implies Conjecture 2, which in turn implies Conjecture 1. Also, Conjecture 3 was numerically confirmed in [18,19] for a wide range of values of $n$. Note also that, due to the stationarity of fGn, the coefficients of one-sided projection can be considered as the prediction coefficients, because

It is worth noting that the aforementioned open problems initially arose in [19] during the study of limit theorems for financial markets with memory. Specifically, the weak convergence of a discrete-time multiplicative scheme to an asset price model with memory was investigated. In this context, the pre-limit processes were constructed using the Cholesky decomposition of the covariance function of fBm. The corresponding proofs required an in-depth analysis of the properties of the Cholesky decomposition for the covariance matrix of fBm, which subsequently led to the identification of the open problems related to the coefficients

. Therefore, the further study of these coefficients is not only of intrinsic mathematical interest but also essential for advancing the understanding of financial markets with memory.
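The Cholesky decomposition of the covariance matrix of fBm mentioned above can be computed directly for small matrices. The following is a minimal sketch, assuming that the matrix is built from the standard fBm covariance $\frac{1}{2}\left(s^{2H} + t^{2H} - |s-t|^{2H}\right)$ at the integer points $1, \dots, n$; the function names are ours, and the textbook Cholesky–Banachiewicz scheme is used in place of any method from [19].

```python
import math


def fbm_covariance(s, t, hurst):
    """Standard covariance function of fBm at times s and t."""
    p = 2.0 * hurst
    return 0.5 * (s ** p + t ** p - abs(s - t) ** p)


def cholesky_lower(matrix):
    """Lower-triangular factor with positive diagonal (Cholesky-Banachiewicz)."""
    n = len(matrix)
    lower = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            partial = sum(lower[i][m] * lower[j][m] for m in range(j))
            if i == j:
                lower[i][j] = math.sqrt(matrix[i][i] - partial)
            else:
                lower[i][j] = (matrix[i][j] - partial) / lower[j][j]
    return lower


def fbm_cholesky(n, hurst):
    """Covariance matrix of fBm at the points 1..n and its Cholesky factor."""
    cov = [[fbm_covariance(i, j, hurst) for j in range(1, n + 1)]
           for i in range(1, n + 1)]
    return cov, cholesky_lower(cov)


if __name__ == "__main__":
    cov, lower = fbm_cholesky(4, 0.75)
    for row in lower:
        print([round(v, 4) for v in row])
```

Inspecting the rows of the factor for several values of $H$ is a simple way to observe the sign and monotonicity patterns discussed in connection with the conjectures.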

To better understand the properties of projection coefficients for other Gaussian–Volterra noises, a very simple process of the form

where $W$ is a Wiener process, was considered in [20].

It is established in [20] that $X$, like fBm, is self-similar and non-Markov, has a long memory, and has positively correlated increments over non-overlapping intervals. However, unlike fBm, its increments are not stationary. The projection problem of the form (4) for the process $X$ was considered in [20]. Using a combinatorial approach, we obtained explicit formulas for the respective projection coefficients. Note that this is apparently one of the few cases in which the coefficients can be calculated explicitly. We established that the coefficients are not all positive; moreover, their signs alternate. Thus, one may conjecture that the stationarity or non-stationarity of the increments is precisely the property that determines the signs of the projection coefficients. For now, however, this statement remains a hypothesis.

With all these previous results in mind, in this paper, we considered three main tasks: to proceed analytically with the properties of the coefficients of one-sided projection, to investigate the coefficients of the two-sided (bilateral) projection and to study the norms of both kinds of projections as the functions of

n and

H. The motivation for considering a two-sided projection comes mostly from physics, since we are interested in the influence of the state of a physical system at a given point and at a given moment on the multilateral environment with which it interacts. That is, in a sense, we are interested in the influence of a given point on the two-sided surrounding space, and not just on one side of it. From this point of view, one can also interpret the influence of the system’s position on both the past and the future, which does not contradict modern physical theories about time loops, as can be seen, for example, in recent research [

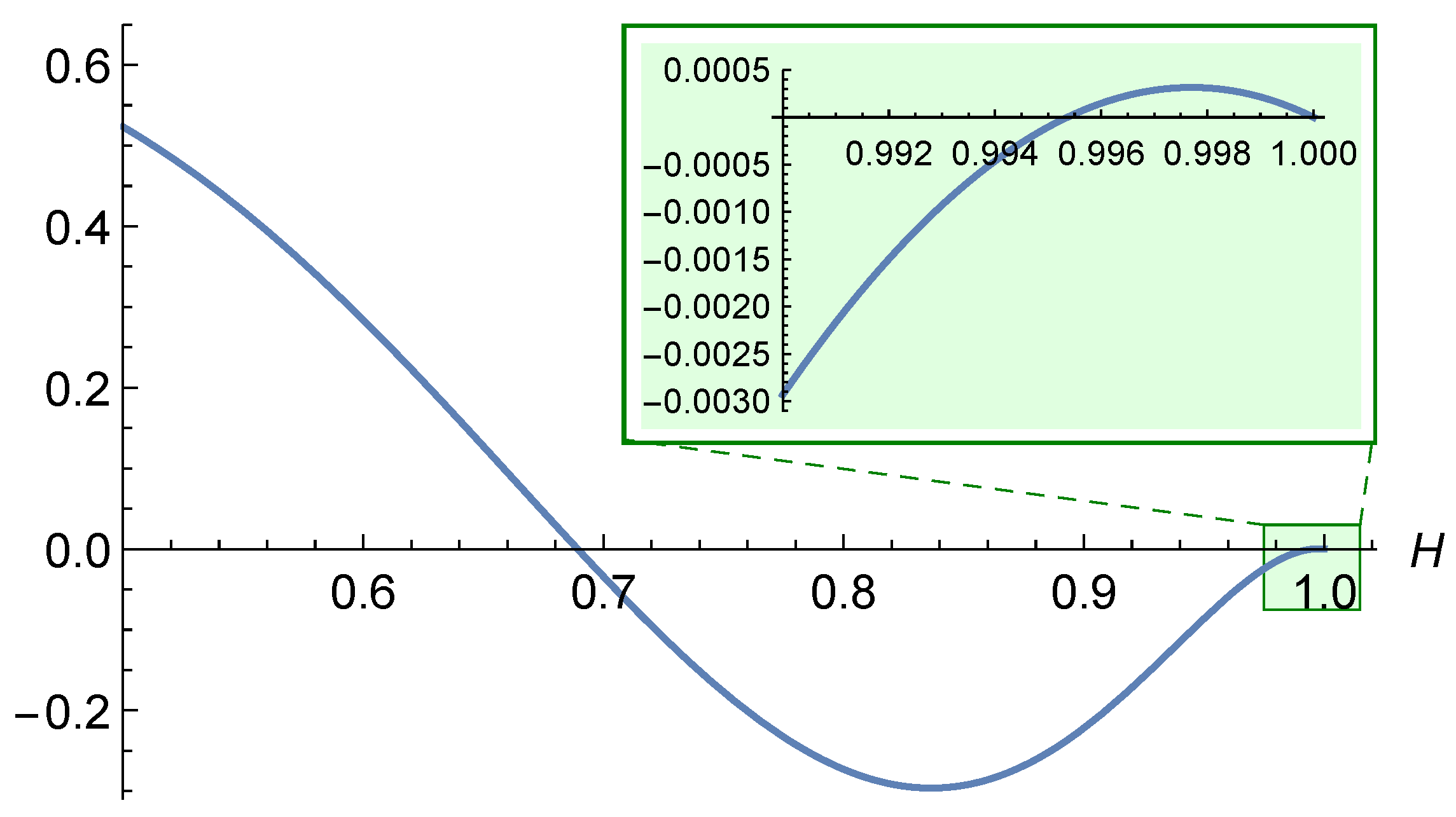

21]. Along the way, we made a rather unexpected observation: while all the coefficients of one-sided projection remain positive, at least within the limits of our observations, with a two-sided projection, one of the coefficients steadily becomes negative, but quite small in absolute value. We established this property analytically for a small value of

n, although this “exceptional” behavior seems somewhat strange and inexplicable, from a logical point of view.
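The two-sided projection can be explored numerically in the same way as the one-sided one. The sketch below rests on assumptions that are ours: the central element of fGn is projected onto a symmetric window of $n$ neighbors on each side, and the coefficients solve the normal equations built from the standard fGn autocovariance. The names `two_sided_coefficients` and `solve_linear_system` are hypothetical helpers, and the window may differ from the one studied in Sections 3 and 4.

```python
def fgn_autocovariance(lag, hurst):
    """Standard closed form of the fGn autocovariance at an integer lag."""
    k = abs(lag)
    p = 2.0 * hurst
    return 0.5 * ((k + 1) ** p + abs(k - 1) ** p - 2.0 * k ** p)


def solve_linear_system(matrix, rhs):
    """Solve matrix * x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [list(row) + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def two_sided_coefficients(n, hurst):
    """Map each offset in -n..-1, 1..n to its projection coefficient.

    The element at offset 0 is projected onto its neighbors at these offsets
    (assumed symmetric window).
    """
    offsets = [k for k in range(-n, n + 1) if k != 0]
    gram = [[fgn_autocovariance(a - b, hurst) for b in offsets] for a in offsets]
    rhs = [fgn_autocovariance(a, hurst) for a in offsets]
    return dict(zip(offsets, solve_linear_system(gram, rhs)))


if __name__ == "__main__":
    for hurst in (0.6, 0.75, 0.9):
        coeffs = two_sided_coefficients(3, hurst)
        # By symmetry, the coefficients at offsets k and -k coincide.
        print(hurst, {k: round(coeffs[k], 5) for k in (1, 2, 3)})
```

Scanning the printed signs over a grid of $H$ values is one way to look for the sign change described above; the sketch makes no claim about where it occurs.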

The paper is organized as follows:

Section 2 is devoted to some properties of the coefficients of one-sided projections. More precisely, we establish that all coefficients of one-sided projection (4) in the case

are strictly positive; at the same time, we show what technical difficulties arise along the way and why we restrict ourselves to small values of $n$ in precise calculations. Then, we consider another form of projection that contains orthogonal summands, calculate the coefficients of such a projection, and give a simple equality for the

-norm of one-sided projection. In

Section 3 and

Section 4, we calculate the coefficients of bilateral projection and comment in detail their (somehow unexpected) properties.

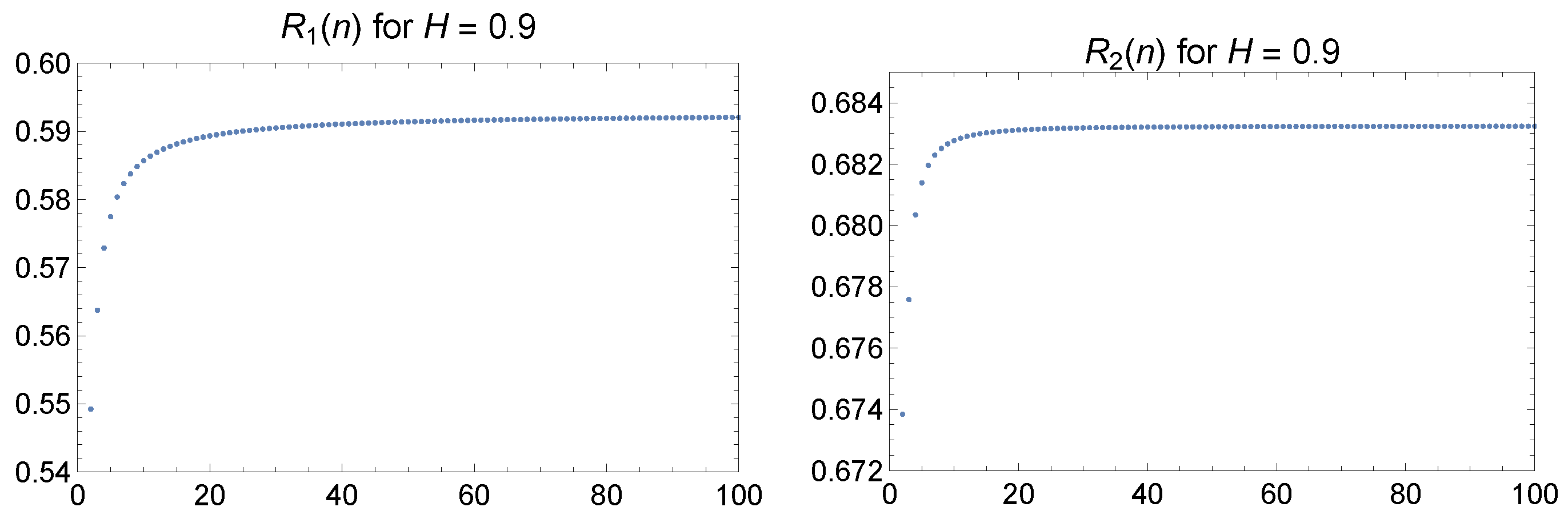

Section 5 is devoted to the norms of projections. We see that the norms are stabilized after some not very big value of

n, and both of them do not tend towards 1. This means that, from the point of view of the theory of stationary sequences, fractional Gaussian noise is a purely nondeterministic sequence.
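The behavior of the norm of the one-sided projection as a function of $n$ can be sketched with the classical Durbin–Levinson recursion, which we use here as a standard tool for stationary sequences and not as the recurrence derived in the paper. Under the assumptions that fGn has unit variance and the standard autocovariance, the squared norm of the projection onto $n$ neighbors equals one minus the prediction error variance. The function name `one_sided_projection_norms` is ours.

```python
import math


def fgn_autocovariance(lag, hurst):
    """Standard closed form of the fGn autocovariance at an integer lag."""
    k = abs(lag)
    p = 2.0 * hurst
    return 0.5 * ((k + 1) ** p + abs(k - 1) ** p - 2.0 * k ** p)


def one_sided_projection_norms(hurst, n_max):
    """Norms of the one-sided projection onto 1, 2, ..., n_max neighbors.

    Uses the Durbin-Levinson recursion: the prediction error variance is
    updated as v_m = v_{m-1} * (1 - reflection_m ** 2).
    """
    gamma = [fgn_autocovariance(k, hurst) for k in range(n_max + 1)]
    phi = []            # coefficients of the current order, indexed by lag - 1
    error_var = gamma[0]
    norms = []
    for m in range(1, n_max + 1):
        acc = gamma[m] - sum(phi[i] * gamma[m - 1 - i] for i in range(m - 1))
        reflection = acc / error_var
        phi = [phi[i] - reflection * phi[m - 2 - i] for i in range(m - 1)]
        phi.append(reflection)
        error_var *= 1.0 - reflection ** 2
        norms.append(math.sqrt(max(0.0, gamma[0] - error_var)))
    return norms


if __name__ == "__main__":
    for hurst in (0.6, 0.75, 0.9):
        norms = one_sided_projection_norms(hurst, 50)
        print(hurst, [round(norms[i], 4) for i in (0, 4, 9, 24, 49)])
```

Since each additional neighbor enlarges the subspace onto which we project, the printed norms are nondecreasing in $n$; how quickly they level off for a given $H$ can be read from the output.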

6. Discussion and Conclusions

In this work, we studied the projections of subsequent increments of a fractional Brownian motion onto neighboring elements. Several properties of the coefficients arising in both one-sided and two-sided projections have been established.

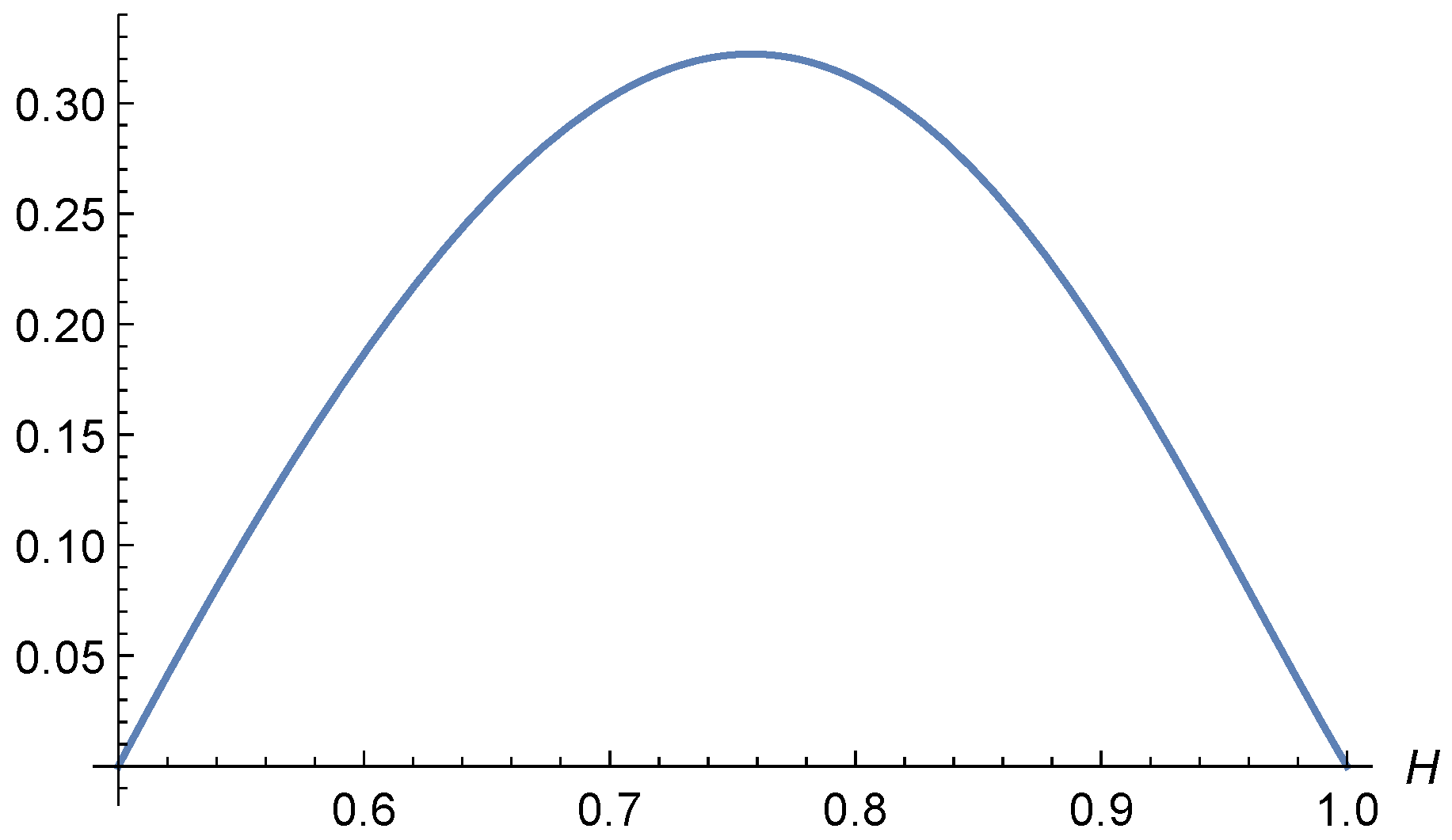

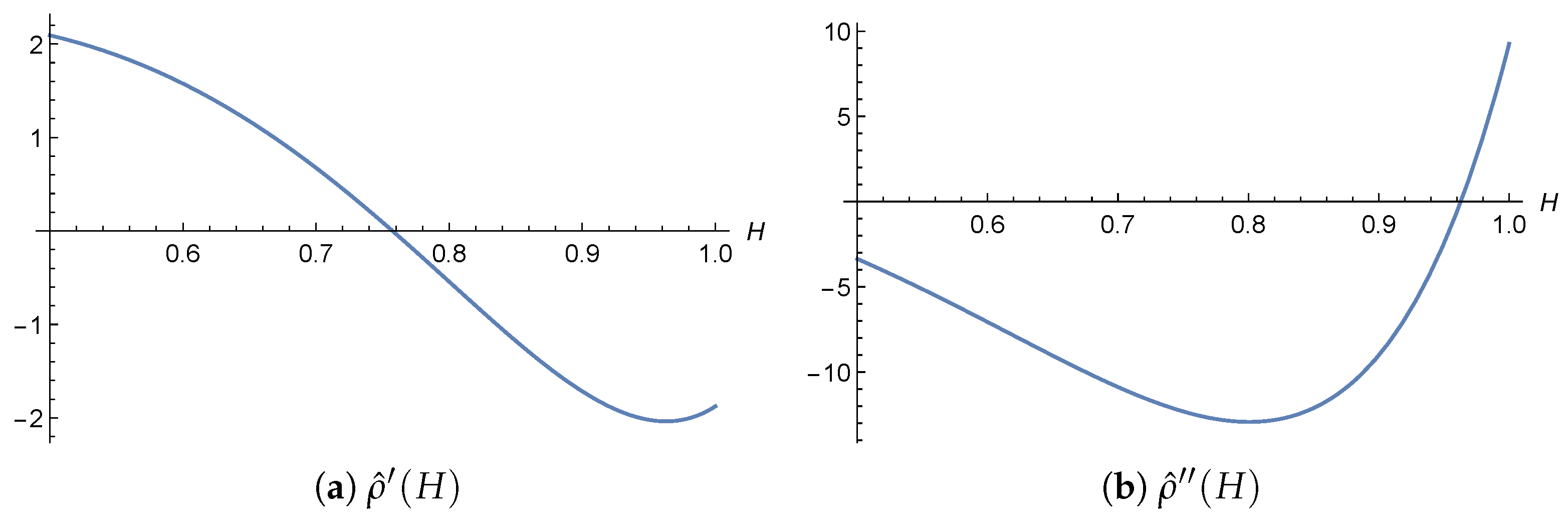

In particular, for the case

, we thoroughly investigated the coefficient

of the one-sided projection and proved its positivity on a specific subinterval

and in a neighborhood of 1. These findings complement the results of [18], where other coefficients of the one-sided projection for

were analyzed.

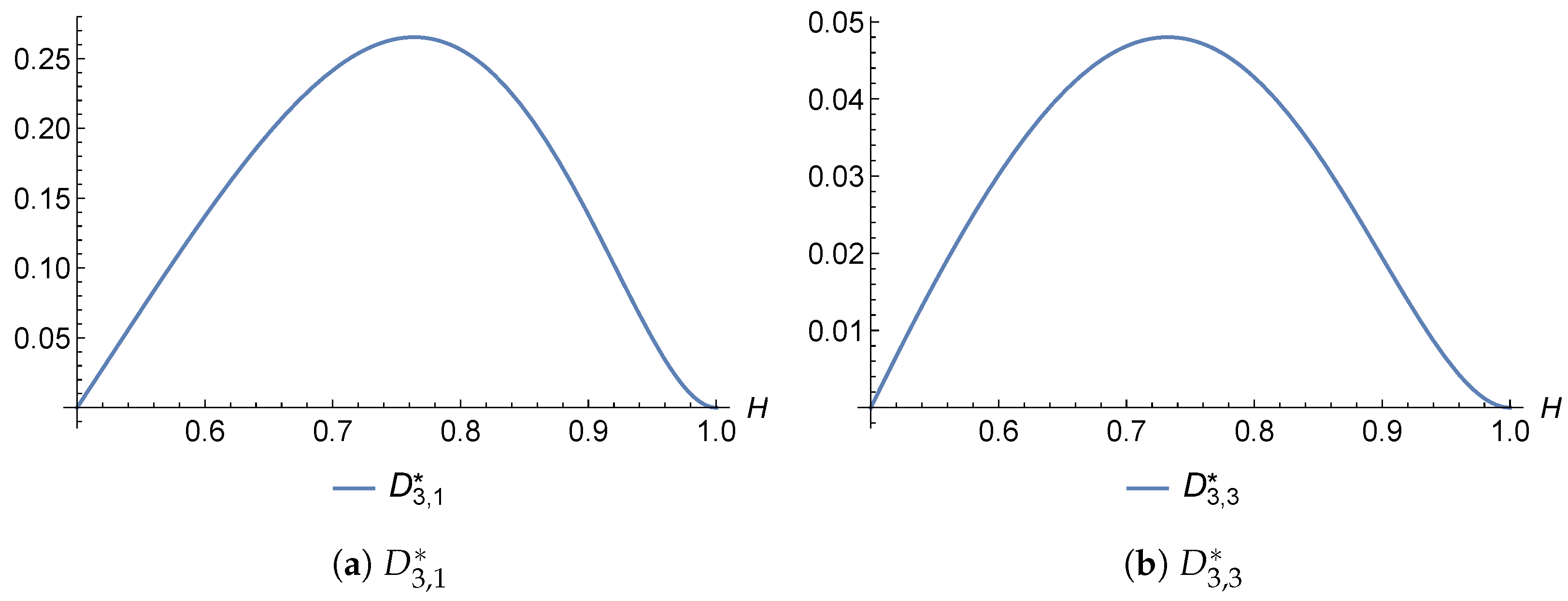

For the two-sided (bilateral) projection, we derived recurrence relations for the coefficients. These formulas are notably complex, especially for projections involving distant neighbors, and significant simplification appears unlikely. Therefore, we focused on specific cases with , where the projection coefficient formulas are relatively tractable. For and , we obtained explicit expressions for the coefficients, established their strict positivity for all , and showed that they are concave functions of H. In the case , a numerical analysis revealed that the coefficients and remain strictly positive for all . However, we also provided an analytical proof demonstrating that there exists some such that for any , the coefficient becomes negative.

Furthermore, we computed the coefficients numerically for various values of H and , obtaining results consistent with the case .

In addition, we analyzed the asymptotic behavior of the projection norm. Recurrence formulas for the norms of both one-sided and two-sided projections were derived, and their behavior was investigated numerically.

As a natural continuation of this work, it would be worthwhile to explore similar projection procedures applied to integrated fractional Brownian motion and the fractional Ornstein–Uhlenbeck process (see [25,26]). These processes are increasingly employed in applications as models of relatively smooth systems with memory.