Abstract

This paper presents a modified Euler-Maruyama (EM) method for mixed stochastic fractional integro-differential equations (mSFIEs) with Caputo-type fractional derivatives whose coefficients satisfy local Lipschitz and linear growth conditions. First, we transform the mSFIEs into equivalent mixed stochastic Volterra integral equations (mSVIEs) using a fractional calculus technique. Then, we establish the well-posedness of the analytical solutions of the mSVIEs. After that, a modified EM scheme is formulated to approximate the solutions of the mSVIEs, and its strong convergence is proven under local Lipschitz and linear growth conditions. Furthermore, we derive the convergence order of the modified EM scheme under the same conditions in the mean-square sense, which is consistent with the strong convergence result of the corresponding EM scheme. Notably, the strong convergence order under local Lipschitz conditions is inherently lower than the corresponding order under global Lipschitz conditions. Finally, numerical experiments demonstrate that our approach not only circumvents the restrictive integrability conditions imposed by singular kernels but also achieves a rigorous convergence order in the mean-square sense.

1. Introduction

Mixed stochastic fractional integro-differential equations (mSFIEs) are essential tools for understanding certain system properties that cannot be captured with a deterministic framework; for example, such equations can capture the long-memory effects arising from macroeconomic factors or systemic trends [1,2,3].

Many numerical methods have been developed for SFIEs, such as the EM method [4,5,6,7], the stopped Euler-Maruyama method [3,8], the truncated Euler-Maruyama method [9,10,11], the Milstein method [12,13], the θ-Maruyama method [14,15], the explicit Euler method [16], and the implicit Euler method [17]. In particular, the authors of [3] considered a class of mixed SDEs driven by both Brownian motion and fractional Brownian motion (fBm) with Hurst parameter H, and they obtained a convergence rate, expressed in terms of the diameter of the partition, for a modified Euler method. In [4], the authors proved strong first-order superconvergence of the EM method for linear SVIEs with convolution kernels when the kernel of the diffusion term vanishes. In [5], nonlinear SFIDEs were considered under non-Lipschitz conditions, and their EM solutions were shown to converge with strong order one. The authors of [7] studied the initial value problem for Caputo-tempered SFIEs, proved the well-posedness of its solution, and reported a strong convergence order of the derived EM method that is expressed in terms of the order of the fractional derivative. Additionally, a fast EM method based on the sum-of-exponentials approximation was developed. However, most SDE models in real-world applications, especially Caputo-type fractional SDEs [5,18,19], do not satisfy the global Lipschitz condition commonly assumed in the analysis of numerical solutions, and the local Lipschitz condition alone is insufficient to guarantee the existence of a global solution [12,20,21]. In [21], the authors showed that, under linear growth conditions (Khasminskii-type conditions), both the exact solution and the numerical solutions obtained via the EM or stochastic theta method have bounded moments, which yields the strong convergence of the numerical solutions to the exact solution under local Lipschitz and linear growth conditions [9,10,11]. 
Because the classical explicit EM method has a simple structure, is computationally inexpensive, and has an acceptable convergence rate under the global Lipschitz condition, it has attracted significant attention [16,17,22].

Additionally, research on the above numerical methods for SFIEs or SVIEs has concentrated on convergence under global Lipschitz and linear growth conditions, whereas the numerical stability of SFIEs with Hölder continuous kernels under local Lipschitz and linear growth conditions has rarely been discussed. Specifically, no results have been reported on the mean-square stability of the analytical solutions of mSFIEs with singular kernels. Based on the Caputo-type fractional SDE, we consider the following mSFIE in the Itô sense:

where represents the Caputo-type fractional derivative of order on , , , , and . is defined as an dimensional standard Brownian motion on the complete probability space with a filtration , where is right-continuous and contains all -null sets. is an measurable valued random variable, which is defined on the same probability space and satisfies . is an fBm defined on . As observed above, mSFIE (1) involves both the Riemann-Liouville fractional integral operator and the fBm process, which makes it more complex than classical SDEs driven by Brownian motion and fBm. Two major difficulties arise when investigating stochastic equations driven by fBm, namely the correlation of its increments and the absence of the martingale property; as a consequence, the classical convergence theorem [12] of numerical analysis cannot be applied directly. In addition, determining the stability properties of the analytical and numerical solutions is challenging and requires further in-depth research.
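To make the "correlated increments" difficulty concrete, the short Python sketch below evaluates the standard autocovariance of unit-step fBm increments (fractional Gaussian noise), $\rho_H(k) = \tfrac12\big(|k+1|^{2H} - 2|k|^{2H} + |k-1|^{2H}\big)$. The function name `fgn_autocovariance` is our own illustration, not code from this paper. For $H = 1/2$ the increments are uncorrelated (the Brownian case), for $H > 1/2$ they are positively correlated, and for $H < 1/2$ negatively correlated; increments over a step $h$ have covariance $h^{2H}\rho_H(k)$.

```python
import numpy as np


def fgn_autocovariance(k, hurst):
    """Autocovariance at lag k of unit-step fBm increments (fractional Gaussian noise).

    rho_H(k) = (|k+1|^{2H} - 2|k|^{2H} + |k-1|^{2H}) / 2, so rho_H(0) = 1.
    For increments over a step h, multiply the result by h^{2H}.
    """
    k = np.abs(np.asarray(k, dtype=float))
    two_h = 2.0 * hurst
    return 0.5 * (np.abs(k + 1.0) ** two_h - 2.0 * k ** two_h + np.abs(k - 1.0) ** two_h)


# Usage: lag-1 correlation of neighbouring increments for a few Hurst parameters
for hurst in (0.3, 0.5, 0.7):
    print(hurst, float(fgn_autocovariance(1, hurst)))
```

For example, with $H = 0.7$ the lag-1 correlation is about 0.32, so neighbouring fBm increments are far from independent, which is exactly why martingale-based arguments do not transfer directly.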

To the best of our knowledge, few studies have investigated the convergence rate of the numerical solutions of mSFIE (1) under local Lipschitz and linear growth conditions. To overcome the above difficulties and obtain strong convergence rates in the additive noise case when , we relax the global Lipschitz condition to a local Lipschitz condition, prove the well-posedness of the problem, and then study the strong convergence order of the numerical method. Specifically, we first transform the mSFIEs into equivalent mSVIEs using a fractional calculus technique and establish the well-posedness of the analytical solutions of the mSVIEs. After that, a modified EM method is devised to approximate the solutions of the mSVIEs, and its strong convergence is obtained under local Lipschitz and linear growth conditions. Furthermore, we derive the convergence order of the modified EM scheme under the same conditions in the mean-square sense, which is consistent with the strong convergence result of the corresponding EM scheme. Notably, the strong convergence order under local Lipschitz conditions is inherently lower than the corresponding order under global Lipschitz conditions. Finally, numerical experiments demonstrate that our approach not only circumvents the restrictive integrability conditions imposed by singular kernels but also achieves a rigorous convergence order in the mean-square sense.

The structure of this paper is as follows. In Section 2, we introduce basic notation, preliminary facts on stochastic integrals with respect to fBm, and some special functions, and we state mild hypotheses. In Section 3, we transform the mSFIE into an equivalent mSVIE using a fractional calculus technique and Malliavin calculus. In Section 4, we employ a modified EM approximation to study the well-posedness of the solution to the mSVIE. In Section 5, we derive the strong convergence order of the modified EM method under local Lipschitz and linear growth conditions in the mean-square sense. Numerical experiments are presented in Section 6. Finally, Section 7 gives a brief conclusion.

2. Preliminaries

In this paper, denotes the expectation corresponding to . Let be the Euclidean norm on and the trace norm on . For a matrix A, we define for . Consider a complete probability space with a filtration that satisfies the usual conditions. For real numbers a, b, and c, we write and . The following notation and preliminaries are taken from [23,24].

Definition 1.

([23,24]). Let and , and let and . The left-sided fractional Riemann-Liouville integral of order of f on is defined as

and the right-sided fractional Riemann-Liouville integral of order of f on is defined as

where denotes the Gamma function.
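As a numerical illustration of Definition 1, the following Python sketch approximates the left-sided Riemann-Liouville integral $I^{\alpha}_{0+}f$ on a uniform grid $t_n = nh$ by a left-endpoint product rectangle rule: $f$ is frozen at $t_j$ on $[t_j, t_{j+1}]$, while the singular kernel $(t_n - s)^{\alpha-1}$ is integrated exactly, giving
$$(I^{\alpha}_{0+}f)(t_n) \approx \frac{h^{\alpha}}{\Gamma(\alpha+1)}\sum_{j=0}^{n-1}\big[(n-j)^{\alpha}-(n-j-1)^{\alpha}\big]f(t_j).$$
The symbols $\alpha$ and $h$ and the function name `rl_integral_left` are our assumptions for illustration. This is a standard quadrature, not the discretization used later in this paper.

```python
import math

import numpy as np


def rl_integral_left(f_vals, h, alpha):
    """Approximate the left-sided Riemann-Liouville integral of order alpha.

    f_vals[n] holds f(t_n) on the uniform grid t_n = n * h (n = 0..N).
    Returns an array whose n-th entry approximates (I^alpha f)(t_n), using
    the left-endpoint product rectangle rule: f is frozen at t_j on
    [t_j, t_{j+1}] and the kernel (t_n - s)^(alpha - 1) is integrated exactly.
    """
    f_vals = np.asarray(f_vals, dtype=float)
    out = np.zeros(f_vals.size)
    scale = h ** alpha / math.gamma(alpha + 1.0)
    for n in range(1, f_vals.size):
        lags = n - np.arange(n, dtype=float)          # n - j for j = 0..n-1
        weights = lags ** alpha - (lags - 1.0) ** alpha
        out[n] = scale * np.dot(weights, f_vals[:n])
    return out


# Usage: constant and linear integrands on [0, 1] with alpha = 0.5 (illustrative values)
h, alpha = 0.01, 0.5
t = h * np.arange(101)
approx_const = rl_integral_left(np.ones_like(t), h, alpha)
approx_lin = rl_integral_left(t, h, alpha)
exact_const = 1.0 / math.gamma(1.5)   # I^alpha[1](1) = 1 / Gamma(1 + alpha)
exact_lin = 1.0 / math.gamma(2.5)     # I^alpha[t](1) = Gamma(2) / Gamma(2 + alpha)
```

For $f \equiv 1$ the weights telescope, so the rule reproduces $t^{\alpha}/\Gamma(\alpha+1)$ exactly. For smooth non-constant $f$, the error is $O(h)$.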

Consider two continuous functions and . For almost all , we define the following fractional derivatives:

Assume that and , where and . Under these assumptions, the generalized (fractional) Lebesgue-Stieltjes integral is defined as

Note that, for all , fBm has continuous paths that are Hölder continuous of order . Then, for and , the explicit expression in (2) becomes

where .
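For reference, we recall the standard form of these objects in the pathwise approach of Zähle and of Nualart and Răşcanu (see also [23,24]). Here the order is written as $\beta\in(0,1)$ to avoid a clash with the Caputo order, and the normalisation used in (2) may differ slightly. Treat the display below as a reference sketch rather than a transcription of (2) and (3):

$$D^{\beta}_{a+}f(x)=\frac{1}{\Gamma(1-\beta)}\left(\frac{f(x)}{(x-a)^{\beta}}+\beta\int_a^x\frac{f(x)-f(y)}{(x-y)^{\beta+1}}\,dy\right),$$
$$D^{1-\beta}_{b-}g_{b-}(x)=\frac{(-1)^{1-\beta}}{\Gamma(\beta)}\left(\frac{g(x)-g(b)}{(b-x)^{1-\beta}}+(1-\beta)\int_x^b\frac{g(x)-g(y)}{(y-x)^{2-\beta}}\,dy\right),\qquad g_{b-}(x)=g(x)-g(b),$$
$$\int_a^b f\,dg=(-1)^{\beta}\int_a^b D^{\beta}_{a+}f(x)\,D^{1-\beta}_{b-}g_{b-}(x)\,dx.$$

Because $(-1)^{\beta}(-1)^{1-\beta}=-1$, the integral is real-valued. For fBm with $H>1/2$, one typically takes $\beta\in(1-H,1/2)$. With this choice, the $(H-\epsilon)$-Hölder paths of $B^H$ lie in the domain of $D^{1-\beta}_{t-}$.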

Definition 2.

We define the following norms for :

where , and

Next, we formulate some necessary mild hypotheses, which will be used in the next section.

Assumption 1.

There exists a constant such that, for any , , with , the following condition is satisfied:

Assumption 2.

There exists a constant such that, for any , , with , the following condition is satisfied:

Assumption 3.

There exists a constant depending on m such that, for any , , and with , the following condition is satisfied:

Assumption 4.

There exists a constant such that, for any , , with , the following linear growth condition is satisfied:

Remark 1.

We point out that the local Lipschitz condition in Assumption 3 is significantly weaker than the following global Lipschitz condition: there exists a constant such that, for any , , with , the following condition is satisfied:

3. An Equivalent mSVIE

In this section, we formulate an mSVIE equivalent to mSFIE (1), following the treatment of deterministic fractional integral equations (see [25], Section 2.2.2, page 119; [26], Section 3.1.3, page 204; and Equation (2.14) of [27]). The integral form of mSFIE (1) can be rigorously defined as the following mSVIE:

Following the definition of a solution to a stochastic integral equation in [20] (Section 2.2, page 48, Definition 2.1), we define the solution of mSFIE (1) as follows.

Definition 3.

An valued stochastic process is called a solution of mSFIE (1) if it satisfies the following conditions:

(1) is adapted and continuous;

(2) , , , and ;

(3) mSVIE (4) holds for every with probability 1.

A solution is said to be unique if any other solution is indistinguishable from , that is,

Under Assumption 1, applying the stochastic Fubini theorem ([2], Theorem 1.13.1, page 57) to mSVIE (4), we obtain

We let

and then mSVIE (5) is equivalent to the following mSVIE:

For technical reasons, we need the following auxiliary lemmas.

Lemma 1.

Let be an ε-Hölder continuous function. We define for and . Then, for , there exists a constant such that

where

is the ε-Hölder constant of .

The proof can be seen in Appendix A.
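Lemmas 1 and 2 are stated in terms of ε-Hölder constants. On sampled data, a natural discrete proxy is the largest ratio $|f(t_j)-f(t_i)|/|t_j-t_i|^{\varepsilon}$ over all sample pairs. The Python sketch below computes this ratio using our own helper `holder_seminorm`, which assumes a one-dimensional sampled function. It gives a lower bound for the true constant and uses $O(n^2)$ memory, so it is meant for short grids, for example to inspect the Hölder regularity of a simulated fBm path.

```python
import numpy as np


def holder_seminorm(values, times, eps):
    """Discrete eps-Hölder constant: max over sample pairs i != j of
    |f(t_j) - f(t_i)| / |t_j - t_i|**eps.

    A lower bound for the true constant on the sampled interval.
    Builds n-by-n pairwise arrays, so keep n to a few thousand points.
    """
    v = np.asarray(values, dtype=float)
    t = np.asarray(times, dtype=float)
    dv = np.abs(v[:, None] - v[None, :])
    dt = np.abs(t[:, None] - t[None, :])
    off_diag = dt > 0.0                        # skip i == j (and repeated times)
    return float(np.max(dv[off_diag] / dt[off_diag] ** eps))


# Usage: f(t) = sqrt(t) on [0, 1] has 1/2-Hölder constant 1 (attained at s = 0)
t = np.linspace(0.0, 1.0, 101)
print(holder_seminorm(np.sqrt(t), t, 0.5))
```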

Lemma 2.

Under Assumptions 1–4, for any , mSVIE (7) has a unique solution such that . Furthermore, for any and , there exists a constant such that

where

is the -Hölder constant of .

The proof can be seen in Appendix B.

4. Existence and Uniqueness of Solution to mSVIE (7)

In this section, we employ a modified EM approximation with the aim of proving the existence, uniqueness, and stability of the solution to mSVIE (7).

4.1. A Modified EM Scheme

For every integer , let be a uniform mesh on . Then, we can define a stopping time and a stopped process . The solution of mSVIE (7) with W replaced by is denoted by . For , mSVIE (7) becomes

and

for and . Let , . Then, the modified EM scheme is as follows:

Obviously, the Hölder continuous trajectory satisfies for .
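The following Python sketch illustrates the idea of a left-endpoint EM discretization for an assumed generic scalar form of mSVIE (7),
$$X(t) = X_0 + \int_0^t K_1(t,s) f(s,X(s))\,ds + \int_0^t K_2(t,s) g(s,X(s))\,dW(s) + \int_0^t K_3(t,s)\sigma(s,X(s))\,dB^H(s).$$
All coefficients and kernels are frozen at the left endpoint $t_j$ of each subinterval:
$$X_n = X_0 + \sum_{j=0}^{n-1}\big[K_1(t_n,t_j) f(t_j,X_j)h + K_2(t_n,t_j) g(t_j,X_j)\Delta W_j + K_3(t_n,t_j)\sigma(t_j,X_j)\Delta B^H_j\big].$$
The kernels $K_1, K_2, K_3$ and the name `volterra_em` are our assumptions. The stopped process and the exact form of schemes (11) and (14) are not modelled. Kernels are only evaluated at $t_n > t_j$, so weakly singular kernels such as $(t-s)^{\alpha-1}/\Gamma(\alpha)$ pose no difficulty.

```python
import math

import numpy as np


def volterra_em(x0, f, g, sigma, k1, k2, k3, h, dW, dB):
    """Left-endpoint EM sketch for an assumed scalar mixed stochastic Volterra equation

        X(t) = x0 + int_0^t K1(t,s) f(s,X(s)) ds + int_0^t K2(t,s) g(s,X(s)) dW(s)
                  + int_0^t K3(t,s) sigma(s,X(s)) dB^H(s).

    dW[j] and dB[j] are the Brownian and fBm increments over [t_j, t_{j+1}].
    Each kernel k(t, s) must accept an array s of past grid times (all < t).
    The whole history is revisited at every step, so the cost is O(N^2).
    """
    n_steps = len(dW)
    t = h * np.arange(n_steps + 1)
    x = np.empty(n_steps + 1)
    x[0] = x0
    # Coefficient terms frozen at the left endpoints t_j, filled in step by step.
    drift = np.empty(n_steps)
    noise_w = np.empty(n_steps)
    noise_b = np.empty(n_steps)
    for n in range(1, n_steps + 1):
        j = n - 1
        drift[j] = f(t[j], x[j]) * h
        noise_w[j] = g(t[j], x[j]) * dW[j]
        noise_b[j] = sigma(t[j], x[j]) * dB[j]
        past = t[:n]
        x[n] = (x0
                + np.dot(k1(t[n], past), drift[:n])
                + np.dot(k2(t[n], past), noise_w[:n])
                + np.dot(k3(t[n], past), noise_b[:n]))
    return t, x


def unit_kernel(t, s):
    """K(t, s) = 1: the equation reduces to an ordinary SDE and the scheme to plain EM."""
    return np.ones_like(s)


def zero(t, x):
    return 0.0


# Usage (illustrative parameters): weakly singular kernel, multiplicative Brownian noise,
# no fBm term. The kernel is evaluated only at s = t_j < t_n, so the singularity is harmless.
alpha, h, n_steps = 0.75, 1.0 / 256, 256
rng = np.random.default_rng(1)
dW = rng.normal(0.0, math.sqrt(h), n_steps)


def caputo_kernel(t, s):
    return (t - s) ** (alpha - 1.0) / math.gamma(alpha)


t, x = volterra_em(1.0, lambda t, x: -x, lambda t, x: 0.2 * x, zero,
                   caputo_kernel, caputo_kernel, unit_kernel, h, dW, np.zeros(n_steps))
```

With constant kernels, the sum telescopes into the classical EM recursion. This gives a simple sanity check before singular kernels are used.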

4.2. Existence and Uniqueness

Lemma 3.

Under Assumption 4, there exists a constant independent of N such that, for any , ,

where .

The proof can be seen in Appendix C.

Lemma 4.

Under Assumptions 1 and 4, for any integer , , there exists a constant such that

where .

The proof can be seen in Appendix D.

Theorem 1.

Under Assumptions 1, 3, and 4, mSFIE (1) has a unique solution , and for any (p a positive integer) and , it satisfies

The proof is provided in Appendix E.

5. Strong Convergence Analysis of the Modified EM Approximation

The numerical approximation provided by EM scheme (11) in Section 4 incurs significant computational overhead because it requires evaluating stochastic fractional integrals. In this section, we propose a modified version of EM scheme (11) that reduces the computational complexity while preserving the desired strong convergence rates.

In the setting of scheme (11), we construct the modified version using the left-endpoint approximation [4]:

where for , . Our modified EM method can be defined as follows:

where and , . Thus, we only need to simulate and rather than compute stochastic integrals. The discrete fBm increment on the interval can be generated using a binomial approach (see [28]) as follows:

and the fBm value on the interval is computed as

which considerably reduces the computational cost.
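The binomial construction of [28] is not reproduced here. The Python sketch below instead uses a standard alternative: it draws exact fBm samples on the grid by a Cholesky factorisation of the covariance $\mathbb{E}[B^H(s)B^H(t)] = \tfrac12(s^{2H}+t^{2H}-|t-s|^{2H})$. The increments $\Delta B^H_j$ are then obtained by differencing, so the grid values are cumulative sums of the increments, as described above. The function names are our own. The $O(N^3)$ factorisation cost makes this practical for moderate N only; circulant-embedding (Davies-Harte) methods scale better.

```python
import numpy as np


def fbm_covariance(times, hurst):
    """Covariance matrix of fBm, R(s, t) = (s^{2H} + t^{2H} - |t - s|^{2H}) / 2."""
    s = np.asarray(times, dtype=float)[:, None]
    t = np.asarray(times, dtype=float)[None, :]
    two_h = 2.0 * hurst
    return 0.5 * (s ** two_h + t ** two_h - np.abs(t - s) ** two_h)


def fbm_paths(n_steps, T, hurst, n_paths, rng):
    """Exact fBm samples at t_k = k*T/n_steps, k = 0..n_steps; shape (n_steps + 1, n_paths)."""
    grid = np.linspace(T / n_steps, T, n_steps)     # t = 0 is excluded because B^H(0) = 0
    chol = np.linalg.cholesky(fbm_covariance(grid, hurst))
    values = chol @ rng.standard_normal((n_steps, n_paths))
    return np.vstack([np.zeros((1, n_paths)), values])


# Usage (illustrative parameters): increments Delta B^H_j over each subinterval
rng = np.random.default_rng(2024)
paths = fbm_paths(n_steps=8, T=2.0, hurst=0.7, n_paths=20_000, rng=rng)
increments = np.diff(paths, axis=0)
```

For $H = 1/2$, the covariance reduces to $\min(s,t)$, and the generator returns ordinary Brownian paths.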

5.1. The Mean-Square Convergence Theorem of the Modified EM Method (14)

To analyze the strong convergence of the modified EM method (14), we establish the boundedness of the numerical solution using the following lemmas.

Lemma 5.

Under the same conditions as Lemma 2, for any , ,

where

is the Hölder constant of .

The proof can be seen in Appendix F.

Lemma 6.

Under Assumption 4, for any , there exists a constant independent of h such that, for any integer , ,

The proof is similar to that of Lemma 4.

Lemma 7.

Under Assumptions 1 and 4, for any , there exists a constant independent of h such that

The proof can be seen in Appendix G.

Next, we study the mean-square convergence of the modified EM method (14) under Assumptions 3 and 4. More details on the properties of the local Lipschitz condition can be found in Remark 2.1 of [29]. Note that is an increasing function of m, so we need to consider the case as . Therefore, for a strictly positive decreasing function , we let be sufficiently small such that

where denotes the order of the modified EM method under the global Lipschitz condition (Remark 1).
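A hypothetical scalar coefficient illustrates why the local Lipschitz constant, written $L_m$ here (our notation), may grow without bound as $m \to \infty$. The function $f(x) = \sin(x^2)$ is our own example, not one used in this paper. It is bounded, so it satisfies a linear growth bound. It is also Lipschitz on every ball $\{|x|\le m\}$ with constant at most $2m$, since $|f'(x)| = 2|x\cos(x^2)|$. However, no single global Lipschitz constant exists, so only the local condition of Assumption 3 applies, not the global one in Remark 1.

The Python sketch below estimates the unsquared $L_m$ from neighbouring difference quotients on a fine grid. By the mean value theorem, each quotient equals $f'(\xi)$ for some $\xi$, so the estimate never exceeds $2m$.

```python
import numpy as np


def local_lipschitz_estimate(f, m, n_grid=200_001):
    """Estimate the local Lipschitz constant of f on [-m, m] (unsquared form).

    Uses the largest difference quotient between neighbouring points of a
    uniform grid; by the mean value theorem each quotient equals |f'(xi)|
    for some xi in [-m, m], so the estimate never exceeds sup |f'|.
    """
    x = np.linspace(-m, m, n_grid)
    y = f(x)
    return float(np.max(np.abs(np.diff(y)) / np.diff(x)))


def sin_x_squared(x):
    """Hypothetical coefficient: bounded (so of linear growth), slope 2x cos(x^2) unbounded."""
    return np.sin(x ** 2)


# Usage: the estimated L_m keeps growing with m, staying below the bound 2m
radii = (1.0, 2.0, 5.0, 10.0)
estimates = [local_lipschitz_estimate(sin_x_squared, m) for m in radii]
```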

Theorem 2.

Under Assumptions 3 and 4, for arbitrary constants and , , assume that there exists an h satisfying

and that, for any , there exists a constant independent of h. Then, the modified EM solution (14) converges to the exact solution of mSVIE (7), that is,

Proof.

We define the error as

and the stopping time as

According to Theorem 1 and Lemma 7, for any , we have

where the constant is independent of and m. Next, we estimate the term in the last expression of inequality (16). By a Hölder-type inequality, we have

Using a Cauchy-Schwarz-type inequality and the Itô isometry, together with Assumptions 1, 2, and 4 and Lemmas 6 and 7, we have

Using a Cauchy-Schwarz-type inequality and the Itô isometry under Assumption 3, combined with Theorem 1, we have

Applying Lemma 7 and the weakly singular Gronwall-type inequality ([30], Theorem 3.3.1, page 349), we have

Note that the constant depends on m but is independent of h and . Then, (16) becomes

We can then choose h, , and

Next, let , for any given , such that

Hence, for arbitrary ,

and the proof is complete. □

Remark 2.

The limit in (15) establishes the strong convergence of the modified EM method (14). Unfortunately, it is challenging to derive the exact order of strong convergence since . Precise orders of strong convergence can only be obtained under the global Lipschitz condition. Based on Theorem 2, we investigate the strong convergence order under the local Lipschitz condition, which is inherently lower than that under the global Lipschitz condition.

5.2. Strong Convergence Order Analysis

The following theorem gives the strong convergence order of the modified EM method (14) and thus characterizes the efficiency of the numerical scheme.

Theorem 3.

Under Assumptions 3 and 4, let , , and suppose that there exists an h satisfying

Then, there exists a constant , which is independent of h and ϵ, such that

Proof.

From mSVIE (7) and (13), we have

Following the same steps as for inequalities (18) and (19), we also have

and

where , and is the same stopping time as before. Notice that

Applying a Young-type inequality with , we obtain

According to Theorem 2, Lemma 7, and the BDG inequality, we obtain

Thus, we choose , and

Then, we have

□

6. Numerical Experiments

In this section, we consider two examples to verify the strong convergence orders of our modified EM scheme (11). We define the mean-square error at the terminal time as

where denotes the ith sample path. Furthermore, the computed convergence order is defined as

Example 1.

We consider the following one-dimensional mSVIE:

Clearly, , , and are locally Lipschitz continuous with and satisfy the linear growth condition. For , we choose

We let and

Since and , the limit in (15) holds, and

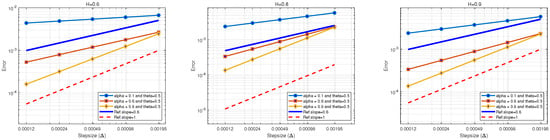

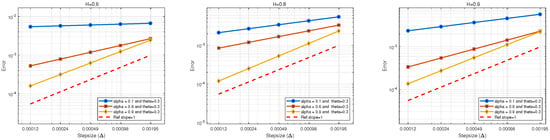

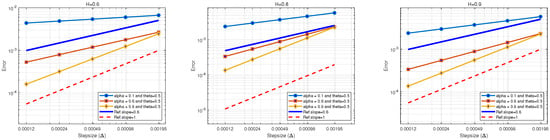

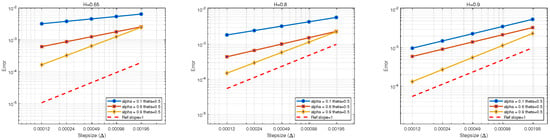

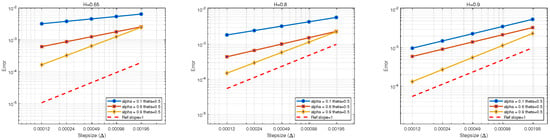

which means that satisfies the condition in Theorem 3. Figure 1 shows that the mean-square errors decrease as h decreases. Furthermore, the Hurst parameter H has a significant impact on the convergence order: a larger H results in a higher convergence order. Specifically, when , the strong convergence order of our modified EM method is close to 1, and in the cases and the strong convergence orders are also close to 1. To put this result in context, we recall Example 6.1 of [5], which is similar to our Example 1. Ref. [5] verified that the explicit EM method applied to SFIDEs achieves strong first-order convergence in the mean-square sense. The authors also pointed out that their convergence rate relied on the global Lipschitz condition and that proving the convergence rate under non-Lipschitz conditions remains an open problem. In our test with fixed and α varying, we find that a larger α results in a higher convergence order. The convergence orders for fixed and varying α are shown in Figure 2 and are consistent with the theoretical analysis. These numerical results confirm that the strong convergence order under the local Lipschitz condition is inherently lower than that under the global Lipschitz condition.
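When the exact solution has no closed form, a common practice is to use a fine-step run as the reference and to drive every coarser run with the same sample path. This is our assumption: the text does not state how the reference values were obtained. The increment of W or $B^H$ over a coarse step is exactly the sum of the fine increments inside it. The coarse increments can therefore be obtained by block summation, as in the hypothetical Python helper `coarsen_increments` below.

```python
import numpy as np


def coarsen_increments(fine_increments, factor):
    """Sum consecutive blocks of `factor` fine increments along axis 0.

    Works for Brownian and fBm increments alike, because the increment of a
    path over a coarse step equals the sum of its fine increments inside it.
    Extra axes (e.g. one column per Monte Carlo path) are preserved.
    """
    inc = np.asarray(fine_increments, dtype=float)
    n_fine = inc.shape[0]
    if n_fine % factor != 0:
        raise ValueError("the number of fine steps must be divisible by factor")
    return inc.reshape(n_fine // factor, factor, *inc.shape[1:]).sum(axis=1)


# Usage: fine Brownian increments for 4 paths, coarsened from h = 1/64 to h = 1/16
rng = np.random.default_rng(7)
h_fine = 1.0 / 64
dW_fine = rng.normal(0.0, np.sqrt(h_fine), size=(64, 4))
dW_coarse = coarsen_increments(dW_fine, 4)
```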

Figure 1.

(Left): the mean-square errors of the modified EM scheme with and . (Middle): the mean-square errors of the modified EM scheme with and . (Right): the mean-square errors of the modified EM scheme with and .

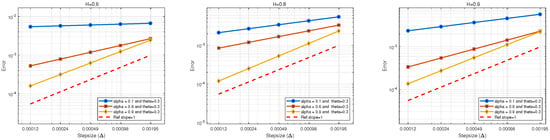

Figure 2.

(Left): the mean-square errors of the modified EM scheme with and . (Middle): the mean-square errors of the modified EM scheme with and . (Right): the mean-square errors of the modified EM scheme with and .

Example 2.

We consider the one-dimensional mixed fractional Volterra O-U (Ornstein-Uhlenbeck) equation (see also [31,32]), which is a special case of mSVIE (7) with Hölder continuous kernels. A mixed fractional Volterra Ornstein-Uhlenbeck equation is defined by

where .

According to Theorems 2 and 3, the convergence order is . We choose the following parameters:

The computed results are shown in Figure 3, which demonstrates that our modified EM method for the mixed fractional Volterra O-U equation can achieve strong first-order convergence when .
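Order estimates of the kind reported in Figures 1 to 3 can be computed with the following Python sketch. We assume the root-mean-square error $e_h = \big(\tfrac{1}{M}\sum_{i=1}^{M}|X^i_N - X^i(T)|^2\big)^{1/2}$ and the order $\log(e_{h_2}/e_{h_1})/\log(h_2/h_1)$ between successive step sizes, plus a least-squares slope over all step sizes. Both forms are our assumption, not a transcription of the definitions at the start of this section. If squared errors are used instead, the computed slopes double. The function names are hypothetical.

```python
import numpy as np


def rms_terminal_error(x_num, x_ref):
    """Root-mean-square error over Monte Carlo paths at the terminal time."""
    diff = np.asarray(x_num, dtype=float) - np.asarray(x_ref, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))


def observed_orders(step_sizes, errors):
    """Pairwise orders log(e2/e1)/log(h2/h1) and the least-squares log-log slope."""
    h = np.asarray(step_sizes, dtype=float)
    e = np.asarray(errors, dtype=float)
    pairwise = np.log(e[1:] / e[:-1]) / np.log(h[1:] / h[:-1])
    slope = np.polyfit(np.log(h), np.log(e), 1)[0]
    return pairwise, float(slope)


# Usage with synthetic errors e_h = 3 h^0.75 (made-up numbers, not results from this paper)
hs = np.array([2.0 ** -k for k in range(4, 9)])
pairwise, slope = observed_orders(hs, 3.0 * hs ** 0.75)
```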

Figure 3.

(Left): the mean-square errors of the modified EM scheme with and . (Middle): the mean-square errors of the modified EM scheme with and . (Right): the mean-square errors of the modified EM scheme with and .

Remark 3.

Examples 1 and 2 show that the convergence behavior of our modified EM scheme under the local Lipschitz condition is considerably more complex than under the global Lipschitz condition. The observed error consists of two components: the discretization error of the modified EM method and the statistical error of the Monte Carlo method. It is therefore challenging to derive the exact orders of strong convergence under the local Lipschitz condition, and they may not be observable at all. The precise orders of strong convergence can only be obtained under the global Lipschitz condition. We only show that the strong convergence order under the local Lipschitz condition is inherently lower than that under the global Lipschitz condition. Proving the convergence rate under non-Lipschitz conditions remains an open problem.
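One simple way to gauge the Monte Carlo component of the error is to report, alongside the root-mean-square error over M paths, its estimated standard error. This is our own sketch, not a procedure from this paper. The mean of the squared path errors has standard error $s/\sqrt{M}$, where $s$ is their sample standard deviation, and the delta method transfers this to the square root. The function name is hypothetical, and at least two paths are needed.

```python
import numpy as np


def rms_error_with_mc_se(x_num, x_ref):
    """RMS error over M >= 2 paths and its approximate Monte Carlo standard error.

    The mean of the squared errors has standard error s / sqrt(M) (s: sample
    standard deviation of the squared errors); the delta method for sqrt
    divides this by 2 * RMS.
    """
    sq = (np.asarray(x_num, dtype=float) - np.asarray(x_ref, dtype=float)) ** 2
    n_paths = sq.size
    mean_sq = sq.mean()
    se_mean_sq = sq.std(ddof=1) / np.sqrt(n_paths)
    rms = np.sqrt(mean_sq)
    se_rms = se_mean_sq / (2.0 * rms) if rms > 0.0 else 0.0
    return float(rms), float(se_rms)


# Usage with made-up terminal values for M = 4 paths
rms, se = rms_error_with_mc_se([1.02, 0.97, 1.05, 0.99], [1.0, 1.0, 1.0, 1.0])
```

Suppose the error reduction between two successive step sizes is not clearly larger than a few standard errors. The observed order over that range is then dominated by Monte Carlo noise rather than by the discretization.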

7. Conclusions

In this study, we relaxed the global Lipschitz condition to a local Lipschitz condition and established the strong convergence order of a modified EM method for mSFIEs. We first transformed the mSFIEs into equivalent mSVIEs using a fractional calculus technique and then proved the well-posedness of the analytical solutions of mSFIEs with weakly singular kernels. Moreover, a modified EM method was developed for numerically solving mSVIEs, and both the well-posedness and the strong convergence were proven under local Lipschitz and linear growth conditions. Furthermore, we obtained the convergence order of this method under the same conditions in the mean-square sense. Notably, the strong convergence order under local Lipschitz conditions is inherently lower than the corresponding order under global Lipschitz conditions. Finally, numerical experiments demonstrated that our approach not only circumvents the restrictive integrability conditions imposed by singular kernels but also achieves a rigorous convergence order in the mean-square sense.

Author Contributions

Z.Y.: conceptualization, methodology, software, validation, formal analysis, investigation, writing—original draft preparation, project administration, and funding acquisition; C.X.: methodology, writing—review and editing, visualization, and supervision. All authors have read and agreed to the published version of the manuscript.

Funding

The authors are supported financially by the National Natural Science Foundation of China (No. 72361016); the Financial Statistics Research Integration Team of Lanzhou University of Finance and Economics (No. XKKYRHTD202304); and the General Scientific Research Project of Lzufe (Nos. Lzufe2017C-09 and 2019C-009).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No data are included in this article.

Acknowledgments

The authors would like to thank the anonymous referee and the editor for their helpful comments and valuable suggestions that led to several important improvements.

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. The Proof of Lemma 1

We let and for . Taking any , for , there exists a constant such that

and for , we also have

and hence,

that is,

Appendix B. The Proof of Lemma 2

Let . We write (8) as

Obviously,

and

Furthermore,

where

is the -Hölder constant of .

Similarly,

where

is the Hölder constant of .

Combining the above estimates, for , we have

Appendix C. The Proof of Lemma 3

We first consider the boundedness of . Using Lemma 2 and the Gronwall-type inequality, for , we have

where

is the -Hölder constant of . Then, according to Lemma 1.17.1 in [2] (page 88; estimates for fractional derivatives of fBm and the Wiener process via the Garsia-Rodemich-Rumsey inequality), we may assume that, for arbitrary ,

Then, for , we have

Obviously,

Next, we prove the case for . We define the following stopping time:

where the integer , as . We set , for all . Under Assumption 4 and (A2), we have

where is independent of N and . Then, for , we have

Using the weakly singular Gronwall-type inequality [30] (Theorem 3.3.1, page 349), we have

and, by Fatou's lemma, for , (A3) implies that

For , the result follows from a Cauchy-Schwarz-type inequality together with Assumption 4 and (A2).

Appendix D. The Proof of Lemma 4

Using mSVIE (11), we have

Applying a Hölder-type inequality, Assumptions 1 and 4, and Lemmas 2 and 3, we have

and

Applying the Burkholder-Davis-Gundy (BDG)-type inequality (Theorem 7.3 of [20], page 40), we also have

Observing that , if , then , and for ,

Using (A2), we also estimate as follows:

Hence,

Appendix E. The Proof of Theorem 1

Starting from the global Lipschitz case (Remark 1) and inspired by Theorem 3.4 of [20] (Section 2.3, page 56), we define the following truncation function:

For each , satisfies the Lipschitz and linear growth conditions, and there is a unique solution to the equation

We can define the stopping time as

Note that is increasing and

We use the linear growth condition to show that, for almost all , there exists an integer such that

Let ; then, is a solution of mSFIE (1).
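The truncation argument above follows the classical construction in Mao [20]. As an illustration, the Python sketch below wraps an arbitrary coefficient using the usual radial form $f_m(t,x) = f\big(t, (|x|\wedge m)\,x/|x|\big)$; this exact form is our assumption. The radial projection onto the ball $\{|x|\le m\}$ is non-expansive. A coefficient that is Lipschitz on that ball with constant $L_m$ therefore becomes globally Lipschitz with the same constant after truncation, which is what allows the global-Lipschitz theory to be applied for each m. The helper and the example drift are hypothetical.

```python
import numpy as np


def truncate_coefficient(coef, m):
    """Return coef_m(t, x) = coef(t, x) if |x| <= m and coef(t, m * x / |x|) otherwise.

    Hypothetical helper illustrating a radial truncation; x may be a scalar or a vector.
    """
    def coef_m(t, x):
        x = np.asarray(x, dtype=float)
        norm = float(np.sqrt(np.sum(x * x)))   # Euclidean norm (absolute value for scalars)
        if norm > m:
            x = x * (m / norm)                 # radial projection onto the sphere |x| = m
        return coef(t, x)
    return coef_m


def x_cos_x(t, x):
    """Hypothetical drift: |x cos x| <= |x| (linear growth), but its slope is unbounded."""
    return x * np.cos(x)


# Usage: outside the ball of radius 2 the truncated drift is frozen at the boundary values
drift_2 = truncate_coefficient(x_cos_x, m=2.0)
```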

Uniqueness. Let and be two solutions of mSFIE (1) on with . According to Lemma 2, and are solutions to mSVIE (7), and according to Lemma 3, for , we have

Hence, for arbitrary , using the weakly singular Gronwall-type inequality [30] (Theorem 3.3.1, page 349), we have

which means

Uniqueness has been obtained.

Existence. We let . Using mSVIE (11) and estimating (A4), for any , we have

Next, we show that is a Cauchy sequence almost surely and has a limit in . We first construct a Picard sequence , which satisfies mSFIE (1), and let , , that is,

- Step 1: The Picard sequence . For arbitrary , using inequality (15), let . Then, we have

- Step 2: The Picard sequence is a Cauchy sequence almost surely. We define arbitrary and . We need to argue that, for any , for , or
  We write
  Using the Cauchy-Schwarz inequality and Lemma 1, we have the estimate
  and
  Furthermore, according to Lemma 2,
  Similarly, according to Lemma 2,
  Notice that
  and thus,
  Combining (A3), (12), and (A5), we have
  This means that a.s.; hence, a.s. for , and in particular a.s. as for . We conclude that the Picard sequence is almost surely a Cauchy sequence.

Moreover, according to Lemma 4, we have

Then, the process is a continuous solution of mSFIE (1). According to Lemma 3, let , and then for any ,

Appendix F. The Proof of Lemma 5

According to Lemma 2, we have

Similarly,

The proof is complete.

Appendix G. The Proof of Lemma 7

Using the modified EM method (14), there exists a unique integer n for and . For arbitrary , we have

Applying a Hölder-type inequality, Assumptions 1 and 4, and Lemmas 5 and 6, we have

and

Applying the BDG-type inequality, we also have

Similarly,

Then,

and hence,

and the proof is complete.

References

- Mounir, Z.L. On the mixed fractional Brownian motion. J. Appl. Math. Stoch. Anal. 2006, 1, 1–9.

- Mishura, Y.S. Stochastic Calculus for Fractional Brownian Motion and Related Processes; Springer: Berlin, Germany, 2008.

- Liu, W.G.; Luo, J.W. Modified Euler approximation of stochastic differential equation driven by Brownian motion and fractional Brownian motion. Commun. Stat-Theor. Meth. 2017, 46, 7427–7443.

- Liang, H.; Yang, Z.W.; Gao, J.F. Strong superconvergence of the Euler-Maruyama method for linear stochastic Volterra integral equations. J. Comput. Appl. Math. 2017, 317, 447–457.

- Dai, X.J.; Bu, W.P.; Xiao, A.G. Well-posedness and EM approximations for non-Lipschitz stochastic fractional integro-differential equations. J. Comput. Appl. Math. 2019, 356, 377–390.

- Zhang, J.N.; Lv, J.Y.; Huang, J.F.; Tang, Y.F. A fast Euler-Maruyama method for Riemann-Liouville stochastic fractional nonlinear differential equations. Phys. D 2023, 446, 133685.

- Huang, J.F.; Shao, L.X.; Liu, J.H. Euler-Maruyama methods for Caputo tempered fractional stochastic differential equations. Int. J. Comput. Math. 2024, 101, 1113–1131.

- Liu, W.; Mao, X.R. Strong convergence of the stopped Euler-Maruyama method for nonlinear stochastic differential equations. Appl. Math. Comput. 2013, 223, 389–400.

- Song, M.H.; Hu, L.J.; Mao, X.R.; Zhang, L.G. Khasminskii-type theorems for stochastic functional differential equations. Discrete. Cont. Dyn-B 2013, 18, 1697–1714.

- Mao, X.R.; Szpruch, L. Strong convergence and stability of implicit numerical methods for stochastic differential equations with non-globally Lipschitz continuous coefficients. J. Comput. Appl. Math. 2013, 238, 14–28.

- Mao, X.R. The truncated Euler-Maruyama method for stochastic differential equations. J. Comput. Appl. Math. 2015, 290, 370–384.

- Milstein, G.N.; Tretyakov, M.V. Stochastic Numerics for Mathematical Physics; Springer: Berlin, Germany, 2004.

- Wang, X.J.; Gan, S.Q. The tamed Milstein method for commutative stochastic differential equations with non-globally Lipschitz continuous coefficients. J. Difference Equ. Appl. 2013, 19, 466–490.

- Wang, X.J.; Gan, S.Q.; Wang, D.S. θ-Maruyama methods for nonlinear stochastic differential delay equations. Appl. Num. Math. 2015, 98, 38–58.

- Li, M.; Huang, C.M.; Hu, Y.Z. Numerical methods for stochastic Volterra integral equations with weakly singular kernels. IMA J. Numer. Anal. 2022, 42, 2656–2683.

- Hutzenthaler, M.; Jentzen, A.; Kloeden, P.E. Strong convergence of an explicit numerical method for SDEs with nonglobally Lipschitz continuous coefficients. Ann. Appl. Proba. 2012, 22, 1611–1641.

- Kamrani, M.; Jamshidi, N. Implicit Euler approximation of stochastic evolution equations with fractional Brownian motion. Commun. Nonlinear Sci. Numer. Simu. 2017, 44, 1–10.

- Anh, P.; Doan, T.; Huong, P. A variation of constant formula for Caputo fractional stochastic differential equations. Stat. Proba. Lett. 2019, 145, 351–358.

- Ford, N.J.; Pedas, A.; Vikerpuur, M. High order approximations of solutions to initial value problems for linear fractional integro-differential equations. Fract. Calc. Appl. Anal. 2023, 26, 2069–2100.

- Mao, X.R. Stochastic Differential Equations and Applications; Horwood Pub Ltd.: Chichester, UK, 1997.

- Higham, D.J.; Mao, X.R.; Stuart, A. Strong convergence of Euler-type methods for nonlinear stochastic differential equations. SIAM J. Numer. Anal. 2003, 40, 1041–1063.

- Yang, Z.W.; Yang, H.Z.; Yao, Z.C. Strong convergence analysis for Volterra integro-differential equations with fractional Brownian motions. J. Comput. Appl. Math. 2021, 383, 113156.

- Liu, W.G.; Jiang, Y.; Li, Z. Rate of convergence of Euler approximation of time-dependent mixed SDEs driven by Brownian motions and fractional Brownian motions. AIMS Math. 2020, 5, 2163–2195.

- Mishura, Y.; Shevchenko, G. Mixed stochastic differential equations with long-range dependence: Existence, uniqueness and convergence of solutions. Comput. Math. Appl. 2012, 64, 3217–3227.

- Nualart, D. The Malliavin Calculus and Related Topics (Probability and Its Applications), 2nd ed.; Springer: Berlin, Germany, 2006.

- Biagini, F.; Hu, Y.Z.; Øksendal, B.; Zhang, T.S. Stochastic Calculus for Fractional Brownian Motion and Applications; Cambridge University Press: London, UK, 2008.

- Li, L.; Liu, J.G.; Lu, J.F. Fractional stochastic differential equations satisfying fluctuation-dissipation theorem. J. Stat. Phys. 2017, 169, 316–339.

- Costabile, C.; Massabó, I.; Russo, E.; Staino, A. Lattice-based model for pricing contingent claims under mixed fractional Brownian motion. Commun. Nonlinear Sci. Num. Sim. 2023, 118, 107042.

- Lan, G.Q.; Xia, F. Strong convergence rates of modified truncated EM method for stochastic differential equations. J. Comput. Appl. Math. 2018, 334, 1–17.

- Qin, Y.M. Integral and Discrete Inequalities and Their Applications, Volume I: Linear Inequalities; Springer: Berna, Switzerland, 2016.

- Rao, B.L.S.P. Parametric estimation for linear stochastic differential equations driven by mixed fractional Brownian motion. Stoch. Anal. Appl. 2018, 36, 767–781.

- Alazemi, F.; Douissi, S.; Sebaiy, K.E. Berry-Esseen bounds and ASCLTs for drift parameter estimator of mixed fractional Ornstein-Uhlenbeck process with discrete observations. Theor. Proba. Appl. 2019, 64, 401–420.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).