Abstract

This study investigates the fixed-time (FXT) and predefined-time (PDT) synchronization of impulsive fractional-order bidirectional associative memory (BAM) neural networks with diffusion terms, where the fractional derivative is the Caputo derivative of order between 0 and 1. First, we present certain properties of fractional-order calculus and several lemmas on the FXT and PDT stability of general impulsive nonlinear systems. Subsequently, we use a novel controller and Lyapunov functions to establish new sufficient criteria for achieving FXT and PDT synchronization. Finally, a numerical simulation is presented to verify the theoretical results.

Keywords:

fractional-order; impulse effect; fixed-time synchronization; predefined-time synchronization

MSC:

03B52; 34A37; 34D06; 34D20

1. Introduction

Neural networks are a cornerstone of artificial intelligence, mimicking the human brain to address technological challenges and pave the way for new discoveries. These networks are instrumental in information processing, pattern classification, and cognitive control. Through mathematical modeling, we can examine and replicate brain functions in artificial systems, such as robotics. Both theoretical and practical research are essential to advance the field of dynamic neural networks.

Recently, fractional-order neural networks have become popular among scholars because fractional calculus can explain phenomena that classical calculus cannot, such as random errors and unusual diffusion processes. Their memory and hereditary properties make them valuable in various fields, including blood flow, electrolysis, and viscosity [1]. These fractional calculus properties, which allow neural networks to exhibit characteristics such as memory and heredity, demonstrate advantages over traditional integer-order derivatives when applied to various processes. These networks possess infinite memory, and the adjustment of the fractional parameters enhances the device output by increasing the degrees of freedom. They excel in information processing, parameter estimation, and diverse artificial intelligence applications and are comparable to biological networks [2,3] and geometric models [4]. Additionally, fractional-order derivatives offer more accurate depictions of chaotic behavior and a variety of nonlinear phenomena, because fractional-order derivatives exhibit global correlation and can better describe processes with strong historical dependence. Consequently, in recent years, a significant number of studies have been presented focusing on fractional-order chaotic systems [5,6]. With the increasing complexity of economic issues in the financial field, which are influenced by nonlinear factors, financial systems exhibit highly intricate phenomena. Therefore, it is essential to investigate the dynamic characteristics and effects of chaos in intricate financial systems [7]. Fractional-order models can better capture the complex behaviors and interactions within financial markets or provide more accurate risk assessments and predictions. In [8], variable-order time-fractional generalized Navier–Stokes equations were introduced to describe the anomalous dynamics in porous flows to reveal the impact of the fractional order on fluid flow in porous media. 
Other works detailed the use of fractional-order systems in the encryption of images [9,10,11].

On the other hand, reaction–diffusion terms are indispensable in various scientific domains, including image encryption [12,13], pattern formation, biology [14], and chemistry. In particular, equipping bidirectional associative memory (BAM) neural networks with reaction–diffusion terms enhances their ability to store and recall the associations between input and output patterns, making them effective tools for pattern recognition [15] and associative memory tasks [16]. Ali et al. [17] examined the synchronization of fractional-order BAM neural networks with fuzzy terms and time-varying delays and presented sufficient conditions. Lin et al. [18] investigated the synchronization issue of BAM neural networks with diffusion and time-varying delays and achieved spatio-temporal synchronization by proposing an impulsive pinning controller. Chen et al. [19] investigated the global exponential synchronization of BAM memristive neural networks with mixed delays and reaction–diffusion terms based on a new integral inequality with an infinite distributed delay. Furthermore, in certain networks, especially biological neural networks, there are instances where we must precisely capture the propagation of electrical signals and the diffusion of neurotransmitters within a network of neurons. To achieve this, we must consider the impact of previous states and the spatial arrangement of neurons. Therefore, we focus on fractional-order BAM neural networks subject to reaction–diffusion terms.

However, the aforementioned studies neglected abrupt changes in the system. Many motion processes in nature undergo sudden changes whose duration is very short compared with that of the entire process; we refer to this phenomenon as the impulse effect. Impulse effects are significant in many engineering systems, such as instantaneous transitions or resets in system states, video encoding, image encryption [20,21], and natural language processing [22]. Several studies have been conducted on impulse phenomena [16,22,23]. In [23], the synchronization of linearly coupled memristor-based recurrent neural networks with impulses was investigated. In [22], the authors provided sufficient conditions for the asymptotic stability of impulsive stochastic systems. In [16], new stability criteria for reaction–diffusion impulsive neural networks were developed.

Synchronization is also a critical element in numerous natural processes, and it holds paramount importance within neural network systems. The analysis of the synchronization between two systems can be encapsulated in the stability of an error system. Particularly in the realm of fractional-order neural networks, synchronization is vital for preserving the stability, performance, and communication among linked networks. It improves the precision and efficiency of computations, enhancing performance in tasks such as signal processing, pattern recognition, and control systems. Many studies have explored this field; for instance, Pratap et al. [24] investigated the asymptotic synchronization of Cohen–Grossberg fractional-order neural networks and confirmed the global existence of asymptotically stable solutions. Velmurugan et al. [25] analyzed finite-time synchronization conditions for fractional-order memristor-based neural networks using Laplace transforms and Mittag–Leffler functions. Subsequently, Du and Lu [26] introduced a fractional-order Gronwall inequality for finite-time synchronization of fractional-order memristor-based neural networks with a time delay. However, finite-time synchronization has limitations such as the settling time depending on the initial conditions, which are often unknown in practical applications, complicating the determination of precise convergence times. To address these limitations, the concept of fixed-time (FXT) stability was introduced by Polyakov [27] and has been applied to various neural network systems, including those of fractional-order, with reaction–diffusion and impulsive terms [5,6,7,20]. Nevertheless, there is a paucity of studies on the synchronization of fractional-order impulsive neural networks incorporating reaction–diffusion terms. Building upon preceding work, this study explores the FXT and predefined-time (PDT) synchronizations in impulsive fractional-order systems with reaction–diffusion components. 
The main contributions of this study are as follows:

- We present a novel controller to establish a sufficient condition for reaching FXT and PDT synchronizations in fractional-order impulsive neural networks with diffusion terms.

- We establish the robustness of the FXT and PDT synchronization approaches against fluctuations in parameter configurations.

- We demonstrate the influence of the fractional-order parameter on the synchronization of the given system.

The organization of the paper is laid out as follows: Section 2 provides the necessary definitions, lemmas, and details of the systems under study, which are vital for the proof of the main results in the following section. Section 3 describes the design of a new controller intended for FXT and PDT synchronizations in impulsive reaction–diffusion fractional-order neural networks. Section 4 presents an evaluation of the effectiveness of the theoretical findings introduced in this study. Finally, Section 5 concludes the paper with a summary of the main points.

2. Preliminaries

2.1. Theoretical Background

The study of fractional-order derivatives has gained prominence with the development of mathematical analysis. There are various definitions of fractional-order derivatives, including the Caputo derivative and the Riemann–Liouville derivative. The specific definitions are as follows [28]:

- Riemann–Liouville integral for :

- Riemann–Liouville derivative for :

- Caputo derivative:
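For reference, the standard textbook forms of these three operators can be written as below. The notation is an assumption made here for illustration ($\alpha$ for the order, $t_0$ for the lower terminal, $n$ for the integer satisfying $n-1<\alpha<n$) and may differ from the symbols used elsewhere in this paper.

```latex
\begin{aligned}
% Riemann–Liouville integral of order \alpha > 0
{}_{t_0}I_t^{\alpha} f(t) &= \frac{1}{\Gamma(\alpha)} \int_{t_0}^{t} (t-s)^{\alpha-1} f(s)\,\mathrm{d}s, \\
% Riemann–Liouville derivative, n-1 < \alpha < n
{}^{RL}_{t_0}D_t^{\alpha} f(t) &= \frac{1}{\Gamma(n-\alpha)} \frac{\mathrm{d}^n}{\mathrm{d}t^n} \int_{t_0}^{t} (t-s)^{n-\alpha-1} f(s)\,\mathrm{d}s, \\
% Caputo derivative, n-1 < \alpha < n
{}^{C}_{t_0}D_t^{\alpha} f(t) &= \frac{1}{\Gamma(n-\alpha)} \int_{t_0}^{t} (t-s)^{n-\alpha-1} f^{(n)}(s)\,\mathrm{d}s.
\end{aligned}
```

For an order between 0 and 1 one has $n=1$, so the Caputo derivative involves only the first derivative of $f$ under the integral.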

Our main focus is on the Caputo derivative with order between 0 and 1. We now present the lemmas and properties that facilitate the derivation of the main results.

Property 1

([29]). The following property can potentially be met for ,

Property 2

([30]). The Caputo fractional derivative of the satisfies the following inequality:

where , .

Lemma 1

([31]). The equality

holds true if , where .

Lemma 2

([32]). Given that , , and for , the subsequent inequalities are valid:
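Inequalities of this kind are commonly stated in the FXT literature as $\sum_i a_i^p \ge (\sum_i a_i)^p$ for $0<p\le 1$ and $\sum_i a_i^q \ge n^{1-q}(\sum_i a_i)^q$ for $q>1$, with $a_i \ge 0$. Whether Lemma 2 takes exactly this form is an assumption; the sketch below only spot-checks these two standard inequalities numerically, and the function name `power_sum_gaps` is hypothetical.

```python
import numpy as np

def power_sum_gaps(a, p, q):
    """Return (sum a_i^p - (sum a_i)^p, sum a_i^q - n^(1-q) (sum a_i)^q).

    Both gaps should be non-negative for a_i >= 0, 0 < p <= 1 and q > 1.
    """
    a = np.asarray(a, dtype=float)
    n = a.size
    s = a.sum()
    gap_p = np.sum(a**p) - s**p
    gap_q = np.sum(a**q) - n ** (1.0 - q) * s**q
    return gap_p, gap_q

# Random spot check over vector lengths 1..7 and admissible exponents.
rng = np.random.default_rng(0)
for _ in range(1000):
    a = rng.uniform(0.0, 5.0, size=rng.integers(1, 8))
    gp, gq = power_sum_gaps(a, p=rng.uniform(0.05, 1.0), q=rng.uniform(1.05, 3.0))
    assert gp >= -1e-9 and gq >= -1e-9  # small tolerance for rounding
```

A random check is of course no proof; it is only meant to make the direction of each inequality easy to remember.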

2.2. System Description

Throughout this study, we consider the following class of nonlinear impulsive neural networks characterized by fractional-order dynamics and the inclusion of diffusion terms:

for , , where the numbers of neurons are represented by n and m, , and l is the space dimension. The state variables for the th neuron and the th neuron at time t and spatial location x are represented by and , respectively. The self-inhibition rates of the neurons are denoted by the positive constants and . The synaptic connection weights are represented by the constants and . The activation functions for the neurons are and . The biases of the neurons are given by the variables and . Additionally, and are positive constants. The functions and describe the impulse jumps that take place at the specific impulse moments . The sequence is strictly increasing and satisfies the condition .

The initial and boundary conditions for the system (1) are detailed as

for , , where and are bounded continuous functions.

The response system for the drive system can be introduced as

for , , where and represent the state variables of the system (2). The controllers, denoted as , , will be designed in the subsequent section.

The initial and boundary conditions for the system (2) are detailed as

where the functions and are both continuous and bounded. Let , , and the paper maintains the following consistent assumptions.

Assumption 1.

There exist constants and such that the activation functions and satisfy

where and are arbitrary real constants.

Here, we delineate the essential definitions and supporting lemmas that form the basis of our main results. Consider the system described below:

where the state vector of the system is denoted by . is a given continuous function with the condition that . is a function that is both continuously differentiable and locally Lipschitz and meets the condition .

Definition 1

([32,33]). The zero solution of the system (4) is called FXT-stable if the solution starting from the initial condition meets the following criteria:

- (i)

- Lyapunov stable. For any , there is a such that for any and ;

- (ii)

- Finite-time convergence. There exists a function , called the settling time (ST) function, such that and for all ;

- (iii)

- is bounded. There exist such that for all .
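To make item (iii) concrete, the sketch below uses the classical non-impulsive scalar comparison inequality $\dot V \le -aV^p - bV^q$ with $0<p<1<q$, for which the settling time is known to be bounded by $1/(a(1-p)) + 1/(b(q-1))$ regardless of $V(0)$. This is an illustration under stated assumptions, not Lemma 3 of this paper: the gains, exponents, step size, and function names are all hypothetical, and impulses are ignored.

```python
import math

def fxt_bound(a, b, p, q):
    """Classical settling-time bound for dV/dt <= -a V^p - b V^q, 0 < p < 1 < q."""
    return 1.0 / (a * (1.0 - p)) + 1.0 / (b * (q - 1.0))

def settling_time(v0, a=1.0, b=1.0, p=0.5, q=1.5, dt=1e-4, tol=1e-9, t_max=50.0):
    """Explicit-Euler estimate of the first time V drops below tol."""
    v, t = float(v0), 0.0
    while v > tol and t < t_max:
        # Clamp at zero: V is a Lyapunov-like quantity and cannot go negative.
        v = max(v - dt * (a * v**p + b * v**q), 0.0)
        t += dt
    return t

# The settling time grows with V(0) but stays below the same bound.
for v0 in (0.1, 1.0, 10.0, 1e3, 1e5):
    print(f"V(0) = {v0:>8}: T ~ {settling_time(v0):.4f}, bound = {fxt_bound(1.0, 1.0, 0.5, 1.5):.4f}")
```

For this particular choice ($a=b=1$, $p=1/2$, $q=3/2$) the substitution $W=\sqrt{V}$ gives the exact settling time $2\arctan\sqrt{V(0)} < \pi$, which sits below the bound of 4 for every initial value.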

Definition 2

([34]). The zero solution of system (4) is PDT-stable if it exhibits FXT stability for any initial condition and for any given time holds true.

Lemma 3

([33]). If a Lyapunov function exists and satisfies

- (i)

- , ;

- (ii)

In Lemma 3, denotes the average impulse interval, and is a positive constant. For the detailed definitions of and , refer to Definition 2 in the reference [32].

Lemma 4

([34]). If a Lyapunov function exists and satisfies

- (i)

- , ;

- (ii)

then the system (4) will be PDT-stable with a preassigned time , where is given as , , . are described in Lemma 3.
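The idea behind a preassigned time can be sketched on the classical non-impulsive scalar inequality $\dot V \le -\frac{T_{\max}}{T_c}\,(aV^p + bV^q)$, where $T_{\max} = 1/(a(1-p)) + 1/(b(q-1))$: rescaling the gains by $T_{\max}/T_c$ compresses the fixed-time bound to the chosen $T_c$. This is an assumed simplified analogue of Lemma 4 without impulses; the symbols `t_c`, the gains, and the function names are hypothetical.

```python
def fxt_bound(a, b, p, q):
    """Classical fixed-time bound T_max for dV/dt <= -a V^p - b V^q."""
    return 1.0 / (a * (1.0 - p)) + 1.0 / (b * (q - 1.0))

def pdt_settling_time(v0, t_c, a=1.0, b=1.0, p=0.5, q=1.5, dt=1e-5, tol=1e-9):
    """Euler estimate of the settling time when the gains are scaled by T_max / t_c."""
    gain = fxt_bound(a, b, p, q) / t_c
    v, t = float(v0), 0.0
    while v > tol and t < 2.0 * t_c:
        v = max(v - dt * gain * (a * v**p + b * v**q), 0.0)
        t += dt
    return t

# The user picks t_c; every trajectory should settle before it.
for t_c in (0.5, 0.2):
    times = [pdt_settling_time(v0, t_c) for v0 in (0.1, 1.0, 100.0, 1e4)]
    print(f"t_c = {t_c}: settling times = {[round(t, 4) for t in times]}")
```

The design choice is that the settling-time bound becomes a tuning parameter of the controller rather than a quantity computed afterwards from the gains.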

2.3. Fractional-Order Lyapunov Exponent

Lyapunov exponents are invaluable tools for characterizing chaos, analyzing stability, predicting critical transitions, and efficiently computing the properties of chaotic attractors. Their applications extend from theoretical studies to practical data-driven approaches in various scientific fields. For example, the largest Lyapunov exponent determines the dominant rate of divergence or convergence of trajectories, while the full spectrum of Lyapunov exponents can reveal the overall stability characteristics of the system. In chaotic systems, the presence of at least one positive Lyapunov exponent confirms the existence of chaotic dynamics [35,36]. Now, we introduce the Lyapunov exponents of a fractional-order system , where , are real-valued n-dimensional vectors. Its Lyapunov exponents are defined as [35]

with , and initial value (I is the n × n identity matrix). For more details, refer to [35].

3. Main Results

We present a pair of pivotal theorems that are instrumental in achieving FXT and PDT synchronizations within the system under consideration.

3.1. FXT Synchronization

The controllers and are designed as

where , , and are positive constants, , . and are given as follows:

Theorem 1.

Proof.

In this paper, we construct the Lyapunov function below:

By the definition of , and by applying Property 1 and Property 2, the derivative of the Lyapunov function can be handled as

Then, substituting (7) into the above inequality, it follows that

First, by utilizing Green’s identities along with the model’s boundary condition for the diffusion term, we have
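The mechanism behind this step can be checked on a grid. The sketch below verifies the discrete counterpart of $\int_\Omega e\,\Delta e\,\mathrm{d}x = -\int_\Omega |\nabla e|^2\,\mathrm{d}x$, which is what Green's identity yields when the boundary term vanishes. One space dimension, homogeneous Dirichlet boundary values, a unit diffusion coefficient, and the quadratic pairing $e\,\Delta e$ are all assumptions made for illustration; the exact form used in the proof depends on the paper's Lyapunov function and boundary condition.

```python
import numpy as np

def diffusion_pairing(e, h):
    """Discrete version of the integral of e * e_xx over the interior nodes."""
    lap = (e[2:] - 2.0 * e[1:-1] + e[:-2]) / h**2  # central second difference
    return h * np.sum(e[1:-1] * lap)

def gradient_energy(e, h):
    """Discrete version of the integral of (e_x)^2 using forward differences."""
    return h * np.sum((np.diff(e) / h) ** 2)

x = np.linspace(0.0, 1.0, 201)
h = x[1] - x[0]
e = np.sin(np.pi * x) * np.cos(3.0 * x)  # hypothetical error profile
e[0] = e[-1] = 0.0                       # enforce the Dirichlet boundary values

lhs = diffusion_pairing(e, h)
rhs = -gradient_energy(e, h)
print(lhs, rhs)  # the two values agree up to rounding and are non-positive
```

Because the pairing is non-positive, the diffusion term can only help the decay of such a functional, which is why it is typically bounded above by zero or by a negative quadratic term in proofs of this type.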

Then, in view of Cauchy’s inequality and Assumption 1, we have

Furthermore, for the controller term, we have

Similarly, one can obtain

Then, by substituting (9), (11), (12) into (10), we can obtain the following inequality for ,

where and . Similarly, we have the subsequent inequality for ,

where and .

If the following inequalities hold true,

and by applying Lemma 1, we can derive the following inequality,

where and .

Remark 1.

In prior studies, particularly those involving neural networks incorporating reaction–diffusion terms [37,38], the scholars applied inequality . According to Hölder’s inequality [39], inequality holds for , , where stands for the volume of the bounded compact set Ω with the smooth boundary . However, in [37,38], the authors did not show the necessary condition for values of p in . In this paper, we present a novel controller and Lyapunov function that enables us to bypass this inequality during the proof.

3.2. PDT Synchronization

As mentioned in the Introduction, PDT synchronization is necessary in some situations. Here, we consider the PDT synchronization of the drive–response systems (1) and (2). To achieve PDT synchronization, we redesign the controllers and as

Theorem 2.

Proof.

In this paper, we construct the Lyapunov function as shown below:

By the definition of , and by applying Property 1 and Property 2, the derivative of the Lyapunov function can be described as

Then, substituting (19) into the above inequality, it follows that

Similar to Theorem 1, by using inequalities (9)–(12), we can obtain that

where , . Similarly, we have the subsequent inequality for ,

where and .

Suppose the following inequalities hold:

and by applying Lemma 1, we can derive the following inequality,

where and . Based on inequalities (16) and (23), and in accordance with Lemma 4, the drive–response system detailed in (1) and (2) attains PDT synchronization under the controller (19). The proof is complete. □

Remark 2.

Most synchronization results for fractional-order neural networks, such as those reported in [2,26], are characterized by infinite-time synchronization, also known as asymptotic synchronization. However, infinite-time synchronization may not meet specific practical requirements, particularly in terms of speed and precision. Consequently, the studies in [5,20,26,29] focused on finite-time and FXT synchronization in fractional-order neural networks. Building on this foundation, we investigate the FXT and PDT synchronization of impulsive fractional-order BAM neural networks incorporating reaction–diffusion terms. Definitions 1 and 2 outline FXT and PDT stability, respectively, revealing that PDT synchronization is an extension of FXT synchronization. This approach allows us to tailor the control strategy to meet actual engineering requirements. Therefore, the synchronization criterion presented in this paper offers greater flexibility and broader applicability than those in [5,20,29].

4. Numerical Examples

The simulations are conducted using Python. The -th-order derivatives are computed through the Grünwald–Letnikov method, since, when , the Grünwald–Letnikov and Caputo fractional-order derivatives are treated as equivalent in practical applications [28,40]. To discretize the fractional-order diffusion equation, the L1 approximation method is employed [41]. This section presents a numerical example, covering both the FXT and PDT cases, to confirm the correctness of the analytical findings presented in the previous section.
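The two discretizations named above can be sketched as follows. This is a minimal illustration under stated assumptions, not the simulation code used for the figures: a uniform time grid, an order strictly between 0 and 1, and the hypothetical value `alpha = 0.8`. Function names are illustrative. The Grünwald–Letnikov sum is applied to $f - f(0)$ so that it approximates the Caputo derivative.

```python
import math
import numpy as np

def gl_weights(alpha, n):
    """Grünwald–Letnikov weights w_j = (-1)^j * binom(alpha, j) for j = 0..n."""
    w = np.empty(n + 1)
    w[0] = 1.0
    for j in range(1, n + 1):
        w[j] = w[j - 1] * (1.0 - (alpha + 1.0) / j)
    return w

def gl_caputo(f_vals, alpha, h):
    """GL approximation of the Caputo derivative at every grid point."""
    g = f_vals - f_vals[0]  # subtracting f(0) turns the RL-type sum into Caputo
    n = len(g) - 1
    w = gl_weights(alpha, n)
    out = np.zeros(n + 1)
    for k in range(1, n + 1):
        out[k] = np.dot(w[: k + 1], g[k::-1]) / h**alpha
    return out

def l1_caputo(f_vals, alpha, h):
    """L1 approximation: piecewise-linear interpolation of f inside the Caputo integral."""
    n = len(f_vals) - 1
    j = np.arange(n + 1)
    b = (j + 1.0) ** (1.0 - alpha) - j ** (1.0 - alpha)
    df = np.diff(f_vals)
    c = h ** (-alpha) / math.gamma(2.0 - alpha)
    out = np.zeros(n + 1)
    for k in range(1, n + 1):
        # sum_{j=0}^{k-1} b_j * (f_{k-j} - f_{k-j-1})
        out[k] = c * np.dot(b[:k], df[k - 1 :: -1])
    return out

alpha = 0.8  # hypothetical order, chosen only for this check
t = np.linspace(0.0, 1.0, 1001)
h = t[1] - t[0]
exact = t ** (1.0 - alpha) / math.gamma(2.0 - alpha)  # Caputo derivative of f(t) = t
print(abs(gl_caputo(t, alpha, h)[-1] - exact[-1]), abs(l1_caputo(t, alpha, h)[-1] - exact[-1]))
```

The test function $f(t)=t$ is convenient because its Caputo derivative is known in closed form; the L1 formula reproduces it up to rounding since the interpolant is exact for linear functions, while the Grünwald–Letnikov sum is first-order accurate.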

Example 1.

Consider the following fractional-order impulsive neural networks with reaction–diffusion term

for , and , , the spatial and temporal step sizes are taken as and , respectively. We take the parameters to , , and . The other parameters are shown in Table 1. The activation functions are given as and .

Table 1.

The main parameters for Example 1.

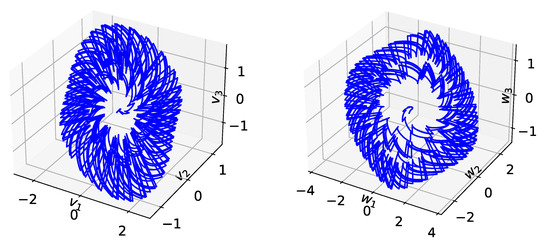

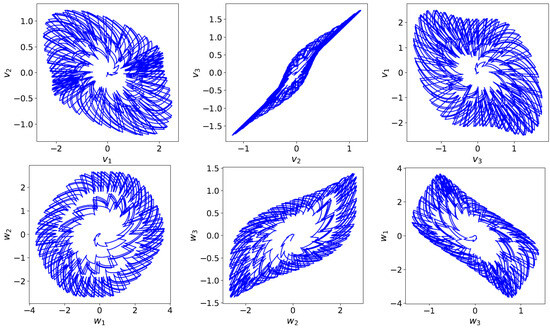

The initial conditions are , , and . The results are presented in Figure 1 and Figure 2, and the corresponding Lyapunov exponents, calculated using the method outlined in Section 2.3, are and , , . Therefore, the system (24) exhibits a chaotic attractor.

Figure 1.

The chaotic attractor of (left) and (right) in system (24), where is fixed.

Figure 2.

The chaotic attractor of the system (24), where is fixed.

The response system of the system (24) is given as

where the parameters , , , , , , , , along with the activation functions and , are the same as those in the drive system (24). The jump coefficients , . , , . , , , , , , , , and , , . Consequently, we can easily calculate that

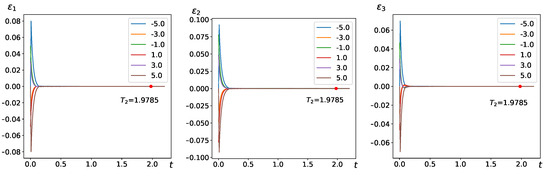

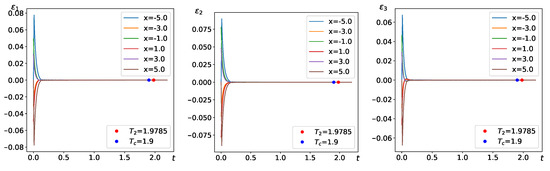

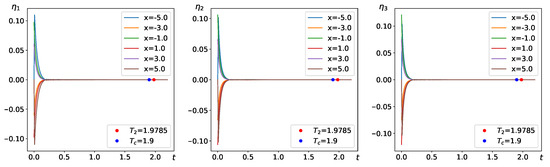

This indicates that the inequalities outlined in Theorem 1 are met. Therefore, as shown in Figure 3 and Figure 4, the response system (25) achieves synchronization with the drive system (24) within FXT under the controller (7). This confirms the controller’s efficiency in reaching the desired FXT synchronization through numerical means.

Figure 3.

The evolution diagram of (left), (middle), and (right).

Figure 4.

The evolution diagram of (left), (middle), and (right).

Remark 3.

Figure 1 and Figure 2 clearly show that the system (24) exhibits chaotic phenomena. Fractional-order derivatives consider the entire history of the system; this property can lead to more complex nonlinear phenomena [42]. In impulsive neural networks, the state of the system can change abruptly at certain moments; therefore, combining the memory of fractional-order derivatives with the abrupt changes of impulsive neural networks can result in a rich variety of dynamical behaviors.

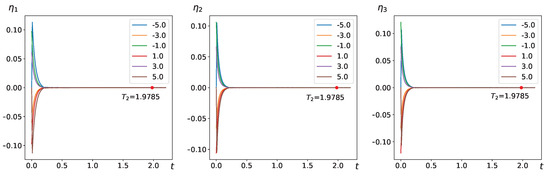

Remark 4.

As mentioned in the Introduction, there are cases where it is necessary to set the synchronization time in advance. Theorem 2 provides sufficient conditions for PDT synchronization. Now, we validate these conditions numerically by considering the PDT synchronization of the drive–response systems (24) and (25). All parameters are as previously specified in the FXT synchronization context. Set the preassigned time as ; then,

where . This ensures that the conditions specified in Theorem 2 are satisfied. Consequently, as demonstrated in Figure 5 and Figure 6, in accordance with Theorem 2, the drive–response systems (24) and (25) achieve synchronization within PDT .

Figure 5.

The time evolution diagram of (left), (middle), and (right).

Figure 6.

The time evolution diagram of (left), (middle), and (right).

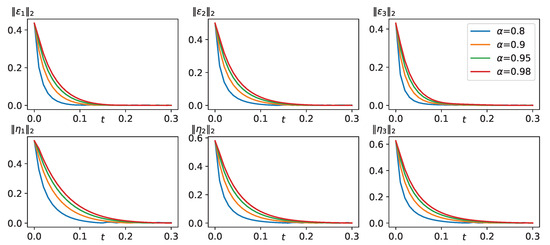

Finally, we examine the impact of the fractional-order parameter on synchronization by analyzing the outcomes for various values of , such as .

As observed in Figure 7, the convergence of and is more rapid for smaller values. Nonetheless, when , there is oscillation around , indicating a transient instability.
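To experiment with how the order shapes the decay of an error signal, one can use the scalar linear test problem ${}^{C}D^{\alpha} e = -\lambda e$, $e(0)=e_0$. The sketch below is an assumption-laden toy, not system (24): it has no diffusion, impulses, or controller, and it uses an implicit Grünwald–Letnikov scheme with hypothetical names and parameter values. For $\alpha = 1/2$ and $\lambda = 1$ the exact solution is $e^{t}\,\mathrm{erfc}(\sqrt{t})$, which gives a reference value.

```python
import math
import numpy as np

def gl_weights(alpha, n):
    """Grünwald–Letnikov weights w_j = (-1)^j * binom(alpha, j) for j = 0..n."""
    w = np.empty(n + 1)
    w[0] = 1.0
    for j in range(1, n + 1):
        w[j] = w[j - 1] * (1.0 - (alpha + 1.0) / j)
    return w

def fractional_relaxation(alpha, lam, e0, t_end, n_steps):
    """Implicit GL scheme for the Caputo problem D^alpha e = -lam * e, e(0) = e0."""
    h = t_end / n_steps
    w = gl_weights(alpha, n_steps)
    y = np.zeros(n_steps + 1)  # y_k = e_k - e0, so that y_0 = 0
    c = lam * h**alpha
    for k in range(1, n_steps + 1):
        history = np.dot(w[1 : k + 1], y[k - 1 :: -1])  # sum_{j=1}^{k} w_j * y_{k-j}
        y[k] = (-c * e0 - history) / (1.0 + c)
    return e0 + y

# Compare the state at t = 0.1 and t = 1 for a few hypothetical orders.
for alpha in (0.5, 0.7, 0.9, 1.0):
    e = fractional_relaxation(alpha, lam=1.0, e0=1.0, t_end=1.0, n_steps=2000)
    print(f"alpha = {alpha}: e(0.1) ~ {e[200]:.4f}, e(1) ~ {e[-1]:.4f}")
```

With $\alpha = 1$ the weights reduce to $(1, -1, 0, \dots)$ and the scheme becomes the implicit Euler method, which gives a quick sanity check against $e^{-t}$.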

Figure 7.

The time evolution diagram of () and () for , respectively.

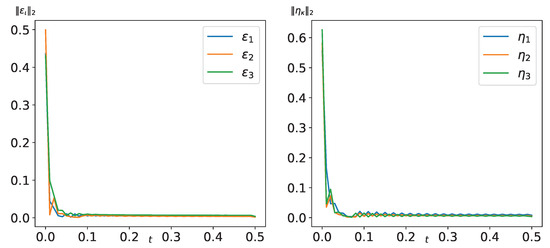

To further investigate this behavior, we set , and Figure 8 reveals more pronounced chattering effects compared to those observed in Figure 7.

Figure 8.

The time evolution diagram of () (left) and () (right) for .

5. Conclusions

In this study, FXT and PDT synchronization was investigated for impulsive fractional-order BAM neural networks with diffusion terms. First, we presented the fundamentals of fractional-order calculus and of the FXT and PDT synchronization of neural networks. Expanding on prior studies, we integrated impulse effects and fractional-order dynamics into reaction–diffusion BAM neural networks, since fractional-order calculus and reaction–diffusion processes combined with impulsive BAM neural networks provide a more comprehensive representation of real-world systems. These systems exhibit not only temporal changes but also spatial factors and diffusion effects. Consequently, the scope of applications for BAM neural networks has been broadened in fields such as environmental science and secure communication [43]. We developed a new controller for the system and formulated sufficient conditions for the FXT and PDT synchronization of drive–response systems by employing the Lyapunov function approach. To substantiate the theoretical results, a numerical example was provided. Nevertheless, stochastic perturbations are frequently inevitable in many dynamical systems. These perturbations enhance the network’s ability to generalize and accurately forecast outcomes in uncertain environments, thus bolstering its robustness and adaptability [34,44,45,46]. In future studies, we will focus on the synchronization of fractional-order BAM neural networks with randomness, thereby enhancing the applicability of our dynamic system.

Author Contributions

Conceptualization, R.M.; Methodology, A.M.; Software, R.M.; Supervision, A.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the National Natural Science Foundation of China (Grant no. 62266042) and the Outstanding Youth Program of Xinjiang, China (Grant no. 2022D01E10).

Data Availability Statement

There is no data associated with this paper.

Conflicts of Interest

The authors declare that they have no competing interests regarding the publication of this article.

References

1. Song, C.; Cao, J. Dynamics in fractional-order neural networks. Neurocomputing 2014, 142, 494–498.

2. Chen, J.; Chen, B.; Zeng, Z. Global asymptotic stability and adaptive ultimate Mittag–Leffler synchronization for a fractional-order complex-valued memristive neural networks with delays. IEEE Trans. Syst. Man Cybern. Syst. 2018, 49, 2519–2535.

3. Zhang, L.; Yang, Y. Bipartite synchronization analysis of fractional order coupled neural networks with hybrid control. Neural Process. Lett. 2020, 52, 1969–1981.

4. Podlubny, I. Geometrical and physical interpretation of fractional integration and fractional differentiation. Fract. Calc. Appl. Anal. 2002, 5, 367–386.

5. Luo, R.; Liu, S.; Song, Z.; Zhang, F. Fixed-time control of a class of fractional-order chaotic systems via backstepping method. Chaos Solitons Fractals 2023, 167, 113076.

6. Balamash, A.S.; Bettayeb, M.; Djennoune, S.; Al-Saggaf, U.M.; Moinuddin, M. Fixed-time terminal synergetic observer for synchronization of fractional-order chaotic systems. Chaos 2020, 30, 073124.

7. He, Y.; Peng, J.; Zheng, S. Fractional-Order Financial System and Fixed-Time Synchronization. Fractal Fract. 2022, 6, 507.

8. Ren, J.; Lei, H.; Song, J. An improved lattice Boltzmann model for variable-order time-fractional generalized Navier–Stokes equations with applications to permeability prediction. Chaos Solitons Fractals 2024, 189, 115616.

9. Sun, J.; Zhao, Y.; Li, X.; Wang, S.; Wei, J.; Lu, Y. Fractional Order Spectrum of Cumulant in Edge Detection. In Proceedings of the 2024 IEEE 2nd International Conference on Image Processing and Computer Applications (ICIPCA), Shenyang, China, 28–30 June 2024; pp. 408–412.

10. Ding, D.; Jin, F.; Zhang, H.; Yang, Z.; Chen, S.; Zhu, H.; Xu, X.; Liu, X. Fractional-order heterogeneous neuron network based on coupled locally-active memristors and its application in image encryption and hiding. Chaos Solitons Fractals 2024, 187, 115397.

11. Chen, L.; Yin, H.; Huang, T.; Yuan, L.; Zheng, S.; Yin, L. Chaos in fractional-order discrete neural networks with application to image encryption. Neural Netw. 2020, 125, 174–184.

12. Wei, T.; Lin, P.; Wang, Y.; Wang, L. Stability of stochastic impulsive reaction–diffusion neural networks with S-type distributed delays and its application to image encryption. Neural Netw. 2019, 116, 35–45.

13. Kowsalya, P.; Kathiresan, S.; Kashkynbayev, A.; Rakkiyappan, R. Fixed-time synchronization of delayed multiple inertial neural network with reaction–diffusion terms under cyber–physical attacks using distributed control and its application to multi-image encryption. Neural Netw. 2024, 180, 106743.

14. Vilas, C.; García, M.R.; Banga, J.R.; Alonso, A.A. Robust feed-back control of travelling waves in a class of reaction–diffusion distributed biological systems. Phys. D Nonlinear Phenom. 2008, 237, 2353–2364.

15. Song, Q.; Cao, J. Global exponential robust stability of Cohen–Grossberg neural network with time-varying delays and reaction–diffusion terms. J. Frankl. Inst. 2006, 343, 705–719.

16. Cao, J.; Stamov, G.; Stamova, I.; Simeonov, S. Almost periodicity in impulsive fractional-order reaction–diffusion neural networks with time-varying delay. IEEE Trans. Cybern. 2021, 51, 151–161.

17. Ali, M.S.; Hymavathi, M.; Rajchakit, G.; Saroha, S.; Palanisamy, L.; Hammachukiattikul, P. Synchronization of Fractional Order Fuzzy BAM Neural Networks with Time Varying Delays and Reaction Diffusion Terms. IEEE Access 2020, 8, 186551–186571.

18. Lin, J.; Xu, R.; Li, L. Spatio-temporal synchronization of reaction–diffusion BAM neural networks via impulsive pinning control. Neurocomputing 2020, 418, 300–313.

19. Chen, H.; Jiang, M.; Hu, J. Global exponential synchronization of BAM memristive neural networks with mixed delays and reaction–diffusion terms. Commun. Nonlinear Sci. Numer. Simulat. 2024, 137, 108137.

20. Kowsalya, P.; Mohanrasu, S.S.; Kashkynbayev, A.; Gokul, P.; Rakkiyappan, R. Fixed-time synchronization of Inertial Cohen–Grossberg Neural Networks with state dependent delayed impulse control and its application to multi-image encryption. Chaos Solitons Fractals 2024, 181, 114693.

21. Li, H.; Li, C.; Ouyang, D.; Nguang, S.K. Impulsive synchronization of unbounded delayed inertial neural networks with actuator saturation and sampled-data control and its application to image encryption. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 1460–1473.

22. Bishop, S.A.; Eke, K.S.; Okagbue, H.I. Advances on asymptotic stability of impulsive stochastic evolution equations. Comput. Sci. 2021, 16, 99–109.

23. Zhang, W.; Li, C.; Huang, T.; He, X. Synchronization of Memristor-Based Coupling Recurrent Neural Networks with Time-Varying Delays and Impulses. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 3308–3313.

24. Pratap, A.; Cao, J.; Lim, C.P.; Bagdasar, O. Stability and pinning synchronization analysis of fractional order delayed Cohen–Grossberg neural networks with discontinuous activations. Appl. Math. Comput. 2019, 359, 241–260.

25. Velmurugan, G.; Rakkiyappan, R.; Cao, J. Finite-time synchronization of fractional-order memristor-based neural networks with time delays. Neural Netw. 2016, 73, 36–46.

26. Du, F.; Lu, J. New criterion for finite-time synchronization of fractional order memristor-based neural networks with time delay. Appl. Math. Comput. 2021, 389, 125616.

27. Polyakov, A. Nonlinear feedback design for fixed-time stabilization of linear control systems. IEEE Trans. Automat. Control 2012, 57, 2106–2110.

28. Podlubny, I. Fractional Differential Equations, Mathematics in Science and Engineering; Academic Press: Cambridge, MA, USA, 1999.

29. Huang, S.; Xiong, L.; Wang, J.; Li, P.; Wang, Z.; Ma, M. Fixed-time fractional-order sliding mode controller for multimachine power systems. IEEE Trans. Power Syst. 2021, 36, 2866–2876.

30. Xiao, J.; Wen, S.; Yang, X.; Zhong, S. New approach to global Mittag–Leffler synchronization problem of fractional-order quaternion-valued BAM neural networks based on a new inequality. Neural Netw. 2020, 122, 320–337.

31. Khanzadeh, A.; Mohammadzaman, I. Comment on ‘Fractional-order fixed-time nonsingular terminal sliding mode synchronization and control of fractional-order chaotic systems’. Nonlinear Dynam. 2018, 94, 3145–3153.

32. Lee, L.; Liu, Y.; Liang, J.; Cai, X. Finite time stability of nonlinear impulsive systems and its applications in sampled-data systems. ISA Trans. 2015, 57, 172–178.

33. Li, H.; Li, C.; Huang, T.; Ouyang, D. Fixed-time stability and stabilization of impulsive dynamical systems. J. Frankl. Inst. 2017, 354, 8626–8644.

34. Abudusaimaiti, M.; Abdurahman, A.; Jiang, H. Fixed/predefined-time synchronization of fuzzy neural networks with stochastic perturbations. Chaos Solitons Fractals 2022, 154, 111596.

35. Li, H.; Shen, Y.; Han, Y.; Dong, J.; Li, J. Determining Lyapunov exponents of fractional-order systems: A General method based on memory principle. Chaos Solitons Fractals 2023, 168, 113167.

36. Echenausía-Monroy, J.L.; Quezada-Tellez, L.A.; Gilardi-Velázquez, H.E.; Ruíz-Martínez, O.F.; Heras-Sánchez, M.D.C.; Lozano-Rizk, J.E.; Álvarez, J. Beyond chaos in fractional-order systems: Keen insight in the dynamic effects. Fractal Fract. 2024, 9, 22.

37. Sadik, H.; Abdurahman, A.; Tohti, R. Fixed-Time synchronization of reaction–diffusion fuzzy neural networks with stochastic perturbations. Mathematics 2023, 11, 1493.

38. Song, X.; Man, J.; Song, S.; Zhang, Y.; Ning, Z. Finite/fixed-time synchronization for Markovian complex-valued memristive neural networks with reaction–diffusion terms and its application. Neurocomputing 2020, 414, 131–142.

39. Evans, L.C. Partial Differential Equations, 2nd ed.; American Mathematical Society: Providence, RI, USA, 2010.

40. Lubich, C. Discretized fractional calculus. SIAM J. Math. Anal. 1986, 17, 704–719.

41. Lin, Y.; Xu, C. Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 2007, 225, 1533–1552.

42. Huang, C.; Wang, H.; Liu, H.; Cao, J. Bifurcations of a delayed fractional-order BAM neural network via new parameter perturbations. Neural Netw. 2023, 168, 123–142.

43. Wang, Z.; Zhang, W.; Zhang, H.; Chen, H.; Cao, J.; Abdel-Aty, M. Finite-time quasi-projective synchronization of fractional-order reaction–diffusion delayed neural networks. Inform. Sci. 2025, 686, 121365.

44. Abdurahman, A.; Abudusaimaiti, M.; Jiang, H. Fixed/predefined-time lag synchronization of complex-valued BAM neural networks with stochastic perturbations. Appl. Math. Comput. 2023, 444, 127811.

45. Yin, J.; Khoo, S.; Man, Z. Finite-time stability and instability of stochastic nonlinear systems. Automatica 2011, 47, 2671–2677.

46. Torres, J.J.; Muñoz, M.A.; Cortés, J.M.; Mejías, J.F. Special issue on emergent effects in stochastic neural networks with application to learning and information processing. Neurocomputing 2021, 461, 632–634.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).