1. Introduction

Since its inception in 2009, cryptocurrency has grown at an unprecedented rate and captured a substantial share of market capitalization in little more than a decade, disrupting traditional financial assets and derivatives [1,2] and emerging as a highly promising investment avenue [3]. By November 2021, the total market capitalization of cryptocurrencies had surged to USD 3 trillion. As the market has evolved, investors and research institutions have increasingly needed reliable price predictions, driven by the market's substantial returns and prolonged periods of heightened volatility [4,5]. This need has made cryptocurrency price prediction a focal point of financial time series forecasting. Accurate forecasts can give investors and researchers valuable insights, supporting well-informed decisions, deterring unethical speculation and fraud, and mitigating unwarranted market panic. Improving prediction methodologies, increasing prediction accuracy, and reducing investment risk for stakeholders will therefore play a pivotal role in fostering the healthy and stable development of the cryptocurrency market.

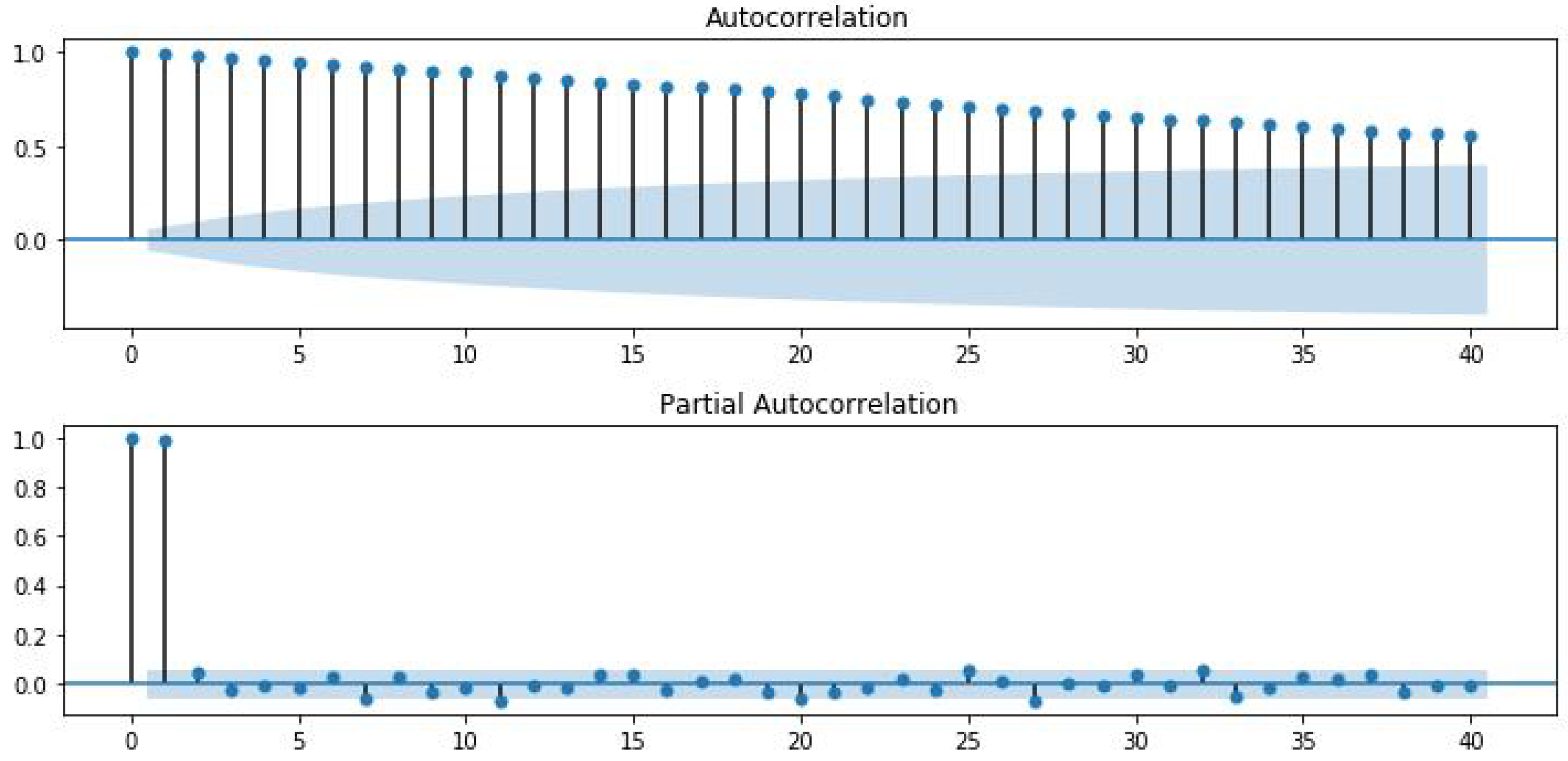

Traditional statistical and econometric models for time series forecasting, including linear models such as ARIMA and vector autoregression (VAR) and non-linear volatility models such as autoregressive conditional heteroskedasticity (ARCH), all rest on the assumption of stationarity. These methods require non-stationary data to be transformed into stationary data before prediction. In addition, they handle non-linearity poorly, which inherently limits their usefulness for financial time series prediction, and they struggle to meet the demands for prediction accuracy and efficiency. In this context, machine learning and deep learning methods have demonstrated clear advantages in forecasting cryptocurrency prices [6,7].
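The stationarity requirement above is usually met by differencing. As a minimal illustration (not taken from this paper), the sketch below converts a price series into log returns, the first difference of log prices, which are often much closer to stationary than raw prices, and then inverts the transform to recover the prices. The function names `to_log_returns` and `from_log_returns` are hypothetical.

```python
import numpy as np


def to_log_returns(prices: np.ndarray) -> np.ndarray:
    """First difference of log prices: r_t = log(p_t) - log(p_{t-1})."""
    return np.diff(np.log(prices))


def from_log_returns(first_price: float, returns: np.ndarray) -> np.ndarray:
    """Invert to_log_returns, given the first observed price."""
    log_path = np.log(first_price) + np.concatenate(([0.0], np.cumsum(returns)))
    return np.exp(log_path)


prices = np.array([100.0, 110.0, 99.0, 120.0])
r = to_log_returns(prices)                 # one fewer element than prices
recovered = from_log_returns(prices[0], r)
print(np.allclose(recovered, prices))      # True
```

A model fitted on `r` forecasts returns, and `from_log_returns` maps those forecasts back to the price scale.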

With the rise of artificial intelligence research, epitomized by machine learning, a growing number of machine learning models have been applied to time series forecasting tasks. Unlike traditional statistical and econometric models, these non-linear, non-parametric models have achieved higher predictive accuracy in time series forecasting. Classical machine learning models, such as decision trees, random forests, and support vector machines, often rely on feature engineering, which requires preprocessing and feature selection. However, feature selection becomes challenging for large-scale financial data, and multidimensional financial data can give rise to the curse of dimensionality and to overfitting.

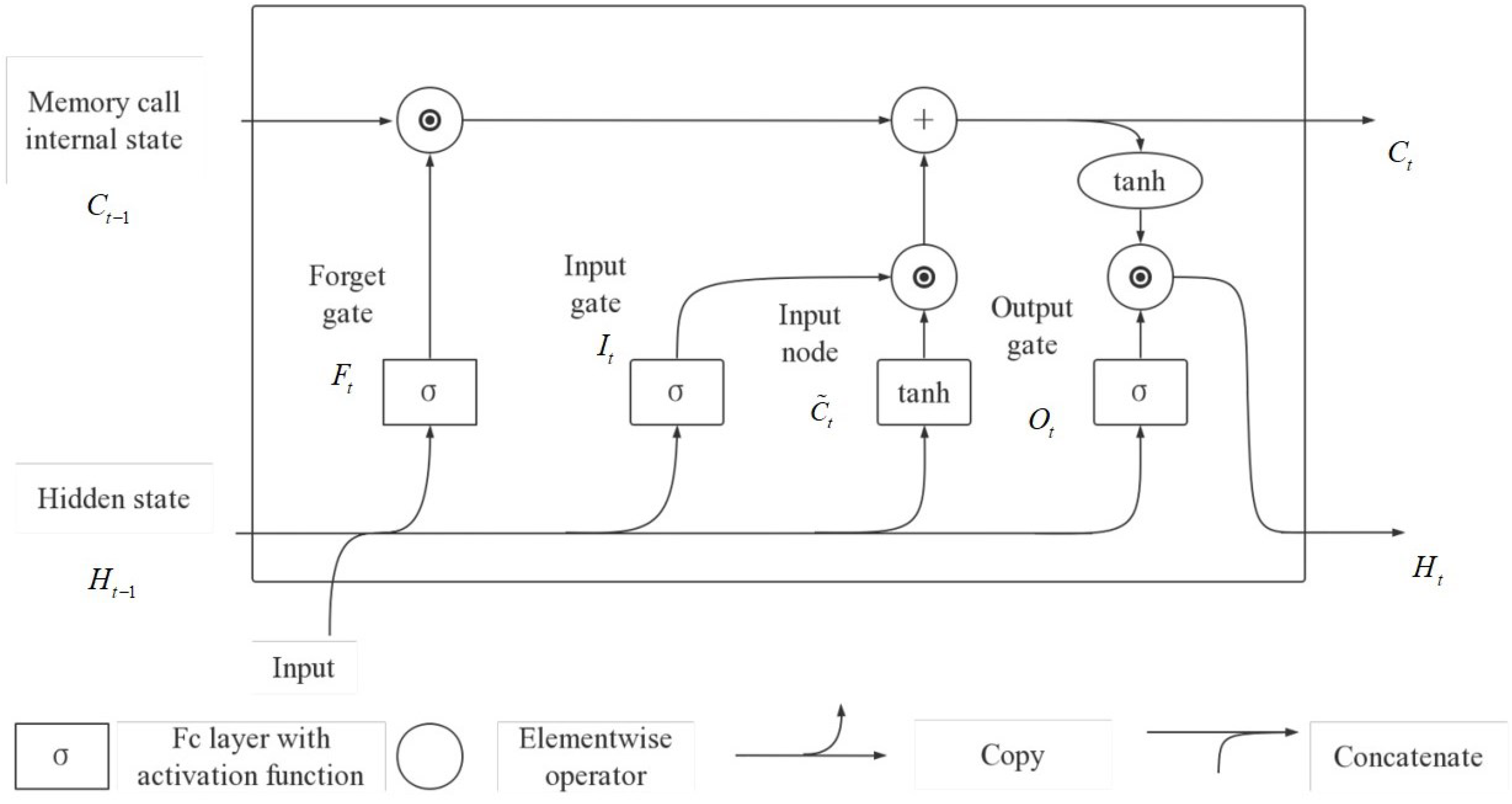

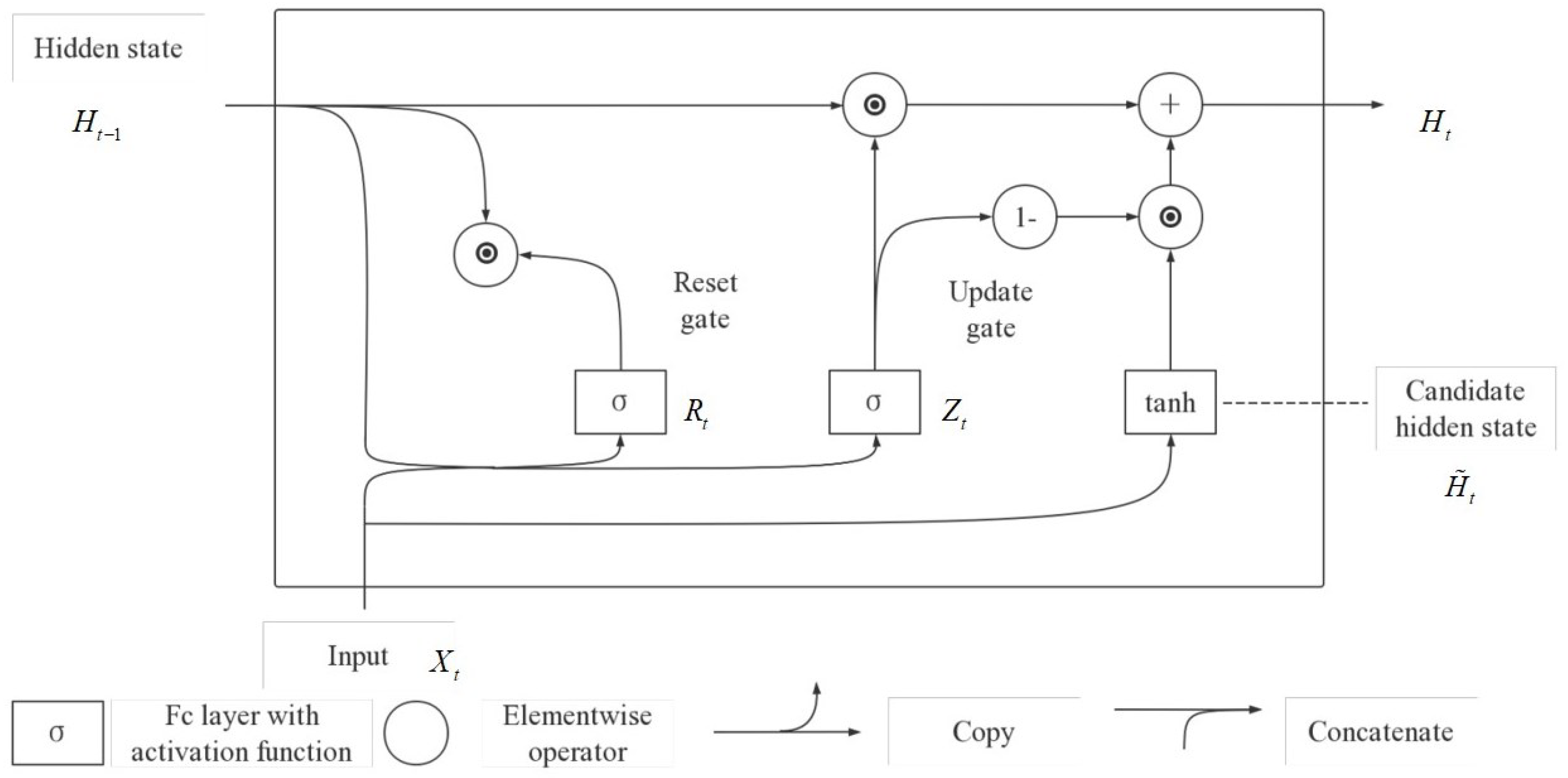

Datasets keep growing, and data storage capacity and computing power have advanced remarkably, although computer memory has expanded comparatively slowly. It has therefore become possible to build larger models with more parameters, provided they use memory efficiently. Consequently, numerous deep learning methods have been applied to financial time series forecasting. Deep learning does not require the assumption of stationarity, which gives it an inherent advantage in predicting financial time series, and the architecture of deep neural networks contributes to their strong generalization capabilities. Recurrent networks such as gated recurrent units (GRU) and long short-term memory (LSTM) use their internal gating structures to extract temporal correlations from time series data. One study showed that adding recurrent dropout to a GRU model notably improved Bitcoin price prediction compared with baseline models [8]. Hence, in this study, a GRU model is adopted as the foundational predictor within a hybrid model structure.
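For readers unfamiliar with the GRU, the sketch below implements single GRU time steps in NumPy using the standard update-gate and reset-gate formulation. The weight names, random initialization, and sizes are illustrative assumptions, not the paper's configuration. Deep learning libraries also differ slightly in gate conventions, for example in whether the update gate multiplies the previous state or the candidate state.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def gru_step(x, h, p):
    """One GRU step. x: (d_in,), h: (d_h,), p: dict of weights (hypothetical names)."""
    z = sigmoid(p["W_z"] @ x + p["U_z"] @ h + p["b_z"])  # update gate
    r = sigmoid(p["W_r"] @ x + p["U_r"] @ h + p["b_r"])  # reset gate
    h_tilde = np.tanh(p["W_h"] @ x + p["U_h"] @ (r * h) + p["b_h"])  # candidate state
    return (1.0 - z) * h + z * h_tilde  # gated interpolation of old and candidate state


def init_params(d_in: int, d_h: int, seed: int = 0) -> dict:
    """Small random weights for illustration only (untrained)."""
    rng = np.random.default_rng(seed)
    p = {}
    for g in ("z", "r", "h"):
        p[f"W_{g}"] = rng.normal(scale=0.1, size=(d_h, d_in))
        p[f"U_{g}"] = rng.normal(scale=0.1, size=(d_h, d_h))
        p[f"b_{g}"] = np.zeros(d_h)
    return p


def gru_sequence(xs: np.ndarray, d_h: int, seed: int = 0) -> np.ndarray:
    """Run the GRU over xs of shape (T, d_in); return all hidden states (T, d_h)."""
    p = init_params(xs.shape[1], d_h, seed)
    h = np.zeros(d_h)
    states = []
    for x in xs:
        h = gru_step(x, h, p)
        states.append(h)
    return np.stack(states)


H = gru_sequence(np.random.default_rng(1).normal(size=(20, 1)), d_h=8)
print(H.shape)  # (20, 8)
```

Because each new state is a convex combination of the previous state and a `tanh` output, hidden states starting from zero stay strictly inside (-1, 1).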

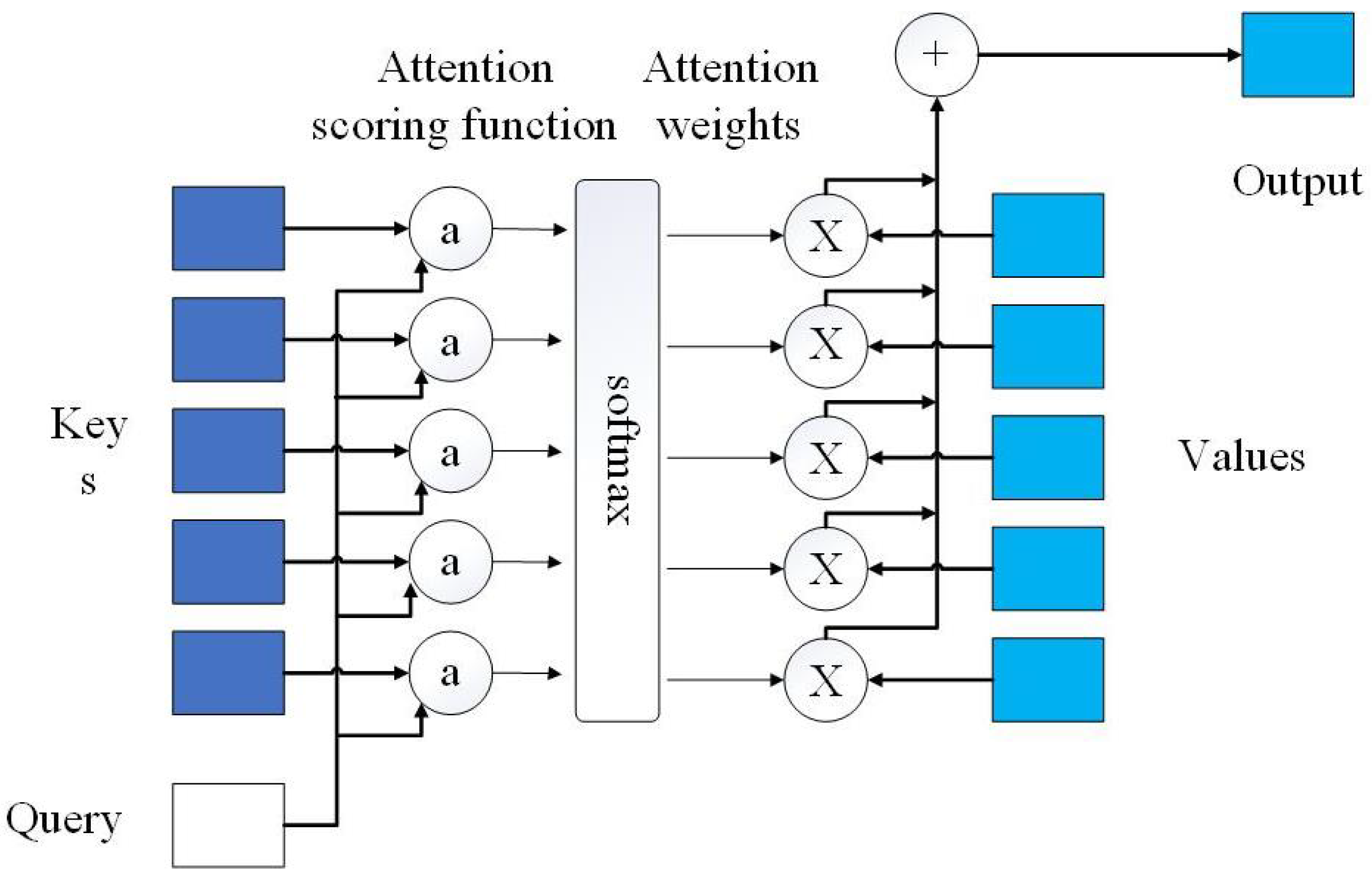

Despite the commendable performance of deep learning models in time series forecasting, these models still encounter challenges in identifying the importance of input features. Attention mechanisms address this issue by enabling deep learning models to adaptively discern the significance of input features. Therefore, this study employs a combination of a GRU network and an attention mechanism for forecasting.
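The attention mechanism described above can be sketched as a learned weighting of GRU hidden states over time. The version below is a generic additive-style temporal attention, with scores v^T tanh(W h_t). It is offered as an assumption; the tailored attention mechanism this paper constructs may differ. `W` and `v` are placeholder parameters that would normally be trained jointly with the GRU.

```python
import numpy as np


def softmax(s: np.ndarray) -> np.ndarray:
    e = np.exp(s - s.max())  # subtract the max for numerical stability
    return e / e.sum()


def attention_pool(H: np.ndarray, W: np.ndarray, v: np.ndarray):
    """H: (T, d_h) hidden states; W: (d_a, d_h); v: (d_a,).
    Returns (context vector of shape (d_h,), attention weights of shape (T,))."""
    scores = np.tanh(H @ W.T) @ v   # one relevance score per time step
    weights = softmax(scores)       # normalized importance, sums to 1
    context = weights @ H           # importance-weighted sum of hidden states
    return context, weights


rng = np.random.default_rng(0)
H = rng.normal(size=(10, 8))        # e.g. 10 GRU hidden states of size 8
W = rng.normal(size=(4, 8))
v = rng.normal(size=4)
context, weights = attention_pool(H, W, v)
```

The context vector would then feed a dense output layer that produces the one-step forecast for the mode being modeled.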

The cryptocurrency market is far more speculative than traditional financial markets, so cryptocurrency price sequences are more volatile and less stationary than general financial time series. Conventional standalone deep learning models therefore often struggle to achieve high predictive accuracy on such sequences [9].

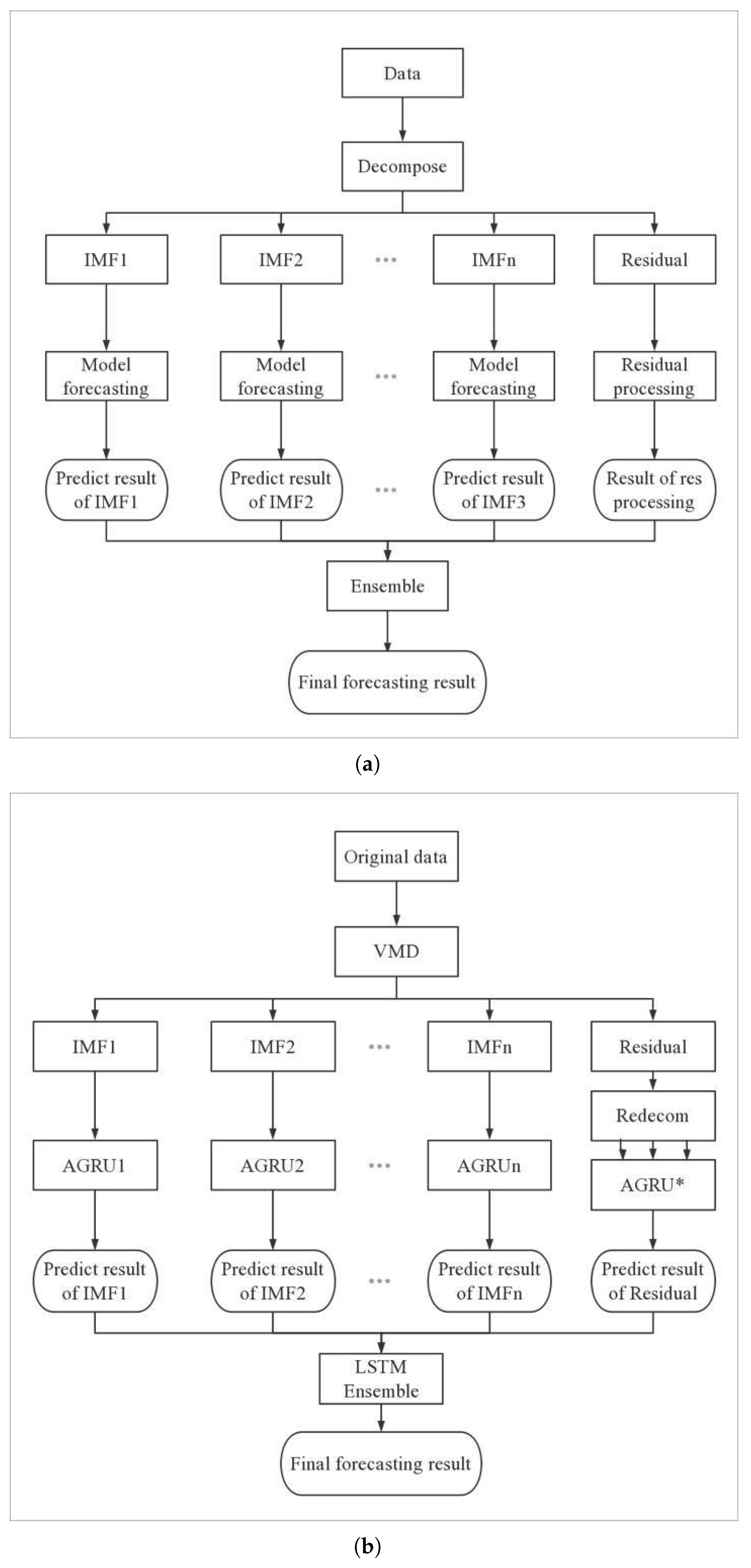

Extracting the underlying features of the original sequence can further improve predictive performance. Modal decomposition breaks a complex, non-stationary cryptocurrency price sequence, shaped by difficult market volatility, into a series of relatively simple modal components. Each component is forecast separately, and the component forecasts are then integrated to produce the final prediction. This helps the model capture the intrinsic characteristics of the time series. Ensemble models built on this decomposition–integration framework have shown higher predictive accuracy than single models in financial time series forecasting [10]. Hence, we adopt the decomposition–integration framework as the architecture of our hybrid model.
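A minimal sketch of the decomposition–integration framework follows. To keep it self-contained, it uses a simple FFT band split as a stand-in decomposer (not VMD) and a naive drift forecaster as a stand-in per-component predictor (not the paper's AGRU). Both `fft_band_split` and `drift_forecast` are hypothetical placeholders. The part that carries over is the structure: decompose, forecast each component, then sum the component forecasts.

```python
import numpy as np


def fft_band_split(x: np.ndarray, k: int) -> np.ndarray:
    """Stand-in decomposer (not VMD): split the spectrum of x into k contiguous
    frequency bands. Returns shape (k, len(x)); the components sum exactly to x."""
    spec = np.fft.rfft(x)
    edges = np.linspace(0, len(spec), k + 1).astype(int)
    comps = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        band = np.zeros_like(spec)
        band[lo:hi] = spec[lo:hi]
        comps.append(np.fft.irfft(band, n=len(x)))
    return np.array(comps)


def drift_forecast(series: np.ndarray) -> float:
    """Stand-in predictor: extrapolate the last observed change one step ahead."""
    return float(series[-1] + (series[-1] - series[-2]))


def decompose_integrate(x: np.ndarray, k: int) -> float:
    components = fft_band_split(x, k)
    # Forecast each component separately, then integrate by summation.
    return sum(drift_forecast(c) for c in components)


t = np.arange(128)
x = 50 + 0.1 * t + np.sin(2 * np.pi * t / 16) + 0.3 * np.sin(2 * np.pi * t / 5)
pred = decompose_integrate(x, k=4)
```

With a linear stand-in predictor like this one, the integrated forecast equals forecasting `x` directly. The benefit of the framework comes from non-linear learned predictors that fit each smoother component more easily than the raw series.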

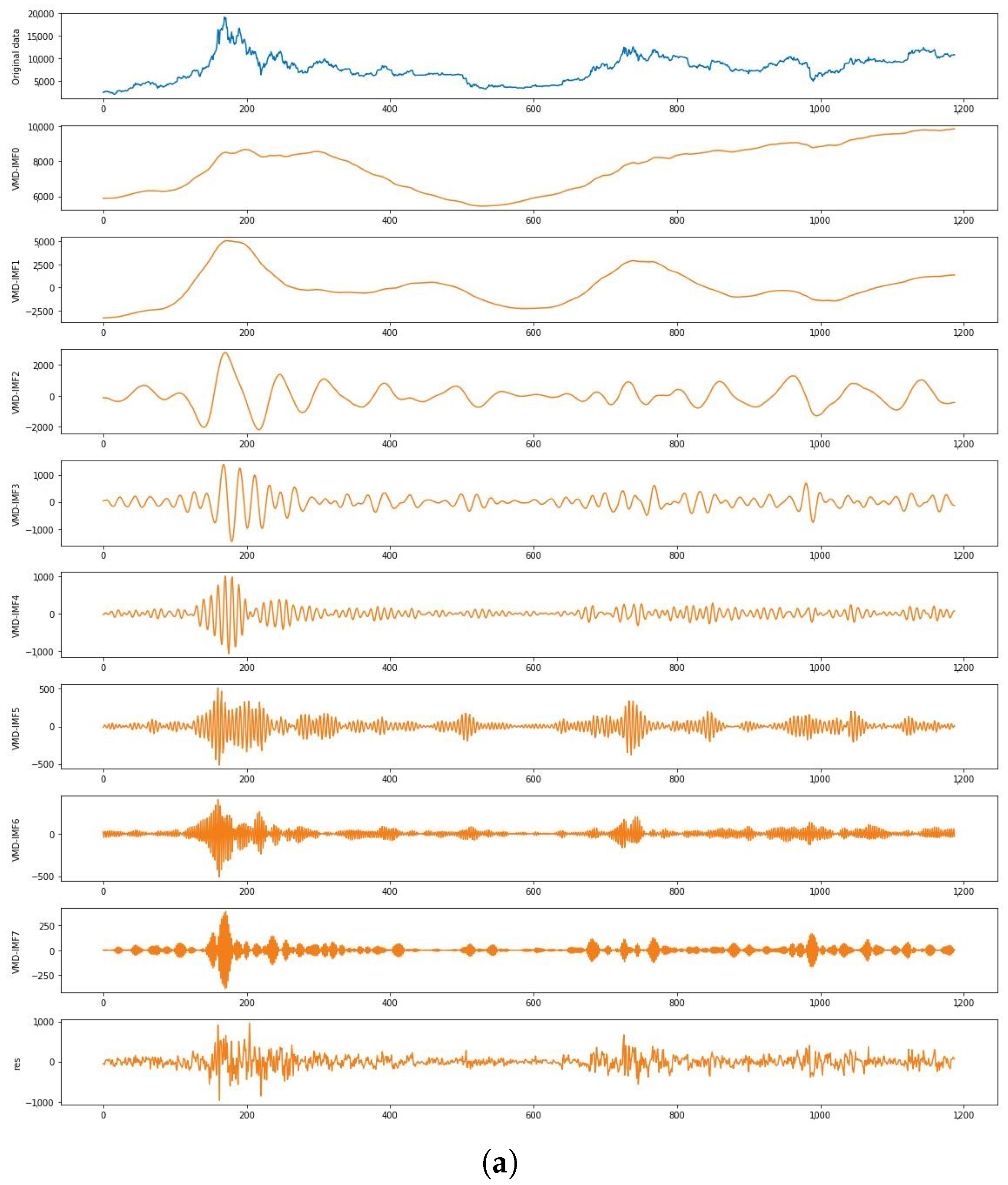

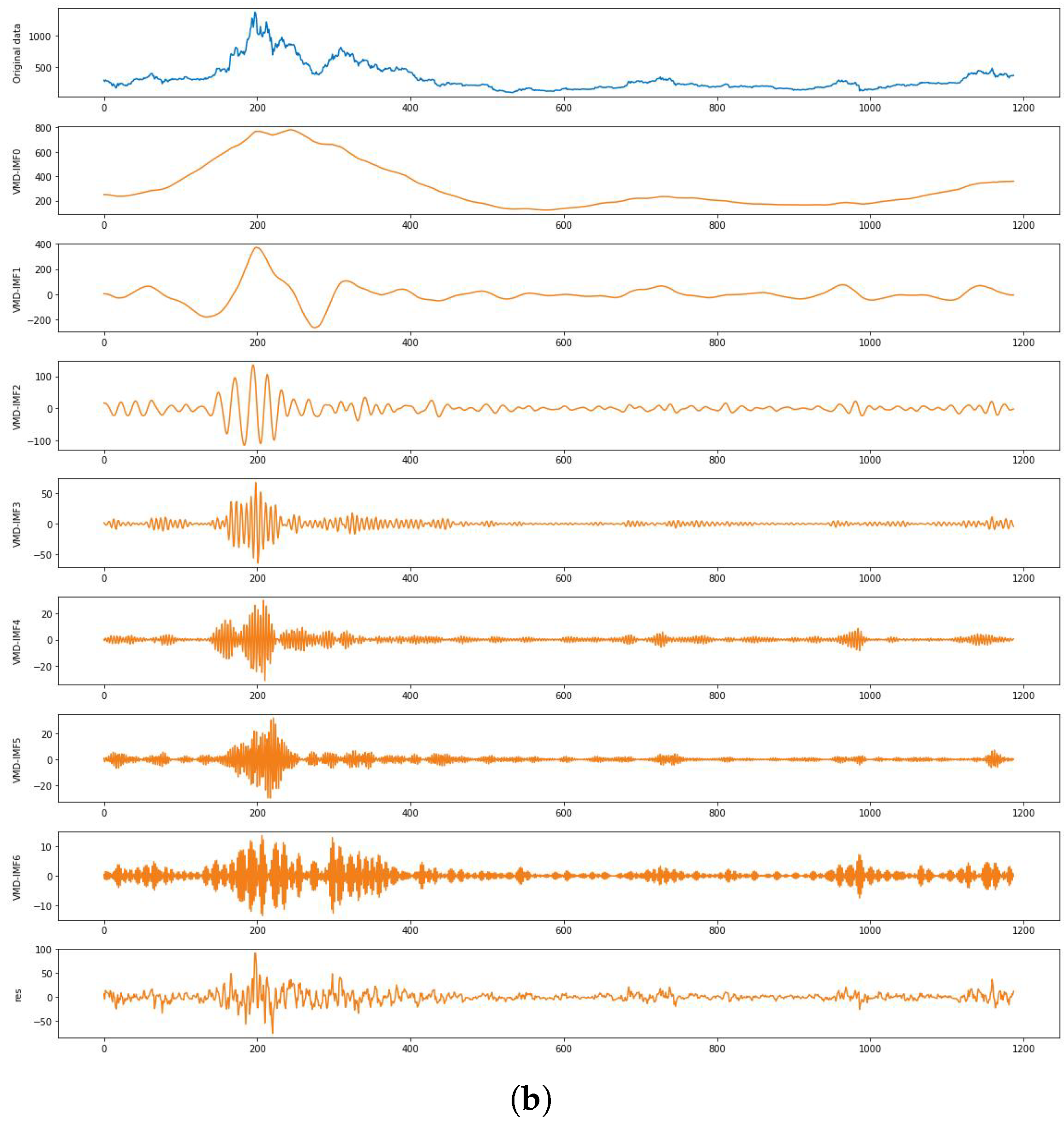

Empirical mode decomposition (EMD) is a commonly used method for decomposing non-stationary time series into intrinsic mode functions (IMFs). Because EMD exploits the time-scale characteristics inherent in the data itself and requires no predefined basis functions, it handles noisy, non-stationary, and non-linear data effectively. However, EMD suffers from endpoint effects and mode mixing, which increase prediction errors. In contrast, variational mode decomposition (VMD) can overcome problems such as mode mixing, improper envelopes, and boundary instability that often arise during decomposition [11]. Consequently, VMD remains stable when handling non-linear and non-stationary data. Moreover, the subsequences produced by VMD tend to be smoother, which makes it easier for deep learning models to capture their patterns.
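For reference, VMD in its original formulation by Dragomiretskiy and Zosso decomposes a signal f into K band-limited modes u_k with center frequencies ω_k by solving the constrained problem below, where * denotes convolution. These symbols are introduced here and may differ from the notation used in Section 3.

```latex
\min_{\{u_k\},\{\omega_k\}} \sum_{k=1}^{K}
  \left\| \partial_t \left[ \left( \delta(t) + \frac{j}{\pi t} \right) * u_k(t) \right] e^{-j\omega_k t} \right\|_2^2
\quad \text{s.t.} \quad \sum_{k=1}^{K} u_k(t) = f(t)
```

The problem is solved with an alternating direction method of multipliers in the frequency domain, with bandwidth penalty α, Lagrange multiplier λ, and dual step size τ:

```latex
\hat{u}_k^{n+1}(\omega) = \frac{\hat{f}(\omega) - \sum_{i \neq k} \hat{u}_i(\omega) + \hat{\lambda}^{n}(\omega)/2}{1 + 2\alpha\,(\omega - \omega_k^{n})^2},
\qquad
\omega_k^{n+1} = \frac{\int_0^{\infty} \omega\,\lvert \hat{u}_k^{n+1}(\omega) \rvert^2 \, d\omega}{\int_0^{\infty} \lvert \hat{u}_k^{n+1}(\omega) \rvert^2 \, d\omega},
\qquad
\hat{\lambda}^{n+1}(\omega) = \hat{\lambda}^{n}(\omega) + \tau \left( \hat{f}(\omega) - \sum_{k=1}^{K} \hat{u}_k^{n+1}(\omega) \right)
```

Each mode update acts as a Wiener filter centered on ω_k, which is why VMD modes are narrow-band and comparatively smooth. In practice, for example with the common noise-tolerant setting τ = 0 or with a finite stopping tolerance, the sum of the modes generally does not reproduce f exactly.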

Nonetheless, a drawback of VMD is that it leaves behind a residual component. Current research [12,13] handles this residual in one of two ways. In the first, the residual is treated as an additional mode and predicted directly, which is difficult because of its complex characteristics and can introduce errors into the final ensemble prediction. In the second, the residual is simply ignored, so the sum of the modal components deviates from the original sequence, causing information loss and ultimately significant prediction errors. To address this issue, this study proposes a residual re-decomposition prediction method that retains the residual and predicts it effectively, thereby improving the accuracy of the final prediction.
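The residual handling described above can be made concrete with a short sketch. Here residual = x minus the sum of the modes, and the residual is re-decomposed and its sub-components forecast alongside the main modes, mirroring the idea behind the proposed re-decomposition step. The decomposer and predictor are hypothetical stand-ins, not the paper's VMD, AGRU, and LSTM. `band_split` with `keep < 1` deliberately covers only the lower part of the spectrum, so that, like VMD in practice, it leaves a residual. The second pass uses `keep=1.0`, so the residual's sub-components sum back to it exactly.

```python
import numpy as np


def band_split(x: np.ndarray, k: int, keep: float = 1.0) -> np.ndarray:
    """Hypothetical stand-in decomposer (not VMD): split the lowest `keep` fraction
    of x's spectrum into k bands. With keep < 1 the bands do not sum back to x."""
    spec = np.fft.rfft(x)
    top = int(round(len(spec) * keep))
    edges = np.linspace(0, top, k + 1).astype(int)
    modes = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        band = np.zeros_like(spec)
        band[lo:hi] = spec[lo:hi]
        modes.append(np.fft.irfft(band, n=len(x)))
    return np.array(modes)


def drift_forecast(series: np.ndarray) -> float:
    """Hypothetical stand-in predictor: extrapolate the last change one step."""
    return float(series[-1] + (series[-1] - series[-2]))


def predict_with_residual_redecomposition(x: np.ndarray, k: int = 4):
    modes = band_split(x, k, keep=0.8)             # first pass leaves a residual
    residual = x - modes.sum(axis=0)               # what the modes fail to capture
    sub_modes = band_split(residual, k, keep=1.0)  # re-decompose the residual
    forecast = sum(drift_forecast(m) for m in modes)
    forecast += sum(drift_forecast(s) for s in sub_modes)
    return forecast, modes, residual, sub_modes


rng = np.random.default_rng(0)
t = np.arange(128)
x = 50 + 0.1 * t + np.sin(2 * np.pi * t / 16) + 0.5 * rng.normal(size=t.size)
forecast, modes, residual, sub_modes = predict_with_residual_redecomposition(x)
print(np.linalg.norm(residual) > 0)                               # True: ignoring it loses information
print(np.allclose(modes.sum(axis=0) + sub_modes.sum(axis=0), x))  # True: nothing is discarded
```

Because the drift predictor is linear, forecasting the residual directly would give the same number as forecasting its sub-components. The motivation for re-decomposition is that a learned non-linear predictor fits several simpler sub-series more easily than one complex residual.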

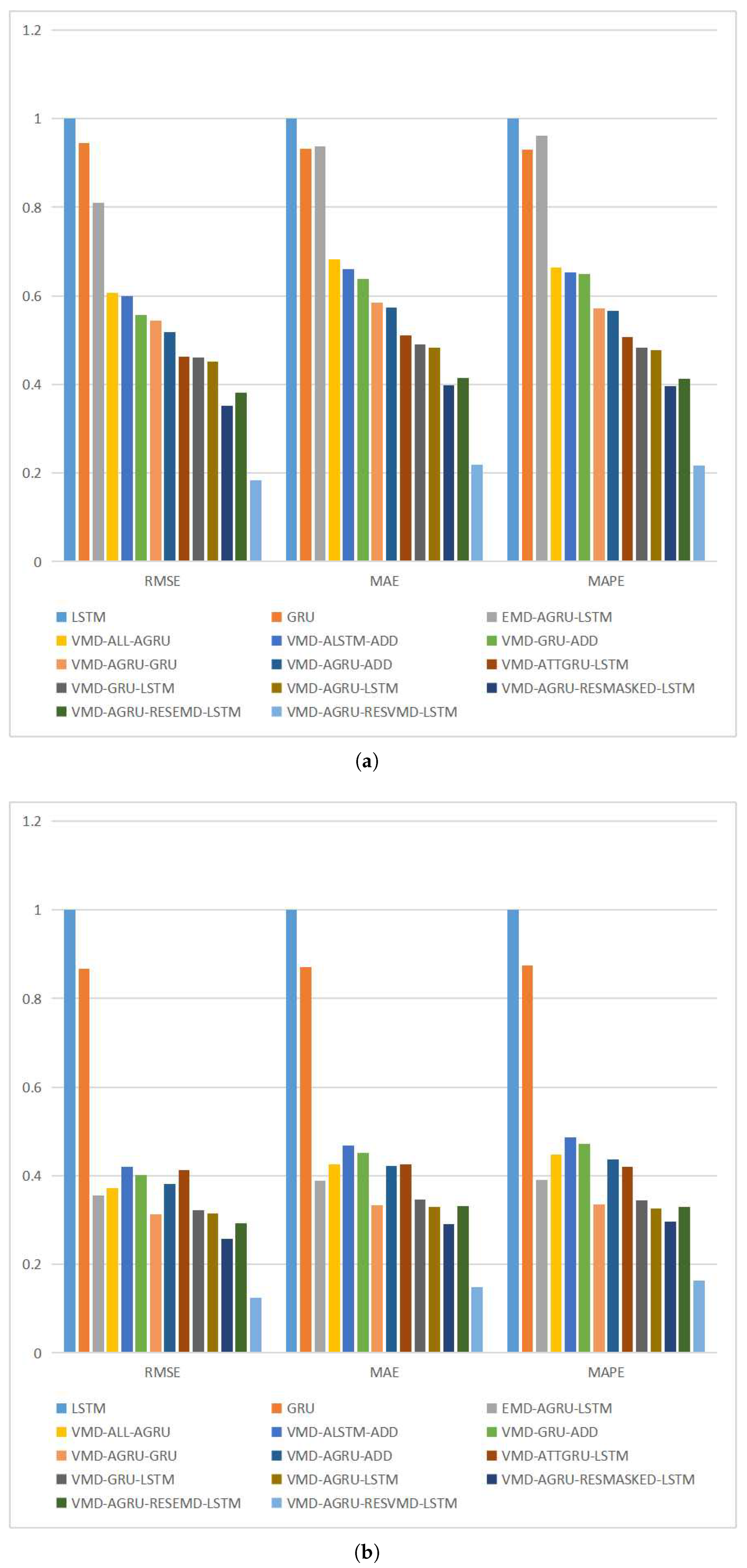

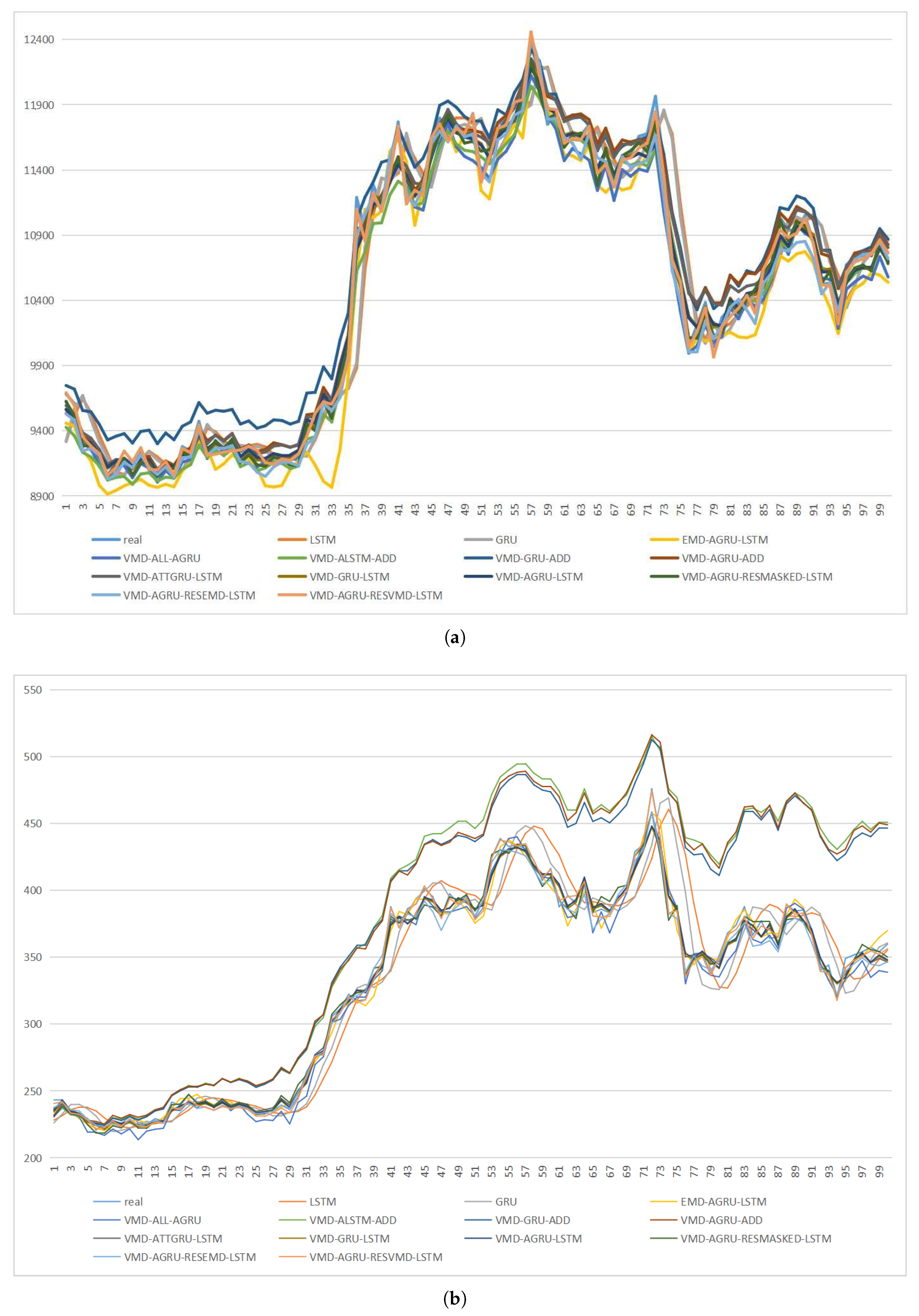

To this end, this paper introduces a novel hybrid prediction model, VMD-AGRU-RESVMD-LSTM, designed for single-step forecasting of cryptocurrency prices. The model combines the decomposition–ensemble framework, variational mode decomposition (VMD), gated recurrent unit (GRU) and long short-term memory (LSTM) neural networks, and an attention mechanism. VMD decomposes the data into smoother subsequences whose internal patterns are easier for the model to capture. Attention-based GRU (AGRU) predictors forecast the individual modes, with the attention mechanism identifying the most significant input features. The RESVMD step re-decomposes the residual before prediction, mitigating the errors that the residual component would otherwise introduce. Finally, an LSTM network performs the integrated forecast.
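Single-step forecasting is typically framed as supervised learning over sliding windows: each input is the previous `window` observations and the target is the next value. The helpers below are a hypothetical sketch of that framing, since the window length used in the paper is not stated here. They also include min-max scaling, assumed here as a common normalization choice, fitted on the training portion only so that test-period information does not leak into training.

```python
import numpy as np


def make_windows(series: np.ndarray, window: int):
    """Return X of shape (n - window, window) and y of shape (n - window,),
    where y[i] = series[i + window] is the value right after window X[i]."""
    n = len(series)
    if n <= window:
        raise ValueError("series must be longer than the window")
    X = np.stack([series[i:i + window] for i in range(n - window)])
    y = series[window:]
    return X, y


def minmax_fit(train: np.ndarray):
    """Fit min-max scaling on training data only; return (scale, unscale) functions."""
    lo, hi = float(train.min()), float(train.max())
    span = (hi - lo) or 1.0  # guard against a constant training series
    return (lambda v: (v - lo) / span), (lambda v: v * span + lo)


series = np.arange(10, dtype=float)
X, y = make_windows(series, window=3)
# X[0] = [0, 1, 2] -> y[0] = 3; X[-1] = [6, 7, 8] -> y[-1] = 9
scale, unscale = minmax_fit(series[:7])
```

In a decomposition–integration model, the same windowing would be applied to each mode separately before training its predictor.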

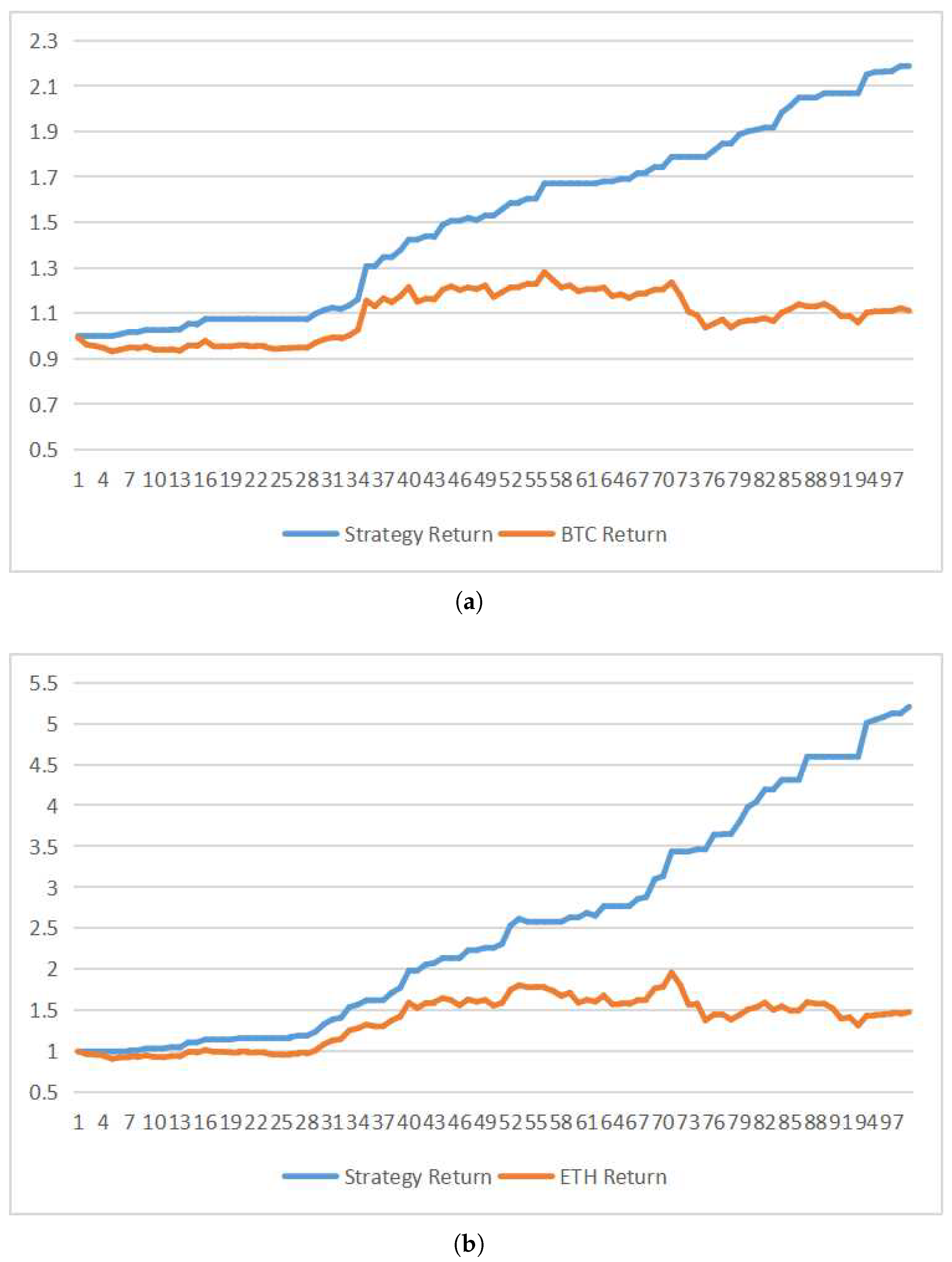

To validate the superiority and robustness of the proposed model, empirical analyses are performed on Bitcoin (BTC) and Ethereum (ETH) datasets. Predictive performance is evaluated comprehensively with standard evaluation metrics, and robustness is tested with Diebold–Mariano (DM) tests on datasets covering different time spans. Finally, the predictions of the VMD-AGRU-RESVMD-LSTM model are subjected to profit-and-loss back-testing to confirm the model's practical economic significance.
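The evaluation described above can be sketched as follows. RMSE, MAE, and MAPE are assumed here as representative standard metrics; the paper's exact metric set is defined in Section 4. The `diebold_mariano` function implements the basic Diebold–Mariano test for one-step-ahead forecasts with squared-error loss and a normal approximation, without the Harvey–Leybourne–Newbold small-sample correction.

```python
import math

import numpy as np


def rmse(y, yhat):
    return float(np.sqrt(np.mean((y - yhat) ** 2)))


def mae(y, yhat):
    return float(np.mean(np.abs(y - yhat)))


def mape(y, yhat):
    return float(np.mean(np.abs((y - yhat) / y)) * 100)  # assumes y has no zeros


def diebold_mariano(y, yhat1, yhat2):
    """One-step-ahead DM test with squared-error loss.
    Returns (statistic, two-sided p-value); a negative statistic favors model 1."""
    d = (y - yhat1) ** 2 - (y - yhat2) ** 2   # loss differential
    T = d.size
    var_d = np.mean((d - d.mean()) ** 2)      # lag-0 autocovariance suffices for h = 1
    stat = d.mean() / math.sqrt(var_d / T)    # fails if both forecasts are identical
    p_value = math.erfc(abs(stat) / math.sqrt(2))  # two-sided, standard normal
    return float(stat), p_value


rng = np.random.default_rng(0)
y = 100 + np.cumsum(rng.normal(size=500))
good = y + rng.normal(scale=0.5, size=500)   # synthetic "accurate" forecasts
bad = y + rng.normal(scale=2.0, size=500)    # synthetic "noisy" forecasts
stat, p = diebold_mariano(y, good, bad)
```

For multi-step horizons h > 1, the variance of the loss differential must also include autocovariances up to lag h - 1.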

In summary, the contributions of this study are as follows:

The study introduces a novel hybrid prediction model, VMD-AGRU-RESVMD-LSTM. Empirical results demonstrate the model’s superiority and robustness in both prediction accuracy and simulated profit outcomes.

This paper proposes a novel residual re-decomposition prediction method for handling the residual left by VMD. Compared with previous approaches to handling residuals, this method reduces errors and thereby improves predictive accuracy.

This research presents an attention mechanism that explores the internal structure of cryptocurrency price sequences more deeply. Combined with the GRU network, this mechanism effectively mines the inherent features of the time series data, improving prediction accuracy.

The remaining sections of the paper are organized as follows.

Section 2 provides a comprehensive review of relevant literature in the field.

Section 3 explains in detail the methodologies and techniques employed, including variational mode decomposition (VMD), gated recurrent unit (GRU), long short-term memory (LSTM), attention mechanisms, normalization, and the experimental procedure.

Section 4 outlines the data used in the study and presents the empirical testing process. It also includes a comparison and analysis of the impacts of various enhancement methods on the experimental outcomes.

Section 5 summarizes the entire paper and provides key takeaways from the study.

2. Related Work

Commonly used cryptocurrency prediction methods fall into two main categories: econometric and statistical models, and machine learning and deep learning models. Most traditional econometric and statistical methods, such as simple exponential smoothing (SES), ARIMA, and their variants, cannot capture long-term dependence in the presence of high volatility, so they are not accurate enough for forecasting highly volatile prices over long periods. Ref. [14] showed that these models struggle to cope with the volatility and non-stationarity of the cryptocurrency market and do not adapt well to predicting different types of cryptocurrencies. Compared with traditional time series models, machine learning and deep learning models are better at analyzing non-linear multivariate data and are robust to noise. When sufficient data are available, many classical ML models achieve higher prediction accuracy than traditional econometric and statistical models [15]. With progress in neural network research, today's deep learning models have achieved even higher accuracy in time series prediction. Ref. [8] shows that recurrent neural networks (RNNs) built on GRU and LSTM units outperform traditional ML models and that a GRU model with recurrent dropout significantly improves on the baseline performance for Bitcoin price prediction. However, a single model struggles to capture the characteristics of a time series accurately, and therefore to produce accurate predictions for complex and volatile cryptocurrency prices.

The decomposition–integration method has shown its superiority in many time series prediction fields, such as carbon prices [16,17], wind power [18,19,20], oil prices [11,21], and air quality [22,23]. It has also been widely used in financial time series prediction [24,25]; in particular, research shows that it performs excellently in predicting stock prices [10,26] and exchange rates [27]. However, it has seen only limited application in cryptocurrency price forecasting. Ref. [28] shows that both cryptocurrencies and traditional financial time series such as stock prices are non-stationary and highly volatile. Ref. [29] shows that, after years of development, cryptocurrencies have become closely related to traditional financial markets such as currencies, stocks, and commodities; at the level of a single time series, cryptocurrencies and foreign exchange markets have almost indistinguishable complex features. Ref. [30] has shown considerable similarities in the erratic behavior of stocks and cryptocurrencies. According to recent research from the International Monetary Fund [31], the correlation between cryptocurrencies and traditional assets such as stocks has increased significantly as market acceptance has grown. Cryptocurrencies have become more correlated with the stock market than stocks are with other assets such as gold, investment-grade bonds, and major currencies.

Given the similar characteristics of cryptocurrency price series and traditional financial time series, this paper applies the decomposition–integration method to cryptocurrency price prediction. Empirical mode decomposition (EMD) is the main method used to decompose non-stationary time series data into intrinsic mode functions (IMFs). Ref. [32] used EMD to decompose financial time series and compared it with wavelet decomposition, finding that EMD performed better. However, EMD is affected by endpoint effects and mode mixing, which increase prediction error. In contrast, VMD can overcome mode mixing, improper envelopes, unstable boundaries, and other problems that often occur during decomposition, so it remains stable when dealing with non-linear and non-stationary data [11].

Moreover, owing to the great success of the self-attention-based Transformer [33] in natural language processing (NLP), attention mechanisms have recently been applied in various fields to solve different problems. In essence, an attention mechanism models global dependencies between input and output. It is a general framework, independent of any particular model, that can learn the internal relationships between the input vectors and the output. Ref. [34] shows that the attention mechanism performs excellently in time series prediction. In this study, the attention mechanism and the GRU model are combined and applied within the decomposition–integration framework to better mine the features of time series.
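For reference, the self-attention in the Transformer [33] computes scaled dot-product attention over query, key, and value matrices Q, K, and V, where d_k is the key dimension:

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left( \frac{Q K^{\top}}{\sqrt{d_k}} \right) V
```

Each output row is a convex combination of the rows of V, weighted by the softmax-normalized query-key similarities. This is how the mechanism relates every input position to every output position. The attention mechanism used in this paper is a tailored variant described in Section 3 and need not take exactly this form.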

This study proposes a novel hybrid model for cryptocurrency price prediction based on VMD and deep learning methods. Compared with benchmark models and other hybrid models, our model effectively improves the accuracy of cryptocurrency price prediction.

5. Conclusions

To achieve accurate and robust predictive performance on Bitcoin price sequences, this paper proposes a hybrid model, VMD-AGRU-RESVMD-LSTM, which integrates the variational mode decomposition (VMD) algorithm, an attention mechanism, a GRU model, and an LSTM model. In addition, the model re-decomposes the residual sequence left by VMD as a means of handling it.

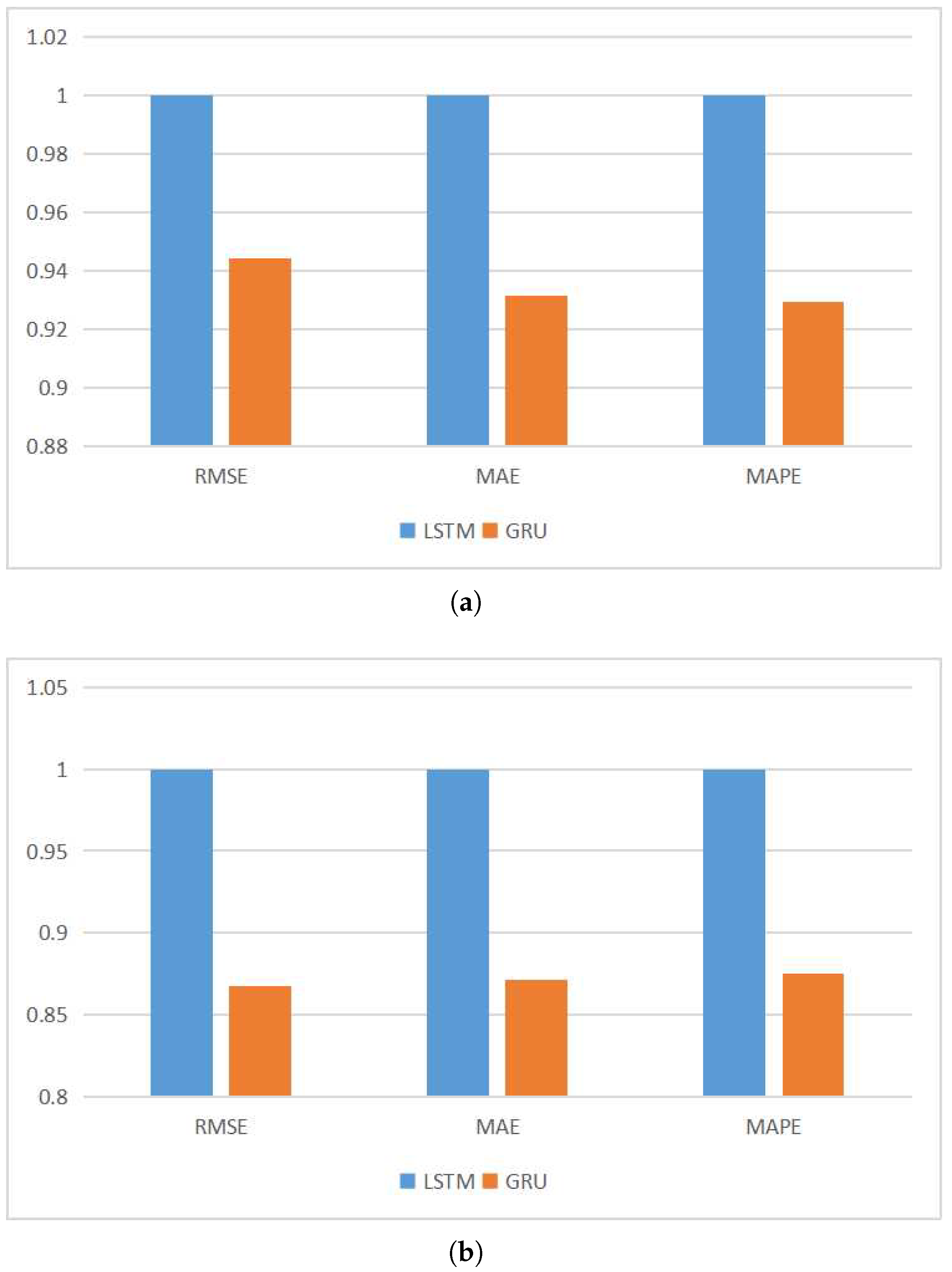

The superiority of GRU in the domain of time series forecasting has been extensively verified through research. However, individual deep learning models struggle to discern the importance of distinct time series features. To enhance the feature recognition ability of the predictive model, the incorporation of an attention mechanism becomes imperative.

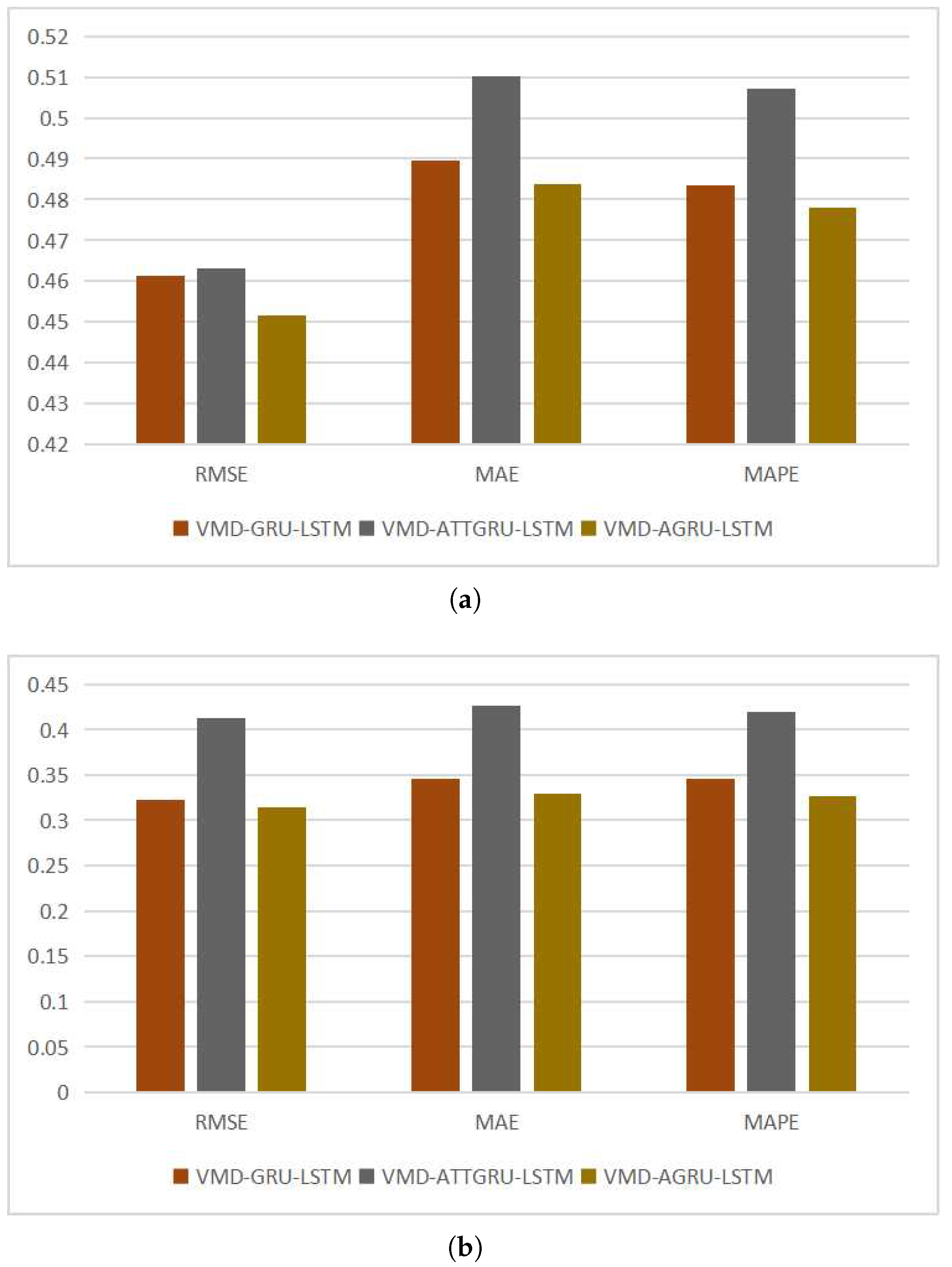

In the realm of attention mechanisms, self-attention mechanisms are often employed to identify features within time series. However, for the task of forecasting cryptocurrency price sequences, the effectiveness of such self-attention mechanisms is not optimal. Hence, a more tailored attention mechanism that aligns better with the characteristics of time series data is constructed to synergize with the GRU model.

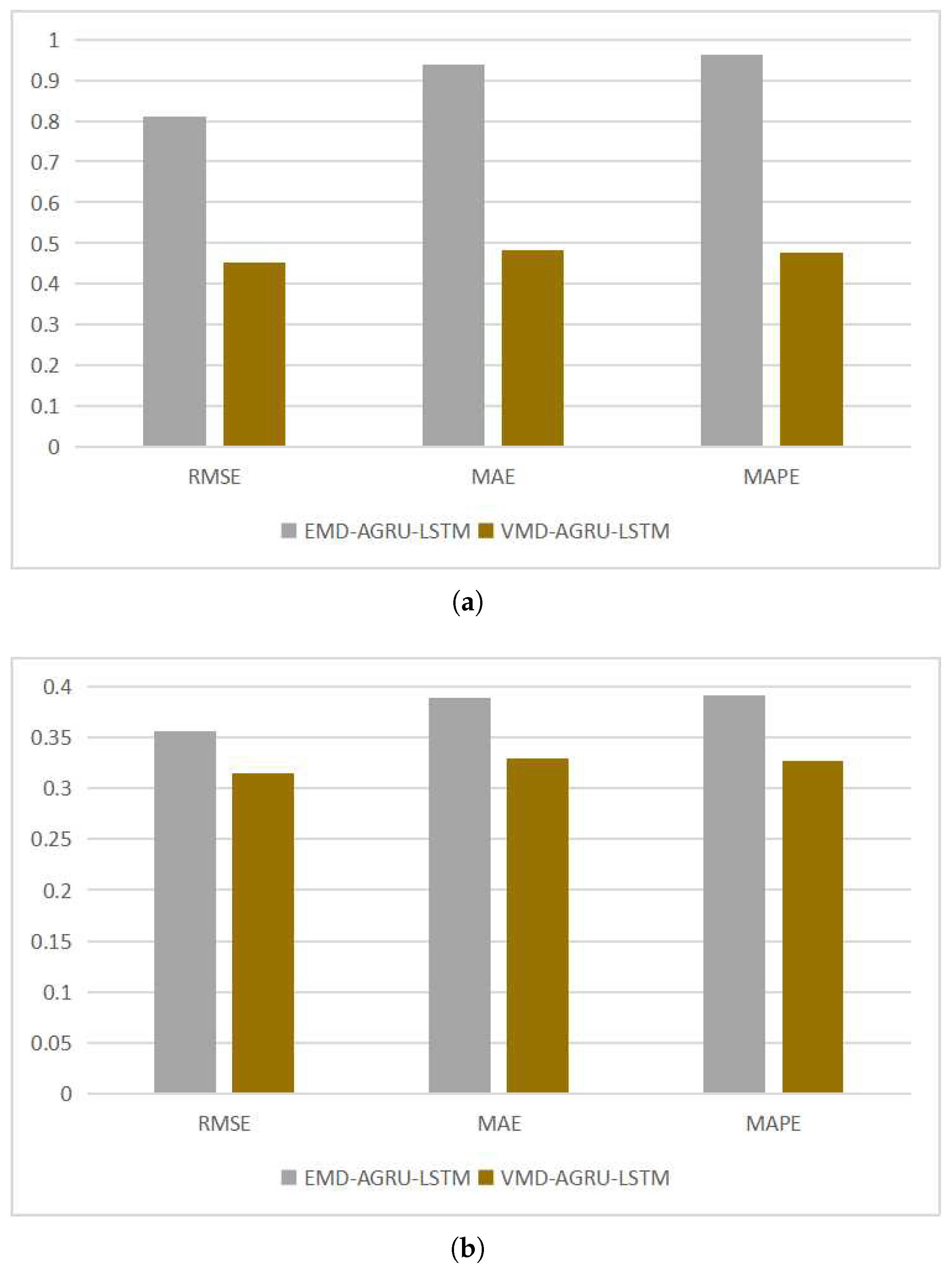

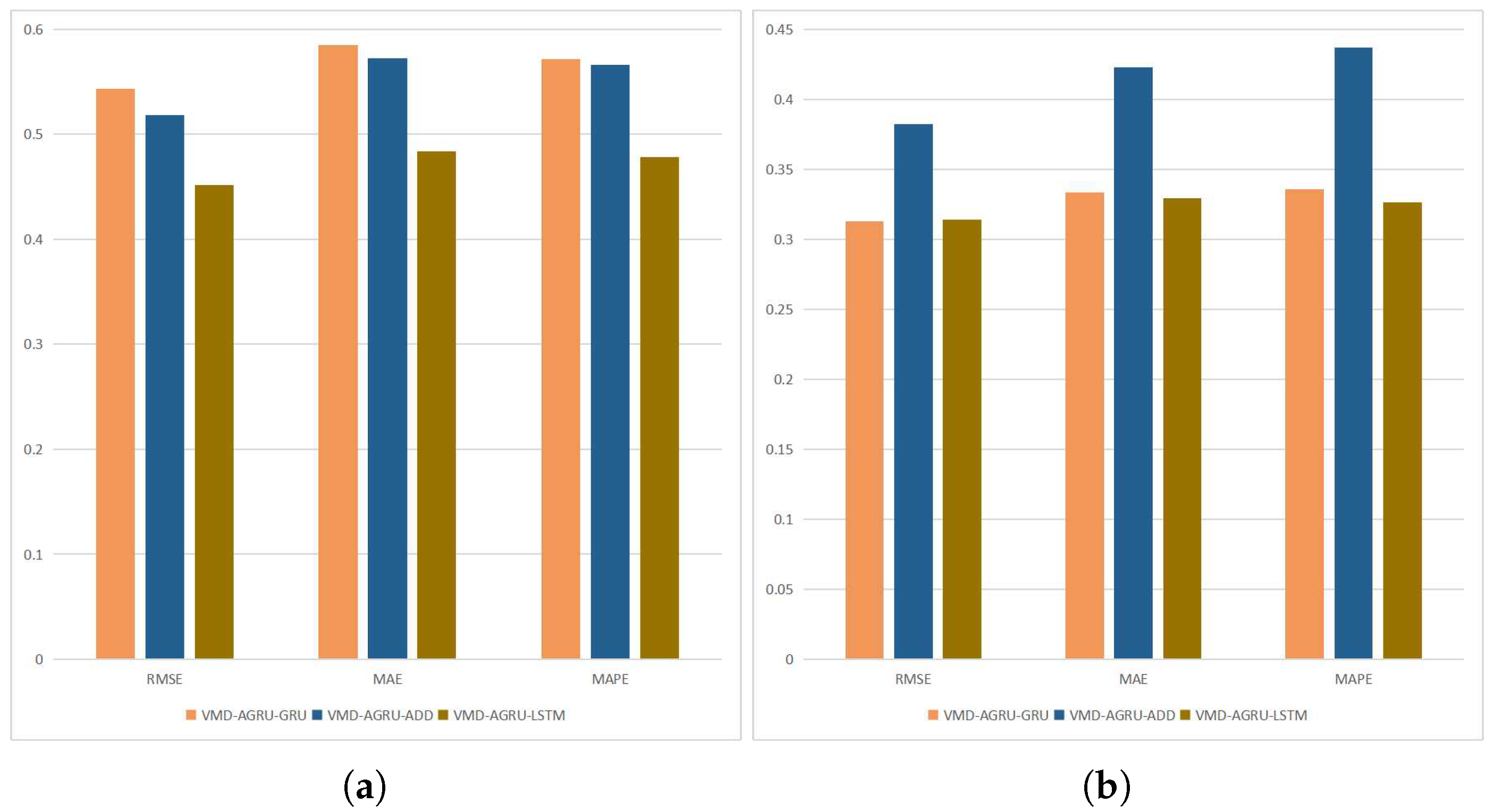

Exploring the underlying features of a time series can significantly enhance a model's predictive performance, so it is essential to leverage VMD's ability to uncover the latent features of the original series. A review of numerous related studies shows that, although decomposition ensemble methods perform respectably across various problems, they handle residuals poorly, which introduces errors into the final predictions. By re-decomposing the residual, we mitigate these errors and further boost predictive performance.

The study conducted profit-and-loss back-testing on the proposed model's predictions, and the model performed strongly in the simulated back-testing scenarios. This result underscores the model's practical economic value: its predictions can generate tangible profits, supporting its potential use in investment strategies and decision-making processes.
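Profit-and-loss back-testing can be illustrated with a deliberately simple rule, assumed here for illustration and not the paper's trading strategy. The rule goes long for the next period when the one-step forecast exceeds the current price, otherwise stays flat, and charges a proportional fee on each position change. The function name and the fee level are hypothetical.

```python
import numpy as np


def backtest_long_flat(prices, forecasts, fee: float = 0.001):
    """prices[t] is the close at t; forecasts[t] predicts prices[t + 1].
    Returns (cumulative return, per-period strategy returns)."""
    prices = np.asarray(prices, dtype=float)
    forecasts = np.asarray(forecasts, dtype=float)
    position = (forecasts[:-1] > prices[:-1]).astype(float)  # 1 = long, 0 = flat
    asset_ret = prices[1:] / prices[:-1] - 1.0                # realized next-period returns
    # Fee whenever the position changes (entering or exiting); final exit not charged.
    trades = np.abs(np.diff(np.concatenate(([0.0], position))))
    strat_ret = position * asset_ret - fee * trades
    cumulative = float(np.prod(1.0 + strat_ret) - 1.0)
    return cumulative, strat_ret


prices = np.array([100.0, 110.0, 99.0, 120.0])
forecasts = np.array([110.0, 99.0, 120.0, 120.0])  # forecasts[-1] is unused
cum, rets = backtest_long_flat(prices, forecasts, fee=0.0)
# long 100 -> 110 (+10%), flat 110 -> 99, long 99 -> 120: cum = 1.1 * 120 / 99 - 1 = 1/3
```

Decisions at time t use only the forecast made at t and the price observed at t, which avoids look-ahead bias in the simulation.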

The experiments focus on the two largest cryptocurrencies, Bitcoin and Ethereum, evaluating the proposed hybrid model against other models with standard evaluation metrics and Diebold–Mariano (DM) tests. The results demonstrate that the proposed model outperforms not only basic decomposition ensemble models but also other mainstream models. Furthermore, the experiments confirm the effectiveness of residual re-decomposition in cryptocurrency price sequence prediction and the improvement in predictive performance brought by the attention mechanism. VMD also proves more effective than EMD at uncovering the latent features of time series.

Cryptocurrency price fluctuations are influenced by a multitude of factors, including macroeconomic aspects such as taxes and regulations, investor sentiments, cryptocurrency mining costs, and more. Relying solely on historical data for predictions may not adequately capture the mechanisms through which real-world factors impact cryptocurrency variations. In future research, a multi-modal predictive model for cryptocurrency price forecasting is a potential avenue. This involves incorporating economic indicators, policy indicators, or other time series variables, along with historical cryptocurrency data, as inputs to an extended predictive model. Furthermore, natural language processing (NLP) techniques could be employed to process cryptocurrency-related news texts from the web, extracting market sentiment indicators as additional inputs to the predictive model. This combination of time series and textual data has been shown to enhance prediction accuracy in stock price forecasting, yet such research is relatively scarce in the cryptocurrency domain, making multi-modal cryptocurrency price prediction research highly significant.

Another area of future study involves adapting the existing variational mode decomposition (VMD) algorithm to handle multi-variable decomposition. Since VMD is primarily developed for single-variable decomposition, exploring how existing multi-variable VMD algorithms perform in cryptocurrency prediction scenarios would be valuable.

Optimizing model hyperparameters is also a critical consideration. In this paper, hyperparameter selection was mainly guided by existing research and trial and error. Incorporating suitable hyperparameter optimization algorithms such as particle swarm optimization could enhance the interpretability and effectiveness of the hyperparameter selection process.
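As a pointer for that future work, a minimal global-best particle swarm optimization over a box-bounded search space is sketched below. The inertia and acceleration coefficients are common textbook defaults, assumed rather than tuned. `objective` stands in for a hypothetical validation-loss function of continuous hyperparameters (for example, learning rate and dropout rate); integer hyperparameters such as layer sizes would need rounding.

```python
import numpy as np


def pso(objective, lower, upper, n_particles=30, n_iter=100, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` over the box [lower, upper] with global-best PSO."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim = lower.size
    pos = rng.uniform(lower, upper, size=(n_particles, dim))
    vel = np.zeros_like(pos)
    pbest = pos.copy()                               # each particle's best position
    pbest_val = np.array([objective(p) for p in pos])
    g = pbest[np.argmin(pbest_val)].copy()           # swarm's best position
    g_val = float(pbest_val.min())
    for _ in range(n_iter):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # Velocity = inertia + pull toward personal best + pull toward global best
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (g - pos)
        pos = np.clip(pos + vel, lower, upper)       # keep particles inside the box
        vals = np.array([objective(p) for p in pos])
        improved = vals < pbest_val
        pbest[improved] = pos[improved]
        pbest_val[improved] = vals[improved]
        if pbest_val.min() < g_val:
            g_val = float(pbest_val.min())
            g = pbest[np.argmin(pbest_val)].copy()
    return g, g_val


# Toy objective with a known minimum, standing in for a validation loss.
target = np.array([0.3, -0.2])
best, best_val = pso(lambda p: float(np.sum((p - target) ** 2)), lower=[-1, -1], upper=[1, 1])
```

Each objective evaluation would, in the real setting, mean training and validating the model once, so the particle count and iteration budget dominate the cost.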

Addressing regime change issues in cryptocurrency price prediction is another important aspect. Major external events, such as the COVID-19 pandemic or geopolitical shifts, can disrupt the underlying patterns within cryptocurrency sequences, rendering existing models ineffective. Hence, developing methods to detect regime changes and adapt model structures accordingly to account for new features induced by such changes is crucial for constructing robust cryptocurrency price prediction models.
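One simple starting point for the regime-change detection called for above is a two-sided CUSUM detector, for example applied to returns. It is sketched below as an illustration rather than a method from this paper. The reference mean and scale are estimated from an initial calibration window, and the drift `k` and threshold `h` (in units of that scale) are hypothetical tuning choices.

```python
import numpy as np


def cusum_alarms(x, calib=50, k=0.5, h=5.0):
    """Two-sided CUSUM for shifts in the mean of x relative to the calibration window.
    Returns the indices where an alarm fires. The statistics reset after each alarm,
    but the reference mean is not re-estimated, so a persistent shift keeps alarming."""
    x = np.asarray(x, dtype=float)
    mu = x[:calib].mean()
    sigma = x[:calib].std() or 1.0  # guard against a constant calibration window
    g_pos = g_neg = 0.0
    alarms = []
    for t in range(calib, x.size):
        z = (x[t] - mu) / sigma
        g_pos = max(0.0, g_pos + z - k)   # accumulates upward deviations
        g_neg = max(0.0, g_neg - z - k)   # accumulates downward deviations
        if g_pos > h or g_neg > h:
            alarms.append(t)
            g_pos = g_neg = 0.0
    return alarms


x = np.concatenate([np.zeros(100), np.ones(100)])  # mean shifts at t = 100
alarms = cusum_alarms(x, calib=50, k=0.5, h=5.0)
print(alarms[0])  # 110
```

In a forecasting pipeline, an alarm could trigger re-estimating the reference statistics on recent data and retraining or reweighting the predictive model for the new regime.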

The predictive accuracy of the cryptocurrency hybrid forecasting model, VMD-AGRU-RESVMD-LSTM, holds great importance for investors, as effective predictions can help mitigate investment losses. Furthermore, the model’s predictive outcomes can serve as valuable references for regulatory bodies in various countries, aiding in the formulation of more rational policies. Confidence among investors and regulatory institutions is pivotal in the financial markets, and this predictive model has the potential to enhance market confidence by providing reliable forecasts.

Lastly, the predictive results of this model can offer insights for diversified asset allocation strategies, thereby enhancing the efficiency of asset management and risk control capabilities. In essence, the utilization of the VMD-AGRU-RESVMD-LSTM hybrid model extends its benefits beyond individual investors to influence regulatory decisions, market confidence, and overall asset management efficiency.