Abstract

In this article, we examine the local convergence of an extension of Newton’s method in a Banach space setting. Traub introduced the method (also known as the Arithmetic-Mean Newton’s Method and the Weerakoon and Fernando method), which has an order of convergence of three. Previous works either used Taylor series expansions involving higher-order derivatives or could not derive the desired order of convergence. We study the local convergence of Traub’s method and two of its modifications and obtain the order of convergence of these methods without using Taylor series expansions. The radii of convergence, basins of attraction, a comparison of the iterates with those of similar iterative methods, the approximate computational order of convergence (ACOC), and a heatmap of the number of iterations are provided.

1. Introduction

Many problems in engineering and the natural sciences can be modeled as an equation of the form

$$F(x) = 0, \tag{1}$$

where $F: \Omega \subseteq X \to Y$ is a Fréchet differentiable operator on a convex subset $\Omega$ of $X$, and $X$ and $Y$ are Banach spaces [1]. We are interested in approximating a locally unique solution $x^*$ of Equation (1). In general, no analytical or closed-form solution is available, so we turn to iterative methods. Newton’s method, defined for $n = 0, 1, 2, \dots$ by

$$x_{n+1} = x_n - F'(x_n)^{-1}F(x_n),$$

where $x_0 \in \Omega$ is an initial point, is very popular. Many methods in the literature are modifications or extensions of this method; see [2]. Many authors have considered multi-step methods to increase the order of convergence as well as the efficiency [3,4,5,6].
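For reference, Newton’s method recalled above can be sketched for a system in $\mathbb{R}^m$ as follows. This is a minimal illustration, not the authors’ code (their computations were done in MATLAB): it solves the linear system $F'(x_n)s = F(x_n)$ rather than forming the inverse, and the function name `newton`, the tolerance, and the two-dimensional test system are assumptions chosen only for illustration.

```python
import numpy as np


def newton(F, J, x0, tol=1e-12, max_iter=50):
    """Newton's method x_{n+1} = x_n - F'(x_n)^{-1} F(x_n) for F: R^m -> R^m."""
    x = np.asarray(x0, dtype=float)
    for _ in range(max_iter):
        step = np.linalg.solve(J(x), F(x))  # solve F'(x_n) s = F(x_n); no explicit inverse
        x = x - step
        if np.linalg.norm(step) < tol:
            break
    return x


# Hypothetical test system: the unit circle intersected with the line x1 = x2.
def F(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 1.0, x[0] - x[1]])


def J(x):
    return np.array([[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]])


root = newton(F, J, [1.0, 0.5])
print(root)  # approx. [0.70710678 0.70710678]
```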

Among multi-step methods, the Mean Newton methods are well studied (see [7]). Traub introduced the following modification of Newton’s method in [5] (see also [8]):

$$y_n = x_n - F'(x_n)^{-1}F(x_n), \qquad x_{n+1} = x_n - 2\left(F'(x_n) + F'(y_n)\right)^{-1}F(x_n), \tag{2}$$

where $n = 0, 1, 2, \dots$. This method, also called the Arithmetic-Mean Newton’s method, has an order of convergence of three. Later, Weerakoon and Fernando [9] derived the method by approximating the integral in the interpolatory quadrature formula with the trapezoidal rule. Frontini and Sormani [10] showed that this method is one of the most efficient variants of Newton’s method based on quadrature formulas. Subsequently, Cordero et al. [11] extended the method to the fifth-order method defined for $n = 0, 1, 2, \dots$ by

$$y_n = x_n - F'(x_n)^{-1}F(x_n), \qquad z_n = x_n - 2\left(F'(x_n) + F'(y_n)\right)^{-1}F(x_n), \qquad x_{n+1} = z_n - F'(y_n)^{-1}F(z_n). \tag{3}$$

However, the analysis in [11] used Taylor series expansions and assumed the existence of derivatives up to order six. Sharma and Parhi [12] removed these assumptions and studied this method in Banach spaces; nevertheless, they were unable to obtain the desired order of convergence. Many local and semi-local convergence studies of this method have been conducted [7,13,14,15].
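A minimal NumPy sketch of Traub’s method (2) and of the fifth-order extension (3) of Cordero et al. follows. It assumes the last step of (3) has the form $x_{n+1} = z_n - F'(y_n)^{-1}F(z_n)$, so the Jacobian at $y_n$ is reused and none is evaluated at $z_n$. The helper names, the fixed number of steps, and the test system are illustrative assumptions.

```python
import numpy as np


def traub_step(F, J, x):
    """One step of Traub's (arithmetic-mean Newton) method; also returns F'(y_n)."""
    Fx, Jx = F(x), J(x)
    y = x - np.linalg.solve(Jx, Fx)               # Newton predictor y_n
    Jy = J(y)
    z = x - 2.0 * np.linalg.solve(Jx + Jy, Fx)    # corrector with mean of F'(x_n), F'(y_n)
    return z, Jy


def traub(F, J, x, n_steps):
    for _ in range(n_steps):
        x, _ = traub_step(F, J, x)
    return x


def fifth_order(F, J, x, n_steps):
    """Assumed form of (3): Traub step to z_n, then z_n - F'(y_n)^{-1} F(z_n)."""
    for _ in range(n_steps):
        z, Jy = traub_step(F, J, x)
        x = z - np.linalg.solve(Jy, F(z))         # frozen derivative F'(y_n)
    return x


# Hypothetical test system with root (1/sqrt(2), 1/sqrt(2)).
def F(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 1.0, x[0] - x[1]])


def J(x):
    return np.array([[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]])


x0 = np.array([1.0, 0.5])
x_traub = traub(F, J, x0, 5)
x_fifth = fifth_order(F, J, x0, 5)
```

Reusing $F'(y_n)$ in the last step keeps the cost per iteration at two Jacobian evaluations, which is the reason this extension raises the order without adding derivative evaluations.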

Parhi and Gupta [15] used the above extension, together with a linear interpolation of the derivative, to obtain an efficient method (4) of order six.
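In the scalar case, the idea of a linear interpolation of the derivative can be made concrete. Interpolating $f'$ linearly through $(x_n, f'(x_n))$ and $(y_n, f'(y_n))$ and using $(z_n - x_n)/(y_n - x_n) = 2f'(x_n)/(f'(x_n) + f'(y_n))$ gives $f'(z_n) \approx f'(x_n)\,\frac{3f'(y_n) - f'(x_n)}{f'(x_n) + f'(y_n)}$. The sketch below uses this approximation in a final Newton-type step from $z_n$. This scalar derivation is ours and is given only as an illustration; it is an assumption that it agrees with the Banach-space form of method (4), and the test equation $x^3 - 2 = 0$ and the helper name are hypothetical.

```python
def sixth_order_interp(f, df, x, max_iter=10, tol=1e-15):
    """Scalar sketch: Traub step to z_n, then a Newton-type step from z_n in which
    f'(z_n) is replaced by its linear interpolant through x_n and y_n."""
    for _ in range(max_iter):
        fx, dfx = f(x), df(x)
        if fx == 0.0:
            return x
        y = x - fx / dfx                                     # Newton predictor y_n
        dfy = df(y)
        z = x - 2.0 * fx / (dfx + dfy)                       # Traub step z_n
        dfz_approx = dfx * (3.0 * dfy - dfx) / (dfx + dfy)   # interpolated f'(z_n)
        x_new = z - f(z) / dfz_approx
        if abs(x_new - x) < tol:
            return x_new
        x = x_new
    return x


def f(x):
    return x ** 3 - 2.0


def df(x):
    return 3.0 * x ** 2


root = sixth_order_interp(f, df, 1.5)
```

No derivative is evaluated at $z_n$, so each step costs two derivative and three function evaluations.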

The paper is organized as follows. In Section 2, we introduce the auxiliary functions, definitions, and convergence results for each method in turn. Section 3 contains numerical examples illustrating the radii of convergence and the approximate computational order of convergence (ACOC), together with an example illustrating the basins of attraction. Section 3 also represents the number of iterations as a heatmap and contains tables comparing the iterates of methods (2)–(4) with those of the corresponding methods in [16]. Finally, Section 4 presents the conclusions.

2. Main Results

Firstly, we introduce some functions required in the proofs, along with necessary notations and definitions.

Definition 1

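([5], p. 9). Let $\{x_n\}$ be a sequence converging to $x^*$. If there exist a real number $p$ and a nonzero constant $C$ such that the limit

$$\lim_{n \to \infty} \frac{\|x_{n+1} - x^*\|}{\|x_n - x^*\|^p} = C$$

exists, then $p$ is called the order of convergence of the sequence $\{x_n\}$.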
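To see Definition 1 at work, the sketch below runs Traub’s method (2) on the hypothetical scalar equation $x^2 - 2 = 0$ in 500-digit decimal arithmetic, so that several iterations can be observed before round-off dominates, and prints the ratios for $p = 3$. For this particular equation one can check by hand that the method reduces to $x_{n+1} = (x_n^3 + 6x_n)/(3x_n^2 + 2)$ and that $x_{n+1} - \sqrt{2} = (x_n - \sqrt{2})^3/(3x_n^2 + 2)$, so the ratios tend to $C = 1/8$. The test problem, the starting point, and the helper name are assumptions.

```python
from decimal import Decimal, getcontext

getcontext().prec = 500  # 500 significant digits: room for several cubic steps


def traub_scalar(f, df, x0, n):
    """Return [x_0, ..., x_n] generated by Traub's method (2) for f(x) = 0."""
    xs = [x0]
    for _ in range(n):
        x = xs[-1]
        fx, dfx = f(x), df(x)
        y = x - fx / dfx                       # Newton predictor
        xs.append(x - 2 * fx / (dfx + df(y)))  # arithmetic-mean corrector
    return xs


def f(x):
    return x * x - 2


def df(x):
    return 2 * x


root = Decimal(2).sqrt()
xs = traub_scalar(f, df, Decimal("1.5"), 4)
errors = [abs(x - root) for x in xs]

# Definition 1 with p = 3: ratios ||x_{n+1} - x*|| / ||x_n - x*||^3.
ratios = [errors[k + 1] / errors[k] ** 3 for k in range(len(errors) - 1)]
for r in ratios:
    print(f"{float(r):.6f}")
```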

The above definition is somewhat restrictive. Ortega and Rheinboldt discussed the more general concepts of R-order and Q-order in [2]. Nevertheless, under an additional condition, the above definition is equivalent to the R-order and the Q-order (see [17,18]).

We use the order of convergence defined as follows (see [19,20]).

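A sequence $\{x_n\}$ converges to $x^*$ with order at least $p$ if there are constants $p > 1$ and $C > 0$ such that

$$\|x_{n+1} - x^*\| \le C\,\|x_n - x^*\|^p, \quad n = 0, 1, 2, \dots \tag{5}$$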

Note that

for implies (5) provided

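For practical computation of the order of convergence, one may use the approximate computational order of convergence (ACOC) [21], defined as

$$\rho_n = \frac{\ln\left(\|x_{n+1} - x_n\| / \|x_n - x_{n-1}\|\right)}{\ln\left(\|x_n - x_{n-1}\| / \|x_{n-1} - x_{n-2}\|\right)}, \quad n = 2, 3, \dots$$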
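The ACOC uses only consecutive iterates and does not require $x^*$. A minimal sketch follows, again using Traub’s method (2) on the hypothetical equation $x^2 - 2 = 0$ in 500-digit decimal arithmetic, so that enough distinct iterates are available; the helper names are ours. The computed values should approach 3, the order of method (2).

```python
from decimal import Decimal, getcontext

getcontext().prec = 500


def traub_scalar(f, df, x0, n):
    """Return [x_0, ..., x_n] generated by Traub's method (2) for f(x) = 0."""
    xs = [x0]
    for _ in range(n):
        x = xs[-1]
        fx, dfx = f(x), df(x)
        y = x - fx / dfx
        xs.append(x - 2 * fx / (dfx + df(y)))
    return xs


def acoc(xs):
    """ACOC rho_k = ln(d_k / d_{k-1}) / ln(d_{k-1} / d_{k-2}), with d_k = |x_{k+1} - x_k|."""
    d = [abs(xs[k + 1] - xs[k]) for k in range(len(xs) - 1)]
    return [float((d[k] / d[k - 1]).ln() / (d[k - 1] / d[k - 2]).ln())
            for k in range(2, len(d))]


xs = traub_scalar(lambda x: x * x - 2, lambda x: 2 * x, Decimal("1.5"), 4)
rho = acoc(xs)
print(rho)  # values close to 3
```

In double precision, the differences $\|x_{k+1} - x_k\|$ of a third-order method reach round-off after about three steps, which leaves too few iterates for the formula; extended precision avoids this.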

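Throughout this paper, the open and closed balls centered at $x \in X$ with radius $r > 0$ are denoted by

$$B(x, r) = \{u \in X : \|u - x\| < r\} \quad \text{and} \quad \bar{B}(x, r) = \{u \in X : \|u - x\| \le r\},$$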

respectively. Furthermore, is denoted by , is denoted by , and is denoted by Let and M be positive constants in ; our proofs are subject to the following conditions.

Assumption 1.

Assume the following:

1. is the root of and exists, where

2. There exists such that for all

3. There exists such that

4. There exists such that for all

First, we define function as

Let Note that is an increasing function in the interval Furthermore, and That is,

Define as below.

Let

Clearly, since and as , by the intermediate value theorem, there exists a smallest positive root of , say in the interval So,

Consider,

Let

Note that and as The intermediate value theorem guarantees a smallest positive root in such that

Let

then

Furthermore, one can see that is an increasing function in and hence

Theorem 1.

Proof.

First, we will show that is bounded. Using Assumption 1 and (12),

Hence, by Banach’s lemma on invertible operators [22], is invertible and

From , we have

hence, by Assumption 1, Equations (13) and (16),

so, iterate Next, we employ Banach’s lemma on invertible operators [22], Equation (13), and Assumption 1 to show that is bounded.

So, is bounded and

From (2),

Let and Now, we split up as follows.

where

Using Assumption 1, we have

So, using (19), (20), (21), (22), and (13),

Next, for the convergence analysis of method (3), we define some further functions and parameters.

Define by

and

Then, and as Hence, by the intermediate value theorem, has a smallest zero and

Define by

and by

Observe that and as Using the intermediate value theorem, has a smallest zero in the interval Let

then

It is clear that is an increasing function in In particular,

Theorem 2.

Proof.

We use induction to prove the theorem. Clearly, one can mimic the proof of Theorem 1 to obtain

Now, we will show that is bounded using Assumption 1 and (28),

Hence, is invertible and

The rest of the proof follows as in Theorem 1. □
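The proofs above rely on Banach’s lemma on invertible operators: if $A$ is a bounded linear operator with $\|I - A\| < 1$, then $A$ is invertible and $\|A^{-1}\| \le 1/(1 - \|I - A\|)$. The snippet below checks this bound numerically for random matrices in the spectral norm. The matrix size, the random seed, and the perturbation scale are arbitrary choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)


def banach_bound_holds(m=5, scale=0.9):
    """Check ||A^{-1}|| <= 1 / (1 - ||I - A||) for A = I - E with ||E||_2 = scale < 1."""
    E = rng.standard_normal((m, m))
    E *= scale / np.linalg.norm(E, 2)     # rescale so that the spectral norm of E is `scale`
    A = np.eye(m) - E                     # hence ||I - A||_2 = scale < 1
    inv_norm = np.linalg.norm(np.linalg.inv(A), 2)
    return inv_norm <= 1.0 / (1.0 - scale) + 1e-9  # small slack for round-off


results = [banach_bound_holds() for _ in range(1000)]
print(all(results))
```

The bound follows from the Neumann series $A^{-1} = \sum_{k \ge 0} (I - A)^k$, which is why it holds in any operator norm and not only for matrices.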

To prove the convergence of method (4), we introduce some more functions and parameters. Let be defined as

and as

Then, and as By the intermediate value theorem, there exists a smallest zero in the interval such that

Lastly, we define functions by

and by

Then, and as The intermediate value theorem gives a smallest root in such that

If

then

Furthermore, observe that is an increasing function in Specifically,

Theorem 3.

Proof.

Since only the last step of method (4) differs from that of method (3), we can repeat the proof of Theorem 2 to obtain

Now, as in the previous case, we show that exists using Assumption 1 and (40).

Hence, is invertible and

Hence, by (43), Assumption 1, and (40), it follows that

so, . Furthermore, from (41) and (45), we have

Hence, (42) is satisfied for Now, the proof follows as in Theorem 2. □
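Each radius in Theorems 1–3 is the smallest zero of a continuous function on an interval, whose existence follows from the intermediate value theorem. Numerically, such a zero can be bracketed by scanning the interval on a grid and then refined by bisection. The sketch below does this for the hypothetical function $h(t) = \frac{Lt}{2(1 - L_0 t)} - 1$ on $[0, 1/L_0)$, whose zero is the well-known radius $2/(2L_0 + L)$ for Newton’s method under center-Lipschitz and Lipschitz conditions with constants $L_0$ and $L$. The constants and this choice of $h$ are assumptions; they are not the functions defined in this paper.

```python
def smallest_zero(h, a, b, n_grid=10_000, tol=1e-14):
    """Approximate the smallest zero of h in (a, b).

    Assumes h(a) < 0; scans the grid a + k*(b - a)/n_grid for the first point
    with h >= 0 (b itself is excluded, since h may blow up there) and then
    refines the bracket by bisection.
    """
    step = (b - a) / n_grid
    left = a
    for k in range(1, n_grid):
        right = a + k * step
        if h(right) >= 0:          # first sign change: a zero lies in [left, right]
            break
        left = right
    else:
        raise ValueError("no sign change found on the grid")
    while right - left > tol:
        mid = 0.5 * (left + right)
        if h(mid) < 0:
            left = mid
        else:
            right = mid
    return 0.5 * (left + right)


# Hypothetical constants and radius function (Newton's method, not this paper's h).
L0, L = 1.0, 2.0


def h(t):
    return L * t / (2.0 * (1.0 - L0 * t)) - 1.0


r = smallest_zero(h, 0.0, 1.0 / L0)
print(r)  # approx. 0.5 = 2 / (2 * L0 + L)
```

A grid scan can miss two zeros that lie within one grid cell, so the grid should be fine relative to the variation of the function; for the increasing functions used in the analysis this is not an issue.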

The conditions that guarantee a unique solution are given in the following lemma.

Lemma 1.

Suppose Assumption 1 holds and is a simple solution of the equation Then, has a unique solution in provided

Proof.

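Let $y^*$ be a solution of $F(x) = 0$ in this region, so that $F(y^*) = 0$. Define $J = \int_0^1 F'(x^* + t(y^* - x^*))\,dt$. Then, by Assumption 1, we have, in turn

It follows that $J$ is invertible; hence, by the identity $0 = F(y^*) - F(x^*) = J(y^* - x^*)$, we conclude that $y^* = x^*$. □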

3. Illustrations and Numerical Examples

In this section, we illustrate our results with numerical examples. In the first three examples, we compute the radii of convergence. The next example compares the iterates of methods (2)–(4) with those of the corresponding methods in [16]. We also compute the ACOC for Examples 2 and 4; we do not compute it for Examples 1 and 3, because their iterations converge within three iterations from almost all initial points. Finally, we illustrate the basins of attraction and show the number of iterations as a heatmap.

The values of the parameters for Examples 1–3 are given in Table 1, and the ACOC values for Examples 2 and 4 are given in Table 2.

Table 1.

The parameters of Examples 1–3.

Table 2.

ACOC of Examples 2 and 4.

Example 1.

Let and be defined by

Here, . and . For instance, if we take from Table 1, we obtain the values of , and as , and , respectively.

Example 2.

Let Define function on Ω for by

Here, . We have Furthermore, and Similar to the previous case, we obtain and (see Table 1).

Example 3.

In the next example, we compare the performance of the methods (2)–(4) with that of Noor–Waseem-type methods studied in [16].

Example 4.

The system of equations

has solutions , and The solution is considered for approximating using the methods (2)–(4) and the corresponding methods studied in [16]. We use the initial point in our computation. Table 3, Table 4 and Table 5 provide the obtained results.

Table 3.

Traub’s Method (2) and the Noor–Waseem Method in [16].

Table 4.

Fifth-Order Method (3) and the Noor–Waseem Fifth-order Extension Method in [16].

Table 5.

The Sixth-Order Method (4) and the Noor–Waseem Sixth-order Extension Method in [16].

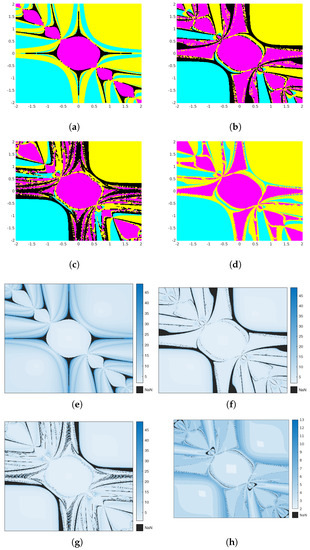

The next example compares the basins of attraction of each of the methods discussed.

Example 5.

Define F on by

with roots and .

Sub-figures (a), (b), (c), and (d) of Figure 1 are generated using equally spaced grid points from the rectangular region as initial points for the iterations. The points that converge to and are colored cyan, magenta, and yellow, respectively. Points that do not converge to any root within 50 iterations are marked black. The stopping criterion used is The algorithm used is the same as in [23]. Sub-figures (e), (f), (g), and (h) of Figure 1 are generated with the same grid for the corresponding methods and show, as a heatmap, the number of iterations each grid point requires to converge; initial points that did not converge within 50 iterations are shown in black. The technique used can be found in Ardelean [24].
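The basin labels and iteration counts behind such figures can be computed in a vectorized way. The sketch below does this for Traub’s method (2) in Python rather than MATLAB. Because the operator of Example 5 is not reproduced here, it uses the hypothetical complex polynomial $p(z) = z^3 - 1$ (three roots, matching the three colors) and a stopping rule based on the distance to a known root with tolerance $10^{-8}$; both are assumptions, and the rendering step (for instance with Matplotlib) is omitted.

```python
import numpy as np


def traub_basins(z0, f, df, roots, max_iter=50, tol=1e-8):
    """Run Traub's method (2) from every complex starting point in z0.

    Returns (labels, iters): labels holds the index of the root reached, or -1
    (plotted black) if no root is within tol after max_iter iterations; iters
    holds the iteration at which each point converged (max_iter if it did not).
    """
    z = np.array(z0, dtype=complex)           # working copy of the starting points
    labels = np.full(z.shape, -1, dtype=int)
    iters = np.full(z.shape, max_iter, dtype=int)
    active = np.ones(z.shape, dtype=bool)     # points still being iterated
    with np.errstate(all="ignore"):           # e.g. division by zero where df vanishes
        for k in range(1, max_iter + 1):
            x = z[active]
            fx, dfx = f(x), df(x)
            y = x - fx / dfx                          # Newton predictor
            z[active] = x - 2 * fx / (dfx + df(y))    # arithmetic-mean corrector
            for i, r in enumerate(roots):
                hit = active & (np.abs(z - r) < tol)
                labels[hit] = i
                iters[hit] = k
                active &= ~hit
            if not active.any():
                break
    return labels, iters


# Hypothetical test problem: p(z) = z^3 - 1 with roots 1, e^{2*pi*i/3}, e^{4*pi*i/3}.
def f(z):
    return z ** 3 - 1


def df(z):
    return 3 * z ** 2


roots = np.exp(2j * np.pi * np.arange(3) / 3)

# 101 x 101 grid on [-2, 2] x [-2, 2]; labels -> basin colors, iters -> heatmap.
t = np.linspace(-2.0, 2.0, 101)
Z0 = t[None, :] + 1j * t[:, None]
labels, iters = traub_basins(Z0, f, df, roots)
```

Points that have converged are frozen, so the count in `iters` is the first iteration at which each starting point reaches a root, which is what the heatmap panels display.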

We used a PC with an Intel Core i7 processor running Ubuntu 22.04.1 LTS. The programs were written and executed in MATLAB R2022b.

4. Conclusions

In this paper, we studied Traub’s method (also known as the Arithmetic-Mean Newton’s Method and the Weerakoon and Fernando method) and two of its extensions using assumptions on the derivatives of the operator up to order two. The theoretical parameters were computed for numerical examples, and the dynamics of the methods were studied through their basins of attraction.

Author Contributions

Conceptualization, M.S.K., K.R., S.G., J.P. and I.K.A.; methodology, M.S.K., K.R., S.G., J.P. and I.K.A.; software, M.S.K., K.R., S.G., J.P. and I.K.A.; validation, M.S.K., K.R., S.G., J.P. and I.K.A.; formal analysis, M.S.K., K.R., S.G., J.P. and I.K.A.; investigation, M.S.K., K.R., S.G., J.P. and I.K.A.; resources, M.S.K., K.R., S.G., J.P. and I.K.A.; data curation, M.S.K., K.R., S.G., J.P. and I.K.A.; writing—original draft preparation, M.S.K., K.R., S.G., J.P. and I.K.A.; writing—review and editing, M.S.K., K.R., S.G., J.P. and I.K.A.; visualization, M.S.K., K.R., S.G., J.P. and I.K.A.; supervision, M.S.K., K.R., S.G., J.P. and I.K.A.; project administration, M.S.K., K.R., S.G., J.P. and I.K.A.; funding acquisition, M.S.K., K.R., S.G., J.P. and I.K.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

Santhosh George and Jidesh P are supported by SERB Project, Grant No. CRG/2021/004776. Krishnendu R thanks UGC, Govt of India, for the support. Muhammed Saeed K would like to thank National Institute of Technology Karnataka, India, for the support.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Magrenán, A.A.; Argyros, I.K. A Contemporary Study of Iterative Methods: Convergence, Dynamics and Applications; Academic Press: London, UK, 2018.

2. Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Computer Science and Applied Mathematics; Academic Press: New York, NY, USA, 1970; ISBN 978-0-12-528550-6.

3. Behl, R.; Arora, H. CMMSE: A novel scheme having seventh-order convergence for nonlinear systems. J. Comput. Appl. Math. 2022, 404, 113301.

4. Shakhno, S.M. On an iterative algorithm with superquadratic convergence for solving nonlinear operator equations. J. Comput. Appl. Math. 2009, 231, 222–235.

5. Traub, J.F. Iterative Methods for the Solution of Equations; Chelsea Publishing: New York, NY, USA, 1982; ISBN 978-0-8284-0312-2.

6. Petković, M. Multipoint Methods for Solving Nonlinear Equations; Elsevier/Academic Press: Amsterdam, The Netherlands, 2013.

7. Özban, A.Y. Some new variants of Newton’s method. Appl. Math. Lett. 2004, 17, 677–682.

8. Petković, L.D.; Petković, M.S. A note on some recent methods for solving nonlinear equations. Appl. Math. Comput. 2007, 185, 368–374.

9. Weerakoon, S.; Fernando, T.G.I. A variant of Newton’s method with accelerated third-order convergence. Appl. Math. Lett. 2000, 13, 87–93.

10. Frontini, M.; Sormani, E. Some variant of Newton’s method with third-order convergence. Appl. Math. Comput. 2003, 140, 419–426.

11. Cordero, A.; Hueso, J.L.; Martínez, E.; Torregrosa, J.R. Increasing the convergence order of an iterative method for nonlinear systems. Appl. Math. Lett. 2012, 25, 2369–2374.

12. Sharma, D.; Parhi, S.K. On the local convergence of modified Weerakoon’s method in Banach spaces. J. Anal. 2020, 28, 867–877.

13. Argyros, I.K.; Cho, Y.J.; George, S. Local convergence for some third-order iterative methods under weak conditions. J. Korean Math. Soc. 2016, 53, 781–793.

14. Nishani, H.P.S.; Weerakoon, S.; Fernando, T.G.I.; Liyanage, M. Weerakoon-Fernando Method with accelerated third-order convergence for systems of nonlinear equations. IJMMNO 2018, 8, 287.

15. Parhi, S.K.; Gupta, D.K. A sixth order method for nonlinear equations. Appl. Math. Comput. 2008, 203, 50–55.

16. George, S.; Sadan, A.R.; Jidesh, P.; Argyros, I.K. On the Order of Convergence of Noor-Waseem Method. Mathematics 2022, 10, 4544.

17. Beyer, W.A.; Ebanks, B.R.; Qualls, C.R. Convergence rates and convergence-order profiles for sequences. Acta Appl. Math. 1990, 20, 267–284.

18. Petković, M.S.; Neta, B.; Petković, L.D.; Džunić, J. Multipoint methods for solving nonlinear equations: A survey. Appl. Math. Comput. 2014, 226, 635–660.

19. Ezquerro, J.A.; Hernández, M.A. On the R-order of convergence of Newton’s method under mild differentiability conditions. J. Comput. Appl. Math. 2006, 197, 53–61.

20. Potra, F.A.; Pták, V. Nondiscrete Induction and Iterative Processes; Pitman: New York, NY, USA, 1984.

21. Cordero, A.; Torregrosa, J.R. Variants of Newton’s Method using fifth-order quadrature formulas. Appl. Math. Comput. 2007, 190, 686–698.

22. Argyros, I.K. The Theory and Applications of Iteration Methods, 2nd ed.; Engineering Series; CRC Press: Boca Raton, FL, USA; Taylor and Francis Group: Abingdon, UK, 2022.

23. Chicharro, F.I.; Cordero, A.; Torregrosa, J.R. Drawing Dynamical and Parameters Planes of Iterative Families and Methods. Sci. World J. 2013, 2013, e780153.

24. Ardelean, G. A comparison between iterative methods by using the basins of attraction. Appl. Math. Comput. 2011, 218, 88–95.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).