MS-PreTE: A Multi-Scale Pre-Training Encoder for Mobile Encrypted Traffic Classification

Abstract

1. Introduction

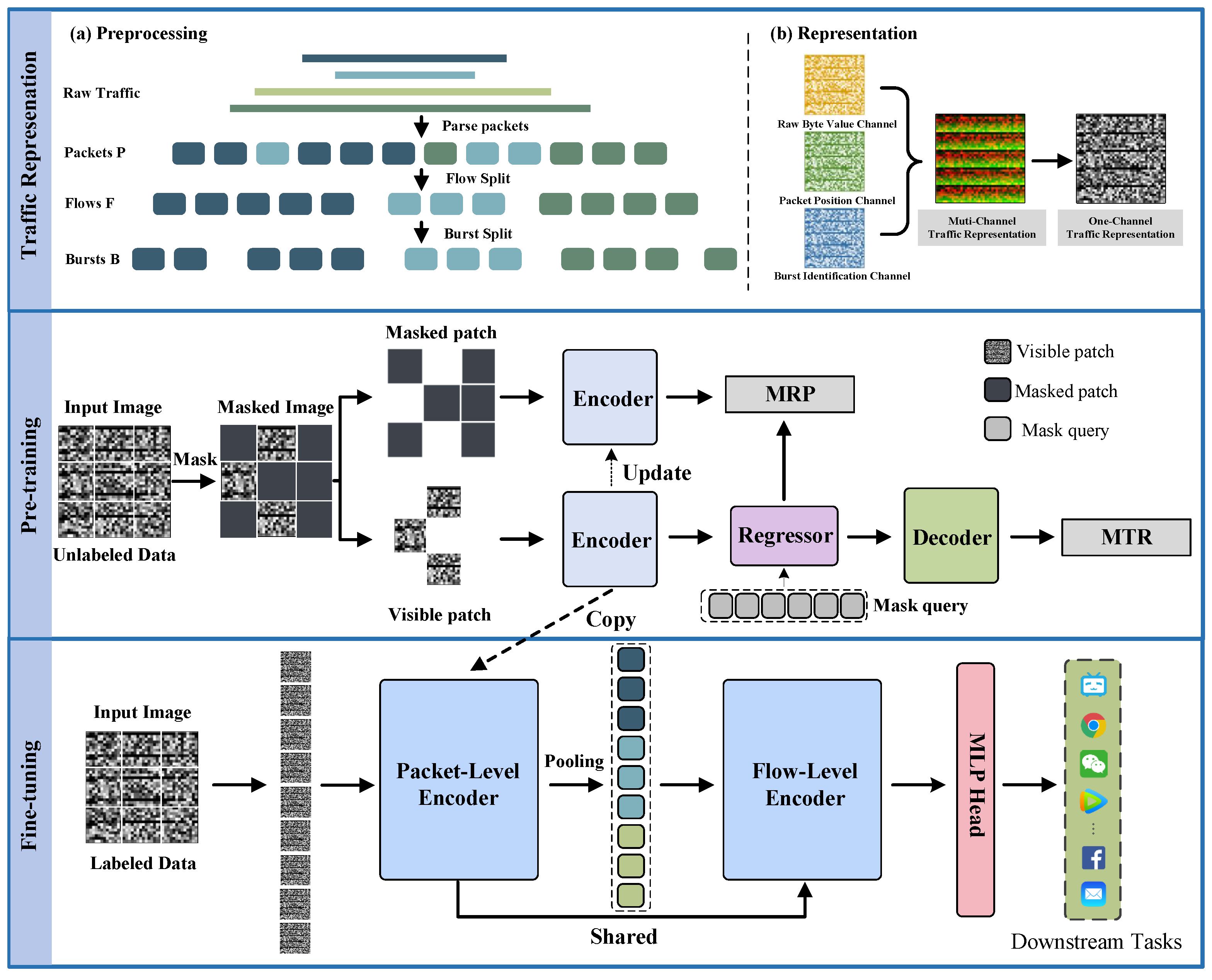

- To distinguish mobile traffic more effectively, we propose a novel multi-level representation mechanism combined with a focal-attention mechanism. Specifically, the multi-level representation constructs three distinct information channels to preserve critical traffic features across multiple dimensions. The focal-attention mechanism, in turn, adds an amplitude modulation strategy to the original attention architecture, which enables the model to focus more precisely on key features during class probability prediction.

- We propose MS-PreTE, a two-phase learning framework for mobile traffic classification. In the first phase, MS-PreTE employs self-supervised pre-training on large-scale unlabeled encrypted traffic to extract universal representations. The second phase incorporates task-specific fine-tuning with focal-attention mechanisms to capture spatiotemporal patterns, which enables accurate mobile traffic classification.

- We conduct extensive experimental evaluations of MS-PreTE. Specifically, we compare our method with existing state-of-the-art approaches on three mobile application datasets and four real-world traffic datasets, and perform ablation studies to validate the effectiveness of MS-PreTE. Experimental results demonstrate that MS-PreTE achieves state-of-the-art performance on the three mobile application datasets, boosting the F1 score to 99.34% on Cross-Platform (iOS) (up by 2.1%), to 98.61% on Cross-Platform (Android) (up by 1.6%), and to 87.70% on NUDT-Mobile-Traffic (up by 2.47%). It also performs well on other general traffic classification tasks.

2. Related Works

2.1. Machine Learning Based Traffic Classification Methods

2.2. Deep Learning Based Traffic Classification Methods

2.3. Pre-Training Based Traffic Classification Methods

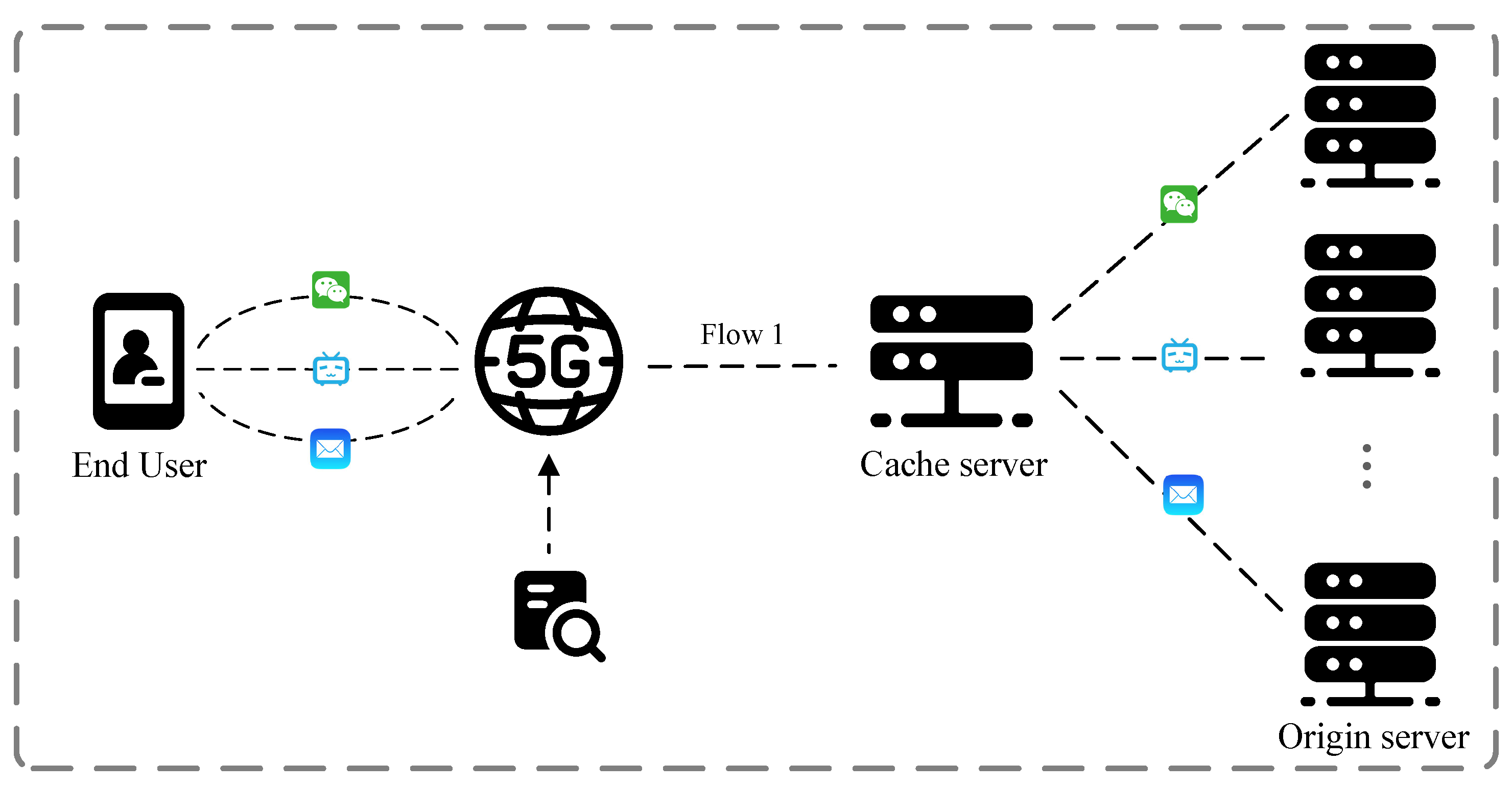

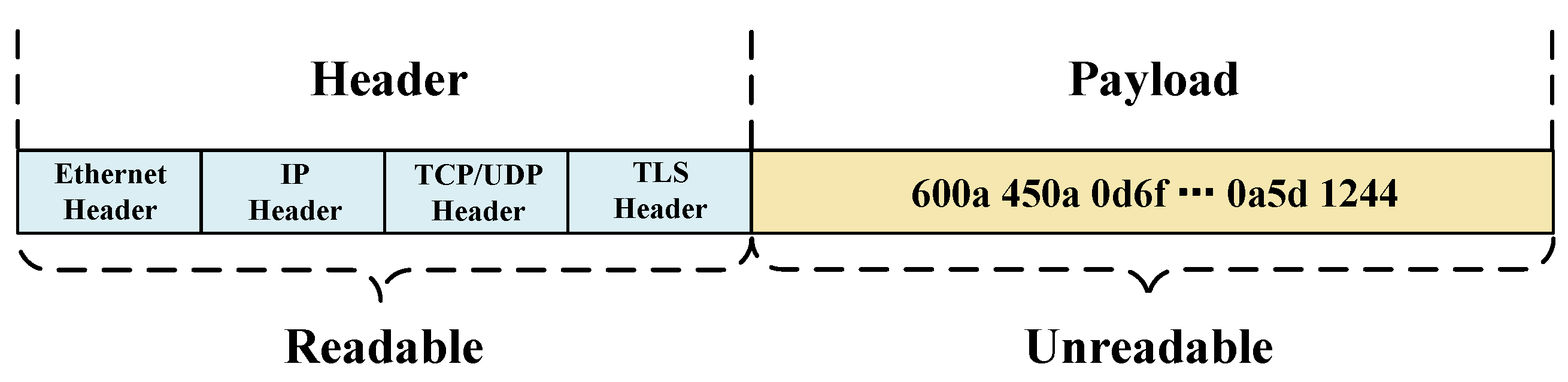

3. Observation and Motivation

4. MS-PreTE

4.1. Preprocessing

4.2. Flow Representation Model

- The raw byte value channel stores the raw value of each byte in a packet. It preserves the complete content of the packet and provides fine-grained data to support various complex analysis tasks.

- The packet position channel records the position of each byte within its packet, which is essential for understanding the packet structure and the relationships among its internal bytes.

- The burst identification channel preserves the dynamic characteristics of traffic flows, such as periodic transmission patterns in voice communications, by capturing temporal relationships and burst traffic features within the data stream. The temporal information of packets within burst sequences is encoded as pixel values: for any packet belonging to the k-th burst, all corresponding positions in the burst identification channel are labeled with k.
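The three channels described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `flow_to_channels`, the input format (a list of packet payloads plus a list of directions), the fixed sizes `n_packets` and `bytes_per_packet`, zero padding, 1-based positions, and the rule that a new burst starts whenever the packet direction changes are all assumptions made for illustration.

```python
import numpy as np


def flow_to_channels(packets, directions, n_packets=5, bytes_per_packet=64):
    """Build a (3, n_packets, bytes_per_packet) array for one flow.

    packets:    list of bytes objects, one per packet (assumed input format)
    directions: list of +1 / -1 values, one per packet (assumed input format)
    """
    raw = np.zeros((n_packets, bytes_per_packet), dtype=np.int64)
    pos = np.zeros_like(raw)
    burst = np.zeros_like(raw)

    burst_id = 0
    prev_dir = None
    for i, (payload, direction) in enumerate(zip(packets[:n_packets], directions)):
        # Assumed burst rule: a direction change opens the next burst.
        if direction != prev_dir:
            burst_id += 1
            prev_dir = direction

        # Truncate long packets; shorter ones stay zero-padded.
        data = np.array(list(payload[:bytes_per_packet]), dtype=np.int64)
        n = len(data)
        raw[i, :n] = data                 # raw byte value channel
        pos[i, :n] = np.arange(1, n + 1)  # 1-based byte position inside its packet
        burst[i, :n] = burst_id           # every byte of the packet is labeled with k

    return np.stack([raw, pos, burst])


if __name__ == "__main__":
    demo = flow_to_channels(
        [b"\x01\x02\x03", b"\x04\x05", b"\x06"],
        [1, 1, -1],
        n_packets=3,
        bytes_per_packet=4,
    )
    print(demo.shape)
```

Keeping padding at zero in all three channels lets a downstream model tell real bytes from filler, since valid positions and burst labels start at 1 in this sketch.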

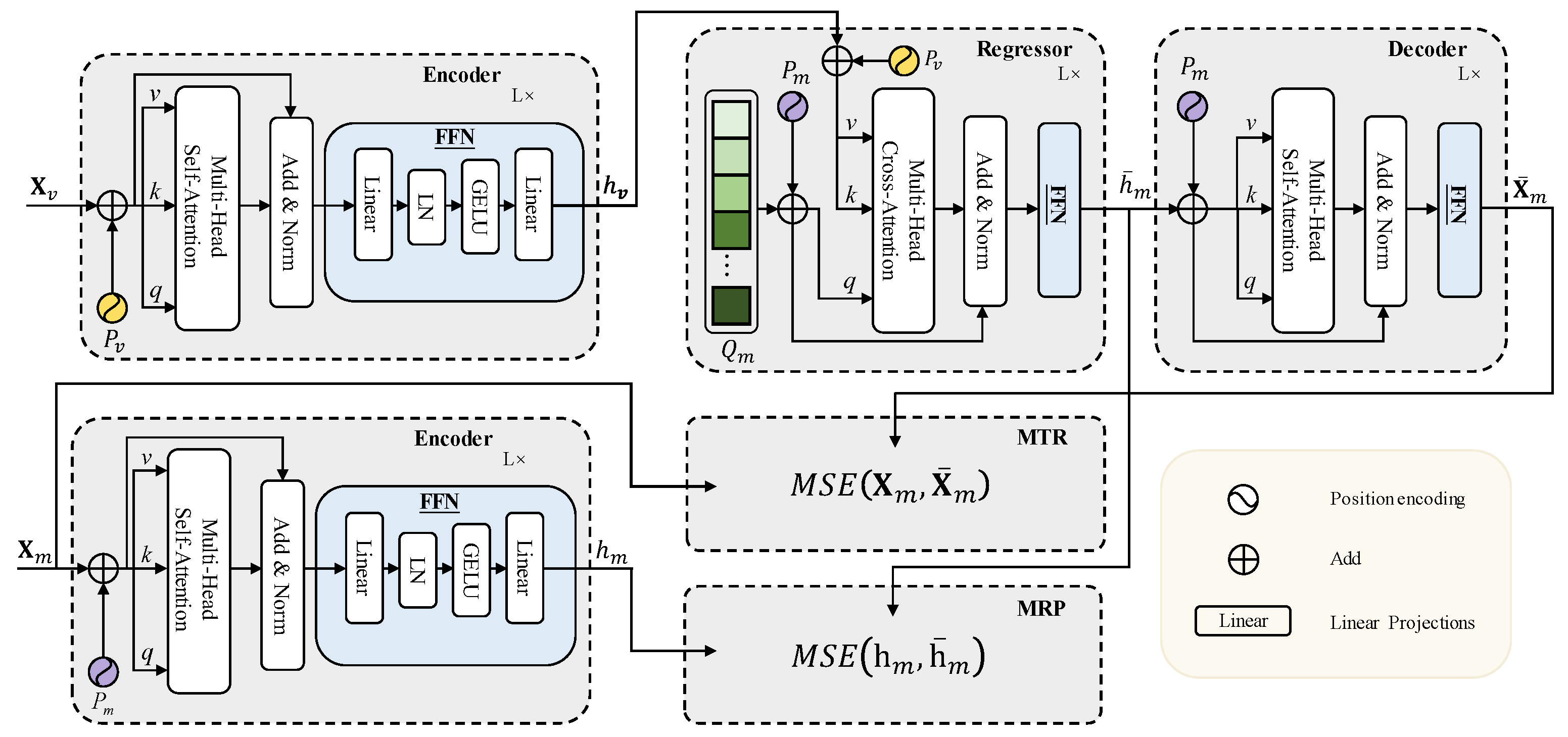

4.3. Pre-Training Phase

4.4. Fine-Tuning Phase

5. Experiments

5.1. Experiment Setup

5.1.1. Datasets

Encrypted Traffic Classification on the General Application (ETCGA) Task

Encrypted Traffic Classification on the Malware Application (ETCMA) Task

Encrypted Traffic Classification on VPN (ETCV) Task

Encrypted Traffic Classification on Tor (ETCT) Task

Traffic Classification on IoT (TCIoT) Task

5.1.2. Implementation Details

5.1.3. Evaluation Metrics
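The result tables report four columns, AC, PR, RC, and F1, which presumably stand for accuracy, precision, recall, and F1 score. The sketch below shows one common way to compute them for a multi-class classifier. The function name `classification_metrics` and the choice between macro and support-weighted averaging are assumptions; this excerpt does not state which averaging scheme the paper uses.

```python
import numpy as np


def classification_metrics(y_true, y_pred, weighted=False):
    """Return AC, PR, RC, F1 averaged over classes.

    weighted=False gives macro averages; weighted=True weights each class
    by its number of true samples (an assumed option, not from the paper).
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    classes = np.unique(np.concatenate([y_true, y_pred]))

    precisions, recalls, f1s, supports = [], [], [], []
    for c in classes:
        tp = int(np.sum((y_pred == c) & (y_true == c)))
        fp = int(np.sum((y_pred == c) & (y_true != c)))
        fn = int(np.sum((y_pred != c) & (y_true == c)))
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f)
        supports.append(tp + fn)  # number of true samples of class c

    weights = np.array(supports, dtype=float) if weighted else np.ones(len(classes))
    weights = weights / weights.sum()

    return {
        "AC": float(np.mean(y_true == y_pred)),
        "PR": float(np.dot(weights, precisions)),
        "RC": float(np.dot(weights, recalls)),
        "F1": float(np.dot(weights, f1s)),
    }


if __name__ == "__main__":
    print(classification_metrics([0, 0, 0, 1], [0, 0, 1, 1]))
    print(classification_metrics([0, 0, 0, 1], [0, 0, 1, 1], weighted=True))
```

With support-weighted averaging, recall is always equal to accuracy, so the two schemes can give visibly different RC values on imbalanced datasets.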

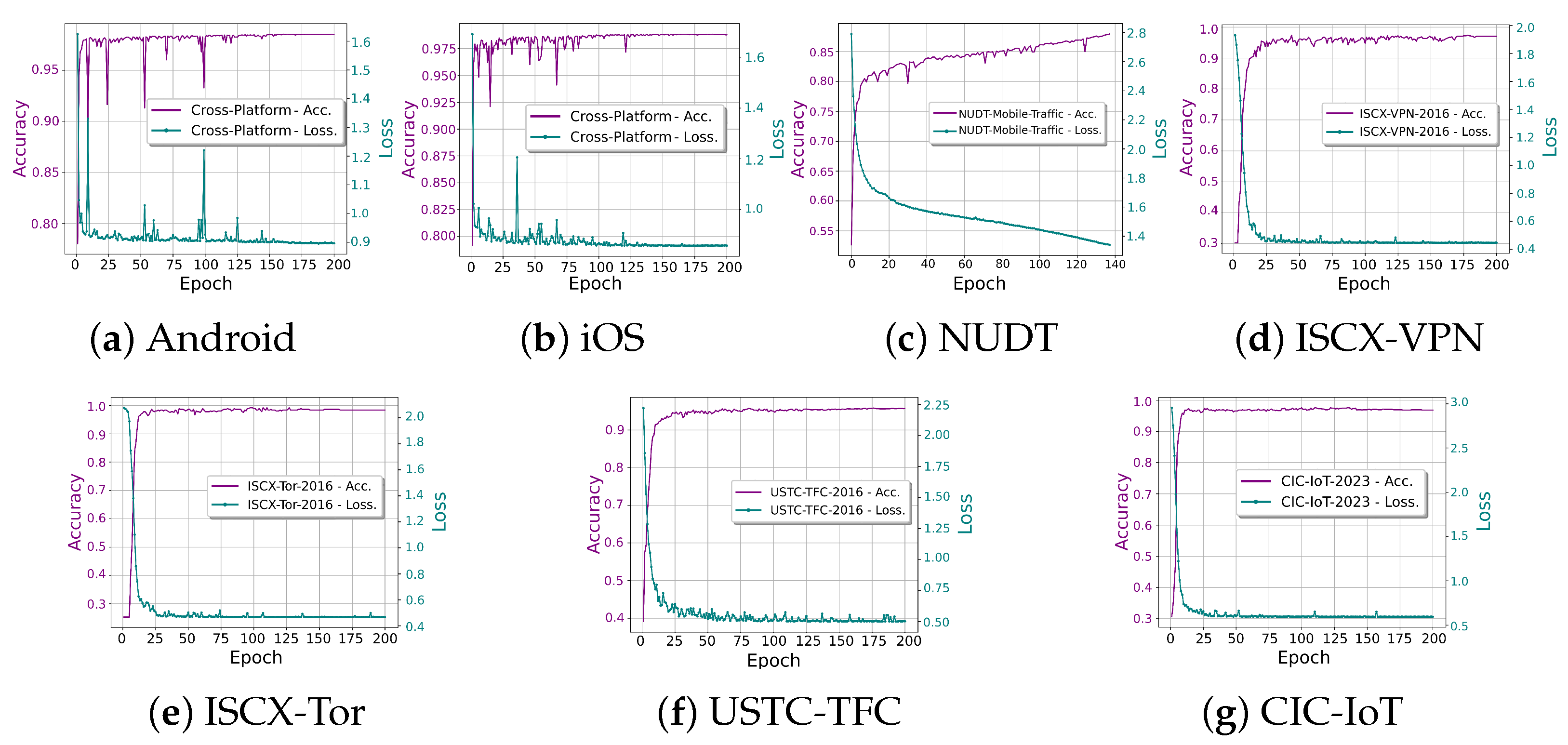

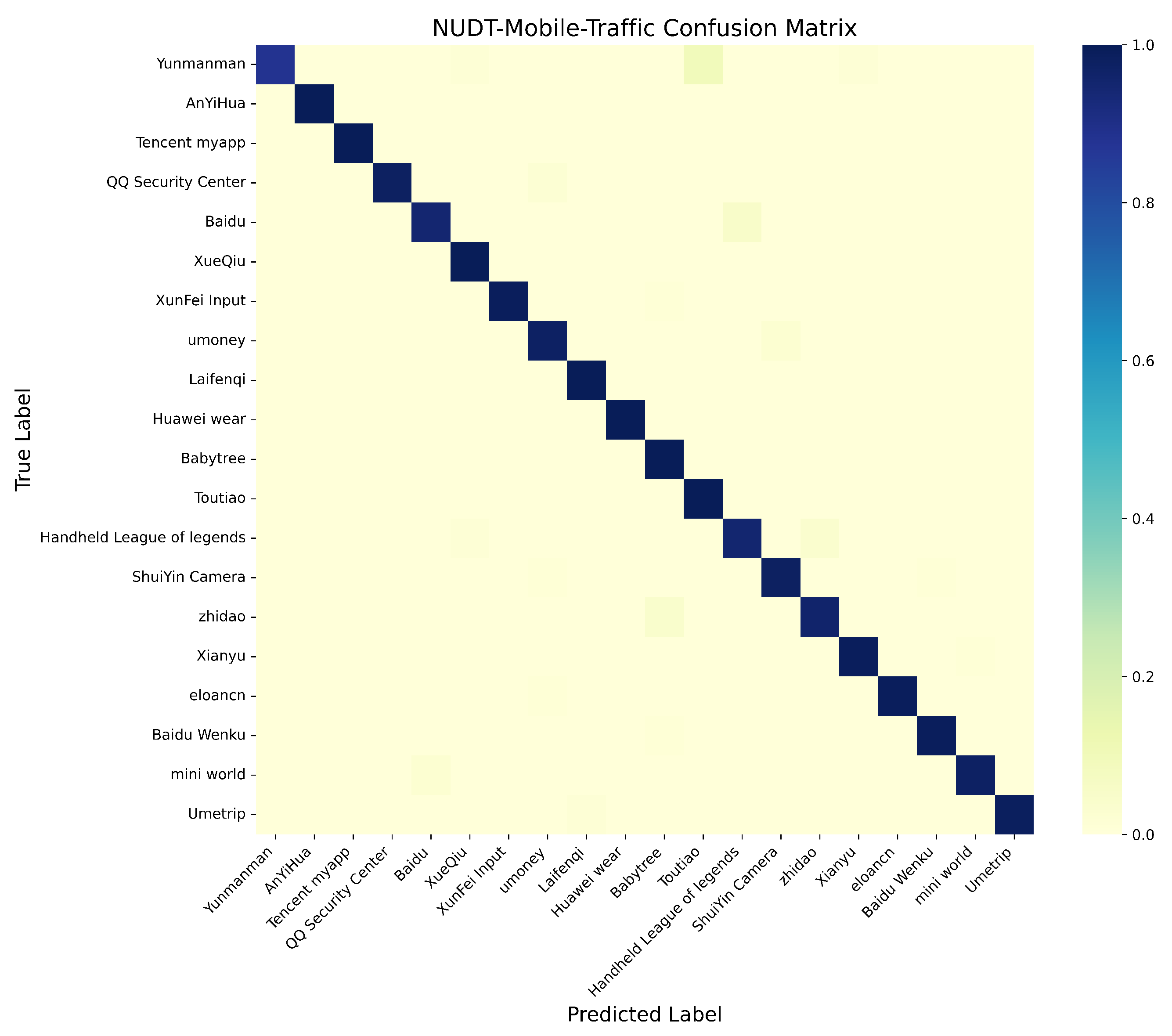

5.2. Classification Performance

5.3. Comparison with State-of-the-Art Methods

5.4. Ablation Study

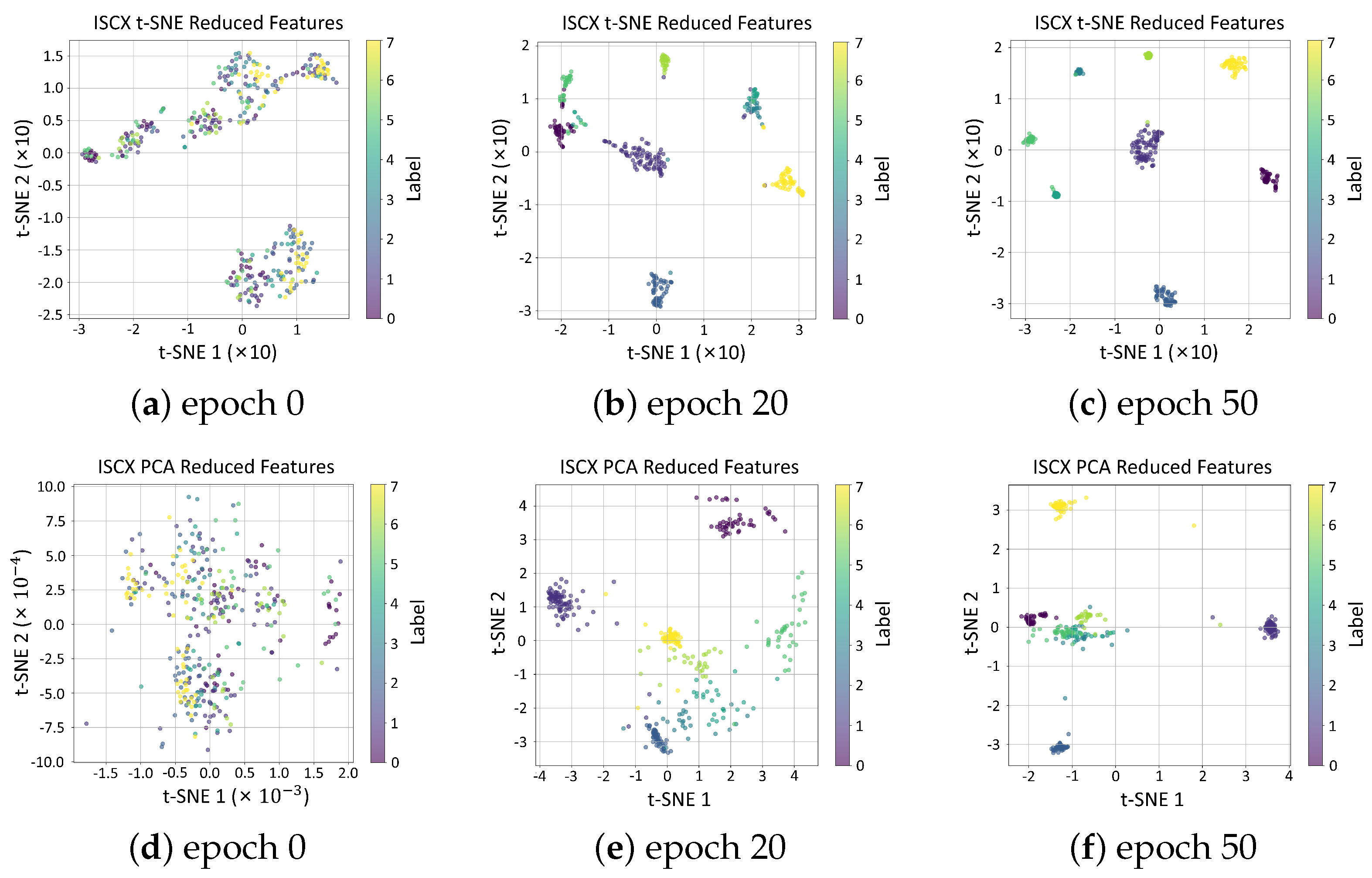

5.5. t-SNE Visualization on Latent Feature Representations

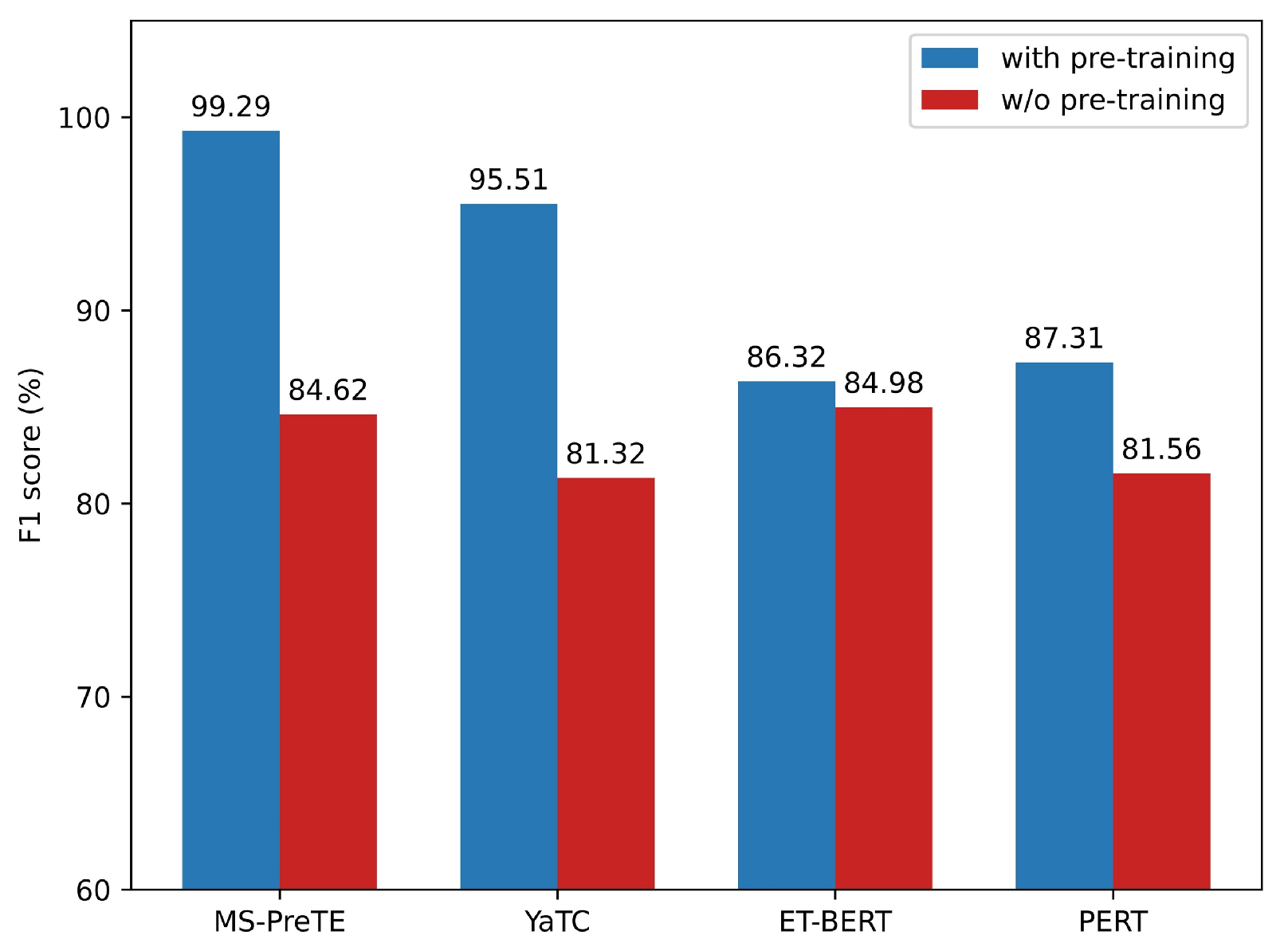

5.6. Transfer Learning Analysis

5.7. Resource Consumption Analysis

6. Discussion

- Model Lightweighting

- Interpretability

- Adaptation Capability for Unknown Traffic Patterns

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Papadogiannaki, E.; Ioannidis, S. A Survey on Encrypted Network Traffic Analysis Applications, Techniques, and Countermeasures. ACM Comput. Surv. 2021, 54, 1–35.

2. Chen, Y.; You, W.; Lee, Y.; Chen, K.; Wang, X. Mass Discovery of Android Traffic Imprints through Instantiated Partial Execution. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 815–828.

3. Dai, S.; Tongaonkar, A.; Wang, X.; Nucci, A.; Song, D. NetworkProfiler: Towards Automatic Fingerprinting of Android Apps. In Proceedings of the 2013 Proceedings IEEE INFOCOM, Turin, Italy, 14–19 April 2013; pp. 809–817.

4. Chen, Z.; Yu, B.; Zhang, Y.; Zhang, J.; Xu, J. Automatic Mobile Application Traffic Identification by Convolutional Neural Networks. In Proceedings of the 2016 IEEE Trustcom/BigDataSE/ISPA, Tianjin, China, 23–26 August 2016; pp. 301–307.

5. Choi, Y.; Chung, J.Y.; Park, B.; Hong, J. Automated Classifier Generation for Application-Level Mobile Traffic Identification. In Proceedings of the 2012 IEEE Network Operations and Management Symposium, Maui, HI, USA, 16–20 April 2012; pp. 1075–1081.

6. Liu, C.; He, L.; Xiong, G.; Cao, Z.; Li, Z. FS-Net: A Flow Sequence Network for Encrypted Traffic Classification. In Proceedings of the IEEE INFOCOM 2019—IEEE Conference on Computer Communications, Paris, France, 29 April–2 May 2019; pp. 1171–1179.

7. Sirinam, P.; Imani, M.; Juarez, M.; Wright, M. Deep Fingerprinting: Undermining Website Fingerprinting Defenses with Deep Learning. In Proceedings of the 2018 ACM SIGSAC Conference on Computer and Communications Security, Toronto, Canada, 15–19 October 2018; pp. 1928–1943.

8. Lin, K.; Xu, X.; Gao, H. TSCRNN: A Novel Classification Scheme of Encrypted Traffic Based on Flow Spatiotemporal Features for Efficient Management of IIoT. Comput. Netw. 2021, 190, 107974.

9. Wang, W.; Zhu, M.; Wang, J.; Zeng, X.; Yang, Z. End-to-End Encrypted Traffic Classification with One-Dimensional Convolution Neural Networks. In Proceedings of the 2017 IEEE International Conference on Intelligence and Security Informatics (ISI), Beijing, China, 22–24 July 2017; pp. 43–48.

10. Maniwa, R.; Ichijo, N.; Nakahara, Y.; Matsushima, T. Boosting-Based Sequential Meta-Tree Ensemble Construction for Improved Decision Trees. arXiv 2024, arXiv:2402.06386.

11. Pu, F.; Wang, Y.; Ye, T.; Jin, Y.; Liu, Q.; Zou, Q. Detecting Unknown Encrypted Malicious Traffic in Real Time via Flow Interaction Graph Analysis. In Proceedings of the 2023 Network and Distributed System Security Symposium (NDSS), San Diego, CA, USA, 27 February–3 March 2023.

12. Lotfollahi, M.; Siavoshani, M.J.; Hossein Zade, R.S.; Saberian, M. Deep Packet: A Novel Approach for Encrypted Traffic Classification Using Deep Learning. Soft Comput. 2020, 24, 1999–2012.

13. Rezaei, S.; Liu, X. Deep Learning for Encrypted Traffic Classification: An Overview. IEEE Commun. Mag. 2019, 57, 76–81.

14. Wang, Q.; Qian, C.; Li, X.; Zhao, W.; Xu, K. Lens: A Foundation Model for Network Traffic in Cybersecurity. arXiv 2024, arXiv:2404.12345.

15. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image Is Worth 16×16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

16. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

17. Lan, Z.; Chen, M.; Goodman, S.; Gimpel, K.; Sharma, P.; Soricut, R. ALBERT: A Lite BERT for Self-Supervised Learning of Language Representations. arXiv 2019, arXiv:1909.11942.

18. Liu, T.; Ma, X.; Liu, L.; Xu, W.; Zhu, Z.; Wang, Z. LAMBERT: Leveraging Attention Mechanisms to Improve the BERT Fine-Tuning Model for Encrypted Traffic Classification. Mathematics 2024, 12, 1624.

19. He, H.Y.; Yang, Z.G.; Chen, X.N. PERT: Payload Encoding Representation from Transformer for Encrypted Traffic Classification. In Proceedings of the 2020 ITU Kaleidoscope: Industry-Driven Digital Transformation (ITU K), Ha Noi, Vietnam, 7–11 December 2020; pp. 1–8.

20. Lin, X.; Xiong, G.; Gou, G.; Li, Z.; Shi, J.; Yu, J. ET-BERT: A Contextualized Datagram Representation with Pre-Training Transformers for Encrypted Traffic Classification. In Proceedings of the WWW’22: The ACM Web Conference 2022, Lyon, France, 25–29 April 2022; pp. 633–642.

21. Zhao, R.; Zhan, M.; Deng, X.; Wang, Y.; Gui, G.; Xue, Z. Yet Another Traffic Classifier: A Masked Autoencoder Based Traffic Transformer with Multi-Level Flow Representation. Proc. AAAI Conf. Artif. Intell. 2023, 37, 5420–5427.

22. Chen, X.; Ding, M.; Wang, X.; Xin, Y.; Mo, S.; Wang, Y.; Han, S.; Luo, P.; Zeng, G.; Wang, J. Context Autoencoder for Self-Supervised Representation Learning. Int. J. Comput. Vis. 2024, 132, 208–223.

23. Zhao, Y.; Medvidovic, N. A Microservice Architecture for Online Mobile App Optimization. In Proceedings of the 2019 IEEE/ACM 6th International Conference on Mobile Software Engineering and Systems (MOBILESoft), Montreal, QC, Canada, 25 May 2019; pp. 45–49.

24. Aceto, G.; Ciuonzo, D.; Montieri, A.; Pescapè, A. Mobile Encrypted Traffic Classification Using Deep Learning: Experimental Evaluation, Lessons Learned, and Challenges. IEEE Trans. Netw. Serv. Manag. 2019, 16, 445–458.

25. Van Ede, T.; Bortolameotti, R.; Continella, A.; Ren, J.; Dubois, D.; Lindorfer, M.; Choffnes, D.; Steen, M.; Peter, A. Flowprint: Semi-Supervised Mobile-App Fingerprinting on Encrypted Network Traffic. Proc. NDSS Symp. 2020, 27, 1909020.

26. Shen, M.; Liu, Y.; Zhu, L.; Xu, K.; Du, X.; Guizani, N. Optimizing Feature Selection for Efficient Encrypted Traffic Classification: A Systematic Approach. IEEE Netw. 2020, 34, 20–27.

27. Taylor, V.F.; Spolaor, R.; Conti, M.; Martinovic, I. Robust Smartphone App Identification via Encrypted Network Traffic Analysis. IEEE Trans. Inf. Forensics Secur. 2017, 13, 63–78.

28. Hayes, J.; Danezis, G. k-Fingerprinting: A Robust Scalable Website Fingerprinting Technique. In Proceedings of the 25th USENIX Security Symposium, Austin, TX, USA, 10–12 August 2016; pp. 1357–1374.

29. Taylor, V.F.; Spolaor, R.; Conti, M.; Berthome, P. Appscanner: Automatic Fingerprinting of Smartphone Apps from Encrypted Network Traffic. In Proceedings of the IEEE European Symposium on Security and Privacy (EuroS&P), Saarbruecken, Germany, 21–24 March 2016; pp. 439–454.

30. Al-Naami, K.; Chandra, S.; Mustafa, A.; Khan, L.; Lin, Z.; Hamlen, K.; Thuraisingham, B. Adaptive Encrypted Traffic Fingerprinting with Bi-Directional Dependence. In Proceedings of the 2016 Annual Computer Security Applications Conference, Los Angeles, CA, USA, 5–8 December 2016; pp. 177–188.

31. He, Y.; Li, W. Image-Based Encrypted Traffic Classification with Convolutional Neural Networks. In Proceedings of the 2020 IEEE Fifth International Conference on Data Science in Cyberspace (DSC), Hong Kong, China, 27–30 July 2020; pp. 271–278.

32. Okonkwo, Z.; Foo, E.; Li, Q.; Hou, Z. A CNN Based Encrypted Network Traffic Classifier. In Proceedings of the 2022 Australasian Computer Science Week, Brisbane, Australia, 14–18 February 2022; pp. 74–83.

33. Sengupta, S.; Ganguly, N.; De, P.; Chakraborty, S. Exploiting Diversity in Android TLS Implementations for Mobile App Traffic Classification. In Proceedings of the WWW ’19: The World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 1657–1668.

34. Canard, S.; Diop, A.; Kheir, N.; Paindavoine, M.; Sabt, M. BlindIDS: Market-Compliant and Privacy-Friendly Intrusion Detection System over Encrypted Traffic. In Proceedings of the 2017 ACM on Asia Conference on Computer and Communications Security, Abu Dhabi, United Arab Emirates, 2–6 April 2017; pp. 561–574.

35. Wei, H.; Xie, R.; Cheng, H.; Feng, L.; Li, Y. Mitigating Neural Network Overconfidence with Logit Normalization. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 23631–23644.

36. Zhao, S.; Chen, S.; Wang, F.; Wei, Z.; Zhong, J.; Liang, J. A Large-Scale Mobile Traffic Dataset for Mobile Application Identification. Comput. J. 2024, 67, 1501–1513.

37. Wang, W.; Zhu, M.; Zeng, X.; Ye, X.; Sheng, Y. Malware Traffic Classification Using Convolutional Neural Network for Representation Learning. In Proceedings of the 2017 International Conference on Information Networking (ICOIN), Da Nang, Vietnam, 11–13 January 2017; pp. 712–717.

38. Gil, G.D.; Lashkari, A.H.; Mamun, M.; Ghorbani, A. Characterization of Encrypted and VPN Traffic Using Time-Related Features. In Proceedings of the 2nd International Conference on Information Systems Security and Privacy (ICISSP), Rome, Italy, 19–21 February 2016; pp. 407–414.

39. Lashkari, A.H.; Gil, G.D.; Mamun, M.; Ghorbani, A. Characterization of Tor Traffic Using Time Based Features. In Proceedings of the 3rd International Conference on Information Systems Security and Privacy, Lisbon, Portugal, 22–24 February 2017; pp. 253–262.

40. Neto, E.C.P.; Dadkhah, S.; Ferreira, R.; Zohourian, A.; Lu, R.; Ghorbani, A. CICIoT2023: A Real-Time Dataset and Benchmark for Large-Scale Attacks in IoT Environment. Sensors 2023, 23, 5941.

41. Xie, G.; Li, Q.; Jiang, Y.; Dai, T.; Shen, G.; Li, R.; Sinnott, R.; Xia, S. SAM: Self-Attention Based Deep Learning Method for Online Traffic Classification. In Proceedings of the Workshop on Network Meets AI & ML, Virtual, 10–14 August 2020; pp. 14–20.

42. Shen, M.; Zhang, J.; Zhu, L.; Xu, K.; Du, X. Accurate Decentralized Application Identification via Encrypted Traffic Analysis Using Graph Neural Networks. IEEE Trans. Inf. Forensics Secur. 2021, 16, 2367–2380.

43. Zhang, H.; Yu, L.; Xiao, X.; Li, Q.; Mercaldo, F.; Luo, X.; Liu, Q. TFE-GNN: A Temporal Fusion Encoder Using Graph Neural Networks for Fine-Grained Encrypted Traffic Classification. In Proceedings of the ACM Web Conference 2023, Austin, TX, USA, 30 April–4 May 2023; pp. 2066–2075.

44. Panchenko, A.; Lanze, F.; Pennekamp, J.; Wehrle, K.; Engel, T. Website Fingerprinting at Internet Scale. Proc. NDSS 2016, 1, 23477.

45. Wang, X.; Chen, S.; Su, J. App-Net: A Hybrid Neural Network for Encrypted Mobile Traffic Classification. In Proceedings of the IEEE INFOCOM 2020—IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Toronto, ON, Canada, 6–9 July 2020; p. 23477.

46. Schuster, R.; Shmatikov, V.; Tromer, E. Beauty and the Burst: Remote Identification of Encrypted Video Streams. In Proceedings of the 26th USENIX Security Symposium, Vancouver, BC, Canada, 16–18 August 2017; pp. 1357–1374.

47. Yang, L.; Li, Y.; Song, H.; Lv, Y.; Liu, J. Flow-Based Encrypted Network Traffic Classification with Graph Neural Networks. IEEE Trans. Netw. Serv. Manag. 2023, 20, 1504–1516.

| Task | Dataset | Labels | Flows | Packets |
|---|---|---|---|---|
| ETCGA | Cross-Platform (Android) [25] | 215 | 0.68 M | 3.4 M |
| ETCGA | Cross-Platform (iOS) [25] | 196 | 0.50 M | 2.5 M |
| ETCGA | NUDT-Mobile-Traffic [36] | 350 | 2.88 M | 14.4 M |
| ETCMA | USTC-TFC-2016 [37] | 20 | 4.8 K | 24 K |
| ETCV | ISCX-VPN-2016 [38] | 7 | 2.8 K | 14 K |
| ETCT | ISCX-Tor-2016 [39] | 8 | 3.6 K | 18 K |
| TCIoT | CIC-IoT-2023 [40] | 33 | 3.5 K | 17.5 K |

| Method | Cross-Platform (iOS) AC | Cross-Platform (iOS) PR | Cross-Platform (iOS) RC | Cross-Platform (iOS) F1 | Cross-Platform (Android) AC | Cross-Platform (Android) PR | Cross-Platform (Android) RC | Cross-Platform (Android) F1 | NUDT-Mobile-Traffic AC | NUDT-Mobile-Traffic PR | NUDT-Mobile-Traffic RC | NUDT-Mobile-Traffic F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AppScanner | 0.4219 | 0.2991 | 0.2628 | 0.2638 | 0.4365 | 0.4847 | 0.4701 | 0.4767 | 0.6426 | 0.6446 | 0.6118 | 0.6202 |
| CUMUL | 0.3077 | 0.1774 | 0.1810 | 0.1675 | 0.3098 | 0.3783 | 0.3818 | 0.3307 | 0.5780 | 0.5528 | 0.5437 | 0.5354 |
| BIND | 0.5281 | 0.4007 | 0.3712 | 0.3738 | 0.6076 | 0.6040 | 0.5495 | 0.5535 | 0.7587 | 0.7650 | 0.7365 | 0.7480 |
| Beauty | 0.1878 | 0.0606 | 0.0601 | 0.0509 | 0.2794 | 0.2799 | 0.2172 | 0.1834 | 0.1806 | 0.1827 | 0.1233 | 0.1121 |
| FS-Net | 0.4728 | 0.3710 | 0.3369 | 0.3359 | 0.4763 | 0.4635 | 0.4196 | 0.4291 | 0.6276 | 0.6154 | 0.5821 | 0.5847 |
| AppNet | 0.3971 | 0.3399 | 0.2774 | 0.2855 | 0.4050 | 0.3600 | 0.3350 | 0.3263 | 0.6265 | 0.6457 | 0.6038 | 0.6165 |
| DF | 0.3390 | 0.2065 | 0.1920 | 0.1856 | 0.3337 | 0.2477 | 0.2682 | 0.2671 | 0.5633 | 0.6024 | 0.5260 | 0.5402 |
| SAM | 0.9572 | 0.9805 | 0.9384 | 0.9562 | 0.9048 | 0.8899 | 0.9129 | 0.8999 | 0.1133 | 0.1895 | 0.1085 | 0.0969 |
| GraphDApp | 0.2541 | 0.1925 | 0.1530 | 0.1576 | 0.2762 | 0.2113 | 0.1871 | 0.1781 | 0.5815 | 0.5897 | 0.5445 | 0.5567 |
| TFE-GNN | 0.3472 | 0.2657 | 0.2517 | 0.2307 | 0.3269 | 0.3027 | 0.2859 | 0.2785 | 0.0833 | 0.0541 | 0.0831 | 0.0444 |
| FB-GNN | 0.3469 | 0.2342 | 0.2461 | 0.2400 | 0.3373 | 0.2977 | 0.2761 | 0.2600 | 0.0815 | 0.0573 | 0.0791 | 0.0431 |
| PERT | 0.8380 | 0.8440 | 0.7615 | 0.7879 | 0.7323 | 0.7279 | 0.6909 | 0.6928 | 0.7863 | 0.7980 | 0.7588 | 0.7697 |
| ET-BERT | 0.9663 | 0.9669 | 0.9250 | 0.9370 | 0.9221 | 0.8874 | 0.7908 | 0.7994 | 0.2222 | 0.1003 | 0.0247 | 0.0366 |
| YaTC | 0.9736 | 0.9753 | 0.9736 | 0.9728 | 0.9707 | 0.9738 | 0.9707 | 0.9709 | 0.8502 | 0.8600 | 0.8502 | 0.8523 |
| MS-PreTE | 0.9942 | 0.9957 | 0.9942 | 0.9934 | 0.9881 | 0.9877 | 0.9880 | 0.9861 | 0.8760 | 0.8780 | 0.8760 | 0.8770 |

| Method | ISCX-VPN-2016 AC | ISCX-VPN-2016 PR | ISCX-VPN-2016 RC | ISCX-VPN-2016 F1 | CIC-IoT-2023 AC | CIC-IoT-2023 PR | CIC-IoT-2023 RC | CIC-IoT-2023 F1 | USTC-TFC-2016 AC | USTC-TFC-2016 PR | USTC-TFC-2016 RC | USTC-TFC-2016 F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| AppScanner | 0.7127 | 0.7425 | 0.6473 | 0.6470 | 0.5365 | 0.5847 | 0.4701 | 0.4767 | 0.8750 | 0.8779 | 0.8333 | 0.8385 |
| CUMUL | 0.5769 | 0.4524 | 0.5157 | 0.4670 | 0.5098 | 0.4783 | 0.4818 | 0.4307 | 0.7162 | 0.6606 | 0.6541 | 0.6422 |
| BIND | 0.7341 | 0.7209 | 0.6204 | 0.6569 | 0.6076 | 0.6040 | 0.5495 | 0.5535 | 0.8835 | 0.8791 | 0.8403 | 0.8468 |
| Beauty | 0.3951 | 0.3434 | 0.2046 | 0.1959 | 0.2794 | 0.2799 | 0.2172 | 0.1834 | 0.6097 | 0.5352 | 0.3899 | 0.3815 |
| FS-Net | 0.6829 | 0.6013 | 0.6094 | 0.6024 | 0.7763 | 0.5635 | 0.4196 | 0.4291 | 0.7863 | 0.5983 | 0.5968 | 0.5968 |
| AppNet | 0.5822 | 0.4918 | 0.4936 | 0.4850 | 0.5050 | 0.4600 | 0.4350 | 0.4263 | 0.8878 | 0.8160 | 0.8154 | 0.8120 |
| DF | 0.6230 | 0.5442 | 0.4940 | 0.5061 | 0.5337 | 0.5477 | 0.4682 | 0.4671 | 0.7055 | 0.5879 | 0.5537 | 0.5415 |
| SAM | 0.8432 | 0.8653 | 0.7913 | 0.8190 | 0.7048 | 0.3899 | 0.3129 | 0.2999 | 0.8610 | 0.8973 | 0.8729 | 0.8721 |
| GraphDApp | 0.5305 | 0.4841 | 0.4460 | 0.4587 | 0.4762 | 0.4113 | 0.3871 | 0.3781 | 0.8654 | 0.9123 | 0.8689 | 0.8726 |
| TFE-GNN | 0.6900 | 0.6600 | 0.6001 | 0.6080 | 0.3269 | 0.3027 | 0.2859 | 0.2785 | 0.9167 | 0.8264 | 0.8250 | 0.8245 |
| FB-GNN | 0.6825 | 0.6551 | 0.6003 | 0.6124 | 0.5041 | 0.4816 | 0.4553 | 0.4627 | 0.5820 | 0.5602 | 0.5410 | 0.5465 |
| PERT | 0.7265 | 0.6474 | 0.6227 | 0.6249 | 0.7323 | 0.7279 | 0.6909 | 0.6928 | 0.9748 | 0.9798 | 0.9754 | 0.9746 |
| ET-BERT | 0.9025 | 0.8877 | 0.8818 | 0.8806 | 0.9221 | 0.8874 | 0.7908 | 0.7994 | 0.8984 | 0.9243 | 0.9009 | 0.9054 |
| YaTC | 0.9650 | 0.9653 | 0.9650 | 0.9647 | 0.9610 | 0.9630 | 0.9610 | 0.9594 | 0.9693 | 0.9707 | 0.9693 | 0.9692 |
| MS-PreTE | 0.9685 | 0.9689 | 0.9685 | 0.9680 | 0.9587 | 0.9598 | 0.9587 | 0.9586 | 0.9756 | 0.9756 | 0.9756 | 0.9755 |

| Method | Cross-Platform (iOS) AC | Cross-Platform (iOS) F1 | Cross-Platform (Android) AC | Cross-Platform (Android) F1 | NUDT-Mobile-Traffic AC | NUDT-Mobile-Traffic F1 |
|---|---|---|---|---|---|---|
| MS-PreTE | 0.9942 | 0.9934 | 0.9881 | 0.9861 | 0.8760 | 0.8770 |
| Single Encoder | 0.9773 | 0.9782 | 0.9755 | 0.9753 | 0.8463 | 0.8462 |
| w/o Focal-Attention | 0.9854 | 0.9829 | 0.9789 | 0.9784 | 0.8583 | 0.8582 |
| w/o FRM | 0.9891 | 0.9892 | 0.9817 | 0.9812 | 0.8681 | 0.8680 |
| w/o Pre-train | 0.9539 | 0.9510 | 0.9562 | 0.9549 | 0.8352 | 0.8315 |

| Model | FLOPs (M) | Parameters (M) |
|---|---|---|
| GraphDApp | | |
| TFE-GNN | | |
| PERT | | |
| ET-BERT | | |
| YaTC | | |
| MS-PreTE | | |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Z.; Qiu, Y.; Liu, Y.; Zhang, S.; Liu, X. MS-PreTE: A Multi-Scale Pre-Training Encoder for Mobile Encrypted Traffic Classification. Big Data Cogn. Comput. 2025, 9, 216. https://doi.org/10.3390/bdcc9080216