A Comparison of Data Quality Frameworks: A Review

Abstract

1. Introduction

2. Data Management Frameworks

2.1. Total Data Quality Management (TDQM)

2.2. Total Quality Data Management (TQdM)

3. ISO Standards

3.1. ISO 8000

3.2. ISO 25012

4. Government and International Standards

4.1. Fair Information Practice Principles (FIPPS)

- FIPS 180-4: Specifies the Secure Hash Algorithms (SHA-1 and the SHA-2 family, such as SHA-256). These generate message digests that reveal changes to a message and so help ensure data integrity during transfer and communication [89].

- FIPS 199: Provides a framework for categorising federal information and information systems according to the potential impact (low, moderate, or high) that a loss of confidentiality, integrity, or availability would have. This categorisation helps in assessing the impact of data breaches and in selecting appropriate security measures [90].

- FIPS 200: Outlines minimum security requirements for federal information and information systems, promoting consistent and repeatable security practices to protect data integrity and privacy [91].
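The digest-based integrity check described for FIPS 180-4 can be sketched in a few lines of Python. This is a minimal illustration, assuming Python 3's standard `hashlib` module, whose `sha256` function implements SHA-256, one of the FIPS 180-4 algorithms. The helper names `sha256_hex` and `is_unchanged` and the sample record are hypothetical. The sender records a digest before transfer; the receiver recomputes it on arrival, and any change to the bytes yields a different digest.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest (one of the FIPS 180-4 algorithms) as hex."""
    return hashlib.sha256(data).hexdigest()


def is_unchanged(received: bytes, recorded_digest: str) -> bool:
    """Recompute the digest of the received bytes and compare it with the
    digest recorded before transfer. hmac.compare_digest avoids
    content-based short-circuiting, which limits timing leaks."""
    return hmac.compare_digest(sha256_hex(received), recorded_digest)


if __name__ == "__main__":
    # Hypothetical record; the sender computes and stores its digest.
    original = b"patient_id,visit_date\n1042,2024-08-14\n"
    recorded = sha256_hex(original)

    print(is_unchanged(original, recorded))  # True: bytes are unchanged
    altered = original.replace(b"1042", b"1043")
    print(is_unchanged(altered, recorded))   # False: one character differs
```

A bare digest detects accidental corruption, but not deliberate tampering by a party who can also replace the recorded digest. In that setting the digest itself would need protection, for example a keyed HMAC or a digital signature.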

4.2. Quality Assurance Framework of the European Statistical System (ESS QAF)

4.3. The UK Government Data Quality Framework

Data Management Body of Knowledge (DAMA DMBoK)

5. Financial Frameworks

5.1. IMF Data Quality Assessment Framework (DQAF)

5.2. Basel Committee on Banking Supervision Standard (BCBS 239)

6. Healthcare Frameworks

6.1. ALCOA+ Principles

6.2. WHO Data Quality Assurance

7. Discussion

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. ISO 8000-1:2022; Data Quality—Part 1: Overview. International Organization for Standardization: Geneva, Switzerland, 2022.

2. Miller, R.; Whelan, H.; Chrubasik, M.; Whittaker, D.; Duncan, P.; Gregório, J. A Framework for Current and New Data Quality Dimensions: An Overview. Data 2024, 9, 151.

3. Levene, M.; Adel, T.; Alsuleman, M.; George, I.; Krishnadas, P.; Lines, K.; Luo, Y.; Smith, I.; Duncan, P. A Life Cycle for Trustworthy and Safe Artificial Intelligence Systems; Technical Report; NPL Publications: Teddington, UK, 2024.

4. ISO 9001:2015; Quality Management Systems—Requirements. ISO: Geneva, Switzerland, 2015.

5. ISO/IEC 25012:2008; Software Engineering—Software Product Quality Requirements and Evaluation (SQuaRE)—Data Quality Model. International Organization for Standardization: Geneva, Switzerland, 2008.

6. MIT Information Quality Program. Total Data Quality Management (TDQM) Program. 2024. Available online: http://mitiq.mit.edu/ (accessed on 14 August 2024).

7. Federal Privacy Council. Fair Information Practice Principles (FIPPS). 2024. Available online: https://www.fpc.gov/ (accessed on 14 August 2024).

8. Eurostat. European Statistics Code of Practice—Revised Edition 2017; Publications Office of the European Union: Luxembourg, 2018.

9. Government Data Quality Hub. The Government Data Quality Framework. 2020. Available online: https://www.gov.uk/government/organisations/government-data-quality-hub (accessed on 1 October 2024).

10. International Monetary Fund. Data Quality Assessment Framework (DQAF). 2003. Available online: https://www.imf.org/external/np/sta/dsbb/2003/eng/dqaf.htm (accessed on 1 October 2024).

11. Basel Committee on Banking Supervision. Principles for Effective Risk Data Aggregation and Risk Reporting; Technical Report; Bank for International Settlements: Basel, Switzerland, 2013.

12. Leach, C.D. Enhancing Data Governance Solutions to Optimize ALCOA+ Compliance for Life Sciences Cloud Service Providers. Ph.D. Thesis, Colorado Technical University, Colorado Springs, CO, USA, 2024.

13. World Health Organization. Data Quality Assurance: Module 1: Framework and Metrics; World Health Organization: Geneva, Switzerland, 2022; p. vi, 30p.

14. Cichy, C.; Rass, S. An overview of data quality frameworks. IEEE Access 2019, 7, 24634–24648.

15. Mashoufi, M.; Ayatollahi, H.; Khorasani-Zavareh, D.; Boni, T.T.A. Data quality in health care: Main concepts and assessment methodologies. Methods Inf. Med. 2023, 62, 005–018.

16. Fadahunsi, K.P.; O’Connor, S.; Akinlua, J.T.; Wark, P.A.; Gallagher, J.; Carroll, C.; Car, J.; Majeed, A.; O’Donoghue, J. Information quality frameworks for digital health technologies: Systematic review. J. Med. Internet Res. 2021, 23, e23479.

17. Landu, M.; Mota, J.H.; Moreira, A.C.; Bandeira, A.M. Factors influencing the quality of financial information: A systematic literature review. South Afr. J. Account. Res. 2024, 1–28.

18. English, L.P. Total quality data management (TQdM). In Information and Database Quality; Springer: Boston, MA, USA, 2002; pp. 85–109.

19. European Parliament and Council of the European Union. Regulation (EC) No 223/2009 of the European Parliament and of the Council of 11 March 2009 on European Statistics; Technical Report; OJ L 87, 31.3.2009; European Union: Brussels, Belgium, 2009; pp. 164–173.

20. European Union. Official Journal of the European Union, C 202; Technical Report; European Union: Maastricht, The Netherlands, 7 June 2016.

21. Government Data Quality Hub. The Government Data Quality Framework: Guidance. 2020. Available online: https://www.gov.uk/government/publications/the-government-data-quality-framework/the-government-data-quality-framework-guidance (accessed on 1 October 2024).

22. DAMA International. DAMA-DMBOK Data Management Body of Knowledge, 2nd ed.; Technics Publications: Sedona, AZ, USA, 2017; Available online: https://technicspub.com/dmbok/ (accessed on 1 October 2024).

23. DAMA International. Body of Knowledge. 2024. Available online: https://www.dama.org/cpages/body-of-knowledge (accessed on 1 October 2024).

24. Durá, M.; Sánchez-García, A.; Sáez, C.; Leal, F.; Chis, A.E.; González-Vélez, H.; García-Gómez, J.M. Towards a computational approach for the assessment of compliance of ALCOA+ Principles in pharma industry. In Challenges of Trustable AI and Added-Value on Health; IOS Press: Amsterdam, The Netherlands, 2022; pp. 755–759.

25. Wang, R.Y. A product perspective on total data quality management. Commun. ACM 1998, 41, 58–65.

26. Bowo, W.A.; Suhanto, A.; Naisuty, M.; Ma’mun, S.; Hidayanto, A.N.; Habsari, I.C. Data quality assessment: A case study of PT JAS using TDQM Framework. In Proceedings of the 2019 Fourth International Conference on Informatics and Computing (ICIC), Semarang, Indonesia, 16–17 October 2019; pp. 1–6.

27. Francisco, M.M.; Alves-Souza, S.N.; Campos, E.G.; De Souza, L.S. Total data quality management and total information quality management applied to costumer relationship management. In Proceedings of the 9th International Conference on Information Management and Engineering, Barcelona, Spain, 9–11 October 2017; pp. 40–45.

28. Rahmawati, R.; Ruldeviyani, Y.; Abdullah, P.P.; Hudoarma, F.M. Strategies to Improve Data Quality Management Using Total Data Quality Management (TDQM) and Data Management Body of Knowledge (DMBOK): A Case Study of M-Passport Application. CommIT (Commun. Inf. Technol.) J. 2023, 17, 27–42.

29. Wijnhoven, F.; Boelens, R.; Middel, R.; Louissen, K. Total data quality management: A study of bridging rigor and relevance. In Proceedings of the Fifteenth European Conference on Information Systems, ECIS 2007, St. Gallen, Switzerland, 7–9 June 2007. Number 15.

30. Otto, B.; Wende, K.; Schmidt, A.; Osl, P. Towards a framework for corporate data quality management. In Proceedings of the Fifteenth European Conference on Information Systems, ECIS 2007, St. Gallen, Switzerland, 7–9 June 2007. Number 109.

31. Wahyudi, T.; Isa, S.M. Data Quality Assessment Using Tdqm Framework: A Case Study of Pt Aid. J. Theor. Appl. Inf. Technol. 2023, 101, 3576–3589.

32. Zhang, L.; Jeong, D.; Lee, S. Data quality management in the internet of things. Sensors 2021, 21, 5834.

33. Cao, J.; Diao, X.; Jiang, G.; Du, Y. Data lifecycle process model and quality improving framework for tdqm practices. In Proceedings of the 2010 International Conference on E-Product E-Service and E-Entertainment, Henan, China, 7–9 November 2010; pp. 1–6.

34. Moges, H.T.; Dejaeger, K.; Lemahieu, W.; Baesens, B. A total data quality management for credit risk: New insights and challenges. Int. J. Inf. Qual. 2012, 3, 1–27.

35. Radziwill, N.M. Foundations for quality management of scientific data products. Qual. Manag. J. 2006, 13, 7–21.

36. Kovac, R.; Weickert, C. Starting with Quality: Using TDQM in a Start-Up Organization. In Proceedings of the ICIQ, Cambridge, MA, USA, 8–10 November 2002; pp. 69–78.

37. Wilantika, N.; Wibowo, W.C. Data Quality Management in Educational Data: A Case Study of Statistics Polytechnic. J. Sist. Inf. J. Inf. Syst. 2019, 15, 52.

38. Shankaranarayanan, G.; Cai, Y. Supporting data quality management in decision-making. Decis. Support Syst. 2006, 42, 302–317.

39. Kovac, R.; Lee, Y.W.; Pipino, L. Total Data Quality Management: The Case of IRI. In Proceedings of the IQ; 1997; pp. 63–79. Available online: http://mitiq.mit.edu/documents/publications/TDQMpub/IRITDQMCaseOct97.pdf (accessed on 6 April 2025).

40. Vaziri, R.; Mohsenzadeh, M. A questionnaire-based data quality methodology. Int. J. Database Manag. Syst. 2012, 4, 55.

41. Alhazmi, E.; Bajunaid, W.; Aziz, A. Important success aspects for total quality management in software development. Int. J. Comput. Appl. 2017, 157, 8–11.

42. Shankaranarayanan, G. Towards implementing total data quality management in a data warehouse. J. Inf. Technol. Manag. 2005, 16, 21–30.

43. Glowalla, P.; Sunyaev, A. Process-driven data quality management: A critical review on the application of process modeling languages. J. Data Inf. Qual. (JDIQ) 2014, 5, 1–30.

44. Otto, B. Quality management of corporate data assets. In Quality Management for IT Services: Perspectives on Business and Process Performance; IGI Global: Hershey, PA, USA, 2011; pp. 193–209.

45. Otto, B. Enterprise-Wide Data Quality Management in Multinational Corporations. Ph.D. Thesis, Universität St. Gallen, St. Gallen, Switzerland, 2012.

46. Caballero, I.; Vizcaíno, A.; Piattini, M. Optimal data quality in project management for global software developments. In Proceedings of the 2009 Fourth International Conference on Cooperation and Promotion of Information Resources in Science and Technology, Beijing, China, 21–23 November 2009; pp. 210–219.

47. Siregar, D.Y.; Akbar, H.; Pranidhana, I.B.P.A.; Hidayanto, A.N.; Ruldeviyani, Y. The importance of data quality to reinforce COVID-19 vaccination scheduling system: Study case of Jakarta, Indonesia. In Proceedings of the 2022 2nd International Conference on Information Technology and Education (ICIT&E), Malang, Indonesia, 22 January 2022; pp. 262–268.

48. Ofner, M.; Otto, B.; Österle, H. A maturity model for enterprise data quality management. Enterp. Model. Inf. Syst. Archit. (EMISAJ) 2013, 8, 4–24.

49. Fürber, C. Data quality. In Data Quality Management with Semantic Technologies; Springer Gabler: Wiesbaden, Germany, 2016; pp. 20–55.

50. He, X.; Liu, R.; Anumba, C.J. Theoretical architecture for Data-Quality-Aware analytical applications in the construction firms. In Proceedings of the Construction Research Congress 2022, Arlington, VA, USA, 9–12 March 2022; pp. 335–343.

51. Wende, K.; Otto, B. A Contingency Approach To Data Governance. In Proceedings of the ICIQ, Cambridge, MA, USA, 9–11 November 2007; pp. 163–176.

52. Aljumaili, M.; Karim, R.; Tretten, P. Metadata-based data quality assessment. VINE J. Inf. Knowl. Manag. Syst. 2016, 46, 232–250.

53. Perez-Castillo, R.; Carretero, A.G.; Caballero, I.; Rodriguez, M.; Piattini, M.; Mate, A.; Kim, S.; Lee, D. DAQUA-MASS: An ISO 8000-61 based data quality management methodology for sensor data. Sensors 2018, 18, 3105.

54. Rivas, B.; Merino, J.; Caballero, I.; Serrano, M.; Piattini, M. Towards a service architecture for master data exchange based on ISO 8000 with support to process large datasets. Comput. Stand. Interfaces 2017, 54, 94–104.

55. Carretero, A.G.; Gualo, F.; Caballero, I.; Piattini, M. MAMD 2.0: Environment for data quality processes implantation based on ISO 8000-6X and ISO/IEC 33000. Comput. Stand. Interfaces 2017, 54, 139–151.

56. Carretero, A.G.; Caballero, I.; Piattini, M. MAMD: Towards a data improvement model based on ISO 8000-6X and ISO/IEC 33000. In Proceedings of the Software Process Improvement and Capability Determination: 16th International Conference, SPICE 2016, Dublin, Ireland, 9–10 June 2016; Proceedings 16. Springer: Cham, Switzerland, 2016; pp. 241–253.

57. ISO 8000-8:2015; Data Quality—Part 8: Information and Data Quality: Concepts and Measuring. ISO: Geneva, Switzerland, 2015.

58. Mohammed, A.G.; Eram, A.; Talburt, J.R. ISO 8000-61 Data Quality Management Standard, TDQM Compliance, IQ Principles. In Proceedings of the MIT International Conference on Information Quality, Little Rock, AR, USA, 6–7 October 2017.

59. ISO 8000-61:2016; Data Quality—Part 61: Data Quality Management: Process Reference Model. ISO: Geneva, Switzerland, 2016.

60. ISO/IEC 25000:2014; Systems and Software Engineering—Systems and Software Quality Requirements and Evaluation (SQuaRE)—Guide to SQuaRE. ISO: Geneva, Switzerland, 2014.

61. Gualo, F.; Rodríguez, M.; Verdugo, J.; Caballero, I.; Piattini, M. Data quality certification using ISO/IEC 25012: Industrial experiences. J. Syst. Softw. 2021, 176, 110938.

62. Nwasra, N.; Basir, N.; Marhusin, M.F. A framework for evaluating QinU based on ISO/IEC 25010 and 25012 standards. In Proceedings of the 2015 9th Malaysian Software Engineering Conference (MySEC), Kuala Lumpur, Malaysia, 16–17 December 2015; pp. 70–75.

63. Guerra-García, C.; Nikiforova, A.; Jiménez, S.; Perez-Gonzalez, H.G.; Ramírez-Torres, M.; Ontañon-García, L. ISO/IEC 25012-based methodology for managing data quality requirements in the development of information systems: Towards Data Quality by Design. Data Knowl. Eng. 2023, 145, 102152.

64. Verdugo, J.; Rodríguez, M. Assessing data cybersecurity using ISO/IEC 25012. Softw. Qual. J. 2020, 28, 965–985.

65. Pontes, L.; Albuquerque, A. Business Intelligence Development Process: An Approach with the Principles of Design Thinking, ISO 25012, and RUP. In Proceedings of the 2021 16th Iberian Conference on Information Systems and Technologies (CISTI), Chaves, Portugal, 23–26 June 2021; pp. 1–5.

66. Galera, R.; Gualo, F.; Caballero, I.; Rodríguez, M. DQBR25K: Data Quality Business Rules Identification Based on ISO/IEC 25012. In Proceedings of the International Conference on the Quality of Information and Communications Technology, Aveiro, Portugal, 11–13 September 2023; pp. 178–190.

67. Stamenkov, G. Genealogy of the fair information practice principles. Int. J. Law Manag. 2023, 65, 242–260.

68. Rasheed, A. Prioritizing Fair Information Practice Principles Based on Islamic Privacy Law. Berkeley J. Middle East. Islam. Law 2020, 11, 1.

69. Paul, P.; Aithal, P.; Bhimali, A.; Kalishankar, T.; Rajesh, R. FIPPS & Information Assurance: The Root and Foundation. In Proceedings of the National Conference on Advances in Management, IT, Education, Social Sciences-Manegma, Mangalore, India, 27 April 2019; pp. 27–34.

70. Klemovitch, J.; Sciabbarrasi, L.; Peslak, A. Current privacy policy attitudes and fair information practice principles: A macro and micro analysis. Issues Inf. Syst. 2021, 22, 145–159.

71. Bruening, P.; Patterson, H. A Context-Driven Rethink of the Fair Information Practice Principles. SSRN 2016.

72. Gellman, R. Willis Ware’s Lasting Contribution to Privacy: Fair Information Practices. IEEE Secur. Priv. 2014, 12, 51–54.

73. Schwaig, K.S.; Kane, G.C.; Storey, V.C. Compliance to the fair information practices: How are the Fortune 500 handling online privacy disclosures? Inf. Manag. 2006, 43, 805–820.

74. Herath, S.; Gelman, H.; McKee, L. Privacy Harm and Non-Compliance from a Legal Perspective. J. Cybersecur. Educ. Res. Pract. 2023, 2023, 3.

75. Zeide, E. Student privacy principles for the age of big data: Moving beyond FERPA and FIPPS. Drexel Law Rev. 2015, 8, 339.

76. Rotenberg, M. Fair information practices and the architecture of privacy (What Larry doesn’t get). Stan. Tech. Law Rev. 2001, 1, 1.

77. Hartzog, W. The inadequate, invaluable fair information practices. Md. Law Rev. 2016, 76, 952.

78. Proia, A.; Simshaw, D.; Hauser, K. Consumer cloud robotics and the fair information practice principles: Recognizing the challenges and opportunities ahead. Minn. J. Law Sci. Technol. 2015, 16, 145.

79. Karyda, M.; Gritzalis, S.; Hyuk Park, J.; Kokolakis, S. Privacy and fair information practices in ubiquitous environments: Research challenges and future directions. Internet Res. 2009, 19, 194–208.

80. Cavoukian, A. Evolving FIPPs: Proactive approaches to privacy, not privacy paternalism. In Reforming European Data Protection Law; Springer: Berlin/Heidelberg, Germany, 2014; pp. 293–309.

81. Ohm, P. Changing the rules: General principles for data use and analysis. Privacy, Big Data, Public Good: Fram. Engagem. 2014, 1, 96–111.

82. da Veiga, A. An online information privacy culture: A framework and validated instrument to measure consumer expectations and confidence. In Proceedings of the 2018 Conference on Information Communications Technology and Society (ICTAS), Durban, South Africa, 8–9 March 2018; pp. 1–6.

83. Regan, P.M. A design for public trustee and privacy protection regulation. Seton Hall Legis. J. 2020, 44, 487.

84. da Veiga, A. An Information Privacy Culture Index Framework and Instrument to Measure Privacy Perceptions across Nations: Results of an Empirical Study. In Proceedings of the HAISA, Adelaide, Australia, 28–30 November 2017; pp. 188–201.

85. Da Veiga, A. An information privacy culture instrument to measure consumer privacy expectations and confidence. Inf. Comput. Secur. 2018, 26, 338–364.

86. Gillon, K.; Branz, L.; Culnan, M.; Dhillon, G.; Hodgkinson, R.; MacWillson, A. Information security and privacy—Rethinking governance models. Commun. Assoc. Inf. Syst. 2011, 28, 33.

87. European Parliament and Council. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the Protection of Natural Persons with Regard to the Processing of Personal Data and on the Free Movement of Such Data, and Repealing Directive 95/46/EC (General Data Protection Regulation). 2016. Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj/eng (accessed on 14 August 2024).

88. National Institute of Standards and Technology. Federal Information Processing Standards (FIPS) Publications. 2024. Available online: https://csrc.nist.gov/publications/fips (accessed on 14 August 2024).

89. National Institute of Standards and Technology. Secure Hash Standard (SHS); Technical Report FIPS PUB 180-4; U.S. Department of Commerce: Washington, DC, USA, 2015.

90. National Institute of Standards and Technology. Standards for Security Categorization of Federal Information and Information Systems; Technical Report FIPS PUB 199; U.S. Department of Commerce: Washington, DC, USA, 2004.

91. National Institute of Standards and Technology. Minimum Security Requirements for Federal Information and Information Systems; Technical Report FIPS PUB 200; U.S. Department of Commerce: Washington, DC, USA, 2006.

92. Sæbø, H.V. Quality in Statistics—From Q2001 to 2016. Stat. Stat. Econ. J. 2016, 96, 72–79.

93. Revilla, P.; Piñán, A. Implementing a Quality Assurance Framework Based on the Code of Practice at the National Statistical Institute of Spain; Instituto Nacional de Estadística (INE) Statistics Spain, Work. Pap.; Instituto Nacional de Estadística: Madrid, Spain, 2012.

94. Nielsen, M.G.; Thygesen, L. Implementation of Eurostat Quality Declarations at Statistics Denmark with cost-effective use of standards. In Proceedings of the European Conference on Quality in Official Statistics, Vienna, Austria, 3–5 June 2014; pp. 2–5.

95. Radermacher, W.J. The European statistics code of practice as a pillar to strengthen public trust and enhance quality in official statistics. J. Stat. Soc. Inq. Soc. Irel. 2013, 43, 27.

96. Brancato, G.; D’Assisi Barbalace, F.; Signore, M.; Simeoni, G. Introducing a framework for process quality in National Statistical Institutes. Stat. J. IAOS 2017, 33, 441–446.

97. Stenström, C.; Söderholm, P. Applying Eurostat’s ESS handbook for quality reports on Railway Maintenance Data. In Proceedings of the International Heavy Haul STS Conference (IHHA 2019), Narvik, Norway, 12–14 June 2019; pp. 473–480.

98. Mekbunditkul, T. The Development of a Code of Practice and Indicators for Official Statistics Quality Management in Thailand. In Proceedings of the 2017 International Conference on Economics, Finance and Statistics (ICEFS 2017), Hanoi, Vietnam, 25–27 February 2017; pp. 184–191.

99. Radermacher, W.J. Official Statistics—Public Informational Infrastructure. In Official Statistics 4.0: Verified Facts for People in the 21st Century; Springer: Cham, Switzerland, 2020; pp. 11–52.

100. Sæbø, H.V.; Holmberg, A. Beyond code of practice: New quality challenges in official statistics. Stat. J. IAOS 2019, 35, 171–178.

101. Zschocke, T.; Beniest, J. Adapting a quality assurance framework for creating educational metadata in an agricultural learning repository. Electron. Libr. 2011, 29, 181–199.

102. Daraio, C.; Bruni, R.; Catalano, G.; Daraio, A.; Matteucci, G.; Scannapieco, M.; Wagner-Schuster, D.; Lepori, B. A tailor-made data quality approach for higher educational data. J. Data Inf. Sci. 2020, 5, 129–160.

103. Stagars, M. Data Quality in Southeast Asia: Analysis of Official Statistics and Their Institutional Framework as a Basis for Capacity Building and Policy Making in the ASEAN; Springer: Berlin/Heidelberg, Germany, 2016.

104. Cox, N.; McLaren, C.H.; Shenton, C.; Tarling, T.; Davies, E.W. Developing Statistical Frameworks for Administrative Data and Integrating It into Business Statistics. Experiences from the UK and New Zealand. In Advances in Business Statistics, Methods and Data Collection; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2023; pp. 291–313.

105. Ricciato, F.; Wirthmann, A.; Giannakouris, K.; Skaliotis, M. Trusted smart statistics: Motivations and principles. Stat. J. IAOS 2019, 35, 589–603.

106. Government Data Quality Hub. The Government Data Quality Framework: Case Studies. 2020. Available online: https://www.gov.uk/government/publications/the-government-data-quality-framework/the-government-data-quality-framework-case-studies (accessed on 1 October 2024).

107. DAMA International. Mission, Vision, Purpose, and Goals. 2024. Available online: https://www.dama-belux.org/mission-vision-purpose-and-goals-2024/ (accessed on 1 October 2024).

108. de Figueiredo, G.B.; Moreira, J.L.R.; de Faria Cordeiro, K.; Campos, M.L.M. Aligning DMBOK and Open Government with the FAIR Data Principles. In Proceedings of the Advances in Conceptual Modeling, Salvador, Brazil, 4–7 November 2019; pp. 13–22.

109. Carson, C.S.; Laliberté, L.; Murray, T.; Neves, P. Toward a Framework for Assessing Data Quality. 2001. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=879374 (accessed on 1 October 2024).

110. Kiatkajitmun, P.; Chanton, C.; Piboonrungroj, P.; Natwichai, J. Data Quality Assessment Framework and Economic Indicators. In Proceedings of the Advances in Networked-Based Information Systems, Chiang Mai, Thailand, 6–8 September 2023; pp. 97–105.

111. Chakravorty, R. BCBS239: Reasons, impacts, framework and route to compliance. J. Secur. Oper. Custody 2015, 8, 65–81.

112. Prorokowski, L.; Prorokowski, H. Solutions for risk data compliance under BCBS 239. J. Invest. Compliance 2015, 16, 66–77.

113. Orgeldinger, J. The Implementation of Basel Committee BCBS 239: Short analysis of the new rules for Data Management. J. Cent. Bank. Theory Pract. 2018, 7, 57–72.

114. Harreis, H.; Tavakoli, A.; Ho, T.; Machado, J.; Rowshankish, K.; Merrath, P. Living with BCBS 239; McKinsey & Company: New York, NY, USA, 2017.

115. Grody, A.D.; Hughes, P.J. Risk Accounting-Part 1: The risk data aggregation and risk reporting (BCBS 239) foundation of enterprise risk management (ERM) and risk governance. J. Risk Manag. Financ. Institutions 2016, 9, 130–146.

116. Elhassouni, J.; El Qadi, A.; El Madani El Alami, Y.; El Haziti, M. The implementation of credit risk scorecard using ontology design patterns and BCBS 239. Cybern. Inf. Technol. 2020, 20, 93–104.

117. Kavasidis, I.; Lallas, E.; Leligkou, H.C.; Oikonomidis, G.; Karydas, D.; Gerogiannis, V.C.; Karageorgos, A. Deep Transformers for Computing and Predicting ALCOA+ Data Integrity Compliance in the Pharmaceutical Industry. Appl. Sci. 2023, 13, 7616.

118. Sembiring, M.H.; Novagusda, F.N. Enhancing Data Security Resilience in AI-Driven Digital Transformation: Exploring Industry Challenges and Solutions Through ALCOA+ Principles. Acta Inform. Medica 2024, 32, 65.

119. Charitou, T.; Lallas, E.; Gerogiannis, V.C.; Karageorgos, A. A network modelling and analysis approach for pharma industry regulatory assessment. IEEE Access 2024, 12, 46470–46483.

120. Alosert, H.; Savery, J.; Rheaume, J.; Cheeks, M.; Turner, R.; Spencer, C.; Farid, S.S.; Goldrick, S. Data integrity within the biopharmaceutical sector in the era of Industry 4.0. Biotechnol. J. 2022, 17, 2100609.

121. World Health Organization. Data Quality Assurance: Module 2: Discrete Desk Review of Data Quality; World Health Organization: Geneva, Switzerland, 2022; p. vi, 47p.

122. World Health Organization. Data Quality Assurance: Module 3: Site Assessment of Data Quality: Data Verification and System Assessment; World Health Organization: Geneva, Switzerland, 2022; p. viii, 80p.

123. World Health Organization. Manual on Use of Routine Data Quality Assessment (RDQA) Tool for TB Monitoring; Technical Report; World Health Organization: Geneva, Switzerland, 2011.

124. World Health Organization. Data Quality Assessment of National and Partner HIV Treatment and Patient Monitoring Data and Systems: Implementation Tool; Technical Report; World Health Organization: Geneva, Switzerland, 2018.

125. World Health Organization. Preventive Chemotherapy: Tools for Improving the Quality of Reported Data and Information: A Field Manual for Implementation; Technical Report; World Health Organization: Geneva, Switzerland, 2019.

126. Yourkavitch, J.; Prosnitz, D.; Herrera, S. Data quality assessments stimulate improvements to health management information systems: Evidence from five African countries. J. Glob. Health 2019, 9, 010806.

127. Hilbert, M.; López, P. The world’s technological capacity to store, communicate, and compute information. Science 2011, 332, 60–65.

128. Bhat, W.A. Bridging data-capacity gap in big data storage. Future Gener. Comput. Syst. 2018, 87, 538–548.

129. Dash, S.; Shakyawar, S.K.; Sharma, M.; Kaushik, S. Big data in healthcare: Management, analysis and future prospects. J. Big Data 2019, 6, 1–25.

130. Abouelmehdi, K.; Beni-Hessane, A.; Khaloufi, H. Big healthcare data: Preserving security and privacy. J. Big Data 2018, 5, 1–18.

131. Janev, V.; Graux, D.; Jabeen, H.; Sallinger, E. Knowledge Graphs and Big Data Processing; Springer Nature: Berlin/Heidelberg, Germany, 2020.

132. Venkatasubramanian, V.; Zhao, C.; Joglekar, G.; Jain, A.; Hailemariam, L.; Suresh, P.; Akkisetty, P.; Morris, K.; Reklaitis, G.V. Ontological informatics infrastructure for pharmaceutical product development and manufacturing. Comput. Chem. Eng. 2006, 30, 1482–1496.

133. Yerashenia, N.; Bolotov, A. Computational modelling for bankruptcy prediction: Semantic data analysis integrating graph database and financial ontology. In Proceedings of the 2019 IEEE 21st Conference on Business Informatics (CBI), Moscow, Russia, 15–17 July 2019; Volume 1, pp. 84–93.

134. Villalobos, P.; Ho, A.; Sevilla, J.; Besiroglu, T.; Heim, L.; Hobbhahn, M. Will we run out of data? Limits of LLM scaling based on human-generated data. arXiv 2022, arXiv:2211.04325.

135. Hoseini, S.; Burgdorf, A.; Paulus, A.; Meisen, T.; Quix, C.; Pomp, A. Challenges and Opportunities of LLM-Augmented Semantic Model Creation for Dataspaces. In Proceedings of the European Semantic Web Conference, Crete, Greece, 26–30 May 2024; pp. 183–200.

136. Cigliano, A.; Fallucchi, F. The Convergence of Open Data, Linked Data, Ontologies, and Large Language Models: Enabling Next-Generation Knowledge Systems. In Proceedings of the Research Conference on Metadata and Semantics Research, Athens, Greece, 19–22 November 2024; pp. 197–213.

137. Hassani, S. Enhancing legal compliance and regulation analysis with large language models. In Proceedings of the 2024 IEEE 32nd International Requirements Engineering Conference (RE), Reykjavik, Iceland, 24–28 June 2024; pp. 507–511.

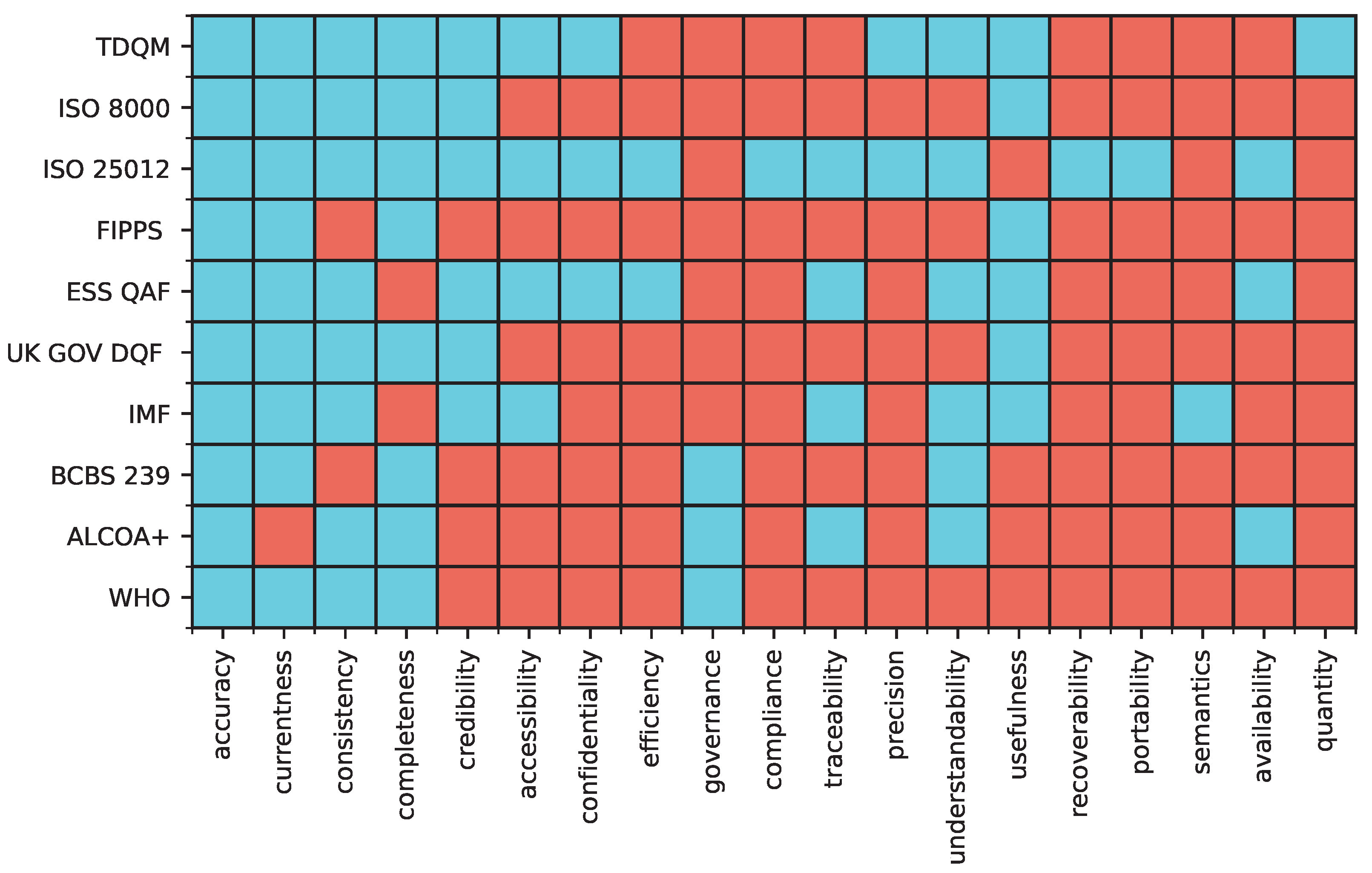

| Framework | DQ Dimensions | DQ Common Nomenclature |
|---|---|---|
| TDQM | Accuracy | Accuracy |
| | Objectivity | Precision |
| | Believability | Credibility |
| | Reputation | Credibility |
| | Access | Accessibility |
| | Security | Confidentiality |
| | Relevancy | Usefulness |
| | Value-added | Usefulness |
| | Timeliness | Currentness |
| | Completeness | Completeness |
| | Amount of Data | Quantity |
| | Interpretability | Understandability |
| | Ease of Understanding | Understandability |
| | Concise Representation | Precision |
| | Consistent Representation | Consistency |
| ISO 8000 | Accuracy | Accuracy |
| | Completeness | Completeness |
| | Consistency | Consistency |
| | Timeliness | Currentness |
| | Uniqueness | Usefulness |
| | Validity | Credibility |
| ISO 25012 | Accuracy | Accuracy |
| | Accessibility | Accessibility |
| | Availability | Availability |
| | Completeness | Completeness |
| | Compliance | Compliance |
| | Confidentiality | Confidentiality |
| | Consistency | Consistency |
| | Credibility | Credibility |
| | Currentness | Currentness |
| | Efficiency | Efficiency |
| | Precision | Precision |
| | Portability | Portability |
| | Recoverability | Recoverability |
| | Traceability | Traceability |
| | Understandability | Understandability |
| FIPPS | Accuracy | Accuracy |
| | Relevancy | Usefulness |
| | Timeliness | Currency |
| | Completeness | Completeness |
| ESS QAF | Statistical Confidentiality and Data Protection | Confidentiality |
| | Accessibility and Clarity | Accessibility |
| | Accessibility and Clarity | Availability |
| | Accessibility and Clarity | Understandability |
| | Accessibility and Clarity | Traceability |
| | Relevance | Usefulness |
| | Timeliness and Punctuality | Currentness |
| | Accuracy and Reliability | Accuracy |
| | Impartiality and Objectivity | Credibility |
| | Cost Effectiveness | Efficiency |
| | Coherence and Comparability | Consistency |
| UK GOV DQF (DAMA DMBoK) | Completeness | Completeness |
| | Consistency | Consistency |
| | Timeliness | Currentness |
| | Uniqueness | Usefulness |
| | Validity | Credibility |
| | Accuracy | Accuracy |
| IMF | Prerequisites of quality | Usefulness |
| | Assurance of Integrity | Credibility |
| | Assurance of Integrity | Traceability |
| | Methodological Soundness | Semantics |
| | Accuracy and Reliability | Accuracy |
| | Serviceability | Consistency |
| | Serviceability | Currency |
| | Serviceability | Traceability |
| | Accessibility | Accessibility |
| | Accessibility | Understandability |
| BCBS 239 | Accuracy | Accuracy |
| | Clarity and Usefulness | Understandability |
| | Comprehensiveness | Completeness |
| | Frequency | Currentness |
| | Distribution | Governance |
| ALCOA+ | Accurate | Accuracy |
| | Attributable | Traceability |
| | Available | Availability |
| | Complete | Completeness |
| | Consistent | Consistency |
| | Enduring | Governance |
| | Legible | Understandability |
| | Original | Traceability |
| WHO | Completeness | Completeness |
| | Timeliness | Currentness |
| | Internal Consistency | Consistency |
| | Internal Consistency | Accuracy |
| | External Consistency | Consistency |
| | External Consistency | Accuracy |
| | Consistency of Population Data | Governance |
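Because the table is a many-to-many mapping from framework-specific dimensions to a common nomenclature, it can be treated as data so that cross-framework comparisons become mechanical. The Python sketch below (assuming Python 3.9 or later) is illustrative only: it encodes three framework groups copied from the table (ISO 8000, BCBS 239, and ALCOA+), and the names `MAPPINGS`, `common_coverage`, `shared_terms`, and `term_frequency` are hypothetical. It computes which common-nomenclature terms each framework covers and which terms all three share.

```python
from collections import Counter

# (framework dimension, common nomenclature) pairs copied from three
# framework groups in the table; the other groups are omitted for brevity.
MAPPINGS = {
    "ISO 8000": [
        ("Accuracy", "Accuracy"),
        ("Completeness", "Completeness"),
        ("Consistency", "Consistency"),
        ("Timeliness", "Currentness"),
        ("Uniqueness", "Usefulness"),
        ("Validity", "Credibility"),
    ],
    "BCBS 239": [
        ("Accuracy", "Accuracy"),
        ("Clarity and Usefulness", "Understandability"),
        ("Comprehensiveness", "Completeness"),
        ("Frequency", "Currentness"),
        ("Distribution", "Governance"),
    ],
    "ALCOA+": [
        ("Accurate", "Accuracy"),
        ("Attributable", "Traceability"),
        ("Available", "Availability"),
        ("Complete", "Completeness"),
        ("Consistent", "Consistency"),
        ("Enduring", "Governance"),
        ("Legible", "Understandability"),
        ("Original", "Traceability"),
    ],
}


def common_coverage(mappings: dict[str, list[tuple[str, str]]]) -> dict[str, set[str]]:
    """Set of common-nomenclature terms covered by each framework."""
    return {fw: {common for _, common in pairs} for fw, pairs in mappings.items()}


def shared_terms(coverage: dict[str, set[str]]) -> set[str]:
    """Common terms covered by every framework in `coverage`."""
    return set.intersection(*coverage.values())


def term_frequency(coverage: dict[str, set[str]]) -> Counter:
    """Number of frameworks that cover each common term."""
    return Counter(term for terms in coverage.values() for term in terms)


if __name__ == "__main__":
    coverage = common_coverage(MAPPINGS)
    print(sorted(shared_terms(coverage)))  # ['Accuracy', 'Completeness']
    for term, count in sorted(term_frequency(coverage).items()):
        print(f"{term}: {count}/{len(coverage)} frameworks")
```

Storing each framework as a list of pairs, rather than a dictionary keyed by dimension, accommodates both directions of the many-to-many mapping: one dimension mapped to several common terms (as with the ESS QAF "Accessibility and Clarity" rows) and several dimensions mapped to one term (as with ALCOA+ "Attributable" and "Original"). Extending the comparison to the remaining frameworks only requires adding their rows.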

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Miller, R.; Chan, S.H.M.; Whelan, H.; Gregório, J. A Comparison of Data Quality Frameworks: A Review. Big Data Cogn. Comput. 2025, 9, 93. https://doi.org/10.3390/bdcc9040093

Miller R, Chan SHM, Whelan H, Gregório J. A Comparison of Data Quality Frameworks: A Review. Big Data and Cognitive Computing. 2025; 9(4):93. https://doi.org/10.3390/bdcc9040093

Chicago/Turabian Style: Miller, Russell, Sai Hin Matthew Chan, Harvey Whelan, and João Gregório. 2025. "A Comparison of Data Quality Frameworks: A Review" Big Data and Cognitive Computing 9, no. 4: 93. https://doi.org/10.3390/bdcc9040093

APA Style: Miller, R., Chan, S. H. M., Whelan, H., & Gregório, J. (2025). A Comparison of Data Quality Frameworks: A Review. Big Data and Cognitive Computing, 9(4), 93. https://doi.org/10.3390/bdcc9040093