A Verifiable, Privacy-Preserving, and Poisoning Attack-Resilient Federated Learning Framework

Abstract

1. Introduction

- To eliminate the single point of exposure of the training data and ensure that all parties are equally blind to the complete training data, we exploit additive secret sharing with an oblivious transfer protocol by splitting the training data between the two servers. This minimizes the risk of exposing the training data, enhances protection against reconstruction attacks, and enables privacy preservation even if one server is compromised.

- To enhance robustness against poisoning attacks by malicious participants, we implement a weight selection and filtering strategy that identifies the coherent cores of benign updates and filters out boosted updates based on their L2 norm. The weight selection, filtering, and cross-validation across servers achieve a synergy that creates a resilient barrier against poisoning attacks.

- To reduce reliance on trusting a single party and ensure that all participant contributions are proportionally represented, we utilize our dual-server architecture to exploit the additive secret-sharing scheme. Specifically, this allows each server to provide proof of aggregation and enables the participants to verify the correctness of the aggregated results returned by the servers. This helps to foster a zero-trust architecture and maintain data integrity and confidentiality throughout the training process.

2. Related Studies

3. Preliminaries

- (1) FL System

- The server randomly selects participants for model training and distributes the global model for training.

- Participants selected for model training use their local data to train the model, compute their model weights, and individually send their updates to the servers for aggregation.

- The servers use the agreed protocol to collectively aggregate the updates to form a new global model without knowing the participants’ private data.

- The aggregator server sends the aggregated new global model to the participants for another round of training.
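The four steps above can be sketched as a single training loop. This is a minimal illustration under stated assumptions, not the framework's implementation: the 1-D linear model, the helper names `local_update` and `fl_round`, and the learning rate are all hypothetical, and the aggregation is a plain size-weighted average on one server, without secret sharing or poisoning defenses.

```python
import random

def local_update(global_w, data, lr=0.1):
    """Hypothetical local step: one SGD pass on a 1-D linear model y = w * x."""
    w = global_w
    for x, y in data:
        grad = 2 * (w * x - y) * x   # gradient of the squared error
        w -= lr * grad
    return w

def fl_round(global_w, datasets, fraction, rng):
    """Select participants, train locally, and average the returned weights."""
    k = max(1, int(fraction * len(datasets)))
    selected = rng.sample(range(len(datasets)), k)
    updates = [local_update(global_w, datasets[i]) for i in selected]
    sizes = [len(datasets[i]) for i in selected]
    # Weighted average by local dataset size; raw data never leaves a participant.
    return sum(w * n for w, n in zip(updates, sizes)) / sum(sizes)

rng = random.Random(0)
# Three participants whose private data all follow y = 3x (toy data).
datasets = [[(x, 3 * x) for x in (0.5, 1.0)] for _ in range(3)]
w = 0.0
for _ in range(20):
    w = fl_round(w, datasets, fraction=1.0, rng=rng)
```

After 20 rounds the global weight `w` is close to the true slope of 3, even though only model weights were exchanged.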

- (A) Local training dataset: Within an FL process, assume that there are M participants and that each participant holds a local training dataset.

- (B) Global dataset (D): This is the union of the local training datasets of all M participants in an FL setting.

- (C) Neural Network (NN) and Loss Function: For an NN function f(U, N) with a model input and trainable parameters (weights and biases), the loss function can be defined as a Mean Squared Error (MSE).
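For reference, the three definitions above can be written out in standard notation. The symbols $D_i$, $n_i$, $x$, $y$, and $\theta$ are assumptions introduced here for illustration and may differ from the notation used elsewhere in this paper.

```latex
% Local dataset of participant i, global dataset, and MSE loss (assumed notation)
D_i = \{(x_j^{(i)}, y_j^{(i)})\}_{j=1}^{n_i}, \qquad
D = \bigcup_{i=1}^{M} D_i, \qquad
\mathcal{L}(\theta) = \frac{1}{|D|} \sum_{(x, y) \in D} \bigl(y - f(x; \theta)\bigr)^2
```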

- (2) Proof of Aggregation (PoA) [51]

- (3) Zero-Trust Architecture

4. System Architecture

- A. Description of components

- B. Threat Model

- The two servers do not collude with each other and follow the agreed protocol, but each may independently try to infer the participants' private data.

- Malicious participants launch poisoning attacks that corrupt the global model through malicious local updates. We consider a strong threat model in which 10% to 40% of the participants in the FL process are malicious. These participants may launch poisoning attacks such as label flipping, sign flipping, gradient manipulation, backdoor, and backdoor embedding attacks.

- Any server may modify the aggregated results or forge the proof of the aggregated results to deceive other entities within the framework.

- C. Design Goal

- Privacy: The scheme should prevent an attacker or a malicious server from compromising the privacy of the exchanged gradients and should further ensure that the information used to detect poisoning attacks leaks no private data.

- Fidelity: The robustness mechanisms of the framework should not compromise the accuracy of the global model. As a baseline, we use FedAvg [57], a standard aggregation algorithm with neither poisoning-attack defenses nor privacy preservation. The framework should therefore achieve a level of accuracy comparable to FedAvg when no attack is present.

- Robustness: The framework should prevent malicious participants from poisoning the global model, that is, the global model should remain resilient to poisoning attacks launched by malicious participants.

- Efficiency: The framework should minimize computation and communication overheads so that participants with limited computational resources can still preserve their privacy.

- D. System Interpretation

5. Secret Sharing Schemes

5.1. Secret Sharing for Privacy Preservation

- Knowledge of the threshold number of shares or more permits the computation of the original confidential secret. That is, complete reconstruction of the secret requires a combination of at least the threshold number of shares.

- Knowledge of fewer shares than the threshold leaves the secret completely undetermined. That is, the potential value of the secret remains as unknown with any number of shares below the threshold as it would be with zero shares. If the threshold equals the total number of shares, then all shares are required to reconstruct the secret.
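The two threshold properties above can be illustrated with a small sketch of Shamir's secret sharing over a prime field. The names `t` (threshold) and `n` (total shares), the field size, and the helper functions are assumptions made for this example. Python's `random` module is used only to keep the example reproducible and is not suitable for real cryptographic use.

```python
import random

PRIME = 2**61 - 1  # a Mersenne prime; the field size is an illustrative choice

def make_shares(secret, t, n, rng):
    """Split `secret` into n shares; any t of them reconstruct it."""
    # Random polynomial of degree t - 1 whose constant term is the secret.
    coeffs = [secret] + [rng.randrange(PRIME) for _ in range(t - 1)]
    shares = []
    for x in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # Horner evaluation of the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares

def reconstruct(shares):
    """Lagrange interpolation at x = 0 over the prime field."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret

rng = random.Random(42)
shares = make_shares(123456789, t=3, n=5, rng=rng)
recovered = reconstruct(shares[:3])  # any 3 of the 5 shares suffice
```

Any subset of at least `t` shares interpolates the same polynomial and therefore yields the same secret, while fewer than `t` shares are consistent with every possible secret.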

5.2. Mathematical Formulation of SSS

5.3. Additive Secret Sharing (ASS)

| Algorithm 1: Additive Secret Sharing |

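| Input: a secret value (here, a participant's weight W). (1) Split the secret into L = 2 shares such that the shares sum to the secret. (2) Apply oblivious transfer. (3) Send one share to Sa and the other share to Sb. Addition: given two secret values, shares of their sum can be computed locally, with each server setting its share of the sum to the sum of the two shares it holds. |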
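
The splitting and local-addition steps of Algorithm 1 can be sketched as follows. The ring size, the fixed-point scale, and the function names are assumptions chosen for illustration, and the oblivious transfer step is omitted.

```python
import random

MODULUS = 2**32  # ring size; an illustrative assumption
SCALE = 10**4    # fixed-point scale for encoding real-valued weights

def encode(value):
    """Encode a real value as a ring element in fixed-point form."""
    return round(value * SCALE) % MODULUS

def decode(element):
    """Map a ring element back to a signed fixed-point value."""
    if element >= MODULUS // 2:
        element -= MODULUS
    return element / SCALE

def split(value, rng):
    """Split one weight into two additive shares (L = 2)."""
    share_a = rng.randrange(MODULUS)
    share_b = (encode(value) - share_a) % MODULUS
    return share_a, share_b

def add_shares(x_share, y_share):
    """Each server adds the shares it holds; no communication is needed."""
    return (x_share + y_share) % MODULUS

rng = random.Random(7)
x_a, x_b = split(0.25, rng)
y_a, y_b = split(-1.5, rng)
sum_a = add_shares(x_a, y_a)  # computed locally by Sa
sum_b = add_shares(x_b, y_b)  # computed locally by Sb
total = decode((sum_a + sum_b) % MODULUS)
```

Each share on its own is a uniformly random ring element, so a single server learns nothing about the weight. Only the recombined value `total` reveals the sum, here -1.25.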

5.4. Oblivious Transfer (OT)

| Algorithm 2: Dual Server Secure Aggregation and PoA Protocol |
|---|
| Procedure |
| for each training round k do: |
| (I) Participants |
| for each participant Pi ∈ Pn do: |
| (a) Split LD between Pi ∈ Pn using SSS |
| (b) Train WG using LD |
| // Weight splitting |
| (c) Split each participant's weight W into two additive shares using ASS, such that the two shares sum to W |
| // OT protocol |
| (d) Use the OT protocol to send one share to Sa and the other share to Sb; Sa holds its share of the weight but has no knowledge of the share held by Sb, and Sb holds its share but has no knowledge of the share held by Sa |
| end |
| // Poisoning attack detection |
| (II) Sa and Sb |
| for Sa do: (a) compute its intermediate result from the shares it holds; (b) send the result to Sb; end |
| for Sb do: (a) compute its intermediate result from the shares it holds; (b) send the result to Sa; end |
| // Both servers recover weight |
| Sa and Sb exchange their computations: Sb sends its computation to Sa, and Sa sends its computation to Sb |
| // Distance (D) computation on both servers |
| for all participants do: Sa and Sb each compute the distance D; end |
| // Determination of median (M) |
| Sa and Sb obtain the median M of the distances |
| for all participants do: Sa and Sb compute the similarity with respect to M; end |
| // Participants N with the smallest similarity are selected |
| // Secure aggregation and PoA |
| Sa computes its partial aggregate; Sb computes its partial aggregate |
| Sa sends its partial aggregate to Sb and SP; Sb sends its partial aggregate to Sa and SP |
| Sa and Sb compute the aggregated result |
| Sa updates the global model and sends W to Sb and W with its proof to SP for PoA |
| Sb updates its global model and sends W to Sa and W with its proof to SP for PoA |
| if the verification fails: reject PoA; else: accept PoA; end |
| return aggregated final model W |
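
The aggregation and verification phase of Algorithm 2 can be sketched for two servers as follows. This is a simplified illustration under stated assumptions, not the protocol itself: the function names, the ring size, and the fixed-point scale are hypothetical, the oblivious transfer and poisoning-detection steps are omitted, and `verify` stands in for the SP-side PoA check by simply recomputing the model from the partial sums.

```python
import random

MODULUS = 2**32  # ring size; an illustrative assumption
SCALE = 10**4    # fixed-point scale; an illustrative assumption

def encode(v):
    return round(v * SCALE) % MODULUS

def decode(e):
    return (e - MODULUS if e >= MODULUS // 2 else e) / SCALE

def split_vector(weights, rng):
    """Participant side: split each coordinate into two additive shares."""
    a = [rng.randrange(MODULUS) for _ in weights]
    b = [(encode(w) - s) % MODULUS for w, s in zip(weights, a)]
    return a, b

def partial_sum(share_vectors):
    """Server side: sum the shares received from all participants."""
    return [sum(col) % MODULUS for col in zip(*share_vectors)]

def combine(partial_a, partial_b, count):
    """Recombine both partial sums and average over the participants."""
    return [decode((pa + pb) % MODULUS) / count
            for pa, pb in zip(partial_a, partial_b)]

def verify(partial_a, partial_b, claimed_a, claimed_b, count):
    """Hypothetical SP-side check: both claimed models must match the
    model recomputed from the published partial sums."""
    expected = combine(partial_a, partial_b, count)
    return claimed_a == expected and claimed_b == expected

rng = random.Random(1)
updates = [[0.5, -1.0], [1.5, 2.0], [1.0, 2.0]]
shares = [split_vector(u, rng) for u in updates]
pa = partial_sum([a for a, _ in shares])  # held by Sa
pb = partial_sum([b for _, b in shares])  # held by Sb
model_a = combine(pa, pb, len(updates))   # result reported by Sa
model_b = combine(pa, pb, len(updates))   # result reported by Sb
accepted = verify(pa, pb, model_a, model_b, len(updates))
tampered = verify(pa, pb, [9.9, 9.9], model_b, len(updates))
```

In this sketch neither server sees an individual update in the clear, and a server that reports a modified model (the `tampered` case) fails the check.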

5.5. Poisoning Attack Detection

- i. Central Tendency Calculation

- ii. Euclidean Distance Calculation

- iii. Malicious Threshold

- iv. Malicious Update Detection

- (1) Classification: Classify U as a poisoned update if a significant proportion of its K nearest weights are outliers.
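The detection steps listed above (central tendency, Euclidean distance, threshold, and flagging) can be sketched on plaintext updates as follows. The coordinate-wise median as the center and the threshold rule, a fixed multiple of the median distance, are assumptions made for this example. The K-nearest-weights classification step is not reproduced here.

```python
import math
from statistics import median

def coordinate_median(updates):
    """Central tendency: the median of each coordinate across all updates."""
    return [median(col) for col in zip(*updates)]

def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def flag_outliers(updates, factor=3.0):
    """Flag updates whose distance to the coordinate-wise median exceeds
    `factor` times the median distance (this rule is an assumption)."""
    center = coordinate_median(updates)
    distances = [euclidean(u, center) for u in updates]
    threshold = factor * median(distances)
    return [d > threshold for d in distances]

updates = [
    [1.0, 1.0], [1.1, 0.9], [0.9, 1.1], [1.0, 1.2],
    [10.0, -10.0],  # a boosted, poisoned update
]
flags = flag_outliers(updates)
```

Because the median is insensitive to a minority of extreme values, the boosted update does not shift the center and is the only one flagged.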

5.6. Application in Federated Learning

6. Security Analysis

7. Evaluation

- A. Implementation

- B. Experimental Setup

- (1) MNIST dataset: The MNIST dataset is among the most popular datasets used in ML and computer vision. As a standardized dataset, it consists of grayscale images of handwritten digits 0–9, each sized 28 × 28 pixels. It has training and test sets of 60,000 and 10,000 images, respectively. We utilize CNN and MLP models to experiment with the IID and non-IID datasets.

- CIFAR-10 dataset: The CIFAR-10 dataset consists of 32 × 32-pixel color images of objects in 10 different classes, with a training set of 50,000 images and a test set of 10,000 images. Each image has a total of 3072 values (32 × 32 × 3), and the object class is represented by an integer label from 0 to 9. We also use CNN and MLP models.

- (2) Data Splits: We experimented with both IID and non-IID partitions of the data across participants. For IID, the MNIST and CIFAR-10 samples were shuffled and divided evenly among the participants. For non-IID, the data distribution is based on class, where each participant may possess data from only a few classes. To create class-specific data distributions, we utilized a stratified sampling method based on class labels.
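The two partitioning schemes can be sketched as follows. The function names and the round-robin assignment of classes to participants are assumptions made for illustration; the exact sampling procedure used in the experiments may differ.

```python
import random
from collections import defaultdict

def iid_split(labels, num_participants, rng):
    """Shuffle all sample indices and deal them out evenly."""
    indices = list(range(len(labels)))
    rng.shuffle(indices)
    return [indices[p::num_participants] for p in range(num_participants)]

def non_iid_split(labels, num_participants, classes_per_participant):
    """Give each participant samples drawn from only a few classes."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    classes = sorted(by_class)
    # Assign classes round-robin so each participant sees only a few of them.
    assigned = [
        [classes[(p * classes_per_participant + k) % len(classes)]
         for k in range(classes_per_participant)]
        for p in range(num_participants)
    ]
    holders = defaultdict(list)
    for p, chosen in enumerate(assigned):
        for c in chosen:
            holders[c].append(p)
    parts = [[] for _ in range(num_participants)]
    # Participants that share a class each take a disjoint slice of it.
    for c, owners in holders.items():
        for rank, p in enumerate(owners):
            parts[p].extend(by_class[c][rank::len(owners)])
    return parts

labels = [i % 10 for i in range(1000)]  # toy labels for 10 classes
iid = iid_split(labels, 5, random.Random(0))
non_iid = non_iid_split(labels, 5, classes_per_participant=2)
```

With these toy labels, every IID participant receives 200 samples spread over all classes, while every non-IID participant receives samples from exactly two classes.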

- (3) Baselines: As benchmarks, we utilized a standard aggregation rule, a poisoning attack detection scheme, an SMC protocol with a filtering and clipping method, and a secret-sharing secure aggregation scheme resilient to poisoning attacks.

- FedAvg [57]: The standard aggregation algorithm used in FL, with neither poisoning-attack defenses nor any privacy preservation method.

- Krum [72]: A poisoning attack detection method in FL that selects, from the received updates, the single update with the smallest sum of squared Euclidean distances to its nearest neighboring updates.

- EPPRFL [24]: A poisoning-attack-resistant, privacy-preserving scheme that utilizes SMC protocols for filtering and clipping poisoned updates.

- LSFL [25]: An ASS scheme for privacy preservation combined with a K-nearest weight selection aggregation method that is robust to Byzantine participants.
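To make the Krum baseline concrete, the selection rule of [72] can be sketched as follows: each update is scored by the sum of squared distances to its n − f − 2 closest neighbors, where n is the number of updates and f the assumed number of Byzantine participants, and the lowest-scoring update is selected. The function names and the toy data are illustrative.

```python
def squared_distance(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))

def krum(updates, num_byzantine):
    """Return the index of the update with the lowest Krum score."""
    n = len(updates)
    neighbours = n - num_byzantine - 2
    if neighbours < 1:
        raise ValueError("Krum needs n - f - 2 >= 1")
    scores = []
    for i, u in enumerate(updates):
        # Squared distances from update i to every other update, ascending.
        dists = sorted(squared_distance(u, v)
                       for j, v in enumerate(updates) if j != i)
        scores.append(sum(dists[:neighbours]))
    return min(range(n), key=scores.__getitem__)

updates = [
    [0.0, 0.0], [0.1, 0.0], [0.0, 0.2], [0.3, 0.3],
    [5.0, 5.0],  # an outlying, possibly poisoned update
]
chosen = krum(updates, num_byzantine=1)
```

The outlying update has a large score because all of its neighbors are far away, so it is never selected in this example.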

- (4) Hyper-Parameters: We tested our framework in the following cases: MNIST CNN IID, MNIST CNN non-IID, MNIST MLP IID, MNIST MLP non-IID, CIFAR CNN IID, CIFAR CNN non-IID, CIFAR MLP IID, and CIFAR MLP non-IID. For local training, the learning rate was set to 0.0001, the number of epochs to 5, and the batch size to 32, while the server learning rate was set to 0.5. In each round of FL training, at least 70% of the participants N were selected for model training.

- (5) Poisoning Attack: We considered the following poisoning attacks: sign flipping, label flipping, backdoor, backdoor embedding, and gradient manipulation. We carried out experiments to demonstrate the resilience and robustness of our framework to these attacks and compared it with state-of-the-art (SOTA) schemes.
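Three of the attacks named above can be sketched in a few lines. These are generic textbook forms given under stated assumptions; the label mapping, the boosting factor, and the function names are illustrative and are not the attack configurations used in the experiments.

```python
def flip_labels(labels, num_classes=10):
    """Label flipping: map class c to num_classes - 1 - c (assumed mapping)."""
    return [num_classes - 1 - c for c in labels]

def flip_sign(update):
    """Sign flipping: send the negated update."""
    return [-w for w in update]

def boost(update, factor):
    """Scale an update so that it dominates a plain average."""
    return [factor * w for w in update]

poisoned_labels = flip_labels([0, 3, 9])
poisoned_update = boost(flip_sign([0.5, -0.25]), factor=4.0)
```

Label flipping corrupts the local data before training, whereas sign flipping and boosting act directly on the update that a malicious participant submits.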

- C. Experimental Results

- (1)

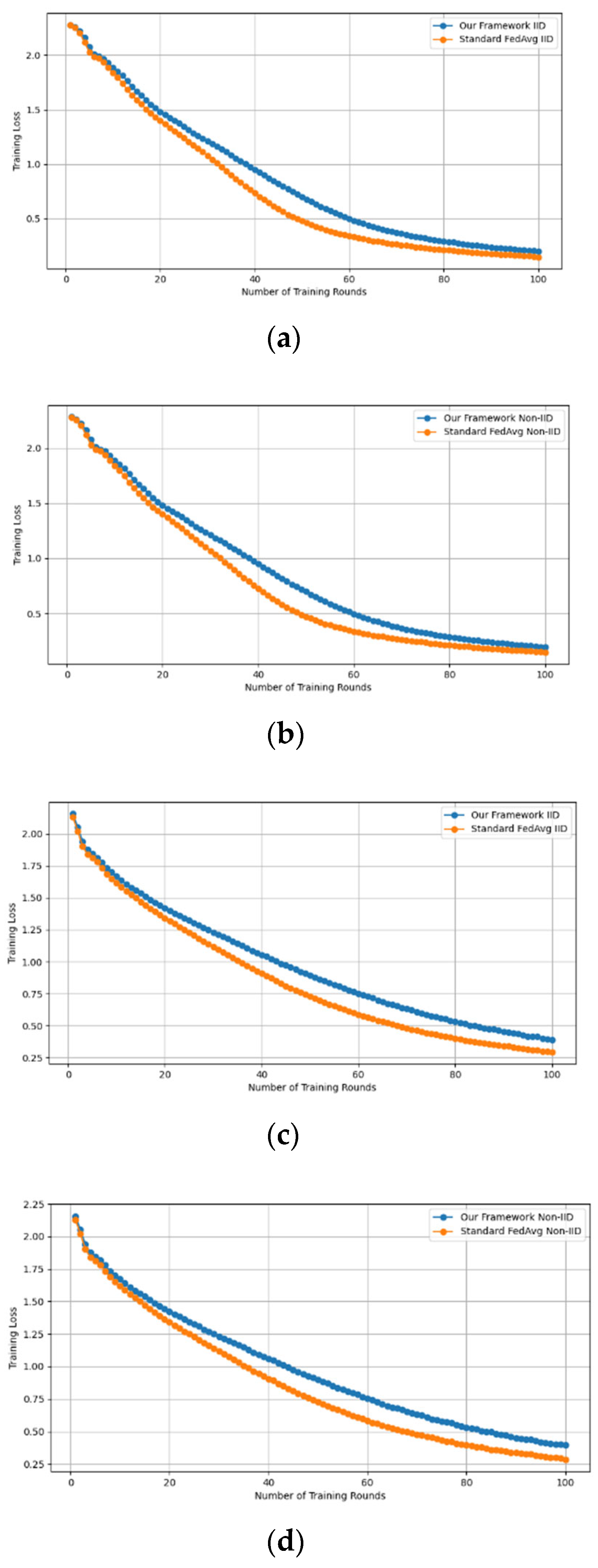

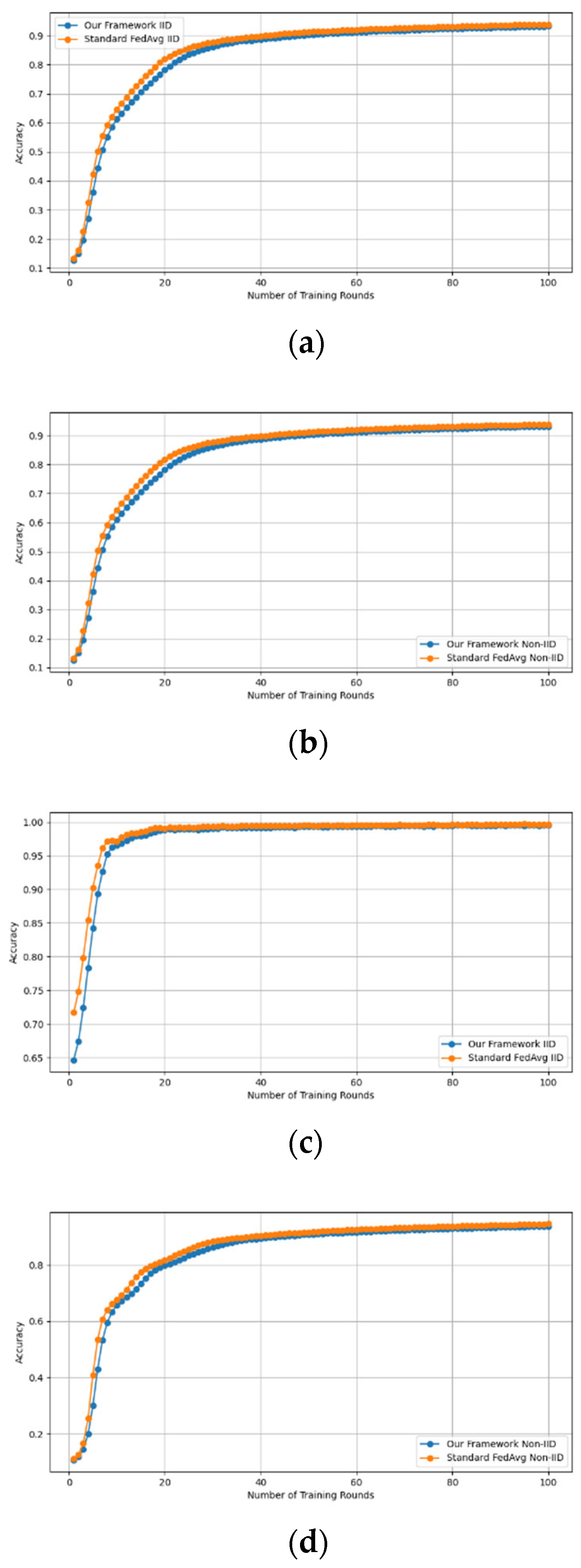

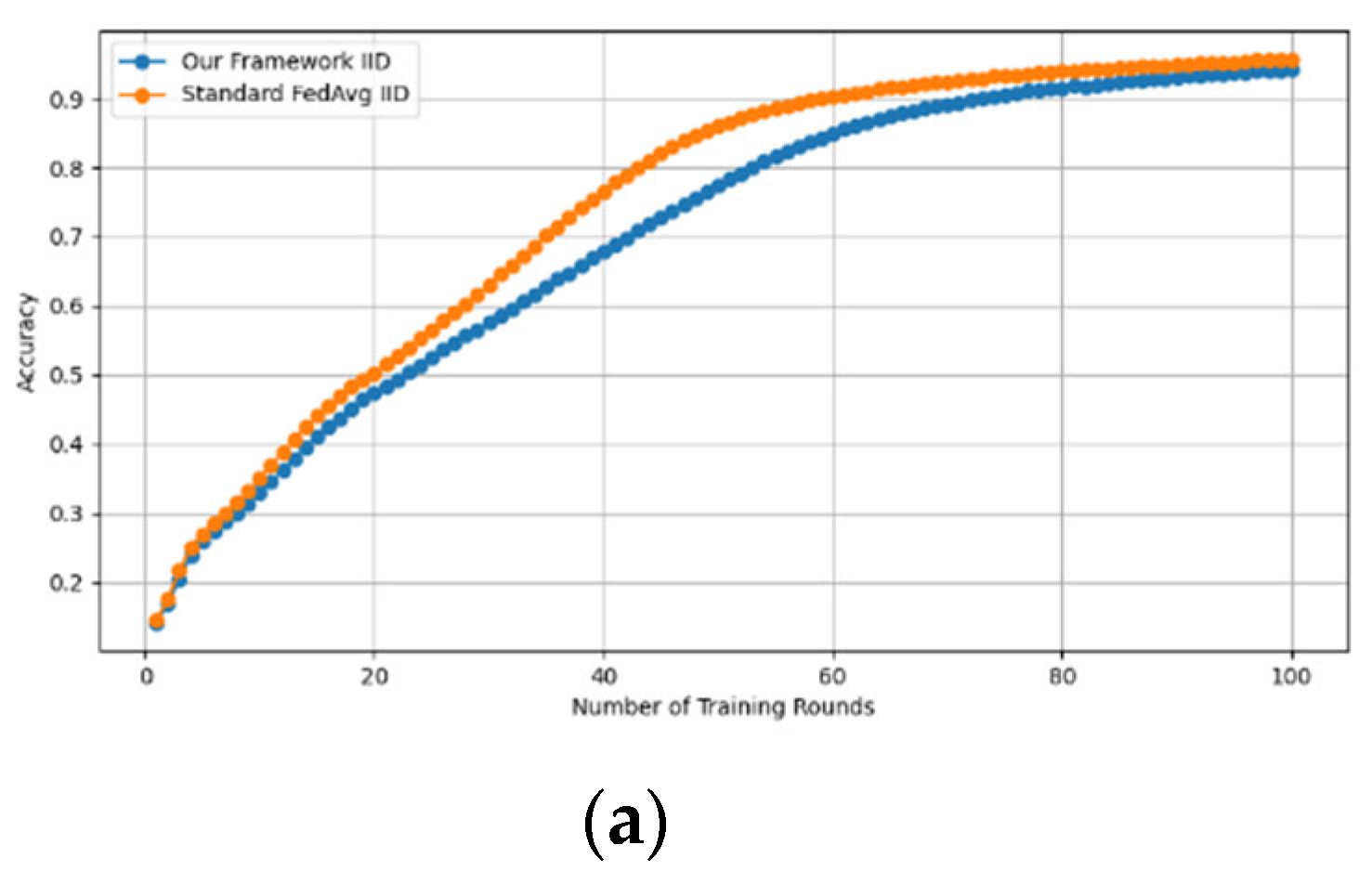

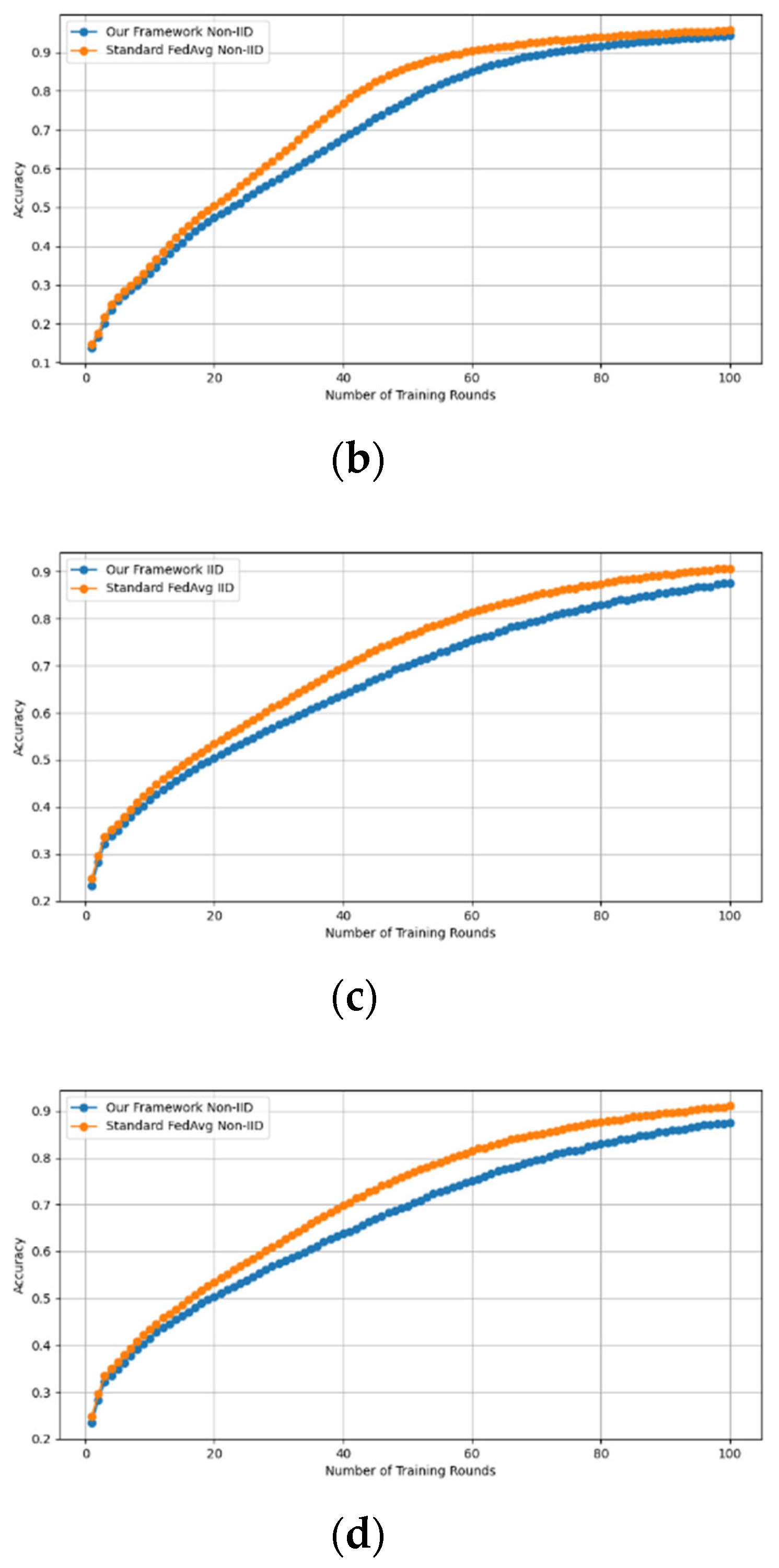

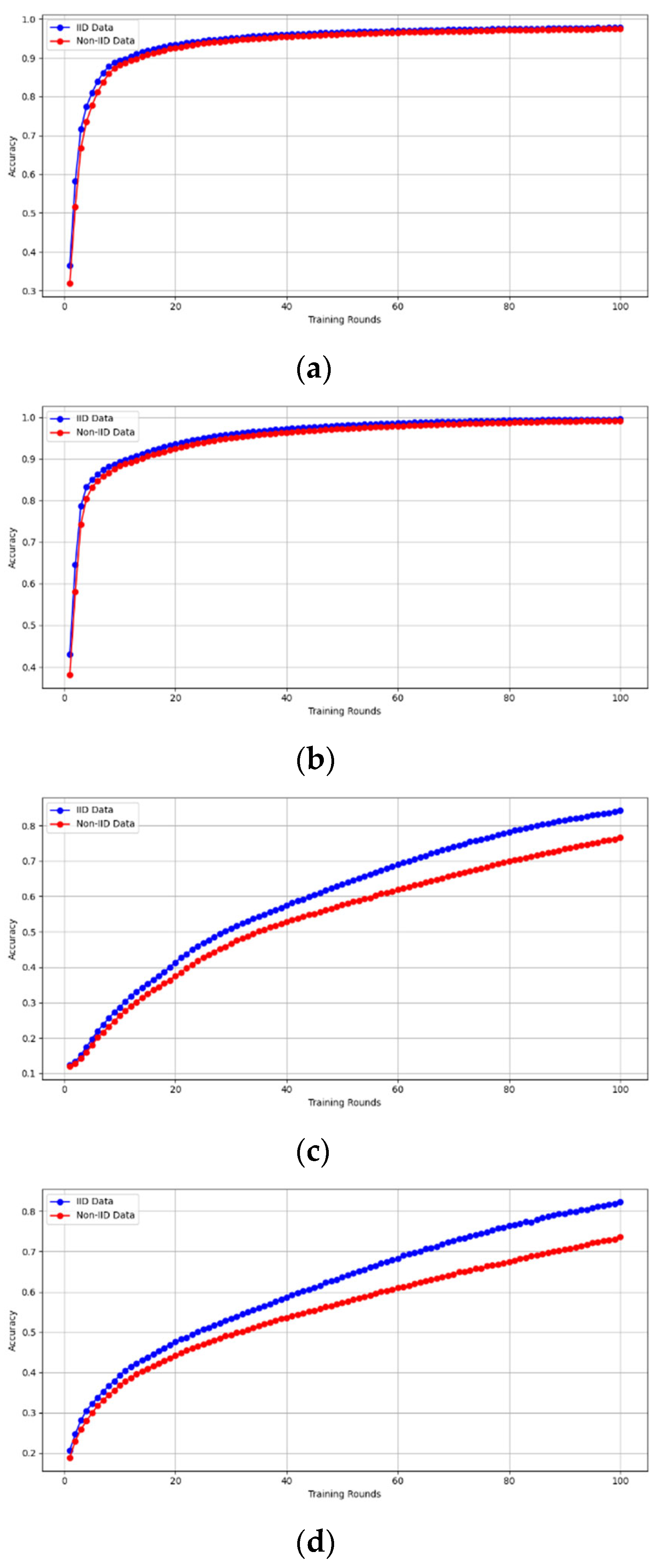

- Fidelity Evaluation: We compared FedAvg and our framework in terms of accuracy and loss to evaluate the fidelity goal when there are no poisoning attackers. In this experiment, the number of participants and communication rounds was set to 100, respectively, with the evaluation carried out using the MNIST and CIFAR-10 datasets over the CNN and MLP models in both IID and non-IID settings. The convergence of our framework and the FedAvg are shown in Figure 2 and Figure 3, using the MNIST and CIFAR-10 datasets over the CNN and MLP models in both IID and non-IID settings, respectively. The two figures illustrate the comparable convergence performance of our framework and a standard FedAvg in terms of training loss and the number of training rounds using both the MNIST and CIFAR-10 datasets over the CNN and MLP models in IID and non-IID settings. In terms of convergence, our framework has no impact on the model’s performance and, as observed, it yields approximately the same result as a standard FedAVG without any privacy-preserving mechanisms or poisoning attack-resilient methods. The results show that our framework does not impact model performance but performs equally well as a standard FedAvg offering additional benefits such as stability and robustness.

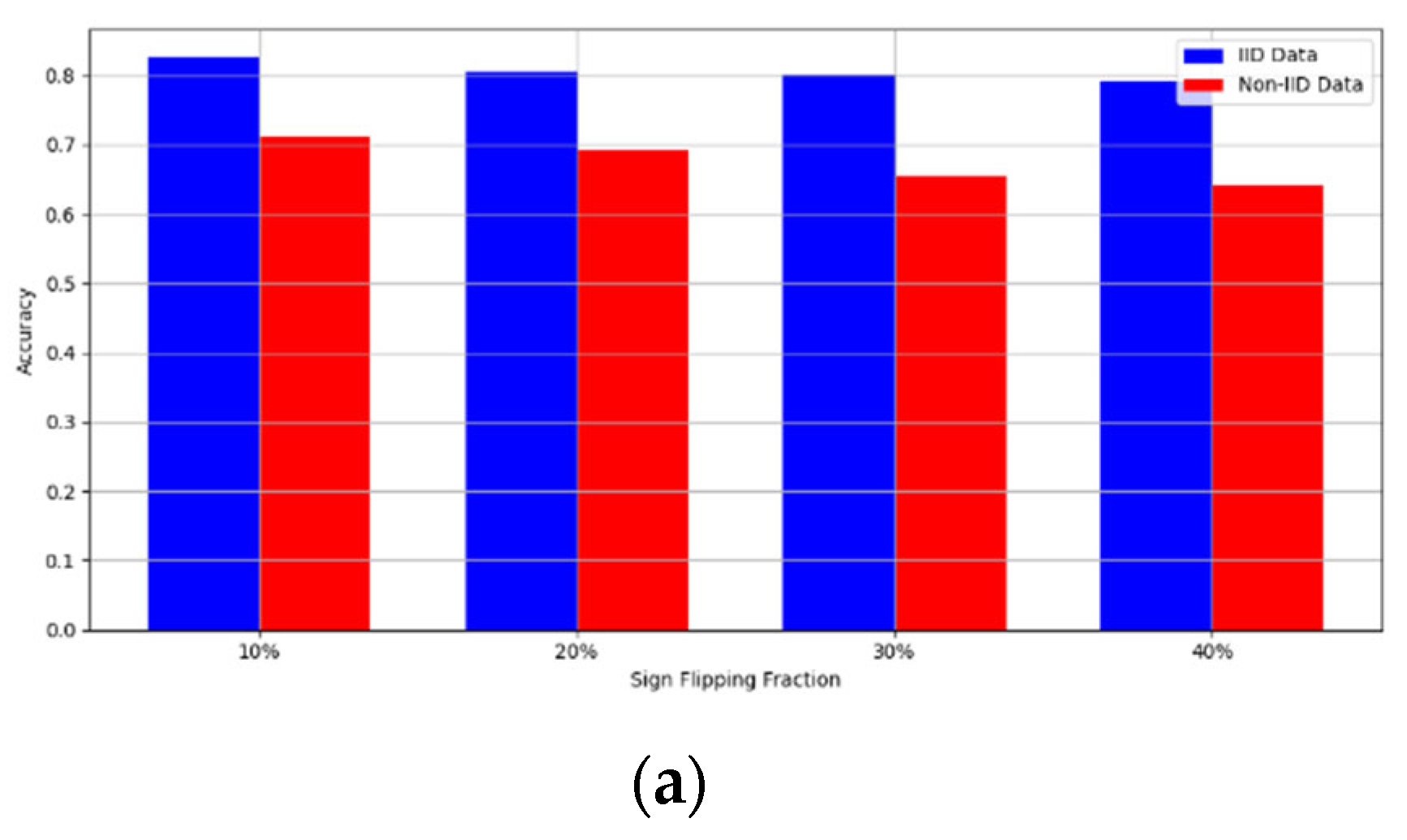

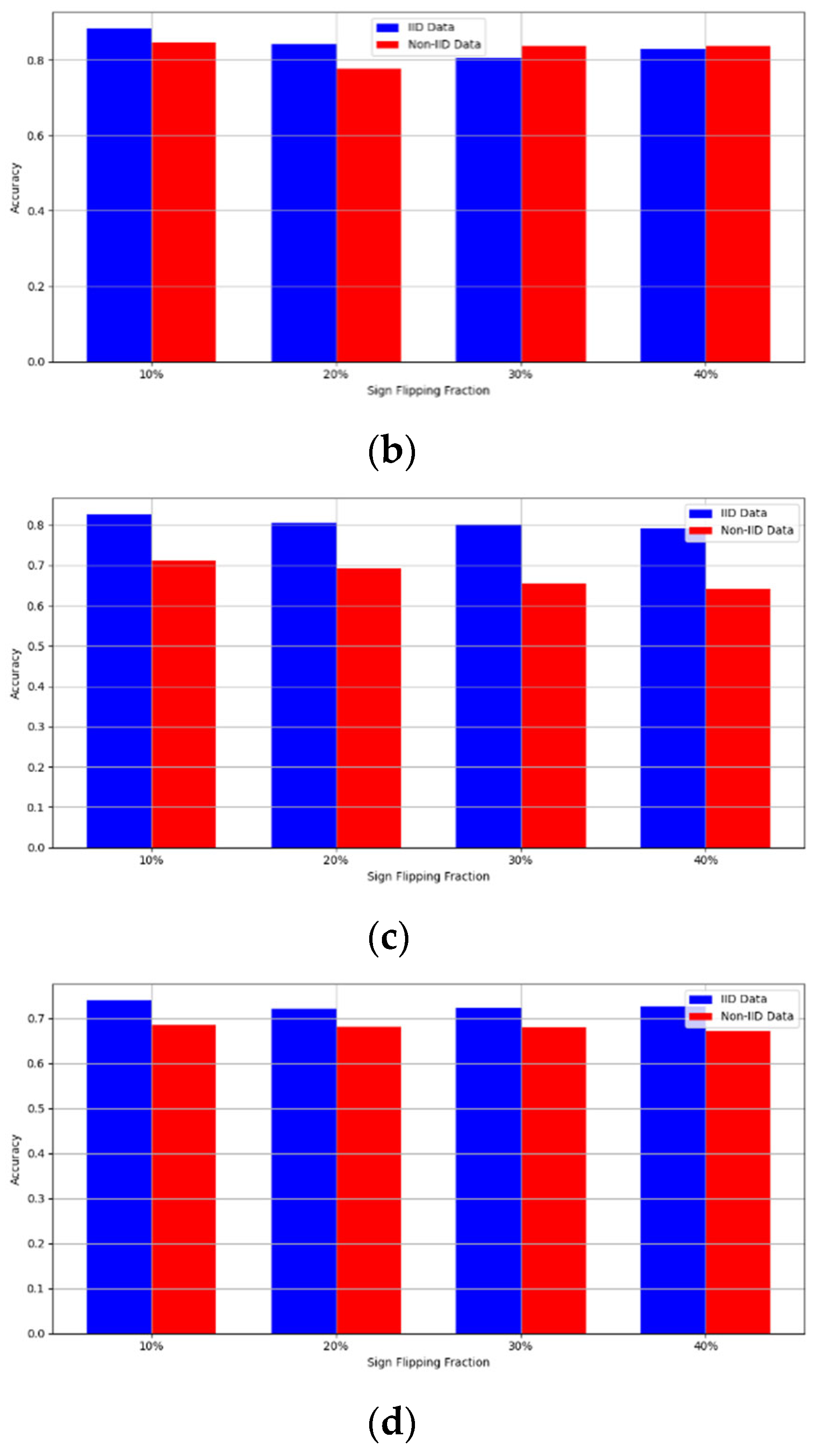

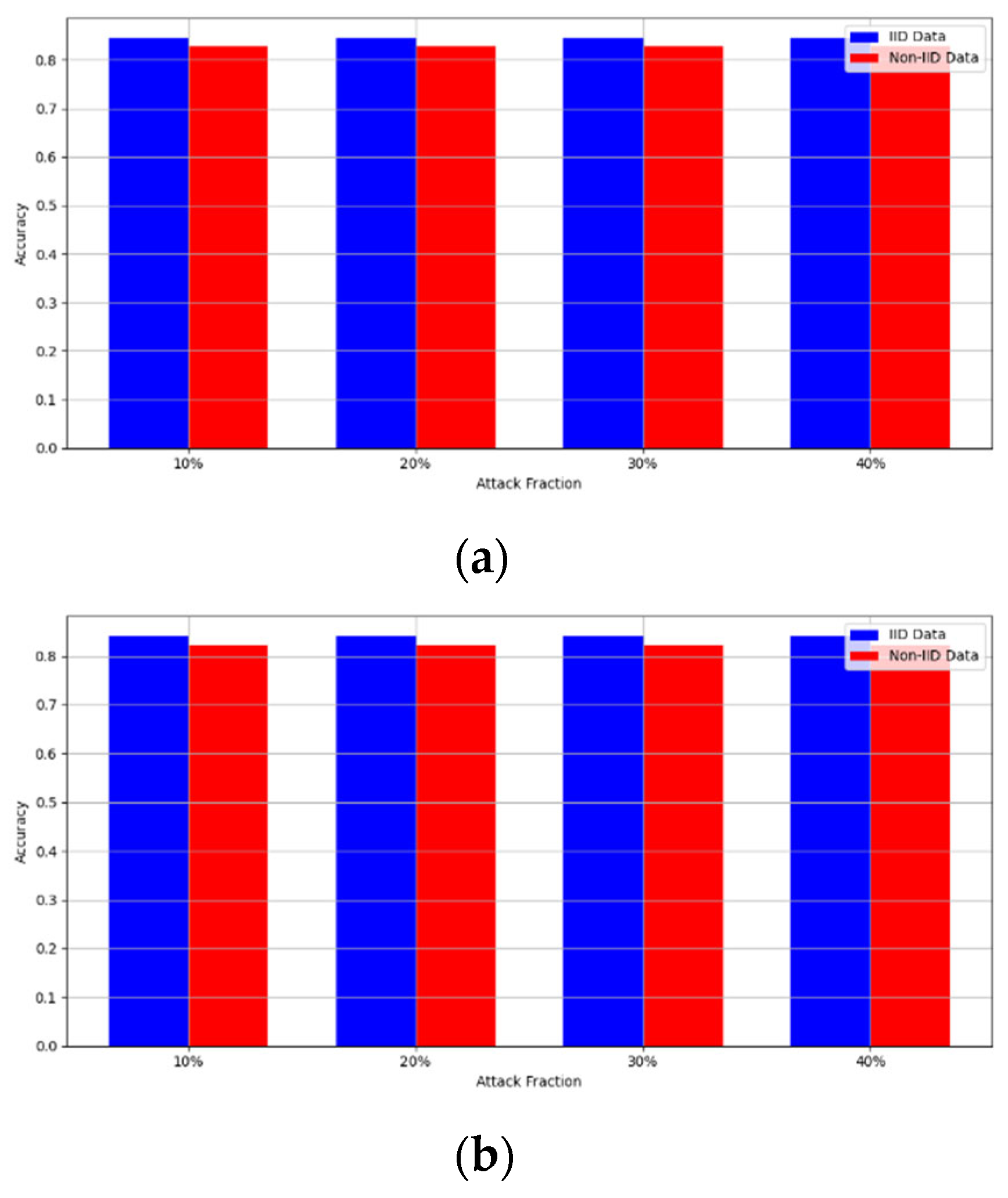

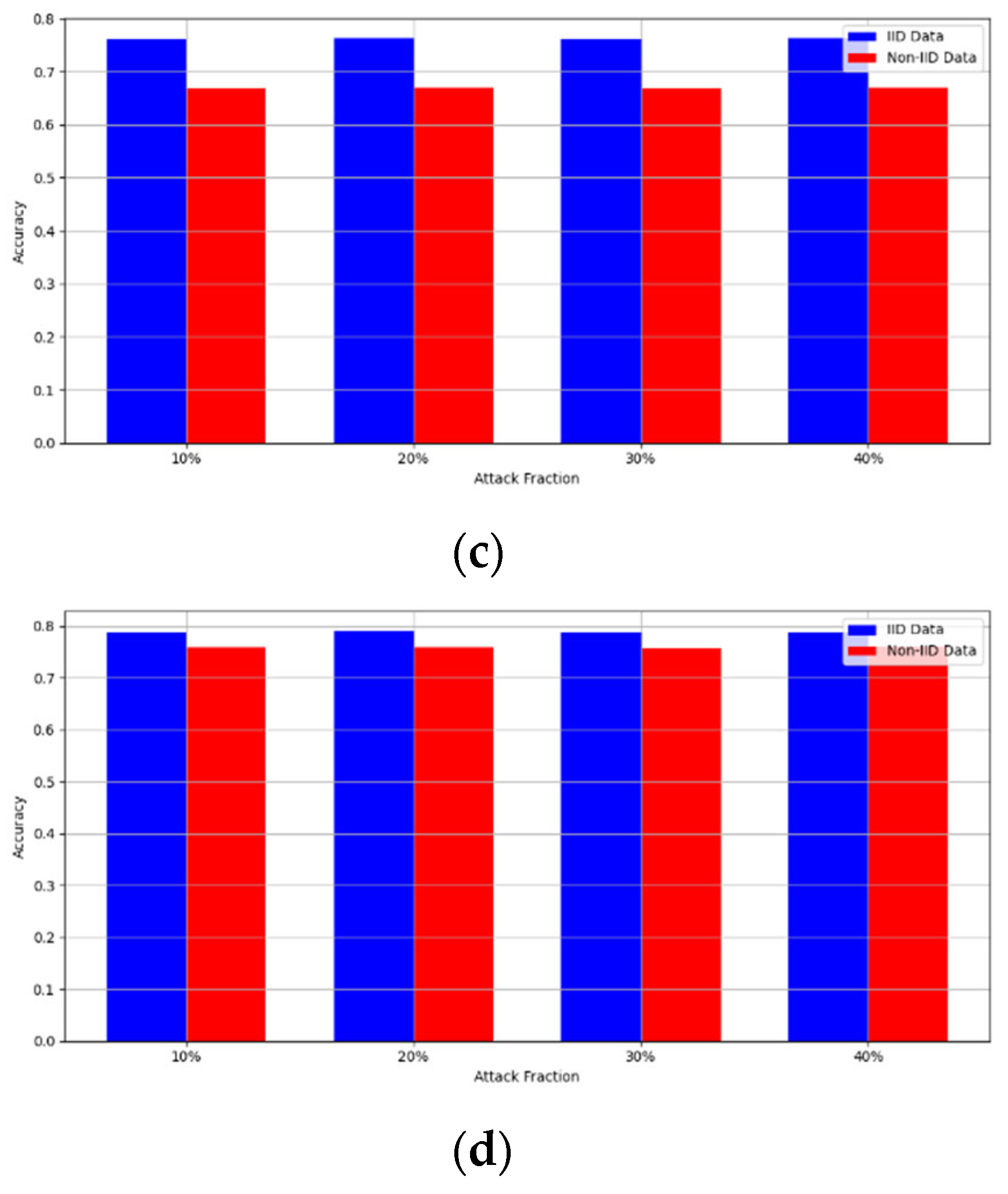

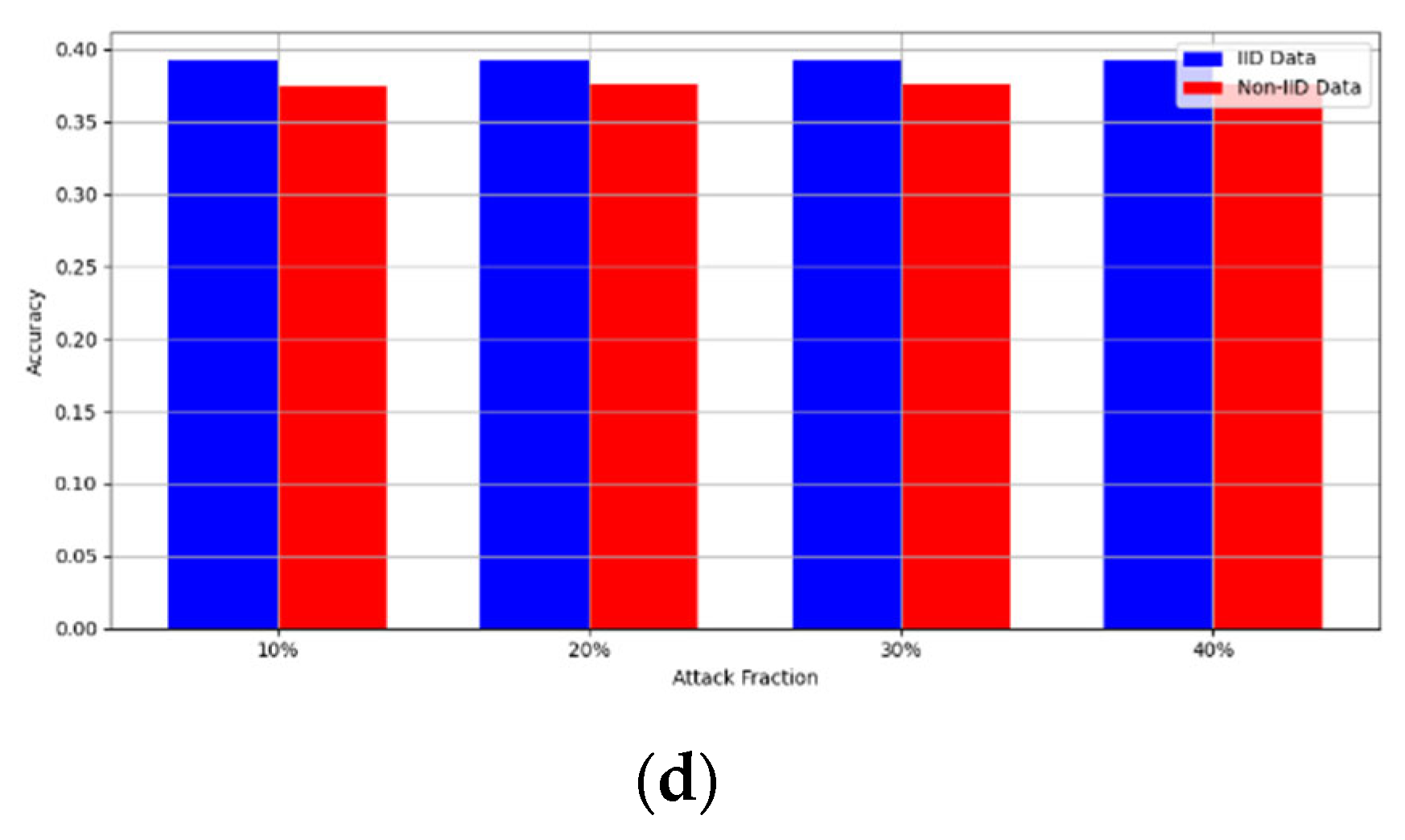

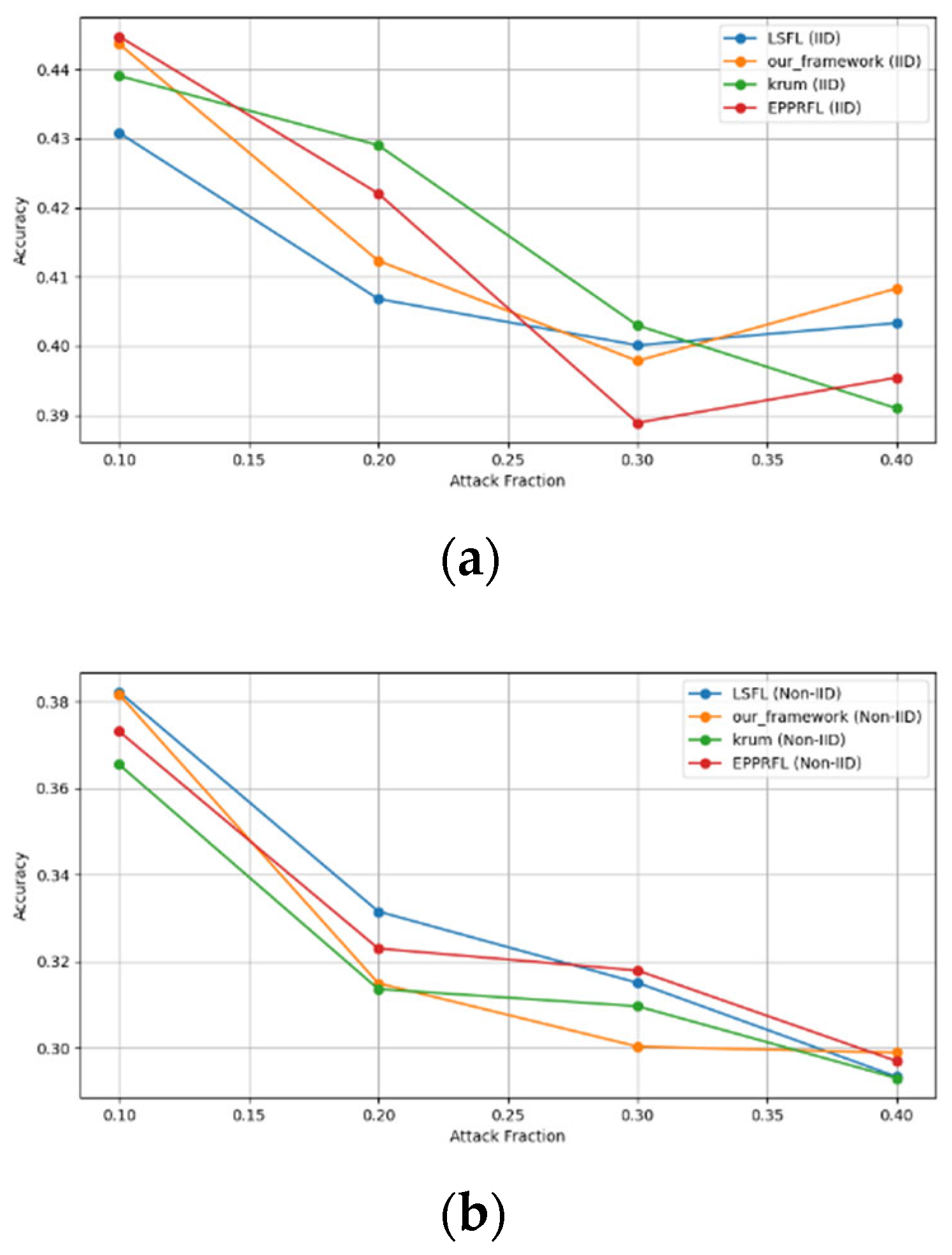

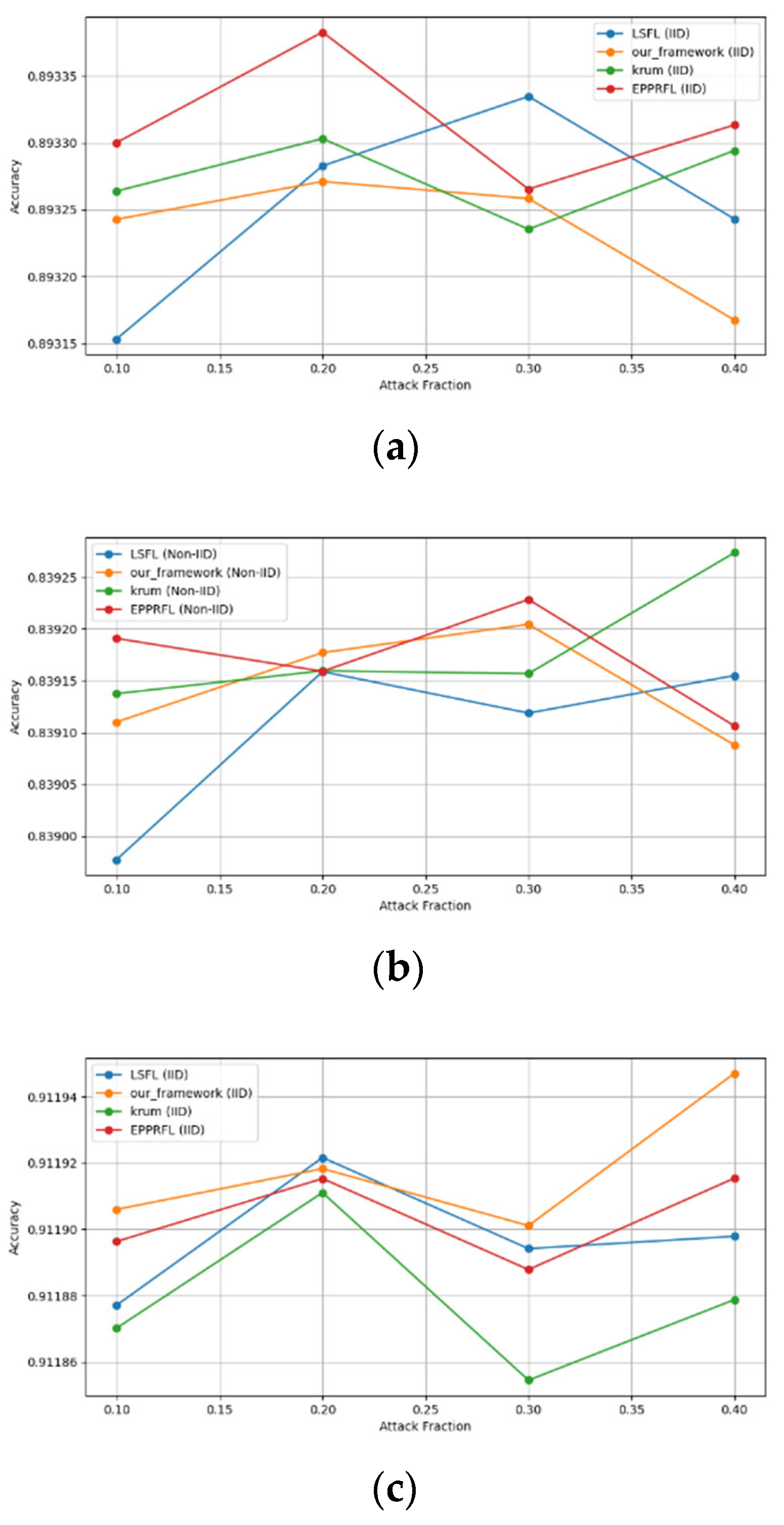

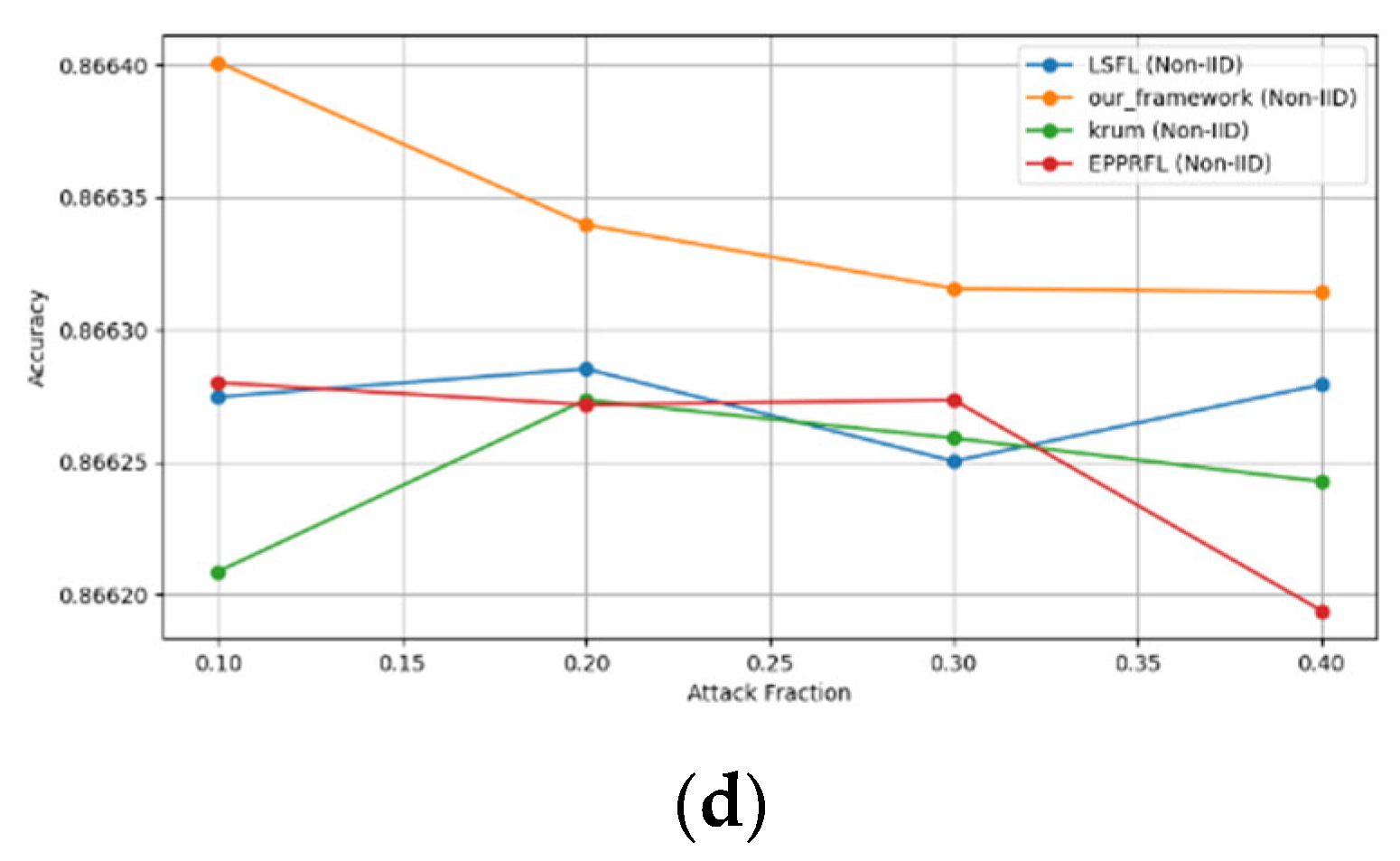

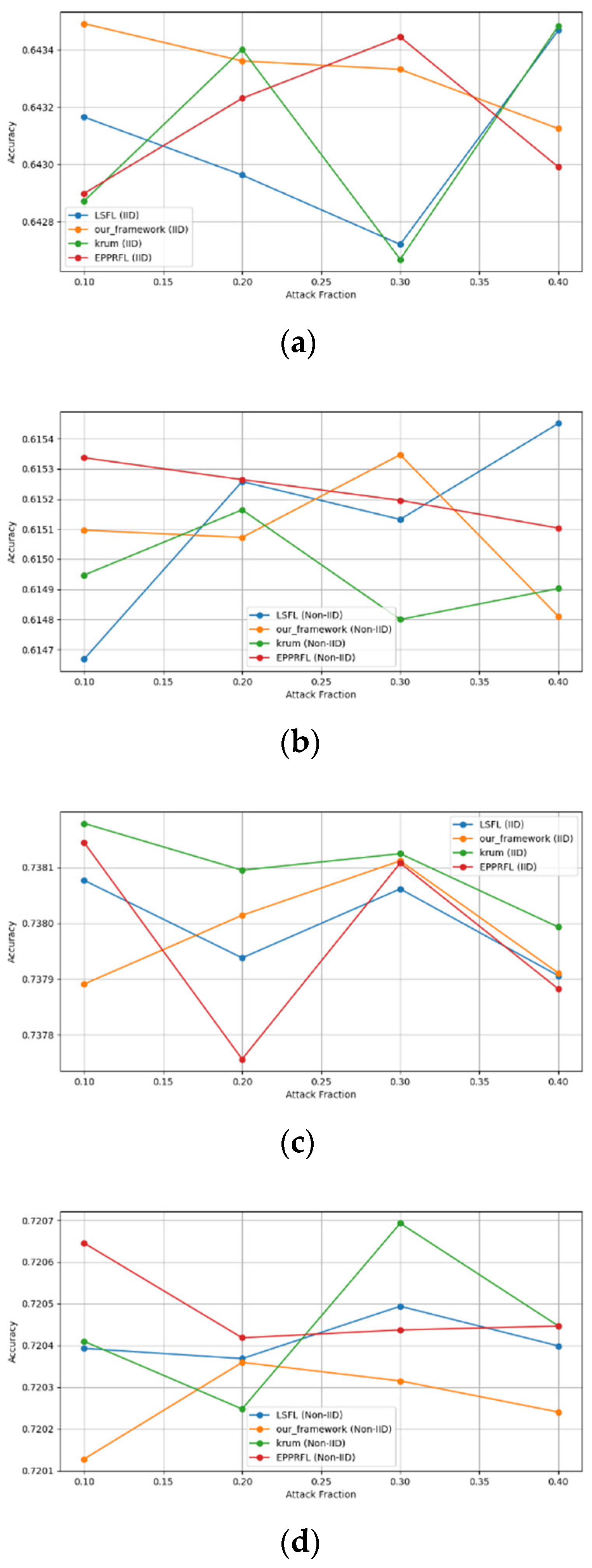

- (2)

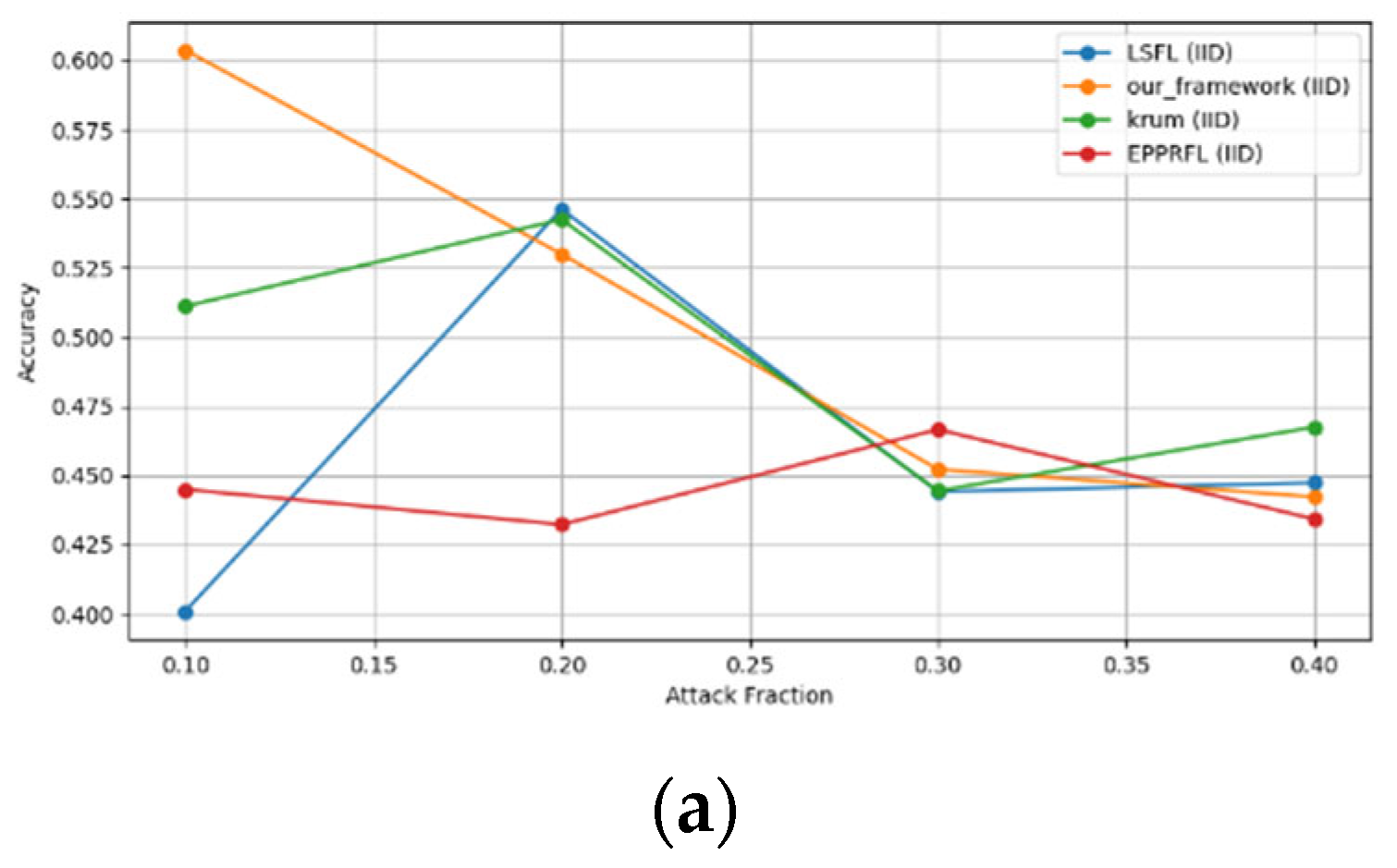

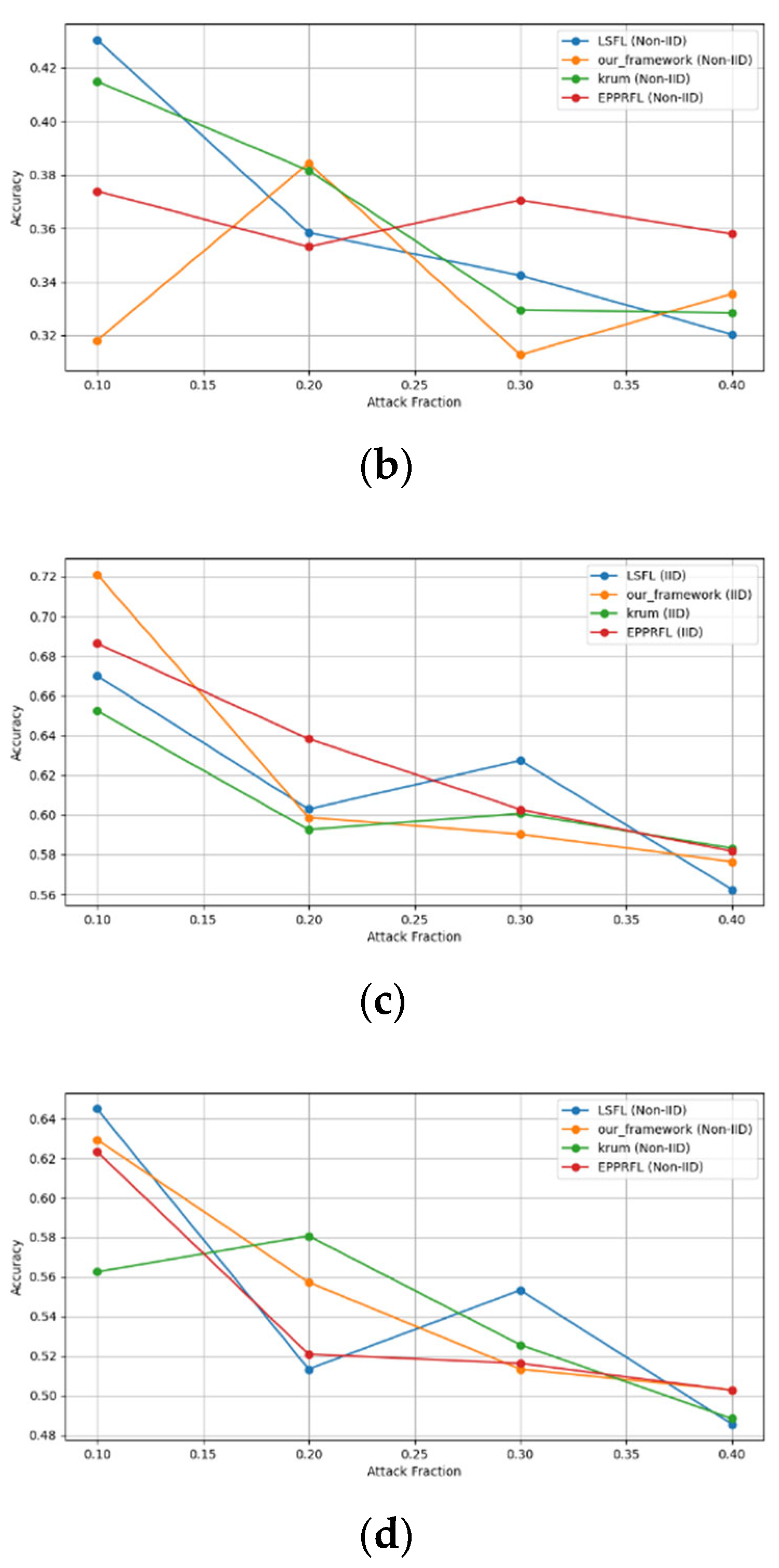

- Robustness Evaluation: We considered label flipping, sign flipping, backdoor, backdoor embedding, and gradient manipulation attacks on the MNIST and CIFAR-10 datasets in both IID and non-IID settings. The effectiveness of these attacks was evaluated by their impact on the model’s accuracy. First, we considered the attacks in situations where 10%, 20%, 30%, and 40% of the participants were poisoning attackers and evaluated the impact on the model’s accuracy. Figure 6, Figure 7, Figure 8, Figure 9 and Figure 10 illustrate the accuracy of our framework with varying numbers of label flipping, sign flipping, backdoor, backdoor embedding, and gradient manipulation attacks, respectively, using the MNIST and CIFAR-10 datasets in both IID and non-IID settings. Our framework demonstrated graceful degradation in accuracy as the attack fraction increased, maintaining an accuracy level of above 60% on all attacks, even at a 40% attack fraction, suggesting that the model is resilient to data heterogeneity and robust to poisoning attacks.

- (3)

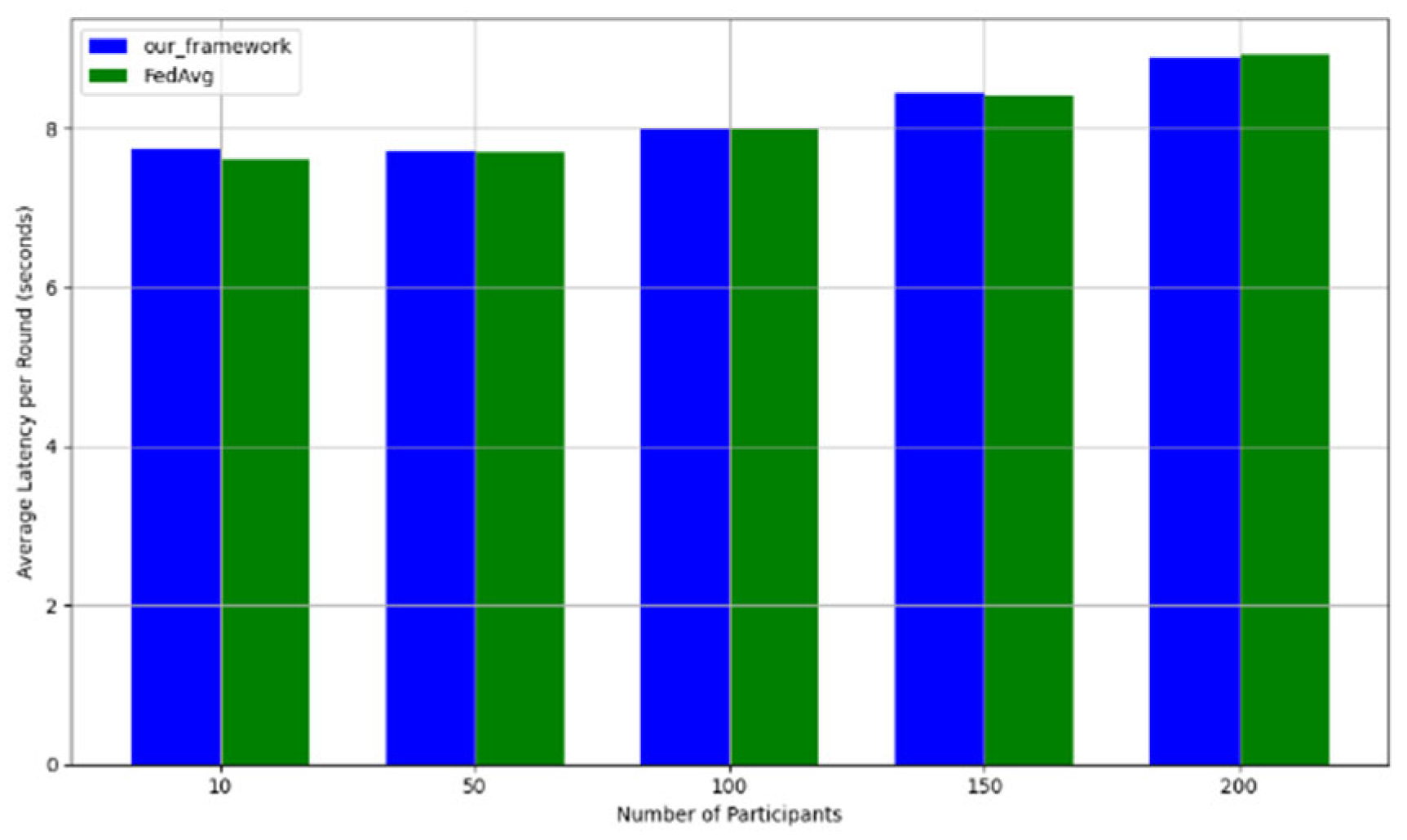

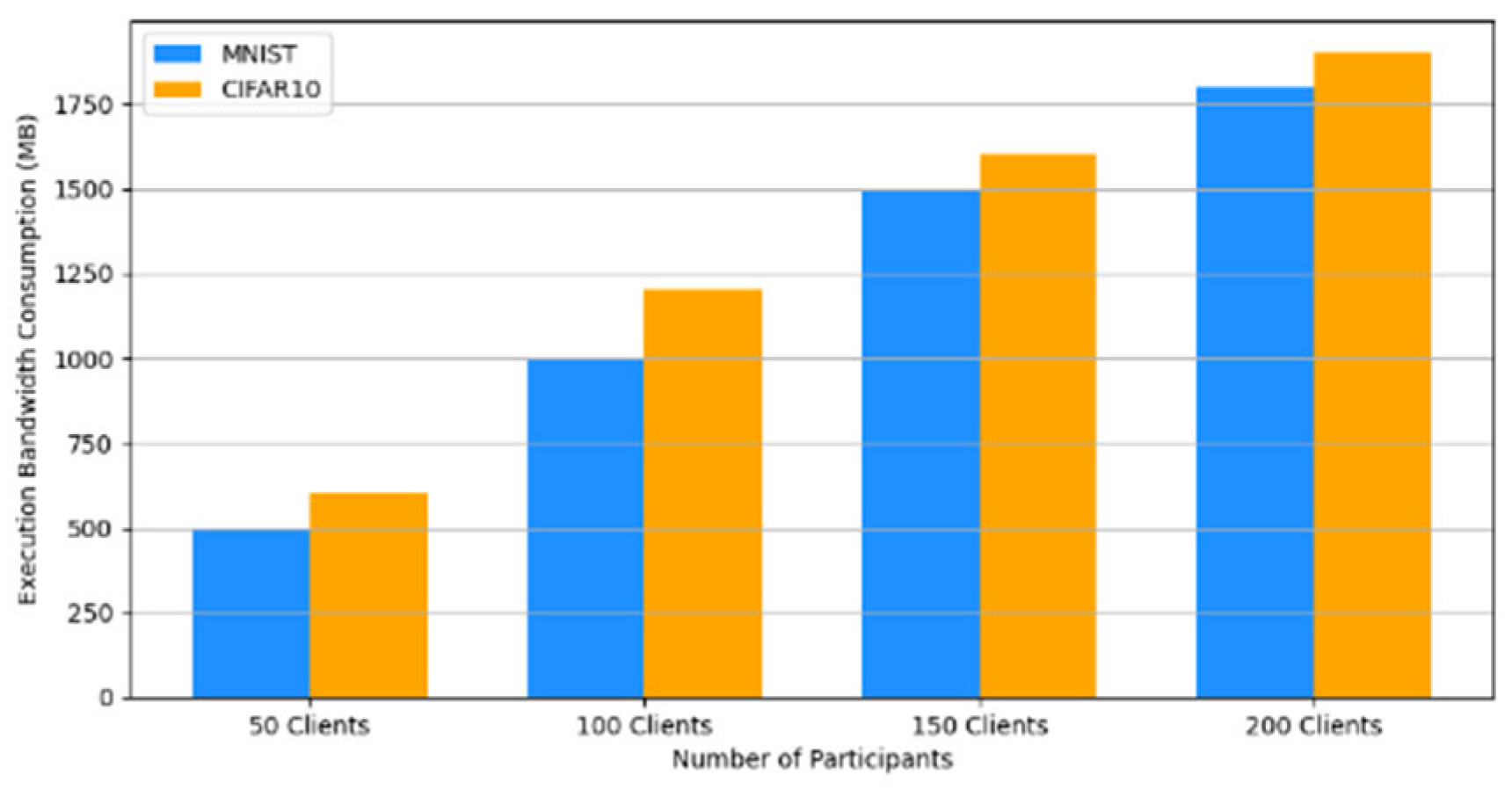

- Efficiency Evaluation: To evaluate the efficiency of our framework, we measured and compared the latency of FedAVG and our framework for various participants, providing a real-world efficiency comparison. Therefore, we tested FedAVG and our framework with varying numbers of participants (10, 50, 100, 150, and 200), and the result showed no difference despite the robustness of our framework. Figure 17 shows latency iteration over a varying number of participants. The average latency per round is the ratio of the total time to the number of rounds. This is conducted using MNIST and CIFAR10 datasets over the CNN model in an IID setting with the number of rounds set at 20. Moreover, we demonstrate that the communication overheads of our framework do not result in a drastic increase as the number of participants increases. Instead, it linearly increases with an increased number of participants, as shown in Figure 18. This implies that our framework would work with large-scale deployments. Finally, we show our framework’s computation cost per round in Figure 19 to reflect real-world performance.
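The latency metric defined above, total time divided by the number of rounds, can be measured with a small helper. The function name `average_latency` and the placeholder round function are hypothetical and serve only to illustrate the formula.

```python
import time

def average_latency(run_round, num_rounds):
    """Average latency per round: total wall-clock time / number of rounds."""
    start = time.perf_counter()
    for r in range(num_rounds):
        run_round(r)
    total = time.perf_counter() - start
    return total / num_rounds

# Placeholder round that does a small amount of work.
latency = average_latency(lambda r: sum(range(1000)), num_rounds=20)
```

In an actual measurement, `run_round` would execute one full FL round, including local training, share distribution, and aggregation.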

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Abbas, Z.; Ahmad, S.F.; Syed, M.H.; Anjum, A.; Rehman, S. Exploring Deep Federated Learning for the Internet of Things: A GDPR-Compliant Architecture. IEEE Access 2024, 12, 10548–10574.

- Zhang, M.; Meng, W.; Zhou, Y.; Ren, K. CSCHECKER: Revisiting GDPR and CCPA Compliance of Cookie Banners on the Web. In Proceedings of the IEEE/ACM 46th International Conference on Software Engineering, Lisbon, Portugal, 14–20 April 2024; IEEE Computer Society: Washington, DC, USA, 2024; pp. 2147–2158.

- Baskaran, H.; Yussof, S.; Abdul Rahim, F.; Abu Bakar, A. Blockchain and The Personal Data Protection Act 2010 (PDPA) in Malaysia. In Proceedings of the 2020 8th International Conference on Information Technology and Multimedia (ICIMU), Selangor, Malaysia, 24–26 August 2020; IEEE: Piscataway, NJ, USA, 2020.

- Yuan, L.; Wang, Z.; Sun, L.; Yu, P.S.; Brinton, C.G. Decentralized Federated Learning: A Survey and Perspective. IEEE Internet Things J. 2024, 11, 34617–34638.

- Rauniyar, A.; Hagos, D.H.; Jha, D.; Håkegård, J.E.; Bagci, U.; Rawat, D.B.; Vlassov, V. Federated Learning for Medical Applications: A Taxonomy, Current Trends, Challenges, and Future Research Directions. IEEE Internet Things J. 2024, 11, 7374–7398.

- Tang, Y.; Liu, Z. A Credit Card Fraud Detection Algorithm Based on SDT and Federated Learning. IEEE Access 2024, 12, 182547–182560.

- Parekh, R.; Patel, N.; Gupta, R.; Jadav, N.K.; Tanwar, S.; Alharbi, A.; Tolba, A.; Neagu, B.C.; Raboaca, M.S. GeFL: Gradient Encryption-Aided Privacy Preserved Federated Learning for Autonomous Vehicles. IEEE Access 2023, 11, 1825–1839.

- Mothukuri, V.; Khare, P.; Parizi, R.M.; Pouriyeh, S.; Dehghantanha, A.; Srivastava, G. Federated-Learning-Based Anomaly Detection for IoT Security Attacks. IEEE Internet Things J. 2022, 9, 2545–2554.

- Li, Q.; Wen, Z.; Wu, Z.; Hu, S.; Wang, N.; Li, Y.; Liu, X.; He, B. A Survey on Federated Learning Systems: Vision, Hype and Reality for Data Privacy and Protection. IEEE Trans. Knowl. Data Eng. 2023, 35, 3347–3366.

- Jawadur Rahman, K.M.; Ahmed, F.; Akhter, N.; Hasan, M.; Amin, R.; Aziz, K.E.; Islam, A.M.; Mukta, M.S.H.; Islam, A.N. Challenges, Applications and Design Aspects of Federated Learning: A Survey. IEEE Access 2021, 9, 124682–124700.

- Xu, G.; Li, H.; Zhang, Y.; Xu, S.; Ning, J.; Deng, R.H. Privacy-Preserving Federated Deep Learning with Irregular Users. IEEE Trans. Dependable Secure Comput. 2022, 19, 1364–1381.

- Wang, Z.; Song, M.; Zhang, Z.; Song, Y.; Wang, Q.; Qi, H. Beyond Inferring Class Representatives: User-Level Privacy Leakage from Federated Learning. arXiv 2018, arXiv:1812.00535.

- Zhao, B.; Mopuri, K.R.; Bilen, H. iDLG: Improved Deep Leakage from Gradients. arXiv 2020, arXiv:2001.02610.

- Zhang, J.; Chen, B.; Cheng, X.; Binh, H.T.T.; Yu, S. PoisonGAN: Generative Poisoning Attacks against Federated Learning in Edge Computing Systems. IEEE Internet Things J. 2021, 8, 3310–3322.

- Xia, G.; Chen, J.; Yu, C.; Ma, J. Poisoning Attacks in Federated Learning: A Survey. IEEE Access 2023, 11, 10708–10722.

- Sun, G.; Cong, Y.; Dong, J.; Wang, Q.; Lyu, L.; Liu, J. Data Poisoning Attacks on Federated Machine Learning. IEEE Internet Things J. 2022, 9, 11365–11375.

- Xu, G.; Li, H.; Liu, S.; Yang, K.; Lin, X. VerifyNet: Secure and Verifiable Federated Learning. IEEE Trans. Inf. Forensics Secur. 2020, 15, 911–926.

- Sav, S.; Pyrgelis, A.; Troncoso-Pastoriza, J.R.; Froelicher, D.; Bossuat, J.P.; Sousa, J.S.; Hubaux, J.P. POSEIDON: Privacy-Preserving Federated Neural Network Learning. arXiv 2020, arXiv:2009.00349.

- Byrd, D.; Polychroniadou, A. Differentially private secure multi-party computation for federated learning in financial applications. In Proceedings of the ICAIF 2020—1st ACM International Conference on AI in Finance, New York, NY, USA, 15–16 October 2020; Association for Computing Machinery, Inc.: New York, NY, USA, 2021.

- Cao, D.; Chang, S.; Lin, Z.; Liu, G.; Sun, D. Understanding distributed poisoning attack in federated learning. In Proceedings of the International Conference on Parallel and Distributed Systems—ICPADS, Tianjin, China, 4–6 December 2019; IEEE Computer Society: Washington, DC, USA, 2019; pp. 233–239.

- Cao, X.; Fang, M.; Liu, J.; Gong, N.Z. FLTrust: Byzantine-robust Federated Learning via Trust Bootstrapping. In Proceedings of the 28th Annual Network and Distributed System Security Symposium, NDSS 2021, Virtual, 21–25 February 2021; The Internet Society: Reston, VA, USA, 2021.

- Fu, A.; Zhang, X.; Xiong, N.; Gao, Y.; Wang, H.; Zhang, J. VFL: A Verifiable Federated Learning with Privacy-Preserving for Big Data in Industrial IoT. IEEE Trans. Ind. Inform. 2022, 18, 3316–3326.

- Bagdasaryan, E.; Veit, A.; Hua, Y.; Estrin, D.; Shmatikov, V. How To Backdoor Federated Learning. arXiv 2018, arXiv:1807.00459.

- Li, X.; Yang, X.; Zhou, Z.; Lu, R. Efficiently Achieving Privacy Preservation and Poisoning Attack Resistance in Federated Learning. IEEE Trans. Inf. Forensics Secur. 2024, 19, 4358–4373.

- Zhang, Z.; Wu, L.; Ma, C.; Li, J.; Wang, J.; Wang, Q.; Yu, S. LSFL: A Lightweight and Secure Federated Learning Scheme for Edge Computing. IEEE Trans. Inf. Forensics Secur. 2023, 18, 365–379.

- Rathee, M.; Shen, C.; Wagh, S.; Popa, R.A. ELSA: Secure Aggregation for Federated Learning with Malicious Actors. In Proceedings of the 2023 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 21–25 May 2023.

- Wahab, O.A.; Mourad, A.; Otrok, H.; Taleb, T. Federated Machine Learning: Survey, Multi-Level Classification, Desirable Criteria and Future Directions in Communication and Networking Systems. IEEE Commun. Surv. Tutor. 2021, 23, 1342–1397.

- Zhao, Z.; Liu, D.; Cao, Y.; Chen, T.; Zhang, S.; Tang, H. U-shaped Split Federated Learning: An Efficient Cross-Device Learning Framework with Enhanced Privacy-preserving. In Proceedings of the 2023 9th International Conference on Computer and Communications, ICCC 2023, Chengdu, China, 8–11 December 2023; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2023; pp. 2182–2186.

- Li, B.; Shi, Y.; Guo, Y.; Kong, Q.; Jiang, Y. Incentive and Knowledge Distillation Based Federated Learning for Cross-Silo Applications. In Proceedings of the INFOCOM WKSHPS 2022—IEEE Conference on Computer Communications Workshops, New York, NY, USA, 2–5 May 2022; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2022.

- Iqbal, M.; Tariq, A.; Adnan, M.; Din, I.U.; Qayyum, T. FL-ODP: An Optimized Differential Privacy Enabled Privacy Preserving Federated Learning. IEEE Access 2023, 11, 116674–116683.

- Zhang, G.; Liu, B.; Zhu, T.; Ding, M.; Zhou, W. PPFed: A Privacy-Preserving and Personalized Federated Learning Framework. IEEE Internet Things J. 2024, 11, 19380–19393.

- Ye, D.; Shen, S.; Zhu, T.; Liu, B.; Zhou, W. One Parameter Defense—Defending Against Data Inference Attacks via Differential Privacy. IEEE Trans. Inf. Forensics Secur. 2022, 17, 1466–1480.

- Hijazi, N.M.; Aloqaily, M.; Guizani, M.; Ouni, B.; Karray, F. Secure Federated Learning with Fully Homomorphic Encryption for IoT Communications. IEEE Internet Things J. 2024, 11, 4289–4300.

- Zhang, L.; Xu, J.; Vijayakumar, P.; Sharma, P.K.; Ghosh, U. Homomorphic Encryption-Based Privacy-Preserving Federated Learning in IoT-Enabled Healthcare System. IEEE Trans. Netw. Sci. Eng. 2023, 10, 2864–2880.

- Cai, Y.; Ding, W.; Xiao, Y.; Yan, Z.; Liu, X.; Wan, Z. SecFed: A Secure and Efficient Federated Learning Based on Multi-Key Homomorphic Encryption. IEEE Trans. Dependable Secure Comput. 2024, 21, 3817–3833.

- Cao, X.K.; Wang, C.D.; Lai, J.H.; Huang, Q.; Chen, C.L.P. Multiparty Secure Broad Learning System for Privacy Preserving. IEEE Trans. Cybern. 2023, 53, 6636–6648.

- Sotthiwat, E.; Zhen, L.; Li, Z.; Zhang, C. Partially encrypted multi-party computation for federated learning. In Proceedings of the 21st IEEE/ACM International Symposium on Cluster, Cloud and Internet Computing, CCGrid 2021, Melbourne, Australia, 10–13 May 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2021; pp. 828–835.

- Mbonu, W.E.; Maple, C.; Epiphaniou, G. An End-Process Blockchain-Based Secure Aggregation Mechanism Using Federated Machine Learning. Electronics 2023, 12, 4543.

- Dragos, L.; Togan, M. Privacy-Preserving Machine Learning Using Federated Learning and Secure Aggregation. In Proceedings of the 12th International Conference on Electronics, Computers and Artificial Intelligence—ECAI-2020, Bucharest, Romania, 25–27 June 2020; IEEE: Piscataway, NJ, USA, 2020.

- Ma, Z.; Ma, J.; Miao, Y.; Li, Y.; Deng, R.H. ShieldFL: Mitigating Model Poisoning Attacks in Privacy-Preserving Federated Learning. IEEE Trans. Inf. Forensics Secur. 2022, 17, 1639–1654.

- Liu, J.; Li, X.; Liu, X.; Zhang, H.; Miao, Y.; Deng, R.H. DefendFL: A Privacy-Preserving Federated Learning Scheme Against Poisoning Attacks. IEEE Trans. Neural Netw. Learn. Syst. 2024, 1–14.

- Khazbak, Y.; Tan, T.; Cao, G. MLGuard: Mitigating Poisoning Attacks in Privacy Preserving Distributed Collaborative Learning. In Proceedings of the ICCCN 2020: The 29th International Conference on Computer Communication and Networks: Final Program, Honolulu, HI, USA, 3–6 August 2020; IEEE: Piscataway, NJ, USA, 2020.

- Liu, X.; Li, H.; Xu, G.; Chen, Z.; Huang, X.; Lu, R. Privacy-Enhanced Federated Learning against Poisoning Adversaries. IEEE Trans. Inf. Forensics Secur. 2021, 16, 4574–4588.

- Li, X.; Wen, M.; He, S.; Lu, R.; Wang, L. A Privacy-Preserving Federated Learning Scheme Against Poisoning Attacks in Smart Grid. IEEE Internet Things J. 2024, 11, 16805–16816.

- Sakazi, I.; Grolman, E.; Elovici, Y.; Shabtai, A. STFL: Utilizing a Semi-Supervised, Transfer-Learning, Federated-Learning Approach to Detect Phishing URL Attacks. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2024; pp. 1–10.

- Liu, Y.; Yuan, X.; Zhao, R.; Wang, C.; Niyato, D.; Zheng, Y. Poisoning Semi-supervised Federated Learning via Unlabeled Data: Attacks and Defenses. arXiv 2020, arXiv:2012.04432.

- Xu, R.; Li, B.; Li, C.; Joshi, J.B.; Ma, S.; Li, J. TAPFed: Threshold Secure Aggregation for Privacy-Preserving Federated Learning. IEEE Trans. Dependable Secure Comput. 2024, 21, 4309–4323.

- Yazdinejad, A.; Dehghantanha, A.; Karimipour, H.; Srivastava, G.; Parizi, R.M. A Robust Privacy-Preserving Federated Learning Model Against Model Poisoning Attacks. IEEE Trans. Inf. Forensics Secur. 2024, 19, 6693–6708.

- Hao, M.; Li, H.; Xu, G.; Chen, H.; Zhang, T. Efficient, Private and Robust Federated Learning. In Proceedings of the ACM International Conference Proceeding Series, ACSAC’21: Annual Computer Security Applications Conference, Virtual Event, 6–10 December 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 45–60.

- Dong, Y.; Chen, X.; Li, K.; Wang, D.; Zeng, S. FLOD: Oblivious Defender for Private Byzantine-Robust Federated Learning with Dishonest-Majority. In Computer Security–ESORICS 2021, Proceedings of the 26th European Symposium on Research in Computer Security, Darmstadt, Germany, 4–8 October 2021; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer Science and Business Media Deutschland GmbH: Cham, Switzerland, 2021; pp. 497–518.

- Kumar, S.S.; Joshith, T.S.; Lokesh, D.D.; Jahnavi, D.; Mahato, G.K.; Chakraborty, S.K. Privacy-Preserving and Verifiable Decentralized Federated Learning. In Proceedings of the 5th International Conference on Energy, Power, and Environment: Towards Flexible Green Energy Technologies, ICEPE 2023, Shillong, India, 15–17 June 2023; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2023.

- Liu, T.; Xie, X.; Zhang, Y. ZkCNN: Zero Knowledge Proofs for Convolutional Neural Network Predictions and Accuracy. In Proceedings of the ACM Conference on Computer and Communications Security, Virtual Event, 15–19 November 2021; Association for Computing Machinery: New York, NY, USA, 2021; pp. 2968–2985.

- Wang, Z.; Dong, N.; Sun, J.; Knottenbelt, W.; Guo, Y. zkFL: Zero-Knowledge Proof-based Gradient Aggregation for Federated Learning. IEEE Trans. Big Data 2024, 11, 447–460.

- Sun, X.; Yu, F.R.; Zhang, P.; Sun, Z.; Xie, W.; Peng, X. A Survey on Zero-Knowledge Proof in Blockchain. IEEE Netw. 2021, 35, 198–205.

- Nasr, M.; Shokri, R.; Houmansadr, A. Comprehensive privacy analysis of deep learning: Passive and active white-box inference attacks against centralized and federated learning. In Proceedings of the IEEE Symposium on Security and Privacy, San Francisco, CA, USA, 19–23 May 2019; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2019; pp. 739–753.

- Akhtar, N.; Mian, A.; Kardan, N.; Shah, M. Advances in adversarial attacks and defenses in computer vision: A survey. arXiv 2021, arXiv:2108.00401.

- McMahan, H.B.; Moore, E.; Ramage, D.; Hampson, S.; Agüera y Arcas, B. Communication-efficient learning of deep networks from decentralized data. In Proceedings of the 20th International Conference on Artificial Intelligence and Statistics, AISTATS 2017, Fort Lauderdale, FL, USA, 20–22 April 2017; PMLR: Cambridge, MA, USA, 2017.

- Tan, L.; Yu, K.; Yang, C.; Bashir, A.K. A blockchain-based Shamir’s threshold cryptography for data protection in industrial internet of things of smart city. In Proceedings of the 6G-ABS 2021—Proceedings of the 1st ACM Workshop on Artificial Intelligence and Blockchain Technologies for Smart Cities with 6G, Part of ACM MobiCom 2021, New Orleans, LA, USA, 25–29 October 2021; Association for Computing Machinery, Inc.: New York, NY, USA, 2021; pp. 13–18.

- Tang, J.; Xu, H.; Wang, M.; Tang, T.; Peng, C.; Liao, H. A Flexible and Scalable Malicious Secure Aggregation Protocol for Federated Learning. IEEE Trans. Inf. Forensics Secur. 2024, 19, 4174–4187.

- Zhang, L.; Xia, R.; Tian, W.; Cheng, Z.; Yan, Z.; Tang, P. FLSIR: Secure Image Retrieval Based on Federated Learning and Additive Secret Sharing. IEEE Access 2022, 10, 64028–64042.

- Fazli Khojir, H.; Alhadidi, D.; Rouhani, S.; Mohammed, N. FedShare: Secure Aggregation based on Additive Secret Sharing in Federated Learning. In ACM International Conference Proceeding Series, Proceedings of the IDEAS’23: International Database Engineered Applications Symposium, Heraklion, Greece, 5–7 May 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 25–33. [Google Scholar] [CrossRef]

- More, Y.; Ramachandran, P.; Panda, P.; Mondal, A.; Virk, H.; Gupta, D. SCOTCH: An Efficient Secure Computation Framework for Secure Aggregation. arXiv 2022, arXiv:2201.07730. [Google Scholar]

- Asare, B.A.; Branco, P.; Kiringa, I.; Yeap, T. AddShare+: Efficient Selective Additive Secret Sharing Approach for Private Federated Learning. In Proceedings of the 2024 IEEE 11th International Conference on Data Science and Advanced Analytics (DSAA), San Diego, CA, USA, 6–10 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–10. [Google Scholar] [CrossRef]

- Wang, X.; Kuang, X.; Li, J.; Li, J.; Chen, X.; Liu, Z. Oblivious Transfer for Privacy-Preserving in VANET’s Feature Matching. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4359–4366. [Google Scholar] [CrossRef]

- Long, H.; Zhang, S.; Zhang, Y.; Zhang, L.; Wang, J. A Privacy-Preserving Method Based on Server-Aided Reverse Oblivious Transfer Protocol in MCS. IEEE Access 2019, 7, 164667–164681. [Google Scholar] [CrossRef]

- Wei, X.; Xu, L.; Wang, H.; Zheng, Z. Permutable Cut-and-Choose Oblivious Transfer and Its Application. IEEE Access 2020, 8, 17378–17389. [Google Scholar] [CrossRef]

- Traugott, S. Why Order Matters: Turing Equivalence in Automated Systems Administration. In Proceedings of the Sixteenth Systems Administration Conference: (LISA XVI), Philadelphia, PA, USA, 3–8 November 2000; USENIX Association: Berkeley, CA, USA, 2002. [Google Scholar]

- Wei, X.; Jiang, H.; Zhao, C.; Zhao, M.; Xu, Q. Fast Cut-and-Choose Bilateral Oblivious Transfer for Malicious Adversaries. In Proceedings of the 2016 IEEE Trustcom/BigDataSE/ISPA, Tianjin, China, 23–26 August 2016.

- Fan, J.; Yan, Q.; Li, M.; Qu, G.; Xiao, Y. A Survey on Data Poisoning Attacks and Defenses. In Proceedings of the 2022 7th IEEE International Conference on Data Science in Cyberspace, DSC 2022, Guilin, China, 11–13 July 2022; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA, 2022; pp. 48–55.

- Aly, S.; Almotairi, S. Deep Convolutional Self-Organizing Map Network for Robust Handwritten Digit Recognition. IEEE Access 2020, 8, 107035–107045.

- Kundroo, M.; Kim, T. Demystifying Impact of Key Hyper-Parameters in Federated Learning: A Case Study on CIFAR-10 and FashionMNIST. IEEE Access 2024, 12, 120570–120583.

- Blanchard, P.; El Mhamdi, E.M.; Guerraoui, R.; Stainer, J. Machine Learning with Adversaries: Byzantine Tolerant Gradient Descent. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

| Solution | Privacy Preserved | Poisoning Attack Resilience | Proof of Aggregation (PoA) | Techniques/Strategy Adopted | Server Architecture |
|---|---|---|---|---|---|
| TAPFed [47] | ✓ | ✓ | ✗ | Decisional Diffie–Hellman | Multi |
| Yazdinejad et al. [48] | ✓ | ✓ | ✗ | Additive homomorphic encryption, Gaussian mixture model (GMM), Mahalanobis distance | Single |
| ELSA [26] | ✓ | ✓ | ✗ | L2 defense, additive secret sharing, oblivious transfer protocol | Dual |
| VerifyNET [17] | ✓ | ✗ | ✓ | Homomorphic hash function, pseudorandom technology, secret sharing | Single |
| EPPRFL [24] | ✓ | ✓ | ✗ | Euclidean distance filtering and clipping, additive secret sharing, secure multiplication protocol | Dual |
| LSFL [25] | ✓ | ✓ | ✗ | Euclidean distance, additive secret sharing, Gaussian noise | Dual |
| STFL [45] | ✓ | ✓ | ✗ | Minmax optimization | Single |
| SecureFL [49] | ✓ | ✓ | ✗ | Additive homomorphic encryption, multiparty computation, FLTrust | Dual |
| FLOD [50] | ✓ | ✓ | ✗ | Multiparty computation, Sign-SGD, FLTrust | Dual |
| Our Framework | ✓ | ✓ | ✓ | Euclidean distance, L2 norm, additive secret sharing, oblivious transfer protocol | Dual |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mbonu, W.E.; Maple, C.; Epiphaniou, G.; Panchev, C. A Verifiable, Privacy-Preserving, and Poisoning Attack-Resilient Federated Learning Framework. Big Data Cogn. Comput. 2025, 9, 85. https://doi.org/10.3390/bdcc9040085
