A Comprehensive Survey of MapReduce Models for Processing Big Data

Abstract

1. Introduction

- To build a thorough understanding of MapReduce-based big data processing, this survey examines various MapReduce models with respect to their methodology, performance metrics, advantages, datasets, and limitations.

- Around 75 articles on MapReduce for big data processing are reviewed, providing insight into choosing an appropriate model for different kinds of data, the challenges faced, and future directions.

- More specifically, this review describes different types of MapReduce models, including Hadoop, Hive, Spark, Pig, MongoDB, and Cassandra.

- In addition, this review analyzes various metrics for evaluating the performance of MapReduce frameworks in big data processing.

- This overview helps researchers overcome the limitations of existing approaches and develop more effective and sustainable models in the future.

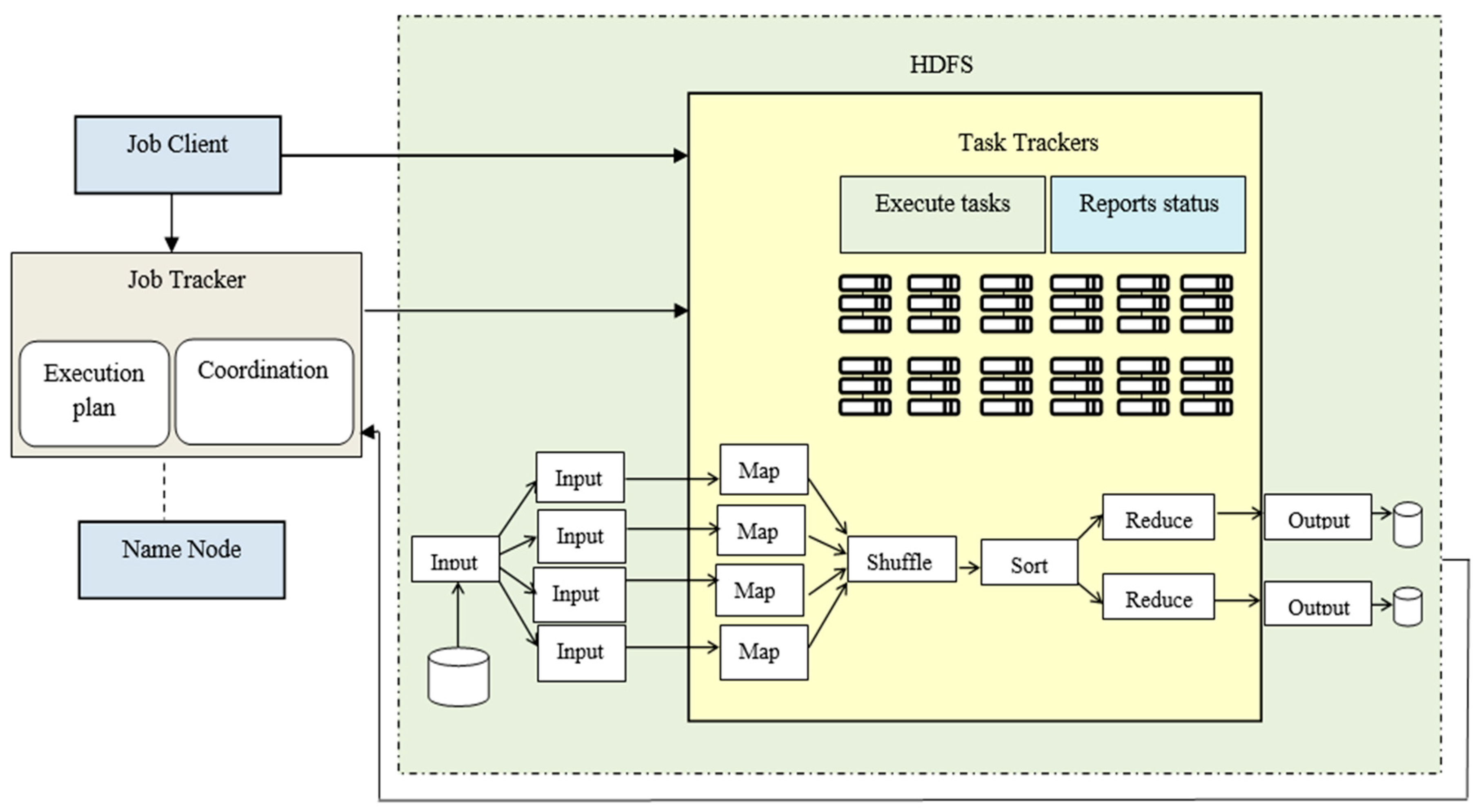

2. Background of MapReduce Model

3. Methodology

3.1. Article Selection Process

3.2. Literature Review

- What are the advantages and limitations of utilizing the MapReduce framework for big data classification?

- Which methods are predominantly employed in the reviewed research articles, and how do they contribute to the framework’s effectiveness?

- What challenges and gaps exist in the current use of MapReduce for big data classification, and how can they be addressed?

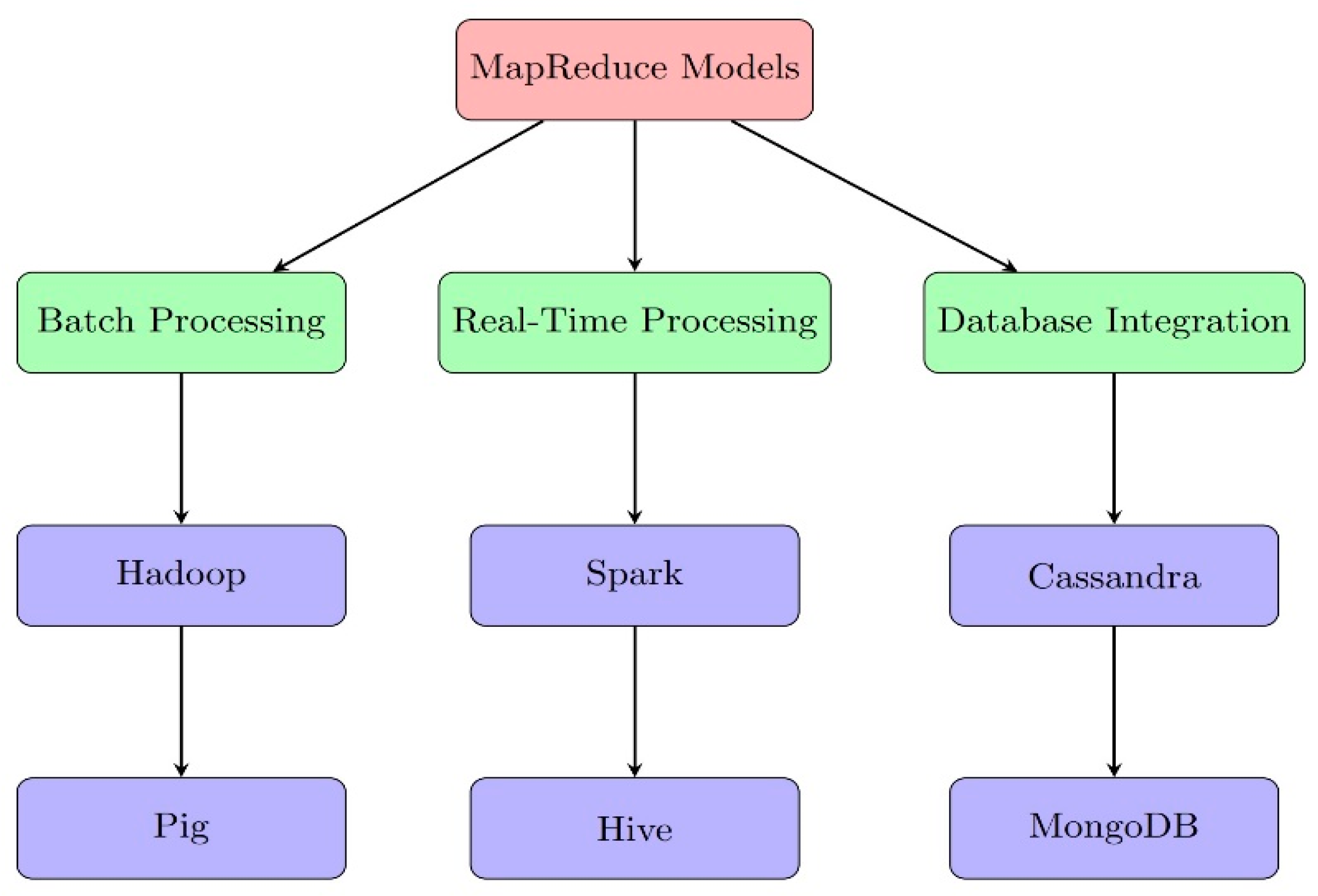

3.3. Types of Map-Reduce Models

3.3.1. Hadoop Map-Reduce Model

3.3.2. Spark MapReduce Model

3.3.3. Hive MapReduce Model

3.3.4. MongoDB MapReduce Model

3.3.5. Cassandra MapReduce Model

3.3.6. Pig MapReduce Model

3.3.7. Others

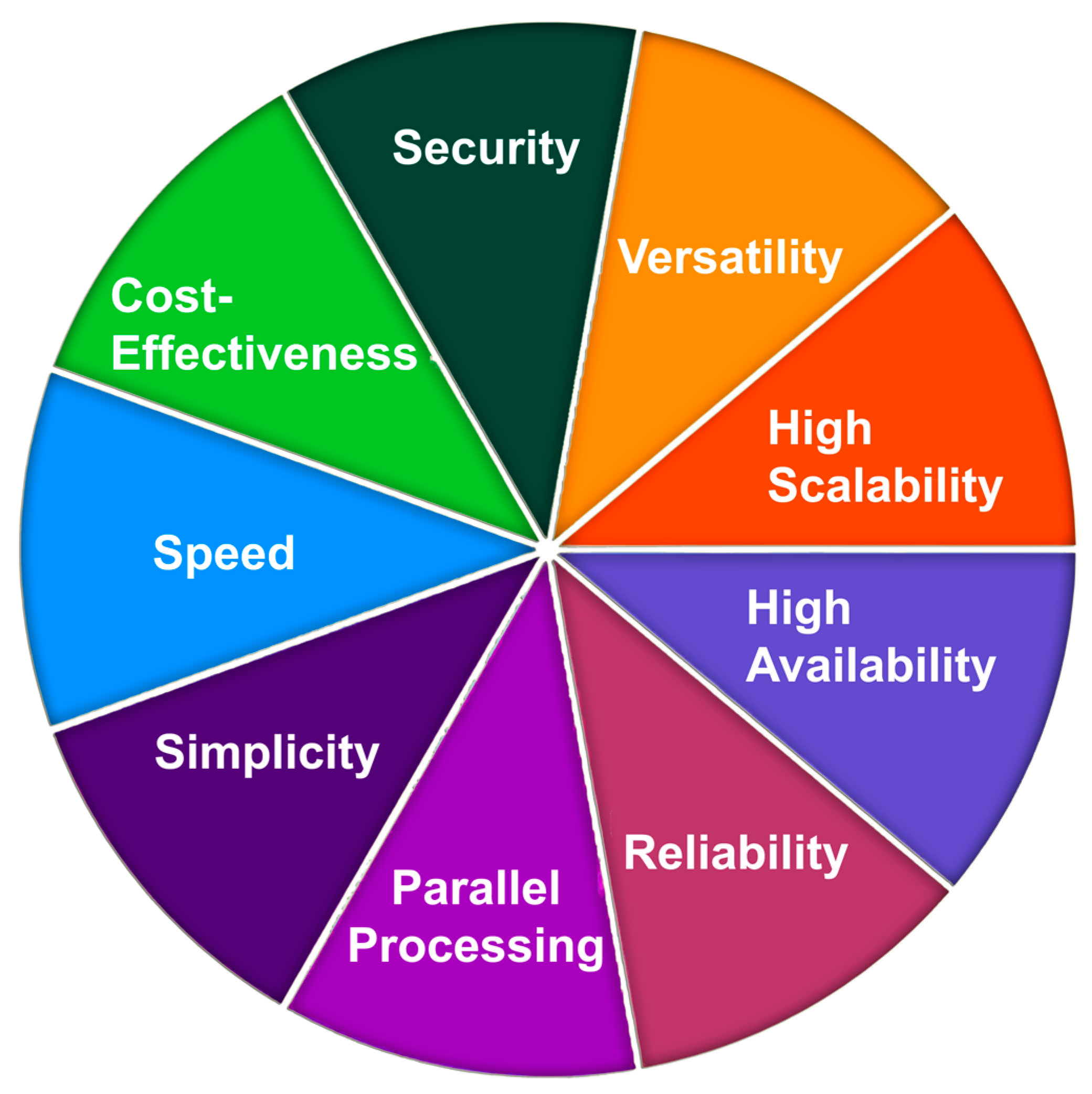

3.4. Key Features of MapReduce

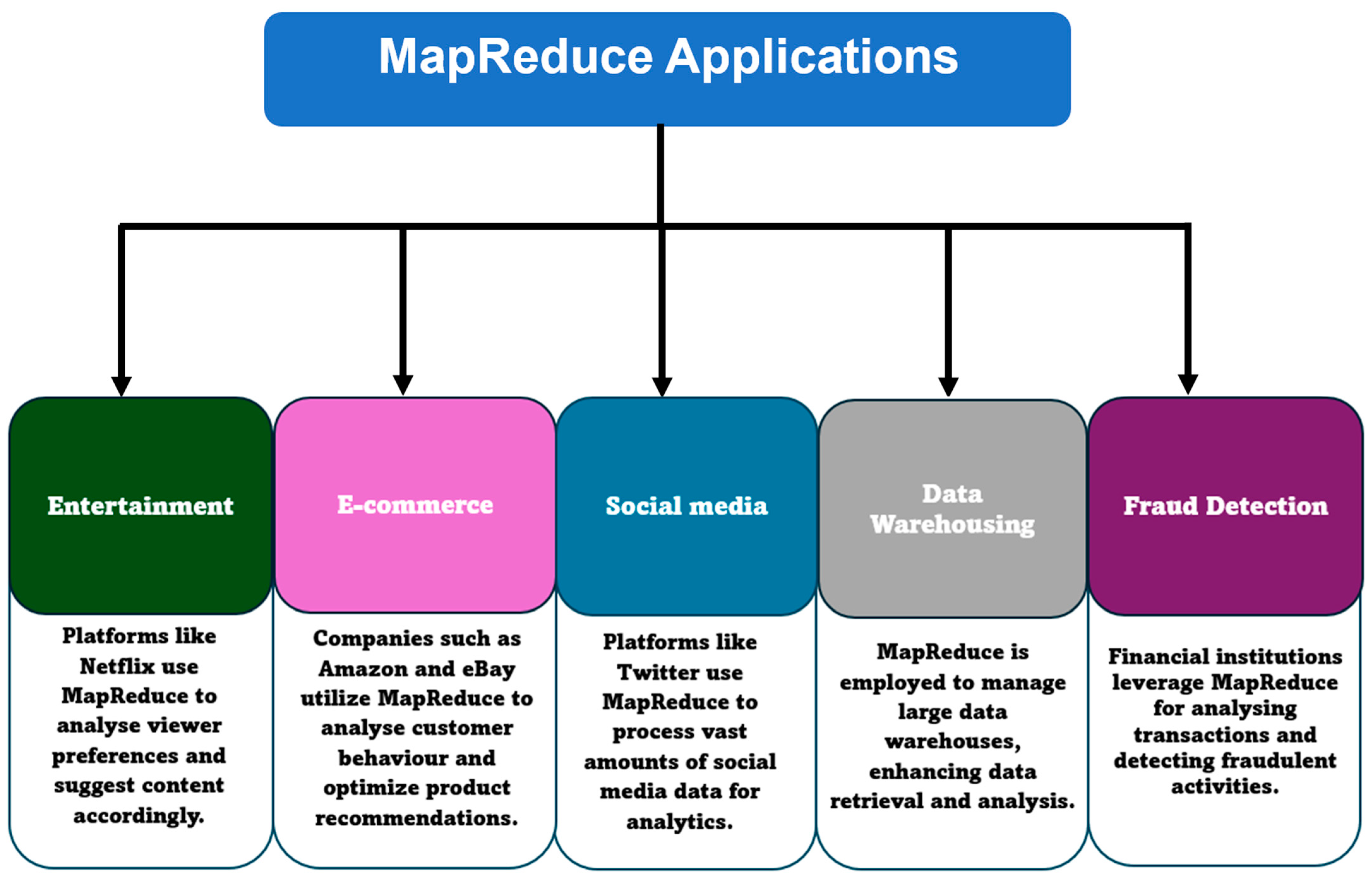

3.5. Applications of MapReduce

3.6. Overall Analysis of the MapReduce Models

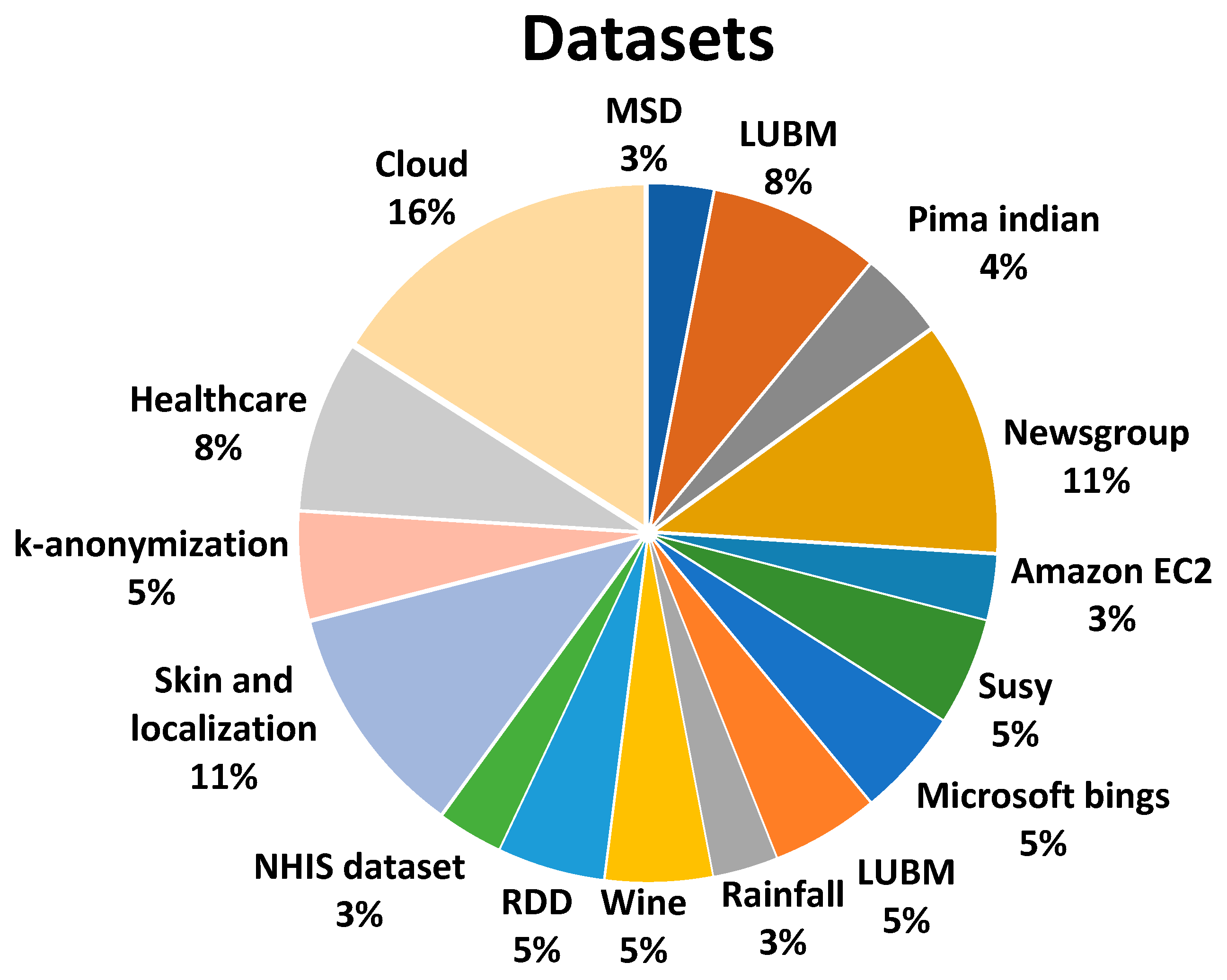

4. Analysis Based on the Utilized Datasets

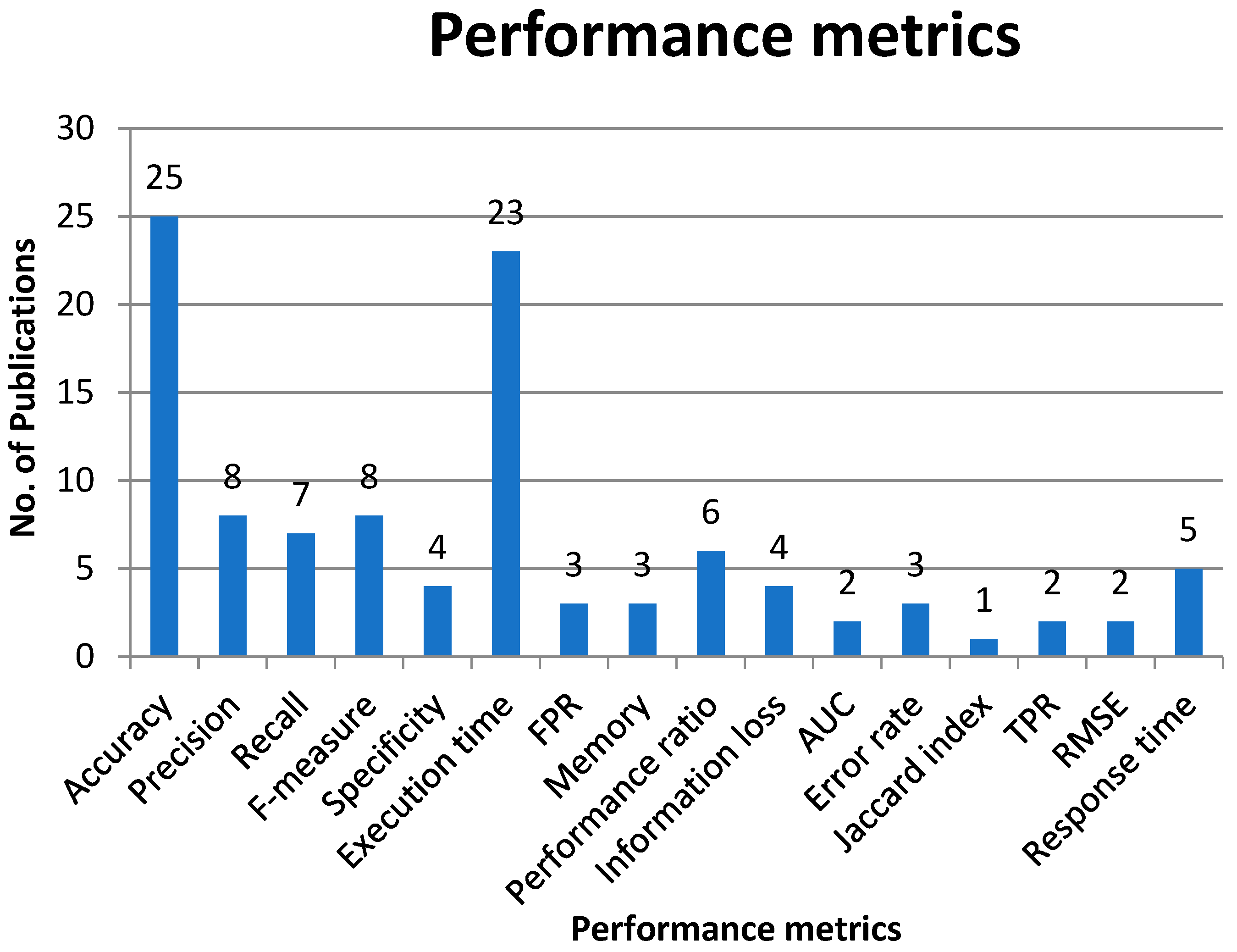

5. Evaluation Based on the Performance Metrics

5.1. Overview of Performance Metrics

- Accuracy: The proportion of correctly classified instances among all instances, reflecting the model’s overall reliability in predicting true outcomes.

- Precision: The ratio of true positives to all predicted positives, indicating how reliable positive predictions are. It is especially important when false positives carry a high cost.

- Recall: The sensitivity of the model, i.e., the proportion of true positives among actual positives. High recall is valuable in applications where identifying all relevant instances is crucial.

- F1 Score: The harmonic mean of precision and recall, providing a balanced metric when class distributions are imbalanced.

- Jaccard Index: A similarity measure between the predicted and actual classifications (the size of their intersection divided by the size of their union); a higher index indicates better overlap between predicted and actual results.

- Response Time: The time taken by the system to respond to requests, a critical metric for real-time applications where low latency is preferred.

- Execution Time: The overall time taken to complete a task, critical in large-scale applications where processing time affects operational efficiency.

- Information Loss: A measure of any degradation in data quality that arises from distributed processing. Lower information loss indicates better retention of the original data’s integrity.

- Specificity: The proportion of true negatives among all actual negatives, relevant in scenarios where avoiding false positives is important.
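The classification metrics listed above can all be derived from the four counts of a confusion matrix. The following is a minimal sketch for the binary case only; the `Confusion` container, the function names, and the sample counts are illustrative assumptions and do not come from the surveyed articles. Multi-class settings would need per-class counts and an averaging scheme.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    """Binary confusion-matrix counts (hypothetical container for this sketch)."""
    tp: int  # true positives
    fp: int  # false positives
    tn: int  # true negatives
    fn: int  # false negatives


def _ratio(num: float, den: float) -> float:
    # Return 0.0 instead of raising when a denominator is zero.
    return num / den if den else 0.0


def classification_metrics(c: Confusion) -> dict[str, float]:
    """Compute the standard metrics from confusion-matrix counts."""
    precision = _ratio(c.tp, c.tp + c.fp)
    recall = _ratio(c.tp, c.tp + c.fn)
    return {
        "accuracy": _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn),
        "precision": precision,
        "recall": recall,
        # Harmonic mean of precision and recall.
        "f1": _ratio(2 * precision * recall, precision + recall),
        # Overlap of predicted and actual positives: TP / (TP + FP + FN).
        "jaccard": _ratio(c.tp, c.tp + c.fp + c.fn),
        "specificity": _ratio(c.tn, c.tn + c.fp),
    }


if __name__ == "__main__":
    # Made-up counts for illustration; not results from the survey.
    sample = Confusion(tp=40, fp=10, tn=45, fn=5)
    for name, value in classification_metrics(sample).items():
        print(f"{name}: {value:.3f}")
```

Response time, execution time, and information loss are measured on the running system rather than derived from a confusion matrix, so they are left out of this sketch.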

5.2. Detailed Analysis of Metrics

5.2.1. Accuracy

5.2.2. F-1 Score

5.2.3. Precision

5.2.4. Jaccard Index

5.2.5. Response Time

5.2.6. Recall

5.2.7. Execution Time

5.2.8. Information Loss

5.2.9. Specificity

5.2.10. Aggregate Performance Score (APS)

5.3. Speedup

- Re-evaluate Speedup with Varying Feature Counts: How computational load impacts speedup.

- Increase Sample Size for Testing: To simulate larger dataset handling and observe performance changes.

5.3.1. Key Observations

- Speedup and Feature Count: As the feature count increases from 250 to 750, response time improves slightly, but the speedup remains modest: 0.007 s with 250 features, 0.014 s with 500 features, and 0.016 s with 750 features. These results indicate that adding features in a multi-node setup does not significantly enhance speedup, because the overhead of processing the additional features offsets the potential gains.

- Accuracy and Feature Complexity: Increasing the feature count generally improves classification accuracy and other metrics. For instance, the model attains an accuracy of 0.428 with 250 features and 0.672 with 750 features. This illustrates the balance between feature complexity and accuracy: higher feature counts capture more detail in the data, improving classification quality.

- Performance Implications: Lower feature counts result in higher speedup but reduced accuracy and precision. This trade-off suggests that while a minimal feature set may be suitable for speed-focused applications, tasks requiring high accuracy and moderate speedup would benefit from feature counts around 500–750, achieving a balance between computational efficiency and classification quality.

- Optimal Feature Configuration for Speedup: The analysis suggests a feature count of 500–750 offers a reasonable trade-off between processing time and classification performance. While a smaller feature set can enhance response time, it adversely impacts model accuracy. Thus, applications requiring speed and quality should consider a moderate feature count to maintain performance stability in MapReduce tasks.

- Consistency Across Nodes: Classification accuracy, precision, recall, F1 score, and Jaccard index remained stable across single-node and multi-node configurations, which suggests that distributing data across nodes did not affect classification performance when the feature count was optimized. The model therefore retains its classification quality even with distributed data.

- Response Time and Speedup Limitations: Although MapReduce is designed to expedite data processing, the speedup in this configuration is limited. Even at 750 features, the speedup remains at 0.016 s, indicating that data distribution and coordination overhead constrain the benefits of parallel processing. Multi-node setups cannot achieve ideal linear speedup because of significant parallel computing and communication overheads, and reducing these overheads should be a focus of future research on MapReduce efficiency.

- Scalability Implications: The findings highlight that increasing nodes or features alone may not significantly improve response times as dataset complexity grows. Instead, a scalable approach in MapReduce applications should consider optimized feature counts and efficient node utilization to balance processing speed and classification quality in large-scale data processing tasks.

5.3.2. Speedup with Node Scaling

- Single-Node Baseline: The single-node configuration is the reference point for the speedup calculations. Its initial response time, given in the table, is taken as the benchmark.

- Two-Node Configuration: In the two-node setup, the response time is approximately 1.8087 s, and the corresponding speedup is 0.0114. This indicates some benefit from distributed processing, but it is far from ideal, suggesting that initial overhead offsets the gains.

- Four-Node Configuration: With four nodes, the response time drops to about 1.4693 s, yielding a speedup of 0.0496. Although performance improves, the speedup is still far from an ideal scaling factor, likely because of coordination costs.

- Eight-Node Configuration: With eight nodes, the response time is 1.7569 s, with a speedup of 0.0341. The results reflect diminishing returns, with performance gains becoming less significant as the number of nodes increases. The overhead of communication and synchronization among nodes becomes more pronounced at this scale.
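To make the diminishing returns in the list above concrete, the response times can be compared directly. The sketch below is only an illustration: it uses the conventional definition of speedup (reference time divided by new time), which is not necessarily the definition behind the speedup values reported above, and it takes the two-node run as the reference because the single-node response time is not quoted in this section. The function and variable names are assumptions.

```python
from __future__ import annotations


def relative_speedup(reference_time_s: float, time_s: float) -> float:
    """Conventional speedup: reference run time divided by the new run time."""
    if reference_time_s <= 0 or time_s <= 0:
        raise ValueError("times must be positive")
    return reference_time_s / time_s


# Response times in seconds quoted in the list above, keyed by node count.
RESPONSE_TIME_S = {2: 1.8087, 4: 1.4693, 8: 1.7569}


def speedups_vs(reference_nodes: int, times: dict[int, float]) -> dict[int, float]:
    """Speedup of every configuration relative to the chosen reference one."""
    ref = times[reference_nodes]
    return {n: relative_speedup(ref, t) for n, t in sorted(times.items())}


if __name__ == "__main__":
    for nodes, s in speedups_vs(2, RESPONSE_TIME_S).items():
        print(f"{nodes} nodes: {s:.3f}x relative to 2 nodes")
```

Under these assumptions, doubling from two to four nodes gives roughly a 1.23x improvement instead of the ideal 2x, and the eight-node run is slower than the four-node run, which is consistent with the overhead argument above.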

5.4. Case Study: Application of MapReduce on Text Classification Using the “20 Newsgroups” Dataset

- The baseline multi-node configuration (using 500 features) matched the single-node metrics, offering a balanced demonstration of MapReduce’s impact in a standard setup.

- The computations followed conventional big data performance metrics, so the results are indicative of MapReduce’s efficacy in processing large text datasets.
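For readers unfamiliar with how a text workload maps onto the MapReduce pattern, the following single-process sketch simulates the map, shuffle, and reduce phases for term counting and then keeps the `k` most frequent terms as features (the case study uses 500 features). It is not the pipeline used in the case study: the tokenizer, the function names, and the frequency-based feature selection are assumptions made for illustration.

```python
from __future__ import annotations

import re
from collections import defaultdict
from itertools import chain
from typing import Iterable, Iterator

TOKEN = re.compile(r"[a-z]+")  # assumed tokenizer: lowercase ASCII words


def map_phase(text: str) -> Iterator[tuple[str, int]]:
    """Map: emit a (term, 1) pair for every token in one document."""
    for term in TOKEN.findall(text.lower()):
        yield term, 1


def shuffle(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all emitted values by key, as the framework would."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(term: str, counts: list[int]) -> tuple[str, int]:
    """Reduce: sum the partial counts for one term."""
    return term, sum(counts)


def top_k_terms(docs: Iterable[str], k: int) -> list[tuple[str, int]]:
    """Run map -> shuffle -> reduce and keep the k most frequent terms."""
    mapped = chain.from_iterable(map_phase(text) for text in docs)
    reduced = [reduce_phase(t, c) for t, c in shuffle(mapped).items()]
    # Sort by descending count, then alphabetically for a deterministic order.
    reduced.sort(key=lambda kv: (-kv[1], kv[0]))
    return reduced[:k]


if __name__ == "__main__":
    toy_docs = ["Space shuttle launch", "The shuttle orbit", "space space probe"]
    print(top_k_terms(toy_docs, k=2))
```

In a real cluster the map calls would run in parallel on separate input splits and the shuffle would move data across the network, which is where the coordination overhead discussed in Section 5.3 arises.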

5.4.1. Dataset Overview

5.4.2. Experiment Setup

- Processor: Intel Core i7-10710U, with six physical cores and 12 logical threads, each operating at a base frequency of 1.1 GHz and turbo frequency of up to 1.6 GHz.

- Memory (RAM): 16 GB.

- Operating System: Windows with DirectX 12 support.

5.4.3. Performance Metrics Evaluation

5.4.4. Results

6. Research Gaps and Future Scopes

- The Hadoop MapReduce framework faced severe incompatibility issues when run on diverse nodes, and the security features provided by Hadoop and Linux were not effective. Moreover, the system was not compatible with environments that lacked dedicated nodes or servers for processing [1].

- The CRMAP model failed to mine high-quality sequential structures when evaluated with big data, and its selection of patterns for mining was not accurate. In addition, the model required the patterns to be updated whenever the data were modified or removed [10].

- The MR-MVPP system did not employ a set similarity join approach and instead used a hashing technique, which did not produce accurate results. The coverage rate of the stored views was lower, which hurt the response time of analytical queries [29].

- The SEWAAN model lacked learning algorithms for evaluating the weights and extracting distinct patterns. The model had difficulty allocating appropriate nodes for processing straggler tasks. Moreover, the large volume of input data and the failures reduced accuracy [4].

- The effectiveness of text clustering in the CICC-BDVMR model was reduced because feature selection and feature reduction were performed separately. Upgraded optimization strategies, including Harris Hawk optimization and Salp Swarm optimization, were required for improved performance [32].

- The multi-dimensional geospatial mining model needed distributed processing approaches for improved clustering performance. The model failed to capture the geospatial data at various levels of speculation because it lacked a visualization interface, and it required a workflow pipeline for scheduling the tasks [33].

- The MM-MGSMO model had difficulty handling massive amounts of complex data on cloud servers. The swarm-optimized algorithm it used was ineffective in maintaining privacy; hence, information could be extracted from the leaked orders. Moreover, the delay in processing the wide range of information was longer [52].

- The MFOB-MP model was vulnerable when handling high-dimensional features, which reduced classification accuracy. The FCM algorithm was ineffective with prototype-based clustering methods, and the model was not evaluated against a range of criteria [59].

- The KSC-CMER model was evaluated with a smaller database, and not all data points in the dataset were tested. The quality of the clusters needs to be improved, and the lack of optimal parameters degraded the clustering outcome [46].

- The intensive complications and fault-tolerance factors of the TMaR model were not evaluated. Furthermore, the partition sizes of the intermediate data have to be estimated in advance for improved performance. In addition, the processing time and energy consumption were higher [40].

- Because of communication and parallel computing management overhead, many MapReduce models suffer from low speedup [80]. For example, in some cases the speedup of a MapReduce model is no more than 4 on 48 machines. Such low resource utilization significantly limits the scalability of MapReduce models.
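The last point can be quantified with two standard measures that the survey itself does not compute: parallel efficiency (speedup divided by the number of workers) and the Karp-Flatt experimentally determined serial fraction. The sketch below applies both to the quoted figure of a speedup of 4 on 48 machines; the function names are assumptions, and the formulas are the textbook definitions.

```python
def parallel_efficiency(speedup: float, workers: int) -> float:
    """Fraction of ideal linear speedup actually achieved."""
    if workers < 1:
        raise ValueError("workers must be at least 1")
    return speedup / workers


def karp_flatt_serial_fraction(speedup: float, workers: int) -> float:
    """Karp-Flatt metric: e = (1/S - 1/p) / (1 - 1/p), defined for p > 1."""
    if workers < 2:
        raise ValueError("the metric needs at least 2 workers")
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return (1.0 / speedup - 1.0 / workers) / (1.0 - 1.0 / workers)


if __name__ == "__main__":
    # Figures quoted above: speedup of at most 4 on 48 machines.
    s, p = 4.0, 48
    print(f"efficiency: {parallel_efficiency(s, p):.3f}")
    print(f"serial fraction: {karp_flatt_serial_fraction(s, p):.3f}")
```

With these inputs the efficiency is about 8%, and the estimated serial (or overhead-bound) fraction is about 23%, which illustrates why adding machines alone yields little further gain.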

7. Conclusions and Future Direction

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kumar, A.; Varshney, N.; Bhatiya, S.; Singh, K.U. Replication-Based Query Management for Resource Allocation Using Hadoop and MapReduce over Big Data. Big Data Min. Anal. 2023, 6, 465–477.

- Ali, I.M.S.; Hariprasad, D. MapReduce Based Hyper Parameter Optimised Extreme Learning Machine For Big Data Classification. Eur. Chem. Bull. 2023, 12, 7198–7210.

- Choi, S.Y.; Chung, K. Knowledge process of health big data using MapReduce-based associative mining. Pers. Ubiquitous Comput. 2020, 24, 571–581.

- Farhang, M.; Safi-Esfahani, F. Recognizing mapreduce straggler tasks in big data infrastructures using artificial neural networks. J. Grid Comput. 2020, 18, 879–901.

- Peddi, P. An efficient analysis of stocks data using MapReduce. JASC J. Appl. Sci. Comput. 2019, VI, 4076–4087.

- Lin, H.; Su, Z.; Meng, X.; Jin, X.; Wang, Z.; Han, W.; An, H.; Chi, M.; Wu, Z. Combining Hadoop with MPI to Solve Metagenomics Problems that are both Data-and Compute-intensive. Int. J. Parallel Program. 2018, 46, 762–775.

- Iwendi, C.; Ponnan, S.; Munirathinam, R.; Srinivasan, K.; Chang, C.Y. An efficient and unique TF/IDF algorithmic model-based data analysis for handling applications with big data streaming. Electronics 2019, 8, 1331.

- Jain, P.; Gyanchandani, M.; Khare, N. Enhanced secured MapReduce layer for big data privacy and security. J. Big Data 2019, 6, 30.

- Venkatesh, G.; Arunesh, K. MapReduce for big data processing based on traffic aware partition and aggregation. Clust. Comput. 2019, 22 (Suppl. 5), 12909–12915.

- Saleti, S.; Subramanyam, R.B.V. A MapReduce solution for incremental mining of sequential patterns from big data. Expert Syst. Appl. 2019, 133, 109–125.

- Asif, M.; Abbas, S.; Khan, M.A.; Fatima, A.; Khan, M.A.; Lee, S.W. MapReduce based intelligent model for intrusion detection using machine learning technique. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 9723–9731.

- Yange, T.S.; Gambo, I.P.; Ikono, R.; Soriyan, H.A. A multi-nodal implementation of apriori algorithm for big data analytics using MapReduce framework. Int. J. Appl. Inf. Syst. 2020, 12, 8–28.

- Chen, W.; Liu, B.; Paik, I.; Li, Z.; Zheng, Z. QoS-aware data placement for MapReduce applications in geo-distributed data centers. IEEE Trans. Eng. Manag. 2020, 68, 120–136.

- Vats, S.; Sagar, B.B. An independent time optimized hybrid infrastructure for big data analytics. Mod. Phys. Lett. B 2020, 34, 2050311.

- Ramachandran, M.; Patan, R.; Kumar, A.; Hosseini, S.; Gandomi, A.H. Mutual informative MapReduce and minimum quadrangle classification for brain tumor big data. IEEE Trans. Eng. Manag. 2021, 70, 2644–2655.

- Alwasel, K.; Calheiros, R.N.; Garg, S.; Buyya, R.; Pathan, M.; Georgakopoulos, D.; Ranjan, R. BigDataSDNSim: A simulator for analyzing big data applications in software-defined cloud data centers. Softw. Pract. Exp. 2021, 51, 893–920.

- Kumar, D.; Jha, V.K. An improved query optimization process in big data using ACO-GA algorithm and HDFS MapReduce technique. Distrib. Parallel Databases 2021, 39, 79–96.

- Kaur, K.; Garg, S.; Kaddoum, G.; Kumar, N. Energy and SLA-driven MapReduce job scheduling framework for cloud-based cyber-physical systems. ACM Trans. Internet Technol. (TOIT) 2021, 21, 1–24.

- Rajendran, S.; Khalaf, O.I.; Alotaibi, Y.; Alghamdi, S. MapReduce-based big data classification model using feature subset selection and hyperparameter tuned deep belief network. Sci. Rep. 2021, 11, 24138.

- Banane, M.; Belangour, A. A new system for massive RDF data management using Big Data query languages Pig, Hive, and Spark. Int. J. Comput. Digit. Syst. 2020, 9, 259–270.

- Dahiphale, D. Mapreduce for graphs processing: New big data algorithm for 2-edge connected components and future ideas. IEEE Access 2023, 11, 54986–55001.

- Verma, N.; Malhotra, D.; Singh, J. Big data analytics for retail industry using MapReduce-Apriori framework. J. Manag. Anal. 2020, 7, 424–442.

- Sardar, T.H.; Ansari, Z. Distributed big data clustering using MapReduce-based fuzzy C-medoids. J. Inst. Eng. (India) Ser. B 2022, 103, 73–82.

- Guan, S.; Zhang, C.; Wang, Y.; Liu, W. Hadoop-based secure storage solution for big data in cloud computing environment. Digit. Commun. Netw. 2024, 10, 227–236.

- Dang, T.D.; Hoang, D.; Nguyen, D.N. Trust-based scheduling framework for big data processing with MapReduce. IEEE Trans. Serv. Comput. 2019, 15, 279–293.

- Jo, J.; Lee, K.W. MapReduce-based D_ELT framework to address the challenges of geospatial Big Data. ISPRS Int. J. Geo-Inf. 2019, 8, 475.

- Meena, K.; Sujatha, J. Reduced time compression in big data using mapreduce approach and hadoop. J. Med. Syst. 2019, 43, 239.

- Kulkarni, O.; Jena, S.; Ravi Sankar, V. MapReduce framework based big data clustering using fractional integrated sparse fuzzy C means algorithm. IET Image Process. 2020, 14, 2719–2727.

- Azgomi, H.; Sohrabi, M.K. MR-MVPP: A map-reduce-based approach for creating MVPP in data warehouses for big data applications. Inf. Sci. 2021, 570, 200–224.

- Chiang, D.L.; Wang, S.K.; Wang, Y.Y.; Lin, Y.N.; Hsieh, T.Y.; Yang, C.Y.; Shen, V.R.; Ho, H.W. Modeling and analysis of Hadoop MapReduce systems for big data using Petri Nets. Appl. Artif. Intell. 2021, 35, 80–104.

- Abukhodair, F.; Alsaggaf, W.; Jamal, A.T.; Abdel-Khalek, S.; Mansour, R.F. An intelligent metaheuristic binary pigeon optimization-based feature selection and big data classification in a MapReduce environment. Mathematics 2021, 9, 2627.

- Xu, Z. Computational intelligence based sustainable computing with classification model for big data visualization on MapReduce environment. Discov. Internet Things 2022, 2, 2.

- Alkathiri, M.; Jhummarwala, A.; Potdar, M.B. Multi-dimensional geospatial data mining in a distributed environment using MapReduce. J. Big Data 2019, 6, 82.

- Gandomi, A.; Reshadi, M.; Movaghar, A.; Khademzadeh, A. HybSMRP: A hybrid scheduling algorithm in Hadoop MapReduce framework. J. Big Data 2019, 6, 106.

- Banchhor, C.; Srinivasu, N. Holoentropy based Correlative Naive Bayes classifier and MapReduce model for classifying the big data. Evol. Intell. 2022, 15, 1037–1050.

- Gheisari, M.; Wang, G.; Bhuiyan, M.Z.A. A survey on deep learning in big data. In Proceedings of the 2017 IEEE International Conference on Computational Science and Engineering (CSE) and IEEE International Conference on Embedded and Ubiquitous Computing (EUC), Guangzhou, China, 21–24 July 2017; Volume 2, pp. 173–180.

- Ramsingh, J.; Bhuvaneswari, V. An efficient MapReduce-based hybrid NBC-TFIDF algorithm to mine the public sentiment on diabetes mellitus–a big data approach. J. King Saud Univ.-Comput. Inf. Sci. 2021, 33, 1018–1029.

- Xia, D.; Zhang, M.; Yan, X.; Bai, Y.; Zheng, Y.; Li, Y.; Li, H. A distributed WND-LSTM model on MapReduce for short-term traffic flow prediction. Neural Comput. Appl. 2021, 33, 2393–2410.

- Tripathi, A.K.; Sharma, K.; Bala, M.; Kumar, A.; Menon, V.G.; Bashir, A.K. A parallel military-dog-based algorithm for clustering big data in cognitive industrial internet of things. IEEE Trans. Ind. Inform. 2020, 17, 2134–2142.

- Maleki, N.; Faragardi, H.R.; Rahmani, A.M.; Conti, M.; Lofstead, J. TMaR: A two-stage MapReduce scheduler for heterogeneous environments. Hum.-Centric Comput. Inf. Sci. 2020, 10, 1–26.

- Narayanan, U.; Paul, V.; Joseph, S. A novel system architecture for secure authentication and data sharing in cloud enabled Big Data Environment. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 3121–3135.

- Selvi, R.T.; Muthulakshmi, I. Modelling the MapReduce based optimal gradient boosted tree classification algorithm for diabetes mellitus diagnosis system. J. Ambient Intell. Humaniz. Comput. 2021, 12, 1717–1730.

- Chawla, T.; Singh, G.; Pilli, E.S. MuSe: A multi-level storage scheme for big RDF data using MapReduce. J. Big Data 2021, 8, 130.

- Natesan, P.; Sathishkumar, V.E.; Mathivanan, S.K.; Venkatasen, M.; Jayagopal, P.; Allayear, S.M. A distributed framework for predictive analytics using Big Data and MapReduce parallel programming. Math. Probl. Eng. 2023, 2023, 6048891.

- Bhattacharya, N.; Mondal, S.; Khatua, S. A MapReduce-based association rule mining using Hadoop cluster—An application of disease analysis. In Innovations in Computer Science and Engineering: Proceedings of the Sixth ICICSE 2018; Springer: Singapore, 2019; pp. 533–541.

- Maheswari, K.; Ramakrishnan, M. Kernelized Spectral Clustering based Conditional MapReduce function with big data. Int. J. Comput. Appl. 2021, 43, 601–611.

- Sardar, T.H.; Ansari, Z. MapReduce-based fuzzy C-means algorithm for distributed document clustering. J. Inst. Eng. (India) Ser. B 2022, 103, 131–142.

- Krishnaswamy, R.; Subramaniam, K.; Nandini, V.; Vijayalakshmi, K.; Kadry, S.; Nam, Y. Metaheuristic based clustering with deep learning model for big data classification. Comput. Syst. Sci. Eng. 2023, 44, 391–406.

- Akhtar, M.N.; Saleh, J.M.; Awais, H.; Bakar, E.A. Map-Reduce based tipping point scheduler for parallel image processing. Expert Syst. Appl. 2020, 139, 112848.

- Rodrigues, A.P.; Chiplunkar, N.N. A new big data approach for topic classification and sentiment analysis of Twitter data. Evol. Intell. 2022, 15, 877–887.

- Madan, S.; Goswami, P. A privacy preservation model for big data in map-reduced framework based on k-anonymisation and swarm-based algorithms. Int. J. Intell. Eng. Inform. 2020, 8, 38–53.

- Nithyanantham, S.; Singaravel, G. Resource and cost aware glowworm mapreduce optimization based big data processing in geo distributed data center. Wirel. Pers. Commun. 2021, 117, 2831–2852.

- Liu, J.; Tang, S.; Xu, G.; Ma, C.; Lin, M. A novel configuration tuning method based on feature selection for Hadoop MapReduce. IEEE Access 2020, 8, 63862–63871.

- Shirvani, M.H. An energy-efficient topology-aware virtual machine placement in cloud datacenters: A multi-objective discrete jaya optimization. Sustain. Comput. Inform. Syst. 2023, 38, 100856.

- Mirza, N.M.; Ali, A.; Musa, N.S.; Ishak, M.K. Enhancing Task Management in Apache Spark Through Energy-Efficient Data Segregation and Time-Based Scheduling. IEEE Access 2024, 12, 105080–105095.

- Sanaj, M.S.; Prathap, P.J. An efficient approach to the map-reduce framework and genetic algorithm based whale optimization algorithm for task scheduling in cloud computing environment. Mater. Today Proc. 2021, 37, 3199–3208.

- Kadkhodaei, H.; Moghadam, A.M.E.; Dehghan, M. Big data classification using heterogeneous ensemble classifiers in Apache Spark based on MapReduce paradigm. Expert Syst. Appl. 2021, 183, 115369.

- Usha Lawrance, J.; Nayahi Jesudhasan, J.V. Privacy preserving parallel clustering based anonymization for big data using MapReduce framework. Appl. Artif. Intell. 2021, 35, 1587–1620.

- Ravuri, V.; Vasundra, S. Moth-flame optimization-bat optimization: Map-reduce framework for big data clustering using the Moth-flame bat optimization and sparse Fuzzy C-means. Big Data 2020, 8, 203–217.

- Li, X.; Xue, F.; Qin, L.; Zhou, K.; Chen, Z.; Ge, Z.; Chen, X.; Song, K. A recursively updated Map-Reduce based PCA for monitoring the time-varying fluorochemical engineering processes with big data. Chemom. Intell. Lab. Syst. 2020, 206, 104167.

- Wang, N.; Chen, F.; Yu, B.; Qin, Y. Segmentation of large-scale remotely sensed images on a Spark platform: A strategy for handling massive image tiles with the MapReduce model. ISPRS J. Photogramm. Remote Sens. 2020, 162, 137–147.

- Tan, X.; Di, L.; Zhong, Y.; Yao, Y.; Sun, Z.; Ali, Y. Spark-based adaptive Mapreduce data processing method for remote sensing imagery. Int. J. Remote Sens. 2021, 42, 191–207.

- Salloum, S.; Huang, J.Z.; He, Y. Random sample partition: A distributed data model for big data analysis. IEEE Trans. Ind. Inform. 2019, 15, 5846–5854.

- Krishna, K.B.; Nagaseshudu, M.; Kumar, M.K. An Effective Way of Processing Big Data by Using Hierarchically Distributed Data Matrix. Int. J. Res. 2019, VIII, 1628–1635.

- Ramakrishnan, U.; Nachimuthu, N. An Enhanced Memetic Algorithm for Feature Selection in Big Data Analytics with MapReduce. Intell. Autom. Soft Comput. 2022, 31, 1547–1559.

- Seifhosseini, S.; Shirvani, M.H.; Ramzanpoor, Y. Multi-objective cost-aware bag-of-tasks scheduling optimization model for IoT applications running on heterogeneous fog environment. Comput. Netw. 2024, 240, 110161.

- Lamrini, L.; Abounaima, M.C.; Talibi Alaoui, M. New distributed-topsis approach for multi-criteria decision-making problems in a big data context. J. Big Data 2023, 10, 97.

- Dhamodharavadhani, S.; Rathipriya, R. Region-wise rainfall prediction using mapreduce-based exponential smoothing techniques. In Advances in Big Data and Cloud Computing: Proceedings of ICBDCC18; Springer: Singapore, 2019; pp. 229–239.

- Narayana, S.; Chandanapalli, S.B.; Rao, M.S.; Srinivas, K. Ant cat swarm optimization-enabled deep recurrent neural network for big data classification based on MapReduce framework. Comput. J. 2022, 65, 3167–3180.

- Kong, F.; Lin, X. The method and application of big data mining for mobile trajectory of taxi based on MapReduce. Clust. Comput. 2019, 22 (Suppl. 5), 11435–11442.

- Rao, P.S.; Satyanarayana, S. Privacy preserving data publishing based on sensitivity in context of Big Data using Hive. J. Big Data 2018, 5, 1–20.

- Brahim, A.A.; Ferhat, R.T.; Zurfluh, G. Model Driven Extraction of NoSQL Databases Schema: Case of MongoDB. In Proceedings of the 11th International Joint Conference on Knowledge Discovery, Knowledge Engineering and Knowledge Management (IC3K 2019), Vienna, Austria, 17–19 September 2019; pp. 145–154.

- Akhtar, M.M.; Shatat, A.S.A.; Al-Hashimi, M.; Zamani, A.S.; Rizwanullah, M.; Mohamed, S.S.I.; Ayub, R. MapReduce with deep learning framework for student health monitoring system using IoT technology for big data. J. Grid Comput. 2023, 21, 67.

- Madan, S.; Goswami, P. k-DDD measure and mapreduce based anonymity model for secured privacy-preserving big data publishing. Int. J. Uncertain. Fuzziness Knowl.-Based Syst. 2019, 27, 177–199.

- Zhu, Y.; Samsudin, J.; Kanagavelu, R.; Zhang, W.; Wang, L.; Aye, T.T.; Goh, R.S.M. Fast Recovery MapReduce (FAR-MR) to accelerate failure recovery in big data applications. J. Supercomput. 2020, 76, 3572–3588.

- Banchhor, C.; Srinivasu, N. FCNB: Fuzzy Correlative naive bayes classifier with mapreduce framework for big data classification. J. Intell. Syst. 2019, 29, 994–1006.

- Vennila, V.; Kannan, A.R. Hybrid parallel linguistic fuzzy rules with canopy mapreduce for big data classification in cloud. Int. J. Fuzzy Syst. 2019, 21, 809–822.

- Gupta, Y.K.; Kamboj, S.; Kumar, A. Proportional exploration of stock exchange data corresponding to various sectors using Apache Pig. Int. J. Adv. Sci. Technol. (IJAST) 2020, 29, 2858–2867.

- Mitchell, T. Twenty Newsgroups [Dataset]. UCI Machine Learning Repository. 1997. Available online: https://doi.org/10.24432/C5C323 (accessed on 1 February 2020).

- Zhao, Y.; Yoshigoe, K.; Xie, M.; Bian, J.; Xiong, K. L-PowerGraph: A lightweight distributed graph-parallel communication mechanism. J. Supercomput. 2020, 76, 1850–1879.

- Zhao, Y.; Yoshigoe, K.; Xie, M.; Zhou, S.; Seker, R.; Bian, J. Lightgraph: Lighten communication in distributed graph-parallel processing. In Proceedings of the 2014 IEEE International Congress on Big Data, Anchorage, AK, USA, 27 June–2 July 2014; pp. 717–724.

- Zhao, Y.; Yoshigoe, K.; Xie, M.; Zhou, S.; Seker, R.; Bian, J. Evaluation and analysis of distributed graph-parallel processing frameworks. J. Cyber Secur. Mobil. 2014, 3, 289–316.

| S. No | Authors | Year | Method | Dataset | Achievements | Pros | Cons |

|---|---|---|---|---|---|---|---|

| 1 | Issa Mohammed Saeed Ali and Dr. D. Hariprasad [2] | 2023 | MRHPOELM | Higgs dataset, glass, Diabetes, Cleveland, Vehicle, and Wine dataset | Higgs dataset: Accuracy 99.32%, precision 96.12, recall 97.64%, F-measure 96.87%, specificity 97.11%, and time 51.09 s | The hybrid feature selection minimized the imbalance issues | Failed to manage multi-source data |

| 2 | Ankit Kumar et al. [1] | 2023 | Hadoop map-reducing framework | Hadoop | Data processing time by 30% | The output key and the value pairs were reset for the effective retrieval of a wide range of information | Incompatibility of diverse nodes |

| 3 | DEVENDRA DAHIPHALE [21] | 2023 | Distributed and parallel algorithm for 2-ECCs BiECCA | - | Processing time 144 s for 8 input records | The vast database enabled the stream processing of data | The small and large star process manages only one edge at a time |

| 4 | Md. Mobin Akhtar et al. [73] | 2023 | IoT-based Student Health Monitoring System | Healthcare dataset | Accuracy 94.02%, precision 89.73%, False positive rate (FPR) 6.44, F1 score 92% | The auto-encoder and one-dimension convolutional neural network effectively extracted the deep characteristics | Low precision |

| 5 | M.S. Sanaj and P.M. Joe Prathap [56] | 2021 | M-CWOA | Healthcare dataset | - | Features are effectively reduced with the application of MRQFLDA algorithm. | The model ignored the important performance parameters |

| 6 | Neha Verma et al. [22] | 2020 | GA-WOA | - | Processing time 55.68 ms, map process execution time 31.5 ms, virtual memory 25.08 bytes | The Apriori algorithm employed with cloud architecture led to the effective mining of data. | Lower data handling ability |

| 7 | So-Young Choi and Kyungyong Chung [3] | 2020 | Hadoop platform-based MapReduce system | Peer-to-peer dataset | Recall 67.39%, precision 81.12%, F-measure 73.62% | The reduction in key values improved the processing time | Limited to processing unstructured data |

| 8 | Tanvir H. Sardar and Zahid Ansar [23] | 2022 | MapReduce-based Fuzzy C-Medoids | 20_newsgroups | Execution time 8 node: 285 s, performance ratio 1.31 | The utilized algorithm was scalable with large-scale datasets | The quality of the clusters was not evaluated |

| 9 | Priyank Jain et al. [8] | 2019 | SMR model | Twitter dataset | Information loss 5.1%, running time 12.19 ms | The difference in processing time minimized with a larger data size | The security of the big data was not maintained in real-world conditions. |

| 10 | Surendran Rajendran et al. [19] | 2021 | CPIO-FS | ECBDL14-ROS dataset, epsilon dataset | Training time 318.54 s, AUC 72.70% | The HHO-based deep belief network (DBN) model improved the classification performance. | Limited quality of service parameters |

| 11 | Shaopeng Guan et al. [24] | 2024 | The Hadoop-based secure storage scheme | Cloud big data | Encryption storage efficiency improved by 27.6% | The dual-thread encryption mode improved the speed of encryption | Required an advanced computing framework for the efficient calculation of ciphertext. |

| 12 | Sumalatha Saleti and Subramanyam R.B.V. [10] | 2019 | CRMAP | Kosarak, BMSWebView2, MSNBC, C20-D2-N1K-T20 | Minimum support 0.25%, execution time 25 s | Resolved the sequential pattern issues of big data | The map-reducing framework was limited to a single data-processing model |

| 13 | Thanh Dat Dang et al. [25] | 2019 | Trust-based MapReduce framework | - | Execution time 5.5 s | Low data processing costs | Lower security in processing |

| 14 | Hamidreza Kadkhodaei et al. [57] | 2021 | Distributed Heterogeneous Boosting-inspired ensemble classifier | CEN, EPS, COV, POK, KDD, SUSY, HIG | Classification accuracy of 75%, performance of 16 for 32 mappers, and scalability | The performance of the distributed heterogeneous ensemble classifier was effective. | Lower accuracy for certain datasets |

| 15 | G. Venkatesh and K. Arunesh [9] | 2019 | Layers 3 traffic-aware clustering method | Reuters-21578, 20 Newsgroups, WebACE, TREC | Accuracy 89%, execution time 14.165 s | Improved accuracy with the three-layered structure | Higher execution time |

| 16 | Junghee Jo and Kang-Woo Lee [26] | 2019 | DELT | Marmot | Data preparation time 116 s | Smaller performance degradation with optimization | Poor performance in dealing with spatial queries with geospatial big data |

| 17 | K. Meena and J. Sujatha [27] | 2019 | Co-EANFS | UCI datasets | Accuracy 96.45%, error rate 3.55% | The success ratio of the recommender system was higher | Required regression models for effective performance |

| 18 | Omkaresh Kulkarni et al. [28] | 2020 | FrSparse FCM | Skin dataset and localization dataset (UCI) | Accuracy 90.6012%, DB Index 5.33 | The sparse algorithm effectively computed the optimal centroid in the mapper phase. | Lower classification accuracy |

| 19 | Hossein Azgomi and Mohammad Karim Sohrabi [29] | 2021 | MR-MVPP | TPC-H dataset (TPC Benchmark H) | A coverage rate of 60% for 5 queries | Better performance in terms of coverage rate | The response time for analytical queries on online platforms was higher. |

| 20 | Dai-Lun Chiang et al. [30] | 2021 | Petri net model | Corporate datasets | - | The file and rule checker reduced the errors in the analysis system. | No real-time performance evaluation |

| 21 | Suman Madan and Puneet Goswami [74] | 2019 | DDDG anonymization | UC Irvine dataset | Classification accuracy 89.77% | The DDDG algorithm modifies the map-reduce technique and preserves data privacy. | Needed modifications in the optimization algorithms |

| 22 | Yongqing Zhu et al. [75] | 2020 | FAR-MR | Apache Hadoop YARN | Performance time 500 s | Improved performance in task failure recovery | Higher task failures |

| 23 | Mandana Farhang and Faramarz Safi-Esfahani [4] | 2020 | SWANN | Apache, W.E | Execution time | The neural network algorithm accurately estimated the execution time of the tasks. | Limited in learning algorithms for computing weights |

| 24 | Felwa Abukhodair et al. [31] | 2021 | MOBDC-MR | Healthcare dataset | Computational time 5.92 s, accuracy 92.86% | The hyperparameter tuning of the LSTM model enhanced the performance of the classifier. | The classification accuracy of the system was lower. |

| 25 | Manikandan Ramachandran et al. [15] | 2021 | MIMR-MQC | CBTRUS dataset | Detection time 40 ms, accuracy 90%, computational complexity 40 ms | Better handling of large-scale data | Reduced range of test cases |

| 26 | Chitrakant Banchhor and N. Srinivasu [76] | 2019 | FCNB | Skin segmentation and localization dataset | Sensitivity 94.79%, specificity 88.89%, accuracy 91.78% | Low time complexity | Classification performance was lower for large data conditions |

| 27 | Josephine Usha Lawrance and Jesu Vedha Nayahi Jesudhasan [58] | 2021 | PCAA | Shlearn dataset | Execution time 40 ms, completion time 88 ms | Map-reduce and ML technologies identified the intrusions | Higher compatibility issues |

| 28 | V. Vennila and A. Rajiv Kannan [77] | 2019 | LFR-CM | Imbalance DNA dataset | Input/output cost 35 ms, classification accuracy 90%, classification time 20 ms, run time 30 ms | The linguistic fuzzy reduced the classification time | Generalized fuzzy Euler graphs were needed for better classification |

| 29 | Fansheng Kong and Xiaola Lin [70] | 2019 | Map-reduce-based moving trajectory | MapReduce and HBase distributed database | - | Trajectory characteristics were obtained with the mining algorithm | Higher complexity in data mining |

| 30 | Ankit Kumar et al. [78] | 2020 | Replication-Based Query Management system | High indexing database | Execution time 40 ms, completion time 88 ms | The execution time of the Hadoop architecture was lower | Higher complexity issues during processing |

| 31 | Dr. Prasadu Peddi [5] | 2019 | HDFS | Stocks dataset | - | Better performance with unstructured log data | Higher replications of block data |

| 32 | Deepak Kumar and Vijay Kumar Jha [17] | 2021 | NKM | Hospital Compare Datasets | Execution time 350 ms, retrieval time 20,000 mb, F-measure 85%, precision 96%, recall 92.5% | The query optimization process enhanced the performance with larger datasets. | Higher retrieval time |

| 33 | Zheng Xu [32] | 2022 | CICC-BDVMR | Skin segmentation dataset | Accuracy 82.80%, TPR 86.27%, TNR 79.06% | Map-reduce and ML technologies identified the intrusions | The detection outcome was lesser with more data |

| 34 | Mazin Alkathiri [33] | 2019 | Multi-dimensional geospatial data mining based on Apache Hadoop ecosystem | Spatial dataset | Processing time 140 s | Big geospatial data processing and mining | Lacks a visualization interface |

| 35 | Abolfazl Gandomi et al. [34] | 2019 | HybSMRP | - | Completion time 103 s | Delay scheduling reduced the number of local tasks | The effectiveness of the scheduler was not evaluated with larger datasets |

| 36 | Muhammad Asif et al. [11] | 2022 | MR-IMID | NSL-KDD dataset | Training and validation detection accuracy 97.6%, detection miss rate 2.4% | Map-reduce and ML technologies identified the intrusions | The detection outcome was lesser with more data |

| 37 | Amal Ait Brahim et al. [72] | 2019 | Automatic model-driven extraction | K-anonymization based dataset | Information loss 1.33%, classification accuracy 79.76% | Higher data privacy with optimization | Low effectiveness |

| 38 | Chitrakant Banchhor and N. Srinivasu [35] | 2022 | HCNB-MRM | Localization dataset and skin dataset | Accuracy of 93.5965%, and 94.3369% | The map-reduce framework parallelly handled the data from distributed sources. | Not evaluated with deep learning approaches |

| 39 | P. Srinivasa Rao and S. Satyanarayana [71] | 2018 | NSB | A real-world GPS trajectory dataset | - | The Apriori algorithm effectively mined large amounts of data | The absence of cloud computing systems increased the execution in real-time conditions |

| 40 | Suman Madan and Puneet Goswami [51] | 2020 | K-anonymization and swarm-optimized map-reduce framework | K-anonymization based dataset | Information loss 1.33%, classification accuracy 79.76% | Higher data privacy with optimization | Low effectiveness |

| 41 | J. Ramsingh and V. Bhuvaneswari [37] | 2021 | NBC-TFIDF | - | Execution time 54 s, precision 72%, recall 75%, F-measure 73% | Higher performance with the multi-mode cluster | Low precision |

| 42 | Khaled Alwasel et al. [16] | 2021 | BigDataSDNSim | - | Completion time 158.96 s, transmission time 26.98 s, processing time 130 s | Seamless task scheduling | High transmission delay in cloud environments |

| 43 | Dawen Xia et al. [38] | 2021 | WND-LSTM | A real-world GPS trajectory dataset | MAPE 65.1%, RMSE 1.4, MAE 0.10, ME 0.44 | The efficiency of short-term traffic prediction was improved | The accuracy and scalability of the model were not evaluated |

| 44 | S. Nithyanantham and G. Singaravel [52] | 2021 | MM-MGSMO | Amazon EC2 big dataset | Computational cost, storage capacity 45 MB, FPR 12.5%, data allocation efficiency 90% | Processed massive data by selecting cost-optimized virtual systems | Low data privacy |

| 45 | Vasavi Ravuri and S. Vasundra [59] | 2020 | MFOB-MP | Global Terrorism Database | Classification accuracy of 95.806%, Dice coefficient of 99.181%, and Jaccard coefficient of 98.376% | Handled big data with large sample size | High dimensionality characteristics were not considered |

| 46 | Han Lin et al. [6] | 2018 | Map-reduce classification | - | - | Parallel clustering approach improved the potential of big data clustering | Load balancing issues |

| 47 | Terungwa Simon Yange et al. [12] | 2020 | BDAM4N | NHIS data | Response time 0.93 s, throughput 84.73% | Processed both structured and unstructured database | Delay in processing data |

| 48 | Xintong Li et al. [60] | 2020 | RMPCA | - | Computational cost 0.250 s | Detected the errors in time-varying processes | Reduced compatibility of the updated Bayesian fusion method |

| 49 | Ning Wang et al. [61] | 2020 | A distributed image segmentation strategy | - | Response time 0.93 s, throughput 84.73% | Efficiently refined the raw data before training | Low effectiveness in the context of complex data |

| 50 | Xicheng Tan et al. [62] | 2021 | Adaptive Spark-based remote sensing data processing method | Resilient distributed dataset (RDD) | Execution time 45 Vms | Higher efficiency with map-reduce-based remote processing | Limited to pixel-based classification |

| 51 | Salman Salloum et al. [63] | 2019 | RSP | RDD | Execution time 12 s | The probability distribution in data subsets was similar to that of the entire dataset | Necessitated a partitioning algorithm for random subspace models |

| 52 | K. Bala Krishna et al. [64] | 2019 | HDM | RDD | Time consumption 10 ms | Better execution control of HDM bundles | Low fault resilience |

| 53 | Celestine Iwendi et al. [7] | 2019 | Temporal Louvain approach with TF/IDF Algorithm | Reuters-21578 and 20 Newsgroups | Execution time 69.68 s, accuracy 94% | The complicated structure of the streaming data was effectively obtained | Higher execution time for complex data |

| 54 | Jun Liu et al. [53] | 2020 | Novel configuration parameter tuning based on a feature selection algorithm | - | Execution time 32.5 s | The K-means algorithm amplified the performance of parameter selection | Higher running time in the Hadoop model |

| 55 | Umanesan Ramakrishnan and Nandhagopal Nachimuthu [65] | 2022 | Memetic algorithm for feature selection in big data analytics with MapReduce | Epsilon and ECBDL14-ROS datasets | AUC 0.75 | The classification process improved the partial outcome | Lacked parameter tuning |

| 56 | Namrata Bhattacharya et al. [45] | 2019 | Association Rule Mining-based MapReduce framework | Comma-separated file | Transaction time 140 ms | The Apriori algorithm effectively mined large amounts of data | The absence of cloud computing systems increased the execution in real-time conditions |

| 57 | K. Maheswari and M. Ramakrishnan [46] | 2021 | KSC-CMEMR | HTRU2 dataset and Hipparcos star dataset | Clustering accuracy 77%, clustering time 45 ms, space complexity 44 MB | KSC-CMEMR improved the clustering of large data with minimum time | Fewer data points were evaluated |

| 58 | Tanvir H. Sardar and Zahid Ansar [47] | 2022 | Novel MapReduce-based fuzzy C-means for big document clustering | 20_newsgroups dataset | Execution time 108.27 s | Low cluster overhead | The quality of clusters was not evaluated |

| 59 | R. Krishnaswamy et al. [48] | 2023 | MR-HDBCC | Iris, Wine, Glass, Yeast-1 dataset, Yeast-2 dataset | Computational time 11.85 s, accuracy 95.43%, formation of clusters 25.42 ms | Effective performance on density-based clustering | Large sets of data were not classified |

| 60 | Seifhossein et al. [66] | 2024 | MoDGWA | BoT-IoT dataset | The model attained reductions of 0.55%, 7.28%, 10.20%, and 45.83% over other existing models in terms of makespan, ToC, TSFF, and the cost score, respectively. | Reduced the execution cost and improved the overall reliability | Efficiency requires improvement |

| 61 | Nada M. Mirza [55] | 2024 | Dynamic Smart Flow Scheduler | Real-time load data | The average resource consumption was reduced by 19.9%, from an average of 94.1 MiB to 75.6 MiB, accompanied by a 24.3% reduction in the number of tasks | The model offers a more effective and responsive distributed data-processing environment | Scalability requires enhancement |

| 62 | Mohammad Nishat Akhtar et al. [49] | 2020 | Tipping point scheduling algorithm-based map-reducing framework | Image dataset | Running time 60 s for 2 nodes, data processed 12/s | Substantially higher speed with a larger number of nodes | A higher gap in sequential processing |

| 63 | S. Dhamodharavadhani and R. Rathipriya [68] | 2019 | MapReduce-based Exponential Smoothing | Indian rainfall dataset, Tamil Nadu state rainfall dataset | MSE accuracy 83.8% | Highly parallelizable algorithms were executed over large databases | Optimization was required for a better initialization process |

| 64 | Satyala Narayana et al. [69] | 2022 | ACSO-enabled Deep RNN | Cleveland, Switzerland, and Hungarian datasets | Specificity 88.4%, accuracy 89.3%, sensitivity 90%, threat score 0.827 | Data from the diverse distributed sources were managed in a concurrent way | Lower privacy in healthcare datasets |

| 65 | Anisha P. Rodrigues and Niranjan N. Chiplunkar [50] | 2022 | HL-NBC | Hadoop, Flume, Hive datasets | Accuracy 82%, precision 71%, recall 70%, F-measure 71% | Topic classification classified the texts effectively | Cross-lingual sentimental classification issues |

| 66 | Mouad Banane and Abdessamad Belangour [20] | 2020 | Model-Driven Approach | LUBM1, LUBM3, LUBM2 datasets | Runtime 400 ms | The model-driven engineering approach effectively transformed the queries into Hive, Pig, or Spark scripts. | Efficiency issues in processing semantic data flow |

| 67 | Wuhui Chen et al. [13] | 2020 | Map-reduce-based QoS data placement technique | Microsoft Bing’s data centers | Execution time 10,000 s, communication cost 5000 MB | Minimized the joint data placement issues in map-reduce applications | Higher communication and energy costs |

| 68 | Ashish Kumar Tripathi et al. [39] | 2020 | MR-MDBO | Susy, pokerhand, DLeOM, sIoT, IoT-Botnet | Computation time 9.10 × 10³, F-measure 0.846 | The speed analysis of the algorithm exhibited higher scalability | Not evaluated with real-world applications of big data |

| 69 | Neda Maleki et al. [40] | 2020 | TMaR | Amazon EC2 big dataset | Completion time 1500 s, time duration 110 s, relative start time 150 s | Reduced the makespan with network traffic | No parallelism between map and reduce tasks |

| 70 | Satvik Vats and B. B. Sagar [14] | 2020 | Independent time-optimized hybrid infrastructure | Newsgroup dataset | Time consumption 41 s | Data independence with sharing of resources | Effective with fewer data nodes |

| 71 | Loubna Lamrini et al. [67] | 2023 | Topsis approach for multi-criteria decision-making | Generated dataset, mobile price dataset, credit card clients dataset | Execution time 90 s | Dealt with uncertainties in iterations and larger datasets | Longer computation time |

| 72 | Uma Narayanan et al. [41] | 2022 | SADS-Cloud | - | Information loss 0.02%, compression ratio 0.06%, throughput 7 Mbps, encryption time 0.07 s, decryption time 0.03 s, efficiency 58.12 s | Managed big data over a cloud environment | Encryption and decryption issues |

| 73 | R. Thanga Selvi and I. Muthulakshmi [42] | 2021 | MRODC | Pima Indian dataset | Precision 91.8%, recall 90.89%, accuracy 88.67%, F1 score 91.34% | Improved clustering efficiency | No real-time diagnosis of patient information |

| 74 | Tanvi Chawla et al. [43] | 2021 | MuSe | LUBM and WatDiv | Execution time 2500 ms | Effectively solved triple pattern matching queries in big data | Low SPARQL optimization process |

| 75 | P. Natesan et al. [44] | 2023 | MR-MLR | MSD dataset | Mean absolute error 20, R-squared (R²) 0.90, RMSE 200 | Constant performance even for an increased subset of datasets | Higher complexity |

| Metric | Value |
|---|---|
| Accuracy | 0.707 |
| Precision | 0.718 |
| Recall | 0.697 |
| F1 Score | 0.694 |
| Jaccard Index | 0.542 |
| Response Time | 0.014 |
| Execution Time | 0.014 |
| Information Loss | 0.293 |
| Specificity | 0.697 |
| APS | 3.734 |

| Feature Count | Accuracy | Precision | Recall | F1 Score | Jaccard Index | Response Time (s) | Speedup |
|---|---|---|---|---|---|---|---|
| 250 | 0.428 | 0.482 | 0.420 | 0.402 | 0.261 | 1.829 | 0.007 |
| 500 | 0.601 | 0.621 | 0.591 | 0.586 | 0.424 | 1.168 | 0.014 |
| 750 | 0.672 | 0.681 | 0.662 | 0.657 | 0.501 | 1.117 | 0.016 |

| Node Count | Single-Node Time (s) | Multi-Node Time (s) | Speedup |
|---|---|---|---|
| 2 | 0.0205 | 1.8087 | 0.0114 |
| 4 | 0.0728 | 1.4693 | 0.0496 |
| 8 | 0.0599 | 1.7569 | 0.0341 |
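
The Speedup column in the node-count table appears to be the ratio of single-node time to multi-node time; this is inferred from the reported values, not stated explicitly. The following minimal Python sketch recomputes that ratio from the tabulated timings under this assumption. The function name `speedup` and the dictionary `timings` are illustrative names, not part of the original study.

```python
# Timings in seconds, copied from the node-count table:
# node count -> (single-node time, multi-node time).
timings = {
    2: (0.0205, 1.8087),
    4: (0.0728, 1.4693),
    8: (0.0599, 1.7569),
}


def speedup(single_node_s: float, multi_node_s: float) -> float:
    """Return single-node time divided by multi-node time (dimensionless).

    Assumption: this ratio is the definition of speedup used in the table.
    A value below 1 means the multi-node run was slower than the single-node run.
    """
    if multi_node_s <= 0:
        raise ValueError("multi-node time must be positive")
    return single_node_s / multi_node_s


for nodes, (single_s, multi_s) in timings.items():
    ratio = speedup(single_s, multi_s)
    verdict = "faster" if ratio > 1 else "slower"
    print(f"{nodes} nodes: speedup = {ratio:.4f} (multi-node run is {verdict})")
```

Under this definition, the recomputed ratios agree with the tabulated Speedup values to within the rounding of the reported timings. All three are well below 1, meaning that in these runs the multi-node configuration took longer than the single-node one.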

| Metric | Single-Node Result | Multi-Node Result | Improvement/Speedup | Number of Nodes |
|---|---|---|---|---|
| Accuracy | 0.672 | 0.672 | N/A | 1 (Single), 2 (Multi) |
| Precision | 0.681 | 0.681 | N/A | 1 (Single), 2 (Multi) |
| Recall | 0.662 | 0.662 | N/A | 1 (Single), 2 (Multi) |
| F1 Score | 0.657 | 0.657 | N/A | 1 (Single), 2 (Multi) |
| Jaccard Index | 0.501 | 0.501 | N/A | 1 (Single), 2 (Multi) |
| Response Time (s) | 3.191 | 5.261 | Speedup: 0.607× | 1 (Single), 2 (Multi) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Abdalla, H.B.; Kumar, Y.; Zhao, Y.; Tosi, D. A Comprehensive Survey of MapReduce Models for Processing Big Data. Big Data Cogn. Comput. 2025, 9, 77. https://doi.org/10.3390/bdcc9040077