PK-Judge: Enhancing IP Protection of Neural Network Models Using an Asymmetric Approach

Abstract

1. Introduction

- Application of Public-Key Digital Signatures: PK-Judge embeds cryptographic digital signatures into neural networks, enabling secure ownership validation independent of the watermark extraction process.

- Challenge-Response Mechanism: A robust challenge-response protocol is incorporated to mitigate the risk of replay attacks, ensuring that ownership claims are trustworthy and resilient to adversarial strategies.

- Error Correction Codes (ECC): By employing ECC, PK-Judge achieves an Effective Bit Error Rate (EBER) of zero, ensuring the integrity of embedded watermarks even under adversarial scenarios, such as model fine-tuning, pruning, or overwriting.

- Ownership Verification: A new requirement for ownership verification is proposed, leveraging the principle of separation of privilege to enhance security and guide future watermarking designs.
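The challenge-response idea in the second contribution can be illustrated with a minimal sketch of why a fresh challenge defeats replay. It assumes a Schnorr identification protocol over a deliberately tiny toy group ($p = 2039$, $q = 1019$, $g = 4$). The protocol choice, the parameters, and all function names are illustrative assumptions, not PK-Judge's actual mechanism. Real deployments need cryptographically sized groups or a pairing-friendly curve such as BLS12-381.

```python
import secrets

# Toy parameters (INSECURE, illustration only): p = 2q + 1 is a safe prime and
# g = 4 (a quadratic residue) generates the subgroup of prime order q.
P, Q, G = 2039, 1019, 4


def keygen() -> tuple[int, int]:
    """Owner's secret x and public key y = g^x mod p."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def prover_commit() -> tuple[int, int]:
    """Prover draws a fresh nonce r and sends the commitment t = g^r mod p."""
    r = secrets.randbelow(Q - 1) + 1
    return r, pow(G, r, P)


def verifier_challenge() -> int:
    """Verifier sends a fresh random challenge c in [0, q)."""
    return secrets.randbelow(Q)


def prover_respond(x: int, r: int, c: int) -> int:
    """Response s = r + c*x mod q; computing it requires the secret x."""
    return (r + c * x) % Q


def verifier_check(y: int, t: int, c: int, s: int) -> bool:
    """Accept iff g^s == t * y^c (mod p)."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P


x, y = keygen()
r, t = prover_commit()
c = verifier_challenge()
s = prover_respond(x, r, c)
print(verifier_check(y, t, c, s))  # True: honest run

# A recorded transcript (t, s) does not answer a different, fresh challenge.
print(verifier_check(y, t, (c + 1) % Q, s))  # False: replay rejected
```

The verifier chooses c only after receiving t. As a result, an eavesdropper who recorded an earlier (t, c, s) cannot reuse it. A replay succeeds only if the new challenge happens to equal the old one, which occurs with probability 1/q.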

2. Related Work

2.1. White-Box Watermarking

2.2. Black-Box Watermarks

3. Watermarking Requirements

- Fidelity: The watermarked model must retain its accuracy and effectiveness in performing its intended task. The probability that it misclassifies a data point x from the dataset D (excluding the trigger dataset T) should be bounded by $\epsilon$, where $\epsilon$ is a negligible parameter.

- Uniqueness: The embedded watermark should be distinct and specific to a particular model and its owner.

- Integrity: The probability that a non-watermarked model is incorrectly identified as watermarked should be at most $\epsilon_1$. Furthermore, any legitimate author must be able to prove ownership with probability at least $1 - \epsilon_2$, where $\epsilon_1$ and $\epsilon_2$ are negligible parameters.

- Efficiency: The watermarking process—embedding, extraction, and verification—should be computationally efficient, ensuring minimal overhead in model operation, as highlighted in [11].

- Universality: The watermarking technique should be versatile, making it applicable across various architectures and types of neural network models.


- Ownership Verification Requirement: A watermarking scheme must authenticate ownership through a process that is independent of watermark extraction. This ensures that an adversary who corrupts the extraction process cannot compromise verification.
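The Integrity requirement above bounds the false-positive probability. For a threshold-based detector, this bound can be computed exactly. Suppose a detector accepts a model when the Hamming distance between the extracted bits and the expected N-bit watermark is at most $\lfloor \theta N \rfloor$. Suppose further, as a simplification rather than a claim from the paper, that a non-watermarked model yields independent, uniformly random bits. Then $P_{fp} = \sum_{i=0}^{\lfloor \theta N \rfloor} \binom{N}{i} / 2^N$. The function name and the chosen thresholds are illustrative.

```python
from fractions import Fraction
from math import comb, floor


def false_positive_prob(n_bits: int, threshold: float) -> Fraction:
    """Exact P[Hamming distance <= floor(threshold * n_bits)] when the extracted
    bits are independent and uniformly random (non-watermarked model assumption)."""
    max_errors = floor(threshold * n_bits)
    accepted = sum(comb(n_bits, i) for i in range(max_errors + 1))
    return Fraction(accepted, 2 ** n_bits)


for n in (64, 256, 768):
    for theta in (0.25, 0.10, 0.0):
        p = false_positive_prob(n, theta)
        print(f"N={n:4d}  theta={theta:.2f}  P_fp={float(p):.3e}")
```

Increasing N or lowering θ both shrink $P_{fp}$, which keeps $\epsilon_1$ negligible.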

4. Background

4.1. Digital Signatures

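- KeyGen: Generate an asymmetric key pair $(sk, pk) \leftarrow \mathrm{KeyGen}(1^{\lambda})$.

- Sign: Create a signature $\sigma \leftarrow \mathrm{Sign}(sk, m)$ on a message $m$.

- Verify: Output $\mathrm{Verify}(pk, m, \sigma) \in \{0, 1\}$, which is 1 (accept) if $\sigma$ is a valid signature on $m$ under $pk$ and 0 (reject) otherwise.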
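The three algorithms above can be sketched in a self-contained way. The paper's BLS12-381 signatures require an external pairing library. To avoid that dependency, this sketch swaps in a Lamport one-time signature built only on SHA-256. This is not the scheme PK-Judge uses, and each Lamport key pair may safely sign only one message.

```python
import hashlib
import secrets

N_BITS = 256  # SHA-256 digest length in bits


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def _digest_bits(message: bytes) -> list[int]:
    d = _h(message)
    return [(d[i // 8] >> (7 - i % 8)) & 1 for i in range(N_BITS)]


def keygen():
    """KeyGen: sk is 256 pairs of random 32-byte strings; pk holds their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(N_BITS)]
    pk = [(_h(a), _h(b)) for a, b in sk]
    return sk, pk


def sign(sk, message: bytes) -> list[bytes]:
    """Sign: for each digest bit, reveal the secret half selected by that bit."""
    return [pair[bit] for pair, bit in zip(sk, _digest_bits(message))]


def verify(pk, message: bytes, signature: list[bytes]) -> bool:
    """Verify: True iff every revealed value hashes to the matching public half."""
    if len(signature) != N_BITS:
        return False
    return all(
        _h(s) == pair[bit]
        for s, pair, bit in zip(signature, pk, _digest_bits(message))
    )


sk, pk = keygen()
msg = b"ownership message"
sig = sign(sk, msg)
print(verify(pk, msg, sig))                # True
print(verify(pk, b"forged message", sig))  # False
```

Only pk needs to be published. Anyone holding it can check a signature, but only the holder of sk can produce one. This asymmetry is what the ownership proof relies on.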

4.2. Error Correction Codes (ECC)

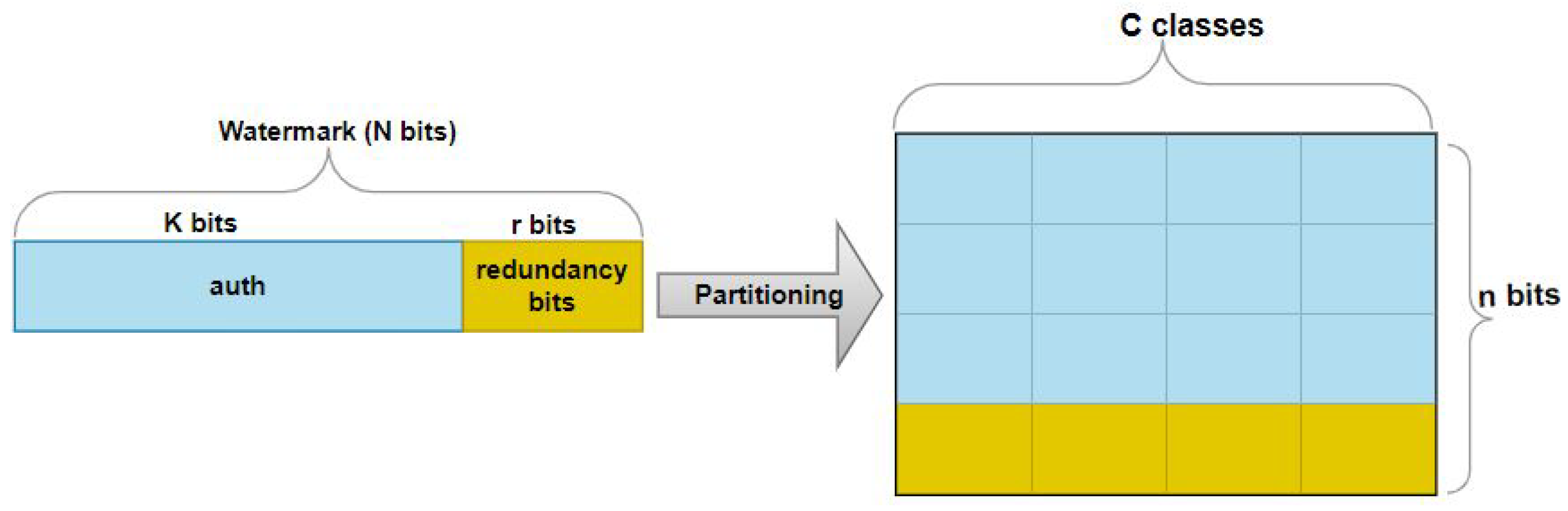

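- Encoding: Transforms a k-bit message $m$ into an N-bit codeword $c = \mathrm{Enc}(m)$, with $N > k$, adding redundancy for error resilience.

- Decoding: Recovers the original message $m = \mathrm{Dec}(c')$ from a corrupted codeword $c'$, provided the number of errors stays within the code's correction capability.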
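The encoding and decoding steps above can be illustrated with a classical small code. PK-Judge uses Reed–Solomon codes. To keep this sketch dependency-free, it swaps in a Hamming(7,4) code, which maps each 4-bit block to a 7-bit codeword (k = 4, N = 7) and corrects any single bit error per block. The function names are illustrative.

```python
def hamming74_encode(data_bits: list[int]) -> list[int]:
    """Encode a bit list (length a multiple of 4) into 7-bit Hamming codewords."""
    if len(data_bits) % 4:
        raise ValueError("length must be a multiple of 4")
    out = []
    for i in range(0, len(data_bits), 4):
        d1, d2, d3, d4 = data_bits[i:i + 4]
        p1 = d1 ^ d2 ^ d4
        p2 = d1 ^ d3 ^ d4
        p3 = d2 ^ d3 ^ d4
        out += [p1, p2, d1, p3, d2, d3, d4]  # codeword positions 1..7
    return out


def hamming74_decode(code_bits: list[int]) -> list[int]:
    """Correct up to one flipped bit per 7-bit block and return the data bits."""
    if len(code_bits) % 7:
        raise ValueError("length must be a multiple of 7")
    out = []
    for i in range(0, len(code_bits), 7):
        c = list(code_bits[i:i + 7])
        s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # parity check over positions 1, 3, 5, 7
        s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # positions 2, 3, 6, 7
        s3 = c[3] ^ c[4] ^ c[5] ^ c[6]  # positions 4, 5, 6, 7
        error_pos = s1 + 2 * s2 + 4 * s3  # syndrome = 1-based error position, 0 if none
        if error_pos:
            c[error_pos - 1] ^= 1
        out += [c[2], c[4], c[5], c[6]]
    return out


message = [1, 0, 1, 1, 0, 0, 1, 0]
codeword = hamming74_encode(message)  # k = 8 bits -> N = 14 bits
corrupted = codeword.copy()
corrupted[2] ^= 1   # one error in the first block
corrupted[12] ^= 1  # one error in the second block
print(hamming74_decode(corrupted) == message)  # True
```

Reed–Solomon codes apply the same principle at the byte level and tolerate bursts of errors, which makes them a better fit for longer watermarks.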

5. Materials and Methods

5.1. Security Model

5.2. Neural Network Watermarking as a Noisy Channel

5.3. Increasing Capacity and Reducing the Threshold

5.4. Why Symmetric Key Schemes Are Not Suitable Authenticators

5.5. Applying Digital Signatures as Authenticators in the Watermark

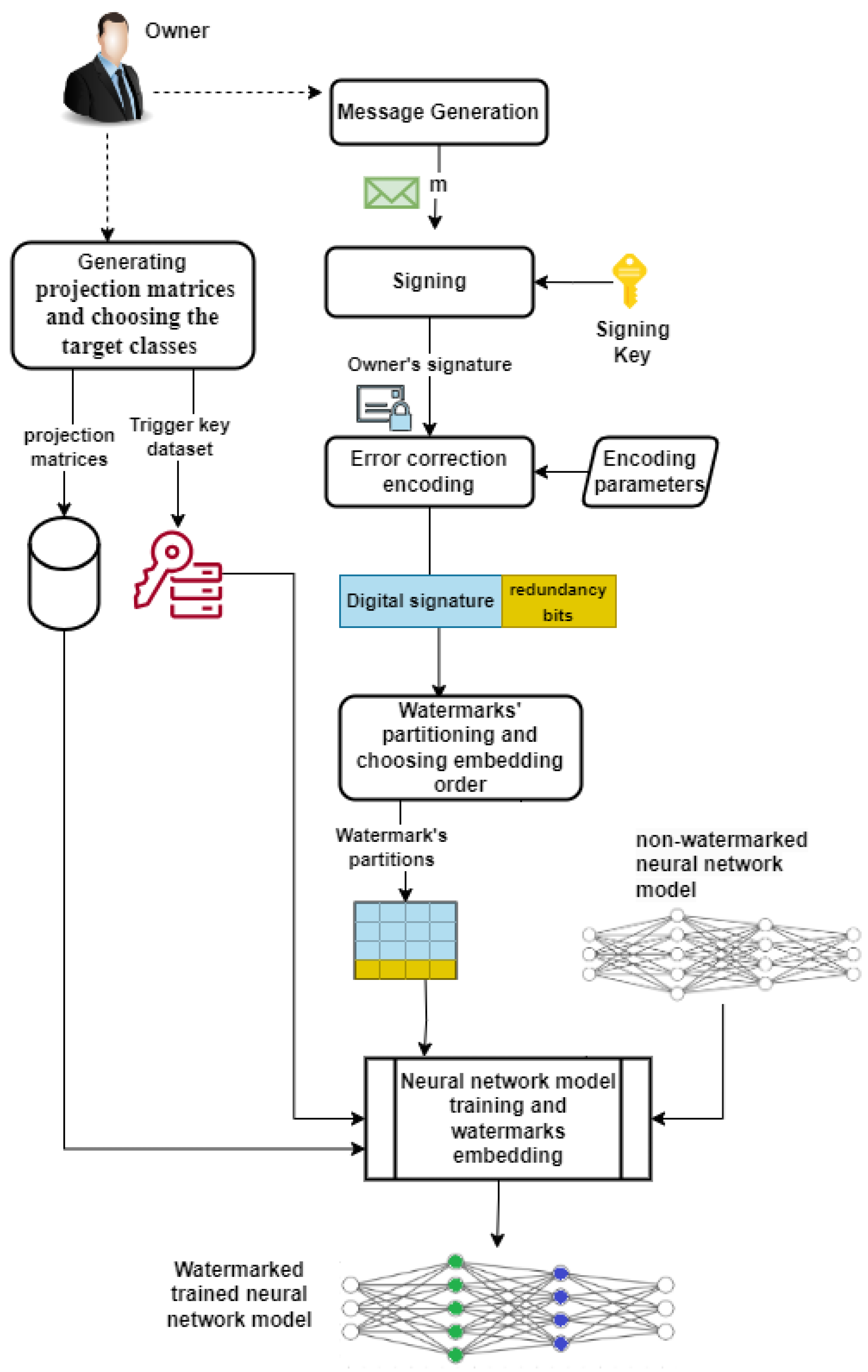

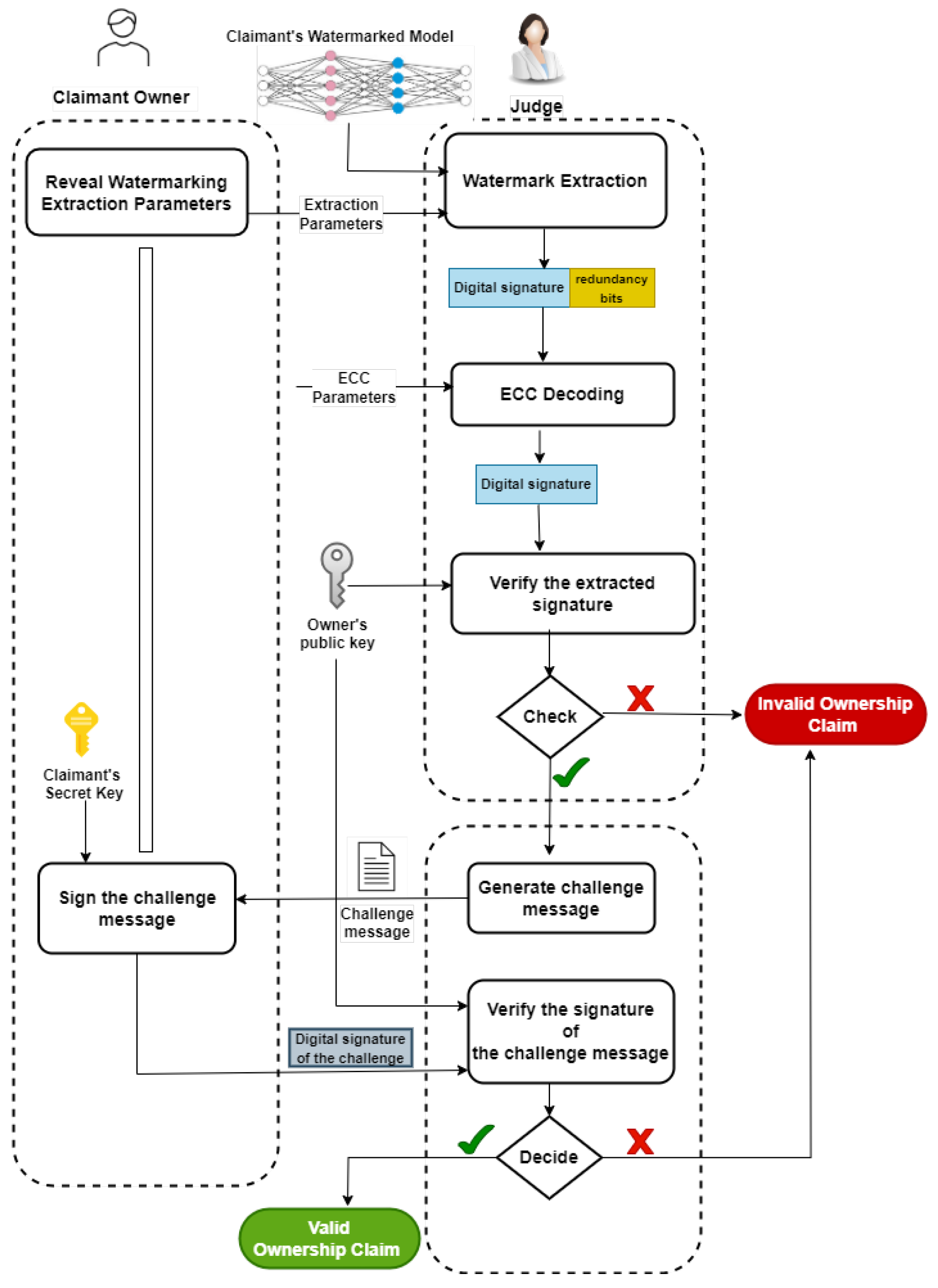

5.6. PK-Judge: Error-Corrected Cryptographic Ownership Verification

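Algorithm 1 PK-Judge: Error-Corrected Digital Signatures for Verifiable Ownership

- Input: $m$: a designated message for ownership; $sk$: owner's private signing key; $pk$: owner's public key; $t$: error correction code parameters; $\mathit{Cert}$: certificate associating the owner's identity with $pk$; $\theta$: embedding and extraction hyperparameters; Param: parameters for challenge-response verification.

- Embedding Phase: (1) $h \leftarrow H(m)$; (2) $\sigma \leftarrow \mathrm{Sign}(sk, h)$; (3) $c \leftarrow \mathrm{ECC.Encode}(\sigma, t)$; (4) embed $c$ into the model using $\theta$.

- Extraction Phase: (1) $c' \leftarrow \mathrm{Extract}(\text{model}, \theta)$; (2) if $\mathit{Cert}$ does not validate $pk$, return False, "Invalid Certificate"; (3) $\sigma' \leftarrow \mathrm{ECC.Decode}(c', t)$ and recompute $h \leftarrow H(m)$.

- Ownership Verification Phase: (1) if $\mathrm{Verify}(pk, h, \sigma') = 1$, then (2) if the challenge-response check under Param succeeds, return True, "Verification Successful"; otherwise return False, "Additional Verification Failed"; (3) if signature verification fails, return False, "Signature Verification Failed".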
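The control flow of Algorithm 1 can be traced end to end in a minimal Python sketch. Several components are stand-ins chosen for illustration and are not PK-Judge's own:

- a Lamport one-time signature replaces BLS;
- a 3× repetition code replaces Reed–Solomon;
- embedding into model weights is replaced by a simulated noisy bit channel;
- `cert_valid` and `challenge_ok` are hypothetical callables standing in for the certificate and challenge-response checks.

The returned status strings match those in Algorithm 1.

```python
import hashlib
import secrets


def H(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def to_bits(data: bytes) -> list[int]:
    return [(byte >> (7 - k)) & 1 for byte in data for k in range(8)]


def from_bits(bits: list[int]) -> bytes:
    return bytes(int("".join(map(str, bits[i:i + 8])), 2) for i in range(0, len(bits), 8))


# Stand-in signature: Lamport one-time signature over a 32-byte digest (not BLS).
def keygen():
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    return sk, [(H(a), H(b)) for a, b in sk]


def sign(sk, h: bytes) -> bytes:
    return b"".join(pair[bit] for pair, bit in zip(sk, to_bits(h)))


def verify(pk, h: bytes, sig: bytes) -> bool:
    parts = [sig[i:i + 32] for i in range(0, len(sig), 32)]
    return len(parts) == 256 and all(
        H(s) == pair[bit] for s, pair, bit in zip(parts, pk, to_bits(h)))


# Stand-in ECC: t-fold repetition code with majority decoding (not Reed-Solomon).
def ecc_encode(bits: list[int], t: int = 3) -> list[int]:
    return [b for b in bits for _ in range(t)]


def ecc_decode(bits: list[int], t: int = 3) -> list[int]:
    return [int(2 * sum(bits[i:i + t]) > t) for i in range(0, len(bits), t)]


def embedding_phase(m: bytes, sk, t: int = 3) -> list[int]:
    h = H(m)                              # 1: digest of the ownership message
    sigma = sign(sk, h)                   # 2: sign the digest
    return ecc_encode(to_bits(sigma), t)  # 3: ECC-encode (4: weight embedding omitted)


def verify_ownership(extracted, m, pk, cert_valid, challenge_ok, t: int = 3):
    if not cert_valid(pk):                # hypothetical certificate check
        return False, "Invalid Certificate"
    sigma = from_bits(ecc_decode(extracted, t))
    if verify(pk, H(m), sigma):
        if challenge_ok(pk):              # hypothetical challenge-response check
            return True, "Verification Successful"
        return False, "Additional Verification Failed"
    return False, "Signature Verification Failed"


sk, pk = keygen()
wm = embedding_phase(b"ownership message", sk)
noisy = wm.copy()
for i in range(0, len(noisy), 150):       # simulated channel: at most one flip per 3-bit group
    noisy[i] ^= 1
cert_ok = lambda key: key is pk
print(verify_ownership(noisy, b"ownership message", pk, cert_ok, lambda key: True))
# (True, 'Verification Successful')
print(verify_ownership(noisy, b"another message", pk, cert_ok, lambda key: True))
# (False, 'Signature Verification Failed')
```

The signature check is a separate step from extraction and decoding. Corrupting the extracted bits can make verification fail, but it cannot make an invalid ownership claim pass.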

6. Results and Discussion

6.1. Implementation Across Watermarking Frameworks and Model Architectures

6.2. Watermark Generation

- Generate a 32-byte BLS private key $sk$ on the BLS12-381 curve and compute the corresponding public key $pk$.

- Compute the message digest $h = H(m)$. We typically include a nonce to ensure message uniqueness (e.g., by setting $h = H(m \parallel \text{nonce})$), but this is not strictly mandatory, since the subsequent challenge–response phase already enforces fresh ownership proofs.

- Sign the digest: $\sigma = \mathrm{Sign}(sk, h)$.

- Encode the signature with a Reed–Solomon (RS) error correction code: $c = \mathrm{RS.Encode}(\sigma, t)$.
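The digest step above, with its optional nonce, can be sketched together with the byte-to-bit conversion that turns a signature or codeword into the bit string an embedder consumes. This is a minimal sketch. SHA-256 is assumed as H here, and the helper names and example message are hypothetical. For reference, a BLS12-381 signature in Chia's scheme is 96 bytes, i.e., 768 bits before error-correction redundancy is added.

```python
import hashlib
import secrets
from typing import Optional


def message_digest(m: bytes, nonce: Optional[bytes] = None) -> bytes:
    """h = H(m), or h = H(m || nonce) when a nonce is supplied (SHA-256 assumed)."""
    return hashlib.sha256(m + (nonce or b"")).digest()


def bytes_to_bits(data: bytes) -> list[int]:
    """MSB-first bit list, e.g. for embedding a codeword into model parameters."""
    return [(byte >> (7 - k)) & 1 for byte in data for k in range(8)]


def bits_to_bytes(bits: list[int]) -> bytes:
    """Inverse of bytes_to_bits; the bit length must be a multiple of 8."""
    if len(bits) % 8:
        raise ValueError("bit length must be a multiple of 8")
    out = bytearray()
    for i in range(0, len(bits), 8):
        value = 0
        for b in bits[i:i + 8]:
            value = (value << 1) | b
        out.append(value)
    return bytes(out)


h1 = message_digest(b"Owner: Alice, model v1")
h2 = message_digest(b"Owner: Alice, model v1", secrets.token_bytes(16))
print(len(h1), h1 != h2)                       # 32 True
print(bits_to_bytes(bytes_to_bits(h1)) == h1)  # True
```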

6.3. Fidelity Evaluation

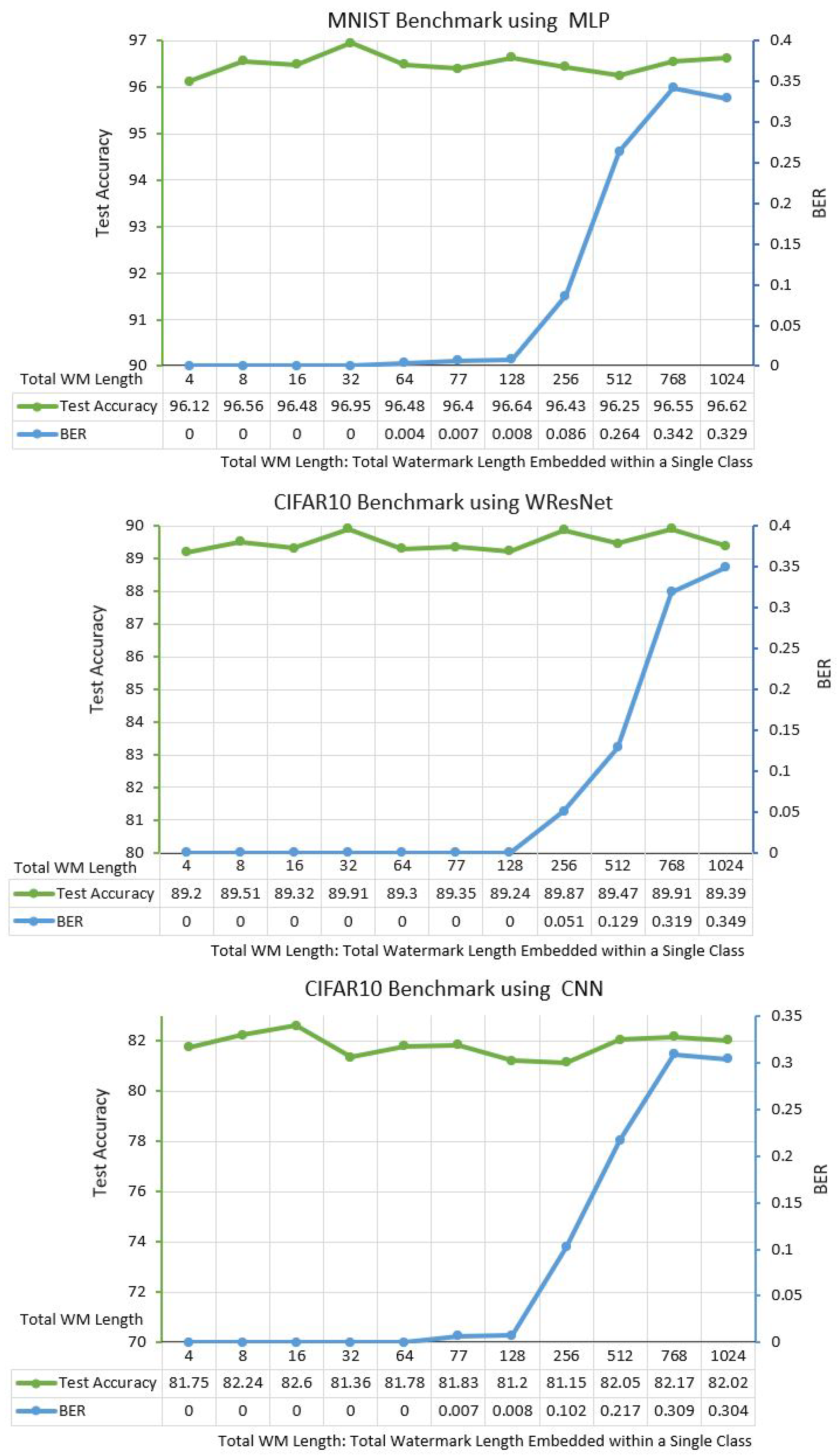

6.3.1. Experiment 1: Single-Class Watermark Capacity

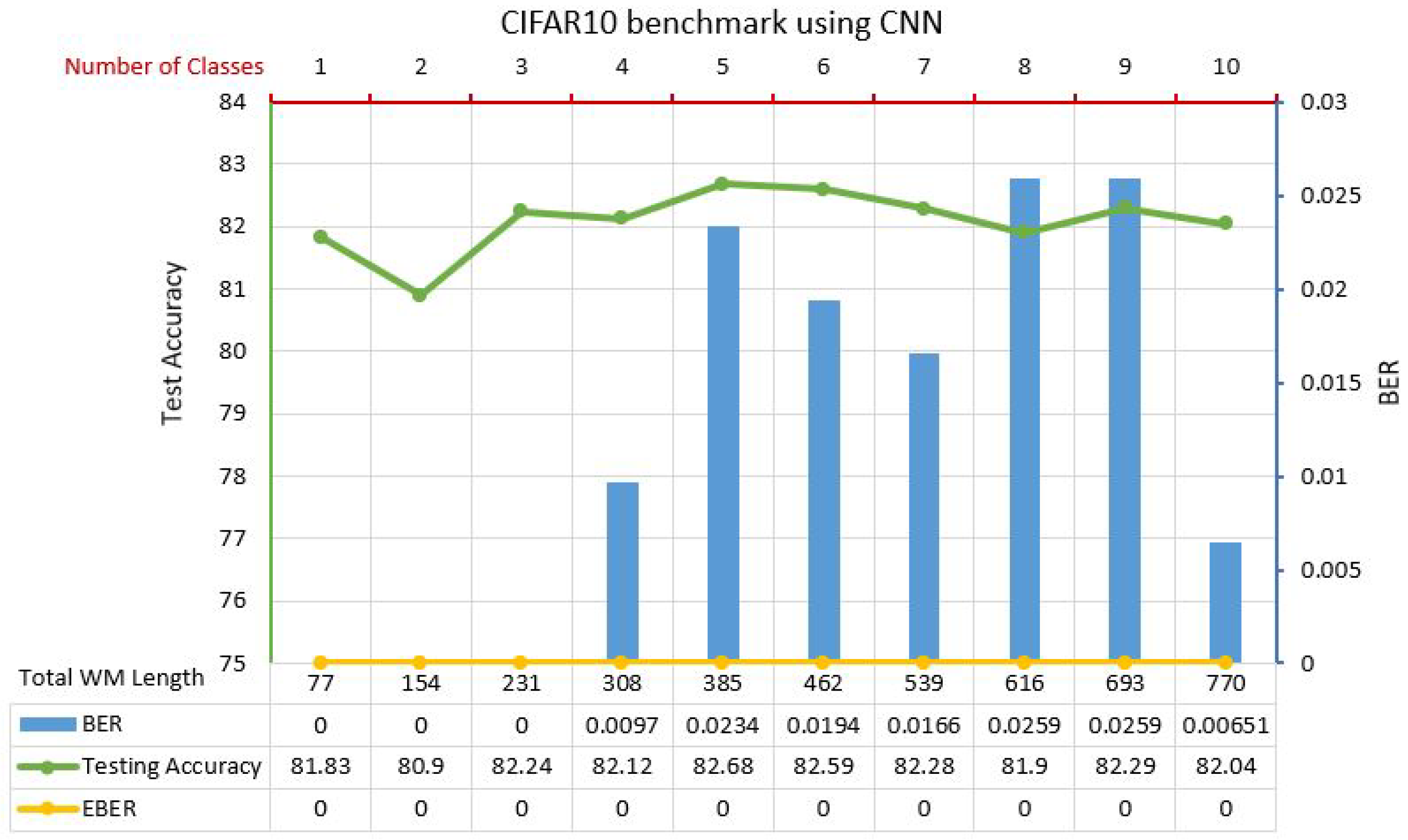

6.3.2. Experiment 2: Multi-Class Watermark Distribution Efficacy

6.3.3. Experiment 3: Watermark Distribution Across Multiple Classes

6.4. Fidelity Experiments with RIGA and DeepiSigns Watermarking Protocols

6.5. Robustness Against Adversarial Attack Scenarios

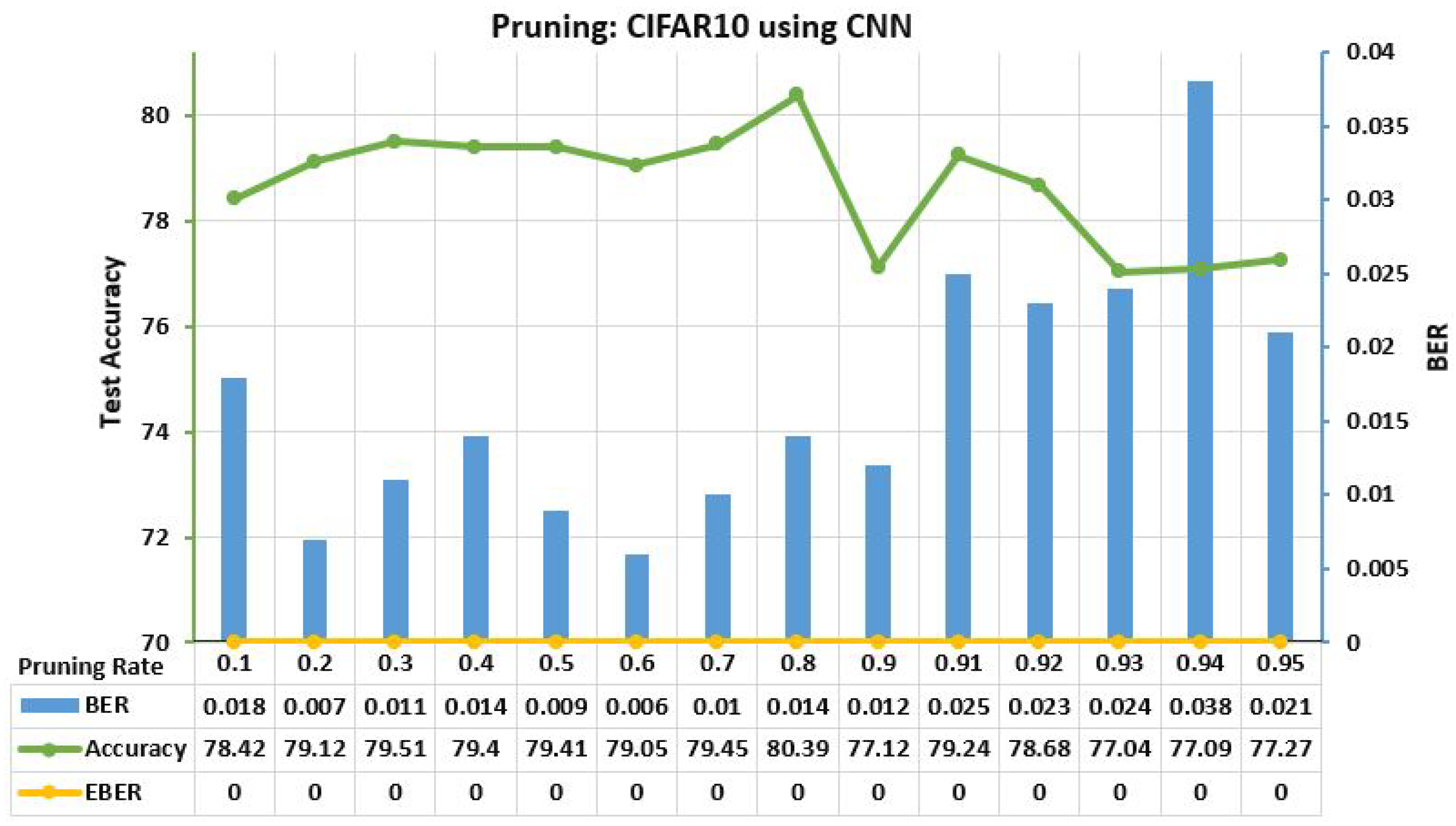

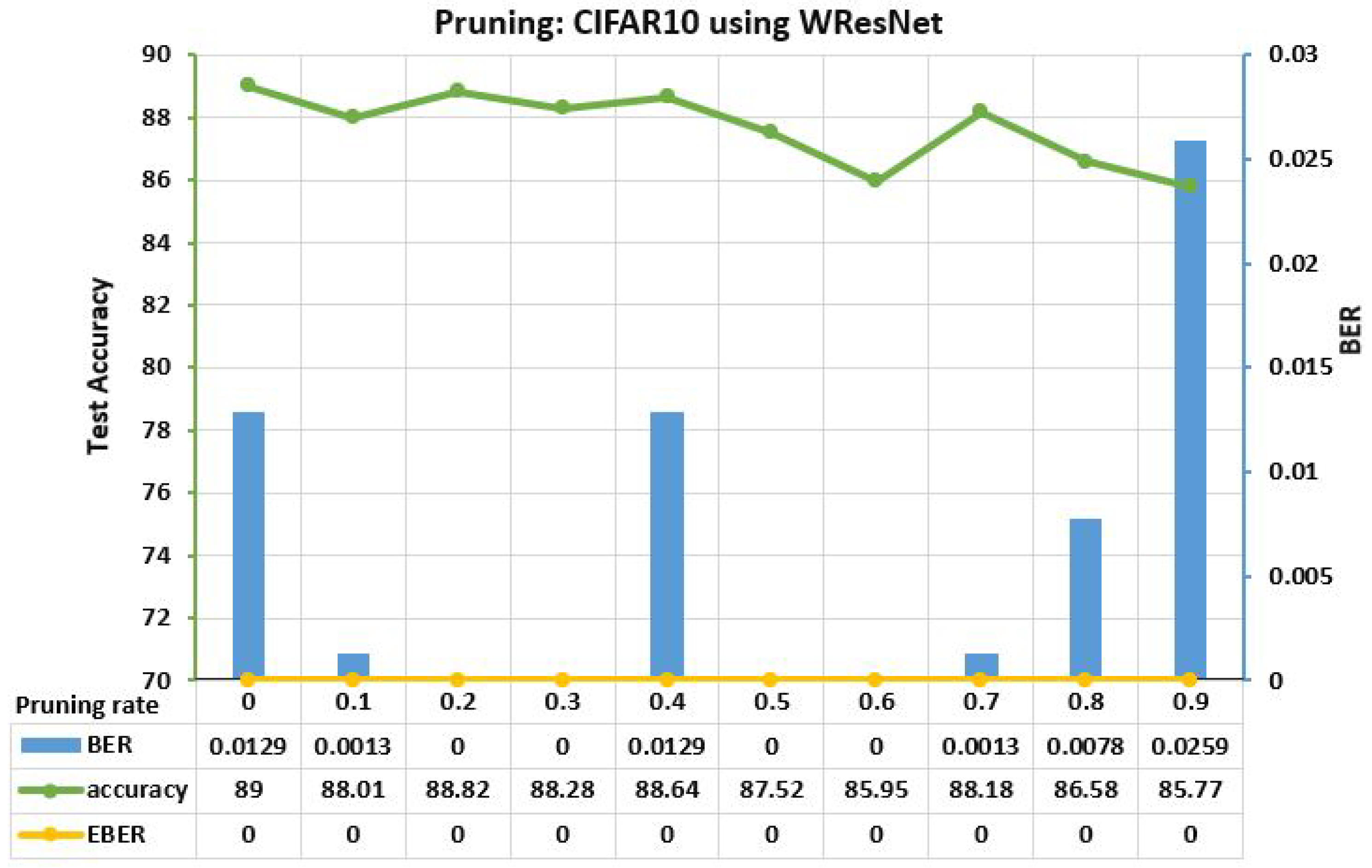

6.5.1. Parameter Pruning Attack

6.5.2. Model Fine-Tuning Attack

6.5.3. Watermark Overwriting Attack

6.6. Evaluation of the Other Watermarking Requirements

6.7. Applying Error Correction Codes in DeepSigns

6.8. Comparison with Prior Work

6.9. Adaptive Error Correction for Verification Challenges

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Choi, J.; Woo, S.; Ferrell, A. Artificial intelligence assisted telehealth for nursing: A scoping review. J. Telemed. Telecare 2025, 31, 140–149.

- OpenAI. ChatGPT: Optimizing Language Models for Dialogue. 2023. Available online: https://www.openai.com/chatgpt (accessed on 1 October 2024).

- Team, G.; Anil, R.; Borgeaud, S.; Alayrac, J.B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; Millican, K.; et al. Gemini: A family of highly capable multimodal models. arXiv 2023, arXiv:2312.11805.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Fan, L.; Wang, Y. Rethinking Deep Neural Network Ownership Verification: Embedding Passports To Defeat Ambiguity Attacks. In Proceedings of the NeurIPS, Vancouver, BC, Canada, 8–14 December 2019.

- Zagoruyko, S.; Komodakis, N. Wide residual networks. arXiv 2016, arXiv:1605.07146.

- Hartung, F.; Kutter, M. Multimedia watermarking techniques. Proc. IEEE 1999, 87, 1079–1107.

- Cox, I.J.; Kilian, J.; Leighton, F.T.; Shamoon, T. Secure spread spectrum watermarking for multimedia. IEEE Trans. Image Process. 1997, 6, 1673–1687.

- Quan, Y.; Teng, H.; Chen, Y.; Ji, H. Watermarking deep neural networks in image processing. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 1852–1865.

- Barni, M.; Bartolini, F. Data hiding for fighting piracy. IEEE Signal Process. Mag. 2004, 21, 28–39.

- Uchida, Y.; Nagai, Y.; Sakazawa, S.; Satoh, S. Embedding Watermarks Into Deep Neural Networks. In Proceedings of the 2017 ACM on International Conference on Multimedia Retrieval, Bucharest, Romania, 6–9 June 2017; pp. 269–277.

- Darvish Rouhani, B.; Chen, H.; Koushanfar, F. Deepsigns: An End-To-End Watermarking Framework For Ownership Protection Of Deep Neural Networks. In Proceedings of the Twenty-Fourth International Conference on Architectural Support for Programming Languages and Operating Systems, Providence, RI, USA, 13–17 April 2019; pp. 485–497.

- Chen, H.; Rouhani, B.D.; Fu, C.; Zhao, J.; Koushanfar, F. Deepmarks: A Secure Fingerprinting Framework For Digital Rights Management Of Deep Learning Models. In Proceedings of the 2019 on International Conference on Multimedia Retrieval, Ottawa, ON, Canada, 10–13 June 2019; pp. 105–113.

- Abuadbba, A.; Kim, H.; Nepal, S. DeepiSign: Invisible Fragile Watermark To Protect The Integrity And Authenticity of CNN. In Proceedings of the 36th Annual ACM Symposium on Applied Computing, Virtual, 22–26 March 2021; pp. 952–959.

- Tang, R.; Du, M.; Hu, X. Deep Serial Number: Computational Watermark for DNN Intellectual Property Protection. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Turin, Italy, 18–22 September 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 157–173.

- Fielding, R.; Gettys, J.; Mogul, J.; Frystyk, H.; Masinter, L.; Leach, P.; Berners-Lee, T. Hypertext Transfer Protocol—HTTP/1.1. RFC 2616. 1999. Available online: https://datatracker.ietf.org/doc/html/rfc2616 (accessed on 1 October 2024).

- Rescorla, E. HTTP Over TLS. RFC 2818. 2000. Available online: https://datatracker.ietf.org/doc/html/rfc2818 (accessed on 1 October 2024).

- Wang, T.; Kerschbaum, F. Attacks on Digital Watermarks For Deep Neural Networks. In Proceedings of the ICASSP 2019-2019 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Brighton, UK, 12–17 May 2019; pp. 2622–2626.

- Namba, R.; Sakuma, J. Robust watermarking of neural network models against query modification attacks. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1256–1265.

- Liu, H.; Weng, Z.; Zhu, Y. Watermarking Deep Neural Networks with Greedy Residuals. In Proceedings of the ICML, Online, 18–24 July 2021; pp. 6978–6988.

- Wang, T.; Kerschbaum, F. RIGA: Covert and robust white-box watermarking of deep neural networks. In Proceedings of the Web Conference, Ljubljana, Slovenia, 19–23 April 2021; pp. 993–1004.

- Fan, L.; Ng, K.W.; Chan, C.S.; Yang, Q. Deepipr: Deep neural network ownership verification with passports. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6122–6139.

- Li, P.; Huang, J.; Zhang, S. LicenseNet: Proactively safeguarding intellectual property of AI models through model license. J. Syst. Archit. 2025, 159, 103330.

- Li, Y.; Zhu, L.; Jia, X.; Bai, Y.; Jiang, Y.; Xia, S.T.; Cao, X. Move: Effective and harmless ownership verification via embedded external features. arXiv 2022, arXiv:2208.02820.

- Chen, X.; Chen, T.; Zhang, Z.; Wang, Z. You are caught stealing my winning lottery ticket! making a lottery ticket claim its ownership. Adv. Neural Inf. Process. Syst. 2021, 34, 1780–1791.

- Zhao, X.; Yao, Y.; Wu, H.; Zhang, X. Structural Watermarking To Deep Neural Networks Via Network Channel Pruning. In Proceedings of the 2021 IEEE International Workshop on Information Forensics and Security (WIFS), Montpellier, France, 7–10 December 2021; pp. 1–6.

- Adi, Y.; Baum, C.; Cisse, M.; Pinkas, B.; Keshet, J. Turning Your Weakness Into A Strength: Watermarking Deep Neural Networks by Backdooring. In Proceedings of the 27th USENIX Security Symposium (USENIX Security 18), Baltimore, MD, USA, 15–17 August 2018; pp. 1615–1631.

- Zhang, J.; Gu, Z.; Jang, J.; Wu, H.; Stoecklin, M.P.; Huang, H.; Molloy, I. Protecting Intellectual Property Of Deep Neural Networks with Watermarking. In Proceedings of the 2018 on Asia Conference on Computer and Communications Security, Incheon, Republic of Korea, 4–8 June 2018; pp. 159–172.

- Jia, H.; Choquette-Choo, C.A.; Chandrasekaran, V.; Papernot, N. Entangled Watermarks As A Defense Against Model Extraction. In Proceedings of the 30th USENIX security symposium (USENIX Security 21), Vancouver, BC, Canada, 11–13 August 2021; pp. 1937–1954.

- Jia, J.; Wu, Y.; Li, A.; Ma, S.; Liu, Y. Subnetwork-lossless robust watermarking for hostile theft attacks in deep transfer learning models. IEEE Trans. Dependable Secur. Comput. 2022.

- Li, P.; Huang, J.; Wu, H.; Zhang, Z.; Qi, C. SecureNet: Proactive intellectual property protection and model security defense for DNNs based on backdoor learning. Neural Netw. 2024, 174, 106199.

- Peng, S.; Chen, Y.; Wang, C.; Jia, X. Intellectual Property Protection of Diffusion Models Via the Watermark Diffusion Process. In Proceedings of the International Conference on Web Information Systems Engineering, Doha, Qatar, 2–5 December 2025; pp. 290–305.

- Xu, H.; Xiang, L.; Ma, X.; Yang, B.; Li, B. Hufu: A Modality-Agnositc Watermarking System for Pre-Trained Transformers via Permutation Equivariance. arXiv 2024, arXiv:2403.05842.

- Kim, B.; Lee, S.; Lee, S.; Son, S.; Hwang, S.J. Margin-Based Neural Network Watermarking. In Proceedings of the International Conference on Machine Learning. PMLR, Honolulu, HI, USA, 23–29 July 2023; pp. 16696–16711.

- Mehta, D.; Mondol, N.; Farahmandi, F.; Tehranipoor, M. Aime: Watermarking ai Models by Leveraging Errors. In Proceedings of the 2022 Design, Automation & Test in Europe Conference & Exhibition (DATE), Antwerp, Belgium, 14–23 March 2022; pp. 304–309.

- Yang, P.; Lao, Y.; Li, P. Robust Watermarking For Deep Neural Networks Via Bi-Level Optimization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 14841–14850.

- Bansal, A.; Chiang, P.y.; Curry, M.J.; Jain, R.; Wigington, C.; Manjunatha, V.; Dickerson, J.P.; Goldstein, T. Certified Neural Network Watermarks With Randomized Smoothing. In Proceedings of the International Conference on Machine Learning. PMLR, Baltimore, MD, USA, 17–23 July 2022; pp. 1450–1465.

- Nie, H.; Lu, S.; Wu, J.; Zhu, J. Deep Model Intellectual Property Protection with Compression-Resistant Model Watermarking. IEEE Trans. Artif. Intell. 2024, 5, 3362–3373.

- Szyller, S.; Atli, B.G.; Marchal, S.; Asokan, N. Dawn: Dynamic Adversarial Watermarking of Neural Networks. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021; pp. 4417–4425.

- Lv, P.; Li, P.; Zhang, S.; Chen, K.; Liang, R.; Ma, H.; Zhao, Y.; Li, Y. A robustness-assured white-box watermark in neural networks. IEEE Trans. Dependable Secur. Comput. 2023, 20, 5214–5229.

- Wang, R.; Ren, J.; Li, B.; She, T.; Zhang, W.; Fang, L.; Chen, J.; Wang, L. Free Fine-Tuning: A Plug-And-Play Watermarking Scheme for Deep Neural Networks. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 8463–8474.

- Pautov, M.; Bogdanov, N.; Pyatkin, S.; Rogov, O.; Oseledets, I. Probabilistically Robust Watermarking of Neural Networks. arXiv 2024, arXiv:2401.08261.

- Wang, S.; Chang, C.H. Fingerprinting deep neural networks—A deepfool approach. In Proceedings of the 2021 IEEE International Symposium on Circuits and Systems (ISCAS), Virtual, 22–28 May 2021; pp. 1–5.

- Cao, X.; Jia, J.; Gong, N.Z. IPGuard: Protecting Intellectual Property Of Deep Neural Networks Via Fingerprinting The Classification Boundary. In Proceedings of the 2021 ACM Asia Conference on Computer and Communications Security, Virtual Event, 7–11 June 2021; pp. 14–25.

- Lukas, N.; Zhang, Y.; Kerschbaum, F. Deep neural network fingerprinting by conferrable adversarial examples. arXiv 2019, arXiv:1912.00888.

- İşler, D.; Hwang, S.; Nakatsuka, Y.; Laoutaris, N.; Tsudik, G. Puppy: A Publicly Verifiable Watermarking Protocol. arXiv 2023, arXiv:2312.09125.

- Saltzer, J.H.; Schroeder, M.D. The protection of information in computer systems. Proc. IEEE 1975, 63, 1278–1308.

- Stallings, W.; Brown, L. Computer Security Principles and Practice; Pearson: London, UK, 2015.

- Rivest, R.L.; Shamir, A.; Adleman, L. A method for obtaining digital signatures and public-key cryptosystems. Commun. ACM 1978, 21, 120–126.

- ElGamal, T. A public key cryptosystem and a signature scheme based on discrete logarithms. IEEE Trans. Inf. Theory 1985, 31, 469–472.

- Boneh, D.; Lynn, B.; Shacham, H. Short Signatures from the Weil Pairing. In Proceedings of the International Conference on the Theory and Application of Cryptology and Information Security, Gold Coast, Australia, 9–13 December 2001; pp. 514–532.

- Reed, I.S.; Solomon, G. Polynomial codes over certain finite fields. J. Soc. Ind. Appl. Math. 1960, 8, 300–304.

- Hamming, R.W. Error detecting and error correcting codes. Bell Syst. Tech. J. 1950, 29, 147–160.

- Bose, R.; Ray-Chaudhuri, D.K. On a class of error correcting binary group codes. Inf. Control. 1960, 3, 68–79.

- Berrou, C.; Glavieux, A.; Thitimajshima, P. Near Shannon limit error-correcting coding and decoding: Turbo-codes. 1. In Proceedings of the ICC’93-IEEE International Conference on Communications, Geneva, Switzerland, 23–26 May 1993; Volume 2, pp. 1064–1070.

- Gallager, R. Low-density parity-check codes. IRE Trans. Inf. Theory 1962, 8, 21–28.

- Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

- Bottou, L. Large-Scale Machine Learning with Stochastic Gradient Descent. In Proceedings of the COMPSTAT’2010: 19th International Conference on Computational Statistics, Paris, France, 22–27 August 2010; Keynote, Invited and Contributed Papers. Springer: Berlin/Heidelberg, Germany, 2010; pp. 177–186.

- Giry, D. Keylength—Cryptographic Key Length Recommendation. Online, 2020. Version 32.3, Last Updated: May 24, 2020. Available online: https://www.keylength.com/ (accessed on 1 October 2024).

- Katz, J.; Lindell, Y. Introduction to Modern Cryptography: Principles and Protocols; Chapman and Hall/CRC: Boca Raton, FL, USA, 2007.

- Chen, Y.; Tian, J.; Chen, X.; Zhou, J. Effective Ambiguity Attack Against Passport-Based Dnn Intellectual Property Protection Schemes Through Fully Connected Layer Substitution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 8123–8132.

- International Telecommunication Union. The ITU-T Recommendation X.509: Information Technology—Open Systems Interconnection—The Directory: Public-Key and Attribute Certificate Frameworks. Available online: https://www.itu.int/rec/T-REC-X.509/en (accessed on 5 October 2024).

- Prins, J.R.; Cybercrime, B.U. Diginotar certificate authority breach “operation black tulip”. Fox-IT 2011, 18, 1–13. Available online: https://www.bitsoffreedom.nl/wp-content/uploads/rapport-fox-it-operation-black-tulip-v1-0.pdf (accessed on 11 November 2024).

- Diffie, W.; Hellman, M.E. New Directions in Cryptography. IEEE Trans. Inf. Theory 1976, 22, 644–654.

- Cooper, M.J.; Schaffer, K.B. Security Requirements for Cryptographic Modules. 2019. Available online: https://nvlpubs.nist.gov/nistpubs/FIPS/NIST.FIPS.140-3.pdf (accessed on 30 October 2024).

- Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning For Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. 2009. Available online: http://www.cs.utoronto.ca/~kriz/learning-features-2009-TR.pdf (accessed on 7 October 2024).

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Chia Network. BLS Signatures. 2021. Available online: https://github.com/Chia-Network/bls-signatures (accessed on 15 November 2024).

- Davis, J. Reed Solomon Encoder/Decoder. 2018. Available online: https://pypi.org/project/reedsolo/ (accessed on 30 October 2024).

- Han, S.; Pool, J.; Tran, J.; Dally, W. Learning both weights and connections for efficient neural network. Adv. Neural Inf. Process. Syst. 2015, 28, 1135–1143.

- Howard, J.; Ruder, S. Universal language model fine-tuning for text classification. arXiv 2018, arXiv:1801.06146.

- Chen, H.; Rouhani, B.D.; Koushanfar, F. Blackmarks: Blackbox multibit watermarking for deep neural networks. arXiv 2019, arXiv:1904.00344.

| Model | Metric | 50 Epochs | 100 Epochs | 200 Epochs | 300 Epochs |
|---|---|---|---|---|---|
| MNIST MLP | Acc (%) | 96.4 | 96.1 | 96.1 | 96.7 |
| MNIST MLP | BER | 0.005 | 0.003 | 0.004 | 0.005 |
| MNIST MLP | EBER | 0 | 0 | 0 | 0 |
| RN18 | Acc (%) | 87.5 | 88.6 | 87.6 | 90.1 |
| RN18 | BER | 0.001 | 0.004 | 0.001 | 0 |
| RN18 | EBER | 0 | 0 | 0 | 0 |
| CNN | Acc (%) | 79.3 | 79.2 | 80.9 | 82.0 |
| CNN | BER | 0.016 | 0.018 | 0.027 | 0.018 |
| CNN | EBER | 0 | 0 | 0 | 0 |
| RN101 | Acc (%) | 84.5 | 82.1 | 85.2 | 83.0 |
| RN101 | BER | 0 | 0 | 0 | 0.003 |
| RN101 | EBER | 0 | 0 | 0 | 0 |
| DN201 | Acc (%) | 84.1 | 83.8 | 83.9 | 84.2 |
| DN201 | BER | 0 | 0 | 0 | 0.013 |
| DN201 | EBER | 0 | 0 | 0 | 0 |
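The table above reports both BER and EBER. We read BER as the raw error rate of the extracted watermark bits and EBER as the error rate of the payload after error-correction decoding. The sketch below makes that distinction concrete under stated assumptions:

- it uses a 3× repetition code rather than the Reed–Solomon code used in the paper;
- the 768-bit payload is synthetic;
- the error pattern is hand-chosen.

All helper names are hypothetical.

```python
import random


def ber(sent: list[int], received: list[int]) -> float:
    """Fraction of positions where two equal-length bit strings differ."""
    if len(sent) != len(received):
        raise ValueError("length mismatch")
    return sum(a != b for a, b in zip(sent, received)) / len(sent)


def rep_encode(bits: list[int], t: int = 3) -> list[int]:
    return [b for b in bits for _ in range(t)]


def rep_decode(bits: list[int], t: int = 3) -> list[int]:
    # Majority vote over each group of t copies (t odd).
    return [int(2 * sum(bits[i:i + t]) > t) for i in range(0, len(bits), t)]


rng = random.Random(0)
payload = [rng.randint(0, 1) for _ in range(768)]  # synthetic 96-byte payload
embedded = rep_encode(payload)
extracted = embedded.copy()
for i in range(0, len(extracted), 30):  # flip one copy in every 10th group
    extracted[i] ^= 1

print(f"BER  = {ber(embedded, extracted):.4f}")               # BER  = 0.0334
print(f"EBER = {ber(payload, rep_decode(extracted)):.4f}")  # EBER = 0.0000
```

A non-zero BER with zero EBER is exactly the pattern in most table cells. EBER becomes non-zero only when the raw errors exceed what the code can correct.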

| WM Len/Cls | # Cls | MNIST Acc% | MNIST BER | MNIST EBER | RN18 Acc% | RN18 BER | RN18 EBER | CNN Acc% | CNN BER | CNN EBER | RN101 Acc% | RN101 BER | RN101 EBER | DN201 Acc% | DN201 BER | DN201 EBER |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 77 | 10 | 96.7 | 0.005 | 0 | 90.1 | 0 | 0 | 82.0 | 0.018 | 0 | 85.0 | 0 | 0 | 84.2 | 0.013 | 0 |
| 86 | 9 | 96.4 | 0.004 | 0 | 87.2 | 0.003 | 0 | 78.3 | 0.028 | 0 | 87.8 | 0.004 | 0 | 84.7 | 0 | 0 |
| 96 | 8 | 96.3 | 0.003 | 0 | 87.6 | 0.001 | 0 | 80.2 | 0.020 | 0 | 82.3 | 0.019 | 0 | 83.0 | 0.0052 | 0 |
| 110 | 7 | 96.7 | 0.005 | 0 | 89.2 | 0.004 | 0 | 80.2 | 0.023 | 0 | 85.0 | 0 | 0 | 83.4 | 0 | 0 |
| 128 | 6 | 96.7 | 0.014 | 0 | 87.1 | 0.002 | 0 | 80.0 | 0.025 | 0 | 79.1 | 0 | 0 | 83.4 | 0 | 0 |
| 154 | 5 | 96.6 | 0.021 | 0 | 88.3 | 0.003 | 0 | 80.0 | 0.038 | NZ | 79.6 | 0 | 0 | 82.8 | 0 | 0 |
| 192 | 4 | 96.5 | 0.043 | NZ | 85.6 | 0.018 | 0 | 78.2 | 0.066 | NZ | 78.7 | 0 | 0 | 82.9 | 0 | 0 |
| 256 | 3 | 96.4 | 0.068 | NZ | 88.6 | 0.047 | NZ | 79.1 | 0.055 | NZ | 86.2 | 0 | 0 | 83.8 | 0 | 0 |
| 384 | 2 | 96.9 | 0.149 | NZ | 86.7 | 0.103 | NZ | 79.8 | 0.148 | NZ | 81.5 | 0.016 | 0 | 82.6 | 0 | 0 |
| 770 | 1 | 96.6 | 0.342 | NZ | 89.9 | 0.319 | NZ | 80.2 | 0.314 | NZ | 80.1 | 0.020 | 0 | 82.6 | 0.017 | 0 |

NZ denotes a non-zero EBER.

| Protocol & Model | Acc. Before WM (%) | Acc. After WM (%) | BER | EBER |

|---|---|---|---|---|

| RIGA (ResNet18, CIFAR10) | 97.7 | 97.53 | 0.000 | 0.000 |

| DeepiSigns (ResNet18, CIFAR10) | 88.32 | 87.86 | 0.018 | 0.000 |

| Metric | Model | 10% | 20% | 30% | 40% | 50% | 60% | 70% | 80% | 90% | 91% | 92% | 93% | 94% | 95% | 96% | 97% | 98% | 99% |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Acc% | RN101 | 81.06 | 82.02 | 79.16 | 78.57 | 82.64 | 79.78 | 81.10 | 82.13 | 83.33 | 82.83 | 76.84 | 80.31 | 83.04 | 80.66 | 79.64 | 79.81 | 78.98 | 70.66 |
| BER | RN101 | 0 | 0 | 0.021 | 0.038 | 0 | 0 | 0 | 0.019 | 0 | 0 | 0.003 | 0.003 | 0 | 0 | 0 | 0.019 | 0.018 | 0.014 |
| EBER | RN101 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| Acc% | DN201 | 84.16 | 84.16 | 84.16 | 84.16 | 84.16 | 84.16 | 84.16 | 84.16 | 84.18 | 84.17 | 84.17 | 84.26 | 84.11 | 83.96 | 84.02 | 83.50 | 83.14 | 72.60 |
| BER | DN201 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0.001 | 0.019 | 0.013 | 0.013 | 0.003 | 0.031 | 0.031 | 0.031 |
| EBER | DN201 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
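The pruning rates in the table above refer to removing a growing fraction of model parameters. One common way to simulate this attack is unstructured magnitude pruning, in the spirit of Han et al. The sketch below applies that variant to a flat list of weights. This is an assumption, and it may differ from the exact procedure used in the experiments. `magnitude_prune` is a hypothetical helper. In PyTorch, the same idea is available through `torch.nn.utils.prune.l1_unstructured`.

```python
def magnitude_prune(weights: list[float], rate: float) -> list[float]:
    """Zero out the fraction `rate` of weights with the smallest absolute value."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be in [0, 1]")
    n_prune = int(round(rate * len(weights)))
    # Indices sorted from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


w = [0.8, -0.05, 0.3, -0.9, 0.01, 0.2, -0.4, 0.07, 0.6, -0.15]
for rate in (0.1, 0.5, 0.9):
    p = magnitude_prune(w, rate)
    print(rate, sum(x == 0.0 for x in p), p)
```

Large-magnitude weights survive longest, so any watermark bits carried by the pruned weights are lost first. The ECC layer then has to absorb the resulting bit errors, which show up in the BER row.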

| Model | Metric | 50 Epochs | 100 Epochs | 200 Epochs |
|---|---|---|---|---|
| MNIST_MLP | Accuracy | 96.67 | 96.33 | 96.62 |
| MNIST_MLP | BER | 0.0082 | 0.0271 | 0.0293 |
| MNIST_MLP | EBER | 0 | 0 | 0 |
| CIFAR10_WideResNet | Accuracy | 88.08 | 87.73 | 90.44 |
| CIFAR10_WideResNet | BER | 0.00311 | 0.0207 | 0.0234 |
| CIFAR10_WideResNet | EBER | 0 | 0 | 0 |
| CIFAR10_CNN | Accuracy | 79.39 | 79.49 | 81.02 |
| CIFAR10_CNN | BER | 0.0237 | 0.0251 | 0.026 |
| CIFAR10_CNN | EBER | 0 | 0 | 0 |
| ResNet101 | Accuracy | 84.88 | 84.66 | 85.61 |
| ResNet101 | BER | 0.0273 | 0.0273 | 0.0104 |
| ResNet101 | EBER | 0 | 0 | 0 |
| DenseNet201 | Accuracy | 82.5 | 82.85 | 82.85 |
| DenseNet201 | BER | 0.0195 | 0.0299 | 0.0299 |
| DenseNet201 | EBER | 0 | 0 | 0 |

| Model Name | Detection Success | EBER |

|---|---|---|

| MNIST_MLP | Yes | 0 |

| CIFAR10_CNN | Yes | 0 |

| CIFAR10_ResNet18 | Yes | 0 |

| CIFAR10_ResNet101 | Yes | 0 |

| CIFAR10_DenseNet201 | Yes | 0 |

| Method | Verification Mechanism | Cryptographic Technique | Challenge-Response |

|---|---|---|---|

| PK-Judge | Asymmetric | Digital Signatures | Yes |

| Hufu [33] | Symmetric | None | No |

| BlackMark [74] | Symmetric | None | No |

| Margin-based [34] | Symmetric | None | No |

| PTYNet [41] | Symmetric | None | No |

| DeepiSign [14] | Symmetric | Hash Functions | No |

| AIME [35] | Symmetric | None | No |

| Entangled [29] | Symmetric | None | No |

| RIGA [21] | Symmetric | None | No |

| Puppy [46] | Symmetric | Garbled Circuits | No |

| DeepIPR [22] | Symmetric | None | No |

| DeepMarks [13] | Symmetric | None | No |

| DeepSigns [12] | Symmetric | None | No |

| Uchida et al. [11] | Symmetric | None | No |

| Adi et al. [27] | Symmetric | None | No |

| Namba et al. [19] | Symmetric | None | No |

| Yang et al. [36] | Symmetric | None | No |

| Li et al. [24] | Symmetric | None | No |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kanakri, W.; King, B. PK-Judge: Enhancing IP Protection of Neural Network Models Using an Asymmetric Approach. Big Data Cogn. Comput. 2025, 9, 66. https://doi.org/10.3390/bdcc9030066
