Dependency Reduction Techniques for Performance Improvement of Hyperledger Fabric Blockchain

Abstract

1. Introduction

2. Related Work

2.1. Hyperledger Fabric Distributed Ledger

2.2. P2P Energy Trading System Using Hyperledger Fabric [13]

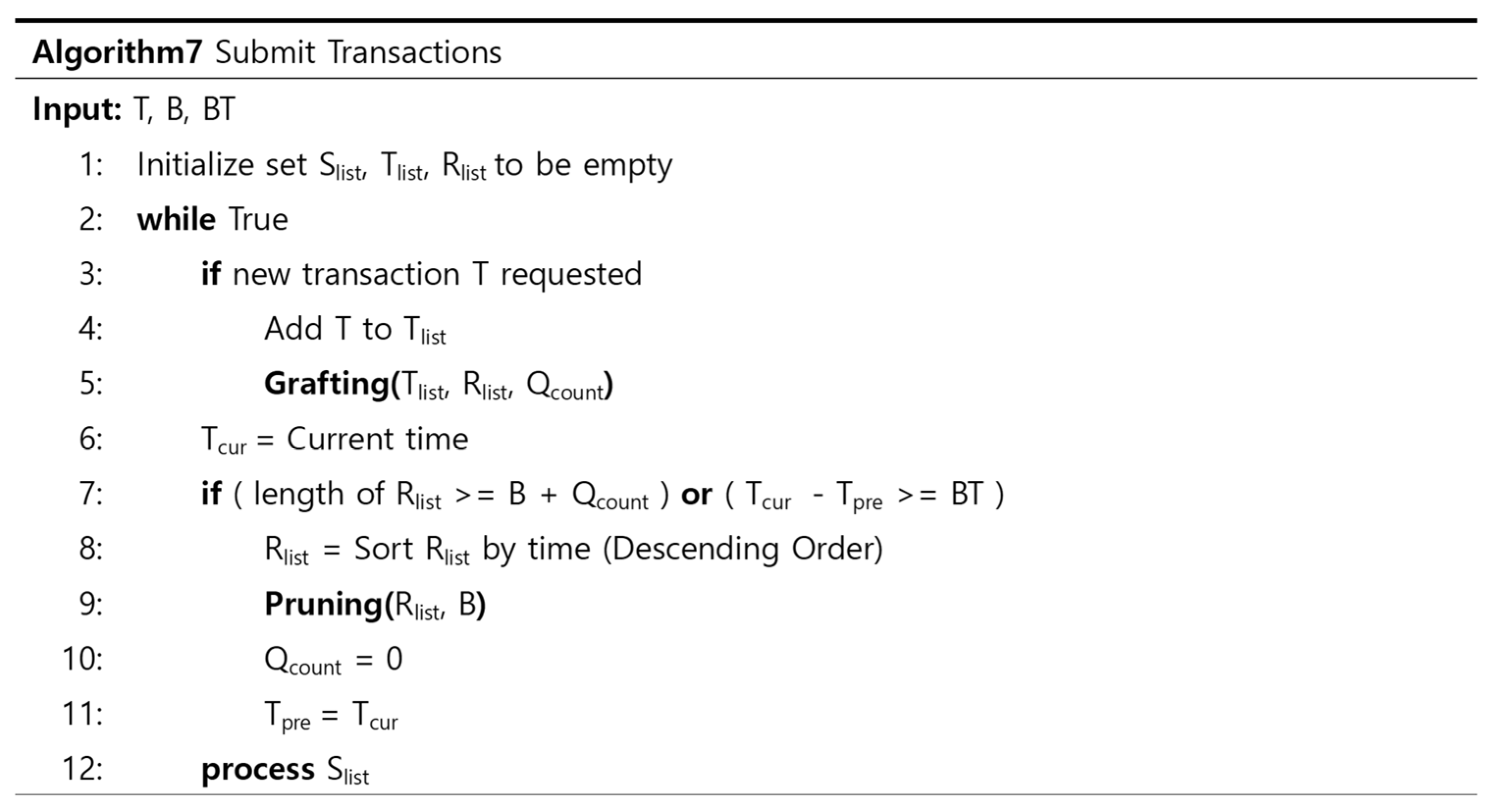

2.3. Hyperledger Fabric High-Throughput

2.4. ParBlockchain

2.5. HTFabric

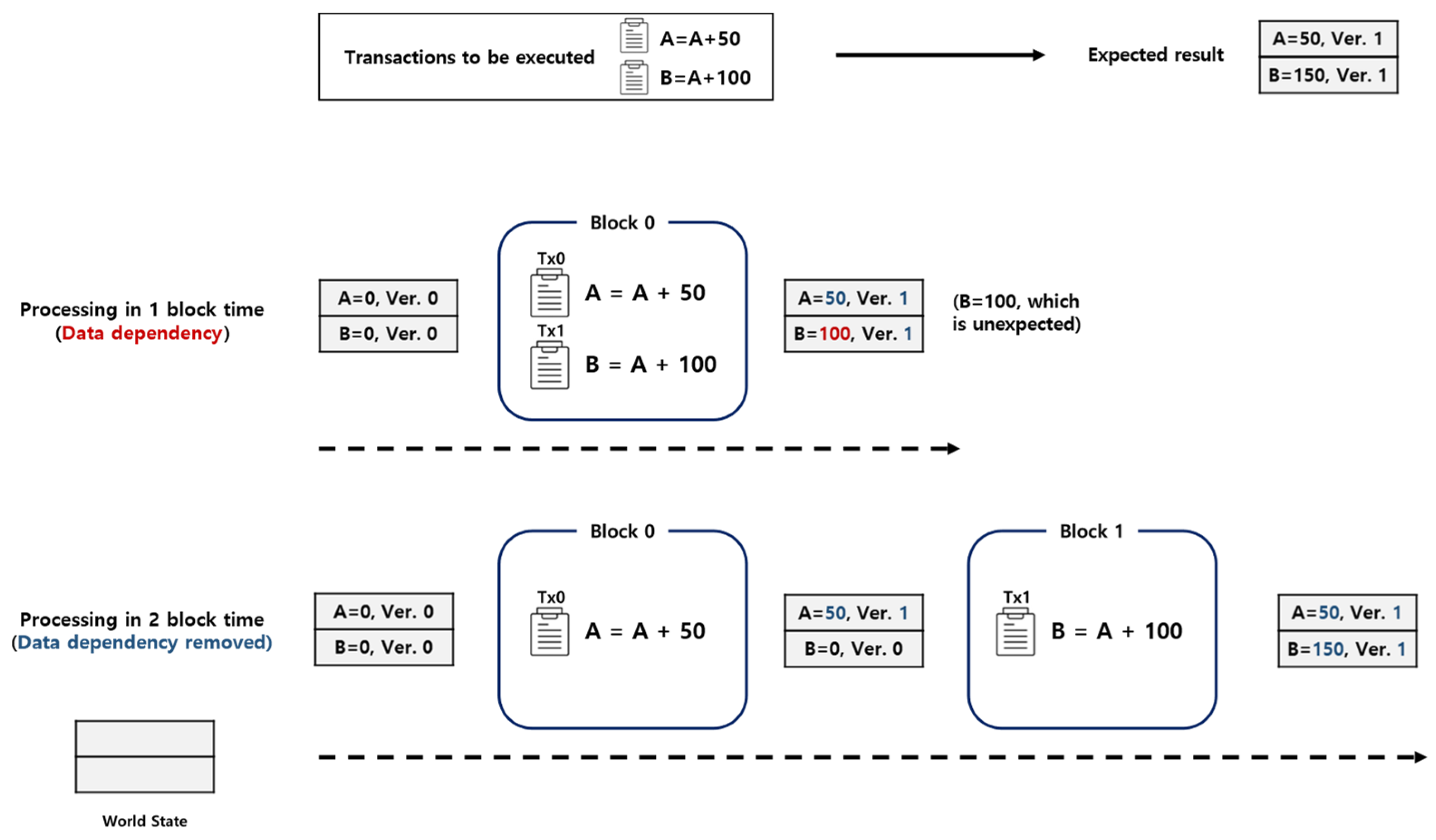

3. Data Dependency in Hyperledger Fabric Blockchain: A Bottleneck for Speed-Up

4. Proposed Method

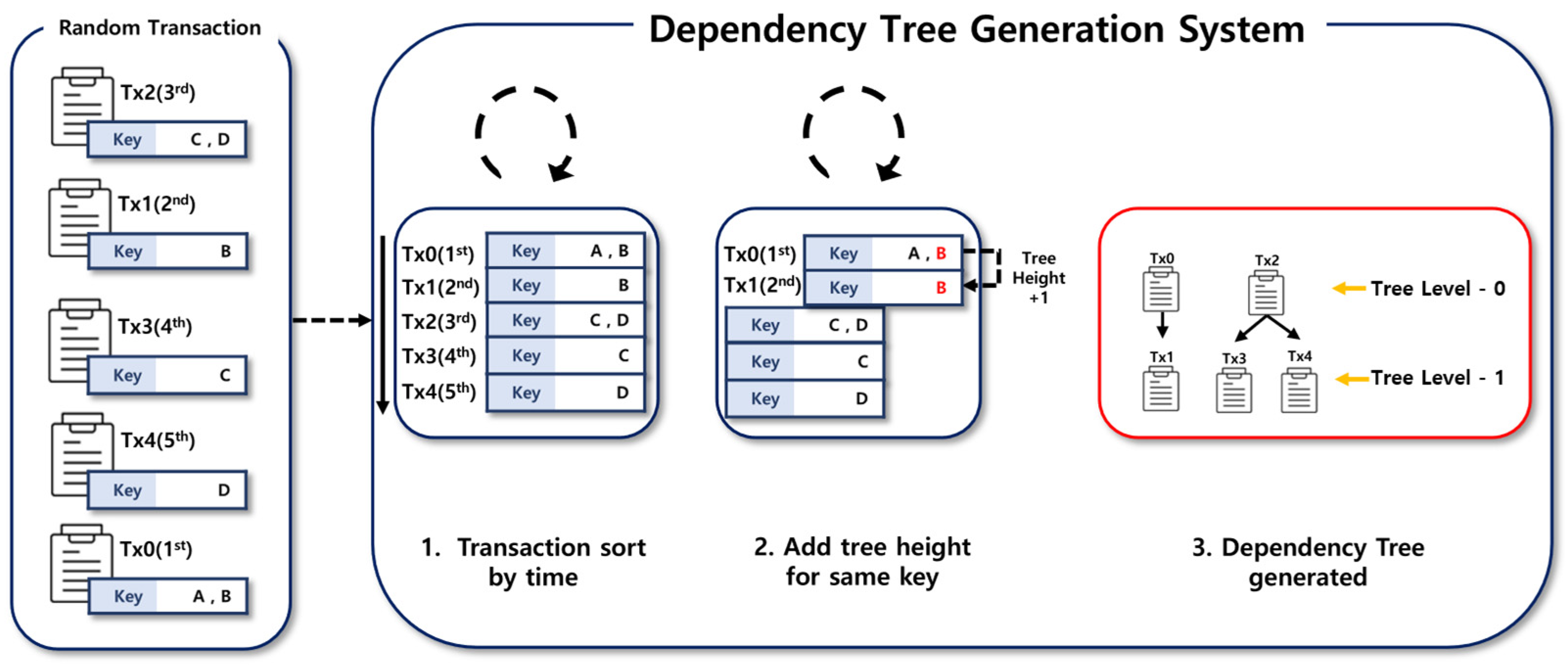

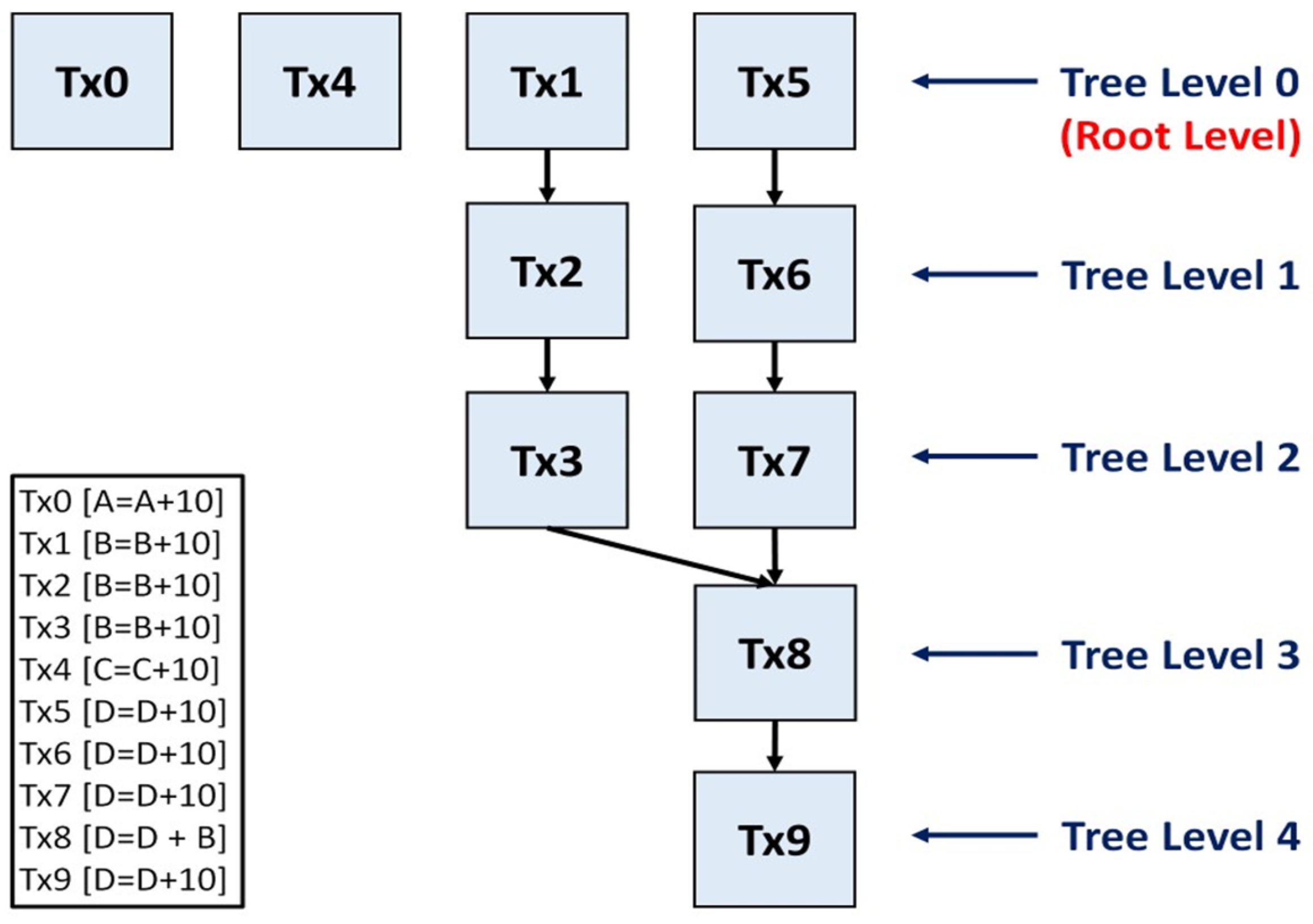

4.1. Transaction Processing with Dependency Tree

4.1.1. Deep-First Method

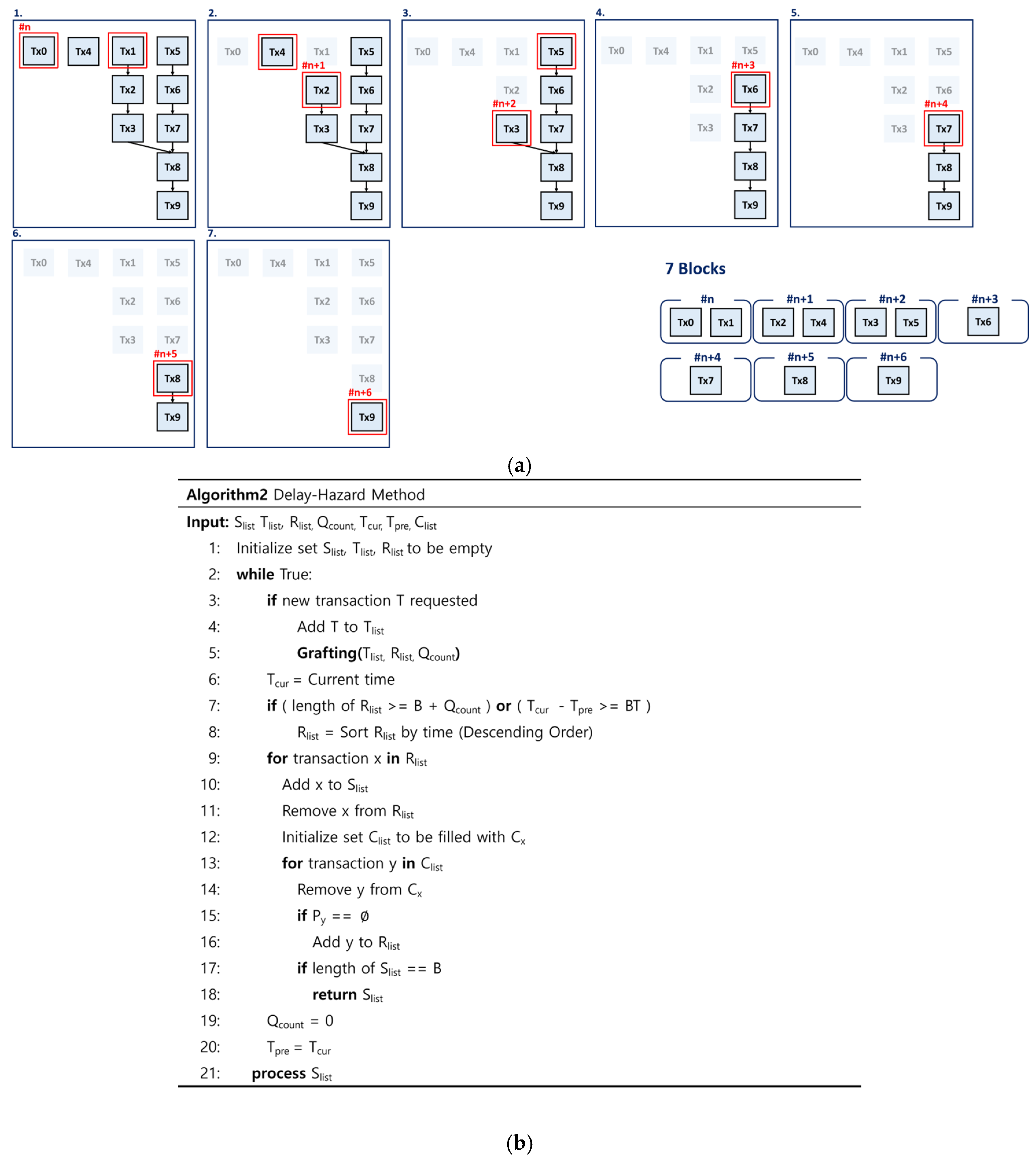

4.1.2. Delay-Hazard Method

4.1.3. Starve-Avoid Method

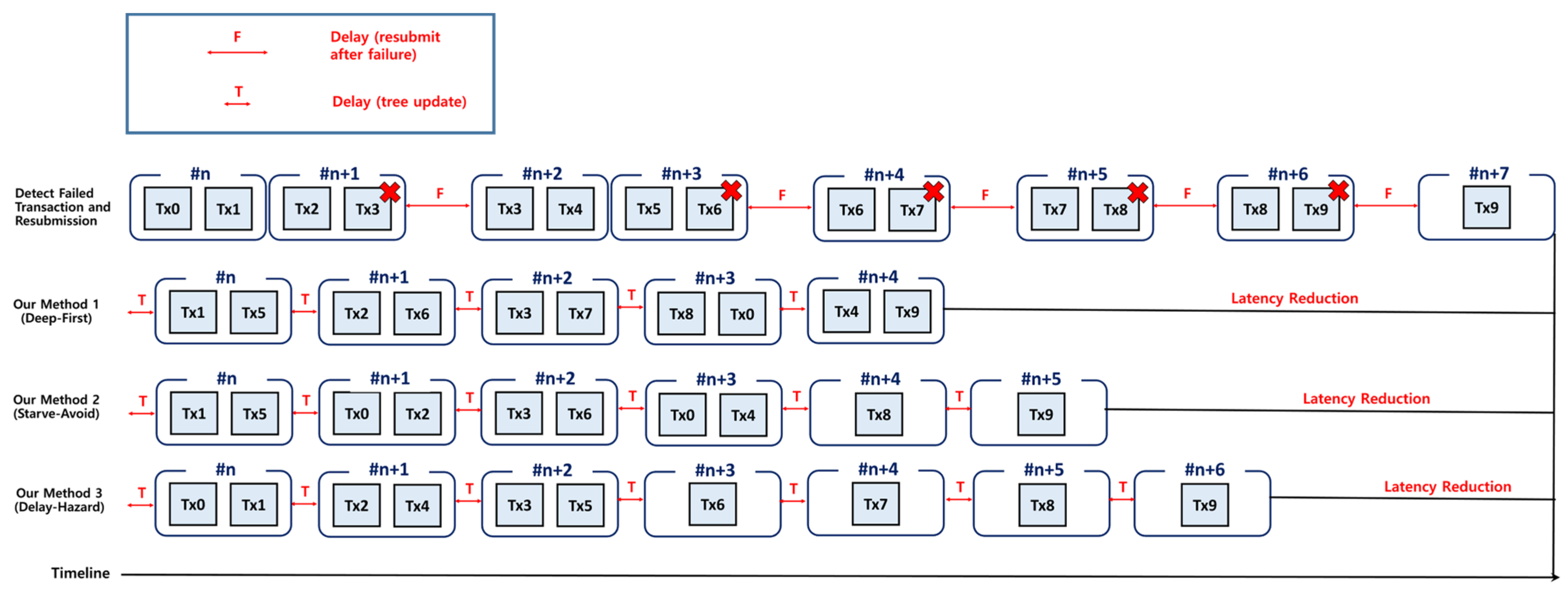

4.1.4. Method Comparison

4.2. Dependency Priority Factors

4.2.1. Tree Level

4.2.2. Time

4.2.3. Height

4.2.4. Starvation Limit

4.3. Dependency Check Methods

4.3.1. Three Types of Dependency Check Methods

1. Deep-First

2. Delay-Hazard

3. Starve-Avoid

| Priority | Conventional Hyperledger Fabric | Deep-First | Delay-Hazard | Starve-Avoid |
|---|---|---|---|---|
| 1 | Arrival Time | Lowest Tree Level | Lowest Tree Level | Transaction beyond Starvation Limit |
| 2 | - | Highest Height | Earliest Arrival Time | Lowest Tree Level |
| 3 | - | Earliest Arrival Time | - | Highest Height |
| 4 | - | - | - | Earliest Arrival Time |
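The priority order in the table can be expressed as one sort key per method. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: the `PendingTx` fields are hypothetical, tree level is assumed to be the depth of a transaction in the dependency tree, height is assumed to be the length of the longest chain of dependent transactions below it, and the starvation limit is assumed to be a maximum number of scheduling rounds a transaction may be passed over. The sketch only reproduces the ordering rules in the table; it does not enforce dependencies or model Fabric's endorsement and validation phases.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class PendingTx:
    """Hypothetical scheduler view of a queued transaction."""
    tx_id: str
    tree_level: int      # assumed: depth in the dependency tree (0 = root)
    height: int          # assumed: longest chain of dependent transactions below this node
    arrival_time: float  # time the transaction reached the scheduler
    skipped_rounds: int  # assumed: scheduling rounds in which it was passed over


SortKey = Callable[[PendingTx], Tuple]


def conventional_key(tx: PendingTx) -> Tuple:
    # Conventional Hyperledger Fabric column: arrival order only.
    return (tx.arrival_time,)


def deep_first_key(tx: PendingTx) -> Tuple:
    # Lowest tree level, then highest height (negated), then earliest arrival.
    return (tx.tree_level, -tx.height, tx.arrival_time)


def delay_hazard_key(tx: PendingTx) -> Tuple:
    # Lowest tree level, then earliest arrival.
    return (tx.tree_level, tx.arrival_time)


def make_starve_avoid_key(starvation_limit: int) -> SortKey:
    def key(tx: PendingTx) -> Tuple:
        starved = tx.skipped_rounds > starvation_limit
        # False sorts before True, so "not starved" puts starved transactions first;
        # ties fall back to lowest level, highest height, earliest arrival.
        return (not starved, tx.tree_level, -tx.height, tx.arrival_time)
    return key


def order(queue: List[PendingTx], key: SortKey) -> List[str]:
    """Return transaction IDs in the order the given priority key selects them."""
    return [tx.tx_id for tx in sorted(queue, key=key)]


if __name__ == "__main__":
    queue = [
        PendingTx("A", tree_level=0, height=2, arrival_time=3.0, skipped_rounds=0),
        PendingTx("B", tree_level=0, height=0, arrival_time=1.0, skipped_rounds=1),
        PendingTx("C", tree_level=1, height=1, arrival_time=0.5, skipped_rounds=4),
        PendingTx("D", tree_level=0, height=2, arrival_time=2.0, skipped_rounds=0),
    ]
    print(order(queue, conventional_key))          # ['C', 'B', 'D', 'A']
    print(order(queue, deep_first_key))            # ['D', 'A', 'B', 'C']
    print(order(queue, delay_hazard_key))          # ['B', 'D', 'A', 'C']
    print(order(queue, make_starve_avoid_key(3)))  # ['C', 'D', 'A', 'B']
```

Encoding each column as a tuple key keeps the methods directly comparable: the tuple elements follow the table rows in order, and Python's lexicographic tuple comparison applies the lower-priority criteria only to break ties. In the example, transaction C arrives first but sits one level down, so Deep-First and Delay-Hazard defer it until it exceeds the (hypothetical) starvation limit of 3 skipped rounds.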

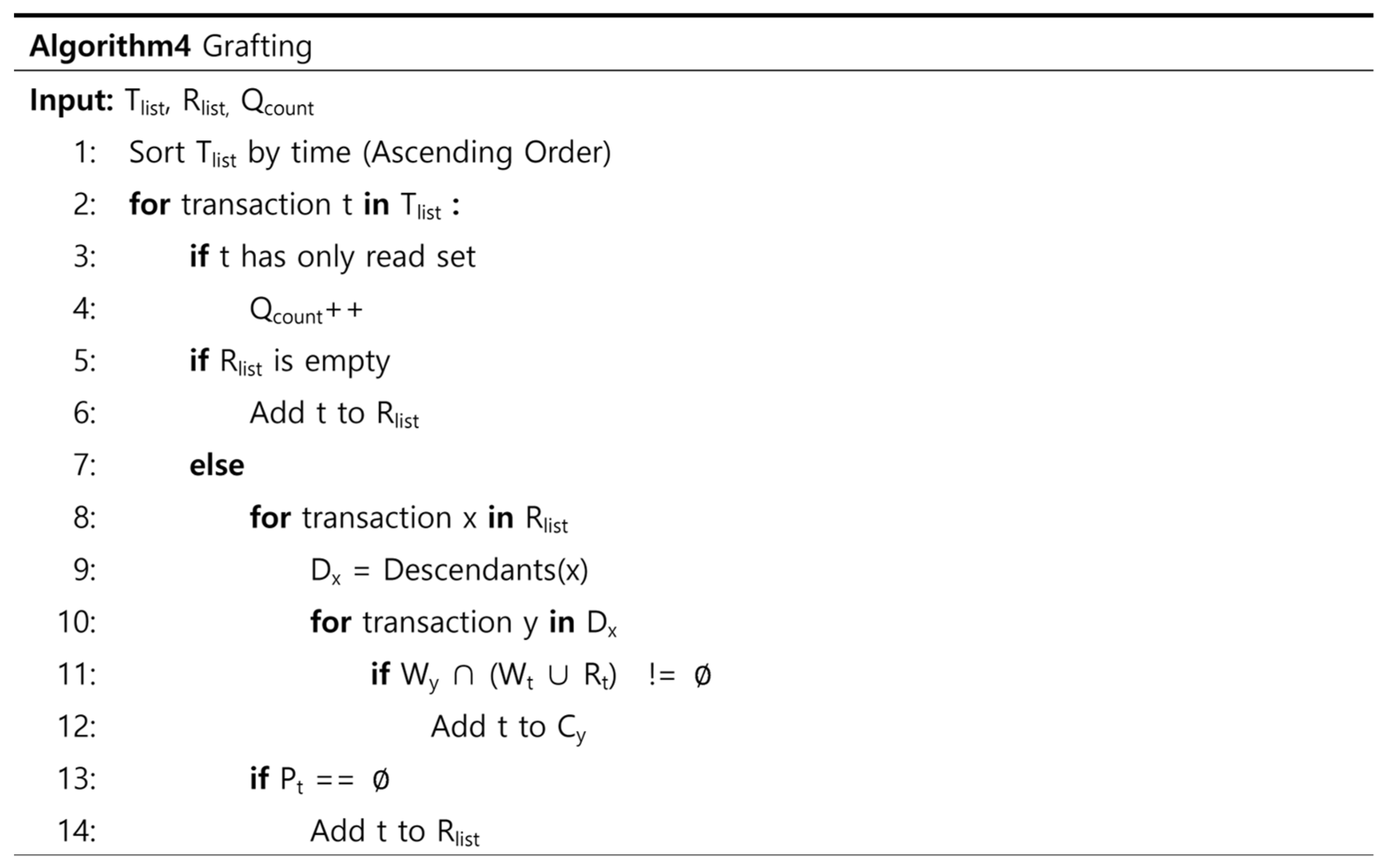

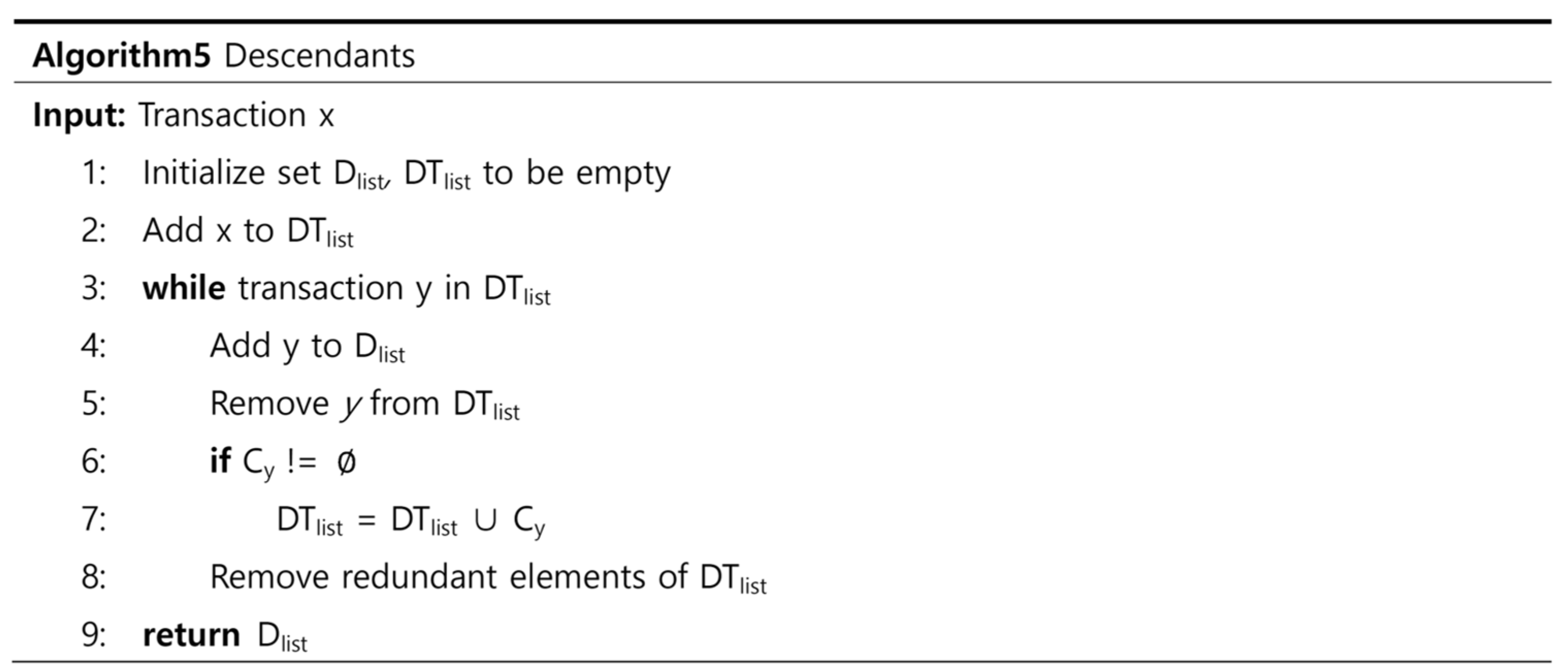

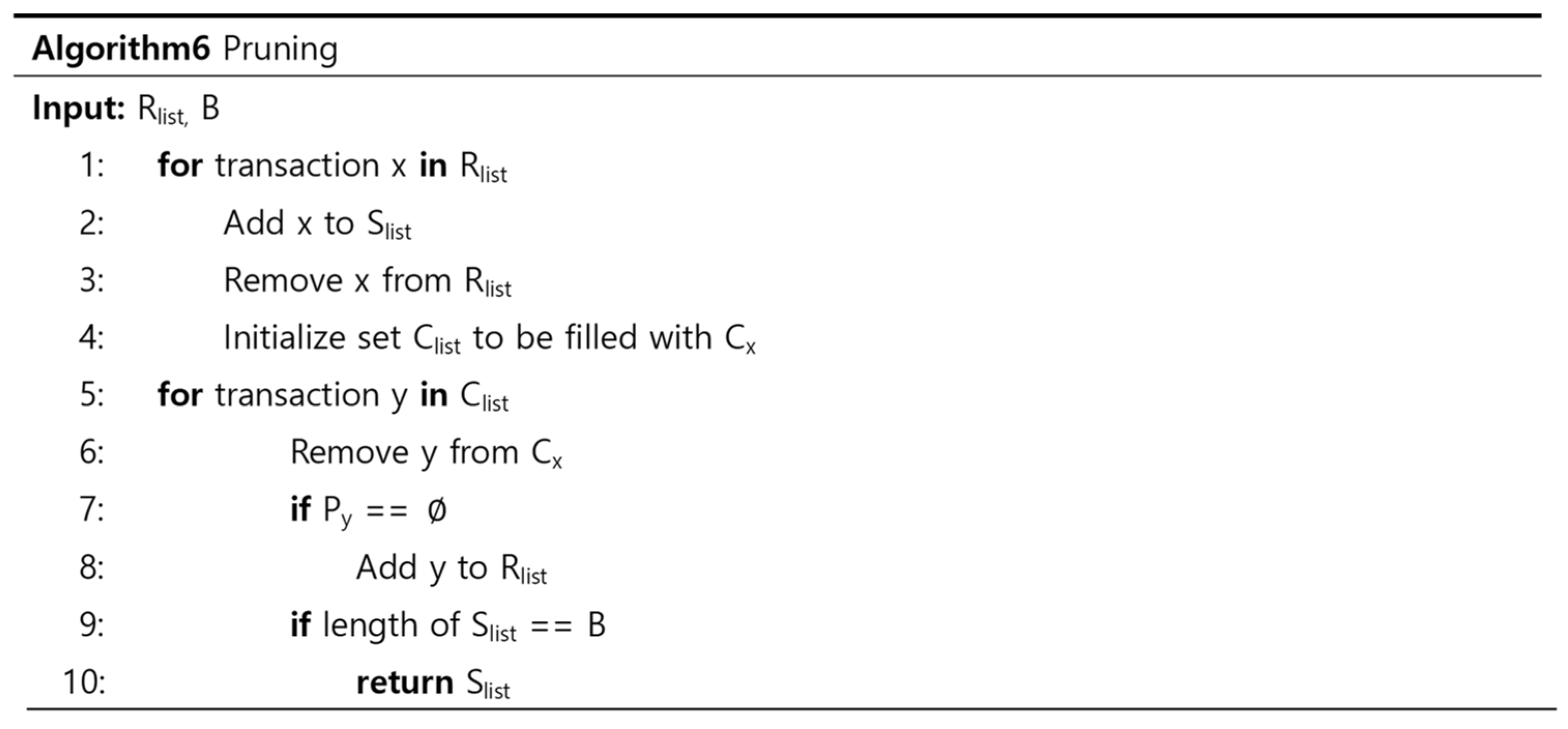

4.3.2. Details of Dependency Check Methods

5. Experiments and Results

5.1. Experimental Setup

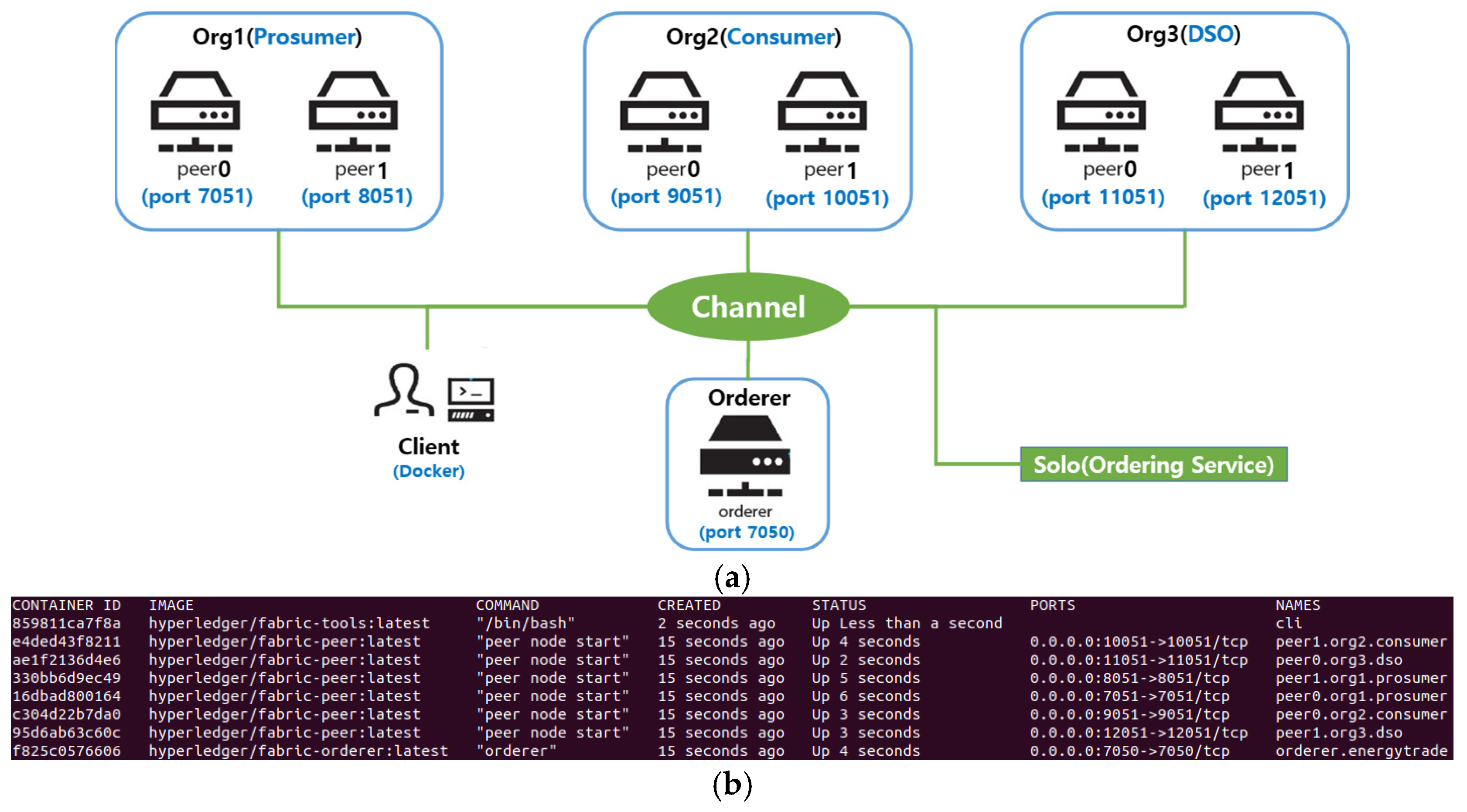

5.1.1. API-Integrated Experimental Setup for Dependency Management in Hyperledger Fabric

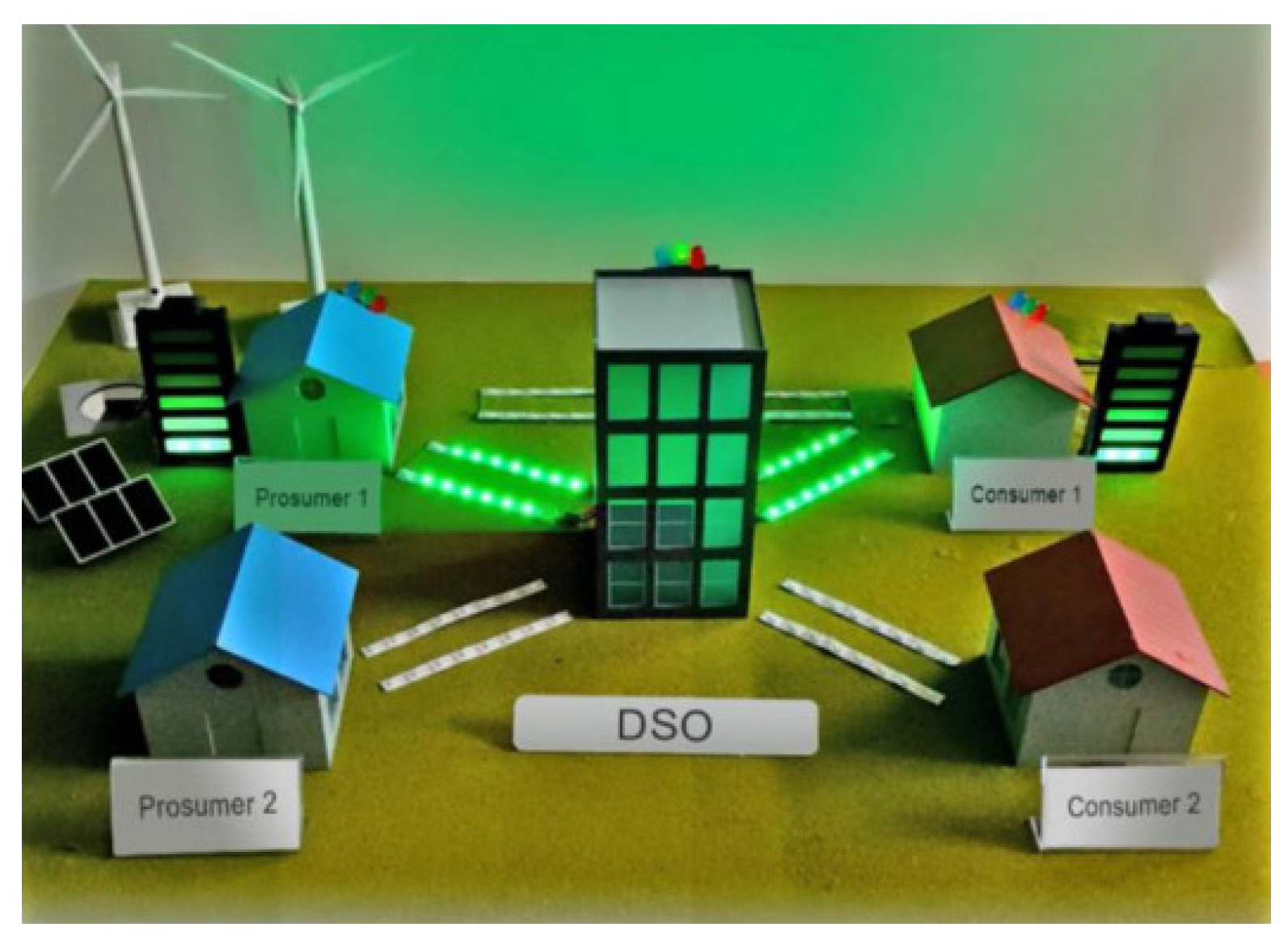

5.1.2. P2P Energy Trading System Using Hyperledger Fabric

5.2. Results

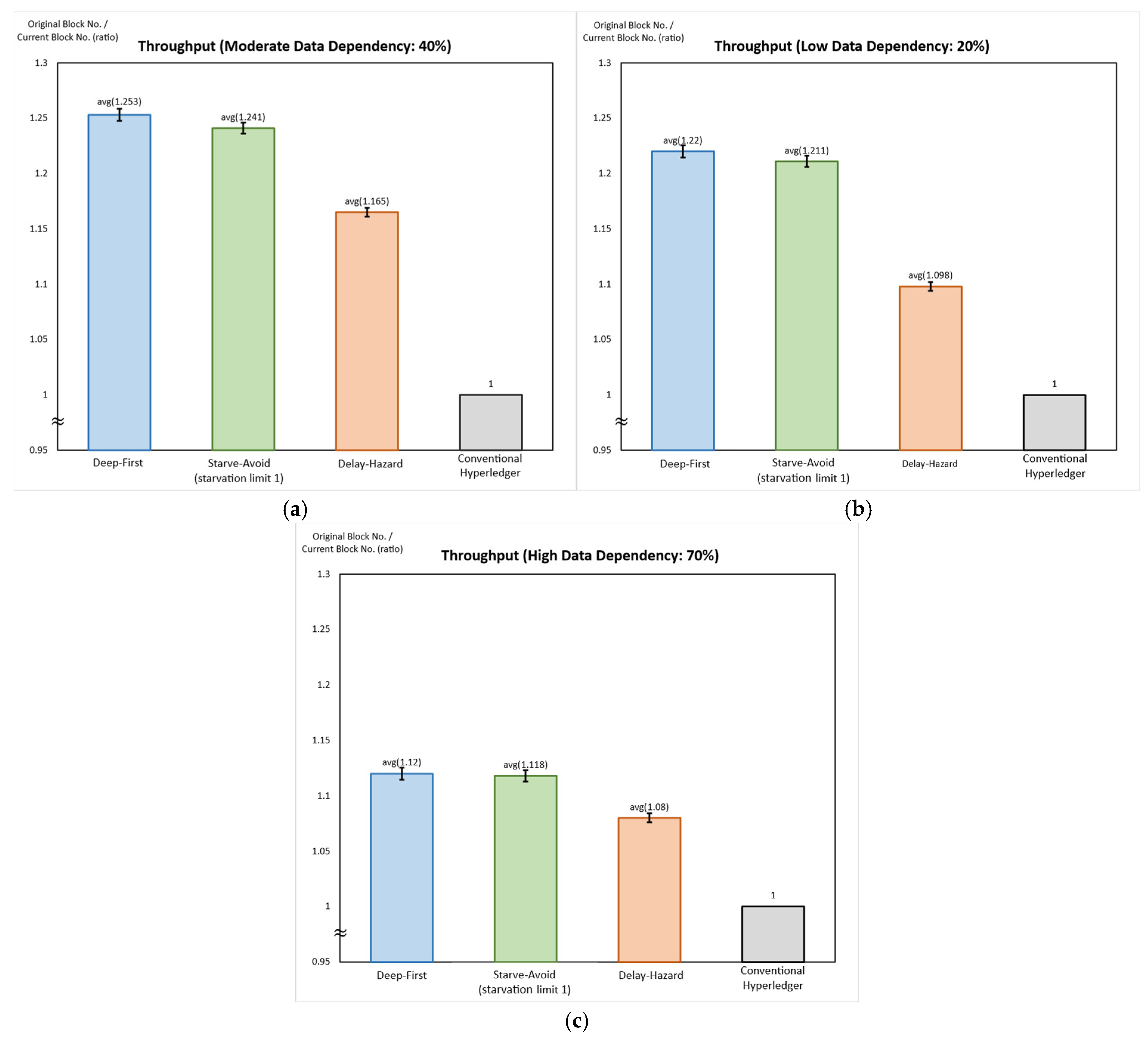

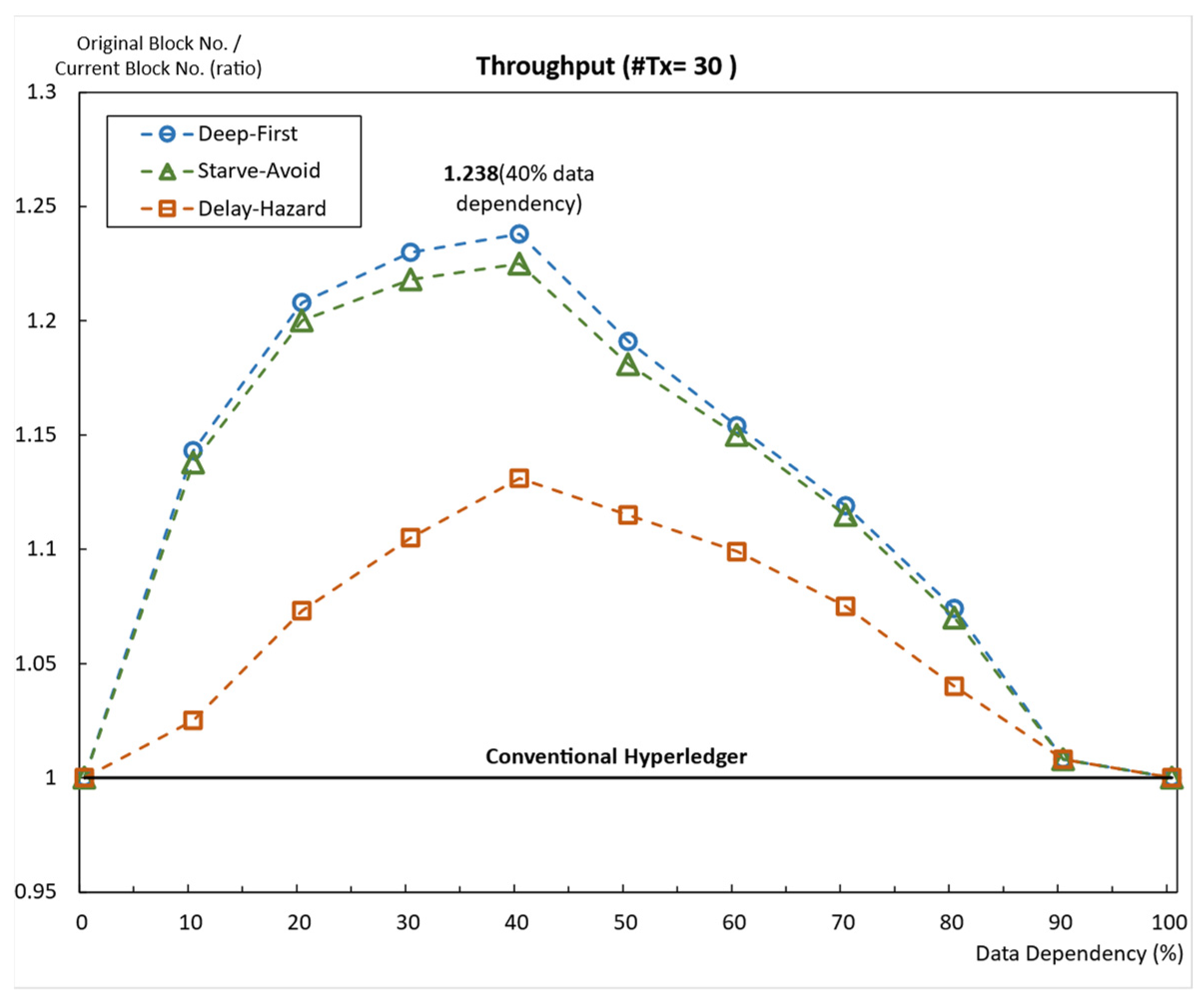

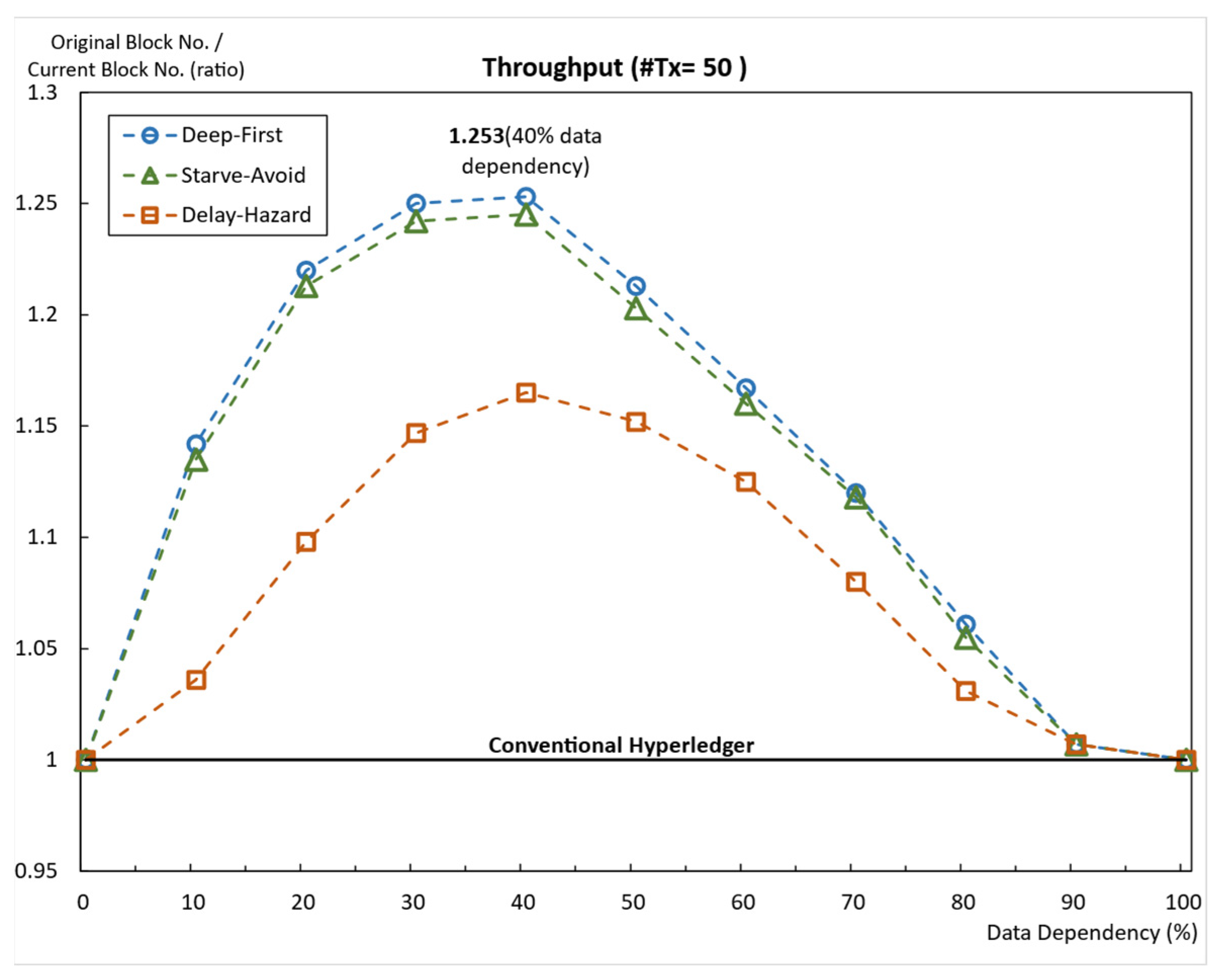

5.2.1. Throughput Comparison According to Data Dependency

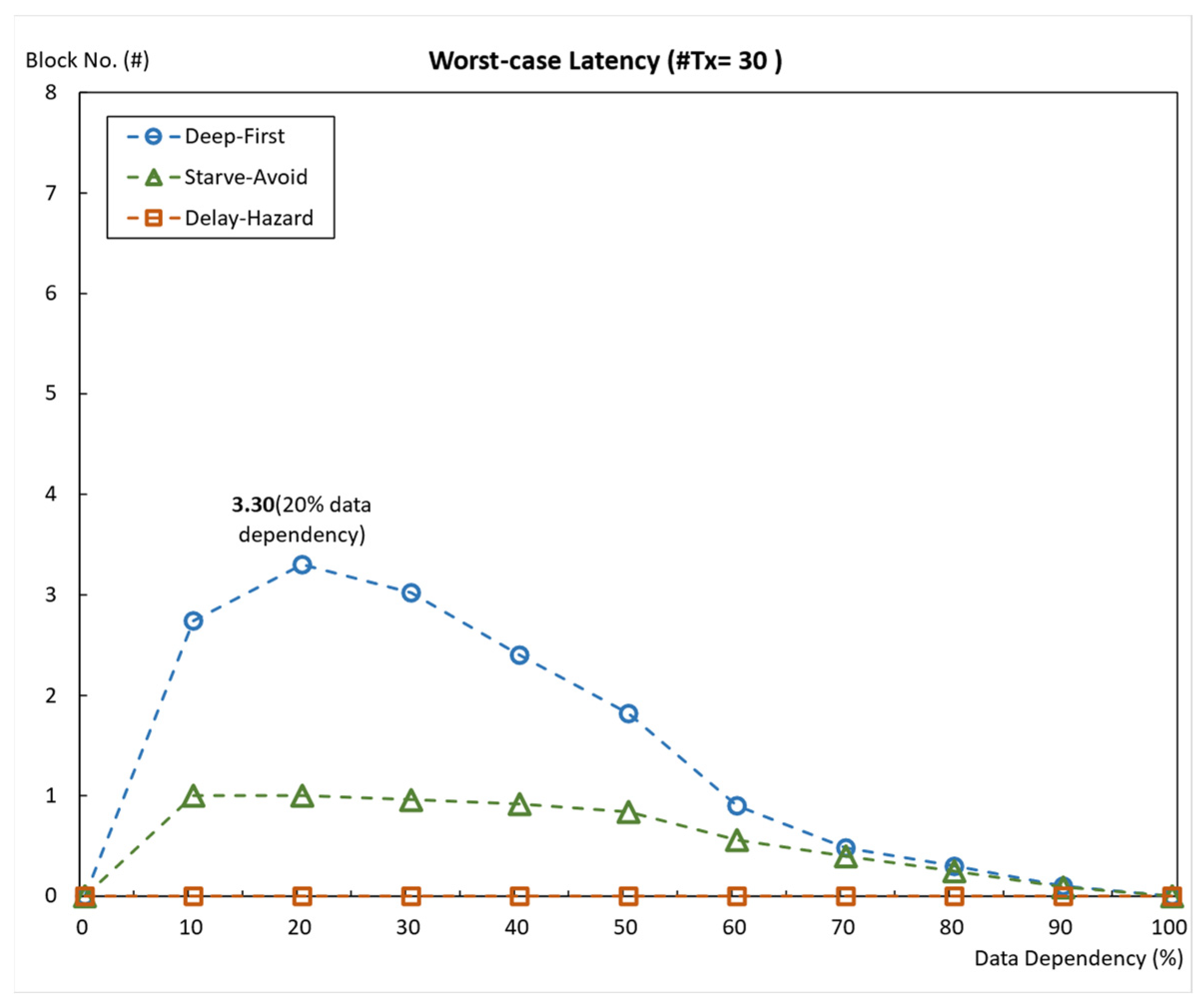

5.2.2. Average Throughput and Average Worst-Case Latency When #Tx = 30

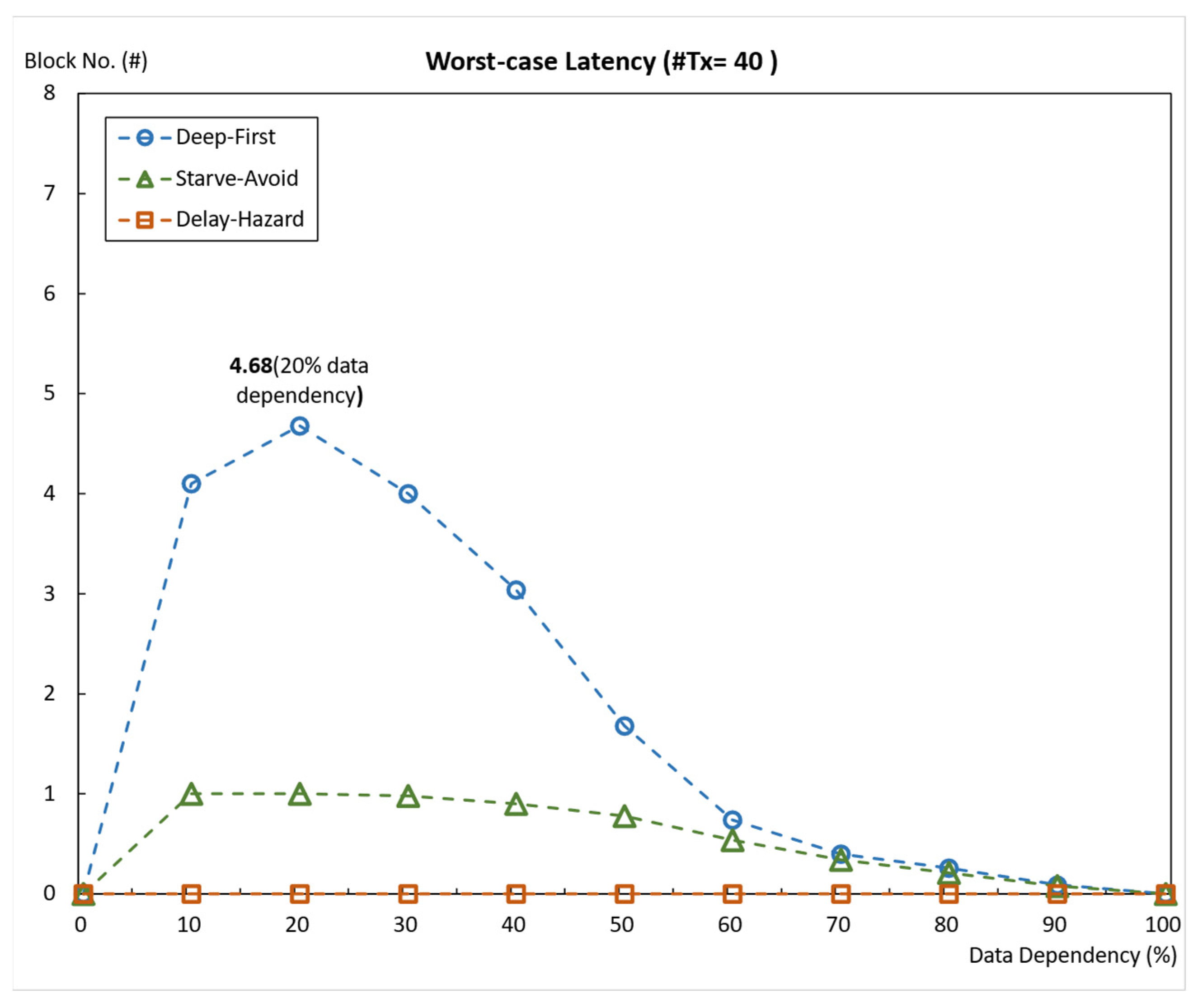

5.2.3. Average Throughput and Average Worst-Case Latency When #Tx = 40

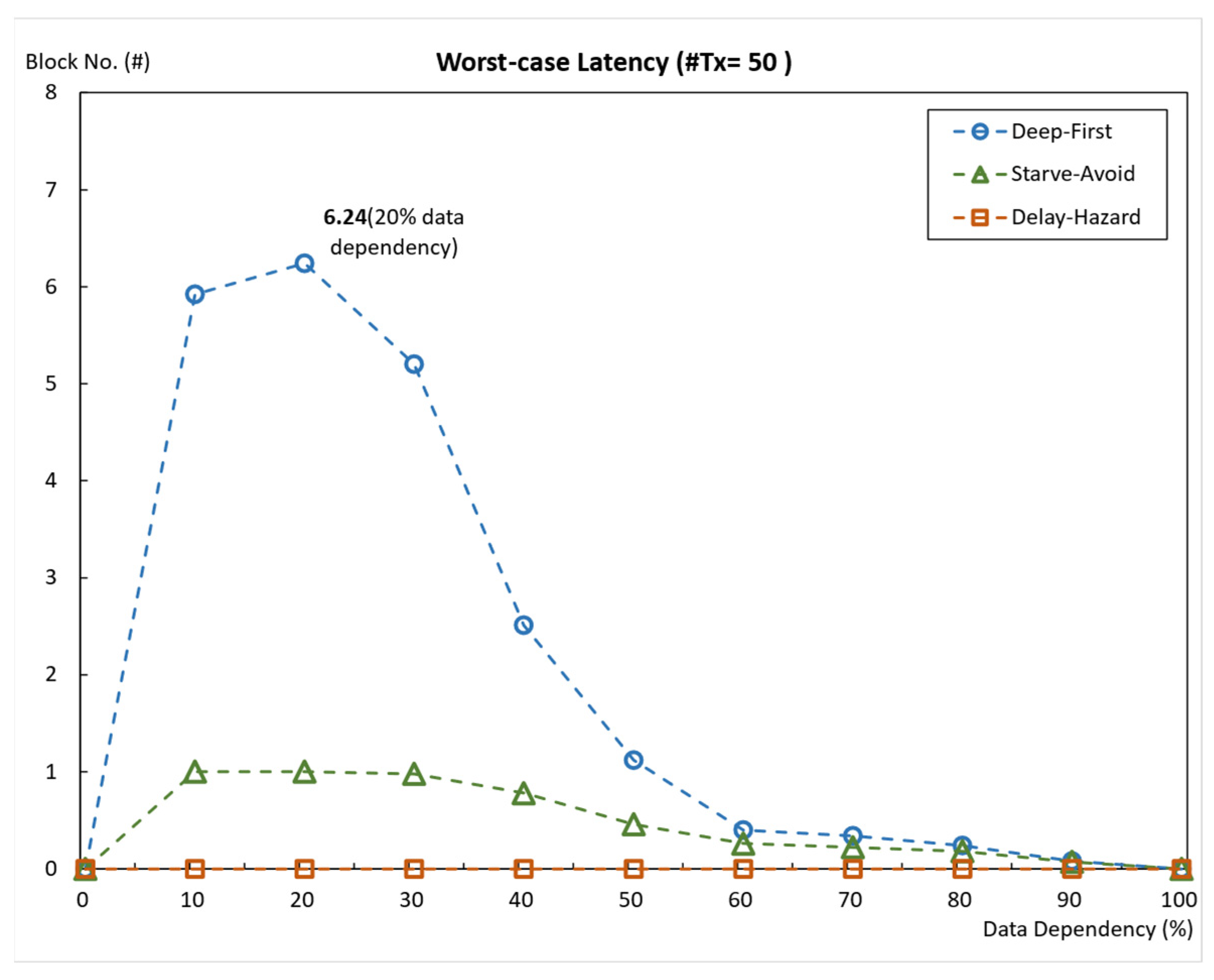

5.2.4. Average Throughput and Average Worst-Case Latency When #Tx = 50

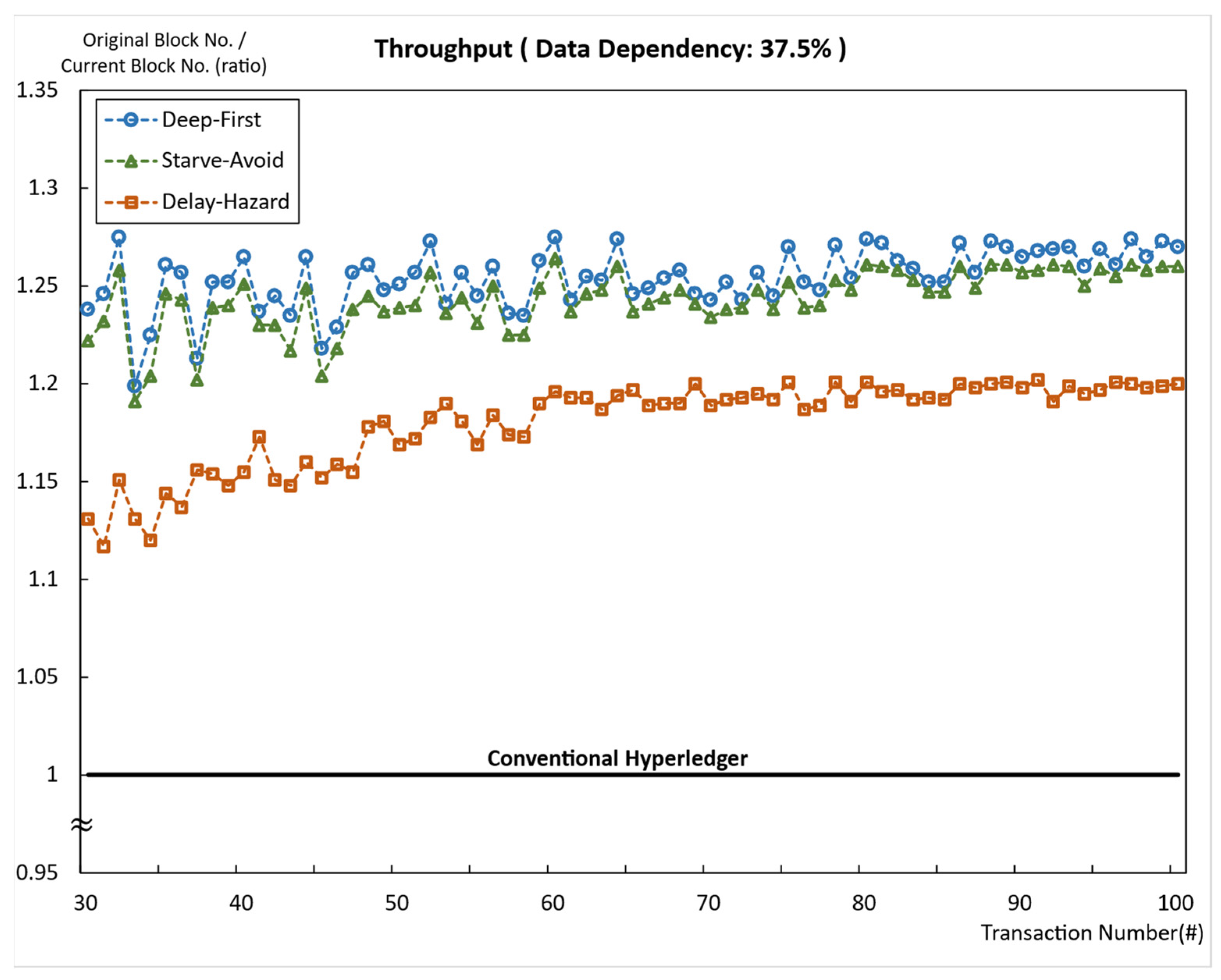

5.2.5. Average Throughput at Fixed Data Dependency of 37.5%

5.2.6. Latency Distribution with 50 Transactions

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Tapscott, D.; Tapscott, A. Blockchain Revolution: How the Technology behind Bitcoin Is Changing Money, Business, and the World; Portfolio: New York, NY, USA, 2016.

2. Zikratov, I.; Kuzmin, A.; Akimenko, V.; Niculichev, V.; Yalansky, L. Ensuring data integrity using blockchain technology. In Proceedings of the 2017 20th Conference of Open Innovations Association (FRUCT), St. Petersburg, Russia, 3–7 April 2017; pp. 534–539.

3. Introduction to Hyperledger Fabric. Available online: https://hyperledger-fabric.readthedocs.io/en/release-2.5/whatis.html (accessed on 12 December 2024).

4. Nakamoto, S. Bitcoin: A Peer-to-Peer Electronic Cash System. 2008. Available online: https://bitcoin.org/bitcoin.pdf (accessed on 3 February 2025).

5. Ethereum. Available online: https://www.ethereum.org (accessed on 12 December 2024).

6. Surjandari, I.; Yusuf, H.; Laoh, E.; Maulida, R. Designing a Permissioned Blockchain Network for the Halal Industry using Hyperledger Fabric with multiple channels and the raft consensus mechanism. J. Big Data 2021, 8, 10.

7. Zhang, W.; Anand, T. Ethereum architecture and overview. In Blockchain and Ethereum Smart Contract Solution Development: Dapp Programming with Solidity; Apress: Berkeley, CA, USA, 2022; pp. 209–244.

8. Ke, Z.; Park, N. Performance modeling and analysis of Hyperledger Fabric. Clust. Comput. 2023, 26, 2681–2699.

9. Wen, Y.F.; Hsu, C.M. A performance evaluation of modular functions and state databases for Hyperledger Fabric blockchain systems. J. Supercomput. 2023, 79, 2654–2690.

10. Yang, G.; Lee, K.; Lee, K.; Yoo, Y.; Lee, H.; Yoo, C. Resource analysis of blockchain consensus algorithms in Hyperledger Fabric. IEEE Access 2022, 10, 74902–74920.

11. Baliga, A.; Solanki, N.; Verekar, S.; Pednekar, A.; Kamat, P.; Chatterjee, S. Performance characterization of Hyperledger Fabric. In Proceedings of the 2018 Crypto Valley Conference on Blockchain Technology (CVCBT), Zug, Switzerland, 20–22 June 2018; pp. 65–74.

12. Khatri, S.; al-Sulbi, K.; Attaallah, A.; Ansari, M.T.J.; Agrawal, A.; Kumar, R. Enhancing Healthcare Management during COVID-19: A Patient-Centric Architectural Framework Enabled by Hyperledger Fabric Blockchain. Information 2023, 14, 425.

13. Park, I.H.; Moon, S.J.; Lee, B.S.; Jang, J.W. A P2P surplus energy trade among neighbors based on Hyperledger Fabric blockchain. In Information Science and Applications: ICISA 2019; Springer: Singapore, 2020; pp. 65–72.

14. Rehan, M.; Javed, A.R.; Kryvinska, N.; Gadekallu, T.R.; Srivastava, G.; Jalil, Z. Supply chain management using an industrial internet of things Hyperledger Fabric network. Hum. Centric Comput. Inf. Sci. 2023, 13, 4.

15. Chacko, J.A.; Mayer, R.; Jacobsen, H.A. Why do my blockchain transactions fail? A study of Hyperledger Fabric. In Proceedings of the 2021 International Conference on Management of Data, Xi’an, China, 20–25 June 2021; pp. 221–234.

16. World State. Available online: https://hyperledger-fabric.readthedocs.io/en/release-2.5/ledger/ledger.html#world-state (accessed on 12 December 2024).

17. Read and Write Operations. Available online: https://hyperledger-fabric.readthedocs.io/en/release-2.5/readwrite.html (accessed on 12 December 2024).

18. Ji, B.J.; Kuo, T.W. Better Clients, Less Conflicts: Hyperledger Fabric Conflict Avoidance. In Proceedings of the 2024 IEEE International Conference on Blockchain and Cryptocurrency (ICBC), Dublin, Ireland, 27–31 May 2024; pp. 368–376.

19. Bappy, F.H.; Zaman, T.S.; Sajid, M.S.I.; Pritom, M.M.A.; Islam, T. Maximizing Blockchain Performance: Mitigating Conflicting Transactions through Parallelism and Dependency Management. In Proceedings of the 2024 IEEE International Conference on Blockchain (Blockchain), Copenhagen, Denmark, 19–22 August 2024; pp. 140–147.

20. What Is a Ledger? Available online: https://hyperledger-fabric.readthedocs.io/en/release-2.5/ledger/ledger.html#what-is-a-ledger (accessed on 12 December 2024).

21. High-Throughput. Available online: https://github.com/hyperledger/fabric-samples/tree/main/high-throughput (accessed on 12 December 2024).

22. Amiri, M.J.; Agrawal, D.; El Abbadi, A. ParBlockchain: Leveraging transaction parallelism in permissioned blockchain systems. In Proceedings of the 2019 IEEE 39th International Conference on Distributed Computing Systems (ICDCS), Dallas, TX, USA, 7–9 July 2019; pp. 1337–1347.

23. Serra, E.; Spezzano, F. HTFabric: A Fast Re-ordering and Parallel Re-execution Method for a High-Throughput Blockchain. In Proceedings of the 33rd ACM International Conference on Information and Knowledge Management, Boise, ID, USA, 21–25 October 2024; pp. 2118–2127.

24. Leal, F.; Chis, A.E.; González-Vélez, H. Performance evaluation of private Ethereum networks. SN Comput. Sci. 2020, 1, 285.

25. Hogg, R.V.; Tanis, E.A. Probability and Statistical Inference; Prentice Hall: Upper Saddle River, NJ, USA, 2001.

26. Jang, J.W.; Choi, S.B.; Prasanna, V.K. Energy- and time-efficient matrix multiplication on FPGAs. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 2005, 13, 1305–1319.

| Metric | Ethereum | Conventional Hyperledger Fabric (× vs. Ethereum) | Our Method (× vs. Ethereum) | Speed-Up (Our Method vs. Conventional Fabric) |
|---|---|---|---|---|
| Blockchain type | Private | Private | Private | - |
| Throughput (TPS), 0% data dependency | 21 | 253 (12 ×) | 253 (12 ×) | 0% |
| Throughput (TPS), 40% data dependency | 21 | 135 (6.4 ×) | 171 (8.14 ×) | 27% |
| Throughput (TPS), 50% data dependency | 21 | 108 (5.1 ×) | 132 (6.2 ×) | 22% |
| Throughput (TPS), 100% data dependency | 21 | 8 (0.4 ×) | 8 (0.4 ×) | 0% |
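The multipliers and speed-up percentages in the table follow from simple ratios of the reported throughputs. The sketch below recomputes them, assuming the parenthesized multipliers are relative to Ethereum's 21 TPS and the speed-up column is the relative gain of Our Method over conventional Hyperledger Fabric (for example, 171/135 ≈ 1.27, hence 27%). The helper names `ratio_vs_ethereum` and `speed_up_percent` are illustrative only.

```python
ETHEREUM_TPS = 21  # Ethereum throughput reported in the table

# Data dependency (%) -> (conventional Fabric TPS, Our Method TPS), as reported.
REPORTED_TPS = {
    0: (253, 253),
    40: (135, 171),
    50: (108, 132),
    100: (8, 8),
}


def ratio_vs_ethereum(tps: float) -> float:
    """Throughput multiple relative to Ethereum (the parenthesized values)."""
    return tps / ETHEREUM_TPS


def speed_up_percent(conventional: float, ours: float) -> float:
    """Relative gain of Our Method over conventional Fabric, in percent."""
    return (ours / conventional - 1.0) * 100.0


if __name__ == "__main__":
    for dependency, (conv, ours) in REPORTED_TPS.items():
        print(
            f"{dependency:>3}% dependency: conventional {ratio_vs_ethereum(conv):.2f}x, "
            f"ours {ratio_vs_ethereum(ours):.2f}x, speed-up {speed_up_percent(conv, ours):.0f}%"
        )
```

Recomputed this way, the 40% and 50% speed-ups round to 27% and 22%, matching the table; 132/21 comes to about 6.29×, slightly above the 6.2× listed.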

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kim, J.-W.; Song, J.-G.; Park, I.-H.; Jo, D.-H.; Kim, Y.-J.; Jang, J.-W. Dependency Reduction Techniques for Performance Improvement of Hyperledger Fabric Blockchain. Big Data Cogn. Comput. 2025, 9, 32. https://doi.org/10.3390/bdcc9020032