1. Introduction

Social media affects nearly every aspect of human life: the way people think, act, talk, and behave, and what they buy, wear, and eat. It transcends boundaries, connecting diverse global audiences and cultures [1]. Social media usage spans various demographics and is expected to keep attracting new users over time. While it has brought numerous benefits and opportunities, it has also raised concerns about privacy, misinformation, addiction, and other psychological impacts [1]. As social media evolves and shapes society, it is crucial to understand its effects and implications. Like any tool, social media can be used for both constructive and harmful purposes, such as sharing useful information and saving people’s time and money, or manipulating and scamming others for personal gain [2].

Like any enterprise striving to flourish and surpass its competitors, social media platforms constantly experiment with algorithms and techniques that determine which content is prioritized and promoted, to attract more users and generate greater profits, and which content is buried. The primary measure for this is the level of engagement a user receives on their content, which takes different forms and names depending on the platform. These include actions such as “like,” “dislike,” “love,” “angry,” “comment,” “share,” “retweet,” “report,” “follow,” and “unfollow,” among others. A prevalent method for gauging a user’s popularity is to tally their number of followers, as well as the number of likes or retweets their content receives. Malicious actors can exploit this in various ways to reach people, spread harmful content, and achieve their goals [3].

In the real world, a person can be readily identified and verified through unique official documents that are issued only to that person and are hard to forge (ID card, driving license, and passport). These documents contain a set of identifiers that, taken together, are difficult to duplicate, such as name, fingerprints, photograph, birth date, and blood type. The situation is very different on social media, where each user is identified by a profile containing some basic information, such as name, photo, and birth date, with no real authority to check and validate this data. To create an account, most platforms require only a unique email address. So, with no unified verification authority, it has become very easy to forge or fake an identity on social media [4].

The identity of a person on social media can be real, false, or fake. With a real identity, a person has a single account that is created using their own information and is used solely and freely by that person. With a false identity, a user adopts someone else’s identity and information (mostly a celebrity, famous figure, or organization) to create an account and pose as that entity. With a fake identity, a person creates accounts using invented names and information that may coincide with those of real persons [5]. Detecting false identities is relatively easier than detecting fake identities. The focus of this study is to capture fake accounts on Twitter that were created using invented identities for malicious purposes such as sharing fake news, misleading web ratings, and spam.

Problem Definition and Motivation

Social media has brought an overwhelming influx of news and information spanning across every corner of the globe. With a diverse range of individuals behind these posts, each driven by their own unique intentions and agendas, it becomes increasingly challenging, and sometimes even impossible, to ensure that every piece of content shared on these platforms is both ethical and reliable. Hence, it is important to have an automated process capable of differentiating fake from real content. This includes identifying accounts that are dedicated to broadcasting false information, known as fake accounts. This study aims to address the significant threat posed by fake accounts on social media, which can propagate various forms of harmful content, spam, and misinformation. Examples of fraudulent activities that fake accounts can engage in include posting harmful links, spamming mass follow/unfollow requests, repeatedly sharing unrelated links and ads, stealing personal information, as well as disrupting online communities by ways of trolling, hate speech, and harassment. The goal of this study is to differentiate between fake and real accounts on X (formerly Twitter) by leveraging user information and tweets.

2. Related Work

Social media researchers and platforms use various methods and techniques to detect fraudulent accounts. Ref. [3] broadly categorized these methods into two types:

Techniques that focus on analyzing the traits and relationships of individual accounts (profile-based and graph-based methods);

Techniques that aim to detect organized activity by large groups of accounts (e.g., crowdturfing).

In [4], the authors identified 23 characteristics for categorizing Twitter accounts as spammer or non-spammer, reaching an accuracy of 84.5%. Ref. [6] utilized a smaller set of 10 features, with less promising results. The work described in [7] employs an SVM to classify fake profiles in a Facebook game, extracting 13 features for each game user. Instead of individual profile-based features, other approaches rely on graph-based features for this classification task [8,9]. The work described in [10] relies on the observation that fake profiles tend to establish connections predominantly with other fake profiles rather than legitimate ones. As a result, a distinct separation exists between the subgraphs comprising fake and non-fake accounts. The authors of [11], on the other hand, claim the opposite: fake accounts do not exclusively associate with other fake accounts. They present a new detection methodology centered on analyzing the features exhibited by victims’ accounts. Another approach studies changes in user behavior, which may occur when a legitimate account is compromised and temporarily acts maliciously. For example, anomalous behavior can be detected by monitoring features such as the timing and origin of messages, language, message topic, URLs, use of direct interaction, and geographical proximity [12].

In approaches that try to capture the coordinated activities of large groups of accounts, the black market and online sellers of fake accounts play a major role, with a customer base that ranges from celebrities and politicians to cybercriminals. Ref. [13] describes the characteristics of the Twitter follower market, arguing that there are two major types of accounts that may follow a customer: fake accounts and compromised accounts. The work described in [14] shows the danger of crowdturfing, in which workers are paid to create a false digital impression. The authors first describe the operational structure of such systems and how it can be used to build an entire fake campaign, and then build a benign campaign of their own to show how easy and dangerous the process can be. Ref. [15] investigated the feasibility of using machine learning techniques to identify crowdturfing campaigns, as well as the resilience of these methods against evasive tactics employed by malicious actors. According to their findings, traditional machine learning methods can detect crowdturfing workers with an accuracy of 95–99%. However, these detection mechanisms can be circumvented if the workers modify their behavior.

Most of the works mentioned above require historical data, as they depend heavily on extensive content or behavior records. They therefore suffer from a significant time delay, whereby the fake user may have already carried out numerous malicious activities before being identified and removed. To address this concern, Ref. [16] developed a system to detect fake accounts directly after registration. Their approach analyzes both user behavior and content to identify suspicious patterns or activities, and includes the extraction of synchronization-based and anomaly-based features. The authors in [17,18] present a method for detecting social spammers using social honeypots and machine learning. Multiple honeypots are first deployed to lure social spammers, and an ML model is then trained using features derived from the data gathered by these honeypots. The work described in [19] examines various features used in detecting fake Twitter accounts and creates a dataset comprising both human and fake follower accounts. The authors then evaluate several machine learning classifiers on this dataset and introduce their own classifier. They found that features based on the friends and followers of the account under investigation yielded the best results among all the analyzed features.

Another approach to detecting spam on Twitter is to concentrate on the tweet itself instead of user information. Ref. [20] proposed a method in which features were extracted from the content of the tweet, such as the presence of particular keywords, URLs, and hashtags, as well as tweet structure (e.g., length, capitalization, and punctuation usage). Additionally, the temporal pattern of tweets was analyzed as a feature, with the authors finding that spam tweets are frequently posted in bursts, while genuine tweets are more evenly distributed over time. The authors used machine learning algorithms to discover patterns in these features and differentiate between spam and genuine tweets. In [21], the authors suggest a method for performing sentiment analysis on Twitter data using the Naïve Bayes classifier. They use a dataset of 10,000 tweets collected from Twitter and manually categorized as positive, negative, or neutral. Various features, such as specific words or phrases, are extracted from the preprocessed data and used to train the Naïve Bayes classifier. The study reported an accuracy of 80.5% for sentiment classification of Twitter data using this approach. To counter the evasion techniques used by spammers, some works try to use more robust features. Refs. [22,23] propose hybrid frameworks: Ref. [22] exploits users’ metadata together with graph-based and text-based features, and found that Random Forest gives the best performance. Similarly, Ref. [23] uses four sets of features in its classification: account-based, text-based, graph-based, and automation-based features. Other approaches rely on DL techniques; e.g., Ref. [24] uses the Word2Vec technique to preprocess the dataset and assign a multi-dimensional vector to each tweet. Ref. [25] developed two classifiers: a text-based classifier that only considers user tweet text, and a combined classifier that uses CNNs to identify relevant features from high-dimensional vector representations of tweet text and applies Dense layers to users’ metadata. Overall, the combined model shows better results.

Recent works utilize more complex architectures and hybrid approaches to enhance fake account detection on platforms like X. For instance, Ref. [26] introduced BotMoE, a mixture-of-experts model using community-aware features for Twitter bots, achieving high precision via modal-specific experts. Ref. [27] developed Twibot-22, a graph benchmark integrating profiles, text, and networks for scalable detection. Ref. [28] proposed BotArtist, a semi-automatic pipeline built on profile features across nine datasets, outperforming 35 baselines (F1: 83.19%) with low API costs. Recent multimodal works align with our hybrid approach. For instance, the work described in [29] presented TMTM, combining RoBERTa text embeddings, user features (46 attributes), and relational graph convolutional networks (R-GCN) on Twibot-22, improving F1 by 5.48% via enriched features and highlighting the marginal gains from text, which echoes our findings. The work described in [30] used CNNs on Twitter profile images and metadata, reaching 92% accuracy for bots and cyborgs, but noted real-world drops due to dynamic behaviors. Ref. [31] offered an unsupervised Sliding Histogram method for coordinated fake-follower campaigns, detecting anomalies in follow patterns without labels and scaling to large-scale analysis. Ref. [32] explored the psychology of human-bot detection, finding that feature overlaps challenge simple classifiers. Globally, the work described in [33] compared bot and human traits, revealing 20% bot chatter with distinct patterns. Ref. [34] characterized spam prevalence, linking 22% of news links to top bots.

Our work builds on these studies, using simple Dense and LSTM hybrid models as a reproducible baseline and focusing on optimizer impacts amid evolving platforms [34]. Unlike transformer-heavy models [26,29], we prioritize scalability over complexity.

To contextualize our approach, Table 1 summarizes and compares key characteristics of some representative recent fake account detection works, including methods, datasets, and reported performance metrics.

Shortcomings of the related work may be enumerated as follows:

Narrow Focus: Many of the works discussed in the literature review target specific types of fake users or spammers within a narrow domain. This limited focus may not capture the full range of fraudulent activities on social media platforms.

Dependency on Historical Data: Several approaches heavily rely on historical data, either content-based or behavior-based, which poses a significant drawback of time delay. By the time the fake user is identified and eliminated, they may have already executed numerous malicious activities.

Limited Robustness: Some of the detection methods can be evaded or circumvented if the fraudulent actors modify their behavior or tactics. This undermines the robustness of the detection mechanisms and raises concerns about their effectiveness in real-world scenarios.

Costly Implementation: Certain approaches, such as graph-based methods, can be computationally expensive and require extensive resources for implementation. This can limit their applicability in large-scale settings.

Lack of Comprehensive Features: Some studies focus on a limited set of features, either profile-based or tweet-based, without considering a comprehensive range of indicators. This may result in incomplete detection and overlook certain patterns or characteristics of fake accounts.

Limited Generalizability: The performance of some detection methods may be limited to specific datasets or contexts, making it challenging to generalize their effectiveness to different social media platforms or scenarios.

Lack of Comparative Analysis: Some works present results without a comparative analysis of different methods, making it difficult to assess the relative performance and strengths of each approach.

Incomplete Coverage of Spam Detection: While some studies concentrate on fake user detection, there is a lack of comprehensive coverage of spam detection in the context of Twitter, with limited focus on analyzing tweet content and sentiment analysis.

Overall, the shortcomings of the related work include limited scope, dependency on historical data, potential evasion by fraudulent actors, costly implementation, lack of comprehensive features, limited generalizability, lack of comparative analysis, and incomplete coverage of spam detection. Addressing these limitations would contribute to more effective and robust methods for detecting fraudulent accounts and spam on social media platforms.

5. Train and Test Plan

Data preprocessing was performed on a high-memory virtual machine (96 GB RAM, 24-core Intel Xeon E5-2699 v4 @ 2.20 GHz), while all model training and evaluation were conducted using Google Colab notebooks with GPU/TPU support [43]. This environment provides pre-installed deep learning libraries (TensorFlow, Keras, PyTorch, Pandas, NumPy) and can be integrated with Weights and Biases (WandB) for experiment tracking [44].

A total of seven model architectures were implemented: four one-branch models (F-1 to F-4) using only user metadata, and three two-branch models (2B1 to 2B3) combining metadata with tweet embeddings. Each model was trained with four optimizers: Stochastic Gradient Descent (SGD) [45], RMSprop [46], Adam [47], and Adadelta [48]. Additionally, two variants per architecture used SGD with a learning rate reducer (ReduceLROnPlateau, factor = 0.1, patience = 5 on validation loss) [49].

All models were trained for up to 200 epochs with a batch size of 64 and an initial learning rate of 0.015 (or 0.001 for learning rate reducer variants). Early stopping (patience = 7 epochs on validation loss) [50] was applied universally to prevent overfitting. Hyperparameters were initialized based on values commonly used in prior social media classification studies and refined through iterative validation to ensure stable convergence under resource-constrained settings.
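The interplay between the learning rate reducer and early stopping can be illustrated with a small, framework-free simulation. This is a simplified sketch and not the Keras implementation: the function name `simulate_schedule` and the validation-loss sequence are hypothetical, and details such as `min_delta` and cooldown are ignored. It uses the settings stated above (factor = 0.1, reducer patience = 5, early-stopping patience = 7, initial rate 0.001).

```python
def simulate_schedule(val_losses, lr=0.001, factor=0.1,
                      lr_patience=5, stop_patience=7):
    """Return (epoch, learning_rate) pairs until early stopping triggers."""
    best = float("inf")
    lr_wait = 0      # epochs without improvement since the last LR cut
    stop_wait = 0    # epochs without improvement since the best loss
    history = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            # Improvement: remember it and reset both counters.
            best = loss
            lr_wait = 0
            stop_wait = 0
        else:
            lr_wait += 1
            stop_wait += 1
            if lr_wait >= lr_patience:
                # Plateau detected: shrink the learning rate.
                lr *= factor
                lr_wait = 0
        history.append((epoch, lr))
        if stop_wait >= stop_patience:
            # Early stopping: no improvement for `stop_patience` epochs.
            break
    return history


# Hypothetical loss curve: three improving epochs, then a plateau.
losses = [1.0, 0.9, 0.8] + [0.85] * 10
for epoch, rate in simulate_schedule(losses):
    print(epoch, rate)
```

With this hypothetical sequence, the rate is cut once (at epoch 8, after five non-improving epochs) and training stops at epoch 10, which shows that with these patience values the reducer gets at most one chance to act on a plateau before early stopping ends the run.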

Hyperparameter configurations were evaluated through independent training runs for each optimizer and learning rate setting, with model selection guided by early-stopped validation performance and final comparison on the held-out test set. All experiments were tracked using Weights and Biases (WandB), ensuring full traceability of configurations, metrics, and training dynamics across all trials. The complete logs, including loss curves, confusion matrices, and epoch-level metrics for all models, are archived and accessible through the Python notebook and the shared folder at https://drive.google.com/drive/folders/1-AaK8v68WeYvw8KqpOd1TMINqLS3fCIT (accessed on 11 November 2025).

The naming convention follows:

One-branch: F-number-optimizer (e.g., F1-SGD)

Two-branch: 2Bnumber-optimizer (e.g., 2B1-Adam)

With reducer: …-SGD-lr (0.015)
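As an illustration of the naming convention, the following sketch enumerates the run names for the four base optimizers. The variable names are hypothetical, and the learning-rate-reducer variants are deliberately left out because their full naming pattern is not spelled out above.

```python
from itertools import product

# Architecture identifiers as used in the examples above (F1-SGD, 2B1-Adam).
one_branch = [f"F{i}" for i in range(1, 5)]     # F1 .. F4
two_branch = [f"2B{i}" for i in range(1, 4)]    # 2B1 .. 2B3
optimizers = ["SGD", "RMSprop", "Adam", "Adadelta"]

# One run name per (architecture, optimizer) pair.
run_names = [f"{arch}-{opt}"
             for arch, opt in product(one_branch + two_branch, optimizers)]

print(len(run_names))   # 7 architectures x 4 optimizers
print(run_names[:4])
```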

Performance was evaluated on the test set using a comprehensive set of metrics: accuracy, loss, AUC, precision, recall, specificity, F1/F2 scores, and Matthews Correlation Coefficient (MCC) [51,52].
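For reference, the threshold-based metrics in this list can all be derived from the four confusion-matrix counts. The sketch below uses the standard definitions; the function name `classification_metrics` and the example counts are hypothetical and are not taken from the study's results. Loss and AUC are omitted because they require predicted probabilities rather than counts.

```python
import math


def classification_metrics(tp, fp, tn, fn):
    """Compute standard binary metrics from confusion-matrix counts."""
    def safe_div(num, den):
        # Convention used here: an undefined ratio is reported as 0.0.
        return num / den if den else 0.0

    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)           # sensitivity
    specificity = safe_div(tn, tn + fp)

    def f_beta(beta):
        b2 = beta * beta
        return safe_div((1 + b2) * precision * recall, b2 * precision + recall)

    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": safe_div(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f_beta(1.0),
        "f2": f_beta(2.0),                   # weights recall above precision
        "mcc": safe_div(tp * tn - fp * fn, mcc_den),
    }


# Hypothetical counts, for illustration only.
for name, value in classification_metrics(tp=50, fp=10, tn=30, fn=10).items():
    print(f"{name}: {value:.4f}")
```

Unlike accuracy, MCC uses all four cells of the confusion matrix, so a model that predicts a single class for every sample scores 0 under the convention above even when its accuracy matches the class prior.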

5.1. Models

This section details the seven deep learning architectures evaluated in this study, categorized into one-branch (F-1 to F-4) and two-branch (2B1 to 2B3) models. Each model is described with its structure, layer configuration, and design rationale. For full architectural transparency, the complete Keras model summary (including layer types, output shapes, and parameter counts) for each variant is provided in Appendix C.

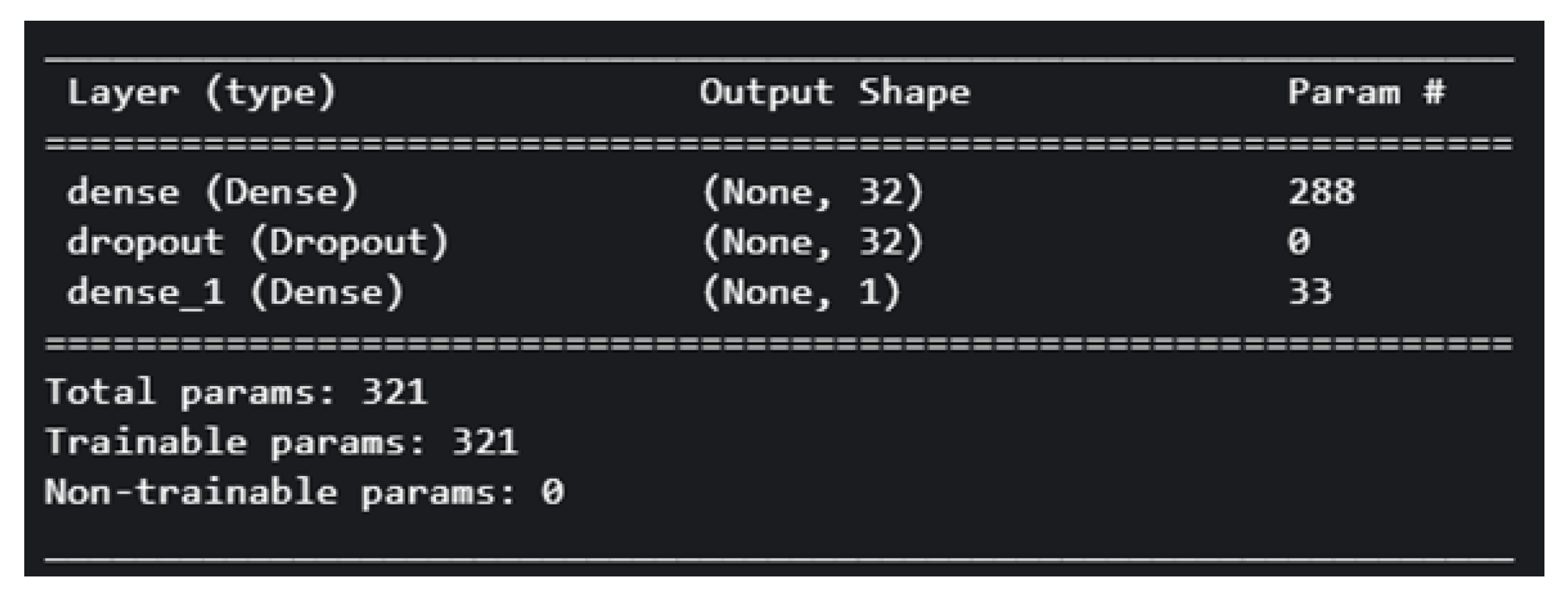

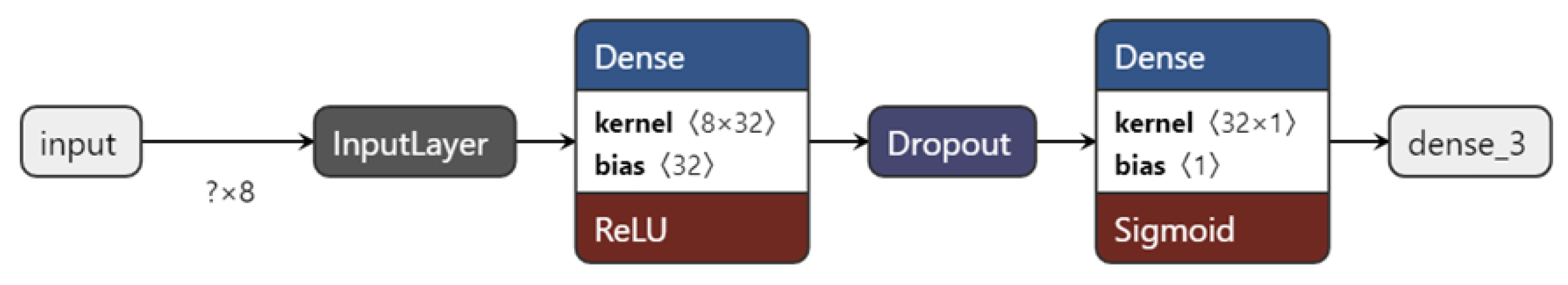

5.1.1. F-1 Model

The model described here is the simplest one in this work, comprising only two Dense layers and Dropout. Dense layers, also known as fully connected layers, connect every neuron to all neurons in the previous and subsequent layers, allowing for the learning of non-linear relationships in data [53,54]. Dropout is a technique that randomly selects and deactivates nodes in the network during each training iteration. The selected nodes’ outputs are set to zero, which helps prevent overfitting and promotes a more robust and generalized model [55]. The F-1 model has a total of 321 trainable parameters. Figure 8 shows the architecture of the model.
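The reported parameter count can be sanity-checked with the usual formula for a fully connected layer, inputs × units + units (weights plus biases). The sketch below is an inference rather than the documented configuration: it assumes eight metadata input features, a hidden Dense layer of 32 units, and a single output unit. Under the first and last assumptions, 32 is the only hidden width that yields 321; the authoritative layer sizes are those in the Keras summary in Appendix C.

```python
def dense_params(n_inputs, n_units):
    """Trainable parameters of a fully connected layer: weights + biases."""
    return n_inputs * n_units + n_units


# Assumed layout (not confirmed by the text): 8 inputs -> Dense(32) -> Dense(1).
# Dropout layers have no trainable parameters, so they are not counted.
n_features = 8
hidden_units = 32

hidden = dense_params(n_features, hidden_units)   # 8 * 32 + 32
output = dense_params(hidden_units, 1)            # 32 * 1 + 1
total = hidden + output

print(hidden, output, total)
```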

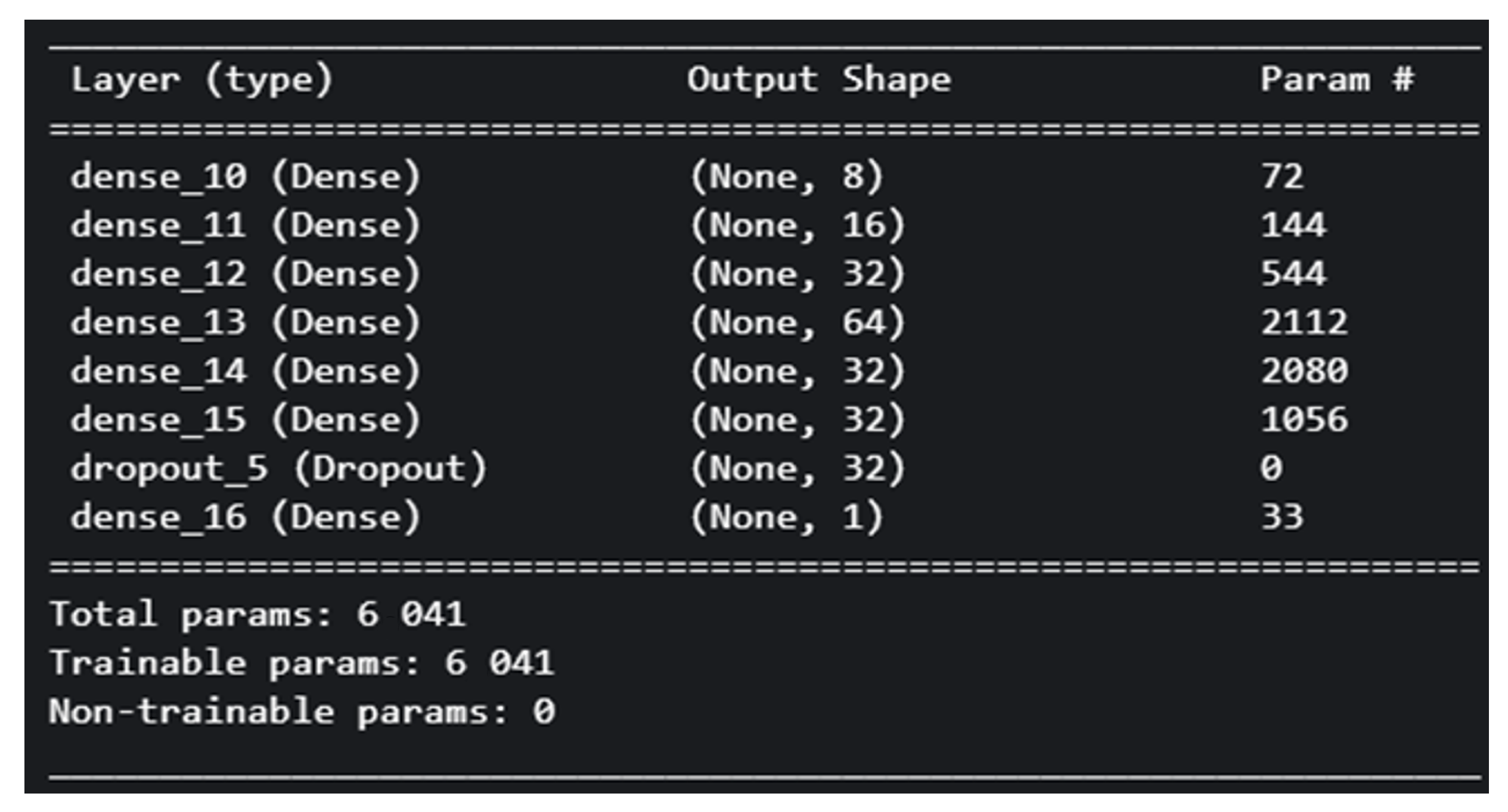

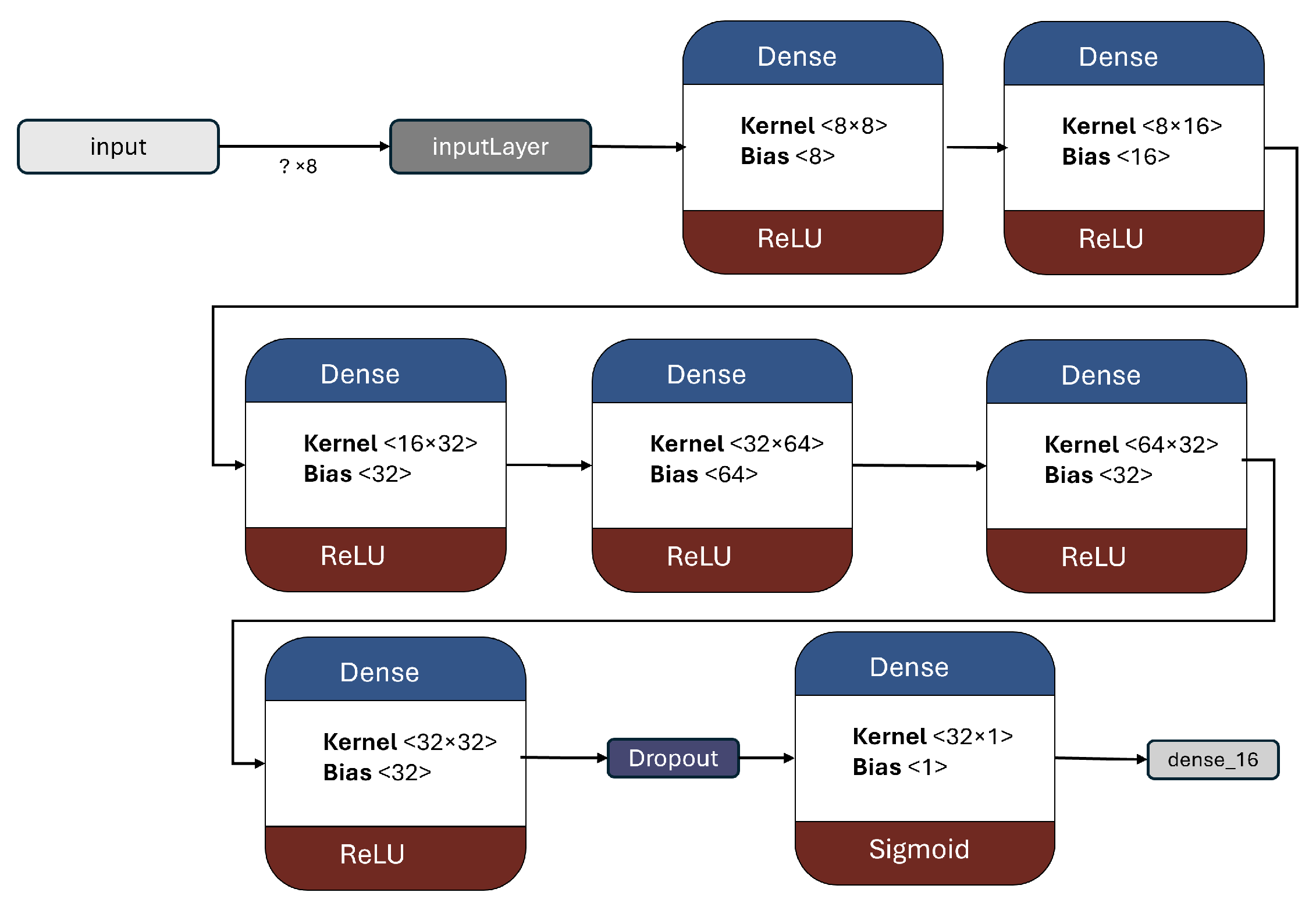

5.1.2. F-2 Model

This model is slightly more complex, featuring 7 Dense layers with a greater number of nodes and 6041 trainable parameters. Figure 9 shows the architecture of the model and summarizes its key components.

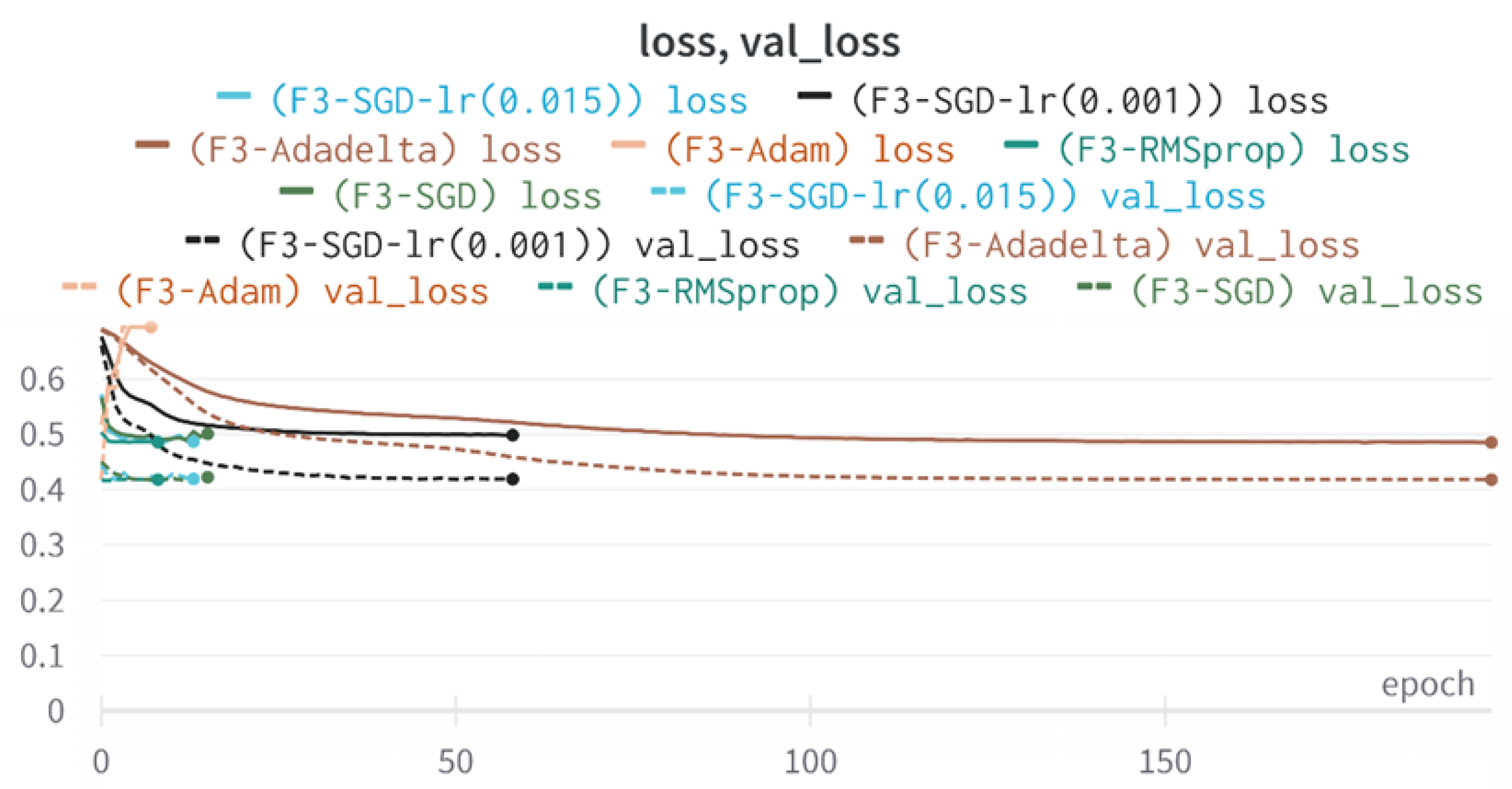

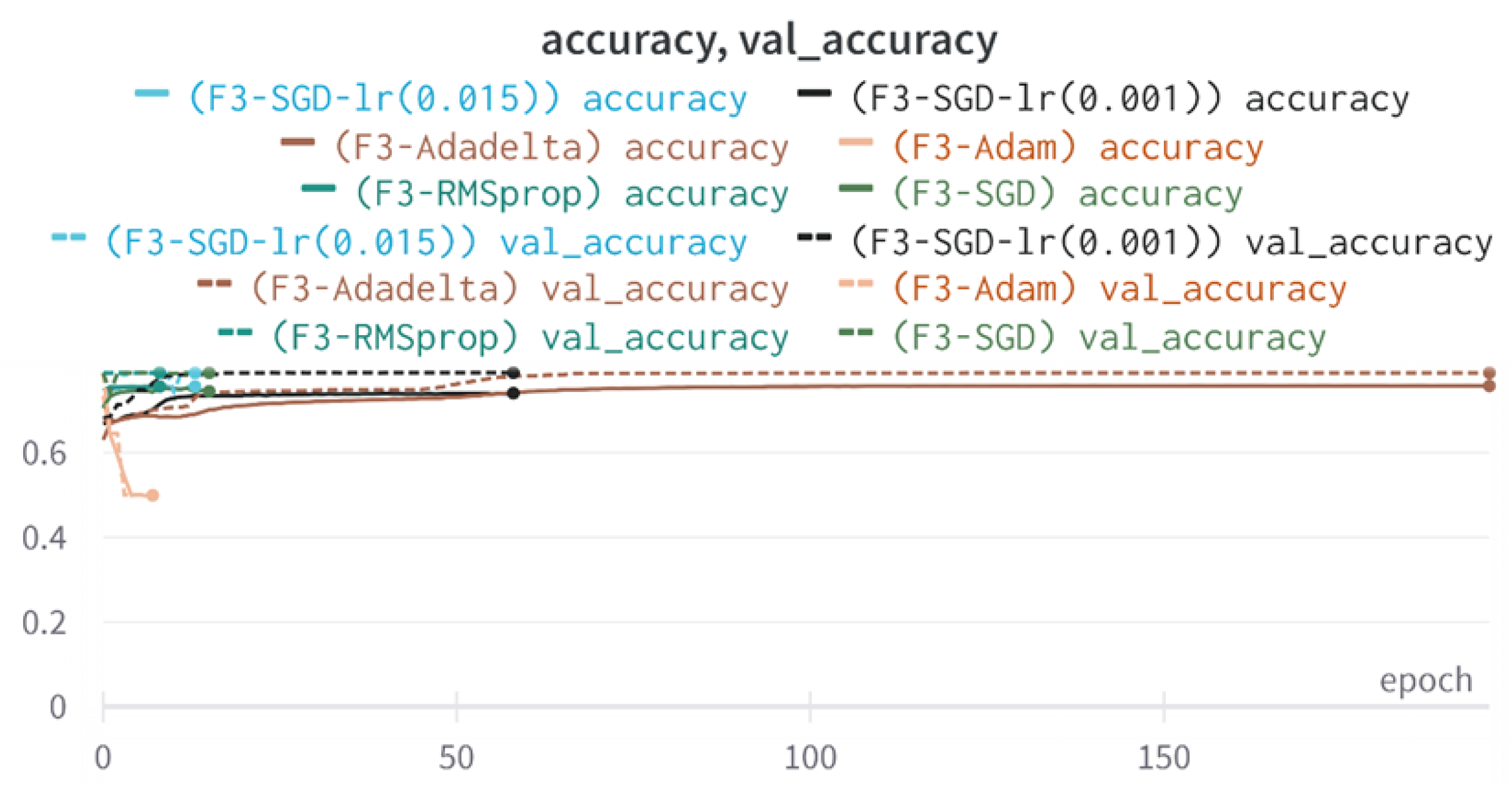

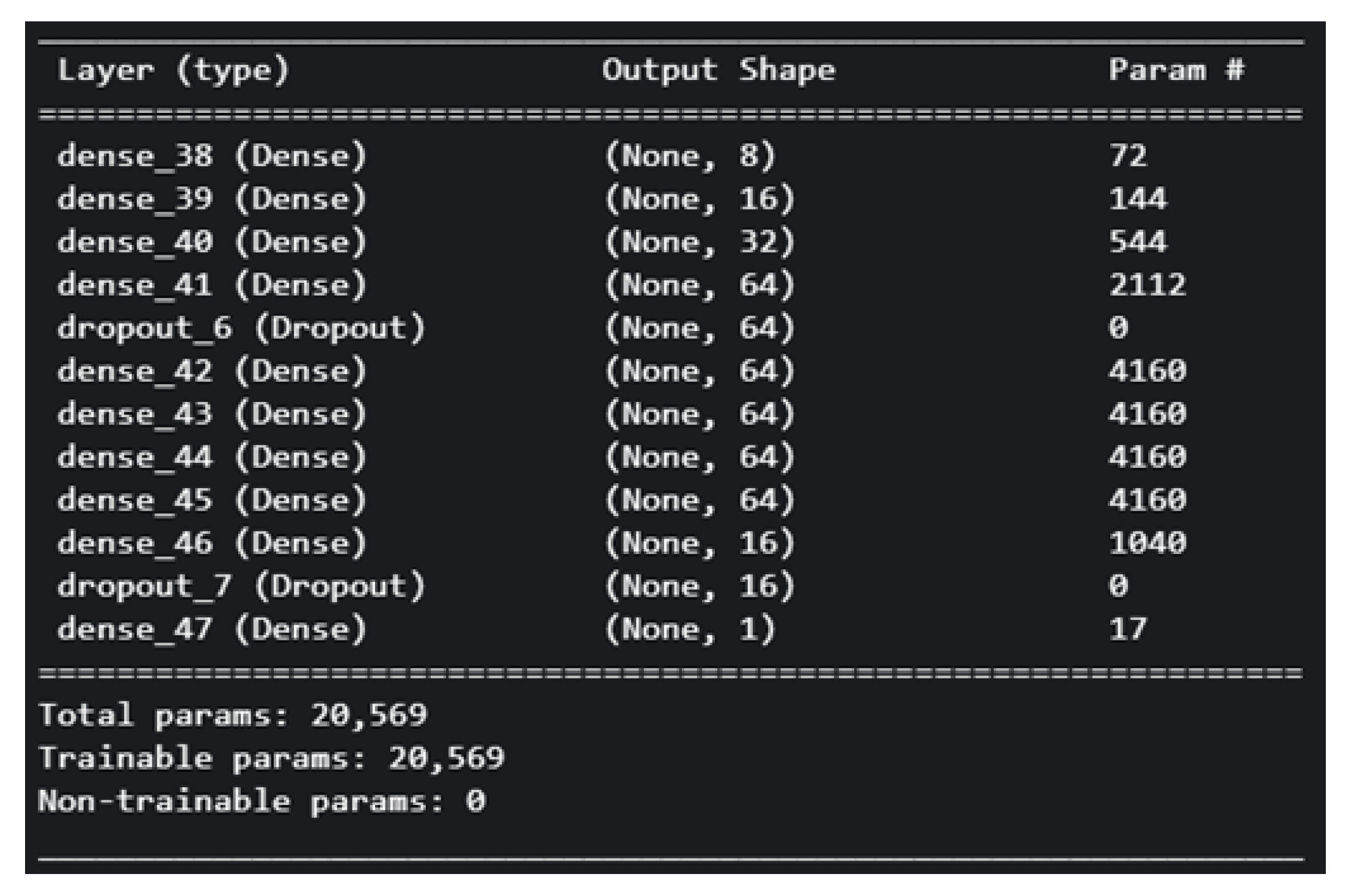

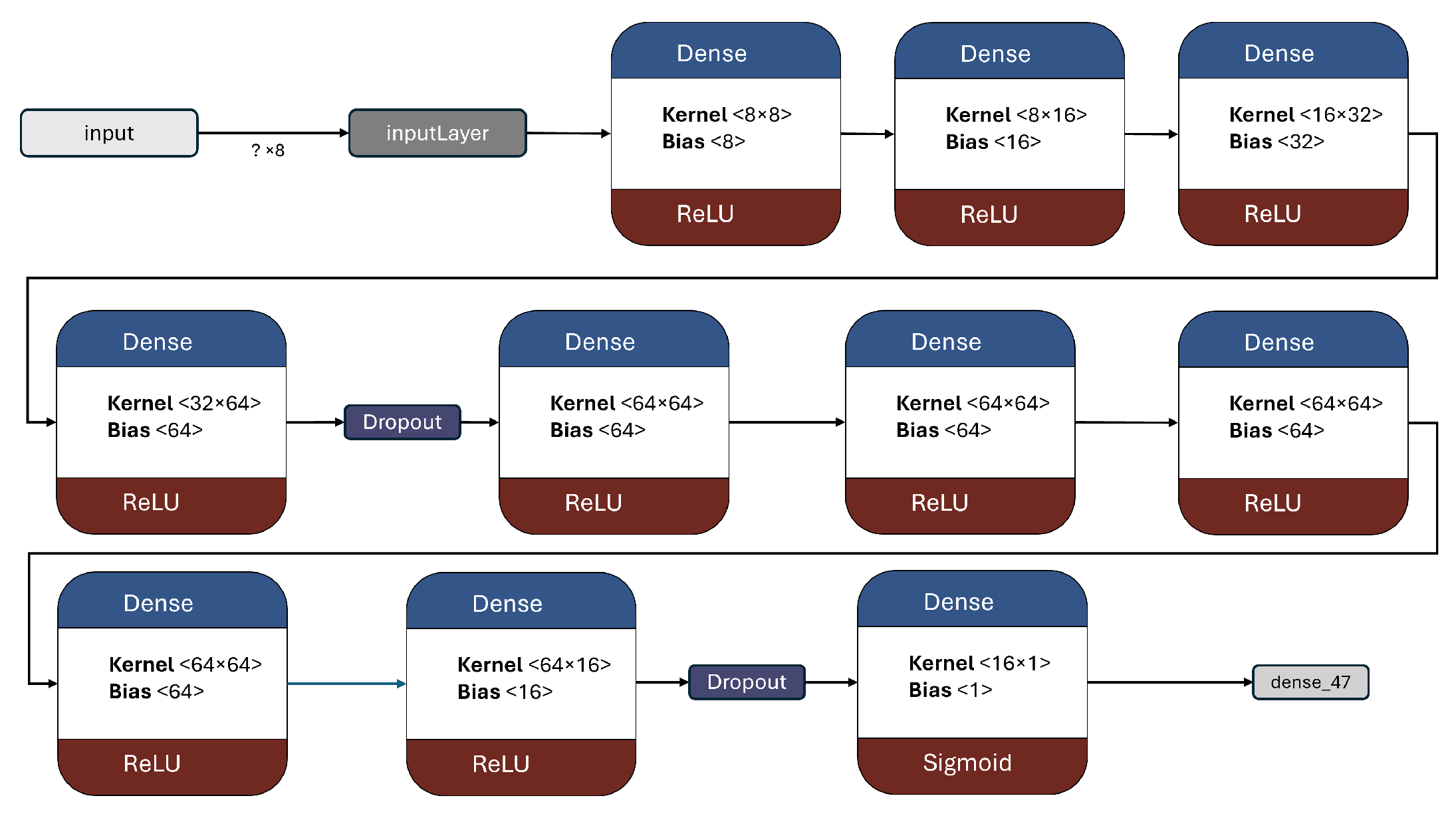

5.1.3. F-3 Model

With approximately 3.4 times as many parameters as F-2, this model has a total of 20,569 trainable parameters. Additional layers were incorporated, and the dropout rate was slightly reduced. Figure 10 shows the architecture of the model.

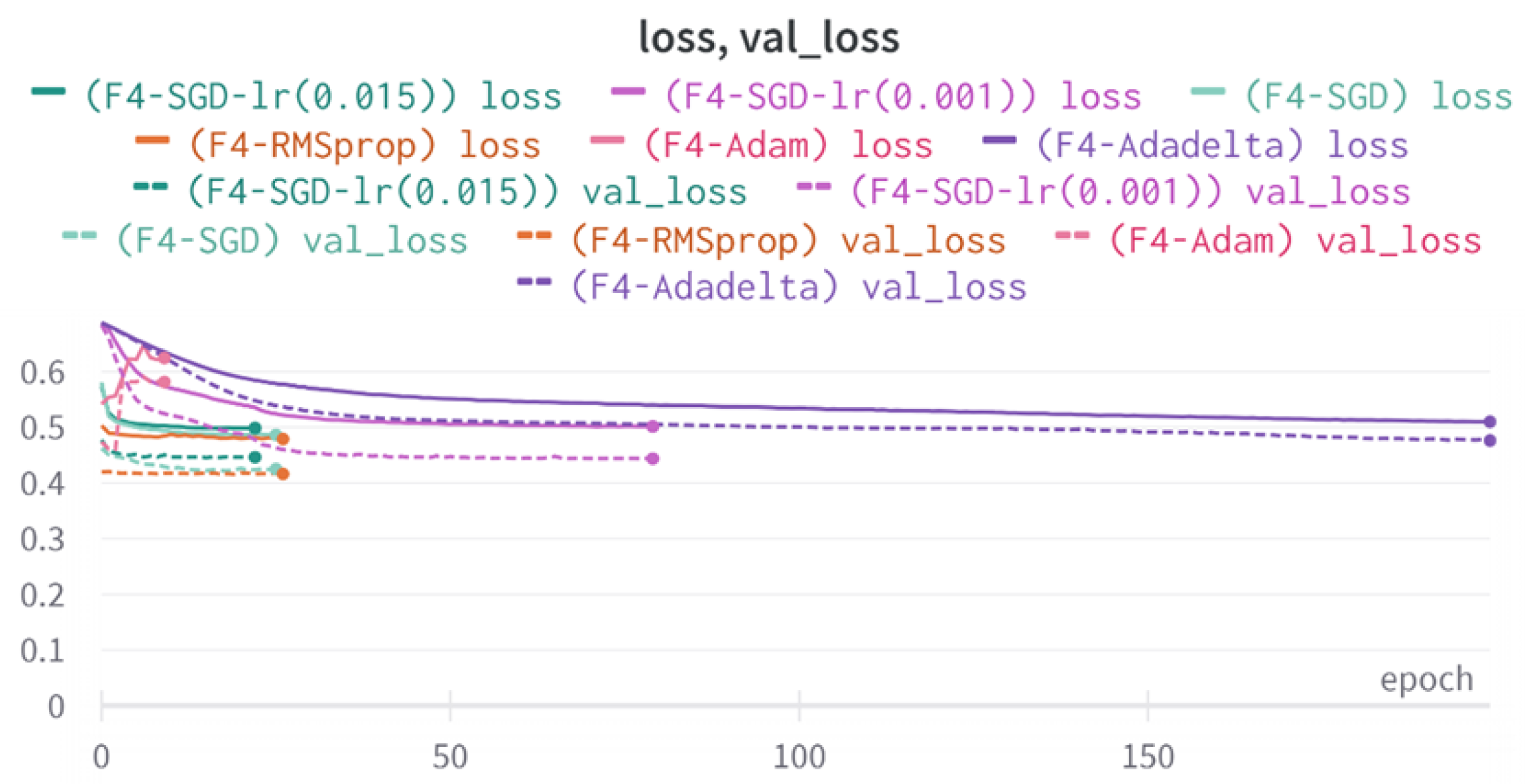

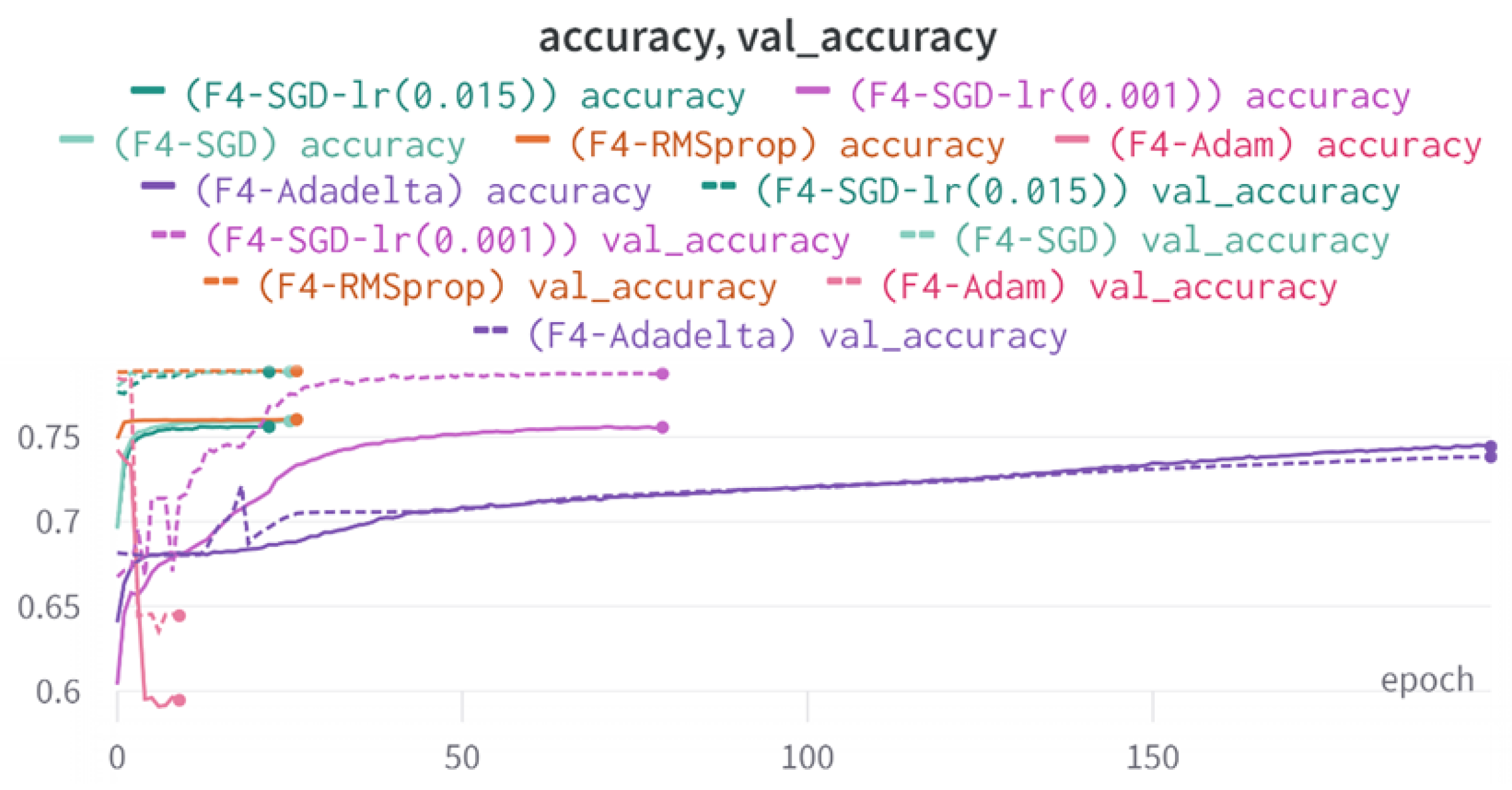

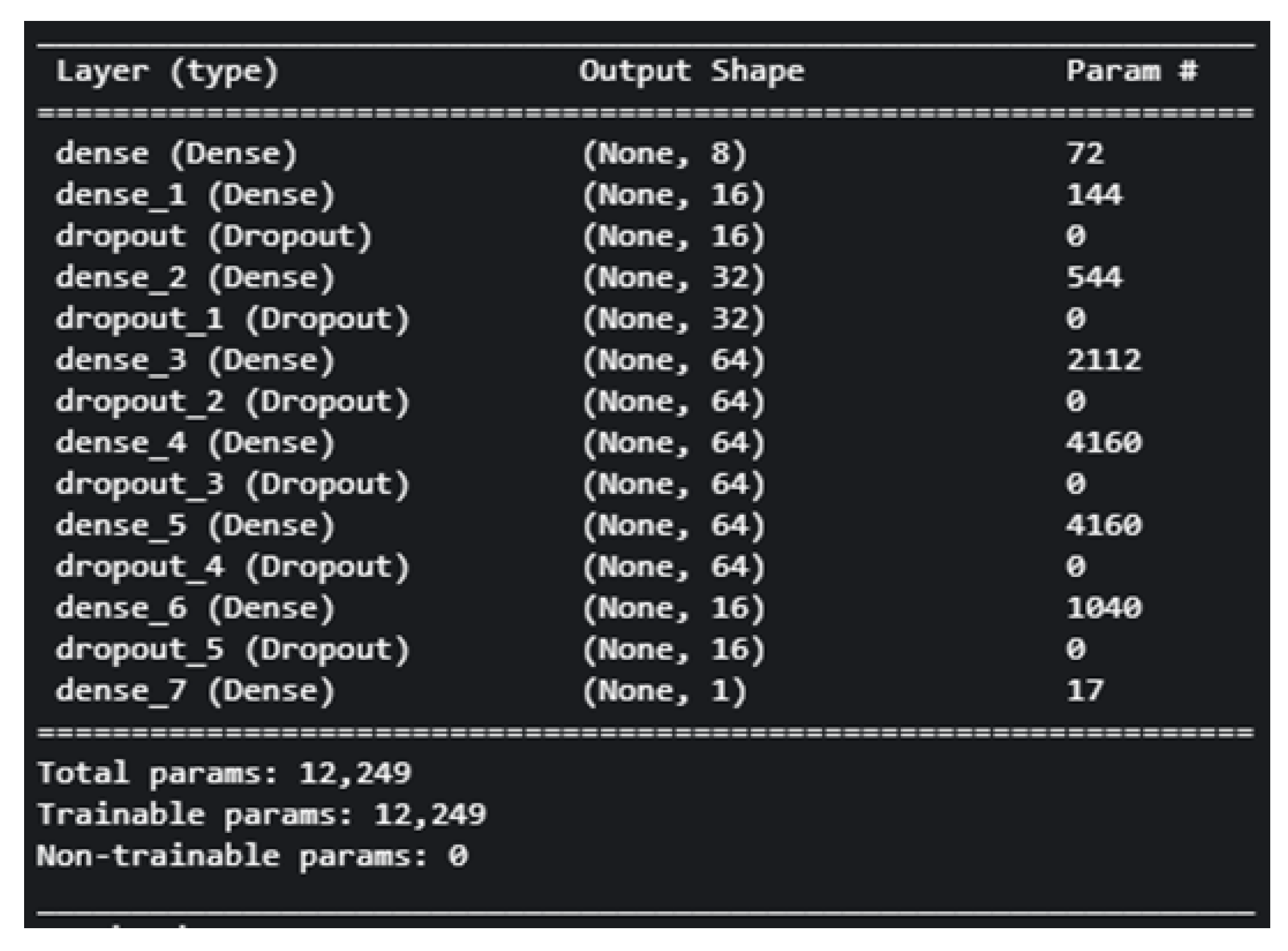

5.1.4. F-4 Model

In order to enhance the stability of loss and potentially improve performance, this model introduced additional dropout layers. This approach aimed to assess whether a reduced number of parameters could still yield favorable outcomes. By incorporating more dropout layers, the model aimed to mitigate overfitting and promote generalization.

Figure 11 shows the model architecture and characteristics.

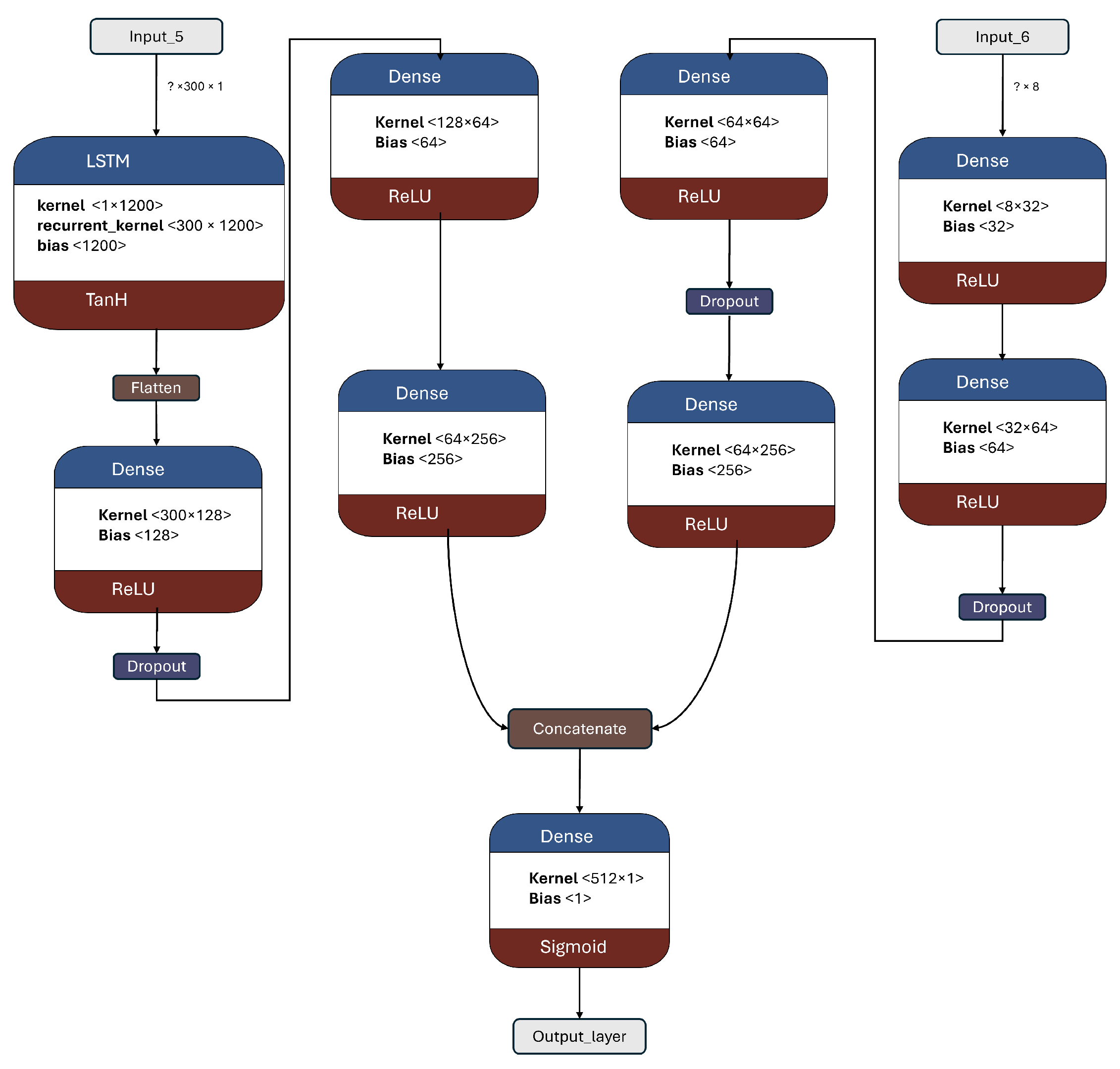

5.1.5. 2B1 Model

This model represents a significant advancement in this work, as it incorporates both user metadata and tweet content in a two-branched architecture. The utilization of LSTM in this model allows for the analysis of contextual relationships within the textual representation of tweets. LSTM has demonstrated its effectiveness in various applications, making it a suitable choice for this task.

During experimentation, different types of layers were tested on the tweet representation. However, their impact was either negligible or they performed significantly worse than LSTM, so they are not reported in this work. The focus remained on leveraging LSTM to extract meaningful information from the tweet text, thereby enhancing the overall performance of the model. While newer architectures like transformers and GNNs achieve high performance, they require substantial computational resources, complex infrastructure, and often external APIs for graph construction, making them impractical for lightweight, real-time deployment. In contrast, LSTM-based models offer a compelling balance, as they can effectively capture sequential text patterns with minimal overhead, enabling efficient training and deployment [26,29,56].

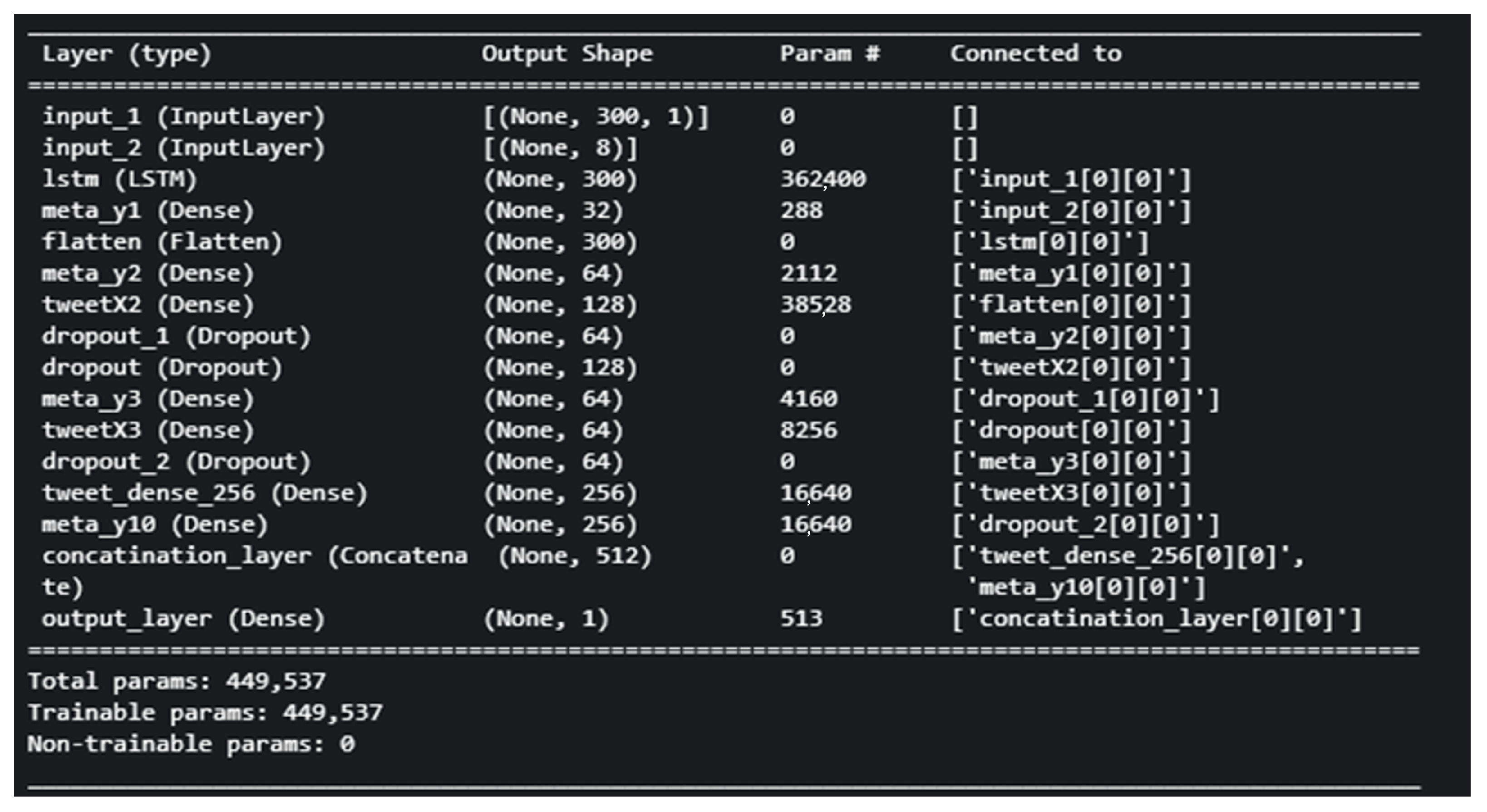

The architecture of the 2B1 model is presented in

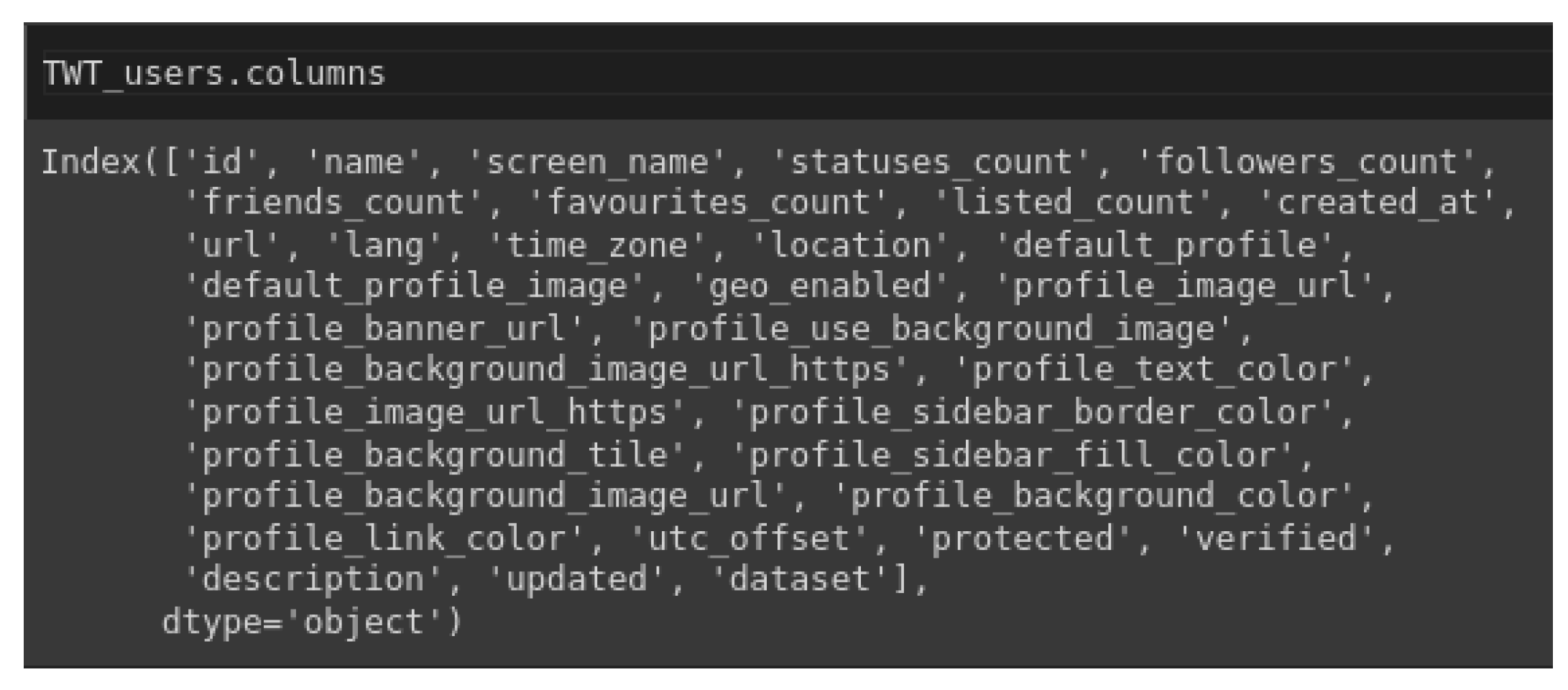

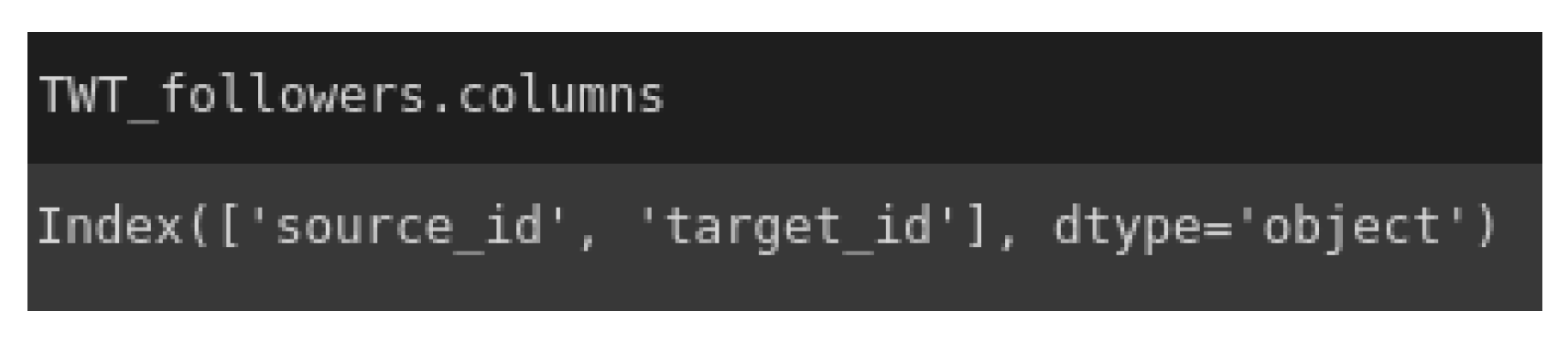

Figure 12. This model is the first two-branched model introduced in this work, with a total of 449,537 trainable parameters. The left branch takes the 300-dimensional tweet representation, which undergoes an LSTM layer [

52] followed by a flatten layer to ensure a one-dimensional array as the next input. Subsequently, several dense layers and dropouts are applied. On the right branch, which receives the eight metadata features as input and passes them through a sequence of four dense layers with dropouts. The two branches are then merged using a concatenation layer, resulting in a one-dimensional output of size 512. Finally, the output layer produces the final prediction.

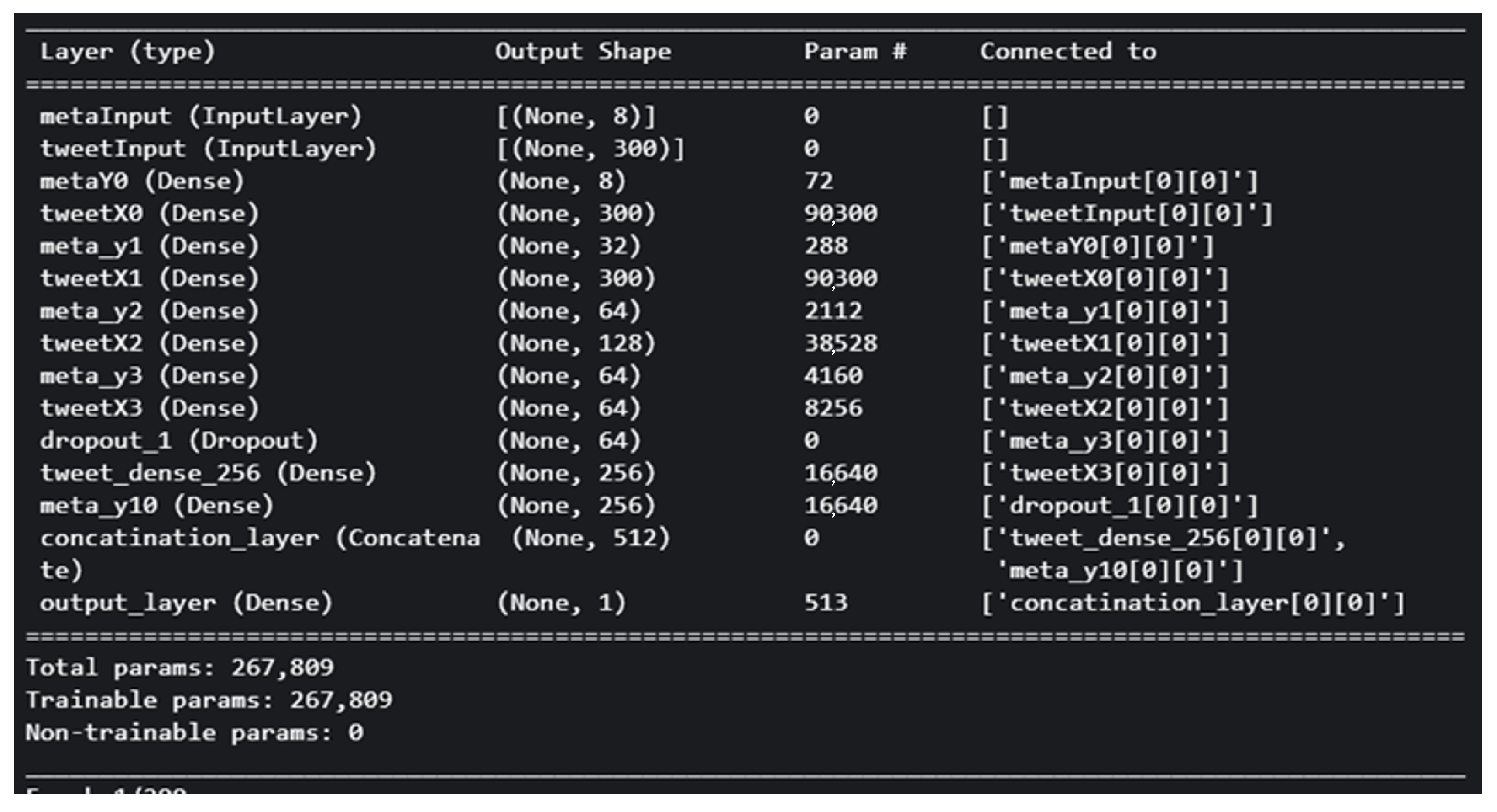

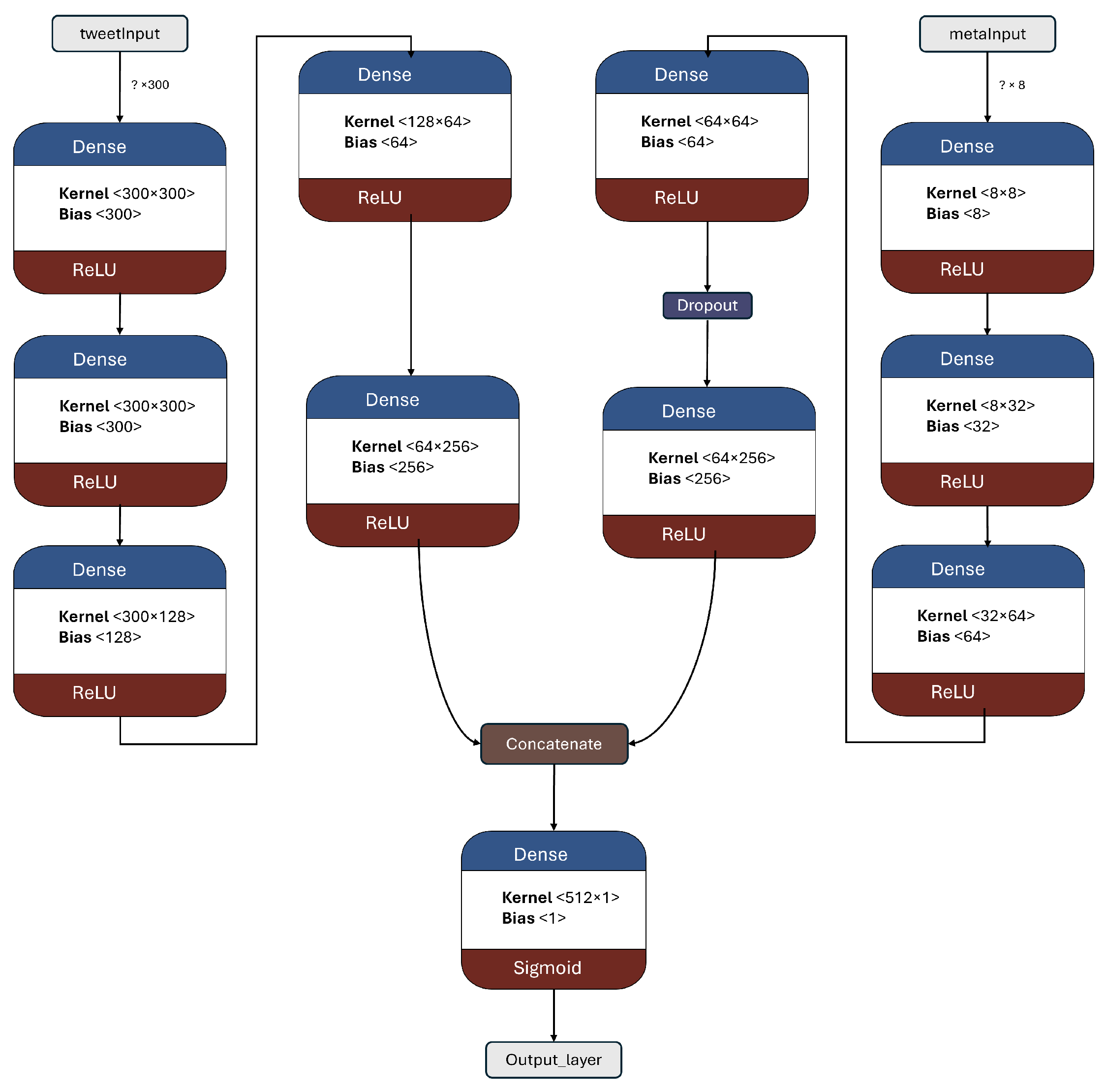

5.1.6. 2B2 Model

In this model, Dense layers are utilized in both branches to explore the possibility of replacing LSTM with a fully connected layer. The objective is to determine whether the model can still capture the main features and relationships within the text representation, or if its performance will deteriorate and become similar to random guessing.

By substituting LSTM with Dense layers, the total number of trainable parameters is reduced to 267,809. This modification also decreases the training time and memory requirements. LSTM is known to be computationally intensive and is often recommended to be used with a GPU and high RAM for efficient computation. The model architecture and summary are shown in Figure 13. This visualization highlights the changes made to the model. In the tweet text branch, instead of using an LSTM layer, five dense layers are employed. Additionally, an extra dense layer is added to the right branch.

5.1.7. 2B3 Model

This model shares the same architecture and specifications as the 2B1 model (Figure 12), with the exception that all dropout layers have been removed. In some computer vision tasks, it has been shown that certain regularization techniques like weight decay and dropout may not be necessary when sufficient data augmentation is applied, challenging the conventional belief of their significance [57]. In this model, the objective is to explore the impact of removing all dropout layers in this classification context. By eliminating dropout, the aim is to understand how it affects the model’s performance and generalization capabilities.

6. Results and Discussion

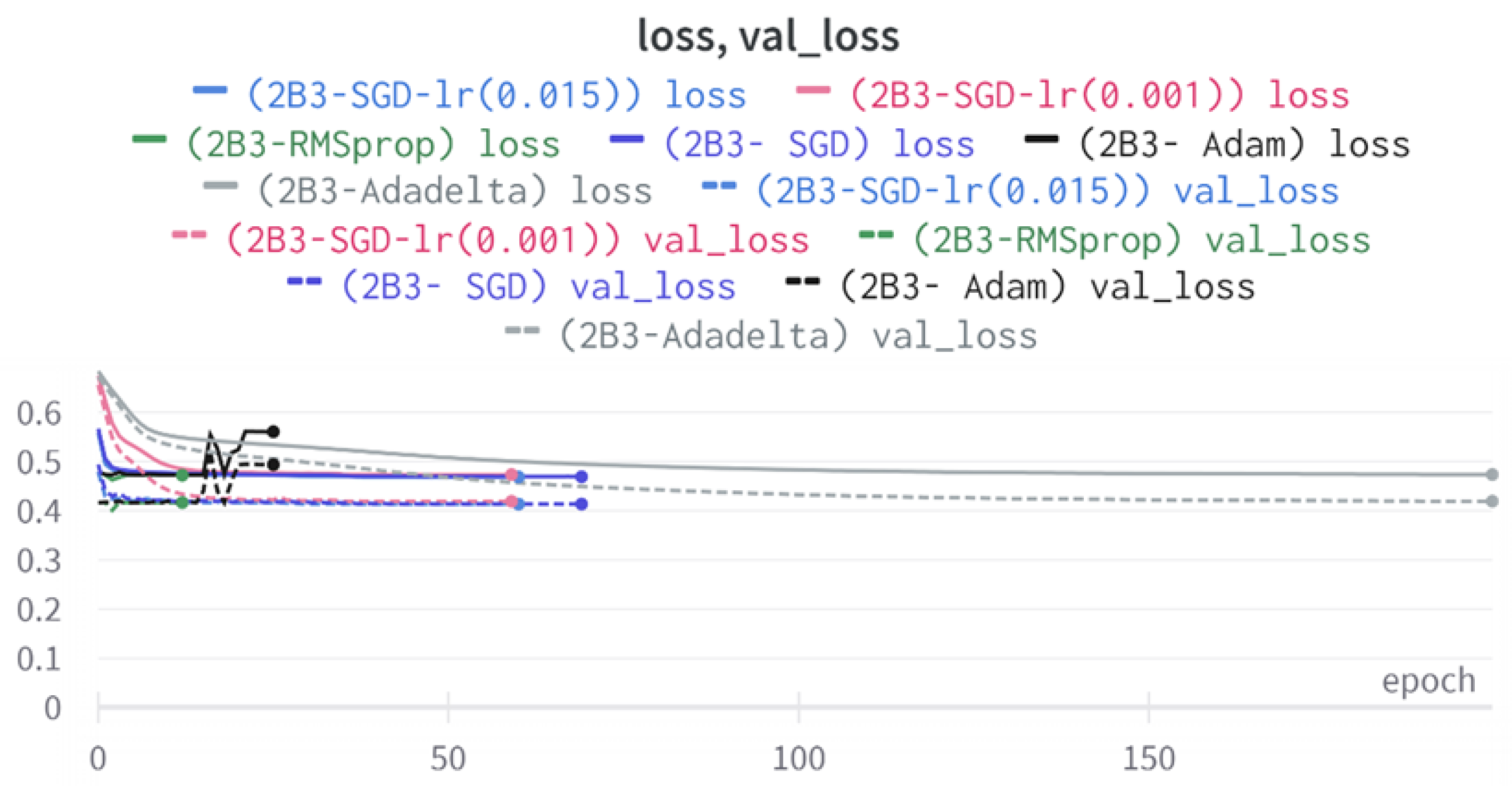

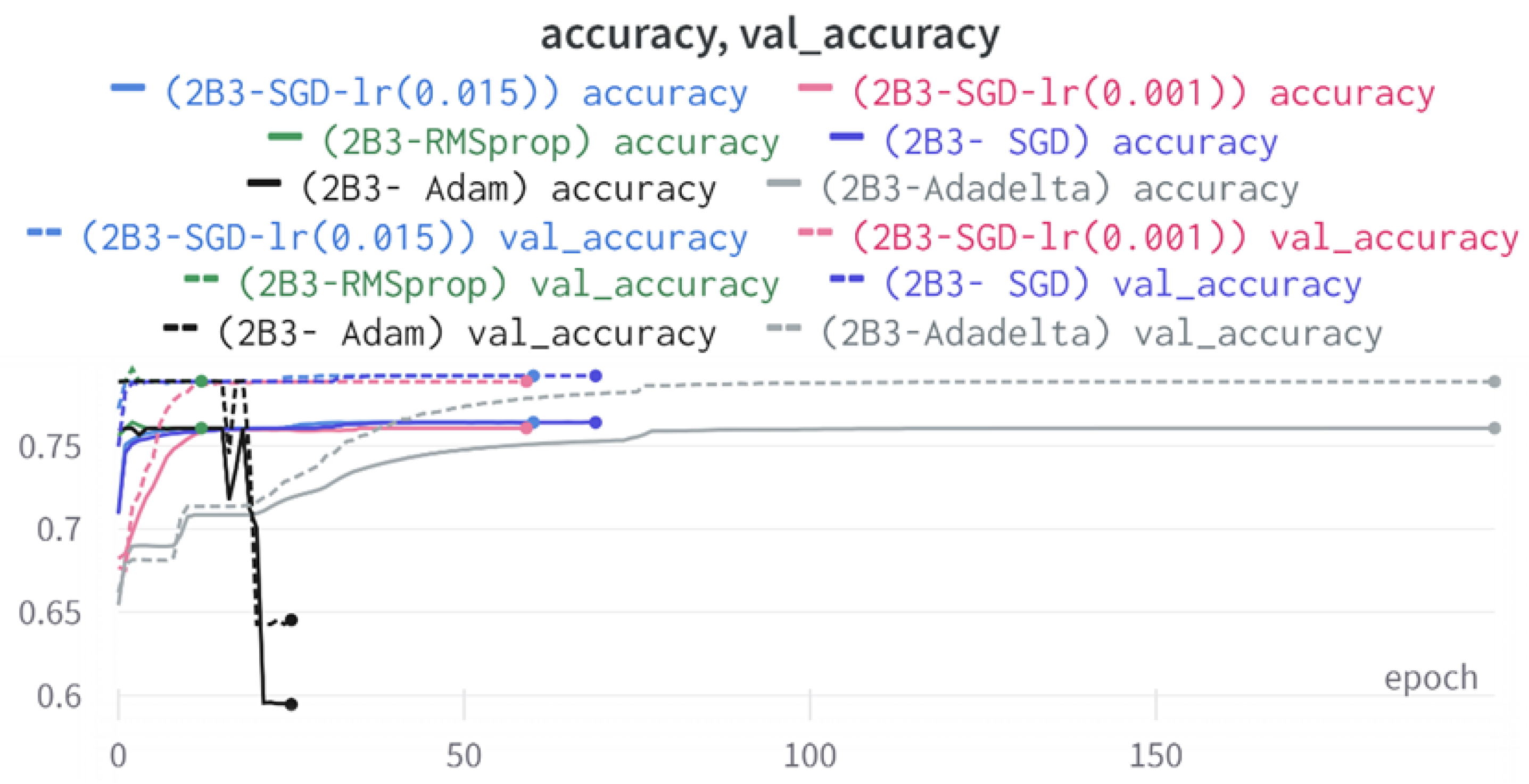

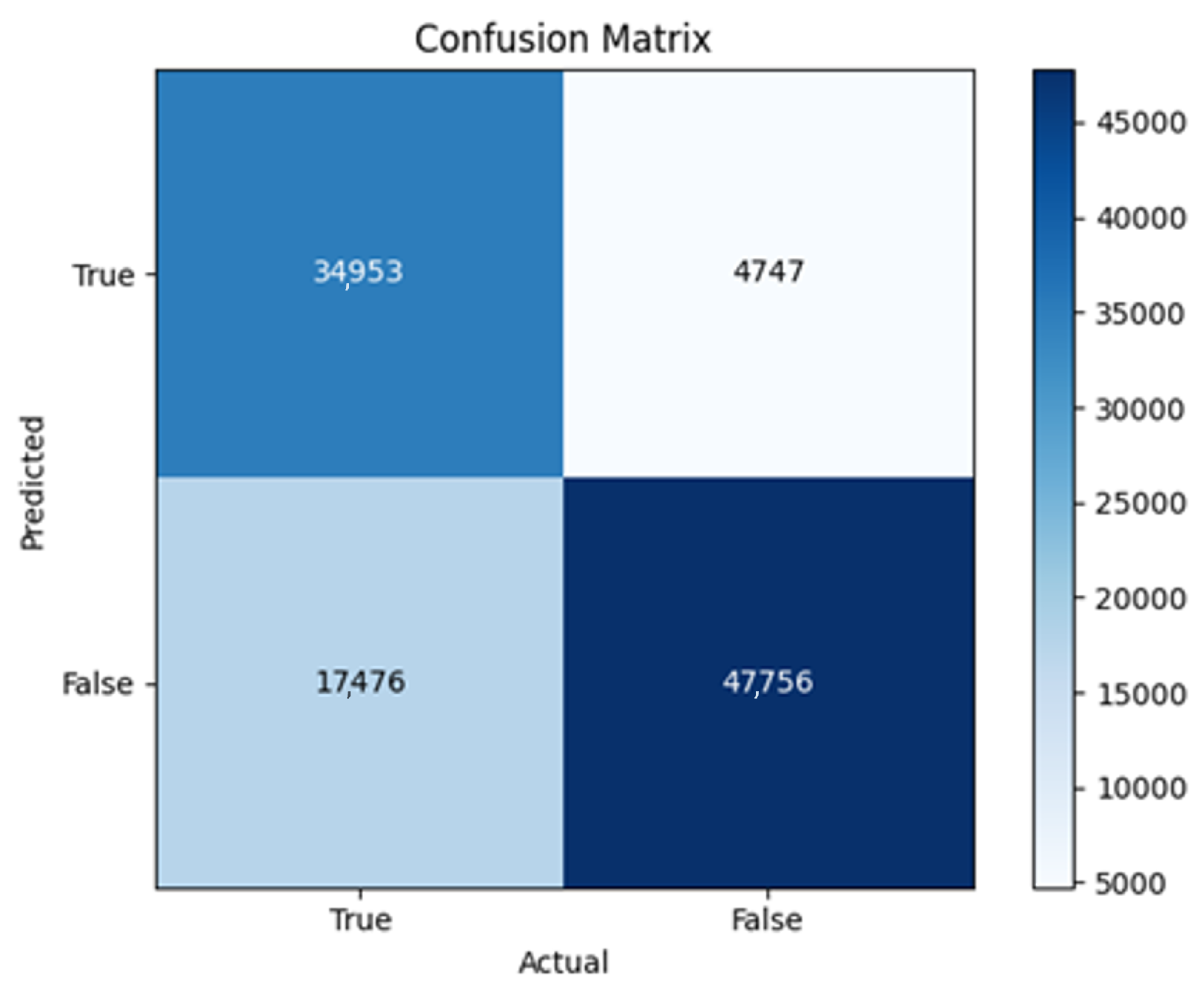

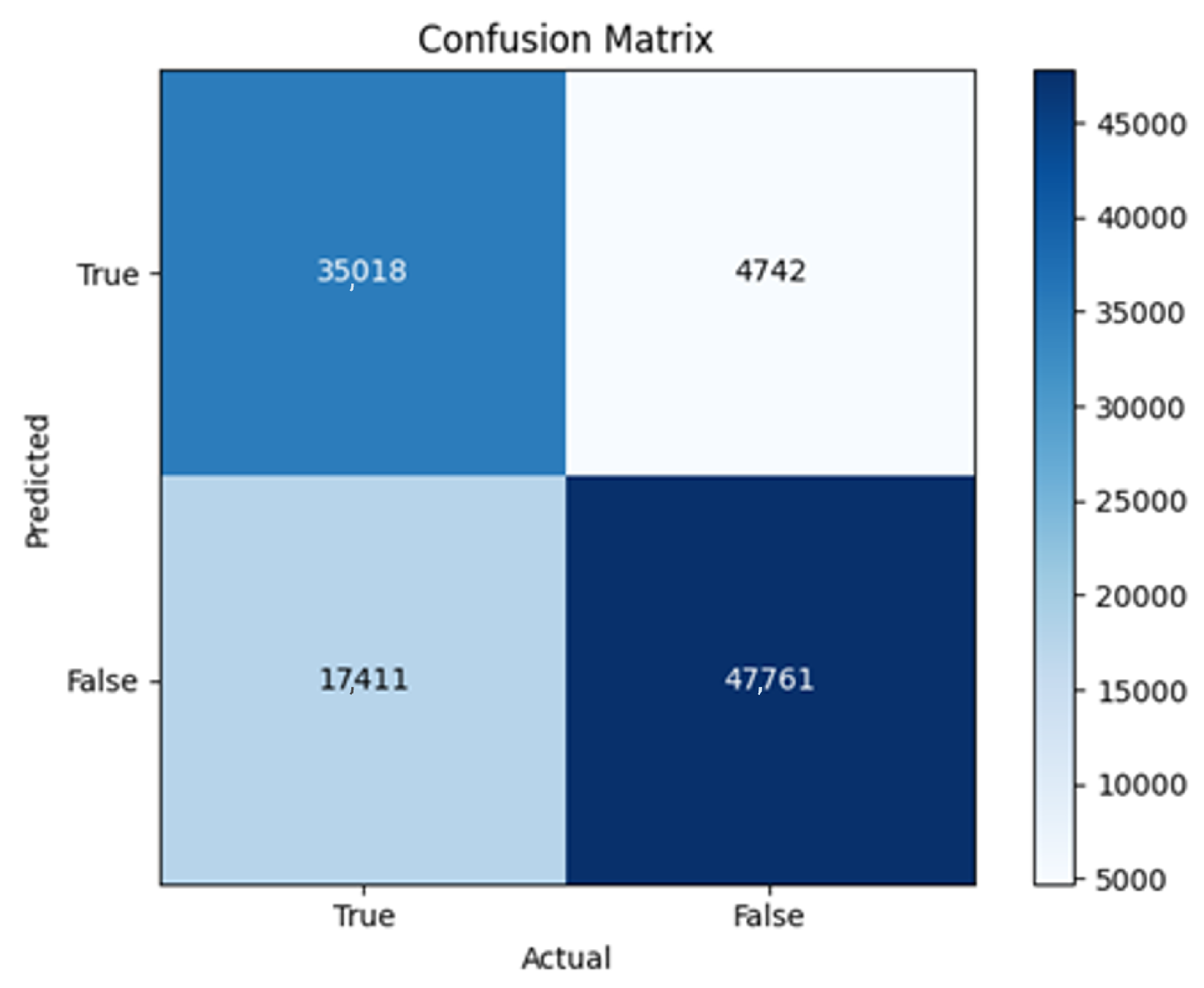

As mentioned earlier, models were monitored and saved on WandB and Google Colab Notebook [

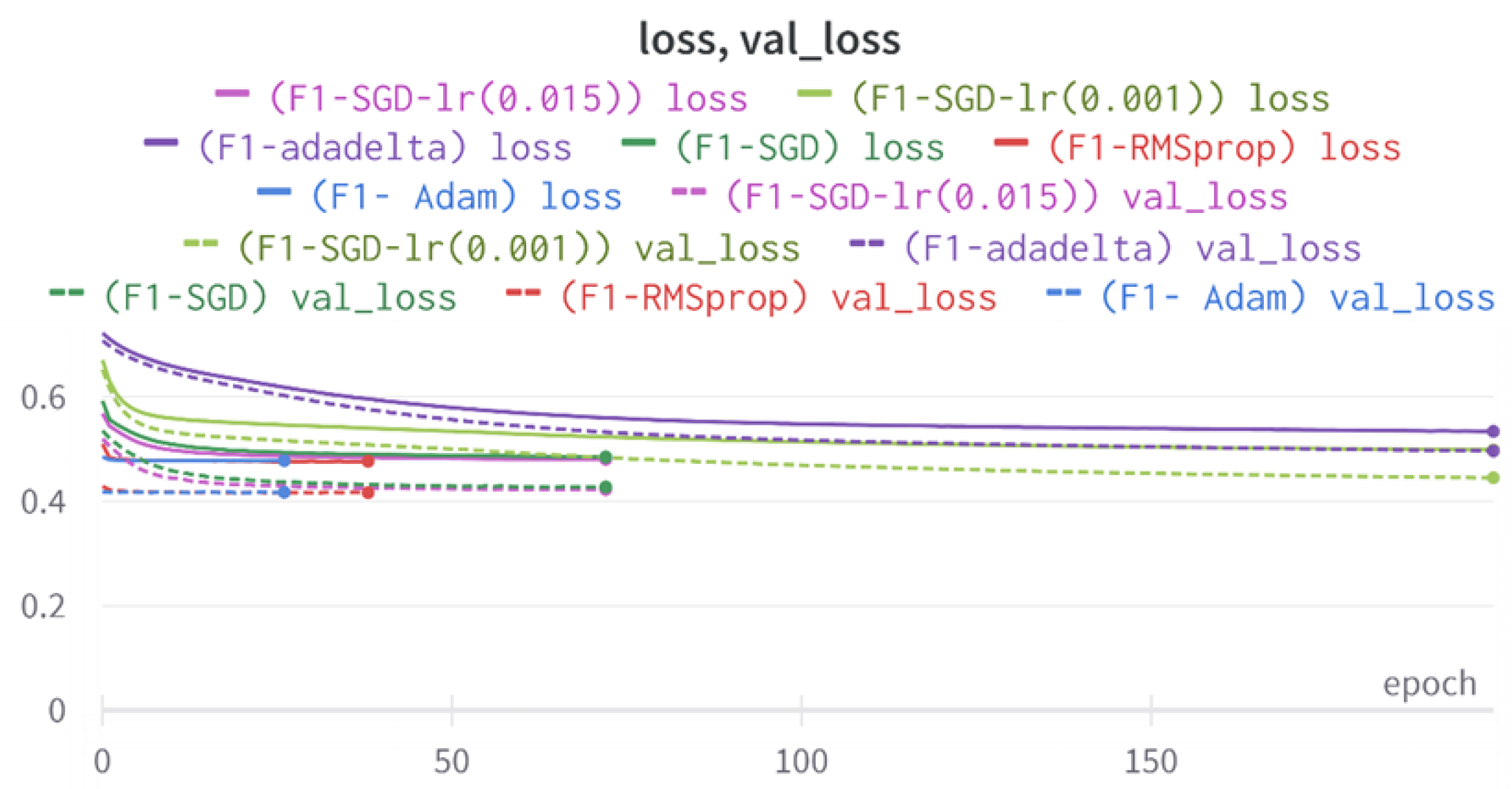

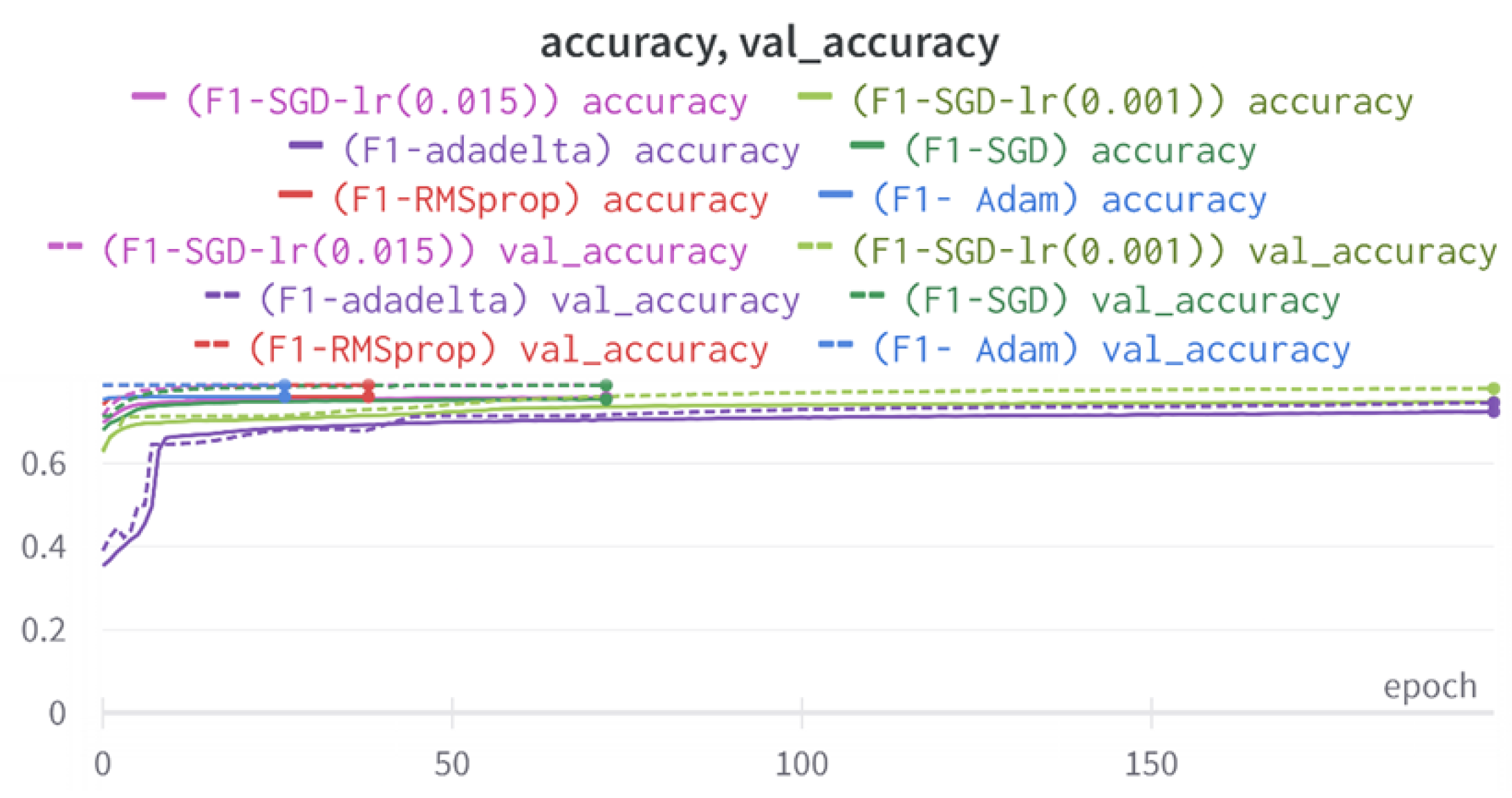

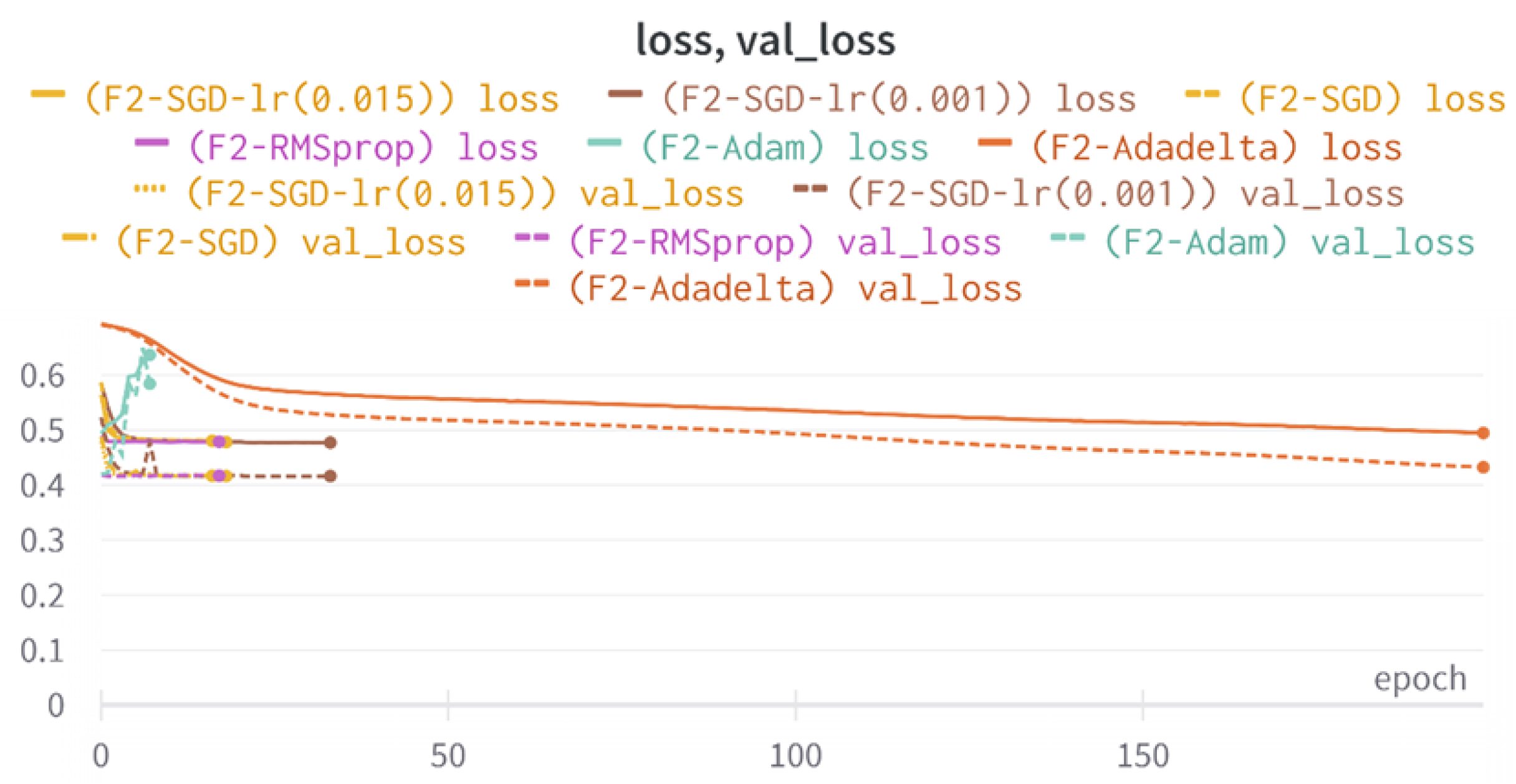

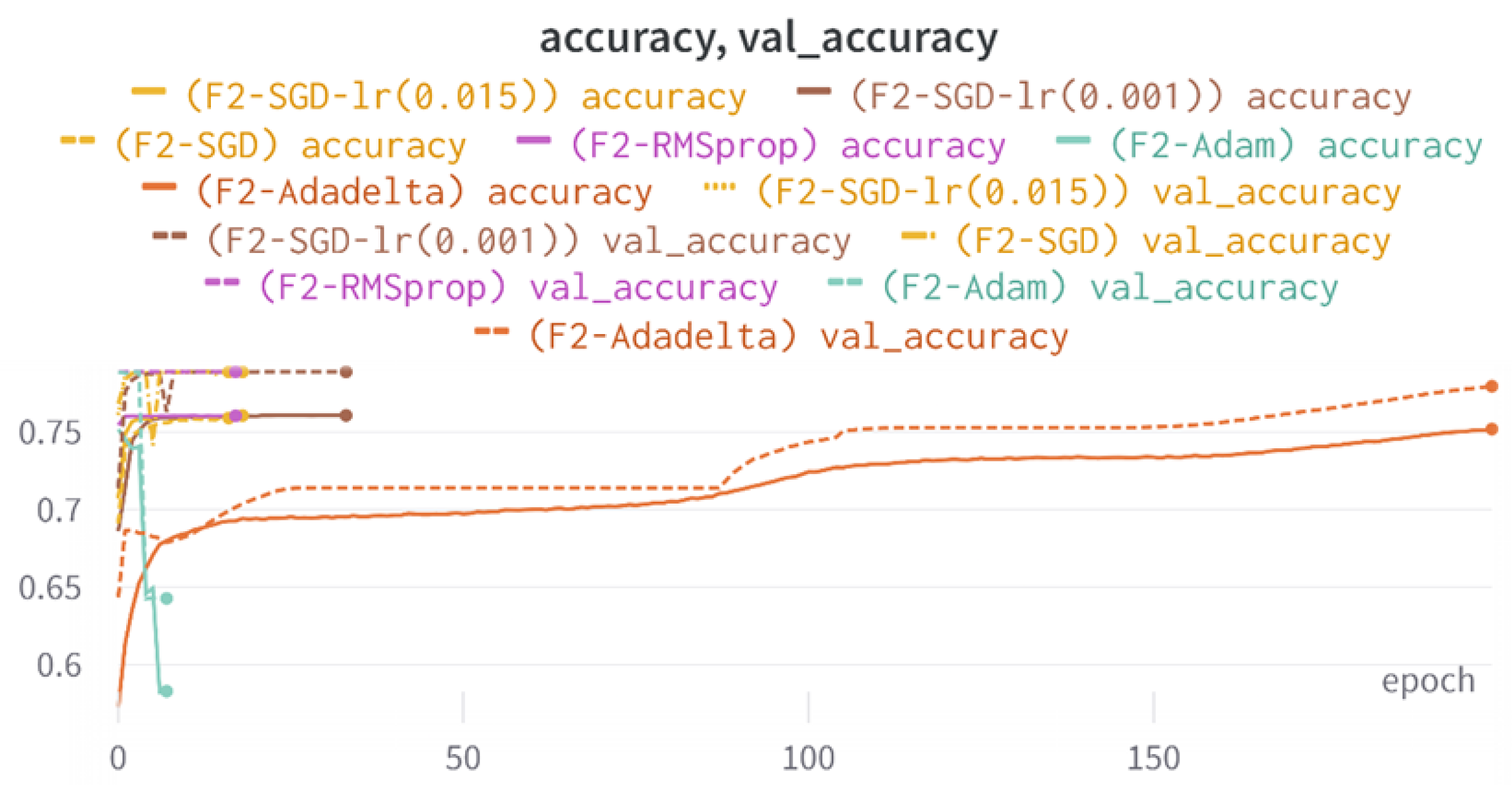

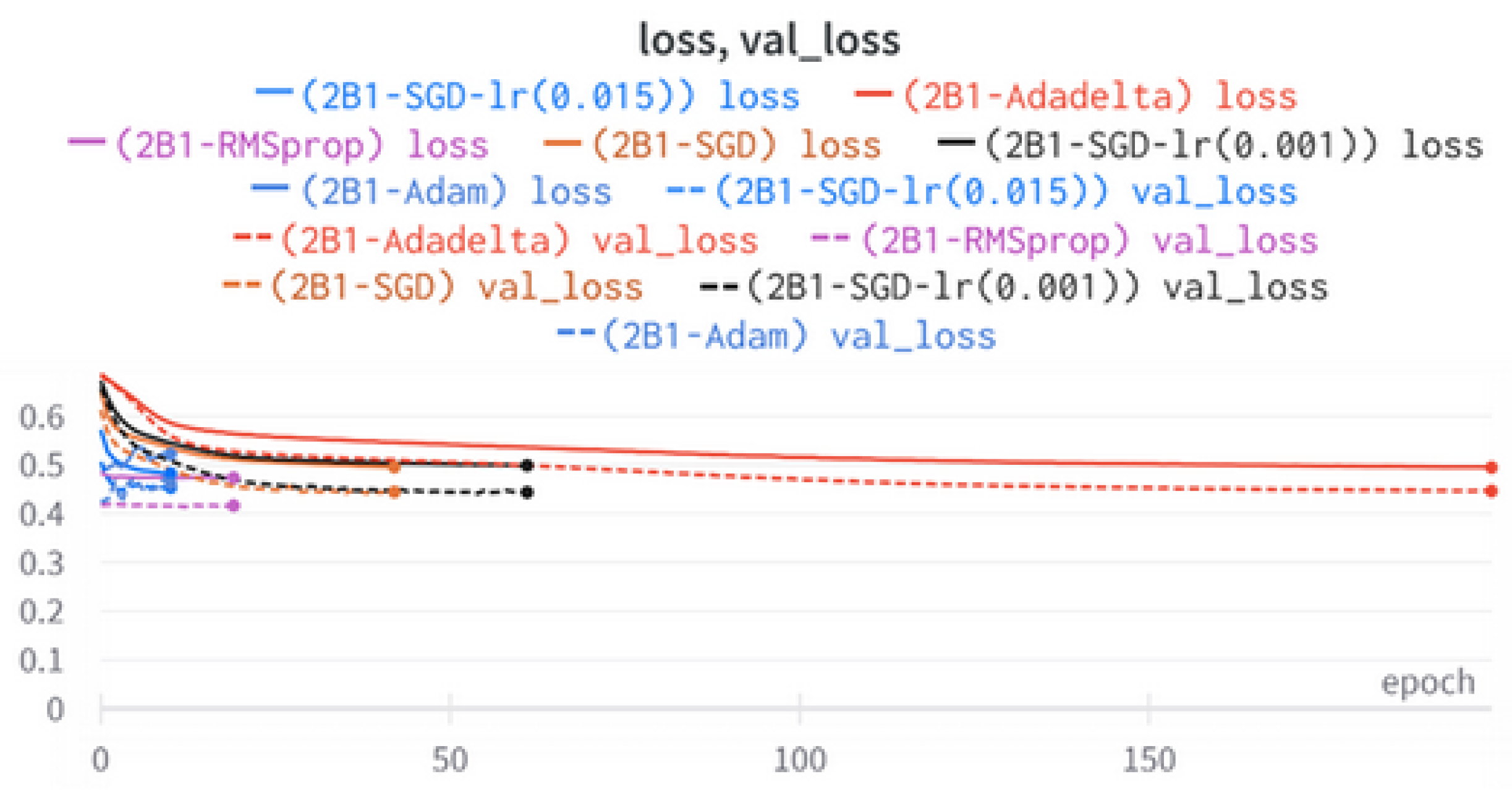

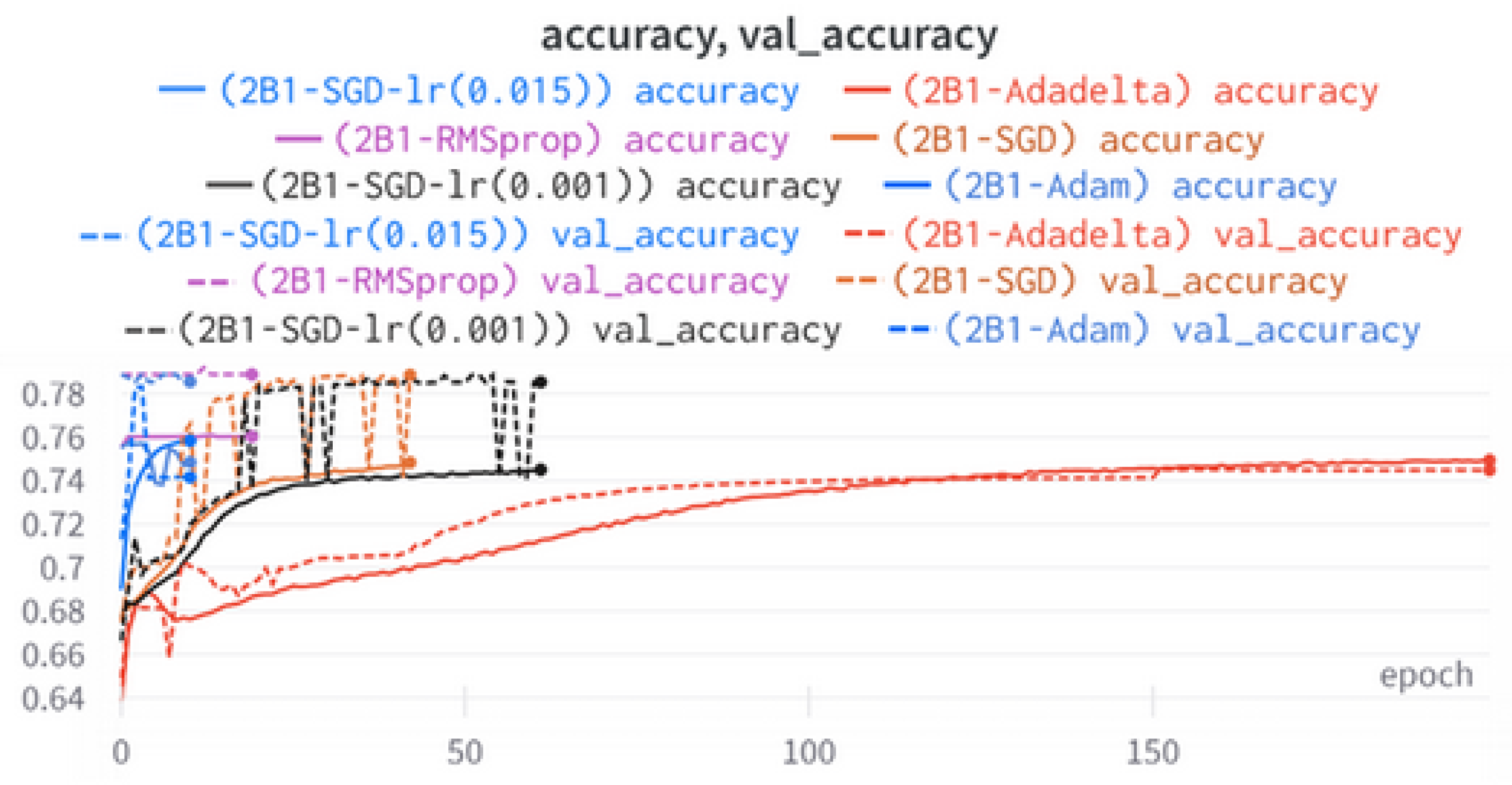

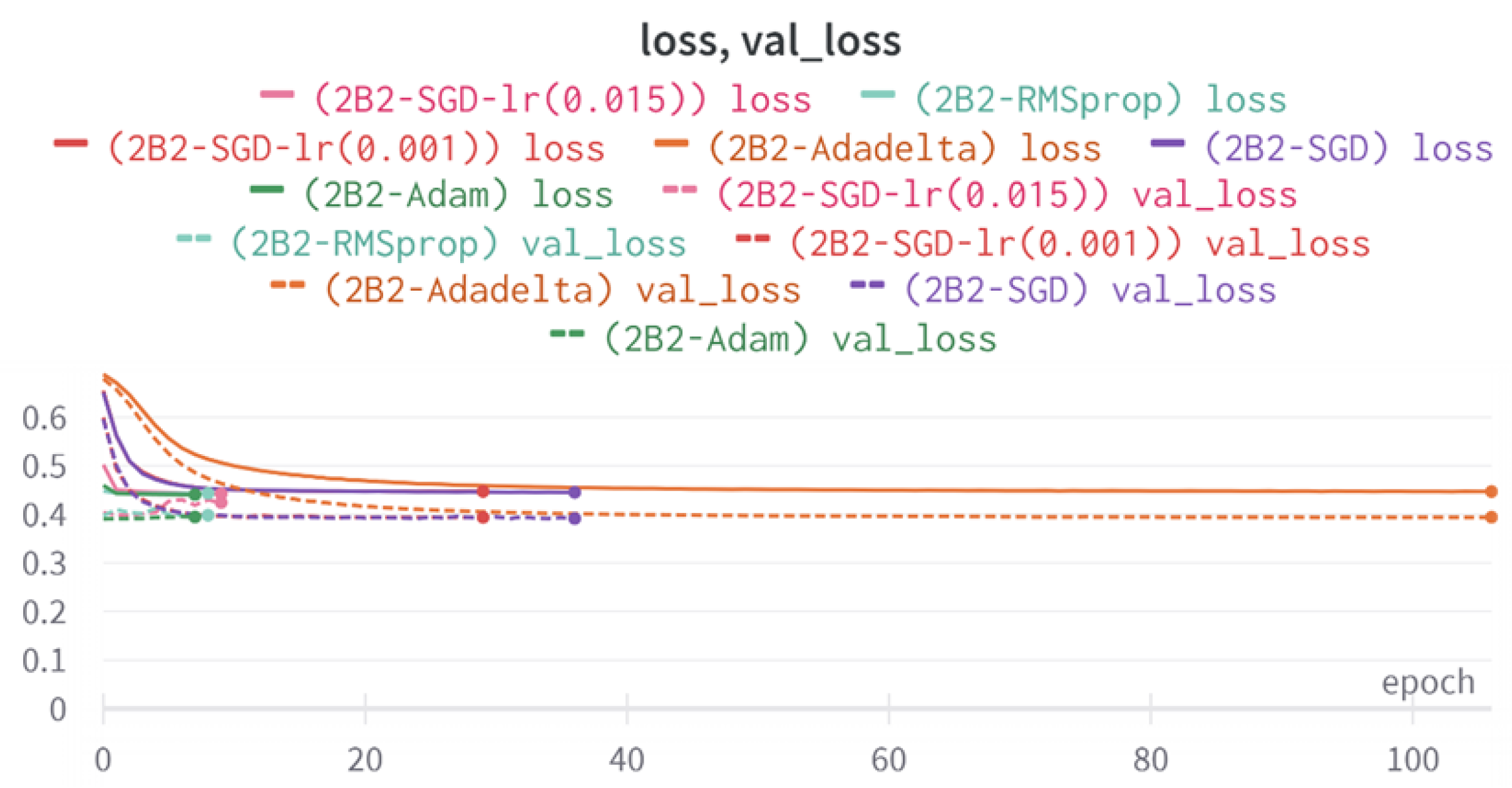

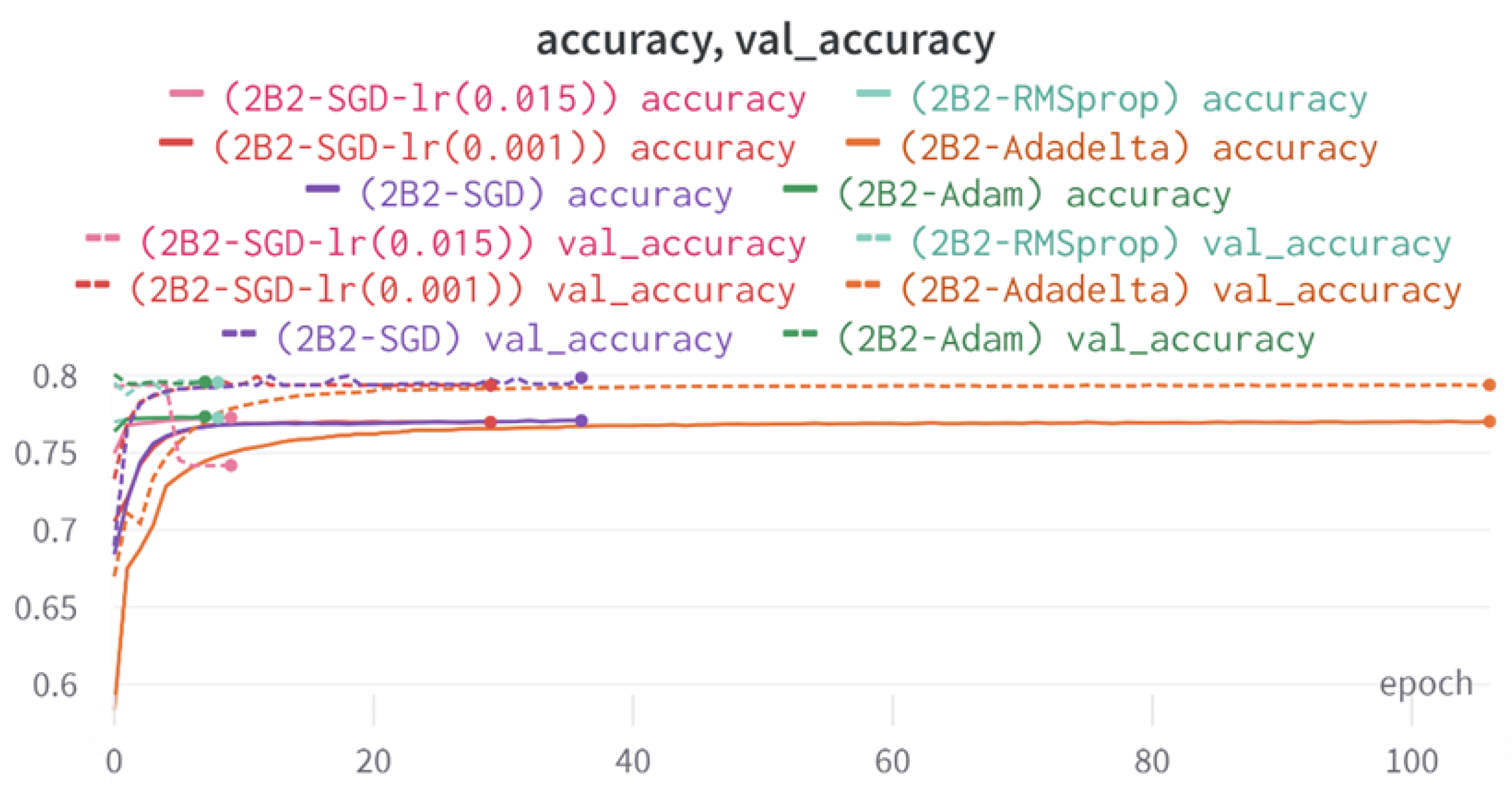

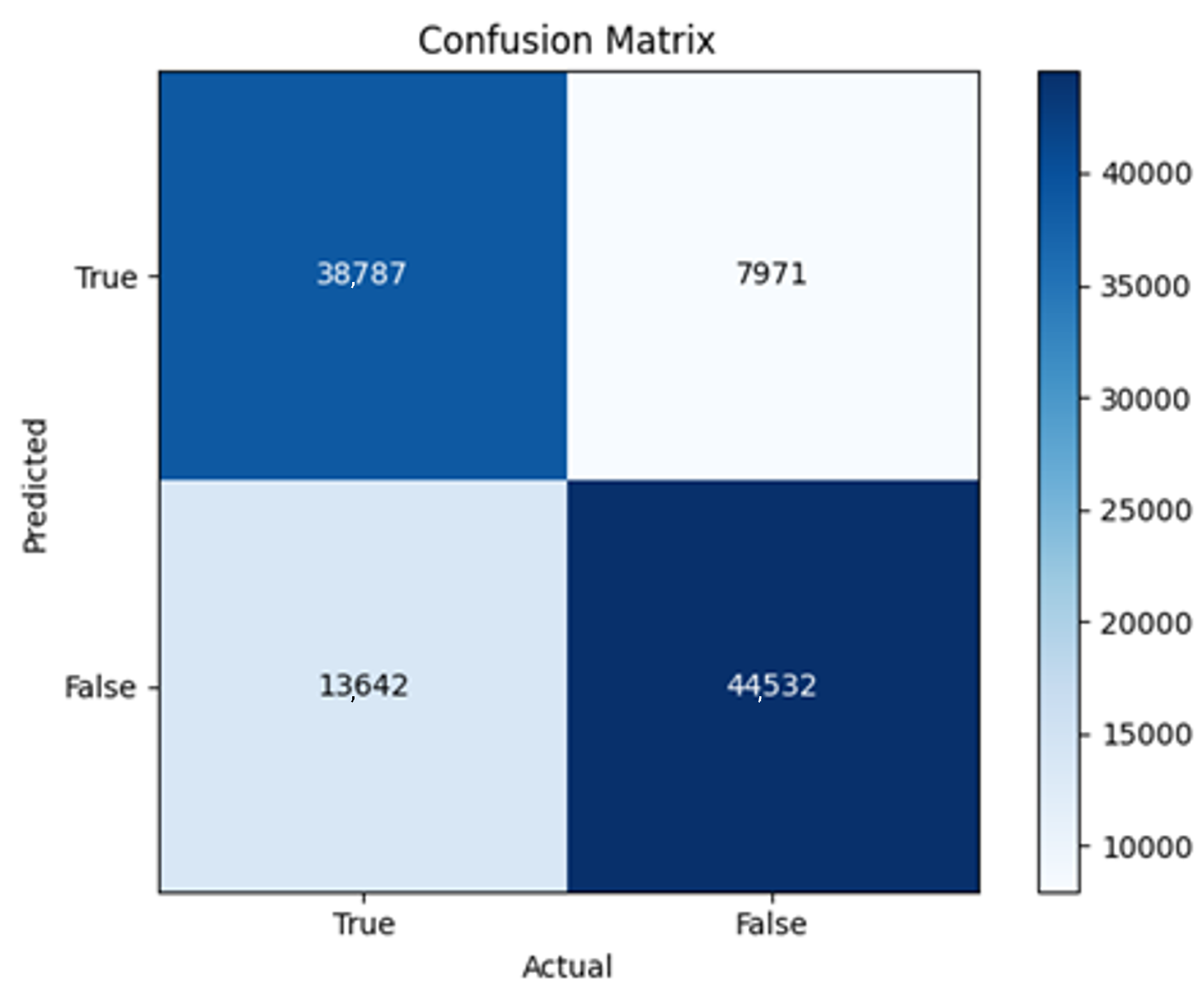

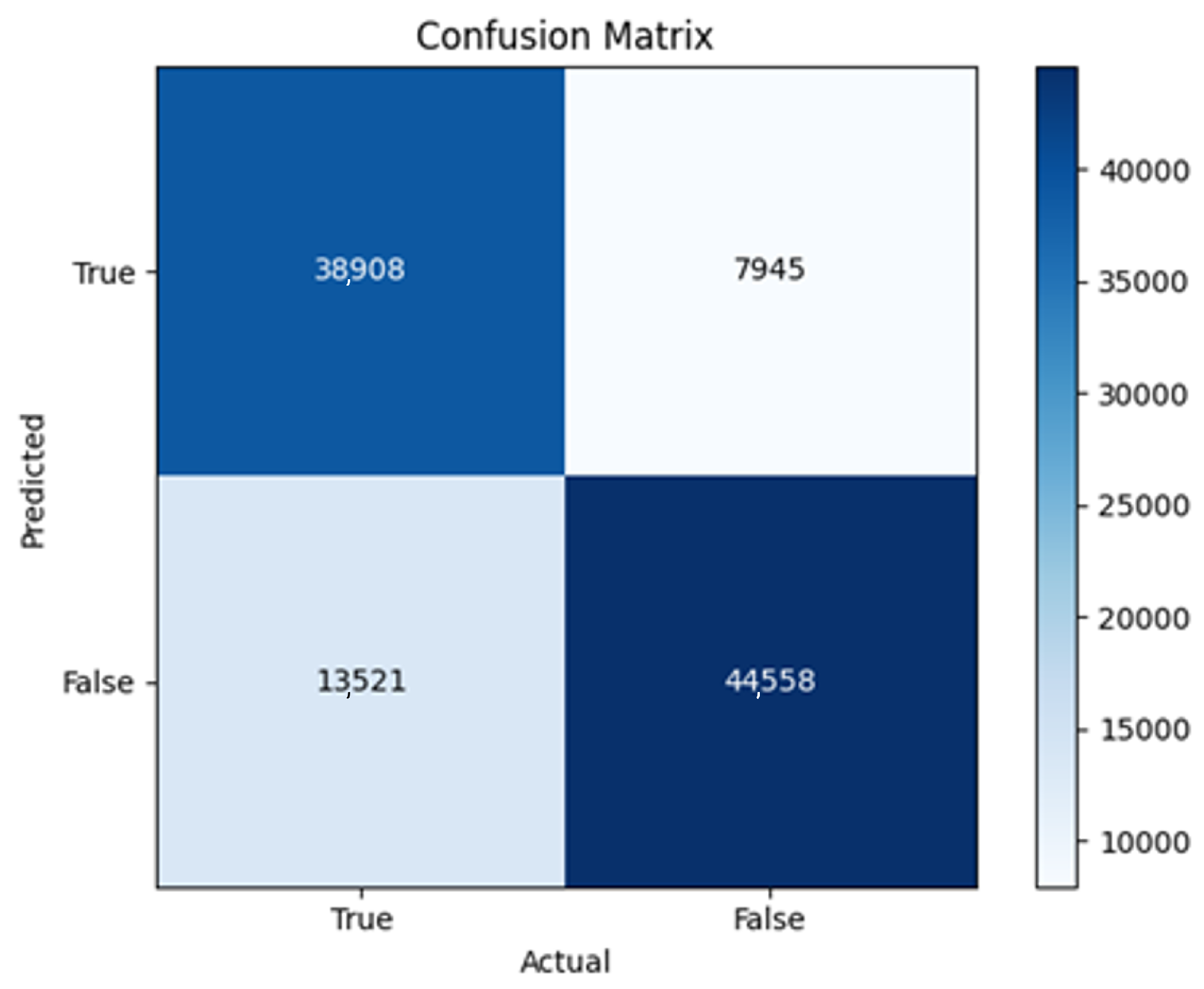

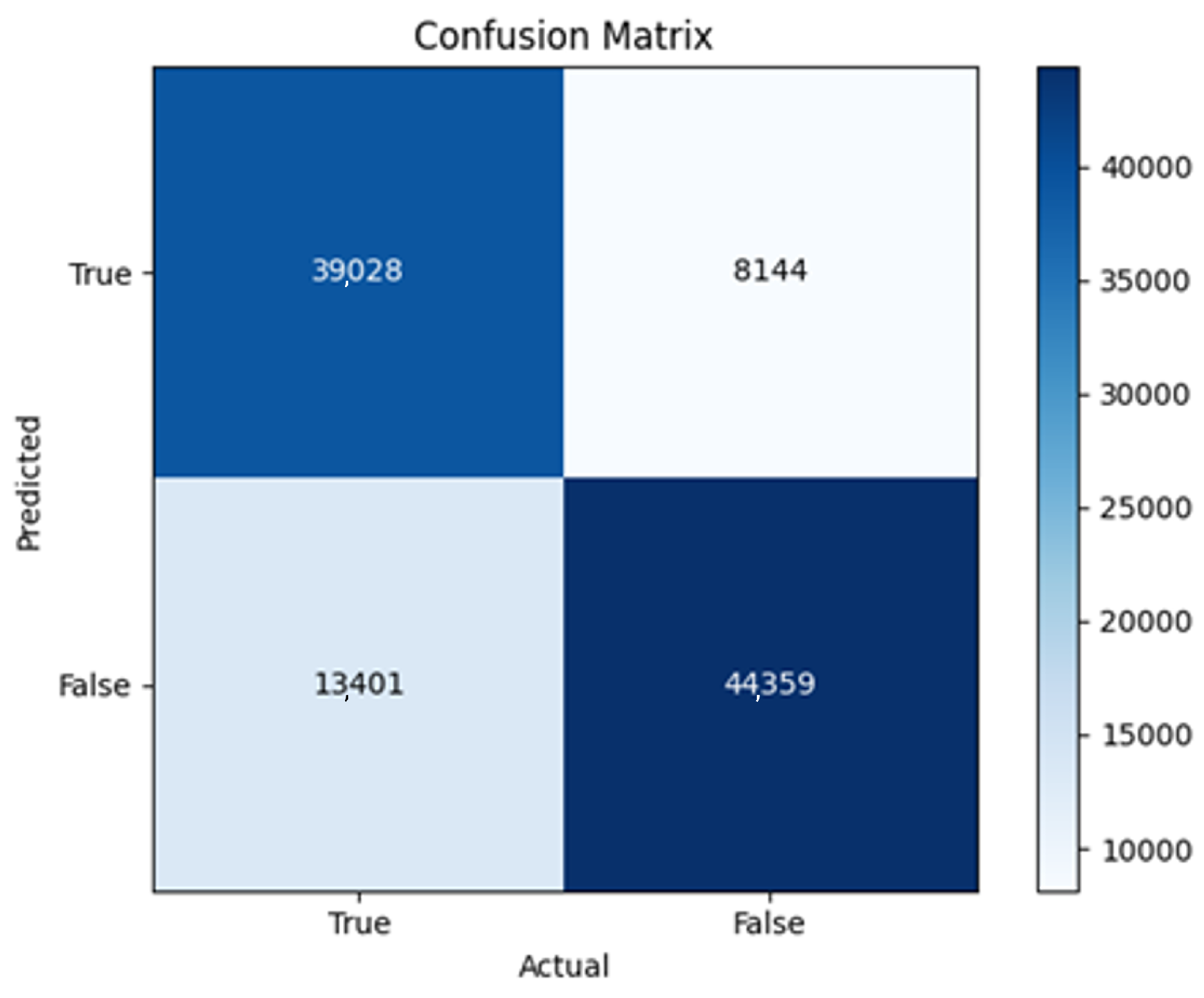

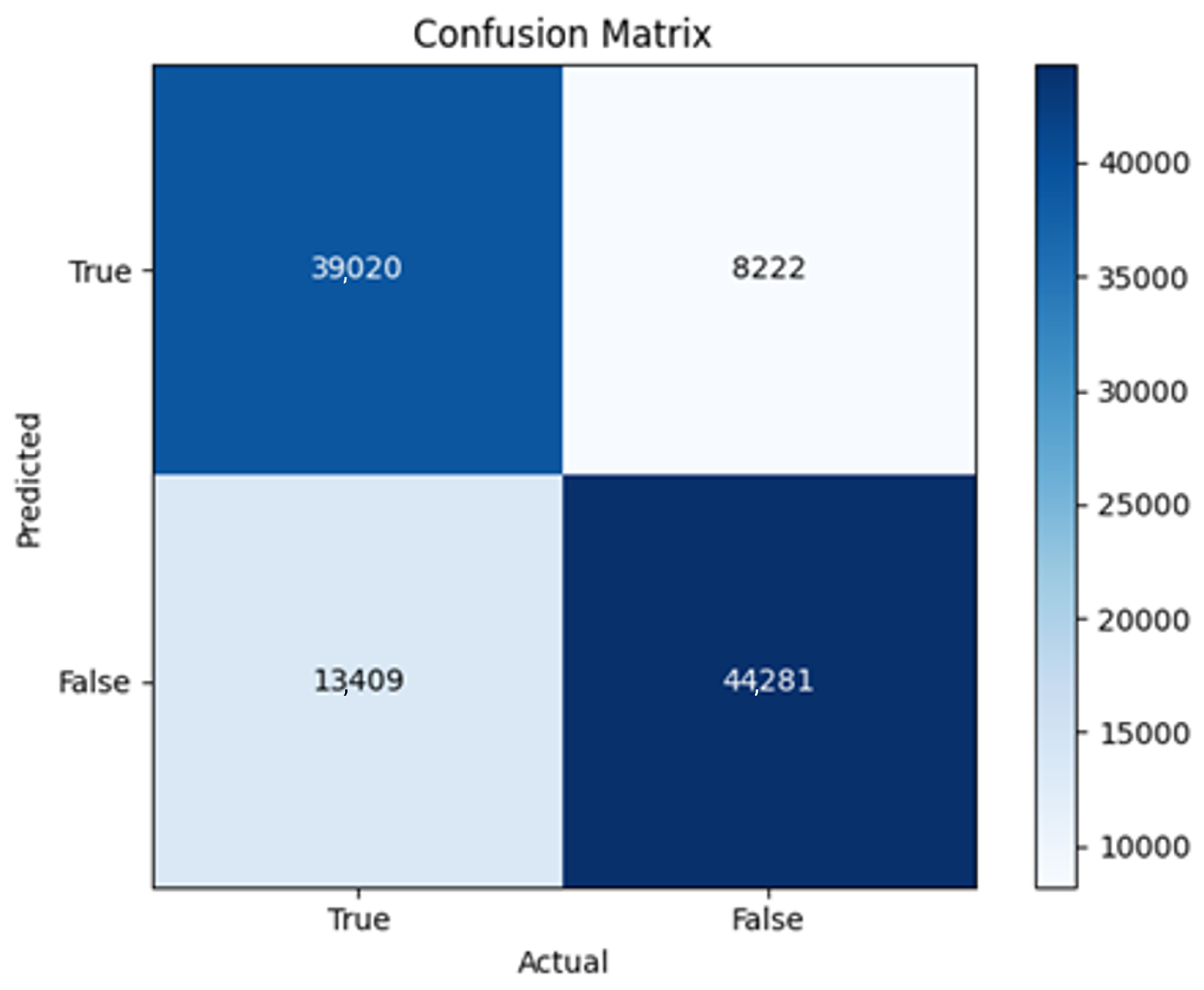

43]. Every model underwent training using four different optimizers, and in addition, two with a learning rate reducer. In this section, we present and discuss the results of each model. Several visualizations are provided, comparing accuracy, validation accuracy, loss, validation loss, and the confusion matrix for all five utilized optimizers. This approach provides and facilitates an effective comparison between different optimizers. In terms of evaluation metrics, the primary objective is to minimize the loss, false positives, and false negatives as much as possible, while maximizing all other relevant metrics. The training and accuracy plots serve as valuable tools for monitoring metric changes throughout the training process. These plots provide insights into various aspects of the model’s performance, such as determining if the model converges on the data and identifying potential instances of underfitting or overfitting.

In a well-fitted model, the training loss typically decreases consistently throughout training, indicating effective learning and improved predictive performance, and reaches a stable level near the end. The validation loss is expected to follow a similar curve while remaining slightly higher than the training loss. Regarding accuracy, the training accuracy generally increases at the beginning of training and stabilizes towards the end. The validation accuracy, although slightly lower, should exhibit a similar trend.

Typically, since the model is optimized and learns from the training data, the training loss is expected to be lower than the validation loss, and the training accuracy is expected to be higher than the validation accuracy. However, it is important to note that this general expectation may not hold true in all cases, as the behavior of these metrics can vary depending on the specific dataset and model complexity. The split of the dataset also affects the whole process, as the classes considered by the model may not be well represented in the training and validation sets, and hence skewed data in the training or validation may lead to diverse results. This could be solved by applying stratified cross-validation, which guarantees a fair distribution of the data for effective testing and a reasonable outcome.
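The idea behind stratified cross-validation can be sketched in a few lines of plain Python: indices are grouped by class and dealt out to the folds in turn, so each fold keeps roughly the overall class ratio. This is a minimal illustration under stated assumptions; the function name `stratified_folds` and the 60/40 label split are hypothetical and do not reflect the study's dataset. In practice, a library routine such as scikit-learn's `StratifiedKFold` would normally be used instead.

```python
import random
from collections import defaultdict


def stratified_folds(labels, k=5, seed=0):
    """Split sample indices into k folds that preserve class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal the shuffled indices of this class round-robin across folds.
        for position, idx in enumerate(indices):
            folds[position % k].append(idx)
    return folds


# Hypothetical labels: 60 fake (1) and 40 legitimate (0) accounts.
labels = [1] * 60 + [0] * 40
for fold in stratified_folds(labels, k=5):
    fake = sum(labels[i] for i in fold)
    print(len(fold), fake, len(fold) - fake)
```

Each fold then serves once as the validation set while the remaining folds are used for training, so no single unlucky split determines the reported metrics.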

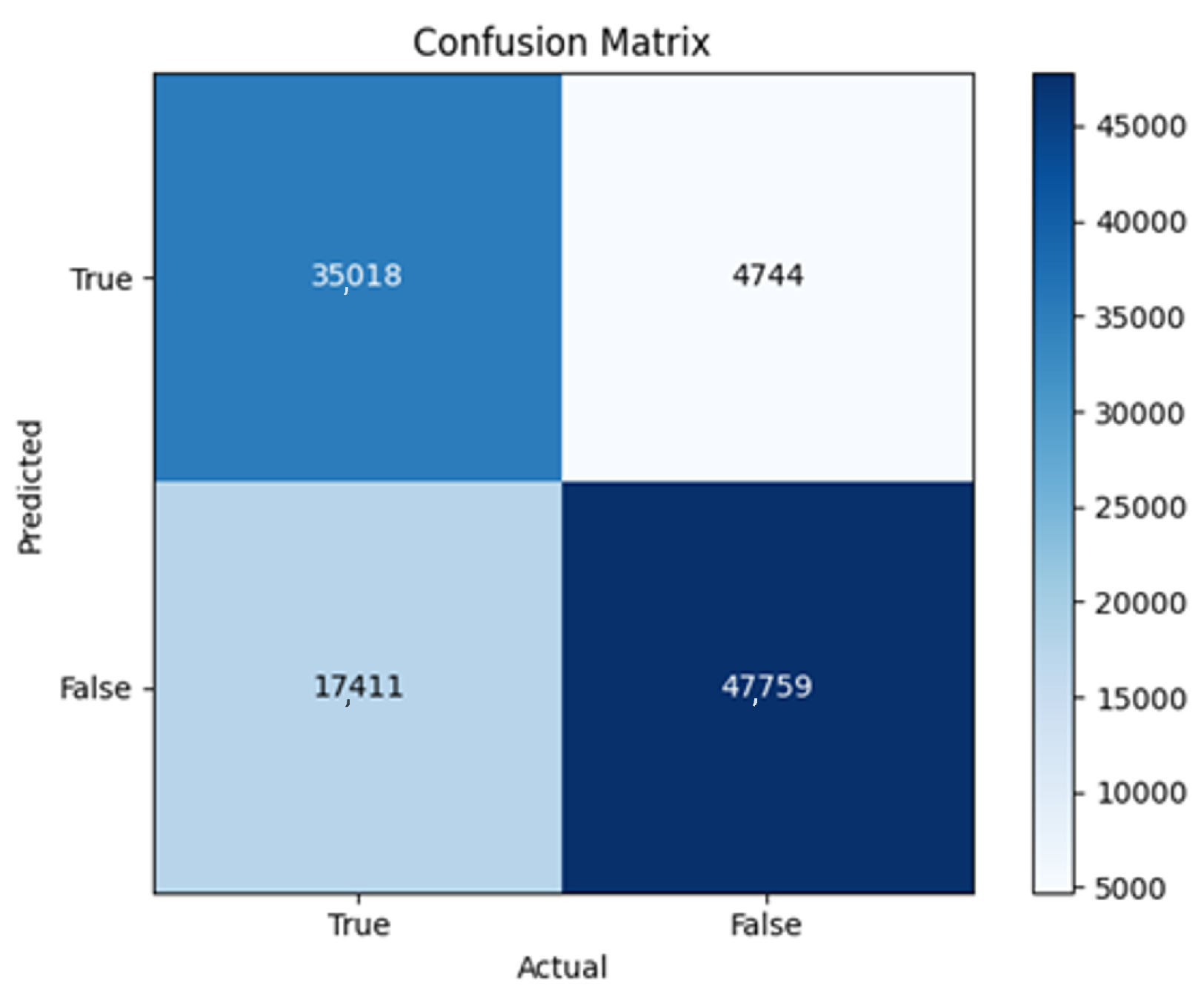

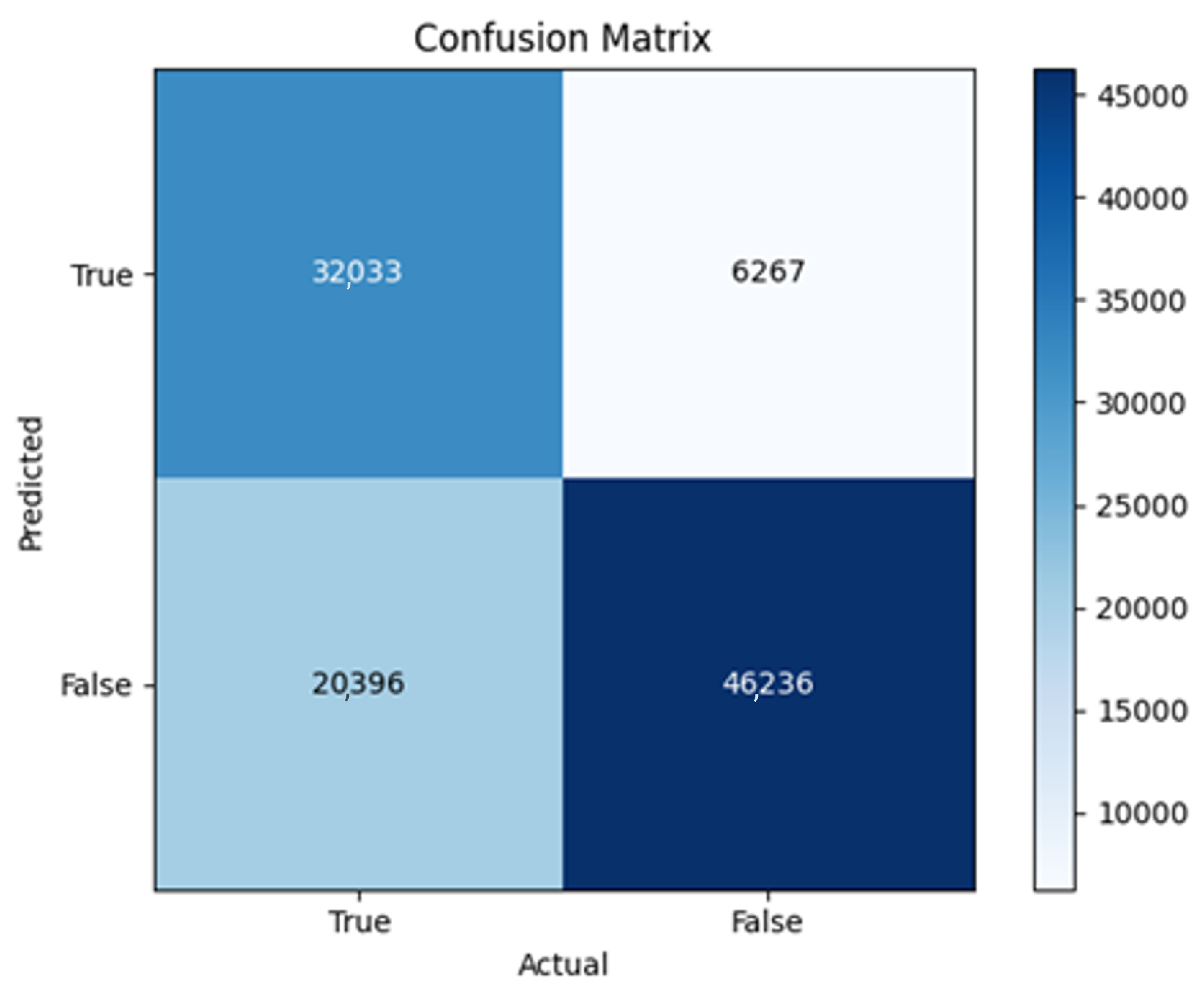

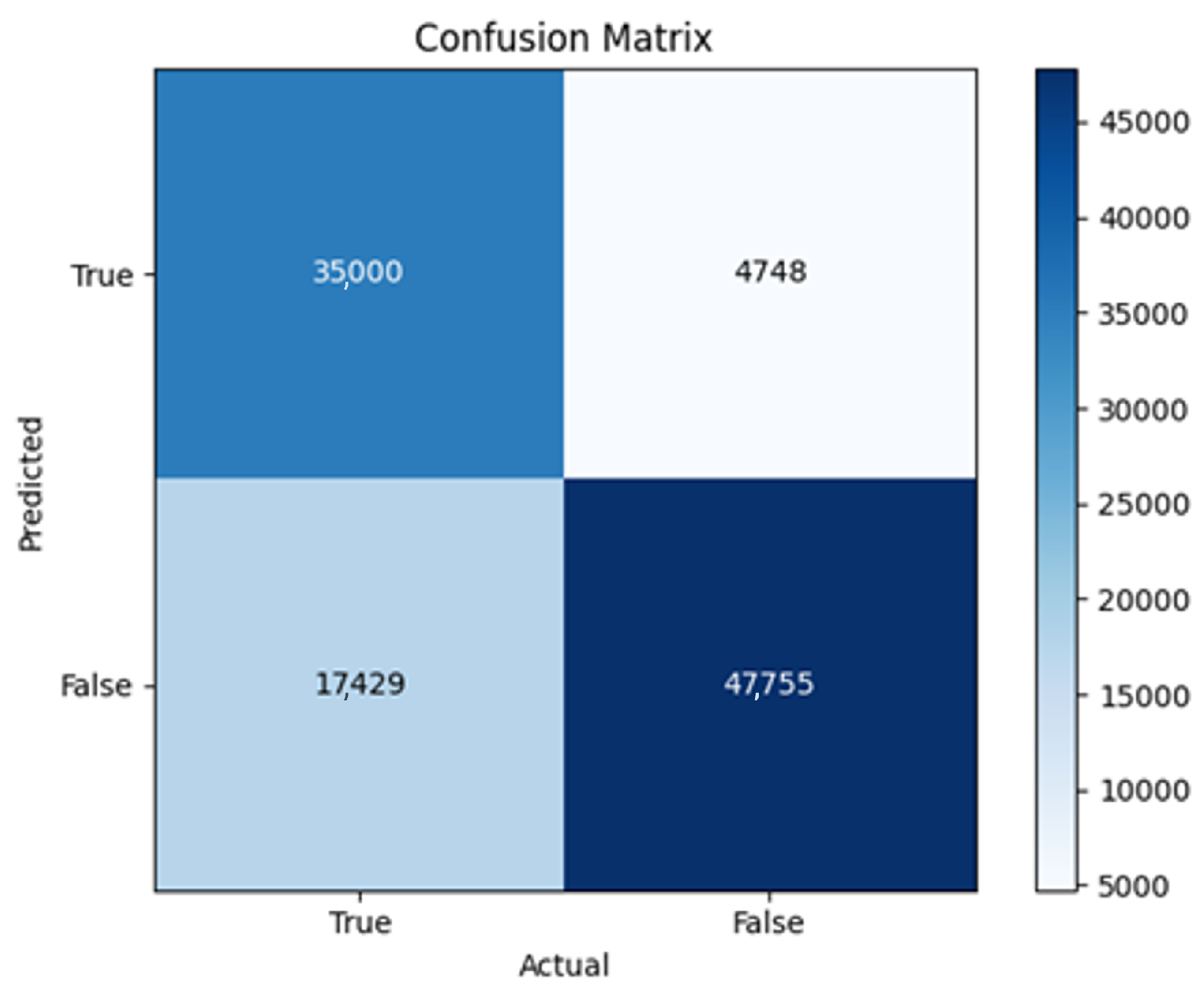

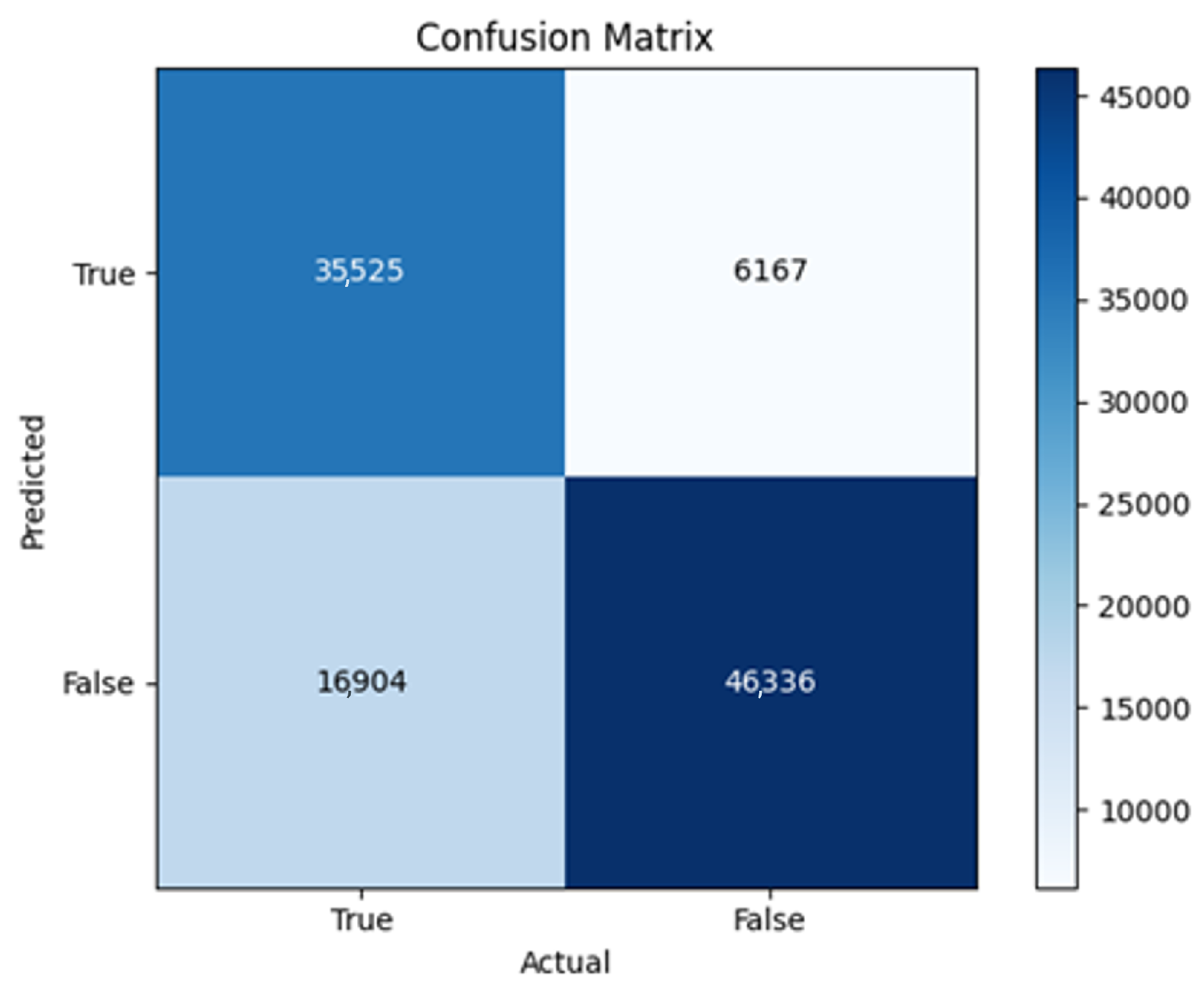

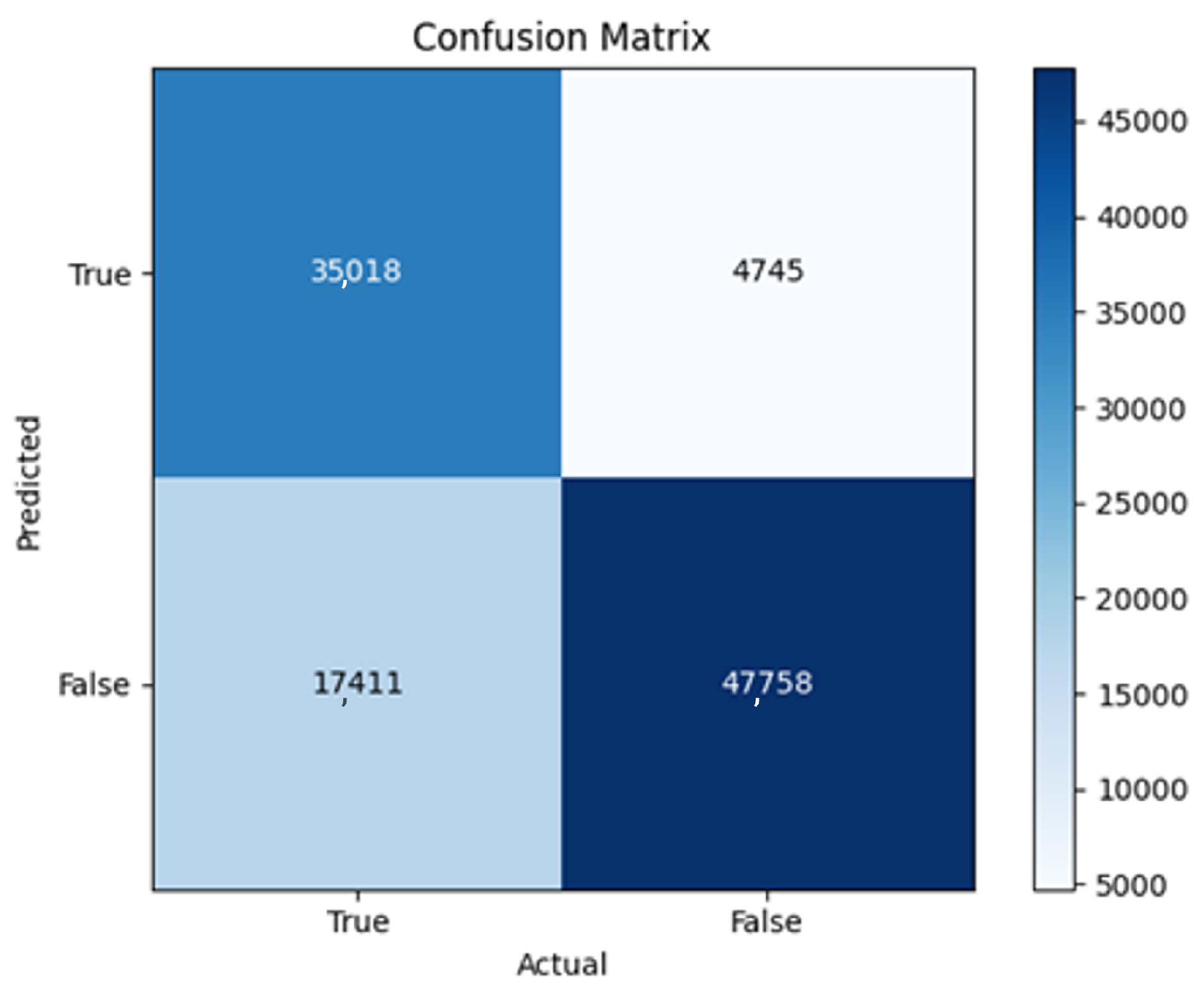

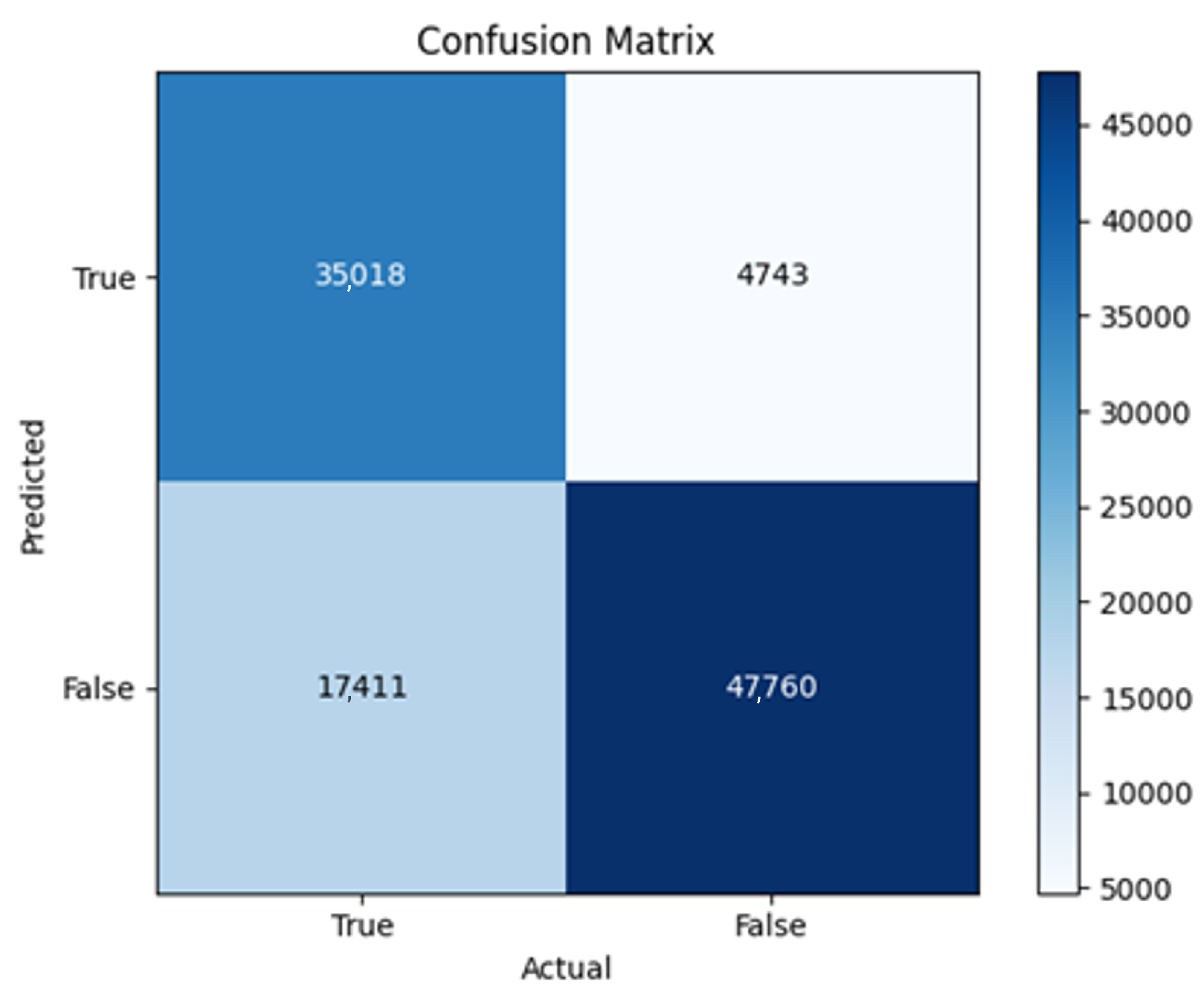

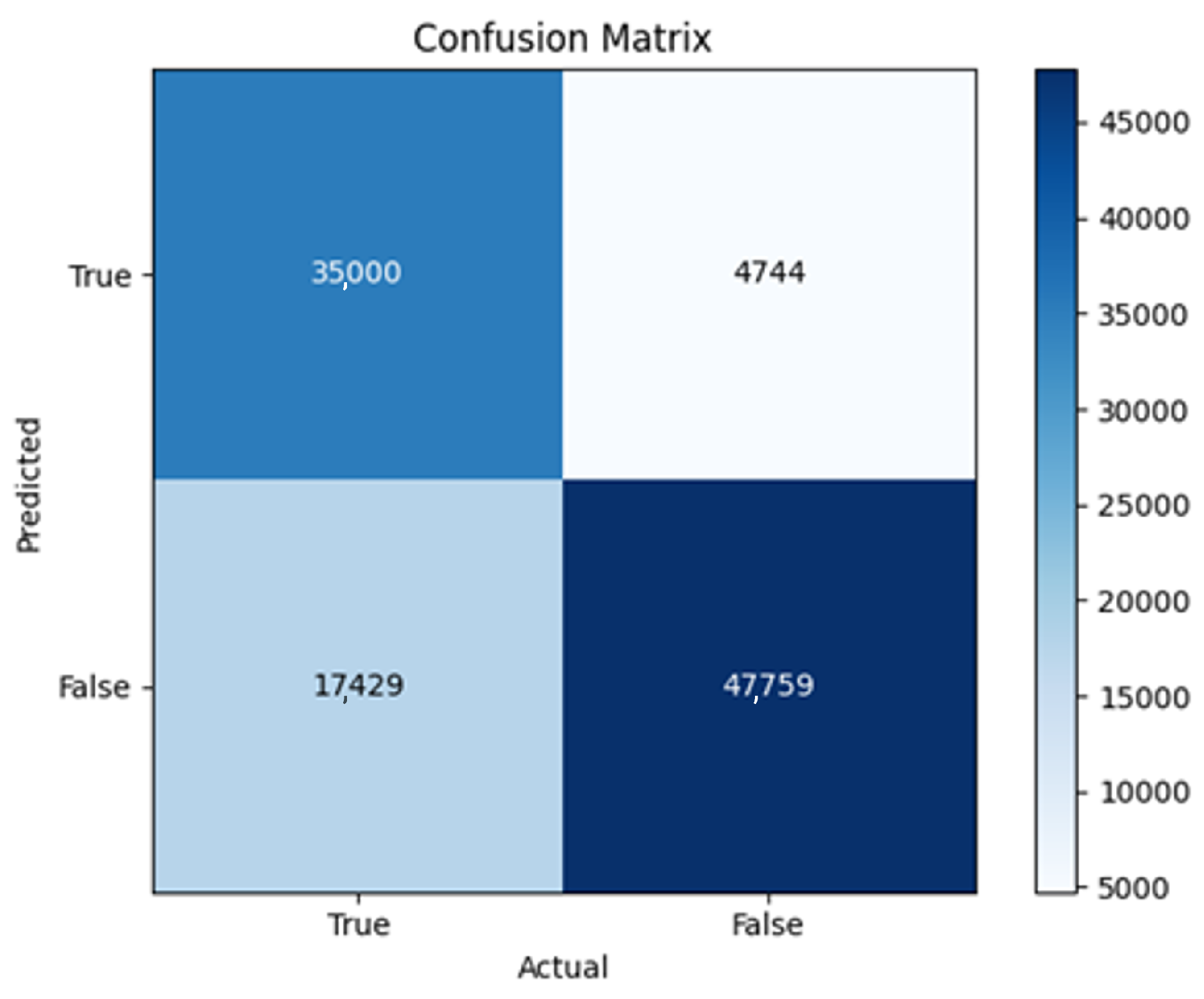

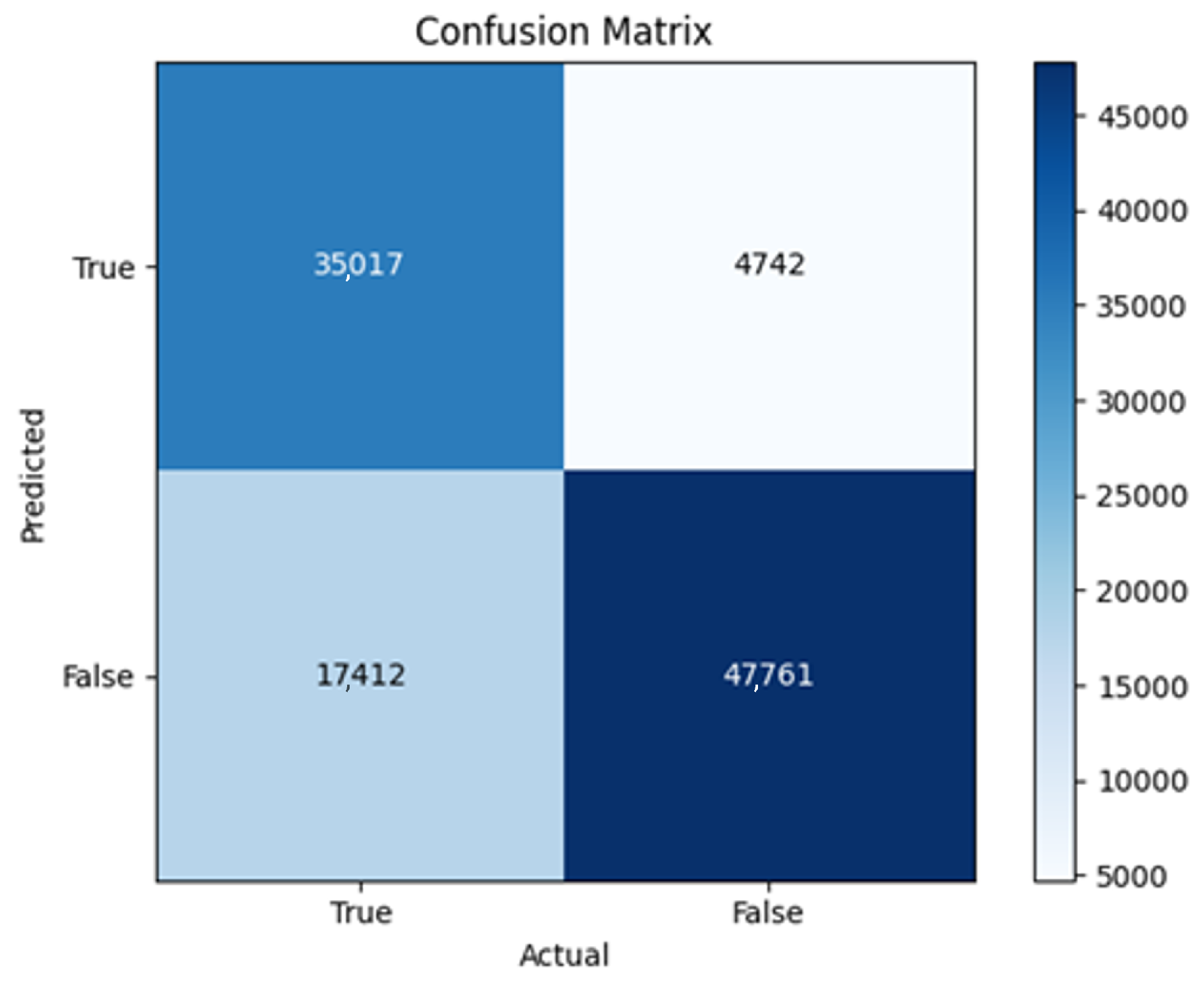

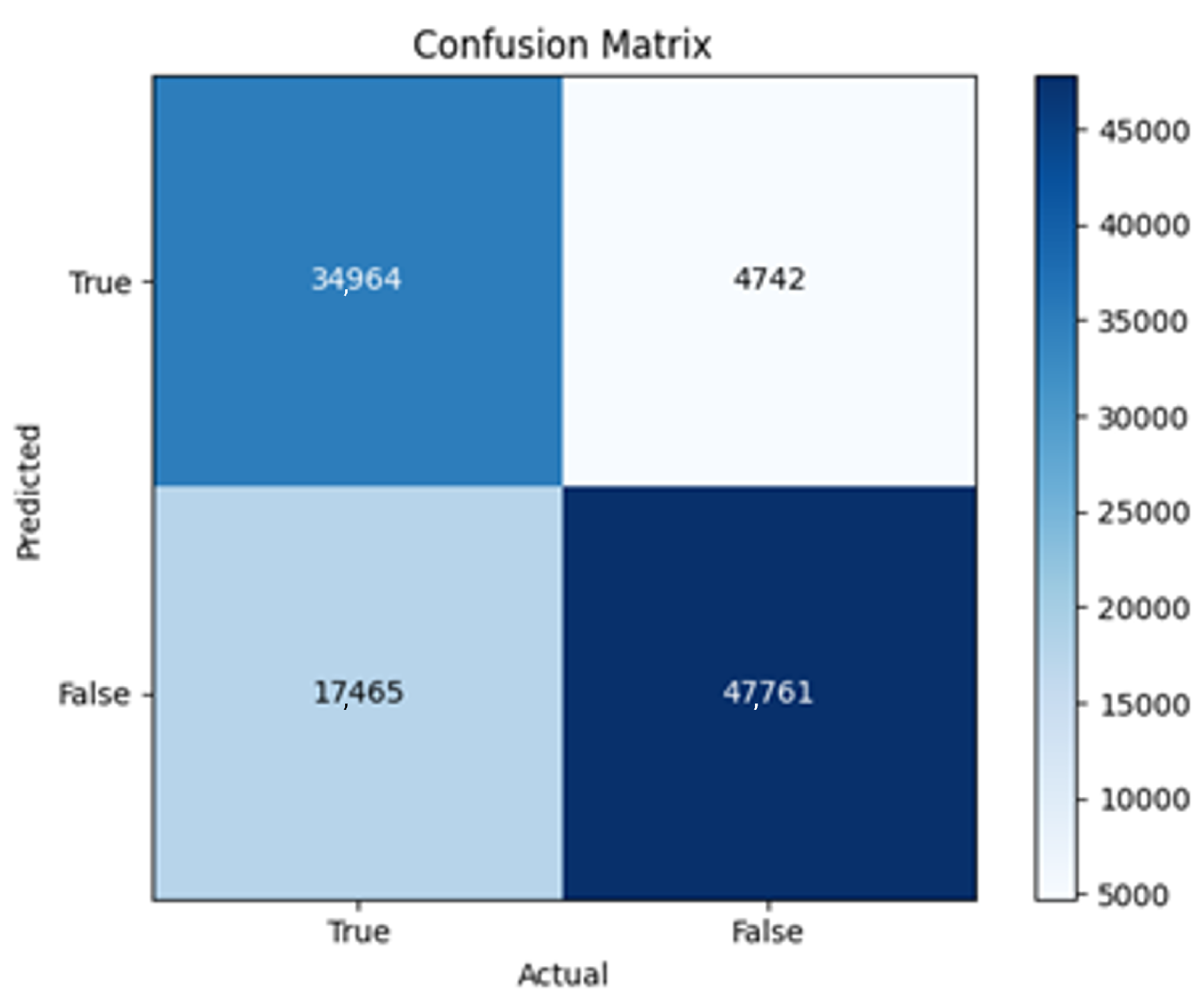

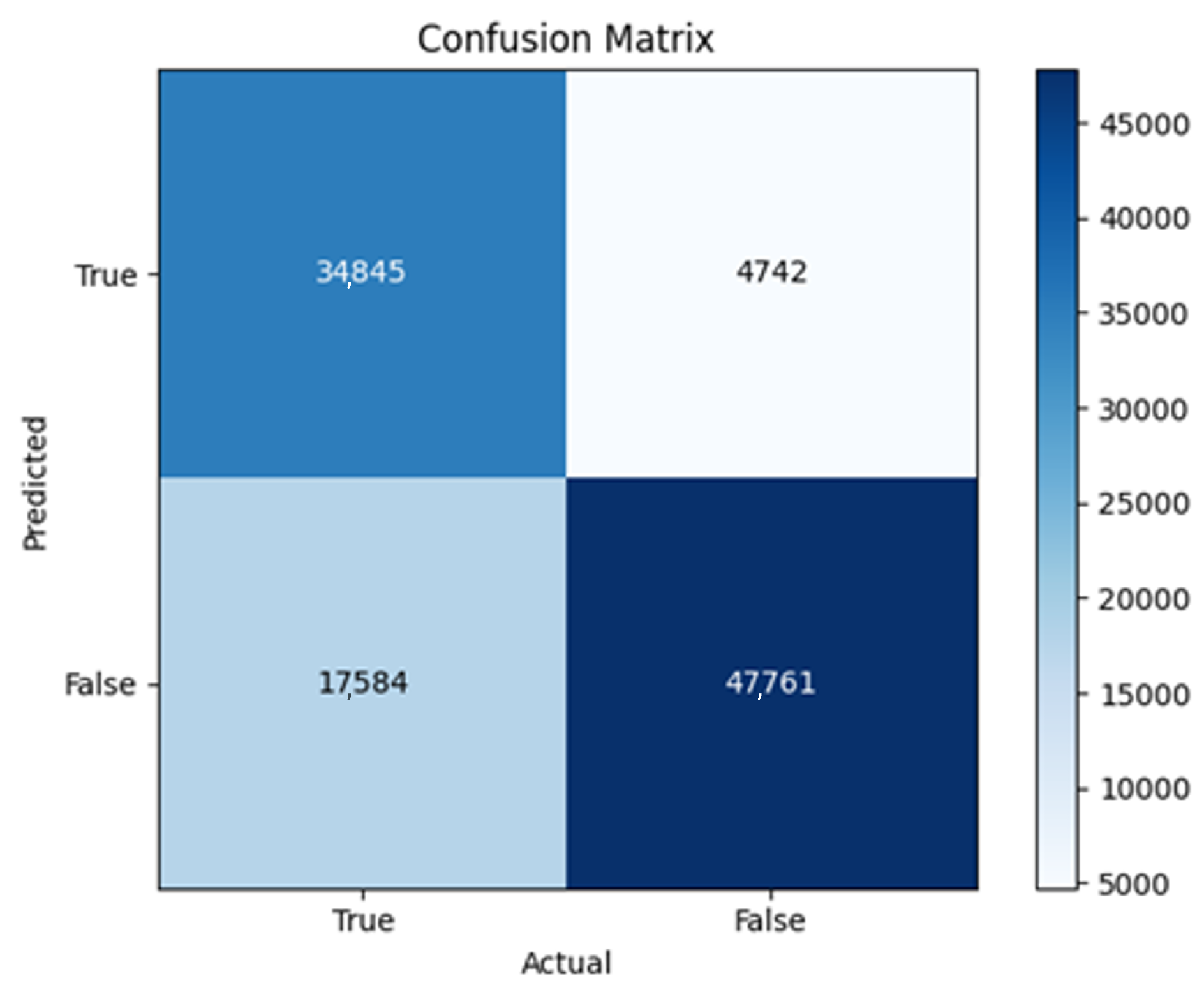

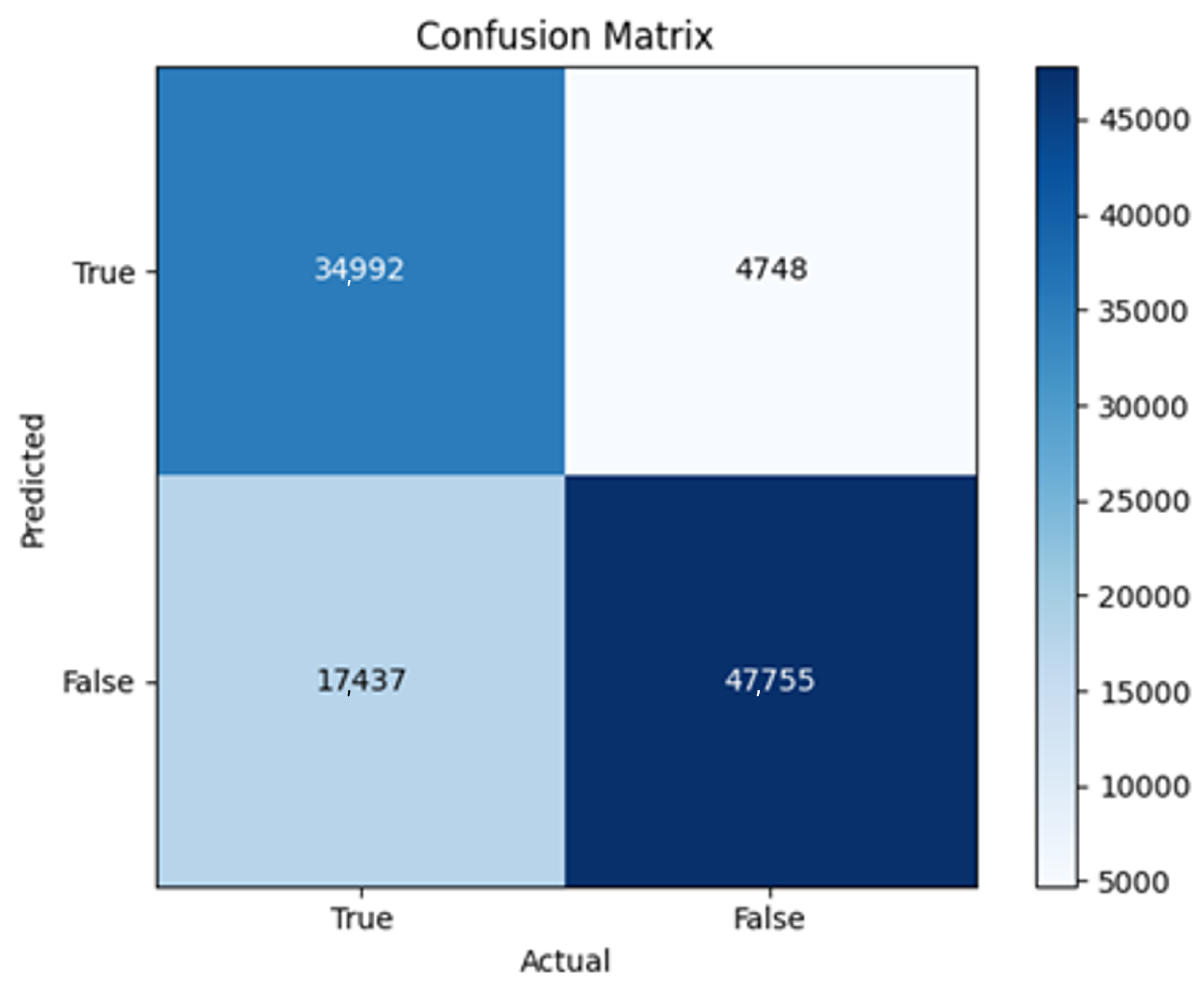

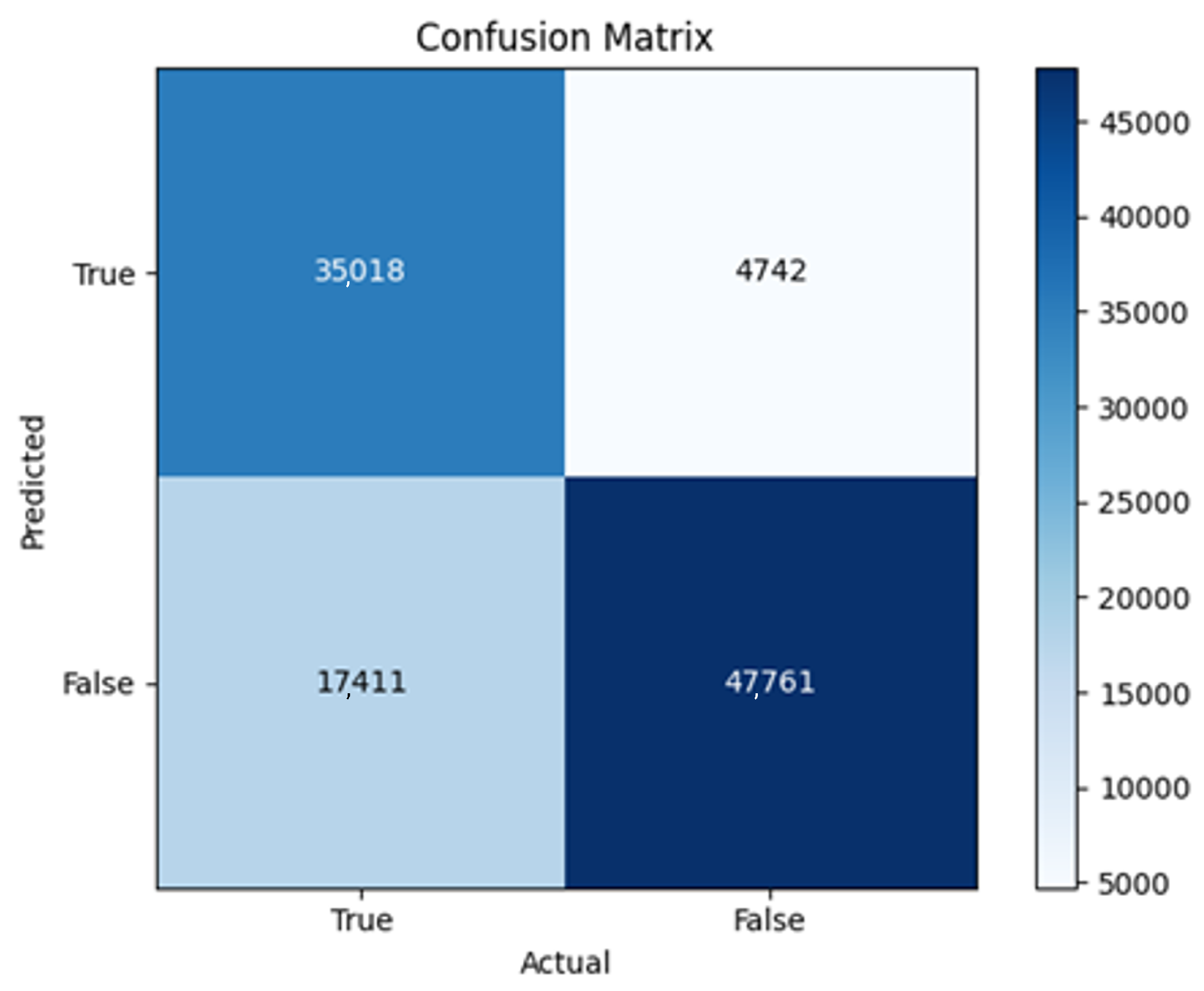

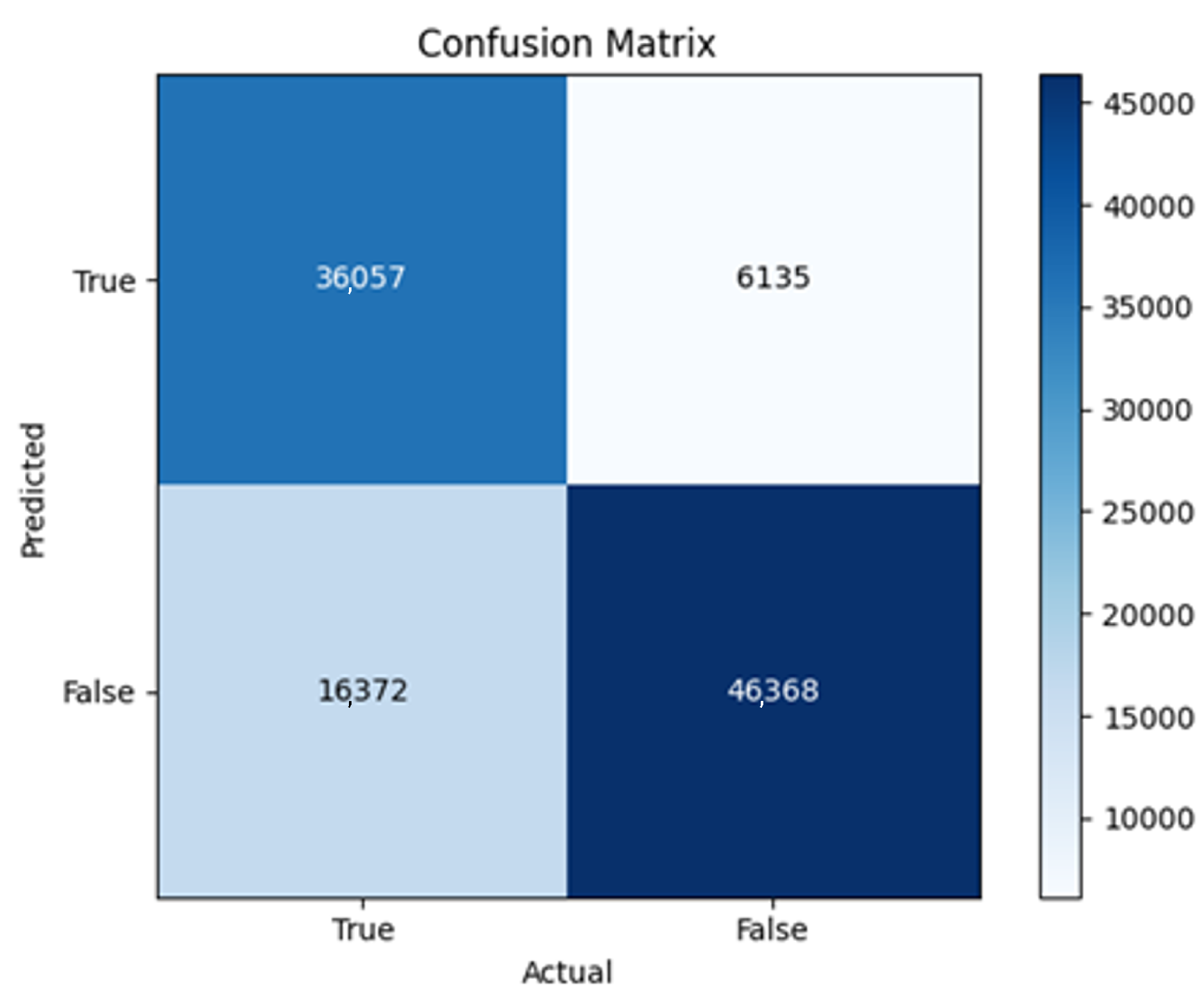

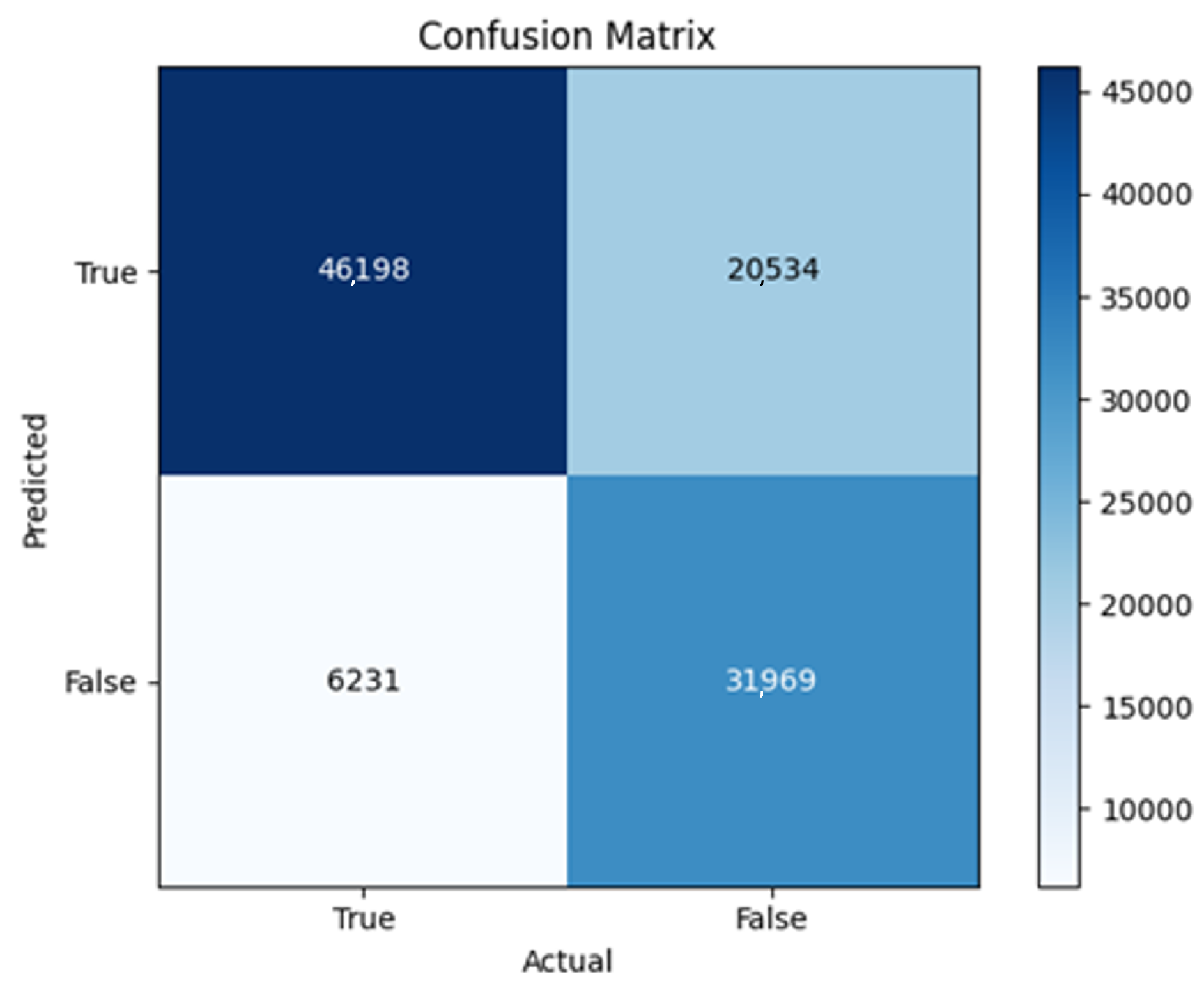

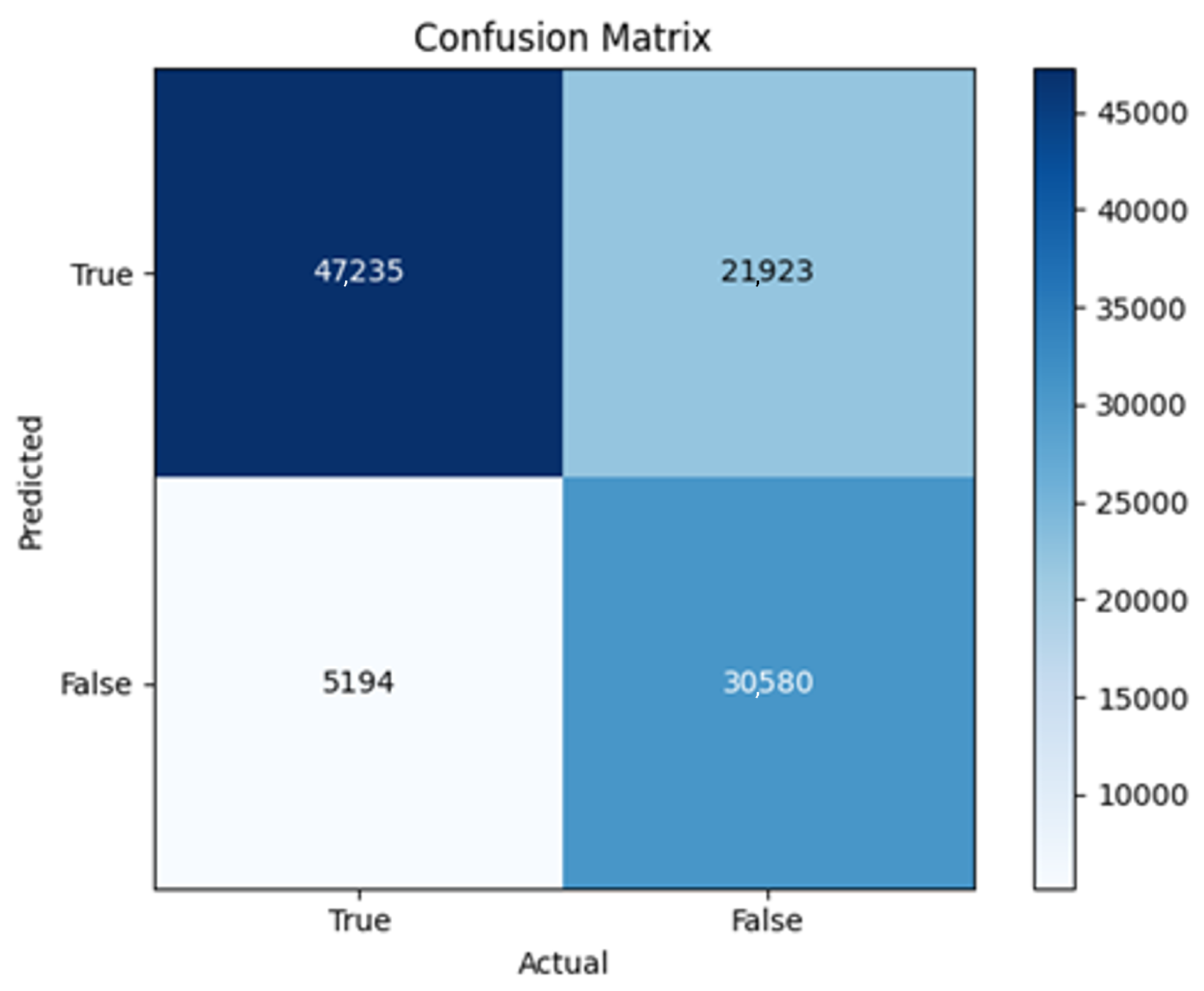

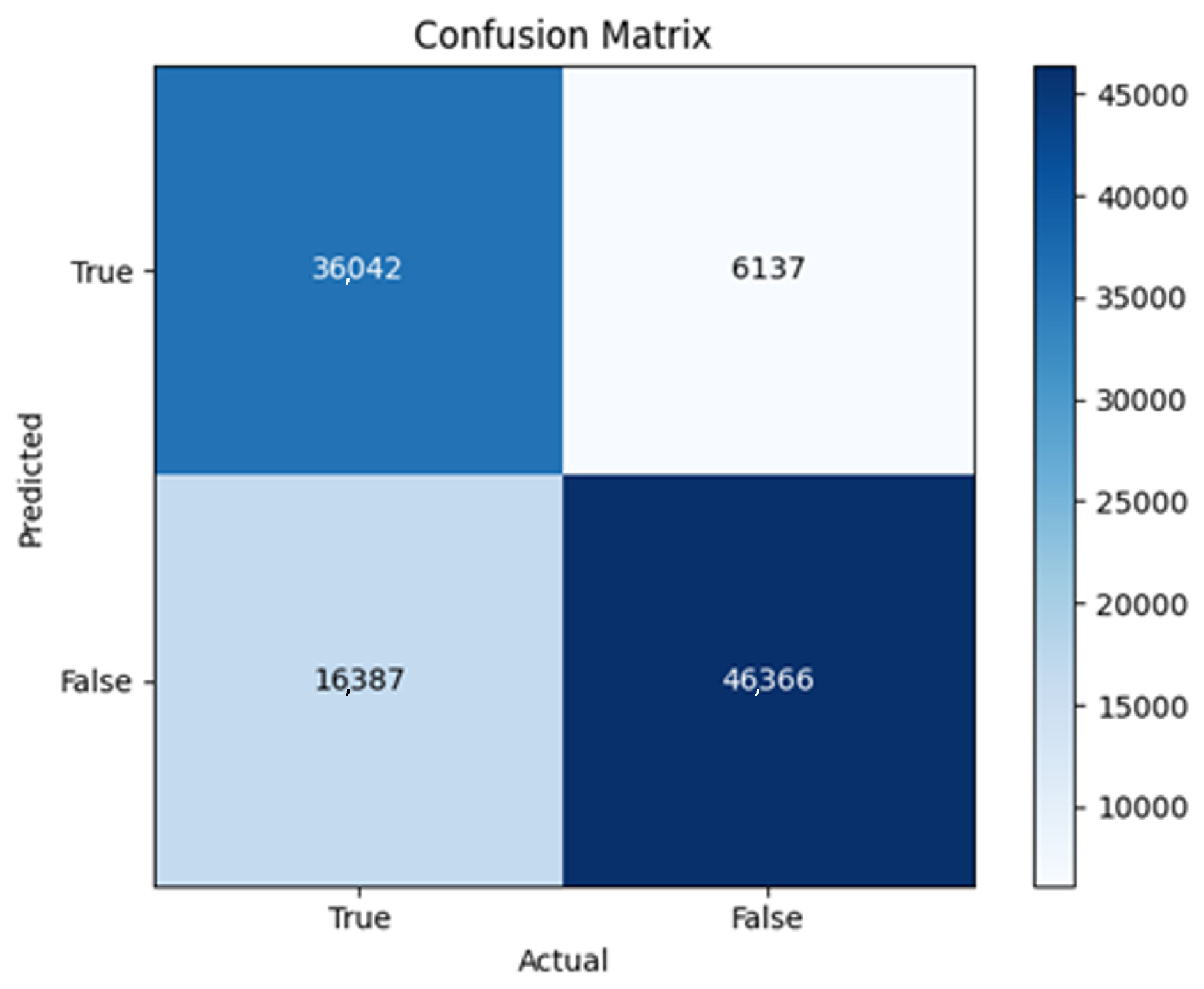

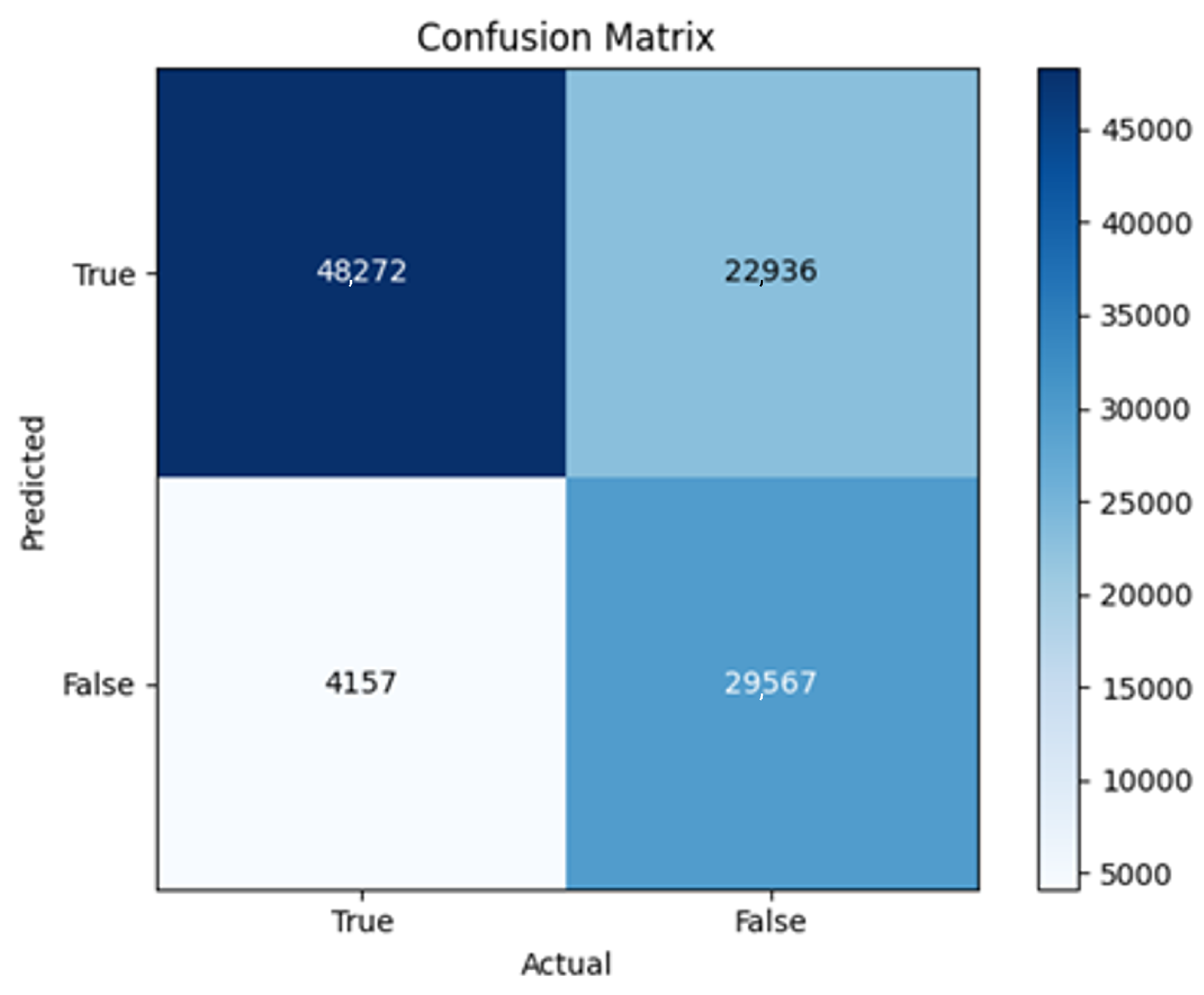

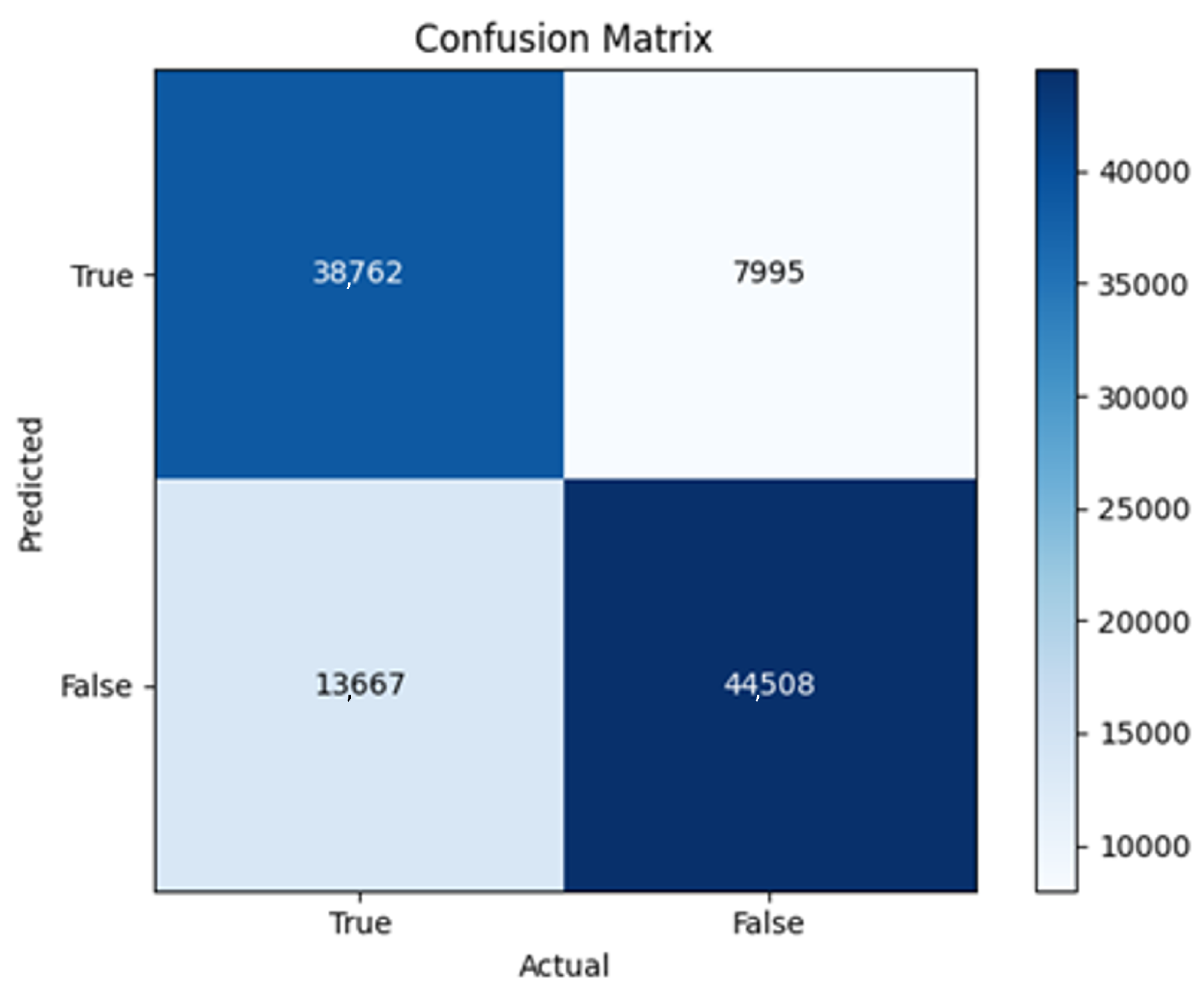

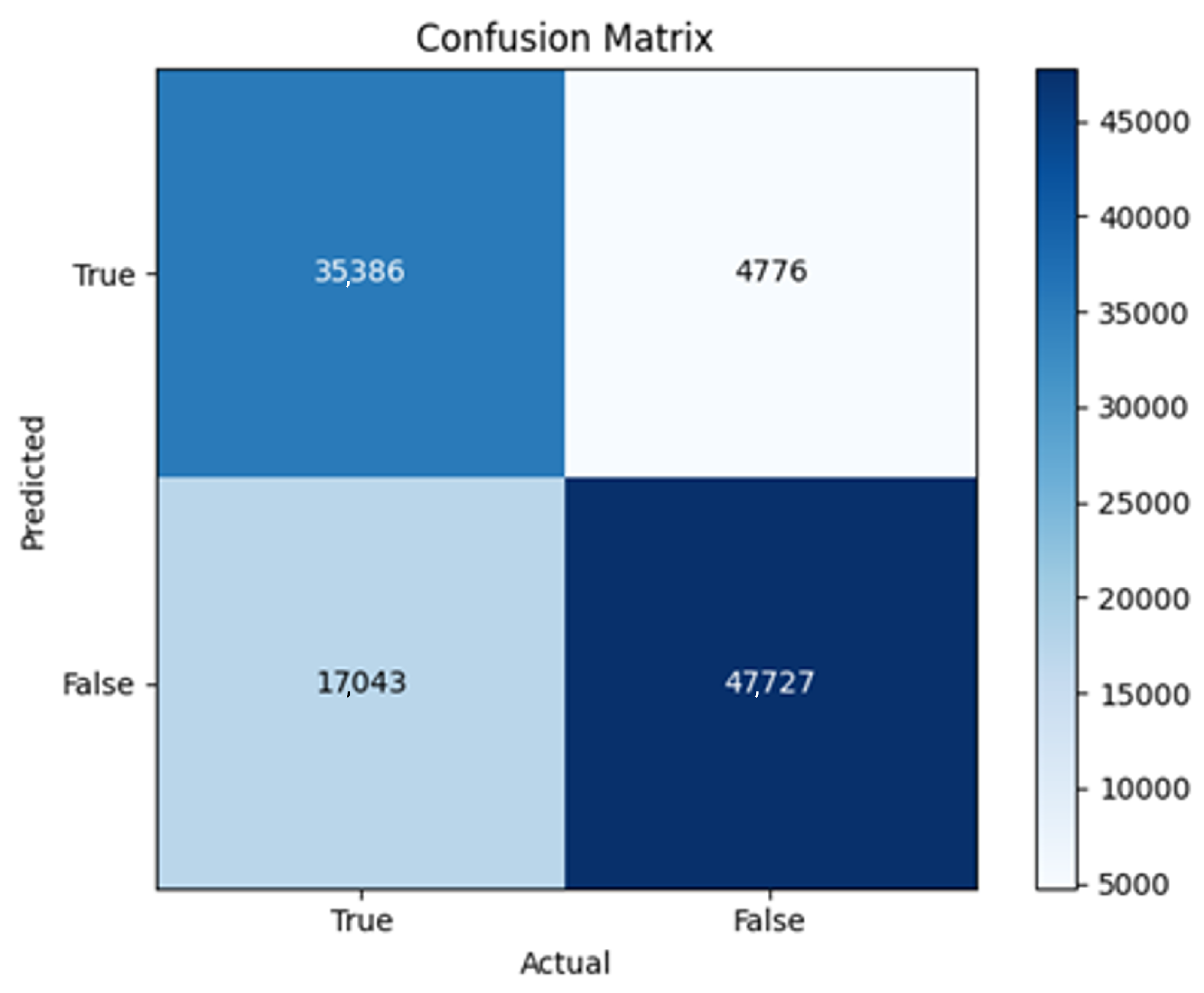

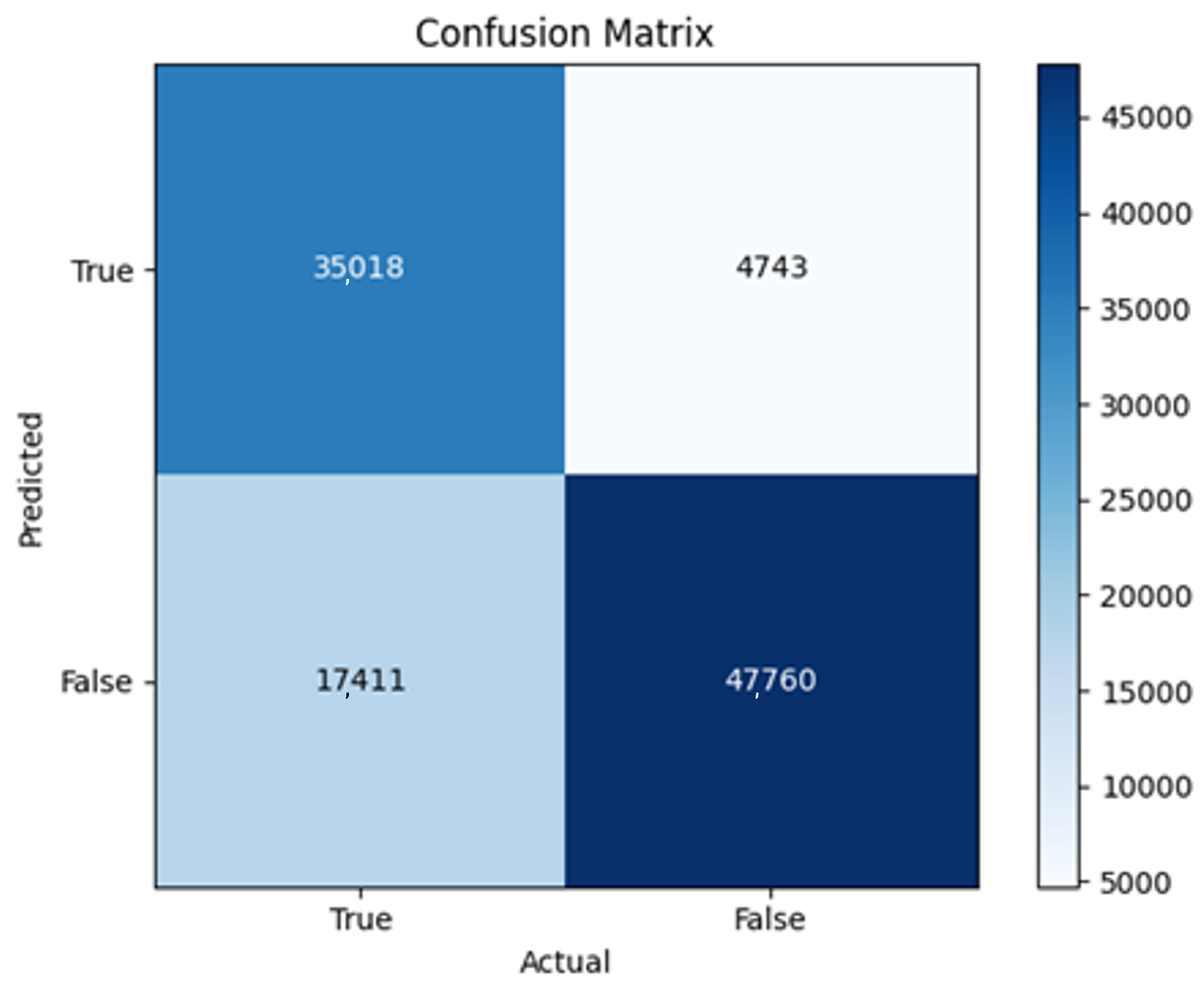

In the following, we summarize key trends across model families and optimizer behaviors, highlighting consistent patterns and notable anomalies. Full per-model results, including epoch-wise metrics, confusion matrices, and training curves, are provided in Appendix A and Appendix B.

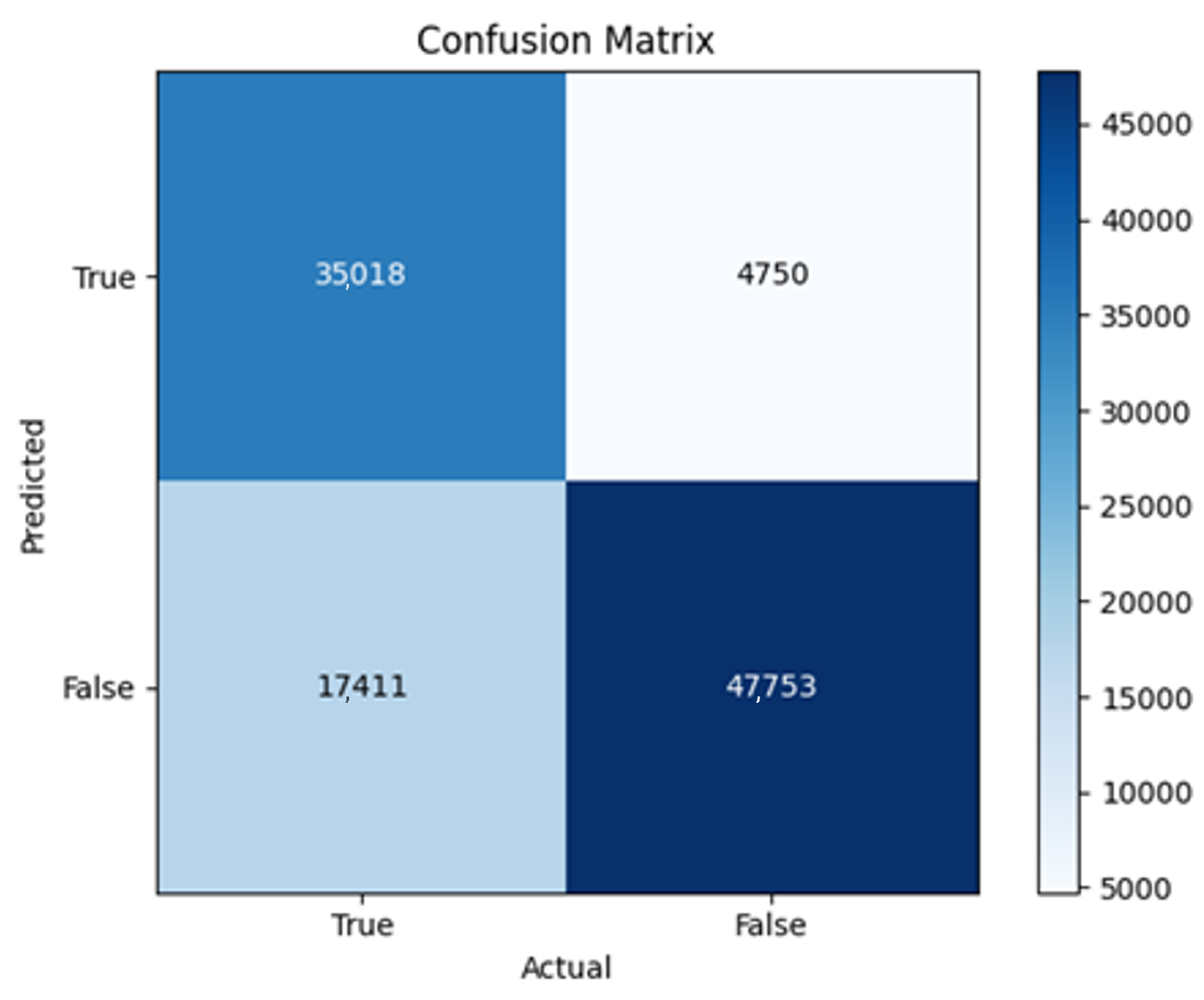

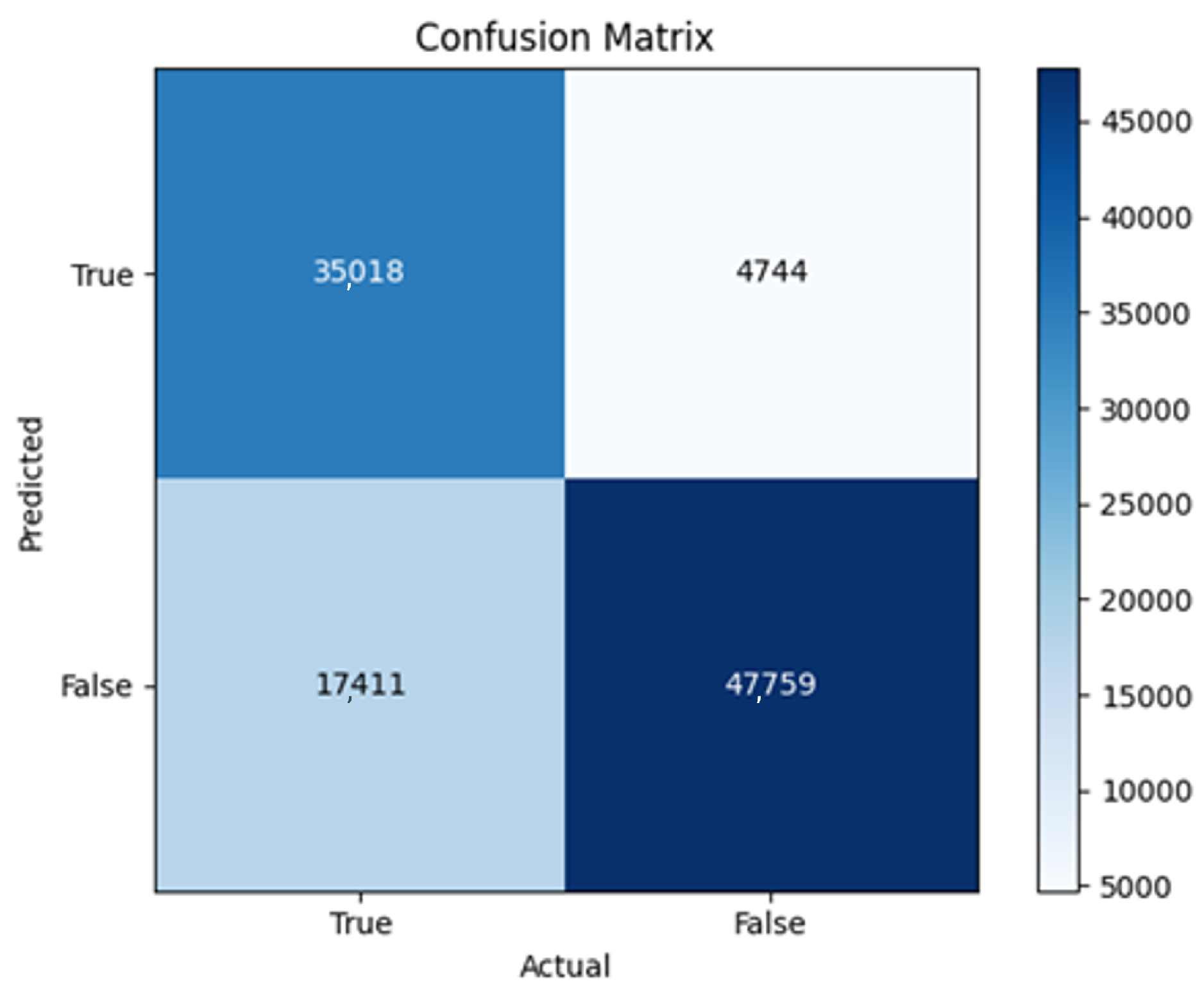

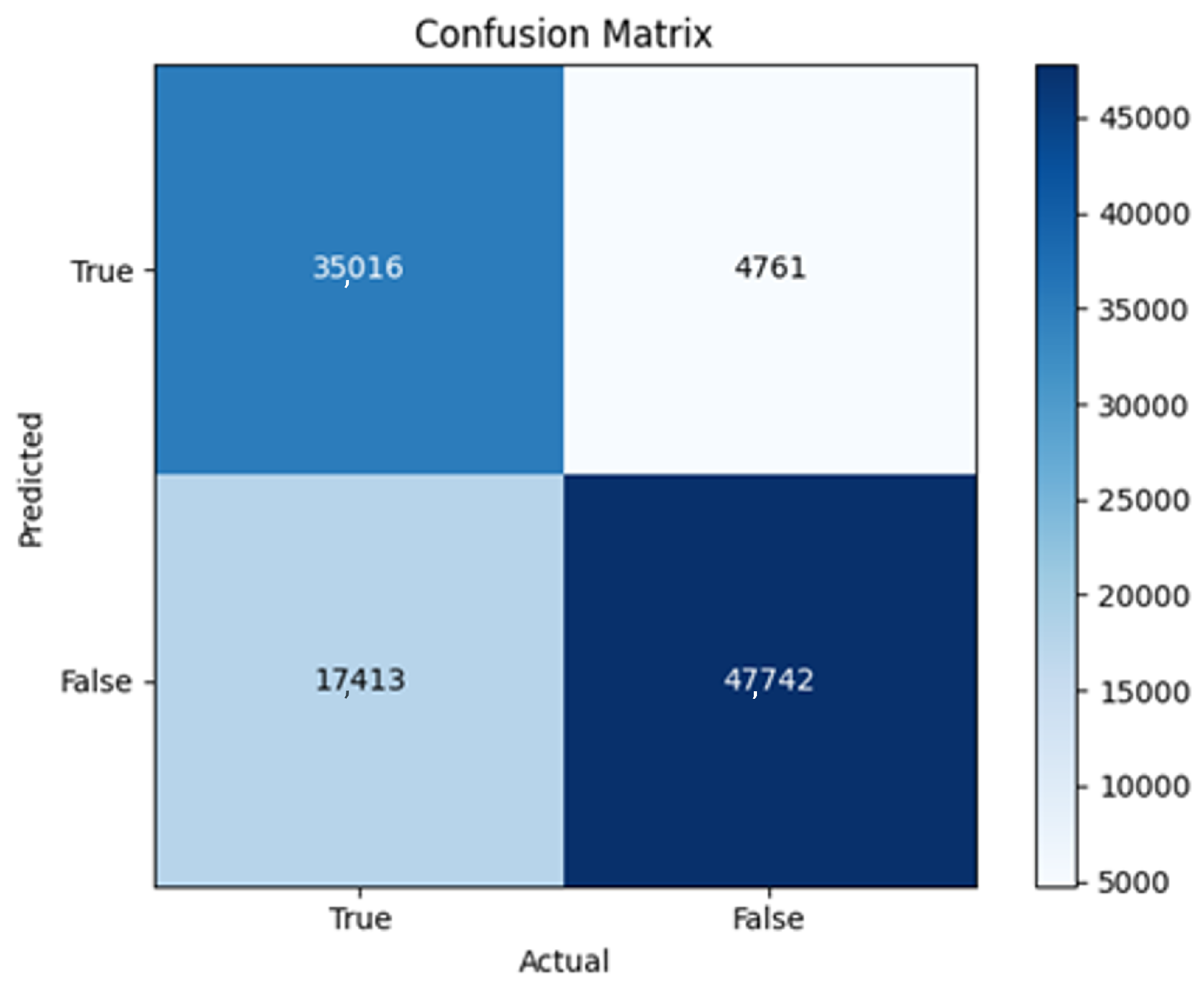

6.1. One-Branch Models (F1–F4)

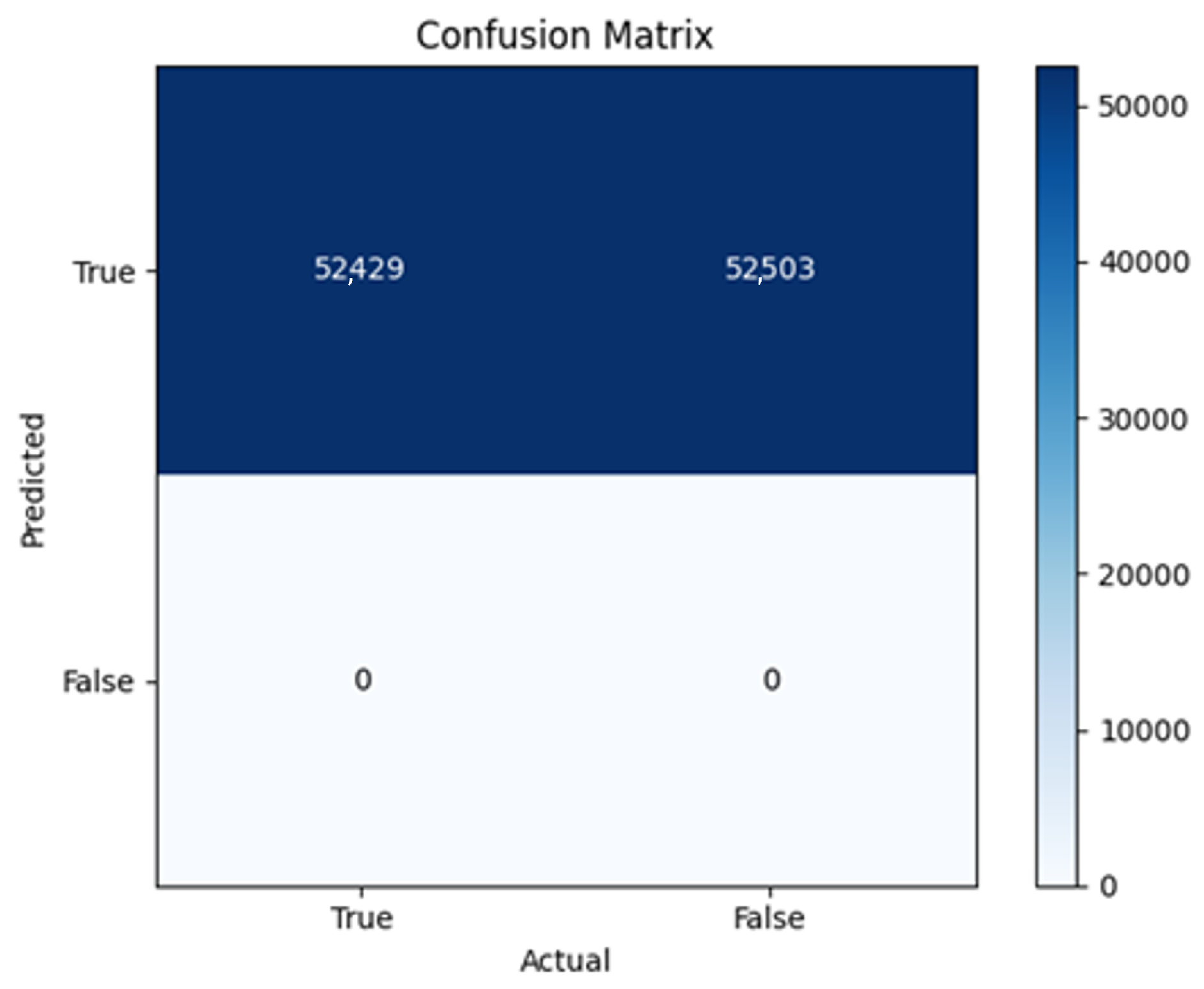

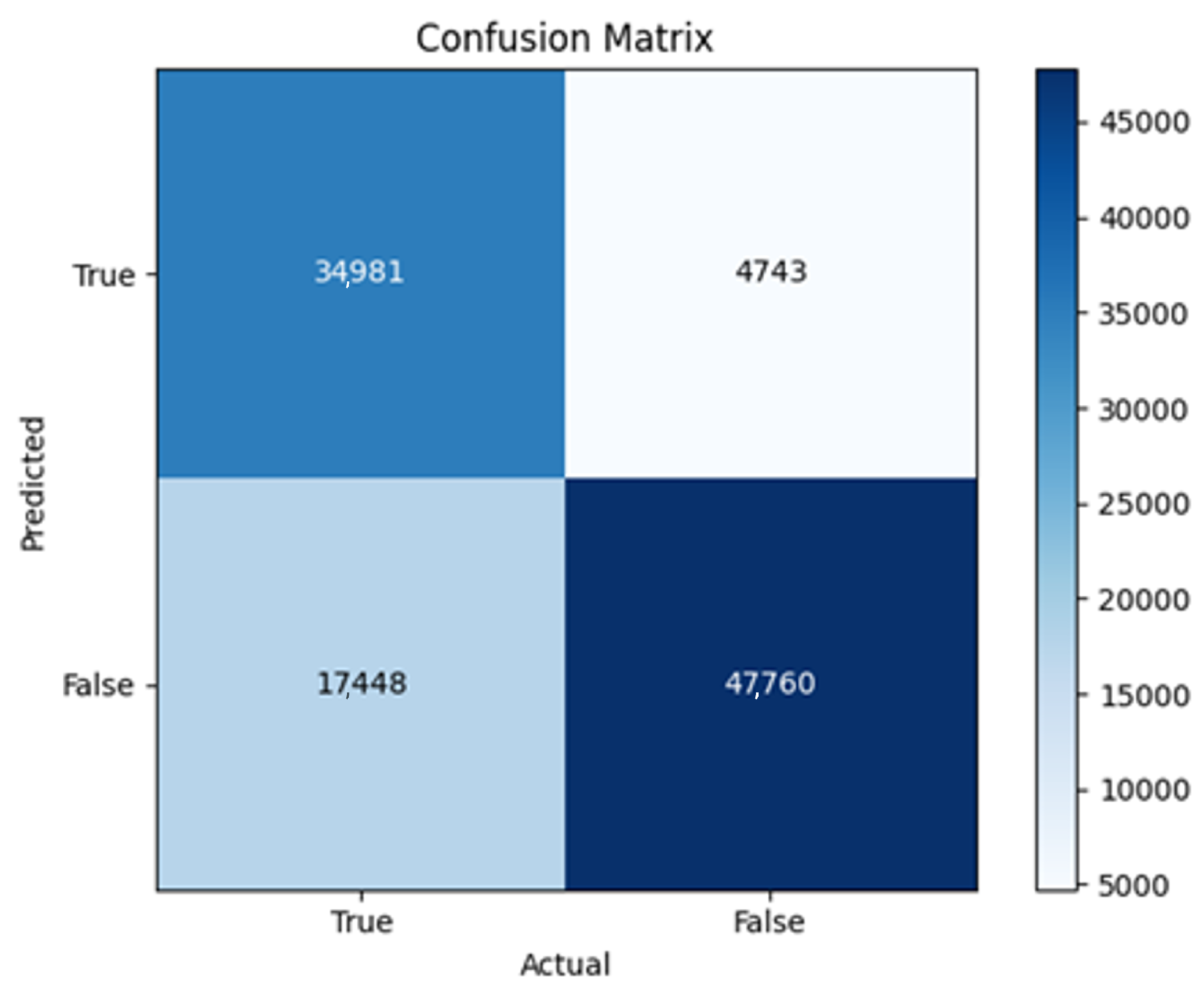

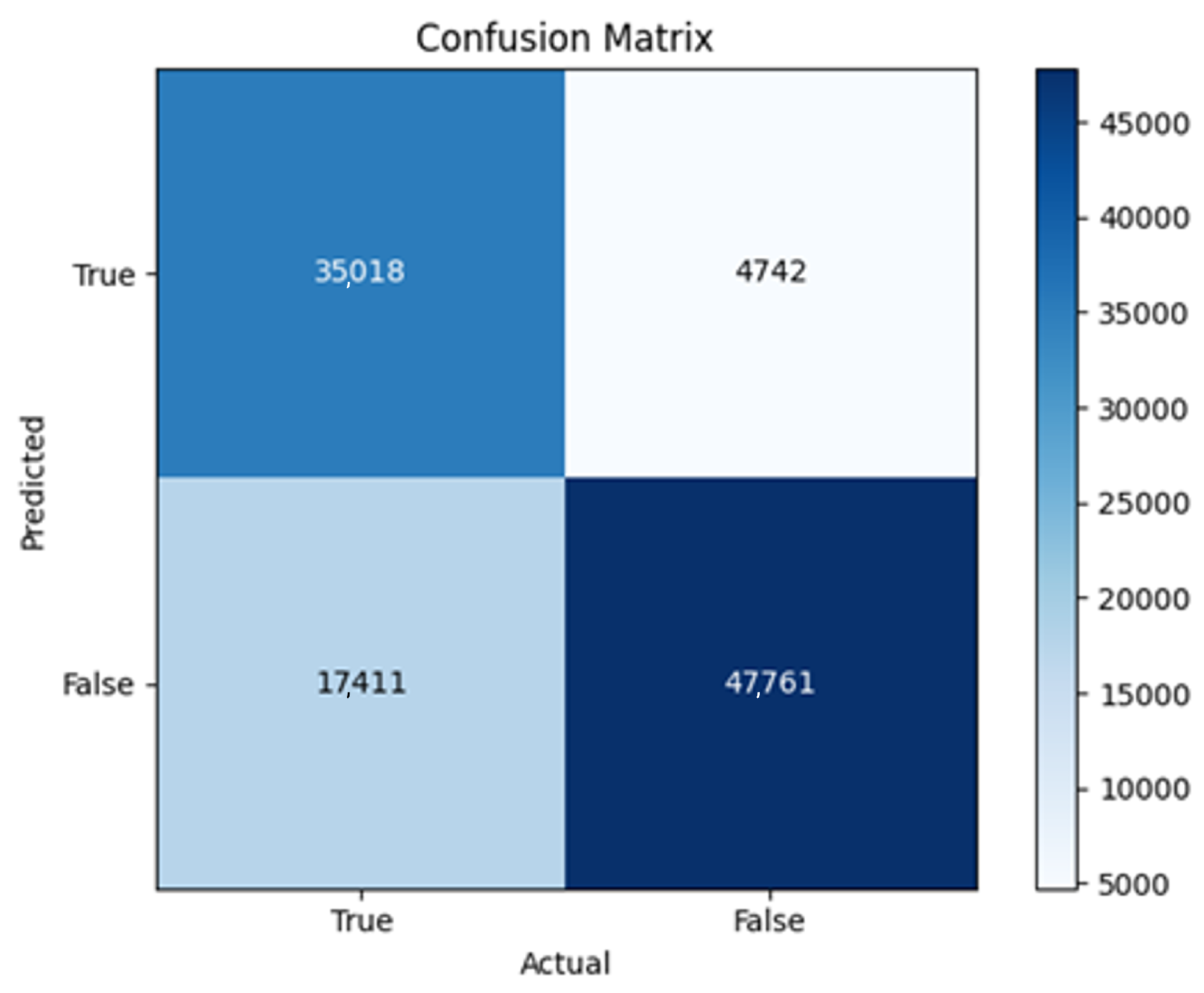

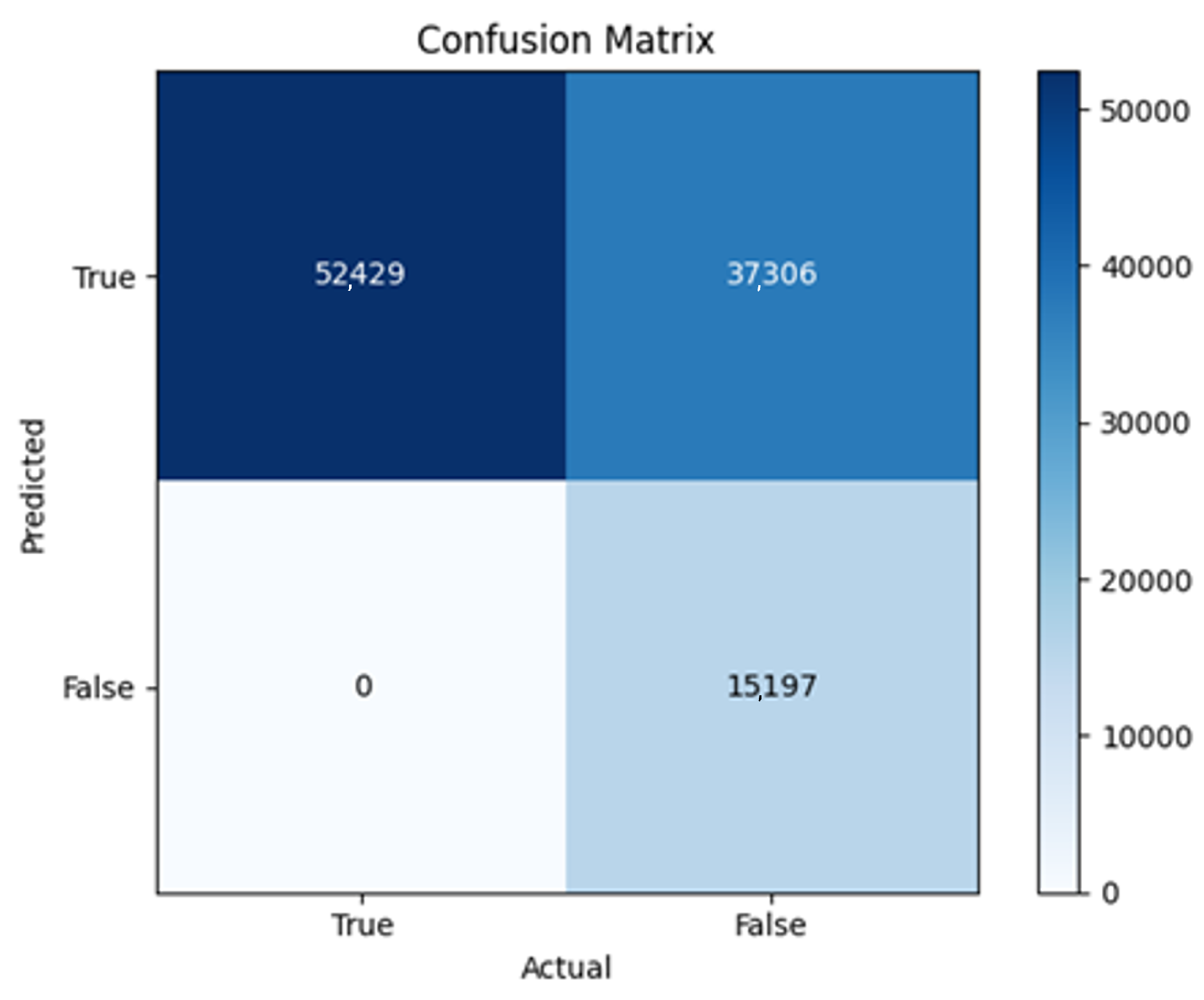

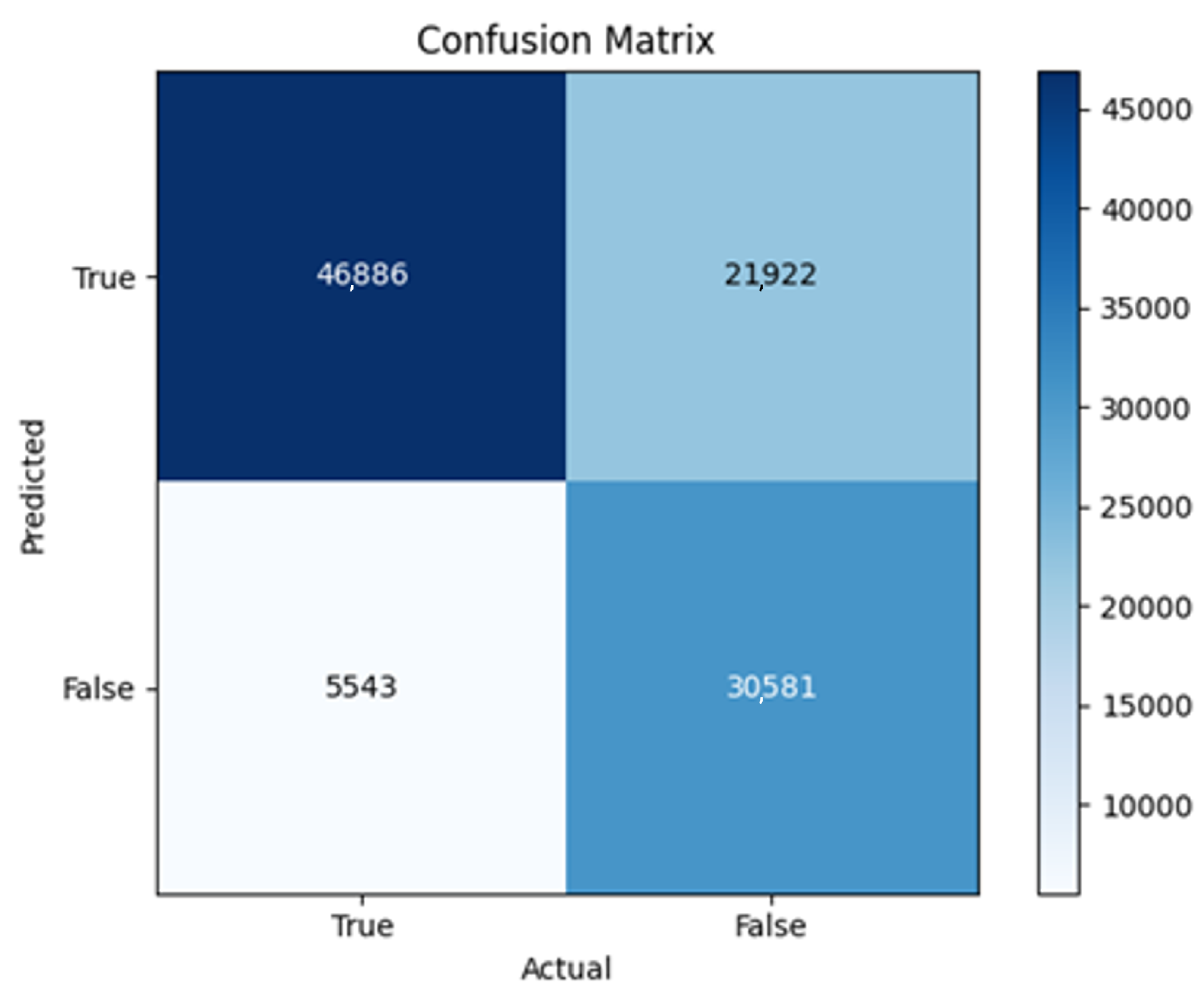

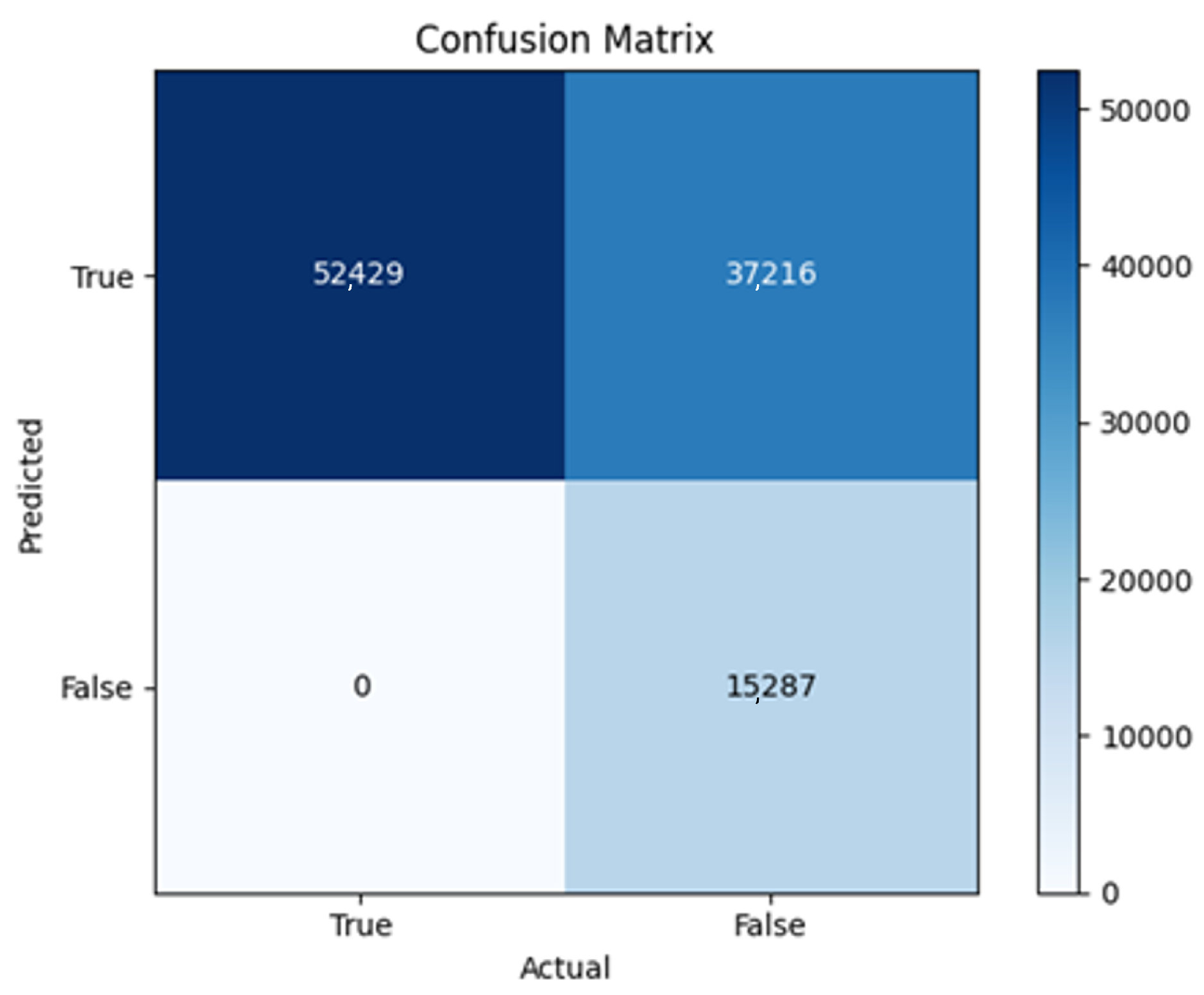

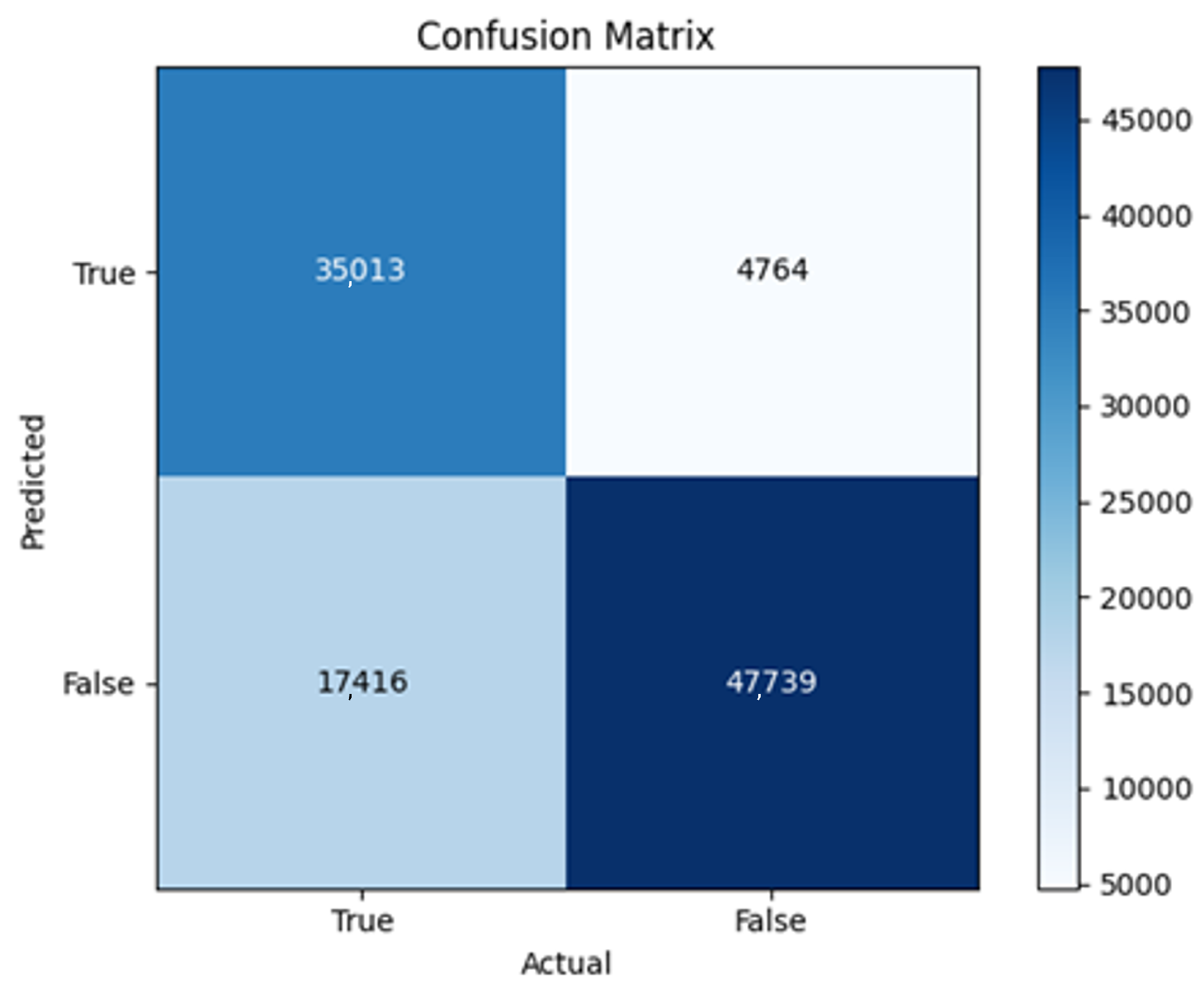

All four one-branch architectures, which rely solely on user metadata, achieved remarkably consistent performance despite varying depth and regularization. Across optimizers, SGD consistently yielded stable convergence (72–79 epochs) with ≈79% accuracy and an MCC of ≈0.595, while Adam frequently collapsed into trivial solutions. Notably, in F2-Adam and F3-Adam, it predicted nearly all samples as fake. RMSprop showed fast convergence but minor overfitting tendencies, and Adadelta often failed to stabilize within 200 epochs, sometimes producing high false positive rates (e.g., F4-Adadelta: FP = 21,922). Interestingly, increasing model depth or adding dropout (F4) did not significantly improve performance, suggesting that metadata alone contains sufficient signal for robust classification under this setup, and that architectural complexity offers diminishing returns.

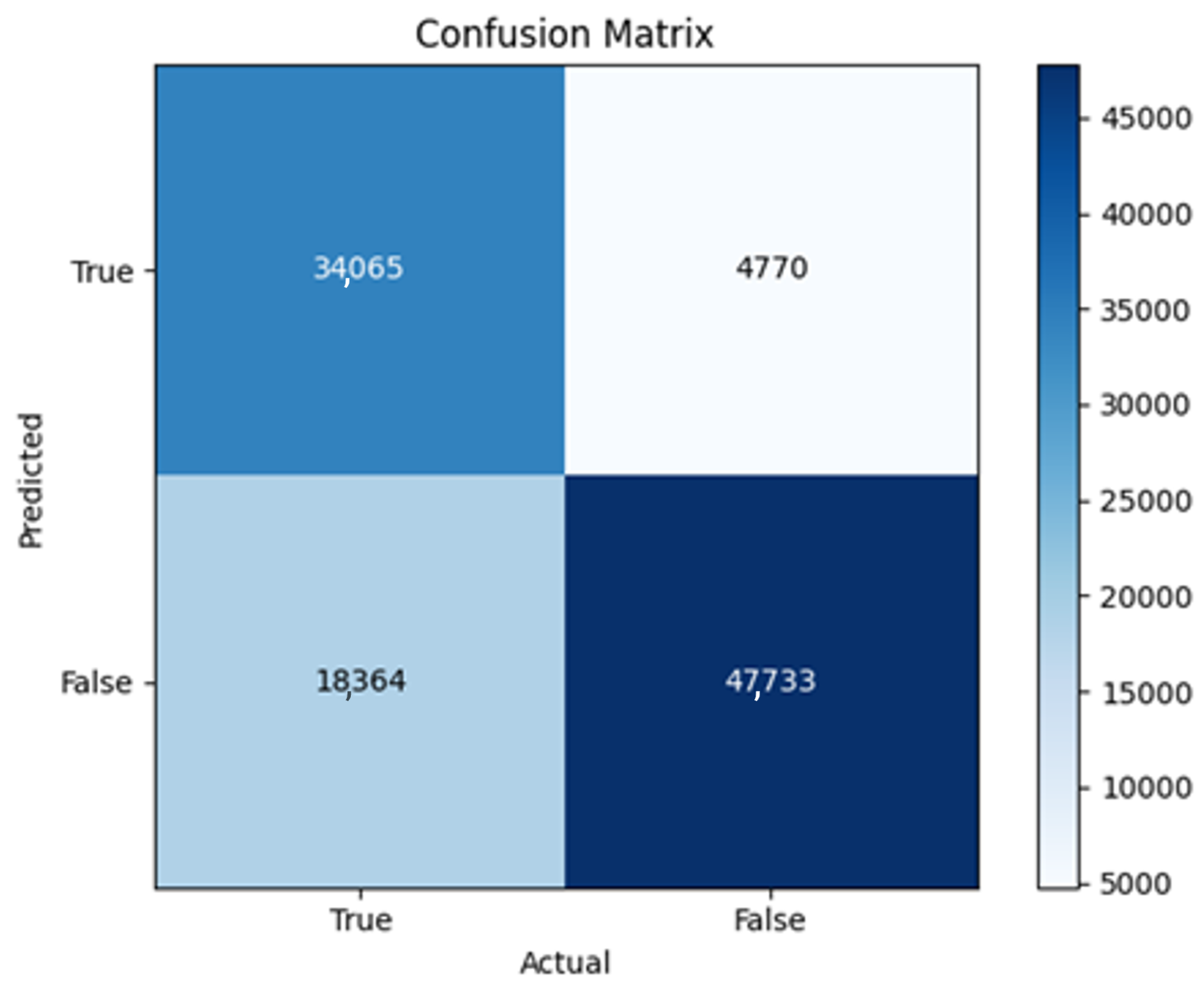

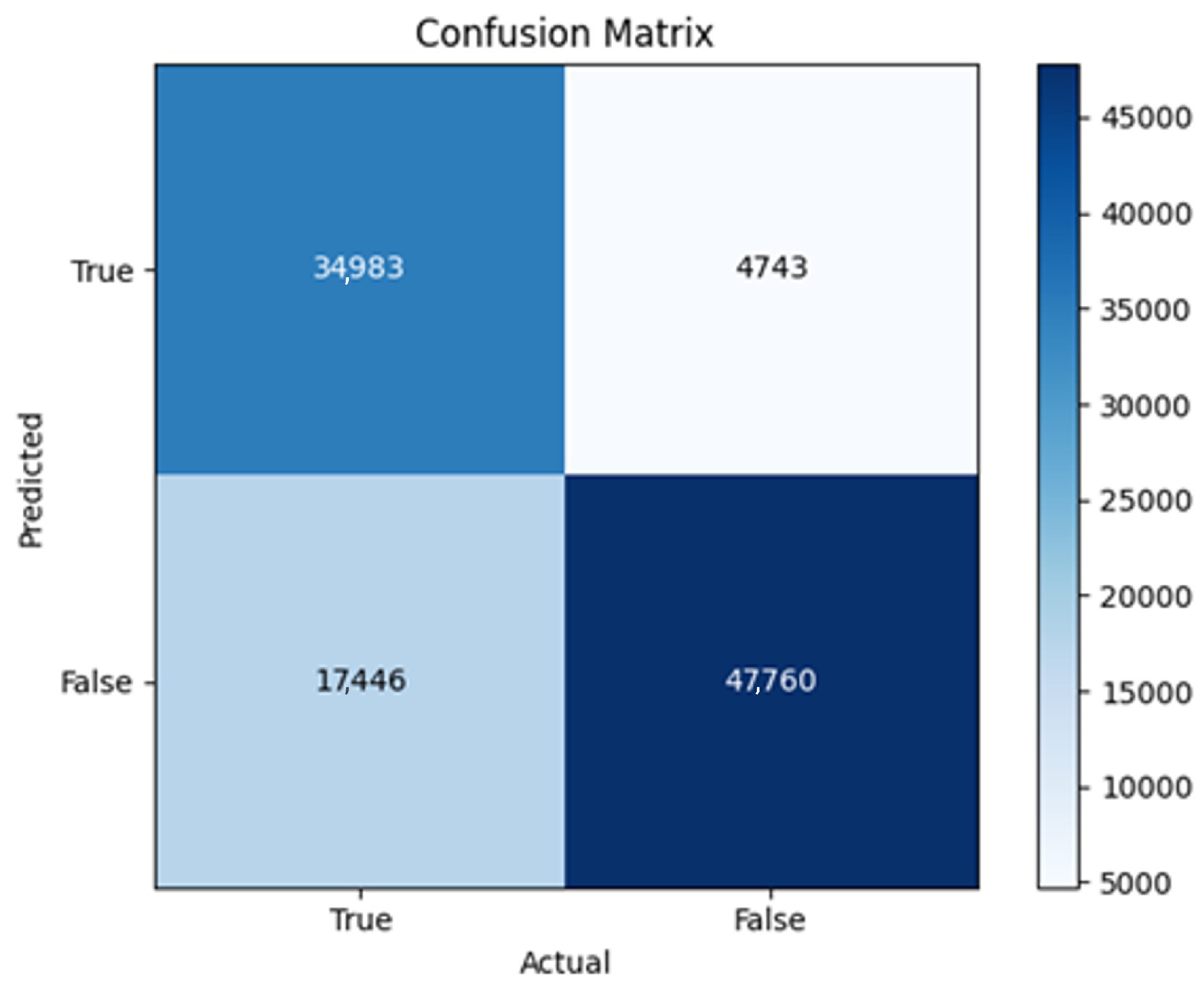

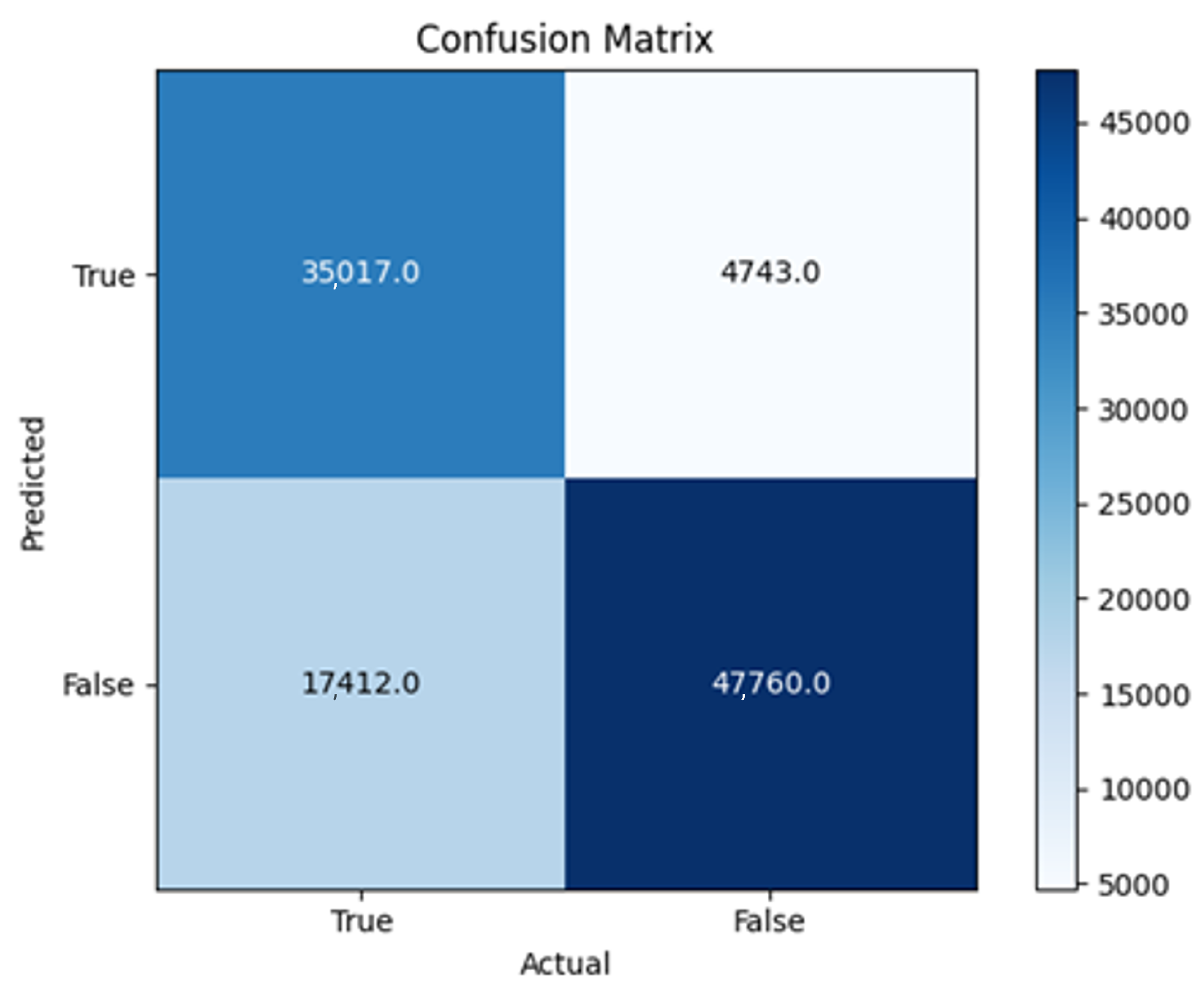

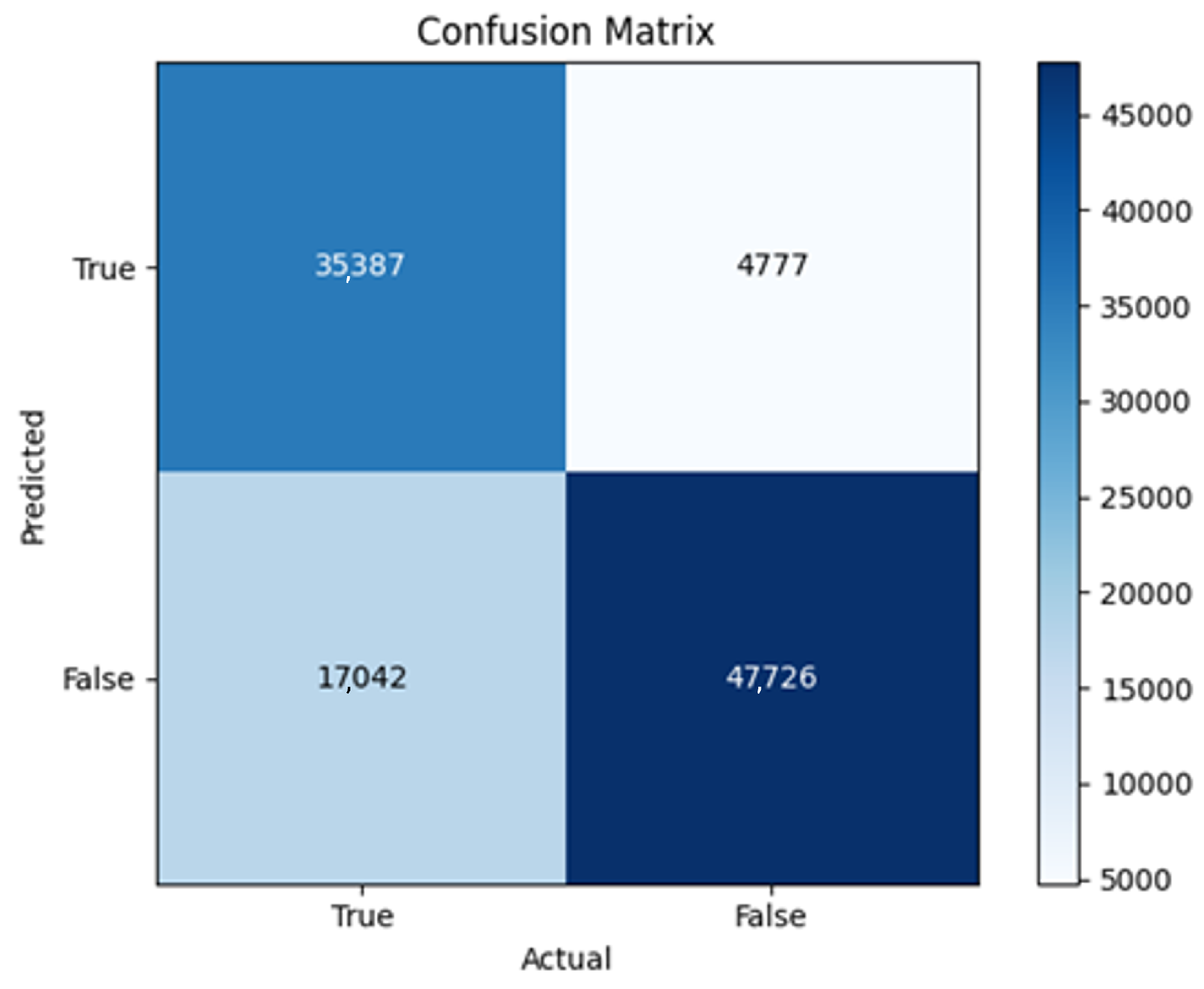

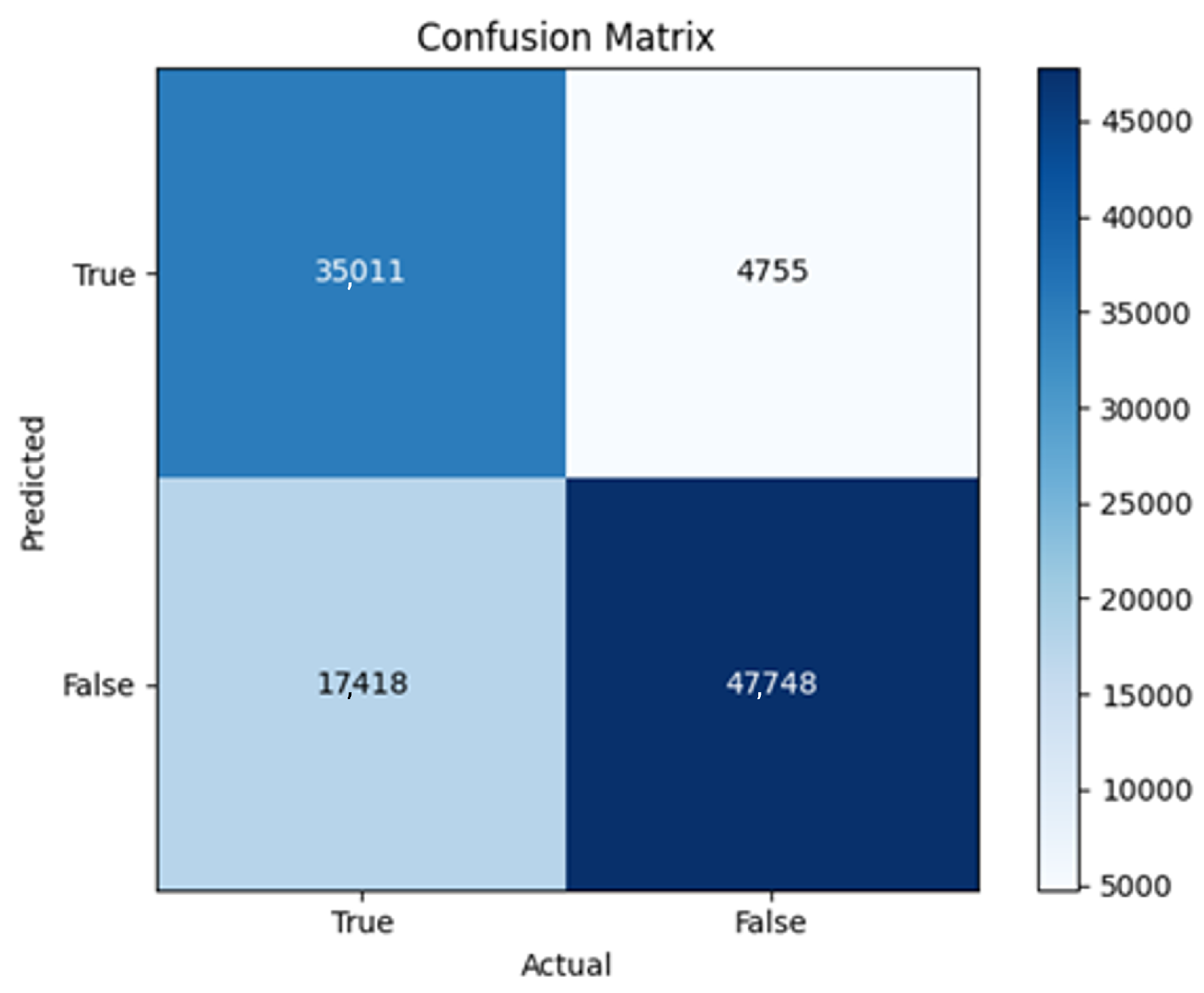

6.2. Two-Branch Models (2B1–2B3)

The three two-branch models, which combine metadata with tweet embeddings, largely mirrored the performance of their one-branch counterparts, reinforcing the study’s central finding that tweet content adds limited value when fused via simple concatenation and encoded with spaCy 300-d vectors. The best-performing model overall was 2B3-SGD, achieving MCC = 0.6007, a marginal gain over metadata-only models, while 2B2 (dense-only text branch) confirmed that shallow networks struggle to extract meaningful signals from static tweet embeddings. Again, SGD proved the most reliable optimizer, whereas Adam exhibited instability (e.g., 2B3-Adam: 100% recall but 58% precision due to aggressive false positives). Notably, removing dropout (2B3) did not degrade performance, suggesting that data augmentation may partially substitute for explicit regularization in this context. Overall, the multimodal architectures did not outperform metadata-only baselines in a statistically meaningful way, underscoring the dominance of metadata features in this experimental framework.

6.3. Discussion

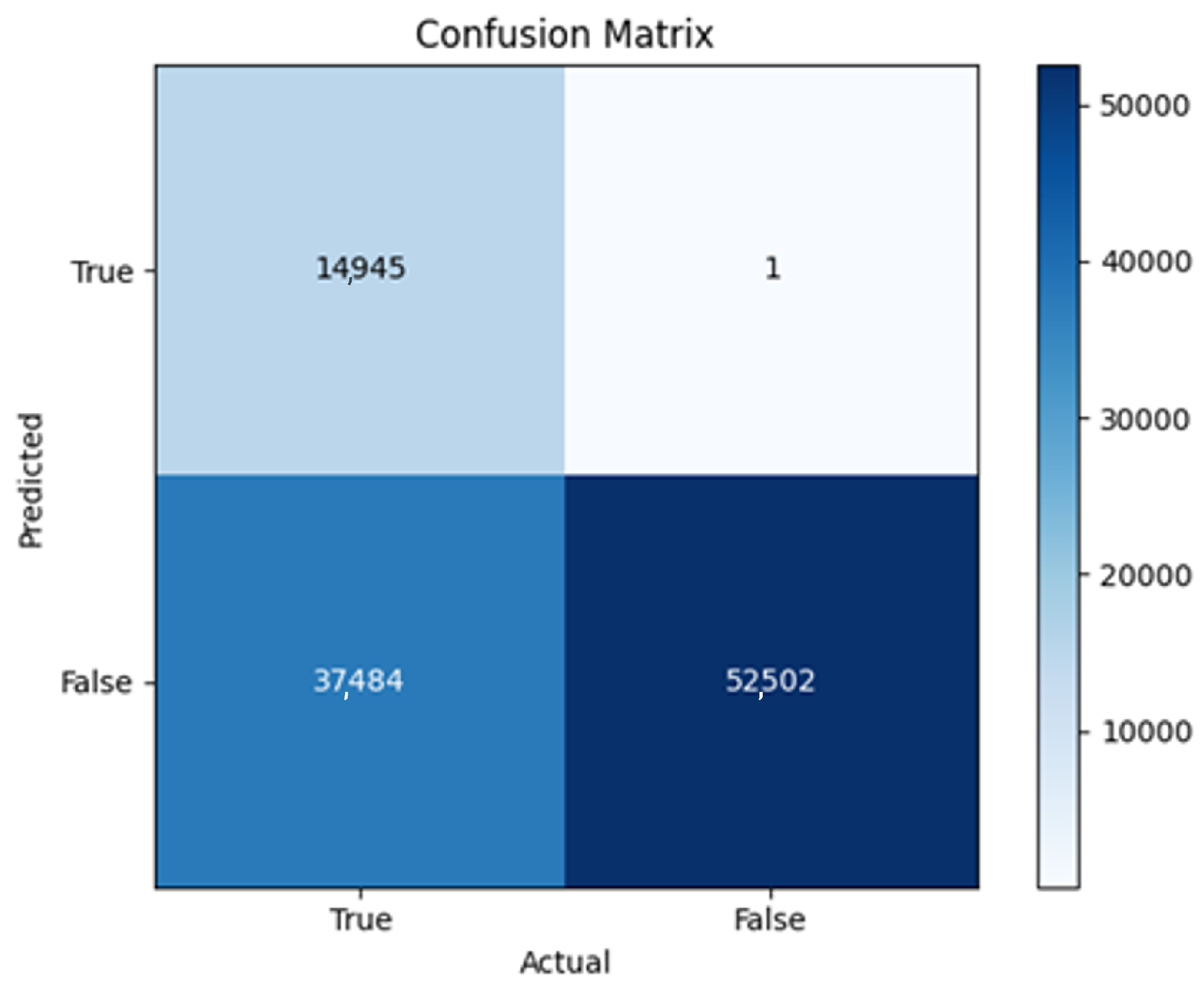

In this study, multiple models were trained using different optimizers, revealing significant performance disparities tied to architectural and data-related vulnerabilities. It should be noted that augmented samples were included in both training and test sets. While this was intended to evaluate robustness under diverse synthetic scenarios, it deviates from standard practices and may have led to optimistic performance estimates, a pattern reflected in the recurrent anomaly of validation loss falling below training loss. While the SGD optimizer consistently demonstrated robust convergence and accuracy, Adam exhibited instability, particularly in models like F2-Adam, F3-Adam, and 2B3-Adam, where it collapsed into trivial solutions (FP = 0, TN = 0) due to sensitivity to skewed gradients. RMSprop and Adadelta performed moderately but struggled with architectures prone to overfitting, such as F4-Adadelta, which misclassified 21,922 legitimate accounts as fake (FP approximately 42% of TP). This could be caused by amplified noise in augmented data.

The observed anomaly of validation loss remaining persistently lower than training loss, most pronounced in F4-Adadelta and 2B1-SGD-LR (0.015), suggests either data leakage during augmentation, where synthetic samples mirrored training patterns, or flawed stratification that skewed class representation. For instance, metadata-only models suffered from overlapping features between highly active legitimate accounts and fake accounts, while some two-branched models failed to harmonize tweet embeddings with metadata, resulting in erratic loss curves. Notably, models lacking dropout, such as 2B3-Adadelta, overfit to honeypot-specific features, and architectures relying on Adam, like F3-Adam, degenerated into predicting all samples as one class, highlighting optimizer-induced problems. These patterns underscore the fragility of deep learning frameworks in detecting fake accounts, where optimizer choice, data representativeness, and feature diversity critically mediate performance. To address these issues, future work should prioritize stratified cross-validation to ensure balanced class representation, refine data augmentation to avoid synthetic biases, and replace unstable optimizers like Adam with SGD or RMSprop in architectures prone to collapse.

The F4-Adadelta model’s extreme FP count (21,922) and TN (30,581) reflect a failure to distinguish nuanced metadata patterns, likely exacerbated by the Social Honeypot dataset’s synthetic biases, which disproportionately represented bot-like behaviors. Similarly, F2-Adam’s confusion matrix (FP = 1, TN = 52,502) reveals a collapse into a trivial solution, where the model minimized loss by assigning nearly all samples to a single class instead of learning a class boundary. This behavior aligns with Adam’s susceptibility to gradient noise in imbalanced datasets, a flaw less pronounced with SGD’s fixed learning rate. Meanwhile, 2B3-SGD’s superior MCC (0.6007) underscores the value of combining tweet embeddings with metadata. The F1-Adadelta model’s prolonged training (199 epochs) without convergence suggests that adaptive optimizers like Adadelta may require stricter early stopping criteria or dynamic learning rate schedules to avoid overfitting to augmented data.
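To make the confusion-matrix figures quoted above easier to interpret, the following minimal Python sketch derives rate-based metrics from raw counts. It is an illustration only and not the evaluation code used in this study: the function names are assumptions, and the TP and FN values in the usage lines are hypothetical placeholders, because the text reports only FP and TN for F4-Adadelta. The MCC and F-beta expressions are the standard definitions.

```python
import math


def confusion_metrics(tp, fp, tn, fn):
    """Derive rate metrics from raw confusion-matrix counts.

    Positive class = fake account, negative class = legitimate account.
    Any ratio with a zero denominator is reported as 0.0 (a convention
    chosen for this sketch).
    """
    def ratio(num, den):
        return num / den if den else 0.0

    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "fpr": ratio(fp, fp + tn),  # false positive rate
        "mcc": ratio(tp * tn - fp * fn, mcc_den),
    }


def f_beta(precision, recall, beta):
    """F-beta score; beta = 1 gives F1 and beta = 2 gives F2."""
    den = beta ** 2 * precision + recall
    return (1 + beta ** 2) * precision * recall / den if den else 0.0


# FP and TN are the F4-Adadelta values quoted in the text. TP and FN are
# hypothetical placeholders, so only the FP/TN-based rates are meaningful.
f4 = confusion_metrics(tp=50_000, fp=21_922, tn=30_581, fn=2_503)
print(round(f4["fpr"], 3), round(f4["specificity"], 3))
```

With the reported FP = 21,922 and TN = 30,581, the false positive rate FP / (FP + TN) evaluates to about 0.418. A model that assigns every sample to one class yields an MCC of 0 under the zero-denominator convention used here, which is why MCC exposes collapsed models that accuracy alone can hide.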

The persistent issue of validation loss dipping below training loss across models points to systemic flaws in data partitioning. Augmented samples from the Social Honeypot dataset, designed to mimic real-world spam, may have inadvertently mirrored training data, creating an artificial overlap between training and validation sets. This overlap allowed models to exploit synthetic patterns rather than generalize. For example, F4-Adadelta’s high FP rate (42% of TP) likely stemmed from overemphasizing follower ratios, a feature easily manipulated in honeypot data.
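The overlap described above can be reduced by partitioning accounts before any augmentation, so that synthetic samples are derived only from training accounts. The sketch below is a minimal illustration under assumed names: the record schema (`account_id`, `followers`), the `jitter_followers` augmenter, and the 20% held-out fraction are all hypothetical and do not reproduce the augmentation procedure used in this study.

```python
import random


def split_then_augment(records, augment, test_fraction=0.2, seed=0):
    """Partition by account first, then augment only the training side.

    records: list of dicts, each with an "account_id" key (assumed schema).
    augment: callable mapping one record to a list of synthetic records.
    """
    account_ids = sorted({r["account_id"] for r in records})
    rng = random.Random(seed)
    rng.shuffle(account_ids)
    n_test = max(1, int(len(account_ids) * test_fraction))
    test_ids = set(account_ids[:n_test])

    train = [r for r in records if r["account_id"] not in test_ids]
    test = [r for r in records if r["account_id"] in test_ids]

    # Synthetic samples are generated from training accounts only, so the
    # held-out set contains no augmented records and no shared accounts.
    synthetic = [s for r in train for s in augment(r)]
    return train + synthetic, test


def jitter_followers(record):
    """Hypothetical augmenter: one copy with a 10% higher follower count."""
    copy = dict(record, followers=int(record["followers"] * 1.1))
    copy["synthetic"] = True
    return [copy]


accounts = [{"account_id": i, "followers": 100 * (i + 1)} for i in range(10)]
train_set, test_set = split_then_augment(accounts, jitter_followers)
print(len(train_set), len(test_set))
```

Splitting on `account_id` rather than on individual rows also keeps every record of a given account on one side of the partition, which is the same idea as the grouped cross-validation proposed as future work.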

The F3-Adam, F2-Adam, and F4-Adam models’ inability to converge, halting after 7–9 epochs, illustrates Adam’s instability in sparse-feature environments. Metadata features like “listed count” or “favorites count,” which lack clear discriminatory power, may have produced noisy gradients, destabilizing Adam’s adaptive learning rate. In contrast, SGD’s fixed rate allowed gradual convergence in models like 2B3-SGD, which balanced tweet and metadata signals effectively. The 2B2-SGD model’s reliance on dense layers for tweet processing, instead of LSTM, highlights another limitation: shallow networks struggle to model sequential dependencies in text, reducing tweet data’s utility. This aligns with the study’s broader finding that combining modalities (text + metadata) did not always boost performance, likely due to suboptimal feature fusion and insufficient text preprocessing.

It is important to note that our findings, showing that tweet content provides limited improvement over metadata alone, are contingent on our specific design choices: (1) the use of static SpaCy word vectors for tweet representation, which lack contextual awareness compared to transformer-based embeddings, and (2) a simple concatenation-based fusion strategy that does not model cross-modal interactions. More recent works such as [29] have demonstrated that advanced fusion mechanisms, such as gated units or attention, can better exploit complementary signals between text and metadata. Therefore, our results should not be interpreted as evidence that tweet content is inherently uninformative, but rather that its utility depends critically on the quality of text encoding and fusion architecture.
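The difference between concatenation and a gated unit can be illustrated with a small dependency-free sketch. It is an assumption-laden simplification and does not reproduce the mechanism of [29] or the models of this study: the gate uses one weight per dimension instead of full weight matrices, both inputs are assumed to be already projected to the same length, and all names are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def concat_fusion(text_vec, meta_vec):
    """Concatenation: the two modalities are stacked without interacting."""
    return list(text_vec) + list(meta_vec)


def gated_fusion(text_vec, meta_vec, w_text, w_meta, bias):
    """Element-wise gated fusion (simplified, per-dimension weights).

    g_i     = sigmoid(w_text_i * text_i + w_meta_i * meta_i + bias_i)
    fused_i = g_i * text_i + (1 - g_i) * meta_i
    In a trained model the weights and bias would be learned parameters.
    """
    lengths = {len(text_vec), len(meta_vec), len(w_text), len(w_meta), len(bias)}
    if len(lengths) != 1:
        raise ValueError("all inputs must have the same length")
    fused = []
    for t, m, wt, wm, b in zip(text_vec, meta_vec, w_text, w_meta, bias):
        g = sigmoid(wt * t + wm * m + b)
        fused.append(g * t + (1.0 - g) * m)
    return fused


text = [1.0, 3.0]
meta = [3.0, 1.0]
zeros = [0.0, 0.0]
print(concat_fusion(text, meta))
print(gated_fusion(text, meta, zeros, zeros, zeros))
```

With zero weights the gate sits at 0.5 and the output is the plain average of the two inputs. Once the weights are learned, the gate can shift toward whichever modality is more informative for a given sample, an input-dependent weighting that a fixed concatenation does not provide.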

Finally, the F1-SGD-LR (0.001) model’s erratic learning rate reduction (from 0.001 to 1.5 × 10⁻⁷) without performance gains underscores the need for adaptive scheduling tailored to dataset characteristics. Overly aggressive rate decay may have trapped the model in shallow local minima, while insufficient decay in F4-SGD-LR (0.001) prolonged training without improving generalization. These findings collectively emphasize that robust fake account detection requires not only sophisticated architectures but also careful optimizer selection, data curation, and validation strategies to mitigate biases and synthetic artifacts inherent in augmented datasets.
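One way to keep a learning rate reducer from decaying to values as small as those reported above is to bound it with a floor. The sketch below is a minimal, framework-free illustration of plateau-based reduction in the spirit of the `ReduceLROnPlateau` callback in Keras; whether the study used that callback is not stated, and the `factor`, `patience`, `min_delta`, and `min_lr` values shown are assumptions chosen for illustration.

```python
def reduce_on_plateau(val_losses, initial_lr=1e-3, factor=0.1,
                      patience=3, min_delta=1e-4, min_lr=1e-6):
    """Return the learning rate used at each epoch.

    The rate is multiplied by `factor` after `patience` consecutive epochs
    without an improvement of at least `min_delta` in validation loss, and
    it is never allowed to drop below `min_lr`.
    """
    lr = initial_lr
    best = float("inf")
    wait = 0
    schedule = []
    for loss in val_losses:
        schedule.append(lr)
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                lr = max(lr * factor, min_lr)  # the floor bounds the decay
                wait = 0
    return schedule


# Hypothetical validation losses: a short improvement, then a long plateau.
losses = [1.0, 0.9] + [0.9] * 20
rates = reduce_on_plateau(losses)
print(rates[0], rates[-1])
```

A larger `patience` or a `factor` closer to 1 makes the decay less aggressive, while `min_lr` guarantees that a long plateau cannot shrink the step size indefinitely.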

7. Conclusions and Future Works

This work highlights the significance and widespread influence of social media, along with its implications and potential threats, and emphasizes the importance of identifying abnormal actors on social media platforms. Its main focus is the detection of fake accounts on Twitter using a binary classification approach. Through an extensive review of related works and surveys, the approaches and techniques employed in previous studies were examined, together with the limitations of the current literature that this study aims to overcome. These shortcomings include limited scope, dependency on historical data, potential evasion by fraudulent actors, costly implementation, lack of comprehensive features, limited generalizability, lack of comparative analysis, and incomplete coverage of spam detection. By recognizing and addressing these limitations, this study seeks to contribute to the advancement of fake account detection on social media platforms, particularly on Twitter. While our approach does not match the architectural complexity or input modality richness of recent state-of-the-art frameworks, it offers a lightweight, reproducible, and deployment-friendly alternative that achieves competitive performance using only user metadata and raw tweet text, without requiring graph construction, external APIs, or extensive computational resources.

To tackle the problem, a dataset was collected from diverse sources and perspectives and carefully prepared. To cover a wide range of real-life scenarios, data augmentation techniques were applied. The dataset was structured into two formats, matching the requirements of the two types of models. The first format consisted of user metadata alone, while the second incorporated both user metadata and one of the user’s tweets. Combining numerical and textual data in this way has been reported by several studies to be more effective for this task.

Drawing upon deep learning techniques, seven different architectures were proposed and implemented. These models were tested using four different optimizers: SGD, RMSprop, Adam, and Adadelta. Furthermore, to explore the impact of the learning rate, models utilizing the SGD optimizer were trained twice, incorporating a learning rate reducer. The implemented models comprised four single-branched models that utilized only user metadata, and three two-branched models that incorporated both user metadata and tweets.

To assess the performance of each model, a range of metrics was employed, including loss, accuracy, area under the curve (AUC), confusion matrix, precision, recall, specificity, F1 and F2 scores, and the Matthews Correlation Coefficient (MCC). These metrics offered comprehensive insights into the models’ performance and effectiveness in classification tasks. Contrary to the claims reported in previous studies, the incorporation of tweets alongside user metadata did not significantly impact the models’ ability to classify fake accounts. The SGD optimizer consistently demonstrated good performance, while RMSprop and Adadelta also yielded favorable results in certain models. However, the Adam optimizer exhibited unstable behavior and struggled to converge.

Overall, SGD, with or without a learning rate reducer, emerged as the most effective optimizer in the majority of cases, whereas Adam was the least reliable. RMSprop and Adadelta performed well in certain models but showed limitations in others. These results indicate that it is crucial to tune the learning rate and to closely monitor loss, accuracy, and convergence during training.

Considering the similarities observed in the results of this study and the scope for improvement, several avenues for future work can be explored, including:

Exploring additional datasets and features: Incorporating diverse datasets and additional features may enhance the accuracy and robustness of classification.

Conduct feature importance analysis (e.g., SHAP, LIME) to quantify the contribution of individual metadata and textual features to model predictions.

Re-evaluate all models on a strictly unaugmented test set to obtain unbiased generalization metrics.

Implement stratified, grouped K-fold cross-validation (grouped by user account) to ensure robust performance estimation and quantify fold-level variance.

Generalization to different platforms: Extend the study to encompass multiple social media platforms, as each platform may have unique characteristics and challenges regarding fake account detection. Investigate the transferability of the models and their performance on different platforms.

Investigate the use of more advanced deep learning architectures, such as transformers, and how such architectures can improve classification performance.

Explore transformer-based text representations to assess whether richer semantic encoding can unlock the value of tweet content in multimodal fake account detection.

Use of transfer learning: Apply models pretrained on related tasks to fake account detection, leveraging existing models to improve classification performance.

Additional training and fine-tuning: Focus on further refining the proposed models that exhibit a good fit and classification ability. Emphasize additional training and fine-tuning strategies, particularly for models that have successfully converged and completed all training epochs without reaching a fixed and stable loss.

Conduct repeated experiments across multiple random seeds to compute confidence intervals for all metrics, and include strong non-deep-learning baselines (e.g., XGBoost, Random Forest) for comprehensive comparison.

Explore advanced synthetic data generation methods, such as GANs or diffusion-based models, to simulate evolving fake account behaviors and thereby enhance dataset diversity and model robustness against emerging threats.

Evaluate model robustness via cross-dataset testing, and ablate provenance-sensitive features to isolate generalizable signals.

Explore emerging representation learning paradigms, such as state-space sequence models (e.g., DyG-Mamba [58,59]) and dynamic graph clustering techniques [60,61], for enhanced modeling of temporal and relational patterns in social media accounts.

Extend the framework to incorporate visual modalities, such as profile pictures and posted images, using CNN and transformer-based architectures for image analysis and segmentation (e.g., [62,63]).
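As an illustration of the repeated-seed evaluation proposed in the list above, the following sketch summarizes a metric measured across several random seeds as a mean with a confidence interval. It is a minimal example under stated assumptions: the per-seed MCC values are hypothetical placeholders and not results of this study, and the interval uses a normal approximation, which understates the width when only a few seeds are available (a Student t critical value would be more appropriate in that case).

```python
import math
import statistics


def mean_confidence_interval(values, confidence=0.95):
    """Normal-approximation confidence interval for the mean of per-seed scores.

    Returns (mean, lower, upper). Requires at least two values, since the
    sample standard deviation is undefined for a single run.
    """
    if len(values) < 2:
        raise ValueError("at least two runs are required")
    mean = statistics.fmean(values)
    sem = statistics.stdev(values) / math.sqrt(len(values))
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean, mean - z * sem, mean + z * sem


# Hypothetical MCC values from five training runs with different seeds.
mcc_per_seed = [0.60, 0.62, 0.58, 0.61, 0.59]
mean, lower, upper = mean_confidence_interval(mcc_per_seed)
print(f"MCC = {mean:.3f} (95% CI {lower:.3f} to {upper:.3f})")
```

Reporting the interval alongside the mean makes it possible to judge whether differences between optimizers or architectures exceed run-to-run variation.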

By pursuing these future directions, the field of fake account detection can continue to advance, leading to more effective and reliable approaches for identifying non-real human actors on social media platforms.