Source Robust Non-Parametric Reconstruction of Epidemic-like Event-Based Network Diffusion Processes Under Online Data

Abstract

1. Introduction

- Problem Formulation: A novel online network diffusion reconstruction model is proposed that improves predictions on real-world online temporal networks.

- Simple Approach: The dynamic costs of high-risk source candidates are re-evaluated at each step, so source-opening costs vary over time. This makes the trade-off between detours and redundant sources robust and controllable as the reconstruction spans the activated nodes over time.

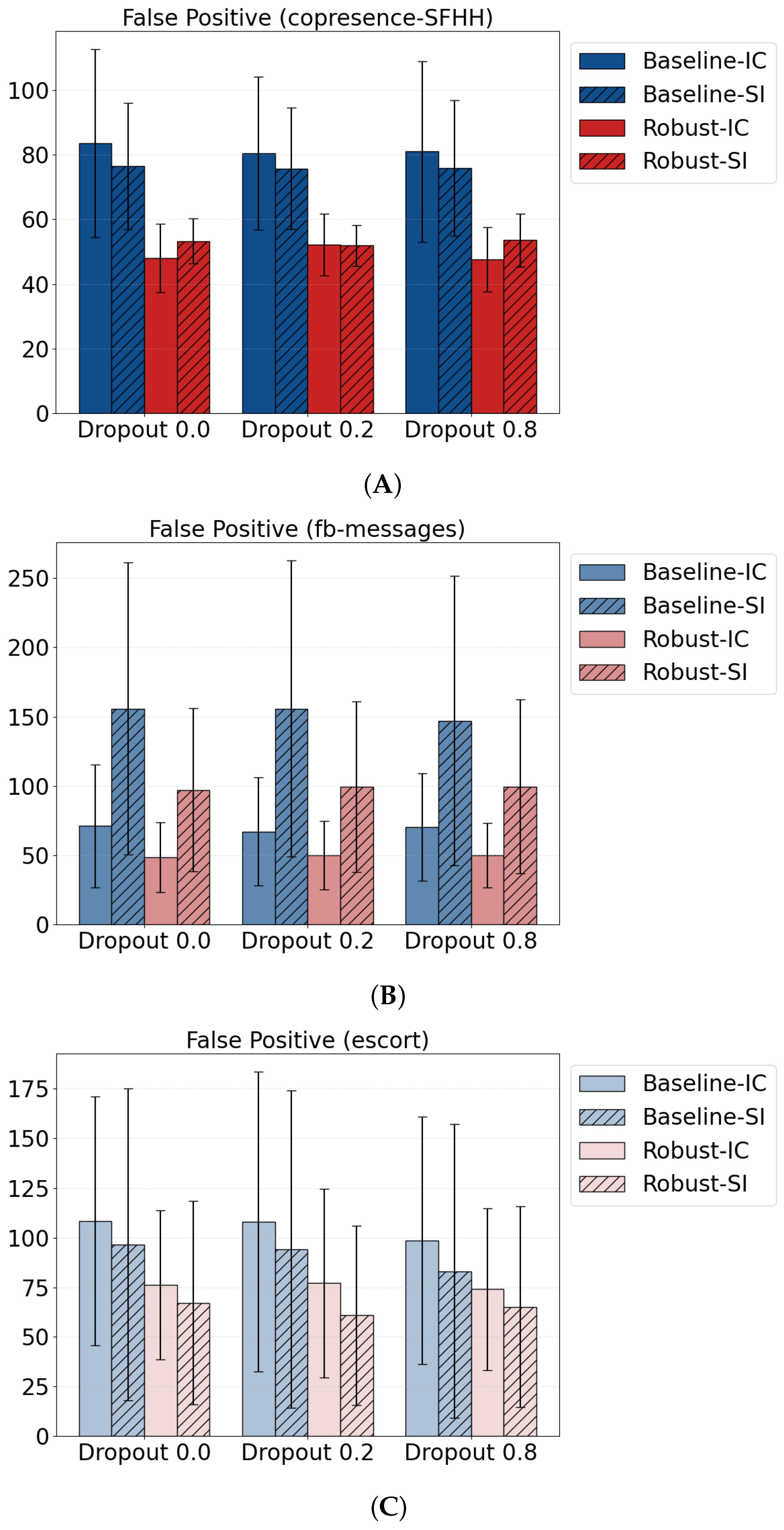

- Error Bounds: This study establishes improved theoretical regret bounds on false positives relative to the baseline implementation of the approximation algorithm. The improvement comes from adjusting weights and costs so that the adjusted tours reduce errors, as reflected in the second, subtracted term of the bound shown below; the resulting method is named Robust-Ada-Source. The general improved false-positive error bounds were derived through a regret analysis of the proposed approach.

- Linear Model Improvement: Assuming that positive cases follow logistic growth, the additional errors without weight adjustment grow linearly and are always greater than or equal to those with adjustment, provided that the regularization parameter satisfies the condition shown below.

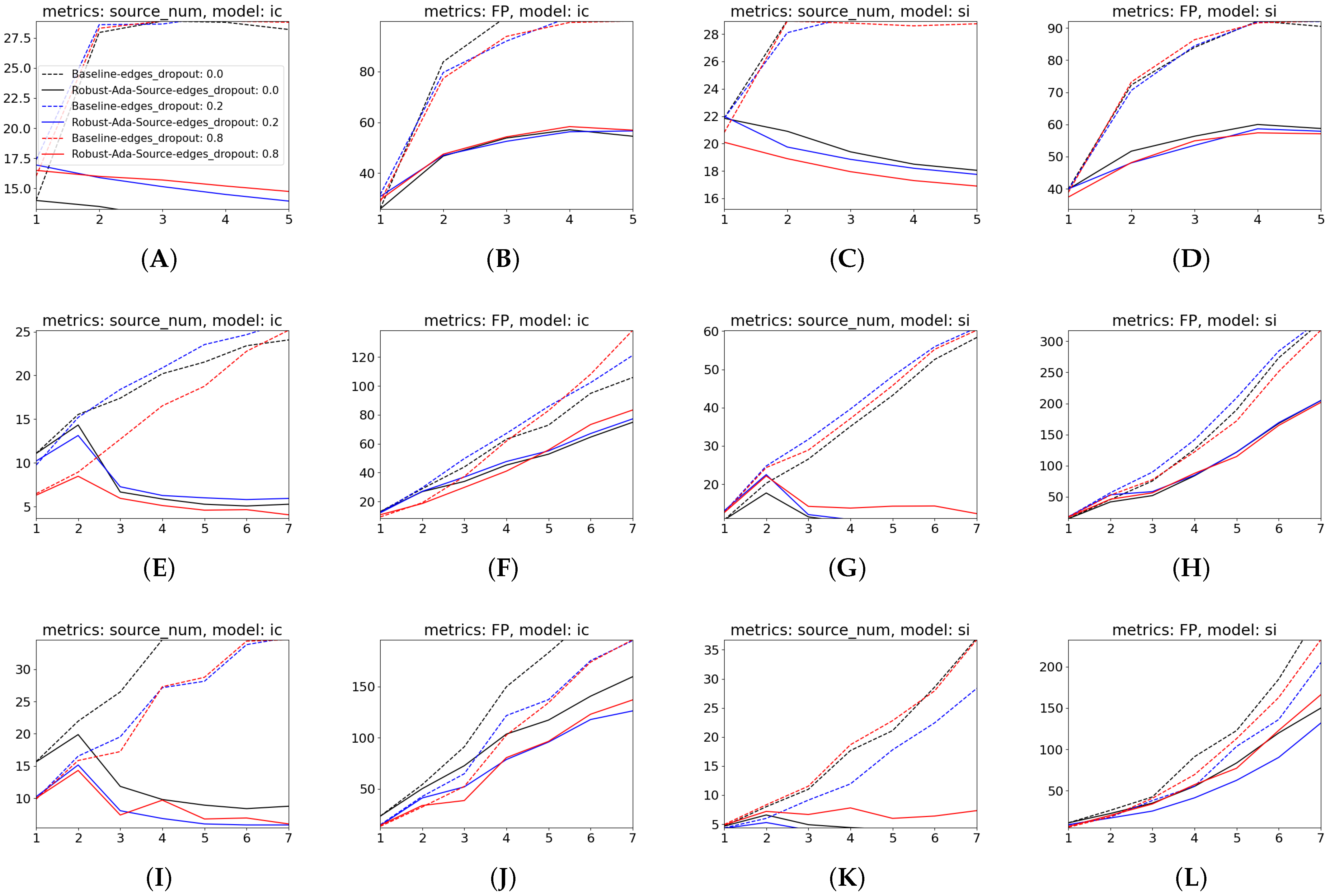

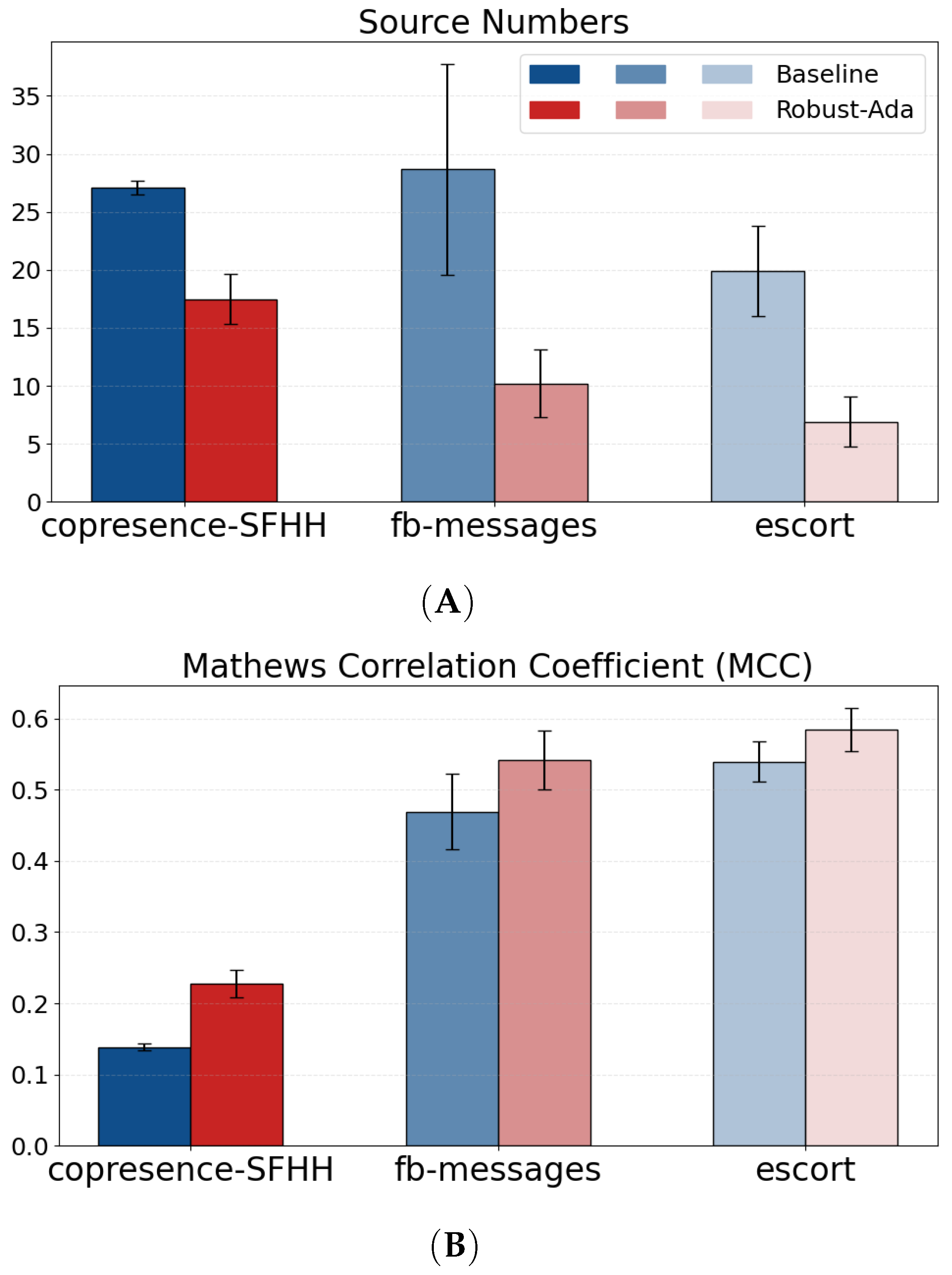

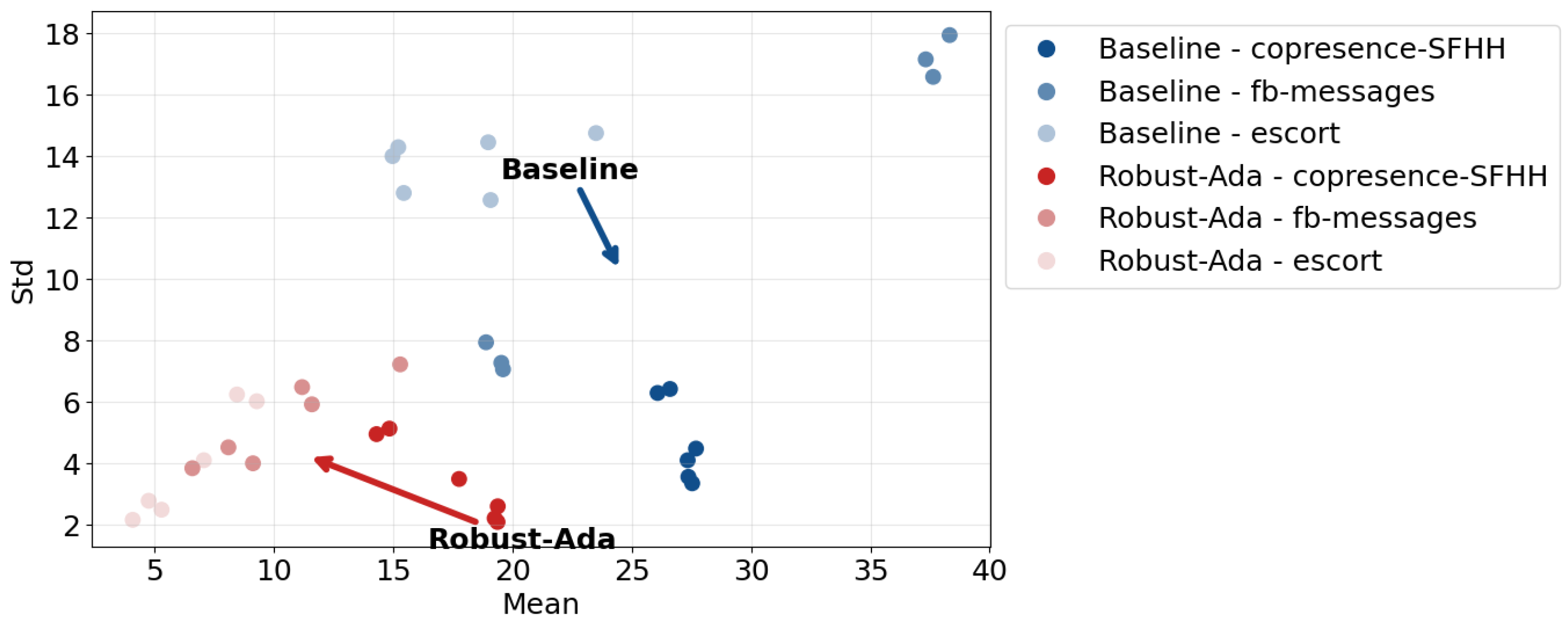

- Empirical Validation: Experiments show that the proposed approach is more robust and consistent in both offline and online settings. Results indicate an average twofold reduction in redundant sources and a 50% decrease in source variance. Additionally, by reducing false positives in the presence of missing edges, the approach achieved a 5% increase in the Matthews correlation coefficient (MCC) across networks of various scales.

2. Related Works

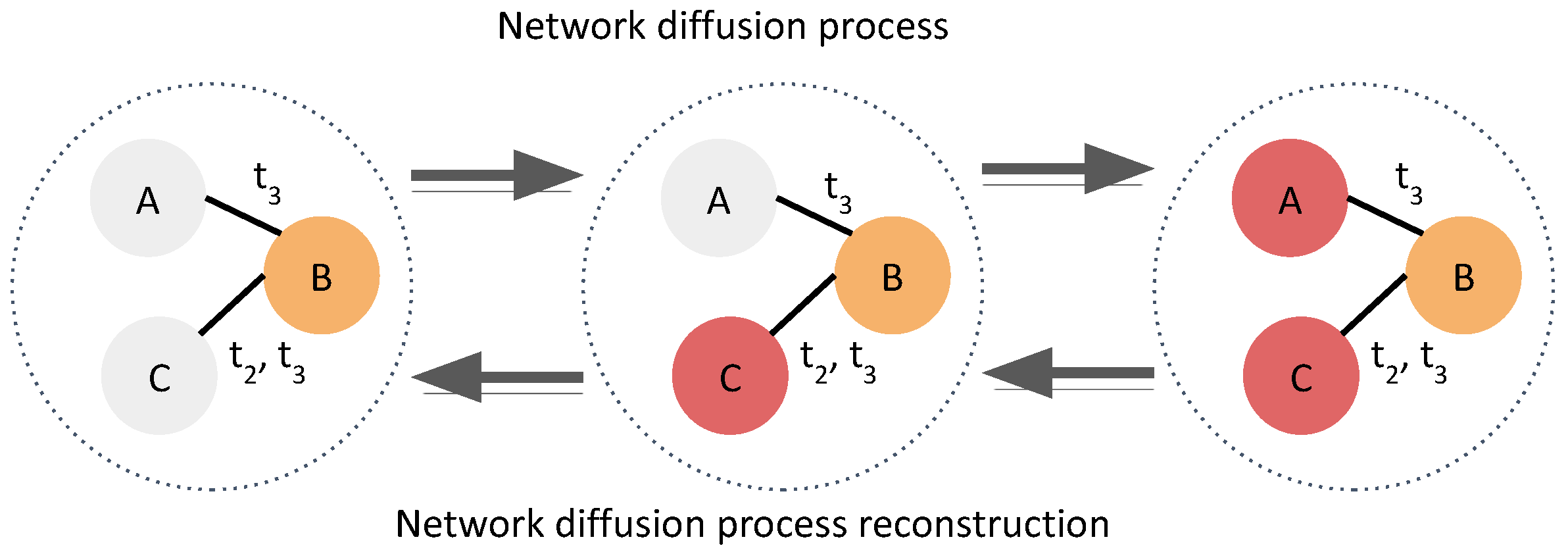

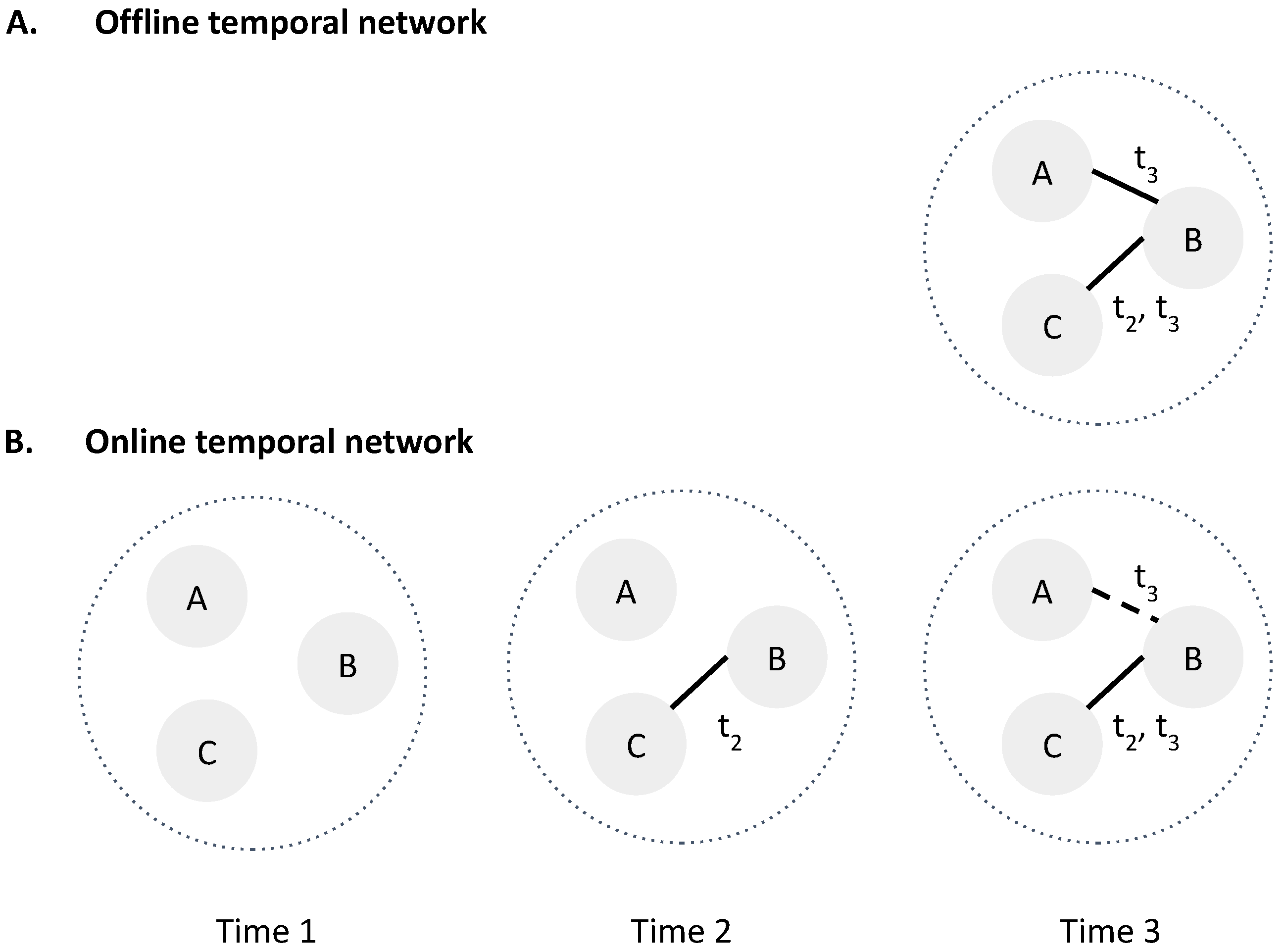

3. Materials and Methods

3.1. Notations

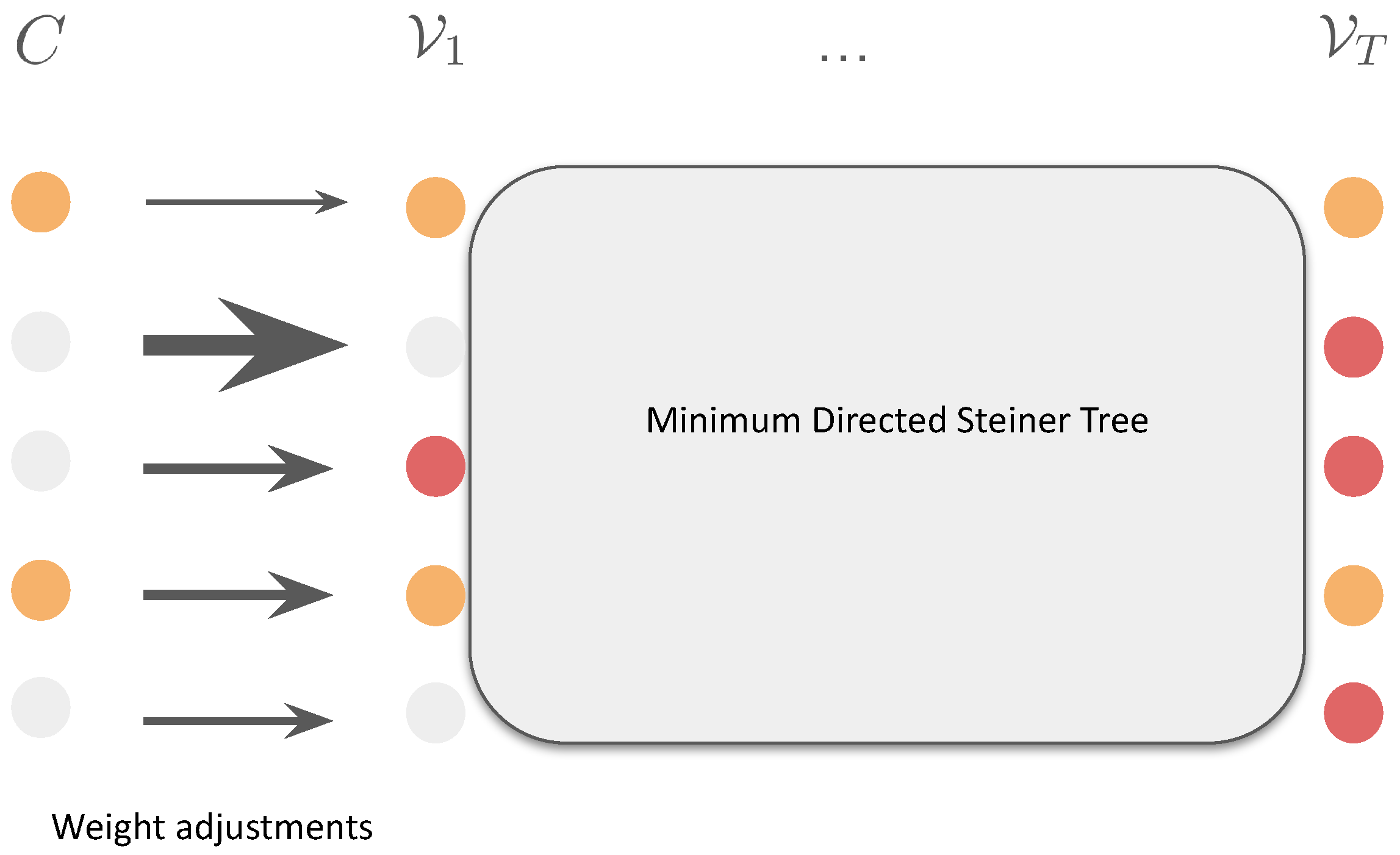

3.2. Minimum Directed Steiner Tree
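As a point of reference for this section, the simplest directed Steiner heuristic can be sketched in Python. It assumes a hypothetical dummy root `__ROOT__` joined to every candidate source by an edge carrying that candidate's source-opening cost, and keeps the union of shortest root-to-report paths (the level-1 tree in the terminology of Charikar et al.). The function names and the adjacency-dict input format are illustrative assumptions, not the authors' implementation; reports unreachable from every candidate (e.g., after missing edges) are simply skipped.

```python
import heapq
import itertools


def dijkstra(graph, src):
    """Single-source shortest paths on a dict-of-lists digraph."""
    dist = {src: 0.0}
    parent = {src: None}
    tie = itertools.count()  # tie-breaker so nodes never need to be compared
    heap = [(0.0, next(tie), src)]
    while heap:
        d, _, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale heap entry
        for v, w in graph.get(u, []):
            nd = d + w
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                parent[v] = u
                heapq.heappush(heap, (nd, next(tie), v))
    return dist, parent


def level1_steiner(adj, source_cost, reports, root="__ROOT__"):
    """Union of shortest dummy-root-to-report paths (level-1 heuristic).

    adj: {node: [(neighbor, weight), ...]} directed graph.
    source_cost: {candidate: cost of the dummy-root -> candidate edge}.
    reports: nodes that must be covered.
    Returns (opened sources, tree edges, total tree cost).
    """
    graph = {u: list(vs) for u, vs in adj.items()}
    graph[root] = list(source_cost.items())  # hypothetical dummy root
    dist, parent = dijkstra(graph, root)

    sources, edges = set(), set()
    for r in reports:
        if r not in dist:
            continue  # unreachable report, e.g. after missing edges
        node = r
        while parent[node] is not None:  # walk back up to the root
            edges.add((parent[node], node))
            if parent[node] == root:
                sources.add(node)  # first hop below the root is a source
            node = parent[node]

    # Cheapest weight per arc, so parallel arcs are priced as Dijkstra used them.
    weight = {}
    for u, vs in graph.items():
        for v, w in vs:
            weight[(u, v)] = min(w, weight.get((u, v), float("inf")))
    total = sum(weight[e] for e in edges)  # shared edges are paid once
    return sources, edges, total
```

Because the paths come from a single shortest-path tree, reports that share a prefix reuse it, so the total never exceeds the sum of the individual root-to-report distances.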

3.3. Our Weight-Adjustment Framework: Robust-Ada-Source

Algorithm 1. Online Algorithm

Input: C Parameter: , T,

3.4. Our Level-2 Approximation Heuristic
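The level-2 idea can be illustrated with a simplified greedy in the style of Charikar et al.: repeatedly pick the candidate source and the k nearest uncovered reports whose combined cost per covered report (density) is lowest. This sketch restricts intermediate nodes to candidate sources and assumes precomputed candidate-to-report distances; it is a generic variant for illustration, not necessarily the heuristic proposed here. As in the standard analysis, a candidate's opening cost is charged again each time it starts a new bundle.

```python
def level2_greedy(open_cost, dist, reports):
    """Simplified Charikar-style level-2 greedy over candidate sources.

    open_cost: {candidate: dummy-root -> candidate cost}.
    dist: {candidate: {report: shortest-path distance}}; absent = unreachable.
    reports: reports (terminals) to cover.
    Returns a list of (candidate, covered_reports) bundles in pick order.
    """
    uncovered = set(reports)
    bundles = []
    while uncovered:
        best = None  # (density, candidate, chosen reports)
        for c, w in open_cost.items():
            reach = dist.get(c, {})
            # Uncovered reports reachable from c, nearest first.
            ranked = sorted((reach[r], r) for r in uncovered if r in reach)
            total = w
            for k, (d, _) in enumerate(ranked, start=1):
                total += d
                density = total / k  # cost per covered report
                if best is None or density < best[0]:
                    best = (density, c, [r for _, r in ranked[:k]])
        if best is None:
            break  # leftover reports are unreachable from every candidate
        _, c, chosen = best
        bundles.append((c, chosen))
        uncovered.difference_update(chosen)
    return bundles
```

For example, with two candidates of opening cost 4, a source close to two reports is opened once for both (density 3), while a distant third report is served by a second source rather than by a long detour.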

4. Theoretical Results

4.1. General Upper Bound on Additional False Positives

4.2. A Case Study on Logistic Growth with Independent Parameter
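For reference, the standard three-parameter logistic curve and the arithmetic behind a linearly growing error are written out below. The symbols K (final number of positives), r (growth rate), t_0 (inflection time), and the per-step excess ε are illustrative assumptions; the exact parameterization used in this case study is not reproduced here.

```latex
N(t) = \frac{K}{1 + e^{-r\,(t - t_0)}},
\qquad
\frac{dN}{dt} = r\,N(t)\left(1 - \frac{N(t)}{K}\right)

\Delta\mathrm{FP}_t \ge \varepsilon > 0 \;\; \text{for all } t
\quad\Longrightarrow\quad
\sum_{t=1}^{T} \Delta\mathrm{FP}_t \;\ge\; \varepsilon\, T
```

The second line only shows why a constant per-step excess of false positives, once the cumulative report set keeps growing, accumulates linearly in the horizon T.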

5. Experimental Results and Discussion

5.1. Experiments Setup

5.1.1. Temporal Networks

5.1.2. Diffusion Models and False Positive

5.1.3. Comparable Approaches

- 1.

- 2.

- Robust-Ada-Source: the same approximation implemented by Algorithm 1 with the dynamic costs defined in Equation (3).

5.1.4. Cross-Edges Dropout
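The dropout levels reported in Appendix C (0%, 20%, 80%) suggest a perturbation that deletes a fraction of observed contacts. A minimal sketch, assuming each temporal edge (u, v, t) is removed independently with probability `rate` (the exact sampling scheme is not stated here), is shown below; removing a contact also removes its same-time cross arcs in the time-expanded graph of Appendix B.4.

```python
import random


def drop_edges(temporal_edges, rate, seed=None):
    """Keep each (u, v, t) contact independently with probability 1 - rate."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must lie in [0, 1]")
    rng = random.Random(seed)  # seeded for reproducible perturbations
    # rng.random() is in [0, 1), so rate=0 keeps every edge and rate=1 drops all.
    return [e for e in temporal_edges if rng.random() >= rate]


edges = [(i, i + 1, i % 10) for i in range(1000)]
for rate in (0.0, 0.2, 0.8):
    kept = drop_edges(edges, rate, seed=7)
    print(f"dropout {rate:.0%}: kept {len(kept)} of {len(edges)} contacts")
```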

5.1.5. Metrics
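MCC is the headline accuracy metric in this paper. A minimal sketch of the standard formula follows; applying it at the node level (inferred versus truly activated nodes, with all other nodes counted as negatives) is an assumption made for illustration, and returning 0 when a confusion-matrix marginal is empty is a common convention rather than something stated here.

```python
import math


def mcc(tp, tn, fp, fn):
    """Matthews correlation coefficient from confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    if denom == 0:
        return 0.0  # convention when any row or column of the matrix is empty
    return (tp * tn - fp * fn) / denom


def node_mcc(predicted, actual, universe):
    """MCC over a node universe: predicted/actual are sets of positive nodes."""
    predicted, actual = set(predicted), set(actual)
    tp = len(predicted & actual)
    fp = len(predicted - actual)  # false positives, the quantity the bounds target
    fn = len(actual - predicted)
    tn = len(set(universe) - predicted - actual)
    return mcc(tp, tn, fp, fn)
```

Because false positives enter the numerator as fp * fn and every factor of the denominator, trimming spurious sources and their paths can raise MCC even when recall is unchanged.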

5.2. Analysis

5.2.1. Robust-Ada-Source Outperforms All of the Baselines by Controlling Source Numbers over Time Under Various Hypothetical Growths of Positives and Perturbations

5.2.2. Robust-Ada-Source Prefers Bounded Detours to Control Source Numbers Under Missing-Edges Perturbations While Maintaining Decent MCC Scores Against All Baselines

5.2.3. The Increase in MCC Is Attributed to Robustly Controlled False Positive Cases Aligning Well with Theorems 1 and 2: The Method Is Effective Under Offline Settings

5.2.4. Robust-Ada-Source Yields Lower Variance in Source Numbers, Indicating Better Consistency of Sources

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Prerequisites and Setups

1. , where f is a distance function.

2. x spans and obeys the order.

3. Sources of .

4. The number of sources .

Details of Weights Adjustment

Appendix B. Theorems

Appendix B.1. Proof of Theorem 1

Appendix B.2. Proof of Theorem 2

Appendix B.3. Level-2 Is a Approximation

Appendix B.4. Level-2 Approximation Can Be Solved by Linear Programming

1. For each and each , create a node .

2. For each and , add the forward “stay” arc to .

3. For each with , add the same-time cross arcs and to .
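The three construction steps can be sketched in Python. Because the conditions in the steps are partly missing from this text, the sketch assumes that a vertex (v, t) exists for every node and observed timestamp, that stay arcs link consecutive timestamps, and that cross arcs are added for every contact (u, v, t) with u ≠ v observed at a known timestamp; arc weights are omitted. The function name is hypothetical.

```python
def time_expanded_graph(nodes, temporal_edges, timestamps):
    """Return (vertices, arcs) of a time-expanded static graph.

    nodes: iterable of node ids.
    temporal_edges: iterable of (u, v, t) contacts.
    timestamps: observation times (any order; sorted internally).
    """
    ts = sorted(set(timestamps))
    known = set(ts)
    nodes = list(nodes)
    # Step 1: one vertex (v, t) per node and timestamp.
    vertices = {(v, t) for v in nodes for t in ts}
    arcs = set()
    # Step 2: forward "stay" arcs (v, t_i) -> (v, t_{i+1}).
    for v in nodes:
        for t_cur, t_next in zip(ts, ts[1:]):
            arcs.add(((v, t_cur), (v, t_next)))
    # Step 3 (assumed condition: u != v and t observed): same-time cross arcs.
    for u, v, t in temporal_edges:
        if u != v and t in known:
            arcs.add(((u, t), (v, t)))
            arcs.add(((v, t), (u, t)))
    return vertices, arcs
```

Stay arcs only move forward in time, so any path in the static graph respects the temporal order of contacts, which is what lets a static Steiner solver be applied to temporal data.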

Appendix B.5. An Adversarial Scenario Will Always Cause a Linear Error Lower Bound

Appendix C. Additional Experiment Results

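| Dataset | Model | Approach | Dropout | MCC | Sources |
|---|---|---|---|---|---|
| Copresence–SFHH | IC | Robust–Ada | 0% | | |
| Copresence–SFHH | IC | Robust–Ada | 20% | | |
| Copresence–SFHH | IC | Robust–Ada | 80% | | |
| Copresence–SFHH | IC | Baseline | 0% | | |
| Copresence–SFHH | IC | Baseline | 20% | | |
| Copresence–SFHH | IC | Baseline | 80% | | |
| Copresence–SFHH | SI | Robust–Ada | 0% | | |
| Copresence–SFHH | SI | Robust–Ada | 20% | | |
| Copresence–SFHH | SI | Robust–Ada | 80% | | |
| Copresence–SFHH | SI | Baseline | 0% | | |
| Copresence–SFHH | SI | Baseline | 20% | | |
| Copresence–SFHH | SI | Baseline | 80% | | |
| fb–messages | IC | Robust–Ada | 0% | | |
| fb–messages | IC | Robust–Ada | 20% | | |
| fb–messages | IC | Robust–Ada | 80% | | |
| fb–messages | IC | Baseline | 0% | | |
| fb–messages | IC | Baseline | 20% | | |
| fb–messages | IC | Baseline | 80% | | |
| fb–messages | SI | Robust–Ada | 0% | | |
| fb–messages | SI | Robust–Ada | 20% | | |
| fb–messages | SI | Robust–Ada | 80% | | |
| fb–messages | SI | Baseline | 0% | | |
| fb–messages | SI | Baseline | 20% | | |
| fb–messages | SI | Baseline | 80% | | |
| escort | IC | Robust–Ada | 0% | | |
| escort | IC | Robust–Ada | 20% | | |
| escort | IC | Robust–Ada | 80% | | |
| escort | IC | Baseline | 0% | | |
| escort | IC | Baseline | 20% | | |
| escort | IC | Baseline | 80% | | |
| escort | SI | Robust–Ada | 0% | | |
| escort | SI | Robust–Ada | 20% | | |
| escort | SI | Robust–Ada | 80% | | |
| escort | SI | Baseline | 0% | | |
| escort | SI | Baseline | 20% | | |
| escort | SI | Baseline | 80% | | |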


| Symbol | Meaning |
|---|---|
| V | Set of nodes, . |
| E | Set of temporal edges with and . |
| | Observed temporal network at timestamp t. |
| | Time-expanded static graph built from . |
| | Candidate source set. |
| | High-risk source candidates at time t (e.g., previously inferred sources). |
| | Cumulative report set up to time t; . |
| | Number of activated nodes at time t used by the Steiner approximation. |
| , | Shortest path and distance in from candidate c to report r (online graph). |
| | Dummy-to-candidate edge cost at time t (source-opening cost). |
| | Initial penalty: a high default cost applied when the model adds an extra source, making this choice less favorable than increasing path length (detouring). |
| | Lower bound (floor) on source costs. It acts as a regularizer to prevent over-detouring: the model avoids adding redundant sources or detouring from lower-risk candidates unless the expected cost reduction exceeds per action. |
| , | Maximum/minimum source-edge costs at time t. |
| | Fraction of reports infeasible under missing edges. |
| , | Integrals used in regret bounds. |
| | Cumulative false-positive regret. |
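The initial penalty and floor in the notation table can be made concrete with a small cost-update rule. This is a hypothetical illustration, not Equation (3): it assumes that candidates outside the high-risk set pay the initial penalty, while high-risk candidates (e.g., previously inferred sources) see their opening cost shrink geometrically by an assumed factor `decay` each step, clipped at the floor. The function and parameter names are illustrative.

```python
def update_source_costs(prev_costs, high_risk, candidates, penalty, floor, decay=0.5):
    """Hypothetical dynamic source-opening costs (NOT the paper's Equation (3)).

    prev_costs: {candidate: cost at the previous step}.
    high_risk: set of high-risk candidates, e.g. previously inferred sources.
    penalty: initial penalty charged for opening a fresh source.
    floor: lower bound on any source cost (acts as a regularizer).
    decay: assumed per-step shrink factor for high-risk candidates.
    """
    if not 0 < floor <= penalty:
        raise ValueError("expected 0 < floor <= penalty")
    if not 0 < decay <= 1:
        raise ValueError("expected 0 < decay <= 1")
    costs = {}
    for c in candidates:
        if c in high_risk:
            # Re-using a known source gets cheaper each step, but never below floor.
            costs[c] = max(floor, prev_costs.get(c, penalty) * decay)
        else:
            costs[c] = penalty  # fresh sources pay the full initial penalty
    return costs


costs = {}
for step in range(4):
    costs = update_source_costs(costs, {"a"}, ["a", "b"], penalty=8.0, floor=1.0)
    print(step, costs)
```

Under this rule, re-using an earlier source becomes progressively cheaper than opening a new one, which mirrors the detour-versus-redundant-source trade-off described in the introduction, while the floor stops the discount from driving costs to zero.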

| Temporal Network | Nodes | Edges | Timestamps | Type |
|---|---|---|---|---|
| copresence–SFHH | 403 | 2,834,970 | 55 | Contact |
| fb–messages | 1899 | 123,468 | 203 | Social |
| escort | 13,769 | 80,880 | 36 | Sexual |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xie, J.; Lin, C.; Guo, X.; Mitchell, C.S. Source Robust Non-Parametric Reconstruction of Epidemic-like Event-Based Network Diffusion Processes Under Online Data. Big Data Cogn. Comput. 2025, 9, 262. https://doi.org/10.3390/bdcc9100262
