Cognitive Computing Frameworks for Scalable Deception Detection in Textual Data

Abstract

1. Introduction

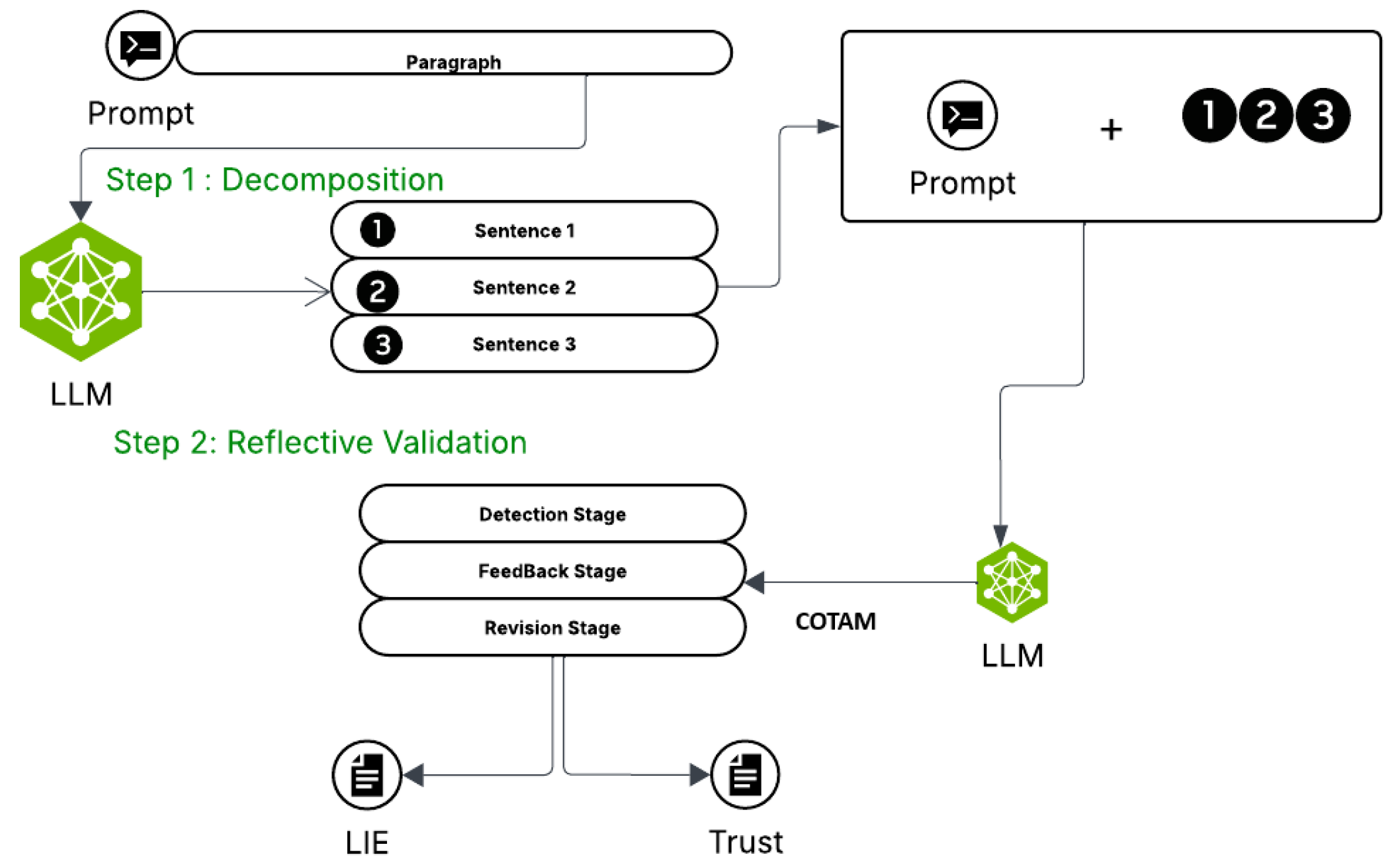

- Semantic Decomposition: We break down each message into smaller meaning units, making it easier to capture emotional and structural patterns linked to deception.

- Reflective Validation with CoTAM: We design a step-by-step reasoning pipeline where large language models (LLMs) check and refine their own judgments, improving accuracy in deception detection.

- Modular Framework: We present a flexible architecture where each reasoning step can be scaled or optimized independently, aligning with principles of cognitive computing.

- Compositional Analysis Strategy: Our approach identifies and organizes emotional and semantic cues while remaining efficient enough for distributed computing environments.

- Evaluation: We combine standard quantitative metrics (F1 and Macro-F1) with a qualitative analysis of emotional and semantic coherence in model outputs.
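The Semantic Decomposition idea can be illustrated with a deliberately simple sentence splitter. This is a minimal sketch, not the paper's own decomposition procedure: the function name `decompose` and the boundary rule (split after terminal punctuation only when a capitalized word follows) are assumptions chosen for illustration.

```python
import re
from typing import List

# Break after ".", "!" or "?" only when the next token starts with a capital
# letter or a double quote. This keeps "9 a.m. to 5 p.m." together, but it is a
# heuristic: an abbreviation followed by a capitalized word ("Dr. Smith") still splits.
_BOUNDARY = re.compile(r'(?<=[.!?])\s+(?=[A-Z"])')


def decompose(message: str) -> List[str]:
    """Return the non-empty sentence-level units of a message, in order."""
    units = _BOUNDARY.split(message.strip())
    return [u.strip() for u in units if u.strip()]
```

Each returned unit can then be scored on its own, which is what makes emotional or structural cues confined to a single sentence easier to isolate. A trained sentence segmenter or a proposition-level splitter would be a more robust drop-in for the regular expression.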

2. Related Work

2.1. Deception Detection in Text-Based NLP

2.2. Deception in Contextual and Dialogic Settings

2.3. Corpora and Cross-Domain Generalization

2.4. Multimodal and Physiological Signals

3. Dataset Overview

3.1. Data Collection Protocol

3.2. Data Validation and Cleaning

3.3. Annotation Schema

- true_statement: binary indicator of whether the message reflects the author’s real intent,

- justification_text: rationale provided only for truthful statements,

- judged_truthful: binary label reflecting whether a peer evaluator believed the statement to be true.
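The three annotation fields can be captured in a small record type with consistency checks. This is a minimal sketch: the class name `AnnotatedStatement`, the `text` field, and the specific checks are assumptions for illustration, while the 150-character minimum comes from the dataset summary table. It also assumes that `true_statement = 1` marks a truthful message; since the prompts in Section 4.1 use label 1 for a lie, gold labels would then need to be inverted before scoring.

```python
from dataclasses import dataclass
from typing import List, Optional

MIN_LENGTH = 150  # minimum statement length in characters, per the dataset summary table


@dataclass
class AnnotatedStatement:
    text: str                                 # hypothetical field holding the message itself
    true_statement: int                       # assumed: 1 = reflects the author's real intent
    judged_truthful: int                      # 1 = the peer evaluator believed it
    justification_text: Optional[str] = None  # rationale, expected for truthful statements only

    def gold_lie_label(self) -> int:
        """Map the veracity flag onto the prompts' convention (1 = lie, 0 = truth)."""
        return 1 - self.true_statement

    def validate(self) -> List[str]:
        """Return a list of schema violations (empty if the record looks consistent)."""
        problems = []
        for name in ("true_statement", "judged_truthful"):
            if getattr(self, name) not in (0, 1):
                problems.append(f"{name} must be 0 or 1")
        if len(self.text) < MIN_LENGTH:
            problems.append(f"text shorter than {MIN_LENGTH} characters")
        if self.true_statement == 0 and self.justification_text:
            problems.append("justification_text given for a deceptive statement")
        return problems
```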

4. Methodology: Self-Verification Loop for Lie Detection

4.1. Prompt Templates

Prompt 1—Baseline Test

- You are a lie detection assistant. Your task is to determine whether a statement is a lie (label = 1) or a truth (label = 0).

- Base your decision on the following criteria:

- Internal Contradiction: Does the statement contradict itself?

- Low Plausibility: Is the situation unlikely or exaggerated?

- Ambiguity: Is the statement vague, confusing, or evasive?

- Inconsistency with Common Knowledge: Does it go against widely accepted facts?

- Over-Embellishment: Does it seem to try too hard to impress or justify?

- Implausible Denial: Is blame shifted in an unconvincing way?

- Indicators of truth:

- Clear, coherent, and specific details

- Neutral or factual tone

- Alignment with general world knowledge

- No excessive persuasion or justification attempts

- Return only the final decision using the following format:

- <label>1</label> for lie, <label>0</label> for truth.

- Example:

- Statement: "I never eat sugar, but I had cake and cookies last night."

- Your answer: <label>1</label>

- Statement: "Yesterday I worked from 9 a.m. to 5 p.m. and took a 30-min lunch break."

- Your answer: <label>0</label>

- Now analyze the following statement and return only the label:

- {text}
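Because Prompt 1 asks for nothing but a `<label>` tag, the model reply has to be parsed defensively. A minimal sketch, assuming replies are plain strings; the helper names `parse_label` and `build_baseline_prompt` are hypothetical, and `str.format` is safe here only because the template contains no literal braces besides `{text}`.

```python
import re
from typing import Optional

# Matches a single 0/1 digit wrapped in <label>...</label>, tolerating inner spaces.
LABEL_PATTERN = re.compile(r"<label>\s*([01])\s*</label>")


def parse_label(response: str) -> Optional[int]:
    """Return the last 0/1 label found in a model reply, or None if there is none."""
    matches = LABEL_PATTERN.findall(response)
    return int(matches[-1]) if matches else None


def build_baseline_prompt(template: str, statement: str) -> str:
    """Fill the {text} placeholder of the baseline template."""
    return template.format(text=statement)
```

Taking the last match is a design choice: if a model echoes the few-shot examples before answering, the final tag is the most likely to be its own decision. Replies with no valid tag return `None` rather than a default class, so they can be counted separately instead of silently inflating either label.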

Prompt 2—Feedback with Explanation

- You are a lie detection assistant. Your task is to evaluate a statement and determine whether it is a lie (label = 1) or a truth (label = 0). Provide a clear justification for your judgment based on the criteria below.

- Use the following guidelines to assess the statement:

- Internal Contradiction: Does the statement contradict itself?

- Low Plausibility: Is the situation unlikely or exaggerated?

- Ambiguity: Is the statement vague, confusing, or evasive?

- Inconsistency with Common Knowledge: Does it go against well-known facts?

- Over-Embellishment: Does it try too hard to sound impressive or detailed?

- Implausible Denial: Does it deflect responsibility in an unconvincing way?

- Indicators of truth:

- Clear and consistent content

- Neutral and factual tone

- Plausible events or details

- Alignment with common knowledge

- No excessive persuasion

- <explanation>

- [Your explanation here: Explain why the statement is a lie or truth based on the criteria above.]

- </explanation>

- <label>[0 or 1]</label>

- Examples:

- Statement: "I never eat sugar, but I had cake and cookies last night."

- <explanation>

- This statement contradicts itself. Claiming to never eat sugar is inconsistent with admitting to having cake and cookies, which clearly contain sugar. This contradiction suggests dishonesty.

- </explanation>

- <label>1</label>

- Statement: "Yesterday I worked from 9 a.m. to 5 p.m. and took a 30-min lunch break."

- <explanation>

- The statement is clear, plausible, and contains specific time-based details.

- There is no sign of contradiction or exaggeration.

- It aligns with common daily routines and seems factually neutral.

- </explanation>

- <label>0</label>

- Now analyze the following statement and provide your reasoning and label:

- {text}
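Prompt 2 adds an `<explanation>` block before the label, so a reply must be split into both parts before it can be passed to the reviewer. A minimal sketch with hypothetical helper names; `re.DOTALL` lets the non-greedy pattern capture multi-line explanations, and the same `extract_tag` helper can read the `<feedback>` block that Prompt 3 asks for.

```python
import re
from typing import Optional, Tuple


def extract_tag(response: str, tag: str) -> Optional[str]:
    """Return the stripped content of the last <tag>...</tag> pair, or None."""
    pattern = re.compile(rf"<{re.escape(tag)}>(.*?)</{re.escape(tag)}>", re.DOTALL)
    matches = pattern.findall(response)
    return matches[-1].strip() if matches else None


def parse_explained_response(response: str) -> Tuple[Optional[str], Optional[int]]:
    """Split a reply into (explanation, label); missing or invalid parts become None."""
    explanation = extract_tag(response, "explanation")
    raw_label = extract_tag(response, "label")
    label = int(raw_label) if raw_label in ("0", "1") else None
    return explanation, label
```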

Prompt 3—Reviewer Prompt

- You are a lie detection reviewer. Your job is to critique the explanation and label given by a previous assistant.

- Your goals are:

- Evaluate the quality and logic of the explanation.

- Identify if the decision (label) was correct or flawed.

- If needed, revise the label and justify your reasoning.

- Did the explanation address key deception indicators?

- Was the reasoning coherent, factual, and complete?

- Is the assigned label (0 or 1) consistent with the explanation?

- Here is the original statement and assistant’s response:

- Statement: "{text}"

- Initial Explanation (Step 1):

- {explanation}

- Initial Label: {initial_label}

- Now write your feedback and revised judgment, using the following format:

- <feedback>

- [Write your critique here. Mention strengths or weaknesses in the explanation. Suggest what should be improved or why you agree/disagree with the initial reasoning.]

- </feedback>

- <label>[1 or 0]</label>

Prompt 4—Final Decision Prompt

- You are a lie detection assistant reviewing previous analyses to make a final decision.

- Below is a statement (or paragraph), along with:

- An initial explanation and label from another assistant

- A reviewer’s critical feedback and revised suggestion

- Carefully consider the original statement, the initial reasoning, and the reviewer’s feedback

- Decide whether the statement is a lie (label = 1) or a truth (label = 0)

- Output only the final label inside the <label></label> tag

- Do not repeat the explanation or feedback. Respond only with the final decision.

- Statement: {text}

- Initial explanation with label {initial_label}:

- {explanation}

- Reviewer feedback with revised label {revised_label}:

- {feedback}

4.2. Message Decomposition

4.3. Lie Detection Strategy

5. Validation Strategies for LLM-Augmented Misinformation Data

5.1. LLM-As-a-Judge: Prompted Consistency Checking

Input: sentence S

Output: binary label (0 = truth, 1 = lie) and justification

Step 1: Criteria for lie detection

Step 2: Indicators of truth

Step 3: Analyze sentence

Step 4: Preliminary output

Step 5 (optional): Rewriting

5.2. COTAM: Chain-of-Thought Assisted Modification for Lie Detection

Goal: Detect deception in messages without fine-tuning or labeled supervision, using a self-feedback pipeline.

Pipeline: COTAM applies LLM reasoning sequentially to each sentence or paragraph in three stages: detection, critique, and revision.

Notes: COTAM leverages self-refinement paradigms [23], decomposes messages into individual sentences, and applies step-by-step reasoning to enhance interpretability and robustness without labeled data or fine-tuning. The framework is therefore not only a classifier but also a reasoning protocol, bridging the gap between raw prediction and explainability.
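The three COTAM stages appear to map onto Prompts 2, 3, and 4 (detection with explanation, reviewer critique, final decision). The sketch below chains them for each sentence of a message and then aggregates the sentence labels. It rests on several assumptions: `llm` is any function from prompt text to reply text (no particular client API is implied), the templates are abbreviated stand-ins that keep only the paper's placeholder names, the punctuation-based splitter is a simplification, and the threshold aggregation rule is hypothetical, because this section does not specify how sentence-level labels are combined.

```python
import re
from typing import Callable, List, Optional, Tuple

# Hypothetical model interface: takes a prompt string, returns the reply string.
LLM = Callable[[str], str]

# Abbreviated stand-ins for Prompts 2-4; only the placeholder names follow the paper.
DETECT = ("Statement: {text}\n"
          "Reply with <explanation>...</explanation> and <label>0 or 1</label>.")
CRITIQUE = ('Statement: "{text}"\nInitial Explanation (Step 1):\n{explanation}\n'
            "Initial Label: {initial_label}\n"
            "Reply with <feedback>...</feedback> and <label>0 or 1</label>.")
REVISE = ("Statement: {text}\nInitial explanation with label {initial_label}:\n"
          "{explanation}\nReviewer feedback with revised label {revised_label}:\n"
          "{feedback}\nOutput only the final <label></label>.")


def _tag(reply: str, tag: str) -> str:
    """Content of the last <tag>...</tag> pair, or '' if the tag is missing."""
    found = re.findall(rf"<{tag}>(.*?)</{tag}>", reply, re.DOTALL)
    return found[-1].strip() if found else ""


def _label(reply: str) -> Optional[int]:
    raw = _tag(reply, "label")
    return int(raw) if raw in ("0", "1") else None


def split_sentences(message: str) -> List[str]:
    """Naive splitter: break after . ! ? when the next word is capitalized."""
    parts = re.split(r'(?<=[.!?])\s+(?=[A-Z"])', message.strip())
    return [p for p in parts if p]


def cotam_sentence(llm: LLM, sentence: str) -> Optional[int]:
    """Detection -> critique -> revision for one sentence."""
    first = llm(DETECT.format(text=sentence))
    explanation, initial = _tag(first, "explanation"), _label(first)
    second = llm(CRITIQUE.format(text=sentence, explanation=explanation,
                                 initial_label=initial))
    feedback, revised = _tag(second, "feedback"), _label(second)
    third = llm(REVISE.format(text=sentence, initial_label=initial,
                              explanation=explanation, revised_label=revised,
                              feedback=feedback))
    # Prefer the final decision; fall back to earlier stages if it has no label.
    for candidate in (_label(third), revised, initial):
        if candidate is not None:
            return candidate
    return None


def cotam_message(llm: LLM, message: str,
                  threshold: float = 0.5) -> Tuple[Optional[int], List[Optional[int]]]:
    """Message label is 1 (lie) when the share of lie-labelled sentences reaches
    `threshold` (hypothetical rule); unlabeled sentences are ignored."""
    labels = [cotam_sentence(llm, s) for s in split_sentences(message)]
    valid = [lab for lab in labels if lab is not None]
    if not valid:
        return None, labels
    return int(sum(valid) / len(valid) >= threshold), labels
```

Each sentence costs three model calls, which is consistent with the feedback settings being far more expensive than single-prompt classification. Lowering `threshold` toward 0 makes a single suspicious sentence enough to flag the whole message; raising it requires deception to be spread across most sentences.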

6. Experiments and Results

6.1. Experimental Results

6.1.1. Baseline Performance

6.1.2. LLM Feedback Framework

6.1.3. COTAM Self-Feedback

6.1.4. Sentence Decomposition

6.1.5. Hybrid Chain-of-Models

7. Computational Cost Analysis

8. Discussion

8.1. Feedback and Decomposition Synergy

8.2. Challenges and Ambiguity

8.3. Modular Reasoning Across Models

9. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. von Schenk, A.; Klockmann, V.; Bonnefon, J.F.; Rahwan, I.; Köbis, N. Lie detection algorithms disrupt the social dynamics of accusation behavior. iScience 2024, 27, 110201.

2. Newman, M.L.; Pennebaker, J.W.; Berry, D.S.; Richards, J.M. Lying words: Predicting deception from linguistic styles. Personal. Soc. Psychol. Bull. 2003, 29, 665–675.

3. Vrij, A. Detecting Lies and Deceit: Pitfalls and Opportunities; John Wiley & Sons: Hoboken, NJ, USA, 2008.

4. Hancock, J.T.; Toma, C.L.; Ellison, N.B. The truth about lying in online dating profiles. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Florence, Italy, 5–10 April 2008; pp. 449–452.

5. Levitan, S.I.; Maredia, A.; Hirschberg, J. Identifying indicators of veracity in text-based deception using linguistic and psycholinguistic features. In Proceedings of the Second Workshop on Computational Approaches to Deception Detection, San Diego, CA, USA, 17 June 2016; pp. 25–34.

6. Barsever, D.; Steyvers, M.; Neftci, E. Building and benchmarking the Motivated Deception Corpus: Improving the quality of deceptive text through gaming. Behav. Res. Methods 2023, 55, 4478–4488.

7. Ilias, L.; Askounis, D. Multitask learning for recognizing stress and depression in social media. Online Soc. Netw. Media 2023, 37–38, 100270.

8. Toma, C.L.; Hancock, J.T. Lies in online dating profiles. J. Soc. Pers. Relationsh. 2010, 27, 749–769.

9. Fornaciari, T.; Poesio, M. Automatic deception detection in Italian court cases. Artif. Intell. Law 2013, 21, 303–340.

10. Niculae, V.; Danescu-Niculescu-Mizil, C. Linguistic harbingers of betrayal: A case study on an online strategy game. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics (ACL), Beijing, China, 26–31 July 2015; pp. 1793–1803.

11. Peskov, D.; Yu, M.; Zhang, J.; Danescu-Niculescu-Mizil, C.; Callison-Burch, C. It takes two to lie: One to lie, and one to listen. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (ACL), Online, 5–10 July 2020; pp. 3811–3825.

12. Baravkar, P.; Shrisha, S.; Jaya, B.K. Deception detection in conversations using the proximity of linguistic markers. Knowl.-Based Syst. 2023, 267, 110422.

13. Belbachir, F.; Roustan, T.; Soukane, A. Detecting Online Sexism: Integrating Sentiment Analysis with Contextual Language Models. AI 2024, 5, 2852–2863.

14. Bevendorff, J. Overview of PAN 2022: Authorship Verification, Profiling Irony and Stereotype Spreaders, Style Change Detection, and Trigger Detection. In Proceedings of the CLEF 2022, Bologna, Italy, 5–8 September 2022.

15. Ott, M.; Choi, Y.; Cardie, C.; Hancock, J.T. Finding deceptive opinion spam by any stretch of the imagination. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics (ACL), Portland, OR, USA, 19–24 June 2011; pp. 309–319.

16. Velutharambath, A.; Klinger, R. UNIDECOR: A unified deception corpus for cross-corpus deception detection. In Proceedings of the 13th Workshop on Computational Approaches to Subjectivity, Sentiment, & Social Media Analysis (WASSA), Toronto, ON, Canada, 14 July 2023; pp. 39–51.

17. Capuozzo, P.; Lauriola, I.; Strapparava, C.; Aiolli, F.; Sartori, G. DecOp: A multilingual and multi-domain corpus for detecting deception in typed text. In Proceedings of the 12th International Conference on Language Resources and Evaluation (LREC), Marseille, France, 11–16 May 2020; pp. 1394–1401.

18. Chou, C.C.; Liu, P.Y.; Lee, S.Z. Automatic deception detection using multiple speech and language communicative descriptors in dialogs. APSIPA Trans. Signal Inf. Process. 2022, 11, e31.

19. Krishnamurthy, G.; Majumder, N.; Poria, S.; Cambria, E. A deep learning approach for multimodal deception detection. Expert Syst. Appl. 2018, 124, 56–68.

20. Pérez-Rosas, V.; Kleinberg, B.; Lefevre, A.; Mihalcea, R. Automatic detection of fake news. In Proceedings of the 27th International Conference on Computational Linguistics (COLING), Santa Fe, NM, USA, 20–26 August 2018; pp. 3391–3401.

21. Zebaze, A.; Sagot, B.; Bawden, R. Compositional Translation: A Novel LLM-based Approach for Low-resource Machine Translation. arXiv 2025, arXiv:2503.04554.

22. Niklaus, C.; Freitas, A.; Handschuh, S. MinWikiSplit: A Sentence Splitting Corpus with Minimal Propositions. In Proceedings of the 12th International Conference on Natural Language Generation, Tokyo, Japan, 29 October–1 November 2019; van Deemter, K., Lin, C., Takamura, H., Eds.; pp. 118–123.

23. Madaan, A.; Tandon, N.; Gupta, P.; Hallinan, S.; Gao, L.; Wiegreffe, S.; Alon, U.; Dziri, N.; Prabhumoye, S.; Yang, Y.; et al. Self-Refine: Iterative refinement with self-feedback. In Proceedings of the 37th International Conference on Neural Information Processing Systems (NIPS ’23), New Orleans, LA, USA, 10–16 December 2023; pp. 2019–2079.

| Attribute | Value |
|---|---|
| Total participants (initial) | 986 |
| Final participant count (post-filtering) | 768 |
| Total statements | 1536 |
| Truthful statements | 768 |
| Deceptive statements | 768 |
| Minimum statement length | 150 characters |
| Justification included | Yes (truthful only) |
| Veracity label (true_statement) | Yes |
| Perceived truth label (judged_truthful) | Yes |
| Demographic diversity | Age 18–65, mixed gender, multiple countries |

| Model | Method | Dataset | F1-Lie | Macro-F1 | Precision | Recall | Accuracy |
|---|---|---|---|---|---|---|---|
| Gemma-3-27B | baseline | lie_detection | 0.1927 | 0.4274 | 0.5717 | 0.5235 | 0.4797 |
| Gemma-3-27B | feedback | lie_detection | 0.6152 | 0.4344 | 0.4867 | 0.4922 | 0.4816 |
| Gemma-3-27B | baseline | decomposed_v2 | 0.5750 | 0.4400 | 0.5690 | 0.5235 | 0.5190 |
| Gemma-3-27B | feedback | decomposed_v2 | 0.5750 | 0.4400 | 0.4642 | 0.4725 | 0.5717 |
| LLaMA-3-8B | baseline | lie_detection | 0.3461 | 0.4681 | 0.4950 | 0.4961 | 0.4867 |
| LLaMA-3-8B | feedback | lie_detection | 0.2551 | 0.4372 | 0.4933 | 0.4961 | 0.5414 |
| LLaMA-3-8B | baseline | decomposed_v2 | 0.2889 | 0.4505 | 0.5173 | 0.5098 | 0.4642 |
| LLaMA-3-8B | feedback | decomposed_v2 | 0.2889 | 0.4505 | 0.4970 | 0.4980 | 0.5690 |
| Mistral-7B | baseline | lie_detection | 0.2561 | 0.4517 | 0.5440 | 0.5216 | 0.4642 |
| Mistral-7B | feedback | lie_detection | 0.4941 | 0.4941 | 0.4941 | 0.4941 | 0.4950 |
| Mistral-7B | baseline | decomposed_v2 | 0.4980 | 0.5058 | 0.6036 | 0.5392 | 0.4933 |
| Mistral-7B | feedback | decomposed_v2 | 0.4980 | 0.5058 | 0.5059 | 0.5059 | 0.5150 |
| Combined | feedback | decomposed_v2 | 0.4434 | 0.5089 | 0.5190 | 0.5176 | 0.4907 |
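The scores in the table above can be recomputed from gold and predicted labels. This is a minimal, dependency-free sketch, assuming 1 = lie and 0 = truth as in the prompts. The table does not state how Precision and Recall are averaged, so macro-averaging over the two classes is an assumption here; the function names are hypothetical.

```python
from typing import Dict, Sequence, Tuple


def _prf(y_true: Sequence[int], y_pred: Sequence[int], positive: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 for one class, with 0.0 when a ratio is undefined."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def lie_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Scores with 1 = lie, 0 = truth; Precision/Recall are macro-averaged (assumed)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("need two non-empty label sequences of equal length")
    p_lie, r_lie, f1_lie = _prf(y_true, y_pred, positive=1)
    p_truth, r_truth, f1_truth = _prf(y_true, y_pred, positive=0)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    return {
        "F1-Lie": f1_lie,
        "Macro-F1": (f1_lie + f1_truth) / 2,
        "Precision": (p_lie + p_truth) / 2,
        "Recall": (r_lie + r_truth) / 2,
        "Accuracy": accuracy,
    }
```

Reporting F1-Lie next to Macro-F1 exposes one-sided behavior: a model that rarely predicts "lie" can keep a moderate Macro-F1 through the truth class while its F1-Lie stays low.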

| Model | F1-Lie | Macro-F1 | Precision | Recall | Accuracy |
|---|---|---|---|---|---|
| Gemma-3-27B | 0.1927 | 0.4274 | 0.5717 | 0.5235 | 0.4797 |
| LLaMA-3-8B | 0.3461 | 0.4681 | 0.4950 | 0.4961 | 0.4867 |
| Mistral-7B | 0.2561 | 0.4517 | 0.5440 | 0.5216 | 0.4642 |
| mDeBERTa-v3-base | 0.2283 | 0.4825 | 0.5551 | 0.4868 | 0.5647 |

| Model | Setting | Original | Decomposed | Δ (Decomposed − Original) |
|---|---|---|---|---|
| Gemma-27B | Simple | 0.173 | 0.175 | +0.002 |
| Gemma-27B | Feedback | 7.717 | 7.72 | ≈0 |
| LLaMA-8B | Simple | 0.068 | 0.081 | +0.013 |
| LLaMA-8B | Feedback | 2.811 | 2.81 | ≈0 |
| Mistral-7B | Simple | 0.073 | 0.069 | −0.004 |
| Mistral-7B | Feedback | 1.875 | 1.87 | ≈0 |
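The cost table implies that the feedback loop, not decomposition, dominates computational cost. The units of these figures are not stated here; assuming the Simple and Feedback columns share the same unit, their ratio gives a unit-free overhead per model. A minimal sketch using the original-message values copied from the table; the helper name `feedback_overhead` is hypothetical.

```python
# Per-model cost on the original (non-decomposed) messages, copied from the table:
# (Simple setting, Feedback setting). Units are not stated, so only ratios are used.
COSTS = {
    "Gemma-27B": (0.173, 7.717),
    "LLaMA-8B": (0.068, 2.811),
    "Mistral-7B": (0.073, 1.875),
}


def feedback_overhead(simple: float, feedback: float) -> float:
    """How many times the Feedback setting costs relative to the Simple setting."""
    return feedback / simple


for model, (simple, feedback) in COSTS.items():
    print(f"{model}: {feedback_overhead(simple, feedback):.1f}x")
```

Run as-is, it prints 44.6x for Gemma-27B, 41.3x for LLaMA-8B, and 25.7x for Mistral-7B. By contrast, decomposition shifts each Simple figure by at most 0.013, so the multi-call feedback stages are where optimization effort would matter most.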

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Belbachir, F. Cognitive Computing Frameworks for Scalable Deception Detection in Textual Data. Big Data Cogn. Comput. 2025, 9, 260. https://doi.org/10.3390/bdcc9100260
