Abstract

The rapid expansion of online education has generated large volumes of learner interaction data, highlighting the need for intelligent systems capable of transforming this information into personalized guidance. Educational Recommender Systems (ERSs) represent a key application of big data analytics and machine learning, offering adaptive learning pathways that respond to diverse student needs. For widespread adoption, these systems must align with pedagogical principles while ensuring transparency, interpretability, and seamless integration into Learning Management Systems (LMSs). This paper introduces a comprehensive framework and implementation of an ERS designed for platforms such as Moodle. The system integrates big data processing pipelines, covering data collection, preprocessing, recommendation generation, and retrieval, to support scalability, real-time interaction, and multi-layered personalization. A detailed use case demonstrates its deployment in a real educational environment, underscoring both its technical feasibility and its pedagogical value. Finally, the paper discusses challenges such as data sparsity, learner model complexity, and the evaluation of effectiveness, and outlines directions for future research at the intersection of big data technologies and digital education. By bridging theoretical models with operational platforms, this work contributes to sustainable, data-driven personalization in online learning ecosystems.

1. Introduction

The increasing availability of digital learning platforms has transformed the educational landscape, enabling learners to access high-quality resources anywhere in the world. Massive Open Online Courses (MOOCs), Learning Management Systems (LMSs), and Intelligent Tutoring Systems (ITSs) have expanded access to education and made it possible to adapt content to diverse learning needs [1]. However, this abundance of content places a significant burden on students: learners must navigate vast repositories of materials without always having clear guidance on what to study next, which resources best suit their needs, or how to progress through a subject in a pedagogically coherent way [2]. Without personalized guidance, students may follow inefficient study paths, unnecessarily revisit material they have already learned, or skip crucial concepts, which negatively affects knowledge retention and motivation.

In response to this challenge, Educational Recommender Systems (ERSs) have emerged as promising tools to provide personalized learning experiences. These systems aim to deliver relevant recommendations that support academic goals, increase motivation, and optimize learning outcomes by analyzing learners’ profiles, past behaviors, and contextual information. By providing tailored recommendations, ERSs directly address the challenge of content overload. They guide learners through appropriate sequences of resources, helping them focus on relevant materials and maintain motivation. ERSs can recommend various item types, including learning resources (e.g., videos, exercises, readings), learning sequences, or even courses and career trajectories [3]. Importantly, the rise of digital education has also created massive amounts of learner interaction data, positioning ERSs as a natural application of big data analytics and machine learning in order to extract actionable insights from these complex and large-scale datasets.

Despite this potential, the use of ERSs in real educational settings remains limited, as many existing implementations lack integration with pedagogical frameworks or rely on black-box algorithms that offer little transparency to educators and learners [4]. Learners in online courses, especially self-paced or large-scale ones, often lack guidance and struggle to identify what to study next. This leads to inefficient study paths, reduced motivation, and, in some cases, course abandonment. Instructors cannot always provide personalized recommendations manually, particularly when dealing with hundreds of students. While LMSs offer content delivery tools and basic analytics, they generally lack mechanisms to dynamically guide learners based on their needs or course progression. As a result, students may access resources that are too easy, too complex, or pedagogically misaligned with their current level [5].

Moreover, as pointed out by [5], there remains a significant gap between the development of ERS technologies and their actual integration into educational practice. Without practical integration, the technological potential of ERSs remains largely untapped, leaving instructors without scalable tools to guide students effectively, particularly in courses with large enrollments or diverse learner backgrounds. Many systems are designed from a purely algorithmic perspective, without considering how they can be used by educators or embedded into real instructional workflows. As a result, ERSs are often difficult to adapt, interpret, or align with pedagogical goals [6]. This disconnect limits their practical value in authentic learning contexts, especially in small-scale or specialized courses, where instructors require flexible tools that enhance, rather than complicate, the teaching process.

Unlike domains such as e-commerce or entertainment, where the success of recommender systems is often measured through metrics like click-through rates or user satisfaction, educational settings demand a fundamentally different approach. Here, recommendations must be pedagogically meaningful and support actual learning progress. The central question is not merely whether a student clicks on a resource, but whether that resource contributes to understanding, retention, and skill development. This challenge is further amplified by the sequential and cumulative nature of learning, where concepts build upon each other, and by the ethical considerations surrounding transparency, fairness, and explainability [7].

Unlike many previous works in the scientific literature, this work is directly motivated by the practical challenges of integrating ERSs into authentic learning environments. Its core aim is to help bridge the persistent gap between ERS research and educational practice by proposing a system that is pedagogically grounded, technically viable, and capable of supporting both learners and instructors in real-world settings. This contribution seeks to promote the sustained use of ERSs in educational settings by addressing technical development needs alongside practical integration challenges. By aligning recommendations with pedagogical goals, the system aims to ensure that learners receive guidance that is both meaningful and actionable, thereby directly addressing the issues of content overload and inefficient learning paths. Ultimately, it reinforces the goal of ensuring that technological advances lead to tangible improvements in teaching and learning experiences.

To this end, the article follows a design-based, implementation-oriented approach rooted in Design Science Research (DSR), presenting an ERS architecture that can be readily deployed in actual instructional contexts [8]. The system addresses a well-documented disconnect between algorithmic development and classroom integration by offering a deployable, LMS-compatible, and pedagogically informed framework. It is designed to be adapted by instructors and technical teams to the specific needs of their courses. Furthermore, the article highlights the importance of tailoring recommendations to the diverse needs of students, whether at risk, progressing, or disengaged, ensuring that the system’s recommendations are both personalized and actionable. Specifically, the article proposes:

- A comprehensive framework for ERSs tailored to digital learning environments.

- A practical implementation of the framework that can be integrated into existing LMSs, such as Moodle.

- An emphasis on the need to adapt recommendation strategies to different student profiles to ensure relevance and effectiveness.

The remainder of this paper is structured as follows: Section 2 presents the theoretical background, including fundamentals on RSs and their adaptation to educational contexts. Section 3 outlines the key requirements that ERSs must meet to be meaningful, responsible, and viable. Section 4 introduces the proposed modular architecture, detailing its components and their respective roles. Section 5 describes a concrete use case that illustrates how the framework and implementation can be integrated into an existing LMS. Section 6 showcases the multi-perspective evaluation process of the proposed system conducted with professors and students. Finally, Section 7 and Section 8 discuss the main challenges, considerations, and limitations for the development and adoption of ERSs, and Section 9 concludes the article by summarizing the contributions and proposing directions for future work.

2. Theoretical Background

Designing an effective ERS requires integrating insights from multiple disciplines, including Machine Learning (ML) and Deep Learning (DL), Recommender System Theory, and Educational Science. This section outlines the foundational concepts that inform our proposed architecture.

2.1. Recommender Systems

RSs are tools developed to help users discover relevant content by examining their preferences, behavior patterns, and contextual factors [9]. These systems can handle large-scale data to uncover trends and deliver customized suggestions, simplifying the process of finding valuable items while minimizing the time and effort involved. By filtering out irrelevant options, they support more efficient decision-making and contribute to improving user engagement and satisfaction [10]. Several core approaches have been developed over the years, each leveraging different data types and assumptions. The most common methods are as follows:

- Collaborative Filtering (CF): One of the most widely used paradigms. It relies on the assumption that users with similar preferences in the past will also have similar preferences in the future [11]. Among the different implementations of CF, two stand out as the most commonly used. The first is User-based CF, which recommends items liked by users similar to the target user. The second is Item-based CF, which recommends items similar to those with which the target user has interacted. CF techniques typically operate on user-item interaction matrices that record ratings or clicks, and measure similarity using distance- or correlation-based methods.

- Content-Based Filtering (CBF): This method recommends items similar to those that the user has liked in the past, based on item characteristics such as keywords, categories, or metadata [12]. CBF builds individual profiles using supervised learning techniques to predict preferences. It can also provide explanations for recommendations based on the features involved.

- Knowledge-Based Filtering (KBF): These systems use explicit domain knowledge, constraints, or logical rules to drive recommendations [13]. KBF is particularly useful when user preferences are not learned from the data but are specified directly, such as recommending a laptop based on performance, price, and battery life. This aspect makes KBF highly interpretable and controllable.

- Hybrid Systems: These systems are designed to address the limitations of individual approaches [14]. By integrating two or more techniques, they can achieve better performance and greater robustness across various user groups and application scenarios.
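As an illustration of item-based CF and of a simple weighted hybrid, the following minimal Python sketch scores resources for one learner from a small, hypothetical binary interaction matrix (1 means the learner interacted with the resource). Cosine similarity between item columns is one common choice among the distance- and correlation-based measures mentioned above. The function names, the blending weight `alpha`, and the `content_scores` input (assumed to come from some content-based model) are illustrative assumptions, not part of the proposed system.

```python
import math

def item_similarity(interactions):
    """Cosine similarity between the item columns of a learner x item 0/1 matrix."""
    n_items = len(interactions[0])
    cols = [[row[j] for row in interactions] for j in range(n_items)]
    norms = [math.sqrt(sum(v * v for v in c)) for c in cols]
    sim = [[0.0] * n_items for _ in range(n_items)]
    for i in range(n_items):
        for j in range(n_items):
            # Diagonal stays 0 so an item never votes for itself; unused items stay 0
            if i != j and norms[i] and norms[j]:
                dot = sum(a * b for a, b in zip(cols[i], cols[j]))
                sim[i][j] = dot / (norms[i] * norms[j])
    return sim

def recommend(interactions, learner, k=2, content_scores=None, alpha=1.0):
    """Top-k unseen items by item-based CF, optionally blended with content scores."""
    sim = item_similarity(interactions)
    row = interactions[learner]
    scores = []
    for i in range(len(row)):
        # CF score: summed similarity to the items this learner has already used
        cf = sum(sim[i][j] * row[j] for j in range(len(row)))
        content = content_scores[i] if content_scores is not None else 0.0
        scores.append(alpha * cf + (1 - alpha) * content)  # weighted hybrid
    unseen = [i for i in range(len(row)) if row[i] == 0]  # never re-recommend
    return sorted(unseen, key=lambda i: -scores[i])[:k]

# Hypothetical matrix: 4 learners x 5 resources
R = [
    [1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
]
print(recommend(R, learner=0, k=2))  # → [2, 3]
```

For learner 0, who has used resources 0 and 1, resource 2 ranks first because it co-occurs with both of them in other learners’ histories. Setting `alpha` below 1 lets a content-based signal outweigh sparse collaborative evidence, which is the basic idea behind weighted hybrids.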

Despite the proven effectiveness of the different RS algorithms, they still face several challenges [9]. These difficulties stem from the inherent complexity of user behavior, the limitations of available data, and the constantly changing nature of the domains in which these systems are deployed. Addressing these underlying factors is essential to ensure that recommendations remain precise, relevant, and valuable across diverse contexts. The most common challenges are as follows:

- Cold-start: This problem refers to the challenge RSs face when dealing with new users or items for which little or no historical data is available [15]. Since many recommendation strategies, particularly CF, rely on past user-item interactions, a lack of this kind of data leads to unreliable or generic suggestions. Solutions to this problem often include using content-based methods, metadata attributes such as user demographics or item attributes, or hybrid approaches that combine several sources of information [16].

- Data Sparsity: In many real-world systems, the user-item matrix, used for similarity calculations, is extremely sparse, which means that only a small fraction of possible interactions are observed [17]. For example, a user may interact with only a few dozen items in a catalog with thousands of them. This makes it difficult for the algorithms to learn reliable patterns and obtain good recommendations. Techniques such as dimensionality reduction, implicit feedback modeling, and graph-based approaches mitigate this problem by uncovering latent structures or inferring unobserved connections.

- Bias and Fairness: RSs can suffer from social and algorithmic biases arising from data, user actions, or the model design [18]. These biases often lead to over-recommending popular items and overlooking niche content, which may not reflect true preferences. Fairness in RSs means that recommendations should not unfairly disadvantage users or content, especially in areas such as education or healthcare [19]. Achieving fairness involves balancing accuracy with diversity and equity, and can be done through techniques like re-ranking or incorporating fairness into the model’s learning.

These RS principles and challenges acquire particular significance in the educational domain. Educational environments typically involve highly sparse and sensitive data, such as learning activities, assessments, and engagement patterns. Furthermore, the impact of recommendations extends beyond immediate user satisfaction, potentially influencing learning outcomes, student motivation, and long-term academic achievement. Addressing cold-start and sparsity issues is especially critical when supporting new learners or infrequently accessed educational resources. Likewise, addressing bias and ensuring fairness are crucial to avoid reinforcing existing inequalities or disadvantaging underperforming students. By thoughtfully adapting and extending general-purpose recommendation techniques, ERSs can become powerful tools for delivering personalized, equitable, and pedagogically sound learning experiences.

To function correctly, any AI algorithm, including an RS, must go through a structured development process. This process is divided into three main phases: training, evaluation, and inference. Each stage plays a specific role in the system lifecycle, from learning patterns in historical data to delivering personalized recommendations in real time. Understanding and properly implementing each phase is crucial to building effective systems, especially in sensitive domains such as education. The three main development phases are as follows:

- Training Phase: In this phase, the RS processes the available data to learn patterns, extract relevant knowledge, and build a predictive model [20]. The algorithms implemented by the system developer are optimized during this stage to capture user behavior, preferences, and contextual signals effectively. The output of this phase is a trained model along with a preliminary set of recommendations, which must undergo further evaluation before being used in production.

- Evaluation Phase: This phase plays a crucial role in assessing the precision and robustness of the model trained in the previous step [21]. The evaluation is performed on a separate subset of data not used during training and relies on objective performance metrics to quantify the model’s effectiveness. A thorough evaluation process helps detect issues such as overfitting and ensures that the model responds well to new, unseen data.

- Inference Phase: In the final stage, the trained and validated model is deployed in a live environment, interacting with real-time data from student-platform interactions. Based on this current information, the RS generates personalized recommendations that are presented to the user [22]. This is the only phase where users directly experience the system output, making its precision, timeliness, and usability especially critical to learner engagement and educational value.

These three phases are interconnected and collectively ensure the system’s reliability, accuracy, and responsiveness. System developers typically perform training and evaluation in an offline context, but the platform must seamlessly integrate the inference phase to deliver real-time suggestions.

In educational settings, properly navigating each phase ensures that recommendations reflect meaningful patterns while also supporting student learning processes in a pedagogically sound manner. These three phases should be treated as a continuous cycle rather than a linear process. For example, during training, domain knowledge can guide feature selection; during evaluation, instructional goals can inform metric choice; and during inference, cognitive load theory can help determine the timing and volume of recommendations. Ultimately, mastering the training, evaluation, and inference phases ensures that RSs in education do not function merely as technical tools but as intelligent agents that foster inclusive, effective, and engaging learning environments.

In practice, a portion of the available student data is typically reserved for the training phase, where the model learns from past interactions. The remaining data is used during evaluation to validate the model’s ability to generalize to new situations and avoid overfitting. This separation is essential to simulate realistic scenarios where the system must perform reliably with previously unseen behavior. Once deployed, the inference phase enables the system to provide real-time, personalized recommendations, even for students who were not part of the training process, an especially important feature in dynamic educational environments where new learners are continuously joining.
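The three phases and the learner-level split described above can be sketched in a few lines of Python. To keep the example short, the “model” is a simple item co-occurrence count learned from training learners, standing in for whatever algorithm an actual system would train. Test learners are held out entirely; for each of them, the last resource they used is hidden and the model is asked to recover it, yielding precision@k. The function names, the choice of hiding a single item, and the assumption that columns follow the course order (as a proxy for time) are all hypothetical.

```python
import random

def split_learners(n_learners, test_fraction=0.25, seed=0):
    """Split learner indices so that test learners are never seen during training."""
    ids = list(range(n_learners))
    random.Random(seed).shuffle(ids)
    n_test = max(1, round(test_fraction * n_learners))
    return ids[n_test:], ids[:n_test]  # (train ids, test ids)

def fit(train_rows):
    """Training phase: count how often two items were used by the same training learner."""
    n_items = len(train_rows[0])
    cooc = [[0] * n_items for _ in range(n_items)]
    for row in train_rows:
        used = [j for j, v in enumerate(row) if v]
        for i in used:
            for j in used:
                if i != j:
                    cooc[i][j] += 1
    return cooc

def infer(model, observed, k):
    """Inference phase: rank unseen items for any learner, including new ones."""
    n_items = len(observed)
    scores = [sum(model[i][j] * observed[j] for j in range(n_items)) for i in range(n_items)]
    unseen = [i for i in range(n_items) if not observed[i]]
    return sorted(unseen, key=lambda i: -scores[i])[:k]

def evaluate(model, test_rows, k=2):
    """Evaluation phase: hide each test learner's last-used item and compute mean precision@k."""
    precisions = []
    for row in test_rows:
        used = [j for j, v in enumerate(row) if v]
        if len(used) < 2:
            continue  # need at least one observed item and one held-out item
        held_out = used[-1]  # assumes columns follow the course order (proxy for time)
        observed = [0 if j == held_out else v for j, v in enumerate(row)]
        recs = infer(model, observed, k)
        precisions.append(int(held_out in recs) / k)
    return sum(precisions) / len(precisions) if precisions else 0.0

# Hypothetical data: rows are learners, columns are resources in course order
data = [
    [1, 1, 0, 0, 0],
    [1, 1, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [1, 1, 1, 0, 0],
    [0, 1, 1, 0, 0],
    [1, 0, 1, 1, 0],
    [0, 0, 1, 1, 0],
]
train_ids, test_ids = split_learners(len(data), test_fraction=0.25, seed=42)
model = fit([data[i] for i in train_ids])             # training phase
print(evaluate(model, [data[i] for i in test_ids]))   # evaluation phase
print(infer(model, [1, 0, 0, 0, 0], k=2))             # inference for a new learner
```

Because `infer` only needs a learner’s observed interactions, it can serve learners who joined after training, which mirrors the inference scenario described above.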

2.2. Pedagogical Considerations

The integration of RSs into educational contexts necessitates a careful, theoretically informed consideration of learners’ individual needs to ensure pedagogical relevance and effective personalization. Both the instructional design of a course and the development of the underlying recommendation algorithms must be informed by principles that account for diversity in learning preferences and cognitive processing. As identified in the systematic review conducted by [23], learning styles represent one of the most frequently addressed variables in the domain of personalized learning.

Learning style models offer taxonomies for categorizing individual differences in how learners perceive, process, and retain information. These models provide a conceptual basis for tailoring educational interventions and system recommendations. Among the most widely adopted models are Felder-Silverman Learning Style Model (FSLSM) [24], Honey and Mumford’s Learning Style Model (LSM) [25], Kolb’s model [26], and Fleming’s Visual, Aural, Read/write, and Kinesthetic (VARK) model [27]. Each of these proposes distinct dimensions or modalities of learning, as outlined in Table 1, along with their associated learning requirements.

Table 1.

Key characteristics of learning style models and associated learning needs.

Although there is limited empirical evidence supporting the effectiveness of each learning style model individually, there is substantial support for the benefits of personalized learning experiences. Rather than rigidly assigning a single learning style to each learner, ERSs can draw on the profiles suggested by these models to generate adaptive and flexible learning pathways. In this regard, the emphasis is not on categorical labeling but on addressing a broad spectrum of instructional needs that enhance learner agency and personalization.

Despite their popularity and intuitive appeal, learning style models such as VARK, Kolb, and FSLSM have been subject to considerable criticism in the fields of cognitive science and educational psychology. Meta-analyses and empirical studies have questioned the predictive validity and educational effectiveness of matching instructional methods to identified learning styles [28,29]. As such, their use in ERSs should be approached with caution. In this work, learning style models are not adopted as rigid typologies, but rather as heuristic frameworks to support flexible instructional strategies and enhance learner agency. The emphasis is on designing diverse and adaptable learning pathways, not on reinforcing fixed learner categories. This nuanced perspective aligns with contemporary calls for inclusive and evidence-informed personalization strategies.

Table 2 outlines key pedagogical implications for educational design based on the learning needs identified in the models discussed.

Table 2.

Pedagogical implications for educational design based on learning style needs.

Based on these design implications, both the course and the RS can adopt an instructional strategy that accommodates the diversity of learning styles, taking into account the following considerations:

- Content should be delivered in multiple formats, including visual, textual, auditory, and hands-on resources.

- The learning design should integrate both active and reflective cycles, combining practice-based activities with spaces for individual and group reflection.

- Multiple learning paths should be made available, offering sequential access as well as global overviews to match different processing preferences.

- Tasks should be embedded in meaningful contexts, with clearly defined applications that support practical engagement.

Educators must accompany the integration of RSs in educational settings with a thorough pedagogical reflection on students’ individual learning needs. While learning style models may have limitations in terms of empirical validation, they offer a theoretical foundation to guide both instructional design and system adaptivity. Considering these differences should not lead to rigid classification, but rather to the creation of flexible, accessible, and diverse learning environments that foster student autonomy and learning effectiveness. As such, pedagogical design and technological development must advance in tandem to provide truly personalized and student-centered educational experiences.

It is important to note that learning style models are not used in the proposed system as direct drivers of personalization. Instead, they are considered as a reference to inform content representation, helping to provide diversity in resource formats (e.g., video, text, quiz) without assuming them as predictors of learner success. Practical validation of learning styles in ERSs remains limited, and this implementation relies on multiple other signals for personalization.
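One way to operationalize this use of learning style models, sketched here under our own assumptions, is to re-rank an already scored candidate list so that the top-k slots cover several resource formats before any format is repeated. The relevance scores are assumed to come from any upstream recommender; the `Resource` type, the format labels, and the two-pass rule are hypothetical and do not encode any prediction about which format suits a given learner.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Resource:
    resource_id: str
    score: float  # relevance from any upstream recommender (assumed)
    fmt: str      # e.g. "video", "text", "quiz"

def diverse_top_k(candidates, k):
    """Fill the first slots with the best item of each format, then fill the rest by score."""
    ranked = sorted(candidates, key=lambda r: r.score, reverse=True)
    chosen, formats_seen = [], set()
    for r in ranked:  # pass 1: most relevant item of each format
        if len(chosen) == k:
            break
        if r.fmt not in formats_seen:
            chosen.append(r)
            formats_seen.add(r.fmt)
    for r in ranked:  # pass 2: remaining slots by relevance, formats may repeat
        if len(chosen) == k:
            break
        if r not in chosen:
            chosen.append(r)
    return [r.resource_id for r in chosen]

candidates = [
    Resource("v1", 0.90, "video"),
    Resource("v2", 0.85, "video"),
    Resource("v3", 0.80, "video"),
    Resource("t1", 0.60, "text"),
    Resource("q1", 0.50, "quiz"),
]
print(diverse_top_k(candidates, k=3))  # → ['v1', 't1', 'q1']
```

With three high-scoring videos, a plain top-3 list would contain only videos; the format-aware pass yields a video, a text resource, and a quiz while still preferring the most relevant item within each format.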

In addition to drawing on learning style models as heuristic guides, the pedagogical impact of ERSs can also be understood through established learning theories. From a constructivist perspective, recommendations act as scaffolds that guide learners in actively building knowledge, ensuring that prerequisites and exploratory opportunities are respected. In the context of self-regulated learning, the system supports learners in setting goals, monitoring progress, and reflecting on their performance by offering adaptive next steps and timely feedback.

Equally important is the alignment with curricular objectives and competency-based education. Recommendations should not be limited to linear sequencing but should contribute directly to competency acquisition by suggesting remedial resources when gaps are detected or enrichment activities when learners progress faster than expected.
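A rule of the kind described in this paragraph can be expressed compactly. The sketch below assumes that some learner model already provides a mastery estimate between 0 and 1 for each competency, together with a pace ratio (the completed fraction of the course divided by the fraction expected at this point). The thresholds and labels are hypothetical values that instructors would need to set for their own course.

```python
def competency_actions(mastery, pace, remedial_below=0.5, enrich_above=0.85, fast_pace=1.2):
    """Map per-competency mastery (0-1) and overall pace to a recommendation type.

    pace = completed_fraction / expected_fraction (> 1 means ahead of schedule).
    All thresholds are illustrative assumptions.
    """
    actions = {}
    for competency, score in mastery.items():
        if score < remedial_below:
            actions[competency] = "remedial"          # gap detected
        elif score >= enrich_above and pace >= fast_pace:
            actions[competency] = "enrichment"        # mastered and ahead of schedule
        else:
            actions[competency] = "next_in_sequence"  # continue the planned path
    return actions

print(competency_actions({"fractions": 0.4, "decimals": 0.7, "ratios": 0.95}, pace=1.3))
# → {'fractions': 'remedial', 'decimals': 'next_in_sequence', 'ratios': 'enrichment'}
```

In this example, `fractions` triggers remedial material, `ratios` triggers enrichment because the learner is both proficient and ahead of schedule, and `decimals` simply continues the planned sequence.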

In this way, ERSs move beyond simple sequencing to provide flexible and meaningful pathways that are both pedagogically grounded and aligned with curricular structures, thereby ensuring personalization that is theoretically informed and educationally relevant.

2.3. Personalization in Educational Systems

A considerable body of research has been devoted to the development of personalized RSs in educational contexts, particularly within online learning environments. These works have contributed significantly to the conceptual and theoretical foundations of adaptive learning technologies. For instance, the work [30] introduces a general framework for RSs that integrates knowledge representation, reasoning mechanisms, and learning capabilities. Similarly, the article [31] proposed the Recommender Online Learning System (ROLS), a conceptual model designed to support multiple stakeholders in online education. Other works, such as those by [32,33], focused on personalized learning recommendations for K-12 and higher education students, respectively.

However, a common limitation across these studies is that the proposed systems remain largely at the conceptual or prototype level. Most of them have not been fully implemented or integrated into real-world educational platforms. Although some have been validated in simulated environments (for example, [30] used Fuzzy Cognitive Maps (FCMs) to validate a reasoning-based recommendation engine), these systems have not been deployed in actual e-learning settings. As a result, their practical applicability remains untested, and their integration into existing educational ecosystems is not straightforward.

In contrast, the system presented in this research has been fully developed and is ready for deployment in real online learning platforms such as Moodle. This work addresses the gap between theoretical design and operational implementation, offering a functional solution that can be directly incorporated to enhance personalized learning experiences. This positions our approach as a tangible step toward closing the implementation gap that continues to hinder the broader impact of recommender technologies in online education.

3. Requirements for an Education Recommendation System

Designing an effective ERS requires addressing a complex set of requirements that go beyond accuracy or personalization. Unlike traditional RSs in commercial domains, such as e-commerce or entertainment, where optimizing for engagement or purchases may be sufficient, ERSs must also be aligned with educational goals, consider the learning context, and ensure ethical use of sensitive student data. This section outlines the key requirements that an ERS must meet to be truly impactful in online learning environments.

3.1. Learner-Centered Personalization

Traditional RSs often rely on similarity-based approaches, in which recommendations are made by identifying users with similar behaviors or preferences. Although this strategy may be effective in domains such as e-commerce or entertainment, it can be insufficient, and even counterproductive, in educational contexts, where learners’ performance and progression must be considered.

In educational settings, a student who is likely to fail should not receive recommendations derived from similar low-performing peers, as this may reinforce ineffective behaviors or content exposure. Instead, such students should be guided by learning trajectories that have been proven to be successful, leveraging the behavior of students who managed to pass the course under similar conditions. However, this guidance must be realistic: recommendations should not be based solely on the behavior of top-performing students, as their strategies may be inaccessible or overwhelming for learners at risk.

This implies that ERSs must go beyond superficial similarity and adopt a performance-aware personalization strategy. The system should be able to differentiate between students who fail, barely pass, excel, or are likely to drop out, and generate recommendations calibrated to help each group move toward meaningful improvement. For students whose performance is near the passing threshold, the goal is to consolidate and maintain performance, while for those at risk of failing or dropping out, the focus is on remediation and support. Even among students who pass, the system should recognize that a learner achieving a minimum passing grade (e.g., 5/10) has different needs than one consistently scoring top marks (e.g., 10/10), and should adjust its recommendations accordingly.

Ultimately, ERSs must be sensitive to learner status and progression, and must abandon the assumption that behavioral similarity alone is a reliable proxy for pedagogical relevance. Recommendations should be driven not by whom the learner most resembles, but by the type of support that will most effectively help them succeed.
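The following sketch illustrates one possible way to implement this performance-aware strategy: learners are grouped by grade on the 0 to 10 scale used above, and each group is guided by the trajectories of past learners one band above it, rather than by their nearest behavioral neighbors or by top performers. The band edges (other than the 5/10 pass mark from the example), the group names, and the mapping between groups are illustrative assumptions; in a live course, the target learner’s group would come from a predicted, not a final, grade.

```python
PASS_MARK = 5.0  # 0-10 scale, matching the 5/10 example

def performance_group(grade, dropped_out=False):
    """Assign a performance band; edges other than PASS_MARK are illustrative."""
    if dropped_out:
        return "dropout_risk"
    if grade < PASS_MARK:
        return "fail"
    if grade < 7.0:
        return "borderline_pass"
    if grade < 9.0:
        return "solid_pass"
    return "excel"

# Each group learns from the band just above it, never jumping straight to top performers
REFERENCE_GROUP = {
    "dropout_risk": "borderline_pass",
    "fail": "borderline_pass",
    "borderline_pass": "solid_pass",
    "solid_pass": "excel",
    "excel": "excel",
}

def reference_peers(target_group, past_learners):
    """Ids of past learners whose trajectories should guide the target group."""
    wanted = REFERENCE_GROUP[target_group]
    return [learner_id for learner_id, grade, dropped in past_learners
            if performance_group(grade, dropped) == wanted]

# Hypothetical past cohort: (learner id, final grade, dropped out?)
past = [("a", 4.0, False), ("b", 5.5, False), ("c", 9.5, False), ("d", 6.0, False), ("e", 0.0, True)]
print(reference_peers("fail", past))  # → ['b', 'd']
```

Restricting the peer pool in this way can then be combined with any similarity-based recommender, which would search for similar learners only within the returned reference group.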

3.2. Pedagogical Alignment

ERSs must personalize the content while also respecting and supporting sound pedagogical structures. Unlike domains where items can be consumed in any order, learning often follows a sequential and hierarchical process, in which understanding advanced concepts depends on the mastery of foundational knowledge. An ERS disregarding this structure risks suggesting resources that may be misaligned with a student’s readiness, leading to confusion, frustration, or superficial learning.

To address this, ERSs must be aligned with curricular goals and instructional design, ensuring the pedagogical relevance and coherence of their recommendations. This involves respecting prerequisite relationships between topics, reinforcing prior knowledge before introducing new material, aligning resources with the desired learning outcomes, and accounting for learning style preferences. One potentially effective strategy is to integrate the pedagogical design considerations derived from the learning style models described in Section 2.2, expressing them as dependencies that can guide the recommendation process.
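Prerequisite relationships can be encoded as a directed graph and used both to filter recommendations and to derive a valid study order. The sketch below uses Python’s standard `graphlib` module (available since Python 3.9). The topic names and the prerequisite map describe a hypothetical programming course, and the filter deliberately targets new material only, leaving review resources for already mastered topics to a separate rule.

```python
from graphlib import TopologicalSorter

# Hypothetical prerequisite map: topic -> topics that must be mastered first
PREREQS = {
    "variables": set(),
    "conditionals": {"variables"},
    "loops": {"variables", "conditionals"},
    "functions": {"variables"},
    "recursion": {"functions", "conditionals"},
}

def ready_topics(prereqs, mastered):
    """New topics whose prerequisites are all mastered."""
    return sorted(t for t, pre in prereqs.items() if t not in mastered and pre <= mastered)

def filter_ready(candidates, prereqs, mastered):
    """Keep only (resource_id, topic) pairs the learner is ready for."""
    ready = set(ready_topics(prereqs, mastered))
    return [(rid, topic) for rid, topic in candidates if topic in ready]

def study_order(prereqs):
    """One curriculum order respecting every prerequisite; raises CycleError on cycles."""
    return list(TopologicalSorter(prereqs).static_order())

mastered = {"variables"}
candidates = [("r1", "loops"), ("r2", "functions"), ("r3", "conditionals"), ("r4", "variables")]
print(ready_topics(PREREQS, mastered))              # → ['conditionals', 'functions']
print(filter_ready(candidates, PREREQS, mastered))  # → [('r2', 'functions'), ('r3', 'conditionals')]
```

A resource on `loops` is withheld until `conditionals` is mastered, which is exactly the kind of readiness check a purely similarity-based recommender would not perform.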

In addition, it can be highly beneficial for ERSs to identify and promote effective learning patterns, which are sequences of resource consumption that are associated with successful results. By mining these patterns from historical data and validating them pedagogically, the system can recommend not just isolated resources, but structured learning paths that reflect proven strategies. For example, if students who consistently engage in a “video → quiz → reading” pattern tend to perform better, the system can guide other learners to similar sequences, provided that they match the current state and goals of the learner.
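A minimal version of this pattern mining can count, for every run of three consecutive resource types, how many learners followed it and what share of them passed. The histories below are invented for illustration, the pattern length `n` and the minimum support are assumptions, and the resulting rates are associations rather than causal effects, which is why the pedagogical validation mentioned above remains necessary.

```python
from collections import defaultdict

def ngrams(sequence, n):
    """Distinct runs of n consecutive resource types in one learner's history."""
    return {tuple(sequence[i:i + n]) for i in range(len(sequence) - n + 1)}

def pattern_pass_rates(histories, n=3, min_support=2):
    """Pass rate among learners who followed each pattern at least once."""
    support, passed = defaultdict(int), defaultdict(int)
    for sequence, did_pass in histories:
        for pattern in ngrams(sequence, n):  # a set: each learner counted once per pattern
            support[pattern] += 1
            passed[pattern] += int(did_pass)
    return {p: passed[p] / support[p] for p in support if support[p] >= min_support}

# Hypothetical histories: (sequence of resource types, passed the course?)
histories = [
    (["video", "quiz", "reading", "quiz"], True),
    (["video", "quiz", "reading"], True),
    (["reading", "video", "quiz"], False),
    (["video", "quiz", "reading", "forum"], False),
    (["reading", "reading", "quiz"], False),
]
print(pattern_pass_rates(histories))
```

Here only the video → quiz → reading pattern reaches the support threshold, with a pass rate of 2/3 against an overall pass rate of 2/5 in the toy data; real courses would require far more learners before such differences could be trusted.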

Incorporating such structured recommendation strategies helps transform ERSs from content filters into pedagogical agents, actively shaping the learning journey in a way that mirrors effective teaching practices. Rather than recommending the next most similar item, the system can recommend the next most pedagogically appropriate step, enhancing both the efficacy and coherence of the learning experience.

3.3. Evaluation of Recommendations

A crucial but often overlooked challenge in the design of ERSs lies in determining whether a recommendation was truly effective. In domains like e-commerce or entertainment, the success of a recommendation can easily be inferred from observable user behavior, such as purchasing an item, watching a movie to completion, or leaving a positive rating. However, in education, these proxies are inadequate and often misleading.

Educational impact unfolds over multiple timescales, such as short-term (e.g., completing an activity), medium-term (e.g., passing an assessment), and long-term (e.g., mastering a skill or completing a course). A resource might not have immediate visible effects, yet could be critical for understanding future concepts. In contrast, a resource might seem engaging or enjoyable, but fail to contribute meaningfully to learning outcomes. This temporal complexity makes it difficult to define the ground truth for the success of the recommendation.

Relying on student ratings or feedback is also problematic. Learners may not be able to accurately assess whether a resource contributed to their understanding, particularly when dealing with complex or unfamiliar content. Their evaluations may be influenced more by perceived difficulty or presentation style than by pedagogical value. Similarly, using behavioral signals such as clicks, time spent, or completion rates does not guarantee that the learner understood or internalized the content. These surface-level metrics may be insufficient to capture the depth of cognitive engagement or learning transfer and can lead to engagement optimization rather than actual educational benefit.

To address this, ERSs must move toward multi-faceted and pedagogically-informed evaluation strategies. These could include measuring changes in learner performance over time, detecting improvement in assessment outcomes after exposure to recommended resources, or modeling learning gains using cognitive or mastery-based metrics. In addition, evaluations must be contextualized within the history, goals, and starting point of each learner, recognizing that the same recommendation can have different impacts depending on the individual. Ultimately, defining and validating the success of the recommendation in education requires a paradigm shift, where the impact of learning, not just user behavior, becomes the primary objective function.
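One concrete mastery-oriented metric that could serve this purpose is the normalized learning gain often used in education research. It is the fraction of the possible improvement between a pre-test and a post-test that a learner actually achieved. The sketch below assumes that pre- and post-assessment scores share the same maximum and that learners can be grouped by whether they followed a recommendation. The scores are illustrative only. Unless exposure is randomized, a difference between groups is correlational rather than causal.

```python
from statistics import mean


def normalized_gain(pre, post, max_score=100.0):
    """Fraction of the possible improvement (max_score - pre) actually achieved."""
    if pre >= max_score:
        return None  # no room to improve: the gain is undefined for this learner
    return (post - pre) / (max_score - pre)


def mean_gain(pairs, max_score=100.0):
    """Average normalized gain over (pre, post) pairs, skipping undefined gains."""
    gains = [normalized_gain(pre, post, max_score) for pre, post in pairs]
    gains = [g for g in gains if g is not None]
    return mean(gains) if gains else None


# Hypothetical (pre, post) scores for learners who did / did not follow a recommendation.
followed = [(40, 70), (60, 80)]
not_followed = [(40, 52), (60, 68)]
print(mean_gain(followed), mean_gain(not_followed))
```

Because the gain is scaled by each learner's starting point, a weaker and a stronger student who each close half of their remaining gap receive the same value. This fits the call to contextualize evaluation within each learner's history.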

3.4. Ethical Use and Data Privacy

ERSs often rely on sensitive data, including performance metrics, behavioral traces, and personal information. As such, these systems must strictly adhere to data protection regulations (e.g., GDPR), ensuring that students’ data is collected, processed, and stored with transparency, security, and purpose limitation. Users should be informed about what data is collected and how it is used, with the possibility to opt in or out of data sharing and personalization features.

It is important to note that not all data used by ERSs carries the same level of sensitivity. For example, aggregated behavioral data, such as navigation paths, interaction sequences, or anonymized clickstream logs, typically pose less of a privacy risk and can often be utilized without compromising individual identity. These data types can provide valuable insight into effective learning patterns and support adaptive recommendations without requiring access to deeply personal or identifiable information. However, personal data such as names, emails, demographic attributes, or grades requires stricter safeguards, including anonymization, encryption, and controlled access.

Beyond compliance, ethical design must also address issues of bias, fairness, and transparency. RSs should avoid reinforcing existing inequalities, such as disproportionately directing certain demographic groups toward less challenging content or career-limiting paths. They must promote equity in access to educational opportunities, ensuring that all students, regardless of background, receive relevant and empowering recommendations. This perspective resonates with the principles of justice and fairness identified in international AI ethics frameworks. For example, the European Commission’s Ethics Guidelines for Trustworthy AI (2019) stress non-discrimination and fairness as fundamental requirements [34], while the UNESCO Recommendation on the Ethics of Artificial Intelligence (2021) highlights inclusivity and equitable access to AI benefits as global priorities [35].
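One simple screening check for the kind of disparity described above is to compare the average difficulty of recommended resources across learner groups. The sketch below assumes that each delivered recommendation is logged with a group label and a numeric difficulty. The function name and threshold are hypothetical. A gap is a signal for human review rather than proof of unfairness, because it may partly reflect differences in prior knowledge, which a fuller audit would control for.

```python
from collections import defaultdict


def difficulty_gap(recommendations, threshold=0.5):
    """recommendations: (group, difficulty) pairs, one per delivered recommendation.

    Returns (mean difficulty per group, largest gap between groups, flagged?).
    """
    if not recommendations:
        return {}, 0.0, False
    totals = defaultdict(float)
    counts = defaultdict(int)
    for group, difficulty in recommendations:
        totals[group] += difficulty
        counts[group] += 1
    means = {g: totals[g] / counts[g] for g in totals}
    gap = max(means.values()) - min(means.values())
    return means, gap, gap > threshold


means, gap, flagged = difficulty_gap([("A", 3), ("A", 4), ("B", 2), ("B", 2)])
print(means, gap, flagged)
```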

Importantly, learners should retain full autonomy over their educational journey. Although the system may provide suggestions based on data-driven insights, students must always have the freedom to ignore, question, or override recommendations. ERSs are intended to assist, not dictate, the learning process. Preserving learner autonomy is essential to foster motivation, self-regulation, and learning ownership, which are critical to long-term academic and personal development. Recommender interfaces should therefore clearly communicate the optional nature of suggestions and support independent exploration and decision-making. This is consistent with the principle of human agency and oversight, as emphasized in both the IEEE Ethically Aligned Design report (2019) [36] and the European Commission’s guidelines, which call for systems to remain under meaningful human control.

Finally, transparency and explainability represent a cornerstone of trustworthy ERSs. Students and educators should be able to understand, at least at a high level, why specific recommendations are generated. Providing interpretable explanations not only increases trust but also empowers educators to contextualize or adapt recommendations within their pedagogical strategies. Embedding such practices into the design of ERSs ensures alignment with ethical AI principles and reinforces the ultimate goal of these systems: to support equitable, transparent, and learner-centered education.

3.5. Scalability and Integration

For ERSs to be viable in real-world learning environments, they must be scalable and seamlessly integrable into the existing digital infrastructure used by educational institutions. This includes Learning Management Systems (LMSs), online course platforms, and Virtual Learning Environments (VLEs), which often come with their own constraints, data models, and user-interaction paradigms.

Scalability refers not only to the ability of the system to handle large volumes of users and resources, but also to its ability to adapt to different educational contexts, such as varying course structures, disciplines, or levels of education. An ERS designed for a university-level programming course must not assume the same structure or user behavior as one supporting primary-level mathematics. Therefore, ERSs must be modular, adaptable, and extensible, allowing institutions to customize their behavior and integrate them into heterogeneous curricula and pedagogical approaches.

Moreover, integration goes beyond technical compatibility. It also requires interoperability with existing data sources, such as grade books, activity logs, assessment results, and student profiles. This enables the system to generate more accurate and context-aware recommendations, leveraging rich historical and real-time data. Using open standards such as Learning Tools Interoperability (LTI) and Experience API (xAPI) can facilitate this integration, allowing ERSs to function as part of a broader learning ecosystem [37,38].
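As an illustration of the xAPI side, an activity is reported as a statement with an actor, a verb, and an object. The sketch below builds one such statement as JSON. It uses the ADL "completed" verb IRI, an account-based actor so that no e-mail address is transmitted, and an optional scaled score. The domain lms.example.org, the activity IRI, and the helper name are placeholders. Sending statements to a Learning Record Store is not shown.

```python
import json
from datetime import datetime, timezone


def completed_statement(learner_id, activity_iri, scaled_score=None):
    """Build an xAPI 'completed' statement (hypothetical helper; placeholder IRIs)."""
    statement = {
        "actor": {
            "objectType": "Agent",
            # Account-based identification avoids sending a personal e-mail address.
            "account": {"homePage": "https://lms.example.org", "name": learner_id},
        },
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/completed",
            "display": {"en-US": "completed"},
        },
        "object": {"objectType": "Activity", "id": activity_iri},
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    if scaled_score is not None:
        if not -1.0 <= scaled_score <= 1.0:
            raise ValueError("xAPI scaled scores must lie in [-1, 1]")
        statement["result"] = {"score": {"scaled": scaled_score}, "completion": True}
    return statement


stmt = completed_statement("learner-42", "https://lms.example.org/activity/quiz-3", 0.8)
print(json.dumps(stmt, indent=2))
```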

Finally, systems must maintain responsiveness and low latency, even as the number of learners and interactions grows. Delayed or outdated recommendations can reduce user trust and system effectiveness. Leveraging cloud-based infrastructure, parallel computing, and incremental model updates can help ensure that the system performs efficiently at scale, even under heavy use.

4. Proposed Architecture

To address the unique challenges of educational recommendation, this work proposes a modular and adaptable system architecture designed to support recommendations that are centered on the learner, pedagogically aligned, and ethically responsible. The architecture is structured into five primary layers: Data Layer, Processing Layer, Recommending Layer, Evaluation Layer, and Output Layer. Each layer plays a specific role in ensuring that recommendations are timely, relevant, contextually appropriate, and accessible to students.

It is important to note that the layers are always defined by the RS developer and are not meant to be modified by others. How the person integrating the system into a platform can use them depends solely on the capabilities provided by the developed system. The integrator's role is to ensure that the data required by the system is properly collected within the platform and transmitted following the structure designed by the system’s developer.

This layered approach facilitates scalability, modular development, and easier integration with current educational platforms. It also allows for clear separation of concerns: from data acquisition and transformation, to real-time student modeling, pedagogical alignment, and personalized recommendation delivery. Each layer is designed to operate independently and in coordination with the others, enabling the system to respond dynamically to student needs while respecting educational constraints and ethical principles.
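The separation of concerns can be sketched as a set of narrow interfaces, one per layer, wired together by a small pipeline. In the Python sketch below, each layer is a `typing.Protocol`, so any implementation with the right methods can be swapped in without touching the others. All class and method names are hypothetical. The Evaluation Layer is reduced to a hook that logs delivered recommendations, and the toy implementations exist only to make the example runnable.

```python
from typing import Protocol


class DataLayer(Protocol):
    def fetch(self, learner_id: str) -> dict: ...

class ProcessingLayer(Protocol):
    def build_learner_model(self, raw: dict) -> dict: ...

class RecommendingLayer(Protocol):
    def recommend(self, learner_model: dict, k: int) -> list[str]: ...

class EvaluationLayer(Protocol):
    def record(self, learner_id: str, items: list[str]) -> None: ...

class OutputLayer(Protocol):
    def render(self, learner_id: str, items: list[str]) -> str: ...


class Pipeline:
    """Wires the five layers together; any layer can be replaced independently."""

    def __init__(self, data: DataLayer, processing: ProcessingLayer,
                 recommending: RecommendingLayer, evaluation: EvaluationLayer,
                 output: OutputLayer):
        self.data, self.processing = data, processing
        self.recommending, self.evaluation, self.output = recommending, evaluation, output

    def run(self, learner_id: str, k: int = 3) -> str:
        raw = self.data.fetch(learner_id)
        model = self.processing.build_learner_model(raw)
        items = self.recommending.recommend(model, k)
        self.evaluation.record(learner_id, items)  # kept for later evaluation
        return self.output.render(learner_id, items)


# Toy implementations, for illustration only.
class InMemoryData:
    def __init__(self, store: dict):
        self.store = store
    def fetch(self, learner_id: str) -> dict:
        return self.store[learner_id]

class CompletedSetModel:
    def build_learner_model(self, raw: dict) -> dict:
        return {"completed": set(raw["completed"]), "catalog": raw["catalog"]}

class NextUnseen:
    def recommend(self, learner_model: dict, k: int) -> list[str]:
        done = learner_model["completed"]
        return [r for r in learner_model["catalog"] if r not in done][:k]

class LogEvaluation:
    def __init__(self):
        self.log = []
    def record(self, learner_id: str, items: list[str]) -> None:
        self.log.append((learner_id, list(items)))

class TextOutput:
    def render(self, learner_id: str, items: list[str]) -> str:
        return f"{learner_id}: " + ", ".join(items)
```

For example, `Pipeline(InMemoryData(store), CompletedSetModel(), NextUnseen(), LogEvaluation(), TextOutput()).run("s1", k=2)` returns the two first unseen resources for learner `s1`. Replacing `NextUnseen` with a real recommender leaves the other layers untouched.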

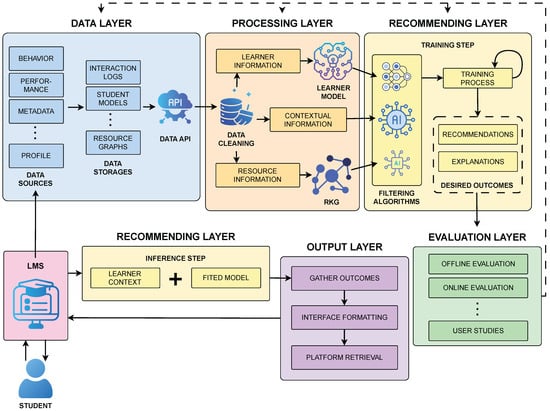

The following subsections detail the function and components of each architectural layer. Figure 1 illustrates the complete architecture proposed in this work.

Figure 1.

Proposed architecture for developing Educational Recommender Systems.

4.1. Data Layer

The Data Layer is responsible for collecting, organizing, and securely storing all relevant information used by the system. In the educational domain, a wide variety of data types is involved, each differing notably in terms of sensitivity, structure, and pedagogical relevance [5]. To enable effective personalization, the system must collect data from multiple sources while upholding privacy and ethical standards. Below are some examples of such data types:

- Behavioral Data: This includes elements such as clickstream logs, time spent on resources, scrolling activity, and the sequence in which learning materials are accessed. These traces provide valuable and low-risk information on learning behaviors and are especially useful for identifying engagement patterns, cognitive load indicators, and common paths among successful students.

- Performance Data: Results from resources such as quizzes, homework submissions, midterm and final assessments, and participation in graded activities can be used to estimate learner progress and competence. These are often considered sensitive and must be handled with appropriate care.

- Resource Metadata: Each educational resource (e.g., videos, readings, exercises) is annotated with features such as topic, difficulty level, format, pedagogical role (introduction, reinforcement, assessment), or estimated completion time. These attributes may help align recommendations with the learners’ current needs and instructional goals.

- Learner Profile Data: This encompasses student-specific information such as declared learning goals, prior knowledge (e.g., through diagnostic tests), historical interactions, risk status (e.g., low, medium, high likelihood of failure), and engagement trends. Profiles may be initialized at course entry and updated dynamically based on behavior and performance.
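The four data types above could be captured as simple typed records, which makes the expected structure explicit for integrators. The Python dataclasses below are one hypothetical schema. The field names, the 1 to 5 difficulty scale, and the risk labels are assumptions for illustration, not a format defined by the architecture.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class InteractionEvent:          # behavioral data
    learner_id: str
    resource_id: str
    action: str                  # e.g. "viewed", "submitted"
    timestamp: datetime
    duration_s: Optional[float] = None


@dataclass
class AssessmentResult:          # performance data (sensitive)
    learner_id: str
    assessment_id: str
    score: float
    max_score: float


@dataclass
class ResourceMetadata:
    resource_id: str
    topic: str
    difficulty: int              # e.g. 1 (easy) to 5 (hard)
    media_format: str            # "video", "reading", "exercise", ...
    pedagogical_role: str        # "introduction", "reinforcement", "assessment"
    est_minutes: float


@dataclass
class LearnerProfile:
    learner_id: str
    goals: list[str] = field(default_factory=list)
    prior_knowledge: dict[str, float] = field(default_factory=dict)  # topic -> mastery in [0, 1]
    risk_status: str = "unknown"  # "low", "medium", or "high" once estimated
```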

Once the required data is available to the system, it must be organized into dedicated repositories based on their intended purpose. This organization ensures that the data is structured to facilitate efficient processing in subsequent layers of the architecture. Examples of such repositories include:

- Interaction logs for temporal behavior analysis.

- Student models representing current states of the learner.

- Resource graphs encoding relationships between content units.

Although some data types, such as interaction paths or aggregated usage patterns, pose relatively low privacy risks, others, such as academic performance or personally identifiable information, are highly sensitive. The architecture, therefore, should incorporate robust data anonymization and encryption mechanisms whenever these sensitive types of data are present, ensuring that identifiable information is either transformed or securely protected before processing. Compliance with regulations such as GDPR is enforced at this layer.
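A common building block for this step is keyed pseudonymization. Each learner identifier is replaced by an HMAC-SHA256 digest, which is stable, so a learner's interactions can still be linked, but cannot be reversed without the secret key. The sketch below also drops direct identifiers such as name and e-mail. The field names are hypothetical, and the key is assumed to be managed outside the system (for example, in a secrets store). Under GDPR, pseudonymized data still counts as personal data, unlike fully anonymized data, so access controls remain necessary.

```python
import hashlib
import hmac


def pseudonymize(learner_id: str, secret_key: bytes) -> str:
    """Keyed hash: the same learner always maps to the same token, irreversible without the key."""
    return hmac.new(secret_key, learner_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(record: dict, secret_key: bytes, drop=("name", "email")) -> dict:
    """Remove direct identifiers and replace the learner id with its pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in drop}
    cleaned["learner_id"] = pseudonymize(record["learner_id"], secret_key)
    return cleaned
```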

To support real-time and historical analysis, this layer should include both batch processing components (for offline training and profiling) and streaming capabilities (to capture live learning data as students interact with the system). Additionally, it should expose standardized APIs through which the layers above it can retrieve data efficiently and securely.

In some platforms, such as Moodle, much of this data is stored natively, which means that the system integrator may not need to make significant modifications to enable data access. However, if the functions responsible for transferring data from the platform do not match those expected by the Data Layer of the system, a mismatch occurs. In this case, the integrator will need to develop an intermediate communication layer that will bridge the gap between the functions available on the platform and the requirements of the system. For data not collected by the platform, the integrator must design and implement new functions to provide the necessary information to the system.
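Such an intermediate layer is often a thin adapter that renames and converts fields. The sketch below assumes log rows shaped like those in Moodle's standard log store, with fields such as `userid`, `contextinstanceid` (the course-module id for module-level events), `action`, and `timecreated` stored as a Unix timestamp in seconds. It maps each row onto a hypothetical Data Layer event format. The target field names are assumptions about what a given system expects.

```python
from datetime import datetime, timezone

# Hypothetical mapping from platform log fields to the Data Layer's expected fields.
FIELD_MAP = {
    "userid": "learner_id",
    "contextinstanceid": "resource_id",
    "action": "action",
}


def adapt_log_row(row: dict) -> dict:
    """Translate one platform log row into the event structure the Data Layer expects."""
    event = {target: row[source] for source, target in FIELD_MAP.items()}
    event["learner_id"] = str(event["learner_id"])
    event["resource_id"] = str(event["resource_id"])
    # The platform stores times as Unix timestamps (seconds); convert to aware datetimes.
    event["timestamp"] = datetime.fromtimestamp(row["timecreated"], tz=timezone.utc)
    return event
```

Keeping the mapping in a single table means that a platform whose log schema differs only requires a new `FIELD_MAP`, not changes to the Data Layer itself.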

4.2. Processing Layer

The Processing Layer acts as the bridge between raw data and actionable recommendations. Its main function is to convert diverse data streams into structured and semantically rich representations suitable for use by recommendation algorithms. By dynamically modeling both learners and educational content in a context-aware manner, this layer is essential to achieving adaptivity and aligning recommendations with pedagogical goals.

Initially, this layer is responsible for cleaning, normalizing, and structuring the raw input data to align with the system’s requirements. These requirements are defined during the design phase of the RS and are based on factors such as the chosen recommendation algorithm and the specific input characteristics that the system relies on to generate recommendations. Essentially, this process involves aggregating the data according to the specifications of the system.

For example, behavioral data can be segmented into learning sessions, sequences, or weekly activity windows. Performance data can be normalized to account for differing grading scales and variability across assessment tasks. Missing or noisy data should be handled or filtered out, depending on their potential impact. Temporal alignments can also be performed to associate interactions with course progression stages (e.g., early, midterm, final weeks), allowing context-aware analysis.
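Two of these steps, sessionization and score normalization, can be sketched in a few lines. The session boundary below is an inactivity gap longer than 30 minutes, a common heuristic that is assumed here rather than prescribed by the architecture. Scores are min-max rescaled to [0, 1] so that tasks graded on different scales become comparable.

```python
from datetime import datetime, timedelta


def split_sessions(timestamps, gap=timedelta(minutes=30)):
    """Group event times into sessions; a new session starts after more than `gap` of inactivity."""
    sessions = []
    for t in sorted(timestamps):
        if sessions and t - sessions[-1][-1] <= gap:
            sessions[-1].append(t)
        else:
            sessions.append([t])
    return sessions


def normalize_score(score, min_score, max_score):
    """Rescale a grade to [0, 1] so tasks graded on different scales are comparable."""
    if max_score == min_score:
        raise ValueError("grading scale has zero width")
    return (score - min_score) / (max_score - min_score)
```

For instance, 7 out of 10 and 15 out of 20 become 0.7 and 0.75, and events at 10:00, 10:10, and 11:00 form two sessions.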

At the core of the Processing Layer lies the learner model. The learner model represents each user through the cleaned data available to the system, and it serves as the input from which the RS retrieves recommendations. Depending on the data it reflects, it can be a static model (e.g., built from attributes such as country or declared goal) or a dynamic model (e.g., built from attributes such as learning paths or trajectories). The developed system should favor dynamic models, which continuously refine each learner’s profile based on recent behavior and performance results [39]. These models may include characteristics such as:

- Knowledge-state representations, which can be encoded in different forms (e.g., per-topic mastery estimates).

- Engagement and motivation indicators, inferred from behavior patterns (e.g., decreased activity, abrupt dropouts).

- Estimation of risk status, using predictive models integrated into the LMS platform to detect students who are likely to fail or disengage.

- Learning preferences or pace, derived from resource consumption habits and task completion times.
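As one example of a dynamic knowledge-state component, the sketch below uses Bayesian Knowledge Tracing (BKT). BKT is a well-known technique chosen here for illustration and is not necessarily the model used in this work. After each answer on a topic, the mastery probability is updated with Bayes' rule using slip and guess probabilities, and then advanced by a learning-transition probability. The parameter values are arbitrary placeholders. In practice, they would be fitted per skill from historical data.

```python
from dataclasses import dataclass, field


@dataclass
class BKTParams:
    p_init: float = 0.2    # P(L0): prior probability the topic is already mastered
    p_learn: float = 0.15  # P(T): probability of learning at each practice opportunity
    p_slip: float = 0.1    # P(S): probability of answering wrong despite mastery
    p_guess: float = 0.2   # P(G): probability of answering right without mastery


def bkt_update(p_mastery: float, correct: bool, params: BKTParams) -> float:
    """Posterior mastery given one observed answer, followed by the learning transition."""
    if correct:
        num = p_mastery * (1 - params.p_slip)
        den = num + (1 - p_mastery) * params.p_guess
    else:
        num = p_mastery * params.p_slip
        den = num + (1 - p_mastery) * (1 - params.p_guess)
    posterior = num / den
    return posterior + (1 - posterior) * params.p_learn


@dataclass
class LearnerModel:
    mastery: dict = field(default_factory=dict)  # topic -> P(mastered)
    params: BKTParams = field(default_factory=BKTParams)

    def observe(self, topic: str, correct: bool) -> float:
        prior = self.mastery.get(topic, self.params.p_init)
        self.mastery[topic] = bkt_update(prior, correct, self.params)
        return self.mastery[topic]
```

With these placeholder parameters, a first correct answer raises the mastery estimate for a topic from 0.2 to 0.6, and a subsequent wrong answer lowers it again.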

Some platforms, such as Moodle, already include built-in functionalities to estimate learners’ risk status or to analyze user behavior patterns to infer their preferences or engagement. The characteristics included in the learner model are determined by the system developer, as they serve as input to the recommendation system. When building the learner model, the integrator of the system into the educational platform has no active role, since the data required to construct the model should already be accessible after the previous layer has been properly configured.

Another interesting approach is to construct Resource Knowledge Graphs (RKGs) that represent the logical and instructional relationships between resources that are part of the course [40]. RKGs are structures that link the different resources depending on the relations that the system developer has envisioned. The system itself constructs the RKG, but the system integrator within the educational platform is responsible for providing all necessary data in the Data Layer. However, the structure and nature of the graph are determined by the types of relationships defined by the system developer, which guide how the resources should be connected. These graphs can be particularly useful in critical situations where data may be missing or when the student requires a more guided learning path based on the instructor’s directives. Possible relations that may be represented are as follows:

- Prerequisite chains defined by instructors or inferred from learners’ access sequences.

- Topic similarity using Natural Language Processing techniques on resource descriptions.

- Co-occurrence and success patterns among past learners.
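A minimal RKG covering the first two relation types might store prerequisite edges and topic-similarity edges side by side. In the sketch below, the bag-of-words cosine similarity stands in for the NLP techniques mentioned above. Real systems might use TF-IDF or embeddings instead. Co-occurrence edges are omitted. The class, method names, and threshold are hypothetical. `graphlib` requires Python 3.9 or later, and `static_order()` raises `CycleError` if instructors accidentally define circular prerequisites.

```python
import math
from collections import Counter
from graphlib import TopologicalSorter


def cosine(a: str, b: str) -> float:
    """Bag-of-words cosine similarity between two resource descriptions."""
    va, vb = Counter(a.lower().split()), Counter(b.lower().split())
    dot = sum(va[w] * vb[w] for w in va)
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(sum(c * c for c in vb.values()))
    return dot / norm if norm else 0.0


class ResourceKnowledgeGraph:
    def __init__(self):
        self.prereqs = {}       # resource -> set of prerequisite resources
        self.similar = {}       # resource -> set of topically similar resources
        self.descriptions = {}  # resource -> text description

    def add_resource(self, rid, description, prerequisites=()):
        self.descriptions[rid] = description
        self.prereqs.setdefault(rid, set()).update(prerequisites)
        for p in prerequisites:
            self.prereqs.setdefault(p, set())

    def link_similar(self, threshold=0.5):
        ids = list(self.descriptions)
        for i, a in enumerate(ids):
            for b in ids[i + 1:]:
                if cosine(self.descriptions[a], self.descriptions[b]) >= threshold:
                    self.similar.setdefault(a, set()).add(b)
                    self.similar.setdefault(b, set()).add(a)

    def study_order(self):
        """One valid order in which every prerequisite precedes the resources that need it."""
        return list(TopologicalSorter(self.prereqs).static_order())

    def unlocked(self, completed):
        """Resources not yet completed whose prerequisites are all satisfied."""
        done = set(completed)
        return [r for r, pre in self.prereqs.items() if r not in done and pre <= done]
```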

Moreover, these RKGs can incorporate multiple dimensions or be enriched with metadata to better capture the characteristics of the resources. For example, metadata can include the type of resource (e.g., video, PDF), allowing the system to differentiate between content formats. If the system aims to consider student preferences, such as some learners favoring videos while others prefer reading, resources can be duplicated within the graph. Each instance represents the same content in a different format. This enrichment enables more personalized and flexible recommendation strategies based on both the structure of the content and the learner’s preferences.

Incorporating contextual information into the recommendation process may also improve relevance and pedagogical alignment. Unlike traditional domains, educational settings are highly dynamic and are constrained by both instructional design and the learner’s progression. As with the RKG, this contextual information must be provided by the system integrator in the Data Layer, following the structure and specifications defined by the system developer. Context-aware recommendation strategies can adapt to these variables by considering elements such as:

- Course timeline: The system should be aware of the academic calendar, including upcoming deadlines, exam periods, or the current week of the course.

- Instructor rules: Many courses enforce pedagogical logic through structures such as prerequisite modules or locked assessments. The system should respect these rules by ensuring that students are only guided toward resources they are eligible to access, or by helping them complete required preparatory content in the appropriate order.

- Cohort-level trends: Analyzing aggregate data from the entire class can uncover patterns of difficulty or success, thus reinforcing the recommendation of certain resources.
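The three contextual elements can be applied as a post-processing step over scored candidates. The sketch below removes resources the instructor has locked, boosts topics with an assessment due within a week, and adds a smaller boost in proportion to the share of the cohort that struggled with a topic. The input structures, the seven-day window, and the boost size are hypothetical choices.

```python
from datetime import date


def context_filter(candidates, today, locked, deadlines, cohort_difficulty,
                   urgent_days=7, boost=0.2):
    """Re-rank (resource_id, base_score, topic) candidates using course context.

    locked:            resource ids not yet released by the instructor (instructor rules)
    deadlines:         topic -> date of the next assessment on that topic (course timeline)
    cohort_difficulty: topic -> share of the class that struggled (cohort-level trends)
    """
    ranked = []
    for rid, score, topic in candidates:
        if rid in locked:
            continue  # never guide students toward resources they cannot access
        due = deadlines.get(topic)
        if due is not None and 0 <= (due - today).days <= urgent_days:
            score += boost  # the topic is assessed soon
        score += boost * cohort_difficulty.get(topic, 0.0)  # many peers found the topic hard
        ranked.append((rid, score))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)
```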

Incorporating these elements allows the system to generate recommendations that are not only personalized but also contextually relevant and aligned with the overall instructional design and learning objectives. This alignment is especially critical in educational contexts, where personalization must go beyond surface-level preferences to address deeper cognitive and perceptual differences between learners. By integrating principles from established learning style models (see Section 2.2), the system can diversify the presentation of content and the structure of learning paths, ensuring that students with varying learning preferences have equitable opportunities to engage, process, and retain information effectively. Consequently, the processing layer becomes a pedagogically-aware component that structures data for algorithmic consumption and also plays a foundational role in supporting flexible, multimodal, and learner-centered educational experiences.

4.3. Recommending Layer

The Recommending Layer is the core of the ERS, responsible for generating learning suggestions that are personalized, pedagogically meaningful, and ethically grounded. Unlike generic RSs, ERSs must account for the dynamic and evolving nature of both the learner and the learning environment. As the course progresses, the learners develop new skills, change their behavior patterns, and face different challenges, requiring the system to adapt its recommendations accordingly. In addition, instructors can introduce new materials or modify existing content in response to emerging needs, changing objectives, or specific classroom dynamics. Therefore, this layer must operate with a high degree of flexibility and context-awareness, aligning its outputs with ongoing changes in learner profiles, course structures, and educational goals such as progression, remediation, or re-engagement.

This layer contains two main components, each with its own inputs and outputs. The first component is the training phase, where the algorithms implemented by the system developer are fine-tuned. In this phase, the input consists of the various learner models, RKGs, and contextual information required to generate personalized recommendations. The output is a set of preliminary recommendations that are then subjected to an initial evaluation process (in the Evaluation Layer). The second component is the inference phase, where the input is the real-time interaction of the student with the course resources. Based on this input, the system generates recommendations that are presented to the student to guide their learning path.

Due to the nature of recommendations in the educational domain, this layer can use different recommendation algorithms or a combination of them. This allows several paradigms to be integrated to balance personalization, effectiveness, context-awareness, and instructional validity [41]. Some examples of recommendation algorithms are the following:

- Content-Based Filtering: Suggestions are based on resource features (topic, difficulty, prerequisites) and learner profiles (knowledge state, learning goals).

- Collaborative Filtering: Past interactions of similar students are used, with a critical caveat: learners predicted to fail should not receive recommendations based on others in the same risk group. Instead, they are guided using patterns from students who overcame similar challenges and eventually succeeded.

- Knowledge-Based Filtering: Pedagogical rules, such as prerequisite completion, resource type balancing, or topic coverage constraints, are embedded to preserve instructional coherence.
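The three paradigms can be combined in a simple weighted hybrid. In the sketch below, a knowledge-based rule first removes completed resources and resources whose prerequisites are unmet. A content-based score favors weak topics and difficulty near the learner's level. A collaborative score counts how many similar peers used the resource, considering only peers who passed, as the caveat above requires. Similarity is measured by Jaccard overlap of completed resources. The data layout, the weights, and the 1 to 5 difficulty scale are hypothetical, and this is an illustration rather than the algorithm of the proposed system.

```python
def jaccard(a: set, b: set) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def content_score(resource: dict, learner: dict) -> float:
    """Content-based: favor weak topics and difficulty close to the learner's level (1-5 scale)."""
    mastery = learner["mastery"].get(resource["topic"], 0.0)
    fit = 1 - abs(resource["difficulty"] - learner["level"]) / 4
    return (1 - mastery) * max(fit, 0.0)


def collaborative_score(resource_id: str, learner: dict, peers: list, min_sim: float = 0.2) -> float:
    """Collaborative: share of similar peers who used the resource, counting only peers who passed."""
    neighbors = [p for p in peers
                 if p["passed"] and jaccard(learner["completed"], p["completed"]) >= min_sim]
    if not neighbors:
        return 0.0
    return sum(resource_id in p["completed"] for p in neighbors) / len(neighbors)


def knowledge_ok(resource: dict, learner: dict) -> bool:
    """Knowledge-based rule: not yet completed and all prerequisites satisfied."""
    return (resource["id"] not in learner["completed"]
            and set(resource["prereqs"]) <= learner["completed"])


def hybrid_recommend(resources, learner, peers, k=3, w_content=0.5, w_collab=0.5):
    scored = [
        (r["id"], w_content * content_score(r, learner)
         + w_collab * collaborative_score(r["id"], learner, peers))
        for r in resources if knowledge_ok(r, learner)
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return [rid for rid, _ in scored[:k]]
```

Applying the knowledge-based rule as a hard filter rather than as a weighted term guarantees that no score, however high, can push a student toward content whose prerequisites are missing.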

It is important to note that the generated recommendations should be adapted according to the current status and trajectory of the learner during the course [42]. For example, for an at-risk learner, the focus should be placed on recovery and motivation, suggesting accessible but effective resources, often those that helped similar students transition from failing to passing. For passing learners, recommendations should aim to consolidate knowledge and ensure that they maintain progress, without assuming that their goals or needs align with those of high-performing students. For disengaged learners, content that regains attention and promotes re-entry into the learning process should be prioritized, potentially using short or interactive resources to lower re-engagement friction.
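These status-dependent priorities can be expressed as different ranking keys over the same candidate list. The sketch below assumes that each candidate carries a personalized `score`, a duration in minutes, an `interactive` flag, and a `recovery_rate`, a hypothetical statistic giving the share of previously failing students who passed after using the resource. The status labels and field names are illustrative.

```python
def _at_risk_key(c):
    # Recovery first: resources that helped similar students move from failing to passing.
    return (c["recovery_rate"], c["score"])


def _disengaged_key(c):
    # Re-engagement first: interactive, then shorter, items to lower friction.
    return (c["interactive"], -c["minutes"], c["score"])


def _passing_key(c):
    # Consolidation: keep the learner's own personalized ranking.
    return c["score"]


STATUS_KEYS = {"at_risk": _at_risk_key, "disengaged": _disengaged_key, "passing": _passing_key}


def rerank_for_status(candidates, status):
    """Order candidate resources according to the learner's current status."""
    key = STATUS_KEYS.get(status, _passing_key)
    return [c["id"] for c in sorted(candidates, key=key, reverse=True)]
```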

The system should move beyond recommending individual resources and instead propose learning sequences or short-term learning paths. This can be achieved by prioritizing sequences that have historically resulted in improved outcomes for learners with similar profiles, while also respecting the pedagogical dependencies encoded in the RKG. This approach helps optimize cognitive load, promote content diversity, and ensure adequate coverage, preventing learning experiences from becoming repetitive or too fragmented.
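Selecting a short learning path under these two criteria can be sketched as follows. Among candidate sequences observed for learners with similar profiles, keep those with enough supporting learners and whose steps respect the prerequisite map when taken in order. Then choose the sequence with the highest observed improvement rate. The candidate tuples, the prerequisite map, and the support threshold are hypothetical inputs.

```python
def respects_prereqs(sequence, completed, prereqs):
    """True if every step's prerequisites are done before that step is reached."""
    done = set(completed)
    for step in sequence:
        if not prereqs.get(step, set()) <= done:
            return False
        done.add(step)
    return True


def best_sequence(candidates, completed, prereqs, min_support=5):
    """candidates: (sequence, n_learners, n_improved) tuples from learners with similar profiles."""
    viable = [
        (improved / n, seq)
        for seq, n, improved in candidates
        if n >= min_support and respects_prereqs(seq, completed, prereqs)
    ]
    if not viable:
        return None
    return max(viable, key=lambda pair: pair[0])[1]
```

In the usage below, a sequence with a higher success rate is rejected because it places a quiz before its prerequisite video. A sequence backed by only three learners is ignored as insufficient evidence.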

In this layer, the system integrator is not expected to play an active role, as the implementation of the training and inference logic falls entirely under the responsibility of the system developer. However, in practice, it is possible that the developer has not fully implemented the connection between the educational platform and the inference component of the system. In such cases, it becomes the integrator’s responsibility to ensure that this connection is properly established, allowing the system to receive the necessary real-time input and deliver the recommendations effectively within the platform.

4.4. Evaluation Layer

The Evaluation Layer ensures that the recommendations generated by the system are relevant, effective, and pedagogically sound. In educational settings, measuring the success of a recommendation is more complex than in traditional domains, as the benefits may not be immediate or easily quantifiable. In domains like e-commerce or entertainment, validating a recommendation is often straightforward. However, in education, the impact of a recommendation may unfold gradually, and success may involve improvements in understanding, retention, or motivation. This highlights the need to adopt a broader and more nuanced perspective on what defines a valid recommendation.

The Evaluation Layer must incorporate various indicators to assess whether the recommendations have the desired effect [43]. These indicators help to infer whether the system’s suggestions support learning goals, even if direct feedback is unavailable or unreliable. Among the possible indicators, some are as follows:

- Observable changes in learner behavior.

- Improvements in academic performance over time.

- Patterns of continued engagement with recommended content.

Rather than focusing only on predictive accuracy or behavioral similarity, evaluation must be guided by pedagogical intent. A valid recommendation must help the learner move closer to meaningful learning outcomes, whether that means overcoming a weakness, maintaining steady progress, or staying engaged in the course. The system should be regularly reviewed to ensure that it supports, not replaces, these goals.

Evaluation is not a one-time process but an ongoing mechanism for system refinement. Insights gained from observing how students respond to recommendations can inform adjustments to the recommendation logic, personalization strategies, or even the course structure itself. This promotes a culture of evidence-based iteration and keeps the system aligned with both learner needs and instructional objectives.

Ideally, the evaluation should assess the effectiveness of the recommendations and the quality of the explanations provided by the system. In educational environments, transparency is crucial, not only for ethical reasons but also to support learner autonomy and trust. Ensuring that students (and educators) understand why a particular recommendation was made helps them make informed decisions and fosters reflective learning. Therefore, evaluation should include methods to evaluate whether the explanations are clear, meaningful, and aligned with pedagogical goals, ensuring that the system is accurate while also interpretable and accountable.

RS evaluation typically relies on three complementary strategies [44]. These strategies offer different perspectives for assessing the performance and impact of RSs, providing a more comprehensive understanding of their effectiveness in practical applications. Specifically, the main evaluation strategies are the following:

- Offline evaluation, which involves assessing algorithm performance using historical or synthetic datasets, employing metrics such as precision, recall, or NDCG.

- Online evaluation, where the system is deployed in a live environment and its effectiveness is measured through methods such as A/B testing or real-time performance monitoring.

- User studies, in which learners or instructors interact directly with the system, providing qualitative feedback on aspects such as usability, perceived relevance, and overall satisfaction.
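For the offline strategy, the ranking metrics named above can be computed per learner from a ranked recommendation list and a set of held-out relevant items. In an educational setting, deciding what counts as "relevant" is itself a design choice, for example resources that preceded an improvement in assessment results. The sketch below uses binary relevance and a logarithmic position discount for NDCG.

```python
import math


def precision_at_k(recommended, relevant, k):
    """Share of the top-k recommendations that are relevant."""
    return sum(r in relevant for r in recommended[:k]) / k


def recall_at_k(recommended, relevant, k):
    """Share of relevant items that appear in the top-k recommendations."""
    if not relevant:
        return 0.0
    return sum(r in relevant for r in recommended[:k]) / len(relevant)


def ndcg_at_k(recommended, relevant, k):
    """Binary-relevance NDCG: DCG of the ranking divided by the DCG of an ideal ranking."""
    dcg = sum(1 / math.log2(i + 2) for i, r in enumerate(recommended[:k]) if r in relevant)
    ideal = sum(1 / math.log2(i + 2) for i in range(min(len(relevant), k)))
    return dcg / ideal if ideal else 0.0
```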

It is essential to leverage the Output Layer to conduct online evaluations and user studies, as these strategies require real interactions between the student, the platform, and the recommendation system. In these cases, the inference component of the Recommending Layer must be fully operational, allowing recommendations to be delivered and monitored in real time. Conversely, the Output Layer is not required if the goal is limited to performing an offline evaluation. In such scenarios, it is sufficient to test the results generated during the training phase of the Recommending Layer using historical data, without involving any real-time interaction or platform integration. In educational settings, combining these evaluation approaches is particularly valuable as it allows capturing both the quantitative effectiveness of the system and its qualitative educational impact.
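For online evaluation, an A/B comparison of pass rates could use a standard two-proportion z-test. Arm A would be learners who receive recommendations and arm B a control group. The sketch below assumes random assignment to arms and samples large enough for the normal approximation. Pass rate is only one possible outcome measure, and the function is a generic statistical test rather than a component of the proposed architecture.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Two-sided z-test (normal approximation) for a difference between two pass rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both arms all-pass or all-fail: no evidence of a difference
    z = (p_a - p_b) / se
    # Standard normal CDF via the error function: Phi(x) = 0.5 * (1 + erf(x / sqrt(2))).
    phi = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    return z, 2 * (1 - phi)
```

For example, 60 of 100 learners passing in arm A against 40 of 100 in arm B gives z ≈ 2.83 and a two-sided p-value below 0.01.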

In this layer, the system integrator within the platform has no direct responsibility, as it is the responsibility of the developer to ensure that the system functions correctly and produces valid recommendations. The design, training, and evaluation of the algorithms fall entirely within the scope of the developer. However, the integrator may choose to perform online evaluations or user studies if desired.

4.5. Output Layer

The Output Layer serves as the interface between the RS and the learner. Its main role is to present the retrieved recommendations to students in a clear, transparent, and pedagogically supportive way. This layer is essential to ensure that all the suggestions provided are not only technically accurate but also understandable, practical, and meaningful for all types of students.

These recommendations must be presented through the educational platform’s user interface and, whenever possible, be accessible to the student from any view of the platform, integrating the RS directly into the students’ learning environment. They can be presented in different delivery formats, such as resource suggestions, learning paths, or recovery plans. Recommendations must be context-aware and should therefore be adapted to the learner’s current needs and state, such as recent activity, predicted outcome, or course timeline. For example, a student with limited time before an exam may receive a condensed review sequence rather than an extended remediation plan.

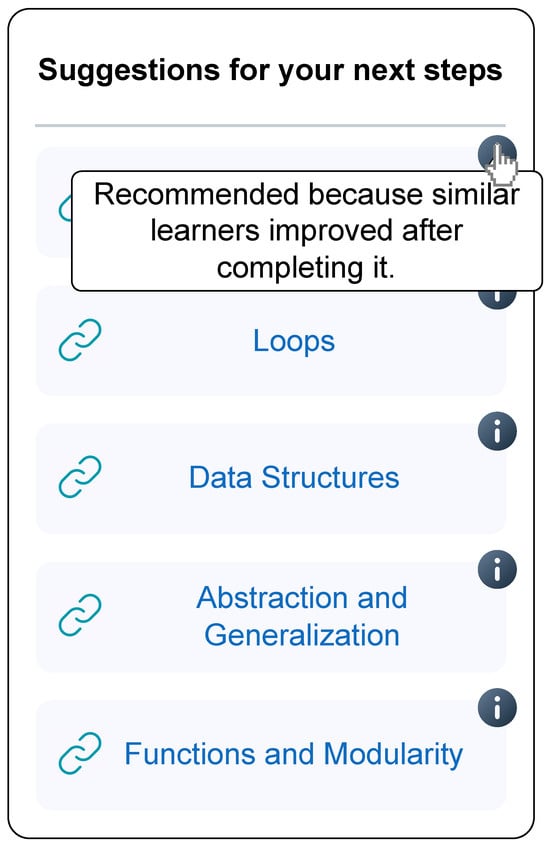

Another important trait to consider is the promotion of trust and user engagement. For this reason, each recommendation should be accompanied by a brief explanation highlighting the rationale behind the suggestion. This interpretability is essential in educational contexts, where students and instructors must be able to understand and reflect on the reasoning behind system decisions [45]. Statements like “Recommended because similar learners improved after completing it”, “Covers a prerequisite for an upcoming assessment”, or “You struggled with similar topics earlier” are good examples of indicating the reasons to students.
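A simple way to keep explanations faithful is to have the recommender emit reason codes alongside each recommendation and to render them through fixed templates, such as the example statements above. The reason codes and the function name below are hypothetical.

```python
# Hypothetical reason codes emitted by the Recommending Layer, mapped to learner-facing text.
EXPLANATIONS = {
    "peer_improvement": "Recommended because similar learners improved after completing it",
    "prerequisite": "Covers a prerequisite for an upcoming assessment",
    "past_difficulty": "You struggled with similar topics earlier",
}


def explain(resource_title, reason_codes):
    """Combine the templates for all known reason codes into one short explanation."""
    reasons = [EXPLANATIONS[c] for c in reason_codes if c in EXPLANATIONS]
    if not reasons:
        return f"{resource_title} (suggested)"
    return f"{resource_title}: " + "; ".join(reasons) + "."
```

Because the codes come from the same signals that produced the score (a prerequisite rule, peer outcomes, or the learner's own history), the text shown to students reflects the actual rationale rather than a post-hoc justification.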

This layer must always reinforce the learners’ autonomy, aligning with ethical design principles. This approach promotes self-regulation and student agency, encouraging learners to take ownership of their learning journey while still receiving support from the RS. Some of these ethical principles are as follows:

- Recommendations must be non-binding and clearly labeled as suggestions.

- Users must be able to dismiss, postpone, or select alternative resources.

- The system must respect user preferences.
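Honoring "dismiss" and "postpone" requires the Output Layer to remember learner decisions and filter later suggestions accordingly. The class below is a minimal, hypothetical sketch. Dismissed resources are never shown again, and postponed ones stay hidden until a chosen date.

```python
from datetime import date, timedelta


class RecommendationInbox:
    """Tracks learner decisions so that suggestions remain optional and non-binding."""

    def __init__(self):
        self.dismissed = set()
        self.postponed = {}  # resource id -> date from which it may be shown again

    def dismiss(self, rid):
        self.dismissed.add(rid)

    def postpone(self, rid, today, days=3):
        self.postponed[rid] = today + timedelta(days=days)

    def visible(self, ranked_ids, today):
        """Filter a ranked list, dropping dismissed items and items still postponed."""
        return [r for r in ranked_ids
                if r not in self.dismissed and self.postponed.get(r, today) <= today]
```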

These recommendations should be delivered through a well-designed interface that aligns with usability principles and supports intuitive interaction to ensure effectiveness. The interface should provide contextual explanations, minimize cognitive load, and promote learner autonomy by allowing students to explore and reflect on suggestions. To support the diversity present in learners, recommendations can also be delivered through multimodal channels, such as text, visual tags, or optional notifications, and respect accessibility standards, such as screen-reader compatibility, customizable font sizes, and colors. This ensures the RS is inclusive and usable in a broad student population.

As with the Recommending Layer, the system integrator is generally not expected to have any responsibilities within this layer beyond ensuring that the system is properly deployed and enabled on the platform. However, if the system developer has not implemented the Output Layer, this task falls to the integrator, who must design and implement the user interface that presents the generated recommendations and connect it to the system. This includes ensuring that recommendations reach the appropriate components of the educational platform in a format that is usable and accessible to learners.

5. Use Case

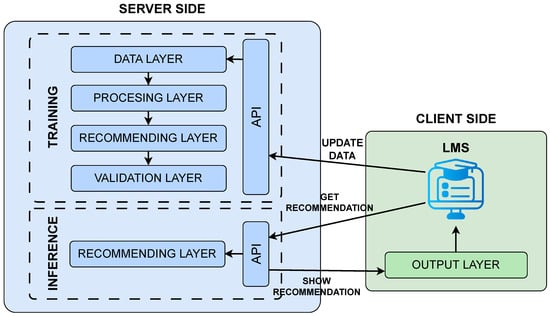

One practical and impactful use case for ERSs is their integration into widely used LMSs such as Moodle. Moodle is an open-source platform used by educational institutions worldwide to manage and deliver online learning. One of its main advantages is its modular architecture, which allows the system to be easily extended through plugins. These plugins enable the incorporation of new functionalities, including intelligent personalization features. Moreover, Moodle supports integration with external services over the internet, which makes it well suited to client-server architectures in which complex processing is handled externally while the user experience remains seamless. In this client-server setup, the Moodle platform acts as the client, while the host of the developed RS acts as the server. Figure 2 illustrates the communication protocol between the client and server sides.

Figure 2.

Example of communication protocol between Moodle (client side) and the RS (server side).

This architecture enables the recommendation service to scale independently from the Moodle instance, maintaining a clear separation between the learning environment and the intelligent components of the system. This modularity not only facilitates the evolution of the RS, allowing the integration of more sophisticated models and data-driven strategies over time, but also minimizes the need for invasive changes to the LMS. This flexible design aligns well with current trends in educational technology, where the acceptance and effective use of digital tools depend on their seamless integration into existing platforms [46]. As educators increasingly seek to design learning experiences that enhance student engagement, adaptable systems like this offer a promising foundation for embedding personalization into mainstream educational workflows.

To detail the role of the system integrator, the steps required to include the system in Moodle are explained below. We assume that the system provides no Output Layer and that neither the Data Layer nor the inference phase of the Recommending Layer communicates directly with the platform. In this use case, the system developer has exposed a set of API functions through which all the necessary data can be supplied to the system. We also assume that the integrator does not perform any evaluation.

To facilitate adoption, the complete implementation of the proposed recommendation system, including source code, configuration files, and example data structures, is available at https://github.com/almtav08/moodle_recom (accessed on 14 September 2025), and can be deployed in any Moodle platform. The repository includes detailed instructions to guide technical developers and system integrators through the setup process, ensuring that the system can be effectively incorporated into Moodle or similar LMS platforms with minimal effort.

5.1. Data Layer Integration

Moodle automatically records both the interaction data between students and platform resources and the performance data, so no additional data collection mechanisms need to be implemented. However, a function must be developed that, upon each interaction, retrieves the relevant data, formats it according to the structure defined by the system developer, and sends it to the RS via the corresponding API. Moodle facilitates this process by supporting custom plugins, written as PHP scripts, that can access the Moodle database and perform the required operations, including data transformation and communication with external systems.
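The following sketch illustrates the format step for one interaction. In the described setup this logic would live in a Moodle PHP plugin; Python is used here only for illustration. The input keys are assumed to resemble fields of Moodle's standard log records, and the output schema (`user_id`, `resource_id`, `event`, and so on) is hypothetical, since the actual structure is defined by the system developer.

```python
import json


def format_interaction(log_row: dict) -> str:
    """Convert one LMS log record into the JSON body sent to the RS API.

    Input keys are assumed (userid, courseid, contextinstanceid, eventname,
    timecreated, optional grade); the output schema is hypothetical.
    """
    grade = log_row.get("grade")
    payload = {
        "user_id": int(log_row["userid"]),
        "course_id": int(log_row["courseid"]),
        "resource_id": int(log_row["contextinstanceid"]),
        "event": log_row["eventname"],
        "timestamp": int(log_row["timecreated"]),          # Unix seconds
        "grade": float(grade) if grade is not None else None,  # performance data
    }
    return json.dumps(payload)
```

The resulting string would then be sent as the body of a request to the RS endpoint chosen by the developer.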

A possible approach for other types of student data, such as demographic information, prior knowledge, or learning goals, is to use Moodle’s built-in questionnaire or survey tools to collect this information. A custom script can then be developed to extract the responses, format them according to the structure required by the system, and transmit the data through the appropriate API.
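A similar transformation can turn questionnaire responses into a learner-profile payload. The question keys, the 0-5 prior-knowledge scale, and the semicolon-separated goals field below are hypothetical examples of what such a survey might collect.

```python
def build_profile(user_id: int, answers: dict) -> dict:
    """Map questionnaire answers to a learner-profile payload.

    Keys ("age_range", "prior_knowledge", "goal") and the 0-5 scale are
    hypothetical; missing answers fall back to neutral defaults.
    """
    level = int(answers.get("prior_knowledge", 0))
    if not 0 <= level <= 5:
        raise ValueError("prior_knowledge must be on a 0-5 scale")
    goals = [g.strip() for g in answers.get("goal", "").split(";") if g.strip()]
    return {
        "user_id": user_id,
        "demographics": {"age_range": answers.get("age_range")},
        "prior_knowledge": level,
        "goals": goals,
    }
```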

For the data required to construct the RKG, it is sufficient to provide tuples that link pairs of resources according to the relationships defined by the system developer. For example, given a set of 10 resources and two distinct types of relationships, the input could consist of triples of the form (source resource, relationship type, target resource). From these data, the corresponding graph structure can be generated. The graph can additionally be enriched with metadata by providing tuples that associate each resource with its metadata attributes, for example of the form (resource, attribute, value). All of this information must be transmitted to the system through the corresponding API, following the format and structure established by the developer.
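The construction of the graph from such tuples can be sketched as follows. The representation (a node dictionary holding metadata plus one adjacency map per relationship type) is an assumption for illustration, not necessarily how the RKG is stored by the system.

```python
from collections import defaultdict


def build_rkg(triples, metadata=()):
    """Build a typed resource graph from (source, relation, target) triples.

    metadata: optional (resource, attribute, value) tuples attached to nodes.
    """
    nodes = {}
    edges = defaultdict(lambda: defaultdict(list))
    for src, rel, dst in triples:
        nodes.setdefault(src, {})   # register both endpoints as nodes
        nodes.setdefault(dst, {})
        edges[rel][src].append(dst)
    for res, attr, value in metadata:
        nodes.setdefault(res, {})[attr] = value
    # Convert nested defaultdicts to plain dicts before returning.
    return {"nodes": nodes, "edges": {rel: dict(adj) for rel, adj in edges.items()}}
```

For example, `build_rkg([("r1", "prerequisite", "r2")], [("r1", "type", "video")])` yields one prerequisite edge from `r1` to `r2` and records `r1` as a video.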

Finally, contextual information can be obtained through various means, depending on its nature. For example, course-related data, such as the timeline or schedule, is natively collected by Moodle. Student behavior patterns or trends may be computed by built-in Moodle mechanisms or plugins that make the results available to the system. Conversely, if contextual information is based on instructor-defined rules or pedagogical constraints, the system integrator is responsible for implementing the functionality that extracts this information and transmits it to the system through the appropriate API, following the structure defined by the developer.
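For instructor-defined rules, one possible form is an availability window per resource that filters candidate recommendations against the course timeline. The rule format below (a mapping from resource identifier to an opening and closing date) is a hypothetical example, not the system's actual constraint language.

```python
from datetime import date


def apply_course_context(candidates, rules, today):
    """Keep only candidates allowed by instructor-defined availability rules.

    rules: hypothetical mapping resource_id -> (open_date, close_date);
    resources without a rule are always allowed.
    """
    allowed = []
    for rid in candidates:
        window = rules.get(rid)
        if window is None or window[0] <= today <= window[1]:
            allowed.append(rid)
    return allowed
```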

5.2. Recommending Layer Integration

To integrate the inference phase, Moodle provides the option to create a specific type of plugin known as a Block. These blocks enable the addition of custom components to the user interface within each course, allowing for the dynamic display of system outputs, such as recommendations. A block typically consists of two main components: a back-end component responsible for processing the necessary information (e.g., retrieving and preparing recommendations), and a front-end component that handles the presentation of this information to the user in a visually appropriate format.

The integration of this layer is tied to the development of the back-end component of the block. Specifically, this component is responsible for identifying the currently logged-in user and making a request to the system’s recommendation API. Based on this request, the system processes relevant learner data, such as prior interactions, learner model, or contextual information, and returns a set of raw recommendations, with their corresponding explanations. These recommendations can then be transmitted to the front-end component for visualization within the course interface.
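The back-end step can be sketched as building the request for the logged-in user and validating the response. A real Moodle block would implement this in PHP; this Python sketch assumes a hypothetical `GET /recommendations` endpoint with `user`, `course`, and `k` parameters and a JSON response containing a `recommendations` list, none of which are confirmed by the source.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen


def build_request_url(base_url: str, user_id: int, course_id: int, k: int = 5) -> str:
    """URL for a hypothetical GET /recommendations endpoint on the RS server."""
    query = urlencode({"user": user_id, "course": course_id, "k": k})
    return f"{base_url.rstrip('/')}/recommendations?{query}"


def parse_recommendations(body: str) -> list:
    """Keep only well-formed items: each needs a resource id and an explanation."""
    items = json.loads(body).get("recommendations", [])
    return [
        {"resource": it["resource"], "explanation": it["explanation"]}
        for it in items
        if "resource" in it and "explanation" in it
    ]


def fetch_recommendations(base_url: str, user_id: int, course_id: int,
                          timeout: float = 5.0) -> list:
    """Request recommendations for the current user and pass them on to the front end."""
    with urlopen(build_request_url(base_url, user_id, course_id), timeout=timeout) as resp:
        return parse_recommendations(resp.read().decode("utf-8"))
```

Validating the response on the client side keeps a malformed server reply from breaking the course page.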

5.3. Output Layer Integration

The integration of this layer is linked to the front-end component of the block. At this stage, a user interface must be designed to present the recommendations and their corresponding explanations to the student. This interface should communicate the information clearly and concisely while remaining visually consistent with the platform’s design to ensure usability and a seamless user experience. Figure 3 shows the implementation of this front-end component in the Moodle platform.
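A minimal sketch of the presentation step renders each recommendation with its explanation as an HTML fragment, clearly labeled as suggestions, and escapes all text so that no markup is injected into the course page. The input fields (`url`, `title`, `explanation`), the label text, and the CSS class names are hypothetical; in Moodle the actual markup would follow the platform's own templates and theme.

```python
from html import escape


def render_block(recommendations: list) -> str:
    """Render recommendations and their explanations as an HTML fragment."""
    if not recommendations:
        return "<p>No suggestions right now.</p>"
    items = "".join(
        f'<li><a href="{escape(r["url"])}">{escape(r["title"])}</a>'
        f'<small class="why">{escape(r["explanation"])}</small></li>'
        for r in recommendations
    )
    # Label the list explicitly as non-binding suggestions.
    return f'<p class="label">Suggestions</p><ul class="recommendations">{items}</ul>'
```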

Figure 3.

Use Case example of how the Output Layer of RSs can be implemented in a Moodle platform.

5.4. Institutional Integration

Although the system is designed for seamless integration with standard LMS platforms such as Moodle, we acknowledge that institutions may vary in terms of available technical resources and staff expertise. This section outlines the minimum requirements for deployment and clarifies the level of institutional effort involved.

The proposed architecture already includes native capabilities to extract and process LMS content. Specifically, in the case of Moodle, the system can automatically retrieve all course resources through direct database access or APIs, without requiring any additional development. The only requirement from the integrator is to grant the system permission to access the relevant data sources. As a result, instructors or course designers are not expected to manually input resource data or interaction logs.

More importantly, the system provides a dedicated configuration interface through which instructors can:

- Select which resources should be included in the recommendation process.

- Define pedagogical relations among resources (currently, prerequisite order and revisitation).

This interface eliminates the need for manual configuration or programming knowledge, enabling instructors to create structured learning paths with minimal effort. These relationships are then used to construct the RKG, which serves as the backbone of the recommendation engine.
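Prerequisite relations define a partial order, so a learning path consistent with them can be obtained by topological sorting. The sketch below uses Python's standard `graphlib` module (Python 3.9+); the function name and the (before, after) pair format are hypothetical, and this is one possible way of ordering instructor-selected resources rather than the recommendation engine's own method. Contradictory relations (a cycle) raise `graphlib.CycleError`.

```python
from graphlib import TopologicalSorter


def learning_path(selected, prerequisites):
    """Order instructor-selected resources so that prerequisites come first.

    selected: resources included in the recommendation process.
    prerequisites: iterable of (before, after) pairs; pairs involving
    unselected resources are ignored.
    """
    ts = TopologicalSorter({r: set() for r in selected})
    for before, after in prerequisites:
        if before in selected and after in selected:
            ts.add(after, before)  # 'after' depends on 'before'
    return list(ts.static_order())
```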

From a technical perspective, the only requirement for deployment is the installation of lightweight plugins within the LMS. These plugins are responsible for securely fetching recommendations from the external engine and displaying them within the Moodle interface. All necessary scripts, API endpoints, and installation instructions are available in the open-source repository (accessed on 14 September 2025): https://github.com/almtav08/moodle_recom.

To run the system, institutions must provide a server, either an internal machine or a lightweight cloud-based instance, that is accessible over the internet and can host the recommendation engine. Once deployed, the engine operates independently of Moodle’s internal logic and requires only occasional maintenance or updates.

A minimum viable deployment can typically be completed within one week by a developer or LMS administrator. The required technical effort is limited to:

- Installing the provided Moodle plugins.

- Configuring the connection between Moodle and the external recommendation engine.

- Hosting the recommendation engine in an accessible server environment.

By shifting the integration workload away from software engineering toward pedagogical configuration and by providing prebuilt integration tools, the system significantly reduces the barrier to adoption. Institutions with limited technical capacity can still benefit from personalized learning support, leveraging existing course structures and instructor-defined relationships.

Overall, the architecture supports a realistic and scalable integration pathway suitable for most educational contexts. Its modular design enables gradual adoption, while the open-source distribution and reliance on pedagogical rather than behavioral data ensure broad applicability and long-term flexibility.

5.5. Technical Requirements and Portability

The current implementation of the proposed framework has been developed and validated for the Moodle LMS. In order to ensure reproducibility and facilitate adoption, we provide the following technical details:

- Prerequisites: The ERS is hosted externally as a server-side service. Moodle requires network connectivity to the service, and the installation of a custom plugin (provided in the repository) that manages API communication. PHP 7.4+ and a database driver compatible with the institution’s Moodle installation are required.

- Moodle version: The plugin has been tested with Moodle versions 4.0 and above, which ensure compatibility with the plugin system and web service APIs. Earlier versions of Moodle (3.x) may require adjustments in database queries and API calls.

- Portability to other LMSs: Although Moodle was selected for the reference implementation due to its widespread use and modular plugin architecture, the framework is not limited to Moodle. Integration with other LMSs (e.g., Canvas, Blackboard, Open edX) can be achieved by developing equivalent plugins or middleware components that: (i) collect learner interaction data; (ii) transmit it to the recommender service following the expected API format; and (iii) present the retrieved recommendations in the LMS user interface. Since the framework follows open standards (e.g., LTI, xAPI), portability is feasible with moderate development effort.
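As an illustration of step (i) on another LMS, an interaction could be expressed as an xAPI statement with the required actor, verb, and object. The verb IRIs below are from the ADL vocabulary; the function, its parameters, and the choice of an account-based actor (which avoids sending e-mail addresses) are assumptions, and the recommender's own API format would still need to be followed when forwarding the data.

```python
# ADL verb IRIs for a few common interactions.
ADL_VERBS = {
    "completed": "http://adlnet.gov/expapi/verbs/completed",
    "attempted": "http://adlnet.gov/expapi/verbs/attempted",
    "experienced": "http://adlnet.gov/expapi/verbs/experienced",
}


def to_xapi(lms_home: str, user_id: str, verb: str,
            activity_iri: str, activity_name: str) -> dict:
    """Build a minimal xAPI statement (actor, verb, object) for one interaction.

    Raises KeyError for verbs not listed in ADL_VERBS.
    """
    return {
        # Account-based actor: identifies the learner by LMS account, not e-mail.
        "actor": {"objectType": "Agent",
                  "account": {"homePage": lms_home, "name": user_id}},
        "verb": {"id": ADL_VERBS[verb], "display": {"en-US": verb}},
        "object": {
            "objectType": "Activity",
            "id": activity_iri,
            "definition": {"name": {"en-US": activity_name}},
        },
    }
```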

By clarifying these requirements, we aim to facilitate the practical adoption of the proposed system across different institutional settings and ensure that the framework can evolve beyond Moodle-based deployments.

6. Evaluation

The effective assessment of ERSs is a critical aspect that goes beyond technical performance. It must also capture pedagogical relevance and user perceptions to ensure the system’s applicability in real learning contexts. This section presents the evaluation processes conducted for the proposed system, describing the methods, criteria, and instruments employed to analyze the perceived usefulness and pedagogical impact of the system.

6.1. Evaluation Setup

The evaluation of the proposed ERS was designed to provide evidence of its feasibility, pedagogical potential, and practical integration into an educational context. Given that the primary goal of this stage was not to assess the ultimate quality of the recommendations in real courses, but rather to determine the system’s viability and acceptance, a multi-perspective evaluation setup was adopted.

The evaluation combined expert-based assessment with usability testing. Twelve professors and twelve students participated; they were selected to span different age groups and to ensure gender balance. The participants came from Spain and Italy, which allowed the study to incorporate perspectives from two different educational contexts. This cross-national composition contributes to a more diverse and representative evaluation of the system’s applicability and usability.