1. Introduction

Modern data analytics pipelines routinely generate large sets of structured patterns, such as frequent itemsets and association rules extracted from transactional data, sequential motifs inferred from logs and clickstreams, and subgraphs extracted from networked settings. While algorithms have improved the scalability of pattern discovery, the practical utility of pattern mining ultimately depends on how effectively such findings are configured, indexed, stored, and queried.

Here, truly interactive pattern retrieval, far from being a secondary aspect of the discovery process, must be treated as a fundamental resource. It should enable diverse query types (exact, top-k, and approximate), permit diverse representations and rich metadata, and achieve interactive response times under realistic operating conditions. This work argues that pattern retrieval is a bridge between mining work and decision-making, emphasising the need for repository organisation, index schemes, and access mechanisms that support scalable, intelligible, and actionable pattern use across a range of domains reaching well beyond immediate experimental settings.

1.1. Motivation for Pattern Retrieval Efficiency

With increased focus on actionable knowledge, pattern extraction, including frequent itemsets, sequences, subgraphs, or association rules, has become more sensitive to performance metrics [

1,

2]. Though notable progress has been made in algorithmic approaches making larger and more complex patterns amenable to mining, little research has investigated the effectiveness of the pattern searching process itself. This effectiveness goes beyond outright computation speed; it is critical to nurture auxiliary tasks, such as understanding retrieved patterns, consolidating models, managing user feedback, and performing real-time analysis execution [

3,

4]. To orient the reader within the wider landscape,

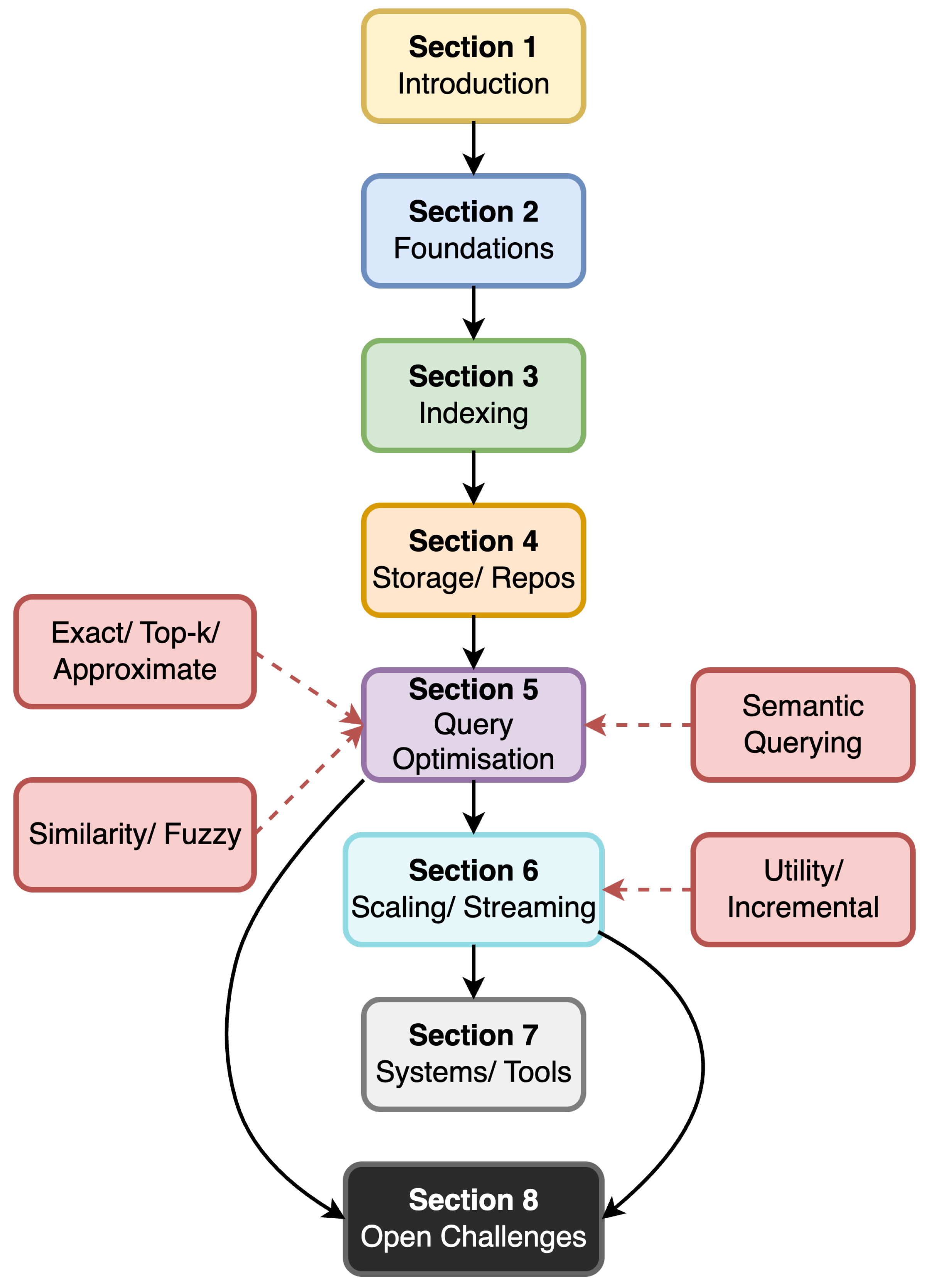

Figure 1 provides a taxonomy-style classification with the targets (in red) for this review.

The lack of strong data organisation and access paradigms affects pattern mining, largely through redundancy, latency, and limited scalability in query execution, including in approaches based on cloud pattern mining through hybrid meta-heuristic optimisations [5,6]. Because the number of mined patterns can grow exponentially with data volume and dimensionality, simplistic retrieval techniques become progressively unworkable.

This challenge is aggravated in environments where immediate, dynamic access to mined knowledge is necessary; for instance, querying a pattern repository via an interactive dashboard or comparing real-time data with pre-mined models. It is therefore crucial that data and their corresponding patterns are rigorously structured to allow efficient, flexible, and memory-efficient access, a key element of successful data mining systems. The efficiency of pattern retrieval, commonly treated as a secondary issue, deserves greater attention as applications increasingly demand faster response times and better interpretability.

1.2. Scope and Contribution of the Review

The goal of this review is to shed light on the often-overlooked relationship between data management and data mining, by means of an in-depth exploration of how different data organisation methods enable the discovery of patterns. This review highlights the structural, algorithmic, and systemic aspects that underpin the effective storage, indexing, and retrieval of both raw data and discovered patterns [5,7,8]. The exploration includes basic indexing structures (e.g., prefix trees, inverted indices, and hash maps), physical data storage configurations (e.g., flat files, databases, and NoSQL databases), and methods for optimising access (e.g., caching, ranking, and approximate retrieval). Special focus is given to structured classes of data, e.g., transactional, relational, and sequence data, while also drawing on environments involving semi-structured and spatial data.

We take a system-oriented approach to pattern retrieval, considering indexing, storage, and access as an integrated design framework that covers the main pattern types. By explaining the relations between representation, metadata, and querying, and by showing how these choices affect the repository structure, we offer an integrated model that complements algorithm-oriented work and helps readers grasp the complete range of retrieval operations relevant to different scenarios.

This review comprised a structured literature search in peer-reviewed publication databases (such as IEEE Xplore, Web of Science, and ACM Digital Library) with terms including “pattern mining”, “pattern retrieval”, “pattern indexing”, “data organisation”, “indexing structures”, “storage systems”, “storage structures”, “scalable storage”, “structured data access”, and “pattern querying”. Studies were selected based on their alignment with the predefined scope and thematic focus of the review, as well as the depth of insights they offered on the topics discussed. Priority was given to articles published from 2000 onwards, selected for their relevance or impact, or identified through backward citation chaining.

We focus on retrieval-enabling mechanisms, indexing choices, storage and repository design, and access methods, across structured pattern families. Advances in discovery algorithms or domain pipelines are referenced only insofar as they inform these mechanisms (e.g., representation formats, metadata captured, update handling). For each mechanism, we summarise typical assumptions, indicative use contexts, and representative systems, so readers, whether designing repositories, integrating dashboards, or selecting storage/indexing options, can adapt the synthesis to their setting.

This review contributes on several key fronts: (1) an organisation of indexing and access techniques applicable to common pattern types; (2) assessments of storage arrangements and repository systems optimised for pattern-based data flows; (3) explorations of query optimisation techniques supporting rapid querying, filtering, and ranking of query results; and (4) investigations of system-level properties, including distributed repositories and adaptive indexing, and their behaviour at large scales [8]. Finally, we discuss open issues concerning the balance between scalability, explainability, and real-time concerns, and outline a research direction aiming to inform future work on pattern retrieval in data mining pipeline construction. These elements collectively provide:

A systematic lexicon and structured framework developed for pattern repositories, combining representation, storage options, and access methods. This offers a unified means of analysing designs and comprehending the compromises found in the literature.

Pragmatic design principles derived from recurring tensions, namely redundancy versus completeness, generality versus domain specificity, interpretability versus storage requirements, and repository consistency versus update simplicity, which enable context-sensitive decision-making in professional environments.

1.3. Overview of Use Cases and System Needs

Effective pattern identification is a continuous need in many data-intensive systems. In the retail and e-commerce domains, transaction log data may be mined to identify frequent itemsets and association rules, thus enabling real-time product recommendation and improving market basket analysis [9,10]. These applications query selected parts of the stored patterns, based on item presence, minimum support, or co-occurrence. Likewise, in cybersecurity, repositories of recognised attack signatures or anomalous behaviour patterns must be queried in real time to detect concurrent threats within network data streams [11]. Blockchain-based approaches have been reported to increase security and reduce query response times while ensuring secure data access [12].

In healthcare and bioinformatics, complex sequences or graph-like structures, illustrated by patient therapeutic paths or gene interaction motifs, are stored and later matched against fresh cases or cohorts. Speed and interpretability therefore become critical: outcomes must be not just accurate, but also delivered in near real time and in an easily understandable format, for example through web-based pattern query tools such as the one in [13], which used biomolecular data. Other application areas include process mining, in which activity patterns are discerned to aid decision auditing, and sensor data analysis, in which spatiotemporal patterns from historical data are identified to establish trends or recognise outliers.

In addition, representative domains make the trade-offs in search strategies more concrete. For example, fraud detection favours sliding-window indexing and fast approximate pre-filters for real-time triage. Recommendation systems query association rules/sequences as top-k lookups and often hybridise with vector search. Bioinformatics uses ontology-aware graph/relational stores to balance exact subgraph matches with semantic generalisation. Section 7.3 details these choices with compact, domain-specific architectures.

Across these different systems, the underlying need is uniform: moving away from the simple passive caching of extracted patterns and towards a dynamic and smart retrieval process, which supports user interaction, decision-making automation, and continuous model improvement. Addressing this need involves a combination of indexing concepts, data storage optimisation, and query processing, namely the convergence discussed in this study.

1.4. Paper Organisation and Roadmap

The remainder of the paper outlines the problem landscape, introduces fundamental data and query notions, and surveys indexing and storage techniques that enable efficient, production-quality retrieval systems. Section 2 sets the foundations, formalising data and pattern types, core operations, and exact/top-k/approximate query modes. Section 3 and Section 4 survey indexing (tries, inverted, hash/Bloom, bitmap/compressed) and storage/repository design (metadata-rich catalogues, physical formats, redundancy-aware layouts). Section 5 and Section 6 cover query optimisation and performance at scale, including index-aware matching, caching/ranking, and parallel, distributed, and streaming/incremental processing. Section 7 reviews systems and integrations with illustrative cases. Finally, Section 8 highlights open directions: dynamic/real-time repositories, uncertainty-aware methods, and cross-domain/semantic retrieval. Figure 2 provides a brief roadmap for the paper.

2. Foundations of Data and Pattern Organisation

This section establishes the terminology and basic concepts that underpin the following discussion of this paper. We clarify the boundaries that define “structured data” in our setting and outline the kinds of patterns that are typically generated through workflow mining pipelines. In addition, we summarise the operations expected by users: retrieval, filtering, and summarisation. The distinction between exact, top-k, and approximate queries provides a common framework for studying efficiency trade-offs. These ideas form the basis for analysing different system designs in the following sections.

2.1. Core Retrieval Operations and Query Modes

Containment (subset) search. Given a query itemset $Q$ and a repository of patterns $\mathcal{P}$, return $\{P \in \mathcal{P} : Q \subseteq P\}$ (or, dually, $\{P \in \mathcal{P} : P \subseteq Q\}$). This is the default semantics for itemset/rule containment and underlies posting-list intersections in inverted indices.

Prefix and subsequence search. For sequences, tries/suffix structures answer prefix/subsequence queries: given a query q, return all P s.t. q occurs as a contiguous prefix (or subsequence) of P.

Similarity search (metric or inner-product). Let $(\mathcal{X}, d)$ be a metric space (or a vector space with similarity $s$). Two canonical tasks are range queries, which return all stored patterns $P$ with $d(q, P) \le r$ for a given radius $r$, and k-NN queries returning the $k$ closest patterns to $q$. Modern vector databases support these queries and “hybrid” predicates combining attributes and vectors [14].

Approximate search. To trade accuracy for latency/space, systems return $\varepsilon$-approximate k-NN or optimise recall@k under a time/space budget. Index choices (e.g., HNSW/graph-based, product quantisation) govern this trade-off [14].

Fuzzy matching. When items/tokens have graded membership, a membership function $\mu$ and a t-norm aggregator $\top$ define the degree $m(P, q) \in [0, 1]$ to which a pattern $P$ matches $q$; retrieval returns $\{P : m(P, q) \ge \tau\}$ for a threshold $\tau$.

Graph pattern matching. For structural motifs, subgraph isomorphism (NP-hard) returns embeddings of a query graph in a data graph. Practical engines rely on filter-and-verify, backtracking heuristics, and indexable graph features; recent experimental studies standardise metrics (e.g., embeddings/s) and unbiased comparisons [15].

Top-k semantics and ranking. Given a scoring function (e.g., support, confidence, lift, utility), retrieve the $k$ highest-scoring patterns $P$. Score-aware postings or threshold algorithms enable early termination.

Semantic querying. Ontologies/taxonomies enable generalisation (e.g., expanding a drug class to its members) and virtual integration; survey work consolidates querying patterns across materialised/virtual/hybrid settings [16].

Exact vs. approximate guarantees. We clearly flag whether results are exact (complete and sound) or approximate (with declared recall/precision or $\varepsilon$ bounds), since index and storage choices in Section 3 and Section 4 directly determine attainable guarantees under latency/memory constraints [14].

2.2. Types of Structured Data and Pattern Outputs

The most prevalent use of pattern retrieval is in the domain of structured data, which follows a pre-established schema and has consistent formatting. Representative cases of structured data include transactional data (e.g., shopping baskets and logging data), relational databases (e.g., tables mapping customers to products), sequential data (e.g., clickstreams and DNA sequences), time-stamped data (e.g., sensor event streams), and graph structures (e.g., social networks and molecular structures) [17,18,19,20]. These data collections can yield a wide range of pattern types (itemsets, association rules, frequent subsequences, temporal motifs, clusters, or subgraphs), each demanding correspondingly customised methods for storage and retrieval.

Transactional data often facilitates the discovery of itemsets and association rules, expressed as sets of items along with corresponding support and confidence measures. Sequential data yields order-sensitive patterns, usually emerging as episode rules or prefix subsequences. In graph-related contexts, mining operations extract frequent subgraphs, cliques, or motifs, which complicates both storage and comparison because of structural isomorphism [19,20]. Additionally, multiple types of patterns can be identified within a single dataset, so storage strategies must accommodate each of the identified pattern types.

Pattern outputs are highly heterogeneous in both semantic complexity and dimensionality. Some patterns tend to be very concise (e.g., itemsets), while others show more complexity or hierarchical structure (like sequence patterns with gaps or labelled subtrees). Many patterns also carry metadata, such as frequency, lift, timestamps, or the particular segments to which data points are assigned. This heterogeneity in pattern categories and data representation therefore necessitates flexible and extensible data structuring techniques [21,22]. Within modern mining approaches, it becomes less common to work from a single indexing or storage configuration.

Table 1 summarises different structured data sources and typical pattern types they yield (see also Figure 3).

2.3. Common Operations: Retrieval, Filtering, Summarisation

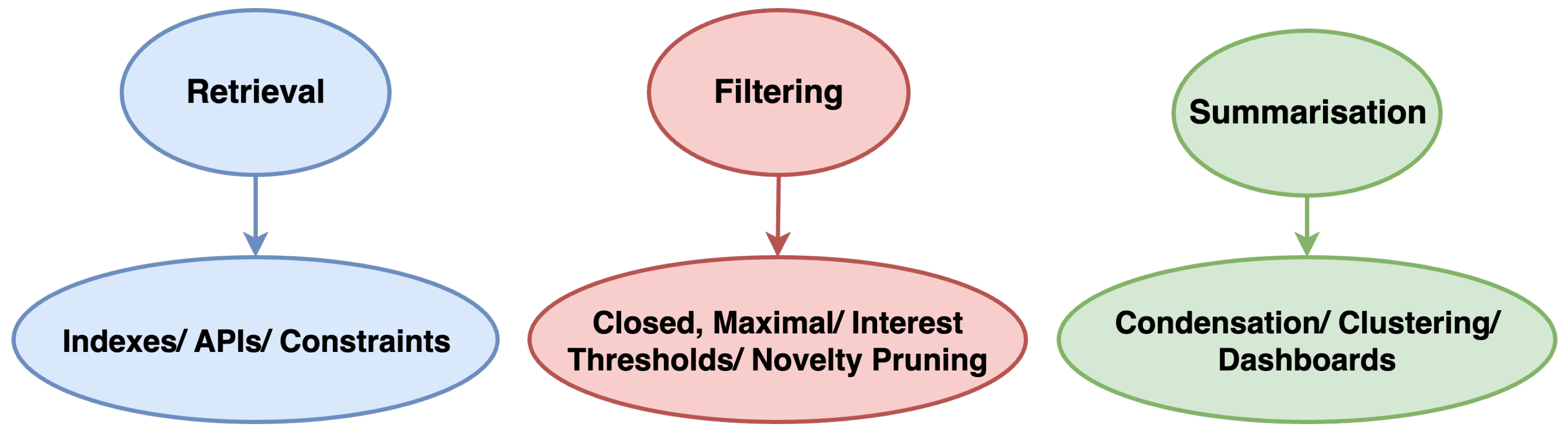

Successful pattern discovery depends not only on storage methodology but also on the nature of operations performed by users or applications. Typically, operations can be grouped into three broad categories: retrieval, filtering, and summarisation.

Table 2 presents the differences between these three operations on pattern sets (see also Figure 4).

Retrieval involves querying a pattern repository based on user-specified criteria. Queries may include support thresholds, specific items or subsequences, time constraints, or structural configurations. For example, a query may search for all patterns containing both “milk” and “bread” with support above 5%, or it may search for all subgraphs that include a given motif. In such cases, storage systems must provide efficient access via key attributes (e.g., items, nodes, timestamps), thus requiring the use of indexing, inverted lists, or hashing methods [5,23,24].

Filtering refers to post-processing pattern sets to retain only the most relevant or non-redundant patterns. In frequent pattern mining, this step is crucial because result sets can become very large. Filtering methods can exclude subsumed patterns (such as keeping only maximal or closed patterns), apply constraints such as anti-monotonicity, or incorporate novelty/diversity criteria. Effective filtering often relies on additional metadata (e.g., pattern hierarchies, generalisations, or statistical rankings), which may be stored alongside the patterns or computed on demand.
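As a minimal sketch of subsumption-based filtering, the following keeps only closed patterns, that is, patterns with no proper superset of equal support. The function name `closed_patterns` and the toy support counts are illustrative assumptions, and the quadratic pairwise comparison is chosen for clarity rather than scalability.

```python
from typing import Dict, FrozenSet


def closed_patterns(supports: Dict[FrozenSet[str], int]) -> Dict[FrozenSet[str], int]:
    """Keep only closed patterns: those with no proper superset of equal support."""
    closed = {}
    for pattern, support in supports.items():
        # `pattern < other` is Python's proper-subset test on frozensets.
        subsumed = any(
            pattern < other and support == other_support
            for other, other_support in supports.items()
        )
        if not subsumed:
            closed[pattern] = support
    return closed


# Hypothetical mined itemsets with absolute support counts.
mined = {
    frozenset({"milk"}): 5,
    frozenset({"bread"}): 4,
    frozenset({"milk", "bread"}): 4,
}
result = closed_patterns(mined)
```

Here `{bread}` is dropped because `{milk, bread}` has the same support, whereas `{milk}` is kept because its support is strictly higher than that of its superset.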

Summarisation aims to consolidate large pattern sets into concise representations that capture key trends. Core methodologies include pattern condensation (e.g., mining maximal or closed patterns) and rule clustering. Summaries are often stored alongside large pattern collections for interactive exploration or dashboards [25,26]. These methods require that the storage infrastructure supports grouping, aggregation, and linking patterns to their original data. Efficient support for all three operations requires careful design of the data schema, indexes, and access controls.

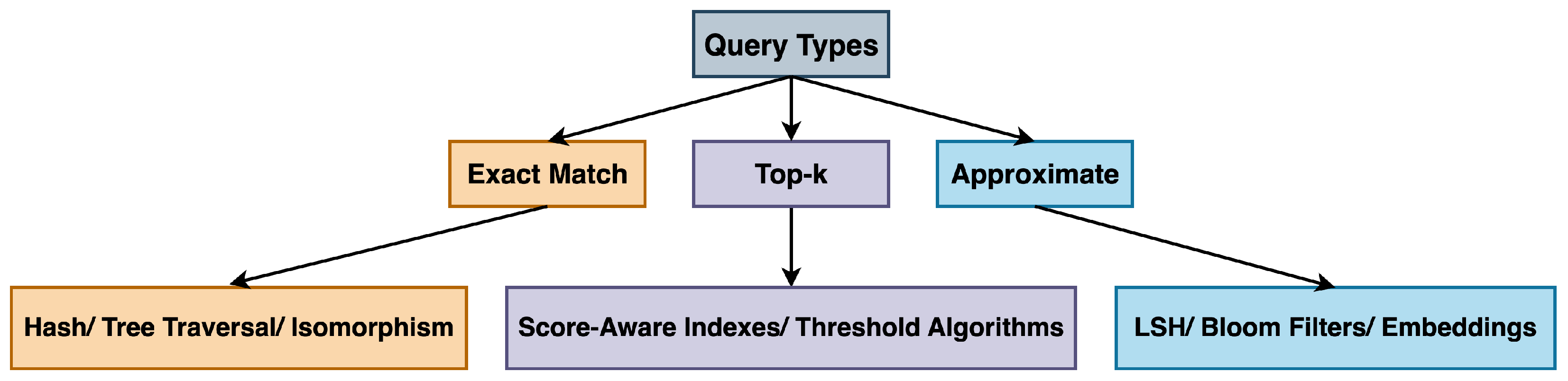

2.4. Query Types: Exact, Top-k, Approximate

The properties of pattern retrieval queries have a strong impact on storage and indexing design. Typically, such queries can be divided into three types: exact match, top-k, and approximate retrieval, as summarised in Table 3 (see also Figure 5).

Exact match retrieval finds patterns that satisfy precise conditions. These conditions can include item membership (patterns containing a specific item), exact sequence equivalence (an exact subsequence match), or subgraph isomorphism (finding patterns isomorphic to a given query graph). Exact retrieval typically uses key-based or hash-based indices and tree-based structures to facilitate efficient traversal [27,28]. However, performance generally degrades as pattern size or dimensionality increases.

Top-k queries return the k highest-scoring patterns, ranked by a given measure (support, confidence, lift, novelty, etc.). Top-k queries are often used in recommendation and dashboard settings to retrieve the most “interesting” patterns. Efficient top-k processing typically relies on sorted or score-aware indexes and pruning strategies (e.g., threshold algorithms), often leveraging precomputed materialisations [29].
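The role of a score-aware index can be sketched as follows: patterns are kept sorted by descending score, so a filtered top-k query can stop as soon as k matches have been found. This is a simplified illustration with hypothetical names (`build_score_index`, `top_k`) and toy scores, not a full threshold algorithm over multiple sorted lists.

```python
from typing import Callable, FrozenSet, List, Tuple

Pattern = Tuple[FrozenSet[str], float]  # (items, score), e.g. score = lift


def build_score_index(patterns: List[Pattern]) -> List[Pattern]:
    """Materialise the patterns in descending score order."""
    return sorted(patterns, key=lambda p: p[1], reverse=True)


def top_k(
    index: List[Pattern], k: int, predicate: Callable[[FrozenSet[str]], bool]
) -> Tuple[List[Pattern], int]:
    """Return the k best-scoring patterns satisfying `predicate`,
    plus the number of index entries scanned before stopping."""
    results: List[Pattern] = []
    scanned = 0
    if k <= 0:
        return results, scanned
    for items, score in index:
        scanned += 1
        if predicate(items):
            results.append((items, score))
            if len(results) == k:
                break  # early termination: later entries cannot score higher
    return results, scanned


index = build_score_index([
    (frozenset({"tea"}), 0.1),
    (frozenset({"milk"}), 0.6),
    (frozenset({"milk", "bread"}), 0.9),
    (frozenset({"beer"}), 0.8),
    (frozenset({"milk", "eggs"}), 0.7),
])
hits, scanned = top_k(index, 2, lambda items: "milk" in items)
```

In this toy run only three of the five entries are scanned before the two best patterns containing "milk" are returned.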

Approximate queries seek patterns that are similar to a query, or that match under relaxed criteria, trading some exactness for speed or flexibility. For example, one might find patterns within a certain distance of a query using vector embeddings or sketches. These methods sacrifice some accuracy for scalability and are particularly useful in high-dimensional or streaming contexts. Locality-sensitive hashing (LSH), Bloom filters, and vector quantisation are common techniques to support such approximate retrieval [24,30]. In practice, hybrid support for all three types is increasingly desirable, especially in systems supporting both exploratory and operational pattern mining [6].
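One concrete instance of a sketch-based approximation is MinHash, which estimates the Jaccard similarity between two itemsets from fixed-length signatures. The example below is an illustrative assumption rather than a method prescribed by the surveyed work; the helper names and the choice of salted BLAKE2b as the hash family are ours.

```python
import hashlib
from typing import Iterable, List


def _hash(item: str, seed: int) -> int:
    """One member of a hash family, derived by salting BLAKE2b with `seed`."""
    digest = hashlib.blake2b(
        item.encode("utf-8"), digest_size=8, salt=seed.to_bytes(8, "little")
    ).digest()
    return int.from_bytes(digest, "little")


def minhash_signature(items: Iterable[str], num_hashes: int = 128) -> List[int]:
    """Signature = the minimum hash value of the set under each hash function."""
    items = list(items)
    if not items:
        raise ValueError("cannot sketch an empty itemset")
    return [min(_hash(x, seed) for x in items) for seed in range(num_hashes)]


def estimate_jaccard(sig_a: List[int], sig_b: List[int]) -> float:
    """The fraction of agreeing positions estimates |A ∩ B| / |A ∪ B|."""
    matches = sum(a == b for a, b in zip(sig_a, sig_b))
    return matches / len(sig_a)


sig_query = minhash_signature({"milk", "bread", "eggs", "jam"})
sig_near = minhash_signature({"milk", "bread", "eggs", "tea"})  # true Jaccard = 3/5
sig_far = minhash_signature({"nails", "hammer"})                # true Jaccard = 0
near = estimate_jaccard(sig_query, sig_near)
far = estimate_jaccard(sig_query, sig_far)
```

The estimate converges on the true Jaccard similarity as `num_hashes` grows; in an LSH scheme, bands of the signature would additionally be used as bucket keys so that only likely-similar patterns are compared.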

2.5. Utility-Aware and Incremental Contexts

Beyond frequency or support, many applications optimise utility measures (e.g., profit, risk, clinical benefit) and must operate incrementally as new data arrive. Recent surveys formalise these settings and highlight design implications for repositories and indexes. The authors in [31] review incremental high average-utility itemset mining, delineating update models, thresholding strategies, and challenge areas (e.g., stability under changing windows), all of which affect the score maintenance and cache invalidation policies discussed later in Section 5 and Section 6. Complementarily, the authors in [32] survey incremental high-utility itemset mining, emphasising data structures for low-latency updates and taxonomy choices that bear directly on our redundancy-aware storage (Section 4) and streaming indices (Section 6).

We therefore treat utility-aware scoring and incremental updates as first-class concerns in the remainder of this paper. Scores are stored alongside patterns to enable top-k retrieval (Section 5). Update-friendly physical designs (e.g., log-structured layouts) and incrementally updatable indexes are prioritised (Section 4 and Section 6).

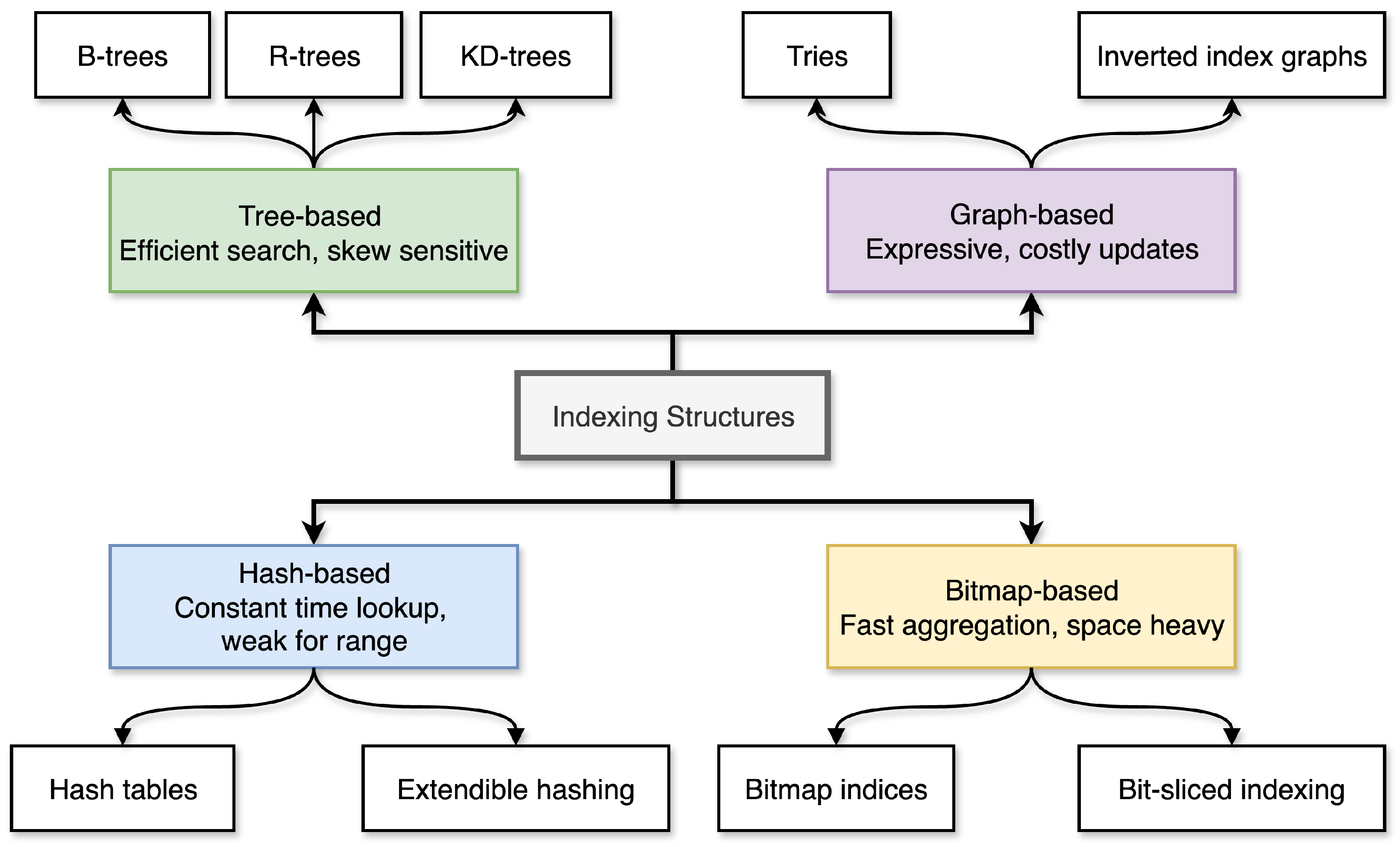

3. Indexing Structures for Pattern Retrieval

Based on the foundation established, this section explores the mechanisms by which indexes enable the retrieval of large sets of extracted patterns. We examine different categories of structures, such as prefix trees or tries, inverted indices, hash-based approaches (like Bloom filters), and compressed or bitmap representations, highlighting their best operating conditions while presenting trade-offs. The aim is to present readers with a foundational support system on which to make informed decisions on the selection and integration of indexes within practical limits.

3.1. Prefix Trees and Tries

Prefix trees (also known as tries) exploit shared prefixes among patterns to index them efficiently. They provide a hierarchical organisation, where each root-to-leaf path corresponds to a pattern, enabling quick prefix-based searches and compact storage of overlapping patterns [30,33].

Each node in a prefix tree represents a symbol or token, and paths from the root to the leaves describe complete patterns. This configuration is particularly useful for storing and querying sequences, itemsets, or transactions, which often share subsequences across different patterns. A leading application of prefix trees in data mining is FP-Growth and its compressed prefix-tree structure, the FP-tree [34,35,36]. The FP-tree makes it possible to retrieve frequent itemsets without generating candidate sets, thus avoiding the high computational cost of Apriori-based approaches. Under this framework, frequent items are systematically inserted into the tree, which yields dense storage and supports efficient recursive mining.

Prefix trees are used widely in sequence mining, especially in bioinformatics (for example, DNA sequence analysis for motif identification) and web usage mining (for example, extracting popular navigation patterns of users). A prominent feature of this data structure is its support for prefix-based query processing: given a query prefix, the corresponding node is located in time linear in the prefix length, after which all completions (that is, the stored sequences which start with that prefix) are enumerated by traversing the subtree below it. In addition, prefix trees can be enhanced by incorporating frequency counts, temporal annotations, or statistical metadata at each node. These extensions support sophisticated query operations, which include support-threshold filtering, sequence truncation, and temporal analysis in specified windows. Nevertheless, one of the major limitations of prefix trees is their memory usage, especially when the dataset involves a large vocabulary or long patterns with sparse prefix overlap. These issues can be addressed using different compression techniques, such as node consolidation, suffix-sharing, and subtree trimming, together with disk-based or distributed computation [37].

In short, prefix trees and tries provide a strong foundation for storing and querying structured pattern data, especially when the patterns have hierarchical or sequential characteristics. A visual intuition of how tries store patterns with overlapping prefixes is shown in Figure 6. Contemporary research continues to improve trie-based methods. For instance, Ref. [38] proposes a memory-efficient hybrid trie for sequential pattern mining, and Ref. [33] develops a trie-of-rules structure to compactly store association rules.
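The prefix-lookup and count-annotation ideas discussed in this subsection can be sketched with a small in-memory trie. The class names `TrieNode` and `PatternTrie` and the clickstream data are hypothetical; the sketch does not reproduce the FP-tree or any of the cited compressed variants.

```python
from typing import Dict, List, Sequence, Tuple


class TrieNode:
    def __init__(self) -> None:
        self.children: Dict[str, "TrieNode"] = {}
        self.count = 0         # number of inserted sequences passing through this node
        self.terminal = False  # True if some stored sequence ends here


class PatternTrie:
    def __init__(self) -> None:
        self.root = TrieNode()

    def insert(self, sequence: Sequence[str]) -> None:
        node = self.root
        for symbol in sequence:
            node = node.children.setdefault(symbol, TrieNode())
            node.count += 1
        node.terminal = True

    def completions(self, prefix: Sequence[str], min_count: int = 1) -> List[Tuple[str, ...]]:
        """Stored sequences starting with `prefix`; branches whose count
        falls below `min_count` are pruned during the traversal."""
        node = self.root
        for symbol in prefix:  # descent costs O(len(prefix))
            node = node.children.get(symbol)
            if node is None:
                return []
        results = []
        stack = [(node, tuple(prefix))]
        while stack:  # enumerate the subtree below the prefix node
            current, path = stack.pop()
            if current.terminal:
                results.append(path)
            for symbol, child in current.children.items():
                if child.count >= min_count:
                    stack.append((child, path + (symbol,)))
        return sorted(results)


trie = PatternTrie()
for seq in [
    ("home", "search", "cart"),
    ("home", "search", "checkout"),
    ("home", "about"),
    ("home", "search", "cart"),
]:
    trie.insert(seq)
```

Because counts can only decrease along a root-to-leaf path, pruning a child whose count is below `min_count` safely discards its entire subtree, which is what makes support-threshold filtering cheap in this layout.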

3.2. Inverted Indices

Inverted indices are a cornerstone of information retrieval and are equally vital for pattern retrieval tasks. By indexing patterns by their constituent items, they enable rapid containment-style lookups in large repositories. They are widely used for pattern retrieval over itemsets, rules, and textual data [5,23,39]. An inverted index links each element (e.g., a token, item, or attribute value) to a list of identifiers of the patterns that contain it. The resulting posting lists support efficient Boolean evaluation (e.g., AND, OR, NOT) by operating on the postings rather than scanning the entire repository.

The basic architecture of an inverted index consists of two main components: a dictionary of all possible components (e.g., terms, items, or attributes), and a posting list for every component, containing pointers or references to the patterns or documents containing the component. This organisation allows very fast resolution of queries such as “retrieve all patterns with item X” or “retrieve all rules with items A and B”. Figure 7 illustrates the dictionary–postings layout and AND-intersection for item containment queries.

In the context of association rule mining, inverted indices support interactive pattern extraction from large datasets. For example, an interactive graphical query interface can give users the option to restrict their search to either antecedents or consequents. An inverted index allows such retrieval to be performed in sublinear time, especially when posting lists are sorted and compressed with techniques like variable-byte encoding or run-length encoding.

More sophisticated applications include multi-term retrieval, where Boolean queries (e.g., “item A AND item B NOT item C”) are converted to set intersection and set difference by exploiting posting lists. Here, set operation optimisation becomes crucial and often depends on support for bitwise operations or hybrids combining memory and disk storage solutions. To support more functionality of retrieval, it is possible to store weights or metadata like support, confidence, or timestamps, alongside posting entries, allowing the index to support ranking or chronological filtering. In graph mining, inverted indices play an important role by allowing subgraph or path pattern queries, especially when these patterns are defined as lists of labels or neighbourhood vectors. The inverted index in this scenario acts as a fast access method, which aids in reducing the search space before resorting to time-consuming verifications of isomorphism.
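A minimal sketch of how such Boolean queries reduce to set operations on posting lists is given below. The `InvertedIndex` class and its `query` signature are illustrative assumptions; postings are held as in-memory Python sets rather than the compressed, sorted lists a production index would use.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set


class InvertedIndex:
    def __init__(self) -> None:
        self.postings: Dict[str, Set[int]] = defaultdict(set)  # item -> pattern ids
        self.all_ids: Set[int] = set()

    def add(self, pattern_id: int, items: Iterable[str]) -> None:
        self.all_ids.add(pattern_id)
        for item in items:
            self.postings[item].add(pattern_id)

    def query(self, must: Iterable[str], must_not: Iterable[str] = ()) -> List[int]:
        """Ids of patterns containing every item in `must` and none in `must_not`."""
        must = list(must)
        if must:
            # Intersect the shortest posting lists first to shrink candidates early.
            lists = sorted((self.postings.get(i, set()) for i in must), key=len)
            result = set(lists[0])
            for posting in lists[1:]:
                result &= posting
                if not result:
                    break
        else:
            result = set(self.all_ids)
        for item in must_not:
            result -= self.postings.get(item, set())  # NOT as set difference
        return sorted(result)


index = InvertedIndex()
index.add(1, {"A", "B"})
index.add(2, {"A", "B", "C"})
index.add(3, {"A"})
index.add(4, {"B", "C"})
```

With this data, "item A AND item B NOT item C" corresponds to `index.query(["A", "B"], must_not=["C"])`. Ordering the intersections by posting-list length is a common heuristic, since the smallest list bounds the size of every intermediate result.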

Despite their advantages, inverted indices can grow substantially on datasets marked by high cardinality or sparsity. Term selection, pruning, and on-the-fly indexing techniques, among others, are used to keep space usage minimal, especially within resource-constrained environments. Nonetheless, inverted indices remain among the most flexible and commonly used frameworks supporting pattern retrieval. In [40], the authors demonstrate that inverted-index structures can even accelerate pattern mining algorithms on big data, underscoring their practical importance.

3.3. Hash-Based Indexing and Bloom Filters

Hash-based indexing offers constant average-time lookups by converting patterns or keys into fixed-length hash codes. This makes hashing very fast for exact-match queries, though at the expense of not supporting range or similarity searches. Bloom filters extend the hash-based approach with a space-efficient, probabilistic structure for set membership tests, trading a small false-positive rate for substantially reduced storage. Applied to pattern retrieval, hash-based indices are most effective when queries and patterns are identified by keys, a common case in databases handling frequent itemsets or subgraphs.

The underlying data structure is a hash table, in which every bucket holds the set of patterns corresponding to its hash key. Though this technique is very efficient, it can suffer from collisions and load imbalance if the hash function and table layout are not chosen carefully. For better performance, different hashing strategies like double hashing, cuckoo hashing, or minimal perfect hashing are used. Hashing is very useful for queries which require exact matches, such as retrieving the patterns stored under a specific itemset or label set.
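As a small illustration of exact-match lookup, the sketch below uses Python's built-in hash table (`dict`) with an order-insensitive `frozenset` key, so that an itemset maps to the same entry regardless of item order. The class name `ExactPatternStore` and the metadata fields are hypothetical.

```python
from typing import Any, Dict, FrozenSet, Iterable, Optional


class ExactPatternStore:
    """Hash table keyed by a canonical (order-insensitive) itemset."""

    def __init__(self) -> None:
        self._table: Dict[FrozenSet[str], Dict[str, Any]] = {}

    def put(self, items: Iterable[str], **metadata: Any) -> None:
        self._table[frozenset(items)] = metadata

    def get(self, items: Iterable[str]) -> Optional[Dict[str, Any]]:
        # Average O(1) lookup, but only for an exact key match:
        # subsets or supersets of a stored itemset are not found.
        return self._table.get(frozenset(items))


store = ExactPatternStore()
store.put(["milk", "bread"], support=0.12, confidence=0.6)
found = store.get(["bread", "milk"])  # same itemset, different order
missing = store.get(["milk"])         # a subset is not an exact match
```

The second lookup shows the limitation noted above: a plain hash index answers equality queries only, so containment or similarity queries need one of the other structures in this section.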

Bloom filters build on hash-based indexing with a probabilistic model that offers excellent space efficiency for membership checking [41,42,43]. By using several different hash functions to map elements into a bit vector, Bloom filters achieve very compact representations and fast queries. These filters quickly determine whether a given element or pattern may be present in a set, guaranteeing no false negatives at the cost of a small, tunable rate of false positives. In systems based on pattern queries, Bloom filters are often used as a preliminary screening mechanism to reduce the candidate set before performing more computationally intensive exact match verifications. Variants such as counting Bloom filters, scalable Bloom filters, and quotient filters have been developed to overcome limitations related to update operations and scalability.

These data structures offer significant benefits in streaming or distributed environments, where it is essential to keep storage to a minimum and optimise I/O operations. Hashing also underlies Locality-Sensitive Hashing (LSH), which enables the detection of approximate nearest neighbours by mapping similar patterns to the same bucket with high probability. This feature is especially useful in applications like approximate subgraph matching, clustering of rules, and performing similarity searches in high-dimensional pattern spaces.

Figure 8 shows k-hash insertion and lookup in a Bloom filter, highlighting the no-false-negative property and controlled false positives.
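The k-hash insertion and lookup procedure can be sketched as follows. This is a minimal illustration under our own assumptions: the class name `BloomFilter`, the default sizes, and the derivation of the k positions from a single SHA-256 digest via double hashing are implementation choices, not taken from the cited works.

```python
import hashlib
from typing import Iterable, Iterator


class BloomFilter:
    def __init__(self, num_bits: int = 1024, num_hashes: int = 4) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, key: str) -> Iterator[int]:
        # Double hashing: position_i = (h1 + i * h2) mod m, for i = 0..k-1.
        digest = hashlib.sha256(key.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "little")
        h2 = int.from_bytes(digest[8:16], "little") | 1  # force an odd step
        for i in range(self.num_hashes):
            yield (h1 + i * h2) % self.num_bits

    def add(self, key: str) -> None:
        for pos in self._positions(key):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, key: str) -> bool:
        """False means definitely absent; True means possibly present."""
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(key))


def pattern_key(items: Iterable[str]) -> str:
    """Canonical string key for an itemset (sorted, comma-joined)."""
    return ",".join(sorted(items))


bloom = BloomFilter()
stored = [{"milk", "bread"}, {"beer", "crisps"}, {"tea"}]
for itemset in stored:
    bloom.add(pattern_key(itemset))
```

Used as a pre-filter, a negative answer from `might_contain` lets the system skip the exact lookup entirely, while a positive answer must still be verified against the repository because it may be a false positive.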

In general, hashing-based methods tend to sustain higher retrieval throughput, especially where scalability or approximation matters more than exactness.

3.4. Bitmap Indexing and Compressed Structures

Bitmap indexing uses bit vectors to record the presence or absence of items within patterns, enabling very fast query filtering via bitwise operations. This approach is particularly effective for answering Boolean combination queries on pattern attributes (e.g., finding all patterns containing a given set of items), and compression techniques can substantially reduce the storage overhead of the bit vectors [44]. Each bit vector is assigned to a specific attribute, with one bit per pattern record: 1 if the attribute is present in that pattern and 0 if it is absent. Bitmap indexing is especially useful for handling Boolean queries, since it allows fast filtering through bitwise operations like AND, OR, and XOR.

Bitmap indexes are highly efficient for categorical or binary attributes, which are common in application domains like market basket analysis, rule extraction, and presence/absence pattern identification. For example, all patterns containing the itemset AB can be found by applying a bitwise AND to the bitmaps of A and B. Because each machine-word operation processes many patterns at once, the method remains efficient even for large databases.
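The AB example can be sketched with Python integers acting as uncompressed bit vectors. The function names and the toy patterns are assumptions for illustration only.

```python
example_patterns = [
    {"A", "B", "C"},   # pattern 0
    {"A", "C"},        # pattern 1
    {"A", "B"},        # pattern 2
    {"B", "D"},        # pattern 3
]


def build_bitmap_index(patterns):
    """Map each item to an int whose bit i is 1 iff pattern i contains it."""
    index = {}
    for pid, items in enumerate(patterns):
        for item in items:
            index[item] = index.get(item, 0) | (1 << pid)
    return index


def patterns_containing(index, query_items, num_patterns):
    """AND the bitmaps of all query items and decode set bits to pattern IDs."""
    result = (1 << num_patterns) - 1  # start with every pattern as a candidate
    for item in query_items:
        result &= index.get(item, 0)  # an unknown item clears all bits
    return [pid for pid in range(num_patterns) if (result >> pid) & 1]


bitmap_index = build_bitmap_index(example_patterns)
matches = patterns_containing(bitmap_index, {"A", "B"}, len(example_patterns))
```

Here the bitmap for A covers patterns 0, 1, and 2, the bitmap for B covers 0, 2, and 3, and their conjunction leaves patterns 0 and 2.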

To reduce storage overhead, different bitmap compression methods, including Word-Aligned Hybrid (WAH), Byte-Aligned Bitmap Codes (BBC), and Roaring Bitmaps, have been utilised [

45,

46]. These methods use properties of run-length encoding or word alignment to compress large bitmaps efficiently, supporting fast access to data. Apart from saving disk space, compression also increases input/output efficiency, mainly when data has notable sparsity or clustering properties.

Bitmap indexes can be extended to multi-valued attributes through encoding techniques such as one-hot encoding, range encoding, or hierarchical bitmaps. Such support helps manage complex pattern metadata, including timestamps, rule types, and quality scores. In high-performance environments, bitmap indexes can be combined with memory-mapped files or columnar storage engines, supporting zero-copy access and allowing query processing to exploit Single Instruction, Multiple Data (SIMD) instructions. While bitmaps are not the best option for highly dynamic datasets with frequent updates, they are quite effective in read-centric or analytical workloads. Combining bitmap indexes with inverted files or trie structures can provide an additional filtering layer, improving performance without an unmanageable increase in complexity.

Table 4 summarises the various indexing structures reviewed and compares their effectiveness.

4. Storage and Repository Design

Indexes are meaningful only when combined with storage architectures that respect data semantics and access patterns. This section considers the metadata structures that maintain provenance, quality, and constraints. We survey storage formats ranging from flat files and relational structures to column-oriented and NoSQL databases, and explain their impact on performance, especially regarding compression and scanning costs. Redundancy management strategies, such as materialised views, deduplication, and delta storage, are discussed with a view to balancing storage cost against query performance. In addition, this section outlines the key architectural decisions that should be settled before low-level performance tuning begins, including the data model, metadata schema, and indexing strategy.

4.1. Pattern Repositories and Metadata Annotation

Following identification of patterns, it is also essential to keep them in a systematically maintained and easily queryable repository to allow for their reuse and additional analysis [

47]. Pattern repositories address this need: they are storage systems tailored to well-structured collections of discovered patterns. They can be seen as a complement to standard databases; rather than merely containing knowledge, they are knowledge-centred, designed to store not only patterns but also comprehensive metadata describing their meaning, context, and quality.

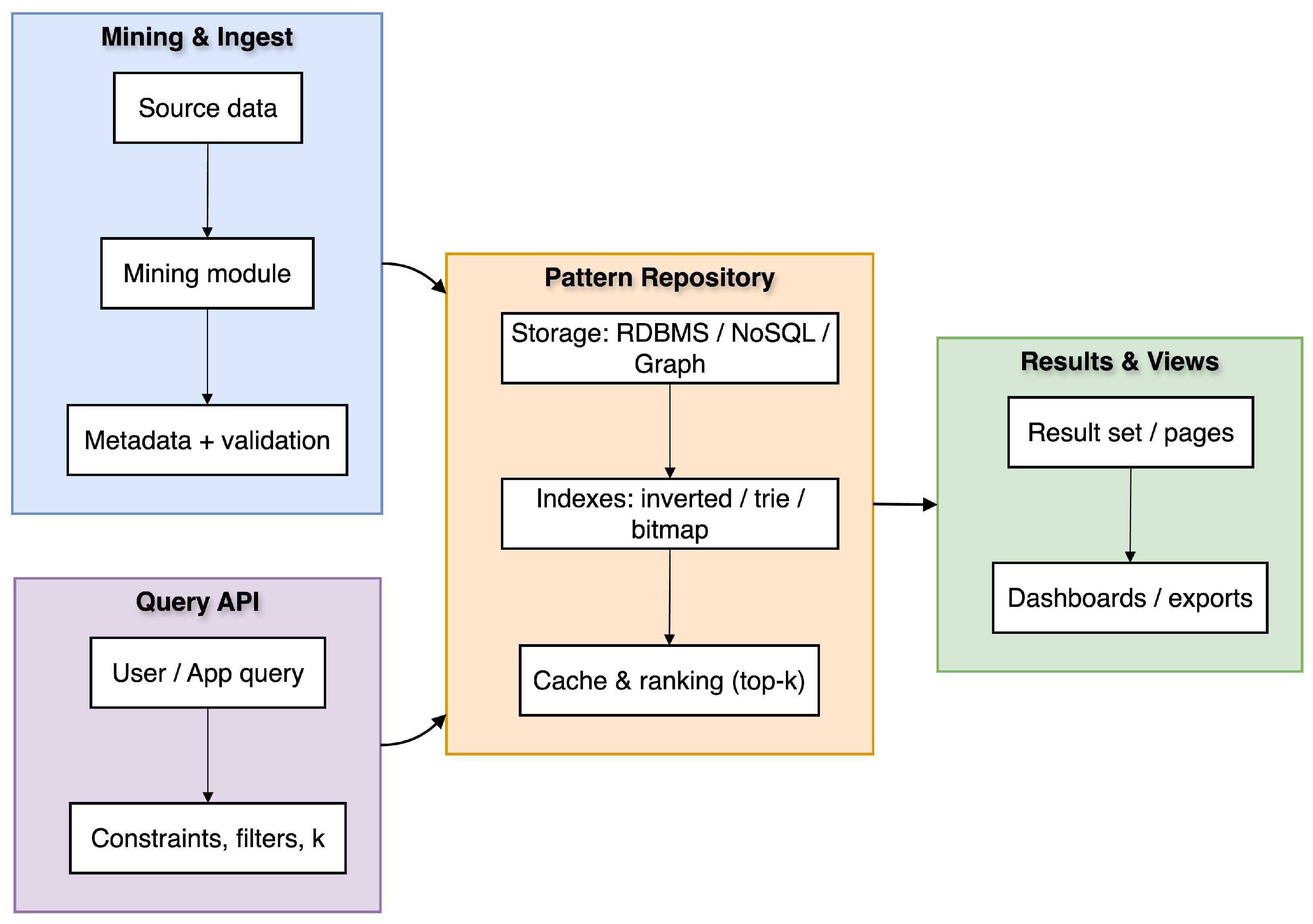

Figure 9 summarises the end-to-end repository: mining produces patterns and metadata; the repository manages storage and indexes; the API serves ranked results.

Typical elements in a pattern repository include the structure of patterns (e.g., itemsets, sequences, and graphs), statistical properties (e.g., support, confidence, and lift), the discovery context (the dataset, mining algorithm, and its parameters), and domain-related annotations (e.g., product categories and gene functions) [

1]. Such metadata facilitates critical procedures like searching, ranking, filtering, and data visualisation. For instance, a data analyst might want to retrieve only patterns whose confidence exceeds a pre-specified threshold, that stem from a particular customer population, or whose items belong to a particular part of a taxonomy.

Repositories can be modelled relationally (e.g., with separate tables per pattern kind), hierarchically (e.g., as XML or JSON documents), or as object-oriented databases when polymorphism and inheritance across different kinds of patterns are required [

47]. Some systems integrate ontologies to add semantic support and therefore allow complex queries, such as “patterns involving high-risk behaviour” or “rules dealing with organic products.”

To support effective retrieval, repositories often maintain indexes on structural properties (e.g., components of a rule) and on metadata (e.g., discovery date, user tags). Compression and redundancy removal play a significant role in keeping repository sizes manageable, especially in application domains where large volumes of overlapping patterns can be generated. Additionally, incremental updates and version control are crucial in dynamic environments where patterns continue to change over time or across multiple data streams. Overall, pattern repositories act much like the organisational memory of smart systems, and their design has an essential impact on the efficiency of knowledge extraction, evaluation, and interpretation.

4.2. Storage Formats: Flat Files, Databases, NoSQL

The physical storage of patterns has a significant impact on retrieval efficiency, scalability, and integration with external systems [

48]. Early data mining systems used flat-file representations like CSV, ARFF, or proprietary log files; however, these soon proved inadequate for large-scale or interactive use, mainly because they lack indexing, transactional guarantees, and query optimisation support.

Relational Database Management Systems (RDBMS) offer a more organised, query-optimised structure. Patterns can be stored in normalised tables (e.g., separate tables for metrics, items, and rules) and accessed using Structured Query Language (SQL) queries. Standard indexing and query optimisation can then be applied, which favours an organised collection of patterns [

49]. However, relational models often struggle with nested, recursive, or graph-based patterns, which are hard to represent without complex joins or schema workarounds.
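A minimal sketch of such a normalised layout, using Python's built-in `sqlite3` module, is given below. The table and column names are hypothetical, and metrics are folded into the rule table for brevity rather than kept in a separate table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE rules (
    rule_id    INTEGER PRIMARY KEY,
    support    REAL NOT NULL,
    confidence REAL NOT NULL
);
CREATE TABLE rule_items (
    rule_id INTEGER NOT NULL REFERENCES rules(rule_id),
    item    TEXT NOT NULL,
    role    TEXT NOT NULL CHECK (role IN ('antecedent', 'consequent'))
);
-- A standard B-tree index on the item column speeds up containment lookups.
CREATE INDEX idx_rule_items_item ON rule_items(item);
""")
conn.executemany(
    "INSERT INTO rules VALUES (?, ?, ?)",
    [(1, 0.20, 0.85), (2, 0.10, 0.60), (3, 0.15, 0.90)],
)
conn.executemany(
    "INSERT INTO rule_items VALUES (?, ?, ?)",
    [
        (1, "milk", "antecedent"), (1, "bread", "consequent"),
        (2, "milk", "antecedent"), (2, "eggs", "consequent"),
        (3, "beer", "antecedent"), (3, "chips", "consequent"),
    ],
)


def rules_with_item(connection, item, min_confidence):
    """Return IDs of rules that mention `item` with confidence >= threshold."""
    rows = connection.execute(
        """
        SELECT DISTINCT r.rule_id
        FROM rules AS r
        JOIN rule_items AS ri ON ri.rule_id = r.rule_id
        WHERE ri.item = ? AND r.confidence >= ?
        ORDER BY r.rule_id
        """,
        (item, min_confidence),
    ).fetchall()
    return [row[0] for row in rows]


milk_rules = rules_with_item(conn, "milk", 0.8)
```

Flat rules map cleanly onto this layout; a nested or graph-shaped pattern would need additional edge tables and self-joins, which is where the relational model becomes awkward.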

In response to flexibility and scalability needs, many modern systems use NoSQL storage. Document-oriented databases, such as MongoDB, are well suited to variable-length and nested entities, like rule representations or subgraphs stored as JSON. Wide-column and key-value stores, such as Cassandra and HBase, offer high-throughput access and horizontal scaling, supporting large repositories distributed across clusters. Graph databases, such as Neo4j and JanusGraph, efficiently store and query structural patterns, like subgraphs, frequent motifs, or relational rules. However, scaling to very large graphs remains challenging in practice [

50].

Columnar storage data formats like Parquet and Optimised Row Columnar (ORC) offer great compression and projection benefits when data access is centred on specific fields or measures [

48]. In addition, columnar formats integrate efficiently with distributed computing environments like Apache Spark v4.0.1 and Dask v2025.9.1, enabling batch analytics over large collections of data. Storage format selection often requires trade-offs between structure, scalability, flexibility, and compatibility with the existing ecosystem. In real-world deployments, hybrid architectures are common; e.g., frequently used patterns may be kept in memory, serialised to JSON, stored in a document-oriented store, and exposed via RESTful APIs like Apache Livy v0.8.0-incubating, Trino v477, Apache Drill v1.22.0 or combined analytics platforms.

Table 5 outlines the different storage approaches for pattern repositories, with their traits.

4.3. Redundancy-Aware Storage Strategies

A major problem in the pattern storage domain is redundancy, which occurs when many patterns show either structural or semantic resemblance [

5,

51]. For example, itemset mining can produce thousands of supersets with similar base items, while sequence mining has the potential to produce patterns which can serve as subsequences of each other. Without strict control, redundancy can cause increased storage, longer query response times, and issues related to interpretability.

To overcome this issue, redundancy-aware storage techniques are employed. One such technique is pattern condensation, in which only representative patterns are stored, e.g., closed, maximal, or non-redundant sets [

52]. These methods keep storage costs low whilst preserving the essential content of the mining outcomes. For example, closed itemsets retain the frequency information of all their subsets, so exact supports can be reconstructed whenever needed [

1].
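The reconstruction step relies on a standard property of closed itemsets: the support of any frequent itemset equals the largest support among the closed itemsets that contain it. A minimal sketch, assuming a toy repository and a hypothetical function name:

```python
# Closed itemsets with absolute supports (toy data for illustration).
closed_itemsets = {
    frozenset({"a"}): 5,
    frozenset({"a", "b"}): 3,
    frozenset({"a", "b", "c"}): 2,
}


def support_from_closed(itemset, closed):
    """Support of `itemset`, derived from closed supersets.

    Returns 0 when no closed superset exists, i.e. when the itemset is not
    frequent in the data from which the closed itemsets were mined.
    """
    query = frozenset(itemset)
    return max(
        (support for candidate, support in closed.items() if query <= candidate),
        default=0,
    )


support_b = support_from_closed({"b"}, closed_itemsets)
support_ac = support_from_closed({"a", "c"}, closed_itemsets)
```

Neither `{b}` nor `{a, c}` is stored explicitly, yet both supports are recovered exactly from the three closed itemsets.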

Another technique is hierarchical storage, where patterns are organised into generalisation trees or Directed Acyclic Graphs (DAGs) based on subset/superset or subgraph relationships. This structuring supports navigational queries (drill-down or roll-up, for example) and avoids storing the same common pieces redundantly. In addition, indexes can be built on these hierarchies to provide accelerated navigation and support grouping.

Compression methods like frequent pattern trees, compressed suffix arrays, and codebook-based encodings reduce storage while still supporting real-time data extraction. Other techniques include pattern hashing and signature-based clustering, which group structurally similar patterns and use delta encoding or variation vectors to record their differences.

In streaming or dynamic environments, redundancy management acquires particular importance. Incremental condensation methods eliminate or merge patterns that differ little from existing ones, keeping the repository fresh without unchecked growth. Systems can also apply statistical pruning to keep only those patterns that exceed some threshold of diversity or novelty, so that the repository captures essential knowledge instead of raw algorithmic output. Ultimately, redundancy awareness matters not just for efficiency but also for usability: reducing pattern clutter improves human interpretability and system responsiveness, and raises the chances that extracted patterns will be used in real-world decision-making.

5. Query Optimisation and Access Patterns

Even with strong indexes and storage, the query execution strategy largely determines end-to-end performance. We distinguish naïve (brute-force) traversals from index-aware traversals that support early search pruning and reduced input/output. We discuss index-aware pattern matching and outline caching and ranking mechanisms that deliver interactive response times. These techniques collectively convert traditional infrastructures into agile retrieval platforms.

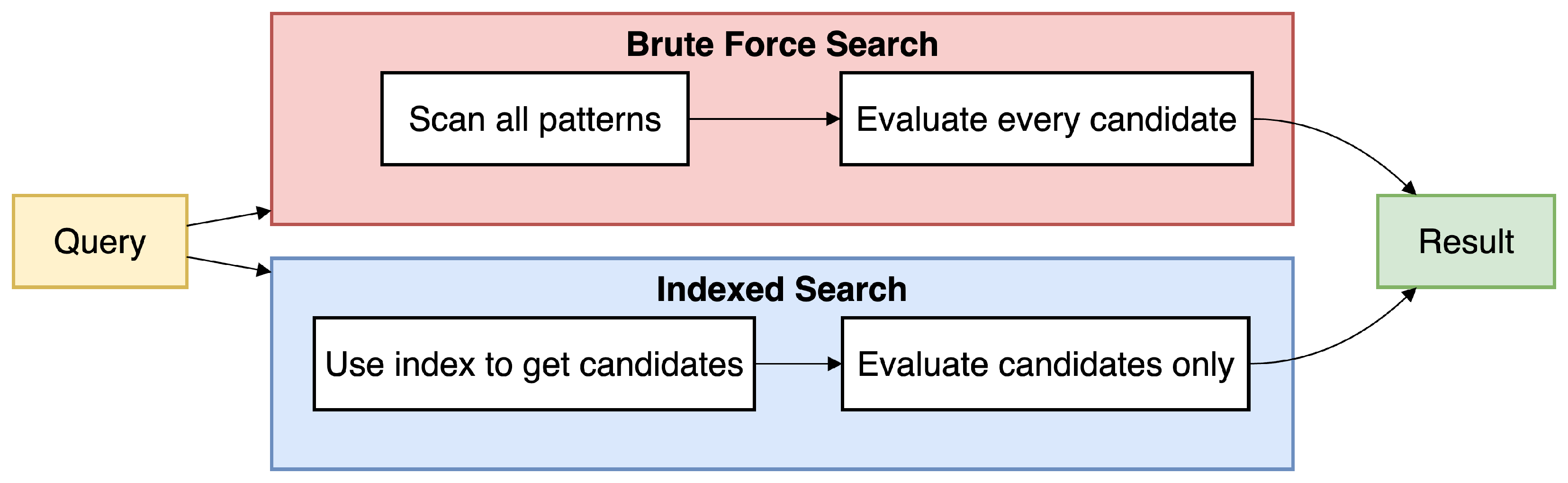

5.1. Search Strategies: Brute-Force vs. Optimised Traversal

Contrasting naïve full scans with index-aware traversals shows that exploiting repository structures (e.g., tries, inverted lists, graph summaries) can prune candidates early and reduce I/O and latency in practice. Retrieval from pattern repositories ranges from simple containment checks to advanced structural pattern matching. The simplest method is brute-force search, where each stored pattern is compared to the query using equality or similarity measures. Although simple to implement, it is computationally expensive, especially for repositories storing hundreds of thousands of patterns [

53]. In such cases, linear scan-based querying is impractical owing to memory consumption, input/output delay, and redundant computation.

Efficient search methods reduce reliance on exhaustive scanning by adopting traversal and pruning techniques. These methods exploit the organisation of the pattern repository, e.g., its trees, graphs, or indexes, to skip superfluous branches and keep candidate pattern evaluations to a minimum. For instance, within prefix trees, if some prefix does not match the query, the entire subtree below it can be eliminated from further analysis. Trie-of-rules designs further reduce branching and accelerate containment tests in rule repositories [

33]. Similarly, within subgraph retrieval, adopting graph summaries, neighbour filtering, or path indexes can significantly reduce the need to perform isomorphism verifications [

54].
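Prefix-based pruning can be sketched with a small trie over itemsets stored in sorted item order. The class and method names are hypothetical, and the query prefix is assumed to be given in the same sorted order.

```python
class TrieNode:
    def __init__(self):
        self.children = {}
        self.pattern_id = None  # set when a stored pattern ends at this node


class PatternTrie:
    def __init__(self):
        self.root = TrieNode()

    def insert(self, items, pattern_id):
        node = self.root
        for item in sorted(items):
            node = node.children.setdefault(item, TrieNode())
        node.pattern_id = pattern_id

    def with_prefix(self, prefix):
        """IDs of patterns whose sorted item sequence starts with `prefix`."""
        node = self.root
        for item in prefix:
            node = node.children.get(item)
            if node is None:
                return []  # mismatch: the whole subtree is skipped
        found, stack = [], [node]
        while stack:
            current = stack.pop()
            if current.pattern_id is not None:
                found.append(current.pattern_id)
            stack.extend(current.children.values())
        return sorted(found)


trie = PatternTrie()
trie.insert({"a", "b"}, 0)
trie.insert({"a", "b", "c"}, 1)
trie.insert({"a", "d"}, 2)
trie.insert({"b", "c"}, 3)
ab_patterns = trie.with_prefix(["a", "b"])
```

The lookup for the prefix `a, b` never visits the branches for `a, d` or `b, c`, which is the pruning effect described above.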

Another strategy is query rewriting, wherein the system converts a complex query into multiple simpler, more efficient subqueries that can be executed independently and then merged. For example, a query covering many items can be broken into item-pair constraints, which can be handled by intersecting inverted lists. Query expansion and pattern reformulation can also be used to handle semantically similar patterns, particularly in application areas like recommendation systems or document mining. Traversal methods can be classified as top-down or bottom-up, depending on the repository structure. Top-down methods suit repositories organised taxonomically, whereas bottom-up methods suit pruning that proceeds from more specific patterns to less specific ones, e.g., starting from maximal patterns [

55].

In practice, the best-performing traversals are hybrids that combine both orders, using priority queues, score-based thresholds, or dynamic filtering constraints to terminate early once a sufficient number of high-scoring results has been found (top-k).

In short, optimised traversal turns pattern querying from a batch operation into an interactive one. These techniques are essential in application scenarios demanding sub-second latency, live user interaction, or automation integration.

Figure 10 contrasts a full scan with an index-based two-phase plan which first prunes candidates and then evaluates only those.

5.2. Index-Aware Pattern Matching

Pattern matching, especially in structural or sequence mining, is a core operation that can benefit significantly from index-aware retrieval. Instead of comparing every pattern with the query in a brute-force manner, the system leverages pre-built indexes to limit the search space and perform matching more efficiently [

55]. In practice, index-aware matching typically follows a filter-and-verify pipeline: an index generates a small candidate set (filter), and only those candidates are checked exactly against the query (verify), which substantially reduces end-to-end cost.

In itemset or rule mining, inverted indexes can map items to pattern IDs, allowing fast containment tests. For instance, given a query for patterns containing {milk, bread}, the intersection of posting lists for “milk” and “bread” yields all candidate patterns. This avoids scanning unrelated patterns entirely. When scores or metadata are stored alongside postings, additional filtering or ranking can be done in-place. Score-aware postings (e.g., sorted by confidence, lift, or utility) enable early termination for top-k retrieval, and recent systems formalise such refinements within the query engine [56].
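The {milk, bread} lookup can be sketched as follows. The function names and the toy repository are assumptions for illustration; posting lists are plain Python sets rather than compressed, sorted lists.

```python
from collections import defaultdict


def build_inverted_index(patterns):
    """Map each item to the set of pattern IDs whose itemset contains it."""
    index = defaultdict(set)
    for pid, items in patterns.items():
        for item in items:
            index[item].add(pid)
    return index


def containing_all(index, query_items):
    """Intersect posting lists, shortest first, stopping once empty."""
    postings = sorted((index.get(item, set()) for item in query_items), key=len)
    if not postings:
        return set()
    result = set(postings[0])
    for posting in postings[1:]:
        result &= posting
        if not result:
            break  # no pattern can match; skip the remaining lists
    return result


repository = {
    1: {"milk", "bread"},
    2: {"milk", "bread", "eggs"},
    3: {"milk", "eggs"},
    4: {"bread", "jam"},
}
inverted = build_inverted_index(repository)
milk_bread = containing_all(inverted, {"milk", "bread"})
```

For pure containment queries the intersection is already exact; for richer predicates it would serve as the filter stage, with verification applied only to the surviving IDs.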

In sequence and time-series data, suffix trees, trie structures, or compressed suffix arrays allow for efficient subsequence matching. These structures support fast lookup of all patterns sharing a common prefix or subsequence, enabling retrieval with linear or near-linear time complexity [

57].

For graph patterns, matching is far more complex, due to the Nondeterministic Polynomial-time hard (NP-hard) nature of subgraph isomorphism. Here, index-aware methods use graph indexing schemes, such as graph features (e.g., paths, labels, subtrees), graph fingerprints, or filter-and-verify approaches [

58]. These allow quick elimination of non-matching candidates and reduce the number of expensive isomorphism checks. Specialised graph indexes, such as gIndex, GraphGrep, or TurboISO+, use different strategies to encode and retrieve structural graph information efficiently [27,28]. Research also both systematises subgraph-matching design choices [15] and advances exact methods with stronger pruning and ordering heuristics (e.g., PathLAD+) [59].

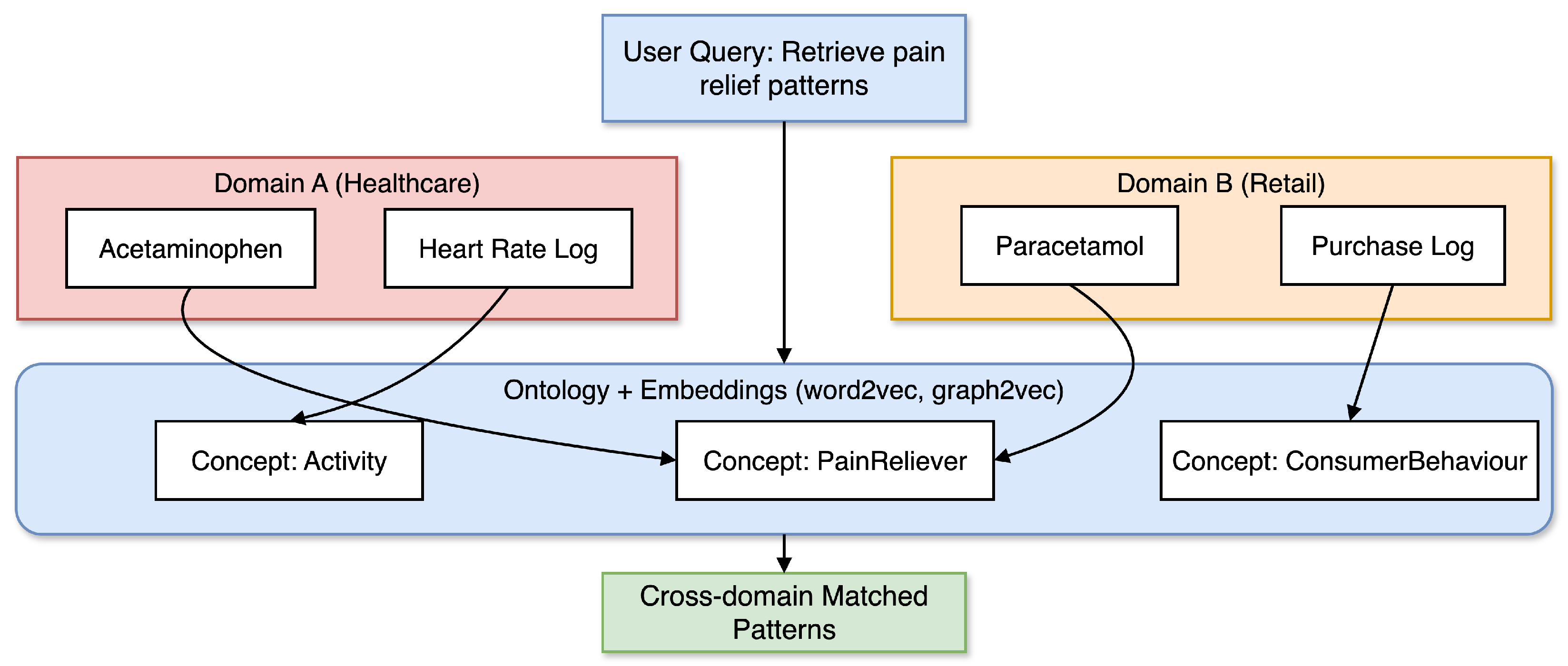

Another dimension of index-aware matching is the integration of semantic indexing, where ontologies or taxonomies are used to support pattern generalisation or abstraction. For example, in medical pattern retrieval, a rule involving “non-steroidal anti-inflammatory drugs” may match queries involving specific drugs like ibuprofen or naproxen if a domain-aware index supports this semantic expansion.

Modern retrieval systems also use multi-level indexes, combining different types (e.g., inverted and trie-based) to support hybrid query types or cross-domain pattern repositories. These combinations can offer both fast access and structural awareness, crucial for interactive and exploratory mining tools. In cross-domain settings, such index compositions are often the practical route to maintain low latency without sacrificing expressiveness.

5.3. Caching and Result Ranking Mechanisms

To further enhance query responsiveness and the user experience, many systems combine caching with result ranking. Caching maintains the results (or parts thereof) of previous queries so that similar or repeated queries can be answered without reprocessing [60]. Result ranking ensures that the most salient patterns appear at or near the top, especially when the user or the system can only handle a modest number of results (e.g., top-k). In interactive repositories, these two levers are complementary: caching amortises repeated work, while ranking ensures early delivery of the most useful patterns.

Query result caching works extremely well in scenarios with recurring access patterns, such as dashboards and recommender systems. When the same query is run repeatedly, caching significantly reduces latency and recomputation overhead. Caches can store raw outputs, partial indexes, or even intermediate stages of calculations. For example, a query for patterns related to “milk” and “bread” can cache the intersection computed from the posting lists of these items. Follow-up queries with similar constraints can either consume the cached result directly or refine it further. Production deployments typically combine admission/eviction policies (e.g., LRU, LFU, or Adaptive Replacement Cache (ARC)) with staleness controls (Time To Live (TTL) or dependency-based invalidation). Recent studies quantify the trade-offs among cache levels and policies for low-latency analytics [

61].
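A minimal sketch of a query-result cache that combines LRU eviction with a TTL staleness control is shown below. The class name, the default capacity, and the injectable clock are assumptions made for the example; a cache miss and an expired entry both return `None`.

```python
import time
from collections import OrderedDict


class QueryCache:
    """LRU cache whose entries also expire after a fixed time to live."""

    def __init__(self, capacity=128, ttl_seconds=60.0, clock=time.monotonic):
        self.capacity = capacity
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries = OrderedDict()  # key -> (expiry time, value)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # stale entry: treat as a miss
            return None
        self._entries.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        self._entries[key] = (self.clock() + self.ttl, value)
        self._entries.move_to_end(key)
        while len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict least recently used


# A fake clock makes the expiry behaviour reproducible in the example.
now = [0.0]
cache = QueryCache(capacity=2, ttl_seconds=10.0, clock=lambda: now[0])
cache.put(frozenset({"milk", "bread"}), {1, 2})
cache.put(frozenset({"beer"}), {3})
cache.get(frozenset({"milk", "bread"}))   # touch: {"beer"} is now the LRU entry
cache.put(frozenset({"eggs"}), {2, 3})    # exceeds capacity, evicts {"beer"}
```

Keying entries by a `frozenset` of query items makes the cache insensitive to the order in which items were specified.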

Advanced systems employ semantic caching, which allows not only identical queries but also similar or overlapping ones to reuse cached results. Such systems track containment relationships among queries and allow reuse through query rewriting or aggregation mechanisms. Cache content management and expiration are governed by policies like LRU (Least Recently Used), LFU (Least Frequently Used), or adaptive replacement, balancing freshness against space efficiency [

55]. Semantic (containment-aware) caches are particularly effective for conjunctive constraints over indexed attributes, where cached partial answers can be safely re-used after lightweight refinement.
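Containment-aware reuse for conjunctive item constraints can be sketched as follows. The function name and cache layout are assumptions: the cache maps a set of required items to the IDs of patterns that contain all of them, so any cached query whose items are a subset of the new query holds a superset of the new answer.

```python
def answer_with_semantic_cache(query_items, cache, patterns):
    """Answer a conjunctive containment query, reusing cached supersets.

    Returns the matching pattern IDs and whether a cached answer was refined
    ("cache") or the full repository had to be scanned ("scan").
    """
    query = frozenset(query_items)
    usable = [key for key in cache if key <= query]
    if usable:
        base_key = max(usable, key=len)  # most specific cached query
        candidates = cache[base_key]
        source = "cache"
    else:
        candidates = set(patterns)
        source = "scan"
    # Lightweight refinement: re-check only the candidate patterns.
    result = {pid for pid in candidates if query <= patterns[pid]}
    cache[query] = result
    return result, source


repository = {
    1: {"milk", "bread"},
    2: {"milk", "bread", "eggs"},
    3: {"milk", "eggs"},
    4: {"bread", "jam"},
}
semantic_cache = {}
first, first_source = answer_with_semantic_cache({"milk"}, semantic_cache, repository)
second, second_source = answer_with_semantic_cache(
    {"milk", "bread"}, semantic_cache, repository
)
```

The second query is answered by refining the three cached candidates for `{milk}` instead of scanning all four patterns.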

Ranking is necessary when queries return large result sets, since users can review only a limited number of patterns. Most ranking algorithms use statistical metrics, such as support, confidence, lift, leverage, novelty, or diversity. In particular application domains, utility functions based on input data or domain expertise adjust or extend the ranking according to specific priorities. For instance, patterns with high or recent anomaly scores can be ranked higher in a fraud detection application. Ranking can be performed at retrieval time or precomputed as part of the indexing process. Score-aware indexes, such as sorted posting lists or top-k join trees, support efficient ranking without requiring full materialisation of results [55].
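Early termination over a score-sorted posting list can be sketched as below. The function name and the toy scores are assumptions; the posting list is assumed to be sorted by descending score, so the first k entries that satisfy the query predicate are exactly the top-k.

```python
def top_k_from_sorted_postings(postings, predicate, k):
    """Return the k best matching (score, pattern_id) pairs.

    Also returns how many postings were examined, to show that the scan
    stops before the end of the list.
    """
    if k <= 0:
        return [], 0
    results, examined = [], 0
    for score, pattern_id in postings:
        examined += 1
        if predicate(pattern_id):
            results.append((score, pattern_id))
            if len(results) == k:
                break  # later entries cannot outrank what has been found
    return results, examined


# Postings sorted by descending confidence (toy values).
sorted_postings = [(0.95, 7), (0.90, 3), (0.80, 5), (0.70, 1), (0.60, 9)]
allowed = {3, 5, 9}  # e.g. pattern IDs that pass a metadata filter
best, examined = top_k_from_sorted_postings(
    sorted_postings, lambda pid: pid in allowed, k=2
)
```

Only three of the five postings are read before the two best matches are known, so the full result set is never materialised.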

Recent engines formalise constraint-aware top-k refinement and provide provable early-termination guarantees within the query optimiser, improving both fairness and latency [56]. In more dynamic environments, incremental ranking is used, where results are computed and ranked in real time as new data becomes available or as user preferences change.

The incorporation of ranking and caching abilities into the base retrieval engine turns pattern repositories into interactive knowledge systems supporting exploration, decision-making based on knowledge, and fast analytical processes.

6. Scalability and Performance Considerations

Real-world deployments must sustain growth in both data volume and query concurrency. This section explores scale-up and scale-out tactics, including partitioning, compression, and load-balanced replication of indexes. We examine parallel and distributed builds and streaming and incremental update paths to keep repositories fresh without disruptive rebuilds. The aim is practical guidance for maintaining throughput and predictable latency at scale.

6.1. Handling Large-Scale Pattern Sets

With the increased deployment of data mining systems on large and complex datasets, there has been an exponential growth in the number of discovered patterns [

2]. This growth poses a significant scalability problem not only for the mining algorithms, but also for the downstream storage, indexing, and retrieval systems. In particular, the explosion in pattern outputs imposes severe memory overhead on pattern repositories and can drastically increase query latency if left unmanaged [

33,

38].

For example, a frequent itemset repository may include hundreds of thousands of patterns, particularly at comparatively low support thresholds. In graph or sequence mining contexts, the pattern space can grow at an even higher rate due to structure variation and noise tolerance, as evidenced by challenges in large-scale graph pattern management [

50] and scalability concerns in graph indexing [

15].

Several methods address a problem of this magnitude. Pattern compression and condensation are used first to reduce the number of patterns without sacrificing semantic consistency [

26]. By storing only maximal, closed, or representative patterns, the system reduces unnecessary storage and improves access efficiency. Partitioning methods are then applied to split the pattern space into smaller pieces, arranged in order by item domains, pattern lengths, or support ranges. This method supports parallel processing, enhances query selectivity, and minimises memory requirements. However, effective partitioning must also consider load balancing to prevent skew, ensuring no single partition becomes a performance bottleneck.

In distributed computing environments, sharding between many machines or clusters allows for horizontal scaling. Distribution of shards can be based on ranges of hashes, time-based divisions, or semantic categories. Query planning needs to account for the scattered nature of data, and map-reduce or distributed search procedures are often used to consolidate results [

62].

Another important method is called lazy materialisation, which involves pre-storing summaries or metadata and, only when needed, building complete representations of patterns. This method is especially beneficial in interactive systems, where users normally interact with a limited set of patterns. Combined with effective caching techniques, lazy approaches greatly reduce both storage costs and input/output costs. Scalable pattern collections use memory-mapped access, where the collections themselves remain in a compressed form on disk and are mapped into memory only when accessed [

63]. This makes it possible to efficiently navigate and perform subset matching, even when running on standard hardware configurations.

Combined with compressed bitmap or trie indexes, these techniques make it possible to query large pattern collections while still meeting demanding performance requirements. Equally important, these scaling techniques are designed for compatibility with existing data infrastructures (distributed file systems, cloud storage layers, and database engines), so that pattern retrieval systems can integrate into modern enterprise analytics workflows.

6.2. Parallel and Distributed Storage/Indexing

Scalability is further addressed at the architectural level by adopting parallel and distributed storage and indexing systems [

2]. These systems divide data and computational work among multiple processors or nodes, enabling large numbers of patterns and multiple queries to be processed concurrently.

Multi-threading and SIMD instructions are core elements of parallel computing environments, making operations like posting list intersection, Boolean query evaluation, and traversal more efficient; for example, posting list intersections can be distributed over multiple CPU cores. Bitmap indexes are naturally suited to parallel access because bitwise operations on separate words are independent. Moreover, in-memory data structures, such as Roaring Bitmaps or succinct tries, allow multi-core access with minimal locking overhead.

In distributed systems, repositories are deployed on clusters using distributed file systems like Hadoop Distributed File System (HDFS) and storage engines (e.g., Cassandra, MongoDB, or Apache Ignite). Depending on the query model, indexes are either co-located with the data or centralised. Technologies such as Apache Lucene and Elasticsearch build on these ideas with distributed inverted indexing and load balancing, and their support for sharding and replication is critical for fault tolerance and high availability.

However, replicating large indexes across nodes introduces significant storage and memory overhead, and coordinating queries across a network can incur latency. Practical systems mitigate these issues with efficient routing, caching, and data partitioning strategies. Distributed indexing essentially encompasses the routing of queries and balancing of workload across nodes [

62]. Effective query routing is needed to direct each query (or sub-query) to the appropriate node based on index partitions, so that partial results can be combined efficiently.

Most query planners use cost models or statistics from data source metadata to improve routing efficiency. Federated scoring and distributed top-k retrieval techniques allow seamless combining of ranked results from several nodes. To allow scalability in scenarios involving streaming or high throughput, systems can adopt asynchronous indexing and eventual consistency, which enable adding new patterns without hindering ongoing data extraction operations. For cases demanding real-time responses, index snapshots coupled with versioned writes allow systems to provide consistent viewpoints, while concurrent updates happen behind the scenes.

Overall, parallel and distributed indexing techniques play a critical role in scaling up pattern retrieval systems for practical deployment, since system performance must grow with data size and user demand. For instance, [8] report that the Decomposition Transaction for Distributed Pattern Mining (DT-DPM) framework speeds up pattern mining by up to 701 on the Webdocs dataset, compared with up to 521 for Frequent Itemset Mining on Hadoop Data Partitioning (FiDoop-DP), and by up to 1325 on the New York Times dataset, compared with less than 900 for Mind the Gap for Frequent Sequence Mining (MG-FSM), as the percentage of transactions increases. Their experiments ran on a single Intel Core i7 machine (16 GB RAM) with multiple mappers rather than on a multi-node cluster [8], which nonetheless indicates the substantial performance gains of parallelisation.

Figure 11 illustrates this architecture: a coordinator distributes the query to sharded index nodes in parallel, gathers partial results, and merges them into a unified output.
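The coordinator's scatter-gather step can be sketched in a single process as follows. The function names, the CRC32-based shard assignment, and the toy scores are assumptions; in a real deployment each `local_top_k` call would run on a separate index node.

```python
import heapq
import zlib


def shard_for(pattern_id, num_shards):
    """Assign a pattern to a shard by hashing its ID (stable across runs)."""
    return zlib.crc32(pattern_id.encode("utf-8")) % num_shards


def build_shards(scored_patterns, num_shards):
    shards = [[] for _ in range(num_shards)]
    for pattern_id, score in scored_patterns.items():
        shards[shard_for(pattern_id, num_shards)].append((score, pattern_id))
    return shards


def local_top_k(shard, k):
    """Work done on one index node: its own k best entries."""
    return heapq.nlargest(k, shard)


def distributed_top_k(shards, k):
    """Coordinator: gather per-shard partial results and merge them."""
    partials = [local_top_k(shard, k) for shard in shards]
    merged = (entry for partial in partials for entry in partial)
    return heapq.nlargest(k, merged)


scores = {"p1": 0.90, "p2": 0.40, "p3": 0.75, "p4": 0.60, "p5": 0.95}
shards = build_shards(scores, num_shards=3)
global_top2 = distributed_top_k(shards, k=2)
```

Because each node returns its own k best entries, the merged result equals the global top-k regardless of how the patterns were distributed across shards.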

6.3. Streaming and Incremental Updates

Many application scenarios involve a constant flow of data, such as user actions, sensor inputs, or transactions evolving over time. In such scenarios, the underlying pattern repository has to support incremental updates, so that newly found patterns can be stored and queried without re-mining all data from scratch [

64,

65]. This streaming capability ensures that query results remain up-to-date with minimal latency as new data arrives. Static repositories, however, cannot easily deal with application scenarios demanding up-to-date knowledge and adaptive decision-making.

Incremental pattern maintenance is the underlying requirement: each batch of new data (an increment) is used to update existing patterns, re-evaluate support values, and add newly found patterns. Systems must identify the affected parts of the repository and apply increments in a way that does not compromise the validity of the index. For instance, when a new transaction is added to a data stream, the support values of the itemsets it contains are updated, and any itemsets that now meet or exceed the support threshold are added to the index.
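A minimal sketch of this update path is given below. It assumes, purely for brevity, that counts are tracked for every itemset of up to two items; the class and attribute names are hypothetical, and practical systems bound the tracked candidates far more carefully.

```python
from itertools import combinations


class IncrementalItemsetIndex:
    """Maintain itemset supports and a frequent-itemset index per transaction."""

    def __init__(self, min_count, max_size=2):
        self.min_count = min_count
        self.max_size = max_size
        self.counts = {}       # frozenset -> absolute support
        self.frequent = set()  # itemsets currently in the index

    def add_transaction(self, transaction):
        """Update supports; return itemsets that just became frequent."""
        items = sorted(set(transaction))
        newly_frequent = []
        for size in range(1, self.max_size + 1):
            for combo in combinations(items, size):
                key = frozenset(combo)
                self.counts[key] = self.counts.get(key, 0) + 1
                if self.counts[key] >= self.min_count and key not in self.frequent:
                    self.frequent.add(key)
                    newly_frequent.append(key)
        return newly_frequent


itemset_index = IncrementalItemsetIndex(min_count=2)
after_first = itemset_index.add_transaction(["milk", "bread"])
after_second = itemset_index.add_transaction(["milk", "eggs"])
after_third = itemset_index.add_transaction(["bread", "milk"])
```

Only the itemsets contained in the incoming transaction are touched, so the rest of the index stays valid without any rescan of earlier data.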

To make updates efficient, log-structured repositories and append-only storage are often utilised. These forms allow including more patterns or even novel metadata, without rewriting every file. Compaction or merge operations help to ensure consistency and optimise storage organisation [

63].

In the context of indexing, self-adjusting data structures like self-balancing trees, adaptive hash tables, and incrementally updatable Bloom filters support efficient insertions and deletions. In addition, tries can be augmented using methods involving lazy deletion or versioning, thus supporting stream churn with little cost related to expensive reorganisation.

To remain responsive, stream-based indexes operate within sliding windows and retain only those patterns that are frequent or relevant within the current window [

66]. This feature becomes crucial in concept drift situations or analysis across different temporal contexts, where outdated patterns need to be removed or down-ranked [

67]. For instance, a streaming pattern index can underpin a fraud detection system that continuously updates and queries patterns of suspicious behaviour as new transactions arrive, allowing the model to adapt to emerging fraud tactics in real time.
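A sliding-window index of this kind can be sketched as follows. The sketch assumes time-based windows, timestamps in seconds that arrive in non-decreasing order, and a simple count threshold; `SlidingWindowPatternIndex` and its parameters are illustrative names, not taken from any cited system.

```python
from collections import Counter, deque


class SlidingWindowPatternIndex:
    """Hypothetical index that counts pattern occurrences inside a time window."""

    def __init__(self, window_seconds, min_count):
        self.window_seconds = window_seconds
        self.min_count = min_count
        self.events = deque()    # (timestamp, pattern), oldest first
        self.counts = Counter()  # pattern -> occurrences inside the window

    def observe(self, timestamp, pattern):
        """Record an occurrence, then expire everything that left the window."""
        self.events.append((timestamp, pattern))
        self.counts[pattern] += 1
        self._evict(timestamp)

    def _evict(self, now):
        cutoff = now - self.window_seconds
        while self.events and self.events[0][0] <= cutoff:
            _, old_pattern = self.events.popleft()
            self.counts[old_pattern] -= 1
            if self.counts[old_pattern] == 0:
                del self.counts[old_pattern]

    def frequent(self):
        """Patterns whose in-window count meets the threshold."""
        return sorted(p for p, c in self.counts.items() if c >= self.min_count)


window = SlidingWindowPatternIndex(window_seconds=60, min_count=2)
window.observe(0, ("login", "device_change"))
window.observe(10, ("login", "device_change"))
window.observe(20, ("login", "purchase"))
before = window.frequent()
window.observe(65, ("login", "purchase"))
after = window.frequent()
```

Here `before` contains only the device-change pattern; once the occurrence at time 0 expires, `after` contains only the purchase pattern. Down-ranking instead of removal could be obtained by decaying counts rather than deleting them, which this sketch does not attempt.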

Performance evaluation and maintenance in streaming scenarios require not only adaptive query planning but also real-time load balancing, so that no single node becomes a bottleneck. Systems must adjust indexing depth, cache policy, and pattern filtering parameters according to data velocity, query complexity, and hardware load, among other factors. Furthermore, integrating stream processing frameworks (e.g., Apache Flink or Kafka Streams) helps end-to-end pattern mining and retrieval run with minimal latency. These frameworks also provide built-in fault tolerance (through state checkpointing and replication), so that pattern retrieval pipelines can recover from node failures with minimal disruption.

Overall, streaming and incremental updating procedures are critical to the effective operation of pattern retrieval systems in dynamic environments, where a continual influx of new information must be incorporated into the knowledge base as it arrives.

7. Systems and Tools Supporting Pattern Retrieval

The emphasis now turns from abstract ideas to practical instruments that practitioners can apply. In this section, we discuss mining libraries and frameworks (in particular, those offering retrieval interfaces), integration pathways with databases, search engines, and analytics dashboards, and representative architectures from operational settings.

7.1. Mining Libraries with Retrieval Support

Over the past two decades, many research-oriented and open-source data mining libraries have become available, which help to identify different kinds of patterns, including frequent itemsets, association rules, sequences, and subgraphs. Nevertheless, few of these libraries support the storage, querying, and filtering of extracted patterns beyond the initial mining phase.

The Sequential Pattern Mining Framework (SPMF) is a well-organised and comprehensive resource for sequential pattern mining, which includes over 250 algorithms focused on the discovery of frequent patterns and the learning of association rules [68,69]. Apart from its large collection of mining methods, SPMF supports exporting discovered patterns in structured formats, such as text and XML, and allows programmatic interaction through its Java APIs [69]. Although not a standalone database system, SPMF can be integrated into larger projects, and customised retrieval layers can be built on its outputs.

Several other libraries provide limited facilities for querying and post-processing mined patterns. For example, Borgelt’s suite of frequent itemset mining tools offers post-processing and compression of discovered patterns. The GUI-based data mining suite Orange v3.39.0 and the MLxtend v0.23.4 library for Python v3.11.x provide access to a range of mining algorithms and basic exploration tools, but often rely on external storage and indexing infrastructure to support query scalability [70,71,72].

Most mining libraries were designed for batch processing rather than for interactive, query-based retrieval. Their results are therefore often written to flat files, which require further processing before they can be queried. These libraries nevertheless remain an essential building block of broader systems, especially when combined with external storage or database management systems. Demand for libraries that support real-time querying and mining is rising, driven largely by users' need to change parameters dynamically while interactively analysing discovered patterns. Combining mining and retrieval capabilities is driving the evolution of more sophisticated tools in which pattern repositories are treated as first-class queryable objects rather than simple storage files [73].

7.2. Integration with Querying Systems and Dashboards

To make mined patterns accessible to users, many systems expose pattern sets through query interfaces, visual presentations, and dashboards. This integration consolidates the initial results of data mining into knowledge that is understandable, systematically organised, and user-friendly for clients or analysts.

Some approaches store pattern data in relational or NoSQL databases, which can be queried with standard languages such as SQL or the MongoDB query language [74,75]. Doing so makes it possible to retrieve patterns and filter them. These approaches rely on established techniques, such as indexing, sorting, and caching, which are particularly efficient when patterns are stored in tabular or JSON format. Association rules, for instance, can be stored with attributes such as antecedents, consequents, support, confidence, and lift, which enables complex queries like “rules concerning product X whose confidence is 0.8 or more.”
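The example query can be made concrete with Python's built-in `sqlite3` module. The two-table schema below (`rules` and `rule_items`) is only one plausible layout, assumed here for illustration; the sample rules and their metric values are invented.

```python
import sqlite3

# In-memory database; the schema is an assumed layout, not a standard one.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE rules (
    rule_id    INTEGER PRIMARY KEY,
    support    REAL NOT NULL,
    confidence REAL NOT NULL,
    lift       REAL NOT NULL
);
CREATE TABLE rule_items (
    rule_id INTEGER NOT NULL REFERENCES rules(rule_id),
    item    TEXT NOT NULL,
    side    TEXT NOT NULL CHECK (side IN ('antecedent', 'consequent'))
);
CREATE INDEX idx_rule_items_item ON rule_items (item);
""")

# Invented sample data: rule 1 is X -> Y, rule 2 is Z -> X, rule 3 is Y -> Z.
conn.executemany("INSERT INTO rules VALUES (?, ?, ?, ?)", [
    (1, 0.10, 0.85, 1.9),
    (2, 0.20, 0.60, 1.1),
    (3, 0.05, 0.90, 2.4),
])
conn.executemany("INSERT INTO rule_items VALUES (?, ?, ?)", [
    (1, "X", "antecedent"), (1, "Y", "consequent"),
    (2, "Z", "antecedent"), (2, "X", "consequent"),
    (3, "Y", "antecedent"), (3, "Z", "consequent"),
])


def rules_about(item, min_confidence):
    """Rules mentioning `item` on either side with confidence >= min_confidence."""
    query = """
        SELECT DISTINCT r.rule_id, r.confidence
        FROM rules AS r
        JOIN rule_items AS i ON i.rule_id = r.rule_id
        WHERE i.item = ? AND r.confidence >= ?
        ORDER BY r.confidence DESC
    """
    return conn.execute(query, (item, min_confidence)).fetchall()


matches = rules_about("X", 0.8)
```

Keeping items in a separate indexed table lets the database answer "concerning product X" with an index lookup rather than a scan over serialised antecedent strings. With the sample data, `matches` holds only rule 1, since rule 2 mentions X but falls below the confidence threshold.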

Advanced systems use Online Analytical Processing (OLAP) cubes or column-oriented databases like Apache Druid and ClickHouse to support fast aggregation, slicing, and filtering of pattern metadata. These systems become especially valuable in business-intelligence scenarios, where pattern visualisations can involve multiple temporal dimensions, categorical classifications, or different user segments [74,75]. Interactive dashboards, including Power BI, Apache Superset, and Tableau, let users work with backend pattern storage through APIs or connectors. Users can set filtering parameters, compare distributions, or drill down into particular sequences or result points by themselves [73].

Some systems extend this experience with pattern-driven data exploration, in which selecting a particular rule or sequence highlights the pertinent records, timelines, or visualisations in the underlying data. Integration is equally necessary in decision-support systems where the underlying patterns are not represented graphically but instead surface through alerting mechanisms, recommendations, or interactive user-interface elements. For example, rules flagging potentially fraudulent transactions can trigger fraud alerts, while behaviour sequences can drive dynamic updates of website content.

Ultimately, integration carries pattern recognition from academic research into real-world applications, where interpretability, responsiveness, and usability matter as much as algorithmic accuracy.

7.3. Case Examples and Architectures

Several operational frameworks and experimental prototypes have demonstrated scalable data organisation and pattern retrieval. They illustrate the trade-offs among the architectural and design paradigms used to integrate data mining, storage, and query techniques.

In retail analytics, organisations typically run pattern mining services either nightly or in real time to identify association rules, frequent itemsets, or sequences [10]. Extracted patterns are then stored in distributed document databases, e.g., MongoDB or Elasticsearch [76], and exposed via RESTful APIs to support integration with internal applications. Marketing analysts explore and interpret patterns by product category, store, or season, use ranking algorithms to highlight top-performing association rules or surprising contributors, and use supporting metadata to perform segmentation analysis [77]. Assessing the stability of clustering results is essential to ensure meaningful segmentation in unsupervised analysis [78]. Downstream recommendation systems operationalise these repositories. Pattern stores expose association rules and sequential motifs as queryable tables/postings (antecedent → consequents with support/confidence/lift) consulted as top-k lookups at request time. Inverted indices over itemsets/rules support fast containment; tries help prefix-sequence queries; columnar/NoSQL storage keeps rule attributes filterable at scale; dashboards and APIs operationalise the retrieval layer (Section 7.2). A practical compromise is hybrid retrieval: rank candidates symbolically for explainability, then rerank with embedding search for recall, balancing transparency with coverage under tight Service Level Objectives (SLOs) (Section 7.4).
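The request-time lookup described for recommendation systems, containment over an inverted index followed by a top-k cut, might look like the sketch below. `RuleIndex`, its methods, and the sample rules are assumptions made for illustration; ranking is by confidence only.

```python
import heapq
from collections import defaultdict


class RuleIndex:
    """Hypothetical inverted index from antecedent items to rule identifiers."""

    def __init__(self):
        self.rules = {}                   # rule_id -> (antecedent, consequent, confidence)
        self.postings = defaultdict(set)  # item -> ids of rules whose antecedent has it

    def add(self, rule_id, antecedent, consequent, confidence):
        self.rules[rule_id] = (frozenset(antecedent), frozenset(consequent), confidence)
        for item in antecedent:
            self.postings[item].add(rule_id)

    def top_k_containing(self, items, k):
        """Ids of the k most confident rules whose antecedent contains all items."""
        items = list(items)
        if not items:
            return []
        # Intersect the posting sets; an unknown item yields an empty result.
        candidates = set.intersection(*(self.postings.get(i, set()) for i in items))
        return heapq.nlargest(k, candidates, key=lambda rid: self.rules[rid][2])


rule_index = RuleIndex()
rule_index.add(1, {"bread", "butter"}, {"jam"}, 0.82)
rule_index.add(2, {"bread"}, {"milk"}, 0.60)
rule_index.add(3, {"bread", "butter", "eggs"}, {"flour"}, 0.91)
rule_index.add(4, {"milk"}, {"cereal"}, 0.75)
best = rule_index.top_k_containing(["bread", "butter"], k=2)
```

In this run `best` is `[3, 1]`: rule 2 lacks butter and rule 4 lacks both items. Intersecting posting sets touches only rules that share at least one query item, which is why containment stays fast as the repository grows.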

In fraud detection (finance & e-commerce), operational stacks maintain stream-updated repositories of suspicious sequences (e.g., purchase–login–device changes) and relational/graph motifs linking cards, devices, and IPs. Search mixes containment/top-k queries against prefix tries or temporal inverted lists with approximate membership (e.g., Bloom filters) for real-time pre-screening. Positives trigger costlier exact checks. The central trade-off is latency vs. precision. Approximate filters and shallow indexes reduce time-to-decision but introduce false positives, whereas deeper traversal and subgraph isomorphism increase precision at a higher cost. Sliding windows and incremental updates (Section 6.3) keep scores fresh under drift, while caching popular rule antecedents reduces hot-path latency.
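The pre-screening step can be illustrated with a small Bloom filter placed in front of an exact store. This is a teaching sketch under stated assumptions: the filter size, the SHA-256-based hashing scheme, and the `is_suspicious` helper are all hypothetical, and a Python set stands in for the costlier exact check.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter; one byte per bit position for readability."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits)

    def _positions(self, key):
        # Derive num_hashes positions by salting the key with a seed.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{key}".encode("utf-8")).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, key):
        for pos in self._positions(key):
            self.bits[pos] = 1

    def might_contain(self, key):
        """False means definitely absent; True means possibly present."""
        return all(self.bits[pos] for pos in self._positions(key))


def is_suspicious(sequence, bloom, exact_store):
    """Cheap approximate pre-screen first; exact check only on a positive."""
    key = ">".join(sequence)
    if not bloom.might_contain(key):
        return False           # fast path: the filter has no false negatives
    return key in exact_store  # stand-in for the costlier exact verification


known = [("purchase", "login", "device_change"), ("login", "login", "purchase")]
bloom = BloomFilter()
exact_store = set()
for seq in known:
    bloom.add(">".join(seq))
    exact_store.add(">".join(seq))

hit = is_suspicious(("purchase", "login", "device_change"), bloom, exact_store)
miss = is_suspicious(("login", "logout"), bloom, exact_store)
```

Because a Bloom filter never reports a stored key as absent, the fast path cannot miss a known pattern; false positives only cost one extra exact lookup. Shrinking `num_bits` lowers memory at the price of more such lookups, which is the latency versus precision trade-off in miniature.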

In cybersecurity, systems based on pattern-matching intrusion detection compare real-time network traffic against databases of known attack patterns, usually stored as sequences or graph representations. To speed up matching, indexing techniques such as graph signatures and Bloom filters are used, and the indexes are refreshed in real time as new patterns arrive from threat intelligence feeds [41,79].

In bioinformatics and biomedical analytics, repositories and investigation platforms capture clinical pathways, comorbidity patterns, and interaction subgraphs from electronic health records in relational stores and knowledge graphs with ontology-backed access (e.g., SNOMED). Concept-aware querying via semantic annotations enables temporal roll-ups and data visualisation to show how patterns evolve over time, supporting physicians and research staff in trend analysis, cohort studies, and hypothesis generation. Indexing typically combines graph signatures/fingerprints for candidate generation with ontology expansion for semantic matches. The key trade-off is exact subgraph containment versus semantic generalisation across heterogeneous vocabularies (Section 8.3 on cross-domain semantics). This design supports cohort discovery, hypothesis support, and explainable retrieval in noisy, evolving datasets [80,81,82].

Table 6 summarises the domain-specific data models, access patterns, indexing, and key trade-offs discussed in this section.

Educational systems, such as PROPHET, PeTMiner, and SMARTMiner, integrate mining, retrieval, and visualisation specifically for learning or prototyping purposes. These systems support user-specified pattern constraints, enable dynamic querying, and offer rule visualisation, usually via web-based interfaces that interact with backend repositories through APIs or in-memory engines. From a design point of view, most systems separate the phases of mining and retrieval by using decoupled modules for pattern extraction, storage, and access. However, there is rising interest in integrated pattern platforms, where mining and querying are tightly coupled, enabling incremental discovery, just-in-time retrieval, and feedback-driven learning. Together, these perspectives illustrate the multi-layered evaluation and application of pattern retrieval tools.

Table 7 consolidates the libraries, integration pathways, and representative architectures that support pattern retrieval.

7.4. Integration with Data-Science and ML Pipelines

Modern deployments increasingly combine symbolic pattern repositories with embedding stores to support hybrid queries (metadata & vectors). Pipeline-level integration typically exposes pattern retrieval via SQL/REST APIs and User-Defined Functions (UDFs), while vector search (Approximate Nearest Neighbour (ANN)) handles semantic similarity. The execution layer reconciles attribute filters with ANN stages and delivers top-k results under latency SLOs [14].
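A hybrid query of this kind can be sketched as an attribute filter followed by a similarity ranking. To stay self-contained, the sketch below uses exact brute-force cosine similarity as a stand-in for the ANN stage, so it shows the control flow rather than an approximate index; the record layout, the `hybrid_top_k` function, and the two-dimensional embeddings are invented for illustration.

```python
import heapq
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def hybrid_top_k(patterns, query_vec, min_confidence, k):
    """Filter on metadata first, then rank the survivors by vector similarity.

    The ranking is an exact scan here; a deployed system would presumably
    replace it with an ANN index.
    """
    candidates = [p for p in patterns if p["confidence"] >= min_confidence]
    return heapq.nlargest(
        k, candidates, key=lambda p: cosine(p["embedding"], query_vec)
    )


# Invented pattern records with toy two-dimensional embeddings.
patterns = [
    {"id": "r1", "confidence": 0.90, "embedding": [1.0, 0.0]},
    {"id": "r2", "confidence": 0.50, "embedding": [1.0, 0.0]},
    {"id": "r3", "confidence": 0.85, "embedding": [0.6, 0.8]},
    {"id": "r4", "confidence": 0.95, "embedding": [0.0, 1.0]},
]
top = [p["id"] for p in hybrid_top_k(patterns, [1.0, 0.0], min_confidence=0.8, k=2)]
```

Here `top` is `["r1", "r3"]`: `r2` is the closest vector but fails the confidence filter. Filtering before the vector stage keeps every returned result valid, whereas filtering after an approximate search can return fewer than k results; which order is preferable depends on filter selectivity.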

From an engineering perspective, the interface points include: (i) ingestion connectors (e.g., Spark/Flink) that produce both discrete patterns and learned embeddings, (ii) index-coordinated query planners that interleave postings/bitmaps with ANN operators, and (iii) dashboard/Business Intelligence (BI) adapters that surface ranked, explainable patterns alongside nearest-neighbour evidence. The recent survey of vector database management systems (VDBMSs) documents operator support for hybrid predicates, partitioning/compression (Product Quantisation (PQ), residual quantisation), and distributed serving, all of which are directly relevant when integrating our indexes with Machine Learning (ML) retrieval stacks [14].

Where semantic enrichment is required (e.g., pattern roll-ups along taxonomies), ontology-backed access provides controlled generalisation and virtual integration across sources, with current surveys outlining design choices and open challenges in semantic querying [16].

8. Open Challenges and Future Trends