1. Introduction

In the current era, with the wave of digital information sweeping across the globe, data has become a core resource driving social development and innovation. However, the contradiction between data sharing and privacy protection is intensifying. Moreover, cloud computing involves the processing of a massive volume of user data, and during network transmission this data often lacks effective security protection, exposing users to serious problems such as data contamination and privacy leakage [1,2,3]. Traditional encryption technologies require decryption before data can be processed, which poses severe challenges to data security. As a breakthrough achievement in the field of cryptography [4], homomorphic encryption has broken this deadlock. It enables direct computation on encrypted data, providing a new path for the secure circulation of data and for privacy protection. Homomorphic encryption was initially proposed by Rivest et al. in 1978 [4]. For the subsequent three decades, constructing such a scheme remained an open problem in cryptography. The design of homomorphic encryption is typically based on hard mathematical problems, and researchers began by exploring the homomorphic properties of some early cryptographic systems [5,6,7,8,9,10]. However, these early systems could only achieve partially homomorphic encryption. For instance, some only satisfied the multiplicative homomorphic property [5,6], while others only met the additive homomorphic property [7,8,9,10]. In 2009, Gentry constructed the first fully homomorphic encryption scheme based on ideal lattices [11]. He proposed the bootstrapping technique to transform a somewhat homomorphic encryption (SHE) scheme into a fully homomorphic encryption scheme. Since then, a large number of optimization methods and implementation schemes for fully homomorphic encryption have emerged [12,13,14,15,16,17,18,19]. At present, practical fully homomorphic encryption schemes are mainly based on the hardness of the Learning with Errors (LWE) problem [20] and the Ring-Learning with Errors (Ring-LWE) problem [21]. Examples include the schemes represented by BGV [14], BFV [15], and CKKS [19], as well as other types of fully homomorphic encryption schemes represented by TFHE [17,18] and FHEW [22]. The emergence of these algorithms has brought homomorphic encryption one step closer to practical application, and research in this field is currently booming [23,24,25,26,27,28,29,30].

Images are important carriers of information, but can include private personal and business-related information; in particular, data such as medical images demand a high level of privacy protection. Under such circumstances, the application of homomorphic encryption in image processing becomes particularly important. In recent years, numerous studies on the application of homomorphic encryption in image processing have emerged [31,32,33,34,35,36,37,38]. However, most of these works either apply existing homomorphic encryption algorithms directly to image processing or improve operational efficiency by modifying algorithms or optimizing devices. The application of homomorphic encryption algorithms in image processing therefore still faces numerous challenges. First, the algorithms rely on hard mathematical problems such as large-integer factorization and lattice problems, resulting in a high threshold for design and implementation. Second, their computational capability is limited. Some homomorphic encryption schemes only support specific types of calculations. Although fully homomorphic encryption theoretically supports any calculation, its efficiency is extremely low, making it difficult to adapt to complex image processing operations such as convolution. Moreover, ciphertext operations significantly increase the computational cost. Third, the rounding operations introduced to control precision make the results approximate, so it is difficult to ensure the accuracy of the processing results. Fourth, as the data volume increases and the application scenarios become increasingly complex, steps such as key generation and management become correspondingly more difficult. The underlying cause of these challenges is that the current mainstream fully homomorphic encryption algorithms (such as CKKS and BFV) were not designed with the special characteristics of image processing in mind, such as the two-dimensional matrix structure of pixels, strong correlations between pixels, and the need to preserve visual semantics. As a result, they suffer from core bottlenecks: a mismatch between the operation mechanism and image data, visual quality degradation caused by noise accumulation, and weak support for non-linear operations.

This paper systematically uncovers the homomorphic properties of pixel scrambling algorithms for the first time. Through the analysis of typical algorithms such as magic square transformation, Arnold transformation, Henon map, and Hilbert curve, we demonstrate that these algorithms generally possess homomorphic encryption characteristics. This finding shatters the traditional perception that homomorphic encryption relies on complex mathematical problems (such as LWE and Ring-LWE). It constructs a lightweight homomorphic encryption theoretical framework in the field of image processing, fills the research gap in the combination of pixel-level operations and homomorphic properties, and enriches the theoretical system of homomorphic encryption.

The remainder of this paper is organized as follows. An overview of the research background is presented in Section 2. An analysis of the homomorphic encryption characteristics of pixel scrambling algorithms is presented in Section 3. Section 4 analyzes and discusses the homomorphic characteristics of pixel scrambling algorithms, along with their advantages and limitations. Finally, the conclusions are presented in Section 5.

2. Overview of Research Background

2.1. Homomorphic Encryption

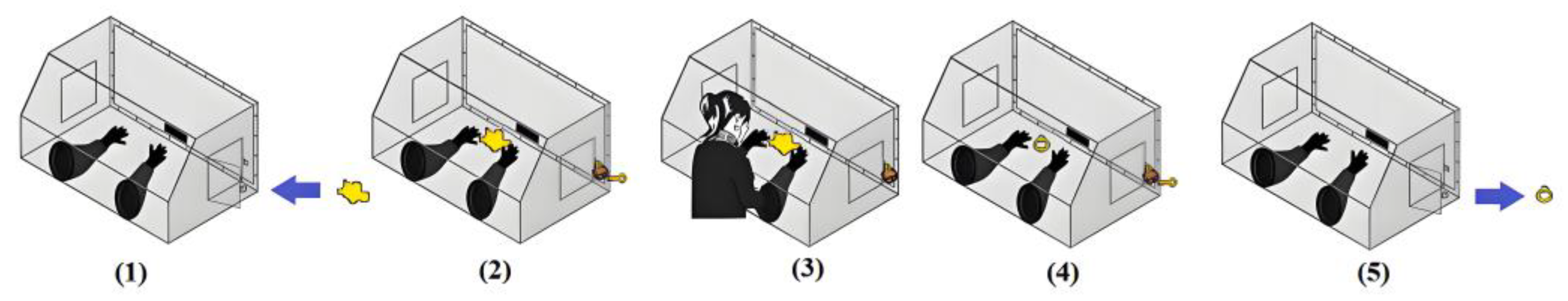

The core principle of homomorphic encryption is that an operator can directly process ciphertext: when the sender decrypts the processed ciphertext, the result obtained is equivalent to that of performing the same operation directly on the plaintext. To understand this principle more intuitively, we illustrate it with a real-life example (see Figure 1). Suppose we want to process a piece of gold into a ring while ensuring that no gold is lost during the processing. We can put the gold into a special box and then lock the box (this step is similar to the encryption process in homomorphic encryption, which converts plaintext into ciphertext). With the help of a special linking device, the processor can work on the gold inside the box without opening it (this is analogous to processing ciphertext in homomorphic encryption). After the processing is complete, we use a specific key to open the box (corresponding to the decryption process in homomorphic encryption) and obtain the finished ring, with no gold lost throughout the process (this indicates that the result of the ciphertext operation, after decryption, is consistent with that of the plaintext operation). This example gives an intuitive but relatively broad picture of the homomorphic encryption principle. For a more in-depth and specific analysis, please refer to reference [23], which explores the principle of homomorphic encryption in detail from an elementary to an advanced level.

This principle is particularly applicable in the current era of big data processing. In the context of huge amounts of data and extremely high requirements for data confidentiality, we can utilize homomorphic encryption technology. Under the premise of ensuring data confidentiality, encrypted data can be uploaded to the cloud for processing. After the cloud has processed the encrypted data, the user downloads and decrypts it. The result obtained is exactly the same as that of performing the same operation directly on the plaintext data, which not only enables efficient data processing, but also fully guarantees data security.

From a mathematical perspective, whether a system possesses homomorphic properties can be understood in the following way. An encryption system has homomorphic properties if it supports addition or multiplication on encrypted data such that decrypting the result gives the same value as performing the same operation directly on the plaintext data. Specifically, an encryption function E and a decryption function D exist such that, for any plaintexts A and B, the following conditions are satisfied:

Additive Homomorphism:

$$D(E(A) \oplus E(B)) = A + B \qquad (1)$$

where ⊕ represents the addition operation of ciphertexts.

Multiplicative Homomorphism:

$$D(E(A) \otimes E(B)) = A \times B \qquad (2)$$

where ⊗ represents the multiplication operation of ciphertexts.
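The multiplicative condition, D(E(A) ⊗ E(B)) = A × B, can be illustrated with textbook RSA, a well-known multiplicatively homomorphic scheme. The following is a minimal sketch only: the toy parameters (p = 61, q = 53, e = 17, d = 2753) and the function names are assumptions chosen for illustration, the scheme is unpadded and insecure, and the identity holds only while the product stays below the modulus.

```python
# Toy textbook RSA (illustrative assumption, not a secure implementation).
P, Q = 61, 53
N = P * Q          # modulus 3233
E_EXP = 17         # public exponent
D_EXP = 2753       # private exponent: 17 * 2753 = 1 (mod 3120)

def encrypt(m):
    """E(m) = m^e mod n."""
    return pow(m, E_EXP, N)

def decrypt(c):
    """D(c) = c^d mod n."""
    return pow(c, D_EXP, N)

def cipher_mul(c1, c2):
    """Ciphertext multiplication: the operator written as ⊗ in the text."""
    return (c1 * c2) % N

a, b = 7, 12
product_from_ciphertexts = decrypt(cipher_mul(encrypt(a), encrypt(b)))
print(product_from_ciphertexts, a * b)
```

The party multiplying the ciphertexts never sees `a` or `b`; only the key holder recovers the product.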

When only one of these conditions is satisfied, the scheme is called partially homomorphic encryption. When both conditions are met, it is called fully homomorphic encryption. According to their construction methods, fully homomorphic encryption algorithms can currently be classified into four generations:

First generation (2009–2010): There are two main representative schemes. One is the ideal-lattice-based scheme proposed by Gentry in 2009 [11], and the other is the integer-based scheme proposed by van Dijk et al. in 2010 [12].

Second generation (2011–2013): The core construction idea changed in the second-generation schemes. They are based on the Ring-Learning with Errors (Ring-LWE) problem and optimize the leveled homomorphic structure, thereby reducing the reliance on the bootstrapping technique. Representative schemes include the BV scheme proposed by Brakerski and Vaikuntanathan [13], the BGV scheme proposed by Brakerski, Gentry, and Vaikuntanathan [14], and the BFV scheme proposed by Brakerski, Fan, and Vercauteren [15].

Third generation (2013–2016): The third-generation fully homomorphic encryption schemes take the approximate eigenvector technique for matrices as the core construction idea. This technique simplified the bootstrapping process and thus improved operational efficiency. Representative schemes include the GSW scheme proposed by Gentry, Sahai, and Waters [16].

Fourth generation (2016–present): The core construction idea of the fourth-generation schemes combines Ring-LWE over polynomial rings with noise control in the complex domain. This combination first achieved support for homomorphic encryption for arithmetic of approximate numbers. Representative schemes include the CKKS scheme proposed by Cheon, Kim, Kim, and Song [19], and the TFHE (Fast Fully Homomorphic Encryption over the Torus) scheme focusing on Boolean circuits [17,18].

In recent years, homomorphic encryption algorithms have developed rapidly. Numerous review papers on homomorphic encryption have been published [23,24,27,30]. These papers comprehensively summarize and analyze the theoretical basis, algorithmic evolution, and application scenarios of fully homomorphic encryption, thus facilitating the continuous refinement of its theory. With the deepening of research, several efficient algorithms [25] and optimization techniques [26] have been proposed. In the medical field, Munjal et al. systematically reviewed the contributions of homomorphic encryption to the medical industry, including patient data privacy protection and medical data analysis [28]. Additionally, Xie et al. reviewed efficiency optimization techniques for homomorphic encryption in privacy-preserving federated learning, introducing a series of methods to improve computational efficiency [29]. Recently, homomorphic encryption, especially fully homomorphic encryption, has been developing in multiple dimensions [39,40,41,42]. Examples include cross-domain technology integration [39], efficiency breakthroughs at the hardware level [40], and scenario-oriented tool adaptation [41,42]. This indicates that homomorphic encryption is no longer confined to a single cryptographic algorithm. Instead, centered on the core objectives of enhancing privacy protection capabilities, reducing implementation barriers, and expanding application scenarios, it is advancing simultaneously through interdisciplinary collaboration, hardware support, scenario-specific tools, and theoretical systems, thereby forming a multi-dimensional development pattern.

Homomorphic encryption is burgeoning, especially with the CKKS algorithm capable of handling floating-point data, which holds the promise of enabling broader applications of homomorphic encryption. Nevertheless, its application in image processing confronts formidable challenges.

2.2. Homomorphic Encryption in Image Processing

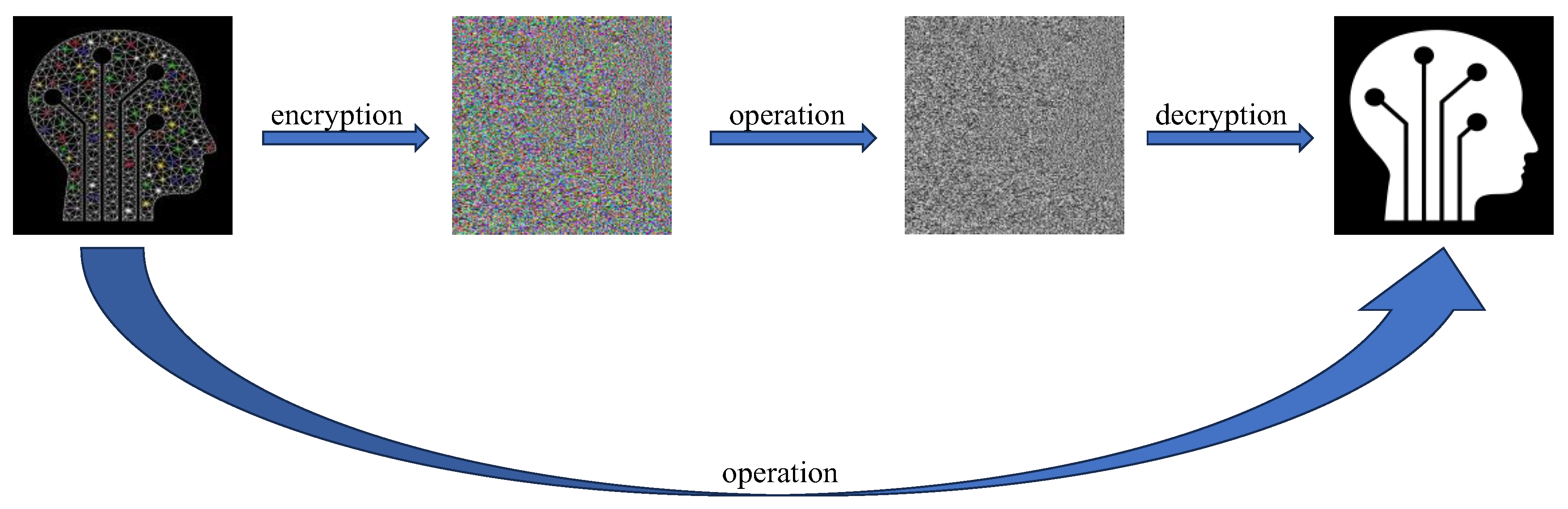

The ideal state of homomorphic encryption in image processing should be that an image can be processed without revealing its content, and the processed result is exactly the same as that obtained by performing the same operations on the original image. As illustrated in Figure 2, the sender first encrypts the image and then transmits the encrypted ciphertext to the cloud, where the information is processed. After the processing is complete, the relevant information is sent back to the sender. The sender obtains the final processed result through the decryption operation. Throughout this process, the original image information remains confidential, and the result obtained after decryption is identical to that of directly processing the plaintext image.

At present, the application of homomorphic encryption in image processing has expanded to multiple aspects. For example:

Image privacy protection: Homomorphic encryption can perform calculations without decrypting the image content, effectively protecting the privacy of the image data [10,31,32,33,34,35,36,37,38].

Image enhancement processing: Homomorphic encryption algorithms can be applied to image enhancement processes such as image denoising and contrast enhancement. Without decrypting the image content, image enhancement can be achieved, improving the effectiveness and security of the image processing [32,34,36,37].

Image retrieval: Traditional image retrieval methods pose a risk of privacy leakage when dealing with sensitive image data, while homomorphic encryption algorithms can enable the fast retrieval of encrypted images [10,31,32,34,36,37,38].

Image watermark embedding: Homomorphic encryption technology can be used to embed watermark information in the encrypted domain [10,35].

Collaborative computing and sharing: Homomorphic encryption supports collaborative computing, allowing multiple users to share data in an encrypted state and conduct joint processing, effectively resolving the contradiction between data sharing and privacy protection [34,35].

The core logic of these schemes is to take existing mature homomorphic encryption algorithms (such as the CKKS algorithm supporting somewhat homomorphic encryption, the Paillier algorithm supporting additive homomorphic encryption, or the lattice-based BGV algorithm) as the technical basis, and adapt and integrate them according to the specific requirements of image processing (such as image enhancement, retrieval, watermarking, and face recognition). Instead of developing a brand-new homomorphic encryption algorithm specifically for image processing scenarios, the core feature of existing algorithms, which is the ability to perform calculations without decrypting ciphertexts, is utilized to solve the contradiction between data privacy protection and function realization in image processing.

However, when directly applying existing homomorphic encryption algorithms to image processing, due to the mismatch between the data characteristics of image processing (such as high dimensionality and strong pixel correlation) and the inherent attributes of homomorphic encryption algorithms (such as high computational complexity and noise sensitivity), several core problems will be faced. These include the following:

- (1) The computational efficiency is extremely low, making it difficult to meet the real-time requirements of image processing.

- (2) The data dimension does not match the algorithm’s carrying capacity, easily triggering noise overflow.

- (3) There is a misalignment between the algorithm functions and the requirements of image processing, and some operations cannot be directly supported.

- (4) The storage and transmission costs are too high, making it difficult to adapt to practical scenarios. (For example, for a 16-bit pixel plaintext, after being encrypted by BGV or CKKS, the ciphertext length may reach thousands or even tens of thousands of bits.)

- (5) There is poor compatibility with the existing image processing toolchain, and it is difficult to implement.

Based on the above context, in what follows, we will adopt the perspective of algorithm design to deeply analyze and explore some known scrambling algorithms. These algorithms are characterized by low resource consumption and high computational speed. Our aim is to identify elements within them that can satisfy the characteristics of homomorphic encryption, thereby offering new insights into resolving the challenges of homomorphic encryption in image processing.

4. Analysis and Discussion

Pixel scrambling algorithms feature low resource consumption. Encryption is achieved merely by rearranging pixel positions, eliminating the need for complex mathematical operations. With low demands on hardware computing power, they can be directly deployed on embedded devices. There is no quality loss, as scrambling only modifies pixel positions without altering pixel values. After decryption, the original image quality can be fully restored, meeting the requirements of image-quality-sensitive scenarios such as medical imaging and high-definition images.

4.1. Additive Homomorphic Encryption

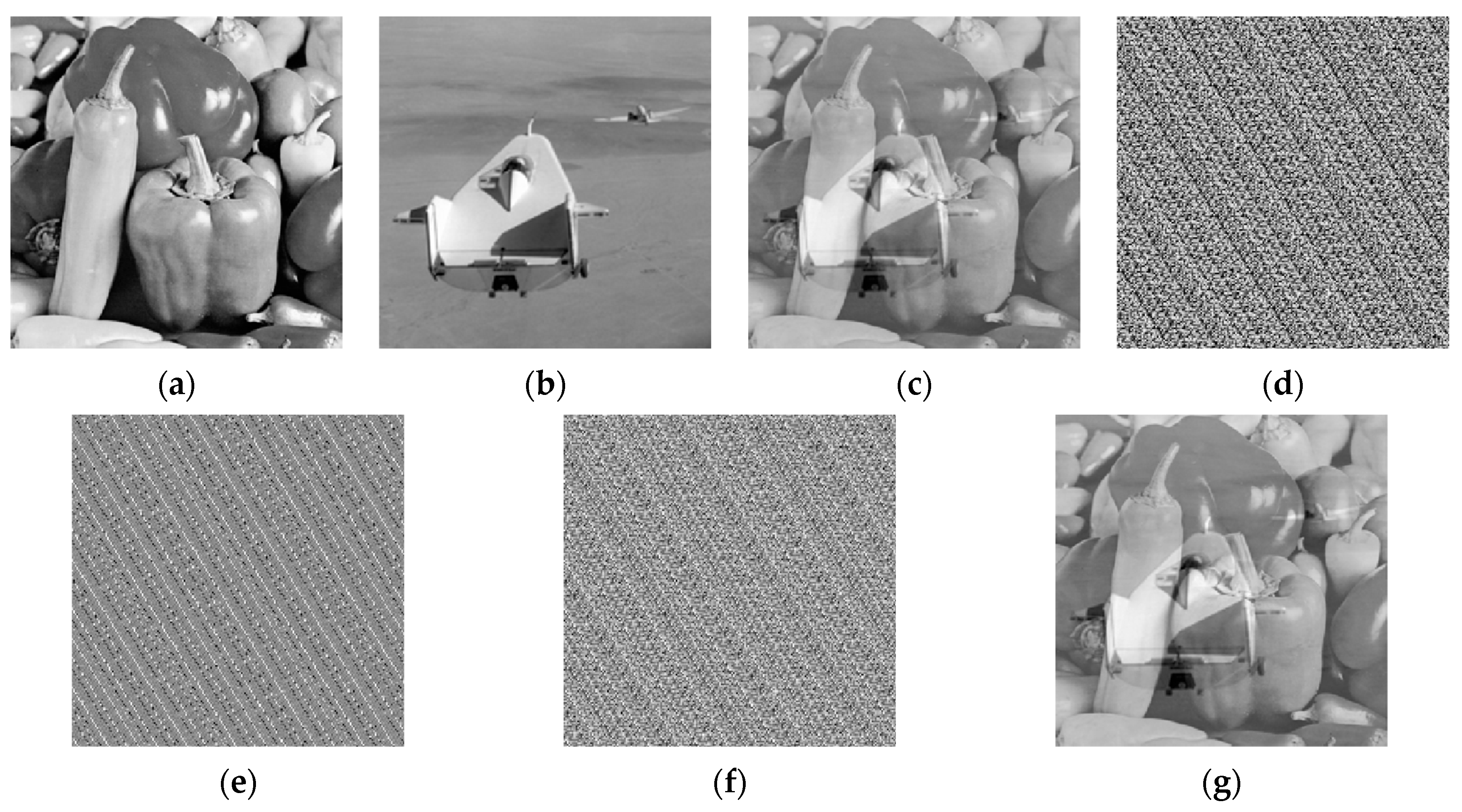

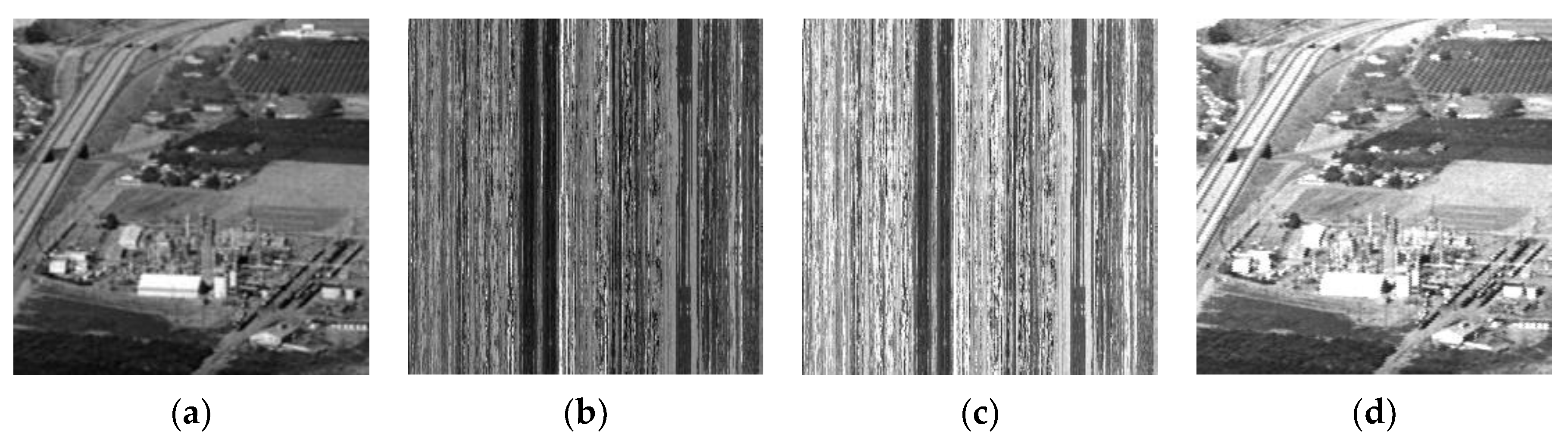

In the numerical simulation, the four algorithms mentioned in Section 3 all satisfy additive homomorphic encryption (the additive homomorphic encryption emphasized here is specifically manifested as the results presented in Figure 6). This raises a question: do common pixel scrambling algorithms all possess the characteristics of additive homomorphic encryption?

The essence of the pixel scrambling algorithm is to change the positions of the pixels. This concept has a long history, dating back to classical transposition ciphers and extending to the current chaotic systems. In the field of information security protection, such algorithms ensure security by changing the positions of plaintext elements, achieving the effect of confusion in Shannon’s system confidentiality theory. In view of the potential relationship between the pixel scrambling algorithm and additive homomorphic encryption, we next verify whether such a position scrambling algorithm satisfies additive homomorphism through the following proof.

For image A, assume that each pixel is represented as $a_{ij}$ (where i represents the row and j represents the column of the pixel). For image B, assume that each pixel is represented as $b_{ij}$. When we perform the addition operation on images A and B, the corresponding mathematical operation is to add the pixels at the same positions. Therefore, it can be expressed as

$$c_{ij} = a_{ij} + b_{ij} \qquad (9)$$

where $c_{ij}$ represents the result of the addition. Next, we use the pixel scrambling algorithm, with the same key, to scramble the pixel positions of images A and B, respectively. Writing $(i', j')$ for the position to which the algorithm moves the pixel at $(i, j)$, we obtain

$$a'_{i'j'} = a_{ij} \qquad (10)$$

$$b'_{i'j'} = b_{ij} \qquad (11)$$

By adding the encrypted results, we then have

$$c'_{i'j'} = a'_{i'j'} + b'_{i'j'} = a_{ij} + b_{ij} \qquad (12)$$

We carry out position restoration (decryption) on the result of Equation (12), which moves the value at $(i', j')$ back to $(i, j)$, and then we obtain

$$D(c')_{ij} = c'_{i'j'} = a_{ij} + b_{ij} \qquad (13)$$

When comparing Equation (9) with Equation (13), it is easy to see that adding the ciphertexts and then decrypting is equivalent to adding the plaintexts. This demonstrates that the pixel scrambling algorithm satisfies additive homomorphic encryption.
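The argument above can be checked numerically. The following is a minimal sketch, assuming images are flattened into lists of pixel values and the scrambling key is a seeded pseudo-random permutation; the helper names `make_permutation`, `scramble`, `unscramble`, and `add_images` are hypothetical and stand in for any position-scrambling algorithm.

```python
import random

def make_permutation(n_pixels, seed):
    """Key: perm[src] is the ciphertext position of plaintext pixel src."""
    perm = list(range(n_pixels))
    random.Random(seed).shuffle(perm)
    return perm

def scramble(pixels, perm):
    """Encrypt by moving each pixel to its permuted position."""
    out = [0] * len(pixels)
    for src, dst in enumerate(perm):
        out[dst] = pixels[src]
    return out

def unscramble(pixels, perm):
    """Decrypt by moving each pixel back to its original position."""
    return [pixels[dst] for dst in perm]

def add_images(x, y):
    """Pixel-wise addition of two images of equal size."""
    return [p + q for p, q in zip(x, y)]

image_a = [10, 20, 30, 40, 50, 60]
image_b = [1, 2, 3, 4, 5, 6]
key = make_permutation(len(image_a), seed=2024)

# Add in the encrypted domain, then decrypt.
cipher_sum = add_images(scramble(image_a, key), scramble(image_b, key))
decrypted_sum = unscramble(cipher_sum, key)
print(decrypted_sum == add_images(image_a, image_b))
```

The check relies on both images being scrambled with the same key; with different keys the pixel positions no longer line up and the identity fails in general.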

The additive homomorphic encryption of images has many applicable scenarios in daily life. For example, in digital watermarking, it can directly add watermark information to the pixel values of the original image in an encrypted state. This approach not only safeguards the privacy of the image content, but also ensures the accuracy of watermark embedding and extraction, effectively supporting copyright tracking and anti-counterfeiting. In the field of medical imaging, it enables the encrypted superposition of pixels from multiple-source images or batch statistical calculations (such as summing the gray-scale values of the lesion area). This makes it possible for cross-institutional collaborative analysis and medical research to be carried out smoothly without disclosing patient privacy, thus achieving a balance between data privacy protection and practical value.

The advantage of pixel scrambling algorithms lies in their strong universality. They address the issues of high computational costs and easy data expansion in image addition that mainstream homomorphic encryption algorithms (such as CKKS) face. Pixel scrambling algorithms require no complex mathematical operations and avoid problems such as a sharp increase in data volume.

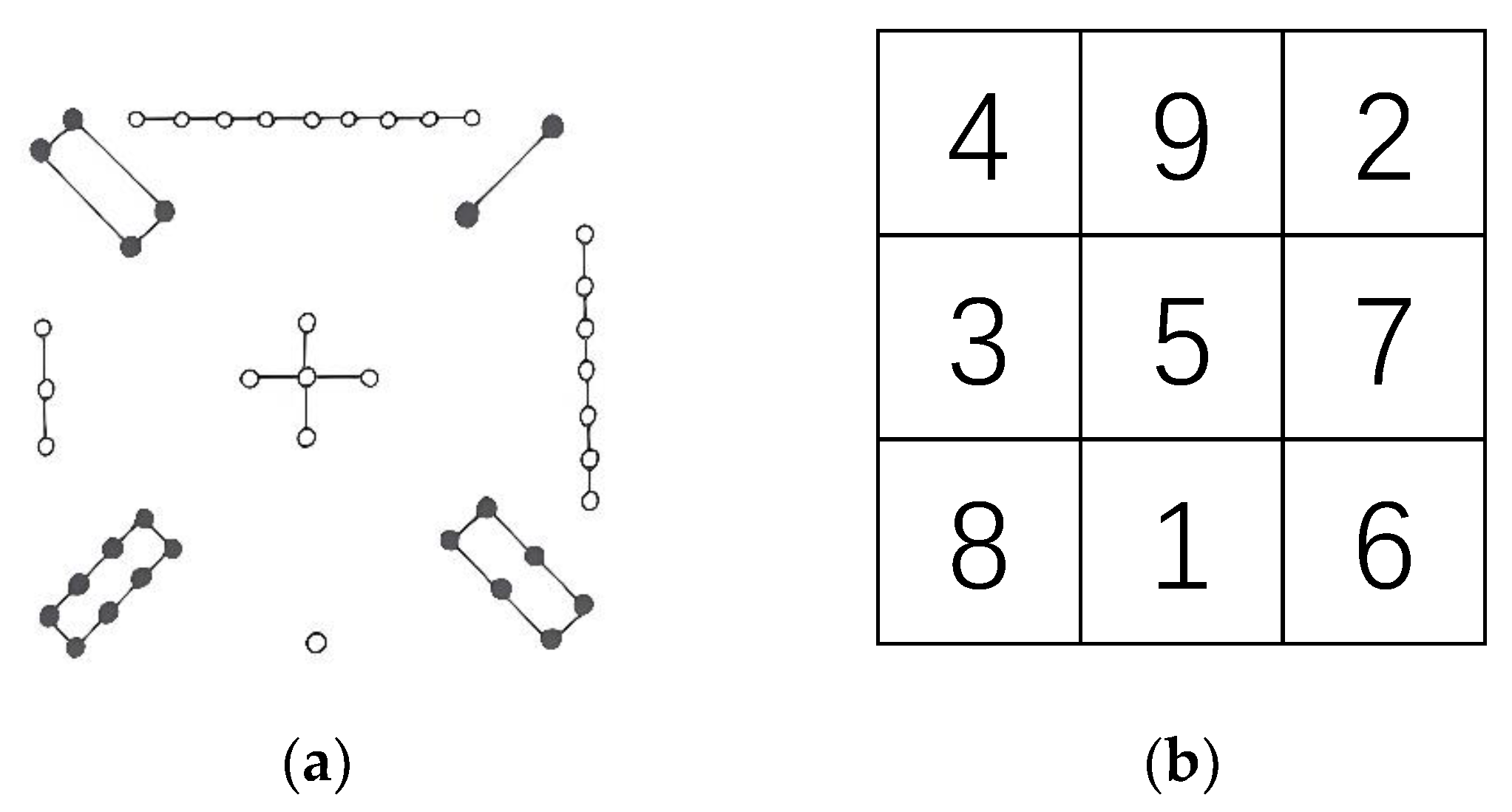

4.2. Rotational Homomorphic Encryption

In the numerical simulation, among the four algorithms presented in Section 3, only the magic square transformation and the Arnold transformation satisfy rotational homomorphic encryption, and the applicability of the Arnold transformation is highly limited. The other two algorithms do not satisfy rotational homomorphic encryption. This raises the question of what conditions a pixel scrambling algorithm must meet to satisfy rotational homomorphic encryption. The characteristics of the magic square transformation and the Arnold transformation suggest the answer: the algorithm should possess a symmetric property. Here, we analyze the magic square transformation as an example.

- (1) Rotational symmetry: When a magic square rotates around its center by angles such as 90 degrees, 180 degrees, or 270 degrees, the sum of the numbers in each row, each column, and on each diagonal remains equal. The rotated magic square is equivalent to the original one.

- (2) Axial symmetry: In some magic squares, there exists an axis of symmetry. When these magic squares are folded along this axis, the numbers on either side can be put in one-to-one correspondence, and the sums of the numbers in the corresponding positions are equal. This property gives the magic squares a certain degree of invariance under axial symmetry transformation.

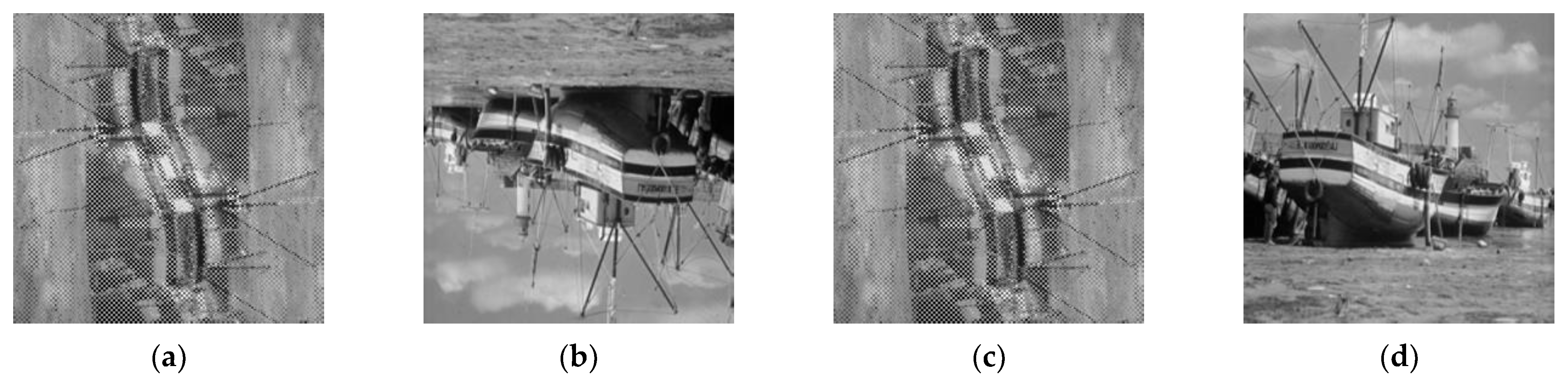

This characteristic of the magic square transformation implies that rotating the ciphertext, or flipping it horizontally or vertically, does not break decryption. As observed from Figure 4 and Figure 5, both the rotation and the horizontal or vertical flipping of the ciphertext image satisfy homomorphic encryption. Nevertheless, this property applies only to rotations that are multiples of 90 degrees and fails to hold for rotations at arbitrary angles.

In the Arnold transformation, when the ciphertext is rotated by 180 degrees, decryption recovers the inverted image of the plaintext, and this scenario satisfies rotational homomorphic encryption, as depicted in Figure 7. This is because, with the parameters a = 19, b = 1, and N = 11 chosen, the ciphertext encrypted by the Arnold transformation exhibits symmetry. For rotations at other angles, however, this property does not hold. In contrast, the other two algorithms lack such symmetric features. Consequently, decrypting their rotated ciphertexts does not yield the rotated plaintext, and they do not possess the traits of rotational homomorphic encryption. This indicates that pixel scrambling algorithms satisfy rotational homomorphic encryption in applications only when they are chosen or designed to meet certain symmetric or rotational properties.
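One way to make the symmetry requirement concrete is to note that decrypting a rotated ciphertext yields the rotated plaintext exactly when the position map of the scrambling algorithm commutes with the rotation. The sketch below illustrates this under stated assumptions: `equivariant_map` is a hypothetical construction (not the magic square or Arnold transformation) that permutes whole rotation orbits of an even-sized image, and `swap_map` is a deliberately asymmetric counterexample.

```python
import random

def rot_pos(i, j, n):
    """Destination of pixel (i, j) under a 90-degree clockwise rotation."""
    return j, n - 1 - i

def rotate(img):
    n = len(img)
    out = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            r, c = rot_pos(i, j, n)
            out[r][c] = img[i][j]
    return out

def scramble(img, sigma):
    """Encrypt: move the pixel at each position p to sigma[p]."""
    n = len(img)
    out = [[0] * n for _ in range(n)]
    for (i, j), (r, c) in sigma.items():
        out[r][c] = img[i][j]
    return out

def unscramble(img, sigma):
    """Decrypt: move the pixel at sigma[p] back to p."""
    n = len(img)
    out = [[0] * n for _ in range(n)]
    for (i, j), (r, c) in sigma.items():
        out[i][j] = img[r][c]
    return out

def equivariant_map(n, seed):
    """Hypothetical position map that commutes with rotation (n even)."""
    reps = [(i, j) for i in range(n // 2) for j in range(n // 2)]
    targets = reps[:]
    random.Random(seed).shuffle(targets)
    sigma = {}
    for p, q in zip(reps, targets):
        for _ in range(4):  # extend along the four positions of each orbit
            sigma[p] = q
            p, q = rot_pos(*p, n), rot_pos(*q, n)
    return sigma

def swap_map(n):
    """Map that only swaps (0, 0) and (0, 1); it breaks the symmetry."""
    sigma = {(i, j): (i, j) for i in range(n) for j in range(n)}
    sigma[(0, 0)], sigma[(0, 1)] = (0, 1), (0, 0)
    return sigma

n = 4
image = [[4 * i + j for j in range(n)] for i in range(n)]
symmetric, asymmetric = equivariant_map(n, seed=1), swap_map(n)
ok = unscramble(rotate(scramble(image, symmetric)), symmetric) == rotate(image)
bad = unscramble(rotate(scramble(image, asymmetric)), asymmetric) == rotate(image)
print(ok, bad)
```

The symmetric map passes the check for 90-degree rotations (and hence all multiples of 90 degrees), while the asymmetric map fails, which matches the observation that only scrambling algorithms with suitable symmetry support rotation in the encrypted domain.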

Image rotational homomorphic encryption has broad application prospects. For example, in medical imaging, it enables the rotation and alignment of lesion areas captured by different devices in an encrypted state, facilitating cross-institutional collaborative diagnoses without disclosing patient information. In remote sensing images, it can calibrate the angles of encrypted satellite images, facilitating multi-temporal data comparison while protecting sensitive geographical information. In the fields of surveillance and autonomous driving, it can rotate the encrypted images to correct the perspective deviation, ensuring privacy without affecting subsequent processing such as target detection. Its core lies in achieving the rotation of encrypted images under the premise of privacy protection, balancing the needs of data security and practicality.

The advantage of pixel scrambling algorithms with symmetric properties is that they compensate for the defects of mainstream homomorphic encryption algorithms. In the latter, rotational operations tend to disrupt pixel correlations, resulting in a decline in the visual quality of decrypted images.

4.3. Homomorphic Encryption for Brightness Adjustment

In digital images, the brightness of each pixel is directly determined by its pixel value (such as the grayscale value in a grayscale image, or the red/green/blue channel values in an RGB image). Specifically, the larger the pixel value, the higher the brightness at the corresponding position; conversely, the smaller the pixel value, the lower the brightness. Therefore, by adjusting the pixel values of all pixels in the image (such as uniformly increasing or decreasing the pixel values, or scaling the pixel values proportionally), the brightness can be increased or decreased. For example, if the grayscale value of each pixel in a grayscale image is increased by 50 (provided that it does not exceed the maximum pixel value), the overall image will become brighter. Conversely, decreasing the pixel values will darken the image. This approach represents the most fundamental and commonly used method for brightness adjustment.

Obviously, the core characteristic of the pixel scrambling algorithm lies in merely rearranging the pixel positions without altering their original values. This feature endows it with unique advantages in homomorphic encryption for brightness adjustment. Brightness adjustment relies on the linear adjustment of pixel values, such as increasing or decreasing the grayscale values. The transformation of pixel positions after scrambling does not interfere with the numerical operations in the encrypted state. On the one hand, the pixel scrambling algorithm can meet the core requirement of homomorphic encryption in terms of brightness adjustment. That is, after performing brightness adjustment on the image in the encrypted state, the decrypted result is consistent with that obtained by directly performing the same brightness adjustment on the plaintext image. On the other hand, through pixel position scrambling, the degree of privacy protection for the image is further enhanced, thus achieving dual-layer security protection for both pixel values and pixel positions.

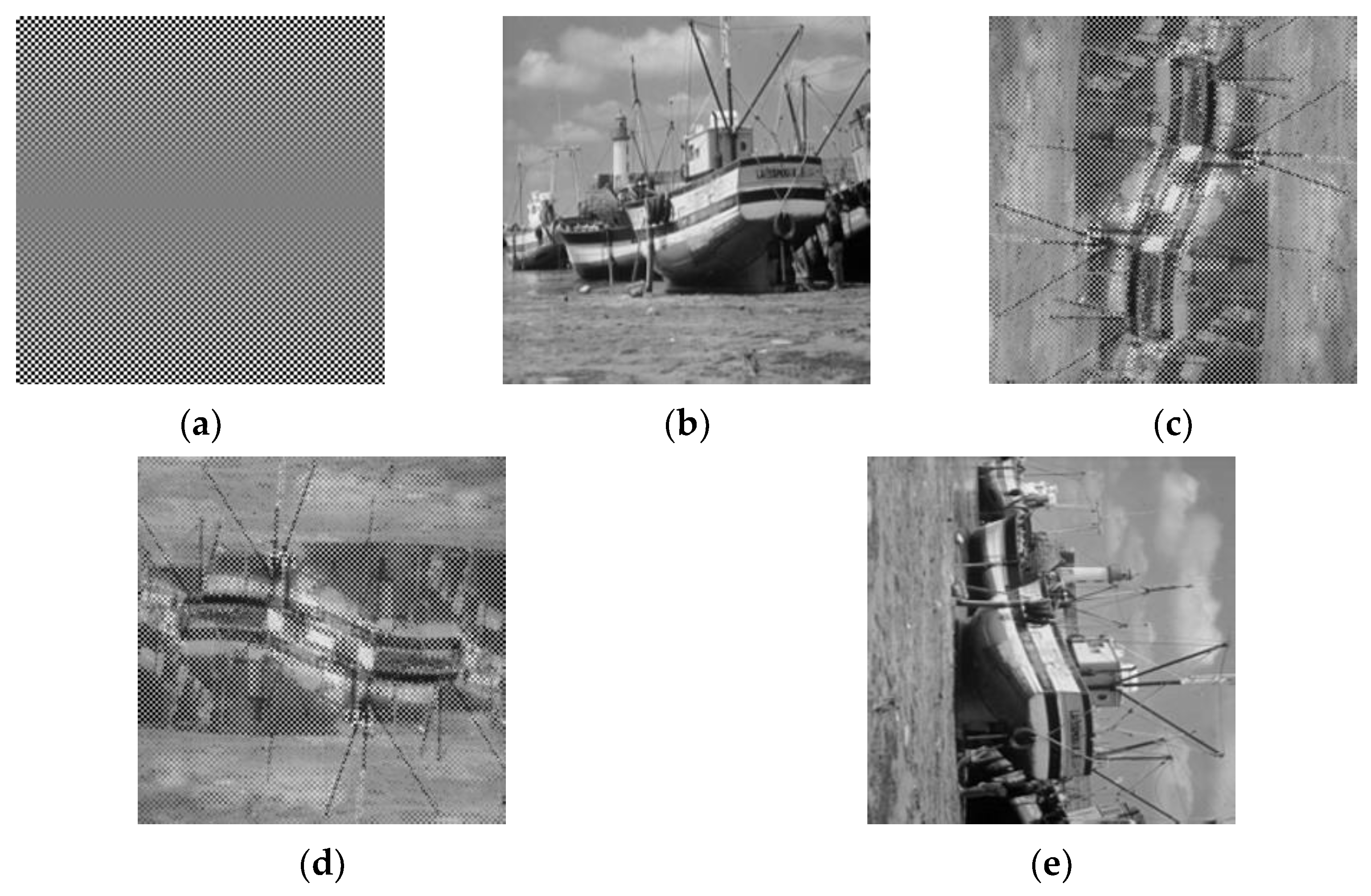

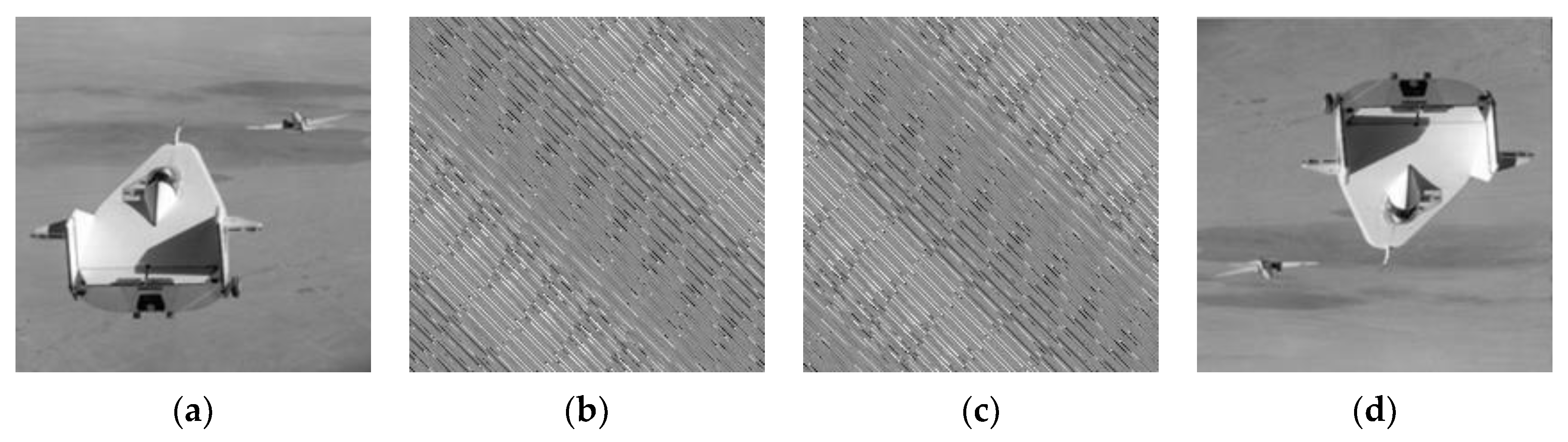

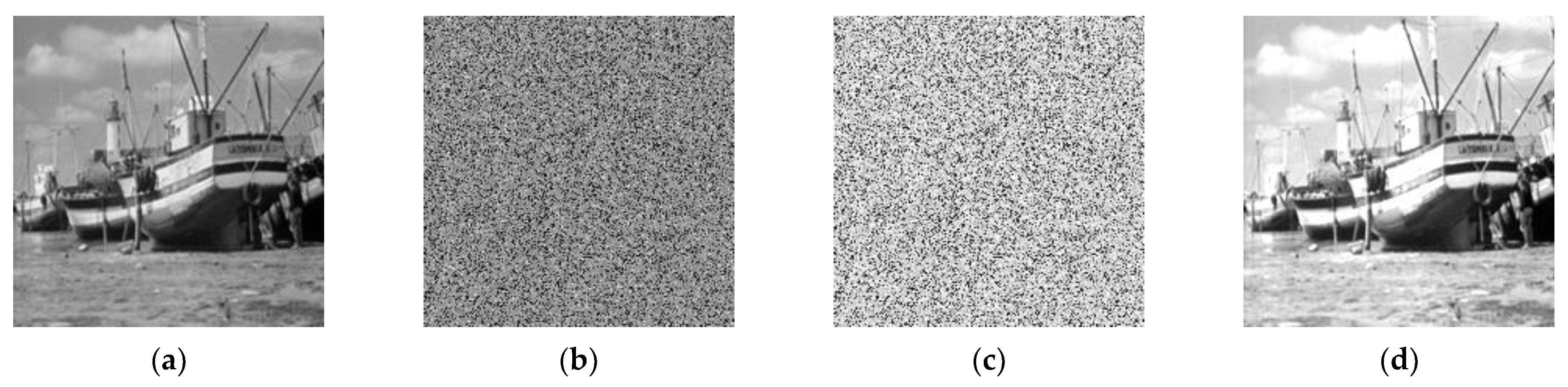

To ensure the generality of the pixel scrambling algorithm, we use MATLAB to generate a pseudo-random matrix and encrypt the known image using the position elements of the matrix as indices. The results are shown in Figure 10. Figure 10a represents the original image. The result after encrypting the plaintext image with the pseudo-random matrix is shown in Figure 10b. For the ciphertext in Figure 10b, we perform brightness adjustment by adding 80 to each pixel value. If the value exceeds 255, it is regarded as white, and we obtain Figure 10c. Subsequently, we decrypt Figure 10c, and the restored image is shown in Figure 10d. It can be easily seen from the verification results in Figure 10 that the pixel scrambling algorithm satisfies homomorphic encryption for brightness adjustment.
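The experiment can be mirrored in a few lines. This is a minimal sketch, not the MATLAB code used for Figure 10: it assumes an 8-bit grayscale image flattened into a list, a seeded pseudo-random permutation as the key, and hypothetical helper names. Because the adjustment acts on each pixel value independently of its position, saturation at 255 does not break the equivalence.

```python
import random

def make_permutation(n_pixels, seed):
    """Key: perm[src] is the ciphertext position of plaintext pixel src."""
    perm = list(range(n_pixels))
    random.Random(seed).shuffle(perm)
    return perm

def scramble(pixels, perm):
    out = [0] * len(pixels)
    for src, dst in enumerate(perm):
        out[dst] = pixels[src]
    return out

def unscramble(pixels, perm):
    return [pixels[dst] for dst in perm]

def brighten(pixels, delta, max_value=255):
    """Add delta to every pixel; values above max_value saturate to white."""
    return [min(p + delta, max_value) for p in pixels]

plain = [0, 60, 120, 180, 200, 255, 30, 90]
key = make_permutation(len(plain), seed=7)

cipher = scramble(plain, key)
decrypted = unscramble(brighten(cipher, 80), key)   # adjust ciphertext, then decrypt
expected = brighten(plain, 80)                      # adjust plaintext directly
print(decrypted == expected)
```

The same reasoning applies to any point operation that maps each pixel value on its own, such as proportional scaling, but not to operations that depend on neighboring pixels.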

Homomorphic encryption for image brightness adjustment has a wide range of applications. In the field of medical imaging, primary hospitals upload encrypted CT scans to the cloud for expert consultation. If the images are dim due to shooting parameters, experts can directly increase the brightness in the encrypted state. This not only prevents the leakage of patient information, but also facilitates an accurate diagnosis. In remote sensing image analysis, for encrypted satellite images with locally dim areas caused by cloud cover, analysts can enhance the brightness in the encrypted state. While highlighting key information, this also ensures the security of confidential content such as military bases and border lines. In the scenario of security monitoring, if the encrypted surveillance footage at night is blurred due to insufficient lighting, making faces or license plates indistinguishable, the system can increase the brightness in the encrypted state. This enables the back-end algorithm to clearly identify suspicious persons and also protects the privacy of residents’ activities and details of private places. During cloud-based image editing, when users upload encrypted family photos, if the photos are underexposed due to backlighting, the cloud can directly adjust the brightness of the encrypted images, balancing privacy protection and image optimization. In the field of digital art, during academic exchanges of encrypted scanned paintings, if local brushstrokes appear dim due to scanning issues, researchers can brighten the image in the encrypted state. This allows for the analysis of color layers while preventing the unauthorized replication of original high-definition images.

Pixel scrambling algorithms only rearrange pixel positions and do not change pixel values. Their advantage lies in the fact that the decryption effect of brightness adjustment (such as an increase or decrease in grayscale value) in the encrypted state is consistent with that of plaintext adjustment, without brightness deviation or detail loss. They solve the problem of brightness adjustment in mainstream homomorphic encryption algorithms that easily leads to noise accumulation and image quality degradation. With pixel scrambling algorithms, brightness changes can be achieved only through linear adjustment. Meanwhile, due to their low hardware requirements, they are suitable for embedded devices and real-time scenarios. Brightness adjustment in the encrypted state can be realized on terminals such as mobile phones and monitoring devices, meeting real-time demands such as enhancing the brightness of night-time surveillance images, and avoiding the problem of high-computing-power consumption in algorithms such as CKKS.

4.4. Homomorphic Encryption with Added Noise

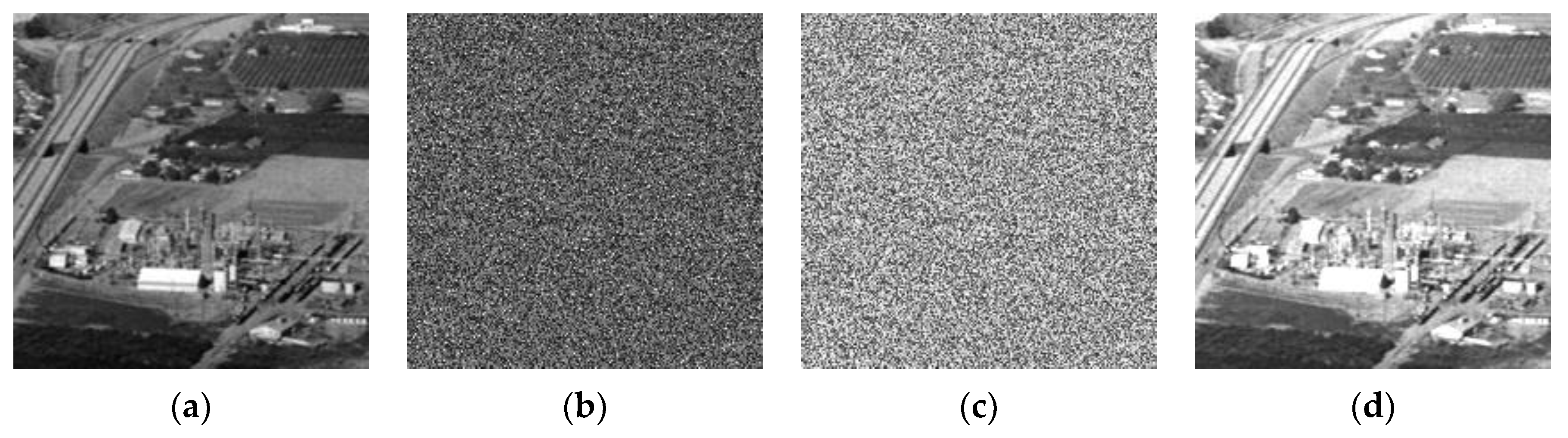

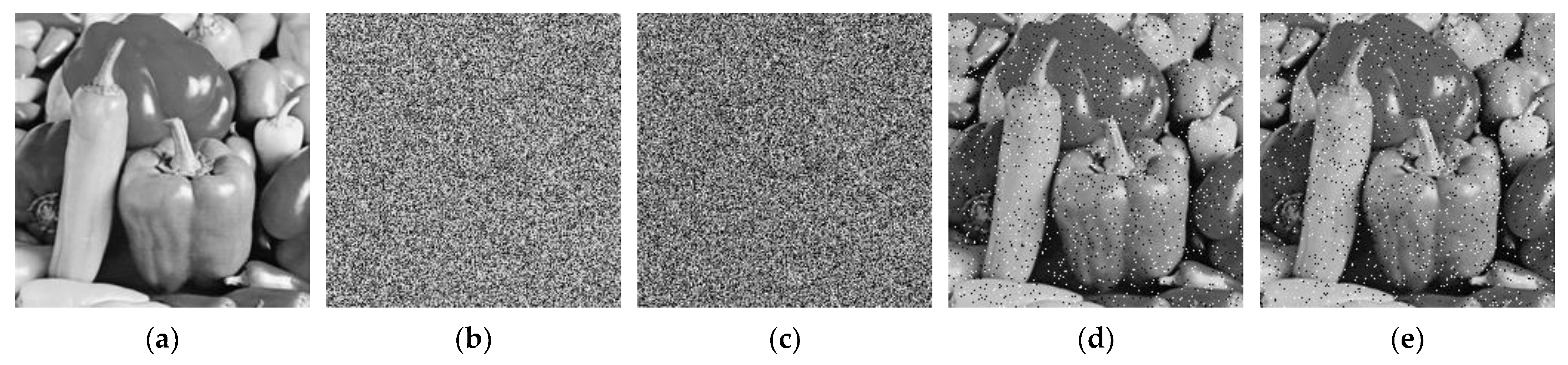

Sometimes, it is necessary to add noise to signals or images to simulate the possible impacts that information may undergo during real-world communication. Here, we simulate such a scenario: Alice’s company has a series of sensitive images that need to be tested by adding noise. To ensure fairness, the operation of adding noise must be carried out by a third-party company: Bob’s company. However, since Alice’s images need to be kept confidential, the approach of adding noise in the ciphertext state is considered. This aims to make the decrypted result equivalent to the effect of adding noise to the plaintext.

The abovementioned scenario can be easily achieved by using the pixel scrambling algorithm. Here, a pseudo random matrix is again employed to perform pixel scrambling on the original image, Figure 11a; the resulting image is shown in Figure 11b. Subsequently, salt-and-pepper noise is added to Figure 11b, and the outcome is presented in Figure 11c. After decrypting the image in Figure 11c, the obtained result is shown in Figure 11d.

It can be seen from observing the images that the noise has been successfully added. For the purpose of comparison, Figure 11e is the result of directly adding the same noise to the plaintext image Figure 11a. Through a careful comparison between Figure 11d and Figure 11e, it is found that the positions of the noise are different. This is because Figure 11d is the image obtained after decryption. The noise initially added to the ciphertext has its position rearranged after decryption. However, the noise itself is characterized by randomness, and its essential properties have not been altered by the encryption/decryption process.

This indicates that the advantages of pixel scrambling algorithms are as follows. After adding noise (such as salt-and-pepper noise) to the ciphertext in the encrypted state, although the positions of the noise are rearranged during decryption, the core characteristics of the noise, such as randomness and distribution density, remain unchanged. Statistically, it is consistent with adding noise to the plaintext. Moreover, it allows a third party to perform the noise-adding operation without obtaining the plaintext, ensuring privacy and test fairness. It breaks through the traditional limitation of “noise adding requires decryption”, avoiding privacy leakage caused by third parties obtaining the plaintext. At the same time, it circumvents the problems of data expansion and increased difficulty in subsequent processing that occur in mainstream algorithms after the addition of noise. The size of the ciphertext after noise addition is the same as that of the plaintext, reducing transmission and processing costs, and making it suitable for scenarios such as signal simulation and anti-interference testing.
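The experiment above can be reproduced in outline with the following NumPy sketch. It is an illustration under stated assumptions rather than the code behind Figure 11: the helper names, the 5% noise density, the seeds, and the random test image are all hypothetical. The test image is restricted to values 1 to 254 so that every salt (255) or pepper (0) pixel is guaranteed to differ from the original.

```python
import numpy as np

def scramble(img, key):
    """Encrypt by permuting pixel positions."""
    return img.ravel()[key].reshape(img.shape)

def unscramble(enc, key):
    """Decrypt by restoring the original pixel positions."""
    flat = np.empty(enc.size, dtype=enc.dtype)
    flat[key] = enc.ravel()
    return flat.reshape(enc.shape)

def add_salt_pepper(img, density, rng):
    """Set roughly a `density` fraction of pixels to 255 (salt) or 0 (pepper)."""
    noisy = img.copy()
    mask = rng.random(img.shape) < density   # which pixels receive noise
    salt = rng.random(img.shape) < 0.5       # salt or pepper for each of them
    noisy[mask & salt] = 255
    noisy[mask & ~salt] = 0
    return noisy, mask

rng = np.random.default_rng(1)
image = rng.integers(1, 255, size=(128, 128), dtype=np.uint8)  # values 1..254
key = np.random.default_rng(7).permutation(image.size)          # Alice's key

cipher = scramble(image, key)                             # Alice encrypts
noisy_cipher, mask = add_salt_pepper(cipher, 0.05, rng)   # Bob adds noise blindly
decrypted = unscramble(noisy_cipher, key)                 # Alice decrypts

changed = decrypted != image
print(noisy_cipher.shape == image.shape)                   # no data expansion
print(changed.sum() == mask.sum())                         # same number of noisy pixels
print(np.array_equal(changed, unscramble(mask, key)))      # only their positions moved
```

Decryption relocates each noisy pixel to a different position, but the count of corrupted pixels, and hence the noise density, is unchanged, which matches the statistical equivalence described above.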

4.5. Advantages and Limitations of Pixel Scrambling Algorithm in Homomorphic Encryption

As a lightweight encryption scheme tailored to the characteristics of image pixels, the pixel scrambling algorithm presents distinct advantages in integrating homomorphic encryption with image processing, while also exhibiting inherent technical boundaries. The characteristic disparities between this algorithm and mainstream fully homomorphic encryption algorithms further underscore the existing application contradictions of current homomorphic encryption technology in the field of image processing.

In terms of advantages, firstly, the algorithm offers notable convenience in security implementation and key management. Although pixel scrambling merely fulfills the “confusion” requirement in Shannon’s secrecy theory (without achieving “diffusion”), within the homomorphic encryption scenarios emphasized in this study, both encryption and decryption processes are executed by the data sender, whereas the receiver is solely responsible for ciphertext processing—thus obviating the need to address key distribution challenges. When integrated with a “one-time pad” mechanism (e.g., employing a new pseudo random index matrix for each encryption iteration), the algorithm can readily achieve ideal system confidentiality. This renders it particularly suitable for scenarios with non-critical confidentiality demands, such as image transmission on social platforms, where simple operations such as row/column permutation suffice to meet basic privacy protection requirements. Secondly, the algorithm demonstrates excellent hardware adaptability and image quality retention. In comparison to symmetric encryption algorithms (e.g., AES, SM4), pixel scrambling primarily involves coordinate mapping and pixel relocation, which imposes minimal requirements on hardware computing capabilities. Consequently, it can be directly deployed in embedded devices or real-time scenarios (e.g., video stream encryption). Furthermore, since the algorithm only modifies pixel positions without altering the original pixel values, the pristine image can be fully reconstructed provided that permutation rules (e.g., encryption keys) are preserved. This feature makes it ideally suited for scenarios demanding stringent image quality, such as medical imaging, effectively preventing the loss of image details post-decryption.
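As a hedged illustration of the per-encryption key idea and of lossless reconstruction, the sketch below draws a fresh permutation for every encryption and checks that decryption restores the image exactly. The names `encrypt_once` and `decrypt` are hypothetical, and NumPy's default generator is used only as a convenient stand-in for the pseudo random source; it is not a cryptographically secure generator and is not claimed to be the one used in this study.

```python
import numpy as np

def encrypt_once(img):
    """Scramble with a fresh key seeded from OS entropy; return (cipher, key)."""
    key = np.random.default_rng().permutation(img.size)
    return img.ravel()[key].reshape(img.shape), key

def decrypt(enc, key):
    """Invert the permutation, restoring every pixel to its original position."""
    flat = np.empty(enc.size, dtype=enc.dtype)
    flat[key] = enc.ravel()
    return flat.reshape(enc.shape)

# Hypothetical 16x16 test image containing each 8-bit value once.
image = np.arange(256, dtype=np.uint8).reshape(16, 16)

cipher1, key1 = encrypt_once(image)   # first transmission
cipher2, key2 = encrypt_once(image)   # second transmission uses a new key

# Exact reconstruction as long as the matching key is kept by the sender.
print(np.array_equal(decrypt(cipher1, key1), image))
print(np.array_equal(decrypt(cipher2, key2), image))

# Pixel values are untouched: both images hold the same multiset of values.
print(np.array_equal(np.sort(cipher1, axis=None), np.sort(image, axis=None)))
```

Because only the sender ever holds `key1` and `key2`, nothing has to be distributed to the party that processes the ciphertext, which is the key-management convenience noted above.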

Regarding limitations, the pixel scrambling algorithm is inherently a form of partial homomorphic encryption. It supports only a limited range of operations, including addition, rotation at specific angles, and brightness adjustment, and is incompatible with complex processing that relies on local pixel correlation, such as image filtering and edge detection, which clearly constrains its application scope. In contrast, current mainstream fully homomorphic encryption algorithms (e.g., CKKS and BFV, designed based on hard mathematical problems) can provide general-purpose computation in the ciphertext domain together with rigorous security guarantees, positioning them as the “ultimate goal” in cryptography. Nevertheless, these fully homomorphic encryption algorithms suffer from high computational overhead and data expansion of decrypted results, posing challenges to balancing security and practicality in real-world applications.
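The boundary between supported and unsupported operations can be made concrete with a small sketch, again under assumptions of our own: a 3×3 mean filter is taken as a representative neighborhood operation, the gradient test images and the seed are arbitrary, and the helper names are hypothetical. Pixel-wise addition commutes with scrambling, whereas the filter does not, because scrambling destroys the spatial neighborhoods the filter depends on.

```python
import numpy as np

def scramble(img, key):
    """Encrypt by permuting pixel positions."""
    return img.ravel()[key].reshape(img.shape)

def unscramble(enc, key):
    """Decrypt by restoring the original pixel positions."""
    flat = np.empty(enc.size, dtype=enc.dtype)
    flat[key] = enc.ravel()
    return flat.reshape(enc.shape)

def mean_filter3(img):
    """3x3 mean filter with edge replication (a neighborhood operation)."""
    padded = np.pad(img.astype(np.float64), 1, mode="edge")
    h, w = img.shape
    acc = sum(padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3))
    return acc / 9.0

# Two smooth, hypothetical test images (diagonal gradients).
a = np.add.outer(np.arange(32), np.arange(32)).astype(np.float64) * 4.0
b = a[::-1, :].copy()
key = np.random.default_rng(3).permutation(a.size)

# Pixel-wise addition: processing the ciphertexts matches processing the plaintexts.
sum_via_cipher = unscramble(scramble(a, key) + scramble(b, key), key)
print(np.array_equal(sum_via_cipher, a + b))

# Neighborhood filtering: the two paths disagree.
filt_via_cipher = unscramble(mean_filter3(scramble(a, key)), key)
filt_via_plain = mean_filter3(a)
print(np.allclose(filt_via_cipher, filt_via_plain))
```

In the filtered case each output pixel averages nine pixels that were neighbors only in the scrambled image, so after decryption the result bears no relation to a smoothed version of the original.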

In summary, the diversity of image processing algorithms imposes dual requirements on homomorphic encryption technology: it must not only cover a broader spectrum of operation types, but also provide customized solutions aligned with the mathematical properties, performance requirements, and data structures of different algorithms. This contradiction cannot be resolved through a single technological breakthrough in the short term and necessitates collaborative optimization across multiple dimensions. Specifically, at the level of encryption algorithm design, a hierarchical homomorphic encryption architecture can be explored, wherein pixel scrambling algorithms handle lightweight operations while fully homomorphic encryption algorithms manage complex tasks; at the level of algorithm reconstruction, ciphertext-friendly operators need to be developed to reduce the reliance of nonlinear operations on encrypted data; and at the level of hardware acceleration, dedicated ASIC chips matching the characteristics of pixel scrambling or fully homomorphic encryption can be engineered to enhance computational efficiency. These measures are expected to ultimately advance the practical application of homomorphic encryption technology in the field of image processing.