Abstract

Contemporary machine learning (ML) systems excel at recognising and classifying images with remarkable accuracy. However, like many computer software systems, they can fail by generating confusing or erroneous outputs or by deferring to human operators to interpret the results and make final decisions. In this paper, we employ the recently proposed quantum tunnelling neural networks (QT-NNs) inspired by human brain processes alongside quantum cognition theory to classify image datasets while emulating human perception and judgment. Our findings suggest that the QT-NN model has the potential to replicate human-like decision-making. We also reveal that the QT-NN model can be trained up to 50 times faster than its classical counterpart.

1. Introduction

1.1. Motivation and Literature Review

Uncertainty refers to the absence of complete knowledge about the present state or the ability to accurately predict future outcomes [1,2,3]. As a complex, nonlinear, and often chaotic system [4], nature presents challenges when we attempt to anticipate its behaviour. Uncertainty also limits our understanding of human behaviour and decision-making patterns [1,2], thereby fuelling anxiety [3] and contributing to a lack of confidence in critical situations where accurate predictions are essential [5]. This makes the study of uncertainty especially pertinent to fields such as machine learning (ML) and artificial intelligence (AI) [6,7].

Studies of uncertainty in AI and ML systems often draw on Shannon entropy (SE) [6,8,9], a fundamental concept in information theory that quantifies the uncertainty within a probability distribution [10]. SE provides a specific mathematical framework for assessing unpredictability and information content associated with a random variable within an ML model, also establishing a link between natural intelligence and AI [11]. This figure-of-merit is important for understanding not only the internal behaviour of the model, but also its confidence in making specific predictions [6,9]. For instance, SE can be applied to track changes in neural network weight distributions during training, where increased entropy may reflect heightened variability in weights as the model encounters complex or ambiguous data [6,12] (see Figure 1 for an example from real life). Entropy also enables distinguishing between confident and uncertain predictions in output distributions, where lower SE values often correspond to a higher confidence of the model in its classification outcomes [9]. Additionally, SE has been useful in assessing uncertainty for probabilistic layers in neural networks and evaluating robustness in models exposed to noisy data [6].

Figure 1.

Uncertainty in detecting fresh produce items at a supermarket self-checkout equipped with a machine vision system. (Left): The system has analysed a transparent plastic bag containing truss tomatoes and identified two possible categories, namely, truss tomato and gourmet tomato, leaving the final selection to the customer. (Right): In another test with a bag of Amorette mandarins, the system has suggested three potential options: Delite mandarin, Amorette mandarin, and Navel orange. Similar results are observed with other visually ambiguous items.

In traditional ML models based on artificial neural networks [13,14], the weights of network connections are derived from minimal information contained within the dataset [15]. However, such an optimisation often leads to poor model performance and limited generalisation capabilities [7,16,17]. As a result, these models can lack accuracy, and also frequently exhibit overconfidence in their predictions [18,19]. This poses a significant risk in critical applications where failures could have severe consequences, such as self-driving vehicles [20], medical diagnosis [18], and financial modelling [21].

Several approaches have therefore been proposed to address this risk [22,23], including traditionally designed confidence-aware deep neural networks (DNNs) [24,25,26,27,28], Bayesian neural networks (BNNs) [7,9,17,29], and various classes of quantum neural networks (QNNs) [30,31,32,33,34,35,36,37]. These models provide robust frameworks for developing uncertainty-aware neural networks, thereby enhancing the reliability and safety of AI systems in high-stakes applications. In particular, ref. [7] surveyed methods for analysing uncertainty in neural network models, while [9] discussed a model that evaluates uncertainty in the context of quantum contextuality. Additionally, refs. [17,29] outlined the foundations of BNNs and discussed their implementation in hardware, respectively. In the domain of QNNs, ref. [30] discussed the quantum generalisation of feedforward neural network models, while [31,33,35] introduced different approaches to quantum-inspired deep learning [32,36]. In [34,37], the authors provided a systematic review of the state-of-the-art and outlined hardware implementations of QNNs, respectively.

The Bayesian approach in statistics contrasts with the frequentist perspective, particularly in how it handles uncertainty and hypothesis testing [38]. Its application in ML, especially in deep learning systems, offers several advantages over traditional methods [17]. These include improved calibration and uncertainty quantification, the ability to distinguish between epistemic and aleatoric uncertainty [6,9], and increased integration of prior knowledge into models [17]. Architecturally, BNNs are typically categorised as stochastic models, meaning that uncertainty is represented either through probability distributions over the activations or over the weights of the network [17].

In QNNs, the concept of weights and activation functions takes on a distinctive interpretation compared to classical neural networks [36,37,39]. In particular, their weights are typically represented as unitary transformations that evolve as quantum states, and are governed by quantum bits (qubits) [40]. Unlike classical weights, which scale and adjust input values linearly or nonlinearly [14], quantum weights manipulate complex probability amplitudes of qubits, leveraging superposition and entanglement to achieve richer representations of data [39,41,42].

The activation function, another key component in traditional neural networks [13], presents unique challenges in the quantum realm due to the linear nature of quantum mechanics [43]. Indeed, nonlinear activation functions such as the rectified linear unit (ReLU) function, which are crucial for capturing complex patterns in classical systems [13], are not directly implementable in quantum circuits [43]. However, a number of strategies have been devised to simulate nonlinear processes through measurement and interference processes [37,44,45]. These quantum-inspired activation mechanisms enable QNNs to handle complex datasets and perform tasks similarly to their classical counterparts while retaining the extra functionality brought by quantum parallelism and entanglement [37,41].

The principles of neuromorphic computing can also be applied to build QNNs. Neuromorphic computers mimic the operational dynamics of a biological brain [46,47,48]. Various classical neuromorphic neural network models have been developed [49,50]. Rather than using conventional Boolean logic, these process information through the nonlinear dynamic properties of physical systems [47,48].

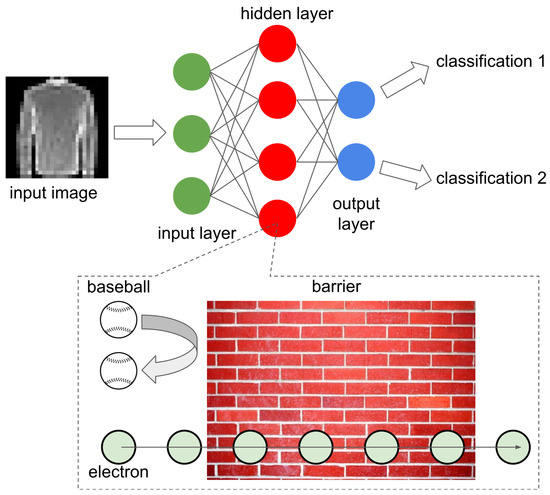

Recently, a novel neuromorphic QNN architecture was introduced in [12] utilising the physical effect of quantum tunnelling (QT), which describes the transmission of particles through a high potential barrier [51,52]. In classical mechanics, a baseball with energy $E < V_0$, where $V_0$ is the height of the barrier, cannot penetrate the barrier (see Figure 2, where the barrier is depicted as a brick wall). However, an electron, which is a quantum particle behaving as a matter wave, has a non-zero probability of penetrating the barrier and continuing its motion on the other side. Similarly, for $E > V_0$, the electron may still be reflected from the barrier with a non-zero probability.

Figure 2.

Schematic representation of the QT-NN architecture. The inset illustrates the effect of quantum tunnelling employed as an activation function of the network.

Consequently, the neural network can be conceptualised as an electronic circuit, with the connection weights corresponding to the energies of electrons traversing the connections of the circuit. Importantly, these weights are updated according to the principles of quantum mechanics. In this framework, neurons do not require a specific accumulation of weight for activation, as they instead function collectively in a probabilistic manner. The so-designed operating principle introduces additional degrees of freedom into the network, enhancing its flexibility and potential for processing of complex data [12].

The quantum tunnelling neural network (QT-NN) model incorporates certain characteristics of BNNs and quantum computing-based QNNs. While the QT-NN does not utilise qubits, the application of the QT effect introduces additional degrees of freedom during network training, offering advantages akin to those provided by quantum parallelism and entanglement. At the same time, akin to BNNs [29,50], the QT-NN is a stochastic model that captures uncertainty through the probabilistic nature of its activation functions [12] as well as via the injection of white noise and its application for the initialisation of connection weights [53].

However, the QT-NN model differs from competing approaches in that it also incorporates the fundamental principles of quantum cognition theory (QCT) [1,54,55,56]. By integrating principles from quantum mechanics with cognitive psychology and decision-making theory, quantum cognition models offer a revolutionary perspective on understanding human decision-making and cognitive processes [1,55]. Unlike classical models, which often rely on deterministic frameworks [57], QCT postulates that human behaviour and perception of the world are inherently probabilistic [56]. For instance, QCT employs quantum superposition to explain how individuals can hold multiple, often contradictory, beliefs and percepts simultaneously until a decision is made [1]. This approach not only enhances our understanding of cognitive phenomena such as optical illusions, biases, and uncertainty in judgment [1,12,53,58,59], but also offers potential applications in the field of AI, where algorithms inspired by quantum cognition can improve decision-making processes in complex environments.

The QT-NN model is based on the mathematical solution of the Schrödinger equation [12]. This equation is also pivotal for QCT due to its context-dependent solutions that provide insights into the complex patterns of human behaviour and perception [1,58]. Importantly, the effect of QT has been naturally integrated into the fundamental framework of QCT [53,60], facilitating neural network models capable of capturing the intricate features of human behaviour. Furthermore, a strong connection has been established between QCT and models [61,62,63] that attempt to explain human consciousness and brain function from the perspective of quantum information theory [64]. Consequently, the QT-NN serves as a powerful tool that leverages quantum physics, quantum information, psychology, neuroscience, and decision-making, enabling the modelling of human choices with uncertainty in a unique way that is not available with other models.

1.2. Objectives and Outline

The objective of this paper is to investigate the potential of the QT-NN model in mimicking human-like perception and judgment. Drawing upon QCT, we have the following aims:

- To explore whether the QT-NN can replicate essential cognitive processes inherent in human decision-making, including ambiguity handling and contextual evaluation.

- To examine whether the QT-NN can outperform conventional ML models in classifying image datasets, while providing evidence of enhanced flexibility of the quantum approach and its ability to adapt to complex data patterns.

In completing these tasks, we chose the Fashion MNIST dataset as a testing benchmark due to its real-world relevance in classifying clothing items [65]. Generally, images are inherently complex, containing rich high-dimensional data that challenge the capacity of any ML model to extract patterns, features, and relationships [66]. Therefore, testing with such data is designed to demonstrate the ability of the QT-NN model to generalise and process diverse inputs effectively. Moreover, images are readily interpretable by humans and offer intuitive and widely understood figures-of-merit, a fact that is particularly important to this paper.

The remainder of this paper is structured as follows. We begin by introducing the theoretical foundations of the QT-NN model and its connection to QCT. Then, we present computational results demonstrating the advantages of the QT-NN, particularly its ability to provide human-like classification accuracy and flexibility. The following section discusses the implications of these findings, focusing on how the QT-NN model could enhance decision-making in critical real-world scenarios. Overall, by simulating human-like judgment, the QT-NN model aims to reduce reliance on human operators and improve decision-making processes in situations involving uncertainty and complexity.

2. Methodology

2.1. Quantum-Tunnelling Neural Network

In this section, we introduce the image recognition confidence model used throughout the remainder of the paper. The computational task of recognising and classifying images is a well-established challenge in the field of classical [14] and quantum ML [42]. We use a generic neural network algorithm [67] as a reference framework to highlight the essential algorithmic features and advantages of our proposed QT-NN model.

For the purposes of our study, we assume that all input images used for both training and testing are greyscale with dimensions of 28 × 28 pixels, aligning with the format of the MNIST and Fashion MNIST datasets discussed below. Hence, the QT-NN architecture includes an input layer with 784 nodes (one per pixel), a hidden layer, and a final output layer with 10 nodes for classification (one per class). Such a configuration has been shown to classify MNIST images with an error rate of less than 2% [68].

The weights between the nodes are generated using a pseudorandom number generator with a uniform distribution (for a relevant discussion, see, e.g., [12,53]) and are updated during the training process using a backpropagation algorithm [14]. The activation function for the nodes in the hidden layers is defined using the algebraic expressions for the transmission coefficient T of an electron penetrating a potential barrier. These expressions are well-established in quantum mechanics, with their final ML-adopted algebraic forms detailed in the prior relevant publication [12].
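To make the role of the transmission coefficient concrete, the following sketch evaluates the standard textbook expression for a particle of energy E meeting a rectangular barrier of height V0 and width a. It is an illustration only: the function name `transmission`, the dimensionless units (hbar = m = 1), and the barrier parameters are assumptions made here, and the ML-adapted forms actually used by the QT-NN are those detailed in [12].

```python
import numpy as np


def transmission(E, V0=1.0, a=1.0, hbar=1.0, m=1.0):
    """Textbook transmission coefficient T(E) for a rectangular barrier.

    Assumes E > 0. Units are dimensionless by default (hbar = m = 1).
    """
    E = np.atleast_1d(np.asarray(E, dtype=float))
    T = np.empty_like(E)

    below = E < V0   # tunnelling regime
    above = E > V0   # over-barrier regime (partial reflection still occurs)
    equal = E == V0  # limiting case between the two regimes

    kappa = np.sqrt(2.0 * m * (V0 - E[below])) / hbar
    T[below] = 1.0 / (1.0 + V0**2 * np.sinh(kappa * a) ** 2
                      / (4.0 * E[below] * (V0 - E[below])))

    k = np.sqrt(2.0 * m * (E[above] - V0)) / hbar
    T[above] = 1.0 / (1.0 + V0**2 * np.sin(k * a) ** 2
                      / (4.0 * E[above] * (E[above] - V0)))

    T[equal] = 1.0 / (1.0 + m * a**2 * V0 / (2.0 * hbar**2))
    return T


energies = np.array([0.25, 0.5, 1.0, 2.0])
print(transmission(energies))
```

Unlike a hard threshold, this function is non-zero below the barrier and stays below one for most energies above it, which is the qualitative behaviour the QT activation relies on.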

The output nodes of QT-NN are governed by the Softmax function [14]:

$$y_i = \varphi(v_i) = \frac{e^{v_i}}{\sum_{k=1}^{M} e^{v_k}},$$

where $v_i$ is the weighted sum of input signals to the $i$th output node and $M$ is the number of the output nodes.
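As a minimal sketch of the Softmax output stage, the snippet below maps a vector of weighted sums to output values that sum to one. The helper name `softmax` and the example inputs are hypothetical; subtracting the maximum before exponentiation is a common numerical safeguard that leaves the result unchanged.

```python
import numpy as np


def softmax(v):
    """Softmax over the last axis: exp(v_i) / sum_k exp(v_k)."""
    v = np.asarray(v, dtype=float)
    shifted = v - np.max(v, axis=-1, keepdims=True)  # avoids overflow, result unchanged
    exp_v = np.exp(shifted)
    return exp_v / np.sum(exp_v, axis=-1, keepdims=True)


v = np.array([2.0, 1.0, 0.1])  # hypothetical weighted sums for M = 3 output nodes
y = softmax(v)
print(y, y.sum())
```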

The network is trained and utilised as follows. First, we construct the output nodes that correspond to the correct classifications for the training datasets. The weights of the neural network are initialised randomly within the range from −1 to 1. After inputting the data $x$ and the corresponding training targets $d$, we compute the error between the output of the network $y$ and the target as $e = d - y$.

Next, we propagate the error backward through the network, computing the respective parameters $\delta$ for each hidden node. This is done using the equations $e^{(n)} = W^{\mathsf{T}} \delta$ and $\delta^{(n)} = \varphi'(v^{(n)})\, e^{(n)}$, where $n$ denotes the layer number, $\varphi'$ is the derivative of the activation function evaluated at the weighted sums $v^{(n)}$ of that layer, and $W^{\mathsf{T}}$ is the transpose of the weight matrix corresponding to that layer. This backpropagation process is repeated through each hidden layer until it reaches the first one.

Finally, we update the weights using the learning rule $w_{ij}^{(n)} \leftarrow w_{ij}^{(n)} + \Delta w_{ij}^{(n)}$, where $w_{ij}^{(n)}$ are the weights between an output node $i$ and input node $j$ of layer $n$, with $\Delta w_{ij}^{(n)} = \alpha\, \delta_i^{(n)} x_j^{(n)}$ (here, $\alpha$ is the learning rate and $x_j^{(n)}$ is the signal entering the connection). These steps are applied sequentially to all training data points, iteratively refining the weights to minimise the error across the dataset.

The above-outlined algorithm can be readily adapted to a classical framework by replacing the QT activation function with a standard ReLU activation function [14]. Relevant information and the source code of the QT-NN algorithms can be found in [12,53].
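The training steps described above can be sketched for the classical (ReLU) variant as follows. This is not the source code of [12,53]: the toy data, the hidden-layer size, the learning rate `alpha`, and the choice of setting the output delta equal to the error (the usual result for a Softmax output with a cross-entropy loss) are all assumptions made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)


def softmax(v):
    e = np.exp(v - np.max(v))
    return e / e.sum()


def relu(v):
    return np.maximum(v, 0.0)


# Toy data (hypothetical): three 4-component inputs, one class each.
X = np.array([[1.0, 0.0, 0.0, 1.0],
              [0.0, 1.0, 0.0, 1.0],
              [0.0, 0.0, 1.0, 1.0]])
D = np.eye(3)  # one-hot training targets

n_hidden, alpha = 8, 0.1                    # assumed hidden size and learning rate
W1 = rng.uniform(-1.0, 1.0, (n_hidden, 4))  # input -> hidden, initialised in [-1, 1]
W2 = rng.uniform(-1.0, 1.0, (3, n_hidden))  # hidden -> output


def loss(W1, W2):
    """Mean cross-entropy over the toy dataset."""
    return -np.mean([np.log(softmax(W2 @ relu(W1 @ x))[np.argmax(d)])
                     for x, d in zip(X, D)])


initial_loss = loss(W1, W2)
for epoch in range(200):
    for x, d in zip(X, D):
        v1 = W1 @ x             # weighted sums at the hidden layer
        y1 = relu(v1)           # hidden activations
        y = softmax(W2 @ y1)    # network output
        e = d - y               # output error
        delta = e               # output delta (Softmax with cross-entropy, assumed)
        e1 = W2.T @ delta       # error propagated back to the hidden layer
        delta1 = (v1 > 0) * e1  # ReLU derivative times the propagated error
        W2 += alpha * np.outer(delta, y1)
        W1 += alpha * np.outer(delta1, x)
final_loss = loss(W1, W2)
print(initial_loss, final_loss)
```

Swapping `relu` and its derivative for a tunnelling-based activation would give the structure of the quantum variant, with the precise expressions taken from [12].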

2.2. Benchmarking Testbed

Now, we discuss the rationale behind our choice of test image dataset for this study. The MNIST [69] and Fashion MNIST [65] datasets are widely used benchmarks in the field of ML, particularly for image classification tasks [42,70]. The MNIST dataset consists of 70,000 greyscale images of handwritten digits (0–9) sized 28 × 28 pixels. Its simplicity and accessibility have made it a standard testbed for developing and evaluating models [14].

The Fashion MNIST dataset was introduced as a more challenging alternative [65]. It contains the same number of greyscale images and structure, but features ten classes of fashion items, including shirts, shoes, and bags, offering increased complexity over digits alone. These datasets serve as practical tools for training and testing ML models, providing insights into model accuracy, generalisation, and robustness. Consequently, in the following analysis, we utilise the Fashion MNIST dataset.

Classifying images from the Fashion MNIST dataset presents a more challenging task for the chosen neural network architecture. However, we selected this dataset deliberately in order to increase the likelihood of neural network errors, mimicking situations in which humans make mistakes. Fashion MNIST has also been used in recent third-party work on QNNs [42], enabling us to compare the outputs of our QT-NN model with the results produced by other quantum models described in the literature.

2.3. Statistical Analysis

Both outputs and weight distributions generated by the trained classical model and the QT-NN were formally compared using rigorous mathematical methods. Such techniques have often been used in the fields of classical and quantum ML [71]. Below, we provide the rationale behind our choice of the particular approaches.

The Kullback–Leibler divergence (KLD) [72] is a well-established method in the field of ML for comparing the weight distributions of neural network models as well as for conducting other types of statistical analysis [73,74]. This approach quantifies how much information is lost when approximating the initial weight distribution with the trained weight distribution. A high KLD indicates that the training process has significantly altered the weight distribution, while a low KLD suggests that the trained weights remain closer to the initial distribution. The results of KLD analysis are often visualised by comparing the probability density functions of the initial and trained weights, where the shaded area between them represents the divergence; the larger the shaded area, the greater the difference between the distributions.
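A minimal sketch of such a comparison is given below, assuming the two weight sets are first binned into histograms over a common range. The helper `kl_divergence`, the small constant `eps` that guards against empty bins, and the synthetic "trained" weights are hypothetical and serve only to show the computation.

```python
import numpy as np


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) in nats for two discrete distributions on the same bins."""
    p = np.asarray(p, dtype=float) + eps  # eps avoids log(0) for empty bins
    q = np.asarray(q, dtype=float) + eps
    p, q = p / p.sum(), q / q.sum()
    return float(np.sum(p * np.log(p / q)))


rng = np.random.default_rng(1)
initial = rng.uniform(-1.0, 1.0, 10_000)                    # stand-in for initial weights
trained = np.clip(rng.normal(0.0, 0.3, 10_000), -1.0, 1.0)  # hypothetical trained weights

bins = np.linspace(-1.0, 1.0, 41)
p_init, _ = np.histogram(initial, bins=bins)
p_trained, _ = np.histogram(trained, bins=bins)

print(kl_divergence(p_init, p_init))     # identical distributions
print(kl_divergence(p_init, p_trained))  # grows as the shapes differ
```

Note that the KLD is not symmetric, so swapping the two arguments generally gives a different value.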

Alternative approaches include the Jensen–Shannon divergence (JSD) [75,76]. A low JSD value indicates greater similarity between the two distributions, while higher values suggest more significant divergence. Unlike KLD, JSD is bounded between 0 and 1 (when base-2 logarithms are used), providing a more intuitive measure of similarity. A value closer to 0 indicates that the distributions are highly similar, while values approaching 1 reflect substantial divergence. This provides a clearer indication of the differences, which justifies the use of JSD for the analysis in the remainder of this paper.

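JSD is typically computed as follows [76]:

$$\mathrm{JSD}(p \,\|\, q) = \sqrt{\frac{1}{2}\sum_{i=1}^{D}\left[p_i \log\frac{p_i}{m_i} + q_i \log\frac{q_i}{m_i}\right]}, \qquad m_i = \frac{p_i + q_i}{2},$$

where p and q are the probability distributions and D is the dimension of the distributions. In this paper, it has been implemented using the jensenshannon procedure of the SciPy open-source Python library.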
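For orientation, a short usage sketch of the SciPy routine follows. The two distributions are hypothetical values chosen for illustration. Note that `scipy.spatial.distance.jensenshannon` returns the Jensen–Shannon distance (the square root of the divergence) and uses the natural logarithm unless `base` is given; passing `base=2` keeps the result between 0 and 1.

```python
import numpy as np
from scipy.spatial.distance import jensenshannon

# Hypothetical averaged outputs of two models over the same three classes.
p = np.array([0.90, 0.10, 0.00])
q = np.array([0.93, 0.07, 0.00])

# base=2 bounds the result in [0, 1]; SciPy's default is the natural logarithm.
print(jensenshannon(p, q, base=2))

# Limiting cases: identical and non-overlapping distributions.
same = jensenshannon(p, p, base=2)
disjoint = jensenshannon([1.0, 0.0], [0.0, 1.0], base=2)
print(same, disjoint)
```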

2.4. Model of Uncertainty

Shannon entropy (SE) [10] is widely recognised as a standard model of uncertainty in studies of AI and ML systems [6,8,9]. As a cornerstone of information theory, SE quantifies uncertainty within a probability distribution, providing insight into the variability of outcomes. SE is calculated as follows [9]:

$$H = -\sum_{i=1}^{n} p_i \log_2 p_i,$$

where $p_i$ represents the probability of occurrence of each possible outcome in a set of n outcomes. A logarithm of base 2 is used, providing the entropy values in bits. The negative sign ensures a non-negative entropy value, as probabilities range between 0 and 1 and their logarithms are therefore non-positive.

Essentially, SE reflects the uniformity of probability distributions. When the probabilities are distributed more evenly, the entropy is higher, indicating greater uncertainty about the outcome; conversely, if one outcome dominates the probability distribution, the entropy value is lower, indicating less uncertainty. This principle underpins many applications of SE in AI and ML, where it helps to assess model confidence and robustness by measuring the spread of predicted probabilities across possible outcomes [6,9,29].
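The behaviour described above can be checked with a few lines of code. The helper name `shannon_entropy` and the example distributions are illustrative assumptions; outcomes with zero probability are skipped, following the usual convention that they contribute nothing to the sum.

```python
import numpy as np


def shannon_entropy(p):
    """Shannon entropy in bits; terms with p_i = 0 contribute nothing."""
    p = np.asarray(p, dtype=float)
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


print(shannon_entropy([0.25, 0.25, 0.25, 0.25]))  # evenly spread: maximal for n = 4
print(shannon_entropy([1.0, 0.0, 0.0, 0.0]))      # one dominant outcome
print(shannon_entropy([0.932, 0.068]))            # an uneven two-outcome split
```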

3. Results

3.1. Comparison of the Outputs of QT-NN and the Classical Model

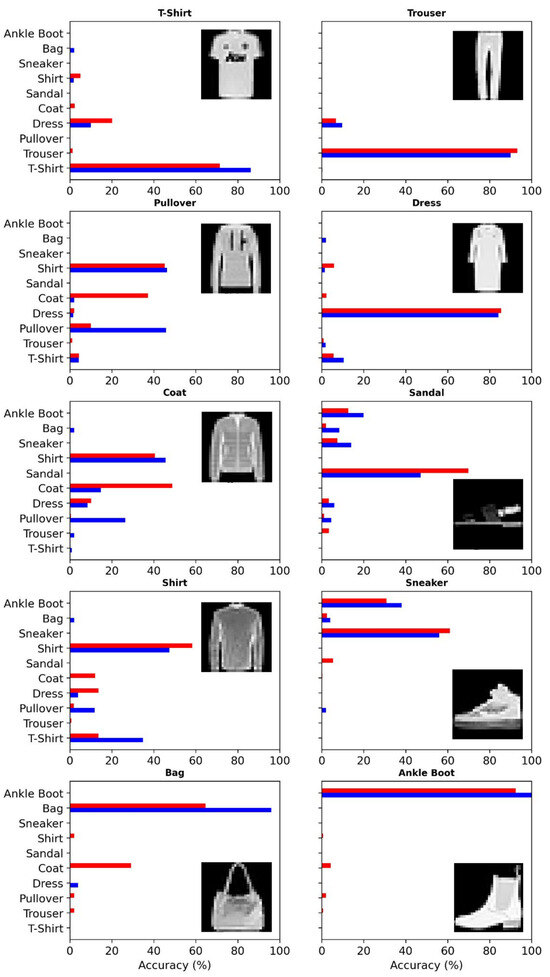

In Figure 3, we compare the outputs generated by the QT-NN and a classical neural network model trained on the Fashion MNIST dataset. To ensure a rigorous scientific comparison, both models were designed to have identical architectures, incorporating the same number of neurons, connections, and initial random weight distributions, and both used identical training and testing procedures. Specifically, both models were trained on 32 batches of training image sets, with 100 training epochs for each batch—an optimal training strategy that we previously identified—before classifying the same sequence of 50 test images. The respective outputs generated by the models were then averaged to produce the classification bar charts.

Figure 3.

Outputs generated by the QT-NN (red) and the classical neural network model (blue). The insets show the representative testing images for each classification category.

Figure 3 provides an alternative to the commonly used confusion matrix [77]. We found that its composition offers more detailed information, which proves particularly valuable when applying the statistical tools outlined earlier in the text. Specifically, the title of each panel in Figure 3 indicates the actual type of fashion item that each model was tasked to recognise and classify, while the bar height represents the averaged output that each model assigns to a given category, which for the true category corresponds to the classification accuracy. For example, when presented with 50 images of the ‘Trouser’ category, the QT-NN and classical models correctly classify the items as ‘Trouser’ with respective accuracies of 93.2% and 90%. Both models also suggest that these items could be ‘Dress’, with respective shares of 6.8% and 10%. As another example, while the classical model presented with the test images of the ‘Ankle Boot’ category correctly identifies this item with an accuracy of 100%, the QT-NN model identifies it with 92.4% accuracy, assigning the remaining smaller shares to ‘Coat’, ‘Pullover’, ‘Shirt’, and ‘Trouser’.

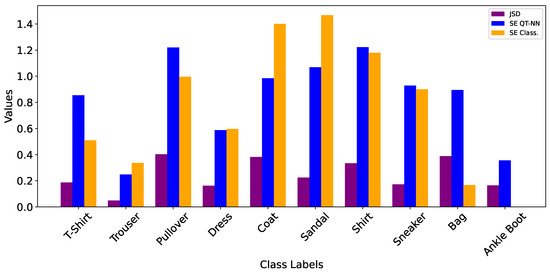

Analysis of the JSD and SE figures-of-merit (Figure 4) provides insights into the prediction similarities and uncertainty levels between the QT-NN and the classical model. A lower JSD score closer to 0 indicates high alignment between the probability distributions of the models, as seen in the ‘Trouser’ category (JSD = 0.049), where the two models produce nearly identical predictions. Conversely, a higher JSD score like that of the ‘Pullover’ category (JSD = 0.403) signals substantial divergence, implying the models interpret this category differently. SE values supplement this by highlighting uncertainty levels in predictions, where a higher entropy indicates more ambiguity. Notably, the QT-NN model shows greater uncertainty for ‘T-Shirt’ compared with the classical model (SE = 0.8542 for the QT-NN compared with SE = 0.5093 for the classical model). Additionally, categories such as ‘Coat’ and ‘Sandal’ yield higher SE values for the classical model, suggesting that the predictions of the classical model are less confident. However, ‘Bag’ has minimal entropy for the classical model (SE = 0.1672), implying high certainty, whereas the QT-NN shows moderate uncertainty (SE = 0.8943).

Figure 4.

JSD and SE figures-of-merit for the QT-NN and the classical model for each item category. Note that the classical SE is zero (to machine accuracy) for the ‘Ankle Boot’ category.

Thus, considering the overall ability of both the QT-NN and classical models to accurately classify fashion objects, albeit with varying degrees of accuracy and similar patterns of failure, it becomes evident that the quantum model yields results that are more aligned with human cognition and perception of the world. Furthermore, given that the QT-NN achieves faster training times compared to the classical model (see the discussion below), we assert that it demonstrates superior overall performance. Additionally, the QT-NN compares favourably with more conventional superposition-enhanced quantum neural networks [42], further underscoring its potential.

Indeed, the outputs generated by both models are interpretable based on common human reasoning. Recalling that the images presented in the insets of Figure 3 are representative examples of their respective categories and vary between batches, we observe certain pattern-related similarities among the categories of ‘Pullover’, ‘Coat’, and ‘Shirt’. Interestingly, images classified as ‘T-Shirt’ share some common features with ‘Dress’, while ‘Dress’ exhibits similarities with ‘Trouser’. We also observe that images of footwear are distinctly recognised by both models as separate from the other categories. Conversely, ‘Bag’ presents an intriguing test case, as its contours may closely resemble those of the ‘Coat’ category.

In Section 4.1, we present an idealised yet instructive physical model that elucidates why the QT-NN is inherently more adept at managing the ambiguity of input data. This model demonstrates how the QT-NN produces results with higher confidence, mimicking certain essential aspects of human cognitive processes.

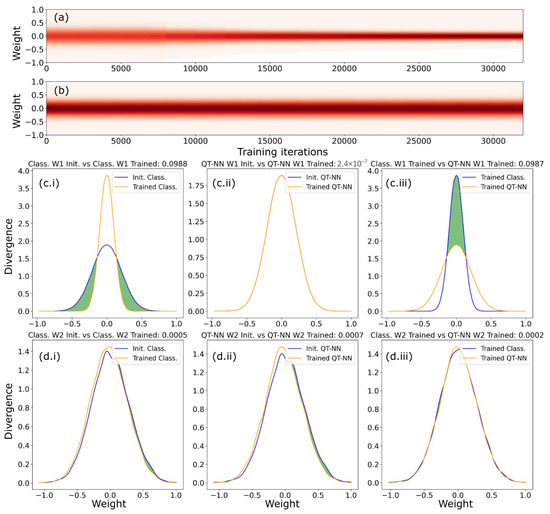

3.2. Trained Weight Distribution Comparison

In this section, we formally compare the weight distributions of the trained quantum and classical neural network models. We reveal that the QT-NN model can be trained up to 50 times faster than its classical counterpart.

In traditional neural network training, entropy can serve as a figure-of-merit that provides insights into the level of order or disorder in the connection weights of the network. From the physical perspective, high entropy typically indicates a high degree of randomness and minimal structure within the network, which corresponds to a state of low energy or minimal work invested in training. Without significant training, the weights would remain in this disordered high-entropy state. In contrast, effective training organises the weights into more structured distributions in which their values align with patterns that optimise input data processing [15,78,79,80,81,82].

However, the QT-NN model differs fundamentally in its training approach. Indeed, rather than adjusting weights to fixed values, it aims to effectively use the entire range of possible weights represented by probability distributions. This approach reduces the need for extensive training, as the QT-NN achieves optimal performance by incorporating the full spectrum of probable weights. Consequently, the training process becomes more efficient and resource-effective, leveraging probabilistic weights to adapt dynamically rather than converging to fixed values.

To demonstrate this, we treat the weight distribution matrices as two-dimensional random signals (essentially as white-noise images) and apply a fast Fourier transform to obtain their spectral information. For simplicity, in the following, we denote the weights between the input layer and the hidden layer as $W_1$, while $W_2$ denotes the weights between the hidden layer and the output layer. This approach enables us to analyse frequency components, revealing underlying patterns in the weight distributions that may not be apparent in the spatial domain. Then, using the histogram function from the NumPy open-source Python library, which computes the occurrences of input data that fall within each bin, we plot the weight distribution; this computation is performed continuously throughout the training process each time the neural network model is presented with new data. As a result, we obtain a plot showing the distribution of weights in the value range from −1 to 1 as a function of training iterations. The total number of training iterations is 32,000, corresponding to 32 batches of images trained over 100 epochs across 10 categories of fashion items.
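The spectral histogram step can be sketched as follows. The text does not specify which part of the spectrum is binned or how it is scaled, so the use of the real part, the normalisation to the range from −1 to 1, the hidden-layer size of 100, and the bin count are all assumptions made for this illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for an input-to-hidden weight matrix; the hidden size (100) is assumed.
W = rng.uniform(-1.0, 1.0, size=(100, 784))

# Treat the matrix as a 2D signal and take its spectrum.
spectrum = np.fft.fft2(W).real
spectrum /= np.max(np.abs(spectrum))  # assumed normalisation to the range [-1, 1]

# Occurrences per bin; repeating this after every weight update gives one
# column of a distribution-versus-iteration map.
counts, edges = np.histogram(spectrum, bins=50, range=(-1.0, 1.0))
print(counts.sum(), edges[0], edges[-1])
```

Because each spectral component is a sum over many independent weights, its histogram is bell-shaped and centred near zero even when the weights themselves are drawn uniformly.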

In Figure 5a,b, the weight distributions exhibit Gaussian profiles centred around zero, which is consistent with the central limit theorem [83]. At the start of training, the weight distributions for both the QT-NN and classical models are identical. However, focusing on the weights in Figure 5a, training causes the distribution profile of the classical model to narrow, indicating a reduction in the diversity of weight values. This trend is further illustrated in Figure 5c.i, which shows the JSD analysis results for the initial and trained weights of the classical model.

Figure 5.

(a,b) Distributions of weights between the input layer and the hidden layer (denoted as $W_1$ in the main text), plotted as a function of training iterations for the QT-NN model and the classical model (labelled as ‘Class.’), respectively. (c,d) Results of the JSD cross-comparison of the initial (labelled as ‘Init.’) and trained weight distributions $W_1$ (input to hidden) and $W_2$ (hidden to output). The shaded areas in the JSD plots quantify the divergence, with the numerical value presented above each panel. The roles of subpanels (i)–(iii) are detailed in the main text.

Importantly, the narrowing of the Gaussian profile does not occur in the QT-NN model, as shown in Figure 5b and Figure 5c.ii. Additionally, Figure 5c.iii provides a direct JSD comparison of the input-to-hidden weights $W_1$ between the trained classical and QT-NN models, further confirming this key difference in the training processes.

It should be emphasised that the observed value of JSD = 2.4 × in Figure 5c.ii is small but not zero, indicating that the training process of the QT-NN led to only a minor adjustment in the weights relative to the initial random weight distribution. This characteristic not only distinguishes the behaviour of the QT-NN from that of the classical model, but also suggests that a relatively small number of training epochs is required to achieve a satisfactory level of classification accuracy. Indeed, we found that the QT-NN can be trained up to 50 times faster than the classical model, underscoring the efficiency of quantum mechanics-based neural network models for solving classification problems. In particular, our results demonstrate that combining the QT effect with the additional degrees of freedom for data representation and processing facilitated by the probabilistic nature of quantum mechanics, along with a random initialisation of the weight distributions, enables more efficient training and exploitation of the model [84].

A similar analysis was conducted on the evolution of the weights for the connections between the hidden and output layers (see the corresponding panels (i)–(iii) of Figure 5d). Because both the QT-NN and the classical model employ the Softmax activation function for the output, the behaviour observed in Figure 5d is similar for both models, and is consequently of less relevance to the main discussion in this paper.

4. Discussion

4.1. Modelling of Human Judgement

To establish a connection between the QT-NN model analysed in this paper and the group of interrelated theories collectively known as QCT, we extend the illustration in Figure 2 by incorporating additional hidden layers into the artificial neural network (see Figure 6). This enhanced structure enables us to demonstrate that an idealised training process similar to the natural learning mechanisms of a biological brain (see, e.g., [85]) leads to the formation of robust neural connections that reflect how the neural network model organises and stores information [79,80].

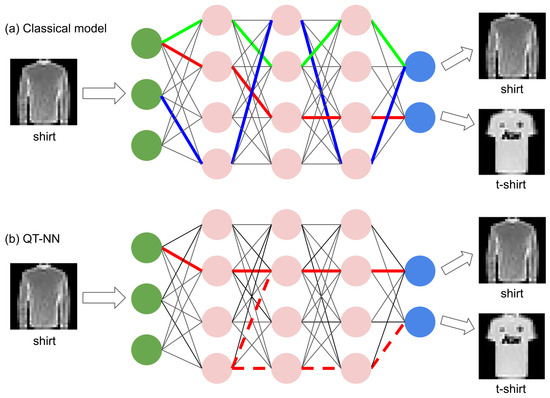

Figure 6.

Schematic illustration of the training process of (a) the classical model and (b) the QT-NN model, inspired by the discussion in [80]. The coloured lines illustrate the possible pathways of neural connection formation. Note that the additional hidden layers of neurons are included purely for the sake of illustrating more advanced neural connections; also, note that the neural connections of the QT-NN model, depicted by the solid and dashed lines in panel (b), are equally valid from the perspective of the algorithm and possess a probabilistic quantum nature.

Similar to a young child who needs to see numerous images of wild animals to distinguish them accurately, an artificial neural network must be exposed to many images of fashion items to achieve high classification accuracy. If the network is trained with only a limited number of shirt images, it can mistakenly develop an internal connection (illustrated by the red lines in Figure 6a) that causes it to classify the input as a t-shirt. However, through repeated training on the same category of inputs, the network forms a series of progressively more intricate connections (depicted by the green and blue lines in Figure 6a), enabling it to deliver correct classifications with high accuracy.

Continuing the analogy between the activation function of artificial neurons and a ball that must climb a brick wall to activate the neuron (Figure 2), we uncover a fundamental difference in the behaviour of the QT-NN model as compared to the classical model. In the classical model, the ball can only overcome the barrier (the wall) when it accumulates sufficient energy; translated to the connection weights within the network, this implies that the network must be exposed to the same category of input images multiple times. This repetition ensures that the sum of weights produces an equivalent energy value sufficient for the ball to surmount the barrier.
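The contrast between the two behaviours can be sketched numerically. The example below uses the textbook transmission coefficient for a particle of energy E incident on a rectangular barrier of height V0 (valid for 0 < E < V0) and compares it with a classical all-or-nothing threshold. It is an illustration of the ball-and-wall analogy only, not the activation function of the QT-NN model itself; the unit choice (mass and ħ set to 1), the barrier widths, and the function names are assumptions.

```python
import numpy as np


def classical_transmission(energy, v0):
    """Classical picture: the ball passes only if its energy exceeds the barrier."""
    return np.where(np.asarray(energy) > v0, 1.0, 0.0)


def tunnelling_transmission(energy, v0, width, mass=1.0, hbar=1.0):
    """Textbook transmission through a rectangular barrier for 0 < E < V0."""
    energy = np.asarray(energy, dtype=float)
    kappa = np.sqrt(2.0 * mass * (v0 - energy)) / hbar
    ratio = (v0**2 * np.sinh(kappa * width) ** 2) / (4.0 * energy * (v0 - energy))
    return 1.0 / (1.0 + ratio)


v0 = 1.0
energies = np.array([0.2, 0.5, 0.8])  # all below the barrier height

t_classical = classical_transmission(energies, v0)
t_thin = tunnelling_transmission(energies, v0, width=0.5)
t_thick = tunnelling_transmission(energies, v0, width=2.0)

for e, tc, t1, t2 in zip(energies, t_classical, t_thin, t_thick):
    print(f"E = {e:.1f}: classical = {tc:.0f}, thin barrier = {t1:.3f}, thick barrier = {t2:.3f}")
```

Below the barrier height the classical transmission is exactly zero, while the tunnelling probability is non-zero and decreases as the barrier becomes thicker.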

This strategy appears to work effectively when the network is tasked with classifying unambiguous images. However, the natural or artificially introduced ambiguity of the inputs significantly complicates the task, prompting neural network developers to explore algorithms that allow for multiple classifications aligned with expert human judgment [23].

Indeed, the approach based on the classical summation of weights has demonstrated certain limitations in modelling human perception of images that can have two or more possible interpretations [1,24]. A paradigmatic example of such images is the Necker cube, an optical illusion that alternates between two distinct three-dimensional perspectives depending on how the viewer interprets its edges and faces [86]. Alongside similar optical illusions, the phenomenon of the Necker cube illustrates the complexity of modelling perceptual ambiguity since human cognition switches between interpretations without requiring additional input or recalibration [53,60,86,87,88,89,90].

Subsequently, it has been suggested that human judgment regarding the perceived state of the Necker cube can be effectively modelled using the principles of quantum mechanics [1,53,60,91]. Specifically, it has been demonstrated that a general quantum oscillator model can plausibly simulate the switching of human perception between the two states of this optical illusion [1]. This approach provides a compelling framework for understanding the dynamic and probabilistic nature of human perceptual processes. Follow-up studies have demonstrated that incorporating a potential barrier into the quantum oscillator model allows for the capture of more intricate perceptual patterns, including extended periods of sustained perception of a single state of the cube [53,60]. This enhancement provides deeper insights into the stability and transitions of human perception in the face of ambiguous stimuli [12].

Thus, as schematically shown in Figure 6b, the formation of the training link to the t-shirt (denoted by the dashed lines) no longer represents an algorithmic mistake for the QT-NN model. Visually, a shirt resembles a t-shirt, with the primary distinction being the absence of long sleeves in the latter. A similar perceptual ambiguity can be established between the other Fashion MNIST objects, such as ‘Shirt’, ‘Coat’, and ‘Pullover’. This means that the QT-NN may form two possible pathways early in the training (denoted by the solid and dashed lines in Figure 6b) that are equally valid from the algorithmic point of view but have different probabilities to be triggered by the input image. The further training process primarily makes cosmetic refinements to these connections; in fact, the neural network can generate meaningful results as soon as its initial internal connections are established. This explains why the QT-NN model can be trained significantly faster than the classical model.

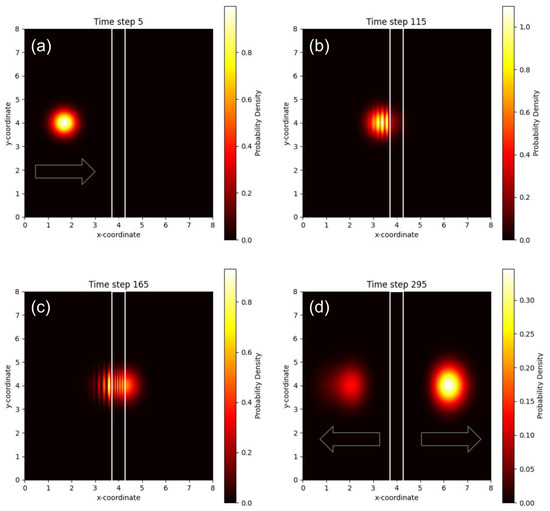

From the physical perspective, the process of tunnelling and the probabilistic action of the weight coefficients can be illustrated using a two-dimensional mathematical model in which the weights are represented as a Gaussian-shaped energy packet, which in turn represents a single electron that moves towards a potential barrier (Figure 7). The motion of the energy packet (the direction of which is indicated by the arrows in Figure 7) and its interaction with the barrier (indicated by the white rectangle in Figure 7) are governed by the Schrödinger equation, which is solved using a Crank–Nicolson method [92]; the same numerical experimental setup has been demonstrated to accurately model problems related to human cognition [93].

Figure 7.

(a–d) Instantaneous snapshots of an energy wave packet modelling the tunnelling of an electron through a potential barrier, depicted by a white rectangle. The false-colour scale of the images encodes the computed probability density values. Within the framework of the QT-NN model used in this paper, these values correspond to the connection weights of the neural network. The arrows indicate the direction of propagation of the wave packet. Note that in panel (d), the wave packet splits into two parts, a physical phenomenon that would eventually lead to the formation of the connections illustrated by the solid and dashed lines in Figure 6b.

Plotting the probability density in two-dimensional space, Figure 7 depicts the evolution of the energy packet through four snapshots taken at distinct instances of non-dimensionalised time. The peak amplitude of the initial packet (Figure 7a) corresponds to the sum of all weights associated with a single node in the neural network. The packet subsequently interacts with the barrier (Figure 7b,c), producing both a reflected signal and a transmitted signal (Figure 7d).
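The splitting of the packet into reflected and transmitted parts can be reproduced with a short numerical sketch. The example below is a one-dimensional simplification of the two-dimensional setup of Figure 7 and is not the code used to generate that figure: the grid size, time step, barrier height and width, packet parameters, and the units (ħ = m = 1) are all assumed values chosen for illustration. It applies the Crank–Nicolson scheme, (I + iΔtH/2)ψⁿ⁺¹ = (I − iΔtH/2)ψⁿ, with a finite-difference Hamiltonian H.

```python
import numpy as np

# Spatial grid and time step (assumed, non-dimensional units with hbar = m = 1).
n, length = 400, 200.0
x = np.linspace(0.0, length, n)
dx = x[1] - x[0]
dt = 0.1

# Gaussian wave packet moving to the right with mean wavenumber k0.
k0, x0, sigma = 1.0, 60.0, 8.0
psi = np.exp(-((x - x0) ** 2) / (4.0 * sigma**2) + 1j * k0 * x)
psi /= np.sqrt(np.sum(np.abs(psi) ** 2) * dx)

# Rectangular barrier slightly higher than the mean packet energy k0**2 / 2.
v0, b_left, b_right = 0.6, 100.0, 102.0
potential = np.where((x >= b_left) & (x <= b_right), v0, 0.0)

# Finite-difference Hamiltonian H = -(1/2) d^2/dx^2 + V(x); psi = 0 at the edges.
main = 1.0 / dx**2 + potential
off = -0.5 / dx**2 * np.ones(n - 1)
hamiltonian = np.diag(main) + np.diag(off, 1) + np.diag(off, -1)

# Crank-Nicolson propagator for one time step, computed once and reused.
identity = np.eye(n)
a_mat = identity + 0.5j * dt * hamiltonian
b_mat = identity - 0.5j * dt * hamiltonian
step = np.linalg.solve(a_mat, b_mat)

for _ in range(800):
    psi = step @ psi

density = np.abs(psi) ** 2
norm = np.sum(density) * dx
transmitted = np.sum(density[x > b_right]) * dx
reflected = np.sum(density[x < b_left]) * dx
print(f"norm = {norm:.6f}, transmitted = {transmitted:.3f}, reflected = {reflected:.3f}")
```

Because the Crank–Nicolson propagator is unitary for a Hermitian Hamiltonian, the total probability is conserved, so the reflected and transmitted fractions account for essentially all of the initial packet once it has left the barrier region.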

The algebraic expressions underlying the operation of the QT-NN model [12] encapsulate the same physical processes, but do not offer the visual interpretability presented in Figure 7. However, in both physical interpretations, the thickness of the barrier serves as a hyperparameter that can be adjusted to control the model’s confidence level in its assessment of fashion items. Moreover, the geometry of the barrier can be made more complex, for example by incorporating a double-slit structure [93]. This complexity enhances the physics of interaction between the energy packet and the barrier, enriching the significance of this setup in the context of human cognition and decision-making modelling [1,58,59]. Furthermore, it establishes a strong connection with competing QNN architectures that are being developed to enhance computational power and algorithmic advancements, particularly as tools for identifying patterns in data [81,84].

4.2. Practical Applications and Future Work

Our future research work aims to explore and evaluate various classes of neural networks, including the QT-NN model and more traditional quantum and classical approaches. Each of these models, whether quantum-based or traditional, has unique strengths and weaknesses depending on the context in which they are applied. Therefore, it is important to acknowledge that no single model, including the QT-NN approach investigated in this paper, is universally superior. Moreover, significant experimental evidence gathered in recent years suggests that many urgent real-life problems can most effectively be addressed through hybrid approaches combining the strengths of both classical and quantum models [94,95,96].

Thus, subsequent work could investigate in greater depth how different neural network models make predictions and assess confidence levels. For instance, entropy measures on prediction outputs could highlight cases where quantum or classical algorithms overestimate their certainty. In such scenarios, hybrid classical–quantum models might intervene, potentially combined with human operator input, to refine and validate the outcomes, thereby ensuring greater accuracy and reliability.
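As a minimal sketch of such an entropy-based check, the example below computes the Shannon entropy of Softmax outputs and flags predictions that are confident (low entropy) but wrong. The logits, labels, the threshold `max_entropy_fraction`, and the function names are hypothetical and serve only to illustrate the idea; they are not taken from the models studied in this paper.

```python
import numpy as np


def softmax(logits):
    """Numerically stable Softmax over the last axis."""
    z = logits - np.max(logits, axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def shannon_entropy(probs):
    """Shannon entropy (natural logarithm) of each row of probabilities."""
    p = np.clip(probs, 1e-12, 1.0)
    return -np.sum(p * np.log(p), axis=-1)


def flag_overconfident(probs, labels, max_entropy_fraction=0.2):
    """Mark predictions that have low normalised entropy but the wrong class."""
    n_classes = probs.shape[-1]
    h_norm = shannon_entropy(probs) / np.log(n_classes)  # scaled to [0, 1]
    predicted = probs.argmax(axis=-1)
    return (h_norm < max_entropy_fraction) & (predicted != labels)


# Hypothetical three-class outputs: confident and right, uncertain, confident and wrong.
logits = np.array([[8.0, 0.0, 0.0],
                   [1.0, 0.9, 0.8],
                   [0.0, 7.0, 0.0]])
labels = np.array([0, 0, 2])

probs = softmax(logits)
overconfident = flag_overconfident(probs, labels)
print(overconfident)
```

Only the third example is flagged: its output distribution has very low entropy, yet the predicted class disagrees with the label, which is the kind of case that could be passed to a second model or a human operator.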

One particular avenue for future work is designing and developing hybrid quantum–Bayesian neural networks (QBNNs) [97,98]. These models can integrate the uncertainty quantification capability of Bayesian neural networks (BNNs) with the computational advantages of the QT-NN. A proposed hybrid QBNN architecture might employ a QT layer for initial feature extraction, followed by processing these quantum-derived features using a BNN layer, and concluding with either a quantum or classical layer for output generation.

Moreover, these architectures could leverage quantum superposition and entanglement principles, enabling quantum layers to manipulate complex probability amplitudes. This approach could mimic human-like cognitive behaviours such as evaluating multiple possibilities simultaneously before reaching a decision [1,56,58,59]. Such hybridisation opens up pathways to more nuanced and flexible AI models that are capable of addressing complex real-world problems with improved reliability and decision-making capabilities.

In the fields of decision-making and QCT, superposition enables a model to consider multiple states at once, similar to how human cognition holds several options in mind before making a decision [1,55,56,58,59,91]. In a comparable way, the hybrid neural networks envisioned above should be able to mimic this behaviour by integrating new data and continuously refining decisions. Just as human subconscious processing helps to weigh options and select the best course of action, hybrid neural networks would cross-reference different models to reduce overconfidence and incorrect AI predictions.

To support this, future research could adopt standard metrics such as entropy and divergence in order to measure uncertainty levels, positioning these hybrid models as a risk mitigation strategy for unreliable AI/ML predictions. Nevertheless, in light of the fast advancement of new technologies, novel approaches remain essential; thus, this paper has introduced several novel theoretical methods grounded in the principles of physics and engineering.

4.3. Further Challenges and Opportunities

In his address to the UN Security Council, Professor Yann LeCun, Chief AI Scientist at Meta, emphasised that current AI systems fail to truly understand the real world, lack persistent memory, and are unable to effectively reason or plan [99]. Moreover, they cannot acquire new skills with the speed and efficiency of humans, or even of animals.

LeCun’s insights also suggest that simply combining vast amounts of data with high-performance computing does not necessarily equate to intelligence. Instead, genuine intelligence should stem from cognition and human-like behavioural capabilities, extending beyond the mere ability to process large datasets at high speed.

Consequently, many modern figures-of-merit applied to AI/ML models, such as scalability in handling significantly larger datasets, may become obsolete in light of the emerging requirement for models to exhibit human-like cognitive capabilities. Indeed, training modern models remains more of an art, much like numerical modelling [100], relying heavily on empirically acquired skills [101]. In fact, fundamental ML techniques such as gradient descent, learning schedules, batch and layer normalisation, and skip connections, all of which are integral to the functionality of large-scale deep learning models, were largely introduced based on intuition, and only later validated as being practically effective.

Nevertheless, we have established that incorporating the QT-NN formalism into a neural network model does not adversely affect its scalability. While further improvements in both the QT functions and the structure of the model remain desirable from a computational science perspective, the integration of QT-NN functionality does not alter the overall responsiveness of the model to the data.

The efficiency of neural network models can be enhanced by adopting neuromorphic computing principles that emulate the biological brain [46,47,48]. Neuromorphic models process information through the nonlinear dynamical properties of physical systems rather than conventional Boolean arithmetic [47,48]. Demonstrated implementations include energy-efficient and inexpensive chips suitable for applications in ML, AI, and robotics [49,50,102,103].

The QT effect has been exploited in semiconductor devices [104,105,106,107], spectroscopy [108,109] and microscopy [109,110], and is promising for neuromorphic computing as well. While QT has been indirectly exploited via the nonlinear dynamics of QT-based devices [111,112], its direct use offers further potential. A QT-NN device can be created using tunnelling and resonant tunnelling diodes [104,106], as well as systems with negative differential resistance [113,114], all of which consume less power compared to conventional circuits [111]. Additionally, scanning tunnelling microscopy instrumentation [110,115] and quantum dots [113,116] provide promising platforms for QT-NN implementation in quantum neuromorphic systems.

Similarly to floating-point units such as the Intel 8087 (a coprocessor for the 8086 line of microprocessors that significantly enhanced the mathematical performance of personal computers while consuming minimal electric power), neuromorphic QT chips could complement conventional software to accelerate the training and deployment of neural network models [48]. The introduction of such neuromorphic chips and related technologies [117] promises to relax modern computer science-driven constraints, improve overall performance, and bring cognitive functionality to AI systems.

5. Conclusions

This study has highlighted the potential of the QT-NN model to mimic human-like perception and judgment, providing a promising alternative to traditional ML approaches. Using quantum cognition theory, our approach replicates cognitive processes observed in human decision-making, demonstrating that the resulting QT-NN can classify image datasets with enhanced flexibility compared to conventional models. This result underscores the growing synergy between quantum technologies and AI, offering new avenues for AI systems to approach problems with more human-like reasoning and adaptability.

Our results not only open up new possibilities for AI development but also offer a pathway to refining decision-making in complex real-world scenarios. While current ML systems generally excel in tasks like image recognition, they often require human intervention when faced with ambiguous or erroneous situations. As demonstrated in this paper, the QT-NN model can bridge this gap by simulating human judgment, thereby reducing the reliance on human operators and potentially revolutionising fields where precision and nuanced decision-making are crucial, such as healthcare, autonomous systems, and cognitive robotics.

In addition to the future directions outlined above, we note that we are also developing a natural language processing version of the QT-NN model, with the results of this research to be reported in a separate publication.

Author Contributions

I.S.M. developed the classical and quantum neural network models used in this work and obtained the primary results. M.M. conducted the statistical analysis and authored the respective sections of the article, including discussions on practical applications and future work. I.S.M. edited the overall text with contributions from M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This article has no additional data.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| BNN | Bayesian neural network |
| DNN | deep neural network |
| JSD | Jensen–Shannon divergence |
| KLD | Kullback–Leibler divergence |
| ML | machine learning |
| MNIST | Modified National Institute of Standards and Technology database |
| QBNN | quantum–Bayesian neural network |
| QCT | quantum cognition theory |
| QNN | quantum neural network |
| QT | quantum tunnelling |
| QT-NN | quantum tunnelling neural network |
| ReLU | rectified linear unit |
| SE | Shannon entropy |

References

- Busemeyer, J.R.; Bruza, P.D. Quantum Models of Cognition and Decision; Oxford University Press: New York, NY, USA, 2012. [Google Scholar]

- Sniazhko, S. Uncertainty in decision-making: A review of the international business literature. Cogent Bus. Manag. 2019, 6, 1650692. [Google Scholar] [CrossRef]

- Gu, Y.; Gu, S.; Lei, Y.; Li, H. From uncertainty to anxiety: How uncertainty fuels anxiety in a process mediated by intolerance of uncertainty. Neural Plast. 2020, 2020, 8866386. [Google Scholar] [CrossRef]

- Strogatz, S.H. Nonlinear Dynamics and Chaos. With Applications to Physics, Biology, Chemistry, and Engineering, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2015. [Google Scholar]

- Lucero, K.S.; Chen, P. What do reinforcement and confidence have to do with it? A systematic pathway analysis of knowledge, competence, confidence, and intention to change. J. Eur. CME. 2020, 9, 1834759. [Google Scholar] [CrossRef]

- Hüllermeier, E.; Waegeman, W. Aleatoric and epistemic uncertainty in machine learning: An introduction to concepts and methods. Mach. Learn. 2021, 110, 457–506. [Google Scholar] [CrossRef]

- Gawlikowski, J.; Tassi, C.R.N.; Ali, M.; Lee, J.; Humt, M.; Feng, J.; Kruspe, A.; Triebel, R.; Jung, P.; Roscher, R.; et al. A survey of uncertainty in deep neural networks. Artif. Intell. Rev. 2023, 56, 1513–1589. [Google Scholar] [CrossRef]

- Guha, R.; Velegol, D. Harnessing Shannon entropy-based descriptors in machine learning models to enhance the prediction accuracy of molecular properties. J. Cheminform. 2023, 15, 54. [Google Scholar] [CrossRef]

- Wasilewski, J.; Paterek, T.; Horodecki, K. Uncertainty of feed forward neural networks recognizing quantum contextuality. J. Phys. A Math. Theor. 2024, 56, 455305. [Google Scholar] [CrossRef]

- Karaca, Y. Multi-chaos, fractal and multi-fractional AI in different complex systems. In Multi-Chaos, Fractal and Multi-Fractional Artificial Intelligence of Different Complex Systems; Karaca, Y., Baleanu, D., Zhang, Y.D., Gervasi, O., Moonis, M., Eds.; Academic Press: Cambridge, MA, USA, 2022; pp. 21–54. [Google Scholar] [CrossRef]

- Bobadilla-Suarez, S.; Guest, O.; Love, B.C. Subjective value and decision entropy are jointly encoded by aligned gradients across the human brain. Commun. Biol. 2020, 3, 597. [Google Scholar] [CrossRef] [PubMed]

- Maksymov, I.S. Quantum-tunneling deep neural network for optical illusion recognition. APL Mach. Learn. 2024, 2, 036107. [Google Scholar] [CrossRef]

- Haykin, S. Neural Networks: A Comprehensive Foundation; Pearson-Prentice Hall: Singapore, 1998. [Google Scholar]

- Kim, P. MATLAB Deep Learning With Machine Learning, Neural Networks and Artificial Intelligence; Apress: Berkeley, CA, USA, 2017. [Google Scholar]

- Franchi, G.; Bursuc, A.; Aldea, E.; Dubuisson, S.; Bloch, I. TRADI: Tracking Deep Neural Network Weight Distributions. In Proceedings of the Computer Vision—ECCV 2020, Glasgow, UK, 23–28 August 2020; pp. 105–121. [Google Scholar]

- Erhan, D.; Bengio, Y.; Courville, A.; Manzagol, P.A.; Vincent, P.; Bengio, S. Why does unsupervised pre-training help deep learning? J. Mach. Learn. Res. 2010, 11, 625–660. [Google Scholar]

- Jospin, L.V.; Laga, H.; Boussaid, F.; Buntine, W.; Bennamoun, M. Hands-on Bayesian neural networks–A tutorial for deep learning users. IEEE Comput. Intell. Mag. 2022, 17, 29–48. [Google Scholar] [CrossRef]

- Wang, D.B.; Feng, L.; Zhang, M.L. Rethinking Calibration of Deep Neural Networks: Do Not Be Afraid of Overconfidence. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–14 December 2021; Volume 34, pp. 11809–11820. [Google Scholar]

- Wei, H.; Xie, R.; Cheng, H.; Feng, L.; An, B.; Li, Y. Mitigating Neural Network Overconfidence with Logit Normalization. In Proceedings of the 39th International Conference on Machine Learning, Baltimore, MD, USA, 17–23 July 2022; pp. 23631–23644. [Google Scholar]

- Melotti, G.; Premebida, C.; Bird, J.J.; Faria, D.R.; Gonçalves, N. Reducing overconfidence predictions in autonomous driving perception. IEEE Access 2022, 10, 54805–54821. [Google Scholar] [CrossRef]

- Bouteska, A.; Harasheh, M.; Abedin, M.Z. Revisiting overconfidence in investment decision-making: Further evidence from the U.S. market. Res. Int. Bus. Financ. 2023, 66, 102028. [Google Scholar] [CrossRef]

- Mukhoti, J.; Kirsch, A.; van Amersfoort, J.; Torr, P.H.; Gal, Y. Deep Deterministic Uncertainty: A New Simple Baseline. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 24384–24394. [Google Scholar] [CrossRef]

- Weiss, M.; Gómez, A.G.; Tonella, P. Generating and detecting true ambiguity: A forgotten danger in DNN supervision testing. Empir. Softw. Eng. 2023, 146. [Google Scholar] [CrossRef]

- Nguyen, A.; Yosinski, J.; Clune, J. Deep neural networks are easily fooled: High confidence predictions for unrecognizable images. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 427–436. [Google Scholar] [CrossRef]

- Guo, C.; Pleiss, G.; Sun, Y.; Weinberger, K.Q. On calibration of modern neural networks. In Proceedings of the 34th International Conference on Machine Learning–Volume 70. JMLR.org, Sydney, NSW, Australia, 6–11 August 2017; ICML’17. pp. 1321–1330. [Google Scholar]

- Moon, J.; Kim, J.; Shin, Y.; Hwang, S. Confidence-aware learning for deep neural networks. In Proceedings of the 37th International Conference on Machine Learning, Virtual Event, 13–18 July 2020; ICML’20. pp. 7034–7044. [Google Scholar]

- Wang, J.; Ai, J.; Lu, M.; Liu, J.; Wu, Z. Predicting neural network confidence using high-level feature distance. Inf. Softw. Technol. 2023, 159, 107214. [Google Scholar] [CrossRef]

- Rafiei, F.; Shekhar, M.; Rahnev, D. The neural network RTNet exhibits the signatures of human perceptual decision-making. Nat. Hum. Behav. 2024, 8, 1752–1770. [Google Scholar] [CrossRef]

- Liu, S.; Xiao, T.P.; Kwon, J.; Debusschere, B.J.; Agarwal, S.; Incorvia, J.A.C.; Bennett, C.H. Bayesian neural networks using magnetic tunnel junction-based probabilistic in-memory computing. Front. Nanotechnol. 2022, 4. [Google Scholar] [CrossRef]

- Wan, K.H.; Dahlsten, O.; Kristjánsson, H.; Gardner, R.; Kim, M.S. Quantum generalisation of feedforward neural networks. Npj Quantum Inf. 2017, 3, 36. [Google Scholar] [CrossRef]

- Beer, K.; Bondarenko, D.; Farrelly, T.; Osborne, T.J.; Salzmann, R.; Scheiermann, D.; Wolf, R. Training deep quantum neural networks. Nat. Commun. 2020, 11, 808. [Google Scholar] [CrossRef]

- Yan, P.; Li, L.; Jin, M.; Zeng, D. Quantum probability-inspired graph neural network for document representation and classification. Neurocomputing 2021, 445, 276–286. [Google Scholar] [CrossRef]

- Zhao, C.; Gao, X.S. QDNN: Deep neural networks with quantum layers. Quantum Mach. Intell. 2021, 3, 15. [Google Scholar] [CrossRef]

- Choi, S.; Salamin, Y.; Roques-Carmes, C.; Dangovski, R.; Luo, D.; Chen, Z.; Horodynski, M.; Sloan, J.; Uddin, S.Z.; Soljačić, M. Photonic probabilistic machine learning using quantum vacuum noise. Nat. Commun. 2024, 15, 7760. [Google Scholar] [CrossRef]

- Hiesmayr, B.C. A quantum information theoretic view on a deep quantum neural network. AIP Conf. Proc. 2024, 3061, 020001. [Google Scholar] [CrossRef]

- Pira, L.; Ferrie, C. On the interpretability of quantum neural networks. Quantum Mach. Intell. 2024, 6, 52. [Google Scholar] [CrossRef]

- Peral-García, D.; Cruz-Benito, J.; García-Peñalvo, F.J. Systematic literature review: Quantum machine learning and its applications. Comput. Sci. Rev. 2024, 51, 100619. [Google Scholar] [CrossRef]

- van de Schoot, R.; Depaoli, S.; King, R.; Kramer, B.; Märtens, K.; Tadesse, M.G.; Vannucci, M.; Gelman, A.; Veen, D.; Willemsen, J.; et al. Bayesian statistics and modelling. Nat. Rev. Methods Prim. 2021, 1, 1. [Google Scholar] [CrossRef]

- Monteiro, C.A.; Filho, G.I.S.; Costa, M.H.J.; de Paula Neto, F.M.; de Oliveira, W.R. Quantum neuron with real weights. Neural Netw. 2021, 143, 698–708. [Google Scholar] [CrossRef]

- Pan, X.; Lu, Z.; Wang, W.; Hua, Z.; Xu, Y.; Li, W.; Cai, W.; Li, X.; Wang, H.; Song, Y.P.; et al. Deep quantum neural networks on a superconducting processor. Nat. Commun. 2023, 14, 4006. [Google Scholar] [CrossRef]

- Qiu, P.H.; Chen, X.G.; Shi, Y.W. Detecting entanglement with deep quantum neural networks. IEEE Access 2019, 7, 94310–94320. [Google Scholar] [CrossRef]

- Bai, Q.; Hu, X. Superposition-enhanced quantum neural network for multi-class image classification. Chin. J. Phys. 2024, 89, 378–389. [Google Scholar] [CrossRef]

- Nielsen, M.; Chuang, I. Quantum Computation and Quantum Information; Oxford University Press: New York, NY, USA, 2002. [Google Scholar]

- Maronese, M.; Destri, C.; Prati, E. Quantum activation functions for quantum neural networks. Quantum Inf. Process. 2023, 21, 128. [Google Scholar] [CrossRef]

- Parisi, L.; Neagu, D.; Ma, R.; Campean, F. Quantum ReLU activation for Convolutional Neural Networks to improve diagnosis of Parkinson’s disease and COVID-19. Expert Syst. Appl. 2022, 187, 115892. [Google Scholar] [CrossRef]

- Tanaka, G.; Yamane, T.; Héroux, J.B.; Nakane, R.; Kanazawa, N.; Takeda, S.; Numata, H.; Nakano, D.; Hirose, A. Recent advances in physical reservoir computing: A review. Neural Netw. 2019, 115, 100–123. [Google Scholar] [CrossRef]

- Marcucci, G.; Pierangeli, D.; Conti, C. Theory of neuromorphic computing by waves: Machine learning by rogue waves, dispersive shocks, and solitons. Phys. Rev. Lett. 2020, 125, 093901. [Google Scholar] [CrossRef] [PubMed]

- Maksymov, I.S. Analogue and physical reservoir computing using water waves: Applications in power engineering and beyond. Energies 2023, 16, 5366. [Google Scholar] [CrossRef]

- Onen, M.; Emond, N.; Wang, B.; Zhang, D.; Ross, F.M.; Li, J.; Yildiz, B.; del Alamo, J.A. Nanosecond protonic programmable resistors for analog deep learning. Science 2022, 377, 539–543. [Google Scholar] [CrossRef] [PubMed]

- Ye, N.; Cao, L.; Yang, L.; Zhang, Z.; Fang, Z.; Gu, Q.; Yang, G.Z. Improving the robustness of analog deep neural networks through a Bayes-optimized noise injection approach. Commun. Eng. 2023, 2, 25. [Google Scholar] [CrossRef]

- McQuarrie, D.A.; Simon, J.D. Physical Chemistry—A Molecular Approach; Prentice Hall: New York, NY, USA, 1997. [Google Scholar]

- Griffiths, D.J. Introduction to Quantum Mechanics; Prentice Hall: Upper Saddle River, NJ, USA, 2004. [Google Scholar]

- Maksymov, I.S. Quantum-inspired neural network model of optical illusions. Algorithms 2024, 17, 30. [Google Scholar] [CrossRef]

- Atmanspacher, H.; Filk, T.; Römer, H. Quantum Zeno features of bistable perception. Biol. Cybern. 2004, 90, 33–40. [Google Scholar] [CrossRef]

- Khrennikov, A. Quantum-like brain: “Interference of minds”. Biosystems 2006, 84, 225–241. [Google Scholar] [CrossRef] [PubMed]

- Pothos, E.M.; Busemeyer, J.R. Quantum Cognition. Annu. Rev. Psychol. 2022, 73, 749–778. [Google Scholar] [CrossRef] [PubMed]

- Galam, S. Sociophysics: A Physicist’s Modeling of Psycho-Political Phenomena; Springer: New York, NY, USA, 2012. [Google Scholar]

- Maksymov, I.S.; Pogrebna, G. Quantum-mechanical modelling of asymmetric opinion polarisation in social networks. Information 2024, 15, 170. [Google Scholar] [CrossRef]

- Maksymov, I.S.; Pogrebna, G. The physics of preference: Unravelling imprecision of human preferences through magnetisation dynamics. Information 2024, 15, 413. [Google Scholar] [CrossRef]

- Benedek, G.; Caglioti, G. Graphics and Quantum Mechanics–The Necker Cube as a Quantum-like Two-Level System. In Proceedings of the 18th International Conference on Geometry and Graphics, Milan, Italy, 3–7 August 2018; pp. 161–172. [Google Scholar]

- Georgiev, D.D.; Glazebrook, J.F. The quantum physics of synaptic communication via the SNARE protein complex. Prog. Biophys. Mol. Biol. 2018, 135, 16–29. [Google Scholar] [CrossRef] [PubMed]

- Georgiev, D.D. Quantum Information and Consciousness; CRC Press: Boca Raton, FL, USA, 2019. [Google Scholar]

- Georgiev, D.D. Causal potency of consciousness in the physical world. Int. J. Mod. Phys. B 2024, 38, 2450256. [Google Scholar] [CrossRef]

- Chitambar, E.; Gour, G. Quantum resource theories. Rev. Mod. Phys. 2019, 91, 025001. [Google Scholar] [CrossRef]

- Xiao, H.; Rasul, K.; Vollgraf, R. Fashion-MNIST: A Novel Image Dataset for Benchmarking Machine Learning Algorithms. arXiv 2017, arXiv:1708.07747. [Google Scholar]

- Pope, P.E.; Zhu, C.; Abdelfattah, M.; Goldblum, M.; Goldstein, T. The Intrinsic Dimension of Images and Its Impact on Learning. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual Event, Austria, 3–7 May 2021. [Google Scholar]

- Hastie, T.; Tibshirani, R.; Friedman, J.H. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Series in Statistics; Springer: Berlin/Heidelberg, Germany, 2009. [Google Scholar]

- Simard, P.Y.; Steinkraus, D.; Platt, J.C. Best practices for convolutional neural networks applied to visual document analysis. In Proceedings of the Seventh International Conference on Document Analysis and Recognition, Edinburgh, Scotland, UK, 3–6 August 2003; pp. 958–963. [Google Scholar] [CrossRef]

- Deng, L. The MNIST database of handwritten digit images for machine learning research. IEEE Signal Process. Mag. 2012, 29, 141–142. [Google Scholar] [CrossRef]

- Kayed, M.; Anter, A.; Mohamed, H. Classification of Garments from Fashion MNIST Dataset Using CNN LeNet-5 Architecture. In Proceedings of the 2020 International Conference on Innovative Trends in Communication and Computer Engineering (ITCE), Aswan, Egypt, 8–9 February 2020; pp. 238–243. [Google Scholar] [CrossRef]

- Rudolph, M.S.; Lerch, S.; Thanasilp, S.; Kiss, O.; Shaya, O.; Vallecorsa, S.; Grossi, M.; Holmes, Z. Trainability barriers and opportunities in quantum generative modeling. Npj Quantum Inf. 2024, 10, 116. [Google Scholar] [CrossRef]

- Csiszar, I. I-divergence geometry of probability distributions and minimization problems. Ann. Probab. 1975, 3, 146–158.

- Burnham, K.P.; Anderson, D.R. Model Selection and Multi-Model Inference; Springer: Berlin/Heidelberg, Germany, 2002.

- Wanjiku, R.N.; Nderu, L.; Kimwele, M. Dynamic fine-tuning layer selection using Kullback–Leibler divergence. Eng. Rep. 2023, 5, e12595.

- Endres, D.M.; Schindelin, J.E. A new metric for probability distributions. IEEE Trans. Inf. Theory 2003, 49, 1858–1860.

- Nielsen, F. On the Jensen–Shannon symmetrization of distances relying on abstract means. Entropy 2019, 21, 485.

- Stehman, S.V. Selecting and interpreting measures of thematic classification accuracy. Remote Sens. Environ. 1997, 62, 77–89.

- Go, J.; Baek, B.; Lee, C. Analyzing Weight Distribution of Feedforward Neural Networks and Efficient Weight Initialization. In Proceedings of the Structural, Syntactic, and Statistical Pattern Recognition, Lisbon, Portugal, 18–20 August 2004; pp. 840–849.

- Nguyen, A.; Clune, J.; Bengio, Y.; Dosovitskiy, A.; Yosinski, J. Plug & Play Generative Networks: Conditional Iterative Generation of Images in Latent Space. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 3510–3520.

- Yosinski, J. How AI Detectives Are Cracking Open the Black Box of Deep Learning. Science, 6 July 2017. Available online: https://www.science.org/content/article/how-ai-detectives-are-cracking-open-black-box-deep-learning (accessed on 4 December 2024).

- Ohzeki, M.; Okada, S.; Terabe, M.; Taguchi, S. Optimization of neural networks via finite-value quantum fluctuations. Sci. Rep. 2018, 8, 9950.

- Eilertsen, G.; Jönsson, D.; Ropinski, T.; Unger, J.; Ynnerman, A. Classifying the classifier: Dissecting the weight space of neural networks. In Proceedings of the European Conference on Artificial Intelligence (ECAI 2020), Santiago de Compostela, Spain, 29 August–8 September 2020; Volume 325, pp. 1119–1126.

- Billingsley, P. Probability and Measure, 3rd ed.; Wiley: New York, NY, USA, 1995.

- Biamonte, J.; Wittek, P.; Pancotti, N.; Rebentrost, P.; Wiebe, N.; Lloyd, S. Quantum machine learning. Nature 2017, 549, 195–202.

- Bavelier, D.; Green, C.S.; Dye, M.W.G. Brain plasticity through the life span: Learning to learn and action video games. Annu. Rev. Neurosci. 2012, 35, 391–416.

- Kornmeier, J.; Bach, M. The Necker cube–an ambiguous figure disambiguated in early visual processing. Vis. Res. 2005, 45, 955–960.

- Inoue, M.; Nakamoto, K. Dynamics of cognitive interpretations of a Necker cube in a chaos neural network. Prog. Theor. Phys. 1994, 92, 501–508.

- Gaetz, M.; Weinberg, H.; Rzempoluck, E.; Jantzen, K.J. Neural network classifications and correlation analysis of EEG and MEG activity accompanying spontaneous reversals of the Necker cube. Cogn. Brain Res. 1998, 6, 335–346.

- Araki, O.; Tsuruoka, Y.; Urakawa, T. A neural network model for exogenous perceptual alternations of the Necker cube. Cogn. Neurodyn. 2020, 14, 229–237.

- Joos, E.; Giersch, A.; Hecker, L.; Schipp, J.; Heinrich, S.P.; van Elst, L.T.; Kornmeier, J. Large EEG amplitude effects are highly similar across Necker cube, smiley, and abstract stimuli. PLoS ONE 2020, 15, e0232928.

- Atmanspacher, H.; Filk, T. A proposed test of temporal nonlocality in bistable perception. J. Math. Psychol. 2010, 54, 314–321.

- Khan, A.; Ahsan, M.; Bonyah, E.; Jan, R.; Nisar, M.; Abdel-Aty, A.H.; Yahia, I.S. Numerical solution of Schrödinger equation by Crank–Nicolson method. Math. Probl. Eng. 2022, 2022, 6991067.

- Maksymov, I.S. Quantum Mechanics of Human Perception, Behaviour and Decision-Making: A Do-It-Yourself Model Kit for Modelling Optical Illusions and Opinion Formation in Social Networks. arXiv 2024, arXiv:2404.10554.

- Liang, Y.; Peng, W.; Zheng, Z.J.; Silvén, O.; Zhao, G. A hybrid quantum–classical neural network with deep residual learning. Neural Netw. 2021, 143, 133–147.

- Domingo, L.; Djukic, M.; Johnson, C.; Borondo, F. Binding affinity predictions with hybrid quantum-classical convolutional neural networks. Sci. Rep. 2023, 13, 17951.

- Gircha, A.I.; Boev, A.S.; Avchaciov, K.; Fedichev, P.O.; Fedorov, A.K. Hybrid quantum-classical machine learning for generative chemistry and drug design. Sci. Rep. 2023, 13, 8250.

- Nguyen, N.; Chen, K.C. Bayesian quantum neural networks. IEEE Access 2022, 10, 54110–54122.

- Sakhnenko, A.; Sikora, J.; Lorenz, J. Building Continuous Quantum-Classical Bayesian Neural Networks for a Classical Clinical Dataset. In Proceedings of the Recent Advances in Quantum Computing and Technology, Budapest, Hungary, 19–20 June 2024; ReAQCT ’24. pp. 62–72.

- LeCun, Y. Security Council Debates Use of Artificial Intelligence in Conflicts, Hears Calls for UN Framework to Avoid Fragmented Governance. Video Presentation, 19 December 2024. Available online: https://press.un.org/en/2024/sc15946.doc.htm (accessed on 4 January 2025).

- Press, W.H.; Teukolsky, S.A.; Vetterling, W.T.; Flannery, B.P. Numerical Recipes: The Art of Scientific Computing, 3rd ed.; Cambridge University Press: Cambridge, UK, 2007.

- Li, H.; Xu, Z.; Taylor, G.; Studer, C.; Goldstein, T. Visualizing the loss landscape of neural nets. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; NIPS’18. pp. 6391–6401.

- Trensch, G.; Morrison, A. A system-on-chip based hybrid neuromorphic compute node architecture for reproducible hyper-real-time simulations of spiking neural networks. Front. Neuroinform. 2022, 16, 884033.

- Abbas, A.H.; Abdel-Ghani, H.; Maksymov, I.S. Classical and Quantum Physical Reservoir Computing for Onboard Artificial Intelligence Systems: A Perspective. Dynamics 2024, 4, 643–670.

- Esaki, L. New phenomenon in narrow germanium p–n junctions. Phys. Rev. 1958, 109, 603–604.

- Kahng, D.; Sze, S.M. A floating gate and its application to memory devices. Bell Syst. Tech. J. 1967, 46, 1288–1295.

- Chang, L.L.; Esaki, L.; Tsu, R. Resonant tunneling in semiconductor double barriers. Appl. Phys. Lett. 1974, 24, 593–595.

- Ionescu, A.M.; Riel, H. Tunnel field-effect transistors as energy-efficient electronic switches. Nature 2011, 479, 329–337.

- Modinos, A. Field emission spectroscopy. Prog. Surf. Sci. 1993, 42, 45.

- Rahman Laskar, M.A.; Celano, U. Scanning probe microscopy in the age of machine learning. APL Mach. Learn. 2023, 1, 041501.

- Binnig, G.; Rohrer, H. Scanning tunneling microscopy—From birth to adolescence. Rev. Mod. Phys. 1987, 59, 615–625. [Google Scholar] [CrossRef]

- Feng, Y.; Tang, M.; Sun, Z.; Qi, Y.; Zhan, X.; Liu, J.; Zhang, J.; Wu, J.; Chen, J. Fully flash-based reservoir computing network with low power and rich states. IEEE Trans. Electron Devices 2023, 70, 4972–4975.

- Kwon, D.; Woo, S.Y.; Lee, K.H.; Hwang, J.; Kim, H.; Park, S.H.; Shin, W.; Bae, J.H.; Kim, J.J.; Lee, J.H. Reconfigurable neuromorphic computing block through integration of flash synapse arrays and super-steep neurons. Sci. Adv. 2023, 9, eadg9123.

- Yilmaz, Y.; Mazumder, P. Image processing by a programmable grid comprising quantum dots and memristors. IEEE Trans. Nanotechnol. 2013, 12, 879–887.

- Kent, R.M.; Barbosa, W.A.S.; Gauthier, D.J. Controlling chaos using edge computing hardware. Nat. Commun. 2024, 15, 3886.

- Bastiaans, K.M.; Benschop, T.; Chatzopoulos, D.; Cho, D.; Dong, Q.; Jin, Y.; Allan, M.P. Amplifier for scanning tunneling microscopy at MHz frequencies. Rev. Sci. Instrum. 2018, 89, 093709.

- Marković, D.; Grollier, J. Quantum neuromorphic computing. Appl. Phys. Lett. 2020, 117, 150501.

- van der Made, P. Learning How to Learn: Neuromorphic AI Inference at the Edge. BrainChip White Pap. 2022, 12. Available online: https://brainchip.com/learning-how-to-learn-neuromorphic-ai-inference-at-the-edge/ (accessed on 5 January 2024).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).