Abstract

Text localization and recognition in natural scene images have gained considerable attention recently because of their crucial role in applications such as autonomous driving and intelligent navigation. However, two significant gaps exist in this area: (1) prior research has primarily focused on recognizing English text, whereas Arabic text has been underrepresented, and (2) most prior research has adopted separate approaches for scene text localization and recognition rather than a single integrated framework. To address these gaps, we propose a novel bilingual end-to-end approach that localizes and recognizes both Arabic and English text within a single natural scene image. Specifically, our approach uses pre-trained CNN models (ResNet and EfficientNetV2) with kernel representation for text localization and RNN models (LSTM and BiLSTM) with an attention mechanism for text recognition. In addition, the AraElectra Arabic language model is incorporated to enhance Arabic text recognition. Experimental results on the EvArEST, ICDAR2017, and ICDAR2019 datasets demonstrate that our model achieves superior performance in recognizing not only horizontally oriented text but also multi-oriented and curved Arabic and English text in natural scene images.

1. Introduction

There is a growing interest in image-processing methods such as object detection [1], scene text localization [2], and scene text recognition (STR) [3] due to the increasing use of applications that interact with images and their components, such as text, signage, and other objects. Natural scene images, which are unmodified digital representations of real-world settings, are commonly used in these applications and often contain textual information [4]. As a result, the topic of localizing and recognizing text within natural scene images has gained significant attention in recent years.

In the past, localizing and recognizing text from natural scene images were seen as separate steps in the process of extracting text from images. This approach involves first identifying and isolating the textual areas within the input images, and then using a text recognizer to determine the sequence of the recognized text from the cropped words. However, this method may have some drawbacks. Firstly, errors can accumulate between the two tasks, and inaccurate localization results can have a significant impact on text recognition performance. Additionally, if each task is optimized individually, it may result in decreased performance in text recognition. Finally, this approach requires a large amount of memory and has poor inference efficiency [5].

In recent years, there have been significant advancements in deep learning (DL) techniques that have enabled the development of end-to-end deep neural frameworks for accurately recognizing text in images of natural scenes. The end-to-end method integrates the localization and recognition processes into a unified framework. This approach is more likely to produce superior results because accurate text localization greatly enhances text recognition accuracy. Implementing a trainable framework that can simultaneously localize and recognize text has shown substantial improvements in overall performance, particularly for text with irregular shapes in uncontrolled environments [6].

Recognizing text in natural scene images is essential for many content-based applications, such as language translation, security systems for identifying names or company logos on cards, reading street signs for navigation, understanding signage in driver assistance systems, aiding navigation for individuals with visual impairments, and facilitating check processing at banks. To enable these applications, techniques are needed that can accurately and consistently localize scene text [7].

Text localization poses significant challenges due to the diverse visual characteristics of texts and complex backgrounds. Texts differ greatly from conventional objects, often appearing in multiple styles and varying in size, color, font, language, and orientation. Additionally, environmental elements such as windows and railings can resemble written language, and natural features like grass and leaves may occasionally mimic textual patterns. These variations and ambiguities make precise text localization [8] more complicated.

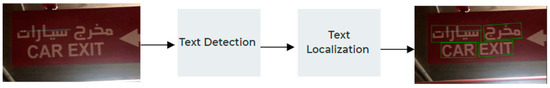

The process of localizing textual information from natural scene images is depicted in Figure 1. The first stage, called text detection, determines whether there is text within an image. Then, text localization identifies the exact location of the text. It involves clustering the identified text into coherent regions while minimizing background interference and establishing a bounding box around the text [9].

Figure 1.

Phases of text localization in natural scene images; image from the EvArEST dataset [10]. The green box shows the result of the localization phase.

Recognizing text in natural scene images presents greater challenges because of the intricate text patterns and complex backgrounds that vary significantly in natural settings. Additionally, text in natural scenes can have diverse characteristics, including different fonts, sizes, forms, orientations, and layouts [11]. Recognizing Arabic text, in particular, is more complex than recognizing English text [12].

Arabic is one of the six most widely spoken languages globally and serves as an official language in 26 states across the Arab world, particularly in the Middle East [13]. It is spoken by over 447 million native speakers [14], and its various dialects influence its written form, which progresses from right to left. Arabic characters can take different forms based on their positions within words: initial, medial, final, and isolated [10].

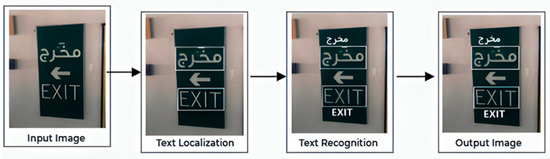

Alrobah et al. [15] proposed a framework for character recognition consisting of four main steps, as shown in Figure 2. The preprocessing phase applies techniques such as binarization and noise removal to improve image quality. Segmentation follows, dividing paragraphs into lines and then lines into individual words. In the feature extraction stage, each segmented word is treated as a separate entity and passed to the machine-learning model. Feature extraction is important and complex, and it has a significant impact on the performance of classifiers [16]. Finally, the extracted features are fed into machine-learning classifiers for recognition.

Figure 2.

Phases of text recognition in natural scene images; image from the EvArEST dataset [10]. The word in the image means “Elegant” in English.

Previous research on localizing and recognizing Arabic and English text from natural scene images has identified several limitations:

- Many studies on Arabic text have focused on recognizing handwritten Arabic text [17,18,19,20], with relatively few focused on recognizing Arabic text within natural scene images.

- To our knowledge, no researchers have developed an end-to-end STR system capable of integrating the tasks of localizing and recognizing bilingual Arabic and English text within a unified framework. Most existing methods treat these tasks separately.

- The most advanced studies on localizing Arabic text from natural scene images have primarily addressed horizontal text, neglecting the challenges posed by multi-oriented and curved Arabic text.

- To our knowledge, our study is the first to utilize advanced Arabic language models like AraElectra to enhance the recognition accuracy of Arabic text from natural scene images.

This study aims to address this research gap by using an end-to-end STR approach to accurately localize and recognize multi-oriented and curved bilingual Arabic and English text in natural scene images. Our model is based on the PAN++ framework [21], which was developed for localizing and recognizing multi-oriented and curved English text in natural scene images. We propose using the pretrained EfficientNetV2 convolutional neural network (CNN) model for feature extraction instead of the ResNet model. EfficientNetV2 is a recent, smaller, and faster neural network for image recognition that significantly outperforms previous CNN models in extracting features from images. We compare the performance of these two models in localizing multi-oriented and curved Arabic and English text. In the recognition phase, we propose using the bidirectional long short-term memory (BiLSTM) model instead of the LSTM model to recognize multi-oriented and curved bilingual Arabic and English text in natural scene images. The BiLSTM model captures additional contextual information by processing the sequence with both a forward and a backward network, which allows it to handle long-term dependencies more effectively and improves overall recognition accuracy. We conduct a comparative analysis of the LSTM and BiLSTM models. A key novelty of our research is the integration of the Arabic language model AraElectra in the recognition phase to improve the accuracy of Arabic text recognition. The contributions of our work are summarized as follows:

- To the best of our knowledge, this is the first study to utilize end-to-end STR for localizing and recognizing Arabic text only, as well as bilingual Arabic and English text from natural scene images.

- To the best of our knowledge, this is the first study to use the EvArEST dataset, which contains multi-oriented and curved text, for localizing and recognizing Arabic text as well as bilingual Arabic and English text from natural scene images.

- We employed a pretrained CNN model, EfficientNetV2, to extract features from bilingual Arabic and English texts in the images.

- We utilized BiLSTM with an attention mechanism to recognize bilingual Arabic and English text from natural scene images.

- We integrated the Arabic language model named AraElectra with our end-to-end STR model to enhance the recognition of Arabic text from natural scene images.

The remainder of the paper is organized as follows: Section 2 provides background on localizing and recognizing text in natural scene images. Section 3 discusses related work in localizing and recognizing Arabic and English text from natural scene images. Section 4 details our study’s methodology. Section 5 explains the experiments conducted. Section 6 presents the experimental results, followed by a general discussion in Section 7. Finally, Section 8 concludes the paper and outlines future research directions.

2. Background

In this section, we present background information on STR, focusing on approaches for localizing and recognizing text in natural scene images. Furthermore, we explore the unique characteristics of Arabic text and conduct a comprehensive review of existing datasets available for Arabic text in natural-scene images.

2.1. Arabic Language Characteristics

The Arabic language is spoken by a significant number of people worldwide and is an official language in 25 nations [10]. Arabic script differs from the scripts of other languages, such as English and French, in several ways: it is written from right to left, and its alphabet consists of 28 letters, which have no uppercase and lowercase variants as English letters do [15]. Furthermore, each Arabic character can take up to four different forms depending on its position within a word: isolated, initial, medial, or final. Table 1 illustrates the various shapes of Arabic characters.

Table 1.

Examples of the names and shapes of Arabic characters.
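The positional forms can also be inspected programmatically. The following is a minimal sketch, not part of the proposed method, that uses the Unicode Arabic Presentation Forms-B code points for the letter Beh as an illustration. The choice of Beh and the helper name `describe_forms` are assumptions made for this example; in ordinary Unicode text only the base letter is stored and the rendering engine selects the contextual shape.

```python
import unicodedata

# Presentation-form code points for the letter Beh (base letter U+0628).
# These compatibility characters make the four contextual shapes explicit.
BEH_FORMS = {
    "isolated": "\uFE8F",
    "final": "\uFE90",
    "initial": "\uFE91",
    "medial": "\uFE92",
}

def describe_forms(forms):
    """Return (position, Unicode name, base letter) for each presentation form."""
    rows = []
    for position, glyph in forms.items():
        name = unicodedata.name(glyph)
        # NFKC normalization folds a presentation form back to its base letter.
        base = unicodedata.normalize("NFKC", glyph)
        rows.append((position, name, base))
    return rows

rows = describe_forms(BEH_FORMS)
for position, name, base in rows:
    print(f"{position:9s} {name:40s} base=U+{ord(base):04X}")
```

All four shapes normalize to the same base letter, which is one reason a recognizer may treat them either as four visual classes or as a single character class, depending on how its label set is designed.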

The dot, known as “Noqtah,” plays a vital role in maintaining the consistency and structure of Arabic characters. Arabic letters may contain one, two, or three dots, positioned above, in the center, or below the characters. Table 2 provides details about the attributes of Arabic characters. In addition to dots, Arabic text includes the “Hamzah” character (e.g., “ء”), which is essential in distinguishing between different characters and can appear in various positions: above, in the center, or below. Depending on its context, the Hamzah character may be considered an integral part of the character or a distinct component when appearing separately [22].

Table 2.

Characteristics of Arabic characters.

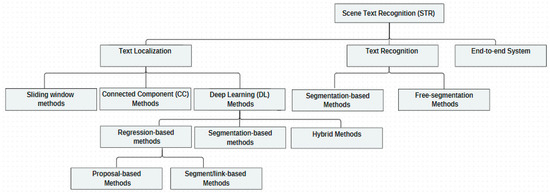

2.2. Scene Text Recognition (STR)

STR is often considered a specialized form of OCR that focuses specifically on recognizing text within natural scenes. STR presents significant challenges due to factors such as complex backgrounds, various font styles, and less-than-ideal imaging conditions [23]. The primary objective of STR is to automatically localize and recognize textual content within scene images. In recent years, multiple methodologies have been developed to address this objective, typically categorized into three main phases according to Chen et al. [23] and Khan et al. [6]: text localization, text recognition, and end-to-end systems. Figure 3 provides an overview of the STR system. In the following sections, we will delve into a detailed examination of each of these stages.

Figure 3.

Overview of the scene text recognition system (STR).

2.2.1. Text Localization

The objective of text localization is to pinpoint the precise area within an input image, typically marked by a bounding box, where text is present. These bounding boxes can take various shapes, such as quadrilaterals, oriented rectangles, or regular rectangles. According to [24], different sets of parameters define these shapes: for rectangles, (x, y, w, h); for oriented rectangles, (x, y, w, h, θ), where θ is the rotation angle; and for quadrilaterals, (x1, y1, x2, y2, x3, y3, x4, y4). Khan et al. [6] categorize text localization techniques into three main types: connected component (CC)-based, sliding window-based, and DL-based methods.
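To make the relationship between these parameterizations concrete, the sketch below converts rectangles and oriented rectangles to quadrilateral corner lists and back to an enclosing rectangle. The conventions are assumptions chosen for illustration and are not specified in the text: (x, y) is the top-left corner for a regular rectangle and the centre for an oriented rectangle, and the rotation angle is given in radians.

```python
import math

def rect_to_quad(x, y, w, h):
    """Axis-aligned rectangle (top-left x, y; width w; height h) -> 4 corners."""
    return [(x, y), (x + w, y), (x + w, y + h), (x, y + h)]

def oriented_rect_to_quad(cx, cy, w, h, theta):
    """Oriented rectangle (centre cx, cy; size w, h; angle theta in radians) -> 4 corners."""
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    half_offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    # Rotate each corner offset around the centre, then translate.
    return [(cx + dx * cos_t - dy * sin_t, cy + dx * sin_t + dy * cos_t)
            for dx, dy in half_offsets]

def quad_to_rect(quad):
    """Smallest axis-aligned rectangle (x, y, w, h) enclosing a quadrilateral."""
    xs = [p[0] for p in quad]
    ys = [p[1] for p in quad]
    return (min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys))

upright = oriented_rect_to_quad(25, 40, 30, 40, 0.0)
rotated = oriented_rect_to_quad(25, 40, 30, 40, math.pi / 2)
print(quad_to_rect(rotated))  # width and height swap after a quarter turn
```

A quadrilateral can represent any rectangle or oriented rectangle, but not the reverse, which is why quadrilateral annotations are the more general of the three.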

- Connected Component-Based Methods

In CC-based methods, the goal is to identify and unify small elements into coherent entities. These approaches typically involve segmentation techniques such as stable intensity regions and color clustering. Subsequently, filtering out non-textual elements using low-level features like stroke width, edge gradients, and texture helps in isolating text within an image. These methods are known for their low computational requirements and efficiency. However, they face challenges in handling rotation, scale variations, complex backgrounds, and other intricate scenarios. Representative CC-based techniques include maximally stable extremal regions (MSERs) [25] and stroke width transform (SWT) [26].
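The grouping-then-filtering idea can be sketched in a few lines. This is a simplified illustration, not MSER or SWT: it labels 4-connected foreground pixels in an already binarized grid and then discards components using area and aspect ratio, which stand in here for the low-level features (stroke width, edge gradients, texture) mentioned above. The thresholds `min_area` and `max_aspect` are hypothetical values.

```python
from collections import deque

def connected_components(mask):
    """Label 4-connected foreground regions in a binary grid (list of lists of 0/1)."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                queue, pixels = deque([(r, c)]), []
                seen[r][c] = True
                while queue:  # breadth-first flood fill from the seed pixel
                    cr, cc = queue.popleft()
                    pixels.append((cr, cc))
                    for nr, nc in ((cr + 1, cc), (cr - 1, cc), (cr, cc + 1), (cr, cc - 1)):
                        if 0 <= nr < rows and 0 <= nc < cols and mask[nr][nc] and not seen[nr][nc]:
                            seen[nr][nc] = True
                            queue.append((nr, nc))
                components.append(pixels)
    return components

def filter_text_candidates(components, min_area=3, max_aspect=5.0):
    """Keep components whose area and bounding-box aspect ratio look text-like."""
    kept = []
    for pixels in components:
        ys = [p[0] for p in pixels]
        xs = [p[1] for p in pixels]
        height = max(ys) - min(ys) + 1
        width = max(xs) - min(xs) + 1
        aspect = max(width, height) / min(width, height)
        if len(pixels) >= min_area and aspect <= max_aspect:
            kept.append((min(xs), min(ys), width, height))  # (x, y, w, h)
    return kept

mask = [
    [1, 1, 0, 0, 0, 1],
    [1, 1, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]
components = connected_components(mask)
boxes = filter_text_candidates(components)
print(boxes)
```

The isolated pixel in the top-right corner is rejected by the area filter, which mirrors how CC-based pipelines remove small non-text elements before grouping the remaining components.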

- Sliding Window-Based Methods

Sliding window-based methods involve generating multiple potential text regions by systematically moving a window of varying sizes and aspect ratios across an image. These candidate text regions are then grouped using a classifier that relies on manually crafted features. Subsequently, these grouped regions are combined to form complete words or lines of text. For instance, Pan et al. [27] introduced a system that localizes text in natural images by employing a sliding-window approach at different scales within a pyramid image structure. They use a conditional random field to distinguish non-textual areas and a minimum spanning tree to segment text into words or lines.
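As a rough illustration of candidate generation, the sketch below enumerates windows of several sizes over an image and keeps those accepted by a classifier callback. It is a generic sketch and not the system of Pan et al. [27]; the function names and the `is_text` callback are hypothetical, and the list of window sizes stands in for the multiple scales and aspect ratios.

```python
def sliding_windows(image_w, image_h, window_sizes, stride):
    """Yield (x, y, w, h) candidate windows that lie fully inside the image."""
    for win_w, win_h in window_sizes:
        for y in range(0, image_h - win_h + 1, stride):
            for x in range(0, image_w - win_w + 1, stride):
                yield (x, y, win_w, win_h)

def detect_text_windows(image_w, image_h, window_sizes, stride, is_text):
    """Keep the candidate windows that the classifier callback accepts."""
    return [win for win in sliding_windows(image_w, image_h, window_sizes, stride)
            if is_text(win)]

# Two window shapes over an 8 x 4 image with a stride of 2 pixels.
candidates = list(sliding_windows(8, 4, window_sizes=[(4, 2), (8, 4)], stride=2))

# Hypothetical classifier: pretend text only appears in the top half of the image.
top_half = detect_text_windows(8, 4, [(4, 2), (8, 4)], 2,
                               is_text=lambda win: win[1] + win[3] <= 2)
print(len(candidates), top_half)
```

The number of candidates grows with the number of window shapes and shrinks with the stride, which is the main source of the computational cost associated with this family of methods.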

Previous localization techniques, like those using low-level handcrafted features and extensive preprocessing and post-processing steps, tend to be slow and computationally intensive. These methods are sensitive to challenges commonly found in natural scene images, such as background noise, varying lighting conditions, text orientation, and clutter. Due to these limitations, traditional approaches such as CC and sliding window methods are insufficient for achieving both speed and accuracy in text localization tasks, prompting the adoption of DL approaches [6].

- Deep Learning-Based Methods

DL methods achieve accurate text localization by autonomously extracting high-level features from input images. CNNs are widely used for this purpose because they excel at capturing complex features such as geometric patterns and lighting conditions, regardless of variations in these factors. CNNs efficiently extract a multitude of features from images, reducing processing time and computational complexity. DL-based text localization methods can be classified into three main categories: regression-based methods, segmentation-based methods, and hybrid methods.

Regression-Based Methods: Regression-based methods for text localization encompass both proposal-based and segment/link-based approaches tailored for natural scene images. In proposal-based methods, a process akin to sliding windows is employed to generate multiscale bounding boxes of varying aspect ratios across potential text regions. Each bounding box is then evaluated to determine the most accurate localization of the text, whether it is horizontal or quadrilateral. This technique proves highly effective for identifying horizontal and multi-oriented text but can struggle with accurately localizing curved text. Prominent examples of proposal-based methods include Faster R-CNN [28], EAST [29], SSD [30], and TextBoxes++ [31].

Conversely, segment/link-based methods segment text into numerous regions and subsequently use a linking process to connect these regions into a cohesive text localization result. This method, as implemented in connectionist text proposal networks (CTPN) [32], offers greater flexibility compared to proposal-based methods and achieves exceptional accuracy in text localization tasks.
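The linking step can be illustrated with a simple greedy rule. The sketch below is not the CTPN algorithm; it merges small (x, y, w, h) segments into text-line boxes when the horizontal gap is small and the vertical overlap is large enough. The thresholds `max_gap` and `min_overlap` are hypothetical values chosen for the example.

```python
def link_segments(segments, max_gap=5, min_overlap=0.5):
    """Greedily merge (x, y, w, h) segments into text-line boxes, left to right."""
    lines = []
    for x, y, w, h in sorted(segments):
        for i, (lx, ly, lw, lh) in enumerate(lines):
            gap = x - (lx + lw)  # horizontal distance to the line's right edge
            overlap = min(y + h, ly + lh) - max(y, ly)  # shared vertical extent
            if gap <= max_gap and overlap >= min_overlap * min(h, lh):
                new_x, new_y = min(lx, x), min(ly, y)
                new_right = max(lx + lw, x + w)
                new_bottom = max(ly + lh, y + h)
                lines[i] = (new_x, new_y, new_right - new_x, new_bottom - new_y)
                break
        else:
            # No existing line is close enough, so this segment starts a new one.
            lines.append((x, y, w, h))
    return lines

segments = [(0, 10, 8, 12), (10, 11, 8, 12), (20, 10, 8, 12),
            (0, 60, 8, 12), (60, 10, 8, 12)]
lines = link_segments(segments)
print(lines)
```

The three neighbouring segments on the first row are merged into one line, while the distant segment on the same row and the segment on the lower row remain separate.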

Segmentation-Based Methods: Segmentation-based methods in text localization aim to accommodate a wide range of text sizes by leveraging segmentation algorithms to distinguish text from non-text areas based on pixel-level identification. After segmentation, semantic information and post-processing steps are crucial to accurately delineate text regions. However, the effectiveness of these methods can be influenced by factors such as complex backgrounds, diverse languages, and varying lengths of text lines. Segmentation-based methods typically fall into two categories: semantic segmentation and instance-aware segmentation. Semantic segmentation involves labeling pixels according to semantic information to identify text within an image. The fully convolutional network (FCN) is commonly used for this purpose in text localization tasks [6]. On the other hand, instance-aware segmentation addresses challenges such as overlapping texts by recognizing multiple instances of the same class as distinct objects.

Hybrid Methods: Hybrid methods combine regression-based and segmentation-based techniques to localize text using both bounding box and segmentation approaches. This approach is known for its high accuracy and effectiveness in overcoming various challenges associated with text localization in natural scene images. In recent years, several systems, including LOMO [33], have adopted this hybrid approach to achieve precise text localization results.

2.2.2. Text Recognition

Text recognition involves converting image regions that contain text into machine-readable strings. Unlike general image classification tasks, which have fixed outputs, text recognition deals with variable-length sequences of characters or words. Text localization is often performed as an initial step before text recognition. Traditional text recognition methods rely on handcrafted features such as CCs, SWT, and histogram of oriented gradients (HOG) descriptors. However, these methods are often inefficient and slow due to their dependence on low-level features. The adoption of DL approaches is crucial for developing efficient and highly accurate text recognition systems, as DL methods significantly improve both the speed and accuracy of text recognition. Text recognition methods for natural scene images can be classified into segmentation-based and free-segmentation methods, which are explained in the following subsections [23].

- Segmentation-Based Methods

Methods based on segmentation involve breaking down characters into their constituent parts and applying a classification algorithm to identify each segment. Segmentation-based approaches typically consist of three main steps: image preprocessing, character segmentation, and character recognition. Accurately locating text in an image is crucial for these methods. However, segmentation-based methods have significant drawbacks. First, accurately pinpointing individual characters is widely recognized as one of the most challenging tasks in this field. The quality of character detection and segmentation often limits overall recognition performance. Second, segmentation-based approaches are unable to capture contextual information beyond individual characters. This limitation can result in suboptimal word-level results, especially during complex training scenarios.

- Free-Segmentation Methods

Free-segmentation techniques aim to recognize entire text passages without segmenting individual characters. This is achieved through an encoder–decoder framework. The process of free-segmentation methods typically involves four stages: image preprocessing, feature representation, sequence modeling, and prediction.

- Image Preprocessing Stage: The image preprocessing stage aims to enhance image quality and mitigate issues caused by poor image conditions. It plays a critical role in text recognition by improving feature representation. Various image preprocessing techniques such as background removal, text image super-resolution, and rectification are employed. These methods effectively address challenges associated with low image quality, thereby significantly enhancing text recognition accuracy.

- Feature Representation Stage: Feature representation is crucial for converting raw text-instance images into a form that emphasizes essential characteristics for character recognition while minimizing the influence of irrelevant factors such as font style, color, size, and background. CNNs are widely adopted in this stage due to their efficiency and effectiveness in extracting image features.

- Sequence Modeling Stage: The sequence modeling stage establishes connections between image features and predictions, enabling the extraction of contextual information from sequences of characters. This approach is valuable for predicting characters in sequence, demonstrating improved reliability and efficiency compared to independent character analysis. BiLSTM networks are commonly utilized in sequence modeling for their capability to capture long-range dependencies accurately [34].

- Prediction Stage: In the prediction stage, the objective is to determine the correct string sequence based on features extracted from the input text-instance image. Two main techniques employed for this purpose are Connectionist Temporal Classification (CTC) [35] and attention mechanisms [36]. These techniques facilitate accurate and effective decoding of the sequence from the extracted features.
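For the prediction stage, the decoding rule used with CTC can be shown compactly. The sketch below implements greedy (best-path) CTC decoding: take the most probable class in each frame, merge consecutive repeats, and drop blanks. The toy alphabet and per-frame probabilities are made up for illustration, and placing the blank at index 0 is an assumption of this example.

```python
def ctc_greedy_decode(frame_probs, alphabet, blank=0):
    """Best-path CTC decoding: argmax per frame, merge repeats, drop blanks."""
    best_path = [max(range(len(p)), key=p.__getitem__) for p in frame_probs]
    decoded, previous = [], blank
    for index in best_path:
        # Emit a character only when it differs from the previous frame's class
        # and is not the blank symbol.
        if index != previous and index != blank:
            decoded.append(alphabet[index])
        previous = index
    return "".join(decoded)

alphabet = ["-", "a", "b"]  # index 0 is the CTC blank
frame_probs = [
    [0.10, 0.80, 0.10],  # a
    [0.20, 0.70, 0.10],  # a (repeat, merged with the previous frame)
    [0.90, 0.05, 0.05],  # blank
    [0.10, 0.80, 0.10],  # a (kept, because a blank separates it from the first a)
    [0.10, 0.20, 0.70],  # b
    [0.10, 0.10, 0.80],  # b (repeat, merged)
    [0.80, 0.10, 0.10],  # blank
]
text = ctc_greedy_decode(frame_probs, alphabet)
print(text)
```

The blank symbol is what allows a doubled character to survive the merge step, which is why the seven frames decode to a three-character string. Attention-based decoders instead predict one character per decoding step and do not need this collapse rule.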

2.2.3. End-to-End System

The objective of end-to-end STR is to convert all text regions within an image into sequences of strings. This process involves several stages, including text localization, recognition, and post-processing, as shown in Figure 4. Traditionally, text localization and recognition were treated as separate tasks that were combined to extract text from images. However, several factors have driven the development of end-to-end STR systems, which integrate text localization and recognition into a unified framework. One motivation is error accumulation in cascaded systems, where inaccuracies in one stage propagate to the next and can lead to significant overall prediction errors. End-to-end solutions address this by limiting error accumulation during training. Additionally, these systems facilitate the exchange of information between the localization and recognition stages, which generally improves the accuracy of text extraction. Moreover, end-to-end frameworks are easier to adapt and maintain across different domains than traditional cascaded pipelines. Finally, they often achieve faster inference and require less storage [6].

Figure 4.

End-to-end scene text recognition phases; image from the EvArEST dataset [10]. The white box is the result of the localization phase. The text in white illustrates the result of the recognition phase.

2.3. Datasets of Arabic Scene Text

In this section, we analyze existing datasets that concentrate on Arabic scene text. Table 3 presents a comparative assessment of these datasets, highlighting factors such as the year of publication, the tasks they support (localization and recognition), the orientation of text within images, the number of included images, and their accessibility. These datasets encompass a diverse range of images, including shopping boards, product names, advertisements, and highway signs captured under challenging conditions. These conditions include low resolution, inadequate lighting, noise, misaligned text, and variations in colors and sizes. The following subsections provide a concise overview of each dataset.

Table 3.

Summary of Arabic scene text datasets.

2.3.1. ARASTC [37]

This dataset includes Arabic characters extracted from scene images such as signs, hoardings, and advertisements. It consists of 100 classes categorizing 28 Arabic characters in different positions within words (initial, medial, final, and isolated).

2.3.2. ARASTI [38]

This dataset features textual content extracted from images depicting Arabic scenes. It includes segmented words and characters from natural settings, comprising 2093 segmented Arabic characters and 1280 segmented Arabic sentences extracted from 374 scene images. The characters are primarily from signs, billboards, and advertisements, manually segmented.

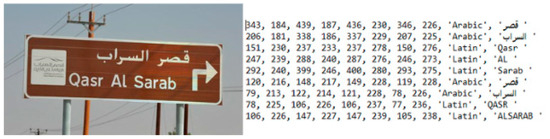

2.3.3. ICDAR2017 [39]

This dataset contains natural-scene images with embedded text from nine languages, including Arabic. It encompasses 18,000 images, with each language represented by 2000 images.

2.3.4. EASTR-42K [40]

This dataset comprises bilingual (English and Arabic) scene text images, highlighting diverse word combinations in Arabic. It includes precise text in both languages in an unrestricted environment, totaling 2107 lines of Arabic text, 983 lines of English text, and 784 lines of multi-lingual text.

2.3.5. ICDAR2019 [41]

This dataset is designed for multi-lingual scene text localization and recognition systems. It contains 20,000 images, each featuring text in at least one of Arabic, Bangla, Chinese, Devanagari, English, French, German, Italian, Japanese, or Korean.

2.3.6. ASAYAR [42]

This dataset focuses on Arabic text localization on highways and comprises three components: Arabic–English scene text localization, traffic sign detection, and directional symbol detection. It includes 1763 annotated photographs from Moroccan highways, categorized into 16 distinct object groups, with nearly 20,000 bounding boxes and annotations at both word and line levels.

2.3.7. Real-Time Arabic Scene Text Detection [43]

This dataset focuses on Arabic scene text localization. It includes 575 photos manually annotated with 762 instances of text and 1120 words. Challenges within the dataset include curved text in approximately 20% of the photos, blur in 10%, and variations in typefaces.

2.3.8. ATTICA [44]

ATTICA is a multitask dataset specifically designed for Arabic traffic signs and panels. It covers two distinct Arabic regions: North Africa (Algeria, Egypt, Morocco, Tunisia) and the Gulf region (Bahrain, Kuwait, Qatar, Saudi Arabia, United Arab Emirates). The dataset comprises two primary sub-datasets: ATTICA_Sign, consisting of 1215 annotated images of traffic signs and panels, and ATTICA_Text, featuring 1180 annotated text objects at both line and word levels.

2.3.9. EvArEST [10]

The EvArEST dataset includes Arabic and English text captured in diverse indoor and outdoor settings throughout Egypt. Images were taken using cell phone cameras with varying resolutions. The dataset contains 510 annotated images at the word level.

2.3.10. Tunisia Street View Dataset [45]

The Tunisia Street View Dataset (TSVD) consists of 7000 images from various Tunisian cities sourced from Google Street View, featuring Arabic text. The dataset underwent annotation using an active learning method, with only approximately 20% of training samples labeled and utilized.

The information in Table 3 emphasizes the urgent requirement for additional publicly accessible datasets to facilitate the localization and recognition of Arabic texts. Out of the reviewed datasets, only five are publicly accessible, with three [10,39,41] featuring text in different formats like horizontal, multi-oriented, and curved. The ARASTI dataset [38] specifically concentrates on Arabic characters for specific recognition tasks, whereas the ASAYAR dataset [42] offers publicly available Arabic texts primarily in horizontal orientation.

Our research focuses on accurately recognizing and localizing multi-oriented and curved bilingual Arabic and English texts within natural scene images. To achieve this, we utilized publicly available Arabic and bilingual datasets, specifically ICDAR2017, ICDAR2019, and EvArEST, which provide the necessary types of Arabic and bilingual text.

3. Related Works

Text recognition from natural scene images has recently gained significant attention due to its potential to enhance various applications in daily life. Text localization, which involves identifying text regions, feature extraction, and recognition, plays a crucial role in the effectiveness of text recognition systems. While end-to-end systems have shown promising results in recognizing Latin text from natural scene images, surpassing traditional approaches that handle each text reading process separately, the study of Arabic and bilingual Arabic–English text in this domain remains relatively underexplored. This gap necessitates further research to develop effective strategies for localizing and recognizing Arabic and bilingual text. Our review draws on prominent academic databases such as IEEE, Web of Science, SpringerLink, and ScienceDirect. We use specific keywords such as ‘Arabic scene text detection’, ‘English scene text detection’, ‘Arabic scene text recognition’, ‘English scene text recognition’, and ‘end-to-end scene text recognition’ to review the latest research on localizing and recognizing Arabic and English text in natural scene images.

3.1. Text Localization from Natural Scene Images

The first step in standard end-to-end text recognition pipelines is scene text localization. The localization of text from natural scene images presents several challenges, especially when dealing with different text orientations. Currently, text localization increasingly relies on DL techniques, which have become the predominant approach. Text found in natural scenes can generally be classified into three types: horizontal text, multi-oriented text, and curved text. Localizing multi-oriented and curved text is more complex than localizing horizontal text. In this section, we specifically explore the techniques employed for localizing Arabic and English text within natural-scene images.

3.1.1. Arabic Scene Text Localization

Gaddour et al. [46] introduced a method to extract Arabic text connections and localize text based on distinct characteristics of the Arabic script and color uniformity. Their approach utilizes threshold values for connection extraction and incorporates ligature and baseline filters to identify and localize text. The ligature filter identifies horizontal connections between characters through analysis of vertical projection profile histograms, while the baseline filter detects the highest intensity value.
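Projection profiles of the kind used by such filters are simple to compute on a binarized word image. The sketch below is an illustration under stated assumptions and not the implementation of Gaddour et al. [46]: it treats the baseline as the row with the most foreground pixels and flags thin columns as candidate connecting strokes. The function names and the `max_height` threshold are hypothetical.

```python
def vertical_projection(binary):
    """Count foreground pixels in each column of a binary grid."""
    return [sum(column) for column in zip(*binary)]

def horizontal_projection(binary):
    """Count foreground pixels in each row of a binary grid."""
    return [sum(row) for row in binary]

def estimate_baseline_row(binary):
    """Return the index of the row with the most foreground pixels."""
    profile = horizontal_projection(binary)
    return max(range(len(profile)), key=profile.__getitem__)

def thin_columns(binary, max_height=1):
    """Columns with 1..max_height foreground pixels: candidate connecting strokes."""
    return [i for i, count in enumerate(vertical_projection(binary))
            if 0 < count <= max_height]

# Toy word image: two tall strokes joined by a one-pixel-high horizontal stroke.
word = [
    [0, 1, 0, 0, 0, 1, 0],
    [0, 1, 0, 0, 0, 1, 0],
    [1, 1, 1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0, 0, 0],
]
print(vertical_projection(word))
print(estimate_baseline_row(word), thin_columns(word))
```

In cursive Arabic script most connecting strokes lie on or near the baseline, so low values in the vertical profile that coincide with the baseline row are a plausible cue for ligatures.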

Akallouch et al. [42] introduced the ASAYAR dataset, focusing on Moroccan highway traffic panels. It encompasses three primary categories: localization of Arabic–Latin scene text, detection of traffic signs, and identification of directional symbols. This study utilized DL techniques to precisely determine the positions of Arabic text, traffic signs, and directional symbols. TextBoxes++ [31], CTPN [32], and EAST [29] approaches were applied to the ASAYAR_TXT dataset. Experimental results demonstrated that EAST and CTPN achieved superior performance compared to TextBoxes++ in accurately localizing Arabic texts.

Moumen et al. [43] presented a real-time Arabic text localization method using a fully convolutional neural network (FCN). Their approach adopts a two-step framework based on the VGG-16 architecture. In the initial phase, they employed a scale-based region network (SPRN) to classify regions as either text or non-text. Subsequently, a text detector was utilized to accurately identify text within the predefined scale range, thereby delineating the text regions effectively.

Boujemaa et al. [44] introduced the ATTICA dataset, which includes images of traffic signs and panels from Arabic-speaking countries. The dataset is divided into two sub-datasets: ATTICA-Sign, focusing on traffic signs and boards, and ATTICA-Text. This study utilized DL techniques, specifically EAST and CTPN, to accurately locate Arabic text on traffic panels. Experimental results indicated that the EAST model outperformed the CTPN model in localizing Arabic text.

Boukthir et al. [45] created the TSVD, comprising 7000 images captured from various cities in Tunisia using the Google Street View platform. This study aimed to implement a deep active learning algorithm based on methodologies from Chowdhury et al. [47] and Yang et al. [48]. Their approach integrates CNNs with an active learning strategy to effectively identify Arabic text within natural-scene images. The research focusing on the localization of Arabic text in natural scene images is summarized in Table 4. However, several weaknesses exist in the current methods used for localizing Arabic text from natural scene images.

Table 4.

Studies of Arabic text localization in natural scene images.

- The majority of techniques for handling Arabic datasets, such as ASAYAR and ATTICA, primarily focus on horizontal Arabic text.

- Two studies were conducted by Moumen et al. [43] and Boukthir et al. [45], utilizing Arabic datasets containing text with curved formatting and various orientations. However, these methods struggle to accurately locate curved texts.

- Most of the aforementioned approaches target Arabic text only and do not address bilingual text combining Arabic and English. The study in [42], which uses the ASAYAR dataset, does localize bilingual text, but it is restricted to horizontal text.

- All of the above techniques employ the same pretrained CNN model, specifically VGG-16, for feature extraction from images.

3.1.2. English Scene Text Localization

Tian et al. [32] and Liao et al. [49] applied object detection frameworks to locate horizontal text in natural scene images and reported excellent performance. Tian et al. [32] introduced the CTPN, which detects text lines as sequences of fine-scale text proposals computed directly on convolutional feature maps. The CTPN model includes a vertical anchor mechanism that jointly predicts the location and the text/non-text score of each proposal. Liao et al. [49] introduced TextBoxes, a text detector based on an FCN. This method is known for its speed and accuracy, as it generates word-bounding box coordinates across multiple network layers by predicting text presence and offset coordinates relative to default boxes.

Subsequent research focused on the challenges of localizing multi-oriented English text in natural scene images and proposed various strategies to overcome them. Zhou et al. [29] developed EAST, a scene text detector known for its efficiency and accuracy. This detector uses a single FCN to directly predict multi-oriented text lines. The EAST approach simplifies the process by eliminating intermediate steps such as candidate aggregation and word division. Liao et al. [31] introduced TextBoxes++, an enhanced version of the TextBoxes model discussed in [49]. TextBoxes++ improves the localization of multi-oriented text in natural scene images by optimizing the network structure and training procedure. Shi et al. [50] introduced the SegLink method to localize oriented text in natural scene images. This method operates in two stages: segmentation, where rectangular boxes are placed over specific word or text line areas, and linking, which connects adjacent segments.

Currently, researchers are intensifying efforts to address the challenge of localizing curved English text in natural scene images and have proposed various models for this problem. Wang et al. [51] introduced the progressive scale expansion network (PSENet), which begins by identifying the smallest-scale text kernel within each text instance. It then gradually expands this kernel using breadth-first search to obtain the complete text instance. Wang et al. [2] introduced the pixel aggregation network (PAN), an efficient and accurate text detector with a low computational cost and a learnable post-processing method. The PAN model consists of a segmentation head built from the feature pyramid enhancement module (FPEM) and the feature fusion module (FFM), which are used to extract and fuse features at different depths. Pixel aggregation (PA) is a trainable post-processing technique that consolidates text pixels based on a predicted similarity vector. Zhang et al. [33] introduced the LOMO system, which uses segmentation techniques and an object identification framework to precisely locate curved text. LOMO consists of three modules: the direct regressor generates text proposals in quadrangle shapes; the iterative refinement module gradually improves these proposals to determine the complete text extent; and the shape expression module enhances accuracy by taking into account geometric text characteristics such as region, center, and border offset. Baek et al. [52] introduced character region awareness for text detection (CRAFT), a method designed to automatically identify and locate each character region. Using CNNs, CRAFT generates region scores and affinity scores. The region score localizes each individual character, while the affinity score links neighboring characters so that they can be grouped into a single text instance. Dai et al. [53] introduced progressive contour regression (PCR) for precise detection of curved text. PCR first generates horizontal text proposals by estimating center points and sizes, then evolves these horizontal proposals into oriented text proposals by predicting their corner points. Finally, PCR transforms the oriented text proposals into curved text contours. Ye et al. [54] presented the dynamic point text detection transformer network (DPText-DETR). Unlike methods based on traditional bounding boxes, DPText-DETR utilizes explicit point query modeling, which directly employs point coordinates as positional queries. The model also introduces the enhanced factorized self-attention module to model the circular shape of polygon point sequences.

These methods were developed to address the varied challenges in localizing English text from natural scene images, such as horizontal, multi-oriented, and curved text. However, localizing Arabic text and bilingual Arabic–English text presents additional challenges that require further research and development in this field.

3.2. Text Recognition from Natural Scene Images

The process of end-to-end text recognition typically involves a second step known as STR. This section provides an overview of the techniques used to recognize Arabic text and English text in natural scene images.

3.2.1. Arabic Scene Text Recognition

Tounsi et al. [37] proposed an approach for character recognition from natural images using a bag of features, which utilizes spatial pyramid matching (SPM) to handle variations in text. Initially, local features are extracted using SIFT and represented via sparse coding. SPM divides the image into sub-regions and computes histograms describing local features for each region. These histograms are aggregated to represent the features of the entire image. SVMs are employed for individual character classification and recognition. The approach was evaluated on the Arabic scene text character dataset, which includes 260 manually segmented images of characters categorized into 100 classes of 28 Arabic characters in various positions. The experiments involved three different sample configurations: (1) training and testing with five samples per class (5-Arabic characters), (2) training with 15 samples per class and testing with five samples per class (15-Arabic characters), and (3) training and testing with 15 samples per class (15-Glyphs), similar to the 15-Arabic character setup. The results indicate nearly equivalent accuracy in recognizing both characters and glyphs, with slight variation.

Ahmed et al. [55] presented a method for recognizing Arabic characters that have been manually segmented from images. The proposed model preprocesses the images by resizing them to a standard size and converting them to grayscale. Arabic characters have variations depending on their position within a word (beginning, middle, end, isolated), and the model aims to accommodate these variations by considering five different orientations at various angles for each segmented character. The approach uses a ConvNet to extract features of the characters, followed by classification using fully connected layers. The experiments were conducted on Arabic images obtained from the English–Arabic Scene Text (EAST) dataset, which consists of 250 images containing a total of 2700 segmented characters across 27 classes. The findings indicate improved accuracy and high performance of ConvNets when trained on a large and diverse dataset.

Jain et al. [56] demonstrated the recognition of Arabic text in natural images at the word level using a hybrid CNN–RNN network. In this approach, a CNN extracts a sequence of feature vectors from the feature maps of the input image. These feature vectors are then processed by a bidirectional LSTM (BLSTM) network, which produces a prediction for each feature vector. The final layer is the transcription layer, which uses the CTC technique to convert the BLSTM’s predictions into sequences of characters. The method was applied to a dataset consisting of 2000 words of Arabic script collected from various locations on Google Images. The performance of the CNN–RNN approach was evaluated using two metrics: character recognition rate (CRR) and word recognition rate (WRR). Leveraging the RNN’s ability to capture contextual dependencies, the approach achieved high recognition performance.

Alsaeedi et al. [57] introduced a method for recognizing Arabic texts in natural images using a combination of two neural networks. This approach incorporates a novel segmentation technique that applies a normalization filter to the image and detects characters through vertical and horizontal scanning. CNN is used to extract features and classify the characters. In addition, a transparent neural network (TNN) is employed to validate character recognition against a dictionary, specifically for identifying place names. The effectiveness of the method was evaluated using three different character fonts: Calibri, Aldhabi, and Al-Andalus.

Due to the lack of a dedicated Arabic dataset, Ahmed et al. [40] developed the English–Arabic Scene Text Recognition 42k (EASTR-42K) dataset for Arabic scene images. Their approach recognizes Arabic words from these images using MSER and SIFT for feature extraction, applied to two forms of the input image (a binary image and a mask image). In the recognition phase, Multidimensional Long Short-Term Memory (MDLSTM) serves as the learning classifier, with CTC used to predict and recognize words. The experimental study utilized 1500 scene text images segmented into words, evaluating the technique’s performance using precision, recall, and F-measure metrics.

Ahmed et al. [58] proposed a method for extracting hybrid features from Arabic text. Their technique starts by detecting the extremal regions of text in an image using MSER, applied to both binary and mask images. They then identify invariant features that are common to both images using SIFT. In the recognition phase, MDLSTM is used to learn from the sequence of features extracted in the previous phase. The experiment was conducted on the Arabic Scene Text Recognition (ASTR) dataset, which consists of 13,593 words segmented from images.

Hassan et al. [10] introduced the EvArEsT dataset, which includes Arabic and English text. They evaluated nine different approaches for recognizing Arabic text by applying methods originally designed for Latin text to Arabic within the EvArEsT dataset. These methods include convolutional recurrent neural network (CRNN), robust text recognizer with automatic rectification (RARE), R2AM, STARNET, gated recurrent convolutional network (GRCNN), Rosetta, WWSTR, multi-object rectified attention network (MORAN), and SCAN. Among these, WWSTR demonstrated the highest accuracy in recognizing Arabic text. Table 5 provides a comprehensive summary of studies focused on recognizing Arabic characters in natural scene images. It categorizes the methods used into four stages of text recognition: preprocessing, feature extraction, sequence modeling, and prediction. These studies highlight various gaps and challenges in current Arabic text recognition methods.

Table 5.

Studies of Arabic text recognition in natural scene images.

- Currently, there is no research on an end-to-end system for recognizing scene text that can localize and recognize bilingual Arabic and English text. Most studies treat localization and recognition as separate processes.

- Although current studies are successful in recognizing Arabic text in horizontal forms, there are still difficulties in recognizing multi-oriented and curved Arabic text.

- A study [10] attempted to address the recognition of Arabic and English text from natural scene images but did not employ an end-to-end approach.

- Researchers have not yet investigated the use of the LSTM model with an attention mechanism specifically for Arabic text recognition.

Due to the inherent difficulties in localizing and recognizing Arabic text within natural scene images, there exists a substantial need for further research into developing comprehensive end-to-end STR systems. These systems should be equipped to effectively identify and localize Arabic text, especially in scenarios involving multi-oriented and curved text, and extend to the recognition of bilingual text.

3.2.2. English Scene Text Recognition

Three main categories broadly define the field of English STR: character-based text recognition, CTC-based text recognition, and attention-based text recognition. Character-based text recognition involves the initial recognition of individual characters, followed by their assembly into words. For instance, Bissacco et al. [59] introduced PhotoOCR, a system that utilizes a deep neural network specifically designed for individual character recognition.

The text recognition approach that utilizes CTC typically involves stacking RNNs on top of CNNs to effectively capture long-term sequence information. The model is trained using the CTC loss function. Liu et al. [60] introduced the spatial attention residue network, which incorporates a residual network with a spatial attention mechanism. This model consists of three main components: a spatial transformer, a residual feature extractor, and CTC. The spatial transformer uses spatial attention to transform loosely bound and distorted text regions into tightly bound and rectified ones. The residual feature extractor utilizes residual convolutional blocks and integrates LSTM for feature extraction. Shi et al. [61] introduced the CRNN model, which combines deep convolutional neural networks with RNNs. CRNN employs convolutional layers for feature extraction and recurrent layers for making predictions from each frame. Finally, the transcription layer converts the per-frame predictions from the recurrent layer into a sequence of labels. Wang et al. [62] introduced the GRCNN for text recognition. This method follows a three-step approach: GRCNN performs feature extraction, LSTM handles sequence modeling, and CTC facilitates text prediction through transcription. Borisyuk et al. [63] proposed Rosetta, a scalable OCR system consisting of two stages: text detection and text recognition. Text detection utilizes the Faster-RCNN model to accurately locate text regions, while a fully convolutional model predicts character sequences using CTC.
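To make the transcription step concrete, the following minimal Python sketch shows greedy CTC decoding, in which consecutive repeated per-frame labels are collapsed and blank symbols are then dropped. The function name `ctc_greedy_decode`, the `-` blank symbol, and the toy frame sequence are illustrative assumptions and are not taken from any of the cited systems, which may instead use beam search or lexicon-constrained decoding.

```python
def ctc_greedy_decode(frame_labels, blank="-"):
    """Collapse consecutive repeats, then drop blanks (greedy CTC decoding)."""
    decoded = []
    previous = None
    for label in frame_labels:
        # Emit a label only when it differs from the previous frame
        # and is not the blank symbol.
        if label != previous and label != blank:
            decoded.append(label)
        previous = label
    return "".join(decoded)


# Hypothetical per-frame argmax labels produced by a recurrent layer.
frames = list("hh-e-ll-lo")
word = ctc_greedy_decode(frames)  # "hello"
```

The blank between the two runs of `l` is what allows the doubled letter to survive the collapsing step.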

In attention-based text recognition, Shi et al. [64] introduced RARE for recognizing irregular text. The RARE model incorporates a spatial transformer network (STN) using thin-plate spline to rectify and enhance text readability within images. The recognition system employs an attention-based framework with an encoder–decoder architecture. Lee et al. [65] developed the recursive recurrent neural network with attention model (R2AM). R2AM uses a recursive CNN for efficient and accurate image feature extraction. It employs a soft-attention mechanism to effectively utilize image features in a coordinated manner. Luo et al. [3] introduced the MORAN, which consists of two main components: a multi-object rectification network (MORN) and an attention-based sequence recognition network (ASRN). The MORN corrects irregular text images, which facilitates recognition by the ASRN. The ASRN includes a CNN-LSTM architecture followed by an attention decoder for text recognition. The model is trained using weak supervision to learn image part offsets. Zhan et al. [66] presented ESIR, an STR system aimed at reducing perspective distortion and text line curvature to enhance recognition performance. ESIR includes iterative rectification and recognition networks. Iterative rectification adjusts input images using transformation parameters, while the recognition network employs a sequence-to-sequence model with an attention mechanism. The SCAN model proposed by Hassan et al. [67] aims to accurately detect and classify characters, followed by word generation using a sequential approach. This system consists of two main modules: one for character prediction based on semantic segmentation using the high-resolution network (HRNet), and another for word generation employing an encoder–decoder network. The encoder employs a series of convolutional layers, while the decoder utilizes LSTM to produce the final results. Cheng et al. [68] introduced the length-insensitive scene text recognizer (LISTER), designed to accurately recognize text of varying lengths in natural scene images. To obtain character-specific attention maps, LISTER employs a neighbor decoder. The Feature Enhancement Module is incorporated to capture long-range dependencies with minimal computational cost.

Recent advancements have addressed various challenges in the recognition of English text from natural scene images. Some methods incorporate rectification techniques to precisely adjust text orientation, thereby enhancing their ability to handle multi-oriented and curved English text. These advancements not only improve model performance but also optimize computational efficiency. In contrast, recognizing Arabic text poses more complex challenges compared to English due to the unique characteristics of Arabic characters. Moreover, recognizing bilingual Arabic and English text requires further research to address the distinct challenges of processing two languages within a single model. In our study, we employed an end-to-end STR approach to tackle these issues. Our method utilizes two RNN models and an attention mechanism without relying on rectification methods.

3.3. End-to-End Scene Text Recognition

The end-to-end STR system operates as a unified network capable of both localizing and recognizing text in a single pass, eliminating the need for intermediate processes such as image cropping, word segmentation, or character recognition [11]. Recent advancements in this system have primarily focused on localizing and recognizing multi-oriented and curved English text within natural scene images. However, there has been comparatively less emphasis on addressing Arabic text and bilingual text within the same context. In this section, we present the latest advancements in end-to-end STR approaches specifically tailored for localizing and recognizing multi-oriented and curved Latin script in natural scene images.

Liu et al. [69] introduced ABCNet v2, an end-to-end system designed to localize and recognize multi-oriented and curved scene text using parameterized Bezier curves. In the localization phase, the system employs a Bezier curve detector, which introduces little computational overhead compared to traditional rectangular bounding box methods. For recognition, ABCNet v2 utilizes BezierAlign, a feature alignment method that connects the localization and recognition outcomes. Zhang et al. [70] proposed TESTR, another end-to-end method for text localization and recognition that eliminates the need for region of interest (RoI) operations or post-processing. TESTR operates in two stages: first, a multiscale deformable attention mechanism generates multiscale feature maps; then, dual decoders are employed, one for text localization and another for recognition. SwinTextSpotter, developed by Huang et al. [5], uses the Swin transformer for both text localization and recognition in an end-to-end system. Wang et al. [21] introduced PAN++, an end-to-end STR system that employs pixel-based representation and PA techniques. Kittenplon et al. [71] presented TextTranSpotter (TTS), a framework for end-to-end STR. TTS integrates a transformer-based architecture with encoder–decoder structures and task-specific heads for localization, recognition, and segmentation. Huang et al. [72] proposed ESTextSpotter, a state-of-the-art approach for end-to-end STR. ESTextSpotter leverages explicit synergy between text localization and recognition processes. UNITS, developed by Kil et al. [73], is a model for end-to-end STR that treats the problem as a sequence generation task. This model supports various localization formats (points, bounding boxes, quadrilaterals, and polygons) by integrating them into a unified interface. Ye et al. [74] introduced DeepSolo, a method inspired by DETR. DeepSolo combines text localization and recognition into a single process using a single decoder. It uses an explicit point representation for text lines, employing ordered points on Bezier center curves. This approach improves detection and recognition by modeling queries with attributes such as position, offset, and category. Das et al. [75] introduced the Swin-TESTR model for end-to-end STR. This model is specifically designed to handle regular text, multi-oriented text, and curved text. Swin-TESTR utilizes a transformer-based architecture with a Swin Transformer backbone. This design enables the extraction of multi-scale features from input images, thereby improving its capability to capture intricate text details across different domains and orientations.

In our research, our goal is to create a stronger end-to-end system for localizing and recognizing text in scenes. This system should be able to locate and identify Arabic-only and bilingual Arabic–English text in natural scene images. Our approach is influenced by PAN++ [21], a recent end-to-end system for localizing and recognizing multi-oriented and curved English text in natural scene images that achieved superior accuracy and the fastest inference speed compared with other state-of-the-art methods. PAN++ describes each text line with a kernel representation, which allows text to be localized quickly and accurately by a single fully convolutional network and makes the approach well suited to real-time applications.

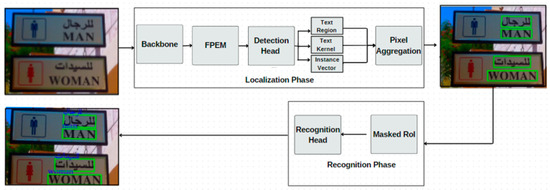

4. Proposed Methodology

Recognizing bilingual Arabic and English text from natural scene images in an end-to-end STR system involves two main phases: scene text localization and STR. Figure 5 illustrates the architectural representation of our end-to-end system, which is inspired by the PAN++ model [21]. In our approach, we selected the EfficientNetV2 model for feature extraction and compared its performance with the ResNet model. During the recognition phase, we utilized the BiLSTM model to handle the recognition of bilingual Arabic and English text, assessing its performance against the LSTM model. Furthermore, we integrated the AraElectra Arabic language model as a post-processing step to enhance the accuracy of recognizing Arabic text from natural scene images. For scene text localization, our system utilizes the kernel representation of PAN++ to precisely locate text regions within an image. Initially, we extract features from the image using a pretrained CNN model and employ methods like the feature pyramid network. The backbone network is enhanced with the Feature Pyramid Enhancement Module (FPEM), which integrates multiple feature pyramid modules to deepen the network and improve feature expression. The PAN++ stage then uses CNN models in the detection head to predict the text region, text kernel, and instance vector, aggregating these predictions with Pixel Aggregation (PA) to accurately localize the final text regions. In the recognition phase, a masked RoI extracts feature patches from the detected text lines. The recognition head includes two layers of the LSTM model followed by a multi-head attention mechanism, ensuring accurate recognition of the text content.

Figure 5.

Phases of the proposed end-to-end scene text recognition system; image from ICDAR2017 [39]. The green boxes illustrate the result of the localization phase. The text in blue illustrates the result of the recognition phase.

4.1. Localization Phase

The following subsections explain each step of the localization phase.

4.1.1. Backbone Stage

In our research, we used a pretrained CNN model as the backbone for feature extraction. More specifically, we examined two backbone models for extracting features from bilingual Arabic and English text: ResNet [76] and EfficientNetV2 [77]. Although PAN++ uses ResNet as its default backbone, our study aimed to investigate the performance of EfficientNetV2, another pretrained CNN model. Our objective was to train and assess the effectiveness of EfficientNetV2 in accurately localizing bilingual Arabic and English text within natural scene images.

- ResNet

He et al. [76] introduced ResNet, a DL model designed to address the ‘vanishing gradient’ issue through residual learning. Residual learning relies on shortcut connections that bypass one or more layers and perform identity mappings. Each shortcut adds the input of the skipped layers directly to their output, so the skipped layers only need to learn a residual. Importantly, this approach introduces neither extra parameters nor additional computational complexity. ResNet is structured as a residual network using convolutional layers, typically with 3 × 3 filters. It was trained on the ImageNet dataset, which comprises 1.28 million training images categorized into 1000 classes. A separate validation set of 50,000 images was used during the training process. ResNet was developed with depths ranging from 18 to 152 layers, demonstrating its scalability and efficacy in various DL tasks.
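The identity shortcut can be illustrated with a minimal Python sketch that computes F(x) + x for a toy feature vector. The helper names `residual_block`, `zero_layers`, and `toy_layers` are hypothetical stand-ins for the stacked convolutional layers; the sketch is not the ResNet implementation used in this work.

```python
from typing import Callable, List

Vector = List[float]


def residual_block(x: Vector, residual_fn: Callable[[Vector], Vector]) -> Vector:
    """Return F(x) + x: the learned residual plus the identity shortcut."""
    fx = residual_fn(x)
    if len(fx) != len(x):
        raise ValueError("the identity shortcut requires matching shapes")
    return [f + xi for f, xi in zip(fx, x)]


def zero_layers(x: Vector) -> Vector:
    # Hypothetical stacked layers whose weights are all zero.
    return [0.0 for _ in x]


def toy_layers(x: Vector) -> Vector:
    # Hypothetical stacked layers: scale by 0.5, then apply ReLU.
    return [max(0.0, 0.5 * v) for v in x]


x = [1.0, -2.0, 3.0]
identity_out = residual_block(x, zero_layers)  # equals x
toy_out = residual_block(x, toy_layers)        # [1.5, -2.0, 4.5]
```

When the skipped layers output zeros, the block reduces to the identity mapping, and the shortcut itself adds no parameters.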

- EfficientNetV2

Several techniques are commonly used in DL to improve the performance of neural networks. These methods usually involve increasing the depth of the network, widening the network, or enlarging the input images. However, the accuracy gained by increasing network depth alone is limited, because deeper networks are prone to exploding or vanishing gradients and also require more storage capacity. Widening the network allows it to capture more fine-grained features, but wide and shallow networks have difficulty capturing higher-level features. Alternatively, increasing the resolution of the input images enables the model to capture more intricate features, but this also increases the computational demands and slows down training. EfficientNet [78] balances these trade-offs by scaling depth, width, and resolution together.

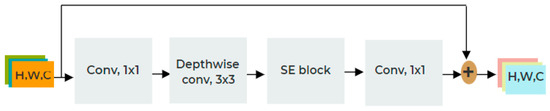

EfficientNet is a CNN model that uses neural architecture search to develop a baseline network, which is then scaled up to create a series of models. This family includes EfficientNet-B0 through EfficientNet-B7, each scaled using specific parameters. The main building block used is the mobile inverted bottleneck MBConv [79,80], which incorporates squeeze-and-excitation optimization [81]. Tan et al. [77] introduced EfficientNetV2 to improve training speed compared to EfficientNetV1. During the development of EfficientNetV1, researchers identified challenges that could slow down training and reduce model efficiency. Training with large images can result in increased memory usage and slower training times. EfficientNetV1’s architecture incorporates MBConv, which utilizes depth-wise convolution [82] (as shown in Figure 6 and inspired by [77]). Depthwise convolutions are CNN layers with fewer parameters and floating-point operations than standard convolutions, although they may not fully take advantage of modern accelerators, potentially slowing down training in early layers. EfficientNetV1 employs a straightforward compound scaling approach to uniformly increase all network stages. For example, when the depth coefficient is 2, each stage within the network doubles in the number of layers.
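The depth-scaling example above can be sketched in a few lines of Python. The per-stage layer counts below are those commonly reported for the MBConv stages of EfficientNet-B0 and are used for illustration only; the helper name `scale_stage_depths` and the round-up rule are assumptions rather than details taken from this paper.

```python
import math


def scale_stage_depths(base_layers, depth_coefficient):
    """Scale the number of layers in every stage by the same coefficient."""
    # Rounding up keeps at least one layer per stage (an assumption).
    return [int(math.ceil(depth_coefficient * n)) for n in base_layers]


# Illustrative per-stage layer counts for a baseline network.
base_layers = [1, 2, 2, 3, 3, 4, 1]

doubled = scale_stage_depths(base_layers, 2.0)  # every stage doubles
mild = scale_stage_depths(base_layers, 1.2)     # non-integer results round up
```

With a depth coefficient of 2, every stage doubles, which is the uniform behavior that EfficientNetV2 later relaxes.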

Figure 6.

Structure of MBConv. “+” means element-wise addition. “Conv” and “SE” represent regular convolution and Squeeze and Excitation optimization, respectively.

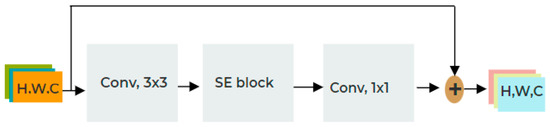

EfficientNetV2 improves the training speed of EfficientNetV1 by replacing MBConv in the early stages with Fused-MBConv [83], as shown in Figure 7 and inspired by [77]. Fused-MBConv is designed to make better use of mobile and server accelerators. It replaces the depth-wise conv3 × 3 and expansion conv1 × 1 in MBConv with a single regular conv3 × 3. The EfficientNetV2-S architecture combines two types of building blocks: MBConv and Fused-MBConv. Its stages differ in the number of layers and kernel sizes, providing options like 3 × 3 and 5 × 5 kernel sizes, as well as expansion ratios of 1, 4, and 6.
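As a rough illustration of the trade-off, the sketch below counts convolution weights in one MBConv block and one Fused-MBConv block. It ignores biases, batch normalization, and the SE branch, and the channel sizes (24 in, 24 out, expansion ratio 4) are hypothetical values chosen for the example, not the configuration used in this work.

```python
def mbconv_weights(c_in, c_out, expand, k=3):
    """Weights in MBConv: 1x1 expansion, k x k depthwise, 1x1 projection."""
    c_mid = c_in * expand
    expansion = c_in * c_mid    # 1x1 conv: c_in -> c_mid
    depthwise = k * k * c_mid   # one k x k filter per expanded channel
    projection = c_mid * c_out  # 1x1 conv: c_mid -> c_out
    return expansion + depthwise + projection


def fused_mbconv_weights(c_in, c_out, expand, k=3):
    """Weights in Fused-MBConv: one regular k x k conv, then 1x1 projection."""
    c_mid = c_in * expand
    fused = k * k * c_in * c_mid  # regular conv replaces expansion + depthwise
    projection = c_mid * c_out
    return fused + projection


mb = mbconv_weights(24, 24, 4)           # 5472
fused = fused_mbconv_weights(24, 24, 4)  # 23040
```

The fused block has more weights than MBConv, so its benefit comes from better accelerator utilization rather than a smaller model, which is consistent with using it only in the early stages.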

Figure 7.

Structure of Fused-MBConv. “+” means element-wise addition. “Conv” and “SE” represent regular convolution and Squeeze and Excitation optimization, respectively.

4.1.2. Feature Pyramid Enhancement Module (FPEM) Stage

During this phase, the backbone network generates four feature maps from the conv2, conv3, conv4, and conv5 stages, at 1/4, 1/8, 1/16, and 1/32 of the input image resolution, respectively. We then apply 1 × 1 convolutions to reduce the channel dimension of each feature map to 128, creating a compact feature pyramid. The FPEM, structured in a U-shape, enhances features in two phases: up-scale enhancement and down-scale enhancement. The up-scale phase iteratively enhances the feature maps with strides of 32, 16, 8, and 4 pixels. The enhanced features serve as input to the down-scale phase, which refines them further in the reverse order, from a stride of 4 to a stride of 32 pixels. The final feature map obtained from the FPEM stage has a size of H/4 × W/4 × 512.
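The shape bookkeeping of this stage can be checked with a short Python sketch. It assumes that the four 128-channel maps are upsampled to the 1/4 resolution and concatenated along the channel axis, which is one way to arrive at the stated 512 channels; the function names and the 640 × 640 input size are hypothetical.

```python
def pyramid_shapes(height, width, strides=(4, 8, 16, 32), channels=128):
    """Shapes (H, W, C) of the reduced feature pyramid, one map per stride."""
    return [(height // s, width // s, channels) for s in strides]


def fused_shape(shapes):
    """Shape after upsampling every map to the finest level and concatenating."""
    finest_h, finest_w, _ = shapes[0]
    total_channels = sum(c for _, _, c in shapes)
    return (finest_h, finest_w, total_channels)


shapes = pyramid_shapes(640, 640)
final = fused_shape(shapes)  # (160, 160, 512), i.e. H/4 x W/4 x 512
```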

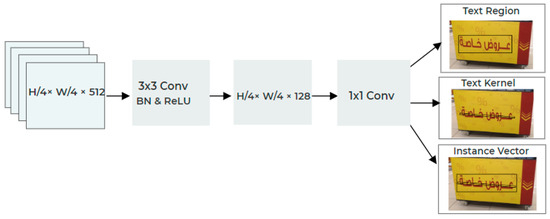

4.1.3. Detection Head

The FPEM provides the detection head with a feature map of dimensions H/4 × W/4 × 512. Figure 8, inspired by [21], illustrates the structure of the detection head. The first layer applies a regular 3 × 3 convolution [84] to this feature map, followed by batch normalization and a ReLU activation function, and reduces the channel dimension to 128. The processed feature map then passes through a second convolution with a 1 × 1 kernel to generate the final output. The detection head produces three outputs: text regions, text kernels, and instance vectors. The text region outlines the spatial boundary of an entire text line. The text kernel distinguishes neighboring instances by identifying the center of each text line, independent of its shape. The instance vectors guide the combination of text regions and kernels so that complete text lines can be reconstructed.

Figure 8.

Structure of the detection head. “Conv” and “BN” represent regular convolution and Batch Normalization, respectively. The words in images mean “Special Offers” in English terms.

4.1.4. Pixel Aggregation

Pixel aggregation (PA) refines the output of the detection head by applying a clustering method to the instance vectors. PA assigns each text pixel to a suitable text kernel and treats separate text lines as different clusters. The text kernels serve as the cluster centers, while the pixels within the text regions are the samples to be clustered around them. The goal of this process is to precisely locate bilingual Arabic and English text by efficiently gathering pixels around the identified cluster centers.
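The clustering idea can be sketched as follows. This is a simplified illustration, not the implementation used in this work: pixels are given as flat lists, the function name is hypothetical, and the distance threshold `max_dist` is an assumed parameter.

```python
import math


def aggregate_pixels(text_mask, kernel_labels, vectors, max_dist=6.0):
    """Assign each text pixel to the nearest text kernel in instance-vector space.

    text_mask: list of bool, True where a pixel lies in a text region.
    kernel_labels: list of int, kernel id per pixel (0 = not part of any kernel).
    vectors: list of tuples, the instance vector predicted for each pixel.
    Returns one text-line id per pixel (0 = background or unassigned).
    """
    # Step 1: the cluster center of each kernel is the mean of its instance vectors.
    sums, counts = {}, {}
    for label, vec in zip(kernel_labels, vectors):
        if label == 0:
            continue
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    centers = {k: tuple(s / counts[k] for s in acc) for k, acc in sums.items()}

    # Step 2: kernel pixels keep their id; other text pixels join the nearest center.
    result = []
    for is_text, label, vec in zip(text_mask, kernel_labels, vectors):
        if label != 0:
            result.append(label)
        elif not is_text or not centers:
            result.append(0)
        else:
            best = min(centers, key=lambda k: math.dist(tuple(vec), centers[k]))
            close_enough = math.dist(tuple(vec), centers[best]) <= max_dist
            result.append(best if close_enough else 0)
    return result
```

With two kernels whose instance vectors are far apart, text pixels fall into the cluster of the nearer kernel, and pixels whose vectors are far from every center stay unassigned.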

4.2. Recognition Phase

The following subsections explain each step of the recognition phase.

4.2.1. Masked Region of Interest (RoI)

The masked RoI is a tool designed to extract feature patches of a specified size from text lines that may have varying shapes. This process involves four sequential steps. First, it determines the smallest bounding rectangle that encompasses the target text line in an upright position. Second, it extracts the feature patch from within this upright bounding rectangle. Third, a binary mask is applied to the feature patch to filter out noise features. This mask assigns a weight of 0 to areas outside the target text line. Finally, the feature patch is resized to a consistent, predetermined size. The masked RoI offers two main advantages. First, by using a binary mask specific to the target text line, it effectively removes noise characteristics that could originate from the background or other text lines. This ensures precise feature extraction even from text lines with diverse shapes. Second, the technique eliminates the need for spatial rectification processes such as the STN.
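The four steps can be illustrated with a minimal sketch on a single-channel feature map stored as nested lists. The function name is hypothetical, and nearest-neighbour resizing is an assumption made to keep the example dependency-free; the interpolation used in this work may differ.

```python
def masked_roi(features, mask, out_h, out_w):
    """Extract a fixed-size feature patch for one text line.

    features: 2D list of numbers (one channel of the feature map).
    mask: 2D list of 0/1 with the same shape, 1 inside the target text line.
    """
    rows = [r for r, row in enumerate(mask) if any(row)]
    cols = [c for c in range(len(mask[0])) if any(row[c] for row in mask)]
    if not rows:
        raise ValueError("mask contains no text pixels")

    # Step 1: smallest upright bounding rectangle around the text line.
    top, bottom, left, right = rows[0], rows[-1], cols[0], cols[-1]

    # Steps 2 and 3: crop the patch and zero out features outside the text line.
    patch = [
        [features[r][c] * (1 if mask[r][c] else 0) for c in range(left, right + 1)]
        for r in range(top, bottom + 1)
    ]

    # Step 4: resize to the fixed output size (nearest neighbour).
    patch_h, patch_w = len(patch), len(patch[0])
    return [
        [patch[i * patch_h // out_h][j * patch_w // out_w] for j in range(out_w)]
        for i in range(out_h)
    ]


if __name__ == "__main__":
    features = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
    mask = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 0, 1, 1], [0, 0, 0, 0]]
    print(masked_roi(features, mask, 2, 3))  # [[6, 7, 0], [0, 11, 12]]
```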

4.2.2. Recognition Head

The recognition head is structured as a sequence-to-sequence model that uses a decoder with an attention mechanism and omits a separate encoder. The model is divided into two main stages: the starter and the decoder. Both stages are explained below.

- Starter Stage

The initial phase of the recognition head focuses on finding the starting point of the string (start of sequence, or SOS) within a text line that may not start in the leftmost position. This component consists of a linear transformation, an embedding layer ε1, and a multi-head attention layer A1. More specifically, the embedding layer ε1 converts the SOS symbol (represented as a one-hot vector) into a 128-dimensional vector.

- Decoder Stage

The decoder stage of the PAN++ model aims to utilize contextual information gathered from visual features obtained in the starter stage to predict the words presented in the text. In this stage, the PAN++ model incorporates two LSTM layers and one multi-head attention layer. Specifically, BiLSTM models with multi-head attention are used to facilitate the recognition of bilingual Arabic and English text.

- Long Short-Term Memory: LSTM is a widely recognized technique that effectively addresses challenges related to vanishing and exploding gradients [85]. Unlike a traditional recurrent unit that relies on a single ‘sigmoid’ or ‘tanh’ activation function, LSTM introduces memory cells equipped with gates to manage the flow of information in and out of the cells. These gates regulate how information is input to hidden neurons and preserve features from earlier time steps [86]. An LSTM cell consists of input, forget, and output gates, alongside a cell activation component. These elements receive activation signals from various sources and control cell activation using designated multipliers. LSTM gates prevent other network components from modifying memory cell contents across successive time steps. Compared to standard RNNs, LSTMs excel in retaining signals and transmitting error information over longer periods, making them highly effective in processing data with intricate dependencies across various sequence learning tasks [87].

- Bidirectional Long Short-Term Memory: BiLSTM, an extension of the bidirectional recurrent neural network (BiRNN) introduced in 1997 to enhance traditional RNNs [88], combines both forward and backward LSTM networks. During training, the forward LSTM network processes the input sequence in chronological order, while the backward LSTM network processes it in reverse. Both networks capture the context of the input sequence and extract crucial features [89]. The outputs of both the forward and backward LSTM networks are then combined to generate the final output of the BiLSTM network. By processing input data in both directions, BiLSTM captures additional contextual information compared to unidirectional LSTM models, enabling it to handle long-term dependencies more effectively and improve overall model accuracy [90].
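The gating mechanism and the bidirectional combination described above can be sketched with a scalar LSTM cell. This is a didactic illustration under simplifying assumptions (one-dimensional inputs and states, hypothetical weight names stored in a dictionary), not the recognition head used in this work.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, w):
    """One time step of a scalar LSTM cell."""
    i = sigmoid(w["wi"] * x + w["ui"] * h_prev + w["bi"])    # input gate
    f = sigmoid(w["wf"] * x + w["uf"] * h_prev + w["bf"])    # forget gate
    o = sigmoid(w["wo"] * x + w["uo"] * h_prev + w["bo"])    # output gate
    g = math.tanh(w["wg"] * x + w["ug"] * h_prev + w["bg"])  # candidate cell value
    c = f * c_prev + i * g   # keep part of the old memory, write part of the new
    h = o * math.tanh(c)     # expose a gated view of the memory cell
    return h, c


def run_lstm(xs, w):
    """Run the cell over a sequence and return the hidden state at every step."""
    h, c, outputs = 0.0, 0.0, []
    for x in xs:
        h, c = lstm_step(x, h, c, w)
        outputs.append(h)
    return outputs


def run_bilstm(xs, w_forward, w_backward):
    """Pair forward and backward hidden states for every position."""
    forward = run_lstm(xs, w_forward)
    backward = run_lstm(xs[::-1], w_backward)[::-1]  # re-align to input order
    return list(zip(forward, backward))
```

Each position in the output of `run_bilstm` carries one state that has seen the preceding inputs and one that has seen the following inputs, which is the additional context the BiLSTM provides over a unidirectional LSTM.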

AraELECTRA: Efficiently Learning an Encoder that Classifies Token Replacements Accurately (ELECTRA) introduces a sophisticated approach to self-supervised language representation learning [91]. This method involves training two neural networks: a generator (G) and a discriminator (D). Each network includes a bidirectional encoder, such as Small BERT. The generator performs masked language modeling by randomly masking out tokens in input sequences and training to predict the original tokens at those positions. Meanwhile, the discriminator is trained to distinguish between the original tokens and the replaced tokens produced by the generator, a technique known as replaced token detection. Formally, given a sequence of tokens $x = [x_1, x_2, \ldots, x_n]$, both G and D encode the input into a sequence of contextualized vector representations $h(x) = [h_1, h_2, \ldots, h_n]$. For a position $t$ at which $x_t$ has been replaced with [MASK], the generator calculates the probability of generating a particular token $x_t$. This is achieved using a softmax layer:

$$p_G(x_t \mid x) = \frac{\exp\left(e(x_t)^{T} h_G(x)_t\right)}{\sum_{x'} \exp\left(e(x')^{T} h_G(x)_t\right)}$$

where $e$ represents token embeddings.

The discriminator predicts whether the token xt is ‘real’ for a given position t, meaning that it originates from the data rather than the generator distribution, using a sigmoid output layer:

$$D(x, t) = \mathrm{sigmoid}\left(w^{T} h_D(x)_t\right)$$

While ELECTRA’s pretraining approach shares similarities with generative adversarial networks (GANs) [92], there are distinct differences. First, if the generator happens to produce the correct token, that token is treated as ‘real’ rather than ‘fake’, which improves performance on downstream tasks. Second, the ELECTRA generator is trained with maximum likelihood, whereas a GAN generator is trained adversarially to deceive the discriminator.
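The generator softmax and the discriminator sigmoid defined above can be evaluated numerically with a short sketch. The toy vocabulary, the two-dimensional vectors, and the function names are hypothetical and serve only to illustrate the two formulas.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def generator_probs(h_t, embeddings):
    """Softmax over e(x')^T h_G(x)_t for every token x' in the vocabulary."""
    scores = {token: dot(e, h_t) for token, e in embeddings.items()}
    shift = max(scores.values())  # subtract the maximum for numerical stability
    exps = {token: math.exp(s - shift) for token, s in scores.items()}
    total = sum(exps.values())
    return {token: value / total for token, value in exps.items()}


def discriminator_prob(h_t, w):
    """Sigmoid of w^T h_D(x)_t: probability that the token at position t is real."""
    return 1.0 / (1.0 + math.exp(-dot(w, h_t)))


if __name__ == "__main__":
    # Hypothetical token embeddings e(x') and a contextual vector h_G(x)_t.
    embeddings = {"cat": (1.0, 0.0), "dog": (0.0, 1.0), "car": (-1.0, 0.0)}
    probs = generator_probs((2.0, 0.5), embeddings)
    print(max(probs, key=probs.get))  # cat
```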

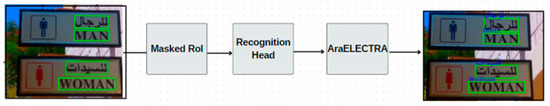

The AraELECTRA model [93] is designed for learning Arabic language representations. It is based on the ELECTRA architecture and aims to improve the understanding of Arabic text. It consists of a bidirectional transformer encoder with 136 million parameters, 12 encoder layers, 12 attention heads, and a hidden size of 768, and it supports a maximum input sequence length of 512 tokens. For pretraining, AraELECTRA used the same kind of dataset as AraBERTv0.2 [94], which includes approximately 8.8 billion words from various Arabic corpora, mainly composed of news articles. When evaluated on tasks such as sentiment analysis, Arabic question answering, and named-entity recognition, AraELECTRA outperformed other models that were trained on the same dataset but had larger model sizes. In our study, we incorporated the AraELECTRA model into the post-processing phase of the recognition pipeline to identify Arabic text from natural scene images. The phases of Arabic text recognition that include the AraELECTRA model are illustrated in Figure 9.

Figure 9.

The utilization of the AraELECTRA model in the recognition phase, image from ICDAR2017 [39]. The green box refers to the result of the localization phase. Text in blue illustrates the result of the recognition phase.

5. Experiments

We carried out two comprehensive experiments to evaluate the model’s ability to handle bilingual Arabic and English texts: (1) bilingual text localization, and (2) end-to-end bilingual Arabic and English STR. This section presents the datasets used, the evaluation metrics employed, and the specifics of training and implementation.

5.1. Datasets

The following subsections describe the Arabic scene text datasets, the English scene text datasets, and the dataset statistics.

5.1.1. Arabic Scene Text Datasets

We evaluated the model’s performance in localization and end-to-end STR using the ICDAR2017, ICDAR2019, and EvArEST datasets. Our research focused on identifying and recognizing bilingual Arabic–English texts in natural scene images. To accomplish this, we utilized images from the multi-lingual ICDAR2017 [39] and ICDAR2019 [41] datasets, which are currently the only publicly available datasets containing Arabic and bilingual Arabic–English text in natural scene images suitable for localization and recognition tasks. These datasets encompass a range of challenges commonly found in natural scene images, including complex backgrounds, varying resolutions, diverse text orientations, and multiple Arabic and English fonts. This diversity was crucial in achieving the objectives of our study. Importantly, our study represents the first known use of the EvArEST dataset for both localization and end-to-end tasks.

However, both ICDAR datasets lacked the accurate ground-truth data necessary to evaluate the performance of the model effectively. The authors of [41] suggest using online evaluation tools, but these tools typically evaluate all languages within the dataset rather than specific ones. Therefore, we selected approximately 30% of the bilingual Arabic and English testing images from ICDAR2017 and ICDAR2019 and used the online annotation tool Label Studio at https://labelstud.io/ (accessed on 11 April 2024) to create our own ground-truth data. Each word in the annotated images was outlined with a four-point polygon instead of a rectangle to accurately represent irregular text shapes. The polygon vertices were defined starting from the top-left corner of the word and proceeding clockwise. The annotation format for the images followed a structure similar to that used in the ICDAR datasets [95,96]. For each image, a corresponding text file contained three components: the four-point polygon outlining the word, the language of the word, and the text itself, as shown in Figure 10. Table 6 summarizes the statistics of the bilingual Arabic–English datasets and the English-only datasets.

Figure 10.

An example of an image and its ground-truth format from the ICDAR2019 dataset [41].
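A ground-truth file of this kind can be read with a few lines of code. The sketch below assumes a comma-separated line layout of eight integer coordinates, then the language, then the text, in the style of the ICDAR multi-lingual annotations; the exact layout used in this work may differ, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class WordAnnotation:
    polygon: List[Tuple[int, int]]  # four (x, y) vertices, clockwise from top-left
    language: str
    text: str


def parse_annotation_line(line: str) -> WordAnnotation:
    """Parse one line of the assumed form x1,y1,x2,y2,x3,y3,x4,y4,language,text."""
    # Split at most 9 times so that commas inside the transcription are preserved.
    parts = line.rstrip("\n").split(",", 9)
    if len(parts) != 10:
        raise ValueError(f"expected 10 comma-separated fields, got {len(parts)}")
    coords = [int(value) for value in parts[:8]]
    polygon = list(zip(coords[0::2], coords[1::2]))
    return WordAnnotation(polygon=polygon, language=parts[8], text=parts[9])


if __name__ == "__main__":
    # Hypothetical annotation line, not taken from the dataset.
    word = parse_annotation_line("10,20,110,20,110,60,10,60,Latin,Special Offers")
    print(word.polygon, word.language, word.text)
```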

Table 6.

Datasets’ statistics.

5.1.2. English Scene Text Datasets

To train our model to recognize bilingual Arabic and English text, we combined publicly available benchmark English datasets with the Arabic text datasets described above. Specifically, we selected the most widely used English datasets containing natural scene images with varying levels of complexity. The English datasets used for training are as follows:

- ICDAR 2015 [96]: This dataset was collected over several months in Singapore and contains 1670 images with 17,548 annotated regions. It is one of the most comprehensive publicly available datasets with complete ground truth for Latin-scripted text. Out of these images, 1500 are publicly accessible, divided into a training set of 1000 images and a test set of 500 images.

- COCO-Text [97]: COCO-Text, which is built on the Microsoft COCO dataset, serves as the largest benchmark for text localization and recognition. It includes 173,589 text instances from 63,686 images, encompassing handwritten and printed text in both clear and blurry conditions, as well as English and non-English texts. The dataset is composed of 43,686 training images and 20,000 testing images.

- Total-Text [98]: The Total-Text dataset is designed for the localization and recognition of Latin text in various forms, including curved, multi-oriented, and horizontal text lines. It consists of 1255 training images and 300 testing images, annotated with polygons at the word level, primarily obtained from street billboards.

5.2. Evaluation Metrics

The localization of bilingual Arabic–English texts in natural scene images was evaluated using three metrics: precision, recall, and F-score. Additionally, end-to-end STR was evaluated based on accuracy. The evaluation protocol follows that of ICDAR2015 [96] and relies on the intersection over union (IoU) metric. This protocol aligns with the evaluation framework used in object detection tasks such as PASCAL VOC [99]. IoU measures the overlap between the predicted and ground-truth bounding boxes relative to their union, as represented in Equation (3). A prediction is classified as a True Positive (TP) if its IoU exceeds 0.5; otherwise, it is considered a False Positive (FP). A False Negative (FN) occurs when the model fails to predict any output for a specific region of an image.

The evaluation metrics were calculated as follows:

- Accuracy: This metric calculates the ratio of correctly predicted texts (True Positives, TP, and True Negatives, TN) to the total number of predicted texts, including both correct and incorrect predictions.

- Precision: Precision measures the proportion of correctly predicted bounding boxes (TP) relative to the total number of predicted bounding boxes (both TP and False Positives, FP).

- Recall: Recall quantifies the ratio of correctly predicted bounding boxes (TP) to the total number of expected results based on the ground truth of a dataset.

- F-score: The F-score represents the harmonic mean of precision and recall, providing a balanced measure of a model’s performance.

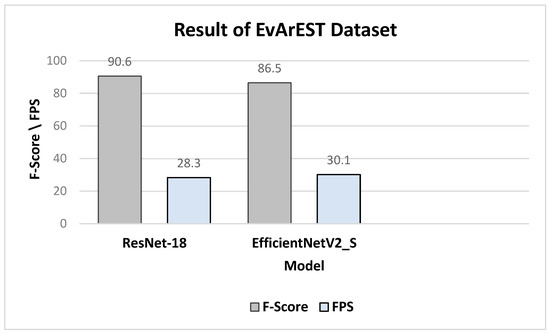

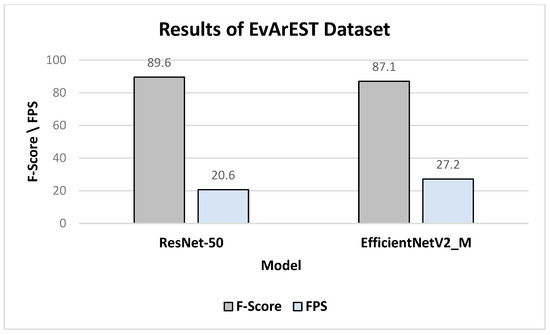

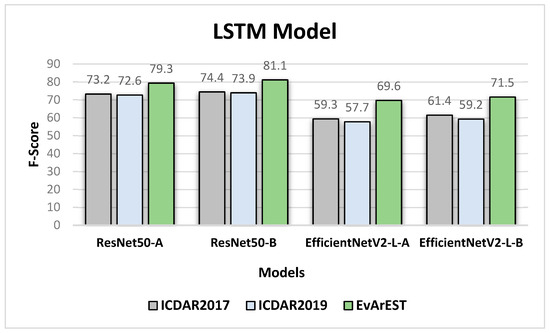

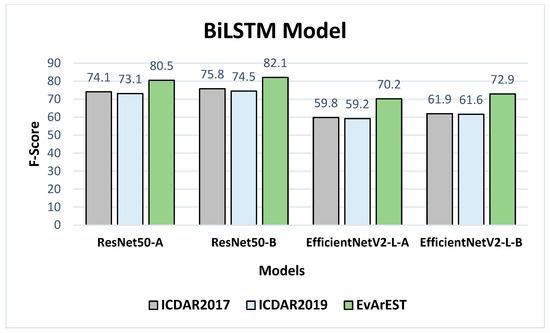
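The localization metrics can be computed as in the following minimal sketch. It makes two simplifying assumptions that are not part of the protocol described above: boxes are axis-aligned rectangles `(x1, y1, x2, y2)` instead of four-point polygons, and each prediction is greedily matched to at most one unmatched ground-truth box. The function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def localization_scores(predictions, ground_truths, threshold=0.5):
    """Return (precision, recall, f_score) using an IoU threshold for matching."""
    matched, tp = set(), 0
    for pred in predictions:
        best_index, best_iou = None, threshold
        for index, gt in enumerate(ground_truths):
            if index in matched:
                continue
            value = iou(pred, gt)
            if value > best_iou:  # a TP requires the IoU to exceed the threshold
                best_index, best_iou = index, value
        if best_index is not None:
            matched.add(best_index)
            tp += 1
    fp = len(predictions) - tp    # predictions without a matching ground truth
    fn = len(ground_truths) - tp  # ground-truth boxes that were never matched
    precision = tp / (tp + fp) if predictions else 0.0
    recall = tp / (tp + fn) if ground_truths else 0.0
    total = precision + recall
    f_score = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_score
```

For example, with three predictions of which two overlap a ground-truth box with an IoU above 0.5, and three ground-truth boxes in total, precision, recall, and F-score all equal 2/3.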

5.3. Training Details