Q8VaxStance: Dataset Labeling System for Stance Detection towards Vaccines in Kuwaiti Dialect

Abstract

1. Introduction

- How can we create a labeling system to annotate a large dataset of Kuwaiti dialect tweets for stance detection towards vaccines with or without help from subject matter experts (SMEs)?

- What experimental setup produces the best performance for the proposed labeling system?

2. Background

2.1. Vaccine Hesitancy and Stance Detection Using Social Network Analysis and Natural Language Processing

2.2. Natural Language Processing (NLP) of Kuwaiti Dialect

2.3. Dataset Labeling Approaches

- SMEs write labeling functions (LFs) that express weak supervision sources such as distant supervision, patterns, and heuristics.

- Snorkel applies the LFs on unlabeled data and learns a generative model to combine the LF outputs into probabilistic labels.

- Snorkel uses these labels to train a discriminative classification model such as a deep neural network.
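The first two steps above can be illustrated with a minimal, dependency-free Python sketch. It does not use Snorkel's API. The keyword lists are hypothetical: two Arabic phrases are borrowed from the label pairs in Table 2 purely as examples. A simple majority vote stands in for Snorkel's generative label model, which additionally estimates how accurate each labeling function is. The third step, training a discriminative classifier on the resulting labels, is omitted.

```python
from collections import Counter
from typing import Callable, List

# Label convention borrowed from Snorkel: -1 means "abstain".
ABSTAIN, ANTI, PRO = -1, 0, 1

# Hypothetical keyword lists, for illustration only.
PRO_KEYWORDS = ["مع التطعيم", "#pro_vaccine_example"]
ANTI_KEYWORDS = ["ضد التطعيم", "#anti_vaccine_example"]


def lf_pro_keywords(text: str) -> int:
    """Vote PRO if any pro-vaccine keyword occurs in the tweet, else abstain."""
    return PRO if any(k in text for k in PRO_KEYWORDS) else ABSTAIN


def lf_anti_keywords(text: str) -> int:
    """Vote ANTI if any anti-vaccine keyword occurs in the tweet, else abstain."""
    return ANTI if any(k in text for k in ANTI_KEYWORDS) else ABSTAIN


def apply_lfs(texts: List[str], lfs: List[Callable[[str], int]]) -> List[List[int]]:
    """Build the label matrix: one row per tweet, one column per LF."""
    return [[lf(t) for lf in lfs] for t in texts]


def majority_vote(row: List[int]) -> int:
    """Combine non-abstaining votes (simplified stand-in for a label model).

    Returns ABSTAIN when no LF voted or when the top two classes are tied.
    """
    votes = Counter(v for v in row if v != ABSTAIN)
    if not votes:
        return ABSTAIN
    ranked = votes.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]


tweets = ["نعم مع التطعيم", "أنا ضد التطعيم", "خبر عن الطقس"]
label_matrix = apply_lfs(tweets, [lf_pro_keywords, lf_anti_keywords])
weak_labels = [majority_vote(row) for row in label_matrix]
```

In Snorkel itself, the corresponding pieces are the `labeling_function` decorator and `PandasLFApplier` (from `snorkel.labeling`), and `LabelModel` or the `MajorityLabelVoter` baseline (from `snorkel.labeling.model`).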

3. Methodology

3.1. Dataset Collection

- We manually searched Twitter and collected specific keywords and hashtags associated with Kuwaiti people’s attitudes towards COVID-19 vaccines.

- We then used the online tool Communalytic [31], together with the Twitter Academic API, to search for historical tweets containing the keywords and hashtags collected in the previous step. The collection window spans from the start of the vaccination campaign in Kuwait to the lifting of all COVID-19 precautions (December 2020 to July 2022).
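The collection step amounts to matching keywords or hashtags within a date window. The sketch below applies that filter locally to tweet records that have already been downloaded. It does not call the Twitter API or Communalytic. Several details are assumptions: the record fields (`text`, `created_at`), the placeholder search terms (the actual keywords and hashtags are not listed here), and the exact start and end days (the text gives only months).

```python
from datetime import date
from typing import Dict, Iterable, List

# Assumed boundaries: the text states only "December 2020 to July 2022".
START, END = date(2020, 12, 1), date(2022, 7, 31)

# Hypothetical search terms standing in for the collected keywords/hashtags.
SEARCH_TERMS = ["#vaccine_keyword_example", "التطعيم"]


def matches(text: str, terms: List[str]) -> bool:
    """Case-insensitive substring match against any keyword or hashtag."""
    lowered = text.lower()
    return any(term.lower() in lowered for term in terms)


def select_tweets(records: Iterable[Dict], terms: Iterable[str]) -> List[Dict]:
    """Keep records dated inside [START, END] whose text mentions a search term."""
    term_list = list(terms)
    return [
        r for r in records
        if START <= r["created_at"] <= END and matches(r["text"], term_list)
    ]


sample = [
    {"text": "أخذت التطعيم اليوم", "created_at": date(2021, 3, 2)},
    {"text": "أخذت التطعيم اليوم", "created_at": date(2020, 11, 30)},  # before window
    {"text": "weather update", "created_at": date(2021, 3, 2)},          # no search term
]
kept = select_tweets(sample, SEARCH_TERMS)
```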

3.2. Dataset Preparation

3.3. Dataset Labeling

3.4. Q8VaxStance Labeling System

- We chose a weakly supervised learning framework for our experiments. After examining several Python packages and frameworks that support weak supervision for natural language processing, we selected the open-source Snorkel framework [33] because of the good results we obtained with it in [16] for sentiment classification of the Kuwaiti dialect.

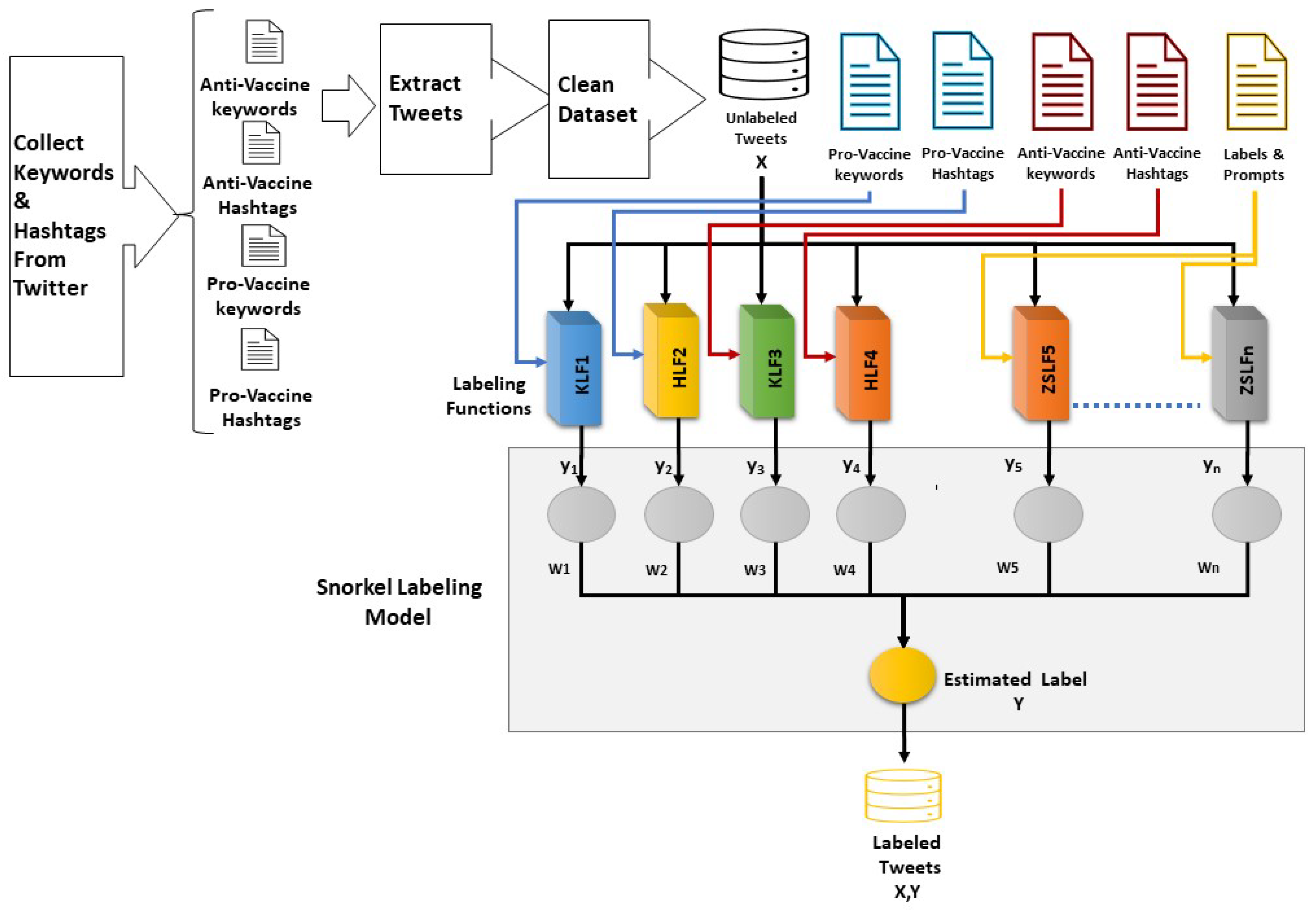

- We set up 52 experiments, as described in Table 1; for each experiment, we created labeling functions that determine the stance towards vaccines. Figure 1 illustrates the general Q8VaxStance labeling system architecture used in the KHZSLF experiment setup. The architecture for the KHLF and ZSLF experiments is similar, except that some labeling functions are excluded depending on the specific experimental setup.

- We applied the labeling functions to 42,815 unlabeled tweets and trained the model using the Snorkel package to predict the dataset labels. In the first experiment (KHLF), the labeling functions assigned labels based on the presence of specific pro-vaccine and anti-vaccine keywords and hashtags in the tweet text. These were the same keywords and hashtags that we used to collect the dataset from Twitter.

- We conducted several experiments to compare labeling functions based only on zero-shot (ZS) learning against a combination of keyword-based and zero-shot learning-based labeling functions. For the ZS models, we used the inference code provided by the models’ creators on the Hugging Face website. The following pretrained zero-shot models were used in the ZS labeling functions:

- We applied prompt engineering to measure how different prompts and labels affect the performance of the labeling system, and to identify the label and prompt combinations that perform best with the zero-shot learning-based labeling functions. To do so, we varied the wording of the labels and prompts. We also tested English labels and prompts, Arabic labels and prompts, and mixed-language combinations to assess how the language of the labels and prompts affects system performance. Tables 2 and 3 list the labels and prompts used in our experiments.
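A zero-shot labeling function can wrap an NLI-based zero-shot classifier. Each candidate label from Table 2 is substituted into a prompt from Table 3 at the `{}` placeholder. The labeling function then votes for the highest-scoring label, or abstains when the model is not confident enough. The sketch below is hypothetical: the `make_zero_shot_lf` helper, the 0.6 abstention threshold, and the label-to-class mapping are all assumptions. The classifier is passed in as a callable that mirrors the call of the Hugging Face `transformers` zero-shot-classification pipeline (`candidate_labels`, `hypothesis_template`, and a result with score-sorted `labels` and `scores`). This lets the logic run here with a stub in place of a real model.

```python
from typing import Callable, Dict

ABSTAIN, ANTI, PRO = -1, 0, 1


def make_zero_shot_lf(
    classifier: Callable[..., Dict],
    pro_label: str,
    anti_label: str,
    template: str,
    threshold: float = 0.6,  # assumed confidence cut-off
) -> Callable[[str], int]:
    """Return an LF that maps a zero-shot prediction to PRO, ANTI, or ABSTAIN."""
    label_to_class = {pro_label: PRO, anti_label: ANTI}

    def lf(text: str) -> int:
        out = classifier(
            text,
            candidate_labels=[pro_label, anti_label],
            hypothesis_template=template,
        )
        # Results are sorted by descending score; take the best label.
        top_label, top_score = out["labels"][0], out["scores"][0]
        return label_to_class[top_label] if top_score >= threshold else ABSTAIN

    return lf


def stub_classifier(text, candidate_labels, hypothesis_template):
    """Stand-in for a real model: favours the first label when 'yes' appears."""
    first = 0.9 if "yes" in text else 0.5
    scores = [first, 1 - first]
    ranked = sorted(zip(candidate_labels, scores), key=lambda p: p[1], reverse=True)
    return {"labels": [lab for lab, _ in ranked], "scores": [s for _, s in ranked]}


lf_en = make_zero_shot_lf(
    stub_classifier,
    "pro-vaccine",
    "anti-vaccine",
    "the stance towards COVID-19 vaccination is {}",
)
```

With `transformers` installed, a real classifier could be built with `pipeline("zero-shot-classification", model="joeddav/xlm-roberta-large-xnli")` (one of the models listed in the references) and passed in place of the stub. Each prompt and label-pair combination then yields a separate labeling function.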

4. Experimental Results and Discussion

- Changing the type of labeling function:
  - KHLF: keyword and hashtag detection used in labeling functions;
  - ZSLF: only zero-shot models used in labeling functions;
  - KHZSLF: both keyword and hashtag detection and zero-shot models used in labeling functions.

- Changing the language of labels and prompts used in zero-shot models:
  - AA: Arabic labels and Arabic prompts;
  - EE: English labels and English prompts;
  - AE: Arabic labels and English prompts (the experiments named EA);
  - AAAEEE: labeling functions that mix labels and prompts in both languages (the ZSLF-AA-AE-EE experiments);
  - NN: no zero-shot models in the labeling functions, i.e., keyword and hashtag detection only.
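The experiment names used in the result tables encode both factors listed above. The hypothetical helper below maps a name to its (labeling-function type, language group) pair, so that results can be grouped as in the pairwise comparison tables. The mapping of EA to AE and of ZSLF-AA-AE-EE to AAAEEE is inferred from the factor descriptions and Table 1. The helper also normalizes an unhyphenated form such as `ZSLFAA1`.

```python
import re
from typing import Tuple

# Inferred mapping from the language suffix in experiment names to the
# language groups used in the statistical comparisons.
LANG_GROUP = {"EE": "EE", "AA": "AA", "EA": "AE", "AA-AE-EE": "AAAEEE"}

# The longest alternative is listed first so "AA-AE-EE" is not read as "AA".
PATTERN = re.compile(r"^(KHZSLF|ZSLF|KHLF)(?:-(AA-AE-EE|EE|EA|AA)\d+)?$")


def experiment_factors(name: str) -> Tuple[str, str]:
    """Return (labeling-function type, language group) for an experiment name."""
    m = PATTERN.match(name.replace("ZSLFAA", "ZSLF-AA"))
    if m is None:
        raise ValueError(f"unrecognised experiment name: {name}")
    lf_type, lang = m.group(1), m.group(2)
    # Experiments without zero-shot models (KHLF) belong to the NN group.
    return lf_type, (LANG_GROUP[lang] if lang else "NN")
```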

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Alibrahim, J.; Awad, A. COVID-19 vaccine hesitancy among the public in Kuwait: A cross-sectional survey. Int. J. Environ. Res. Public Health 2021, 18, 8836. [Google Scholar] [PubMed]

- Sallam, M.; Dababseh, D.; Eid, H.; Al-Mahzoum, K.; Al-Haidar, A.; Taim, D.; Yaseen, A.; Ababneh, N.A.; Bakri, F.G.; Mahafzah, A. High Rates of COVID-19 Vaccine Hesitancy and Its Association with Conspiracy Beliefs: A Study in Jordan and Kuwait among Other Arab Countries. Vaccines 2021, 9, 42. [Google Scholar] [CrossRef] [PubMed]

- Al-Ayyadhi, N.; Ramadan, M.M.; Al-Tayar, E.; Al-Mathkouri, R.; Al-Awadhi, S. Determinants of hesitancy towards COVID-19 vaccines in State of Kuwait: An exploratory internet-based survey. Risk Manag. Healthc. Policy 2021, 14, 4967–4981. [Google Scholar] [CrossRef]

- Cascini, F.; Pantovic, A.; Al-Ajlouni, Y.A.; Failla, G.; Puleo, V.; Melnyk, A.; Lontano, A.; Ricciardi, W. Social media and attitudes towards a COVID-19 vaccination: A systematic review of the literature. eClinicalMedicine 2022, 48, 101454. [Google Scholar] [CrossRef]

- Greyling, T.; Rossouw, S. Positive attitudes towards COVID-19 vaccines: A cross-country analysis. PLoS ONE 2022, 17, e0264994. [Google Scholar] [CrossRef] [PubMed]

- AlAwadhi, E.; Zein, D.; Mallallah, F.; Bin Haider, N.; Hossain, A. Monitoring COVID-19 vaccine acceptance in Kuwait during the pandemic: Results from a national serial study. Risk Manag. Healthc. Policy 2021, 14, 1413–1429. [Google Scholar] [CrossRef]

- Putra, C.B.P.; Purwitasari, D.; Raharjo, A.B. Stance Detection on Tweets with Multi-task Aspect-based Sentiment: A Case Study of COVID-19 Vaccination. Int. J. Intell. Eng. Syst. 2022, 15, 515–526. [Google Scholar]

- Muric, G.; Wu, Y.; Ferrara, E. COVID-19 Vaccine Hesitancy on Social Media: Building a Public Twitter Data Set of Antivaccine Content, Vaccine Misinformation, and Conspiracies. JMIR Public Health Surveill. 2021, 7, e30642. [Google Scholar] [CrossRef]

- Hayawi, K.; Shahriar, S.; Serhani, M.; Taleb, I.; Mathew, S. ANTi-Vax: A novel Twitter dataset for COVID-19 vaccine misinformation detection. Public Health 2022, 203, 23–30. [Google Scholar] [CrossRef]

- Jun, J.; Zain, A.; Chen, Y.; Kim, S.H. Adverse Mentions, Negative Sentiment, and Emotions in COVID-19 Vaccine Tweets and Their Association with Vaccination Uptake: Global Comparison of 192 Countries. Vaccines 2022, 10, 735. [Google Scholar] [CrossRef]

- Moubtahij, H.E.; Abdelali, H.; Tazi, E.B. AraBERT transformer model for Arabic comments and reviews analysis. IAES Int. J. Artif. Intell. (IJ-AI) 2022, 11, 379–387. [Google Scholar] [CrossRef]

- Abdul-Mageed, M.; Elmadany, A.; Nagoudi, E.M.B. ARBERT & MARBERT: Deep Bidirectional Transformers for Arabic. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Bangkok, Thailand, 1 August 2021; pp. 7088–7105. [Google Scholar] [CrossRef]

- Obeid, O.; Zalmout, N.; Khalifa, S.; Taji, D.; Oudah, M.; Alhafni, B.; Inoue, G.; Eryani, F.; Erdmann, A.; Habash, N. CAMeL tools: An open source python toolkit for Arabic natural language processing. In Proceedings of the Twelfth Language Resources and Evaluation Conference, Marseille, France, 11–16 May 2020; pp. 7022–7032. [Google Scholar]

- Salamah, J.B.; Elkhlifi, A. Microblogging opinion mining approach for kuwaiti dialect. In Proceedings of the International Conference on Computing Technology and Information Management (ICCTIM), Dubai, United Arab Emirates, 9 April 2014; p. 388. [Google Scholar]

- Almatar, M.G.; Alazmi, H.S.; Li, L.; Fox, E.A. Applying GIS and Text Mining Methods to Twitter Data to Explore the Spatiotemporal Patterns of Topics of Interest in Kuwait. ISPRS Int. J.-Geo-Inf. 2020, 9, 702. [Google Scholar] [CrossRef]

- Husain, F.; Al-Ostad, H.; Omar, H. A Weak Supervised Transfer Learning Approach for Sentiment Analysis to the Kuwaiti Dialect. In Proceedings of the Seventh Arabic Natural Language Processing Workshop (WANLP). Association for Computational Linguistics, Abu Dhabi, United Arab Emirates, 8 December 2022; pp. 161–173. [Google Scholar]

- Aldihan, H.; Gaizauskas, R.; Fitzmaurice, S. A Pilot Study on the Collection and Computational Analysis of Linguistic Differences Amongst Men and Women in a Kuwaiti Arabic WhatsApp Dataset. In Proceedings of the Seventh Arabic Natural Language Processing Workshop (WANLP), Abu Dhabi, United Arab Emirates, 8 December 2022; pp. 372–380. [Google Scholar]

- Shimizu, A.; Wakabayash, K. Effect of Label Redundancy in Crowdsourcing for Training Machine Learning Models. J. Data Intell. 2022, 3, 301–315. [Google Scholar] [CrossRef]

- Zhang, Z.; Strubell, E.; Hovy, E. A Survey of Active Learning for Natural Language Processing. arXiv 2022, arXiv:2210.10109. [Google Scholar] [CrossRef]

- Simmler, N.; Sager, P.; Andermatt, P.; Chavarriaga, R.; Schilling, F.P.; Rosenthal, M.; Stadelmann, T. A Survey of Un-, Weakly-, and Semi-Supervised Learning Methods for Noisy, Missing and Partial Labels in Industrial Vision Applications. In Proceedings of the 2021 8th Swiss Conference on Data Science (SDS), Lucerne, Switzerland, 9 June 2021. [Google Scholar] [CrossRef]

- Hang, D.; Victor, S.P.; Huayu, Z.; Minhong, W.; Arlene, C.; Emma, D.; Jiaoyan, C.; Beatrice, A.; William, W.; Honghan, W. Ontology-Driven and Weakly Supervised Rare Disease Identification From Clinical Notes. BMC Med. Inform. Decis. Mak. 2023, 23, 86. [Google Scholar]

- Ratner, A.; Bach, S.H.; Ehrenberg, H.; Fries, J.; Wu, S.; Ré, C. Snorkel: Rapid training data creation with weak supervision. Proc. VLDB Endow. 2017, 11, 269–282. [Google Scholar] [CrossRef]

- Naeini, E.K.; Subramanian, A.; Calderon, M.D.; Zheng, K.; Dutt, N.; Liljeberg, P.; Salanterä, S.; Nelson, A.M.; Rahmani, A.M. Pain Recognition With Electrocardiographic Features in Postoperative Patients: Method Validation Study. J. Med. Internet Res. 2021, 23, e25079. [Google Scholar] [CrossRef]

- Datta, S.; Roberts, K. Weakly Supervised Spatial Relation Extraction From Radiology Reports. JAMIA Open 2023, 6, ooad027. [Google Scholar] [CrossRef]

- Yu, F.; Xiu, X.; Li, Y. A survey on deep transfer learning and beyond. Mathematics 2022, 10, 3619. [Google Scholar] [CrossRef]

- Tunstall, L.; von Werra, L.; Wolf, T. Natural Language Processing with Transformers; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2022. [Google Scholar]

- Yildirim, S.; Asgari-Chenaghlu, M. Mastering Transformers: Build State-of-the-Art Models from Scratch with Advanced Natural Language Processing Techniques; Packt Publishing: Birmingham, UK, 2021. [Google Scholar]

- Ranasinghe, T.; Zampieri, M. An Evaluation of Multilingual Offensive Language Identification Methods for the Languages of India. Information 2021, 12, 306. [Google Scholar] [CrossRef]

- Kuo, C.C.; Chen, K.Y. Toward Zero-Shot and Zero-Resource Multilingual Question Answering. IEEE Access 2022, 10, 99754–99761. [Google Scholar] [CrossRef]

- He, P.; Gao, J.; Chen, W. DeBERTaV3: Improving DeBERTa using ELECTRA-Style Pre-Training with Gradient-Disentangled Embedding Sharing. arXiv 2021, arXiv:2111.09543. [Google Scholar] [CrossRef]

- Gruzd, A.; Mai, P. Communalytic: A Research Tool For Studying Online Communities and Online Discourse. 2022. Available online: https://communalytic.org/ (accessed on 14 September 2023).

- 2022. Available online: https://nlp.johnsnowlabs.com/docs/en/alab/quickstart (accessed on 14 September 2023).

- Ratner, A.; De Sa, C.; Wu, H.; Davison, D.; Wu, X.; Liu, Y. Language Models in the Loop: Incorporating Prompting into Weak Supervision. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1776–1787. [Google Scholar]

- Davison, J. XLM-Roberta-Large-XNLI. Available online: https://huggingface.co/joeddav/xlm-roberta-large-xnli (accessed on 4 September 2023).

- Laurer, M.; van Atteveldt, W.; Casas, A.; Welbers, K. Less Annotating, More Classifying–Addressing the Data Scarcity Issue of Supervised Machine Learning with Deep Transfer Learning and BERT-NLI. Political Anal. 2022, 1–33. [Google Scholar] [CrossRef]

- Gallego, V. XLM-RoBERTa-Large-XNLI-ANLI. Available online: https://huggingface.co/vicgalle/xlm-roberta-large-xnli-anli (accessed on 4 September 2023).

Table 1. Experimental setups (√ = component used; Count = number of experiments).

| Experiment | Keywords and Hashtags LFs | Zero-Shot Models LFs | English Prompt | Arabic Prompt | English Labels | Arabic Labels | Count |
|---|---|---|---|---|---|---|---|
| KHLF | √ | | | | | | 1 |
| KHZSLF-EE | √ | √ | √ | | √ | | 6 |
| KHZSLF-EA | √ | √ | √ | | | √ | 9 |
| KHZSLF-AA | √ | √ | | √ | | √ | 9 |
| ZSLF-EE | | √ | √ | | √ | | 6 |
| ZSLF-EA | | √ | √ | | | √ | 9 |
| ZSLF-AA | | √ | | √ | | √ | 9 |
| ZSLF-AA-AE-EE | | √ | √ | √ | √ | √ | 3 |
| Total Experiments | | | | | | | 52 |
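The 52 experiments in Table 1 can be reproduced by crossing the prompts of Table 3 with the label pairs of Table 2. The sketch below makes three assumptions: each zero-shot setup uses one prompt and one label pair per experiment, KHLF is a single keyword-only experiment, and the three mixed-language ZSLF-AA-AE-EE runs are counted as given in Table 1 rather than derived. The index `i` produced here need not match the paper's own experiment numbering.

```python
from itertools import product

# Label pairs (Table 2) and prompts (Table 3), grouped by language.
LABELS = {
    "E": [("pro-vaccine", "anti-vaccine"), ("in favor vaccine", "against vaccine")],
    "A": [
        ("مع التطعيم", "ضد التطعيم"),
        ("مؤيد التطعيم", "معارض التطعيم"),
        ("نعم للتطعيم", "لا للتطعيم"),
    ],
}
PROMPTS = {
    "E": [
        "the attitude towards COVID-19 vaccination is {}",
        "the stance towards COVID-19 vaccination is {}",
        "the opinion towards COVID-19 vaccination is {}",
    ],
    "A": [
        "الرأي في هذه التغريده {}",
        "الموقف في هذه التغريده تجاه التطعيم {}",
        "التوجه في هذه التغريده {}",
    ],
}
# Setup suffix -> (prompt language, label language); "EA" = English prompts, Arabic labels.
SETUPS = {"EE": ("E", "E"), "EA": ("E", "A"), "AA": ("A", "A")}


def enumerate_experiments():
    """List (name, prompt, label pair) for every experiment in Table 1."""
    experiments = [("KHLF", None, None)]  # keyword/hashtag LFs only
    for lf_type in ("KHZSLF", "ZSLF"):
        for setup, (p_lang, l_lang) in SETUPS.items():
            combos = product(PROMPTS[p_lang], LABELS[l_lang])
            for i, (prompt, labels) in enumerate(combos, start=1):
                experiments.append((f"{lf_type}-{setup}{i}", prompt, labels))
    # Mixed-language runs: count taken directly from Table 1.
    experiments += [(f"ZSLF-AA-AE-EE{i}", None, None) for i in range(1, 4)]
    return experiments


exps = enumerate_experiments()
```

Under these assumptions the total is 1 + 2 × (3·2 + 3·3 + 3·3) + 3 = 52, which matches Table 1.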

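Table 2. Candidate label pairs (pro-vaccine, anti-vaccine) used with the zero-shot models.

| Labels | Language | English Gloss |
|---|---|---|
| pro-vaccine, anti-vaccine | English | |
| in favor vaccine, against vaccine | English | |
| مع التطعيم, ضد التطعيم | Arabic | with vaccination, against vaccination |
| مؤيد التطعيم, معارض التطعيم | Arabic | supporter of vaccination, opponent of vaccination |
| نعم للتطعيم, لا للتطعيم | Arabic | yes to vaccination, no to vaccination |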

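Table 3. Prompts used with the zero-shot models ({} marks where a candidate label is inserted).

| Prompts | Language | English Gloss |
|---|---|---|
| the attitude towards COVID-19 vaccination is {} | English | |
| the stance towards COVID-19 vaccination is {} | English | |
| the opinion towards COVID-19 vaccination is {} | English | |
| الرأي في هذه التغريده {} | Arabic | the opinion in this tweet {} |
| الموقف في هذه التغريده تجاه التطعيم {} | Arabic | the stance in this tweet towards vaccination {} |
| التوجه في هذه التغريده {} | Arabic | the orientation in this tweet {} |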

| Experiment | Accuracy | Macro-F1 | Cohen's Kappa | Experiment | Accuracy | Macro-F1 | Cohen's Kappa |
|---|---|---|---|---|---|---|---|
| KHZSLF-EE1 | 0.815 | 0.810 | 0.618 | ZSLF-EE1 | 0.795 | 0.785 | 0.570 |
| KHZSLF-EE2 | 0.802 | 0.798 | 0.598 | ZSLF-EE2 | 0.803 | 0.789 | 0.579 |
| KHZSLF-EE3 | 0.824 | 0.820 | 0.638 | ZSLF-EE3 | 0.795 | 0.780 | 0.561 |
| KHZSLF-EE4 | 0.839 | 0.834 | 0.668 | ZSLF-EE4 | 0.775 | 0.766 | 0.533 |
| KHZSLF-EE5 | 0.822 | 0.817 | 0.633 | ZSLF-EE5 | 0.779 | 0.765 | 0.532 |
| KHZSLF-EE6 | 0.825 | 0.821 | 0.640 | ZSLF-EE6 | 0.784 | 0.768 | 0.538 |
| Average | 0.820 | 0.820 | 0.633 | Average | 0.790 | 0.780 | 0.552 |
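The three metrics reported in the result tables can be computed as shown below. The sketch assumes that the predicted labels are compared against a reference labeling with binary classes, pro (1) and anti (0); the evaluation set itself is not described in this section. The functions follow the standard definitions, which correspond to scikit-learn's `accuracy_score`, `f1_score(average="macro")`, and `cohen_kappa_score`. The toy label vectors are made up for illustration and are not data from the paper.

```python
from typing import List, Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that agree with the reference labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true: Sequence[int], y_pred: Sequence[int], classes=(0, 1)) -> float:
    """Unweighted mean of the per-class F1 scores."""
    f1s = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        f1s.append(2 * tp / denom if denom else 0.0)
    return sum(f1s) / len(f1s)


def cohen_kappa(y_true: List[int], y_pred: List[int], classes=(0, 1)) -> float:
    """Chance-corrected agreement: (p_o - p_e) / (1 - p_e).

    p_o is the observed agreement; p_e is the agreement expected if both
    labelings were independent with their observed class frequencies.
    Undefined when p_e == 1 (both sides use a single identical class).
    """
    n = len(y_true)
    p_o = accuracy(y_true, y_pred)
    p_e = sum((y_true.count(c) / n) * (y_pred.count(c) / n) for c in classes)
    return (p_o - p_e) / (1 - p_e)


# Toy example (not data from the paper).
y_true = [1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 0, 1, 1]
acc = accuracy(y_true, y_pred)
f1 = macro_f1(y_true, y_pred)
kappa = cohen_kappa(y_true, y_pred)
```

In this toy example, four of six predictions match (accuracy 2/3), and both classes have balanced marginals (p_e = 0.5), so kappa is (2/3 − 0.5) / 0.5 = 1/3.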

| Experiment | Accuracy | Macro-F1 | Cohen's Kappa | Experiment | Accuracy | Macro-F1 | Cohen's Kappa |
|---|---|---|---|---|---|---|---|
| KHZSLF-AA1 | 0.820 | 0.810 | 0.621 | ZSLF-AA1 | 0.776 | 0.760 | 0.536 |
| KHZSLF-AA2 | 0.809 | 0.804 | 0.609 | ZSLF-AA2 | 0.780 | 0.777 | 0.558 |
| KHZSLF-AA3 | 0.826 | 0.820 | 0.641 | ZSLF-AA3 | 0.795 | 0.783 | 0.568 |
| KHZSLF-AA4 | 0.810 | 0.801 | 0.602 | ZSLF-AA4 | 0.775 | 0.770 | 0.541 |
| KHZSLF-AA5 | 0.790 | 0.786 | 0.573 | ZSLF-AA5 | 0.792 | 0.788 | 0.578 |
| KHZSLF-AA6 | 0.815 | 0.811 | 0.623 | ZSLF-AA6 | 0.790 | 0.784 | 0.570 |
| KHZSLF-AA7 | 0.808 | 0.797 | 0.596 | ZSLF-AA7 | 0.810 | 0.803 | 0.606 |
| KHZSLF-AA8 | 0.832 | 0.826 | 0.652 | ZSLF-AA8 | 0.824 | 0.818 | 0.636 |
| KHZSLF-AA9 | 0.832 | 0.828 | 0.657 | ZSLF-AA9 | 0.810 | 0.802 | 0.604 |
| Average | 0.816 | 0.809 | 0.619 | Average | 0.795 | 0.787 | 0.577 |

| Experiment | Accuracy | Macro-F1 | Cohen's Kappa | Experiment | Accuracy | Macro-F1 | Cohen's Kappa |
|---|---|---|---|---|---|---|---|
| KHZSLF-EA1 | 0.839 | 0.836 | 0.673 | ZSLF-EA1 | 0.792 | 0.788 | 0.578 |
| KHZSLF-EA2 | 0.833 | 0.833 | 0.660 | ZSLF-EA2 | 0.808 | 0.802 | 0.605 |
| KHZSLF-EA3 | 0.832 | 0.828 | 0.659 | ZSLF-EA3 | 0.801 | 0.796 | 0.594 |
| KHZSLF-EA4 | 0.799 | 0.796 | 0.594 | ZSLF-EA4 | 0.787 | 0.781 | 0.562 |
| KHZSLF-EA5 | 0.823 | 0.819 | 0.639 | ZSLF-EA5 | 0.794 | 0.788 | 0.576 |
| KHZSLF-EA6 | 0.807 | 0.803 | 0.608 | ZSLF-EA6 | 0.784 | 0.777 | 0.556 |
| KHZSLF-EA7 | 0.825 | 0.821 | 0.644 | ZSLF-EA7 | 0.809 | 0.803 | 0.606 |
| KHZSLF-EA8 | 0.837 | 0.833 | 0.667 | ZSLF-EA8 | 0.807 | 0.800 | 0.601 |
| KHZSLF-EA9 | 0.829 | 0.824 | 0.649 | ZSLF-EA9 | 0.801 | 0.796 | 0.593 |
| Average | 0.825 | 0.821 | 0.643 | Average | 0.798 | 0.792 | 0.58 |

| Experiment | Accuracy | Macro-F1 | Cohen's Kappa |
|---|---|---|---|
| ZSLF-AA-AE-EE1 | 0.804 | 0.799 | 0.599 |
| ZSLF-AA-AE-EE2 | 0.805 | 0.800 | 0.601 |
| ZSLF-AA-AE-EE3 | 0.802 | 0.798 | 0.596 |
| Average | 0.804 | 0.799 | 0.598 |

| Experiment | Pro | Anti | Total | Experiment | Pro | Anti | Total |
|---|---|---|---|---|---|---|---|
| KHZSLF-AA1 | 30,034 | 12,775 | 42,809 | ZSLF-AA1 | 25,439 | 17,318 | 42,757 |
| KHZSLF-AA2 | 21,503 | 21,312 | 42,815 | ZSLF-AA2 | 15,727 | 27,086 | 42,813 |
| KHZSLF-AA3 | 26,710 | 16,092 | 42,802 | ZSLF-AA3 | 30,191 | 12,609 | 42,800 |
| KHZSLF-AA4 | 19,543 | 23,253 | 42,796 | ZSLF-AA4 | 18,348 | 24,373 | 42,721 |
| KHZSLF-AA5 | 18,218 | 24,594 | 42,812 | ZSLF-AA5 | 20,431 | 22,380 | 42,811 |
| KHZSLF-AA6 | 18,494 | 23,535 | 42,029 | ZSLF-AA6 | 21,907 | 20,851 | 42,758 |
| KHZSLF-AA7 | 19,049 | 23,738 | 42,787 | ZSLF-AA7 | 20,209 | 22,606 | 42,815 |
| KHZSLF-AA8 | 18,929 | 23,838 | 42,767 | ZSLF-AA8 | 20,390 | 22,425 | 42,815 |
| KHZSLF-AA9 | 20,928 | 21,869 | 42,797 | ZSLF-AA9 | 18,276 | 24,539 | 42,815 |
| Average | 21,490 | 21,223 | 42,713 | Average | 21,213 | 21,576 | 42,789 |

| Experiment | Pro | Anti | Total | Experiment | Pro | Anti | Total |
|---|---|---|---|---|---|---|---|
| KHZSLF-EE1 | 20,552 | 21,702 | 42,254 | ZSLF-EE1 | 23,666 | 18,896 | 42,562 |
| KHZSLF-EE2 | 17,856 | 23,781 | 41,637 | ZSLF-EE2 | 26,931 | 15,621 | 42,552 |
| KHZSLF-EE3 | 20,925 | 21,262 | 42,187 | ZSLF-EE3 | 15,123 | 27,326 | 42,449 |
| KHZSLF-EE4 | 22,292 | 19,978 | 42,270 | ZSLF-EE4 | 21,195 | 16,743 | 37,938 |
| KHZSLF-EE5 | 19,938 | 22,115 | 42,053 | ZSLF-EE5 | 25,976 | 13,328 | 39,304 |
| KHZSLF-EE6 | 18,385 | 23,124 | 41,509 | ZSLF-EE6 | 24,551 | 12,668 | 37,219 |
| Average | 19,991 | 21,994 | 41,985 | Average | 22,907 | 17,430 | 40,337 |
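Several totals in the label-count tables fall below the 42,815 tweets passed to the labeling system. One plausible reading, which the source does not state explicitly, is that the remaining tweets received no label. This could happen, for example, when every labeling function abstained or the label model could not break a tie. Under that assumption, label coverage is simply the labeled total divided by 42,815. The helper name `coverage` is hypothetical.

```python
DATASET_SIZE = 42_815  # unlabeled tweets passed to the labeling system


def coverage(pro: int, anti: int, n: int = DATASET_SIZE) -> float:
    """Fraction of tweets that received either a pro or an anti label."""
    return (pro + anti) / n


# Counts taken from the ZSLF-EE6 and ZSLF-EA1 rows of the label-count tables.
cov_ee6 = coverage(24_551, 12_668)  # total 37,219
cov_ea1 = coverage(25_409, 17_406)  # total 42,815
```

Under this reading, ZSLF-EE6 labels roughly 87% of the tweets, while ZSLF-EA1 labels all of them.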

| Experiment | Pro | Anti | Total | Experiment | Pro | Anti | Total |
|---|---|---|---|---|---|---|---|
| KHZSLF-EA1 | 23,102 | 19,662 | 42,764 | ZSLF-EA1 | 25,409 | 17,406 | 42,815 |
| KHZSLF-EA2 | 23,027 | 19,765 | 42,792 | ZSLF-EA2 | 25,101 | 17,714 | 42,815 |
| KHZSLF-EA3 | 20,647 | 22,117 | 42,764 | ZSLF-EA3 | 23,802 | 19,013 | 42,815 |
| KHZSLF-EA4 | 20,573 | 22,213 | 42,786 | ZSLF-EA4 | 20,156 | 22,659 | 42,815 |
| KHZSLF-EA5 | 21,577 | 21,177 | 42,754 | ZSLF-EA5 | 22,617 | 20,198 | 42,815 |
| KHZSLF-EA6 | 19,393 | 22,052 | 41,445 | ZSLF-EA6 | 22,913 | 19,902 | 42,815 |
| KHZSLF-EA7 | 22,189 | 20,594 | 42,783 | ZSLF-EA7 | 21,912 | 20,903 | 42,815 |
| KHZSLF-EA8 | 20,240 | 22,538 | 42,778 | ZSLF-EA8 | 21,102 | 21,713 | 42,815 |
| KHZSLF-EA9 | 23,212 | 19,580 | 42,792 | ZSLF-EA9 | 21,695 | 21,120 | 42,815 |
| Average | 21,551 | 21,078 | 42,629 | Average | 22,745 | 20,070 | 42,815 |

| Experiment | Pro | Anti | Total |
|---|---|---|---|
| ZSLF-AA-AE-EE1 | 21,894 | 20,921 | 42,815 |
| ZSLF-AA-AE-EE2 | 21,650 | 21,165 | 42,815 |
| ZSLF-AA-AE-EE3 | 22,432 | 20,383 | 42,815 |
| Average | 21,992 | 20,823 | 42,815 |

| p-Value | Keywords vs. Zero-Shot | Language of Labels and Prompts |
|---|---|---|
| Accuracy | 1.577262 × 10 | 0.000386 |
| Macro-F1 | 6.632477 × 10 | 0.000359 |
| Total Labels | 1.397020 × 10 | 2.697203 × 10 |

| Experiment Group 1 | Experiment Group 2 | P-adj Accuracy | P-adj Macro-F1 | P-adj Labels |
|---|---|---|---|---|
| KHLF | KHZSLF | 0.0 | 0.0 | 0.0 |
| KHLF | ZSLF | 0.0 | 0.0 | 0.0 |
| KHZSLF | ZSLF | 0.0 | 0.0 | 0.5 |

| Experiment Group 1 | Experiment Group 2 | P-adj Accuracy | P-adj Macro-F1 | P-adj Labels |
|---|---|---|---|---|
| AA | AAAEEE | 0.9999 | 1.0 | 1.0 |
| AA | AE | 0.8400 | 0.6911 | 1.0 |
| AA | EE | 1.0 | 0.9986 | 0.0004 |
| AA | NN | 0.0001 | 0.0001 | 0 |
| AAAEEE | AE | 0.9583 | 0.9688 | 0.9999 |
| AAAEEE | EE | 1.0 | 0.9994 | 0.0667 |
| AAAEEE | NN | 0.0005 | 0.0009 | 0 |
| AE | EE | 0.8634 | 0.6021 | 0.0005 |
| AE | NN | 0.0003 | 0.0006 | 0 |
| EE | NN | 0.0001 | 0.0001 | 0 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Alostad, H.; Dawiek, S.; Davulcu, H. Q8VaxStance: Dataset Labeling System for Stance Detection towards Vaccines in Kuwaiti Dialect. Big Data Cogn. Comput. 2023, 7, 151. https://doi.org/10.3390/bdcc7030151
