1. Introduction

Humans rate items or entities in many important settings. For example, users of dating websites and mobile applications rate other users’ physical attractiveness, teachers rate the scholarly work of students, and reviewers rate the quality of academic conference submissions. In these settings, users assign each item a numerical (integral) score from a small discrete set. However, the number of options in this set can vary significantly between applications, and even between different instantiations of the same application. For instance, three popular sites for rating attractiveness each use a different number of options. On “Hot or Not,” users rate the attractiveness of photographs submitted voluntarily by other users on a scale of 1–10. These scores are aggregated, and the average is assigned as the overall “score” for a photograph. On the dating website OkCupid, users rate other users on a scale of 1–5 (if a user rates another user 4 or 5, then the rated user receives a notification). On the mobile application Tinder, users “swipe right” (green heart) or “swipe left” (red X) to express interest in other users (two users are allowed to message each other if they mutually swipe right), which is essentially equivalent to using a binary scale. Education is another important application area requiring human ratings. For the 2016 International Joint Conference on Artificial Intelligence, reviewers assigned each submitted paper a “Summary Rating” score from −5 to 5 (equivalent to 1–10). The papers were then discussed and the scores aggregated to produce an acceptance or rejection decision based on the average of the scores.

Despite the importance and ubiquity of the problem, there has been little fundamental research on determining the optimal number of options to allow in such settings. We study a model in which users have an underlying integral ground truth score for each item in $\{1, \ldots, n\}$ and are required to submit an integral rating in $\{1, \ldots, k\}$, for $k \le n$. (For ease of presentation, we use the equivalent formulation $\{0, \ldots, n-1\}$, $\{0, \ldots, k-1\}$.) We use two generative models for the ground truth scores: a uniform random model in which the fraction of scores for each value from 0 to $n-1$ is chosen uniformly at random (by choosing a random value for each and then normalizing), and a model where scores are chosen according to a Gaussian distribution with a given mean and variance. We then compute a “compressed” score distribution by mapping each full score $s$ from $\{0, \ldots, n-1\}$ to $\{0, \ldots, k-1\}$ by applying

$$s \leftarrow \left\lfloor \frac{s}{n/k} \right\rfloor. \tag{1}$$

We then compute the average “compressed” score $a_k$, and compute its error $e_k$ according to

$$e_k = \left| a_f - \frac{n-1}{k-1} \cdot a_k \right|, \tag{2}$$

where $a_f$ is the ground truth average. The goal is to pick $\arg\min_k e_k$ (in our simulations, we also consider a metric of the frequency at which each value of $k$ produces lowest error over all the items that are rated). While there are many possible generative models and cost functions, these seem to be the most natural, and we plan to study alternative choices in future work.
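To make the model concrete, the following minimal Python sketch evaluates the error of a floor-compressed rating scale for a discrete score distribution. It assumes the floor mapping $s \mapsto \lfloor s/(n/k) \rfloor$ and the rescaling factor $(n-1)/(k-1)$; the function names `floor_compress_error` and `best_k` and the small example distribution are illustrative assumptions, not part of the original study.

```python
def floor_compress_error(pmf, k):
    """Error e_k for a discrete score distribution under floor compression.

    pmf[s] is the fraction of ratings with ground truth score s, s = 0..n-1.
    Requires k >= 2 so that the rescaling factor (n-1)/(k-1) is defined.
    """
    n = len(pmf)
    # Ground truth average a_f.
    a_f = sum(s * p for s, p in enumerate(pmf))
    # Compressed average a_k; floor(s / (n/k)) equals (s * k) // n for integers.
    a_k = sum(((s * k) // n) * p for s, p in enumerate(pmf))
    # Rescale the compressed average back to the 0..n-1 range before comparing.
    return abs(a_f - (n - 1) / (k - 1) * a_k)


def best_k(pmf, candidates):
    """Return the candidate k with the smallest error (first one on ties)."""
    return min(candidates, key=lambda k: floor_compress_error(pmf, k))


# Hypothetical example: n = 10, half the mass on score 3 and half on score 6.
pmf = [0.0, 0.0, 0.0, 0.5, 0.0, 0.0, 0.5, 0.0, 0.0, 0.0]
print(floor_compress_error(pmf, 2), floor_compress_error(pmf, 3))
```

Integer arithmetic is used for the floor so that the mapping does not depend on floating-point rounding of $n/k$.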

We derive a closed-form expression for $e_k$ that, for an arbitrary distribution, depends on only a small number ($k$) of parameters of the underlying distribution. This allows us to exactly characterize the performance of using each number of choices. In simulations, we repeatedly compute $e_k$ and compare the average values. We focus on $n = 100$ and $k = 2, 3, 4, 5, 10$, which we believe are the most natural and interesting choices for initial study.

One could argue that this model is somewhat “trivial” in the sense that it would be optimal to set $k = n$ to permit all the possible scores, as this would result in the “compressed” scores agreeing exactly with the full scores. However, there are several reasons that would lead us to prefer to select $k < n$ in practice (as all of the examples previously described have done), thus making this analysis worthwhile. It is much easier for a human to assign a score from a small set than from a large set, particularly when rating many items under time constraints. We could have included an additional term in the cost function that explicitly penalizes larger values of $k$, which would have a significant effect on the optimal value of $k$ (favoring smaller values). However, the selection of this function would be somewhat arbitrary and would make the model more complex, and we leave this for future study. Given that we do not include such a penalty term, one may expect that increasing $k$ will always decrease $e_k$ in our setting. While the simulations show a clear negative relationship, we show that smaller values of $k$ actually lead to smaller $e_k$ surprisingly often. These smaller values would receive further preference with a penalty term.

One line of related theoretical research that also has applications to the education domain studies the impact of using finely grained numerical grades (100, 99, 98) vs. coarse letter grades (A, B, C) [1]. They conclude that, if students care primarily about their rank relative to the other students, they are often better motivated to work by coarse categories than by exact numerical scores. In a setting of “disparate” student abilities, they show that the optimal absolute grading scheme is always coarse. Their model is game-theoretic; each player (student) selects an effort level, seeking to optimize a utility function that depends on both the relative score and effort level. Their setting is quite different from ours in many ways. For one, they study a setting where it is assumed that the underlying “ground truth” score is known, yet may be disguised for strategic reasons. In our setting, the goal is to approximate the ground truth score as closely as possible.

While we are not aware of prior theoretical study of our exact problem, there have been experimental studies on the optimal number of options on a “Likert scale” [2, 3, 4, 5, 6]. The general conclusion is that “the optimal number of scale categories is content specific and a function of the conditions of measurement” [7]. There has been study of whether including a “mid-point” option (i.e., the middle choice from an odd number) is beneficial. One experiment demonstrated that the use of the mid-point category decreases as the number of choices increases: 20% of respondents chose the mid-point for 3 and 5 options while only 7% did for

[8]. They conclude that it is preferable to either not include a mid-point at all or use a large number of options. Subsequent experiments demonstrated that eliminating a mid-point can reduce social desirability bias, which results from respondents’ desires to please the interviewer or not give a perceived socially unacceptable answer [7]. There has also been significant research on questionnaire design and the concept of “feeling thermometers,” particularly from the fields of psychology and sociology [9, 10, 11, 12, 13, 14]. One study concludes from experimental data: “in the measurement of satisfaction with various domains of life, 11-point scales clearly are more reliable than comparable 7-point scales” [15]. Another study shows that “people are more likely to purchase gourmet jams or chocolates or to undertake optional class essay assignments when offered a limited array of six choices rather than a more extensive array of 24 or 30 choices” [16]. Since the experimental conclusions are dependent on the specific datasets and seem to vary from domain to domain, we choose to focus on formulating theoretical models and computational simulations, though we also include results and discussion from several datasets.

We note that we are not necessarily claiming that our model or analysis perfectly captures reality or the psychological phenomena behind how humans actually behave. We are simply proposing simple and natural models that, to the best of our knowledge, have not been studied before. The simulation results seem somewhat counterintuitive and merit study on their own. We admit that further study is needed to determine how realistic our assumptions are for modeling human behavior. For example, some psychology research suggests that human users may not actually have an underlying integral ground truth value [17]. Research from the recommender systems community indicates that, while using a coarser granularity for rating scales provides less absolute predictive value to users, it can be viewed as providing more value from the alternative perspective of preference bits per second [18].

Some work considers the setting where ratings over $\{1, \ldots, 5\}$ are mapped into a binary “thumbs up”/“thumbs down” (analogously to the swipe right/left example for Tinder above) [19]. Generally, users mapped original ratings of 1 and 2 to “thumbs down” and original ratings of 3, 4, and 5 to “thumbs up,” which can be viewed as being similar to the floor compression procedure described above. We consider a more generalized setting where ratings over $\{1, \ldots, n\}$ are mapped down to a smaller space (which could be binary but may have more options). We also consider a rounding compression technique in addition to the flooring compression.

Some prior work has presented an approach for mapping continuous prediction scores to ordinal preferences with heterogeneous thresholds that is also applicable to mapping continuous-valued ‘true preference’ scores [20]. We note that our setting can apply straightforwardly to provide continuous-to-ordinal mapping in the same way as it performs ordinal-to-ordinal mapping initially. (In fact, for our theoretical analysis and for the Jester dataset we study, our mapping is continuous-to-ordinal.) An alternative model assumes that users compare items with pairwise comparisons which form a weak ordering, meaning that some items are given the same “mental rating,” while, for our setting, the ratings would be much more likely to be unique in the fine-grained space of ground-truth scores [21, 22]. Note that there has also been exploration within the data mining and AI communities for determining the optimal number of clusters to use for unsupervised learning algorithms [23, 24, 25]. In comparison to prior work, the main takeaways from our work are the closed-form expression for simple natural models and the new simulation results that show precisely, for the first time, how often each number of choices is optimal under several metrics (the number of times it produces the lowest error and the lowest average error). We include experiments on datasets from several domains for completeness, though, as prior work has shown, results can vary significantly between datasets, and further research from psychology and social science is needed to make more accurate predictions of how humans actually behave in practice. We note that our results could also have impact outside of human user systems, for example on the problems of “quantization” and data compression in signal processing.

2. Theoretical Characterization

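Suppose scores are given by a continuous probability density function (pdf) $f$ (with cumulative distribution function (cdf) $F$) on $(0, 100)$, and we wish to compress them to two options, $\{0, 1\}$. Scores below 50 are mapped to 0, and scores above 50 to 1. The average of the full distribution is

$$a_f = E[X] = \int_{0}^{100} x f(x)\,dx.$$

The average of the compressed version is

$$a_2 = \int_{0}^{50} 0 \cdot f(x)\,dx + \int_{50}^{100} 1 \cdot f(x)\,dx = 1 - F(50).$$

Thus,

$$e_2 = \left| a_f - 100\,(1 - F(50)) \right| = \left| E[X] - 100 + 100\,F(50) \right|.$$

For three options,

$$a_3 = \int_{100/3}^{200/3} f(x)\,dx + 2 \int_{200/3}^{100} f(x)\,dx = 2 - F\!\left(\tfrac{100}{3}\right) - F\!\left(\tfrac{200}{3}\right),$$

$$e_3 = \left| a_f - 50\,a_3 \right| = \left| E[X] - 100 + 50\,F\!\left(\tfrac{100}{3}\right) + 50\,F\!\left(\tfrac{200}{3}\right) \right|.$$

In general, for $n$ total and $k$ compressed options,

$$a_k = \sum_{i=0}^{k-1} i \left[ F\!\left(\tfrac{n(i+1)}{k}\right) - F\!\left(\tfrac{ni}{k}\right) \right] = (k-1) - \sum_{i=1}^{k-1} F\!\left(\tfrac{ni}{k}\right),$$

$$e_k = \left| E[X] - n + \frac{n}{k-1} \sum_{i=1}^{k-1} F\!\left(\tfrac{ni}{k}\right) \right|. \tag{3}$$

Equation (3) allows us to characterize the relative performance of choices of $k$ for a given distribution $f$. For each $k$, it requires only knowing $k$ statistics of $f$ (the $k-1$ values of $F\!\left(\tfrac{ni}{k}\right)$ plus $E[X]$). In practice, these could likely be closely approximated from historical data for small $k$ values (though prior work has pointed out that there may be some challenges in closely approximating the cdf values of the ratings from historical data, because the historical data are not sampled at random from the true rating distribution [26]).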
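As an illustration of how few statistics are needed, the sketch below evaluates the closed-form floor error $\left| E[X] - n + \frac{n}{k-1} \sum_{i=1}^{k-1} F(ni/k) \right|$ from a mean and a cdf. The function names and the choice of the uniform distribution on $(0, 100)$ are assumptions made purely for illustration.

```python
def closed_form_floor_error(mean, cdf, n, k):
    """Evaluate |E[X] - n + n/(k-1) * sum_{i=1}^{k-1} F(n*i/k)| for scores on (0, n)."""
    # Only the k-1 cdf values at the region boundaries are needed, plus the mean.
    cdf_sum = sum(cdf(n * i / k) for i in range(1, k))
    return abs(mean - n + n / (k - 1) * cdf_sum)


def uniform_cdf(x, n=100.0):
    """cdf of the uniform distribution on (0, n); an illustrative choice of F."""
    return min(max(x / n, 0.0), 1.0)


for k in (2, 3, 5, 10):
    print(k, closed_form_floor_error(50.0, uniform_cdf, 100.0, k))
```

For the uniform density the printed errors are zero up to floating-point rounding for every $k$, because the boundary cdf values sum to $(k-1)/2$ and the rescaled compressed mean equals the true mean of 50.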

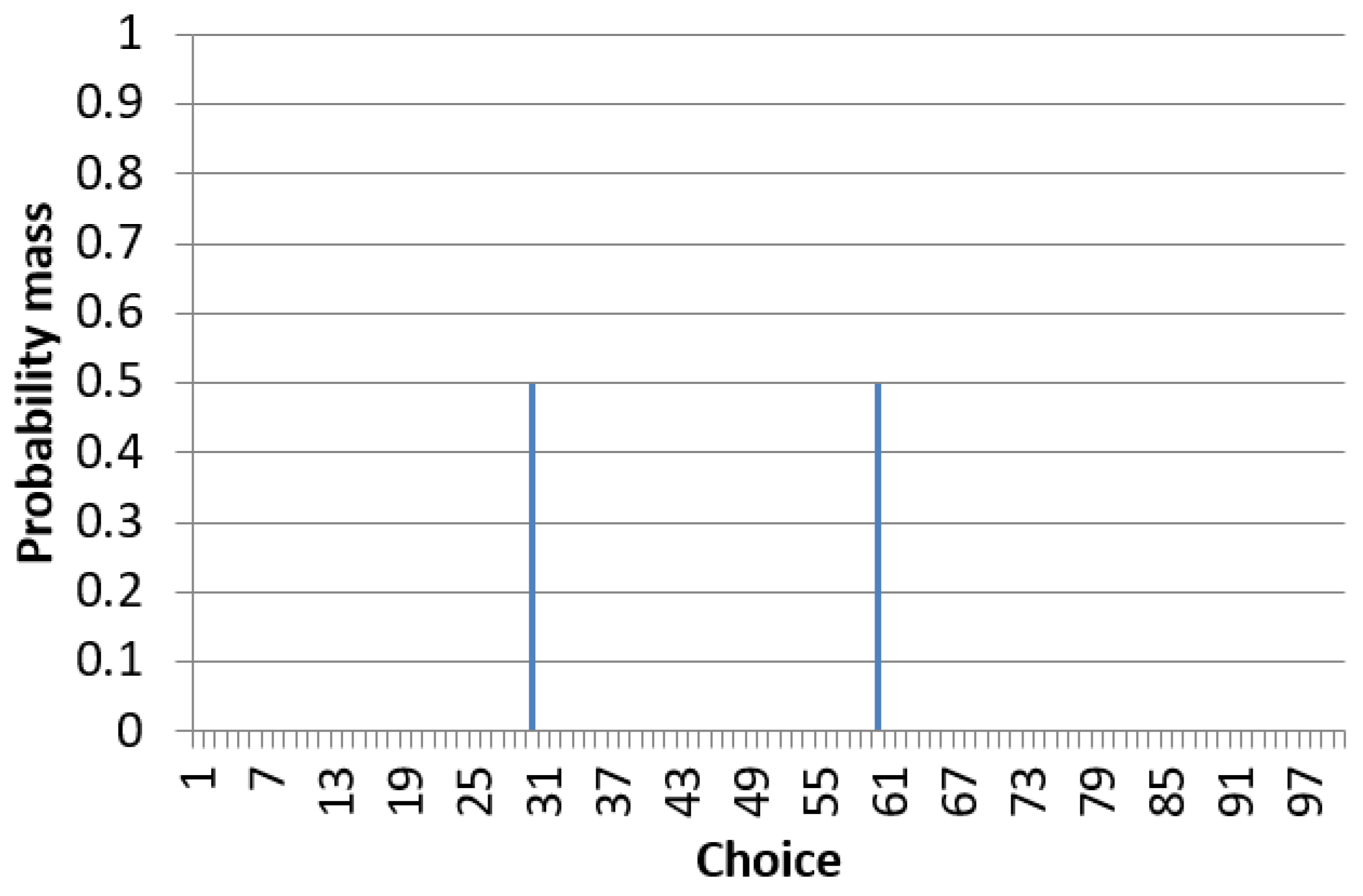

As an example, we see that

iff

Consider a full distribution that has half its mass right around 30 and half its mass right around 60 (

Figure 1). Then,

If we use

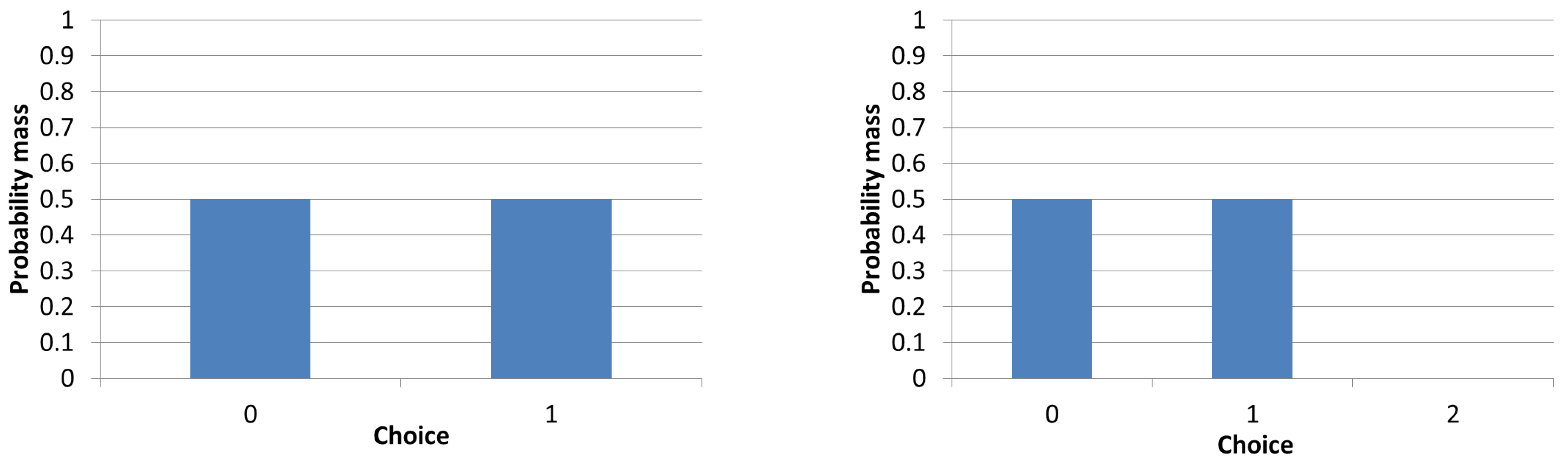

, then the mass at 30 will be mapped down to 0 (since

) and the mass at 60 will be mapped up to 1 (since

(

Figure 2). Thus,

Using normalization of

,

If we use

, then the mass at 30 will also be mapped down to 0 (since

), but the mass at 60 will be mapped to 1 (not the maximum possible value of 2 in this case), since

(

Figure 2). Thus, again

, but now, using normalization of

, we have

Thus, surprisingly, in this example, allowing more ranking choices actually significantly increases error.
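The arithmetic of this two-point example can be checked with a few lines of Python. This is a sketch under the assumptions stated in the example (point masses at 30 and 60, scores on a 0 to 100 scale, rescaling by $n/(k-1)$); the function name `floor_error_point_masses` is hypothetical.

```python
from math import floor


def floor_error_point_masses(masses, n, k):
    """Floor-compression error for a distribution made of point masses.

    masses maps a score in [0, n] to its probability.
    """
    a_f = sum(s * p for s, p in masses.items())
    # Region index floor(s / (n/k)); a score of exactly n is kept in the top region.
    a_k = sum(min(floor(s * k / n), k - 1) * p for s, p in masses.items())
    return abs(a_f - n / (k - 1) * a_k)


example = {30: 0.5, 60: 0.5}
print(floor_error_point_masses(example, 100, 2))  # 5.0
print(floor_error_point_masses(example, 100, 3))  # 20.0
```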

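If we happened to be in the case where both $a_f \ge 100\,a_2$ and $a_f \ge 50\,a_3$ (so that both quantities inside the absolute values are nonnegative), then we could remove the absolute values and reduce the expression to see that $e_2 < e_3$ iff

$$2F(50) < F\!\left(\tfrac{100}{3}\right) + F\!\left(\tfrac{200}{3}\right).$$

Note that the conditions $a_f \ge 100\,a_2$ and $a_f \ge 50\,a_3$ correspond to the constraints that $F(50) \ge 1 - \frac{E[X]}{100}$, $F\!\left(\tfrac{100}{3}\right) + F\!\left(\tfrac{200}{3}\right) \ge 2 - \frac{E[X]}{50}$. Taking these together, one specific set of conditions for which $e_2 < e_3$ is if both of the following are met:

$$F(50) \ge 1 - \frac{E[X]}{100}, \qquad 2F(50) < F\!\left(\tfrac{100}{3}\right) + F\!\left(\tfrac{200}{3}\right).$$

We can next consider the case where both $a_f \le 100\,a_2$ and $a_f \le 50\,a_3$. Here, we can remove the absolute values and switch the direction of the inequality to see that $e_2 < e_3$ iff $2F(50) > F\!\left(\tfrac{100}{3}\right) + F\!\left(\tfrac{200}{3}\right)$. Note that the conditions $a_f \le 100\,a_2$ and $a_f \le 50\,a_3$ correspond to the constraints that $F(50) \le 1 - \frac{E[X]}{100}$, $F\!\left(\tfrac{100}{3}\right) + F\!\left(\tfrac{200}{3}\right) \le 2 - \frac{E[X]}{50}$. Taking these together, a second set of conditions for which $e_2 < e_3$ is if both of the following are met:

$$F(50) \le 1 - \frac{E[X]}{100}, \qquad 2F(50) > F\!\left(\tfrac{100}{3}\right) + F\!\left(\tfrac{200}{3}\right).$$

For the case $a_f \le 100\,a_2$ and $a_f \ge 50\,a_3$, the conditions are that

$$F(50) \le 1 - \frac{E[X]}{100}, \qquad F\!\left(\tfrac{100}{3}\right) + F\!\left(\tfrac{200}{3}\right) \ge 2 - \frac{E[X]}{50}, \qquad 2E[X] - 200 + 100\,F(50) + 50\,F\!\left(\tfrac{100}{3}\right) + 50\,F\!\left(\tfrac{200}{3}\right) > 0.$$

Finally, for $a_f \ge 100\,a_2$ and $a_f \le 50\,a_3$, the conditions are that

$$F(50) \ge 1 - \frac{E[X]}{100}, \qquad F\!\left(\tfrac{100}{3}\right) + F\!\left(\tfrac{200}{3}\right) \le 2 - \frac{E[X]}{50}, \qquad 2E[X] - 200 + 100\,F(50) + 50\,F\!\left(\tfrac{100}{3}\right) + 50\,F\!\left(\tfrac{200}{3}\right) < 0.$$

Using $k = 2$ outperforms $k = 3$ ($e_2 < e_3$) if and only if one of these four sets of conditions holds.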

3. Rounding Compression

An alternative model we could have considered is to use rounding to produce the compressed scores as opposed to using the floor function from Equation (1). For instance, for the case $n = 100$, $k = 2$, instead of dividing $s$ by 50 and taking the floor, we could instead partition the points according to whether they are closest to 25 or 75. In the example above, the mass at 30 would be mapped to 25 and the mass at 60 would be mapped to 75. This would produce a compressed average score of $a_2 = 0.5 \cdot 25 + 0.5 \cdot 75 = 50$. No normalization would be necessary, and this would produce an error of $e_2 = |45 - 50| = 5$, as the floor approach did as well. Similarly, for $k = 3$, the region midpoints will be $\tfrac{100}{6}$, $50$, $\tfrac{500}{6}$. The mass at 30 will be mapped to $\tfrac{100}{6}$ and the mass at 60 will be mapped to 50. This produces a compressed average score of $a_3 = 0.5 \cdot \tfrac{100}{6} + 0.5 \cdot 50 = \tfrac{100}{3}$. This produces an error of $e_3 = \left| 45 - \tfrac{100}{3} \right| = \tfrac{35}{3} \approx 11.67$. Although the error for $k = 3$ is smaller than for the floor case, it is still significantly larger than that for $k = 2$, and using two options still outperforms using three for the example in this new model.

In general, this approach would create $k$ “midpoints” $\{m_i^k\}$:

$$m_i^k = \frac{n(2i+1)}{2k}, \qquad i = 0, \ldots, k-1.$$

For $k = 2$ (with $n = 100$), we have

$$e_2 = \left| E[X] - 75 + 50\,F(50) \right|.$$

One might wonder whether the floor approach would ever outperform the rounding approach (in the example above, the rounding approach produced lower error for $k = 3$ and the same error for $k = 2$). As a simple example to see that it can, consider the distribution with all mass on 0. The floor approach would produce $a_2 = 0$, giving an error of 0, while the rounding approach would produce $a_2 = 25$, giving an error of 25. Thus, the superiority of the approach is dependent on the distribution. We explore this further in the experiments.

For general $n$ and $k$, analysis as above yields

$$e_k = \left| E[X] - \frac{n(2k-1)}{2k} + \frac{n}{k} \sum_{i=1}^{k-1} F\!\left(\tfrac{ni}{k}\right) \right|.$$

Like for the floor model, $e_k$ requires only knowing $k$ statistics of $f$. The rounding model has an advantage over the floor model that there is no need to convert scores between different scales and perform normalization. One drawback is that it requires knowing $n$ (the expression for $m_i^k$ is dependent on $n$), while the floor model does not. In our experiments, we assume $n = 100$, but, in practice, it may not be clear what the agents’ ground truth granularity is, and it may be easier to just deal with scores from 1 to $k$. Furthermore, it may seem unnatural to essentially ask people to rate items with the midpoint values $m_i^k$ rather than with the scores $1, \ldots, k$ (though the conversion between the score and $m_i^k$ could be done behind the scenes, essentially circumventing the potential practical complication). One can generalize both the floor and rounding models by using a score of $s(n,k)_i$ for the $i$'th region. For the floor setting, we set $s(n,k)_i = i$, and for the rounding setting $s(n,k)_i = m_i^k = \frac{n(2i+1)}{2k}$.
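The rounding rule can be sketched in Python as follows, assuming $k$ equal-width regions of $(0, n)$ whose midpoints are $n(2i+1)/(2k)$ for $i = 0, \ldots, k-1$, and a distribution given as point masses. The function names `midpoints` and `rounding_error` are illustrative, not taken from the original work.

```python
def midpoints(n, k):
    """Midpoints of the k equal-width regions of (0, n)."""
    return [n * (2 * i + 1) / (2 * k) for i in range(k)]


def rounding_error(masses, n, k):
    """Error when each score is replaced by its nearest region midpoint.

    masses maps a score in [0, n] to its probability (point masses only).
    """
    mids = midpoints(n, k)
    a_f = sum(s * p for s, p in masses.items())
    # Each score is mapped to the closest midpoint (ties go to the lower one).
    a_k = sum(min(mids, key=lambda m: abs(m - s)) * p for s, p in masses.items())
    # No rescaling is needed: the midpoints already live on the original scale.
    return abs(a_f - a_k)


two_point = {30: 0.5, 60: 0.5}
print(rounding_error(two_point, 100, 2), rounding_error(two_point, 100, 3))
print(rounding_error({0: 1.0}, 100, 2))
```

On the two-point distribution this reproduces the errors of 5 and roughly 11.67 discussed for rounding, and on the distribution with all mass at 0 it gives 25, the case where flooring does better.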

4. Computational Simulations

The above analysis leads to the immediate question of whether the example for which $e_2 < e_3$ was a fluke or whether using fewer choices can actually reduce error under reasonable assumptions on the generative model. We study this question using simulations with what we believe are the two most natural models. While we have studied the continuous setting where the full set of options is continuous over $(0, n)$ and the compressed set is discrete $\{0, \ldots, k-1\}$, we now consider the perhaps more realistic setting where the full set is the discrete set $\{0, \ldots, n-1\}$ and the compressed set is the same (though it should be noted that the two settings are likely quite similar qualitatively).

The first generative model we consider is a uniform model in which the values of the pmf for each of the $n$ possible values are chosen independently and uniformly at random. Formally, we construct a histogram of $n$ scores according to Algorithm 1. We then compress the full scores to a compressed distribution by applying Algorithm 2. The second is a Gaussian model in which the values are generated according to a normal distribution with specified mean $\mu$ and standard deviation $\sigma$ (values below 0 are set to 0 and values above $n-1$ to $n-1$). This model also takes as a parameter a number of samples $s$ to use for generating the scores. The procedure is given by Algorithm 3. As for the uniform setting, Algorithm 2 is then used to compress the scores.

| Algorithm 1 Procedure for generating full scores in a uniform model |

| Inputs: Number of scores n |

| scoreSum ← 0 |

| for i = 0 : n − 1 do |

|     r ← random(0,1) |

|     scores[i] ← r |

|     scoreSum ← scoreSum + r |

| for i = 0 : n − 1 do |

|     scores[i] = scores[i] / scoreSum |

| Algorithm 2 Procedure for compressing scores |

| Inputs: scores[], number of total scores n, desired number of compressed scores k |

| Z(n, k) ← (n − 1) / (k − 1) | ▹ Normalization |

| for i = 0 : n − 1 do |

|     scoresCompressed[⌊i / (n/k)⌋] += scores[i] |

| Algorithm 3 Procedure for generating scores in a Gaussian model |

| Inputs: Number of scores n, number of samples s, mean μ, standard deviation σ |

| for i = 0 : s − 1 do |

|     r ← randomGaussian(μ, σ) |

|     if r < 0 then |

|         r ← 0 |

|     else if r > n − 1 then |

|         r ← n − 1 |

|     ++scores[round(r)] |

| for i = 0 : n − 1 do |

|     scores[i] = scores[i] / s |
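A possible Python rendering of the three procedures is sketched below. It is an interpretation of the pseudocode rather than the authors' implementation: the function names, the `rng` parameter (any object with `random()` and `gauss()` methods, such as the standard `random` module or a `random.Random` instance), and the use of integer floor division are assumptions.

```python
import random


def generate_uniform_scores(n, rng=random):
    """Algorithm 1 sketch: draw n values uniformly from (0, 1) and normalize them."""
    raw = [rng.random() for _ in range(n)]
    total = sum(raw)
    return [r / total for r in raw]


def compress_scores(scores, k):
    """Algorithm 2 sketch: add the mass of score i to bucket floor(i / (n/k))."""
    n = len(scores)
    compressed = [0.0] * k
    for i, p in enumerate(scores):
        compressed[(i * k) // n] += p  # integer form of floor(i / (n/k))
    return compressed


def generate_gaussian_scores(n, s, mu, sigma, rng=random):
    """Algorithm 3 sketch: histogram of s Gaussian samples clipped to [0, n-1]."""
    counts = [0] * n
    for _ in range(s):
        r = rng.gauss(mu, sigma)
        r = min(max(r, 0), n - 1)  # clip out-of-range samples to the end points
        counts[round(r)] += 1      # Python rounds exact halves to the even integer
    return [c / s for c in counts]
```

The normalization factor $(n-1)/(k-1)$ from Algorithm 2 is not applied here; it would be used when the mean of the compressed histogram is compared with the mean of the full one.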

For our simulations, we used $n = 100$, and considered $k = 2, 3, 4, 5, 10$, which are popular and natural values. For the Gaussian model, we used , , . For each set of simulations, we computed the errors for all considered values of $k$ for $m$ “items” (each corresponding to a different distribution generated according to the specified model). The main quantities we are interested in computing are the number of times that each value of $k$ produces the lowest error over the $m$ items, and the average value of the errors over all items for each $k$ value.

In the first set of experiments, we compared performance between using $k = 2, 3, 4, 5, 10$ to see for how many of the trials each value of $k$ produced the minimal error (Table 1). Not surprisingly, we see that the number of victories (number of times that the value of $k$ produced the minimal error) increases monotonically with the value of $k$, while the average error decreases monotonically (recall that we would have zero error if we set $k = n$). However, what is perhaps surprising is that using a smaller number of compressed scores produced the optimal error in a far from negligible number of the trials. For the uniform model, using 10 scores minimized error only around 53% of the time, while using five scores minimized error 17% of the time, and even using two scores minimized it 5.6% of the time. The results were similar for the Gaussian model, though a bit more in favor of larger values of $k$, which is what we would expect because the Gaussian model is less likely to generate “fluke” distributions that could favor the smaller values.
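The two quantities tracked in these experiments (victories and average error per $k$) could be estimated for the uniform model along the following lines. This is a rough sketch, not the code used to produce the tables: the function names, the seeded `random.Random` generator, and the small number of items in the usage line are all assumptions.

```python
import random


def floor_error(pmf, k):
    """|a_f - (n-1)/(k-1) * a_k| under floor compression of scores 0..n-1."""
    n = len(pmf)
    a_f = sum(s * p for s, p in enumerate(pmf))
    a_k = sum(((s * k) // n) * p for s, p in enumerate(pmf))
    return abs(a_f - (n - 1) / (k - 1) * a_k)


def count_victories(n, ks, m, rng):
    """Simulate m items from the uniform model; return (wins, average errors)."""
    wins = {k: 0 for k in ks}
    total_err = {k: 0.0 for k in ks}
    for _ in range(m):
        raw = [rng.random() for _ in range(n)]
        z = sum(raw)
        pmf = [r / z for r in raw]
        errs = {k: floor_error(pmf, k) for k in ks}
        wins[min(ks, key=errs.get)] += 1  # the k with the lowest error wins this item
        for k in ks:
            total_err[k] += errs[k]
    return wins, {k: total_err[k] / m for k in ks}


wins, avg_err = count_victories(100, [2, 3, 4, 5, 10], 1000, random.Random(0))
print(wins)
print(avg_err)
```

Exact counts depend on the random seed and on the number of simulated items, so the output is only expected to show the same qualitative pattern as the reported results.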

We next explored the number of victories between just

and

, with results in

Table 2. Again, we observed that using a larger value of

k generally reduces error, as expected. However, we find it extremely surprising that using

produces a lower error 37% of the time. As before, the larger

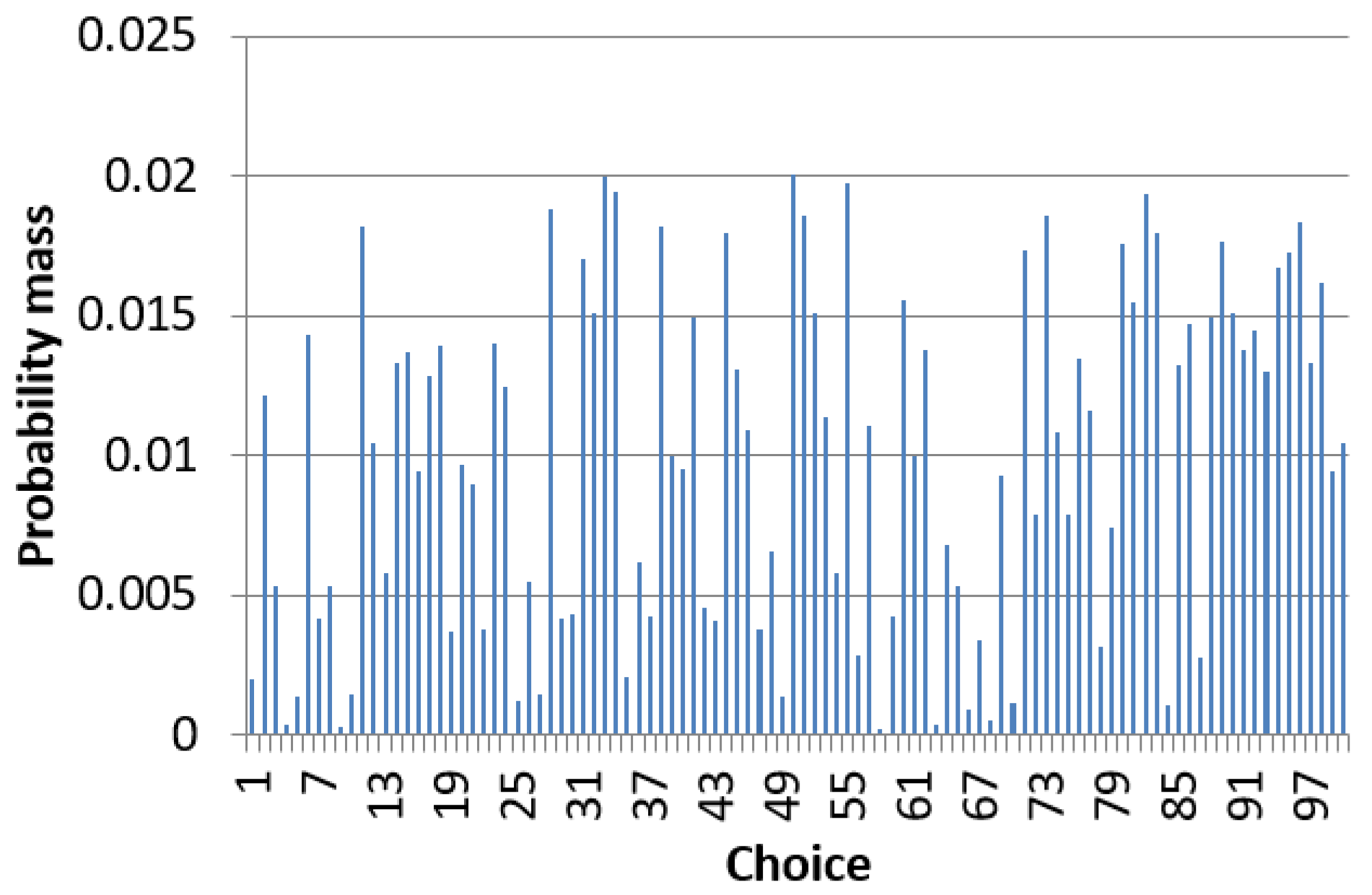

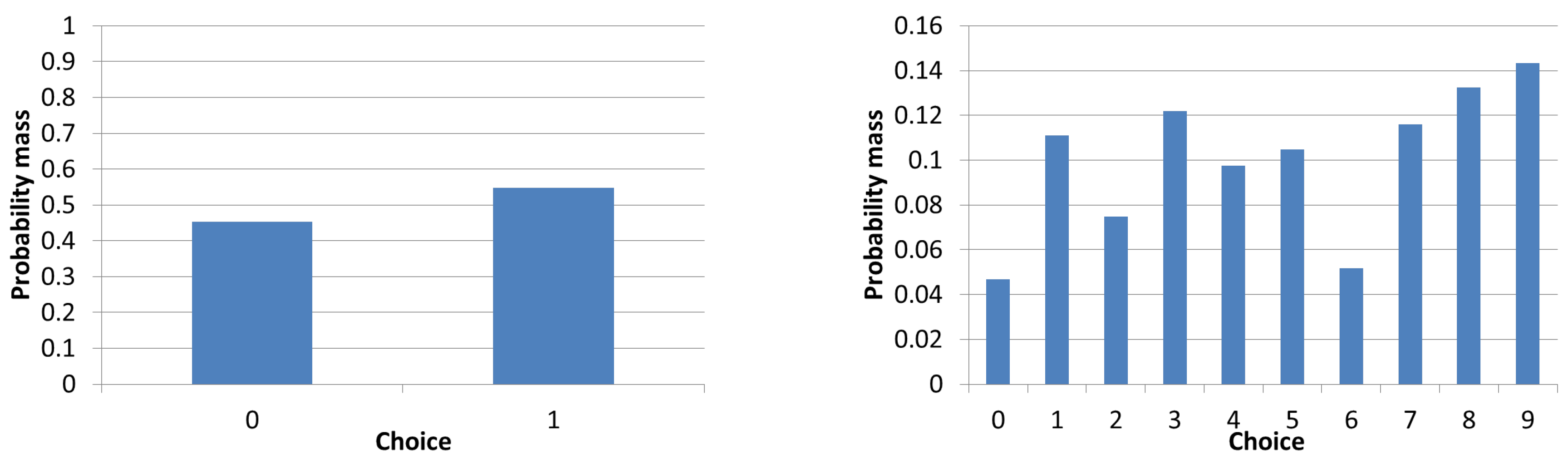

k value performs relatively better in the Gaussian model. We also looked at results for the most extreme comparison,

vs.

(

Table 3). Using two scores outperformed 10 8.3% of the time in the uniform setting, which was larger than we expected. In

Figure 3 and

Figure 4, we present a distribution for which

particularly outperformed

. The full distribution has mean 54.188, while the

compression has mean 0.548 (54.253 after normalization) and

has mean 5.009 (55.009 after normalization). The normalized errors between the means were 0.906 for

and 0.048 for

, yielding a difference of 0.859 in favor of

.

We next repeated the extreme $k = 2$ vs. 10 comparison, but we imposed a restriction that the $k = 10$ option could not give a score below 3 or above 6 (Table 4). (If it selected a score below 3, then we set it to 3, and if above 6, we set it to 6.) For some settings, for instance paper reviewing, extreme scores are very uncommon, and we strongly suspect that the vast majority of scores are in this middle range. Some possible explanations are that reviewers who give extreme scores may be required to put in additional work to justify their scores and are more likely to be involved in arguments with other reviewers (or with the authors in the rebuttal). Reviewers could also experience higher regret or embarrassment for being “wrong” and possibly off-base in the review by missing an important nuance. In this setting, using $k = 2$ outperforms $k = 10$ nearly

of the time in the uniform model.

We also considered the situation where we restricted the $k = 10$ scores to fall between 3 and 7, as opposed to 3 and 6 (Table 5). Note that the possible scores range from 0–9, so this restriction is asymmetric in that the lowest three possible scores are eliminated while only the highest two are. This is motivated by the intuition that raters may be less inclined to give extremely low scores, which may hurt the feelings of an author (for the case of paper reviewing). In this setting, which is seemingly quite similar to the 3–6 setting, $k = 2$ produced lower error 93% of the time in the uniform model!
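One way to read the truncation used in these two experiments is as clipping each compressed score into an allowed range before averaging. The sketch below follows that reading; the function name `truncated_floor_error` and the exact point at which clipping is applied are assumptions, since the text specifies only that out-of-range scores are set to the nearest allowed value.

```python
def truncated_floor_error(pmf, k, low, high):
    """Floor-compression error when compressed scores are clipped to [low, high].

    pmf[s] is the fraction of ratings with ground truth score s, s = 0..n-1.
    """
    n = len(pmf)
    a_f = sum(s * p for s, p in enumerate(pmf))
    # Compress with the floor rule, then clip to the allowed range of scores.
    a_k = sum(min(max((s * k) // n, low), high) * p for s, p in enumerate(pmf))
    return abs(a_f - (n - 1) / (k - 1) * a_k)


# Hypothetical check: all mass on ground truth score 45 with n = 100.
pmf = [0.0] * 100
pmf[45] = 1.0
print(truncated_floor_error(pmf, 10, 3, 6))
print(truncated_floor_error(pmf, 10, 3, 7))
```

Setting `low = 0` and `high = k - 1` recovers the unrestricted floor compression.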

We next repeated these experiments for the rounding compression function. There are several interesting observations from Table 6. In this setting,

is the clear choice, performing best in both models (by a large margin for the Gaussian model). The smaller values of $k$ perform significantly better with rounding than with flooring (as indicated by lower errors), while the larger values perform significantly worse, and their errors seem to approach 0.5 for both models. Taking both compressions into account, the optimal overall approach would still be to use flooring with $k = 10$, which produced the smallest average errors of 0.19 and 0.1 in the two models (Table 1), while using

with rounding produced errors of 0.47 and 0.24 (Table 6). The 2 vs. 3 experiments produced very similar results for the two compressions (Table 7). The 2 vs. 10 results were quite different, with 2 performing better almost 40% of the time with rounding (Table 8), vs. less than 10% with flooring (Table 3). In the 2 vs. 10 truncated 3–6 experiments, 2 performed relatively better with rounding for both models (Table 9), and for 2 vs. 10 truncated 3–7, $k = 2$ performed better nearly all the time (Table 10).

6. Conclusions

Settings in which humans must rate items or entities from a small discrete set of options are ubiquitous. We have singled out several important applications: rating attractiveness for dating websites, assigning grades to students, and reviewing academic papers. The number of available options can vary considerably, even within different instantiations of the same application. For instance, we saw that three popular sites for attractiveness rating use completely different systems: Hot or Not uses a 1–10 system, OkCupid uses a 1–5 “star” system, and Tinder uses a binary 1–2 “swipe” system. Despite the problem’s importance, we have not seen it studied theoretically previously. Our goal is to select $k$ to minimize the average (normalized) error between the compressed average score and the ground truth average. We studied two natural models for generating the scores. The first is a uniform model where the scores are selected independently and uniformly at random, and the second is a Gaussian model where they are selected according to a more structured procedure that gives preference to the options near the center. We provided a closed-form solution for continuous distributions with arbitrary cdf. This allows us to characterize the relative performance of choices of $k$ for a given distribution. We saw that, counterintuitively, using a smaller value of $k$ can actually produce lower error for some distributions (even though we know that, as $k$ approaches $n$, the error approaches 0): we presented specific distributions for which using $k = 2$ outperforms $k = 3$ and $k = 10$.

We performed numerous simulations comparing the performance between different values of $k$ for different generative models and metrics. The main metric was the absolute number of times for which values of $k$ produced the minimal error. We also considered the average error over all simulated items. As expected, we observed that performance generally improves monotonically with $k$, and more so for the Gaussian model than for the uniform model. However, we observe that small $k$ values can be optimal a non-negligible amount of the time, which is perhaps counterintuitive. In fact, using $k = 2$ outperformed $k = 3, 4, 5,$ and 10 on 5.6% of the trials in the uniform setting. Just comparing 2 vs. 3, $k = 2$ performed better around 37% of the time. Using $k = 2$ outperformed 10 8.3% of the time, and when we restricted $k = 10$ to only assign values between 3 and 7 inclusive, $k = 2$ actually produced lower error 93% of the time! This could correspond to a setting where raters are ashamed to assign extreme scores (particularly extreme low scores). We compared two natural compression rules (one based on the floor function and one based on rounding) and weighed the pros and cons of each. For smaller $k$, rounding leads to significantly lower error than flooring, with the clear optimal choice, while for larger $k$, rounding leads to much larger error.

A future avenue is to extend our analysis to better understand the specific distributions for which different $k$ values are optimal, whereas our simulations aggregate over many distributions. Application domains will have distributions with different properties, and improved understanding will allow us to determine which $k$ is optimal for the types of distributions we expect to encounter. This improved understanding can be coupled with further data exploration.