RazorNet: Adversarial Training and Noise Training on a Deep Neural Network Fooled by a Shallow Neural Network

Abstract

1. Introduction

2. Related Work

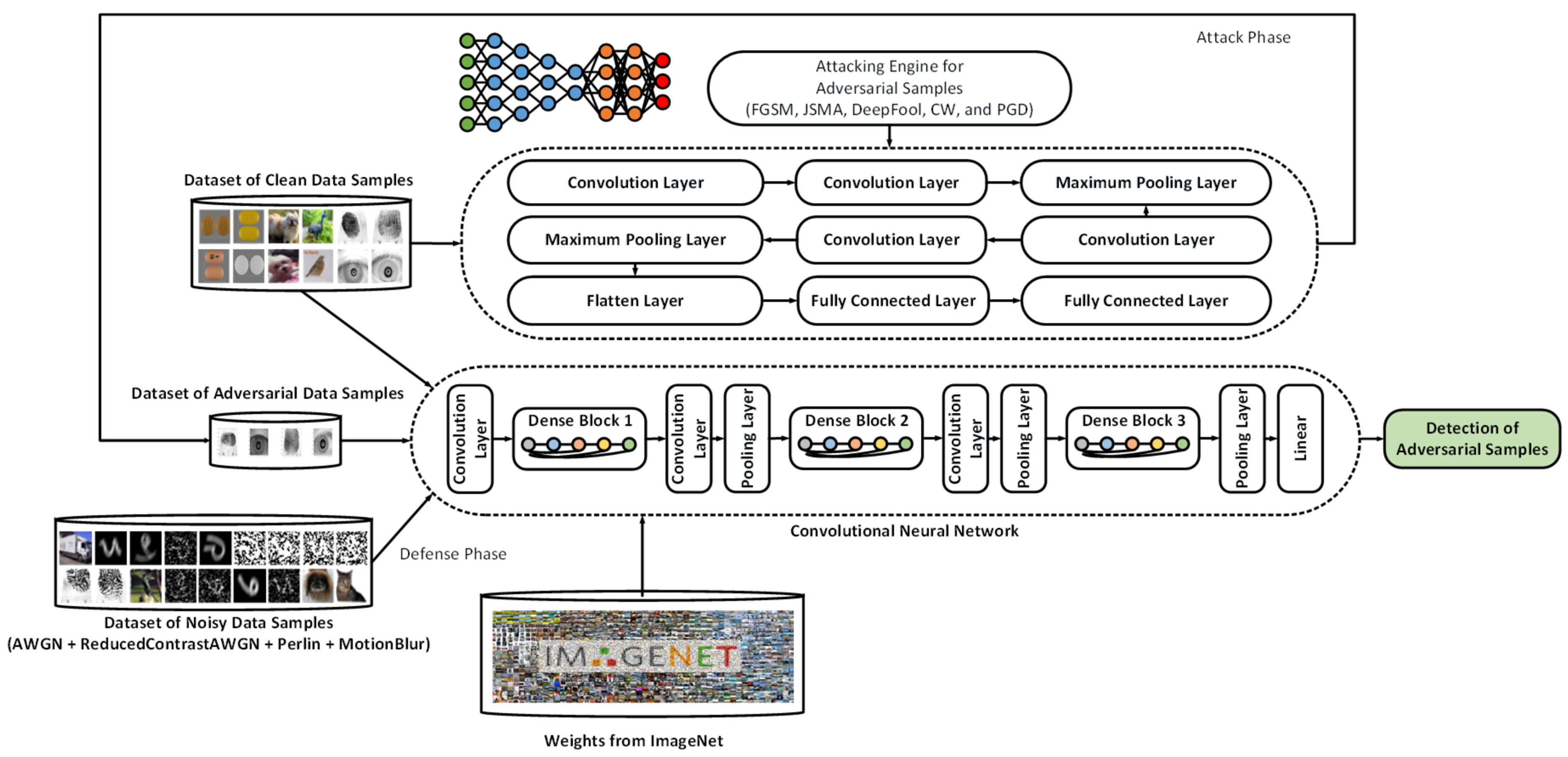

3. Technical Background

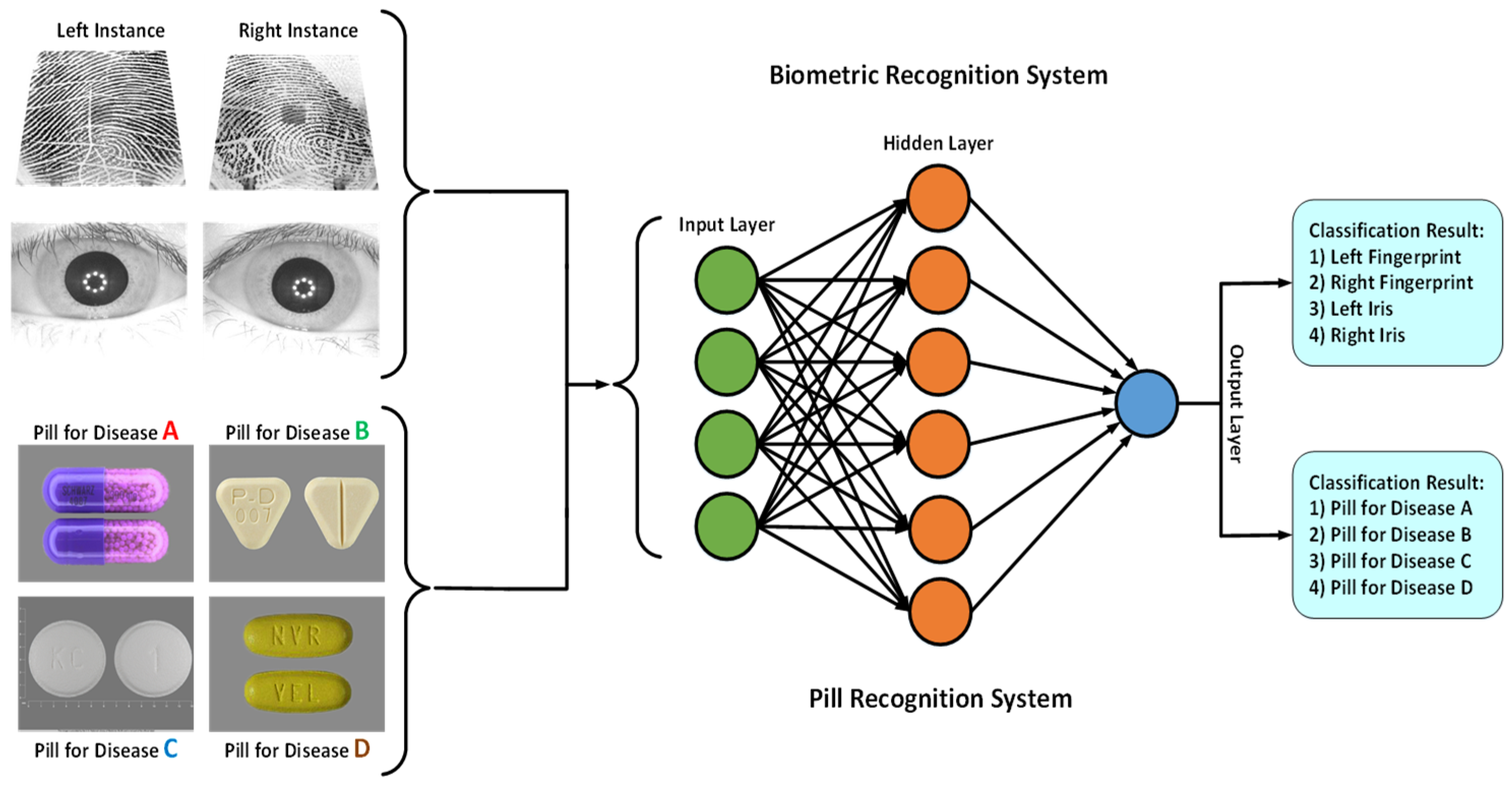

3.1. Data Recognition System for Fingerprint, Iris, and Pill Image Data

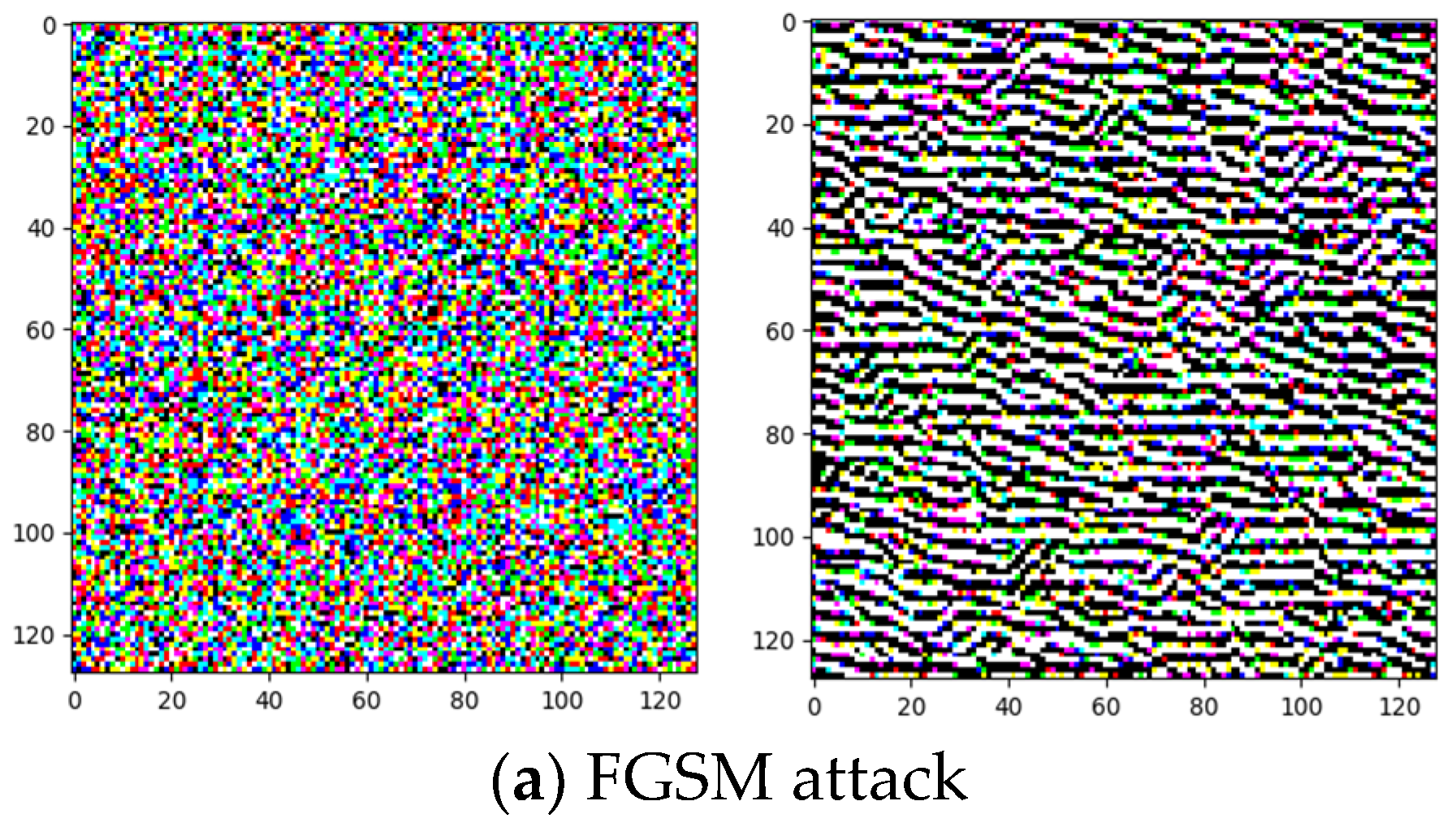

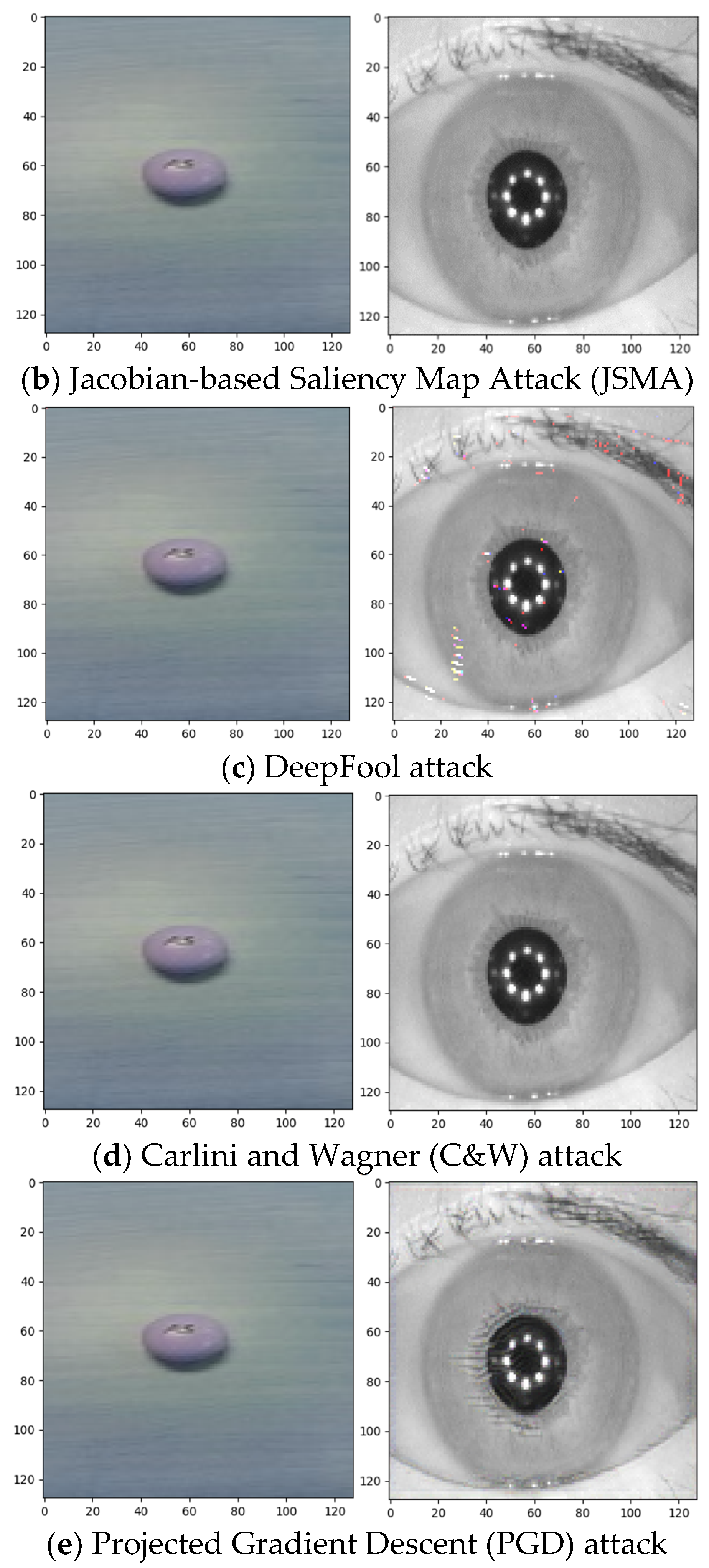

3.2. Threat Model: Well-Known Adversarial Attacks for Fooling Neural Networks

3.3. Noise Training

3.4. Shallow-Deep CNN-Based System Architecture

4. Proposed System and Methodology

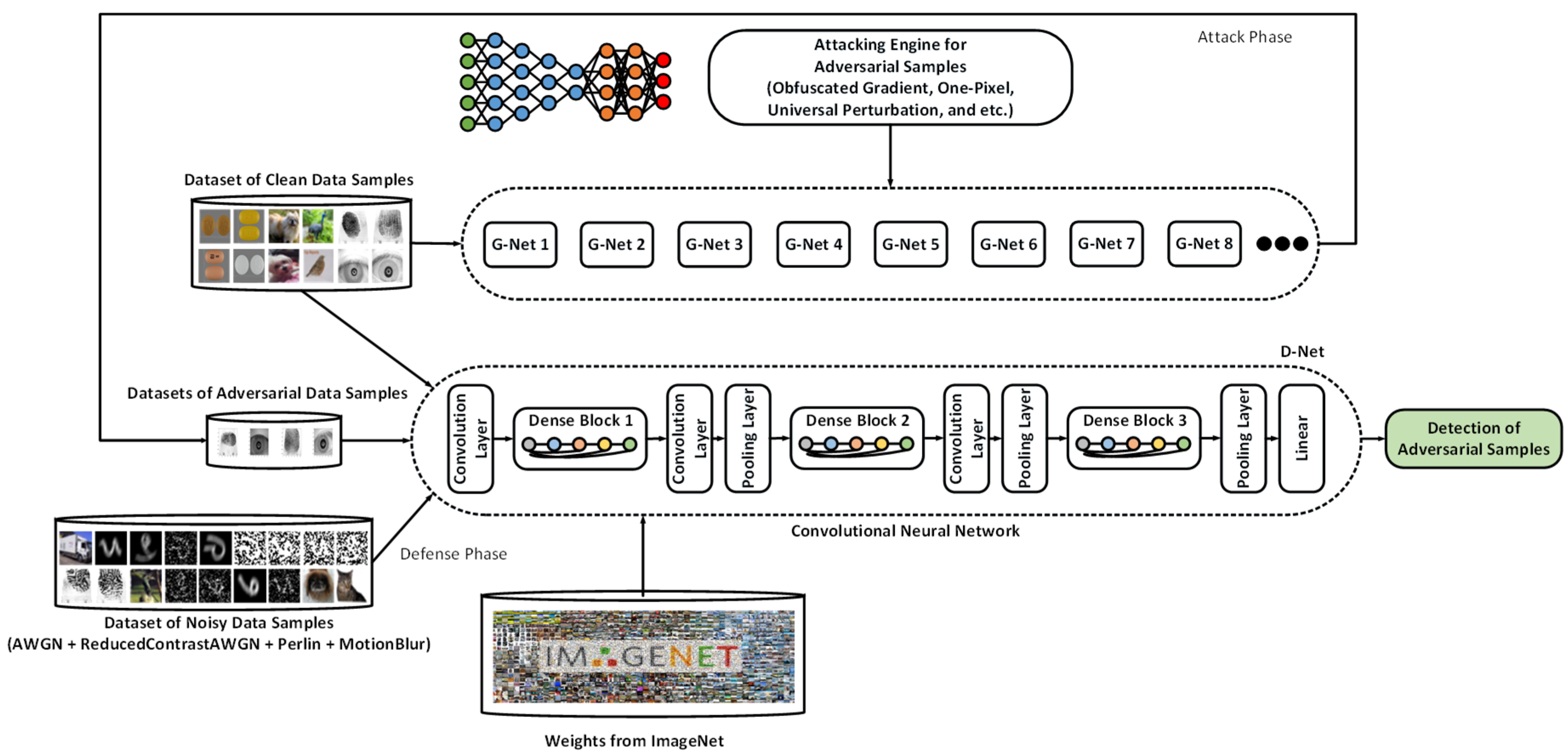

| Algorithm 1: The protocol and overall scheme of the shallow-deep neural network architecture, adversarial training, and transfer learning for detecting adversarial perturbations. |
|---|
| 01: Input: Dataset of clean data samples (X), dataset of noisy data samples (Y), weights from ImageNet (W), shallow neural network model (SM), and deep neural network model (DM) |
| 02: Output: Detection of adversarial samples |
| 03: K ← AdversarialSampleGenerator(X, SM) |
| 04: AdversarialSampleDetection ← AdversarialSampleDetector(X, Y, W, K, DM) |
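The two steps of Algorithm 1 can be illustrated with a small Python sketch. It rests on several assumptions that are not stated in this excerpt. The attack in step 03 is taken to be FGSM. Both SM and DM are replaced by logistic-regression stand-ins on flattened toy images, and the ImageNet weights W and the transfer-learning step are left out. Step 04 is read as retraining on clean, adversarial, and noisy data with their original labels, which mirrors the "Clean Data + Adversarial Data + Noisy Data" setting in the results table. All function names (`train_logistic`, `adversarial_sample_generator`, `add_gaussian_noise`, and so on) are hypothetical.

```python
import math
import random

# Hypothetical stand-ins: logistic regression on flattened images in [0, 1].
# The paper's actual shallow and deep CNNs are NOT reproduced here.

def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def logit(model, x):
    w, b = model
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def train_logistic(X, labels, lr=0.5, epochs=200):
    """Plain SGD on the binary cross-entropy loss."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(X, labels):
            g = sigmoid(logit((w, b), x)) - y  # dLoss/dlogit
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
            b -= lr * g
    return w, b

def sign(v):
    return (v > 0) - (v < 0)

def adversarial_sample_generator(X, labels, SM, eps=0.1):
    """Step 03 (assumed FGSM): x_adv = clip(x + eps * sign(dLoss/dx), 0, 1)."""
    w, _ = SM
    K = []
    for x, y in zip(X, labels):
        g = sigmoid(logit(SM, x)) - y  # dLoss/dx_i = g * w_i
        K.append([min(1.0, max(0.0, xi + eps * sign(g * wi))) for xi, wi in zip(x, w)])
    return K

def add_gaussian_noise(X, sigma, rng):
    """Noisy samples Y: additive Gaussian noise, clipped to the pixel range."""
    return [[min(1.0, max(0.0, xi + rng.gauss(0.0, sigma))) for xi in x] for x in X]

def accuracy(model, X, labels):
    preds = [1 if logit(model, x) > 0 else 0 for x in X]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)

# Toy data: 4-"pixel" images, label 1 when the mean intensity exceeds 0.5.
rng = random.Random(0)
X = [[rng.random() for _ in range(4)] for _ in range(40)]
labels = [1 if sum(x) > 2.0 else 0 for x in X]

SM = train_logistic(X, labels)  # shallow model that crafts the attack
K = adversarial_sample_generator(X, labels, SM, eps=0.1)
Y = add_gaussian_noise(X, sigma=0.05, rng=rng)

# Step 04 (assumed): retrain on clean + adversarial + noisy data with the
# original class labels; a second logistic model stands in for the deep model.
train_X = X + K + Y
train_labels = labels * 3
DM = train_logistic(train_X, train_labels)

clean_acc = accuracy(SM, X, labels)
adv_acc_no_defense = accuracy(SM, K, labels)
adv_acc_defended = accuracy(DM, K, labels)
```

Because FGSM on a linear model can only push each logit toward the wrong class, the undefended accuracy on K can never exceed the clean accuracy in this sketch; the retrained stand-in shows the shape of the defense, not the paper's numbers.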

5. Experimental Results and Evaluation

6. Limitations and Future Work

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

- Bastani, O.; Ioannou, Y.; Lampropoulos, L.; Vytiniotis, D.; Nori, A.; Criminisi, A. Measuring neural net robustness with constraints. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 2613–2621.

- Gu, S.; Rigazio, L. Towards deep neural network architectures robust to adversarial examples. arXiv 2014, arXiv:1412.5068.

- Huang, R.; Xu, B.; Schuurmans, D.; Szepesvári, C. Learning with a strong adversary. arXiv 2015, arXiv:1511.03034.

- Jin, J.; Dundar, A.; Culurciello, E. Robust convolutional neural networks under adversarial noise. arXiv 2015, arXiv:1511.06306.

- Papernot, N.; McDaniel, P.; Wu, X.; Jha, S.; Swami, A. Distillation as a defense to adversarial perturbations against deep neural networks. In Proceedings of the 2016 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 582–597.

- Rozsa, A.; Rudd, E.M.; Boult, T.E. Adversarial diversity and hard positive generation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 25–32.

- Shaham, U.; Yamada, Y.; Negahban, S. Understanding adversarial training: Increasing local stability of supervised models through robust optimization. Neurocomputing 2018, 307, 195–204.

- Zheng, S.; Song, Y.; Leung, T.; Goodfellow, I. Improving the robustness of deep neural networks via stability training. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 4480–4488.

- Mirjalili, V.; Ross, A. Soft biometric privacy: Retaining biometric utility of face images while perturbing gender. In 2017 IEEE International Joint Conference on Biometrics (IJCB); IEEE: Piscataway, NJ, USA, 2017; pp. 564–573.

- Jia, R.; Liang, P. Adversarial examples for evaluating reading comprehension systems. arXiv 2017, arXiv:1707.07328.

- Belinkov, Y.; Bisk, Y. Synthetic and natural noise both break neural machine translation. arXiv 2017, arXiv:1711.02173.

- Samanta, S.; Mehta, S. Towards crafting text adversarial samples. arXiv 2017, arXiv:1707.02812.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Tramèr, F.; Kurakin, A.; Papernot, N.; Goodfellow, I.; Boneh, D.; McDaniel, P. Ensemble adversarial training: Attacks and defenses. arXiv 2017, arXiv:1705.07204.

- Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards deep learning models resistant to adversarial attacks. arXiv 2017, arXiv:1706.06083.

- Papernot, N.; McDaniel, P.; Goodfellow, I.; Jha, S.; Celik, Z.B.; Swami, A. Practical black-box attacks against machine learning. In Proceedings of the 2017 ACM on Asia Conference on Computer and Communications Security, New York, NY, USA, 2–6 April 2017; pp. 506–519.

- Połap, D.; Woźniak, M.; Wei, W.; Damaševičius, R. Multi-threaded learning control mechanism for neural networks. Future Gener. Comput. Syst. 2018, 87, 16–34.

- Yu, L.; Zhang, W.; Wang, J.; Yu, Y. SeqGAN: Sequence generative adversarial nets with policy gradient. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

- Narayanan, B.N.; Hardie, R.C.; Balster, E.J. Multiframe Adaptive Wiener Filter Super-Resolution with JPEG2000-Compressed Images. Available online: https://link.springer.com/article/10.1186/1687-6180-2014-55 (accessed on 19 July 2019).

- Narayanan, B.N.; Hardie, R.C.; Kebede, T.M. Performance analysis of a computer-aided detection system for lung nodules in CT at different slice thicknesses. J. Med. Imaging 2018, 5, 014504.

- Chivukula, A.S.; Liu, W. Adversarial Deep Learning Models with Multiple Adversaries. IEEE Trans. Knowl. Data Eng. 2018, 31, 1066–1079.

- Kwon, H.; Kim, Y.; Park, K.W.; Yoon, H.; Choi, D. Multi-targeted adversarial example in evasion attack on deep neural network. IEEE Access 2018, 6, 46084–46096.

- Shen, J.; Zhu, X.; Ma, D. TensorClog: An Imperceptible Poisoning Attack on Deep Neural Network Applications. IEEE Access 2019, 7, 41498–41506.

- Kulikajevas, A.; Maskeliūnas, R.; Damaševičius, R.; Misra, S. Reconstruction of 3D Object Shape Using Hybrid Modular Neural Network Architecture Trained on 3D Models from ShapeNetCore Dataset. Sensors 2019, 19, 1553.

- Carrara, F.; Falchi, F.; Caldelli, R.; Amato, G.; Becarelli, R. Adversarial image detection in deep neural networks. Multimed. Tools Appl. 2019, 78, 2815–2835.

- Li, Y.; Wang, Y. Defense Against Adversarial Attacks in Deep Learning. Appl. Sci. 2019, 9, 76.

- Grosse, K.; Manoharan, P.; Papernot, N.; Backes, M.; McDaniel, P. On the (statistical) detection of adversarial examples. arXiv 2017, arXiv:1702.06280.

- Gong, Z.; Wang, W.; Ku, W.S. Adversarial and clean data are not twins. arXiv 2017, arXiv:1704.04960.

- Metzen, J.H.; Genewein, T.; Fischer, V.; Bischoff, B. On detecting adversarial perturbations. arXiv 2017, arXiv:1702.04267.

- Feinman, R.; Curtin, R.R.; Shintre, S.; Gardner, A.B. Detecting adversarial samples from artifacts. arXiv 2017, arXiv:1703.00410.

- Carlini, N.; Wagner, D. Towards evaluating the robustness of neural networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–24 May 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 39–57.

- Ganin, Y.; Ustinova, E.; Ajakan, H.; Germain, P.; Larochelle, H.; Laviolette, F.; Marchand, M.; Lempitsky, V. Domain-adversarial training of neural networks. J. Mach. Learn. Res. 2016, 17, 1–35.

- Marchisio, A.; Nanfa, G.; Khalid, F.; Hanif, M.A.; Martina, M.; Shafique, M. CapsAttacks: Robust and Imperceptible Adversarial Attacks on Capsule Networks. arXiv 2019, arXiv:1901.09878.

- Xu, W.; Evans, D.; Qi, Y. Feature squeezing: Detecting adversarial examples in deep neural networks. arXiv 2017, arXiv:1704.01155.

- Kannan, H.; Kurakin, A.; Goodfellow, I. Adversarial logit pairing. arXiv 2018, arXiv:1803.06373.

- Mopuri, K.R.; Babu, R.V. Gray-box Adversarial Training. arXiv 2018, arXiv:1808.01753.

- Neelakantan, A.; Vilnis, L.; Le, Q.V.; Sutskever, I.; Kaiser, L.; Kurach, K.; Martens, J. Adding gradient noise improves learning for very deep networks. arXiv 2015, arXiv:1511.06807.

- Smilkov, D.; Thorat, N.; Kim, B.; Viégas, F.; Wattenberg, M. SmoothGrad: Removing noise by adding noise. arXiv 2017, arXiv:1706.03825.

- Gao, F.; Wu, T.; Li, J.; Zheng, B.; Ruan, L.; Shang, D.; Patel, B. SD-CNN: A shallow-deep CNN for improved breast cancer diagnosis. Comput. Med. Imaging Graph. 2018, 70, 53–62.

- Ernst, D.; Das, S.; Lee, S.; Blaauw, D.; Austin, T.; Mudge, T.; Kim, N.S.; Flautner, K. Razor: Circuit-level correction of timing errors for low-power operation. IEEE Micro 2004, 24, 10–20.

- Basu, S.; Karki, M.; Ganguly, S.; DiBiano, R.; Mukhopadhyay, S.; Gayaka, S.; Kannan, R.; Nemani, R. Learning sparse feature representations using probabilistic quadtrees and deep belief nets. Neural Process. Lett. 2017, 45, 855–867.

- CASIA-FingerprintV5. 2010. Available online: http://biometrics.idealtest.org/dbDetailForUser.do?id=7 (accessed on 26 December 2017).

- CASIA-IrisV4. 2010. Available online: http://biometrics.idealtest.org/dbDetailForUser.do?id=4 (accessed on 26 December 2017).

- 1k Pharmaceutical Pill Image Dataset. Available online: https://www.kaggle.com/trumedicines/1k-pharmaceutical-pill-image-dataset (accessed on 2 June 2018).

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2009, 22, 1345–1359.

- Bottou, L. Large-scale machine learning with stochastic gradient descent. In Compstat’2010; Physica-Verlag HD: Heidelberg, Germany, 2010; pp. 177–186.

- Liu, X.; Cheng, M.; Zhang, H.; Hsieh, C.J. Towards robust neural networks via random self-ensemble. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 369–385.

- Ranjan, R.; Sankaranarayanan, S.; Castillo, C.D.; Chellappa, R. Improving network robustness against adversarial attacks with compact convolution. arXiv 2017, arXiv:1712.00699.

- Song, Y.; Kim, T.; Nowozin, S.; Ermon, S.; Kushman, N. PixelDefend: Leveraging generative models to understand and defend against adversarial examples. arXiv 2017, arXiv:1710.10766.

- Dai, X.; Gong, S.; Zhong, S.; Bao, Z. Bilinear CNN Model for Fine-Grained Classification Based on Subcategory-Similarity Measurement. Appl. Sci. 2019, 9, 301.

- Varior, R.R.; Haloi, M.; Wang, G. Gated siamese convolutional neural network architecture for human re-identification. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Cham, Switzerland, 2016; pp. 791–808.

- Ranjan, R.; Patel, V.M.; Chellappa, R. HyperFace: A deep multi-task learning framework for face detection, landmark localization, pose estimation, and gender recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 41, 121–135.

- Twinanda, A.P.; Shehata, S.; Mutter, D.; Marescaux, J.; De Mathelin, M.; Padoy, N. EndoNet: A deep architecture for recognition tasks on laparoscopic videos. IEEE Trans. Med. Imaging 2016, 36, 86–97.

| Type of Attack | Euclidean (Fingerprint) | Euclidean (Iris) | Euclidean (Pill) | Manhattan (Fingerprint) | Manhattan (Iris) | Manhattan (Pill) | Chebyshev (Fingerprint) | Chebyshev (Iris) | Chebyshev (Pill) | Correlation Coefficient (Fingerprint) | Correlation Coefficient (Iris) | Correlation Coefficient (Pill) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 0.12 | 0.01 | 0.10 | 0.37 | 0.08 | 0.24 | 0.11 | 0.05 | 0.10 | 0.99 | 0.50 | 0.33 |
| | | | | | | | | | | 1.0 | 0.50 | 0.33 |
| | 0.08 | 0.01 | 0.05 | 0.21 | 0.05 | 0.11 | 0.06 | 0.01 | 0.04 | 1.00 | 0.50 | 0.33 |
| | 0.02 | 0.01 | 0.05 | 0.11 | 0.05 | 0.11 | 0.01 | 0.001 | 0.04 | 1.00 | 0.50 | 0.33 |
| | 0.11 | 0.01 | 0.10 | 0.38 | 0.10 | 0.28 | 0.10 | 0.01 | 0.10 | 0.99 | 0.49 | 0.31 |
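The four measures in the distance table can be computed on flattened images with a short Python sketch. The excerpt does not say which pair of images each entry compares or how pixel values were normalized; the sketch assumes a clean image and its adversarial counterpart, both flattened to equal-length vectors in [0, 1], and the toy vectors are invented for illustration. Note that the correlation coefficient is a similarity (1.0 means the two images vary identically), whereas the other three grow as the images diverge.

```python
import math

def euclidean(a, b):
    """L2 distance between two flattened images."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def manhattan(a, b):
    """L1 distance: total absolute pixel change."""
    return sum(abs(x - y) for x, y in zip(a, b))

def chebyshev(a, b):
    """L-infinity distance: largest single-pixel change."""
    return max(abs(x - y) for x, y in zip(a, b))

def correlation_coefficient(a, b):
    """Pearson correlation between the two pixel vectors."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)

# Hypothetical toy images: the adversarial copy moves each pixel by +/-0.1.
clean = [0.2, 0.4, 0.6, 0.8]
adversarial = [0.3, 0.3, 0.7, 0.7]
scores = {
    "Euclidean": euclidean(clean, adversarial),
    "Manhattan": manhattan(clean, adversarial),
    "Chebyshev": chebyshev(clean, adversarial),
    "Correlation Coefficient": correlation_coefficient(clean, adversarial),
}
```

For a sign-based perturbation of size 0.1 on every pixel, the Chebyshev distance equals the perturbation budget itself, which is why it is a natural measure for L-infinity-bounded attacks.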

| Shallow Neural Network | Deep Neural Network |
|---|---|
| Dense Block (1) = | |
| Dense Block (2) = | |
| Flatten Layer | |
| Fully Connected Layer 1 | Dense Block (3) = |
| Fully Connected Layer 2 | |
| Dense Units = 1/4 | |
| Softmax | Dense Block (4) = |
| | Softmax |

| Method | Dataset | System Detection Accuracy on Clean Data | System Detection Accuracy on Attacked Data Without Defense | System Detection Accuracy on Attacked Data (Clean Data + Adversarial Data) | System Detection Accuracy on Attacked Data (Clean Data + Adversarial Data + Noisy Data) |
|---|---|---|---|---|---|
| Ours - Biometric Dataset | Chinese Academy of Sciences’ Institute of Automation (CASIA) Dataset: images of iris and fingerprint data | 90.58% | 1.31% | 80.65% | 93.4% |
| Ours - Pillbox Image Dataset | Pillbox Dataset: images of pills | 99.92% | 34.55% | 96.03% | 98.20% |
| [48] | CIFAR | 92% | 10% | 86% | |
| [49] | MNIST | N/A | 19.39% | 75.95% | |
| [49] | CIFAR | N/A | 8.57% | 71.38% | |
| [50] (ResNet) | MNIST | 88% | 0% (Strongest Attack) | 83% (Strongest Attack) | |
| [50] (VGG) | MNIST | 89% | 36% (Strongest Attack) | 85% (Strongest Attack) | |
| [50] (ResNet) | CIFAR | 85% | 7% (Strongest Attack) | 71% (Strongest Attack) | |
| [50] (VGG) | CIFAR | 82% | 37% (Strongest Attack) | 80% (Strongest Attack) | |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Taheri, S.; Salem, M.; Yuan, J.-S. RazorNet: Adversarial Training and Noise Training on a Deep Neural Network Fooled by a Shallow Neural Network. Big Data Cogn. Comput. 2019, 3, 43. https://doi.org/10.3390/bdcc3030043
