A Study on Attention Attracting Elements of 360-Degree Videos Based on VR Eye-Tracking System

Abstract

1. Introduction

2. Related Work

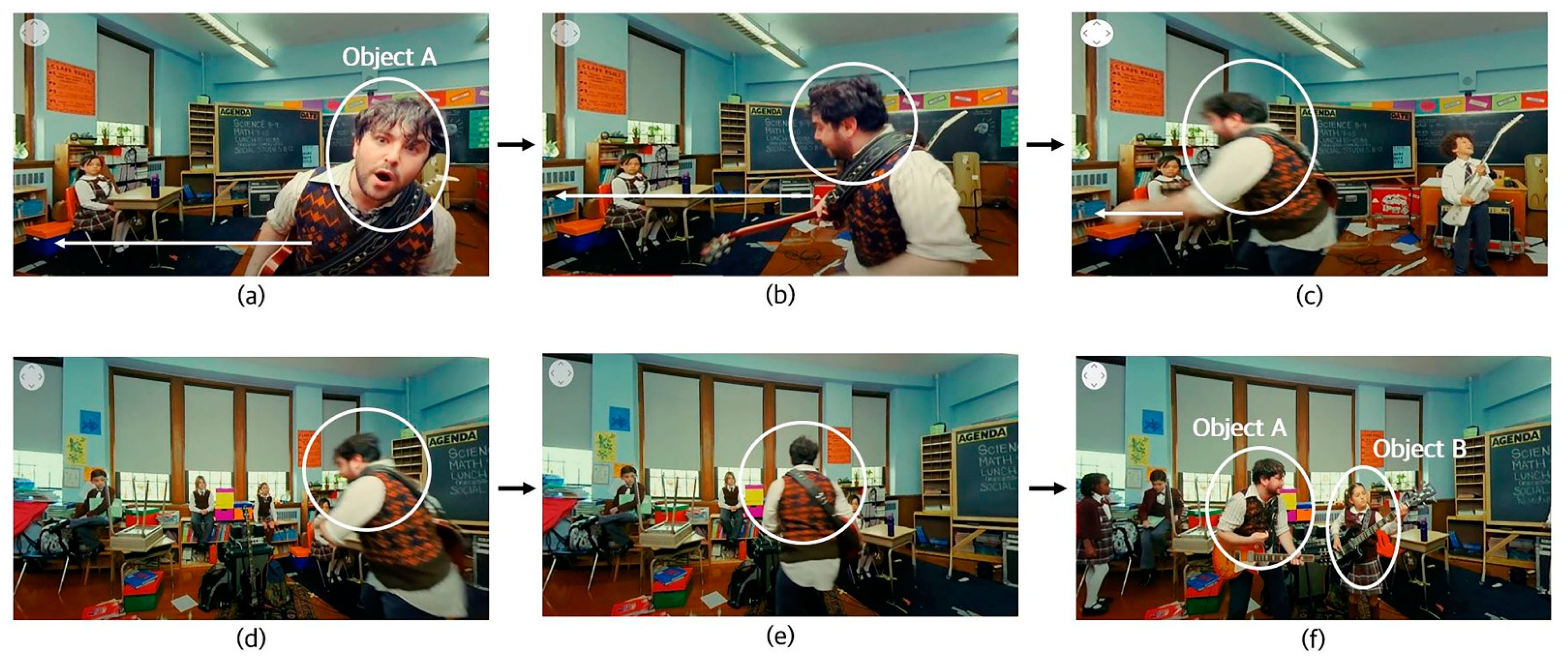

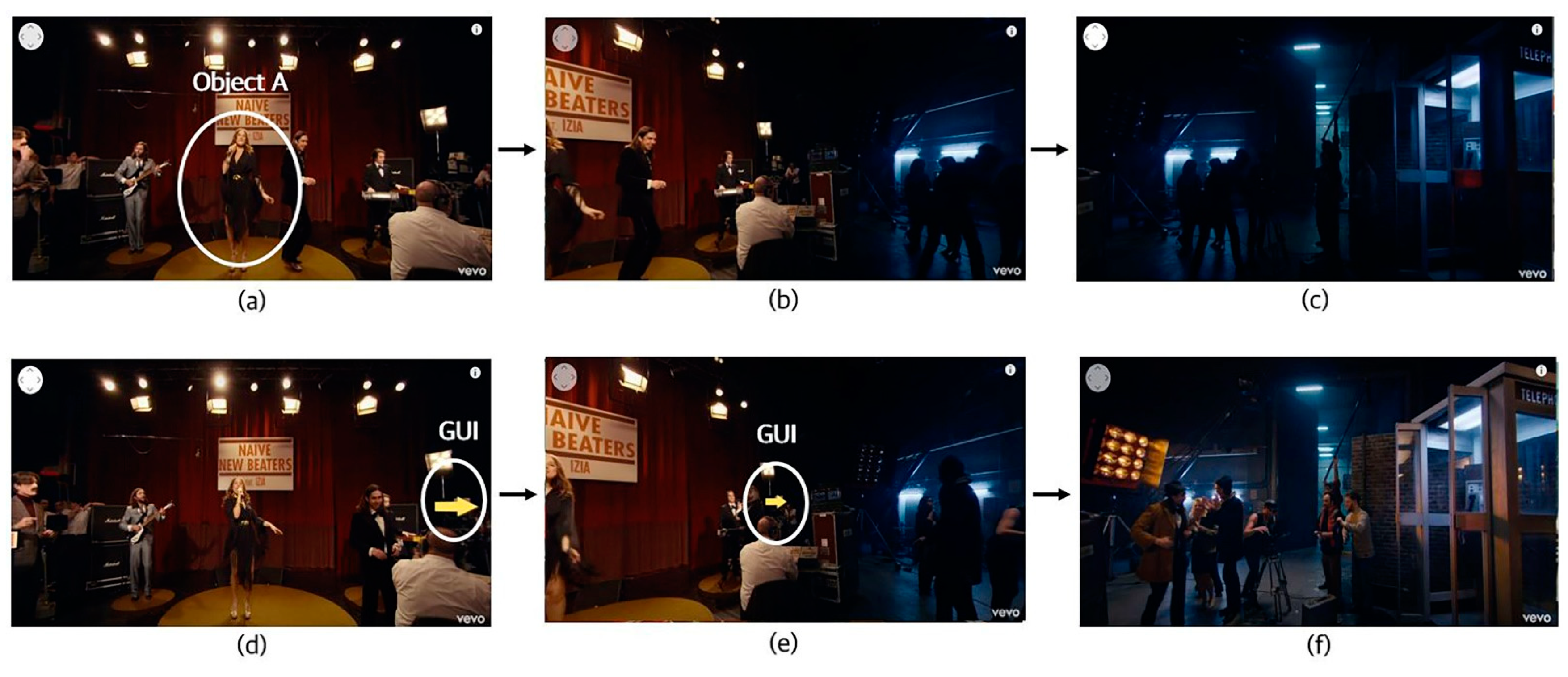

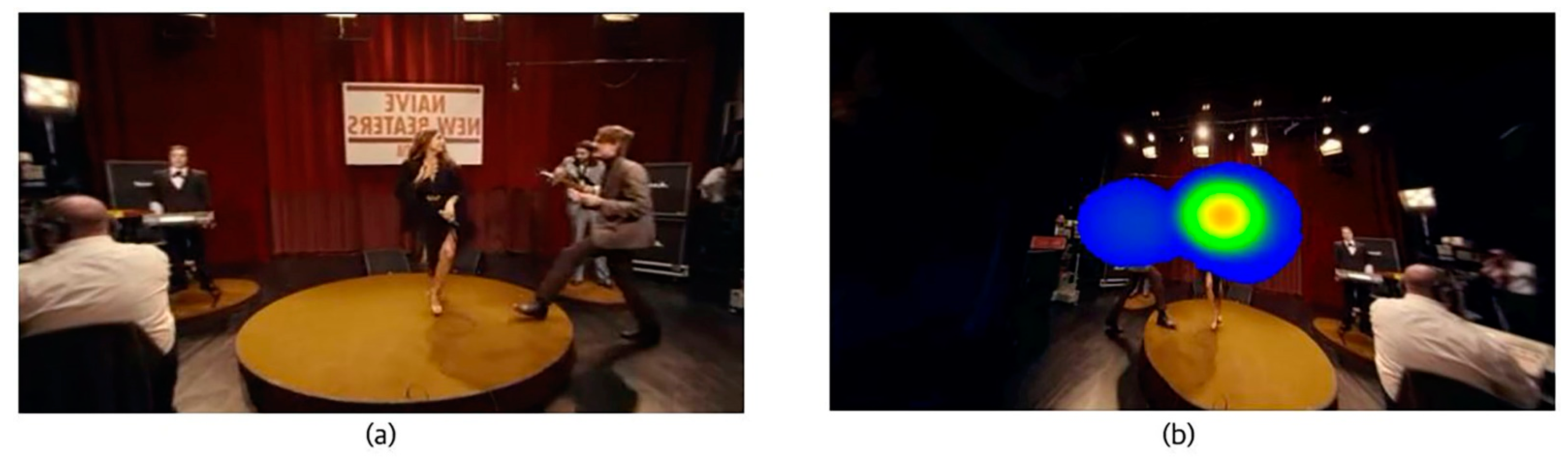

3. Analysis of User’s Attention Attracting Elements in 360-Degree VR Videos

4. Overview and Results of 360-Degree VR Eye-Tracking Experiment

4.1. Experimental Procedure

4.2. Experimental Participants and Material

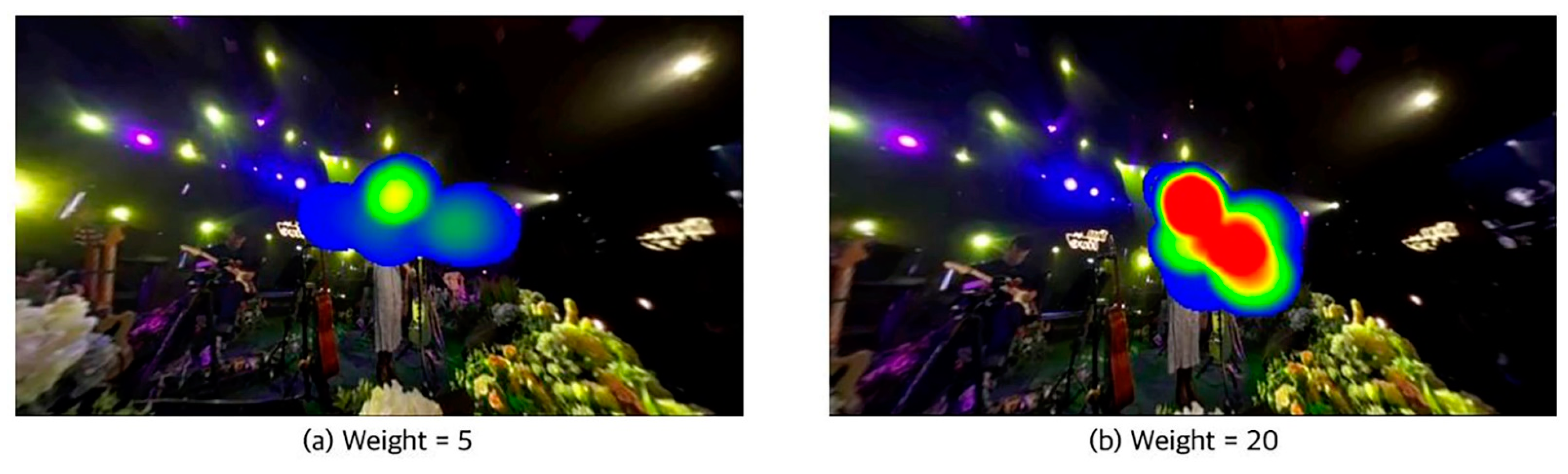

4.3. Data Analysis Methods and Experimental Results

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ban, Y.; Xie, L.; Xu, Z.; Zhang, X.; Guo, Z.; Wang, Y. CUB360: Exploiting cross-users behaviors for viewport prediction in 360 video adaptive streaming. In Proceedings of the 2018 IEEE International Conference on Multimedia and Expo (ICME), San Diego, CA, USA, 23–27 July 2018; pp. 1–6.

- Dooley, K. Storytelling with virtual reality in 360-degrees: A new screen grammar. Stud. Australas. Cine. 2017, 11, 161–171.

- Powell, W.; Powell, V.; Brown, P.; Cook, M.; Uddin, J. Getting around in Google Cardboard: Exploring navigation preferences with low-cost mobile VR. In Proceedings of the 2016 IEEE 2nd Workshop on Everyday Virtual Reality (WEVR), Greenville, SC, USA, 20 March 2016; pp. 5–8.

- Qian, F.; Ji, L.; Han, B.; Gopalakrishnan, V. Optimizing 360 video delivery over cellular networks. In Proceedings of the 5th Workshop on All Things Cellular: Operations, Applications and Challenges, New York, NY, USA, 3–7 October 2016; pp. 1–6.

- Maranes, C.; Gutierrez, D.; Serrano, A. Exploring the impact of 360° movie cuts in users’ attention. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Atlanta, GA, USA, 22–26 March 2020; pp. 73–82. Available online: https://ieeevr.org/2020/ (accessed on 15 October 2021).

- Mateer, J. Directing for cinematic virtual reality: How the traditional film director’s craft applies to immersive environments and notions of presence. Media Pract. 2017, 18, 14–25.

- Sheikh, A.; Brown, A.; Evans, M.; Watson, Z. Directing attention in 360-degree video. In Proceedings of the IBC 2016 Conference, Amsterdam, The Netherlands, 8–12 September 2016; pp. 1–9.

- Fearghail, C.O.; Ozcinar, C.; Knorr, S.; Smolic, A. Director’s cut: Analysis of aspects of interactive storytelling for VR films. In Interactive Storytelling; Springer: Berlin/Heidelberg, Germany, 2018; pp. 308–322.

- Clay, V.; König, P.; König, S.U. Eye tracking in virtual reality. J. Eye Mov. Res. 2019, 12, 1–18.

- Majaranta, P.; Bulling, A. Eye Tracking and Eye-Based Human–Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2014.

- Duchowski, A.T. Eye Tracking Methodology; Springer: London, UK, 2017.

- Poole, A.; Ball, L.J. Eye tracking in HCI and usability research. In Encyclopedia of Human Computer Interaction; IGI Global: Hershey, PA, USA, 2005; pp. 211–219.

- Duchowski, A.T.; Shivashankaraiah, V.; Rawls, T.; Gramopadhye, A.K.; Melloy, B.J.; Kanki, B. Binocular eye tracking in virtual reality for inspection training. In Proceedings of the 2000 Symposium on Eye Tracking Research & Applications, Palm Beach Gardens, FL, USA, 6–8 November 2000; pp. 89–96.

- Piumsomboon, T.; Lee, G.; Lindeman, R.W.; Billinghurst, M. Exploring natural eye-gaze-based interaction for immersive virtual reality. In Proceedings of the 2017 IEEE Symposium on 3D User Interfaces (3DUI), Los Angeles, CA, USA, 18–19 March 2017; pp. 36–39.

- Pfeiffer, J.; Pfeiffer, T.; Meißner, M.; Weiß, E. Eye-tracking-based classification of information search behavior using machine learning: Evidence from experiments in physical shops and virtual reality shopping environments. Inf. Syst. Res. 2020, 31, 675–691.

- Redefining the Axiom of Story: The VR and 360 Video Complex. Available online: https://techcrunch.com/2016/01/14/redefining-the-axiom-of-story-the-vr-and-360-video-complex/ (accessed on 8 December 2021).

- Tricart, C. Virtual Reality Filmmaking; Taylor & Francis: New York, NY, USA, 2017.

- Xu, Q.; Ragan, E.D. Effects of character guide in immersive virtual reality stories. In Proceedings of the International Conference on Human-Computer Interaction, Orlando, FL, USA, 26–31 July 2019; pp. 375–391.

- Danieau, F.; Guillo, A.; Dore, R. Attention guidance for immersive video content in head-mounted displays. In Proceedings of the 2017 IEEE Virtual Reality (VR), Los Angeles, CA, USA, 18–22 March 2017; pp. 205–206.

- Nielsen, L.T.; Møller, M.B.; Hartmeyer, S.D.; Ljung, T.C.; Nilsson, N.C.; Nordahl, R.; Serafin, S. Missing the point: An exploration of how to guide users’ attention during cinematic virtual reality. In Proceedings of the 22nd ACM Conference on Virtual Reality Software and Technology, New York, NY, USA, 2–4 November 2016; pp. 229–232.

- Rothe, S.; Buschek, D.; Hußmann, H. Guidance in cinematic virtual reality: Taxonomy, research status and challenges. Multimodal Technol. Interact. 2019, 3, 19.

- Speicher, M.; Rosenberg, C.; Degraen, D.; Daiber, F.; Krüger, A. Exploring visual guidance in 360-degree videos. In Proceedings of the 2019 ACM International Conference on Interactive Experiences for TV and Online Video, Salford, Manchester, UK, 5–7 June 2019; pp. 1–12.

- Schmitz, A.; MacQuarrie, A.; Julier, S.; Binetti, N.; Steed, A. Directing versus attracting attention: Exploring the effectiveness of central and peripheral cues in panoramic videos. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Atlanta, GA, USA, 22–26 March 2020; pp. 63–72.

- Noa Neal ‘Graffiti’ 4K 360° Music Video Clip. Available online: https://www.youtube.com/watch?v=LByJ9Q6Lddo&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=1 (accessed on 25 October 2021).

- Avicii-Waiting For Love (360 Video). Available online: https://www.youtube.com/watch?v=edcJ_JNeyhg&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=2 (accessed on 25 October 2021).

- Yuko Ando / "360° Surround" MV (Short Ver.). Available online: https://www.youtube.com/watch?v=zDtETW9Tp7w&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=3 (accessed on 25 October 2021).

- INFINITE “Bad” Official MV (360 VR). Available online: https://www.youtube.com/watch?v=BNqW6uE-Q_o&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=4 (accessed on 25 October 2021).

- SCHOOL OF ROCK: The Musical—“You’re in the Band” (360 Video). Available online: https://www.youtube.com/watch?v=GFRPXRhBYOI&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=5 (accessed on 25 October 2021).

- SCANDOL 360: EXID_HOT PINK (VR). Available online: https://www.youtube.com/watch?v=iv_bNWjsQSM&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=6 (accessed on 25 October 2021).

- Peggy Hsu. [Grow old with you] 360 VR MV (Official 360 VR). Available online: https://www.youtube.com/watch?v=k2qgvvXhOaI&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=7 (accessed on 25 October 2021).

- Naive New Beaters-Heal Tomorrow-Clip 360° ft. Izia. Available online: https://www.youtube.com/watch?v=JxVVNm35rJE&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=8 (accessed on 25 October 2021).

- Devo-“What We Do” [OFFICIAL MUSIC VIDEO]. Available online: https://www.youtube.com/watch?v=Rr3akQ8XX3M&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=9 (accessed on 25 October 2021).

- Trevor Wesley-Chivalry is Dead (Official 360 Music Video). Available online: https://www.youtube.com/watch?v=jb0a9kGoZu0&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=10 (accessed on 25 October 2021).

- [M2] 360VR dance: GFriend—Rough. Available online: https://www.youtube.com/watch?v=uAt5srzLt4I&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=11 (accessed on 25 October 2021).

- Leah Dou-May Rain Official MV (360VR Version). Available online: https://www.youtube.com/watch?v=gI5g4YNORyo&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=12 (accessed on 25 October 2021).

- Run The Jewels-Crown (Official VR 360 Music Video). Available online: https://www.youtube.com/watch?v=JCNzOQ2Ok8s&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=13 (accessed on 25 October 2021).

- OneRepublic-Kids (360 Version). Available online: https://www.youtube.com/watch?v=eppTvwQNgro&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=14 (accessed on 25 October 2021).

- Farruko Ft. Ky-Many Marley-Chillax [360° Official Video]. Available online: https://www.youtube.com/watch?v=c9QkJ6272rs&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=15 (accessed on 25 October 2021).

- Imagine Dragons-Shots (Official Music Video). Available online: https://www.youtube.com/watch?v=81fer9ulOeA&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=16 (accessed on 25 October 2021).

- Sampha-(No One Knows Me) Like The Piano (Official 360° VR Music Video). Available online: https://www.youtube.com/watch?v=V-ncE-yR8mI&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=17 (accessed on 25 October 2021).

- The Range-Florida (Official 360° Video). Available online: https://www.youtube.com/watch?v=L40WLGB7V2w&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=18 (accessed on 25 October 2021).

- La La Land Medley in VR!! Sam Tsui & Megan Nicole|Sam Tsui. Available online: https://www.youtube.com/watch?v=VavMLy0QzSA&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=19 (accessed on 25 October 2021).

- The Kills-Whirling Eye (Official 360° VR Video). Available online: https://www.youtube.com/watch?v=GMcr4-7hlKs&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=20 (accessed on 25 October 2021).

- Depeche Mode-“Going Backwards” (360 Version). Available online: https://www.youtube.com/watch?v=y3GXqq9V9a0&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=21 (accessed on 25 October 2021).

- Emri-ADORE-360° (Official Video). Available online: https://www.youtube.com/watch?v=PHqOki9dsgo&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=22 (accessed on 25 October 2021).

- Elton John-Farewell Yellow Brick Road: The Legacy (VR360). Available online: https://www.youtube.com/watch?v=0zXZIgnub6w&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=23 (accessed on 25 October 2021).

- Mamma Mia! Here We Go Again-Waterloo 360 Music Video. Available online: https://www.youtube.com/watch?v=HRC-A5BaY2Q&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=24 (accessed on 25 October 2021).

- The Dandy Warhols “Be Alright” 360° Official Music Video-Shot @ The Dandys’ studio The Odditorium. Available online: https://www.youtube.com/watch?v=peM92qyUy5o&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=25 (accessed on 25 October 2021).

- Christopher-Irony (Official VR Music Video). Available online: https://www.youtube.com/watch?v=Yeo8034XisQ&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=26 (accessed on 25 October 2021).

- [Official MV] (POY Muzeum)-Space High (Feat. TAKUWA, PUP) (360 VR camera). Available online: https://www.youtube.com/watch?v=fw5lUOMN4dY&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=27 (accessed on 25 October 2021).

- Popstar-Reflections (360 VR Experience). Available online: https://www.youtube.com/watch?v=60YGz-gMu-M&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=28 (accessed on 25 October 2021).

- Green Screen MV. Available online: https://www.youtube.com/watch?v=qGbHz2Q-YJ8&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=29 (accessed on 25 October 2021).

- Nolie-Come Over (Official Music Video). Available online: https://www.youtube.com/watch?v=Ow0C5i1a2pw&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=30 (accessed on 25 October 2021).

- DENISE VALLE-REPEAT AFFECTIONS-OFFICIAL 360 MUSIC VIDEO. Available online: https://www.youtube.com/watch?v=nRqP06uX_H0&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=31 (accessed on 25 October 2021).

- Gramofone-My Self|@gramoHOME 360°. Available online: https://www.youtube.com/watch?v=1MJmVTuUA34&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=32 (accessed on 25 October 2021).

- Joey Maxwell—Streetlights (360 Degree Video). Available online: https://www.youtube.com/watch?v=cC396zPuwTY&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=33 (accessed on 25 October 2021).

- Ably House|Grave Song|360° VR. Available online: https://www.youtube.com/watch?v=BrQgMxxgWBY&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=34 (accessed on 25 October 2021).

- Noon Fifteen: Easy: 360 Video. Available online: https://www.youtube.com/watch?v=wuz7ZUvR9es&list=PLpwTD9PEgyLMDq5CtdwJ7angHr7SjL9yQ&index=35 (accessed on 25 October 2021).

- Sipatchin, A.; Wahl, S.; Rifai, K. Eye-tracking for clinical ophthalmology with virtual reality (VR): A case study of the HTC Vive Pro Eye’s usability. Healthcare 2021, 9, 180.

- Tobii Pro Spectrum. Available online: https://www.tobiipro.com/ko/product-listing/ko-tobii-pro-spectrum/ (accessed on 4 November 2021).

| Video Number | Video Title |
|---|---|
| 1 | Noa Neal ‘Graffiti’ 4K 360° Music Video Clip [24] |
| 2 | Avicii—Waiting For Love (360 Video) [25] |
| 3 | Yuko Ando—360° (Rubi: Zenhoi) Surround [26] |
| 4 | INFINITE—“Bad” Official MV (360 VR) [27] |
| 5 | SCHOOL OF ROCK—You’re in the Band [28] |
| 6 | SCANDOL 360: EXID—HOT PINK (VR) [29] |
| 7 | Peggy Hsu—Grow old with you [30] |
| 8 | Naive New Beaters—Heal Tomorrow ft. Izia [31] |
| 9 | Devo—What We Do [32] |
| 10 | Trevor Wesley—Chivalry is Dead [33] |
| 11 | [M2] 360 VR dance: GFriend—Rough [34] |
| 12 | Leah Dou—May Rain Official MV [35] |
| 13 | Run The Jewels—Crown [36] |
| 14 | OneRepublic—Kids (360 version) [37] |
| 15 | Farruko Ft. Ky-Many Marley—Chillax [38] |
| 16 | Imagine Dragons—Shots [39] |
| 17 | Sampha—No One Knows Me [40] |
| 18 | The Range—Florida (Official 360° Video) [41] |
| 19 | La La Land Medley in VR [42] |
| 20 | The Kills—Whirling Eye [43] |
| 21 | Depeche Mode—“Going Backwards” [44] |
| 22 | emri—ADORE—360° (Official Video) [45] |
| 23 | Elton John—Farewell Yellow Brick Road [46] |
| 24 | Mamma Mia! Here We Go Again—Waterloo [47] |
| 25 | The Dandy Warhols—Be Alright [48] |
| 26 | Christopher—Irony (Official VR Music Video) [49] |
| 27 | POY Muzeum—Space High [50] |
| 28 | Popstar—Reflections (360 VR Experience) [51] |
| 29 | Green Screen MV [52] |
| 30 | Nolie—Come Over (Official Music Video) [53] |
| 31 | DENISE VALLE—REPEAT AFFECTIONS [54] |
| 32 | Gramofone—My Self \| @gramoHOME 360° [55] |
| 33 | Joey Maxwell—Streetlights (360 degree video) [56] |
| 34 | Ably House \| Grave Song \| 360° VR [57] |
| 35 | Noon Fifteen: Easy: 360 video [58] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Choi, H.; Nam, S. A Study on Attention Attracting Elements of 360-Degree Videos Based on VR Eye-Tracking System. Multimodal Technol. Interact. 2022, 6, 54. https://doi.org/10.3390/mti6070054


Chicago/Turabian style: Choi, Haram, and Sanghun Nam. 2022. "A Study on Attention Attracting Elements of 360-Degree Videos Based on VR Eye-Tracking System." Multimodal Technologies and Interaction 6, no. 7: 54. https://doi.org/10.3390/mti6070054
