When Agents Become Partners: A Review of the Role the Implicit Plays in the Interaction with Artificial Social Agents

Abstract

1. Introduction

2. The Process of Interaction

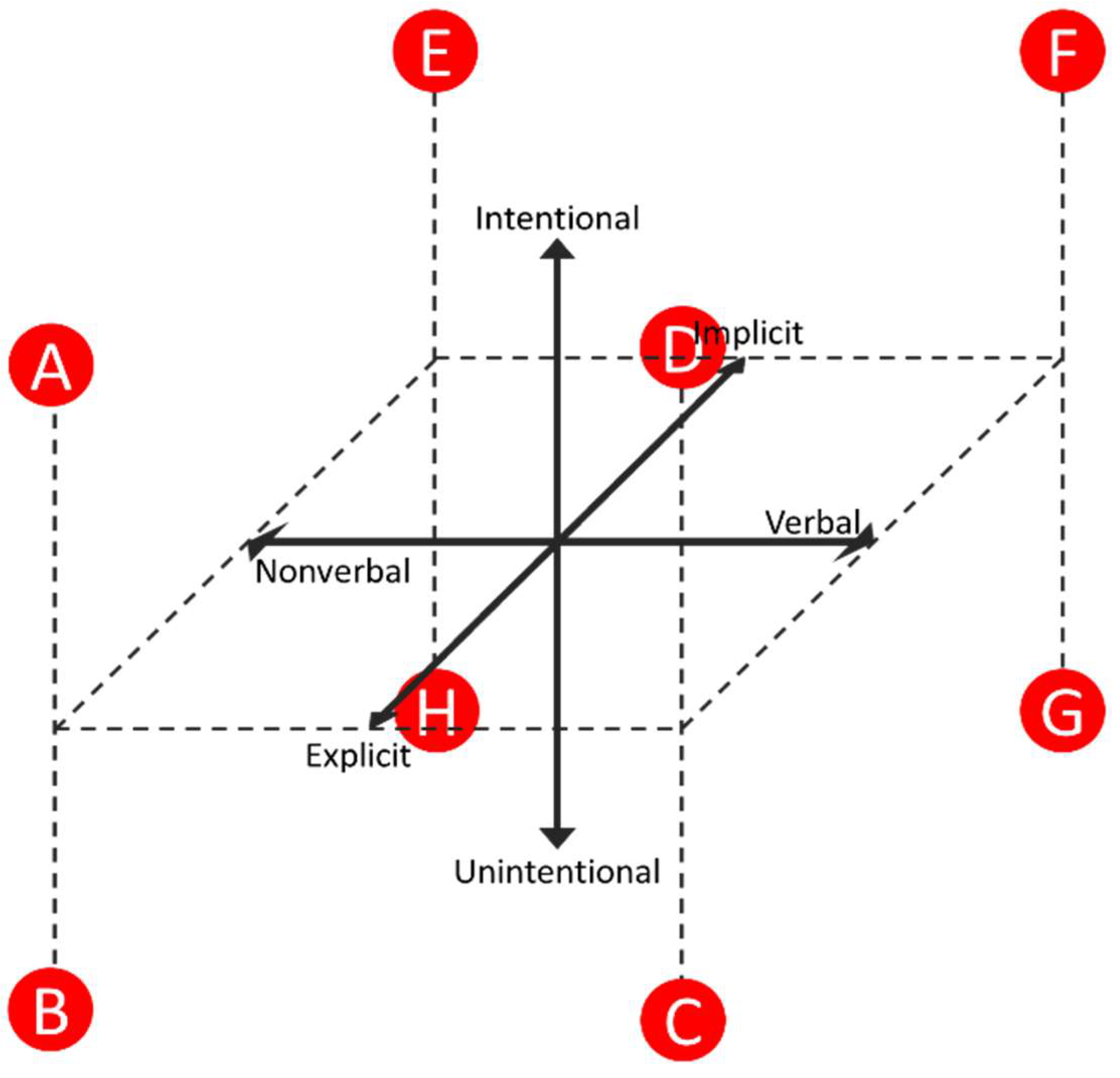

2.1. Dimensions of Interaction

2.2. The Role of Implicit Interaction

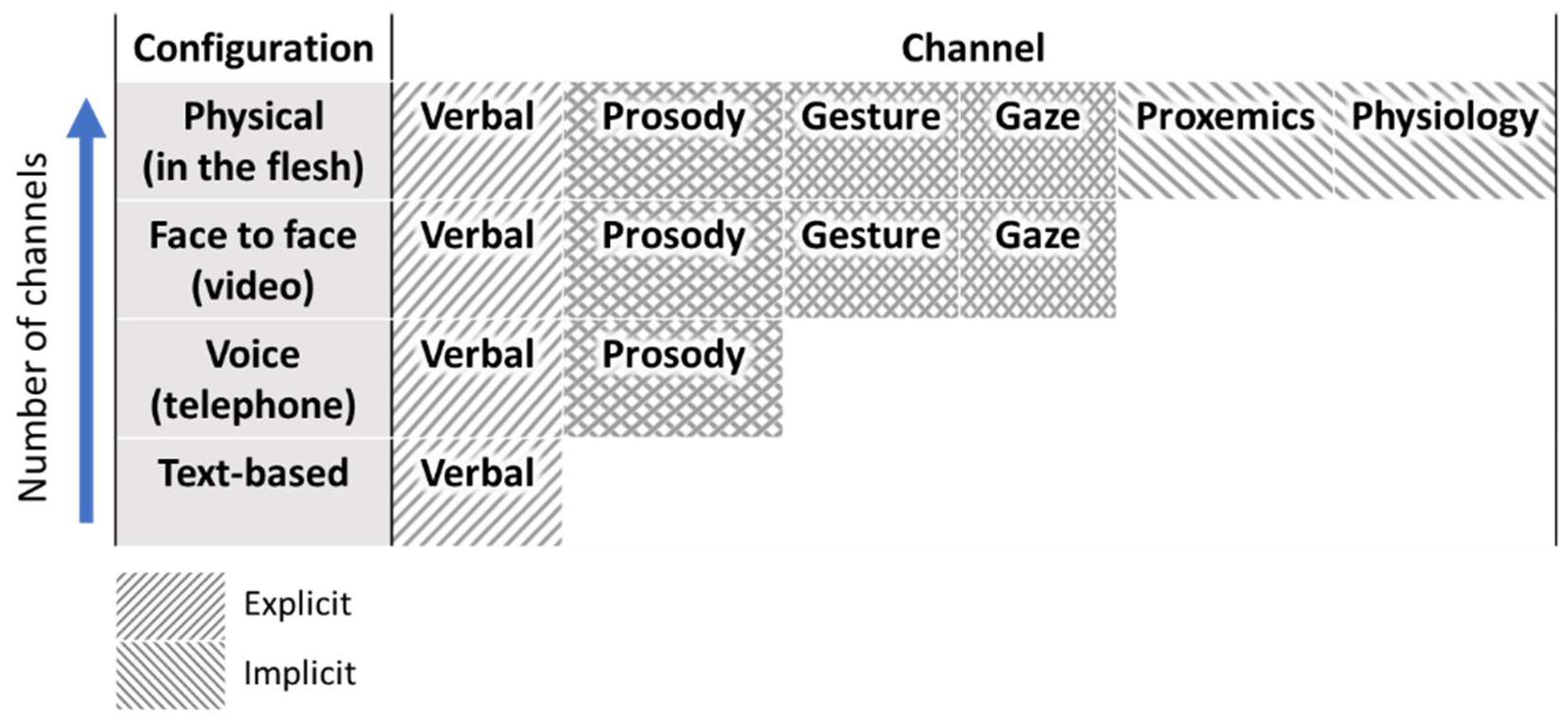

2.3. Interaction Configurations

2.4. Artificial Social Agents

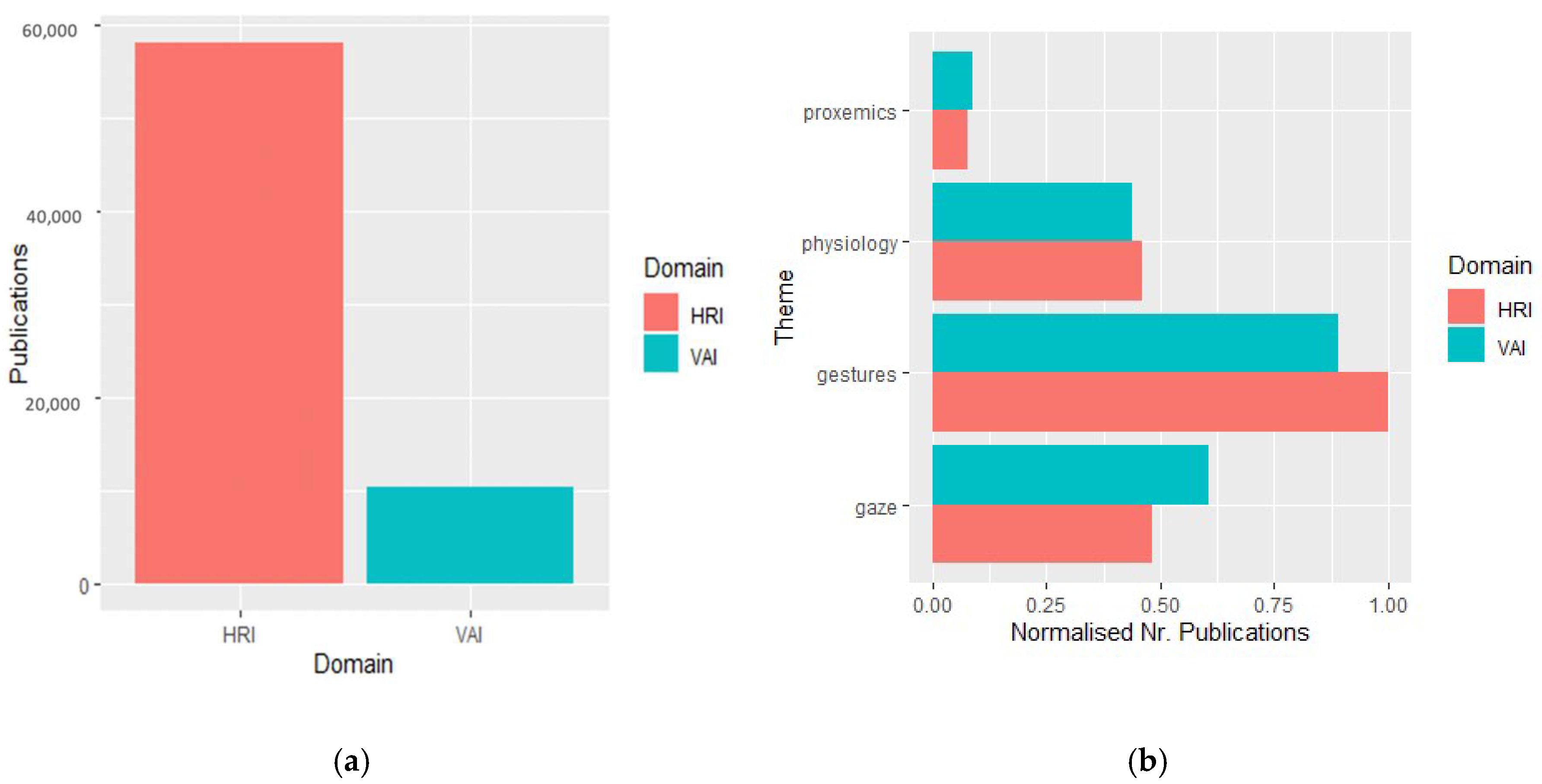

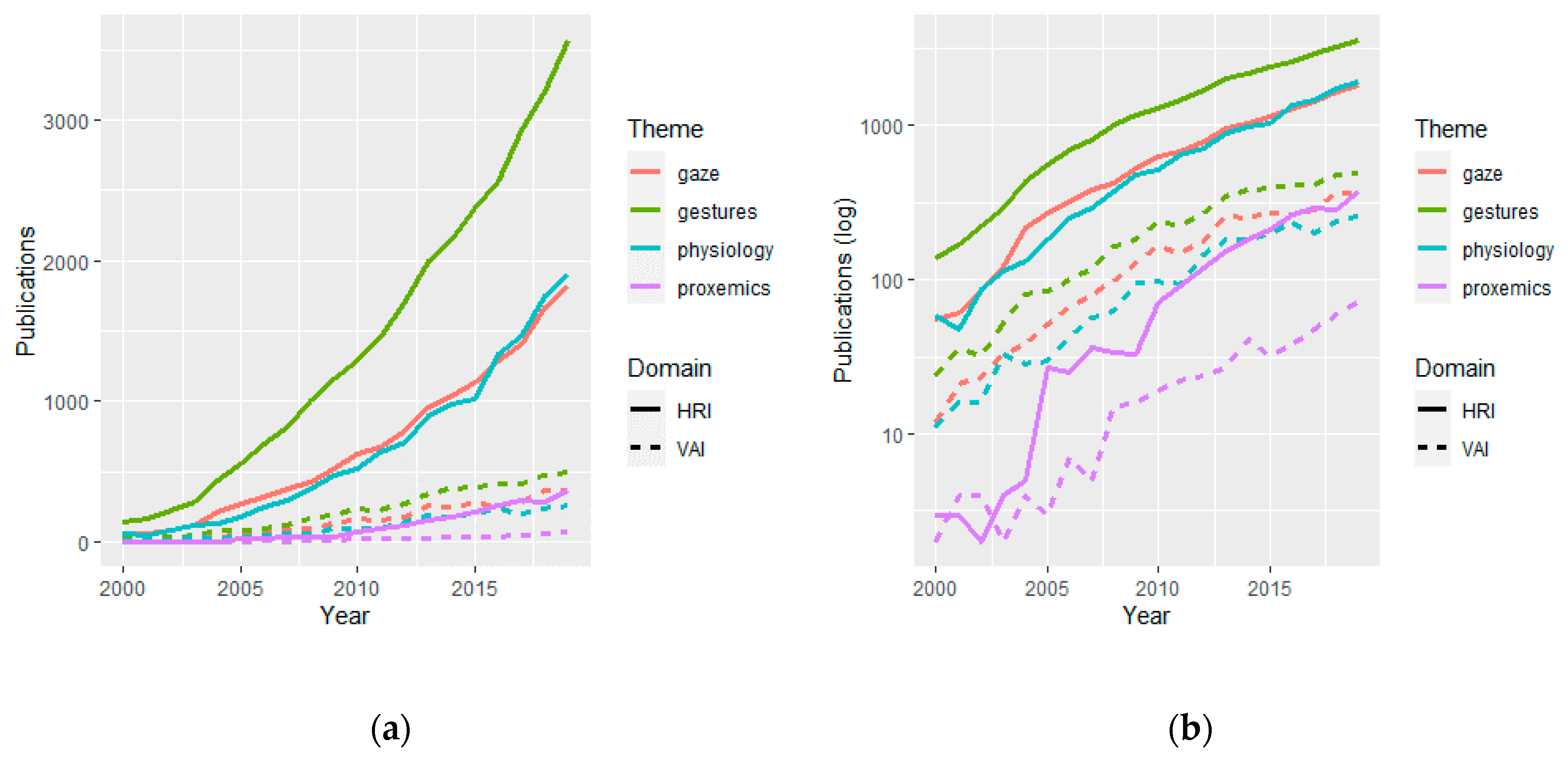

3. A Bibliometric View

Bibliometric Data

4. Implicit Interaction between Humans and Artificial Social Agents

4.1. Physical Distance and Body Orientation

4.2. Interaction through Gestures and Facial Expression

4.3. Communicative Gaze Behaviour

4.4. Interaction Based on Physiological Behaviour

5. Case Study: The Virtual Human Breathing Relaxation System

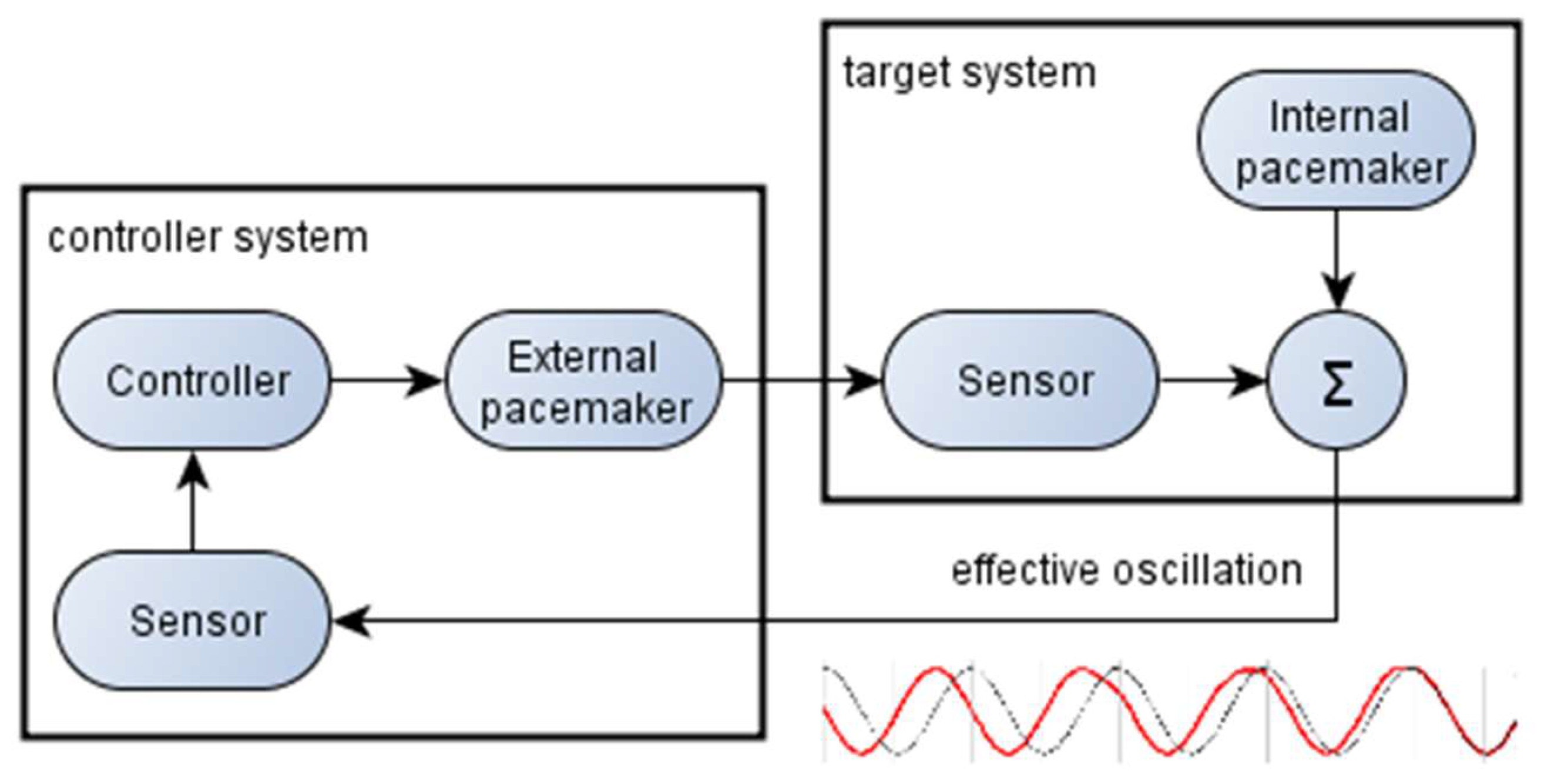

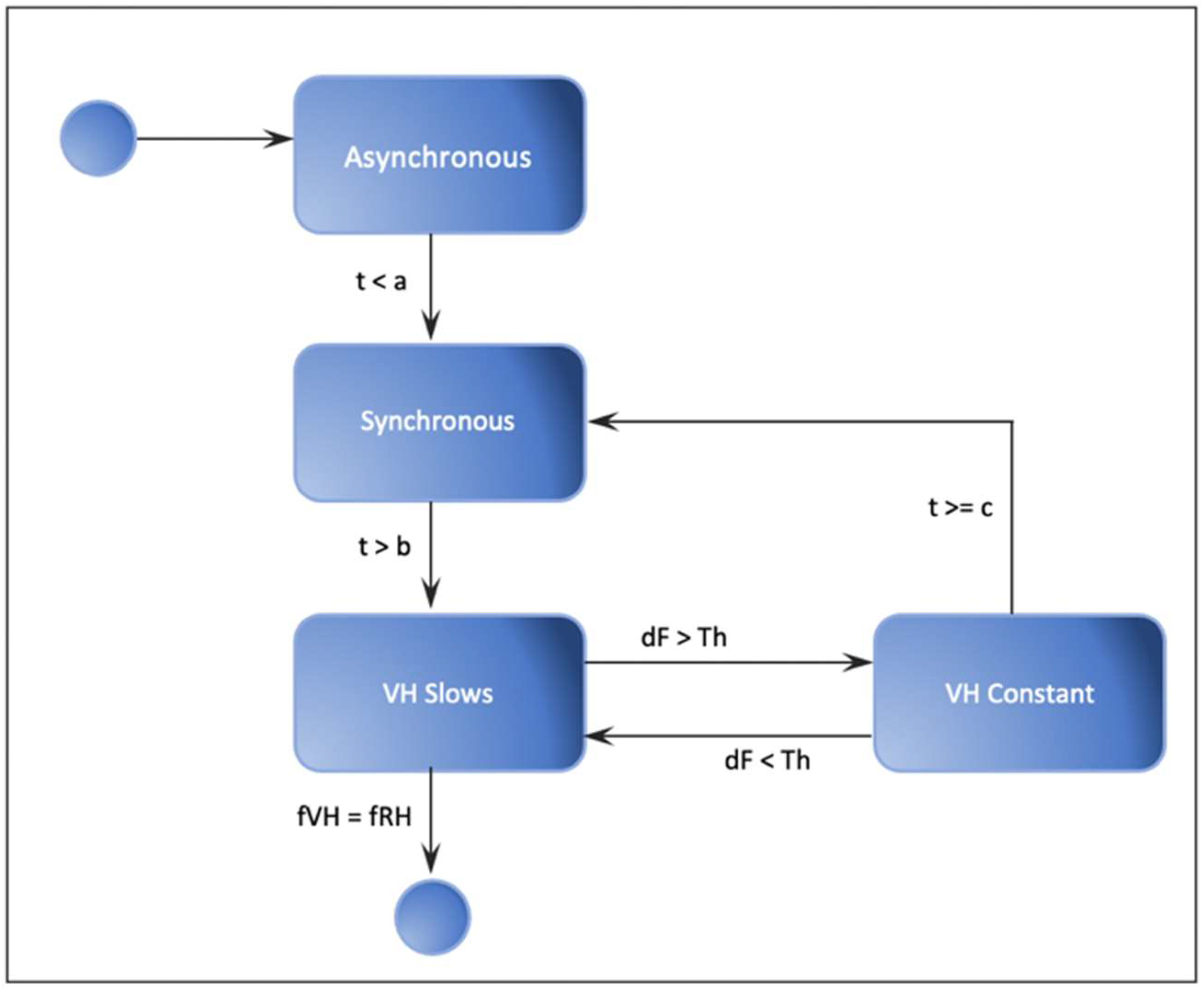

5.1. Physiological Synchrony Based on Active Entrainment

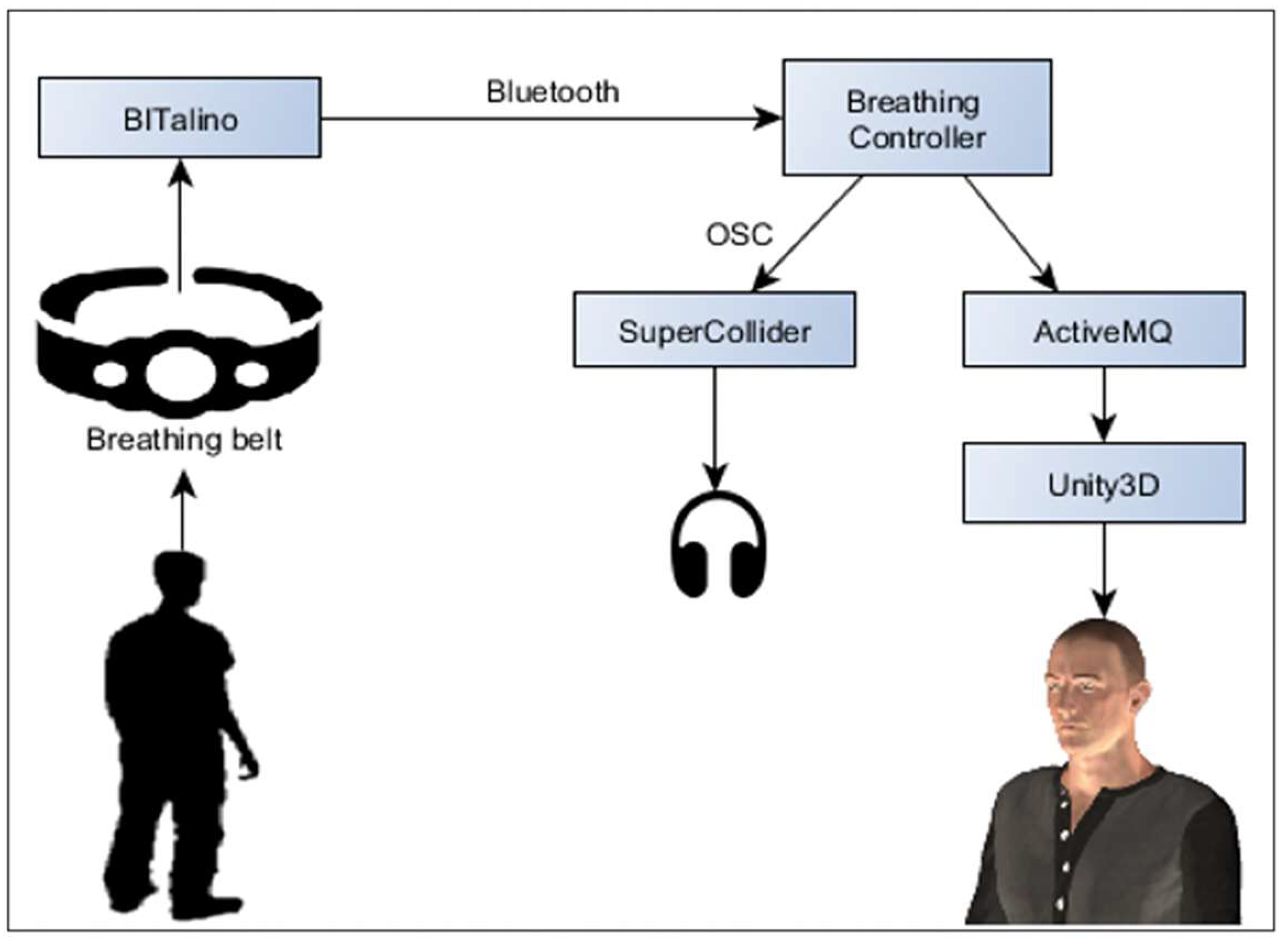

System Implementation

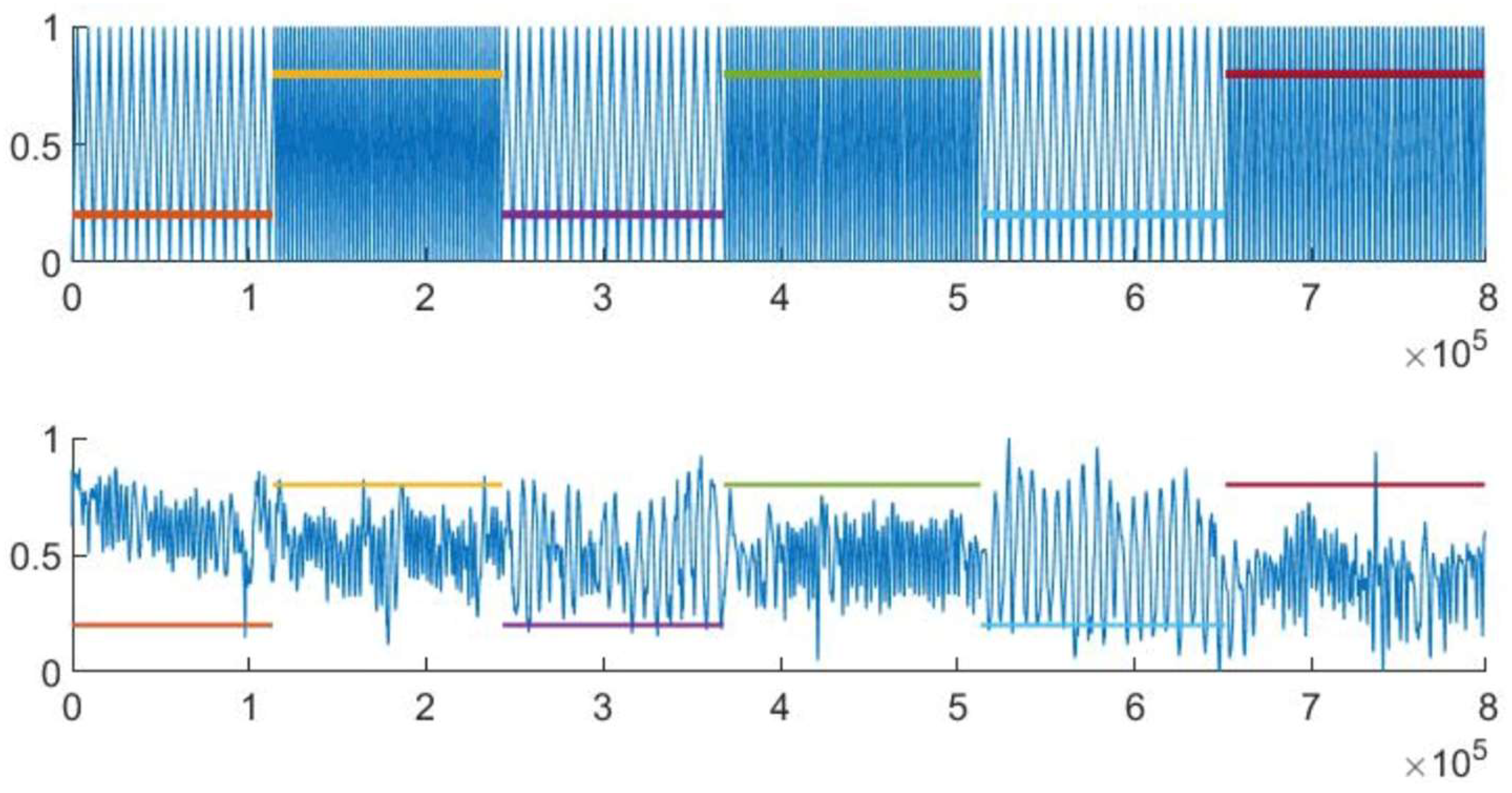

5.2. Pilot Study

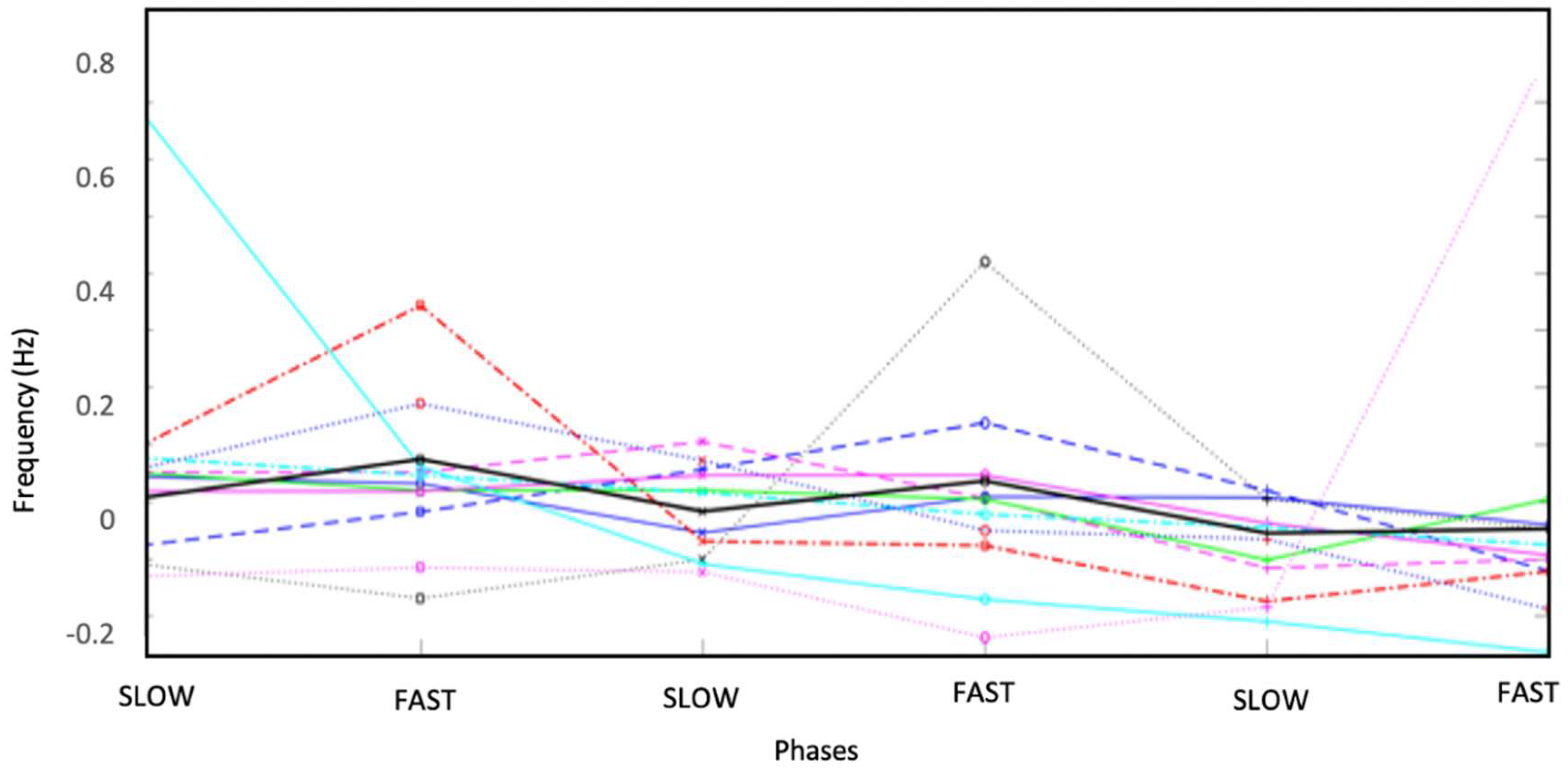

Findings

5.3. Case Study Conclusion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Dar, S.; Bernardet, U. When Agents Become Partners: A Review of the Role the Implicit Plays in the Interaction with Artificial Social Agents. Multimodal Technol. Interact. 2020, 4, 81. https://doi.org/10.3390/mti4040081