1. Introduction

The world is imbued with technologies that have created, and continue to create, rapid change in all aspects of our lives [1]. The past two decades have seen computing revolutions that changed the role of computers in people’s lives, from undertaking routine activities (e.g., paying bills or shopping) to creating wholly new experiences (e.g., virtual and augmented realities) [2]. One of the most dramatic developments in computing is the global proliferation of mobile devices (smartphones and tablets) as convenient auxiliaries to conventional desktop and personal computers. This collection of tools (personal computers and mobile devices) is further supported by the advent of the cloud-computing paradigm, in which users can access services from different devices and interact across these devices [3,4,5]. This vision of ubiquitous computing is not new [6], as the evolution from single-device to multi-device computing has long been predicted [7]. For instance, a user may draft an email on their phone and then move to their personal computer to include an attachment, essentially achieving their goal horizontally across devices or platforms.

As people possess more information devices, it has become common for several devices to be used together as doorways into a shared information space [4,5,7,8,9]. Interaction across a blended cross-device ecology is commonly referred to as cross-device interaction, where users can manipulate shared content via multiple separate input and output devices within a perceived interaction space [10]. Cross-device interaction can occur in various modes, such as moving from one device to another at different times (sequential interaction) and interacting with more than one device at the same time (simultaneous interaction) [4,7]. Of these two modes, sequential interaction is the more common, as it reflects current user interaction with multiple devices [4]; according to a Think with Google report, 90% of users use multiple devices sequentially to accomplish a task, and 98% of users move between their devices at different times in a single day.

In the Human–Computer Interaction (HCI) and Software Engineering communities, usability testing is a method of usability assessment that is used to assess an individual product by testing it on representative users in representative environments [11,12,13]. Usability testing utilises measurable factors, such as efficiency and effectiveness, to assess real user performance [14]. Usability has traditionally been an important quality attribute of interactive systems, where usable interfaces were found to improve human productivity and performance [12,15,16]. The growth of cross-platform interaction and services has given rise to an emerging theme for usability referred to as ‘cross-platform usability’ (or ‘inter-usability’ or ‘horizontal usability’) (see [17,18,19,20,21,22,23]).

Although the traditional usability testing method is valuable for assessing product usability, it only involves quantifying and assessing the usability of a single user interface. This could be seen as the main limitation of service usability engineering. That is, the current testing method does not address how to quantify and assess inter-usability across a combination of user interfaces, where a user transfers from one interface to another to achieve interrelated goals. The evaluation of each user interface independently might not correspond with the evaluation of a combination of user interfaces where users migrate tasks across platforms [17,24]. This paper aims to address this gap by developing an assessment model for evaluating the cross-platform usability of a combination of user interfaces. The model’s validity and performance were explored via a cross-platform usability test of three test services. The findings of the test were analysed and utilised to refine the proposed model.

The remainder of this paper is organised as follows. Section 2 defines concepts related to cross-platform services and configuration. Next, Section 3 reviews the literature for prior work in the area of cross-platform modelling and interaction. Section 4 presents the proposed cross-platform usability assessment model. The following section, Section 5, describes the user study conducted to assess the viability of the proposed model. Then, Section 6 presents the results of the user study. Section 7 discusses the results and refines the proposed assessment model. Section 8 presents experts’ evaluations of the assessment model. Finally, Section 9 concludes the paper and briefly discusses future work.

3. Related Work

In the past few decades, there has been a rapid and drastic change in the way we interact with computers as they become more powerful and easily accessible. This has brought research on cross-platform user interfaces and interactions into the limelight of Computing and Usability studies [5]. Research addressing the opportunities and challenges of cross-platform interaction is abundant and varied in its contribution to HCI research [36]. Brudy et al. [5] provide an overview of cross-device research trends and terminology to unify common understanding for future research. A number of researchers have contributed to the body of knowledge concerning the design and development of cross-device systems (e.g., [21,36,37,38,39,40]). Dong et al. [21] explored the barriers to designing and developing multi-device experiences. Through a series of interviews with designers and developers of cross-device systems, the authors identified three challenges pertaining to designing interactions, the complexity of user interface standards, and the lack of evaluation tools and techniques. Other work, such as O’Leary et al. [37] and Sanchez-Adame et al. [38], provides designers with toolkits and guidelines for cross-device user interfaces that address context shifts and consistency, respectively. These studies reflect the relevance of the work presented in this paper. In the rest of this section, we explore the evaluation methods previously utilised in the area of cross-device evaluation.

Denis and Karsenty [17] studied the inter-usability of cross-device systems, whereby users migrate their tasks from one device to another. Their work found that service continuity could influence inter-device transitions. They argue that service continuity could have two different dimensions: knowledge continuity and task continuity. Their findings suggest that design principles such as inter-device consistency can be applied to user interface designs to support both dimensions of service continuity. However, although their work established an initial conceptual framework with inter-usability principles to support continuity, it did not offer a methodological approach for measuring the continuity factor in either a subjective or an objective way.

Seamless transition is an important user experience element in multi-device interaction. Dearman and Pierce [41] studied the techniques that people use to access multiple devices to produce a better user experience (UX) when working across devices. They argue that one of the main challenges is supporting seamless device changes. Concerning seamless transfer between devices, a few studies have attempted to address the issue of migrating tasks or applications across devices to reduce the impact of interruptions when transitioning from one device to another and to support continuity (e.g., [42,43]). However, these studies generally focused more on the technological aspects of the problem [27]. Dearman and Pierce [41] suggest that there are opportunities to improve the cross-platform UX by focusing on users rather than on applications and devices. Hence, our research aim is to provide a user-based testing methodology that leads to insights into user experiences and needs for task continuity and seamless transition.

Antila and Lui [24] investigated challenges that designers might encounter when designing inter-usable systems in the emerging field of interactive systems. Semi-structured interviews were conducted with 17 professionals working on interaction design in different domains. Challenges were identified and grouped by phase: early, design, development, and evaluation. One of the challenges identified in the evaluation phase was the difficulty of evaluating the whole interconnected system. That is, the usability evaluation of separate components might not necessarily correspond to the evaluation of inter-usability. The work also reported the need for evaluation methods and metrics to support inter-usability, taking into account two factors: the composition of functionalities and the continuity of interaction. Therefore, this paper aims to address this need by providing a model for assessing inter-usability that takes the cross-platform interaction factors into consideration.

Many of the related studies investigating cross-platform usability and UX, such as Wäljas et al. [35] and Denis and Karsenty [17], did not conduct user testing. Instead, they relied on data-collection methods such as interviews and diaries, which may not be the most reliable sources for research in usability and UX. The interview method is generally used as a supplement to other usability research methods such as user testing. During interviews, people may not be able to remember the details of how they used a specific user interface; further, participants tend to make up stories to rationalise their behaviour and make it sound more logical than it may, in fact, have been. In addition, many users have no idea how to categorise their uses of technology according to a description. This means that what users say and what they do could be different. Therefore, a user-based testing method is still required for a reliable assessment of cross-platform service usability.

5. User Study

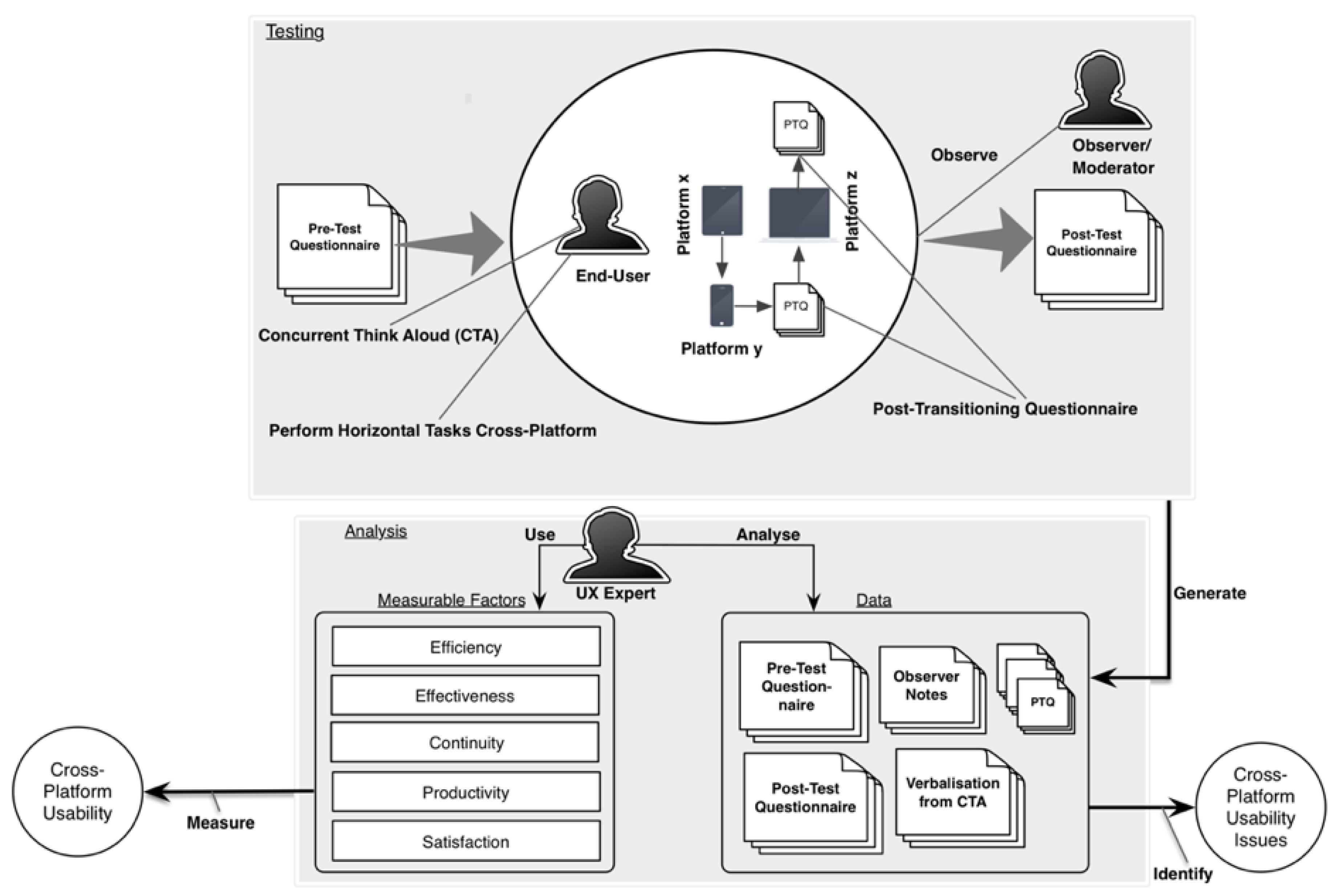

The main purpose of this study is to address the following question: to what extent is the proposed cross-platform assessment model valuable for assessing cross-platform usability? The proposed model was developed to enable the identification of cross-platform usability issues and to quantify cross-platform usability. The main components of the model can be summarised as the use of HTs, the incorporation of a mix of data collection techniques, the consideration of cross-platform usability and seamless transition satisfaction scales, and the use of cross-platform usability objective metrics.

The user study will explore the following aspects of the proposed model:

The extent to which the data-collection techniques proposed in our model help to reveal cross-platform usability issues.

The extent to which the metrics can provide valuable information supporting cross-platform usability assessment.

The correlation between metric groups under the efficiency, effectiveness, and continuity attributes in the model.

The correlation between statements on each Likert scale used in the model, the reliability of each scale, and the investigation of whether the statements on each scale as a whole could reflect only one dimension.

5.1. Test Objects and Tasks

The study used three cross-platform services from the domains of lifestyle, travel, and education: Real Estate (www.realestate.com.au), Trip Advisor (www.tripadvisor.com), and TED (www.ted.com). The services are composed of an array of features that correspond to activities often performed cross-platform, such as searching for information and planning a trip [4]. The services were configured at a complementary level of redundancy. The services have a range of different implementation types across platforms, that is, native mobile or tablet application, desktop website, mobile website, and responsive website. A native application is an application that is coded in a device-specific programming language. A desktop website is a web-based user interface that is optimised to be accessible from large screens (e.g., desktop and laptop screens). A mobile website is a copy of the desktop website, where the server delivers an optimised page that is smaller and easier to navigate on a small mobile screen. A responsive website automatically adjusts its layout according to the device’s screen size (large or small) and orientation (landscape or portrait).

The devices used in this study were a 15-inch MacBook Pro, an Apple iPad Air, an Apple iPhone 4, and a Samsung Galaxy S4. These devices belong to three main device categories (PC/laptop, tablet and smartphone) that people use in their daily lives [4]. These device categories are also commonly used in cross-platform sequential interactions [4], the interaction mode adopted in our experiments.

Real Estate (RE) lists properties for sale and rent in different areas of Australia. Users can search or browse properties by entering a specific area name or postcode or by selecting their current location. Users interacted with the RE service using a desktop website on the MacBook Pro, native application on the iPad, and mobile website on the Samsung Galaxy S4.

Trip Advisor (TA) offers advice from real travellers and a wide variety of travel choices. Users can search or browse different travel choices by entering a city or selecting their current location. Users interacted with the TA service using a desktop website on the MacBook Pro, native application on the iPad, and native application on the Samsung Galaxy S4.

TED (TD) is a cross-platform service for spreading ideas in the form of short talks. Users can search or browse short talks and sort them according to different options, as well as filter search results. Users interacted with the TD service using a responsive website on the MacBook Pro, native application on the iPad, and responsive website on the iPhone 4.

After determining the test services, a set of HTs was developed to assess the usability of the chosen objects by means of the proposed cross-platform assessment model. Six HTs were designed, two for each of the cross-platform services. We reiterate that the purpose of the study is to explore the viability of the proposed model and not to cover all the features of the examined test objects. All HTs were designed to be carried out independently of each other. The tasks were piloted with one participant for each of the cross-platform services (i.e., three participants overall) prior to the commencement of data collection. Excluding these pilot participants brings the number of participants in the main study down to thirteen (four participants each for TA and TD, and five participants for RE).

Table 2 lists the six HTs and subtasks adopted in this study.

5.2. Participants

What constitutes an optimal number of participants for usability testing has long been debated in the field [55,56,57,58,59,60,61]. Commonly, yet controversially, researchers have stated that four to nine participants is an adequate number to carry out an effective usability test. Given this guidance and the lack of consensus, it was decided that four users per service would be the minimum number considered for the study. For this study, sixteen participants were recruited and distributed amongst the three cross-device services.

An important consideration in selecting participants was that they be representative of the target user groups for the services being evaluated. Representative participants help to obtain valid feedback that contributes meaningful insight into the viability of the proposed model. Considering the services under evaluation, the study sample was selected from among university students. The recruited participants were 18 to 60 years old and at varying levels of study at the university. All participants had basic computer skills and had used the internet on a daily basis for more than three years. The majority of participants had actively engaged in cross-device interaction for at least a year.

5.3. Procedure

The user study was conducted in the usability laboratory at the Royal Melbourne Institute of Technology (RMIT). Participants were cordially greeted upon arrival by the moderator (first author) and made to feel at ease. The participants were then asked to review an information sheet and sign an informed consent form. Participants were given no training in the selected cross-platform services or in the use of the devices involved in the study. However, they received some explanation about the think-aloud protocol, the terms used in the test session, and the main purpose of each cross-platform service. Participants were divided into three groups using matched-group design, through which the subjects are matched according to particular variables (e.g., age) and then allocated into groups. Each group performed tasks on a single cross-platform service. Each participant attempted two HTs. To achieve a HT, the participant was required to interact with three user interfaces for the same service accessed from three different devices: a laptop, a tablet, and a mobile phone. Test sessions were on average 85 minutes long. The sessions were video-recorded and the devices were also recorded to capture users’ interactions with the devices.

The participants were introduced to the test service, and the moderator set up the screen capture software and video cameras. The participants were first asked to answer the pre-test questionnaire for the purpose of collecting demographic information. Participants then commenced performing each of the HTs for their assigned service. With each HT, participants performed subtasks 1 and 2 on the first two devices, followed by the first STS. Next, participants performed subtask 3 on the third device, followed by the second STS. After concluding the first HT, the participants proceeded to the second HT following the same procedure. After all tasks were completed, the moderator ended the recording and directed the participant to fill in the CPUS to conclude the session.

To minimise order effects that could potentially bias the results of the study, a basic Latin square design was used to alternate the device order and tasks. This design resulted in a block of six trials with different orders. Changing the device order also allowed for the assessment of cross-platform usability in each specific order.
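The counterbalancing idea can be illustrated with a minimal sketch. It assumes that the six orders are simply all permutations of the three device categories (3! = 6) and that orders are handed out in rotation; the device labels, participant identifiers, and the `assign_orders` helper are hypothetical and do not reproduce the study’s actual allocation.

```python
from itertools import permutations

# Device categories named in the study; the labels are illustrative only.
DEVICES = ("laptop", "tablet", "phone")


def device_orders(devices=DEVICES):
    """Return every possible ordering of the given devices."""
    return list(permutations(devices))


def assign_orders(participant_ids, orders):
    """Hand out orders in rotation so they are spread evenly (an assumption)."""
    return {pid: orders[i % len(orders)] for i, pid in enumerate(participant_ids)}


orders = device_orders()
# Hypothetical participant identifiers for one service.
schedule = assign_orders(["P1", "P2", "P3", "P4", "P5"], orders)
for pid, order in schedule.items():
    print(pid, " -> ".join(order))
```

Across the six orders, each device appears in each position exactly twice, which is the balancing property that reduces order effects.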

5.4. Usability Problem Extraction

The proposed cross-platform assessment model utilised a combination of techniques for data collection, which includes the concurrent think-aloud protocol, observation, and questionnaires (see Section 4). For the concurrent think-aloud protocol, participants were encouraged to narrate their thoughts while interacting with the devices in the study. This approach is widely adopted in usability studies as it allows a better understanding of participants’ levels of engagement, their thoughts and feelings during interaction, and any questions that may arise. Since the protocol is applied while the test is being carried out, it saves time and can help participants to organise their thoughts while working on the set tasks [45,62].

Usability issues were analysed by counting the number of problems that these techniques were able to identify [63,64,65,66]. The process of identifying usability problems in this study involved reviewing each participant’s testing video, observation notes, and questionnaires. The analysis order effect was reduced by selecting data files at random. Statements were extracted from user verbalisations, post-transition and post-test questionnaires, and observation notes. Each usability problem discovered was assigned a number that indicates the participant and the assigned test service. Usability problems were maintained in a report that also contained information on context.

6. Results

This section presents the findings of the user study pertaining to data collection techniques, factors affecting cross-platform usability measures, and cross-platform usability metrics grouped under the proposed model’s factors: efficiency, effectiveness, continuity, productivity, and satisfaction.

6.1. Data Collection Techniques

The proposed model utilised a combination of techniques for data collection (see Section 4). Usability problems were analysed by counting the number of problems, which is a common analysis technique [65,66]. In total, 540 cross-platform usability issues were identified. For all three services, the average number of usability issues was highest for the think-aloud protocol (33.0, 26.5 and 21.75 for RE, TA and TD, respectively). For RE, this was followed by STS, CPUS, and observations (8.6, 3.6, and 2.4). For TA, the think-aloud protocol was followed by observations, STS, and CPUS (9.3, 7.0 and 2.8). For TD, it was followed by CPUS, observations, and STS (4.0, 2.8, and 1.3).

The think-aloud protocol revealed more cross-platform usability issues. Nevertheless, the other data collection techniques contributed to the process of identifying usability issues. It was found that some of the issues reported through questionnaires and observations were actually different from those verbalised using the think-aloud protocol. That is, 50%, 70%, and 69% of the issues identified through observation in the RE, TA, and TD services, respectively, were unique. With the post-test questionnaire, 45%, 67%, and 40% of the usability issues revealed in the RE, TA, and TD services, respectively, were different to those from other methods. A further 50%, 54%, and 34% of the issues discovered by the STS for the RE, TA, and TD services, respectively, were unique.
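The share of issues that only one technique revealed can be sketched as a set difference. The example below is a minimal illustration under stated assumptions: the issue identifiers and the `unique_share` helper are hypothetical, and the data are invented rather than taken from the study.

```python
def unique_share(issues_by_technique, technique):
    """Fraction of a technique's issues that no other technique reported."""
    own = issues_by_technique[technique]
    others = set().union(
        *(found for name, found in issues_by_technique.items() if name != technique)
    )
    if not own:
        return 0.0
    return len(own - others) / len(own)


# Invented issue identifiers, for illustration only.
example = {
    "think_aloud": {"A", "B", "C"},
    "observation": {"C", "D"},
    "STS": {"B", "E"},
    "CPUS": {"F"},
}

for name in example:
    print(name, round(unique_share(example, name), 2))
```

A high share for a technique indicates that dropping it would lose issues that the remaining techniques do not capture.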

6.2. User Factors

The influence of two user characteristics (cross-platform expertise and expectations of data and function distribution across devices) on HT execution time was examined. In the pre-test questionnaire, participants answered questions to determine their level of expertise across platforms. For each question, experience points were assigned to key responses and accumulated.

A Pearson’s R correlation test was carried out to investigate the relationship between user levels of cross-platform expertise and HT execution time. The correlation was significant for the RE service on both HTs (HT1: ; HT2: ). The negative R values suggest that as user cross-platform expertise increases, the time spent on completing the HT decreases. The correlation was also significant for the TA service (HT1: ; HT2: ). For the TD service, significant correlation was revealed for the first HT () and non-significant correlation for the second HT ().
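For readers who want to reproduce this kind of analysis, the following is a minimal sketch of the Pearson correlation coefficient. The `pearson_r` helper and the expertise and time values are hypothetical; they are invented to show a negative relationship and are not the study’s data. Significance testing is not included.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (at least 2 values)")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


# Invented values: expertise points and HT execution time in minutes.
expertise = [2, 4, 6, 8, 10]
minutes = [12.0, 10.5, 9.0, 7.5, 6.0]
print(pearson_r(expertise, minutes))
```

A coefficient close to -1 corresponds to the pattern described above, where higher expertise goes with shorter execution time.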

The second user factor considered in this study addressed users’ expectations of the distributed content across devices. In the pre-test questionnaire, participants were asked a close-ended question: ‘What is your expectation of content and functions of the cross-platform user interfaces?’ The responses to this question represented the different levels of redundancy: exclusive, redundant, and complementary. The three services provided in the study are categorised as complementary. The findings show that participants expecting a complementary service had the lowest execution time for HTs in all three cross-platform services.

6.3. Efficiency

To measure efficiency, the HT execution time and the number of actions for each HT were collected. From these two metrics, the mean execution time (minutes) and the average number of actions were found to be interpretable. HTs performed on the TD service had the lowest average task execution time and number of actions (HT1, HT2: average time = 5.1, 3.7 min, average number of actions = 7.3, 12.2). Since the HTs across the tested services tended to have similar levels of complexity, it could be argued that the TD service supported users in performing their HTs more efficiently across platforms in comparison to the RE and TA services. RE exhibited the highest average task execution time (HT1, HT2: average time = 10.9, 7.1 min, average number of actions = 31.0, 15.4), followed closely by TA (HT1, HT2: average time = 10.8, 12.0 min, average number of actions = 18.5, 22.5).

The study’s participants attempted the HTs in six different orders of user interfaces. Since the study had a small number of participants per service, each participant attempted each HT in a distinct order. This prevented us from averaging the HT execution times per order of interfaces, a type of analysis that would be possible if the test involved only two user interfaces. With two user interfaces, participants can only attempt each HT in one of two distinct orders, so it is more likely that several participants conduct the same HT in the same order.

To determine if we could reduce the number of cross-platform efficiency metrics, a correlation test was carried out between the metrics. The results show that the relationship between the metrics was in some cases statistically insignificant (with coefficients of less than 0.3 [67]). In general, the correlations were positive, indicating that an increase in one variable correlated with an increase in the other (RE: H1 0.64, H2 0.77; TA: H1 0.21, H2 0.96; TD: H1 0.23, H2 0.58). However, the correlation results were not consistent across the data sets. If the metrics had been consistently correlated in a specific pattern, we might have been able to argue that employing one metric could be adequate to assess efficiency. Instead, one interpretation of the correlations is that each metric adds unique findings.

6.4. Effectiveness

Cross-platform effectiveness was considered using two metrics: task completion rate and the number of errors. RE participants generally made more mistakes than did the TA and TD participants (HT1, HT2: completion rate , average errors ). This result could be an indication that many cross-platform design issues need to be addressed in the RE service. TA participants performed only slightly better than the RE participants (HT1, HT2: completion rate , average errors ). Overall, the TD service had the highest completion rate and the lowest number of errors (HT1, HT2: completion rate , average errors ). This observation could also mean that TD supports its users to complete their tasks effectively compared to the other tested services. These examples demonstrate the usefulness of the metrics for determining cross-platform effectiveness.

For metric reduction purposes, the relationship between the cross-platform effectiveness metrics (the task completion rate and the number of errors) was examined for each HT (RE: H1 −0.69, H2 −0.79; TA: H1 −0.98, H2 −0.99; TD: H1 −0.87, H2 −0.94). The correlation between the variables was significant in all cases. The correlations were negative, meaning that an increase in the number of errors that users encounter across devices corresponds to a decrease in completion rates. The correlation results appear consistent across the data sets; hence, it can be assumed that similar information will be obtained if only a single metric is used.

6.5. Continuity

The continuity factor is concerned with how seamlessly a user continues with an interrupted task on a new user interface. As previously indicated, three metrics were selected to measure the continuity factors: task-resuming success, the time taken to resume tasks, and user satisfaction with the seamlessness of the transition.

The STS was completed after participants undertook two subtasks that involved moving from one device to another (switching between two devices). Before the factor analysis, the scales were unified so that one represented the most negative and five the most positive response for all statements. This means that the responses to Statement 2, the negative statement, were transformed to conform to the positive statements on the scale. Each participant conducted two HTs, and this resulted in four transitions per user. Factor analysis was carried out on 52 transitioning cases. Eigenvalues were calculated and used to decide how many factors should be extracted during the overall factor analysis. The eigenvalue for a given factor measures the variance in all variables that is accounted for by that specific factor. It has been suggested that only factors with eigenvalues greater than one should be retained [68]. The eigenvalue output indicates that there was only one significant factor among the three statements in the STS; that is, the scale as a whole might reflect only one dimension: seamless transitioning. This finding could be interpreted as an indicator that the participant responses to the statements on the scale could be used to measure the seamlessness of the transition between user interfaces.
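The two steps described above, reverse-scoring the negative statement and counting eigenvalues greater than one, can be sketched as follows. This is a simplified illustration, not the study’s analysis: it assumes a 1 to 5 scale, applies the eigenvalue rule directly to the correlation matrix of three statements, and uses a standard closed-form solution for symmetric 3×3 matrices. All function names and the response values are hypothetical.

```python
from math import acos, cos, pi, sqrt


def reverse_score(value, low=1, high=5):
    """Flip a Likert response so that a negative statement reads as positive."""
    return low + high - value


def pearson_r(xs, ys):
    """Pearson correlation between two equally long, non-constant samples."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def correlation_matrix(items):
    """Correlation matrix for a list of item-response columns."""
    return [[pearson_r(a, b) for b in items] for a in items]


def eigenvalues_sym3(m):
    """Eigenvalues of a symmetric 3x3 matrix, largest first (closed form)."""
    off = m[0][1] ** 2 + m[0][2] ** 2 + m[1][2] ** 2
    if off == 0:  # already diagonal
        return sorted((m[0][0], m[1][1], m[2][2]), reverse=True)
    q = (m[0][0] + m[1][1] + m[2][2]) / 3
    p = sqrt((sum((m[i][i] - q) ** 2 for i in range(3)) + 2 * off) / 6)
    b = [[(m[i][j] - (q if i == j else 0)) / p for j in range(3)] for i in range(3)]
    det = (b[0][0] * (b[1][1] * b[2][2] - b[1][2] * b[2][1])
           - b[0][1] * (b[1][0] * b[2][2] - b[1][2] * b[2][0])
           + b[0][2] * (b[1][0] * b[2][1] - b[1][1] * b[2][0]))
    r = max(-1.0, min(1.0, det / 2))  # guard against rounding outside [-1, 1]
    phi = acos(r) / 3
    largest = q + 2 * p * cos(phi)
    smallest = q + 2 * p * cos(phi + 2 * pi / 3)
    return [largest, 3 * q - largest - smallest, smallest]


# Invented responses to three statements; statement 2 is negatively worded.
s1 = [4, 5, 2, 3, 1, 4, 5, 2]
s2 = [reverse_score(v) for v in [2, 1, 4, 3, 5, 2, 2, 4]]
s3 = [5, 4, 2, 3, 2, 4, 5, 1]

eigenvalues = eigenvalues_sym3(correlation_matrix([s1, s2, s3]))
retained = sum(1 for value in eigenvalues if value > 1)
print(eigenvalues, retained)
```

When the statements are strongly inter-correlated, one eigenvalue dominates and a single factor is retained, which matches the one-dimension interpretation given above.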

The correlation between statements was also investigated based on the 52 responses to the STS. The results show that all statements correlated significantly with one another; all correlations were significant at 0.01 (2-tailed) (see Table 3). The correlations between the statements were positive. That is, increases in one statement correlated with increases in the others. This finding supports our assumption that the proposed scale could be used to measure only one dimension: the seamless transition between devices. Conversely, if the coefficient of any statement decreases (e.g., to below 0.3 [67]), it can be concluded that the statement does not contribute to the overall seamless transition score.

Further analysis was conducted to test the reliability of the scale. A reliability test was conducted on the combined data sets (the 52 responses to the STS) obtained by testing the three cross-platform services: RE, TA and TD. The results show high reliability, with Cronbach’s alpha equal to 0.882, which exceeds the acceptable reliability coefficient value of 0.7 [69]. This outcome indicates that the STS is a reliable method that can be used to measure seamless transitioning between devices.
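Cronbach’s alpha can be computed from the item variances and the variance of the summed scores, using the standard formula alpha = k/(k − 1) × (1 − Σ item variances / variance of totals) for k items. The sketch below is illustrative only: the `cronbach_alpha` helper is hypothetical, and the responses are invented (already reverse-scored where needed), not the study’s 52 STS responses.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for k item columns aligned by respondent."""
    k = len(items)
    if k < 2:
        raise ValueError("at least two items are required")
    totals = [sum(responses) for responses in zip(*items)]
    item_variance_sum = sum(variance(column) for column in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Invented responses to three statements on a 1 to 5 scale.
statement_1 = [4, 5, 2, 3, 1, 4, 5, 2]
statement_2 = [4, 5, 2, 3, 1, 4, 4, 2]
statement_3 = [5, 4, 2, 3, 2, 4, 5, 1]

alpha = cronbach_alpha([statement_1, statement_2, statement_3])
print(round(alpha, 3))
```

Values above the 0.7 threshold cited in the text would be read as acceptable internal consistency.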

The overall results for the task-resuming success rate (RE, TA, TD: 40%, 62.5%, 93.7%), the time taken to resume tasks (RE, TA, TD: 12.3, 12.2, 5.8 min), and the user satisfaction with the seamlessness of transitions (RE, TA, TD: 2.8/5, 2.5/5, 3.8/5) showed that the TD cross-platform service supported continuity better than the RE and TA services. However, these are the overall results for continuity, and they are not able to verify which user interface worked better to support task continuity and which failed to do so. Therefore, analysing data by participant could provide an indication of which user interface should be improved to support task continuity.

A Pearson correlation test was carried out to investigate the relationship between the continuity metrics. The test was performed on the data obtained from the first and second transitions of the first HT of each service (see Table 4 and Table 5, respectively). In some cases, there were significant correlations between variables. One interpretation of the results is that increases in the time taken to resume tasks correspond to decreases in participant satisfaction with the smoothness of transitions as well as decreases in task-resuming success (except for TD, which showed an increase in the second transition). The correlations between task-resuming success and STS scores were mostly positive, which indicates that increases in task-resuming success correspond to increases in STS scores. However, in two of the cases, increases in task-resuming success correlated with decreases in seamless transition scores, which means that users may not always be satisfied with the smoothness of transitions even when they are able to resume a task successfully. As the three metrics did not show a consistent pattern of correlation across the data sets, it could be argued that each metric provided information that was different from that of the other metrics and that all three should therefore be used in cross-platform usability evaluations.

6.6. Productivity

The unproductive period in minutes for each HT was computed and collected in the study (RE: H1 = 6.03, H2 = 4.08; TA: H1 = 2.59, H2 = 5.58; TD: H1 = 2.23, H2 = 4.64). The results show that RE’s first HT and TA’s second HT produced the highest average unproductive periods. The majority of participants in these two HTs made several mistakes and requested help when attempting subtasks across devices. For instance, during RE’s first HT, participants encountered inconsistency issues with the search panel across the different user interfaces. These results suggest that the unproductive period metric can contribute new information about cross-platform usability, since it takes into account the time spent seeking assistance.

6.7. Satisfaction

The CPUS was designed to produce a single reference score of user satisfaction for a cross-platform service. The analysis of the CPUS results began with the inspection of the statements on the scale separately.

Table 6 lists descriptive statistics of CPUS responses for the three test services. The table shows the actual mean, post-transformation (PT) mean, and the standard deviation (SD) of the CPUS responses for each of the eight statements. The PT mean adjusts the scores of negative statements 4, 6, 7 and 8, so that positive responses are associated with a larger number, as they are for the other four (positive) statements. In the PT mean column, the larger numbers for positive statements 1, 2, 3 and 5 show that these statements received more positive responses (agreements), and the larger numbers for negative statements 4, 6, 7 and 8 indicate that these received more positive responses (disagreements). In the RE service, statement 8 received the largest number of positive responses (disagreements) with a mean of 2.20. For the TA service, statements 1, 2, 3, 4, 5 and 6 equally received the largest number of positive responses. For the TD service, participants gave statement 1 the largest number of positive responses with an average of 4.00, which was also the largest number across all statements for all tested cross-platform services. These findings suggest that the scale can generate different scores according to the designs of services. The results in Table 6 are also consistent with other cross-platform usability measures. For instance, the TD service received more positive impressions on the scale compared to the RE and TA services. This result is consistent with the cross-platform execution times, the completion rate, and the continuity results. TD users performed HTs with shorter execution times, had greater task completion rates, and produced better continuity results compared with RE and TA users.
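The post-transformation (PT) mean can be sketched as a reverse-scoring step followed by a per-statement average. The sketch below assumes a five-point response range and the common reverse-scoring rule (minimum + maximum - score); neither is stated explicitly in this section, so both are assumptions, as are the function names and the sample responses.

```python
# Negatively worded statements named in the text.
NEGATIVE_STATEMENTS = {4, 6, 7, 8}
# Assumed response range (a five-point scale); not confirmed by the source.
SCALE_MIN, SCALE_MAX = 1, 5


def transform(statement, score):
    """Reverse-score negative statements so that larger always means more positive."""
    if not SCALE_MIN <= score <= SCALE_MAX:
        raise ValueError(f"score {score} is outside the assumed scale")
    if statement in NEGATIVE_STATEMENTS:
        return SCALE_MIN + SCALE_MAX - score
    return score


def pt_means(responses):
    """Return the PT mean per statement; each response maps statement -> raw score."""
    means = {}
    for statement in range(1, 9):
        values = [transform(statement, r[statement]) for r in responses]
        means[statement] = sum(values) / len(values)
    return means


# Hypothetical responses from two participants (NOT study data).
responses = [
    {1: 4, 2: 4, 3: 5, 4: 2, 5: 4, 6: 1, 7: 2, 8: 1},
    {1: 5, 2: 3, 3: 4, 4: 1, 5: 4, 6: 2, 7: 2, 8: 3},
]
means = pt_means(responses)
```

Under this rule, strong disagreement with a negative statement contributes the same value as strong agreement with a positive one, which is what allows the eight PT means to be compared directly.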

The correlation between CPUS statements was investigated by carrying out a correlation analysis on the CPUS results from the three test services. The Pearson correlation results shown in Table 7 confirm that the eight statements correlate significantly with one another (with coefficients greater than 0.3 [67]). All statements exhibited significant coefficient values, which indicates that each statement contributes positively to measuring the overall cross-platform satisfaction.

A factor analysis of the CPUS statements was performed to confirm that the statements addressed different dimensions of the participants’ experience.

Figure 4 illustrates the eigenvalue output of the factor analysis for the data sets generated by each test service, as well as for the combined data set. The results show that the first factor was the only significant factor among the eight. It can be concluded that, viewed collectively, the statements reflect users’ satisfaction with cross-platform systems.

The internal consistency of the CPUS statements was tested via a reliability test. The PT mean was used to compute this test (RE: 0.98; TA: 0.99; TD: 0.98; Combined: 0.99). The high Cronbach’s alpha values corroborate the viability and reliability of the CPUS as a measure of cross-platform satisfaction [69].
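For readers unfamiliar with the reliability test, Cronbach’s alpha can be computed from the item variances and the variance of the participants’ total scores. The following is a minimal sketch using the standard formula; the function name and the sample scores are hypothetical, and the input is assumed to be already reverse-scored (post-transformation) responses.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha; each row holds one participant's item scores."""
    n_items = len(rows[0])
    if n_items < 2 or len(rows) < 2:
        raise ValueError("need at least two items and two participants")
    if any(len(row) != n_items for row in rows):
        raise ValueError("every participant must answer every item")
    # Sample variance of each item across participants.
    item_variances = [variance([row[i] for row in rows]) for i in range(n_items)]
    # Sample variance of the participants' summed scores.
    total_variance = variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("alpha is undefined when all total scores are equal")
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical post-transformation scores for four items (NOT study data).
scores = [
    [4, 4, 5, 4],
    [2, 3, 2, 2],
    [5, 5, 4, 5],
    [3, 3, 3, 4],
]
alpha = cronbach_alpha(scores)
```

Values close to 1 indicate that the items vary together across participants, which is the sense in which the coefficients reported above point to high internal consistency.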

7. Discussion and Model Refinement

7.1. Data Collection Techniques

The combination of data collection methods in the cross-platform assessment model supported the identification of several usability issues. Of the three methods, the think-aloud protocol generated the most cross-platform usability issues for all three test services, which is consistent with Nielsen’s claim [11]. Nevertheless, our findings highlight the importance of using all three methods to identify as many usability issues as possible, since the issues found by one method might not overlap with those found by another.

7.2. User Factors

Usability measures are affected by the context of use, including user and task characteristics [53]. That is, the effectiveness of a usability test depends on the given tasks, the methodology, and the users’ characteristics [70]. In the design of this study, the tasks were formulated in a way that reduced the effects of task characteristics on cross-platform usability measures. Nonetheless, user traits should be inspected as they may affect the cross-platform usability test. The study findings show that user characteristics, such as cross-platform expertise and expectations about the distribution of data and functions, could have an impact on HT execution time. Accordingly, user factors should be considered when evaluating the usability of cross-platform services. This consideration entails the careful recruitment of participants to reduce the impact of these factors on overall measurements.

7.3. Correlation between Metrics

Several metrics were used to assess efficiency, effectiveness, and continuity. Correlation tests were carried out to determine how these metrics relate to one another. For the efficiency factors, the findings show primarily non-significant correlations between the execution time and the number of actions. These results confirm the value of each metric and its ability to contribute unique findings. In the case of the effectiveness factors, the correlation between the metrics was found to be significant, and hence, the use of a single metric can potentially suffice. Nevertheless, there is still a risk of overlooking valuable issues when discarding one of the two effectiveness measures. For instance, users are capable of completing a task successfully while still encountering difficulties and making mistakes. In the presented cross-platform usability study, participants made several errors when attempting to reuse prior knowledge from one device to inform their interaction with another. This observation shows that counting the number of errors can indicate design issues in a cross-platform service that would otherwise have been overlooked. As with efficiency, the correlations between the continuity metrics were largely not statistically significant, which supports their value in capturing distinct aspects of usability.

7.4. Metrics Comprehensiveness

The proposed cross-platform usability model utilised traditional usability metrics and introduced a new metric, ‘continuity’. All metrics proved valuable; however, it is difficult to argue for the comprehensiveness of these metrics for a cross-platform usability assessment specifically. This difficulty is largely because the topic of cross-platform usability is very much in its infancy and requires further studies to understand its complexities. In relation to the proposed model, we believe that further refinement is needed to confirm the relevance of the CPUS and STS statements. Additionally, further studies may aid the expansion of the model by considering more factors, such as learnability.

7.5. The Number and Order of User Interfaces

The study assessed the usability of three user interfaces, which proved time consuming and generated less interpretable results. For instance, it proved difficult to extract measurements (e.g., average HT execution time) because each participant attempted each HT in a distinct order due to the larger number of combination options.

Observations show that at times it was difficult for some participants to explain inter-usability problems because they were exposed to three different user interfaces. Furthermore, it was noted that participants gained experience as they interacted with one interface and then another. This finding further emphasises the importance of testing user interfaces in pairs.

Arguably, users will still need to interact with user interfaces from different platforms interchangeably. In fact, it was observed in the study that unique inter-usability problems may occur when participants interacted with the user interfaces in a specific order. These problems are due to the influence of past experience with the previous user interfaces in the HT assignment. Therefore, testing the interchangeability of user interfaces remains important in cross-platform usability evaluations.

7.6. Model Refinement

Based on the study findings, the proposed model was refined as shown in Figure 5. The refined model maintains the combination of data collection methods and cross-platform usability metrics from the original model as they have been found to be invaluable for investigating the unique behaviours exhibited in cross-platform systems. The previous section highlighted the importance of user interface interchangeability, as unique cross-platform issues can be identified in each distinctive order. Nonetheless, the findings support testing with user interface pairs, particularly when working with a small sample group. In the refined model, two new factors are included: cross-platform expertise and user expectations of data and function distribution. These factors should be taken into account when screening participants for cross-platform testing.

8. Expert Evaluation of the Model

We carried out an expert evaluation to capture the opinions and impressions of professionals specialising in UX about the model. We designed a questionnaire with one part that captured participant demographics and their roles in their respective organisations, and another part that captured participant opinions about the model. The questionnaire also included detailed explanations of our assessment model. Participant responses were analysed using predefined themes including model appropriateness, effectiveness and usefulness, model completeness and redundancy, adaptation of the model to practice and challenges.

Participants’ most recent study programmes included computer science, software engineering, information systems, HCI, and business. The age of participants ranged from 30 to 39 years old for six participants, 40 to 49 for one participant and 50 years old or more for one participant. Three participants held master’s degrees, and five participants held bachelor’s degrees. Of the participants, there were two UX designers, two usability engineers, one information architect, one interaction designer, one usability analyst and one UX specialist. Three participants had been working in the field of UX or usability for more than ten years and five participants for between five and ten years. The industries in which the participants primarily worked included government, education, media, medicine and software. Four participants described UX as a part of their roles in their organisations, two participants mentioned that they worked as a part of a UX team in their organisations and two participants defined themselves as the UX people in their organisations.

Generally, seven participants agreed that the assessment model could be used to address some of the current gaps in cross-platform usability evaluation approaches. One participant, P1, stated that they felt that the model is ‘appropriate’ and that its elements are designed carefully to capture information about cross-platform usability. Another participant, P3, mentioned that the model could contribute to cross-platform service design practices and may open the door to new thinking about user-centred service design. One participant, P8, focused more on the appropriateness of the model from the business perspective, rather than its suitability for assessing cross-platform usability. This participant argued that the appropriateness of the model needed to be determined based on the practical return on investment, which was not clear in the description of the model.

In terms of the effectiveness of the model for identifying cross-platform usability problems, all participants except P8 agreed that using the model could help identify cross-platform usability problems. One participant, P1, linked the model’s effectiveness to its focus on cross-platform usability assessment. That is, the model does not integrate cross-platform usability evaluation procedures with the traditional procedures. The participant considered this an important advantage of the model, since it could allow evaluators to focus on identifying cross-platform usability problems. Participant P3 considered that the model might be effective for identifying inter-usability problems because of our approach to designing HTs. That is, our way of designing HTs allows users to take the same or a similar path to achieve subtasks across devices. Hence, when users switch from one device to another, evaluators could identify problems by observing participant interactions and behaviours when attempting to reuse knowledge of specific functions. Participant P4 reported that the use of several data-collection techniques in our model could help to identify as many potential cross-platform usability problems as possible. However, participant P8 suggested that more practical examples may be needed to allow for the assessment of the effectiveness of the model.

Concerning the usefulness of the model, on a Likert scale from one to five, where one is not useful, three is neutral and five is extremely useful, five participants responded that the model is extremely useful, two participants said that it is useful and one participant remained neutral. Six participants also mentioned that they would use the model in cases when they needed to assess the cross-platform usability of a service, and one participant indicated that he might use it by focusing only on certain measures. Participant P8 stated that he might not use the model because it lacked a description of its business value, which would be needed to fund the assessment activity.

In regard to the completeness of the model, seven participants who answered the related question agreed that the model is comprehensive. One participant stated: “the model is comprehensive, where all required data-collection techniques that are normally used in usability testing are included. Also, it employs most of the required measurements to support the evaluations” (P1; UX designer in the education domain). In terms of the redundancy of model elements (e.g., measures), participants stated that there is no redundancy in the model.

Our analysis of all participants’ responses to the question “Do you think that the model can be adopted in the UX evaluation practices? Why?” showed that seven participants generally agreed that the model can be adopted in UX evaluation practices. One participant stated: “Yes, this can be for several reasons: the absences of a current method for cross-platform usability testing, the simplicity and consistency of the model with traditional testing methods and the revolution of multi-platform services.” (P1; UX designer in the education domain).

In regard to challenges and constraints for adopting the model, three participants, P4, P6 and P7, indicated that there are no challenges or constraints for adopting the model for UX evaluation practice. However, other participants listed some possible challenges for the quick adoption of the model. For example, three participants, P1, P2 and P5, mentioned that usability evaluators might need to understand cross-platform usability concepts and be trained in how to use the model in order to adopt and execute it effectively. Participant P3 indicated that there might be challenges integrating the model into current user-centred design (UCD) practices, which concern the design of individual user interfaces rather than MUIs. Participant P8 indicated that there might be a need for a description of the business value of the project, which could be required to fund the assessment activity so businesses could determine whether to adopt the model or not.

9. Conclusions and Future Work

This paper presented a cross-platform usability assessment model and reported a usability assessment of three cross-platform services conducted using the proposed model. The findings have shown that the think-aloud protocol is the most valuable method for revealing cross-platform usability issues; however, other methods such as observation also help to uncover usability issues. Furthermore, the results have shown that differences in user levels of cross-platform expertise and in the expected levels of data and function redundancy across devices influence cross-platform task execution times. The study demonstrated that the results obtained from the usability metrics proposed in this model are valuable. The results indicate that the statements comprising each scale (cross-platform usability and seamless transitioning) correlated significantly, that they were internally consistent and that they addressed only one dimension.

One of the most important strengths of the model is that it includes objective and subjective measures for evaluating the continuity and the seamless transition between devices, which are the most important aspects of inter-usability. The CPUS can also reveal important information about the inter-usability of a cross-platform service (e.g., inter-platform service consistency), making it a valuable tool that can be used in cross-platform user experience studies.

It is important to mention that the analysis of the cross-platform usability of a combination of three user interfaces was found to be time consuming and the results were less interpretable. Therefore, we recommend testing user interfaces in pairs. Testing user interfaces in an interchangeable manner is also an important aspect of cross-platform usability evaluation since unique issues can be identified for each distinctive order.

The proposed model was refined based on the user study findings and discussion. In addition, eight UX experts evaluated our model and most of them agreed that the model is appropriate, useful and can be adopted in practice.

In future work, we intend to improve and extend the user study and assessment model and examine various factors, in the following ways:

Confirm the viability of the refined cross-platform assessment model with a larger number of participants.

Conduct more experimental studies to determine whether the proposed assessment model could be employed for testing services with different degrees of data and function redundancy (e.g., exclusive), as well as for testing services when users interact with them to achieve unrelated tasks in a sequential mode, and when attempting related and unrelated tasks in a simultaneous mode.

Utilise other approaches (e.g., using severity ratings or classification of problems) in addition to the problem counting approach used in the study, as this technique may have some limitations (e.g., the counts of potential problems may include problems that are not real usability problems) [71].

Address the limitation of having only one evaluator analyse the cross-platform usability problems by recruiting multiple evaluators.

Compare the proposed assessment model to other usability evaluation methods that can be used to assess cross-platform usability (e.g., expert review). This would assist, for example, in choosing the superior method for finding serious cross-platform usability problems with the least amount of effort.

Conduct further studies to extend the model by considering more factors, such as learnability.