Abstract

Given that most cues exchanged during a social interaction are nonverbal (e.g., facial expressions, hand gestures, body language), individuals who are blind are at a social disadvantage compared to their sighted peers. Very little work has explored sensory augmentation in the context of social assistive aids for individuals who are blind. The purpose of this study is to explore the following questions related to visual-to-vibrotactile mapping of facial action units (the building blocks of facial expressions): (1) How well can individuals who are blind recognize tactile facial action units compared to those who are sighted? (2) How well can individuals who are blind recognize emotions from tactile facial action units compared to those who are sighted? These questions are explored in preliminary pilot tests using absolute identification tasks in which participants learn and recognize vibrotactile stimulations presented through the Haptic Chair, a custom vibrotactile display embedded in the back of a chair. Study results show that individuals who are blind are able to recognize tactile facial action units as well as those who are sighted. These results hint at the potential for tactile facial action units to augment and expand access to social interactions for individuals who are blind.

1. Introduction

Effective personal and professional social interactions are of paramount importance for meeting both psychological needs (e.g., developing and maintaining relationships with friends, family, and loved ones) and self-fulfillment needs (e.g., advancing one’s career). A social interaction is a shared exchange between two people (a dyad), three people (a triad), or a larger group of four or more people, occurring in person, remotely (technology-mediated), or in a mix of both. Within these social groups, information is shared verbally (e.g., speech) and nonverbally (e.g., eye gaze, facial expressions, body language, hand gestures, interpersonal distance, social touch, the appearance of interaction partners, and the appearance/context of the setting in which the interaction occurs). Nonverbal cues carry most of the information exchanged (65% or more), compared with verbal cues [1].

With the exception of social touch (e.g., shaking hands, hugging), vision is needed to sense, perceive, and appropriately respond to the visual nonverbal cues present in dynamic social scenarios. For individuals who are blind or visually impaired, these cues are largely inaccessible. Hearing provides intermittent access to certain nonverbal cues: for example, while an interaction partner is speaking, his or her voice can be used to estimate how far away he or she is standing (interpersonal distance), and the intonation of the voice, together with the content of the speech, can be used, to some extent, to detect emotion and intent. Without vision, however, immediate emotional responses, the nuances of facial expressions, and the intent behind eye gaze and body language are lost. In a focus group with individuals who are blind and visually impaired, work environments were mentioned most frequently as the setting where inaccessible social interactions had the most negative impact. Participants noted that the most challenging type of social interaction is the large group meeting, particularly because questions are commonly directed through eye gaze, making it easy to answer a question out of turn and create a socially awkward situation. Individuals who are blind also noted that when passing people in hallways or elevators, they wonder whether a co-worker is keeping quiet to avoid social interaction. Incomplete exchanges of information, such as those described in these focus group examples, may lead to feelings of embarrassment; embarrassment, in turn, may cause an individual to seek social avoidance and isolation, which may eventually result in psychological problems such as depression and social anxiety [2]. It is therefore important to explore technological solutions that break down these social barriers and give individuals who are blind access to social interactions comparable to that of their sighted counterparts.

To address the aforementioned problem, researchers have begun to explore social assistive aids for individuals with visual impairments. These technologies use sensory substitution algorithms to convert visual data into information for perception by an alternative modality, such as touch or hearing. Researchers have targeted the nonverbal cues of eye gaze, interpersonal distance, and facial expressions. Qiu et al. [3] proposed a device for individuals who are blind consisting of a band of vibration motors worn around the head to map eye gaze information to vibrotactile stimulation. The device mapped a quick visual glance to a short vibrotactile burst, and a fixation to a repeating vibrotactile pattern. In our own previous work [4], we proposed a vibrotactile belt for communicating the direction and interpersonal distance of interaction partners using the dimensions of body site and tactile rhythm. Actuators were driven by a face detection algorithm run on frames from a video camera discreetly embedded in a pair of sunglasses. Direction was presented relative to the user: e.g., when someone stood in the user’s field of view on the right, the user’s right side was stimulated via pancake motors embedded in the belt. The intimate, personal, social, and public interpersonal distances were mapped to tactile rhythms that felt like heartbeats, with a tempo that varied with the distance (intimacy) of the interaction. Most of the work toward realizing social assistive aids for individuals who are blind has focused on visual-to-tactile substitution of facial expressions and emotions, described next.

Buimer et al. [5] used a waist-worn vibrotactile belt to map the six basic emotions to body sites on the left and right sides of the user. Réhman et al. [6] proposed a novel vibrotactile display consisting of three axes of vibration motors embedded in the back of a chair, where each axis represented a different emotion and the progression of the stimulation along the axis indicated the intensity of that emotion. Rahman et al. [7] conveyed behavioral expressions, such as yawning, smiling, and looking away, and their dimensions of affect (valence, dominance, and arousal) through speech output. Krishna et al. [8] proposed a novel vibrotactile glove with pancake motors embedded on the back of the fingers and hand to communicate the six basic emotions (happy, sad, surprise, anger, fear, and disgust) using visual emoticon representations. For example, to convey happiness, a spatiotemporal vibrotactile pattern was displayed that was perceived as a smile being “drawn” on the back of the user’s hand. As these examples show, much of the previous work aims to solve the problem for the user, i.e., to recognize an interaction partner’s basic emotion and present this information to the user, with or without an intensity rating.

Striving for a human-in-the-loop solution, we view social assistive aids as providing rich, complementary information to users so that they can draw their own conclusions about the facial expressions, emotions, and higher cognitive states of interaction partners. To meet this goal, in our early work [9], we proposed the first mapping of visual facial action units to vibrotactile stimulation patterns. As part of a pilot run to gather initial feedback and improve our design, we tested these patterns with sighted participants. Facial action units were chosen for the following reasons. First, any facial expression can be reliably broken down into its facial action units using the Facial Action Coding System (FACS) [10]. Importantly, this means the capabilities of the system are not limited to the six basic emotions described previously; by focusing on facial action units, any facial expression could be communicated to the user. Second, it is well known which facial action units occur most frequently for each of the six basic emotions [11], which simplifies user training for studies exploring the perception of these units and their associated emotions. Third, we can focus on questions surrounding the delivery and recognition of tactile facial action units, since their extraction from video is largely a solved problem: numerous free and commercial facial action unit extraction tools are available [12,13,14].

The initial set of tactile facial action units presented in [9] was improved based on participant performance and feedback, and retested in [15] with individuals who are blind. The study of [15], which we refer to from this point forward as “Study #1”, is presented here again, but with new results and analysis comparing performance between individuals who are blind and those who are sighted. The tactile facial action units from [15] were improved once more and used in a study [16] exploring how well individuals who are blind learn and recognize the emotions associated with these facial action units. The study of [16], which we refer to as “Study #2”, is also presented again in this work, with new results and analysis that explore performance questions similar to those posed for Study #1. To summarize, the aim of this work is to shed light on the following questions: (1) How well can individuals who are blind recognize tactile facial action units compared to those who are sighted? (2) How well can individuals who are blind recognize emotions from tactile facial action units compared to those who are sighted?

While more experiments need to be conducted with much larger sample sizes, the preliminary pilot tests presented in the subsequent sections at least hint toward the potential of using tactile facial action units in social assistive aids for individuals who are blind. Specifically, with very little training, individuals who are blind were able to learn to recognize tactile facial action units and the associated emotions. While the recognition performance of individuals who are blind was comparable to sighted individuals, larger sample sizes are needed for more conclusive results. In any case, these preliminary results are promising and encourage more exploration by researchers.

Our aim here is not to completely solve the problem of developing a wearable social assistive aid for individuals who are blind that is capable of extracting and presenting a myriad of nonverbal cues. This challenging problem requires advances in human-computer interaction, wearable computing, computer vision, and haptics. The present work focuses on one type of nonverbal cue: facial expressions. Before incorporating tactile facial action units into social assistive aids, a better understanding of their recognition performance is required to improve their design. This effort assesses differences in recognition performance between sighted and blind participants. The remaining sections of this article are organized as follows: Section 2 presents the materials and methods of the work, including the Haptic Chair apparatus, the detailed design of the proposed tactile facial action units, and the experimental procedures. Section 3 presents the results of both studies, and Section 4 provides an analysis of these results with discussion. Finally, Section 5 outlines important directions for future work on social interaction assistants for individuals who are blind.

2. Materials and Methods

2.1. Hardware and Software Design

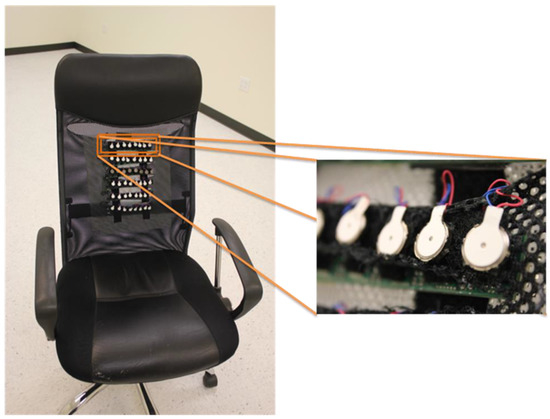

Both Study #1 and Study #2 use the Haptic Chair, a custom device built in-house for these studies. The device consists of an ergonomic mesh chair whose back is embedded with a two-dimensional array (six rows by eight columns) of 3.3 V eccentric rotating mass (ERM) shaftless pancake vibration motors soldered to custom printed circuit boards called tactor strips (Figure 1). Each tactor strip supports eight motors; the six tactor strips are attached inside the chair with Velcro, and the vibration motors are affixed to the outside of the mesh, again with Velcro, to allow closer contact with a participant’s skin. This configuration results in a center-to-center motor spacing of two centimeters horizontally and four to five centimeters vertically. To ensure that participants would be able to differentiate between vibration motors, the spacing was chosen based on the findings of van Erp [17], who investigated vibrotactile spatial acuity on the back and torso. The tactor strips are connected to a control module, which is powered and controlled through a USB connection to a laptop. The control module uses a custom shield design for the Arduino FIO. An I2C bus with an extender chip supports communication between the control module and the tactor strips. Each tactor strip consists of eight tactor units, which include an ATTINY88 microcontroller for local processing of patterns and communication, and a separate timing system for precise display of spatiotemporal tactile rhythms.

Figure 1.

Frontal view of the Haptic Chair with a close-up of a tactor strip. The vibration motors sit on the mesh itself for closer contact with the participant’s skin and to avoid the propagation of vibrations that would occur if they were attached directly to the rigid printed circuit board.
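The array layout described above can be summarized in a short Python sketch (Python being the language of the study GUIs). It assumes that each tactor strip forms one row of the 6 × 8 grid, as the six-strips-of-eight layout suggests, and it uses a hypothetical vertical spacing of 4.5 cm as the midpoint of the stated 4 to 5 cm range. The `Tactor` record and the `tactor_at` helper are illustrative names, not part of the authors' firmware.

```python
from dataclasses import dataclass

# Layout stated in the text: 6 rows x 8 columns of ERM pancake motors.
ROWS = 6
COLS = 8
# Center-to-center spacing: 2 cm horizontally (stated) and 4-5 cm vertically.
# The 4.5 cm value is an assumed midpoint, not a measured value.
H_SPACING_CM = 2.0
V_SPACING_CM = 4.5  # assumption


@dataclass(frozen=True)
class Tactor:
    strip: int    # tactor strip index (0-5); assumed to correspond to one row
    motor: int    # motor position along that strip (0-7)
    x_cm: float   # horizontal offset from the top-left motor
    y_cm: float   # vertical offset from the top-left motor


def tactor_at(row: int, col: int) -> Tactor:
    """Map a (row, col) grid cell to a hypothetical strip/motor address."""
    if not (0 <= row < ROWS and 0 <= col < COLS):
        raise ValueError(f"cell ({row}, {col}) is outside the {ROWS}x{COLS} array")
    return Tactor(strip=row, motor=col,
                  x_cm=col * H_SPACING_CM, y_cm=row * V_SPACING_CM)


def array_extent_cm() -> tuple[float, float]:
    """Width and height spanned by the motor centers."""
    return (COLS - 1) * H_SPACING_CM, (ROWS - 1) * V_SPACING_CM
```

Under these assumptions the motor centers span roughly 14 cm horizontally and 22.5 cm vertically; a different vertical spacing within the stated range would change only the second figure.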

Control and tactor module firmware can be easily modified using the Arduino IDE. The graphical user interfaces (GUIs) for Studies #1 and #2 were implemented in Python. Figure 2 depicts a screenshot of the GUI from Study #2. The GUI for Study #1 is similar, except that it consists only of the top half of the GUI in Figure 2 and includes more facial action units than those explored in Study #2.

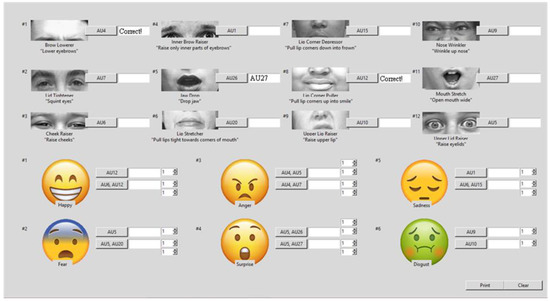

Figure 2.

Screenshot of the graphical user interface (GUI) from Study #2. This GUI is used to control the Haptic Chair (send pattern commands) and quickly record participant responses by entering them directly into the GUI and clicking “print” to save a screen capture.

2.2. Study #1 Design

2.2.1. Aim

The purpose of Study #1 was to evaluate the distinctness and naturalness of a large set of tactile facial action units with individuals who are blind and sighted. Prior to Study #1, the set of patterns had been extensively pilot tested with both individuals who are blind and sighted to refine their design before a more in-depth pilot test was conducted.

2.2.2. Participants

Fourteen individuals who self-identified as blind or visually impaired, and thirteen individuals who are sighted, were recruited for this IRB-approved study. Blindfolds were not used for sighted participants given that all stimuli were tactile. Of the fourteen participants who were blind or visually impaired (VI), ten passed the training phase within four attempts. These ten participants consisted of 3 males and 7 females; 4 congenitally blind, 3 late-blind, and 3 visually impaired; their ages ranged from 21 to 62 years (M: 42.0, SD: 11.8). Of the thirteen sighted participants, data were lost for three due to equipment malfunction. Of the remaining ten sighted participants, five passed the training phase within four attempts. These five participants consisted of 2 males and 3 females; their ages ranged from 20 to 26 years (M: 23.0, SD: 2.2). Limitations of this preliminary pilot study include (i) small sample sizes, caused partly by the difficulty of the conditions for passing training (to ensure proficiency, participants had to score 80% or better to move on to testing); and (ii) age differences between the sighted and blind/VI groups, caused partly by the pools from which participants were recruited: sighted participants were recruited from Arizona State University’s student population, and blind participants were recruited from a registry of local subjects, many of whom are 30 to 50 years of age. Future work will aim to recruit much larger samples, ease the requirements for moving on to testing, and reduce the age difference between the two groups.

2.2.3. Procedure

Upon entering the study room, each participant was given an overview of the study, asked to read and sign an informed consent form, and then compensated in advance for their time and effort. First, demographic information was collected using a subject information form. Next, each participant underwent a familiarization phase in which each pattern was presented using the Haptic Chair and described using layman terminology; Figure 3 depicts these patterns. Three pulse widths were explored: 250, 500, and 750 ms. The gap between vibrotactile pulses was kept constant at 50 ms. For familiarization, all 15 patterns were first presented with a 750 ms pulse width, then 500 ms, and finally 250 ms. Participants could ask for repeats during familiarization. After familiarization, participants underwent a training phase in which they were asked to recognize randomly presented patterns. Each pattern was presented once at each pulse width, so each participant had to recognize 45 pattern presentations (15 patterns × 3 pulse widths). The experimenter confirmed correct guesses and corrected incorrect guesses. To pass training and enter testing, a participant had to score 80% or better during training. The training phase could be repeated at most three times, giving each participant up to four attempts to pass. The testing phase was similar to the training phase, except that the experimenter provided no feedback on correct and incorrect guesses, and each of the 45 pattern variations was randomly presented a total of three times. Additionally, unlike in familiarization and training, no repeats of patterns were allowed. After the experiment, each participant completed a questionnaire with two Likert-scale questions to collect subjective feedback on the ease of recognizing the patterns and the naturalness of the patterns.

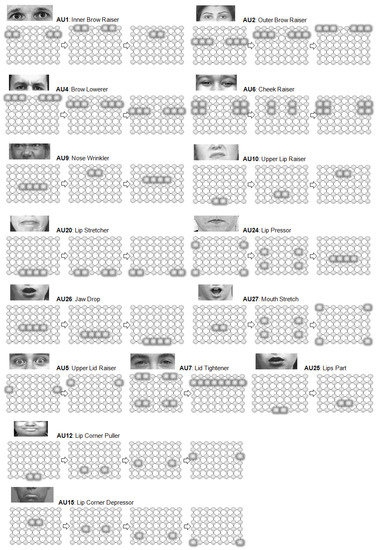

Figure 3.

Proposed mapping of visual facial action units to two-dimensional vibrotactile representations. Pattern designs leverage spatial and temporal variations to create rich, distinct, and natural simulations of their visual counterparts.

2.2.4. Visual-to-Tactile Mapping

The proposed patterns provide good coverage of action units occurring across the six basic universal emotions, particularly for surprise, anger, fear, and disgust. These patterns have been refined through extensive pilot testing and discussions/interviews with individuals who are blind. Pilot tests were also conducted with individuals who are sighted. Throughout Study #1, the following layman terminology was used instead of formal names: AU1 “Raise only inner parts of eyebrows”; AU2 “Raise eyebrows”; AU4 “Lower eyebrows”; AU5 “Raise eyelids”; AU6 “Raise cheeks”; AU7 “Squint eyes”; AU9 “Wrinkle up nose”; AU10 “Raise upper lip”; AU12 “Pull lip corners up into smile”; AU15 “Pull lip corners down into frown”; AU20 “Pull lips tight toward corners of mouth”; AU24 “Press lips tightly together”; AU25 “Slightly part lips”; AU26 “Drop jaw”; and AU27 “Open mouth wide”.

2.3. Study #2 Design

2.3.1. Aim

The purpose of Study #2 was to (i) re-evaluate the distinctness and naturalness of the proposed tactile facial action units following design improvements from the findings of Study #1; and (ii) investigate how well emotions can be associated with tactile facial action units by individuals who are sighted and blind.

2.3.2. Participants

Eight participants who self-identified as blind or visually impaired, and ten participants who are sighted, were enrolled in this IRB-approved study. Blindfolds were not used for sighted participants given that all stimuli were tactile. Six of the participants who were blind passed the training phase. These six consisted of 2 males and 4 females; 1 congenitally blind and 5 late blind; their ages ranged from 24 to 63 years (M: 42.5, SD: 14.5). Some subjects had participated in previous studies using the Haptic Chair; a few months separated those studies from Study #2 in an attempt to reduce unwanted learning effects, although such effects may still have been present. Of the sighted participants, two had to withdraw from the study due to equipment malfunctions, and three did not pass the training phase. The remaining five consisted of 4 males and 1 female; their ages ranged from 20 to 24 years (M: 22, SD: 1.5). This preliminary pilot test has limitations similar to those of Study #1, including small sample sizes and age differences, which future work aims to overcome.

2.3.3. Procedure

Each participant was introduced to the study, read and signed an informed consent form, and received compensation for their time. As in Study #1, facial action units were referred to using layman terminology. Study #2 consisted of two phases, referred to from this point on as “Part 1” and “Part 2”. The aim of Part 1 was for participants to learn the proposed set of tactile facial action units; it consisted of a familiarization phase and a training phase. Study #2 used a subset of the tactile facial action units from Study #1 (twelve of the fifteen), depicted in Figure 4. During familiarization, each participant was introduced to the twelve patterns, and two passes were made through all twelve. During training, participants were presented these patterns in random order and asked to recognize them. Correct guesses were confirmed, and incorrect guesses were corrected. Patterns were repeated upon request. The aim of Part 2 was for participants to learn the mapping between facial action units and emotions; it consisted of familiarization, training, and testing phases. During familiarization, participants were introduced to twelve combinations of facial action units, each representing one of the six basic universal emotions: happy, sad, surprise, anger, fear, and disgust. Two passes were made through all twelve combinations. During training, participants were asked to recognize not only the associated emotion but also each individual facial action unit. Participants could name the emotion and facial action units in any order; as before, correct guesses were confirmed and incorrect guesses were corrected. Repeats were allowed upon request. All patterns were presented in random order. To move on to Part 2 testing, participants had to score 80% or better (ten out of twelve patterns). If a passing score was not achieved, training was repeated, up to a maximum of three times.
Testing involved the random presentation of all twelve pattern combinations, and this was repeated four times for a grand total of 48 pattern presentations. Guesses were neither confirmed nor corrected by the experimenter. Participants were allowed to request repeats. Finally, participants completed a post-study survey.

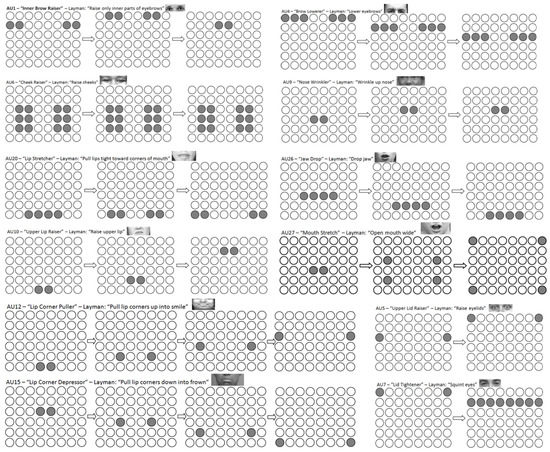

Figure 4.

Proposed tactile facial action units for Study #2. A subset of the patterns from Study #1 was used, and a few patterns were redesigned based on the results of Study #1.

2.3.4. Visual-to-Tactile Mapping

Twelve of the fifteen action units from Study #1 were selected because together they represent the six basic universal emotions well for testing purposes. Some patterns were redesigned based on the Study #1 results (compare Figure 3 and Figure 4, and see [16] for full details). The Study #1 results, discussed in the next section, showed no significant difference in recognition across pulse widths from 250 to 750 ms, so the shortest pulse width of 250 ms was chosen (with a 50 ms gap). The GUI screenshot in Figure 2 shows how combinations of facial action units communicate the specified emotions. Twelve such combinations were chosen for this study, two for each basic emotion. In everyday interactions, an emotional expression may involve one, two, or many facial action units. To keep Study #2 to a reasonable duration for participants, combinations of no more than two facial action units were chosen. Specifically, as shown in Figure 2, AU12 or AU6+AU12 represent ‘happy’; AU4+AU5 or AU4+AU7 represent ‘anger’; AU1 or AU6+AU15 represent ‘sad’; AU5 or AU5+AU20 represent ‘fear’; AU5+AU26 or AU5+AU27 represent ‘surprise’; and AU9 or AU10 represent ‘disgust’. During the presentation of an emotion, its action units are presented sequentially rather than simultaneously to avoid overlap and occlusion. A 1 s gap separates consecutive action units; shorter gaps will be explored in future work to speed up communication.
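The emotion-to-action-unit mapping and the sequential presentation described above can be sketched as a lookup table plus a timeline builder. The table reproduces the twelve combinations listed in the text. The timing model is a simplifying assumption: each action unit is treated as a run of 250 ms pulses separated by 50 ms gaps, and the number of pulses per action unit (`pulses_per_au`) is a hypothetical parameter, because the actual patterns in Figure 4 vary spatially and temporally in ways not specified in the text.

```python
# Emotion -> action-unit combinations used in Study #2 (two per emotion).
EMOTION_COMBINATIONS = {
    "happy":    [("AU12",), ("AU6", "AU12")],
    "anger":    [("AU4", "AU5"), ("AU4", "AU7")],
    "sad":      [("AU1",), ("AU6", "AU15")],
    "fear":     [("AU5",), ("AU5", "AU20")],
    "surprise": [("AU5", "AU26"), ("AU5", "AU27")],
    "disgust":  [("AU9",), ("AU10",)],
}

PULSE_MS = 250      # pulse width chosen for Study #2
PULSE_GAP_MS = 50   # gap between pulses within one action unit
AU_GAP_MS = 1000    # gap between consecutive action units


def au_duration_ms(n_pulses: int) -> int:
    """Length of one action-unit pattern modeled as n_pulses pulses (assumption)."""
    return n_pulses * PULSE_MS + (n_pulses - 1) * PULSE_GAP_MS


def emotion_timeline(aus: tuple[str, ...],
                     pulses_per_au: dict[str, int]) -> list[tuple[str, int, int]]:
    """(action unit, start ms, end ms) for a sequential, non-overlapping presentation."""
    timeline, t = [], 0
    for i, au in enumerate(aus):
        if i > 0:
            t += AU_GAP_MS  # 1 s pause before every action unit after the first
        end = t + au_duration_ms(pulses_per_au[au])
        timeline.append((au, t, end))
        t = end
    return timeline
```

For example, with a hypothetical three pulses for AU4 and two for AU5, the ‘anger’ combination AU4+AU5 yields AU4 from 0 to 850 ms and AU5 from 1850 to 2400 ms. The fixed 1 s inter-unit gap accounts for a large share of the total duration, which is why reducing it is a natural way to speed up communication.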

3. Results

3.1. Study #1 Results

The following analysis includes only data from the ten blind/VI participants and five sighted participants (fifteen participants total) who passed training and completed the study with all data intact. For the blind/VI group and the sighted group, the mean number of training phase attempts was M: 2.1 (SD: 0.6) and M: 3.0 (SD: 1.0), respectively. Of the 1350 participant responses collected from the ten participants in the blind/VI group, only eight were lost due to equipment malfunction. All 675 responses from the five participants in the sighted group were successfully recorded. Since the data analysis is based on averages across participants, the impact of this data loss (less than 1%) is expected to be negligible.
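The response totals and the size of the data loss follow directly from the Study #1 testing design (15 tactile facial action units × 3 pulse widths × 3 repetitions per participant):

$$
15 \times 3 \times 3 = 135, \qquad 10 \times 135 = 1350, \qquad 5 \times 135 = 675, \qquad \frac{8}{1350} \approx 0.59\%
$$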

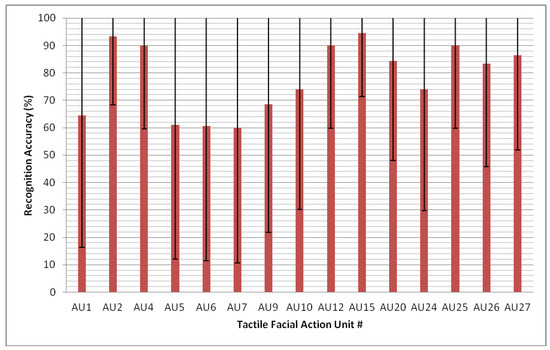

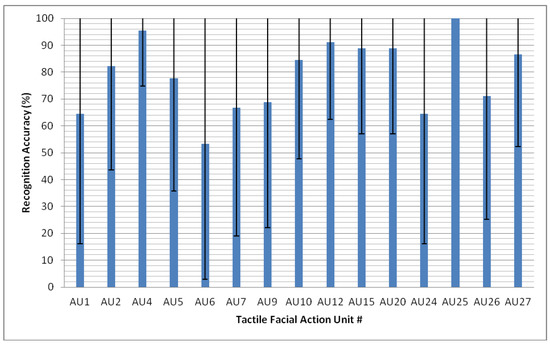

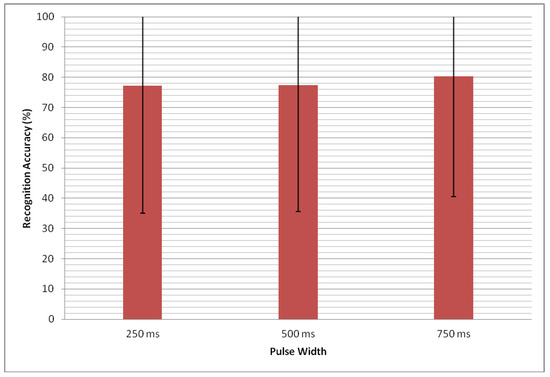

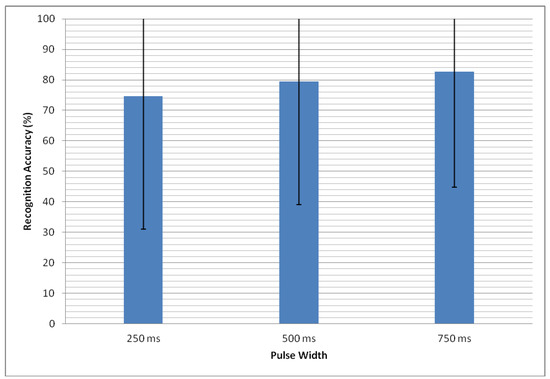

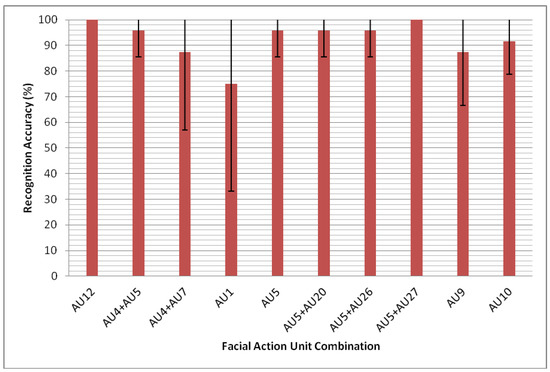

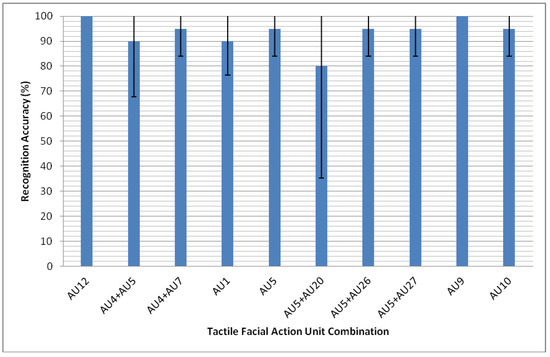

We define recognition accuracy as the number of pattern presentations guessed correctly divided by the total number of pattern presentations. The recognition accuracy of the blind/VI group and sighted group, averaged across all tactile action units and durations, was M: 78.3% (SD: 41.2%) and M: 78.9% (SD: 40.7%), respectively. Figure 5 and Figure 6 depict recognition accuracies for each tactile facial action unit, averaged across durations, for the blind/VI group and sighted group, respectively. Figure 7 and Figure 8 depict recognition accuracies for each duration, averaged across tactile facial action units, for the blind/VI group and sighted group, respectively. Table 1 and Table 2 summarize subjective responses for the blind/VI group and sighted group, respectively, for the following questions: “How easy was it to recognize the vibration patterns represented by the following facial action units?” and “How natural (intuitive) was the mapping between vibration pattern and facial action unit for the following facial action units?”.
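The accuracy definition above can be written as a small Python function; the `(presented, guessed)` pair format is an assumption made for illustration. The helper `binary_sd` computes the standard deviation of correct/incorrect outcomes, sqrt(p(1 − p)). Evaluated at the reported means, it gives about 41.2% for p = 0.783 and about 40.8% for p = 0.789, close to the reported SDs. This suggests, although the text does not state it, that those SDs were computed over individual binary responses rather than over participant means, which would explain their large size.

```python
import math


def recognition_accuracy(responses: list[tuple[str, str]]) -> float:
    """Correctly guessed presentations divided by total presentations.

    Each item is a (presented_pattern, guessed_pattern) pair.
    """
    if not responses:
        raise ValueError("no responses to score")
    correct = sum(presented == guessed for presented, guessed in responses)
    return correct / len(responses)


def binary_sd(p: float) -> float:
    """Population SD of 0/1 outcomes with success rate p: sqrt(p * (1 - p))."""
    return math.sqrt(p * (1.0 - p))
```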

Figure 5.

Tactile facial action unit recognition accuracies (averaged across duration) for the blind/VI group.

Figure 6.

Tactile facial action unit recognition accuracies (averaged across duration) for the sighted group.

Figure 7.

Recognition accuracies with respect to duration (averaged across action units) for the blind/VI group.

Figure 8.

Recognition accuracies with respect to duration (averaged across action units) for the sighted group.

Table 1.

Subjective feedback from the blind/VI group. Part (A). Ease of recognizing the proposed tactile facial action units. Part (B). Naturalness of proposed mapping. Ratings based on Likert scale: 1 (very hard) to 5 (very easy).

Table 2.

Subjective feedback from the sighted group. Part (A). Ease of recognizing the proposed tactile facial action units. Part (B). Naturalness of proposed mapping. Ratings based on Likert scale: 1 (very hard) to 5 (very easy).

The main contribution of this work is to investigate recognition performance differences between sighted and blind/VI groups rather than to explore subjects’ performance on individual tactile facial action units and tease out confusions between these designs, which is the focus of our previous presentation of Study #1 [15].

3.2. Study #2 Results

Throughout the study, presentation of AU6 (Cheek Raiser) caused malfunctions. Therefore, all participant responses to combinations containing this facial action unit, i.e., AU6+AU12 and AU6+AU15, were discarded to ensure that the malfunctions did not bias the results. In addition, a few of the 48 motors ceased operating during the study and could not be repaired. Extensive pilot testing was conducted after the study to investigate whether these failures affected recognition of the proposed patterns. We found no impact on recognition performance, owing to the inherent redundancy of the patterns: perceptually, all patterns felt the same even with a few motors inoperable.
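The exclusion rule above can be sketched as a filter over trial records. The record format (a dict whose `"presented"` key holds a string such as `"AU6+AU12"`) and the function name are hypothetical. Splitting on `+` before matching matters: a plain substring test for `"AU1"` would also match `"AU12"` and `"AU15"`.

```python
def drop_trials_with(trials: list[dict], action_unit: str) -> list[dict]:
    """Remove every trial whose presented combination contains action_unit.

    Each trial is assumed to be a dict with a "presented" key such as "AU6+AU12".
    Splitting on "+" compares whole action-unit names, not substrings.
    """
    return [t for t in trials if action_unit not in t["presented"].split("+")]
```

With twelve combinations presented four times each, dropping the two AU6 combinations leaves 40 of the 48 testing presentations per participant.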

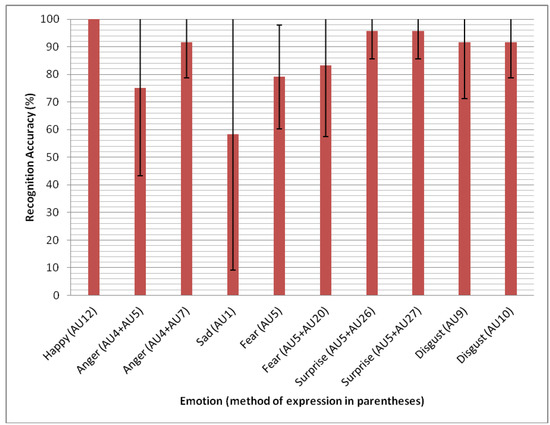

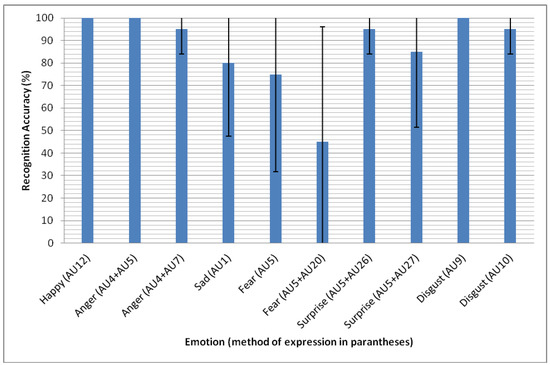

The following analysis includes only data from the six blind/VI participants and five sighted participants (eleven participants total) who passed training and completed the study with all data intact. For the blind/VI group and the sighted group, the average number of Part 2 training phase attempts was M: 2.7 (SD: 0.8) and M: 2.4 (SD: 0.5), respectively. Mean recognition accuracy for the complete multidimensional pattern (emotion + facial action units) was M: 84.5% (SD: 30.6%). Mean recognition accuracy for associating emotions was M: 87% (SD: 29.1%). Mean recognition accuracy for identifying facial action unit combinations was M: 93.5% (SD: 17.3%). Figure 9 and Figure 10 depict recognition accuracies for facial action unit combinations for the blind/VI group and sighted group, respectively. Figure 11 and Figure 12 depict recognition accuracies for emotions for the blind/VI group and sighted group, respectively.
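The three accuracy measures above can be sketched as follows. The sketch assumes that a response counts toward the complete-pattern accuracy only when both the emotion and the full set of action units are correct, and it compares action units as unordered sets because participants could name them in any order. The `Trial` record and the `score` function are hypothetical. Under this assumption, the complete-pattern accuracy can never exceed either component accuracy, which is consistent with the reported 84.5% versus 87% and 93.5%.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    true_emotion: str
    true_aus: frozenset[str]
    said_emotion: str
    said_aus: frozenset[str]  # unordered: answers could be given in any order


def score(trials: list[Trial]) -> dict[str, float]:
    """Accuracy for the emotion, the action-unit combination, and both together."""
    n = len(trials)
    emotion_ok = [t.said_emotion == t.true_emotion for t in trials]
    aus_ok = [t.said_aus == t.true_aus for t in trials]
    return {
        "emotion": sum(emotion_ok) / n,
        "action_units": sum(aus_ok) / n,
        # A complete response requires both parts to be correct.
        "complete": sum(e and a for e, a in zip(emotion_ok, aus_ok)) / n,
    }
```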

Figure 9.

Tactile facial action unit combination recognition accuracies for the blind/VI group.

Figure 10.

Tactile facial action unit combination recognition accuracies for the sighted group.

Figure 11.

Emotion recognition accuracies for the blind/VI group.

Figure 12.

Emotion recognition accuracies for the sighted group.

Participants’ subjective assessments are summarized in Table 3 and Table 4 for the blind/VI group and sighted group, respectively. Responses were collected for the following questions: “How easy was it to recognize emotions based on vibration patterns represented by the following facial action units?” and “How natural (intuitive) was the mapping between emotion and vibration patterns represented by the following facial action units?”

Table 3.

Subjective feedback from the blind/VI group. Part (A). Ease of recognizing emotions based on vibration patterns represented by the specified facial action units. Part (B). Naturalness of proposed mapping. Ratings based on Likert scale: 1 (very hard) to 5 (very easy).

Table 4.

Subjective feedback from the sighted group. Part (A). Ease of recognizing emotions based on vibration patterns represented by the specified facial action units. Part (B). Naturalness of proposed mapping. Ratings based on Likert scale: 1 (very hard) to 5 (very easy).

Similar to Study #1, the main contribution of this work is to investigate recognition performance differences between the sighted and blind/VI groups, rather than subjects’ performance on, and confusions between, individual patterns, which is the focus of our previous presentation of Study #2 [16].

4. Discussion

4.1. Study #1 Discussion

Ten of the fourteen individuals who were blind or visually impaired passed the training portion of the study and moved on to testing, requiring an average of approximately two attempts at the training phase. This is impressive given the number of tactile facial action units, the variations in pulse width, and the short training period. In contrast, only half of the sighted participants passed training: thirteen were enrolled, data from three were lost to equipment malfunctions, and five of the remaining ten passed. On average, sighted participants required more attempts at training; however, for participants who passed training in four or fewer attempts, no significant difference in the number of training attempts was found between the two groups at the α = 0.01 level, t(13) = −2.255, p > 0.01, two-tailed. These results are preliminary given the small sample size of each group, and further exploration with larger samples is needed for more conclusive results.
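The training-attempt comparison can be approximately reproduced from the summary statistics in Section 3.1 (blind/VI: M = 2.1, SD = 0.6, n = 10; sighted: M = 3.0, SD = 1.0, n = 5) with Student's pooled-variance two-sample t statistic. This is a sketch under the assumption that an equal-variance t-test was used; the degrees of freedom, 10 + 5 − 2 = 13, match the reported value. The recomputed t of about −2.20 differs slightly from the reported −2.255 because the means and SDs are rounded.

```python
import math


def pooled_t(m1: float, s1: float, n1: int,
             m2: float, s2: float, n2: int) -> tuple[float, int]:
    """Student's two-sample t statistic (equal variances) and its degrees of freedom."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    se = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Summary statistics for training attempts reported in Section 3.1.
t, df = pooled_t(2.1, 0.6, 10, 3.0, 1.0, 5)
print(f"t({df}) = {t:.3f}")  # t(13) = -2.202
```

For 13 degrees of freedom, the two-tailed critical values are about 3.012 at α = 0.01 and about 2.160 at α = 0.05. Both the recomputed and the reported |t| fall between these values, so the "no significant difference" conclusion depends on using the stricter α = 0.01 threshold.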

In terms of performance, statistical analysis revealed no significant difference in mean recognition accuracy, averaged across patterns and durations, between the blind/VI group and the sighted group, t (43) = −0.172, p = 0.865, two-tailed. Nor were any significant differences in mean recognition accuracy found between the groups for any of the three variations in duration: 250 ms, t (13) = 0.332, p = 0.745, two-tailed; 500 ms, t (13) = −0.357, p = 0.727, two-tailed; and 750 ms, t (13) = −0.348, p = 0.733, two-tailed. In other words, individuals who self-identified as blind/VI performed comparably to participants who were sighted. While promising, these results should be viewed as preliminary and inconclusive until larger samples are investigated. Moreover, the blind/VI group spanned a range of visual experience: four individuals who were born blind, three individuals who became blind late in life, and three individuals who self-identify as visually impaired. Further research with larger samples is needed to investigate performance differences among these three subgroups.
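A reported two-tailed p-value can be checked against its t statistic and degrees of freedom by computing the tail area of Student's t distribution. The sketch below stays within Python's standard library by integrating the t density numerically with Simpson's rule. This numerical approach is our choice for a self-contained example and is not necessarily what the analysis software used; `t_pdf` and `two_tailed_p` are hypothetical helpers.

```python
from math import exp, lgamma, log, pi


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = lgamma((df + 1) / 2) - lgamma(df / 2) - 0.5 * log(df * pi)
    return exp(log_norm - (df + 1) / 2 * log(1 + x * x / df))


def two_tailed_p(t: float, df: int, steps: int = 2000) -> float:
    """Two-tailed p-value P(|T| >= |t|), via Simpson's rule on [0, |t|]."""
    if steps % 2:
        raise ValueError("Simpson's rule needs an even number of steps")
    a = abs(t)
    if a == 0:
        return 1.0
    h = a / steps
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        # Simpson weights: 4 for odd interior points, 2 for even ones.
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = total * h / 3  # probability mass between 0 and |t|
    # The density is symmetric, so the two tails hold 1 - 2 * central.
    return max(0.0, 1 - 2 * central)


print(round(two_tailed_p(0.332, 13), 3))  # 0.745, as reported for 250 ms
```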

Our previous work [15] revealed significant differences in mean recognition accuracy across pattern types for the blind/VI group. Indeed, Figure 5 shows that some patterns are more difficult to recognize than others, and participant feedback in Table 1 corroborates these recognition difficulties. In [15], we traced the misclassifications to similarities between a few of the tactile facial action units. Since then, the mapping has been improved, and Study #2 used the refined patterns. A comparison of Figure 5 and Figure 6 shows that the two groups performed similarly on the individual tactile facial action units. Table 1 and Table 2 also show many similarities in the groups’ subjective assessments of how easy the patterns were to recognize and how natural they felt.

4.2. Study #2 Discussion

Six of the eight participants in the blind/VI group passed Part 2 training and moved on to testing, needing an average of 2.4 attempts at the training phase. Similarly, five of the eight participants in the sighted group passed Part 2 training, also needing an average of 2.4 attempts. As with Study #1, this is impressive given the short training period and the number of tactile facial action units, in addition to the need to associate emotions with facial action unit combinations.

Comparing the blind/VI group with the sighted group, no significant differences were found in mean recognition accuracy for the complete multidimensional patterns, t (9) = −0.047, p = 0.964, two-tailed; for emotions, t (9) = −0.120, p = 0.907, two-tailed; or for facial action unit combinations, t (9) = −0.294, p = 0.796, two-tailed. This outcome hints at the possibility of similar recognition performance between the sighted and blind/VI groups, but as with Study #1, these results should be viewed as preliminary and inconclusive given the small sample sizes. Moreover, the blind/VI group consisted of five individuals who are late blind and one individual who is congenitally blind. A larger sample would therefore allow comparisons among congenitally blind, late blind, and VI subgroups.

Previous work [16] focused on exploring significant differences in mean recognition accuracy across pattern types within the blind/VI group. No significant differences were found, suggesting that no particular pattern was more difficult to recognize in terms of its emotional content or facial movements. However, Figure 9 and Figure 11 clearly indicate that some participants struggled with Sad (AU1), and this is corroborated by the subjective ratings in Table 3. Interestingly, the sighted group seemed to have less difficulty with this emotion and its associated facial action unit. They did, however, seem to struggle with Fear (AU5) and Fear (AU5+AU20), as shown in Figure 10 and Figure 12 and corroborated by the subjective ratings in Table 4. The blind/VI group also had difficulty recognizing emotions from the facial action unit combinations representing Fear and Anger. These difficulties warrant further investigation with larger samples.

Participants in the blind/VI group misclassified Fear as Surprise eight times, whereas participants in the sighted group misclassified Fear as Surprise six times, as Anger five times, and as Sadness four times. Expressions of Fear, Surprise, and Anger share AU5 (raising eyelids) along with variations in mouth movement. Expressions of Fear and Sadness share subtle facial movements in the eyes and/or eyebrows/forehead. While these similarities most likely forced participants to rely on subtle variations to distinguish between emotions, we hypothesize that further training will improve participants’ recognition of these nuances and, in turn, their proficiency in identifying emotions. We also plan to redesign select patterns, such as AU1, to further enhance the distinctness of the tactile facial action units, thereby easing training.
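The misclassification counts above are tallies of trials in which the presented emotion and the participant's response differ. A minimal sketch, assuming a trial log of (presented, responded) label pairs; the `off_diagonal_confusions` helper and the log itself are hypothetical and do not reproduce the study's data.

```python
from collections import Counter


def off_diagonal_confusions(trials: list[tuple[str, str]]) -> Counter:
    """Count (presented, responded) pairs in which the response was wrong."""
    return Counter((shown, answer) for shown, answer in trials if shown != answer)


# Hypothetical trial log: (emotion presented, emotion reported by participant).
trials = [
    ("Fear", "Surprise"), ("Fear", "Fear"), ("Fear", "Surprise"),
    ("Fear", "Anger"), ("Sadness", "Sadness"), ("Fear", "Sadness"),
]

# most_common() lists the most frequent confusions first.
for (shown, answer), count in off_diagonal_confusions(trials).most_common():
    print(f"{shown} -> {answer}: {count}")
# Fear -> Surprise: 2
# Fear -> Anger: 1
# Fear -> Sadness: 1
```

Counting only off-diagonal pairs keeps the output focused on the confusions, such as Fear versus Surprise, that motivate redesigning specific patterns.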

5. Conclusions and Future Work

The purpose of this work was to explore two questions: (1) How well can individuals who are blind recognize tactile facial action units compared to those who are sighted? (2) How well can individuals who are blind recognize emotions from tactile facial action units compared to those who are sighted?

Results from this preliminary pilot test are promising, hinting at similar recognition performance between individuals who are blind and those who are sighted, although further investigation is required given the study’s limitations: (i) small sample sizes; (ii) age differences between the sighted and blind/VI groups; and (iii) small sample sizes within the blind/VI subgroups (i.e., congenitally blind, late blind, and VI), which prevented exploration of the impact of visual experience on recognition. Although these limitations make the results preliminary and inconclusive, the findings show potential for augmenting social interactions for individuals who are blind. Specifically, the recognition performance of participants who are blind was promising, suggesting that tactile facial action units could play a role in social assistive aids. We also hope that these findings will encourage the research community to further investigate social assistive aids for individuals who are blind or visually impaired.

As part of future work, we aim to repeat these pilot studies with much larger samples. We are also investigating recognition of multimodal cues (voice + tactile facial action units) by individuals who are blind. Such work would improve ecological validity, since real-world social interactions involve a verbal component. Other possible directions for future work include: (1) Exploring the limits of pulse width reduction to further speed up communication while maintaining acceptable recognition performance; (2) Designing patterns that cover more facial action units while maintaining distinctness and naturalness across patterns; and (3) Conducting longitudinal evaluations of tactile facial action units in the wild with individuals who are blind or visually impaired, potentially as part of case studies.

Author Contributions

Conceptualization, T.M.; Data curation, D.T.; Formal analysis, T.M.; Funding acquisition, S.P.; Investigation, D.T.; Methodology, T.M.; Project administration, S.P.; Resources, T.M.; Software, D.T., A.C. and B.F.; Supervision, S.P.; Validation, T.M.; Visualization, T.M.; Writing—original draft, T.M.; Writing—review and editing, T.M.

Funding

This research was funded by the National Science Foundation, grant number 1828010.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Knapp, M.L. Nonverbal Communication in Human Interaction; Harcourt College: San Diego, CA, USA, 1996.

- Segrin, C.; Flora, J. Poor social skills are a vulnerability factor in the development of psychosocial problems. Hum. Commun. Res. 2000, 26, 489–514.

- Qiu, S.; Rauterberg, M.; Hu, J. Designing and evaluating a wearable device for accessing gaze signals from the sighted. In Universal Access in Human-Computer Interaction; Antona, M., Stephanidis, C., Eds.; Springer: Cham, Switzerland, 2016; Volume 9737, pp. 454–464.

- McDaniel, T.; Krishna, S.; Balasubramanian, V.; Colbry, D.; Panchanathan, S. Using a Haptic Belt to Convey Non-Verbal Communication Cues During Social Interactions to Individuals Who Are Blind. In Proceedings of the IEEE Haptics Audio-Visual Environments and Games Conference, Ottawa, ON, Canada, 18–19 October 2008.

- Buimer, H.P.; Bittner, M.; Kostelijk, T.; van der Geest, T.M.; van Wezel, R.J.A.; Zhao, Y. Enhancing emotion recognition in VIPs with haptic feedback. In HCI International 2016—Posters’ Extended Abstracts; Stephanidis, C., Ed.; Springer: Cham, Switzerland, 2016; Volume 618, pp. 157–163.

- Réhman, S.U.; Liu, L. Vibrotactile rendering of human emotions on the manifold of facial expressions. J. Multimed. 2008, 3, 18–25.

- Rahman, A.; Anam, A.I.; Yeasin, M. EmoAssist: Emotion enabled assistive tool to enhance dyadic conversation for the blind. Multimed. Tools Appl. 2017, 76, 7699–7730.

- Krishna, S.; Bala, S.; McDaniel, T.; McGuire, S.; Panchanathan, S. VibroGlove: An Assistive Technology Aid for Conveying Facial Expressions. In Proceedings of the ACM Conference on Human Factors in Computing Systems, Atlanta, GA, USA, 10–15 April 2010.

- Bala, S.; McDaniel, T.; Panchanathan, S. Visual-to-Tactile Mapping of Facial Movements for Enriched Social Interactions. In Proceedings of the IEEE International Symposium on Haptic, Audio and Visual Environments and Games, Dallas, TX, USA, 10–11 October 2014.

- Paul Ekman Group, Facial Action Coding System. Available online: https://www.paulekman.com/facial-action-coding-system/ (accessed on 7 May 2019).

- Valstar, M.F.; Pantic, M. Biologically vs. Logic Inspired Encoding of Facial Actions and Emotions in Video. In Proceedings of the IEEE International Conference on Multimedia & Expo, Toronto, ON, Canada, 9–12 July 2006.

- Seeing Machines. Available online: https://www.seeingmachines.com (accessed on 15 March 2019).

- IMOTIONS. Available online: https://imotions.com (accessed on 15 March 2019).

- De la Torre, F.; Chu, W.-S.; Xiong, X.; Vicente, F.; Ding, X.; Cohn, J. Intraface. In Proceedings of the IEEE International Conference and Workshops on Automatic Face and Gesture Recognition, Ljubljana, Slovenia, 4–8 May 2015.

- McDaniel, T.; Devkota, S.; Tadayon, R.; Duarte, B.; Fakhri, B.; Panchanathan, S. Tactile Facial Action Units Toward Enriching Social Interactions for Individuals Who Are Blind. In Proceedings of the International Conference on Smart Multimedia, Toulon, Cote D’Azur, France, 24–26 August 2018.

- McDaniel, T.; Tran, D.; Devkota, S.; DiLorenzo, K.; Fakhri, B.; Panchanathan, S. Tactile Facial Expressions and Associated Emotions toward Accessible Social Interactions for Individuals Who Are Blind. In Proceedings of the 2018 Workshop on Multimedia for Accessible Human Computer Interface held in conjunction with ACM International Conference on Multimedia, Seoul, South Korea, 22 October 2018.

- Van Erp, J.B. Vibrotactile Spatial Acuity on the Torso: Effects of Location and Timing Parameters. In Proceedings of the First Joint Eurohaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems, Pisa, Italy, 18–20 March 2005.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).