1. Introduction

As one of the many domains within human–computer interaction (HCI), gesture-based interaction supports users’ gestures and body movements when they interact with digital devices and systems [1]. Because gesture-based interaction is intuitive and natural, it has the potential to support users’ self-expression [2], while also allowing users from different backgrounds to experience and participate in artistic engagement [3]. Meanwhile, digital devices such as the Nintendo Wii, Microsoft Kinect, and Sony Move are improving how users interact with systems, while also promoting cultural creativity through educational and recreational content [4]. Because the depth cameras used in these devices are becoming more broadly available as consumer products, opportunities are emerging to use such systems in novel body-interaction techniques [5], although this poses new challenges for interaction designers. Several researchers have already begun to integrate this new form of interaction into their own interactive systems [6,7,8]. However, the gesture sets for interacting with each system are usually devised by the designers, and how users’ backgrounds and individual needs can be combined with the goal of devising interactive gestures has rarely been discussed to date. Therefore, the overall aim of the present study was to determine how users’ experiences could be integrated with their multi-cultural and occupational backgrounds, with the intention of enabling interactive gesture-based expression and creativity.

In the present study, the author developed three prototypes with different digital devices (a digital camera, a PC camera, and a Kinect) and conducted two experiments to explore the features of gesture-based interaction, that is, the expressional methods and art forms that constitute the participants’ experiences. For these two experiments, the author adopted an experience-centred design (ECD) [9] methodology, which enabled the extraction and analysis of data on how to achieve self-expression in gesture-based interaction. Meanwhile, a series of workshops and in-depth interviews [10,11] were conducted to engage participants from different backgrounds in exploring the user experience of gesture-based interaction. Based on the results of these experiments, the author found that the participants greatly preferred engaging with visual traces that were displayed at specific timings in multi-experience spaces. Thus, the participants’ gesture-based interactions could effectively support nonverbal emotional expression. Additionally, the participants clearly preferred to draw on their own personal stories and emotions when creating their own gesture-based artwork. Given the participants’ varied cultural and occupational backgrounds, this may lead them to spontaneously form artistic creations.

2. Related Work

Gesture-based interaction is now widely realised in different areas, breaking down some of the barriers between users, devices such as gloves and trackers [12,13,14], and image-processing or detection techniques [15,16]. In addition, typical cases of gesture-based interaction can be found in both digital arts and technological applications [17].

2.1. Gesture-Based Interaction

Gesture-based interaction has been used to support users’ operation of interfaces or systems. Studies have investigated the use of simple gestures and audio-only feedback to control music playback in devices [18], the use of head gestures to operate auditory menus [19], and the use of gestures to rotate a physical pen and determine how a user may grip the pen to improve flexibility and control [20].

Moreover, some applications have supported user operations through gesture-based interaction, such as GestureTek’s interfaces [9] and Visual Conducting Interfaces [21]. These applications can accurately execute operational commands given by users; however, the interaction was limited to a few gestures that controlled only a few simple operations. In addition, the autonomous experiences of users were limited to a library of standard gestures [11]. In addition to tangible and wearable technologies [22,23], there is another type of technology that requires neither a wearable device nor specific markers to interact with a system, being designed according to the “come as you are” principle [24,25]. Some devices, such as the Xbox Kinect, Leap Motion, and Myo Armband, have become very popular and are frequently utilised by researchers. For instance, an optical motion-capture device based on a Web3D platform was developed to support a user’s interaction with animated dancers [26]. Another case study used the Wii Remote or Sony PS Move to animate different objects on a screen through gestures [27]. In these case studies, the gestures successfully expressed and supported animated movements. However, the movements were limited by the animated characters, which did not provide the users with a creative environment in which to express their own emotions or stories. Therefore, the author’s goal was to explore how to use gesture-based interaction to enable the creative experience and engagement of users.

2.2. Gesture-Based Expression

The application domains of gesture-based interaction have become very broad and complex, regardless of whether the interaction involves touch or is touchless. Some studies have explored creative expression with gesture-based interactive technology, especially in the areas of digital art and related cultural industries [28,29,30,31]. Others have used multi-touch pens and other digital brushes to augment gesture-based interaction. A comparative report on a “five-mode switching technique” concluded that clicking a mechanical button on a digital stylus was not the optimal solution [32]. Various other projects have proposed finger touch gestures to support art creation. For example, some studies have explored pen rolling and shaking as a form of interaction [33]. Furthermore, some projects have demonstrated that the grip can be leveraged to allow the user to interact with a digital device [34,35]. However, the operational requirements were high for naive users without any painting skills; therefore, the completeness of the users’ experiences was relatively low. Meanwhile, tangible technology, which is often based on embodied interfaces for use in artistic exhibitions [36], has been designed, integrated into smartphone and tablet devices, and then incorporated into augmented reality (AR) applications to explore the intuitiveness of gesture-based expression [37]. In the abovementioned studies, the gesture-based expression of users was designed as an affiliated function of the interactive experience. The main design targets focused on gesture-based operation or learning, without an emphasis on creativity. Specifically, the availability of only stereotypical gestures during an experience limited users’ artistic expression and creativity. For these reasons, the present study set out to explore the use of digital interactive technology to support users’ self-expression and enhance their artistic creativity.

The following section describes the first experiment, in which the participants expressed themselves with gestures, followed by its evaluation, findings, and critical reflections. A follow-up experiment is then described, which was designed to explore the characteristics of using interactive technology to support gesture-based expression, as well as potential design ideas for gesture-based artistic creation.

3. Experiment 1: Experiencing Gesture-Based Traces

In Experiment 1, the author set out to explore the design possibilities of using digital technology to support gesture-based interaction by observing, recording, and analysing the participants’ gesture-based interaction. The author further defined the needs of the participants and explored potential design insights. Therefore, the aim of this case study was not to evaluate the digital equipment itself, but rather to apply digital technology to various activities in order to obtain the participants’ feedback on the experience, to discover their needs, and to offer a series of specific and feasible critical reflections for the design of Experiment 2.

In Experiment 1, two case studies featuring digital interactive technology were undertaken to explore the possibility of interactive gesture-based expression. Two prototypes were designed to assess the experiences of the participants. One provided light using a light-emitting diode (LED), while the second tracked the gestures made with the LED light.

3.1. Recruitment

The author recruited ten participants (six females and four males) aged 19–36. Three of the participants were university students on the Museum and Media Studies course, three were professional artists, and the other four lived and worked locally. All of the participants described themselves as being very interested in art and painting. Apart from the three professional artists, the participants had no painting skills. By selecting these participants, the author hoped to analyse a diverse group of users with different cultural backgrounds, ages, and artistic proficiencies.

3.2. Equipment Design and Procedure

The ten participants were divided into five groups of two participants each. Before the workshop, the author presented an introductory session to explain the purpose of the activity and how the prototypes worked. This ensured that the equipment’s novel functional features were clear and well-understood.

During the workshop, the author organised two phases of gesture-based experience, each lasting 1.5 h. The first phase was called exploring the light in the dark, and it was an exploration of a gesture-based experience based on a digital camera. To enable participants to clearly comprehend the process of this experience, all of the activities were based on task cards provided by the author. The author used a digital single-lens reflex (DSLR) camera set to a slow shutter speed to capture the movements of the LEDs in a dimly lit room where the activities were performed. This combination was selected to maximise the likelihood of capturing the LED’s track. Task cards were offered as part of the participants’ artwork experience. However, the participants could also contribute their personal understanding of the gestures in a group discussion, so that more gesture-based tasks could be devised. The tasks consisted of simple gesture-based practices (straight lines, curves, circles, etc.) and object practices (mountains, rivers, stars, etc.). By performing the gesture-based practices, the participants familiarised themselves with the process of this experience, which included how to identify a suitable position in the room, how to find the direction of the gestures, and how long to gesture. In the object practices, the author added different objects to the experience to provide the participants with more specific gesture-based content. The objects were intended to stimulate the imaginations of the participants. The participants also added patterns of their own invention during their gesture-based engagement. When the author pressed the camera shutter, the participants held the LED and made several movements in the air (see Figure 1). Because the camera shutter could only be held open for a certain duration, the participants chose simple geometric shapes as their main gestures in this activity. All of the gestures were completed in less than 9 s.

The second phase was named

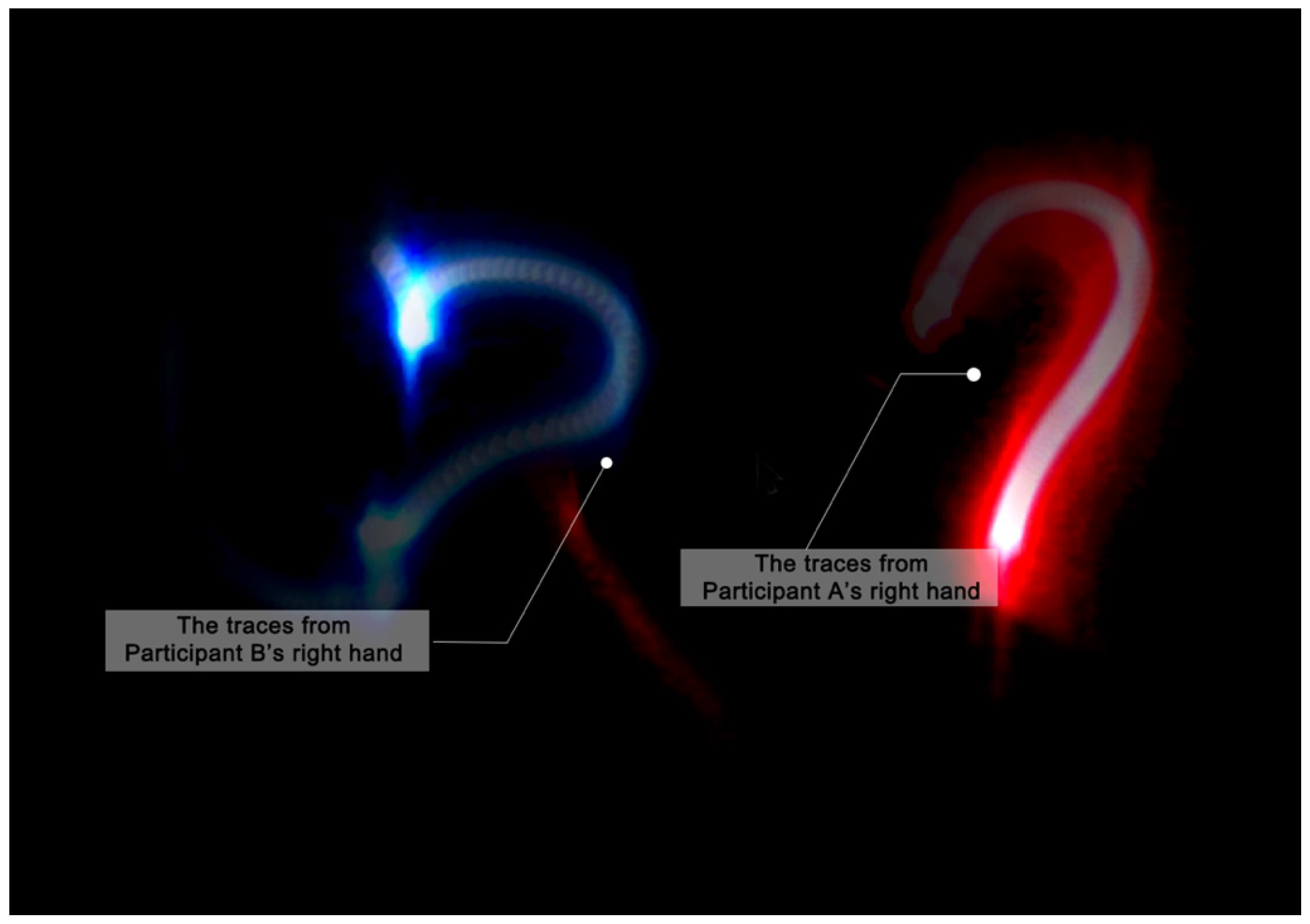

instant flowing lines. In this phase, the equipment was centred on a computer (PC) camera to allow for the participants to perform their gestures rapidly, by means of a simple operation. The author designed the equipment using processing software combined with a PC camera that was used to capture the participants’ gestures. The LED traces from the gestures of two participants were presented on a screen, with each trace being shown for only 2–3 s (see

Figure 2). In each group, participant A made the gestures while facing the PC camera. This was followed by Participant B imitating Participant A’s gestures. To explore a wide range of possible task types, the author designed a game for the participants, which allowed for them to use their gestures to express their emotions (e.g., happy, mad, shy) or a meaning (e.g., what’s the time) to each other without verbal communication. Finally, all of the participants were invited to discuss and explain their experiences and the ideas of their artwork. In this phase, the participants were given more opportunities to experience the gestures spontaneously, thus supporting the author’s analysis of the participants’ preference for visual gesturing.
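The short-lived traces described for this phase can be illustrated with a minimal sketch. It is written in Python rather than in the software used for the prototype, and it is not the author’s implementation: the class name `FadingTrace`, its methods, and the 2.5 s default lifetime (chosen from within the reported 2–3 s range) are all assumptions made for illustration.

```python
from collections import deque


class FadingTrace:
    """Keeps tracked LED points and forgets those older than a fixed lifetime.

    Hypothetical helper: the 2.5 s default is an assumed value inside the
    2-3 s display window reported for the second prototype.
    """

    def __init__(self, lifetime_s=2.5):
        self.lifetime_s = lifetime_s
        self._points = deque()  # (timestamp, x, y) tuples, oldest first

    def add(self, t, x, y):
        """Record the LED position (x, y) detected at time t (in seconds)."""
        self._points.append((t, x, y))

    def visible(self, now):
        """Return the points that should still be drawn at time `now`."""
        # Drop points from the front until the oldest one is young enough.
        while self._points and now - self._points[0][0] > self.lifetime_s:
            self._points.popleft()
        return [(x, y) for _, x, y in self._points]
```

Under this sketch, lengthening the time for which a trace stays on the screen amounts to changing a single parameter, `lifetime_s`.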

3.3. Analysis and Findings

For the analysis, the author reviewed the images of the artwork created by the participants and carefully observed any behaviours or comments relating to the equipment. All comments related to an envisioned usage, as well as subtle utterances describing the relationship that users might form or imagine with such a device, were transcribed. Using these comments, the author conducted a thematic analysis [38]. Two main findings emerged. The first concerns the visualised and immediate interaction experienced by the participants. The second concerns the participants’ expectations as to how to collaboratively create artwork. To ensure the participants’ anonymity, the author refers to the participants in Experiment 1 as “FP participants”.

3.3.1. Visualised Interaction

In the second phase of the activities, the author found that visualised interaction was the most frequent experience mentioned by participants. The visualised interactions were reasonably expressed by a series of simple movements with which the participants could integrate their own understanding and imagination. For instance, FP7 shared her thoughts:

“I think that this is really fun; when I wave my hands, the traces showed in the air, which is amazing. It’s like we gave the subtle movements different meanings.”

FP5 noted that the visual traces could help participants to understand each other’s gestures:

“When he drew something in the air, I did not even know what he meant by it. Then, we checked the photos that made me realise he drew a love heart and an arrow. More importantly, I understood the meaning, and it is so sweet.”

Beyond supporting semantic understanding, the visual gestures also inspired the participants to use their own imagination to communicate. As FP2 stated:

“We tried more things that we wanted to express, like some weird, daily gestures which we only knew. I feel like this is not only about the gestures, it is about how to use our imagination to have some visual communication.”

In addition, the participants also offered their opinions regarding the time that the traces were visible and the way in which the traces were presented. For example, FP1 said:

“Although the traces in the photos looked stunning, I had greater expectation that the traces could have been presented like the traces in the gesture-based tracked light (in the second prototype) because I would like to check the traces immediately upon creating them.”

Moreover, FP4 gave her opinion about the duration of the tracked light:

“If the traces could remain on the screen for a longer period, that would be so much better because the extended time could give us more time to check the details in the gestures.”

3.3.2. Gesture Imitation

In this experiment, the author found that the participants were very interested in gesture imitation during their interactions with each other. FP3 responded with praise and affection:

“My group member and I highly preferred this kind of collaborative interaction; the interpretation of our gestures and movements has been passed into the traces. During imitation, we were also expressing the meanings derived from our gestures.”

Gesture imitation also helped the participants to appreciate the subtlety of artistic gestures, which was another interesting perspective that they raised. As FP2 stated:

“When I imitated his gestures, I realised that our traces were drastically different, which led to a discussion about why and how the traces were different. The traces offered us more details that helped us to observe more information from our gestures.”

However, FP2’s group partner, FP1, was dissatisfied with the length of time for which the gestures were displayed on the screen. Based on their gesture imitation, the pair suspected that the traces were displayed for less time than the gesture movements themselves took, as explained by FP1:

“The first prototype was really inconvenient, because we needed to finish the gesture first, then wait then go to check it. We cannot check it when we are making the gestures. However, the second prototype was too fast. I do not mean that faster is better. We would like to see more details during the displaying of the gesture, and also appreciate this process. I think the gesture should be displayed after our gesture-based movements for maybe a period of 2 s.”

On the basis of the gesture imitation aspect of the experience, the author found that the interactive imitation provided the participants with more possibilities of engaging in different games. For instance, FP9 mentioned:

“I think we can design some fun games based on this, something like a competition that lets different groups conduct the Chinese whisper game through the use of this prototype. Now that would be awesome.”

FP10 offered an additional viewpoint:

“If this prototype can have more backgrounds or a broader colour palette for the traces, the game could become more personal, fun, and engaging. It would also let us experience a more complicated gesture-based experience.”

3.4. Critical Reflections

Based on the experiences of the five groups, the author developed a framework for the design of an interactive application for use in the next experiment. The participants successfully (1) understood how to use digital devices to create gestures, (2) conducted imitation activities with other participants through gesture-based interaction, (3) expressed simple interpretations or emotions in the gesture traces, and (4) undertook simple artwork creation based on their collaborative interaction. Although the participants’ engagement was based on basic and simple graphical traces, their expressions were well conveyed, and the participants also created some artworks through their gesture-based interaction. The interactive technology offered a visual experience of gesture-based activities, and the participants were able to express more with their gestures.

However, based on the interviews with the participants, the author identified two main features of their experiences. The first was duration. Across the two case studies, the length of time for which the participants’ gestures were displayed differed strikingly. In the first case study, the participants had to complete the gesture and then check the traces in the photos taken by the camera, whereas in the second, the gestures were immediately displayed on the screen for a few seconds. Neither the speed with which the gestures were made nor the length of time that the traces stayed on the screen satisfied the participants. The author observed that the speed of the participants’ gesturing was also affected by the speed with which the traces were displayed. An overly quick or overly slow gesturing experience detracted from the aesthetics of the gesture-drawn images to some extent. The author believed that it would be better if the traces remained on the screen for roughly 1–2 s after the completion of a participant’s gesture, thus allowing the participants to review their gestures. The second feature concerned space. In the first case study, the participants’ exploration of space was limited by the room light and the camera lens. Although the use of the PC camera improved the amount of available light to some extent, the quality of the gesture-based traces and the capture distance between the participants and the camera were still not satisfactory. These limitations also made it inconvenient for the participants to review the details of their gestures. Therefore, the author adopted motion-sensing input devices and multiple screen displays to overcome these limitations.

4. Experiment 2: Engagement in Expressive Gestures

In this phase, the participants’ experiences with the prototypes and the results of the focus-group interviews were examined from the viewpoint of the ECD [11] methodology. This enabled the author to extract and analyse data on how gesture-based interaction supports self-expression. The findings of Experiment 1 suggested that motion-sensing input devices (such as the Microsoft Kinect) should be used instead of a digital or laptop camera, because the Kinect would capture gestures and body movements more accurately, and the display times and effects of the visual gestures would be more flexible and changeable. This would potentially enhance the exploration of further interactive potential. Moreover, to explore the spatiality of the users’ experiences, two or more Kinect devices were used to capture footage, which allowed the users to share their gestures with each other and to develop collaborative artwork without having to experience the prototypes in the same location.

4.1. Prototype

4.1.1. Application Implementation

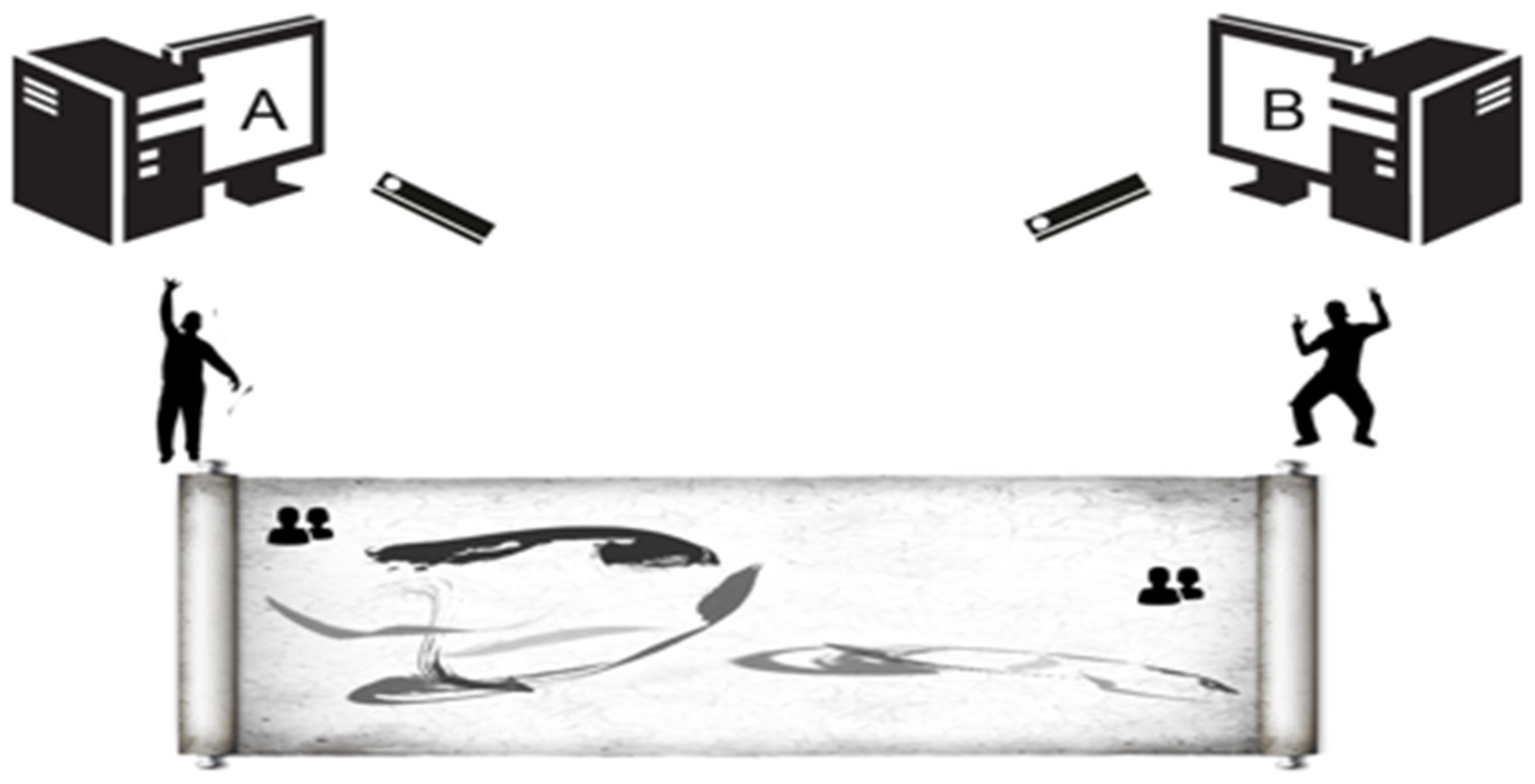

The author designed and developed a prototype in Processing, based on the oscP5 and SimpleOpenNI open-source libraries. The software was connected to a Microsoft Kinect to digitally capture interactive gestures. Two Kinect devices were used to capture gestures from two participants separately and accurately; these gestures were then presented on a screen in real time. Through collaboration between the participants, these gestures formed interactive calligraphy artwork (see Figure 3).

4.1.2. Interaction Design Details

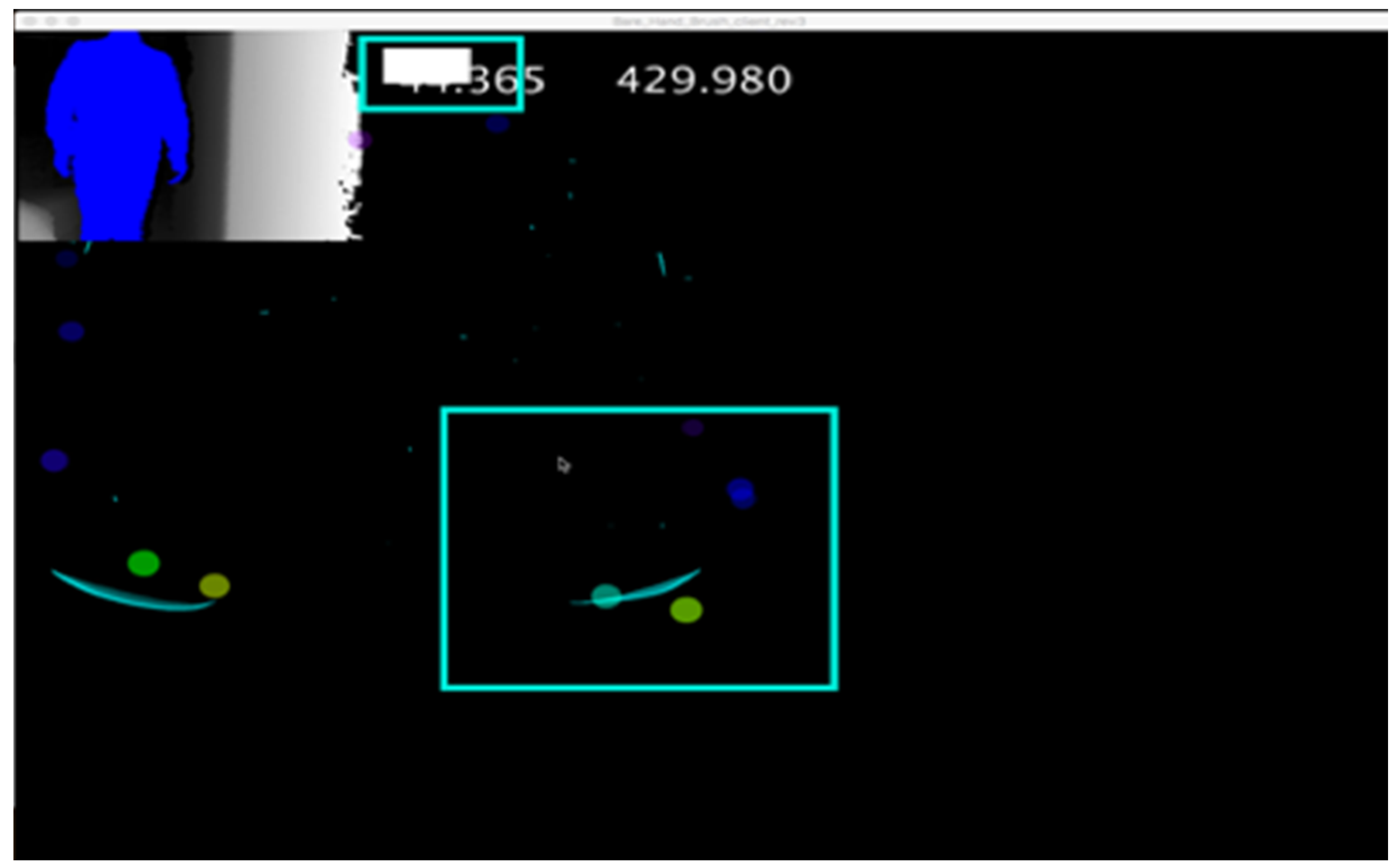

The application distinguishes between identified and unidentified users, and it provides modes for activating and deactivating an ink spray (coloured bubbles) once a user has been identified. When the application identifies a user, the positions of the user’s hands are indicated on the screen by ink-spray bubbles, reminding users where their hands and arms are positioned in the event that they lose track of where they are on the display. When the users bring their knees together, a white rectangle is displayed on the left side of the screen (see Figure 4). This means that the user has been identified and can determine where their hands are positioned, based on the ink-spray bubbles displayed on the screen. If the user has not been identified, this rectangle is displayed as a simple white outline. To pause the ink spray, users simply move their knees apart. When the ink-spray bubbles have stopped, the rectangle becomes a white outline, reminding the users that the ink spray is not active. To clear the gesture-based traces on the screen, users join their hands together for 60 frames (1.5 s), which removes the current traces. If the Kinect device fails to identify the user within 180 frames (3–5 s), the application automatically clears the traces on the screen.
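The interaction rules above can be read as a small per-frame state machine. The following Python sketch is one possible interpretation under stated assumptions, not the author’s Processing code: the class `InkSprayController`, its `update` method, the boolean inputs (assumed to come from a skeleton tracker), and the `"filled"`/`"outline"` return values are hypothetical names. Only the two frame thresholds are taken from the text.

```python
from dataclasses import dataclass, field

CLEAR_HOLD_FRAMES = 60   # hands joined for this many frames clears the traces
LOST_USER_FRAMES = 180   # user unidentified for this many frames clears the traces


@dataclass
class InkSprayController:
    """Hypothetical per-frame controller for the ink-spray interaction."""

    spraying: bool = False
    traces: list = field(default_factory=list)
    _hands_joined_frames: int = 0
    _user_lost_frames: int = 0

    def update(self, user_identified, knees_together, hands_joined,
               hand_positions=()):
        """Advance one frame and return the indicator style to draw."""
        if not user_identified:
            # No user: stop spraying and count towards the automatic clear.
            self.spraying = False
            self._hands_joined_frames = 0
            self._user_lost_frames += 1
            if self._user_lost_frames >= LOST_USER_FRAMES:
                self.traces.clear()
            return "outline"

        self._user_lost_frames = 0
        # Knees together activates the ink spray; knees apart pauses it.
        self.spraying = knees_together
        if self.spraying:
            self.traces.extend(hand_positions)

        # Joined hands must be held continuously to clear the canvas.
        if hands_joined:
            self._hands_joined_frames += 1
            if self._hands_joined_frames >= CLEAR_HOLD_FRAMES:
                self.traces.clear()
                self._hands_joined_frames = 0
        else:
            self._hands_joined_frames = 0

        return "filled" if self.spraying else "outline"
```

Counting frames rather than seconds mirrors how the thresholds are reported in the text; the corresponding wall-clock durations then depend on the frame rate at which the application runs.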

4.1.3. Application Operation

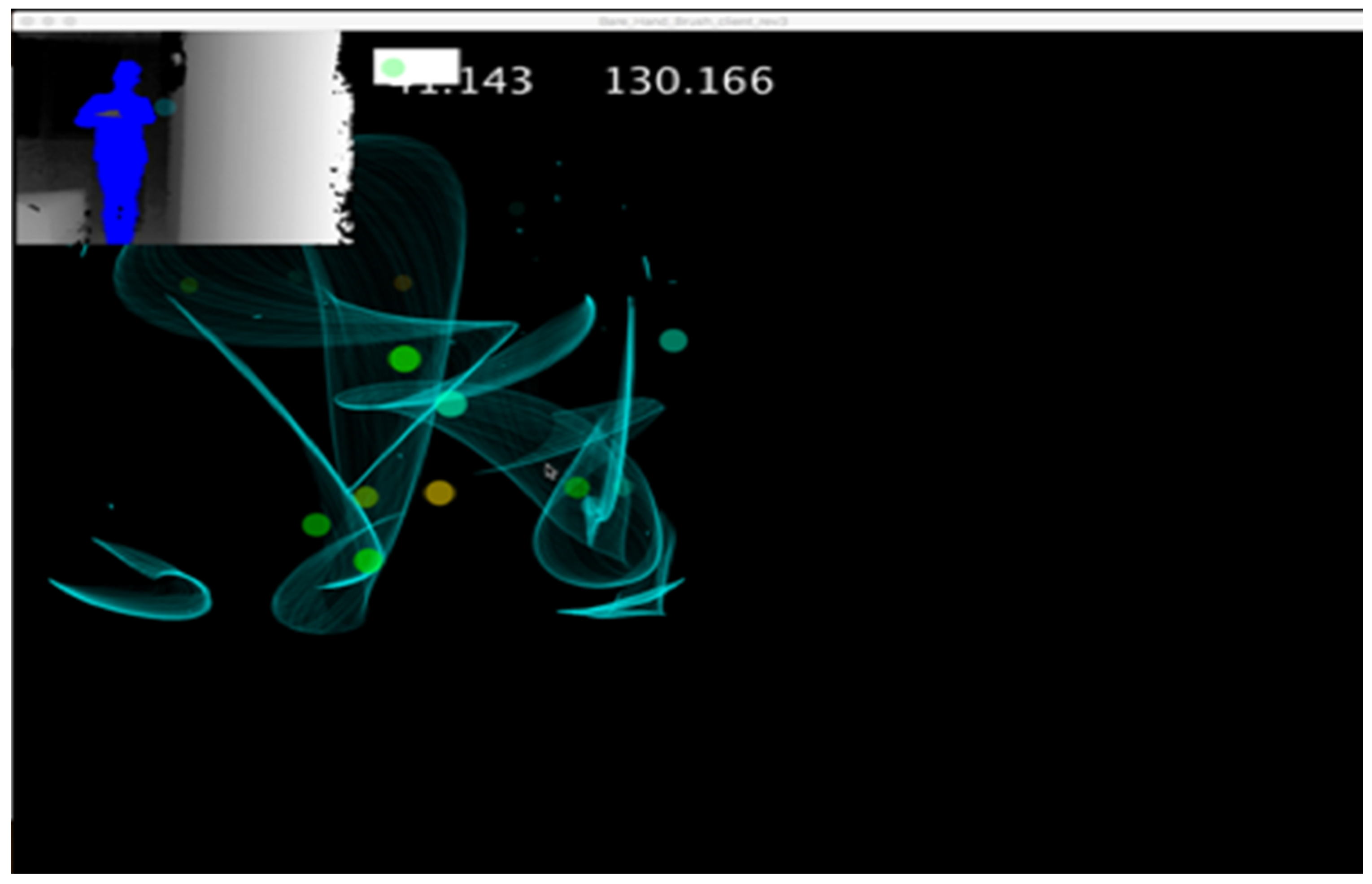

The form of the ink spray was inspired by calligraphy brushwork, and thus it can intuitively present the users’ gesture traces. Based on the results obtained in Experiment 1, black was chosen as the background colour of the screen (canvas), while green was selected for the ink spray (see Figure 5). Moreover, the application allows two users to collaborate from different spaces, such that they can interactively gesture to each other, with both of their movements presented on their own screens. For instance, the traces of the gestures made by one user can appear on the other user’s screen, so that both users’ gestures are displayed on one screen and the users can collaboratively create artwork. Once the users have completed their gesture-making activities, they can save the resulting pictures on the screen.

4.2. Recruitment

The author recruited 22 participants (from four different countries) aged 20–56 years. All of these participants worked with the application. Nine were male (41%), and thirteen were female (59%). None of the participants had participated in Experiment 1. They consisted of (1) six British/Spanish/American students who were majoring in fine arts and performing arts at a university, (2) eight English/Chinese staff members who worked in a gallery, (3) four Chinese calligraphers, and (4) two middle-aged British couples who were HCI researchers. The activity space was set up in a dance studio, with each group using it independently during their experience. Two TV screens that were connected to a Kinect device were offered to each group. The author’s goal was to analyse a diverse range of users from different cultural backgrounds and age groups, and with different levels of proficiency, such that valuable critical accounts could be acquired.

4.3. Procedure

All of the participants took part in an introductory session. In this session, the author explained the purpose of the activity and how the system worked, ensuring that the prototype’s novel functional features were well understood. The participants were asked to fill out the Engagement Sample Questionnaire (ESQ) [39], which was divided into four parts: Demographics, Before the experience, During the experience, and After the experience (Table 1).

The first part of the ESQ included questions that addressed the respondent’s demographics (gender, age, occupation, and nationality).

The second part focused on the participants’ pre-experience motivation by investigating how much they wanted to begin the experience and what motivated them to start the application (their objectives).

The third part included four phases in which the participants experienced the prototype in pairs. In the first phase, every participant was familiarised with how to use a Kinect device to capture gestures and then went on to separately practise different gestures with the application. In the second phase, two groups of participants experienced the application and explored the interactive mode during their engagement (e.g., how to set each participant’s position on the screen). In the third phase, the participants drew on their own cultural backgrounds and occupations to spontaneously conduct a series of thematic experiences, including dance, calligraphy, freehand painting, and story-making, to explore the multiple uses of the application. In the fourth phase, each participant in a group was placed in a different room so that the group could conduct their co-experience. Through the system, each participant could interact with their partner through gesture-based interaction on the screen.

After each phase, the participants answered a series of questions addressing their desire to continue with the experience: a quantitative question assessing how much they wanted to continue with the experience (captured on a seven-point Likert scale) and open-ended questions concerning what made them want or not want to continue, what they were doing to reach their objective, and what they felt or experienced. The fourth and final part of the ESQ used open-ended questions that focused on the post-experience willingness to interact with the prototype again, as well as the story-making within the artwork created by the participants. The participants could choose to make an audio recording rather than providing written answers.

4.4. Data Analysis

The data consisted of 22 questionnaire responses and 10 h of audio data recorded during the two case studies. First, using the Experience Sampling Method [40], the questionnaire data were analysed to evaluate the usability of the prototypes. Second, based on the results of the open-ended-question interviews, the author generated codes from the audio data that were related to the participants’ understanding of the expressive gestures. The interview data were analysed using thematic analysis [38]. Initial codes were generated and refined through iterative analysis to produce coherent themes, which were further refined to establish findings that contribute to the exploration of gesture-based experiences and engagement with interactive technologies. The qualitative data comprised audio recorded during the interviews and copies of the artwork created during the experience. To maintain the anonymity of the participants, the author refers to the participants in Experiment 2 as “SP participants”.

5. Results

All 22 participants completed the experience and the questionnaires. The participants’ average experience time was 73 min (range: 45–90 min). The author analysed and listed the main activities, together with the number of participants and the duration of the activities constituting the participants’ experience (Table 2). Drawing on their own cultural backgrounds and occupations, the participants undertook these activities spontaneously. These five activities were mainly divided into two types: (1) collaborative activities that needed two participants to accomplish their gesture-based experiences and (2) interactive game experiences in which the participants used gesture-based expression to interact with each other, expressing a specific meaning. Fourteen of the participants (64%) wanted to try the experience again, mainly because they wanted to attempt more gestures or other types of artwork creation. Of these 14 participants, four wanted to invite friends to collaborate in the experience, three wanted to spend more time experiencing the prototype at home, three wanted to try new ideas for interacting with the gestures, such as finger dancing, two wanted to explore the environment more, and two wanted to try the experience again with music for inspiration. Of the eight remaining participants (36%) who did not want to try again, four thought that the prototype was difficult or confusing to operate, two stated that they wanted to have more choices of screen backgrounds and trace colours, one did not want to try again because the experience was boring, and one did not give any reason.
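As a quick arithmetic check on the retention figures, the reported counts can be tallied as follows. The counts are taken from the paragraph above; the helper `share` and the variable names are hypothetical. Rounded to the nearest whole percent, 14 of 22 participants is about 64% and 8 of 22 is about 36%.

```python
def share(count, total):
    """Percentage of `total` represented by `count`, to the nearest integer."""
    return round(100 * count / total)


total_participants = 22

# Reasons given by participants who wanted to try the experience again:
# invite friends, more time at home, new interaction ideas,
# explore the environment, try again with music.
wanted_again = [4, 3, 3, 2, 2]

# Reasons given by participants who did not want to try again:
# difficult or confusing, wanted more backgrounds/colours, boring, no reason.
not_again = [4, 2, 1, 1]

yes_count = sum(wanted_again)
no_count = sum(not_again)

print(yes_count, share(yes_count, total_participants))
print(no_count, share(no_count, total_participants))
```

The two groups add up to the full sample of 22, so the breakdown of reasons is internally consistent.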

In the following sections, the author uses thematic analysis of the participants’ answers to the open questions, provided as audio recordings, to examine the key features of the participants’ experiences with the prototype application and their reflections on using interactive technology to support their expression and art collaboration. The findings reflect three areas of interest: (1) the story in the gestures, (2) dance and calligraphy in the gesture-based experience, and (3) emotional expression in different places.

5.1. Stories in the Gestures

During the engagement in Experiment 2, the author compared the participants’ experiences with those of the participants in Experiment 1. SP15 and SP16 tried storytelling and story-making in their experience, rather than only using gestures to express a simple interpretation. For instance, SP16 provided her thoughts:

“Actually, I drew a picture that described my latest journey in India, but I didn’t tell him what I had drawn. However, he still understood the meaning of my drawing very quickly.”

In addition to sharing stories during their engagement, the participants also engaged in a form of storytelling interaction. For example, SP17 stated:

“I understood what she wanted to tell me; she is referring to her experience in India. However, I did not tell her that I understood. I used my gestures to draw a moon, windows, a bed, and so on to tell her I was missing her.”

Although using one’s voice to tell a story is an easier method, SP1 preferred to use freewheeling gestures and movements to intuitively express stories with visual images. In SP1’s words,

“For me, I prefer this way to tell a story; I don’t have to draw professionally like a real artist, but this application makes it easier and makes me feel freer to tell my stories. Both of us were engaged in these stories, not just telling and listening.”

Reflecting on the storytelling process, SP5 commented further on the gesture-based operation:

“I would like to use my hands and my body as a brush to express whatever I want because it is very coherent. This is very important for our experience.”

5.2. Dance and Calligraphy

Some of the participants with a fundamental understanding of dance or performance carried out further exploration during their engagement. They tried to dance with their group members, and the gestures created by dancing were shown on the screen, thus creating an artistically inspired image and atmosphere, as explained by SP3 and her group partner:

“We both danced spontaneously; then, we just felt like the traces looked so good. For example, when we danced around, the traces wrapped around our bodies like a magical aura or halo of some sort; it was stunning.”

Moreover, SP4 mentioned that every dance move acted as a brush stroke with which they created a piece of calligraphy:

“When I was dancing, I felt as though there was an invisible brush in my hand that followed my every move. I imagined my movements acted the way a Chinese paintbrush did upon touching paper. When I stopped dancing, I watched the traces, and it seriously looked like Chinese calligraphy or something like that because there was ‘false and true’ in the artwork due to the vividness of the brush strokes my movements had made.”

In the participants’ engagement, they were producing an artistic creation through their dance and performance. These two different forms of art were combined to become another type of hybrid visual performance. In SP1’s words,

“I would like to try this new approach to performing on stage. When we are dancing, the audience can also see the traces of our movements. A series of layers piled together would be really artistic. The rhythm and cadence would both have been mixed in the artwork.”

5.3. Multi-Place Interaction

In the gesture-based capture experience, every participant was offered a Kinect device to capture their gestures and allow them to appear on the screen. However, the two participants in each group did not have to remain in the same room or place to interact with each other; their locations did not limit the gesture-based interaction. Given this point, SP6 felt that interacting with other participants in different places could broaden the possibilities of visual communication, and elaborated as follows:

“I think that this is important for me because we do not have to stay together to interact. We can have a good interaction in the same image; however, we are actually in different rooms. This is fantastic.”

The participants also discussed whether gesture-based expression could be used as a tool with social media, which would give users the ability to conduct online artistic interaction, as SP8 explained:

“This application could be connected with social media for the art lovers out there. The gestures could express more information or meaning, even like a message.”

Some participants with an artistic background, such as SP10 and SP11, preferred to use the gesture-based interaction for collaborative artwork creation. SP11 explained:

“This application has inspired me to create artwork with other artists online; even artists who are from other countries. We do not have to be at the same place, but we can use this application to create artwork together.”

6. Discussion

On the basis of the findings of the two experiments, the author elaborates on the contributions of the present study to gesture-based experience and engagement: (1) a set of features that characterise the participants’ gesture-based interaction, (2) two main expression approaches found in the participants’ experiences, and (3) a series of case analyses that explore the multiple art forms in the gesture-based interaction. The goal is to present the analyses and findings from the two design studies and to summarise the features and expressions in gesture-based interaction.

6.1. Three Features of Gesture-Based Interaction

Based on the results of Experiments 1 and 2, the author analysed the participants’ experiences and interview transcripts to explore the features and needs in the area of gesture-based expression with the support of interactive technology [2,3]. Visual gestures were frequently mentioned in the participants’ interviews: most of the participants expressed considerable interest in watching how the traces of their gestures were featured on the screen. These visual traces gave the participants time to appreciate details of their gestures that they had been unable to observe before. Through this method of appreciation, the participants were also able to compare the subtle differences between their traces and those of others, which developed into an open discussion within the group. From the participants’ communication about their gesture-based traces, the author also noticed that the visual formation of the traces caught the participants’ attention. In other words, the participants’ focus was not limited to appreciating the gesture-based traces themselves; they also expected to appreciate how their gestures were aesthetically displayed on the screen.

On the basis of this feature, the participants expressed their primary need regarding visual gestures: immediacy. In Experiment 1, the participants expected their gestures to be presented on the screen immediately and wanted the resulting traces to be displayed for a longer period of time. In contrast, after completing the more complex gesture-based experiences in Experiment 2, the participants stated that they preferred the traces to be made easier to see through the use of colours or shapes. The author supposed that different colours and styles of traces, combined with a specific duration for which the traces were displayed on the screen, would create a different atmosphere and experience for the participants. For example, the speed at which gestures are made and the time sequence of the traces could be used to develop a different display for gestures on the screen, one that does not simply show movement in real time.

The third main feature was the multi-experience space. Collectively, the participants indicated that they preferred to have gesture-based interaction with participants in other places. Building an online platform that engages more participants in collaborative artistic creation could potentially offer more possibilities for improving their gesture-based interaction and engagement with a diverse spectrum of people. In Experiment 2, the author utilised the multi-experience space and multi-screen display to help the participants to achieve collaborative gesture-based interaction. This further broadened the usability of the prototype system, offering the participants more possibilities and encouraging them to explore the gesture-based interaction by themselves. In a future study, the author intends to deploy the prototype from Experiment 2 in different locations to explore how users use gestures to interact with each other. For instance, such a study could examine how to capture professional dancers’ live performances and how to display the resulting gesture-based traces on a screen in another space, such as a gallery, to provide audiences with an immersive experience.

6.2. Emotional Transmission and Storytelling

In this section, the author discusses two main expression approaches in gesture-based interaction: emotional transmission and storytelling. In Experiment 1, the participants repeatedly conducted simple information transfer and symbolic emotional expression during their experiences. For example, some of the participants used gestures to express romantic love. This sort of emotional expression originated from the participants’ spontaneous activities, and it was one of the main reasons that the participants continued with their engagement and appreciated the process more actively. In Experiment 2, the application enabled more complex gesture-based interaction and longer engagement, and the participants did not have to be in the same location to conduct their experience. Rather, the participants tried to express their emotions to other participants in different locations. The participants did not expect to express most of these emotions verbally. Therefore, some otherwise inexpressible emotions may be easier to convey through gesture-based interaction. It should be emphasised that linguistic expression was not restricted during the participants’ experience. Thus, gesture-based interaction could be a potential design direction for supporting the expression of personal emotions over long distances [10,28]. For instance, gesture-based interaction could support the expression of romance for couples in long-distance relationships.

The second approach is storytelling, whereby the participants can easily turn their imaginative plots and interpretations into gesture-based artwork that tells their stories. In Experiment 2, the participants revealed a desire to develop the shared visual stories further. During the engagement, the participants appreciated the aesthetics of their storytelling artwork. In addition, the layout of the visual images, the display styles of the traces, and the colours of the traces were key elements that the participants considered. The author noticed that gesture-based interaction in storytelling was more complex and that the resulting gesture-based artwork was more artistic and complete. Further, the participants engaged actively in collaborative storytelling. The storytelling in gesture-based interaction was not limited to one participant gesturing and another participant appreciating; the participants much preferred to combine their stories in order to develop gesture-based artwork. This requires a more flexible form of operation (e.g., full-body movement capture) to be offered to users to allow for the expression of complex emotions.

6.3. Multiple Art Forms in Gesture-Based Interaction

The features and expression approaches of gesture-based interaction have been discussed in previous sections. Based on these discussions, the author will specifically reflect upon the different art forms that have been combined into gesture-based interaction in this section. This includes (1) the traces within dance movements, (2) the gesture-based traces within Chinese traditional calligraphy, and (3) the traces of artwork mixed with dance movements.

First, those participants with a dance background believed that the use of dance movements and gestures would create a more comfortable environment that would engage them further. The traces that were displayed on the screen in real time were also critical elements, giving the participants an opportunity to observe the subtleties of their movements. Moreover, the positions of the traces on the screen created a sense of space in which to dance. Thus, supporting a more complex pattern of collaboration would enhance the group experience. Second, the effects of the displayed traces matched those of the “false and true” in traditional Chinese calligraphy. The speeds with which the participants made their gestures created different colours, gradations, and textures, which then inspired some participants to use gestures to create calligraphy. In particular, in the creation of cursive handwriting (in Chinese calligraphy, characters executed swiftly and with strokes flowing together), the participants used their hands and wrists in place of the ink brush to experience Chinese calligraphy. According to the participants, the effect of the display was satisfactory. Thus, gesture-based interaction could be an alternative means of engaging the participants in the creation of artwork. Third, much of the participants’ discussion explored the use of dance movements to create a painting or another form of artwork, thus expressing their individual thoughts. To a certain extent, this idea integrates the first and second themes mentioned in this paragraph. However, the author noticed that the different cultures combined within the gesture-based interaction were closely related to the cultural backgrounds of the participants themselves. The use of dance and calligraphy is an example of cultural fusion through gesture-based interaction. Other cultures could evidently be involved alongside the participants’ own cultural backgrounds or personal experiences.

On the basis of these three different combinations of approaches to gesture-based interaction, the author believes that the visual effects of the display within the participants’ gesture-based interactions could be further refined by drawing on different performance arts and graphical arts [30,31]. Gesture-based interaction could also provide a connection that supports different types of art, or participants from different cultures, in creating artwork collaboratively. This is a potentially rich area of future research, and it can only be accomplished with further design exploration. The author would like to emphasise that the different art forms are closely linked to the cultural and occupational backgrounds of the participants. In Experiment 2, multiple backgrounds (e.g., dancing, performance arts, painting, and calligraphy) inspired the participants to spontaneously embed their own imagination and creativity into the gesture-based engagement. Thus, the author supposed that the cultural and occupational backgrounds of the participants may shape how they explore the use of the prototype and system in the test phase. For the specific experience method, the author divided the participants into single- and multiple-background groups (e.g., a professional dancer and a Chinese calligrapher would constitute one multiple-background group), which expanded the cultural dimensions of the gesture-based interaction. For instance, the traces of artwork mixed with dance movements were an interesting finding from the group with multiple backgrounds. In a future study, the author will further explore the use of different art forms in the gesture-based interaction.

7. Conclusion

This paper has reported two design studies using digital devices and interactive applications to support gesture-based expression and engagement. In Experiment 1, the author reported the participants’ expectations of how to create artwork collaboratively, which led to the development of a productive framework to design an interactive application for the next study. The author believed that displaying the traces of the gestures on the screen for roughly 1–2 s after the participants had completed their gesture would give the participants time to review their own gestures. Additionally, because of the lighting in the room, the lenses of the digital camera and PC camera limited the participants’ exploration of the space. In Experiment 2, the author explored some of the key features of the participants’ experiences in further detail while using a prototype application, as well as delving further into their reflections on using interactive technology to support their expression and art collaboration. On the basis of these findings, the author reflected upon (1) three features of gesture-based interaction: the participants preferred to engage with visual traces that were displayed for specific times, with multi-experience spaces, in their gesture-based interaction; (2) two main expressional methods: the gesture-based interaction could effectively support the participants’ nonverbal emotional expression, and the participants greatly preferred to combine their personal stories and emotions to develop their own gesture-based artworks; and (3) three art forms that were produced by the participants’ gesture-based interaction: dance movements, Chinese traditional calligraphy, and the traces of artwork mixed with dance movements. The participants’ varied cultural and occupational backgrounds could spontaneously shape their own artistic creation.
Different features of gesture-based interaction, integrated into multiple expressional methods, offered the participants more possibilities for displaying their own imagination in their gesture-based experience, and helped them to connect with their cultural and occupational backgrounds and thus freely explore their individual gesture-based expressions. The author supposed that the above-mentioned features and methods could be used as design elements in the design phase. Combining these design elements with each other could potentially offer a personalised design framework that better satisfies the participants’ individual backgrounds and needs. However, the design elements are not limited to those reflected upon in this study. In this paper, the author has only listed and analysed three items of digital equipment and several representative participants who were engaged in the graphic arts and performance arts. Thus, in a future study, the design elements could be further extended through the design processes, and a greater number of participants with specific backgrounds could be incorporated to support the individualised gesture-based expression of the participants themselves.