Abstract

Tangible technologies are considered promising tools for learning, by enabling multimodal interaction through physical action and manipulation of physical and digital elements, thus facilitating representational concrete–abstract links. A key concept in a tangible system is that its physical components are objects of interest, with associated meanings relevant to the context. Tangible technologies are said to provide ‘natural’ mappings that employ spatial analogies and adhere to cultural standards, capitalising on people’s familiarity with the physical world. Students with intellectual disabilities particularly benefit from interaction with tangibles, given their difficulties with perception and abstraction. However, symbolic information does not always have an obvious physical equivalent, and meanings do not reside in the representations used in the artefacts themselves, but in the ways they are manipulated and interpreted. In educational contexts, meaning attached to artefacts by designers is not necessarily transparent to students, nor interpreted by them as the designer predicted. Using artefacts and understanding their significance is of utmost importance for the construction of knowledge within the learning process; hence the need to study the use of the artefacts in contexts of practice and how they are transformed by the students. This article discusses how children with intellectual disabilities conceptually interpreted the elements of four tangible artefacts, and which characteristics of these tangibles were key for productive, multimodal interaction, thus potentially guiding designers and educators. Analysis shows the importance of designing physical-digital semantic mappings that capitalise on conceptual metaphors related to children’s familiar contexts, rather than using more abstract representations. 
Such metaphorical connections, preferably building on physical properties, contribute to children’s comprehension and facilitate their exploration of the systems.

1. Introduction

Tangible and embodied interaction is a paradigm of human–computer interaction that brings computation and information more fully into the physical world, reconsidering the nature and uses of computation, capitalising on people’s physical skills and familiarity with objects from the physical world, and thus providing an interaction paradigm closer to what is considered ‘natural’ [1,2]. This paradigm is rooted in the theoretical frameworks of situated cognition, phenomenology, and embodied cognition, moving away from the positivist cognitive perspective that posits a strong separation between the mind and the external world. Embodied interaction is predominantly based on the idea that human thinking and experience of the world are tied to action, and cannot be separated from the body [1,3].

In very general terms, tangible systems consist of hybrid physical-digital representations that usually share the following basic paradigm: (i) the user manipulates physical objects via physical gestures; (ii) a computer system detects this; and (iii) it gives feedback accordingly [4]. Although the definition of tangible interfaces is still open to interpretation, the research community has reached a general consensus that an artefact is considered a tangible system when it embeds digital data (e.g., graphics and audio) in material forms (i.e., physical objects), yielding interactive systems that are computationally mediated, but generally not identifiable as ‘computers’ in the traditional sense [2,5], and where the distinction between ‘input’ and ‘output’ is less obvious and sometimes nonexistent [2,3,4]. Users act within and touch the interface itself, bodily interacting (within the physical space) with physical objects that are coupled with computational resources, and that can provide immediate and dynamic haptic, visual, or auditory feedback to inform users of the computational interpretation of their actions [3].
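The three-step paradigm described above can be illustrated with a minimal sketch of a detect–interpret–feedback cycle. It is not the implementation of any system discussed in this article; the names `TangibleEvent`, `interpret`, and `run_cycle` are hypothetical and serve only to make the steps explicit.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class TangibleEvent:
    """A physical manipulation detected by the system (hypothetical structure)."""
    object_id: str
    action: str  # e.g. "placed", "moved", "removed"


def interpret(event: TangibleEvent) -> str:
    """Step (ii): turn a detected manipulation into a computational interpretation."""
    return f"{event.object_id} was {event.action}"


def run_cycle(events: List[TangibleEvent], feedback: Callable[[str], None]) -> None:
    """Steps (i) to (iii): for each manipulation, interpret it and give feedback."""
    for event in events:
        feedback(interpret(event))


# Usage: the feedback channel here is a plain list; in a real tangible system
# it would be visual, auditory, or haptic output.
messages: List[str] = []
run_cycle(
    [TangibleEvent("torch", "placed"), TangibleEvent("green block", "moved")],
    messages.append,
)
```

Passing the feedback channel as a function keeps the sketch neutral about the output modality, which in tangible systems may be haptic, visual, or auditory.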

Not surprisingly, education is one of the main areas of application of tangibles, as their specific properties and capabilities represent promising novel opportunities for learning [3,6]. The richer sensory experiences, through the interweaving of computation and physical materials, may extend the intellectual and emotional potential of children’s artefacts and integrate compelling and expressive aspects of traditional educational technologies with creative and valuable educational properties of physical objects. By providing hands-on experimentation with embedded computer technologies, tangibles build on the alleged benefits of educational manipulatives and constructivist learning [7], such as the manipulation of physical objects for supporting and developing thinking [8] and the reinforcement of links between the concrete and the symbolic through mappings between digital representations and physical objects [9].

These characteristics are particularly beneficial for children with intellectual disabilities. According to Resnick [10], tangibles can provide conceptual leverage that enables children to learn concepts and develop schemata that might otherwise be difficult to acquire. Analogies between a simulated abstract behaviour and real-life examples are meaningful to facilitate children’s comprehension, especially for children with learning disabilities [11]. Such characteristics, along with the multimodal interaction and the familiarity of the physical devices, make tangibles particularly intuitive and accessible [11,12].

However, although tangible systems make it possible to indicate meaning and function through how objects appear perceptually, not all tangible systems are designed in that way. This brings into play aspects of metaphorical correspondence [13], which play a very important part in children’s interpretation and comprehension (and thus learning), as well as the role of affordances in allowing (or inviting) actions that have sensible results, improving the mapping of actions to effects [3]. Tangible technologies still represent a fairly novel paradigm of human–artefact interaction [3], and any learning gains are reported in rather hesitant and informal accounts of empirical studies, as most findings consist of anecdotal descriptions of children’s enjoyment and engagement in collaborative discovery activities with the new technologies. In particular, studies that analyse intellectually disabled children’s interactions with tangibles are still scarce [14,15,16,17,18].

This article analyses how children with intellectual disabilities conceptually interpreted the representational elements of four tangible artefacts and which characteristics of the tangible systems were key for children–tangible interaction. In the next section, we discuss how conceptual metaphors are represented in the design of tangible artefacts. Then, we explain the method followed for running empirical studies and analysing the multimodal data. Next, we present the results of the qualitative analysis of child–tangible interaction with a focus on the comprehension of conceptual metaphors, followed by a discussion, and then conclude the article. The findings can feed into the design and use of tangible artefacts with children with intellectual disabilities, potentially being useful for developers and educators.

2. Conceptual Metaphors in Tangible Systems

Metaphors became the basis of interface design with the appearance of graphical user interfaces [1]. According to Dourish, “metaphor is such a rich model for conveying ideas that it is quite natural that it should be incorporated into the design of user interfaces” [1] (p. 143). One of the most commonly stated purposes of tangibility is that such interfaces provide ‘natural’ mappings that employ spatial analogies and adhere to cultural standards [3]. Tangible interaction increases the ‘realism’ of artefacts, allowing users to interact directly with them, through actions that correspond with daily practices within the non-digital world (also called reality-based interaction) [19]. Within the tangible paradigm, people interact with physical objects that convey information through their encoded symbolic meaning, as well as through their physical properties [1]. Such physical representations have associated meanings relevant to the context of the system, conveyed through affordances that guide the user interaction [1,20].

Tangibles can implement space-multiplexing, where different physical objects can represent different functions or data entities, through persistent mappings that enable the designer to take advantage of shape, size, and position of physical devices, as they do not need to be abstract and generic but can be strong-specific, dedicated in form and appearance to a particular function or digital data [3].

However, symbolic information does not always have an obvious physical equivalent [21]. Several frameworks attempt to organise and classify the types of links between physical objects and their conceptual meanings. The taxonomy of Holmquist et al. [22] presents three categories of physical objects in terms of how they represent digital information; namely, containers (generic objects that can be associated with any type of digital information); tools (used to actively manipulate digital information, usually by representing some kind of computational function); and tokens (physical objects that resemble the information they represent in some way, and thus are closely tied to it). Tokens are thus the only category where there is a relationship between physical appearance and associated digital information [22]. Price et al. discuss the metaphors involved in tangible systems in terms of ‘correspondence’ [23]. Of particular interest here is ‘physical correspondence’, which refers to mappings between physical properties of the objects and associated learning concepts. Physical correspondence is symbolic when the object has few or none of the characteristics of the entity it represents, and literal when the object’s physical properties are closely mapped to the object it is representing.

An important point, though, is that meaning does not reside in the representations themselves, but in the ways in which they are manipulated and interpreted [1]. An action-centric view focuses on what can be done with the resources, rather than on the resources themselves and the information they are meant to represent. This perspective argues for tangibles as resources for action, while criticising the focus on representations for being ‘data-centric’ and thus lacking contextualised interaction analysis [24]. Although a focus on resources for action allows the creation of complex and powerful systems, mappings between representations and meanings become less clear, which can be problematic in educational contexts, particularly in the case of children with intellectual disabilities. Still, it is important to consider that meaning attached to artefacts or assumed by designers is not necessarily transparent to students, nor interpreted by them as the designer predicted [25]. In this sense, Antle proposes that physical-digital semantic mappings should be analysed in the context of children’s comprehension of things in various representational forms, considering the reciprocal nature of physical and mental representations [26]. This is reinforced by Lave and Wenger’s view that using artefacts and understanding their significance interact in the construction of knowledge within the learning process [27], and by Meira’s consequent argument that it is important to study the use of the artefacts in contexts of practice and how they are transformed by the students [25].

Within this theoretical frame, this study specifically aimed to analyse children’s comprehension and interpretation of metaphorical representations in tangible interaction, identifying potential consequences of certain design choices for the learning of students with intellectual disabilities.

3. Method

This research is aligned with an interpretivist epistemology and a socio-constructionist philosophical perspective, taking an inductive approach to develop explanations by moving from observations to theory [28]. It is a descriptive and exploratory kind of research that aims to investigate the ‘how’ and ‘why’ of phenomena [29]. The influence of the researchers’ context and background is acknowledged as affecting and informing the research [30], and findings result from a combination of the understanding of the researcher and of those being researched. The overall goal of the research is to investigate how different characteristics of tangible interaction may support children with intellectual disabilities to productively engage in a process of discovery learning. In this article specifically, we present an analysis focused on children’s interpretation of meanings and metaphors from multimodal interaction in the domains of light and colour, music, and spatial positioning and orientation.

3.1. Participants

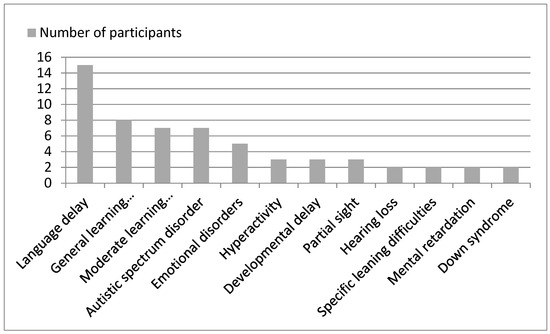

In line with a socio-constructionist perspective (which emphasises the importance of culture and context [31], and sees disability as a socially contrived construct derived from social values and beliefs [32,33]), and aiming to address the context of schools, the children who contributed to this research were selected on the basis of being considered intellectually disabled by their school’s staff. The selection criteria were therefore the teachers’ expertise and opinions, within the context of the research. Although reasonably heterogeneous (Figure 1), this group shares key characteristics and, in the reality of schools, its members must be treated as a group by teachers in the learning process, which makes the group directly relevant to the phenomenon investigated.

Figure 1. Main difficulties of participant students. (Partial sight and hearing loss were minor physical impairments that accompanied intellectual disabilities, and not the sole reason for including these children in the studies, as the research does not focus on physical disabilities.)

The age of the participants ranged mainly from 11 to 13 years. They were at the end of primary or the beginning of secondary school. Forty-six children (31 boys and 15 girls) participated, from five different schools: three mainstream schools (one primary and two secondary) and two special schools (both secondary). Schools’ and participants’ names are not revealed for confidentiality reasons.

Ethical principles followed in the present research included providing written and oral information about the project for schools and parents; obtaining informed consent from parents and legal guardians (with children’s agreement); and guaranteeing anonymity, confidentiality of information, and the right to withdraw at any time [34]. The present research adhered to the British Educational Research Association (BERA) Professional Ethics Code, and was approved by the Research Ethics Committee of the Institute of Education before any kind of data collection was performed. In addition, the researcher underwent a Criminal Record Bureau check, as the research involved children.

3.2. General Procedure

Empirical sessions consisted of children with intellectual disabilities undertaking exploratory activities using tangible technologies, facilitated by the researcher, in dedicated rooms at the university. Each session (with each tangible) lasted about 15 min, and students worked in dyads or triads. Interaction was exploratory: the facilitator set a general goal or question to be explored and gave occasional guidance when needed. Students were briefly informed of how the system worked and what it was about, contextualising the theme within everyday life, along with a short practical demonstration by the facilitator, showing the basic functioning of the system and the mode of interaction. Then, the facilitator asked the students to explore the system to try to find out what it was showing and what they thought it meant. Some prompting was needed when the students were reluctant to explore by themselves, for instance suggesting that they use a specific object and see what happens (specific questions and instructions given to students are presented in the findings section). Near the end of the session, the facilitator asked the students to describe what they were doing, and explain what they thought was happening in the system and what this meant.

All sessions were video-recorded. Video technologies allow detailed recordings of facts and situations, and capture actions and processes that may be too fast or too complex for the human observer watching the situation develop in real time. Although some information is inevitably lost in the recording process, a situation captured on video is more detailed, complete, and accurate than one that is only observed, besides being available for interpretation and analysis at any later time [35,36]. In comparison with other data collection methods, video recording includes the non-verbal parts of interaction that are not captured through audio recording; allows registering real-time actions instead of having accounts of actions from a retrospective point of view; and provides the opportunity for capturing more aspects and details than in observation with note taking, thus reducing the selectivity of data construction and broadening the possibilities of analysis [36]. This is particularly relevant when analysing multimodal interaction. Video data is not primarily concerned with talk, but more generally with ways in which the production and interpretation of action relies upon spoken, bodily, and material resources, that is, how people orient bodily, grasp, and manipulate artefacts, and articulate actions within their activities [37]. The use of recorded data thus mitigates the limitations and fallibility of ‘in person’ observation, providing some guarantee that analytic considerations will not arise from selective attention or recollection [38]. It was thus fundamental to keep a detailed record of the sessions so as to revisit the data to undertake robust qualitative analysis.

3.3. Analytical Approach

A premise of the present research was that the spoken and bodily conduct of the participants was inseparable from, and reflexively constituted, material features of the environment. The focus of the investigation was the physical interaction of children with intellectual disabilities with tangible technologies, that is, the different actions performed by the children with such artefacts. Although talk was considered in the analysis, actual verbal utterances of the participants were rare in many cases because of this population’s difficulties in verbalising their thoughts and even in articulating words. Thus, much of the analysis had to rely on participants’ actions and bodily postures.

Video data is multimodal and thus very rich and complex when compared with text, containing information on several levels (e.g., speech and visual conduct, gesture, facial expressions, representation of artefacts, structure of the environment, signs, and symbols). More often than not, time and/or cost constraints make a detailed analysis of the full video prohibitive. Data were therefore analysed according to meaningful ‘chunks’, identified in terms of causal, behavioural, and thematic structures, and transcribed at a sufficient level of detail for the research aims. Transcription does not replace video recording as data, but provides a resource that allows the researcher to become more familiar with details of the participants’ conduct, clarifying what is said and done, by whom and in what ways, and exploring potential relations between multimodal aspects of the interaction [37]. A whole-to-part analytical inductive procedure was adopted to identify major events, transitions, and themes [35].

As a result of such an approach, four general themes emerged from data: types of digital representations [17]; physical affordances [17]; representational mappings [18]; and conceptual metaphors. This paper presents a qualitative analysis of children’s interpretation of conceptual metaphors, for each tangible used in the empirical studies.

4. Analysis and Findings

Four tangible artefacts were used in this research: the LightTable, the drum machine, the Sifteo cubes, and the augmented object. In this section, each is described in terms of its technical functioning and representational elements, followed by an analysis of how children interpreted the metaphorical representations embedded in its design.

4.1. LightTable

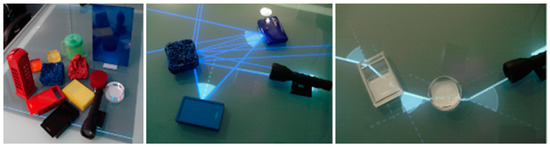

LightTable (Figure 2) is a tangible tabletop designed as part of the Designing Tangibles for Learning project (2008–2010), at the London Knowledge Lab, to support young students learning about the behaviour of light, in particular basic concepts of reflection, transmission, absorption and refraction of light, and derived concepts of colour. The setup of the tangible tabletop is fairly similar to that of reacTable, using similar technology for object recognition. The frosted glass surface is illuminated by infrared light-emitting diodes (LEDs), which enable an infrared camera, positioned underneath the table, to track the objects placed on the table surface using the reacTIVision software [39]. The objects that serve as physical interaction devices are handcrafted and off-the-shelf plastic objects. When distinct objects are recognised by the system, different digital effects are projected onto the tabletop. The torches act as light sources (causing a digital white light beam to be displayed when placed on the surface), and objects reflect, refract, and/or absorb the digital light beams, according to their physical properties (shape, material, and colour).

Figure 2. Different shapes, materials, and colours of the objects (left) and the effects some of them produce (centre and right) in the LightTable.

LightTable was designed based on literal physical correspondence; all interaction objects have a role in the simulation that is identical to their role in the ‘real world’, that is, a green, smooth, opaque block has in the system the physical properties of a green, smooth, opaque block, and nothing else. The digital effects illustrate real phenomena that occur with such objects in the physical world, but that are not visible to the human eye; for example, a green light beam being reflected off a green block (which is why an object is seen as green, but the process of reflection is not naturally visible in the physical world). Thus, the digital simulation of LightTable is based on the physical characteristics of each interaction device, which have persistent individual behaviours. Such persistence enables taking advantage of shape, size, and position of physical devices, as they are dedicated in form and appearance to a particular function or digital data [3].
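The idea of persistent, literal mappings can be sketched as a function that derives the digital effect purely from an object's physical properties. The sketch below is a simplified illustration and not the LightTable implementation: the `TableObject` fields, the function name, and the number of scattered beams are assumptions, and only the reflection of a white beam off opaque objects is modelled.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class TableObject:
    """Physical properties of an opaque interaction object (illustrative fields)."""
    colour: str
    rough: bool


def reflect_white_beam(obj: TableObject, scatter_count: int = 5) -> List[str]:
    """Return the colours of the beams reflected when a white beam hits the object.

    Assumed, simplified rules:
    - a smooth object reflects a single beam of its own colour;
    - a rough object reflects several beams of its own colour in many directions
      (scatter_count is an arbitrary illustrative value).
    """
    beams = scatter_count if obj.rough else 1
    return [obj.colour] * beams


green_block = TableObject(colour="green", rough=False)
rough_blue = TableObject(colour="blue", rough=True)
```

Because the effect depends only on the object's own properties, the same object always behaves in the same way wherever it is placed, which is the persistence the design relies on.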

In the sessions with LightTable, students were told that the system was about light and how we see colours, contextualising the theme within their life, and were given a short practical demonstration by the facilitator, showing the basic functioning of the system and the mode of interaction (i.e., placing and manipulating the objects). During children’s exploration of the system, the facilitator prompted them with specific questions according to the configurations they were building with the objects on the table, taking opportunities to discuss the concepts involved.

The physical characteristics of LightTable objects, such as colour, material, texture, and shape (Figure 2), are very concrete and appeal to the senses, and were generally well perceived by the students, i.e., they recognised the differences across the objects. The practical consequences in the simulation, i.e., how light behaved differently according to the physical characteristics of each object, were learned throughout interaction, as shown in the excerpts below (all names used are pseudonyms):

Researcher: and are they all the same, these objects, do they all behave the same?
John: no
David: yes
Researcher: what’s the difference between this one that you’re holding now [the transparent cardholder]
David: this is plastic, that’s rock [one of the blocks].
Researcher: and when you put it on the table, does it behave the same?
John: no, it changes colour

Researcher: why do you think this blue object over there [the rough object] is making a different effect?
David: because it’s a different material.
Researcher: yeah! So what does it do, this different material?
David places a red opaque object on the light beam.
John: huh … I don’t know [picking up the rough object]. Maybe it’s because there’s loads of bits of blue … and it’s reflecting over the bits, it makes loads … different, like … [pointing to the reflected beams in many directions].
David places a green opaque object on one of the torch’s beams. John sees it and picks it up to add to his explanation.
John: whereas the green, it’s only like … it got nothing [showing the smooth surface with his hands], it’s just one colour …
John picks up the rough blue object again.
John: this is the same colour, but it’s got … all over the place, different blues. And when you put it on there, there’s loads of it, like it is on the material.

The excerpts show that David and John noticed the sensory information of the objects: not only colour, but also texture/materiality. This information was salient enough to make itself readily present to the students, and led them to identify the corresponding properties and how they differ across the objects.

Nevertheless, perceiving the physical properties of the materials did not mean that students understood the underlying concepts related to the physics of light. In his analysis of the Illuminating Light system, whose design is related to LightTable, Dourish [1] identified various levels of embodied interaction, with multiple levels of meaning being associated with the objects and their manipulation. According to Dourish, a user might move the physical devices just as objects: to see what happens, to clear them out of the way, and so on. Or, they might choose to move the icons as mirrors and lenses, that is, as the metaphorical objects that they represent in the simulation space. Yet on another level, these metaphorical objects can be used as tools in another domain (in this case, laser holography), and thought of as, for instance, virtual mirrors with the function of redirecting a virtual beam of light [1]. In studies with LightTable, students with intellectual disabilities, for most of the interaction, remained at the first level of embodied interaction, using the physical devices just as objects, to see what happens. This, per se, is an important step in the learning process: first understand what happens and then become capable of making inferences about the underlying concepts. However, children in these studies were not able to move to the next level. The excerpt below typically represents the kind of dialogues and interaction that predominated in the studies. Figure 3 illustrates highlighted passages of the excerpt.

Figure 3. Boys engage in manipulating objects, but make no associations with the conceptual domain. They point both torches to the rough blue object, producing many blue beams (left); one boy points the torch to a smooth red object (centre); torches point to red and yellow objects (right).

Lionel points a second torch to the blue rough object and boys enjoy the effects, as both torches point to it.
Derick: oh look at this, it shines both sides.
Lionel walks to the object area and chooses a different object, the red rectangular object with a hole. He places it on the surface and points the torch to it. He notices the spectrum of colours.
Lionel: look! Multicolour.
Both boys observe for a couple of seconds. Derick places a yellow block on the white beam. Lionel moves it to another white beam. Then Lionel puts the yellow block inside the red object’s hole, and points the torch to it.
Lionel: let’s put this in here.
Boys observe but nothing different happens.
Derick: let’s shine it on the blue now.

The excerpt shows how the boys concentrated on producing a variety of effects and exploring the system, but made no spontaneous conceptual links with the domain of physics of light. Previous studies with LightTable have shown that spontaneous engagement with the concepts did occur during interaction of typically developing children of similar ages with the same tabletop [40]. However, students with intellectual disabilities had great difficulty in transferring the concepts conveyed by the system to the physical world; even when they understood the rules involved in the interaction between the objects, they did not associate them with what happens in the physical world with such objects. In other words, they were not able to generalise and take concepts to ‘another level’ of abstraction. In addition, a drawback of LightTable is the paper tag (fiducial), necessary for the camera to recognise each object. Objects had to be placed with these markers facing the surface. The markers caught the students’ attention, as can be seen in the excerpts below, because they were unfamiliar symbols. The students (correctly) associated them with the technical functioning of the system, but this made students focus on technical aspects instead of conceptual ideas. In other words, when asked for explanations about the phenomena observed, students were not able to separate technical aspects from conceptual aspects, and used both interchangeably, as shown below:

Researcher: so what do you think is happening there, can you tell me what you found out?
Bob: what I think is happening [picks an object and shows fiducial], because of this laser, it’s going through it and it’s like … this little thing in there will … like … maybe there’s all colours in there, and when this touches it, light … they’ve gone to there [placing object on surface] and then just like …
Emma: it takes all the colours and like … they try to form the colours of this [an object] on to there [the surface], and make the colour … they’re like sensors.
Researcher: and what do you think this is about? What is it trying to show you, or teach you?
Bob: It’s trying to show you here that you can form like really good patterns of colours.

Researcher asks the girls what they found out so far and what they think is happening in the system. The girls hesitate.
Donna: when you put this [holds the torch] on, it’s like … a line is like coming through that way [makes the action to demonstrate – her hand goes along the light beam] … and every time you move it … it like … it goes like … hum … left and right [moving her hands to demonstrate].
Researcher: and when it hits an object, what happens?
Donna: well, when you put this [pointing to a red rectangular object] on, the line goes red … because the object is red.

The excerpts show that students’ explanations for what the simulation was showing were mostly technical and pragmatic descriptions of what they observed, and not abstractions and generalisations of concepts. Students could describe what was happening in terms of ‘lines’ and colours, but grounded in very specific, concrete instances observed (i.e., they could say that a red line comes out of a red object, but did not produce general statements such as “an object produces a line of its own colour”). This relates to known difficulties with abstraction and generalisation of children with intellectual disabilities [41,42] and to Vygotsky’s example of a child who knows they have ten fingers on their hands, but is not able to guess how many fingers another person has; in other words, they cannot extract from a concrete object a corresponding sign to be applied to a collection of objects in the same class [43].

4.2. The Drum Machine

In the drum machine system, unlike LightTable, the physical form of the objects did not hold any metaphorical correspondence to the conceptual object (percussive sounds produced). The d-touch drum machine is part of ‘audio d-touch’, a collection of applications for real-time musical composition and performance [44], controlled by spatially arranging physical objects on an interactive surface, which consists of a simple printed piece of paper. With d-touch, the spatial arrangement modifies sound, and a surface is repeatedly scanned to identify the location of a physical object and determine the sound to be played. The vertical position of the objects determines the type of sound that will be triggered, while the horizontal position determines the timing of the sound trigger, within a computer loop. A sound is thus played for each object placed on the surface, repeatedly, within a loop. This allows the user to create percussion-based musical compositions just by placing the objects on the surface. However, no digital effects are projected onto the surface, nor does it react in any visual way. The only feedback given by the system is auditory. Also, the blocks do not communicate with each other—the only identified parameter is their location on the surface.
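The position-based mapping described above can be made concrete with a minimal sketch. This is not the d-touch implementation: the row labels in `ROWS`, the number of time slots in `STEPS`, and the normalised coordinates are all assumptions introduced for illustration. The point it shows is that only a block's location enters the computation, never its identity.

```python
from typing import List, Tuple

# Hypothetical sound rows, top to bottom; the actual d-touch sound set is not given here.
ROWS = ["cymbal", "hi-hat", "snare", "kick"]
# Assumed number of time slots in one pass of the loop.
STEPS = 8


def schedule(blocks: List[Tuple[float, float]]) -> List[List[str]]:
    """Map block positions (x, y), each normalised to [0, 1], to sounds per time slot."""
    loop: List[List[str]] = [[] for _ in range(STEPS)]
    for x, y in blocks:
        # Horizontal position selects the time slot within the loop.
        step = min(int(x * STEPS), STEPS - 1)
        # Vertical position selects the type of sound.
        row = min(int(y * len(ROWS)), len(ROWS) - 1)
        loop[step].append(ROWS[row])
    return loop


if __name__ == "__main__":
    # Two interchangeable blocks: one top-left, one near the bottom at mid-loop.
    for slot, sounds in enumerate(schedule([(0.0, 0.0), (0.5, 0.9)])):
        print(slot, sounds)
```

Because `schedule` receives positions only, swapping two physical blocks between the same two locations yields an identical loop, which is the property of the system that the blocks' generic appearance does not communicate.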

In the drum machine, there is a distance between the interaction instruments and the conceptual object: the interaction devices are controllers for the abstract object of sound. In addition, the physical devices are identically and generically shaped, and their appearance does not indicate their meaning or function; according to the d-touch designers [44], the objects could be anything, provided they were tagged with the symbols shown in Figure 4, and they serve as tools to trigger sounds. The interactive area does not have an associated conceptual metaphor either: it is simply a graphical arrangement that enables the mappings between position and sounds, and thus allows the user to compose music. There are therefore no visual associations with a real drum machine or the types of sounds it can produce; the representations are all very abstract. For the students, the piece of paper and the blocks were not meaningful; they could not relate them to what they knew from their own experiences with music.

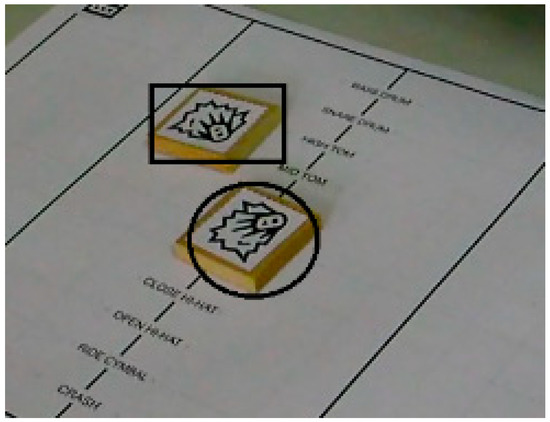

Figure 4. The drum machine’s customised physical components.

Another important drawback was that meaning was embedded in the devices’ position in the interactive area and not in their shape or appearance; that is, the sounds played were determined by the presence of blocks in specific locations. Sounds were mapped to the positions of objects, not to the objects per se. Students were not able to understand this mapping, nor that the blocks did not carry meaning in themselves but were just triggers for sounds according to where they were placed. Instead, students thought that the sounds depended on each block; that is, to produce a cymbal sound, for example, one had to place a specific block on the surface, because that block was the trigger for that sound. The excerpt and Figure 5 below illustrate the mapping between sounds and blocks made by the students:

Researcher: Are the sounds all the same?

Andrew: No

Researcher: Why do you think there are different sounds?

Andrew: because of the blocks …

Researcher: Right … what if … let’s try something here.

The researcher takes all the blocks away by pushing them with her hand to the side. All sounds stop.

Researcher: Let’s see if it stops … has it stopped? Yes … So, if I put one block here. Let’s see … [Researcher places a block on the surface]. If I ask you now to produce a sound different from the one I did, how could you do this?

Andrew: another block.

Researcher: Another block? And where would you put it?

Andrew places a block very near the researcher’s block.

Figure 5. Block placed by Andrew (marked with a circle) when asked to produce a different sound from the one produced by the researcher’s block (marked with a rectangle).

4.3. Sifteo Cubes with Loop Loop

Loop Loop, another musical application used in the studies, also has cubes as controllers of sounds, the difference being that they are not mere control devices. Loop Loop is an application developed for the Sifteo cubes, where each cube carries a meaning and has a specific role in the process of composing music. The Instrument cube contains sixteen different types of sounds grouped into four categories. The user can switch between categories by pressing the cube, and listen to each sound by joining the Preview cube with each side of the Instrument cube. To add sounds and thus compose music, the user must join the Instrument cube with the Mix cube. The Mix cube plays, within a loop, all sounds that were added to each of its sides. Sounds added to the same side are played simultaneously. Sounds can also be removed from the Mix cube by joining it with the Instrument cube on the side that matches the sound to be removed. Each cube has a different visual representation (graphics, text, and colours) indicating its function. For example, the Instrument cube has a colour for each category of sound, and the Mix cube has a dashed line going around the sides to indicate the progress of the loop.
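To make the role of the Mix cube easier to follow, the sketch below models it as a small data structure. It is a toy model written for this discussion, not Sifteo or Loop Loop code: the class name `MixCube`, the side names, and the assumption that the loop visits the four sides one after another in a fixed order are all hypothetical.

```python
from typing import Dict, List


class MixCube:
    """Toy model of the Mix cube: each side holds the sounds added to it."""

    # Assumed side names and visiting order; the real loop order is not specified.
    SIDES = ("top", "right", "bottom", "left")

    def __init__(self) -> None:
        self.sides: Dict[str, List[str]] = {side: [] for side in self.SIDES}

    def add(self, side: str, sound: str) -> None:
        """Joining the Instrument cube to a side adds its current sound there."""
        if sound not in self.sides[side]:
            self.sides[side].append(sound)

    def remove(self, side: str, sound: str) -> None:
        """Joining again on the side that holds the sound removes it."""
        if sound in self.sides[side]:
            self.sides[side].remove(sound)

    def loop(self) -> List[List[str]]:
        """One pass of the loop: sounds on the same side play simultaneously."""
        return [list(self.sides[side]) for side in self.SIDES]


if __name__ == "__main__":
    mix = MixCube()
    mix.add("top", "kick")
    mix.add("top", "clap")   # same side as "kick", so played together
    mix.add("left", "bell")
    print(mix.loop())
```

Even in this reduced form, the cube acts as a container of sounds rather than as a representation of any one sound.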

These characteristics should have helped students to establish more meaningful mappings than in the case of the drum machine’s completely abstract interaction devices; however, they proved insufficient. Loop Loop’s physical correspondence was symbolic: the Sifteo cubes are identically and generically shaped and do not exploit physical properties, such as shape or size, to convey meaning. Meaning was conveyed only through visual representations on the cubes’ embedded screens. The lack of difference in physical properties heightens the impression of ‘similarity’ between the cubes, making it less likely that students perceive them as having completely different functions and behaviours. Despite the visual representations on screen, the children manipulated the cubes as if they all performed the same function, showing no signs of perceiving differences in meaning or functionality between them, but rather treating them as identical components of an assembly kit. An illustrative example is given below:

Researcher: Do you like to make music? We’ll try to make a bit of music with the blocks. So, I’m going to explain to you how it works.

Researcher explains and demonstrates with the cubes. Paul does not concentrate for very long during the explanation. After finishing the explanation, the researcher suggests that the boys try to make some music. Nathan joins one of the cubes with the cube Paul is holding.

Paul: use that …

Nathan joins his two cubes in different ways, and joins one cube with Paul’s. Sounds are played now and then. Nathan takes all three cubes and tries different spatial configurations. Paul shakes a cube. Boys press the cubes (which pauses the system).

Researcher: tell me when you’ve finished your tune and we’ll listen to your tune.

Nathan tries quickly many different ways of joining the cubes.

In addition, even though the cubes had different roles, a conceptual distance between the interaction devices and the conceptual object remained: in Loop Loop, the cubes did not represent the sounds themselves; two of them (the Instrument cube and the Mix cube) could be seen, at most, as containers of several different sounds. It must be acknowledged that sounds do not have obvious physical counterparts, which makes it much harder for audio-based systems to build on conceptual metaphors and to offer representations with literal physical correspondence.

4.4. The Augmented Object

Last, but not least, the augmented object was developed as part of the project Designing Tangibles for Learning. A polymer cylindrical container was digitally augmented with an accelerometer and an LED screen to respond to movement by displaying different colours. By manipulating the object and rotating it, children could observe the mapping of orientation to colours, which provides an interesting exploration of ‘positioning’ (Figure 6).

Figure 6. Students point the object at different things to check whether it changes colour accordingly.

The augmented object embodied its own representation: it was not meant to stand for something else, so there was no distance between interaction instrument and conceptual object. It did not build on conceptual metaphors and was simply an object to be explored in its own right. The augmented object’s shape was generic and had no specific associated meaning from the real world. However, the feedback from the embedded lights provided an abstract mapping that related to positioning, adding another dimension to the object’s behaviour.
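As an illustration of what an orientation-to-colour mapping can look like, the sketch below picks a colour from the accelerometer axis that is most closely aligned with gravity. Everything specific in it is an assumption: the colour table, the axis naming, and the sign convention (z reads about +1 g with the lid facing up) are hypothetical and do not describe the actual device.

```python
from typing import Dict, Tuple

# Hypothetical orientation-to-colour table; the real mapping is not documented here.
COLOURS: Dict[Tuple[str, int], str] = {
    ("z", 1): "red",      # lid facing up (assumed convention)
    ("z", -1): "green",   # lid facing down
    ("x", 1): "blue",
    ("x", -1): "yellow",
    ("y", 1): "purple",
    ("y", -1): "orange",
}

AXES = ("x", "y", "z")


def colour_for(accel: Tuple[float, float, float]) -> str:
    """Return the colour for an accelerometer reading given in units of g."""
    # The axis with the largest absolute reading is the one closest to vertical.
    index = max(range(3), key=lambda k: abs(accel[k]))
    sign = 1 if accel[index] >= 0 else -1
    return COLOURS[(AXES[index], sign)]


if __name__ == "__main__":
    print(colour_for((0.1, -0.2, -0.98)))  # object held with the lid down
```

A rule of this kind is fully deterministic, yet from the outside it appears only as colour changes that follow movement, so the underlying table has to be inferred from many concrete observations.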

Students’ comprehension of the object’s behaviour concentrated on the fact that it “changed colour” (a clear action-effect mapping), but it was hard for them to go beyond this and establish specific mappings between position and colour. This relates again to these students’ difficulties in generalising and building abstract theories from concrete instances. Although they knew that they could produce different colours by moving the object and changing its position, they were unable to articulate general rules such as “if you put the object with the lid down, it will show green”. So students were aware of the action-effect mapping, but did not easily establish the conceptual rules of the system, as shown below:

Researcher: what do you think it’s happening, what is it that you do that is affecting it?

Diane: moving it.

Donna: and controlling, how you move it.

Researcher: so how do you move it to control?

Donna: well, it’s like … huh… I don’t know [giggles and looks at Diane]

Researcher: so why do you think it’s changing in that way?

Diane: because we’re doing the movement?

Researcher: so what do you think your movement is doing then? Are there certain kinds of movement?

Donna: there could be … I don’t know … yeah, it’s like we’re controlling it by touching it, maybe …

In an effort to find explanations for the object’s behaviour, students came up with a variety of theories, such as: “is it like a mood bracelet?”; “so do you talk at it?”; “the air, when moving the object, makes it change colour”; “it changes colour when it sees the special thing”; “there’s a sensor, and then when you press, when you put your thumb on it, and take it off, it changes colour”; “oh, I know! It looks like this ball, at soccer time”; “a kind of lamp”; and “it’s like a disco”. A recurrent theory among the students was that when the object was pointed somewhere, it captured the colour of whatever it was pointing at. Figure 6 illustrates students testing this theory.

The generic shape of the augmented object seemed to give children freedom to create their own metaphors and to make associations with real life, trying to find out what the object meant through a combination of previous knowledge and ‘magical thought’. Similar findings were reported with the Chromarium system: according to the authors, children seemed to understand that there were causal links between the representations and their actions, through explanations that were “a mix of magical thought with bits of previous knowledge” [45]. Indeed, interface metaphors often carry some tension between literalism and magic [46], because digital technologies allow realistic simulation to be combined with more abstract formalisms [47], and computational referents have capabilities that metaphorical objects do not. Therefore, there is a moment when the metaphorical vehicle is abandoned and ‘magic’, or the extra power of the digital technologies, takes over [1]. On the one hand, this breakdown in correspondence to the real world can be seen as an advantage provided by digital technology [48], since it adds extra capabilities; on the other hand, in educational systems, it can lead to misunderstandings and confusion in the learning process [13]. Deepening this discussion, however, is beyond the scope of this research. Here, the creation of theories, whether magical or more realistic, is seen as a positive sign of students’ exploratory behaviour.

5. Discussion

Table 1 summarises the design characteristics of the tangibles used in the research, with regard to conceptual metaphors and mappings. The implementation of space-multiplexing through persistent mappings between specific physical objects and their meanings (as in the LightTable) yielded better results than the use of generically shaped objects that “could be anything” (as in the drum machine). Individualising objects virtually was not as comprehensible as individualising them through their physicality, even when done through screens embedded in the physical objects (as with the Sifteo cubes). Similarly, truly direct manipulation of objects that conveyed meaning through their specific physical characteristics led to better comprehension than the use of interaction devices that were mere controllers of abstract concepts.

Table 1. Design characteristics of tangibles used in the research.

Space-multiplexing and the distance between interaction instruments and conceptual objects are clearly closely related design aspects that should be considered jointly. Moreover, this discussion should also be situated within a broader analysis. Besides the design of conceptual metaphors analysed in this paper, three other themes emerged from previous analysis; namely, types of digital representations, physical affordances, and representational mappings [17,18]. These four themes are intertwined and affect child–tangible interaction in combination. For instance, besides the generic physical form of the interaction devices and their distance from the conceptual objects, the difficulty children faced in interacting with the musical applications resulted from a conjunction of factors, such as the difficulty of perceiving auditory representations per se [17], the decoupling of input and output, the delayed feedback [18], and the lack of literal physical correspondence. On the other hand, besides the dedicated physical form and truly direct manipulation, LightTable combines other design characteristics previously found to be positive: relying on visual representations and physical affordances [17], presenting immediate feedback for actions [18], and being based on physical correspondence.

Ideally, researchers, educators, and designers should take into account all four themes when developing tangible environments for children with intellectual disabilities. In this sense, the set of recommendations presented in previous works [17,18] can be extended by a new guideline derived from the analysis presented in this paper: representations should make metaphorical references to the conceptual domain, building on objects’ physical properties and evoking links with the physical world.

6. Conclusions

The overall goal of this research is to investigate how tangible technologies can help children with intellectual disabilities engage in productive exploratory learning. This paper presented an analysis of child–tangible interaction focused on children’s interpretation of the conceptual metaphors embedded in four different tangible artefacts, within a process of exploration and meaning-making. The analysis has shown the importance, for children with intellectual disabilities, of providing connections with familiar contexts and the physical world through the systems’ representations, particularly by taking advantage of physical properties. The most straightforward way to do this would be to design for literal physical correspondence, as in the case of LightTable. However, if physical representations cannot hold such strong metaphorical links to the conceptual objects, which indeed is not always possible, they should at least evoke familiar concepts from children’s world (as in the case of the augmented object and the Sifteo cubes). Abstract representations like the drum machine’s, with no metaphorical correspondence to the conceptual domain, and unfamiliar representations led to poor results in comprehension and exploration. It is important to note, however, that literal physical correspondence or strong metaphorical links do not guarantee children’s comprehension of underlying concepts, particularly for children with intellectual disabilities, as has been shown with LightTable.

The present analysis adds to previous discussions towards a more holistic understanding of how best to design tangible systems in order to encourage children’s independent exploration and consequent meaning-making, supported by multimodal interaction. Within this context, conceptual metaphors that capitalise on physical properties and on familiarity with the students’ world are highly recommended.

Funding

The PhD research described in this paper had no specific funding. However, two of the materials used (the LightTable and the augmented object) were developed in the project ‘Designing Tangibles for Learning: an empirical investigation’, funded by the Engineering and Physical Sciences Research Council (EPSRC) grant number EP/F018436.

Acknowledgments

I would like to thank in particular Sara Price, main supervisor of this work, who also supported, revised, and encouraged this publication. My thanks to Diana Laurillard, who co-supervised this research, and to all children and teachers who agreed to take part in the studies. The empirical studies would not have been possible without the support of the London Knowledge Lab staff. I also thank my colleagues for their most generous help in conducting the studies.

Conflicts of Interest

The author declares no conflict of interest.

References

1. Dourish, P. Where the Action Is: The Foundations of Embodied Interaction; MIT Press: Cambridge, MA, USA, 2001; ISBN 0-262-04196-0.
2. Ullmer, B.; Ishii, H. Emerging Frameworks for Tangible User Interfaces. In Human-Computer Interaction in the New Millennium; Carroll, J.M., Ed.; Addison-Wesley: New York, NY, USA, 2001.
3. Shaer, O.; Hornecker, E. Tangible User Interfaces: Past, Present, and Future Directions. In Foundations and Trends in Human-Computer Interaction; Now Publishers Inc.: Hanover, MA, USA, 2010; Volume 3, pp. 1–137.
4. Fishkin, K.P. A taxonomy for and analysis of tangible interfaces. Personal and Ubiquitous Computing; Springer: Norwell, MA, USA, 2004; Volume 8, pp. 347–358.
5. Hornecker, E.; Buur, J. Getting a grip on tangible interaction: A framework on physical space and social interaction. In Proceedings of the Conference on Human Factors in Computing Systems CHI’06, Montreal, QC, Canada, 22–27 April 2006; ACM Press: New York, NY, USA, 2006.
6. O’Malley, C.; Fraser, D.S. Literature Review in Learning with Tangible Technologies; NESTA Futurelab: London, UK, 2004.
7. Parkes, A.; Raffle, H.; Ishii, H. Topobo in the wild. In Proceedings of the Conference on Human Factors in Computing Systems CHI’08, Florence, Italy, 5–10 April 2008; ACM Press: New York, NY, USA, 2008.
8. Marshall, P. Do tangible interfaces enhance learning? In Proceedings of the Conference on Tangible and Embedded Interaction TEI’07, Baton Rouge, LA, USA, 15–17 February 2007; ACM Press: New York, NY, USA, 2007.
9. Clements, D.H. ‘Concrete’ manipulatives, concrete ideas. Contemp. Issues Early Child. 1999, 1, 45–60.
10. Resnick, M. Computer as paintbrush: Technology, play, and the creative society. In Play = Learning; Singer, D., Golinkoff, R.M., Hirsh-Pasek, K., Eds.; Oxford University Press: Oxford, UK, 2006.
11. Zuckerman, O.; Arida, S.; Resnick, M. Extending tangible interfaces for education: Digital Montessori-inspired manipulatives. In Proceedings of the Conference on Human Factors in Computing Systems CHI’05, Portland, OR, USA, 2–7 April 2005; ACM Press: New York, NY, USA, 2005.
12. Schneider, B.; Jermann, P.; Zufferey, G.; Dillenbourg, P. Benefits of a Tangible Interface for Collaborative Learning and Interaction. IEEE Trans. Learn. Technol. 2011, 4, 222–232.
13. Price, S.; Pontual Falcão, T. Designing for physical-digital correspondence in tangible learning environments. In Proceedings of the 8th International Conference on Interaction Design and Children, IDC’09, Como, Italy, 3–5 June 2009; ACM Press: New York, NY, USA, 2009.
14. Farr, W.; Yuill, N.; Hinske, S. An augmented toy and social interaction in children with autism. Int. J. Arts Technol. 2012, 5, 104–125.
15. Marco, J.; Cerezo, E.; Baldassarri, S. Bringing tabletop technology to all: Evaluating a tangible farm game with kindergarten and special needs children. Pers. Ubiquitous Comput. 2013, 17, 1577–1591.
16. Jadan-Guerrero, J.; Jaen, J.; Carpio, M.A. Kiteracy: A Kit of Tangible Objects to Strengthen Literacy Skills in Children with Down Syndrome. In Proceedings of the 14th International Conference on Interaction Design and Children, IDC’15, Boston, MA, USA, 21–24 June 2015; ACM Press: New York, NY, USA, 2015.
17. Pontual Falcão, T. Perception of Representation Modalities and Affordances in Tangible Environments by Children with Intellectual Disabilities. Interact. Comput. 2016, 28, 625–647.
18. Pontual Falcão, T. Action-effect mappings in tangible interaction for children with intellectual disabilities. Int. J. Learn. Technol. 2017, 12, 294–314.
19. Jacob, R.J.K.; Girouard, A.; Hirshfield, L.M.; Horn, M.S.; Shaer, O.; Solovey, E.T.; Zigelbaum, J. A Framework for Post-WIMP Interfaces. In Proceedings of the Conference on Human Factors in Computing Systems CHI’08, Florence, Italy, 5–10 April 2008; ACM Press: New York, NY, USA, 2008.
20. Gaver, W. Technology Affordances. In Proceedings of the Conference on Human Factors in Computing Systems CHI’91, New Orleans, LA, USA, 28 April–5 June 1991; ACM Press: New York, NY, USA, 1991.
21. Klemmer, S.; Hartmann, B.; Takayama, L. How bodies matter: Five themes for interaction design. In Proceedings of the 6th Conference on Designing Interactive Systems, DIS’06, University Park, TX, USA, 26–28 June 2006; ACM Press: New York, NY, USA, 2006.
22. Holmquist, L.E.; Redström, J.; Ljungstrand, P. Token-based access to digital information. In Proceedings of the 1st International Symposium in Handheld and Ubiquitous Computing, HUC’99, Karlsruhe, Germany, 27–29 September 1999; Springer: New York, NY, USA, 1999.
23. Price, S.; Sheridan, J.G.; Pontual Falcão, T.; Roussos, G. Towards a framework for investigating tangible environments for learning. Int. J. Arts Technol. 2008, 1.
24. Fernaeus, Y.; Tholander, J.; Jonsson, M. Beyond representations: Towards an action-centric perspective on tangible interaction. Int. J. Arts Technol. 2008, 1, 249–267.
25. Meira, L. Making sense of instructional devices: The emergence of transparency in mathematical activity. J. Res. Math. Educ. 1998, 29, 121–142.
26. Antle, A. The CTI Framework: Informing the Design of Tangible Systems for Children. In Proceedings of the 1st International Conference on Tangible Embedded Interaction, TEI’07, Baton Rouge, LA, USA, 15–17 February 2007; ACM Press: New York, NY, USA, 2007.
27. Lave, J.; Wenger, E. Situated Learning: Legitimate Peripheral Participation; Cambridge University Press: Cambridge, UK, 1991.
28. De Vaus, D.A. Surveys in Social Research; UCL Press: London, UK, 1993.
29. Deslauriers, J.P.; Kérisit, M. Le devis de recherche qualitative. In La Recherche Qualitative. Enjeux Épistémologiques et Méthodologiques; Poupart, J., Deslauriers, J.P., Groulx, L.H., Laperrière, A., Mayer, R., Pires, A.P., Eds.; Gaëtan Morin Editeur: Montreal, QC, Canada, 1997.
30. Dey, I. Qualitative Data Analysis: A User-Friendly Guide for Social Scientists; Routledge: London, UK, 1993.
31. Schwandt, T.A. Three epistemological stances for qualitative inquiry: Interpretativism, hermeneutics and social constructionism. In The Landscape of Qualitative Research: Theories and Issues; Denzin, N., Lincoln, Y., Eds.; Sage: Thousand Oaks, CA, USA, 2003; pp. 292–331.
32. Soder, M. Disability as a social construct: The labelling approach revisited. Eur. J. Spec. Needs Educ. 1989, 4, 117–129.
33. Slee, R. The politics of theorising special education. In Theorising Special Education; Clark, C., Dyson, A., Millward, A., Eds.; Routledge: London, UK, 1998.
34. Dowling, P.; Brown, A. Doing Research/Reading Research. Re-Interrogating Education, 2nd ed.; Routledge: London, UK; New York, NY, USA, 2010.
35. Derry, S.J.; Pea, R.D.; Barron, B.; Engle, R.A.; Erickson, F.; Goldman, R.; Hall, R.; Koschmann, T.; Lemke, J.L.; Sherin, M.G.; et al. Conducting Video Research in the Learning Sciences: Guidance on Selection, Analysis, Technology, and Ethics. J. Learn. Sci. 2010, 19, 3–53.
36. Flick, U. An Introduction to Qualitative Research, 4th ed.; SAGE: London, UK, 2009.
37. Heath, C.; Hindmarsh, J. Analysing interaction: Video, ethnography and situated conduct. In Qualitative Research in Practice; May, T., Ed.; Sage: London, UK, 2002.
38. Heritage, J. Garfinkel and Ethnomethodology; Polity Press: Cambridge, UK, 1984.
39. Kaltenbrunner, M.; Bencina, R. reacTIVision: A computer-vision framework for table-based tangible interaction. In Proceedings of the Conference on Tangible and Embedded Interaction, Baton Rouge, LA, USA, 15–17 February 2007; ACM Press: New York, NY, USA, 2007.
40. Price, S.; Pontual Falcão, T. Where the attention is: Discovery learning in novel tangible environments. Interact. Comput. 2011, 23, 499–512.
41. Abbott, C. E-Inclusion: Learning Difficulties and Digital Technologies; Report 15; Futurelab: London, UK, 2007.
42. Stakes, R.; Hornby, G. Meeting Special Needs in Mainstream Schools, 2nd ed.; David Fulton Publishers: London, UK, 2000.
43. Vygotsky, L.S.; Luria, A.R. Studies on the History of Behavior: Ape, Primitive, and Child; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1993.
44. Costanza, E.; Shelley, S.B.; Robinson, J. Introducing Audio d-touch: A tangible user interface for music composition and performance. In Proceedings of the 6th International Conference on Digital Audio Effects (DAFX’03), London, UK, 8–11 September 2003.
45. Gabrielli, S.; Harris, E.; Rogers, Y.; Scaife, M.; Smith, H. How many ways can you mix colour? Young children’s explorations of mixed reality environments. In Proceedings of the Conference for Content Integrated Research in Creative User Systems CIRCUS 2001, Glasgow, UK, 20–22 September 2001.
46. Smith, R. The alternate kit: An example of the tension between literalism and magic. In Proceedings of the Graphics Interface (CHI + GI’87), Toronto, ON, Canada, 5–9 April 1987; ACM Press: New York, NY, USA, 1987.
47. Scaife, M.; Rogers, Y. External cognition, innovative technologies, and effective learning. In Cognition, Education and Communication Technology; Gardenfors, P., Johansson, P., Eds.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 2005; pp. 181–202.
48. Grudin, J. The case against user interface consistency. Commun. ACM 1989, 32, 1164–1173.

© 2018 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).