Data-Driven Koopman Based System Identification for Partially Observed Dynamical Systems with Input and Disturbance

Abstract

1. Introduction

2. Background

2.1. Problem Formulation

2.2. A Brief Review on Koopman Operator Theory

3. Proposed Method

3.1. Data-Driven Koopman Operator

Algorithm 1: Minimization of L1 Norm with L1 Constraint

Algorithm 2: Subproblem for Updating

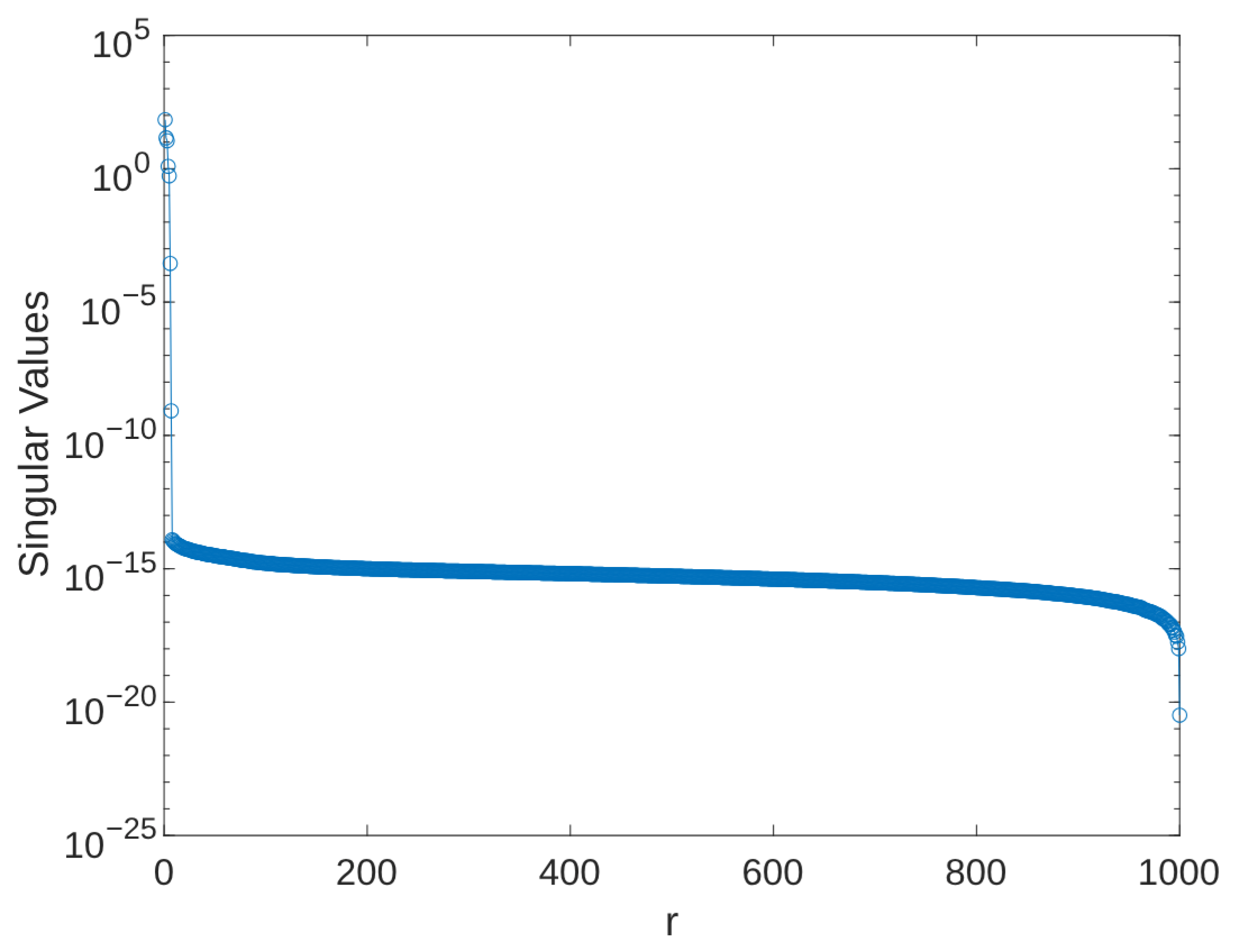

3.2. Low Rank Approximation Method

Algorithm 3: Partial SVD using Matrix Sketching
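As a generic illustration of a partial SVD computed by matrix sketching, the following Python sketch uses a Gaussian random projection followed by a few power iterations. It is not a reproduction of Algorithm 3: the function name `sketched_partial_svd` and the parameters `oversample`, `n_power_iter`, and `seed` are assumptions introduced only for this example.

```python
import numpy as np


def sketched_partial_svd(A, rank, oversample=10, n_power_iter=2, seed=0):
    """Approximate the leading `rank` singular triplets of A.

    Hypothetical helper: a basic randomized range finder followed by an
    exact SVD of the small projected matrix.
    """
    rng = np.random.default_rng(seed)
    m, n = A.shape
    # Sketch size: target rank plus a small safety margin, capped by min(m, n).
    k = min(rank + oversample, m, n)

    # Random sketch of the column space of A.
    Omega = rng.standard_normal((n, k))
    Q, _ = np.linalg.qr(A @ Omega)

    # Power iterations sharpen the captured subspace when singular values
    # decay slowly; re-orthonormalizing each time keeps them stable.
    for _ in range(n_power_iter):
        Z, _ = np.linalg.qr(A.T @ Q)
        Q, _ = np.linalg.qr(A @ Z)

    # Project A onto the sketched subspace and decompose the small matrix.
    B = Q.T @ A  # shape (k, n)
    U_small, s, Vt = np.linalg.svd(B, full_matrices=False)
    U = Q @ U_small

    return U[:, :rank], s[:rank], Vt[:rank, :]
```

Calling `U, s, Vt = sketched_partial_svd(A, rank=r)` gives the approximation `(U * s) @ Vt` of `A`. The dominant cost is a handful of products with `A` rather than a full decomposition, which is the usual motivation for sketching when only a few leading singular triplets are needed.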

4. Numerical Examples

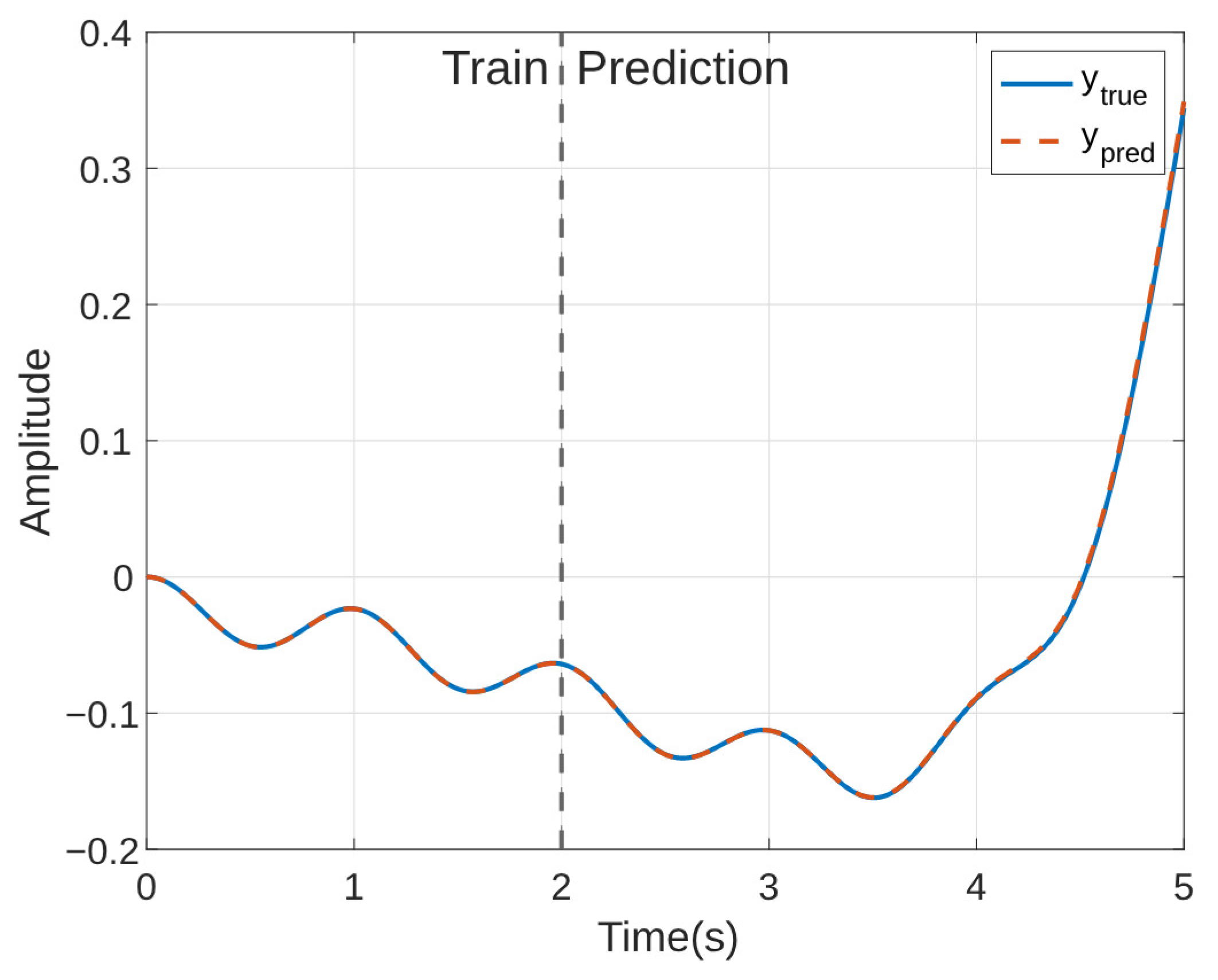

4.1. Nonlinear System

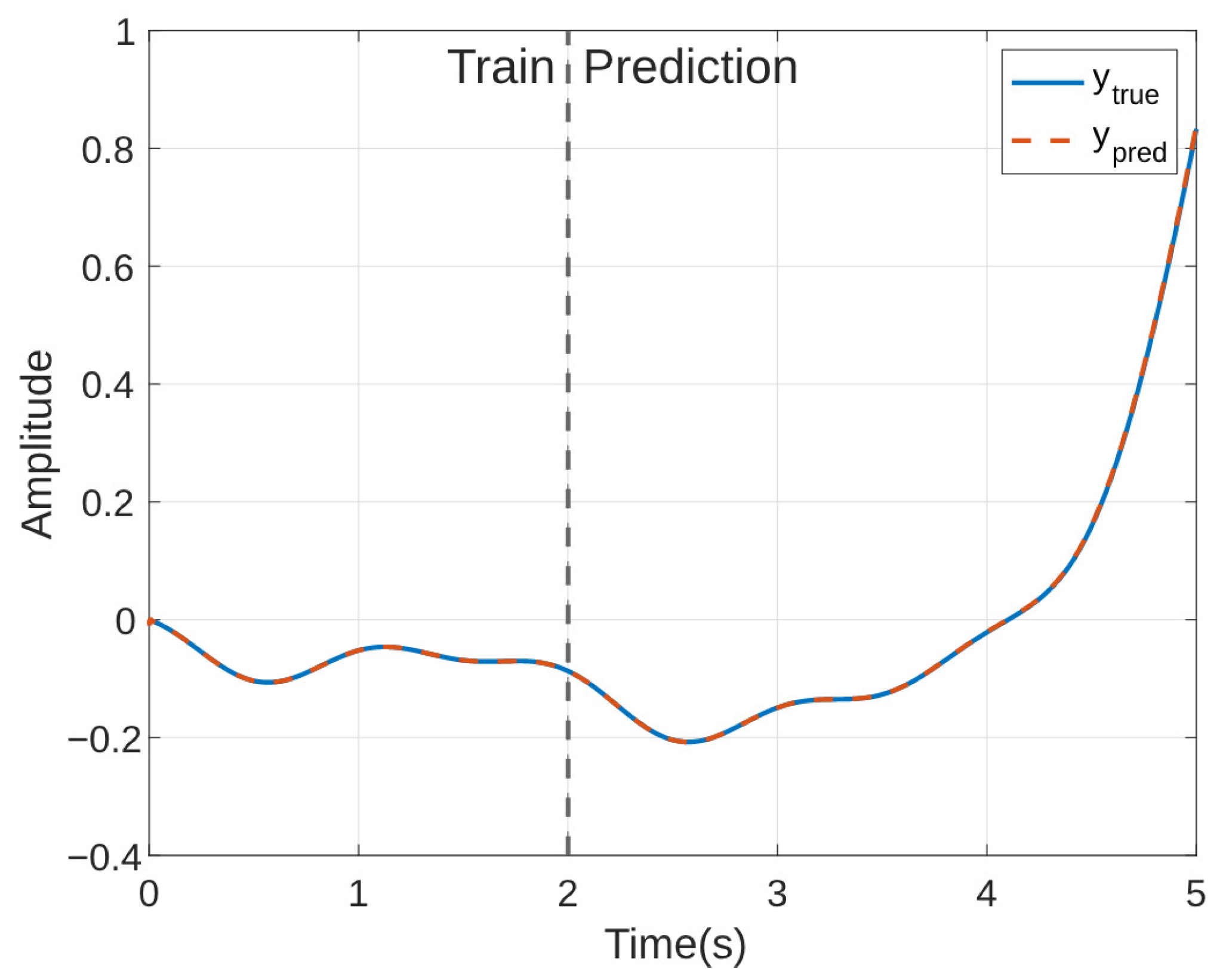

4.2. Unstable Linear System

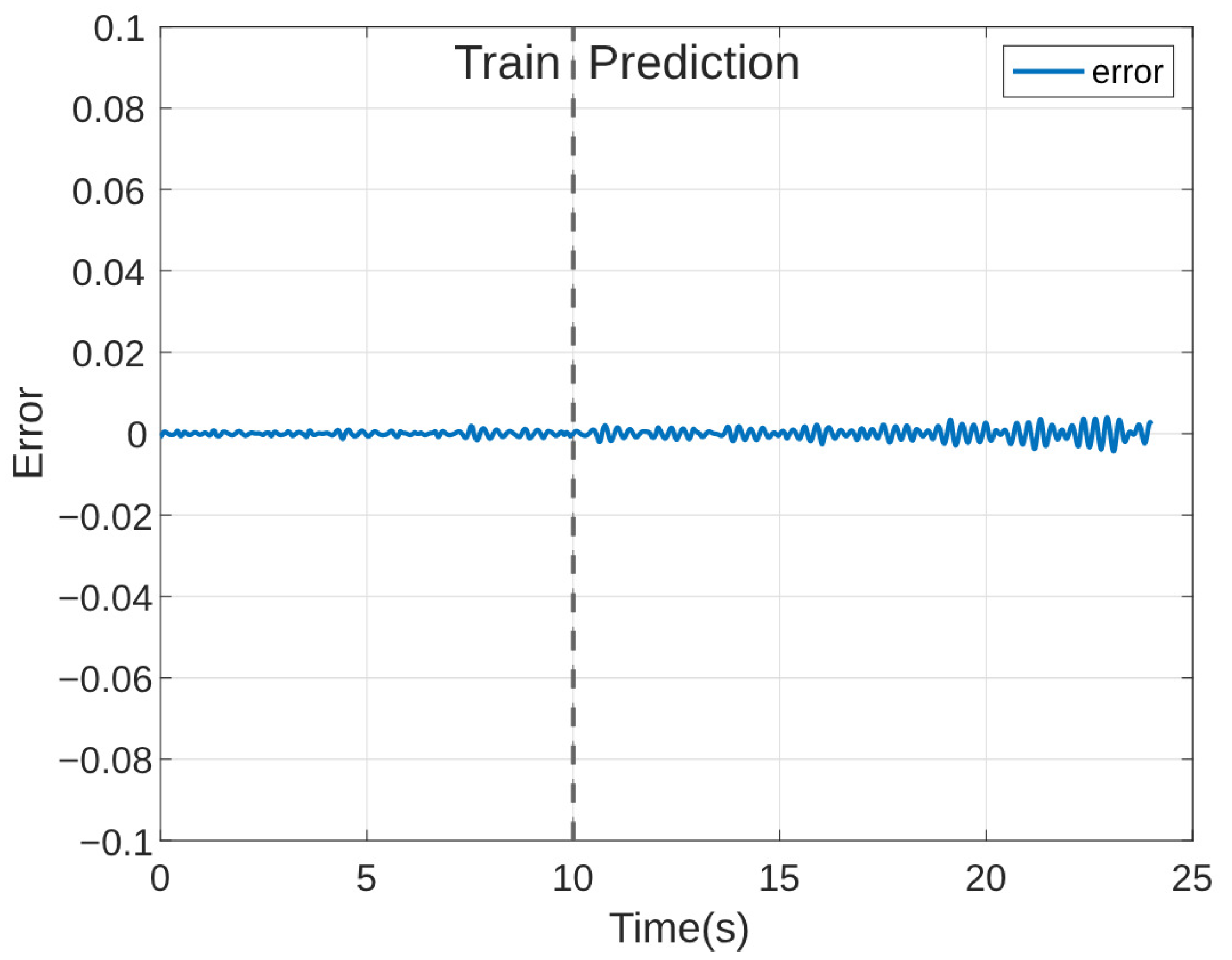

4.3. Unstable Linear System with Input Disturbance

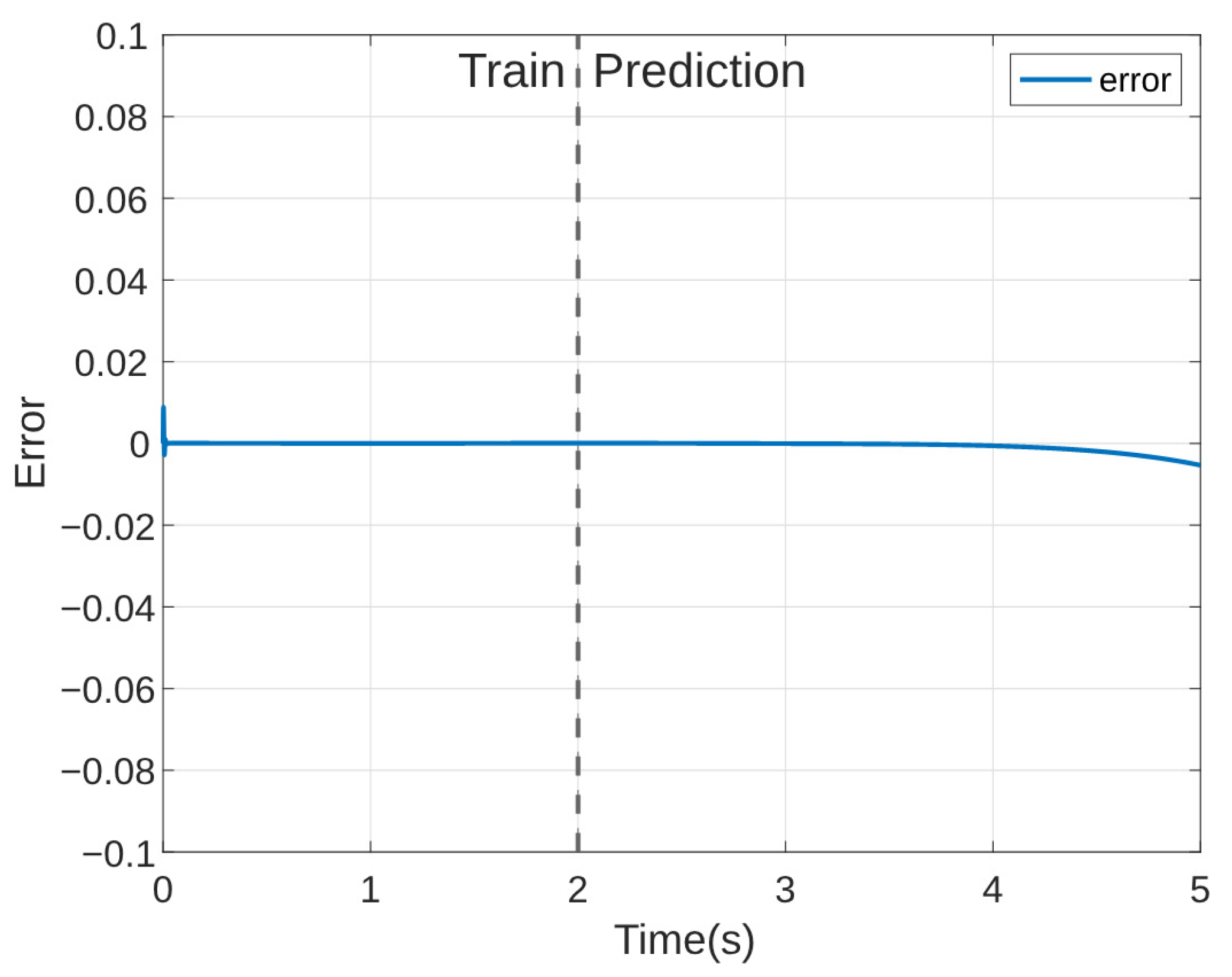

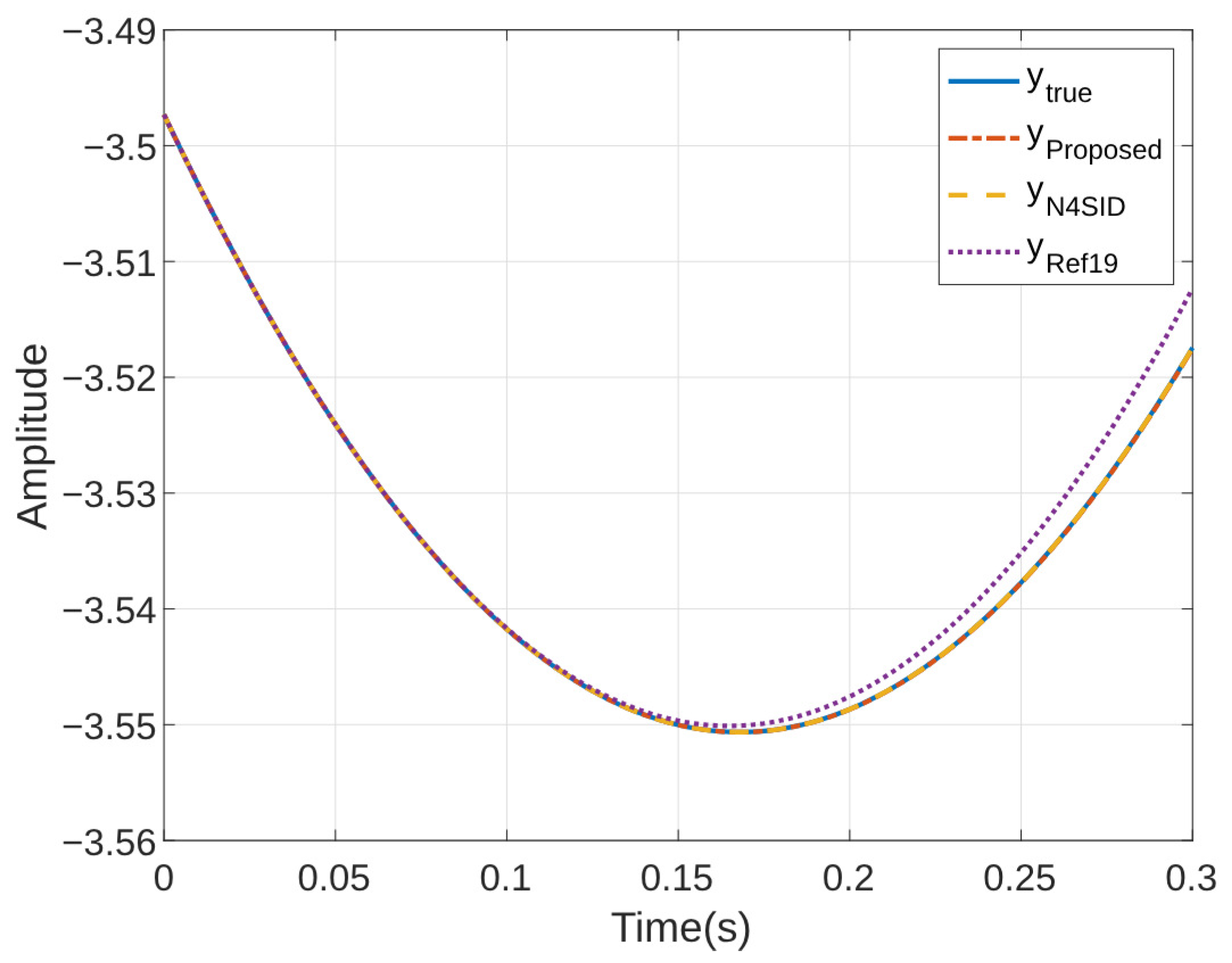

4.4. Comparison to Existing System Identification Methods

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Xu, L.; Li, X.R.; Liang, Y.; Duan, Z. Modeling and State Estimation of Linear Destination-Constrained Dynamic Systems. IEEE Trans. Signal Process. 2022, 70, 2374–2387.
2. Wang, K.; Liu, M.; He, W.; Zuo, C.; Wang, F. Koopman Kalman Particle Filter for Dynamic State Estimation of Distribution System. IEEE Access 2022, 10, 111688–111703.
3. Kang, T.; Peng, H.; Xu, W.; Sun, Y.; Peng, X. Deep Learning-Based State-Dependent ARX Modeling and Predictive Control of Nonlinear Systems. IEEE Access 2023, 11, 32579–32594.
4. Makki, O.T.; Moosapour, S.S.; Mobayen, S.; Nobari, J.H. Design, Mathematical Modeling, and Control of an Underactuated 3-DOF Experimental Helicopter. IEEE Access 2024, 12, 55568–55586.
5. Gupta, A.; Lermusiaux, P.F.J. Neural closure models for dynamical systems. Proc. R. Soc. A Math. Phys. Eng. Sci. 2021, 477, 20201004.
6. Freeman, D.C.; Giannakis, D.; Slawinska, J. Quantum Mechanics for Closure of Dynamical Systems. Multiscale Model. Simul. 2024, 22, 283–333.
7. Agrawal, A.; Koutsourelakis, P.-S. A probabilistic, data-driven closure model for RANS simulations with aleatoric, model uncertainty. J. Comput. Phys. 2024, 508, 112982.
8. Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction, 2nd ed.; Springer: New York, NY, USA, 2009; pp. 1–2.
9. James, G.; Witten, D.; Hastie, T.; Tibshirani, R.; Taylor, J. An Introduction to Statistical Learning: With Applications in Python; Springer: New York, NY, USA, 2023; pp. 1–17.
10. Rainio, O.; Teuho, J.; Klén, R. Evaluation metrics and statistical tests for machine learning. Sci. Rep. 2024, 14, 6086.
11. Wang, L.Y.; Yin, G.G.; Zhao, Y.; Zhang, J.-F. Identification Input Design for Consistent Parameter Estimation of Linear Systems With Binary-Valued Output Observations. IEEE Trans. Autom. Control 2008, 53, 867–880.
12. Wigren, A.; Wågberg, J.; Lindsten, F.; Wills, A.G.; Schön, T.B. Nonlinear System Identification: Learning While Respecting Physical Models Using a Sequential Monte Carlo Method. IEEE Control Syst. Mag. 2022, 42, 75–102.
13. Kwad, A.M.; Hanafi, D.; Omar, R.; Rahman, H.A. Development of system identification from traditional concepts to real-time soft computing based. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Bristol, UK, 2020; Volume 767.
14. Hjalmarsson, H. System Identification of Complex and Structured Systems. Eur. J. Control 2009, 15, 275–310.
15. Rojas, C.R.; Barenthin, M.; Welsh, J.S.; Hjalmarsson, H. The cost of complexity in system identification: The Output Error case. Automatica 2011, 47, 1938–1948.
16. Schoukens, J.; Marconato, A.; Pintelon, R.; Rolain, Y.; Schoukens, M.; Tiels, K.; Vanbeylen, L.; Vandersteen, G.; Van Mulders, A. System identification in a real world. In Proceedings of the 2014 IEEE 13th International Workshop on Advanced Motion Control (AMC), Yokohama, Japan, 14–16 March 2014; pp. 1–9.
17. Kabzinski, T.; Jax, P. A Flexible Framework for Expectation Maximization-Based MIMO System Identification for Time-Variant Linear Acoustic Systems. IEEE Open J. Signal Process. 2024, 5, 112–121.
18. Brunton, S.L.; Brunton, B.W.; Proctor, J.L.; Kutz, J.N. Koopman Invariant Subspaces and Finite Linear Representations of Nonlinear Dynamical Systems for Control. PLoS ONE 2016, 11, 11–19.
19. Mamakoukas, G.; Castaño, M.L.; Tan, X.; Murphey, T.D. Derivative-Based Koopman Operators for Real-Time Control of Robotic Systems. IEEE Trans. Robot. 2021, 37, 2173–2192.
20. Xu, Y.; Wang, Q.; Mili, L.; Zheng, Z.; Gu, W.; Lu, S.; Wu, Z. A Data-Driven Koopman Approach for Power System Nonlinear Dynamic Observability Analysis. IEEE Trans. Power Syst. 2024, 39, 4090–4104.
21. Jia, J.; Zhang, W.; Guo, K.; Wang, J.; Yu, X.; Shi, Y.; Guo, L. EVOLVER: Online Learning and Prediction of Disturbances for Robot Control. IEEE Trans. Robot. 2024, 40, 382–402.
22. Choi, H.; Elliott, R.; Byrne, R. Data-Driven Power Flow Estimation with Inverter Interfaced Energy Storage Using Dynamic Injection Shift Factor. In Proceedings of the IEEE Power and Energy Society General Meeting (PESGM), Denver, CO, USA, 17–21 July 2022; pp. 1–5.
23. Zheng, L.; Liu, X.; Xu, Y.; Hu, W.; Liu, C. Data-driven Estimation for a Region of Attraction for Transient Stability Using the Koopman Operator. CSEE J. Power Energy Syst. 2023, 9, 1405–1413.
24. Sarić, A.A.; Transtrum, M.K.; Sarić, A.T.; Stanković, A.M. Integration of Physics- and Data-Driven Power System Models in Transient Analysis After Major Disturbances. IEEE Syst. J. 2023, 17, 479–490.
25. Bruder, D.; Fu, X.; Vasudevan, R. Advantages of bilinear Koopman realizations for the modeling and control of systems with unknown dynamics. IEEE Robot. Autom. Lett. 2021, 6, 4369–4376.
26. Otto, S.; Peitz, S.; Rowley, C. Learning Bilinear Models of Actuated Koopman Generators from Partially Observed Trajectories. SIAM J. Appl. Dyn. Syst. 2024, 23, 885–923.
27. Mamakoukas, G.; Di Cairano, S.; Vinod, A.P. Robust model predictive control with data-driven Koopman operators. In Proceedings of the American Control Conference (ACC), Atlanta, GA, USA, 8–10 June 2022; pp. 3885–3892.
28. Yu, S.; Shen, C.; Ersal, T. Autonomous driving using linear model predictive control with a Koopman operator based bilinear vehicle model. IFAC-PapersOnLine 2022, 55, 254–259.
29. Williams, M.O.; Kevrekidis, I.G.; Rowley, C.W. A data-driven approximation of the Koopman operator: Extending dynamic mode decomposition. J. Nonlinear Sci. 2015, 25, 1307–1346.
30. Arbabi, H.; Mezić, I. Ergodic theory, dynamic mode decomposition, and computation of spectral properties of the Koopman operator. SIAM J. Appl. Dyn. Syst. 2017, 16, 2096–2126.
31. Netto, M.; Susuki, Y.; Krishnan, V.; Zhang, Y. On Analytical Construction of Observable Functions in Extended Dynamic Mode Decomposition for Nonlinear Estimation and Prediction. IEEE Control Syst. Lett. 2021, 5, 1868–1873.
32. Folkestad, C.; Pastor, D.; Mezić, I.; Mohr, R.; Fonoberova, M.; Burdick, J. Extended Dynamic Mode Decomposition with Learned Koopman Eigenfunctions for Prediction and Control. In Proceedings of the American Control Conference (ACC), Denver, CO, USA, 1–3 July 2020; pp. 3906–3913.
33. Haseli, M.; Cortés, J. Data-Driven Approximation of Koopman-Invariant Subspaces with Tunable Accuracy. In Proceedings of the American Control Conference (ACC), New Orleans, LA, USA, 26–28 May 2021; pp. 470–475.
34. Koopman, B.O. Hamiltonian Systems and Transformation in Hilbert Space. Proc. Natl. Acad. Sci. USA 1931, 17, 315–318.
35. Yu, W.; Gu, Y.; Li, Y. Efficient Randomized Algorithms for the Fixed-Precision Low-Rank Matrix Approximation. SIAM J. Matrix Anal. Appl. 2018, 39, 1339–1359.
36. Janot, A.; Gautier, M.; Brunot, M. Data Set and Reference Models of EMPS. In Proceedings of the Nonlinear System Identification Benchmarks, Eindhoven, The Netherlands, 10–12 April 2019.
37. Van Overschee, P.; De Moor, B. N4SID: Subspace algorithms for the identification of combined deterministic-stochastic systems. Automatica 1994, 30, 75–93.

| Method | Basis Generation | Train NRMSE | Test NRMSE |
|---|---|---|---|
| N4SID | LQ decomposition and SVD | 0.0486 | 0.0096 |
| [19] | High-order derivative | 0.0028 | 8.3228 |
| Proposed | SVD | 0.0010 | 0.0011 |
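The table reports normalized root-mean-square error (NRMSE) on training and test data. The normalization behind these figures is not stated alongside the table, and several conventions are in common use, so the Python sketch below assumes one of them: RMSE divided by the range of the reference signal. The function name `nrmse` and this choice of normalizer are assumptions made for illustration, not the definition used to produce the numbers above.

```python
import numpy as np


def nrmse(y_true, y_pred):
    """RMSE divided by the range (max - min) of the reference signal.

    Assumed convention; other common normalizers are the mean, the
    standard deviation, or the Euclidean norm of the reference signal.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    if y_true.shape != y_pred.shape:
        raise ValueError("y_true and y_pred must have the same shape")

    rmse = np.sqrt(np.mean((y_true - y_pred) ** 2))
    span = np.max(y_true) - np.min(y_true)
    if span == 0:
        raise ValueError("reference signal is constant; range normalization is undefined")
    return rmse / span
```

Under any such convention, a test NRMSE far above the training NRMSE, as in the row for [19], indicates a model that fits the training trajectory but does not generalize to the test trajectory.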

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ketthong, P.; Samkunta, J.; Mai, N.T.; Kamal, M.A.S.; Murakami, I.; Yamada, K. Data-Driven Koopman Based System Identification for Partially Observed Dynamical Systems with Input and Disturbance. Sci 2024, 6, 84. https://doi.org/10.3390/sci6040084