1. Introduction

Most ancillary structures, such as high-mast light towers, cantilevered sign structures, overhead traffic signals, and luminaires, are continuously exposed to wind-induced fatigue cracking, vibration issues, missing bolts, and loosened nuts [1]. However, corrosion can be considered the most common defect in these structures. In ancillary structures, corrosion is caused by factors such as exposure to weather, chemicals, and salt compounds. Traditional inspection methods, such as visual and physical nondestructive evaluation (NDE), can be time-consuming, expensive, complex, dangerous, or even impossible for inaccessible areas. The outcome of manual evaluations is typically subjective and inconsistent, and its accuracy may depend on the inspector's experience and on how accessible the location is [2]; nevertheless, visual inspection remains the most common method for corrosion damage assessment. Maintenance and protection of traffic (MPT) safety requirements make it even more challenging for stakeholders to perform physical and visual inspections of in-service ancillary structures [3]. Therefore, noncontact methods augmented with artificial intelligence (AI) are needed as an alternative to conventional defect detection.

Corrosion can be defined as the reaction of a metal with its surrounding corrosive environment, which changes the metal's properties and consequently causes functional deterioration of the metallic object [4]. External or internal surface corrosion can reduce the service life of a steel structure [5]. In the United States, the estimated annual cost of corrosion damage to steel bridges is approximately USD 10.15 billion [6].

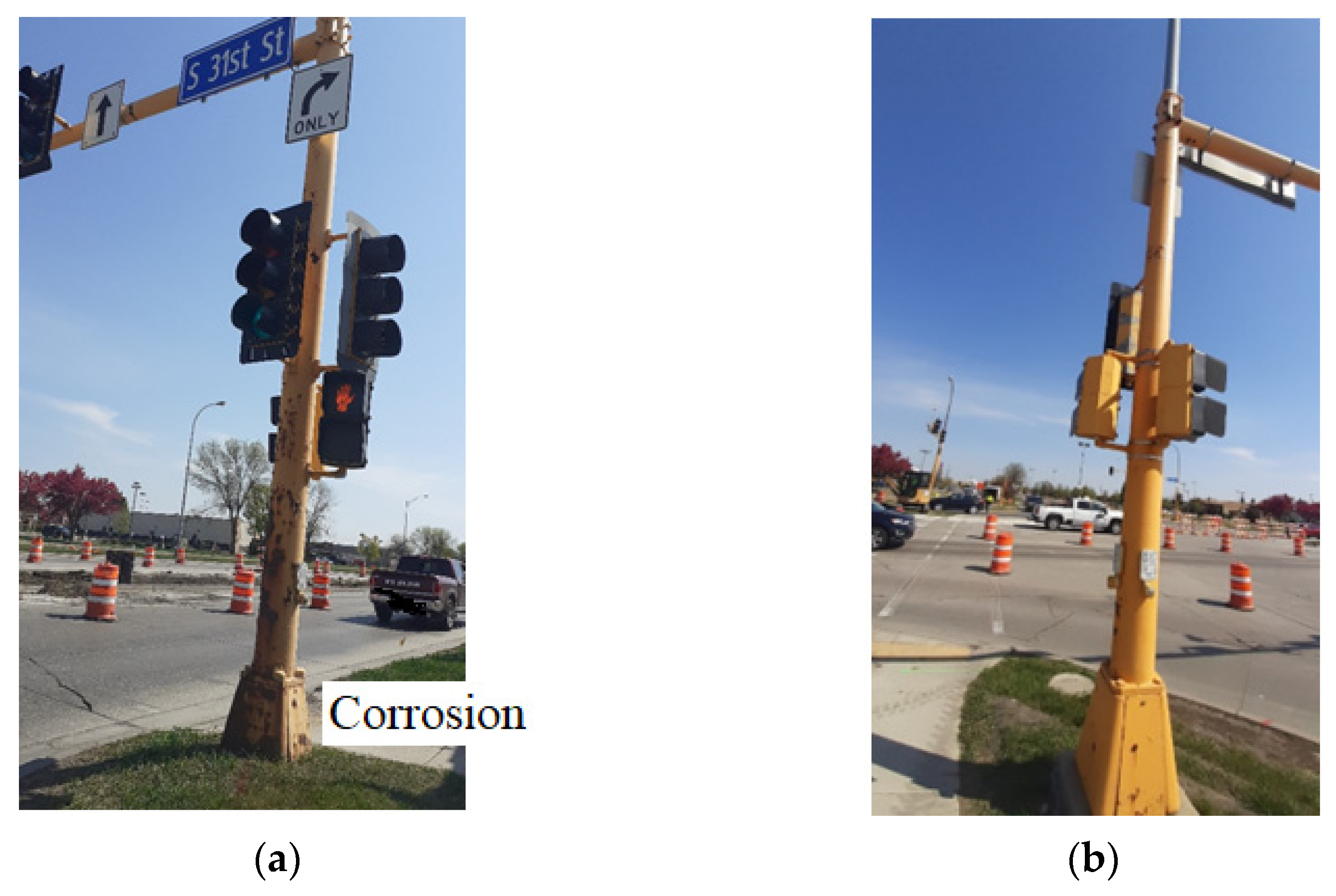

Figure 1a,b shows images of existing ancillary structures in Grand Forks, ND, with significant corrosion and without visible corrosion, respectively.

Corrosion significantly affects the overall cost of steel structure maintenance [7] and, if neglected, can lead to section loss and eventual failure. For instance, the ancillary structure shown in Figure 1a was replaced because of severe corrosion, to prevent further deterioration and subsequent failure. Corrosion of steel structures accounts for 42% of structural element failures [8]. Moreover, in tubular members, continuous contact with water or other electrolytes can cause internal corrosion [3].

Hands-on inspection has certain drawbacks [9] that create the need for computerized digital image recognition, a feasible alternative in terms of safety, efficacy, consistency, and accuracy. Despite the advantages of the NDE techniques currently practiced for steel structures, automated optical techniques are the most preferred because they are simple to use and interpret [6,10,11]. These techniques usually involve onsite acquisition of digital images of the structure, followed by offsite analysis using image processing techniques for corrosion detection [6,12]; however, there have been some attempts at real-time processing [13,14].

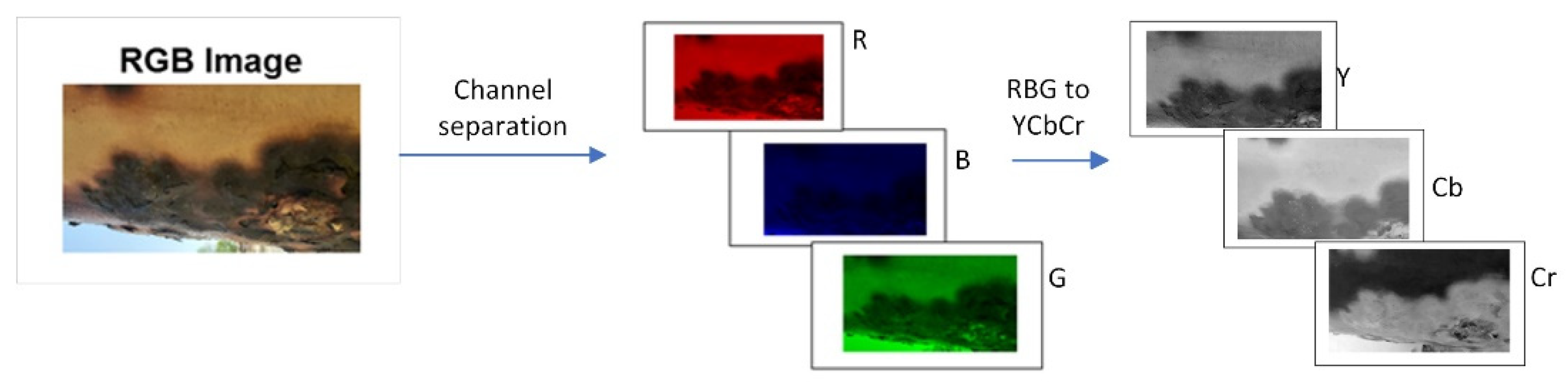

Researchers have proposed various image processing techniques to detect corrosion in steel structures [7,8,15]. Texture analysis characterizes image pixels for classification problems. In visual images of ancillary structures, pixels associated with corrosion have a rougher texture and a distinct color compared with noncorroded (sound) pixels; color and texture roughness are therefore used as features for pixel classification. Past studies have investigated corrosion in the RGB (red, green, blue) color space without considering the presence of undesired objects in the image background [12,16]. Most studies have either evaluated corrosion without quantifying it against ground truth or have used images collected under controlled environments [6,7,8], because these image-based algorithms are usually affected by undesirable illumination and the presence of background objects.
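The color and texture cues described above can be illustrated with a minimal sketch. It is not the authors' algorithm: the redness index (red minus the mean of green and blue), the use of the local standard deviation of gray intensity as a roughness measure, the 5 x 5 window, and both threshold values are hypothetical choices made only for illustration. Only NumPy (version 1.20 or later, for `sliding_window_view`) is assumed.

```python
import numpy as np


def local_std(gray: np.ndarray, k: int = 5) -> np.ndarray:
    """Per-pixel standard deviation of intensities in a k x k window (edges padded by replication)."""
    pad = k // 2
    padded = np.pad(gray, pad, mode="edge")
    # Shape (H, W, k, k): one k x k neighborhood per pixel when k is odd.
    windows = np.lib.stride_tricks.sliding_window_view(padded, (k, k))
    return windows.std(axis=(-2, -1))


def corrosion_features(rgb: np.ndarray, k: int = 5) -> tuple[np.ndarray, np.ndarray]:
    """Return a color cue and a texture cue for every pixel of an 8-bit RGB image."""
    img = rgb.astype(np.float64) / 255.0
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    # Assumed color cue: rust is reddish-brown, so red exceeds the mean of green and blue.
    redness = r - (g + b) / 2.0
    # Assumed texture cue: rough corroded surfaces show high local intensity variation.
    roughness = local_std(img.mean(axis=-1), k)
    return redness, roughness


def classify_corrosion(rgb: np.ndarray, red_thr: float = 0.1,
                       rough_thr: float = 0.05, k: int = 5) -> np.ndarray:
    """Label a pixel as corroded only if both cues exceed their (hypothetical) thresholds."""
    redness, roughness = corrosion_features(rgb, k)
    return (redness > red_thr) & (roughness > rough_thr)
```

Requiring both cues means a smooth reddish paint patch (high redness, low roughness) or a rough gray concrete background (high roughness, low redness) is not flagged; in practice the thresholds would have to be tuned on labeled images.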

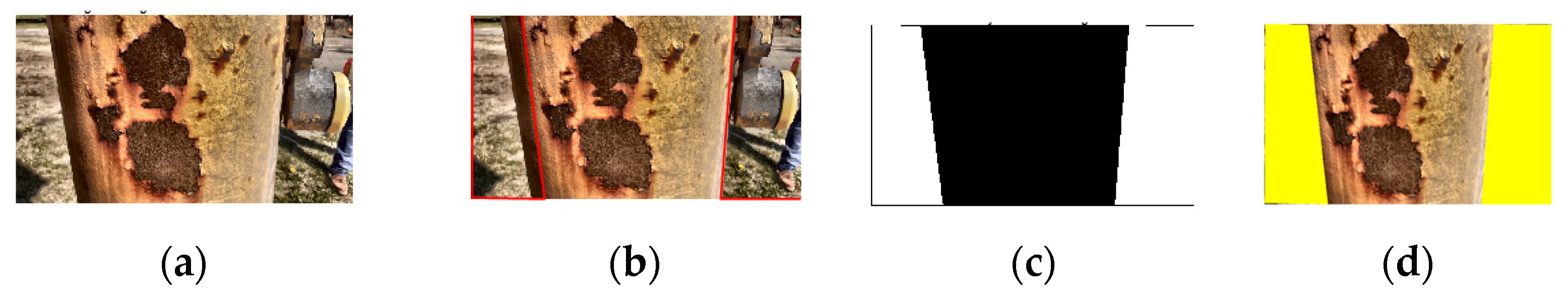

State departments of transportation (DOTs) commonly perform corrosion inspections using visual and physical methods. The primary goal of this research is to develop an automated, adaptive image processing-based algorithm to detect corrosion of in-service ancillary structures under ambient environmental conditions. In addition, the actual onsite conditions of ancillary structures, such as background and natural lighting, were considered while processing the data.
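As a rough illustration of how natural lighting and background could be handled before classification, the following sketch applies a bounded global brightness gain, masks out background pixels given a foreground mask, and converts the remaining pixels from RGB to HSV. The function names, the target mean of 0.5, the gain limit of 2, the choice of HSV as the target color space, and the assumption that a foreground mask is already available are all hypothetical; they are not taken from the proposed methodology.

```python
import colorsys

import numpy as np


def adjust_brightness(rgb: np.ndarray, target_mean: float = 0.5,
                      max_gain: float = 2.0) -> np.ndarray:
    """Scale an 8-bit RGB image toward a target mean brightness, with the gain limited to a range."""
    img = rgb.astype(np.float64) / 255.0
    gain = target_mean / max(float(img.mean()), 1e-6)
    # Keep the modification within a bounded range so dark or bright images are not over-corrected.
    gain = float(np.clip(gain, 1.0 / max_gain, max_gain))
    return np.clip(img * gain, 0.0, 1.0)


def remove_background(img: np.ndarray, foreground: np.ndarray) -> np.ndarray:
    """Mask out background pixels (foreground is an H x W boolean array) by setting them to NaN."""
    out = img.copy()
    out[~foreground] = np.nan
    return out


def to_hsv(img: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 RGB array in [0, 1] to HSV, leaving masked (NaN) pixels as NaN."""
    flat = img.reshape(-1, 3)
    hsv = [colorsys.rgb_to_hsv(*px) if not np.isnan(px).any() else (np.nan,) * 3
           for px in flat]
    return np.array(hsv, dtype=np.float64).reshape(img.shape)
```

Using NaN for removed pixels keeps the image shape intact while ensuring that background pixels cannot be counted as corroded or sound in later steps; the per-pixel `colorsys` loop favors clarity over speed.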

2. Overview of Corrosion Detecting Sensors

There are numerous nondestructive techniques for corrosion detection. Fiber Bragg grating (FBG) sensors have been used to evaluate the corrosion behavior of coated steel [17]. In that study, the performance of FBG sensors was compared with that of electrochemical tests, and two types of coating, polymeric and wire arc-sprayed Al-Zn (aluminum-zinc), were used to verify the FBG sensors' performance. The FBG sensors were shown to perform well in detecting both corrosion and crack initiation. In past studies, researchers found ultrasonic sensors to be among the most effective devices for corrosion detection [18,19], but they require expertise to identify the critical location of corrosion. An optical microscope has also been used to detect hidden corrosion at depths of 0.02 to 0.40 mm in steel plates [18].

Moreover, because these methods require contact with the structural element under investigation, a traffic lane may need to be closed. Corrosion identification using image processing methods can address both limitations. Digital cameras collect the data needed for image processing methods; however, the type and number of sensors used for data collection vary between inspections. Visual and thermal cameras are the most widely used sensors for corrosion detection and structural evaluation because of their availability, even though other sensor types can also perform corrosion assessment and evaluation [12]. In addition, these sensors can be mounted on an unmanned aerial vehicle/system (UAV/UAS) [9], which eliminates the need for lane closures and for auxiliary arrangements such as ladders and other inspection instruments.

6. Conclusions

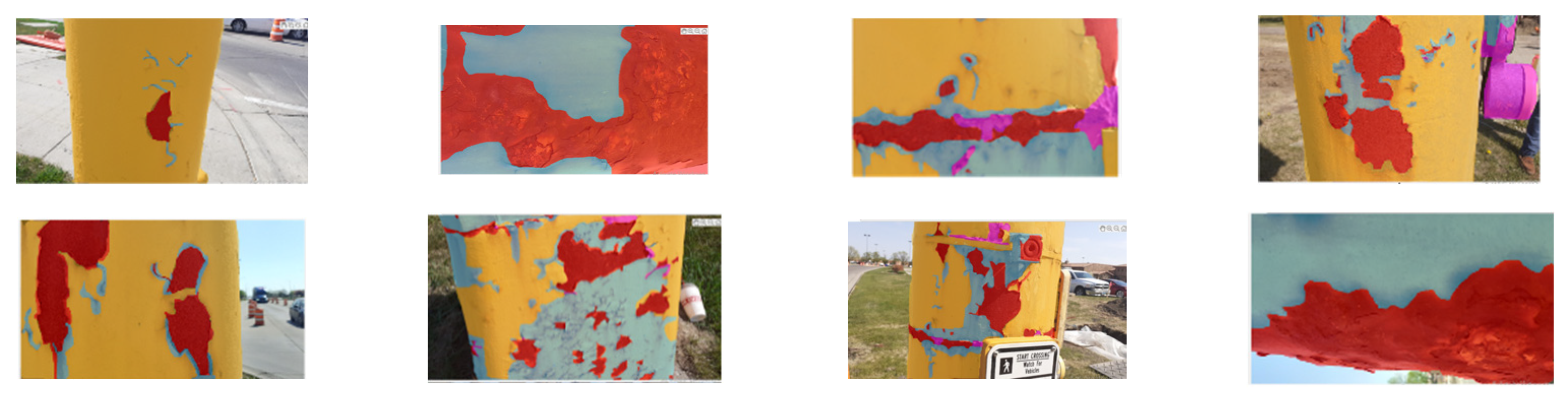

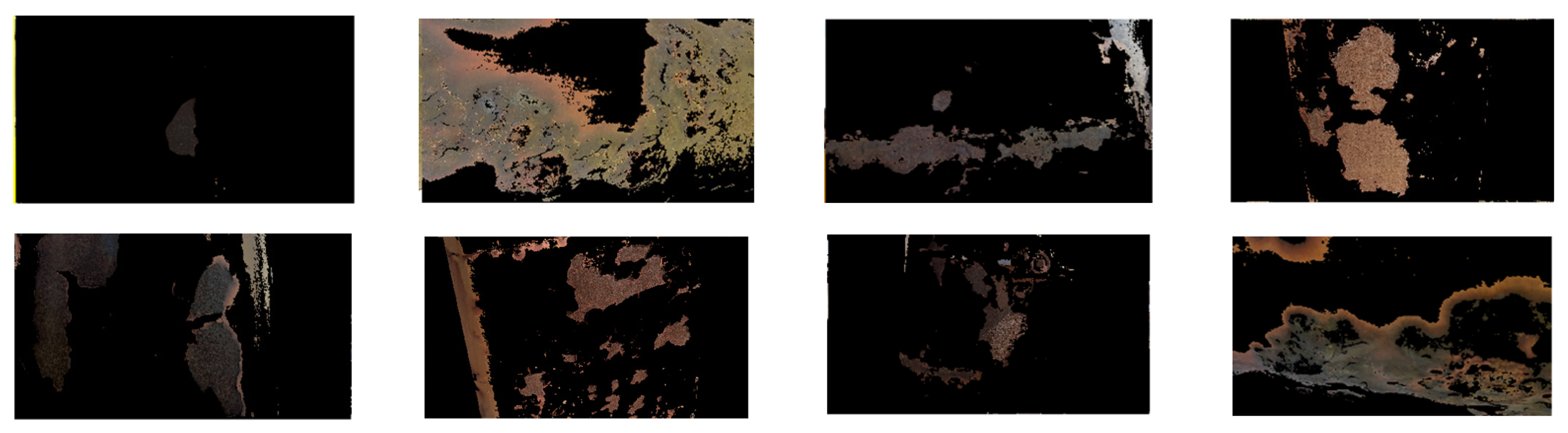

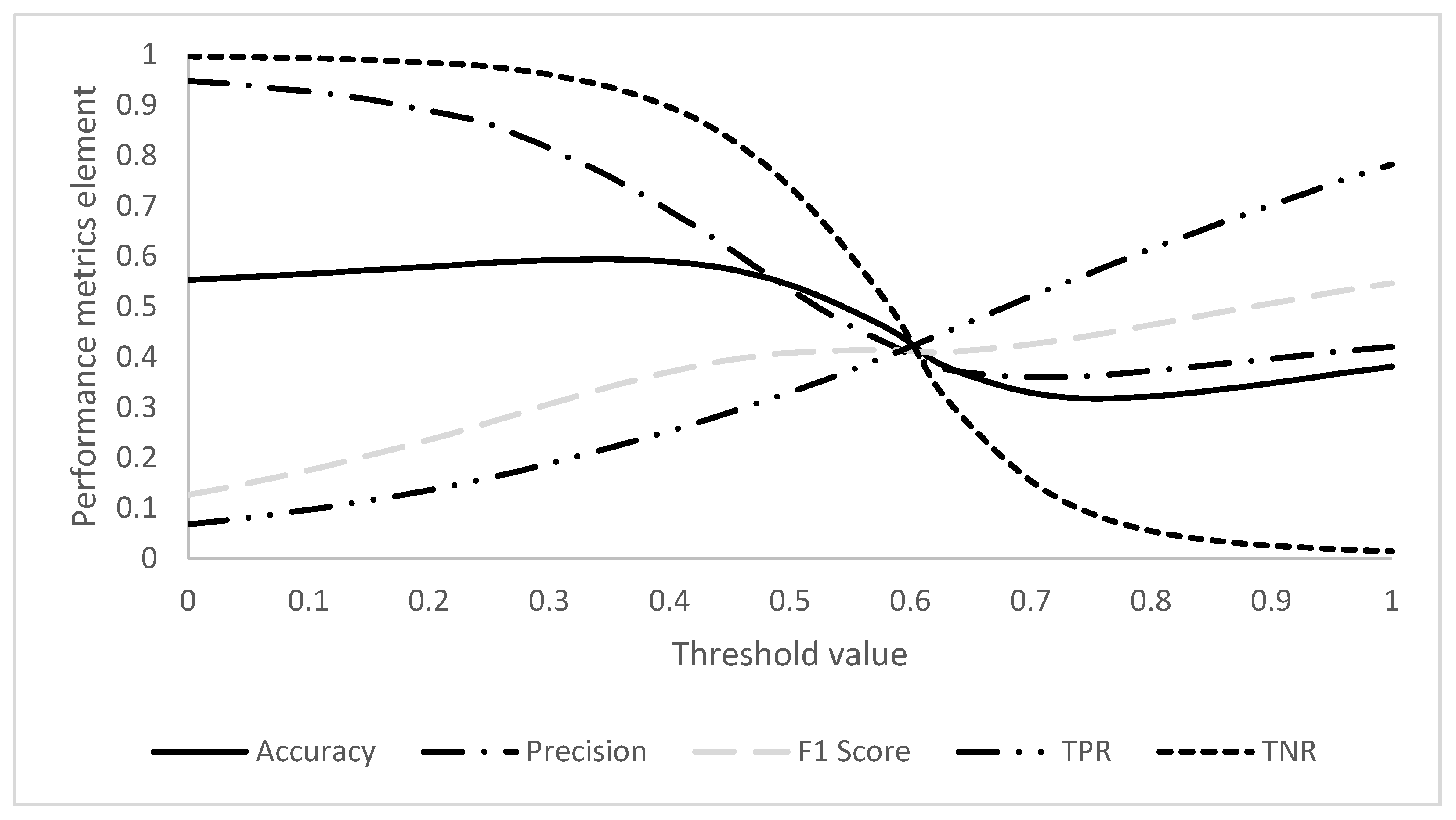

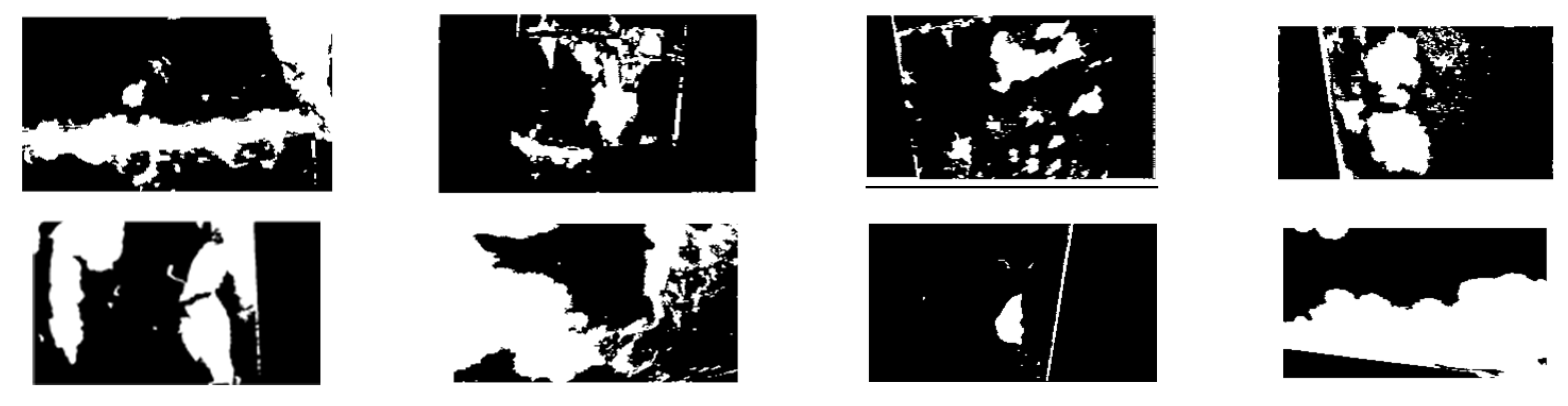

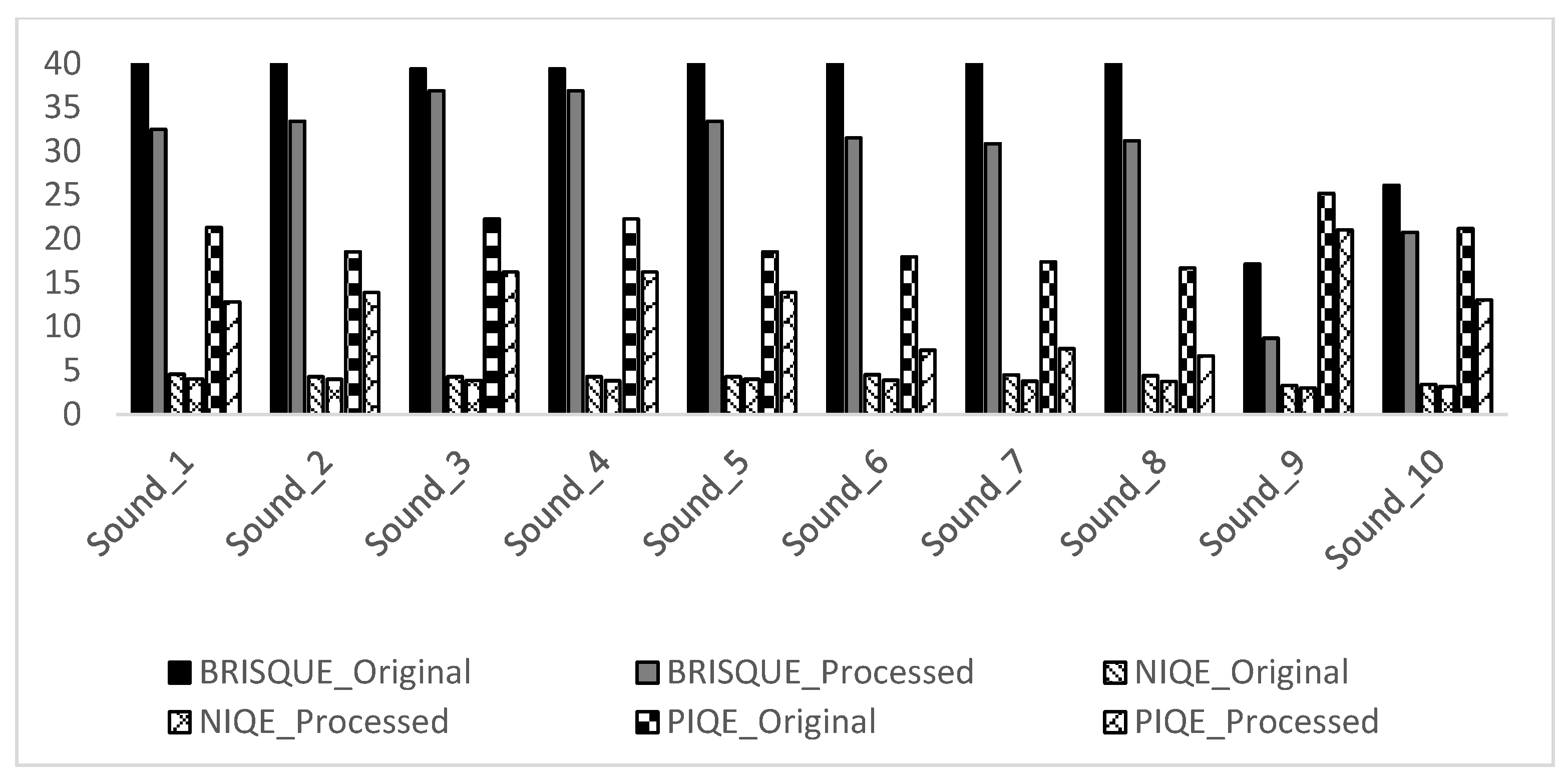

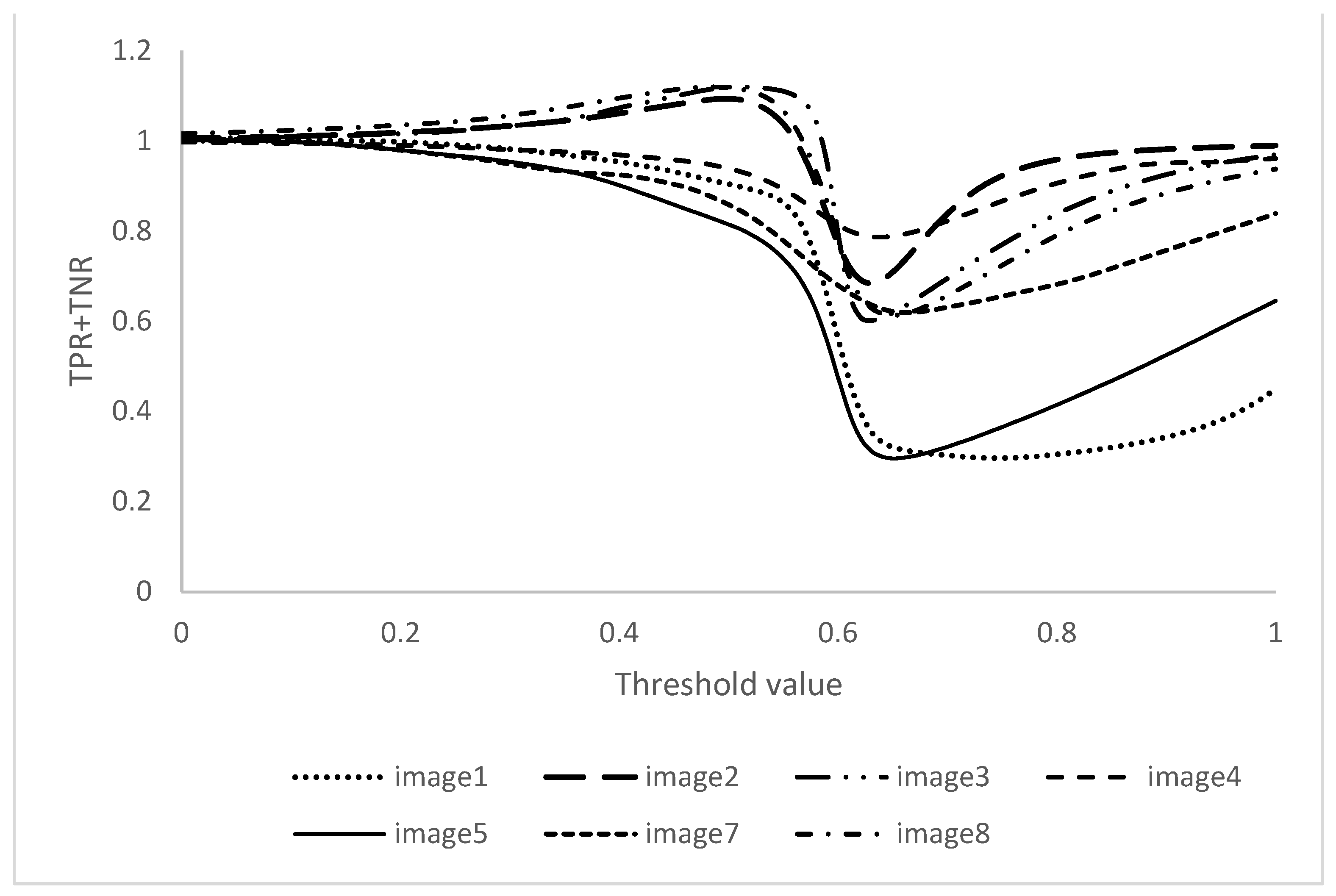

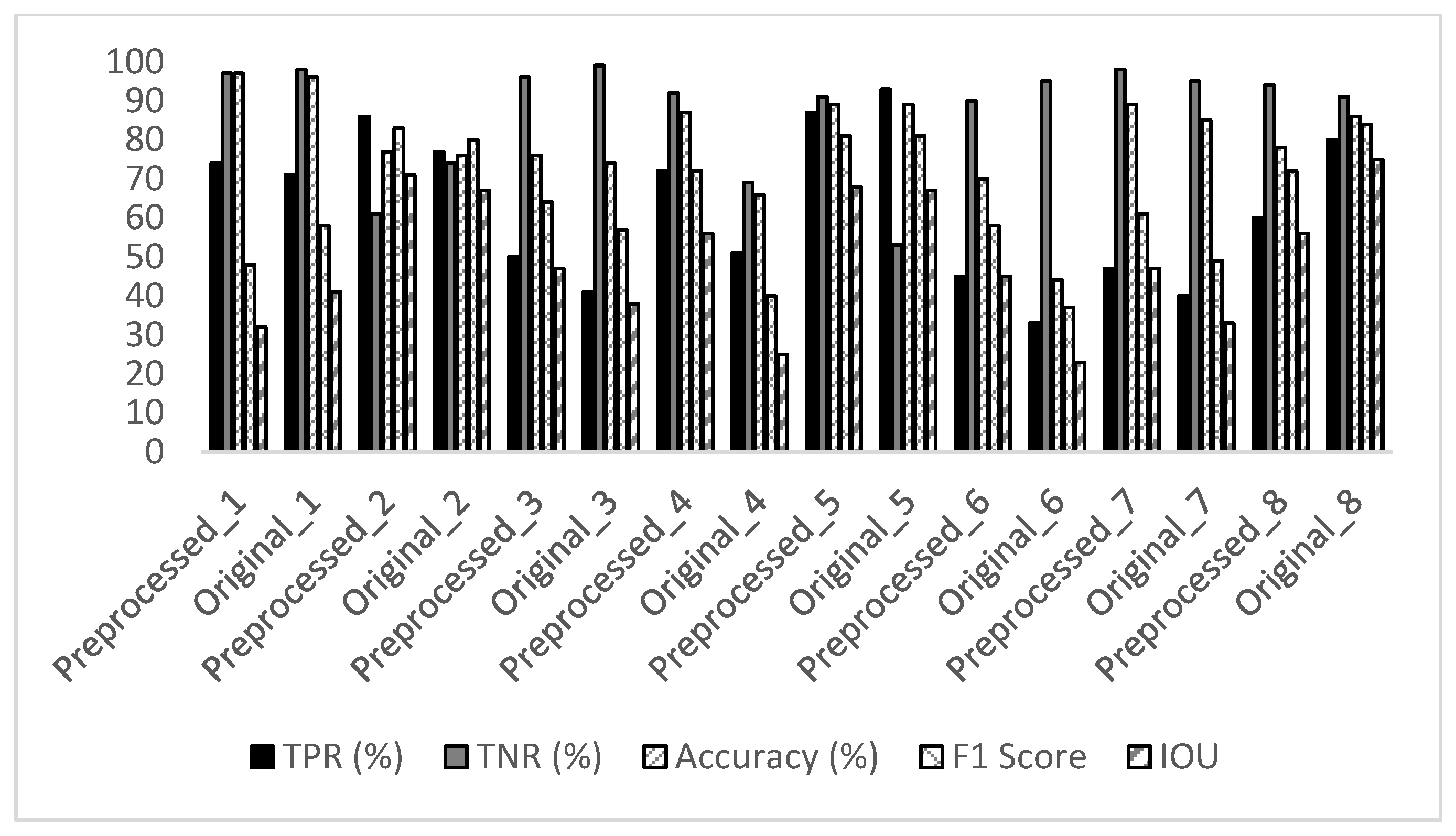

This study presents a novel image-based technique to detect corrosion in ancillary structures. The model was evaluated on sets of images with and without preprocessing. The true positive rate (TPR) improved by up to 20% after preprocessing, background removal, and color space transformation. However, brightness modification should be kept within an optimum range. Among the investigated images, corroded areas in images with uniform illumination and without undesired objects were detected with an average TPR of 70%, whereas for images with uneven illumination the average TPR was around 60%. For thresholding, optimizing the threshold value with respect to the performance metrics gave better results than Otsu's method.
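The comparison between a metric-optimized threshold and Otsu's method can be sketched as follows, assuming each pixel has already been reduced to a single corrosion score in [0, 1] and a ground-truth mask is available. The text does not state which performance metric was optimized, so the F1 score is used here purely as an assumed stand-in; TPR is computed as TP / (TP + FN). Otsu's method is implemented directly from its definition (maximizing between-class variance over histogram cuts) rather than taken from an image processing library, and all function names are hypothetical.

```python
import numpy as np


def otsu_threshold(values: np.ndarray, bins: int = 256) -> float:
    """Otsu's method: pick the histogram cut that maximizes between-class variance."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist).astype(np.float64)   # pixels in bins 0..i
    w1 = w0[-1] - w0                          # pixels in bins i+1..end
    s0 = np.cumsum(hist * centers)            # intensity sum of bins 0..i
    mu0 = np.divide(s0, w0, out=np.zeros_like(s0), where=w0 > 0)
    mu1 = np.divide(s0[-1] - s0, w1, out=np.zeros_like(s0), where=w1 > 0)
    between = w0 * w1 * (mu0 - mu1) ** 2
    # Cutting after bin i puts every value >= edges[i + 1] in the "corroded" class.
    return float(edges[np.argmax(between) + 1])


def confusion(pred: np.ndarray, truth: np.ndarray) -> tuple[int, int, int]:
    """True positives, false positives, and false negatives for boolean masks."""
    tp = int(np.sum(pred & truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    return tp, fp, fn


def tpr(pred: np.ndarray, truth: np.ndarray) -> float:
    """True positive rate: fraction of ground-truth corroded pixels that were detected."""
    tp, _, fn = confusion(pred, truth)
    return tp / (tp + fn) if tp + fn else 0.0


def f1(pred: np.ndarray, truth: np.ndarray) -> float:
    """F1 score (assumed optimization metric for this sketch)."""
    tp, fp, fn = confusion(pred, truth)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def optimized_threshold(values: np.ndarray, truth: np.ndarray,
                        candidates: np.ndarray) -> float:
    """Grid-search the candidate threshold with the highest F1 against ground truth."""
    scores = [f1(values >= t, truth) for t in candidates]
    return float(candidates[int(np.argmax(scores))])
```

Because the grid search is driven by ground truth, it can only be tuned on labeled images, and the resulting threshold would then be reused on new images; Otsu's method needs no labels but optimizes histogram separation rather than detection accuracy, which is one plausible reason a tuned threshold can outperform it.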

The efficacy of the developed model was evaluated in two ways: by comparison with a previous method and by testing on external images. Compared with the previous method, the proposed model correctly detected 23% more corroded pixels in images with background. Qualitative results of the proposed model on the external images are also promising.

The study revealed that several factors, such as the presence of background objects, preprocessing, the color space used for processing, image quality, and threshold values, affect the performance of any conventional image processing model. Several of these shortcomings have been investigated and addressed through the proposed methodology. The results from this study show significant promise for the future adoption of autonomous unmanned aerial systems and artificial intelligence methods for image-based corrosion monitoring and detection in ancillary structures. Limitations of the proposed image-based algorithms include the selection of the optimum threshold value for binarization, generic brightness modification, and the need for background removal. Deep learning semantic segmentation models combined with image processing methods could be considered in future work to develop a robust corrosion detection model that addresses these limitations.