Show Me a Safer Way: Detecting Anomalous Driving Behavior Using Online Traffic Footage

Abstract

1. Introduction

2. Related Work

2.1. Vehicle Detection

2.2. Vehicle Tracking

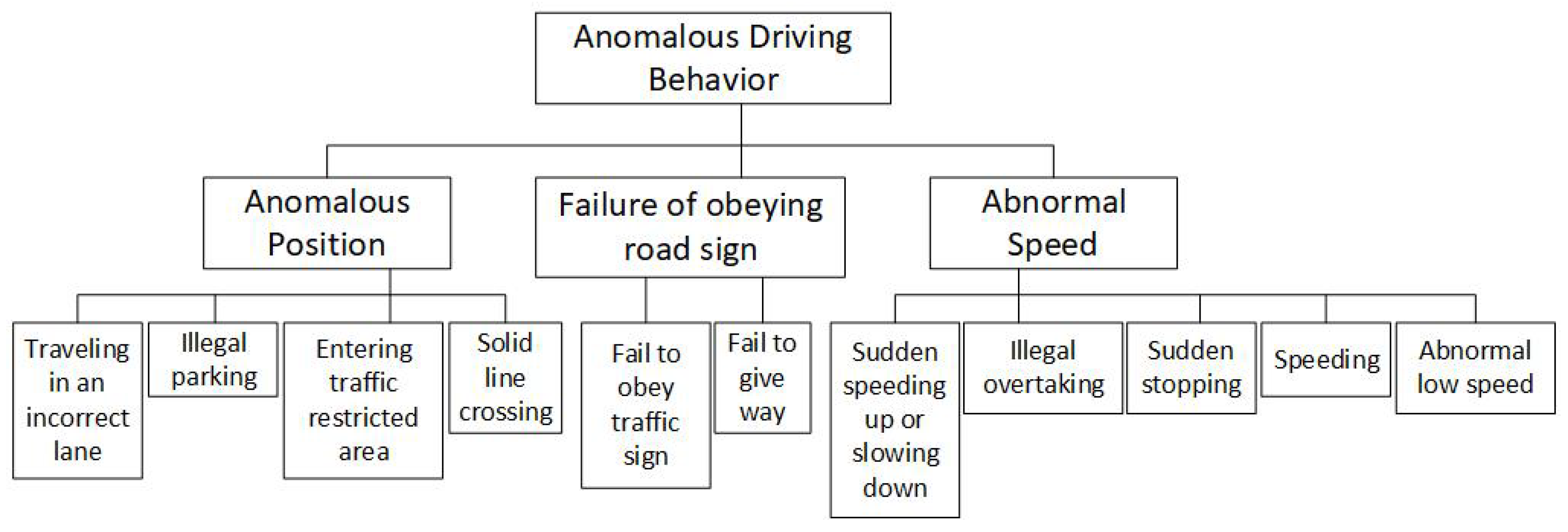

2.3. Behavior Classification

3. Methods

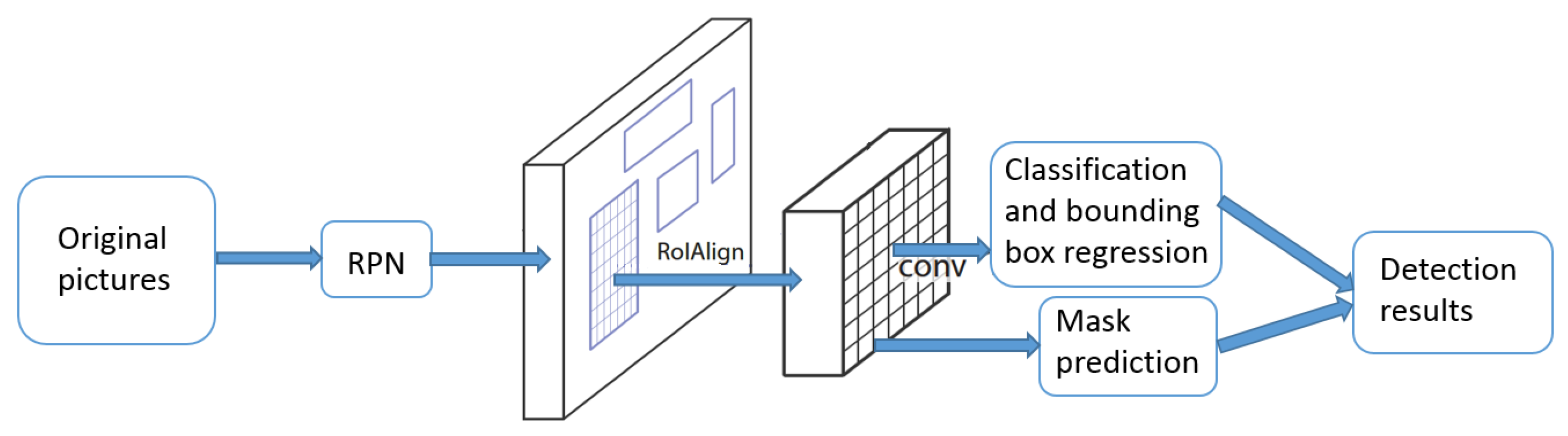

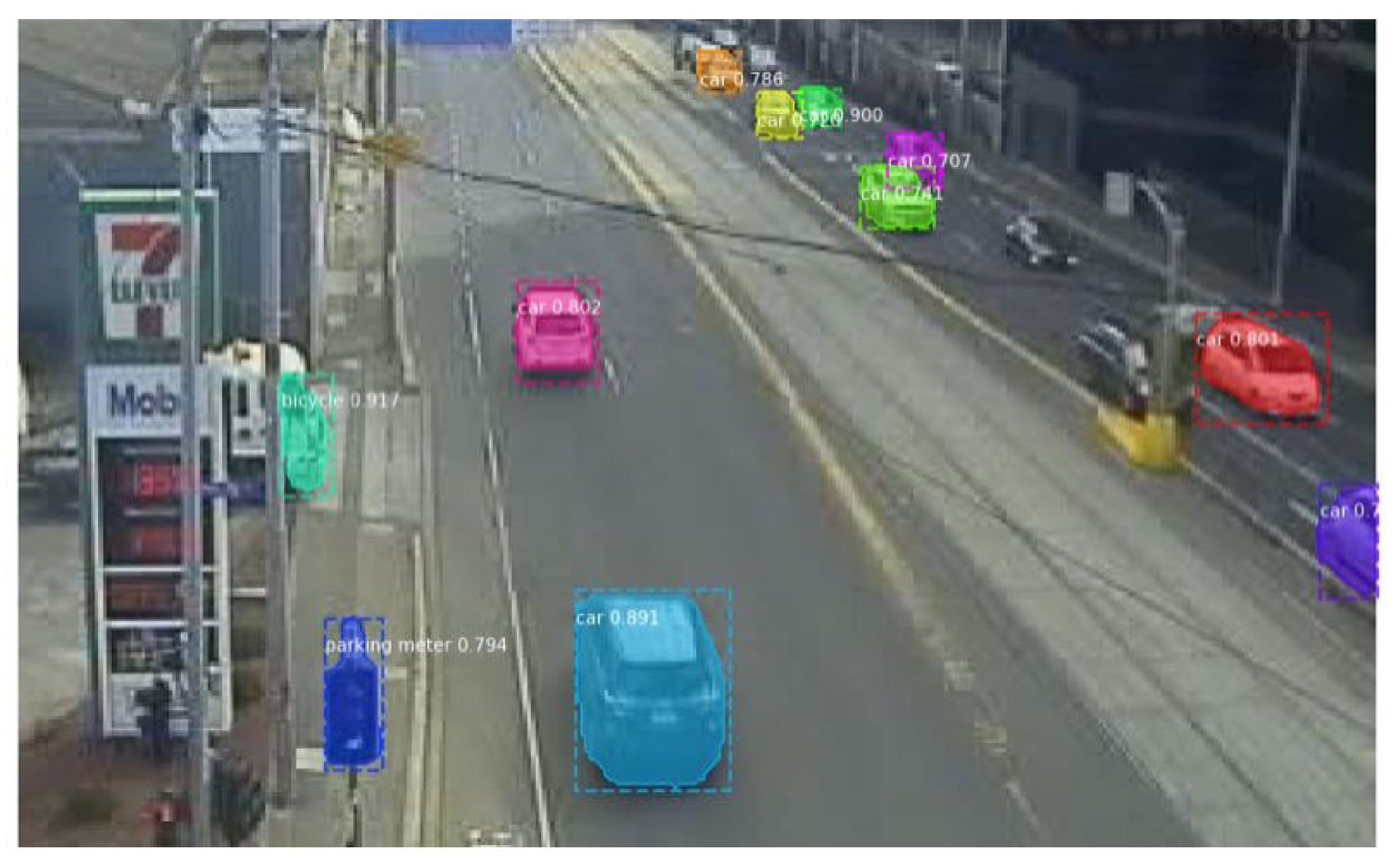

3.1. Vehicle Detection

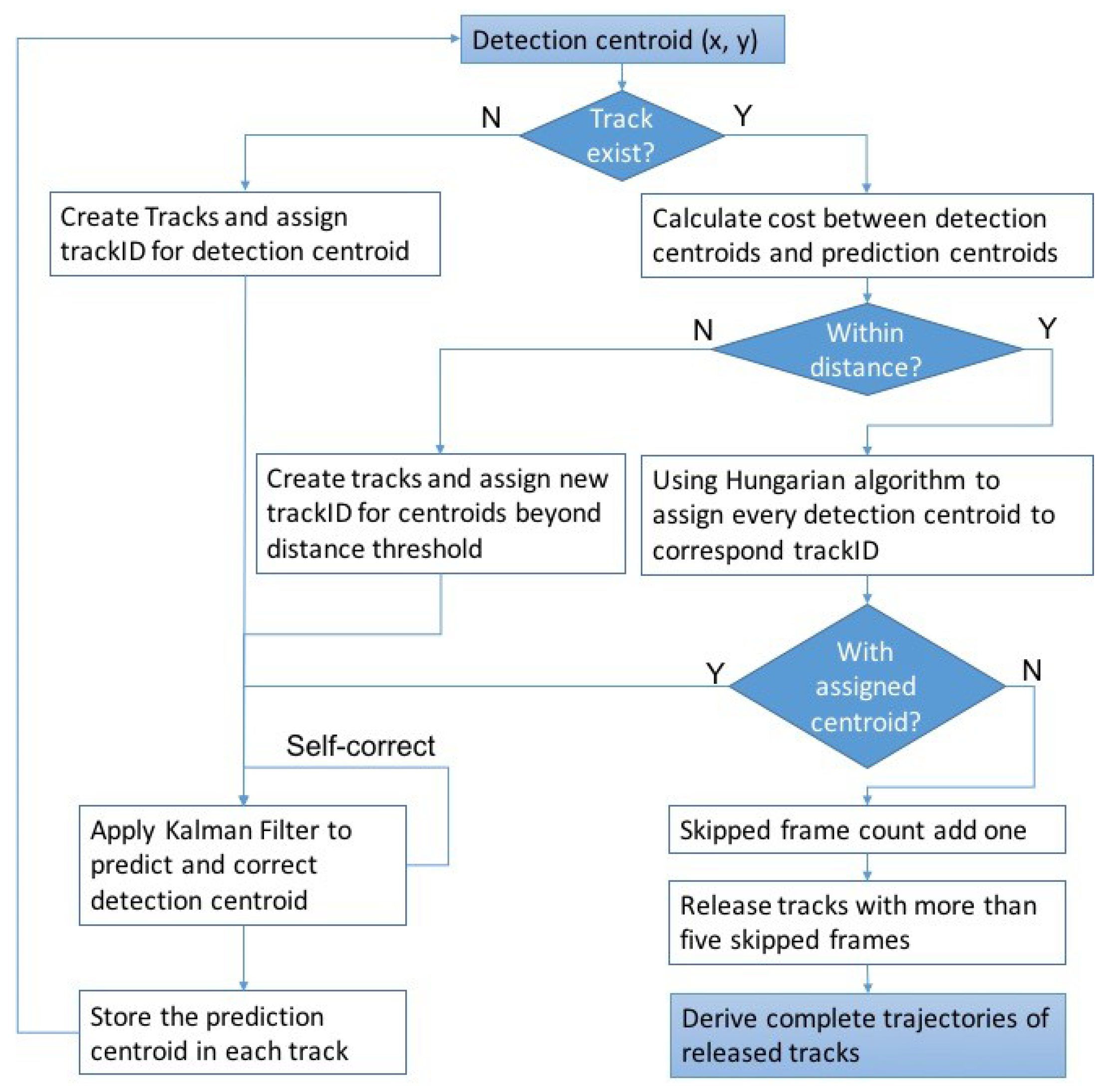

3.2. Vehicle Tracking

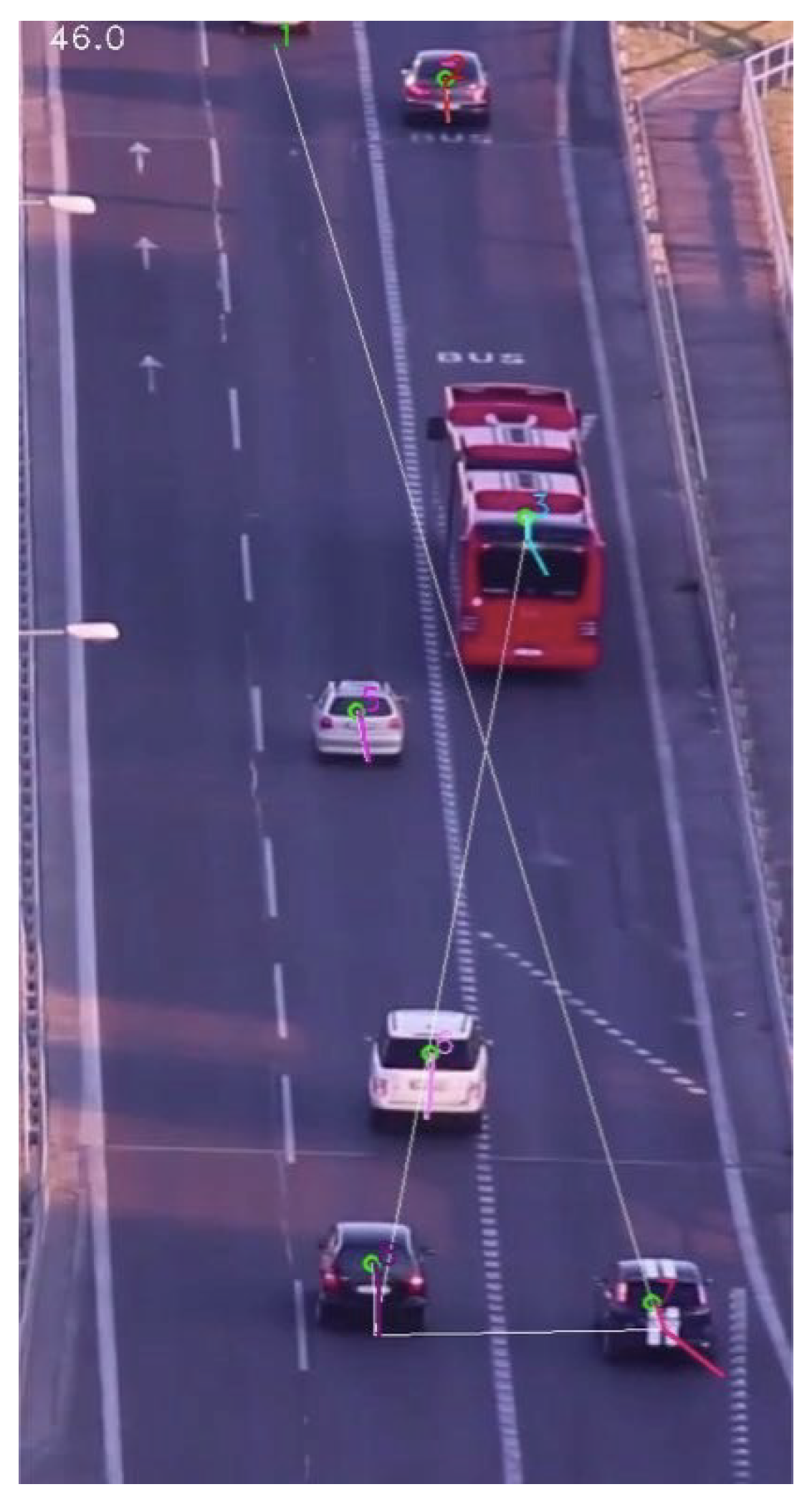

- Newly appearing vehicles are identified, and tracks are created from their detected centroids. When the distances between a detected centroid and the last centroids of all existing tracks exceed a predefined threshold, the detected centroid is considered a newly appearing vehicle.

- The Hungarian algorithm is used to assign the remaining detected centroids to existing tracks by minimizing the total cost between the assigned detected centroids and the predicted centroids of the tracks.

- A Kalman filter is used to predict and correct the detected centroids by estimating the centroid in the next frame from the given centroid in the current frame.

- Existing trajectories are extracted and removed when no vehicle has been assigned to their tracks for five frames (one second), which indicates that the vehicle has moved out of the camera range.
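The association steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the threshold value, the track dictionary layout, and the function names are hypothetical, the predicted centroids are assumed to be supplied by the Kalman filter, and the minimum-cost assignment is found by exhaustive search as a small-scale stand-in for the Hungarian algorithm (both return an assignment with the same minimum total cost, but exhaustive search is only practical for a handful of vehicles).

```python
from itertools import permutations
from math import hypot, inf

NEW_TRACK_DIST = 50.0  # hypothetical gating threshold, in pixels
MAX_MISSES = 5         # frames without a match before a track is closed


def cost(a, b):
    """Euclidean distance between two centroids (x, y)."""
    return hypot(a[0] - b[0], a[1] - b[1])


def split_new_detections(detections, tracks, threshold=NEW_TRACK_DIST):
    """Separate detections that lie far from the last centroid of every track."""
    new, rest = [], []
    for d in detections:
        dists = [cost(d, t["last"]) for t in tracks]
        if not dists or min(dists) > threshold:
            new.append(d)
        else:
            rest.append(d)
    return new, rest


def assign(detections, predictions):
    """Return (detection_index, track_index) pairs with minimum total cost.

    Exhaustive search, used here only as a stand-in for the Hungarian algorithm.
    """
    n_d, n_t = len(detections), len(predictions)
    if n_d == 0 or n_t == 0:
        return []
    best_pairs, best_cost = [], inf
    if n_d <= n_t:
        candidates = (list(enumerate(p)) for p in permutations(range(n_t), n_d))
    else:
        candidates = ([(i, j) for j, i in enumerate(p)]
                      for p in permutations(range(n_d), n_t))
    for pairs in candidates:
        total = sum(cost(detections[i], predictions[j]) for i, j in pairs)
        if total < best_cost:
            best_pairs, best_cost = pairs, total
    return best_pairs


def step(tracks, detections, predictions):
    """Process one frame; predictions[j] is the predicted centroid of tracks[j]."""
    new, rest = split_new_detections(detections, tracks)
    matched = set()
    for i, j in assign(rest, predictions):
        tracks[j]["last"] = rest[i]
        tracks[j]["misses"] = 0
        matched.add(j)
    for j, t in enumerate(tracks):
        if j not in matched:
            t["misses"] += 1
    finished = [t for t in tracks if t["misses"] >= MAX_MISSES]
    active = [t for t in tracks if t["misses"] < MAX_MISSES]
    active += [{"last": d, "misses": 0} for d in new]
    return active, finished
```

In a production setting the exhaustive search would be replaced by a polynomial-time solver for the assignment problem; the surrounding gating and track-removal logic would stay the same.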

3.3. Behavior Classification

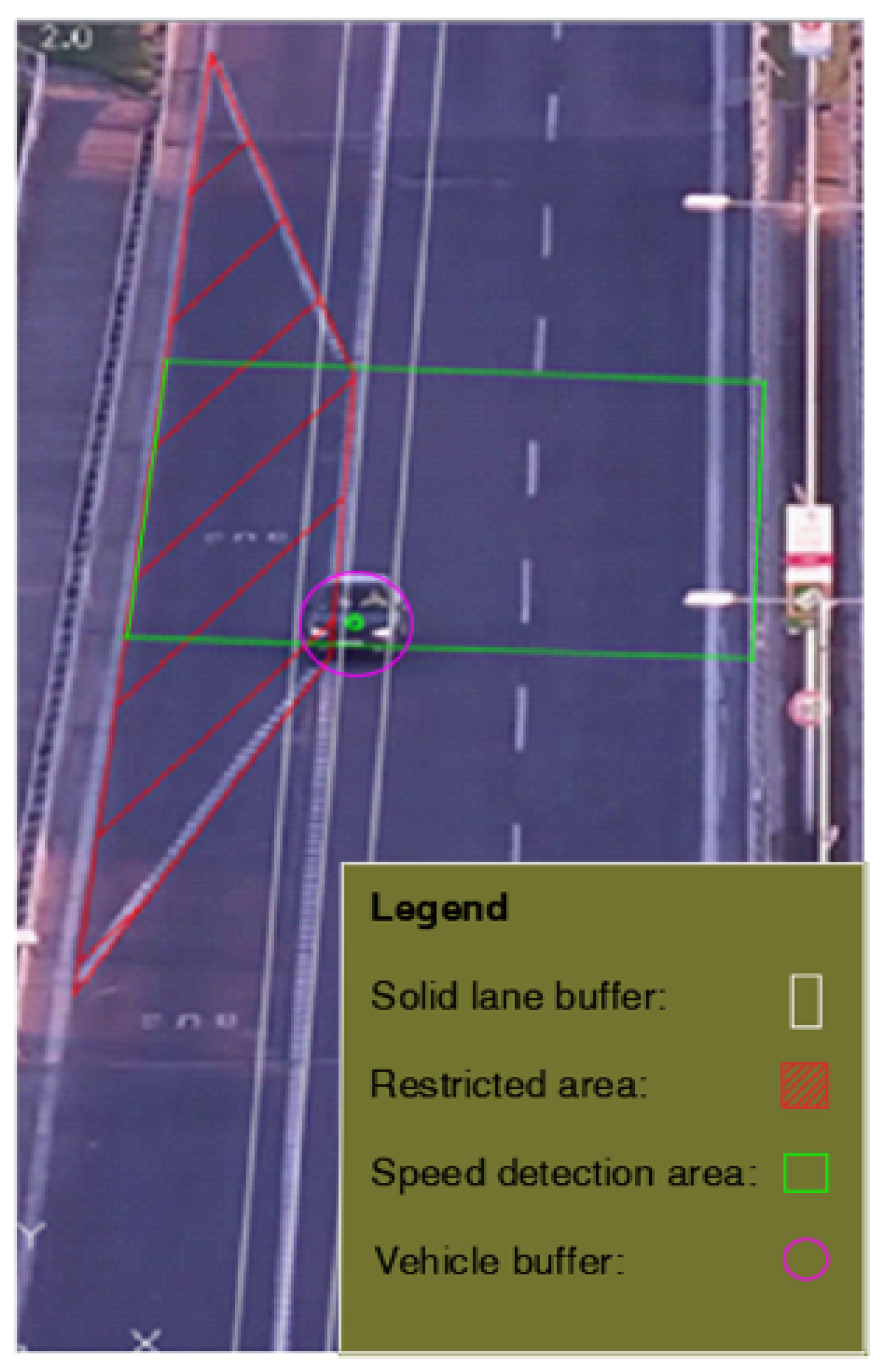

- Speeding: Identified by comparing the speed limit with the detected average velocity of the observed vehicle while it travels through a specified region. As shown in Figure 5, two green boundary lines are set for recording where and when a vehicle enters and leaves the region. The position and frame number of a vehicle are recorded once the vehicle passes the upper green line or the lower green line, and the time is recorded correspondingly. To correct the perspective effect of a traffic camera, a projective transformation [23] is used to map every point in the footage to its real-world coordinates on the ground plane. For speed estimation, Equation (1) is used:

  $$v = \frac{s}{t} = \frac{\sqrt{(x_j - x_i)^2 + (y_j - y_i)^2}}{t_j - t_i} \qquad (1)$$

  where $v$ is the speed, $s$ is the distance that the vehicle traveled between the upper green line and the lower green line, $(x_i, y_i)$ and $(x_j, y_j)$ are the centroid coordinates of the vehicle on the ground plane, and $t_i$ and $t_j$ are the times at which the vehicle enters the region in the $i$th frame and leaves it in the $j$th frame, so that $t = t_j - t_i$ is the travel time.

  To detect anomalous speed above or below the speed limit, we define an uncertainty margin for the estimated speed. The uncertainty of the estimated speed is calculated based on the theory of variance propagation, using the uncertainty of the traveled distance $s$ and the travel time $t$. Assuming that distance and time are mutually independent, we have:

  $$\sigma_v^2 = \left(\frac{1}{t}\right)^2 \sigma_s^2 + \left(\frac{s}{t^2}\right)^2 \sigma_t^2$$

  where $\sigma_s$ and $\sigma_t$ are the standard deviations of the traveled distance and the travel time, respectively, which are obtained from the position of the detected vehicle in multiple consecutive frames. Specifically, the standard deviation of distance is computed by measuring the distance traveled between two consecutive frames across $n$ frames. For the standard deviation of travel time, a constant value corresponding to the time interval between consecutive frames is assumed.

- Solid-line crossing: Recognized by establishing whether the trajectory of a detected vehicle intersects a buffer created around the solid line (two white lines in Figure 5). If the centroid of the detected vehicle is within the created buffer, the vehicle is determined to have crossed the solid line.

- Entering traffic-restricted areas: This behavior is identified by the overlapping area between the traffic-restricted area shown as the hatched red polygon in Figure 5, and a circular buffer with a predefined radius created around the vehicle centroid. If the overlapping area is larger than a specific percentage of the circular buffer area, i.e., approximately at least half of the car is within the traffic-restricted area, the vehicle is determined to have entered the traffic-restricted area.
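The speed estimate and its uncertainty can be illustrated with a short sketch. It assumes a known 3x3 projective transformation `H` from pixel to ground-plane coordinates (in metres) and times in seconds; the function names, the example matrix, and the decision rule in `is_speeding` (flagging a vehicle only when its speed exceeds the limit by more than one standard deviation) are illustrative assumptions rather than details confirmed by the text.

```python
from math import hypot, sqrt


def to_ground(H, u, v):
    """Map pixel (u, v) to ground-plane coordinates with a 3x3 homography H."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    return x / w, y / w


def speed_with_uncertainty(p_i, p_j, t_i, t_j, sigma_s, sigma_t):
    """Return (v, sigma_v) from ground-plane points and entry/exit times.

    Variance propagation for v = s / t with independent s and t:
    sigma_v^2 = (1/t)^2 * sigma_s^2 + (s/t^2)^2 * sigma_t^2
    """
    s = hypot(p_j[0] - p_i[0], p_j[1] - p_i[1])  # traveled distance
    t = t_j - t_i                                # travel time
    v = s / t
    sigma_v = sqrt((sigma_s / t) ** 2 + (s * sigma_t / t ** 2) ** 2)
    return v, sigma_v


def is_speeding(v, sigma_v, limit):
    """Assumed rule: speeding only if v exceeds the limit beyond one sigma."""
    return v - sigma_v > limit


# Hypothetical example: 0.1 m per pixel, no perspective distortion.
H = [[0.1, 0.0, 0.0], [0.0, 0.1, 0.0], [0.0, 0.0, 1.0]]
p_enter = to_ground(H, 100, 200)
p_leave = to_ground(H, 100, 500)
v, sigma_v = speed_with_uncertainty(p_enter, p_leave, 0.0, 2.0, 1.0, 0.2)
v_kmh, sigma_kmh = v * 3.6, sigma_v * 3.6  # convert m/s to km/h
```

Note that the time term grows with the square of the traveled distance divided by the fourth power of the travel time, so short crossing times amplify the timing uncertainty.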

4. Experiments

4.1. Experiment Setup

- For speeding, we considered an uncertainty margin $v \pm \sigma_v$, where $\sigma_v$ is the standard deviation of the estimated speed, which corresponds to a confidence interval of 68%.

- For entering traffic-restricted areas, if the overlap area was larger than 50% of the circular buffer area, the vehicle was determined to have entered the traffic-restricted area.
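The 50% overlap rule can be sketched as below. This is an illustration under assumptions, not the authors' code: the overlap between the circular buffer and the restricted polygon is approximated by sampling a regular grid of points inside the circle and testing each with ray casting, and the function names and the `steps` parameter are hypothetical.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test; polygon is a list of (x, y) vertices in order."""
    inside = False
    n = len(polygon)
    for k in range(n):
        x1, y1 = polygon[k]
        x2, y2 = polygon[(k + 1) % n]
        # Does a horizontal ray from (x, y) towards +x cross this edge?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def overlap_fraction(center, radius, polygon, steps=100):
    """Approximate the share of the circular buffer lying inside the polygon."""
    cx, cy = center
    in_circle = in_both = 0
    cell = 2.0 * radius / steps
    for a in range(steps):
        for b in range(steps):
            # Sample the centre of each grid cell in the bounding square.
            x = cx - radius + (a + 0.5) * cell
            y = cy - radius + (b + 0.5) * cell
            if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                in_circle += 1
                if point_in_polygon(x, y, polygon):
                    in_both += 1
    return in_both / in_circle


def entered_restricted_area(center, radius, polygon, min_fraction=0.5):
    """Apply the overlap threshold to a vehicle centroid."""
    return overlap_fraction(center, radius, polygon) > min_fraction
```

A geometry library that computes exact polygon intersections would avoid the sampling error; the grid approach is used here only to keep the sketch free of dependencies.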

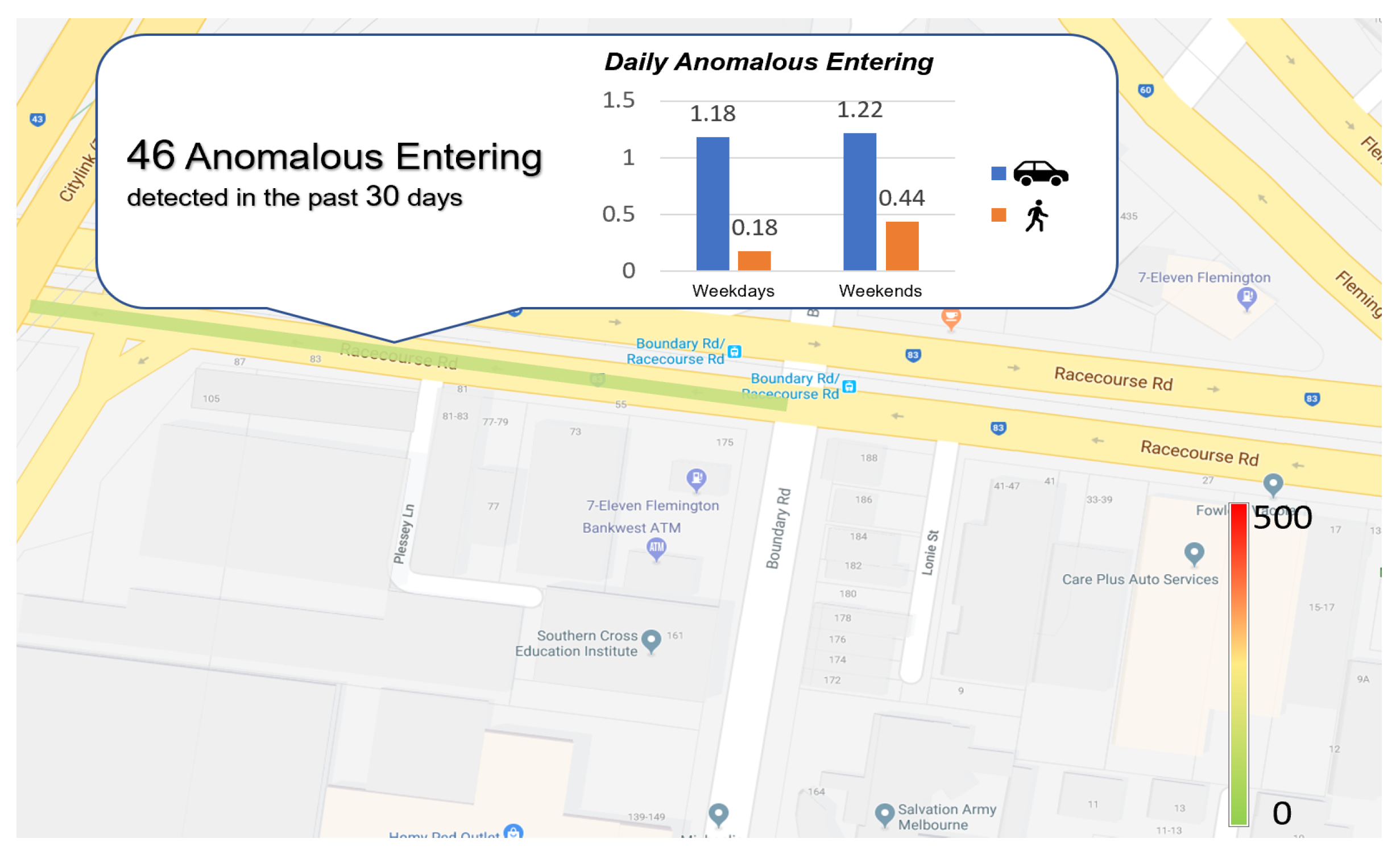

- Dataset 1: Our first dataset consists of snapshots from an online traffic camera at the intersection of Racecourse Rd. and Boundary Rd. (RB) in Melbourne, published by VicRoads. Since the snapshot used in this study is refreshed only every 120 s, our system could detect only certain anomalous behaviors, for example, entering traffic-restricted areas. Hence, this dataset was used only for the detection of vehicles driving on the bicycle lane.

- Dataset 2: Considering the low sampling rate of Dataset 1, we used recorded traffic footage that was sampled more frequently as Dataset 2. It covers two highways, Panónska cesta (PA1 & PA2, https://www.youtube.com/watch?v=JmFjluIQGJw) and the M7 Clem Jones Tunnel (M7), and the intersection of Huangshan Rd and Tianzhi Rd in Hefei, China (CN, http://www.openits.cn/openData2/602.jhtml). This dataset contained three videos with lengths of 2, 4, and 4 min, respectively, and a total of 3112 frames. Given the higher resolution, we defined anomalous driving behavior as solid-line crossing, entering traffic-restricted areas, and speeding. For the first two categories, we manually annotated the images to establish ground truth for the evaluation of the detected anomalous driving behaviors.

4.2. Results

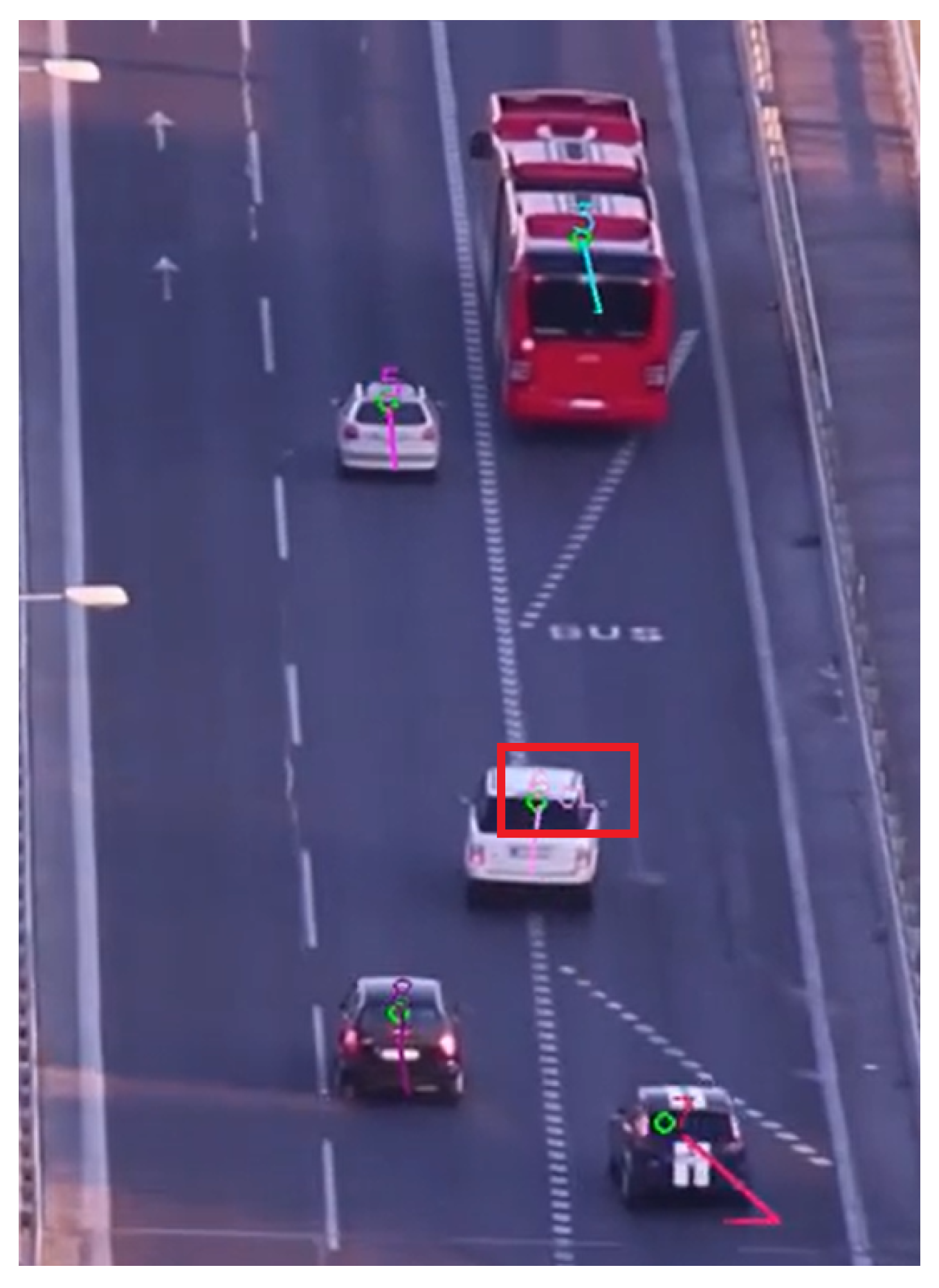

4.2.1. Solid-Line Crossing and Entering Traffic-Restricted Areas

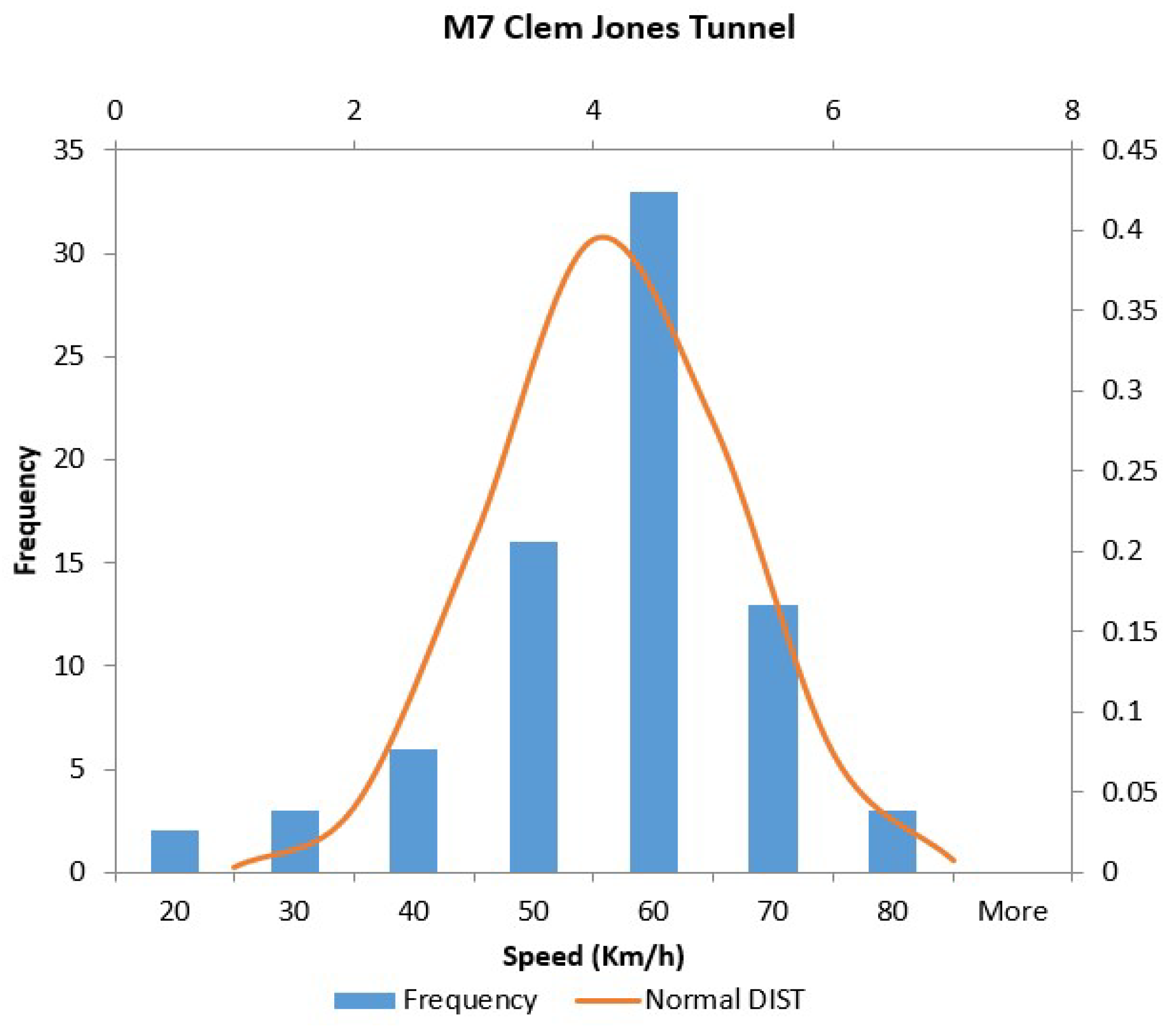

4.2.2. Speeding Behavior

5. Discussion

5.1. Combination of Hungarian Algorithm and Kalman Filter

5.2. Integration and Visualization

5.3. High Adaptability

5.4. Limitations

- In scenarios with high traffic volume where the movement of vehicles is not continuous, the Kalman filter may not be able to correctly track the vehicles due to its simple motion model.

- The location and angle of the camera might cause significant distortion and occlusion in the images and hence affect detection and tracking performance. Theoretically, a bird’s-eye view is the most appropriate angle for the camera as it minimizes occlusion. Figure 10 shows an example of a low camera angle where the proposed system cannot perform well due to occlusion.

- In our system, driving behaviors are classified based on simple rules and thresholds, and accuracy may be affected by the threshold setting, which is experimental and subjective. The integration of clustering and classification methods can be used to automatically classify different driving behaviors, thus achieving higher accuracy and efficiency. Such integration has already been proposed to solve the problem of personalized driver-workload inference in Reference [24] and personalized driving behavior prediction in Reference [25].

- System accuracy heavily relies on the accuracy of Mask R-CNN detection. In our current implementation, we used the pretrained model of MS COCO [22]. Fine tuning of this pretrained network using local traffic footage can improve vehicle-detection accuracy. Further improvement can be achieved by incremental learning of the detection model as the system operates and more data become available. Data collected over a long period of time can also be used to detect changes to road rules. For example, a change of speed limit can be inferred from the statistical distribution of the measured speeds for a large number of vehicles over a long period of time.

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Lakhina, A.; Crovella, M.; Diot, C. Diagnosing network-wide traffic anomalies. ACM SIGCOMM Comput. Commun. Rev. 2004, 34, 219–230.

- Celik, T.; Kusetogullari, H. Solar-powered automated road surveillance system for speed violation detection. IEEE Trans. Ind. Electron. 2010, 57, 3216–3227.

- Wang, Y.K.; Fan, C.T.; Chen, J.F. Traffic camera anomaly detection. In Proceedings of the 2014 22nd International Conference on Pattern Recognition (ICPR), Stockholm, Sweden, 24–28 August 2014; pp. 4642–4647.

- Figueiredo, L.; Jesus, I.; Machado, J.T.; Ferreira, J.R.; De Carvalho, J.M. Towards the development of intelligent transportation systems. In Proceedings of the 2001 IEEE Intelligent Transportation Systems, Proceedings (Cat. No.01TH8585), Oakland, CA, USA, 25–29 August 2001; pp. 1206–1211.

- Sun, Z.; Bebis, G.; Miller, R. On-road vehicle detection: A review. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 694–711.

- Yang, Z.; Pun-Cheng, L.S. Vehicle Detection in Intelligent Transportation Systems and its Applications under Varying Environments: A Review. Image Vis. Comput. 2017, 69, 143–154.

- Dickmanns, E.D. The development of machine vision for road vehicles in the last decade. In Proceedings of the 2002 Intelligent Vehicle Symposium, Versailles, France, 17–21 June 2002; Volume 1, pp. 268–281.

- Sun, Z.; Bebis, G.; Miller, R. Monocular precrash vehicle detection: features and classifiers. IEEE Trans. Image Process. 2006, 15, 2019–2034.

- Sivaraman, S.; Trivedi, M.M. Looking at vehicles on the road: A survey of vision-based vehicle detection, tracking, and behavior analysis. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1773–1795.

- Song, H.S.; Lu, S.N.; Ma, X.; Yang, Y.; Liu, X.Q.; Zhang, P. Vehicle behavior analysis using target motion trajectories. IEEE Trans. Veh. Technol. 2014, 63, 3580–3591.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Zhang, W.; Fang, X.; Yang, X.; Wu, Q.J. Spatiotemporal Gaussian mixture model to detect moving objects in dynamic scenes. J. Electron. Imaging 2007, 16, 023013.

- Yang, Y.; Gao, X.; Yang, G. Study the method of vehicle license locating based on color segmentation. Procedia Eng. 2011, 15, 1324–1329.

- Wang, G.; Xiao, D.; Gu, J. Review on vehicle detection based on video for traffic surveillance. In Proceedings of the IEEE International Conference on Automation and Logistics, Qingdao, China, 1–3 September 2008; pp. 2961–2966.

- Arróspide, J.; Salgado, L.; Camplani, M. Image-based on-road vehicle detection using cost-effective histograms of oriented gradients. J. Vis. Commun. Image Represent. 2013, 24, 1182–1190.

- Ciresan, D.C.; Meier, U.; Masci, J.; Maria Gambardella, L.; Schmidhuber, J. Flexible, high performance convolutional neural networks for image classification. In Proceedings of the IJCAI Proceedings-International Joint Conference on Artificial Intelligence, Barcelona, Spain, 16–22 July 2011; Volume 22, pp. 1237–1242.

- Fan, Q.; Brown, L.; Smith, J. A closer look at Faster R-CNN for vehicle detection. In Proceedings of the 2016 Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 124–129.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

- Yokoyama, M.; Poggio, T. A contour-based moving object detection and tracking. In Proceedings of the 2nd Joint IEEE International Workshop on Visual Surveillance and Performance Evaluation of Tracking and Surveillance, Beijing, China, 15–16 October 2005; pp. 271–276.

- Mithun, N.C.; Howlader, T.; Rahman, S.M. Video-based tracking of vehicles using multiple time-spatial images. Expert Syst. Appl. 2016, 62, 17–31.

- Kuhn, H.W. The Hungarian method for the assignment problem. Naval Res. Logist. Q. 1955, 2, 83–97.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 740–755.

- Coifman, B.; Beymer, D.; McLauchlan, P.; Malik, J. A real-time computer vision system for vehicle tracking and traffic surveillance. Transp. Res. Part C Emerg. Technol. 1998, 6, 271–288.

- Yi, D.; Su, J.; Liu, C.; Chen, W.H. Personalized driver workload inference by learning from vehicle related measurements. IEEE Trans. Syst. Man Cybern. Syst. 2017, 49, 159–168.

- Yi, D.; Su, J.; Liu, C.; Chen, W.H. Trajectory clustering aided personalized driver intention prediction for intelligent vehicles. IEEE Trans. Ind. Inform. 2018.

| Behavior Class | Recall | Precision |
|---|---|---|
| Solid-line crossing detection | 0.889 | 0.865 |
| Entering-restricted-areas detection | 0.730 | 0.964 |

| Indicators | Dataset | With Kalman Filter | Without Kalman Filter |
|---|---|---|---|
| Mean Speed (km/h) | Dataset 2-PA1 | 56.66 | 161.98 |
| Mean Speed (km/h) | Dataset 2-PA2 | 62.46 | 57.16 |
| Speed limit within 68% confidence interval (km/h) | Dataset 2-PA1 | 68.39 | 85.07 |
| Speed limit within 68% confidence interval (km/h) | Dataset 2-PA2 | 64.24 | 63.23 |
| Number of speeding behaviors | Dataset 2-PA1 | 3 | 8 |
| Number of speeding behaviors | Dataset 2-PA2 | 10 | 25 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zheng, X.; Wu, F.; Chen, W.; Naghizade, E.; Khoshelham, K. Show Me a Safer Way: Detecting Anomalous Driving Behavior Using Online Traffic Footage. Infrastructures 2019, 4, 22. https://doi.org/10.3390/infrastructures4020022