Adaptive Energy Management Strategy for Hybrid Electric Vehicles in Dynamic Environments Based on Reinforcement Learning

Abstract

1. Introduction

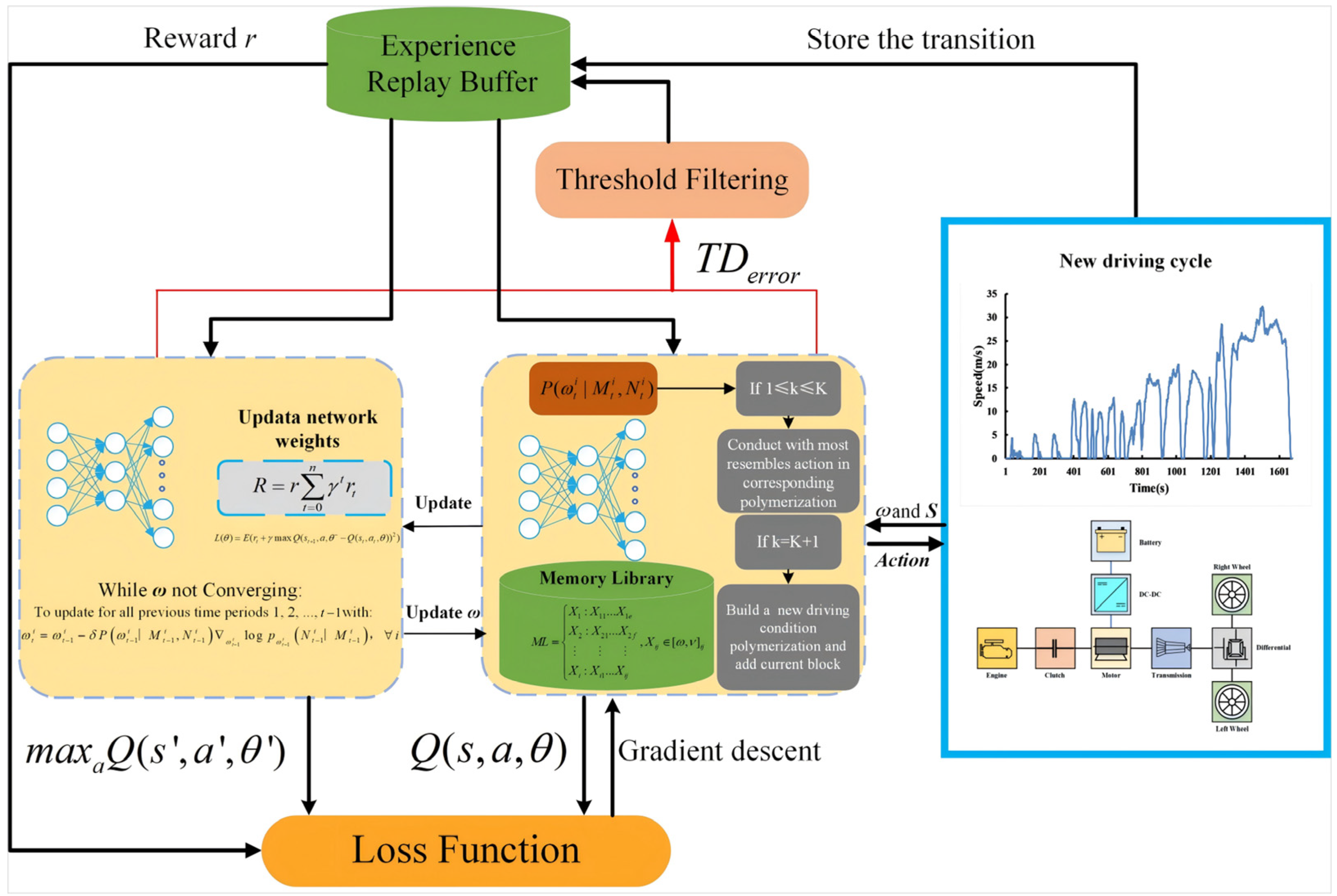

- A memory library (ML) of specific actions for different driving condition scenarios is developed for the reinforcement learning agent to draw on.

- The identification parameters of the driving condition blocks and the control parameters in the memory library are derived through Dirichlet clustering. During online operation, the control parameters are adjusted by the expectation maximization (EM) algorithm, so the agent can keep building and refining the memory library while retaining previously acquired knowledge.

- The proposed adaptive learning strategy in dynamic environment (ALDE) algorithm enables the agent to adapt to changing environments. As the algorithm runs, the agent continuously learns and improves its ability to handle both normal and extreme driving conditions.
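
The memory-library idea in the contributions above can be illustrated with a minimal sketch. It is not the authors' implementation: the class names, the scalar engine-power action, the Gaussian similarity, and the values of `alpha`, `sigma` and `new_likelihood` are all assumptions made for illustration. Cluster assignment uses a Chinese-restaurant-process prior with a deterministic most-probable choice, and a simple running-mean centroid update stands in for the EM refinement.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Cluster:
    centroid: list      # mean feature vector of the blocks assigned so far
    action_kw: float    # stored engine-power action (hypothetical scalar)
    count: int = 1      # number of driving condition blocks in the cluster


@dataclass
class MemoryLibrary:
    alpha: float = 1.0            # CRP concentration parameter (assumed)
    sigma: float = 1.0            # Gaussian width for similarity (assumed)
    new_likelihood: float = 0.05  # base likelihood of a new cluster (assumed)
    clusters: list = field(default_factory=list)

    def posterior(self, x):
        """Normalised CRP prior times likelihood; last entry = new cluster."""
        n = sum(c.count for c in self.clusters)
        weights = []
        for c in self.clusters:
            d2 = sum((a - b) ** 2 for a, b in zip(x, c.centroid))
            like = math.exp(-d2 / (2.0 * self.sigma ** 2))
            weights.append(c.count / (n + self.alpha) * like)
        weights.append(self.alpha / (n + self.alpha) * self.new_likelihood)
        total = sum(weights)
        return [w / total for w in weights]

    def assign(self, x, default_action_kw):
        """Assign block x to its most probable cluster; return (index, action)."""
        probs = self.posterior(x)
        k = max(range(len(probs)), key=probs.__getitem__)
        if k == len(self.clusters):
            # Unfamiliar driving condition: open a new library entry.
            self.clusters.append(Cluster(list(x), default_action_kw))
        else:
            # Familiar condition: refine the centroid with a running mean.
            c = self.clusters[k]
            c.centroid = [(m * c.count + v) / (c.count + 1)
                          for m, v in zip(c.centroid, x)]
            c.count += 1
        return k, self.clusters[k].action_kw


library = MemoryLibrary()
print(library.assign([0.0, 0.0], 20.0))  # first block opens cluster 0
print(library.assign([0.1, 0.0], 35.0))  # similar block reuses cluster 0
print(library.assign([5.0, 5.0], 35.0))  # distant block opens cluster 1
```

In this sketch a familiar block reuses the stored action, while an unfamiliar one adds a new entry without overwriting existing clusters, which mirrors the stated goal of extending the library while retaining earlier knowledge.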

2. Construction of Vehicle Model

2.1. Vehicle Dynamic Modeling

2.2. Engine Modeling

2.3. Motor Modeling

2.4. Battery Modeling

3. Adaptive Learning Strategy in Dynamic Environment

3.1. Reinforcement Learning Modeling and Problem Description

3.2. Cluster Aggregation in the Adaptive Algorithm

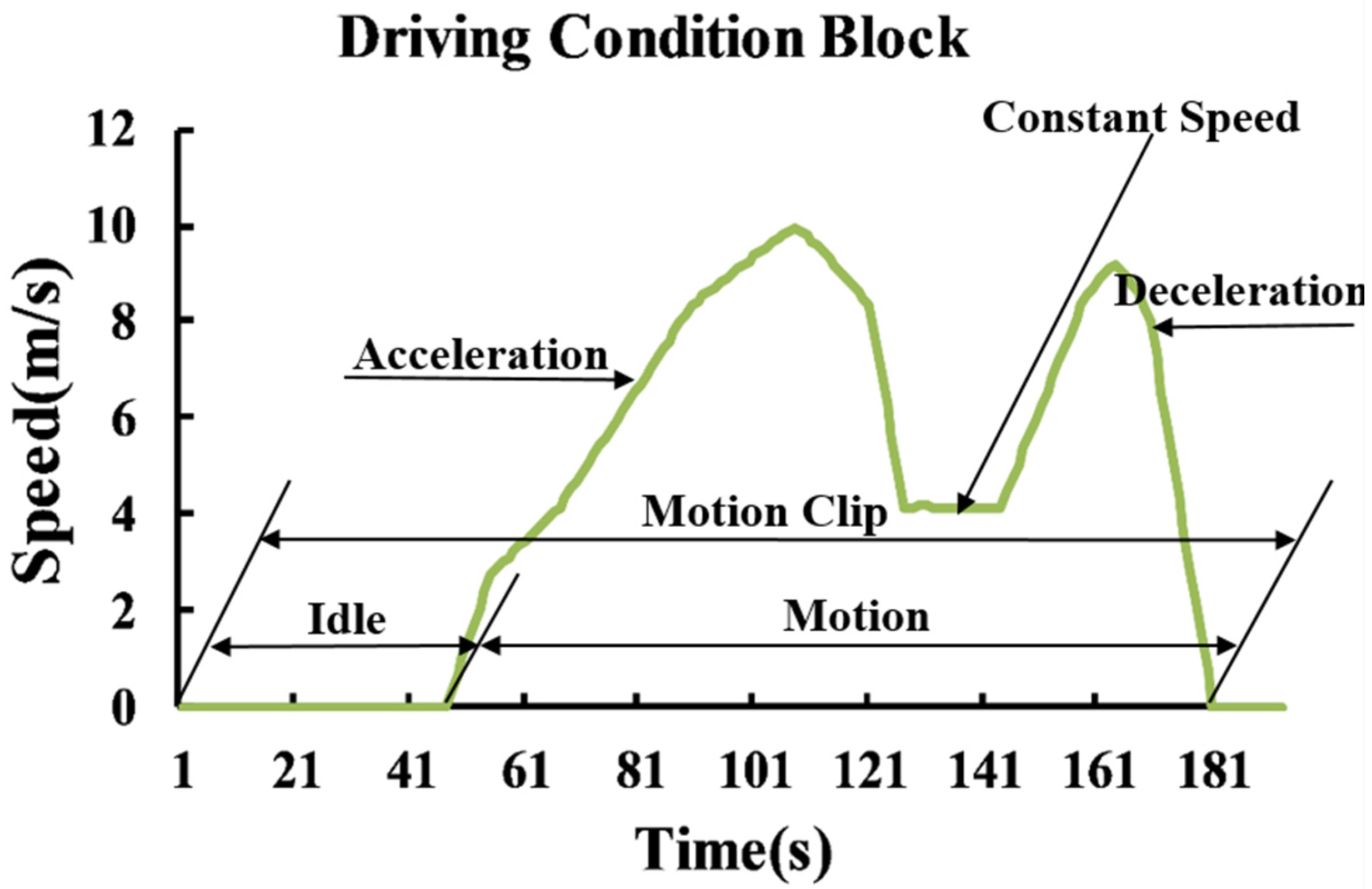

3.2.1. Definition and Characteristics of Driving Condition Block in Cluster Aggregation

3.2.2. Cluster Aggregation Based on Dirichlet Method

3.3. Chinese Restaurant Process in ALDE

3.4. Adaptive Algorithm Update

| Algorithm 1. Pseudo-code of ALDE. |
|---|
| Input: Characteristic parameters of dynamic driving condition blocks |
| Output: Optimal action (engine power) |
| […] |
| Conduct the current driving condition block with the most similar action in the corresponding […], |
| […], add the current driving […], and restore them in […] |
| […] with: […] |

4. Simulation and Hardware-in-the-Loop Experiment

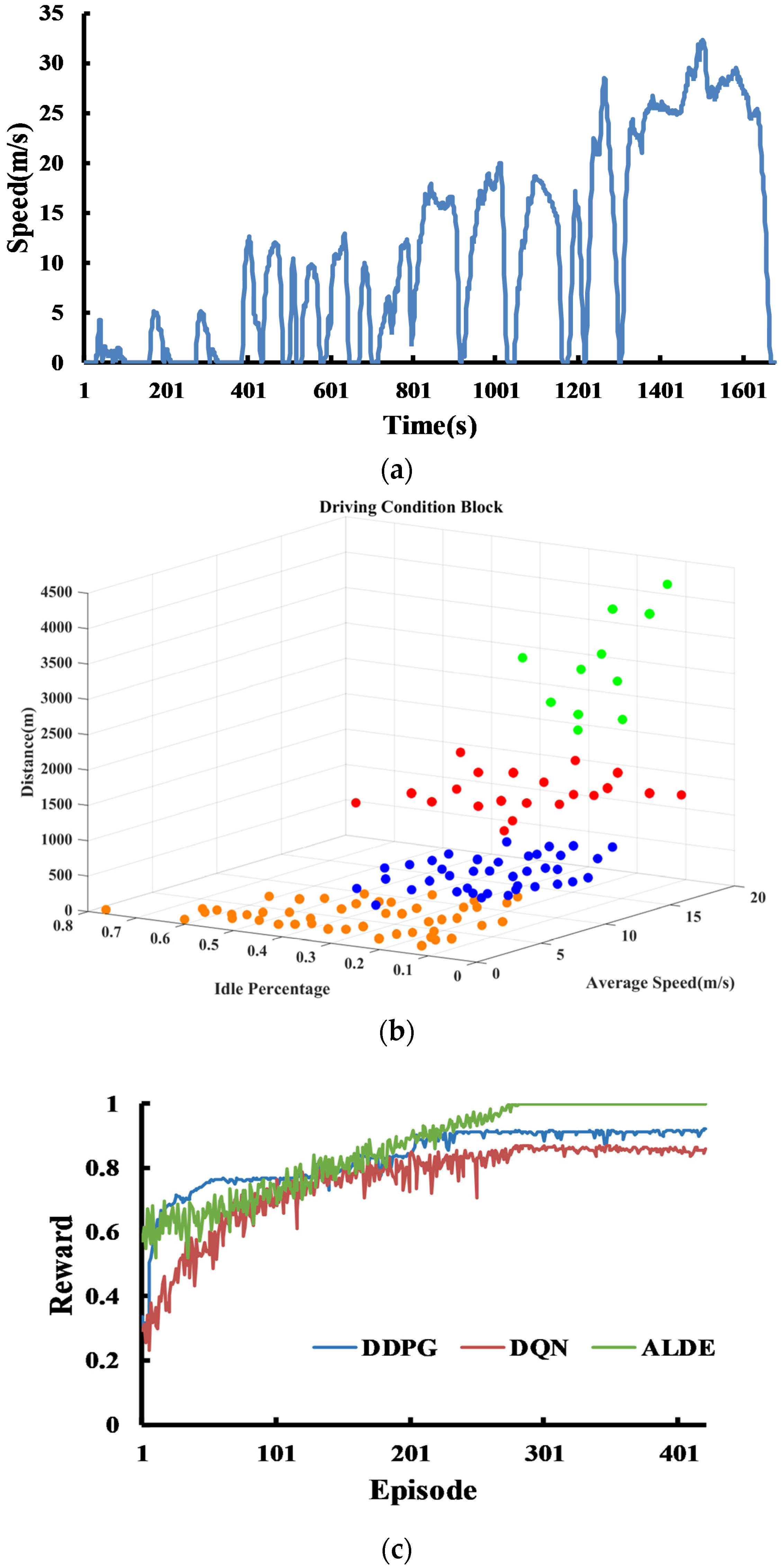

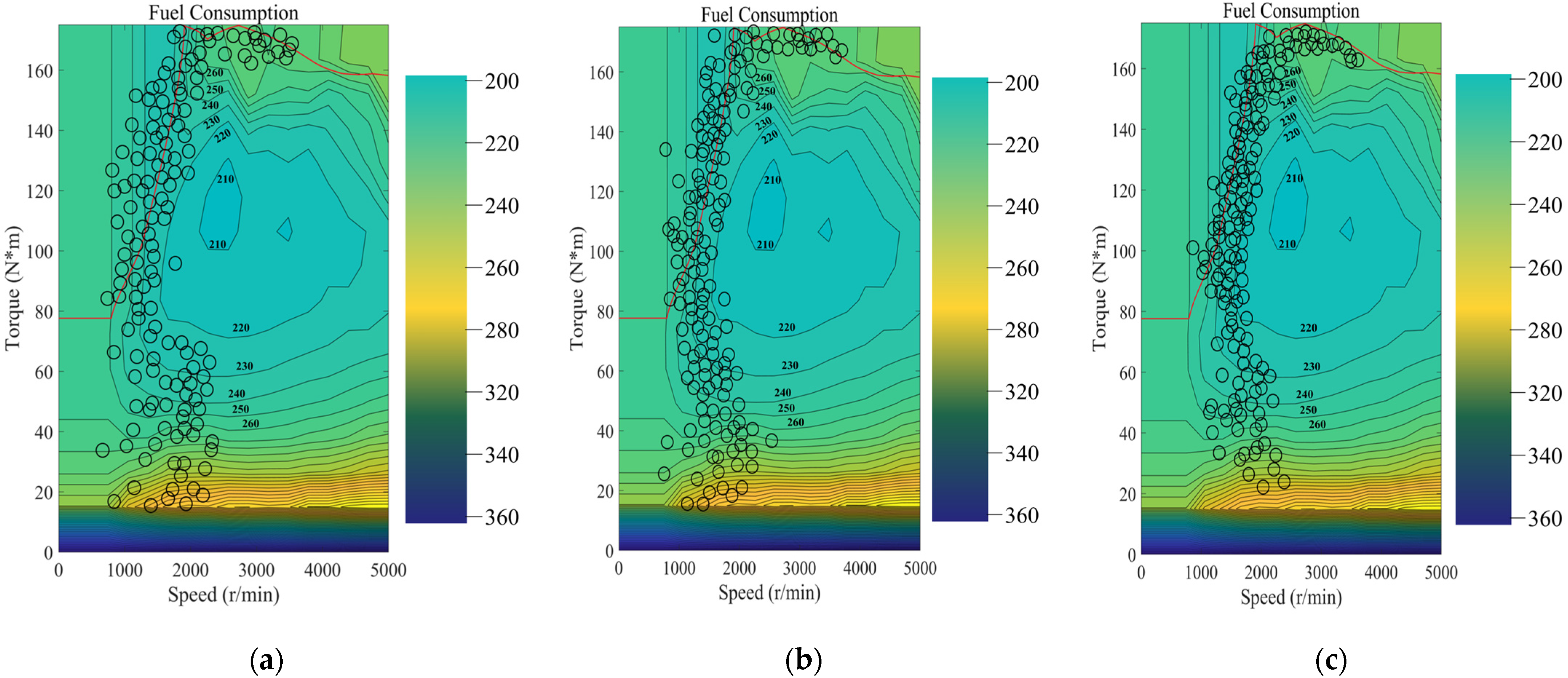

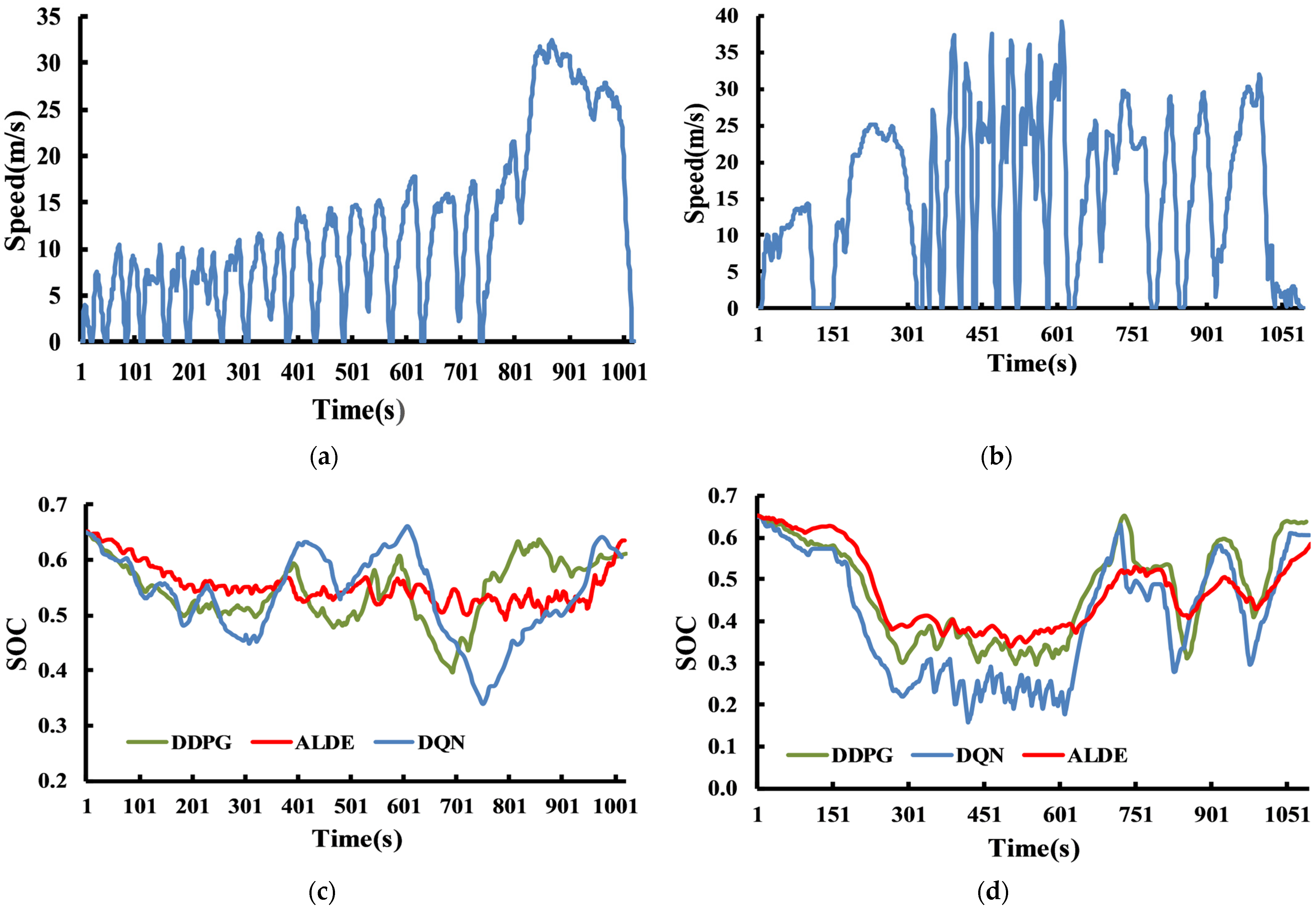

4.1. Simulation Experiment

4.2. Hardware-in-the-Loop Experiment

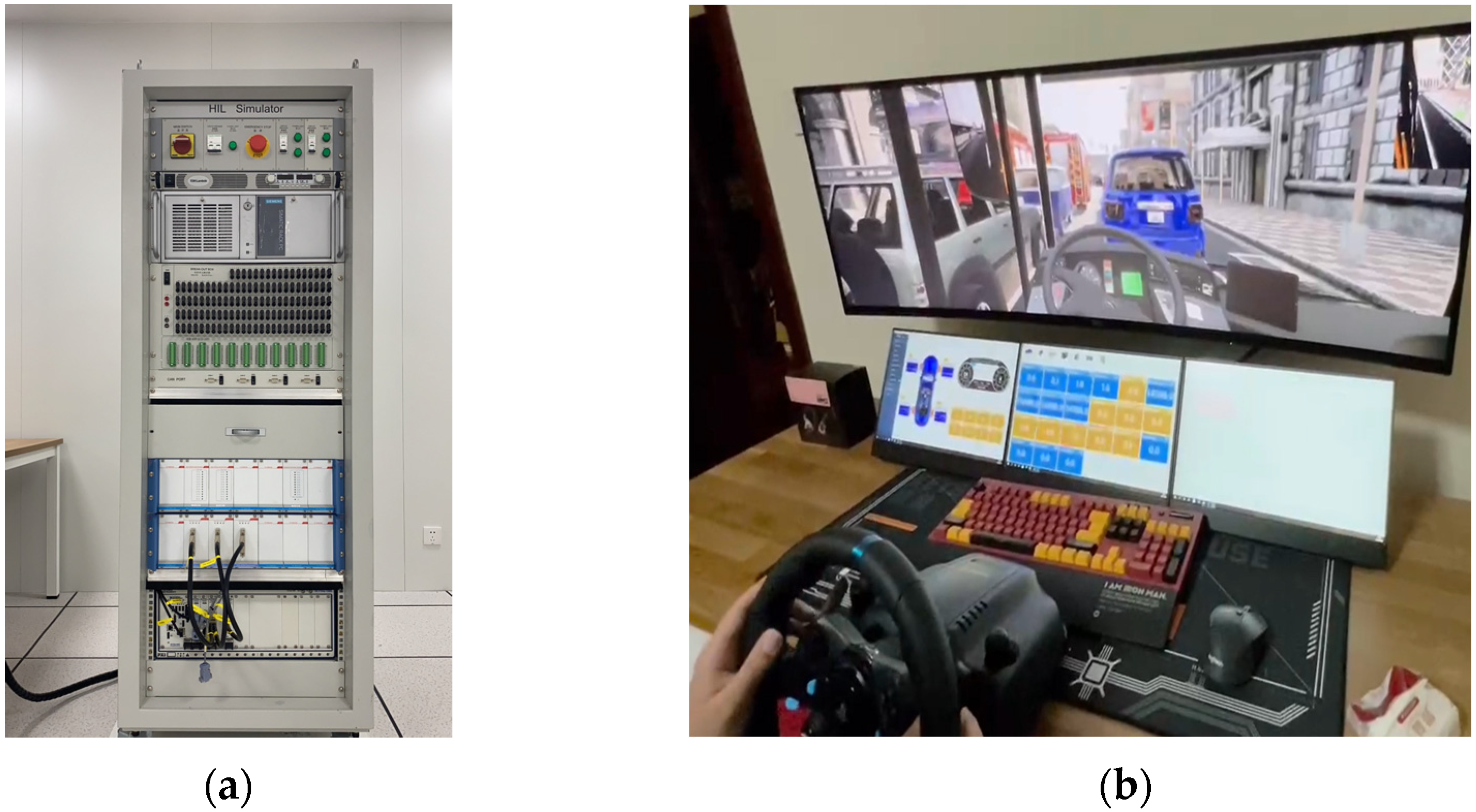

4.2.1. Hardware-in-the-Loop Experimental Platform

4.2.2. Simulation of Hardware-in-the-Loop Experiment

5. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Component | Parameter | Value |
|---|---|---|
| Engine | Maximum power | 92 kW |
| Engine | Maximum torque | 175 Nm |
| Engine | Maximum speed | 6500 rpm |
| Traction motor | Maximum power | 30 kW |
| Traction motor | Maximum torque | 200 Nm |
| Traction motor | Maximum speed | 6000 rpm |
| Battery | Capacity | 5.3 Ah |
| Battery | Voltage | 266.5 V |
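
As a quick worked check on the battery entries above, the nominal pack energy follows from capacity times voltage. This assumes the listed 266.5 V is the nominal pack voltage; the symbols $E_{\text{nom}}$, $Q$ and $U$ are introduced here for illustration only.

```latex
E_{\text{nom}} = Q \cdot U
             = 5.3\,\text{Ah} \times 266.5\,\text{V}
             \approx 1412\,\text{Wh}
             \approx 1.41\,\text{kWh}
```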

| Characteristic parameter | Contribution rate (%) | Cumulative contribution rate (%) |
|---|---|---|
| Average Speed | 45.13 | 45.13 |
| Idle Time | 23.28 | 68.41 |
| Distance | 10.47 | 78.88 |
| ⋮ | ⋮ | ⋮ |
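
The cumulative column above is a running sum of the individual contribution rates. The short sketch below reproduces it from the three listed rows. The helper `components_needed` and its 75% threshold are hypothetical, since the selection threshold is not stated in the table.

```python
from itertools import accumulate

# Contribution rates (%) for the three rows listed in the table.
rates = {"Average Speed": 45.13, "Idle Time": 23.28, "Distance": 10.47}


def cumulative_contribution(values):
    """Running sum of contribution rates, rounded to two decimals."""
    return [round(total, 2) for total in accumulate(values)]


def components_needed(values, threshold):
    """Smallest number of leading entries whose cumulative rate reaches
    the threshold, or None if the listed entries never reach it."""
    for k, total in enumerate(accumulate(values), start=1):
        if total >= threshold:
            return k
    return None


for name, total in zip(rates, cumulative_contribution(rates.values())):
    print(f"{name}: {total:.2f}%")

# Hypothetical 75% threshold, chosen only to illustrate the helper.
print(components_needed(rates.values(), 75.0))
```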

| Driving cycle | DDPG (L/100 km) | DQN (L/100 km) | ALDE (L/100 km) | Fuel consumption reduction vs. DDPG (%) | Fuel consumption reduction vs. DQN (%) |
|---|---|---|---|---|---|
| WLTP | 6.74 | 7.05 | 6.32 | 6.23 | 10.35 |
| US06 | 6.16 | 6.47 | 5.85 | 5.03 | 9.58 |
| LA92 | 6.44 | 6.68 | 6.08 | 5.60 | 8.98 |
| New driving cycle | 7.38 | 7.87 | 7.13 | 3.39 | 9.04 |
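
The reduction rates above are relative savings against each baseline, that is, (baseline − ALDE) / baseline. A minimal sketch of that arithmetic follows. The function name is an assumption, and rates recomputed from the rounded consumption figures may differ from the tabulated ones in the last digits.

```python
def reduction_rate(baseline, candidate):
    """Relative fuel saving of `candidate` over `baseline`, in percent."""
    return (baseline - candidate) / baseline * 100.0


# Fuel consumption in L/100 km as (DDPG, DQN, ALDE), taken from the table.
consumption = {
    "WLTP": (6.74, 7.05, 6.32),
    "US06": (6.16, 6.47, 5.85),
    "LA92": (6.44, 6.68, 6.08),
    "New driving cycle": (7.38, 7.87, 7.13),
}

for cycle, (ddpg, dqn, alde) in consumption.items():
    print(f"{cycle}: vs DDPG {reduction_rate(ddpg, alde):.2f}%, "
          f"vs DQN {reduction_rate(dqn, alde):.2f}%")
```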

| Driving cycle | ALDE/SIM (L/100 km) | ALDE/HIL (L/100 km) |
|---|---|---|
| WLTP | 6.32 | 6.29 |
| US06 | 5.85 | 5.91 |
| LA92 | 6.08 | 6.11 |
| New driving cycle | 7.13 | 7.07 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Song, S.; Zhang, C.; Qi, C.; Song, C.; Xiao, F.; Jin, L.; Teng, F. Adaptive Energy Management Strategy for Hybrid Electric Vehicles in Dynamic Environments Based on Reinforcement Learning. Designs 2024, 8, 102. https://doi.org/10.3390/designs8050102
