Abstract

The rise of intelligent systems demands machine translation models that are less data hungry and more efficient, especially for low- and extremely-low-resource languages for which little or no data are available. We have developed a generic Neural Machine Translation (NMT) model for the Kannada–Tulu language pair that integrates a linguistic feature to enhance translation quality. The model is built on the Transformer architecture, the state of the art for NMT, and learns to translate text from Kannada to Tulu from parallel data. Kannada and Tulu are both low-resource Dravidian languages, with Tulu recognised as an extremely-low-resource language. Dravidian languages are morphologically rich and highly agglutinative, and only a few NMT models exist for the Kannada–Tulu language pair. These models yield poor translation scores because they fail to capture the linguistic features of the languages. The proposed generic approach can benefit other low-resource Indic languages that have small parallel corpora for NMT tasks. The linguistic-feature-embedded NMT model is evaluated with Bilingual Evaluation Understudy (BLEU), character-level F-score (chrF) and Word Error Rate (WER), and shows improved translation scores. These results hold promise for further experimentation with other low- and extremely-low-resource language pairs.

1. Introduction

A machine translation (MT) system takes sentences in the source language as input and generates a translation in a target language [1]. Considerable research on Neural Machine Translation (NMT) for high-resource languages has been carried out across the world. Although MT systems are in place for some Indian languages, creating one for low-resource languages is challenging because of their morphological richness and agglutinative nature and because few or no parallel corpora are available. Translation relies on linguistic characteristics unique to each language [2]. The four main categories of machine translation are the following [1,3]:

- Rule-Based Machine Translation (RBMT): It uses dictionary and grammatical rules specific to language pairs for translation. However, more post-editing is required for the translated output. The initial machine translation models were built using rule-based approaches and deducing rules was a time-consuming task.

- Statistical Machine Translation (SMT): It uses statistical models to analyse massive parallel data and generate the target language text by aligning words present in the source language. Though SMT is good for basic translations, it does not capture the context very well, so the translation can be wrong at times.

- Hybrid Machine Translation (HMT): It combines RBMT and SMT. It uses a translation memory and produces good-quality translations, but the output still requires editing by human translators.

- Neural Machine Translation (NMT): This type of translation uses neural models, parallel corpora and deductive reasoning to determine the correlation between source and target language words. Nowadays, most MT systems are neural, owing to their ease of use and to open-source architectures that are applicable to high-resource languages.

Indian languages can be classified into the following language families: Indo-European (Hindi, Marathi, Urdu, Gujarati, Sanskrit), Dravidian (Kannada, Tulu, Tamil, Telugu, Malayalam), Austroasiatic (Munda in particular) and Sino-Tibetan (Tibeto-Burman) [4]. The Dravidian language family has four subgroups: South Dravidian with Badaga, Irula, Kannada, Kodagu, Kota, Malayalam, Tamil, Toda and Tulu; South-Central Dravidian with Gondi, Konda, Kui, Kuvi, Manda, Pengo and Telugu; Central Dravidian with Gadaba, Kolami, Maiki and Parji; and North Dravidian with Brahui, Kurux and Malto [5]. The South Dravidian language Tulu dates to the eighth century and is morphologically rich and highly agglutinative. The grammarian Caldwell worked extensively on Tulu grammar [6] and identified Tulu as “one of the most highly developed languages of the Dravidian family”. As Tulu has been more a spoken than a documented language, another grammarian, Brigel [7], set out the grammatical boundaries of the language. The works of Brigel serve as a foundation and make it easier for non-Tulu speakers to understand the grammar of Tulu. As per Caldwell, the Tulu language has a history of 2000 years. A survey conducted in 2011 recognised 1,722,768 Tulu speakers [8], yet the Tulu language is not recognised as one of the constitutional languages. Though the language has its own script, most people use the Kannada script to write Tulu. The Tulu script became popular only after the 1980s and the trend has been changing lately.

Machine translation for Kannada–Tulu language pairs is crucial in introducing the Tulu language and its cultural vastness and morphological richness to non-speakers. Every language carries its essence of the land in addition to being a means of communication. From an educational perspective, Tulu is being taught in schools in South Canara. The importance of Tulu is growing with the progress of time, yet very little technological research has been carried out on this language. The potential applications of a Kannada–Tulu MT system include translating governance data from Kannada to Tulu, healthcare services that require text-to-speech systems where the backend needs an improved MT system and applications in the education sector, where students can learn in their mother tongue or understand better using their mother tongue Tulu. A few research works on Tulu script have been attempted for Optical Character Recognition (OCR), but very little work has been carried out on MT problems involving Tulu. The Tulu language still lacks a technological intervention that can translate it back and forth from another language, facilitating a non-Tulu speaker to communicate with the native-Tulu-speaking people. Hence, there is a need for a technique to provide language translation between native and non-Tulu speakers. In this study, we attempt to introduce one such linguistic element from the source side and examine its impact on translation. This research explicitly incorporates Parts of Speech (POS) linguistic features into Neural Machine Translation (NMT). Analysing the effect of the linguistic feature on NMT for Dravidian languages like Kannada and Tulu is the main theme of this study.

Both Kannada and Tulu are morphologically rich and highly agglutinative languages. Tulu is one of the extremely-low-resource languages spoken in the coastal region, while Kannada is a low-resource language spoken all over the state of Karnataka. The languages share many similarities yet are unique on their own. Both languages have sentence structures of the form Subject–Object–Verb and linguistic features like gender, number, tense [9], etc., and show inflections of morphemes. For a native speaker, both languages are easily translatable, but a non-native speaker finds it difficult to use Tulu. Though Kannada and Tulu languages share a few common vocabularies, Tulu is morphologically richer than Kannada. This behaviour can be witnessed in translating verbs. Verbs in Tulu undergo inflections based on number, gender and tense. Depending on the context and usage, several forms of the same word can be obtained in translation from Kannada to Tulu. For example, the usage of verb ಇಲ್ಲ (illa meaning “not present”) in Kannada can translate to ಇಜ್ಜೆ (ijje masculine), ಇಜ್ಜೆರ್ (ijjer masculine/feminine in plural), ಇಜ್ಜೊಲ್ (ijjol feminine) or ಇಜ್ಜಿ (ijji neuter). There are other verbs which show similar behaviours during inflection. Hence, it is difficult to translate morphologically rich languages. The proposed work is an experiment in this direction, aiming to obtain a translation of Kannada text into Tulu using an NMT model. Developing an NMT model that is less data hungry is the need of the hour for low- and extremely-low-resource languages like Kannada and Tulu. NMT-based approaches are quite popular and generate state-of-the-art translations using Transformers [10]. 
Certain variants of the Transformer architecture have been shown to improve performance on NMT and word embedding tasks by introducing a Content Adaptive Recurrent Unit (CARU)-based Content Adaptive Attention (CAAtt) layer and a CARU-based Embedding (CAEmbed) layer, which adapt dynamically to the content [11,12].

The literature mentions that only a few NMT models are available for Kannada–Tulu MT tasks. Although neural models learn words based on those found in the source and target texts provided during training, translation errors still occur in current systems due to the low frequency of certain words [13]. In addition, the lack of linguistic-feature-capturing systems could be a cause for incorrect translations. Linguistic features like POS are added to Kannada–Tulu NMT, and translation accuracy is analysed in the proposed research. We experiment with the dataset released as part of a shared task, DravidianLangTech-2022 [14], where 10,300 parallel sentences were compiled from various sources. The training, validation and test sets are independent, without any overlap. Evaluation metrics such as BLEU score [15] and chrF [16] are computed by comparing the output text with the reference translation text in the target language, Tulu. Also, corpus BLEU and Word Error Rate [17] scores are computed for the generated text.

Very little work has been carried out on low- and extremely-low-resource Dravidian languages, especially the Kannada–Tulu language pair, and the proposed work is a novel approach in the field of NLP for such language pairs. NMT for morphologically rich language pairs is challenging because a large number of suffixes generate inflections on a single word. When translating into morphologically rich languages, features like case, gender, number and tense that cause inflections must be captured. Dravidian languages share certain morphological features like gender, number, tense, euphonic change and POS, which can be utilised to reduce incorrect translations. By explicitly adding POS as a linguistic feature at the source side, we aim to resolve ambiguity, and the methodology can be extended to other Dravidian languages as well. The key contributions include building a machine translation system for a low- and extremely-low-resource language pair and showing that a linguistic feature embedded into the Transformer architecture improves translation accuracy through ambiguity resolution in the case of homonyms. Evaluation metrics such as BLEU score, chrF and WER are used to evaluate the proposed model.

2. Literature Survey

The background study is based on the linguistic motivation for machine translation where one among the language pairs considered for translation is low resource. The impact of including a linguistic feature is studied and the gaps are identified from the literature. Very little work has been carried out on Kannada–Tulu language pairs and the translations from the NMT systems are biased, generating lower BLEU scores.

Rico and Barry incorporated linguistic features like POS and morphological information into MT of English–German and English–Romanian language pairs [18]. They trained an NMT model with these linguistic features as additional input and saw a slight boost in the BLEU scores. Vandan and Dipti presented a comparable approach to translation for the Marathi–Hindi language pair [19]. They performed translation using the OpenNMT tool equipped with Sequence-to-Sequence models and an internal shallow parser to obtain POS tags. Compared to a baseline that did not consider the linguistic information in NMT, the suggested approach produced better outcomes.

Goyal et al. proposed NMT for Dravidian languages using a basic two-layered Long Short-Term Memory (LSTM) with 500 hidden units [20]. The results obtained were not very satisfactory for Kannada–Tulu language pairs as the lack of inclusion of a linguistic feature affected the translation quality, generating lower BLEU scores. Vyawahare et al. used Transformer architecture to train Dravidian language NMT [21] models using the Fairseq toolkit. They fine-tuned the existing model using a custom dataset to obtain multilingual NMT. They explored pre-trained models and data augmentation techniques to improve translation but ended up with lower BLEU scores. Hegde et al. experimented with MT models tailored explicitly for Kannada–Tulu language pairs [22]. The paper explored challenges in NMT for the language pairs, focusing on differences in vocabulary, syntax and morphology, yet generated biased translations. Rodrigues et al. proposed an OCR system to identify Tulu scripts and a rule-based translation system for translating from English to Tulu [23]. This model was a speech-to-text model, and they enhanced the model with NMT techniques for two-way translation using LSTM architecture [24]. The model fails for complex sentences due to a lack of a dataset.

Chakrabarty et al. built a Multilingual Neural Machine Translation (MNMT) model that harnesses source-side linguistic features for low-resource languages by including morphological features [25]. They stress building feature relevance into the model rather than simply plugging in a linguistic feature. Eight low-resource Asian languages were considered. They conclude that mixing language indicators and dummy features with self-relevance outperformed other methods. They also observed that a poor-quality morphological analyser degrades translation performance. Marouani et al. proposed a metric to analyse the impact of linguistic features in translation to the target language, Arabic. The AL-TERp metric was designed by tuning to the Arabic language [26]. Incorporating linguistic information improved the correlation of metric results with human assessments. They conclude that morphologically rich languages benefit from including linguistic features. Agarwal et al. compared the performance of NMT models with and without linguistic features [27]. They concluded that incorporating linguistic features improves on the baseline, yet the effect is smaller for Dravidian than for Indo-Aryan languages. Hlaing et al. explored methods for improving the NMT of low-resource language pairs [28] using Transformer architecture and POS tags. They observed improvement in translation using the POS-tagged approach over the baseline. Yin et al. proposed an attention model for NMT using POS tags. They jointly modelled bilingual POS tagging and NMT, and also included POS tags in the attention model [29]. This tagging enhances semantic representations on the source side and refines the attention weight calculation on the target side. The models show improvement over conventional NMT models.

From the literature, we observe that none of the existing approaches has attempted to integrate linguistic feature(s) for Kannada–Tulu translation. Further, not much work has been carried out on Kannada–Tulu MT tasks, as the availability of a dataset is a major bottleneck. The development of an NMT model for the Kannada–Tulu language pair is itself a challenging area to explore. The linguistic-feature-embedded NMT model poses challenges and opens opportunities to explore new horizons in low- and extremely-low-resource language pairs. The proposed explicit linguistic feature inclusion experiment in NMT is a novel contribution for the Kannada–Tulu language pair, for which few parallel data exist. Including linguistic features may reduce the need for large parallel corpora and generate better translations from comparatively few parallel data. Based on the above, our work focuses on developing an NMT system for the Kannada–Tulu language pair by integrating linguistic features and evaluating the results by comparing them with state-of-the-art models.

3. Proposed Methodology

The development of an NMT system requires a baseline neural network and a good-quality parallel corpus in source and target languages. The baseline chosen for the proposed work is a Transformer architecture and the language pairs are Kannada–Tulu pairs. Further, the generated translations are verified with the expected translation and the evaluation scores are computed.

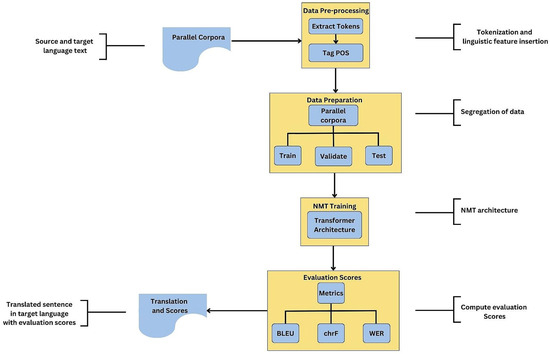

The steps involved in developing the MT model are depicted in Figure 1. The raw data obtained from a given source must be pre-processed to make them suitable for further processing, which helps to improve the accuracy of the output. In the data preparation step, the pre-processed data are divided into training, validation and test sets so that the model can be tuned and its performance checked on sentences it has not seen. An NMT model using Transformer architecture is then developed and trained using the training set. Any low-resource language pair can be considered. As a case study, we have experimented with the Kannada–Tulu language pair. Using the Transformer architecture, the Kannada text is translated to Tulu, and the scores are calculated. Each of these steps is explained in detail in the subsequent subsections.

Figure 1.

Steps involved in developing the POS-integrated Kannada-to-Tulu machine translation system.

3.1. Data Pre-Processing

The source language chosen is Kannada, and the target language is Tulu. The parallel corpus consists of sentence pairs in the source and target languages. The dataset is pre-processed to remove duplicates, extra spaces, special symbols and digits. Tokenisation is based on spaces, and the source-side Kannada text is tagged with its corresponding POS using an existing shallow parser [30]: the Kannada shallow parser developed by IIIT Hyderabad [31,32]. The tags follow the Shakti Standard Format (SSF), which is suitable for Indic languages. POS was chosen over other linguistic features because of the commonality between Kannada and Tulu: both languages, being Dravidian, share a small portion of their vocabulary. The POS-tagged source text is aligned with its corresponding parallel text in Tulu, and the tags are concatenated with the tokens before encoding. An example of POS-tagged data is shown in Table 1.
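The cleaning and tagging steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the separator `FEATURE_SEP`, the helper names and the `toy_tagger` stand-in for the shallow parser are all hypothetical, and the tag assigned in the lookup is for illustration only.

```python
import re

# Hypothetical separator between a token and its tag; the paper does not state which one was used.
FEATURE_SEP = "|"

# Characters dropped during cleaning: the symbols listed in Algorithm 1 plus ASCII digits.
_NOISE = re.compile(r"[!@#$%&()0-9]")


def clean(sentence: str) -> str:
    """Remove special symbols and digits, then collapse repeated whitespace."""
    return " ".join(_NOISE.sub(" ", sentence).split())


def deduplicate(pairs):
    """Drop repeated (source, target) pairs while preserving their order."""
    seen = set()
    unique = []
    for pair in pairs:
        if pair not in seen:
            seen.add(pair)
            unique.append(pair)
    return unique


def attach_pos(sentence: str, tagger) -> str:
    """Tokenise on spaces and append each token's POS tag to the token."""
    tokens = sentence.split()
    tags = tagger(tokens)
    return " ".join(f"{tok}{FEATURE_SEP}{tag}" for tok, tag in zip(tokens, tags))


def toy_tagger(tokens):
    """Stand-in for the shallow parser: a tiny lookup with 'UNK' as fallback."""
    lookup = {"illa": "VM"}  # hypothetical tag assignment on a transliterated token
    return [lookup.get(tok, "UNK") for tok in tokens]


print(attach_pos(clean("illa  mane!! 12"), toy_tagger))
```

In practice the `tagger` argument would wrap whatever POS tagger is available for the source language; only the source side is tagged, so the target sentences pass through `clean` alone.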

Table 1.

Example of POS tagged data.

3.2. Data Preparation

The Kannada–Tulu parallel corpus contains 10,300 sentences. The sentences are divided into training, validation and test sets, without sentence overlap. During the training phase, a small validation set, independent of the training data, helps to tune the model and produce better translations, and the test set is used to evaluate the model's performance. The sentence distribution statistics are given in Table 2. Since MT requires parallel corpora, the sentence counts on the source and target sides are equal. If more training sentences were available from mixed sources, the translation quality could be expected to improve.
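A non-overlapping split of this kind might be produced as follows. The sketch assumes the corpus has already been de-duplicated; the function name, the shuffling and the fixed seed are illustrative choices, and the validation size of 1,000 in the usage example is inferred as the remainder after the 8,300 training and 1,000 test sentences reported in Section 4.

```python
import random


def split_corpus(pairs, n_valid, n_test, seed=0):
    """Shuffle sentence pairs and cut them into disjoint train/validation/test sets."""
    if n_valid + n_test >= len(pairs):
        raise ValueError("validation and test sets must leave room for training data")
    shuffled = list(pairs)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    test = shuffled[:n_test]
    valid = shuffled[n_test:n_test + n_valid]
    train = shuffled[n_test + n_valid:]
    return train, valid, test


# Synthetic placeholder pairs standing in for the 10,300 Kannada-Tulu sentences.
pairs = [(f"src{i}", f"tgt{i}") for i in range(10300)]
train, valid, test = split_corpus(pairs, n_valid=1000, n_test=1000)
print(len(train), len(valid), len(test))
```

Keeping source and target sentences together as pairs before shuffling guarantees that the two sides stay aligned and have equal counts in every subset.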

Table 2.

Statistics of sentence distribution across training, validation and test sets for Kannada–Tulu language pairs.

3.3. Training NMT Model Using Transformer Architecture

The OpenNMT PyTorch [33] framework is used to develop the POS-embedded NMT model [34]. The Transformer architecture used for NMT consists of an encoder block and a decoder block, each containing several encoder or decoder units [10]. The encoder and decoder comprise multiple layers of feedforward neural networks and attention mechanisms. The sentences in the source language are converted into a sequence of vectors called input embeddings. To identify the position of tokens, a positional encoding is added to the input embeddings. Each encoder layer contains several self-attention heads. Self-attention lets the model assess each word's significance in relation to the other words in the input sentence, so that word dependencies across the input sequence are captured in parallel. After self-attention, a feedforward neural network is applied to each position independently. In the encoder, each sub-layer is wrapped in a residual connection followed by layer normalisation. This stabilises training and helps to mitigate the vanishing gradient problem. The sentence in the target language is embedded, and positional encodings are added, just as in the encoder. During training, the decoder uses masked self-attention, where each position in the decoder is only allowed to attend to positions up to and including itself. This prevents the model from peeking ahead during training, maintaining the auto-regressive property required for generation. The decoder also performs multi-head attention over the encoder's output. This allows the decoder to focus on different parts of the input sequence when generating each token in the output. After attention, a feedforward neural network is applied to the decoder's output. Similar to the encoder, each sub-layer in the decoder has residual connections and layer normalisation. The Transformer architecture learns words from the parallel corpus that it is trained on by building a vocabulary.

During the training phase, the Kannada–Tulu parallel corpus is fed into the system. Here, the words are tokenised based on spaces and no sub-word tokenisation is performed. The corresponding vocabulary is built on the training set. Every token is converted into its embedding, and the input to the Transformer is a sequence of these word embedding vectors. Word embeddings and positional embeddings are inputs to the encoder. The encoder output is then fed into the decoder, which decodes it into a probability distribution over target words, from which the output is generated.
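Two of the mechanisms described above, positional encoding and masked self-attention, can be illustrated without any deep learning library. The sketch below assumes the sinusoidal encoding of the original Transformer paper [10]; the text does not state which variant the trained model uses, and the function names are hypothetical. It is a toy illustration of the idea, not the OpenNMT implementation.

```python
import math


def positional_encoding(num_positions, d_model):
    """Sinusoidal encodings: sine on even dimensions, cosine on odd ones."""
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def softmax(scores):
    """Numerically stable softmax; entries of -inf receive zero probability."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention_weights(scores):
    """Mask future positions in a square score matrix, then normalise each row."""
    weights = []
    for i, row in enumerate(scores):
        # Position i may attend to positions 0..i only.
        masked = [s if j <= i else float("-inf") for j, s in enumerate(row)]
        weights.append(softmax(masked))
    return weights


# With uniform raw scores, each decoder position spreads its attention
# evenly over itself and the positions before it.
for row in causal_attention_weights([[0.0, 0.0, 0.0] for _ in range(3)]):
    print([round(w, 3) for w in row])
```

The upper-triangular mask is what keeps the decoder auto-regressive: the weight on any later position is exactly zero, so the prediction at a position cannot depend on tokens that follow it.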

3.4. Translating Text from Kannada to Tulu

The testing phase will evaluate the translation accuracy of the proposed model for the given test set. The sentences present in training, validation and test sets are independent. The input sentences in Kannada are translated to Tulu by the proposed model, and the generated output in the target language, Tulu, is written into a file for further analysis and score calculation. An example of source and translated text is shown in Table 3.

Table 3.

Source and translated text.

Algorithm 1 outlines the steps in integrating the linguistic feature with NMT. The input is a parallel corpus containing sentences in the source and target languages. As a case study, we considered the Kannada–Tulu language pair. The pre-processing stage cleans the data and concatenates a linguistic feature to each token. For illustration purposes, the POS tag is shown in the pseudocode. However, any linguistic feature can be integrated similarly. The data preparation stage follows, segregating the data into training, validation and test sets. The NMT training phase uses Transformer architecture and generates the translation file. The accuracy of the translation is computed using the following evaluation metrics: BLEU score, chrF and WER.

| Step | Algorithm 1: Linguistic-Feature-Integrated NMT |
|---|---|
| | Data: |
| | Source file: sentences in Kannada language |
| | Target file: sentences in Tulu language |
| | Variables: |
| | SP = [‘!’, ‘@’, ‘#’, ‘$’, ‘%’, ‘&’, ‘(’, ‘)’] |
| | data: parallel corpora |
| | Functions: |
| | remove_Duplicate(file, temp): takes source and target files, removes duplicate sentences and returns temporary files temp_src and temp_tgt |
| | split(s, a): splits s on special character ‘a’ |
| | delete(k): removes the token ‘k’ |
| | tagPOS(): returns POS concatenated with token |
| | Output: Translated text and evaluation scores |
| 1 | Data Pre-processing |
| 2 | d ← split(data, newline) |
| 3 | for i in d do |
| 4 | temp ← remove_Duplicate(src, s1) |
| 5 | end |
| | # tokenisation of line based on space |
| 6 | for i in d do |
| 7 | token ← split(i, space) |
| 8 | if token == tab or token == [0-9]+ or token == SP then |
| 9 | delete(token) |
| 10 | end |
| 11 | token.tagPOS() |
| 12 | end |
| 13 | Data Preparation |
| 14 | train ← 0.8 * d |
| 15 | validation ← 0.1 * d |
| 16 | test ← 0.1 * d |
| 17 | Train NMT model using Transformer architecture |
| 18 | Write output to file f |
| 19 | Compute BLEU score, chrF and WER as per Equations (2), (3) and (4) |
| 20 | Display output scores |
| 21 | End |

4. Results and Experimental Analysis

The proposed model is trained and evaluated using the Kannada–Tulu parallel corpus released as part of the DravidianLangTech-2022 shared task. The data are curated for MT and obtained from multiple sources [14], including e-newspapers, academic books and WhatsApp resources. The mean number of words per sentence is 7 for Kannada and 8 for Tulu. The dataset consists of 10,300 Kannada–Tulu parallel sentences in Unicode. The vocabulary is built separately for the source and target languages from the 8300 sentences in the training set. The model is trained for 10,000 steps on a single GPU with 80 GB memory using the Adam optimiser [35]. A checkpoint is saved every 500 steps, and translation scores are calculated after each checkpoint is saved. The hyperparameters are listed in Table 4. The experimental setup and the result analysis using standard evaluation metrics for NMT are discussed in detail in the following subsections.

Table 4.

Hyperparameters and their values used in the proposed NMT system.

4.1. Experimental Setup

Table 5 outlines the experimental setup for the proposed model, including the hardware and software required to carry out the proposed work. We have used an NVIDIA A100 Graphics Processing Unit (GPU), manufactured in Taiwan, with a minimum of 32 GB RAM, 80 GB of GPU memory and a 1.92 TB SSD. The scripts are written in Python 3.11.7 using OpenNMT PyTorch on Ubuntu Operating System 22.04.4. Specifying the environment in this way makes the experiment easier to reproduce.

Table 5.

Hardware and software requirements.

4.2. Performance Metrics

The standard metrics used to evaluate NMT models include BLEU score, chrF and WER. The BLEU score is the product of the Brevity Penalty (BP) and the weighted geometric mean of the modified n-gram precisions p_n for n-gram orders 1 to N. The BP penalises predictions that are shorter than the reference sentences. Here, r is the reference sentence length, c is the translated sentence length and N is the maximum n-gram order used in the calculation. In general, N = 4, and the weights w_n sum to 1. Equations (1) and (2) show the calculation of BP and BLEU scores.
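Written out with the symbols defined above, the standard formulation of the Brevity Penalty and the BLEU score [15] is:

```latex
\mathrm{BP} =
\begin{cases}
1, & \text{if } c > r \\
e^{\,1 - r/c}, & \text{if } c \le r
\end{cases}
\tag{1}

\mathrm{BLEU} = \mathrm{BP} \cdot \exp\!\left( \sum_{n=1}^{N} w_n \log p_n \right)
\tag{2}
```

With uniform weights w_n = 1/N, the exponential term reduces to the geometric mean of p_1, ..., p_N.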

The chrF [36] is calculated using Equation (3).
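In its standard form, the chrF score combines the character n-gram precision chrP and recall chrR as a weighted F-score:

```latex
\mathrm{chrF}_{\beta} = (1 + \beta^{2}) \cdot
\frac{\mathrm{chrP} \cdot \mathrm{chrR}}{\beta^{2} \cdot \mathrm{chrP} + \mathrm{chrR}}
\tag{3}
```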

The average character n-gram precision (chrP) is the fraction of character n-grams in the translated sentence that have a counterpart in the reference. The average character n-gram recall (chrR) is the fraction of character n-grams in the reference sentence that are also present in the translation output. The parameter β controls how much more weight is given to recall than to precision. WER for a sentence [17] is calculated using Equation (4).
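The standard definition of the word error rate is:

```latex
\mathrm{WER} = \frac{S + I + D}{N}
\tag{4}
```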

Here, S, I and D correspond to the number of substitutions, insertions and deletions, respectively, and are obtained by considering the reference translation sentence. N is the total number of words in the reference sentence.
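To make the two sentence-level metrics concrete, the sketch below computes WER through a word-level edit distance and a simplified chrF. It is an illustration under stated assumptions rather than the scoring script used in this study: the function names are hypothetical, whitespace is removed before character n-grams are extracted, and the defaults `max_n=6` and `beta=2.0` are assumed because the text does not state the values used.

```python
from collections import Counter


def wer(reference: str, hypothesis: str) -> float:
    """Word error rate: word-level edit distance divided by reference length."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] is the edit distance between the reference prefix seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,               # deletion
                            curr[j - 1] + 1,           # insertion
                            prev[j - 1] + (r != h)))   # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def char_ngrams(text: str, n: int) -> Counter:
    """Count character n-grams after removing spaces (a simplifying assumption)."""
    chars = text.replace(" ", "")
    return Counter(chars[i:i + n] for i in range(len(chars) - n + 1))


def chrf(reference: str, hypothesis: str, max_n: int = 6, beta: float = 2.0) -> float:
    """Simplified sentence-level chrF: F-beta over averaged n-gram precision and recall."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        ref, hyp = char_ngrams(reference, n), char_ngrams(hypothesis, n)
        if not ref or not hyp:
            continue  # the sentence is shorter than n characters
        overlap = sum((ref & hyp).values())  # clipped n-gram matches
        precisions.append(overlap / sum(hyp.values()))
        recalls.append(overlap / sum(ref.values()))
    if not precisions:
        return 0.0
    chr_p = sum(precisions) / len(precisions)
    chr_r = sum(recalls) / len(recalls)
    if chr_p + chr_r == 0:
        return 0.0
    return (1 + beta ** 2) * chr_p * chr_r / (beta ** 2 * chr_p + chr_r)


print(wer("a b c d", "a x c"))  # one substitution and one deletion over four words
print(chrf("abc", "abc"))
```

Because chrF works on characters, a hypothesis that gets the stem of an inflected word right but the suffix wrong still earns partial credit, whereas WER counts the whole word as a substitution.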

4.3. Result Analysis

After training the NMT model for 10,000 steps, we tested its accuracy using a test set with 1000 sentences in Kannada. We obtained the following translations, as shown in Table 6, with and without embedding the POS information into the model.

Table 6.

Sample Kannada–Tulu translations generated with and without including POS details on the source side.

The detailed analysis of the generated output in Table 6 is discussed below. It can be observed that the translation is accurate for a few input sentences both with and without POS, but, for a few others, the translation with POS is better than the one without.

In the first row, it can be observed that the generated translation both with and without POS inclusion is accurate and is the same as the expected translation. Hence, it can be deduced that shorter sentences are expected to give better translation results. Based on the results shown in the second row, it can be observed that, though the generated translation both with and without POS does not capture the exact meaning of input, the one generated using POS inclusion is slightly better as it correlates elders and kids with human relations like brothers. In contrast, the one without POS gives a different context for women’s education. Further, for the input shown in the third row, which is a short sentence, the generated translation both with and without POS inclusion is accurate and is the same as the expected translation. The sentence in the fourth row contains seven words and is comparatively longer. Though the generated translation both with and without POS does not capture the exact meaning of the source text, the one generated using POS inclusion is slightly better as it relates the illuminating lamp to sparkling eyes. In contrast, the one without POS gives a different context for the rainy season. However, the shorter input sentence in the fifth row that was translated both with and without POS inclusion is accurate and is the same as the expected translation.

The output obtained for the sentence in the sixth row shows that the output translations generated are much better in the POS-embedded model than the one without POS. The POS-embedded model captures a word more than the latter, which is evident in the generated translations. The translation result obtained in the seventh row shows that, though the entire translation is not fully correct, a few words are correctly translated using the POS-embedded model. The output translation generated using the POS-embedded model for the source sentence performs better than the one without POS. The generated translation without POS is inaccurate.

In the above examples, though the translations obtained with and without POS are not entirely correct, the model with POS has a better translation. From the results, it can be deduced that the POS-embedded NMT model performs better in comparison to the baseline NMT model.

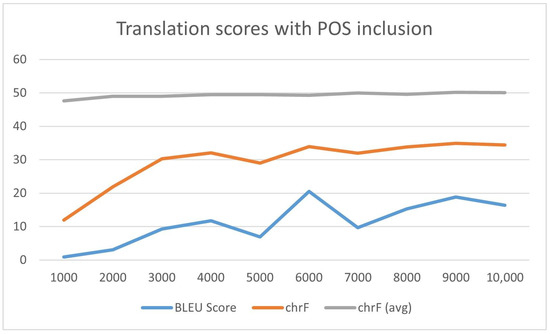

The model evaluation is based on the translation scores using the following performance metrics: BLEU score, chrF and average chrF. The translation scores obtained for BLEU, chrF and average chrF with POS inclusion are shown in Figure 2. In Figure 2, the BLEU score and chrF rise with every thousand steps until step 5000. After a brief irregularity, both scores spike at step 6000, where the highest BLEU score and chrF are obtained. The BLEU score varies noticeably across checkpoints, whereas the chrF continues to rise.

Figure 2.

BLEU score and chrF obtained for the generated translations in Tulu with the inclusion of POS on the source side.

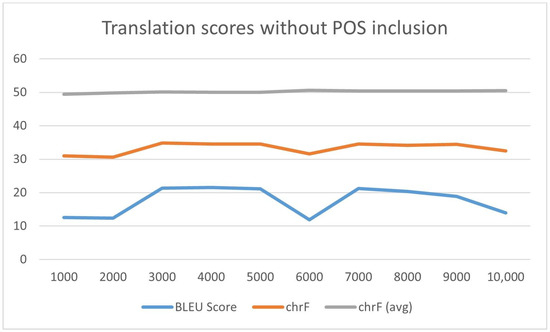

Further, the translation score obtained for BLEU, chrF and average chrF without POS inclusion is shown in Figure 3. In Figure 3, the trend is different as both the BLEU score and chrF hit a lower value at the 6000th step, but the average chrF is stable throughout. There are fluctuations, and the highest value of BLEU and chrF can be found at 3000 and 7000 steps, respectively. Once both models are trained for 10,000 steps, we identify the best score amongst the models saved at checkpoints created every 500 steps.
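Selecting the best checkpoint from the per-checkpoint scores amounts to a simple arg-max (or arg-min for WER). The helper below is a hypothetical sketch, and the scores in the usage example are placeholder values for illustration only, not results from this study.

```python
def best_checkpoint(scores, higher_is_better=True):
    """Return the (step, score) pair with the best score among saved checkpoints."""
    if not scores:
        raise ValueError("no checkpoint scores supplied")
    pick = max if higher_is_better else min
    return pick(scores.items(), key=lambda item: item[1])


# Placeholder values for illustration only; these are not results from this study.
demo_bleu = {500: 1.0, 1000: 2.5, 1500: 2.0}
print(best_checkpoint(demo_bleu))
```

For an error metric such as WER, passing `higher_is_better=False` selects the checkpoint with the lowest score instead.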

Figure 3.

BLEU score and chrF obtained for the generated translations in Tulu without the inclusion of POS on the source side.

Figure 2 and Figure 3 show that the inclusion of POS features at the source side achieves a slight improvement in the BLEU score, chrF and average chrF. Though the average chrFs seem stable in both the figures, variation in BLEU score and chrF can be observed. Considering the best scores in each model, we obtain the results as shown in Table 7.

Table 7. Best translation scores for BLEU score, chrF and average chrF metrics.

From Table 7, it is evident that there is a slight improvement in the scores obtained for Kannada–Tulu translation using POS information compared to the ones without. The linguistic features are similar since both Kannada and Tulu belong to the Dravidian family. Hence, adding one such linguistic feature, POS, has led to a better translation score. The BLEU score matches exact word n-grams between the reference and the generated translation, whereas chrF computes an F-score over character n-grams and is therefore more suitable for morphologically rich languages, where a partially correct word form still earns credit. The corpus-level translation scores for WER and BLEU [37] are shown in Table 8. The scores are calculated by comparing the translated sentences against the expected sentences as references. The WER score is inversely related to the quality of translation [38]: a lower WER indicates a better translation. WER is calculated from the number of insertions, deletions and substitutions required to align the output sentence to the reference.

Table 8. Corpus-level translation scores for WER and BLEU.
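To make the metric definitions above concrete, the following Python sketch computes a sentence-level WER from the word-level edit distance and a simplified chrF from character n-gram precision and recall. It is an illustrative approximation under stated assumptions (whitespace tokenisation, whitespace removed before extracting character n-grams, n-gram orders 1 to 6, β = 2), not the tooling used to produce the reported scores; the function names are hypothetical.

```python
from collections import Counter


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference prefix processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(prev[j] + 1,         # deletion of a reference word
                          curr[j - 1] + 1,     # insertion of a hypothesis word
                          prev[j - 1] + cost)  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def char_ngrams(text: str, n: int) -> Counter:
    """Count character n-grams after removing whitespace (an assumption of this sketch)."""
    chars = "".join(text.split())
    return Counter(chars[i:i + n] for i in range(len(chars) - n + 1))


def chrf(reference: str, hypothesis: str, max_n: int = 6, beta: float = 2.0) -> float:
    """Simplified chrF: F-beta over n-gram precision and recall averaged across orders."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        ref_counts, hyp_counts = char_ngrams(reference, n), char_ngrams(hypothesis, n)
        if not ref_counts or not hyp_counts:
            continue  # skip orders longer than either string
        overlap = sum((ref_counts & hyp_counts).values())
        precisions.append(overlap / sum(hyp_counts.values()))
        recalls.append(overlap / sum(ref_counts.values()))
    if not precisions:
        return 0.0
    p, r = sum(precisions) / len(precisions), sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    return (1 + beta ** 2) * p * r / (beta ** 2 * p + r)
```

For instance, `word_error_rate("a b c d", "a x c")` counts one substitution and one deletion against four reference words. Because chrF works on character n-grams, a hypothesis word that differs from the reference only in a suffix still contributes matching n-grams, whereas word-level metrics count it as a complete miss.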

4.4. Experimental Analysis and Discussions

The proposed approach of integrating a linguistic feature is generic for similar language pairs. A detailed comparison of the proposed model with existing Kannada–Tulu NMT models is given in Table 9. The models based on the only available Kannada–Tulu parallel corpora, curated by Hegde et al., are considered for discussion [14]. It can be observed that the LSTM generated the lowest BLEU score of 0.6149. Though Vyawahare et al. [21] used Transformer architecture, the BLEU score was significantly lower than that of the proposed approach. Hegde et al. used the existing dataset of 10,300 sentences to obtain BLEU scores of 22.89 using a Transformer and 23.06 using a Transformer with Byte Pair Encoding (BPE) for tokenisation. They curated further sentences with Automatic Text Generation (ATG) tools using paradigms to obtain 29,000 sentences for training the Transformer. The BLEU scores obtained using this approach were 41.02 without BPE and 41.82 with BPE. With the proposed linguistic feature integration, their methods may obtain even better scores.

From Table 9, it can be inferred that none of the existing approaches reports chrF or WER, both of which are important in assessing the quality of translation, even where improved accuracy was shown. A word that can be considered a noun in one context and a verb in another can be disambiguated using POS tags. Since this disambiguation is performed before the training phase, the model is trained on less ambiguous input, which accounts for the improvement in the translation. Other features like gender, number, tense and case can also be integrated in a similar way, which may enhance the quality of translation. The translation quality relies on the training data and the sentence length: for sentences longer than the average sentence length, the translation quality may vary. Though both Kannada and Tulu have several dialects [39], standard Kannada and standard Tulu [40] are considered in the parallel data, and the translation on the test set is carried out accordingly. The proposed Kannada–Tulu NMT model is a work in progress and shall be enhanced to obtain better translations for the Kannada–Tulu language pair.

Table 9. Comparison of the proposed model with existing Kannada–Tulu NMT.
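The POS-based disambiguation discussed in this section can be sketched as a preprocessing step that attaches a tag to every source token before training. The sketch below is only an illustration under assumptions: the separator `|`, the function name `annotate_with_pos`, the placeholder tokens `w1` and `w2` and the tag labels `NN` and `VM` are hypothetical and are not taken from the actual pipeline or tagger output.

```python
from typing import List, Tuple

FEATURE_SEP = "|"  # assumed separator for this sketch; not taken from the paper


def annotate_with_pos(tagged_tokens: List[Tuple[str, str]], sep: str = FEATURE_SEP) -> str:
    """Join each (word, POS tag) pair as 'word<sep>TAG' and return one source line."""
    parts = []
    for word, tag in tagged_tokens:
        if sep in word or sep in tag:
            # The separator must stay unambiguous when the line is split again.
            raise ValueError(f"separator {sep!r} occurs in token {word!r}/{tag!r}")
        parts.append(f"{word}{sep}{tag}")
    return " ".join(parts)


# Hypothetical tagger output: the surface form 'w1' is a noun in one
# sentence and a verb in the other (placeholder tokens and tags).
sentence_a = annotate_with_pos([("w1", "NN"), ("w2", "VM")])
sentence_b = annotate_with_pos([("w2", "NN"), ("w1", "VM")])
```

After annotation, the same surface form appears as two distinct input symbols (here `w1|NN` and `w1|VM`), so the encoder no longer has to resolve the ambiguity from context alone. Toolkits that support source-side features typically embed the word and the tag separately and combine the two embeddings; whether to concatenate or sum them is a design choice left open in this sketch.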

5. Conclusions and Future Scope

The proposed NMT model introduces linguistic information into the source side of machine translation in low-resource language pairs. Translation across low- and extremely-low-resource languages is challenging due to the lack of parallel corpora. As a case study, we have considered low-resource Dravidian languages Kannada and Tulu, and the POS linguistic feature. The proposed model uses a Transformer architecture, and the translation scores for evaluation metrics like BLEU score, chrF and WER show improvement for the model involving POS information. Since a Tulu POS tagger is unavailable, we restrict our work to Kannada–Tulu translation by embedding POS information at the source side and intend to take up POS-embedded Tulu–Kannada translation in the future.

The proposed approach is tailored explicitly for morphologically rich low-resource languages. However, the same methodology could be adapted to any low-resource language belonging to the same class, such as the other Dravidian languages. This experiment indicates that adding a linguistic feature benefits machine translation output for similar language pairs. Beyond NMT, linguistic feature integration can help the development of NLP tools that require stem identification, chunking, text summarisation and named-entity marking. To implement linguistic features, it is necessary to have a morphological analyser for the language concerned and to analyse the effect of each linguistic feature on the corpus. We intend to improve this model by adding sub-word tokenisation and data augmentation. Many other linguistic features, such as tense, gender, number and euphonic change, could enhance the quality of translation and are interesting to pursue in low-resource languages.

Author Contributions

Conceptualization, M.S.; methodology, M.S.; software, M.S.; validation, U.D.A., A.N. and M.S.; investigation, M.S.; resources, M.S.; data curation, M.S.; writing—original draft preparation, M.S.; writing—review and editing, U.D.A. and A.N.; visualization, M.S.; supervision, U.D.A. and A.N.; project administration, U.D.A. and A.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Bhattacharyya, P.; Joshi, A. Natural Language Processing; Wiley: Chennai, India, 2023; pp. 183–201.

2. Imami, T.R.; Mu’in, F. Linguistic and Cultural Problems in Translation. In Proceedings of the 2nd International Conference on Education, Language, Literature, and Arts (ICELLA 2021), Banjarmasin, Indonesia, 10–11 September 2021; Atlantis Press: Amsterdam, The Netherlands, 2021.

3. Koehn, P. Statistical Machine Translation; Cambridge University Press: Cambridge, UK, 2010; pp. 14–20.

4. Encyclopaedia Britannica. Indian Languages. In Encyclopaedia Britannica. Available online: https://www.britannica.com/topic/Indian-languages (accessed on 17 February 2023).

5. Steever, S.B. The Dravidian Languages; Routledge: London, UK, 1998; p. 1.

6. Caldwell, R. A Comparative Grammar of the Dravidian or South-Indian Family of Languages; University of Madras: Madras, India, 1956; p. 35.

7. Brigel, J. A Grammar of the Tulu Language; Basel Mission Press: Mangalore, India, 1872; pp. 28–30.

8. Tulu Language. Available online: https://en.wikipedia.org/wiki/Tulu_language?variant=zh-tw (accessed on 24 May 2024).

9. Sridhar, S.N. Modern Kannada Grammar; Manohar Publishers: Delhi, India, 2007; pp. 156–157.

10. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 261–272.

11. Im, S.K.; Chan, K.H. Neural Machine Translation with CARU-Embedding Layer and CARU-Gated Attention Layer. Mathematics 2024, 12, 997.

12. Chan, K.H.; Ke, W.; Im, S.K. CARU: A Content-Adaptive Recurrent Unit for the Transition of Hidden State in NLP. In Neural Information Processing; Yang, H., Pasupa, K., Leung, A.C.S., Kwok, J.T., Chan, J.H., King, I., Eds.; ICONIP 2020. Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12532, pp. 123–135.

13. O’Brien, S. How to deal with errors in machine translation: Postediting. Mach. Transl. Everyone: Empower. Users Age Artif. Intell. 2022, 18, 105.

14. DravidianLangTech-2022 the Second Workshop on Speech and Language Technologies for Dravidian Languages. GithubIO. Available online: https://dravidianlangtech.github.io/2022/ (accessed on 23 January 2024).

15. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

16. Popović, M. chrF++: Words helping character n-grams. In Proceedings of the Second Conference on Machine Translation, Copenhagen, Denmark, 7–8 September 2017; pp. 612–618.

17. Zechner, K.K.; Waibel, A. Minimizing word error rate in textual summaries of spoken language. In Proceedings of the 1st Meeting of the North American Chapter of the Association for Computational Linguistics, Seattle, WA, USA, 29 April–4 May 2000.

18. Sennrich, R.; Haddow, B. Linguistic input features improve neural machine translation. arXiv 2016, arXiv:1606.02892.

19. Mujadia, V.; Sharma, D.M. Nmt based similar language translation for hindi-marathi. In Proceedings of the Fifth Conference on Machine Translation, Online, 19–20 November 2020; pp. 414–417.

20. Goyal, P.; Supriya, M.; Dinesh, U.; Nayak, A. Translation Techies@ DravidianLangTech-ACL2022-Machine Translation in Dravidian Languages. In Proceedings of the Second Workshop on Speech and Language Technologies for Dravidian Languages, Dublin, Ireland, Online, 26 May 2022; pp. 120–124.

21. Vyawahare, A.; Tangsali, R.; Mandke, A.; Litake, O.; Kadam, D. PICT@ DravidianLangTech-ACL2022: Neural machine translation on dravidian languages. arXiv 2022, arXiv:2204.09098.

22. Hegde, A.; Shashirekha, H.L.; Madasamy, A.K.; Chakravarthi, B.R. A Study of Machine Translation Models for Kannada-Tulu. In Congress on Intelligent Systems; Springer Nature: Singapore, 2022; pp. 145–161.

23. Rodrigues, A.P.; Vijaya, P.; Fernandes, R. Tulu Language Text Recognition and Translation. IEEE Access 2024, 12, 12734–12744.

24. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

25. Chakrabarty, A.; Dabre, R.; Ding, C.; Utiyama, M.; Sumita, E. Low-resource Multilingual Neural Translation Using Linguistic Feature-based Relevance Mechanisms. ACM Trans. Asian Low-Resource Lang. Inf. Process. 2023, 22, 1–36.

26. El Marouani, M.; Boudaa, T.; Enneya, N. Incorporation of linguistic features in machine translation evaluation of Arabic. In Big Data, Cloud and Applications: Third International Conference, BDCA-2018, Kenitra, Morocco, Revised Selected Papers 3; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; pp. 500–511.

27. Agrawal, R.; Shekhar, M.; Misra, D. Integrating knowledge encoded by linguistic phenomena of Indian languages with neural machine translation. In Mining Intelligence and Knowledge Exploration: 5th International Conference, MIKE-2017, Hyderabad, India, Proceedings 5; Springer International Publishing: Berlin/Heidelberg, Germany, 2017; pp. 287–296.

28. Hlaing, Z.Z.; Thu, Y.K.; Supnithi, T.; Netisopakul, P. Improving neural machine translation with POS-tag features for low-resource language pairs. Heliyon 2022, 8, e10375.

29. Yin, Y.; Su, J.; Wen, H.; Zeng, J.; Liu, Y.; Chen, Y. Pos tag-enhanced coarse-to-fine attention for neural machine translation. ACM Trans. Asian Low-Resour. Lang. Inf. Process. (TALLIP) 2019, 18, 46.

30. Kannada Shallow Parser. LTRC. Available online: https://ltrc.iiit.ac.in/analyzer/kannada/ (accessed on 23 January 2024).

31. Tandon, J.; Sharma, D.M. Unity in diversity: A unified parsing strategy for major indian languages. In Proceedings of the Fourth International Conference on Dependency Linguistics, Pisa, Italy, 18–20 September 2017; pp. 255–265.

32. Popović, M.; Ney, H. Towards automatic error analysis of machine translation output. Comput. Linguist. 2011, 37, 657–688.

33. OpenNMT-py. Available online: https://opennmt.net/OpenNMT-py/ (accessed on 22 July 2024).

34. Klein, G.; Kim, Y.; Deng, Y.; Senellart, J.; Rush, A.M. Opennmt: Open-source toolkit for neural machine translation. arXiv 2017, arXiv:1701.02810.

35. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

36. Popović, M. chrF: Character n-gram F-score for automatic MT evaluation. In Proceedings of the Tenth Workshop on Statistical Machine Translation, Lisbon, Portugal, 17–18 September 2015; pp. 392–395.

37. Tilde Custom Machine Translation. LetsMT. Available online: https://www.letsmt.eu/Bleu.aspx (accessed on 24 January 2024).

38. Moslem, Y. WER Score for Machine Translation. Available online: https://blog.machinetranslation.io/compute-wer-score/ (accessed on 24 February 2024).

39. Krishnamurti, B. The Dravidian Languages; Cambridge University Press: Cambridge, UK, 2003; p. 24.

40. Kekunnaya, P. A Comparative Study of Tulu Dialects; Rashtrakavi Govinda Pai Research Centre Udupi: Udupi, India, 1997; pp. 168–169.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).