Scalable Compositional Digital Twin-Based Monitoring System for Production Management: Design and Development in an Experimental Open-Pit Mine

Abstract

1. Introduction

- The design of an architecture for a production management system (PMS) tailored to the mining operations of our research pilot site, an experimental open-pit mine.

- The development and implementation of a scalable compositional framework for a digital twin (DT) that supports an efficient PMS.

2. Background and Related Works

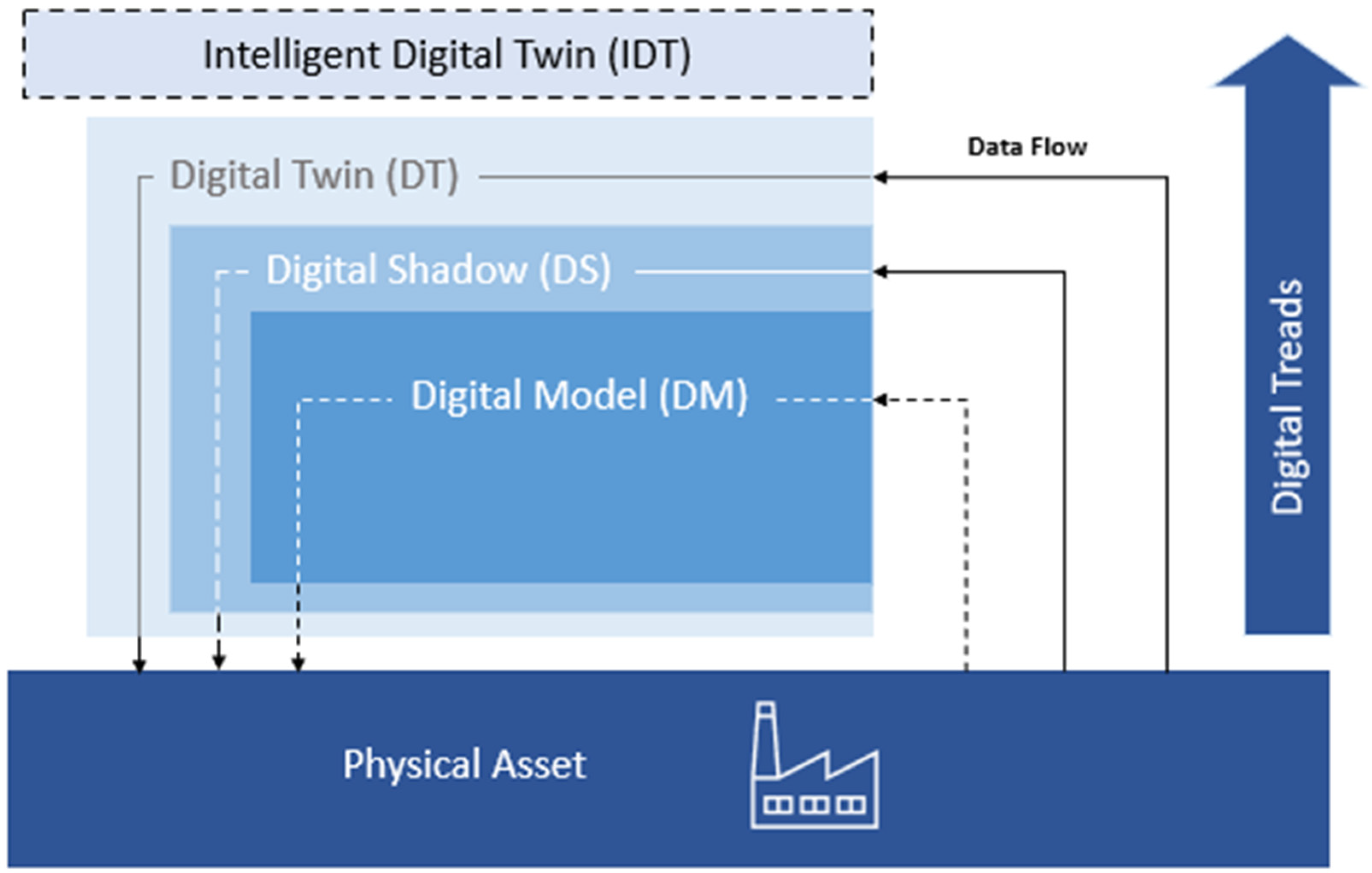

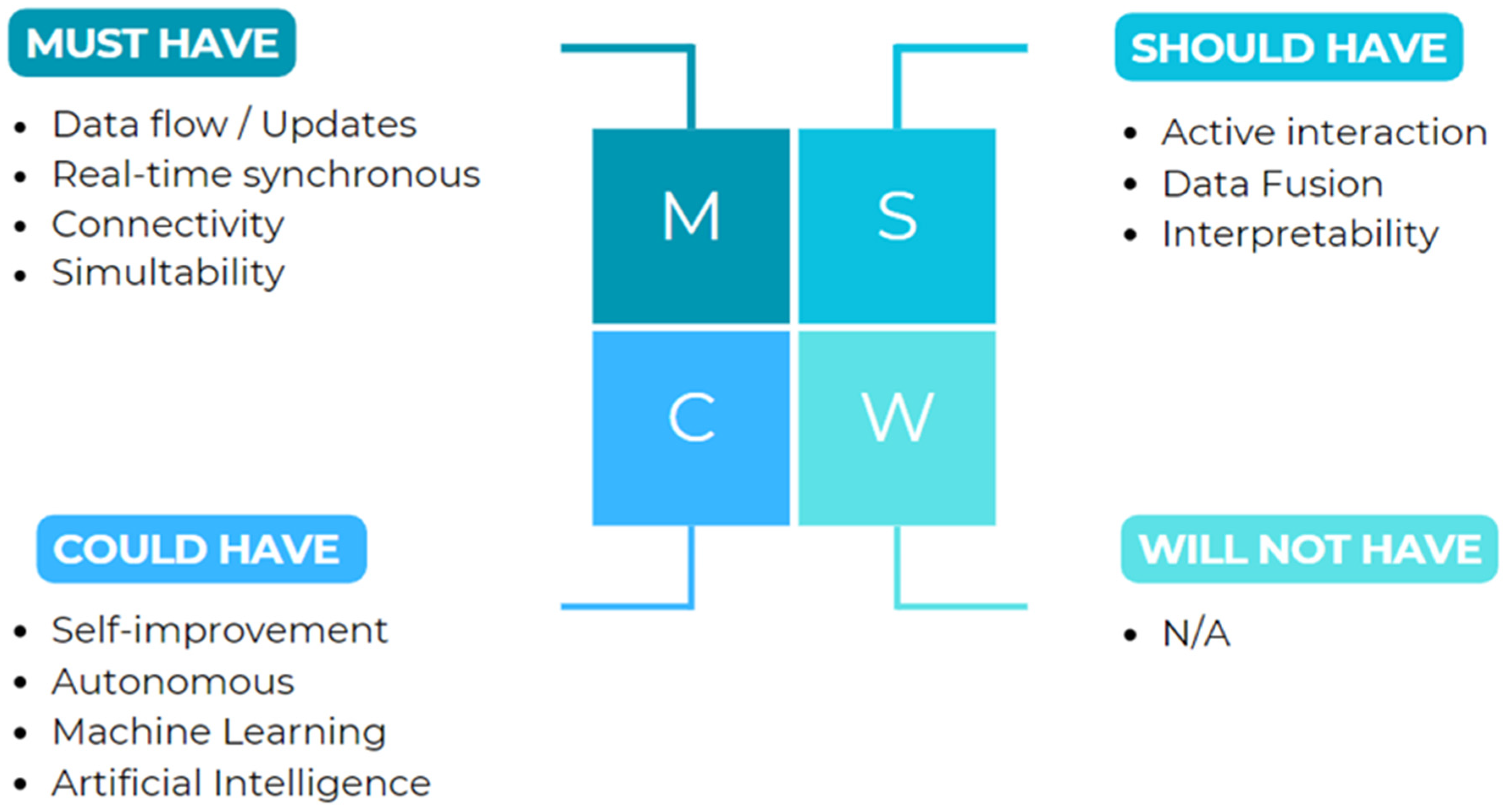

2.1. Digital Twin

2.1.1. Definitions and Associated Attributes

2.1.2. Current State of Digital Twin Research

- Equipment health management: DTs enhance the reliability, availability, and safety of systems and workers through seamless monitoring and informed maintenance decisions [10]. For example, a DT can estimate the remaining useful life (RUL) of equipment components, enabling intelligent design and timely monitoring for predictive maintenance [11].

- Production scheduling: Traditional static production scheduling methods struggle with process uncertainty. DTs can dynamically generate or verify schedules when disruptions occur, and can even communicate with robots to optimize task scheduling.

2.2. Monitoring System

2.3. Production Management System

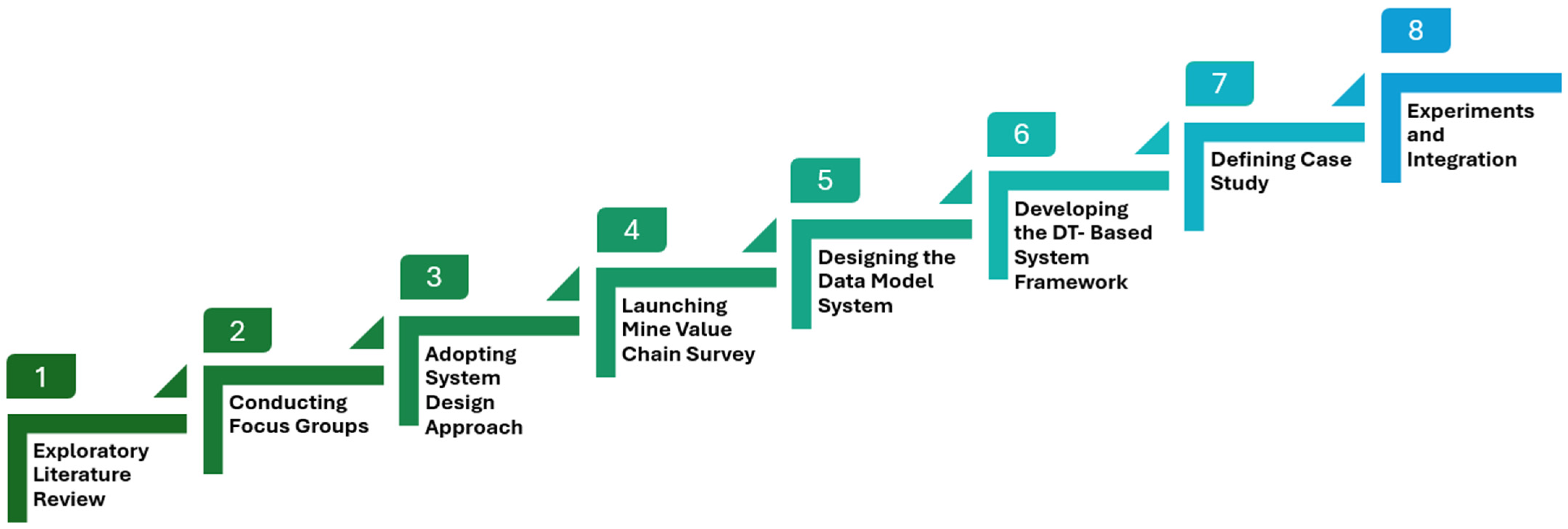

3. Materials and Methods

3.1. Research Methodology

3.2. Data Model

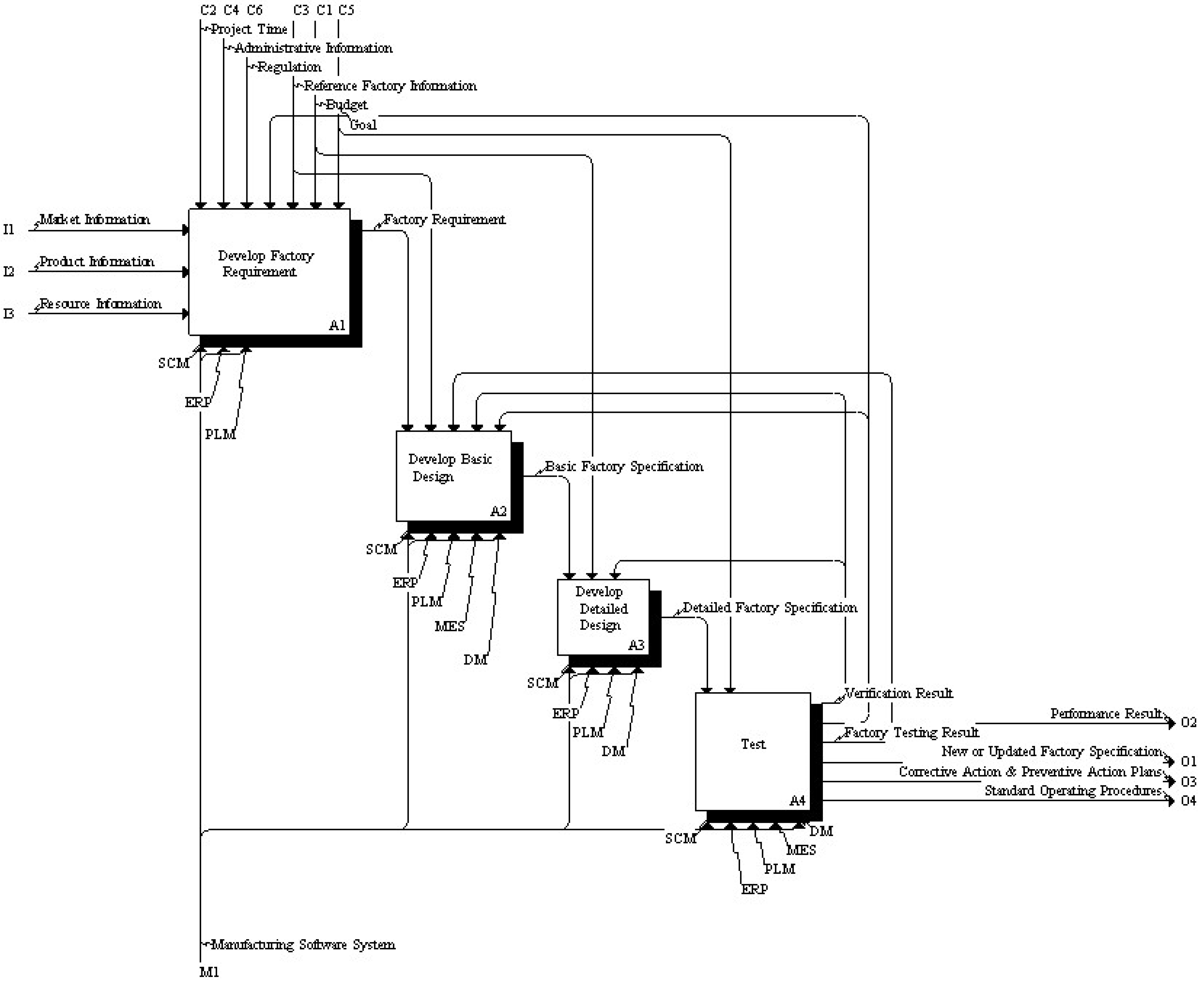

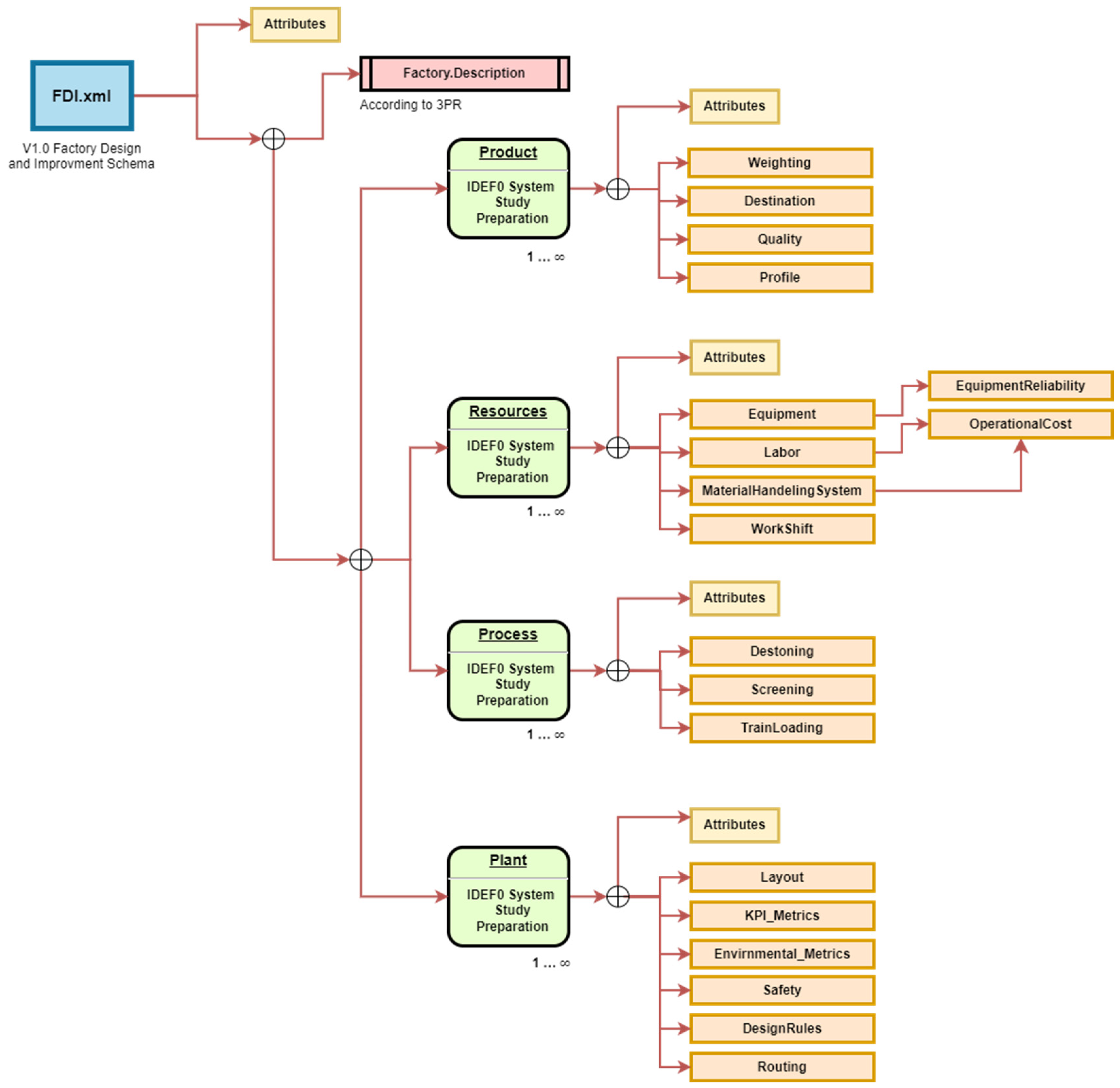

3.2.1. Factory Design and Improvement-Based Data Model

3.2.2. Mine Value Chain Parameters Survey

3.2.3. FDI Data Model Enabling Production Management System

3.3. Autoregressive Integrated Moving Average Model

3.3.1. Theoretical Background

3.3.2. Model Performance Metrics

- Mean Absolute Error

- Root Mean Squared Error

- Mean Absolute Percentage Error
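The three metrics listed above can be sketched in a few lines of Python. This is a minimal illustration based on the standard definitions, not the authors' implementation; the function names are hypothetical, and MAPE is returned as a fraction (multiply by 100 for a percentage), which is an assumption about the reporting convention.

```python
import math


def mae(actual, predicted):
    """Mean Absolute Error: average magnitude of the forecast errors."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root Mean Squared Error: square root of the mean squared error."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def mape(actual, predicted):
    """Mean Absolute Percentage Error, returned as a fraction.

    Undefined when an actual value is zero, so that case is rejected here.
    """
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    return sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


# Illustrative values only (not taken from the mine dataset).
actual = [100.0, 200.0, 400.0]
predicted = [110.0, 190.0, 380.0]
print(mae(actual, predicted), rmse(actual, predicted), mape(actual, predicted))
```

RMSE weights large errors more heavily than MAE, while MAPE expresses the error relative to the size of the observed value, so the three metrics are usually read together.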

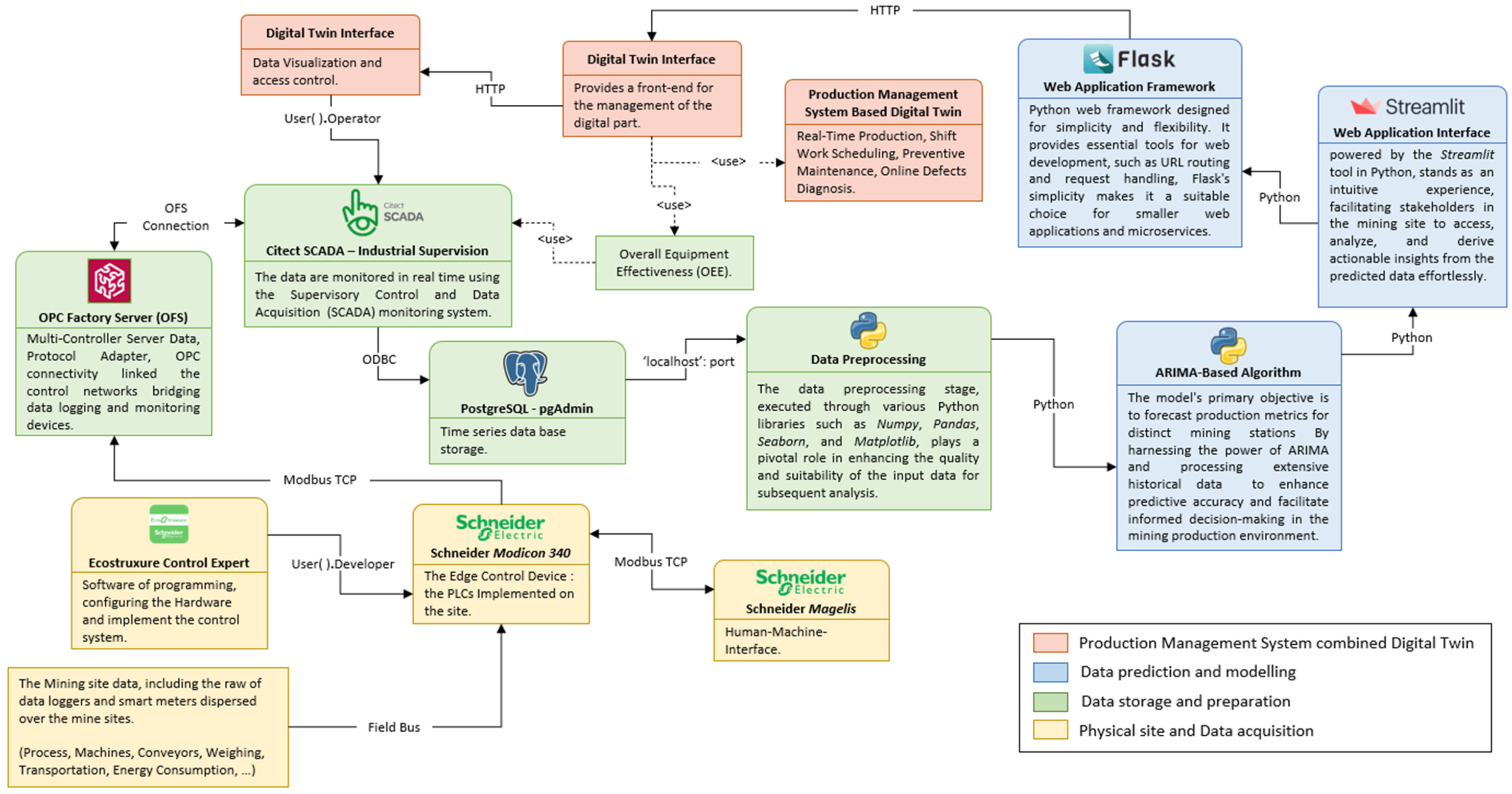

4. Scalable Compositional Digital Twin-Based Production Management System for Real-Time Monitoring and KPI Optimization in Mining Operations

5. Experiment and Results

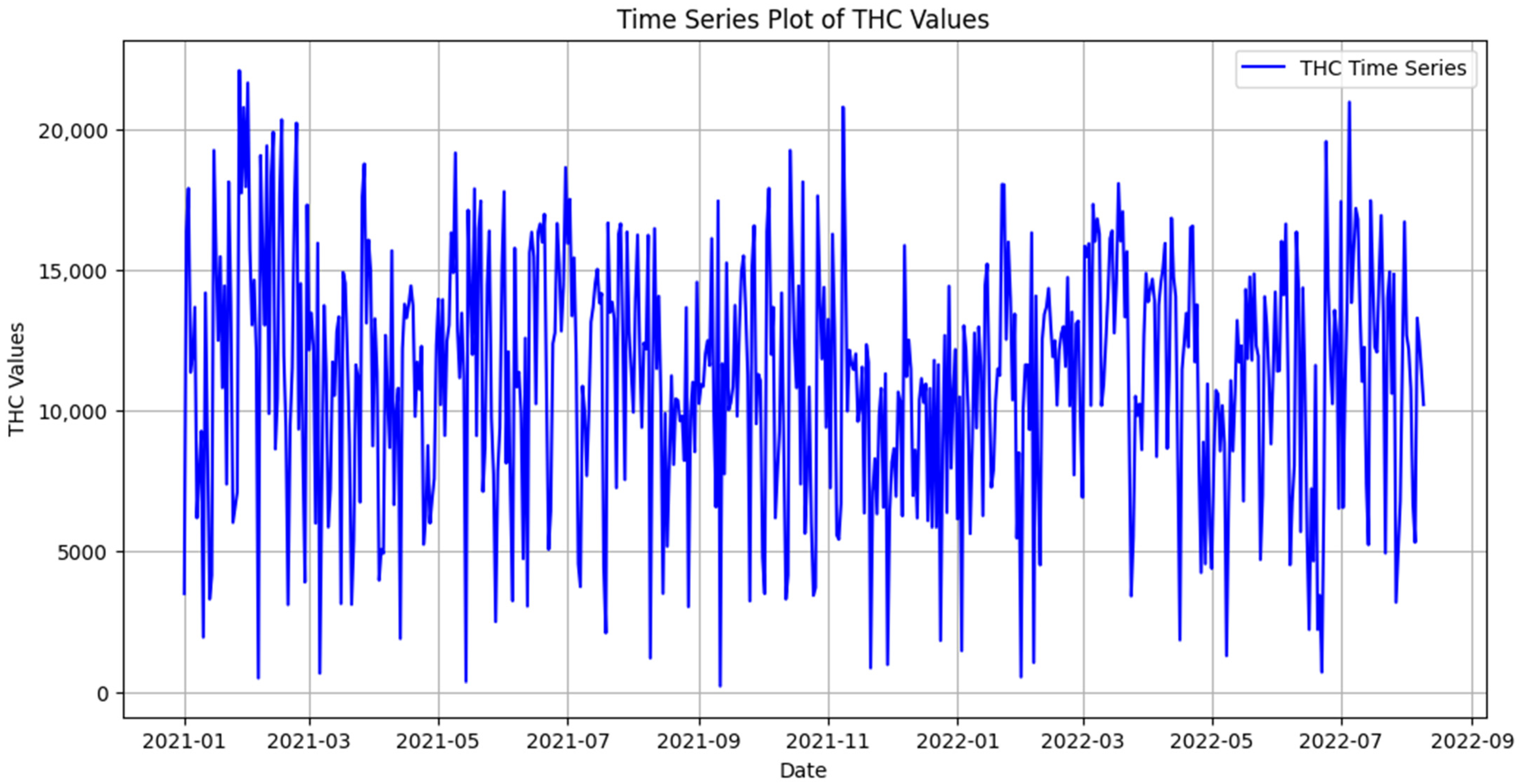

5.1. Data Description

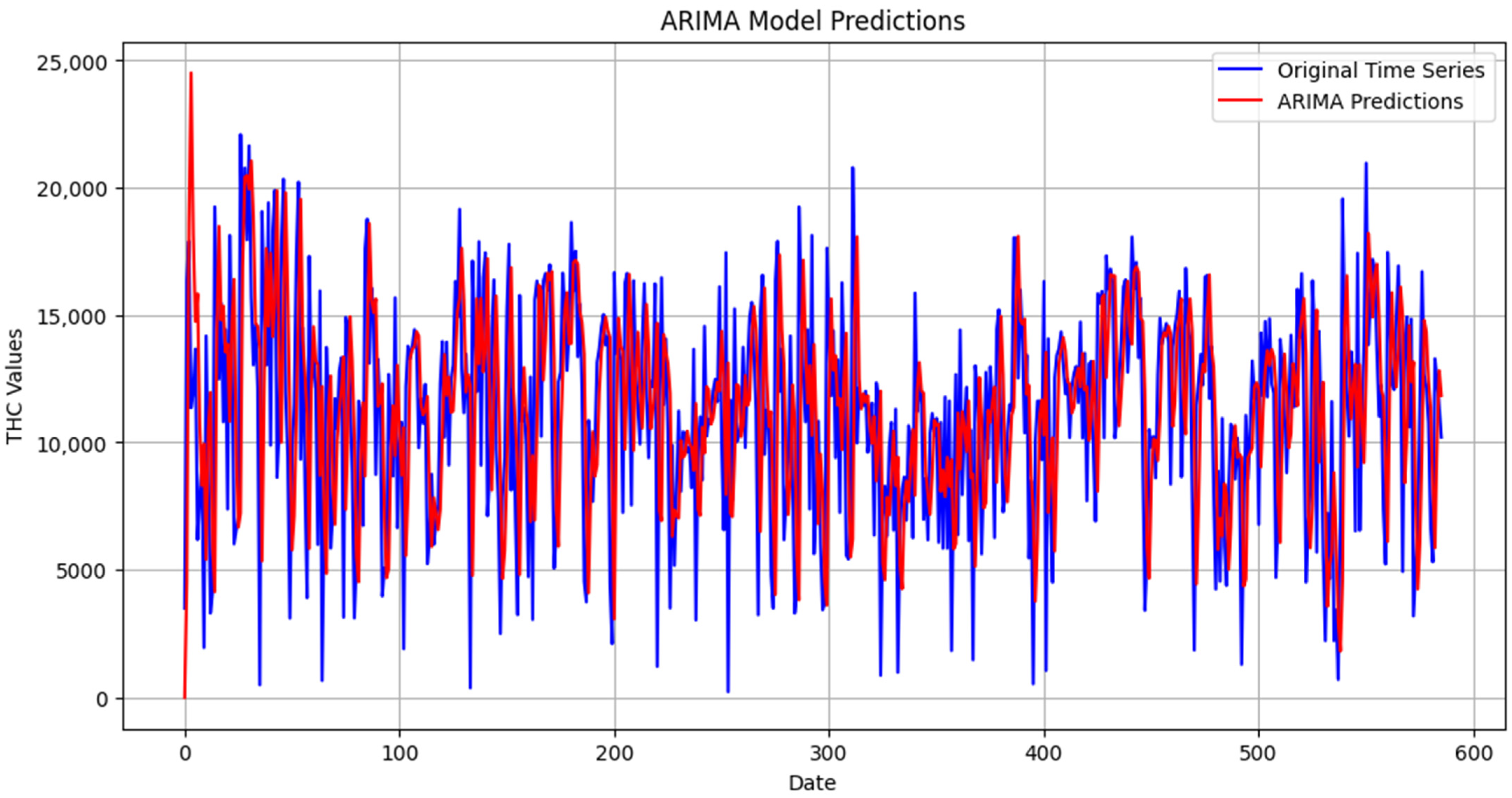

5.2. ARIMA Model Training

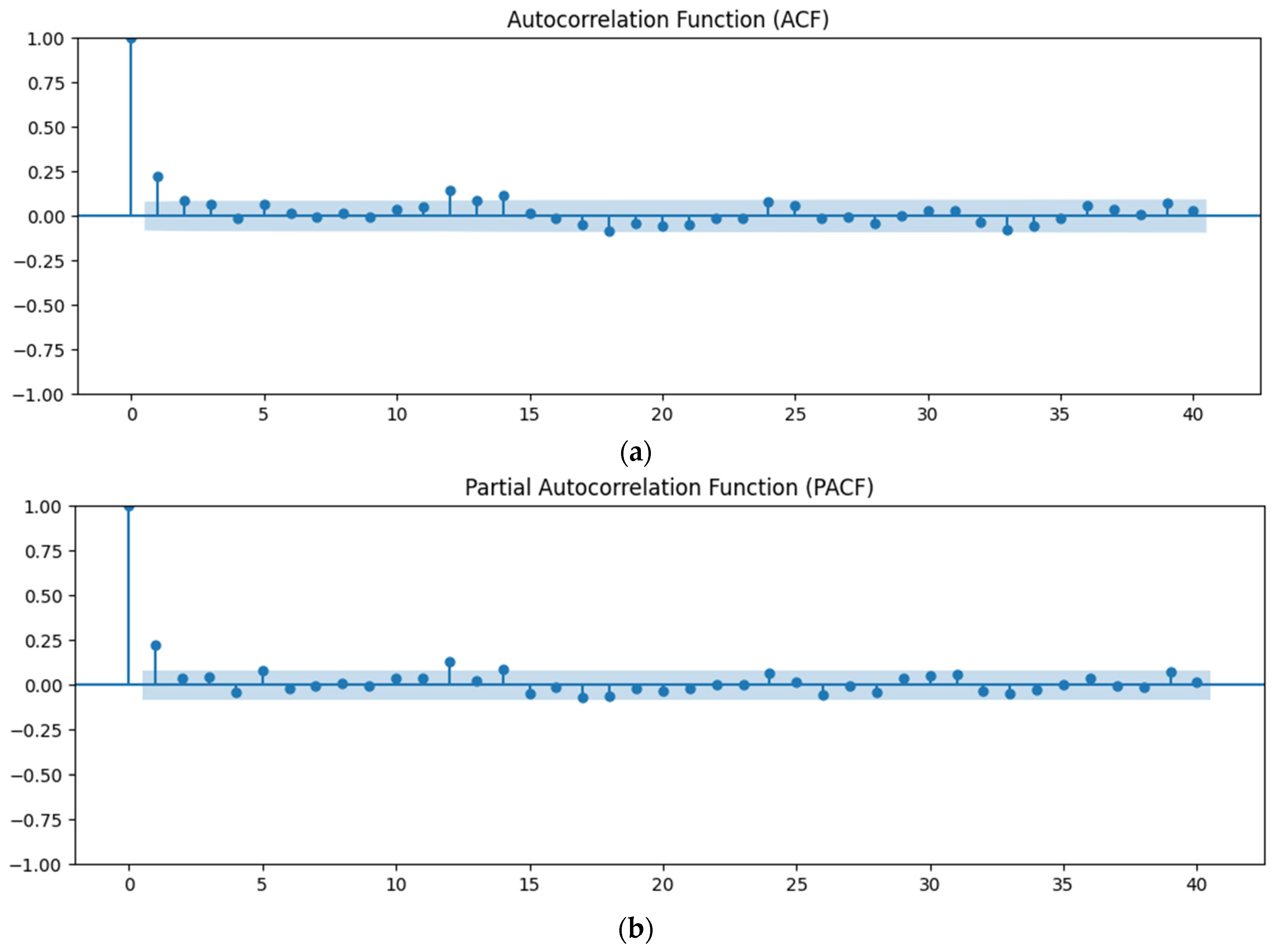

5.2.1. Time Series Stationarity

5.2.2. ARIMA Parameters’ Identification

- Akaike Information Criterion (AIC)

- Bayesian Information Criterion (BIC)
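For reference, the two criteria are commonly defined as follows, where $k$ is the number of estimated parameters, $n$ the number of observations, and $\hat{L}$ the maximized likelihood of the fitted model. These are the standard textbook forms, given as a reminder rather than as a reproduction of the article's own numbered equations.

$$
\mathrm{AIC} = 2k - 2\ln\hat{L}, \qquad \mathrm{BIC} = k\ln(n) - 2\ln\hat{L}
$$

In both cases a lower value indicates a better trade-off between goodness of fit and model complexity. BIC penalizes each additional parameter more heavily than AIC whenever $\ln(n) > 2$, that is, for $n \geq 8$.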

Algorithm 1: AIC and BIC Calculation Algorithm

1. Load the dataset.
2. Define a range for the p, d, and q values: p = range(0, 3), d = range(0, 3), q = range(0, 3).
3. Initialize the minimum AIC and the minimum BIC as infinity.
4. Initialize the best parameters for each criterion as (0, 0, 0).
5. For every combination of p, d, and q values, do:
   - Fit the ARIMA model with the current combination.
   - Calculate the AIC for the model.
   - Calculate the BIC for the model.
   - If the current AIC value is lower than the minimum AIC, update the minimum AIC and its best parameters.
   - If the current BIC value is lower than the minimum BIC, update the minimum BIC and its best parameters.
6. Print the best parameters identified based on both AIC and BIC.
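Algorithm 1 can be sketched in Python as follows. This is a hedged illustration, not the authors' code: `select_orders` and `fit_arima` are hypothetical names, the default fitting function assumes the `statsmodels` package is installed, and skipping combinations that fail to fit is an added assumption that Algorithm 1 does not mention.

```python
import itertools
import math


def fit_arima(series, order):
    """Fit one ARIMA(p, d, q) model; assumes statsmodels is available."""
    from statsmodels.tsa.arima.model import ARIMA  # imported lazily on purpose

    return ARIMA(series, order=order).fit()


def select_orders(series, fit=fit_arima, p_values=range(0, 3),
                  d_values=range(0, 3), q_values=range(0, 3)):
    """Grid-search (p, d, q) and track the best order per criterion."""
    # Steps 3 and 4: minimum values start at infinity, orders at (0, 0, 0).
    best = {"aic": (math.inf, (0, 0, 0)), "bic": (math.inf, (0, 0, 0))}

    # Step 5: try every combination of p, d, and q.
    for order in itertools.product(p_values, d_values, q_values):
        try:
            result = fit(series, order)
        except Exception:
            continue  # assumption: ignore orders the optimizer cannot fit

        for criterion in ("aic", "bic"):
            value = getattr(result, criterion)
            if value < best[criterion][0]:
                best[criterion] = (value, order)

    return best


# Usage (requires statsmodels and a loaded time series named `series`):
# best = select_orders(series)
# print("Best by AIC:", best["aic"], "Best by BIC:", best["bic"])
```

Passing the fitting function as a parameter keeps the search loop independent of any particular library, which also makes it easy to exercise with a stub.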

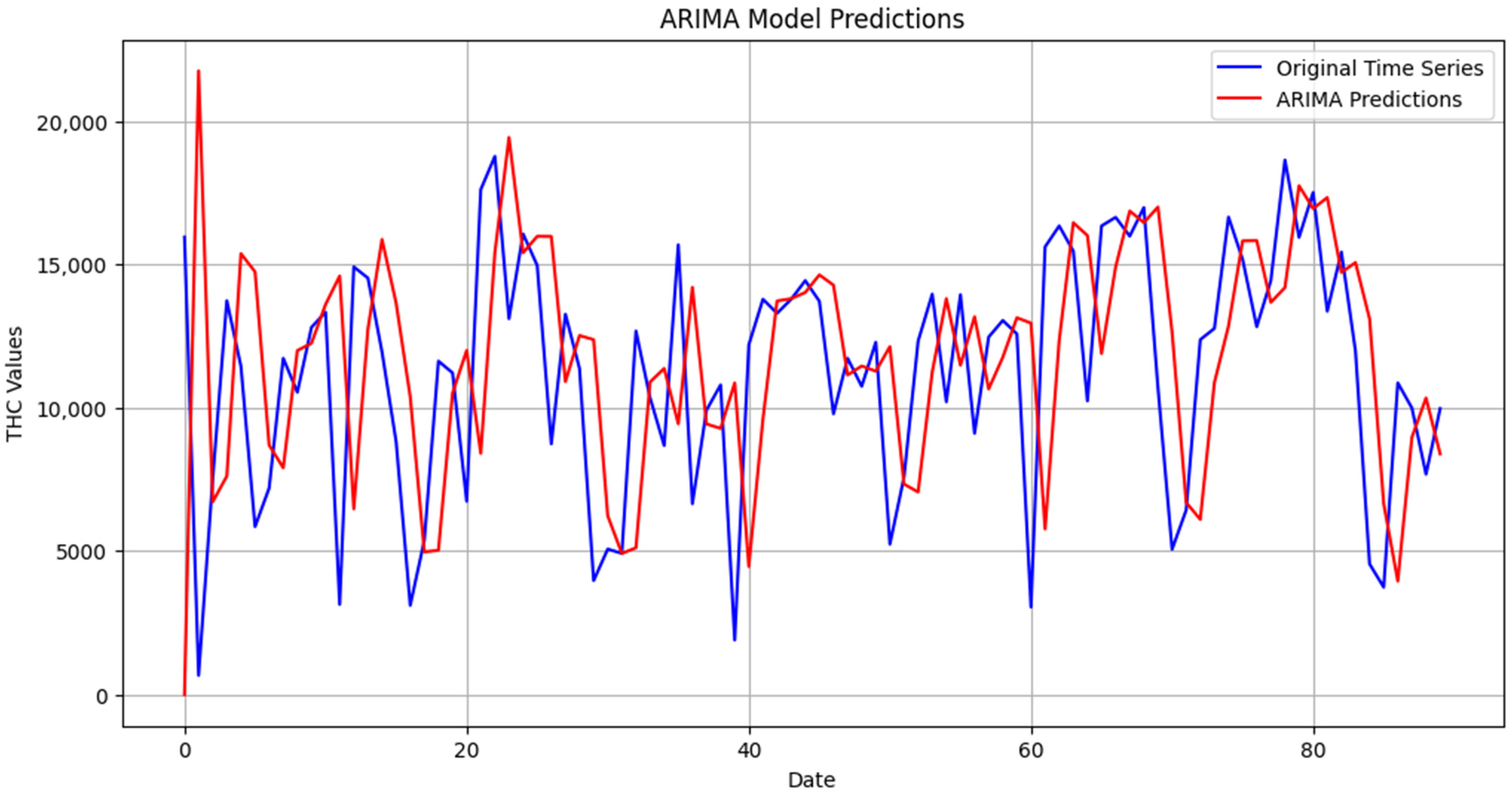

5.2.3. Dataset Splitting

5.3. Model Evaluation

5.3.1. Performance Metrics for ARIMA Model Predictions

5.3.2. Inference and Model Validation with External Time Series

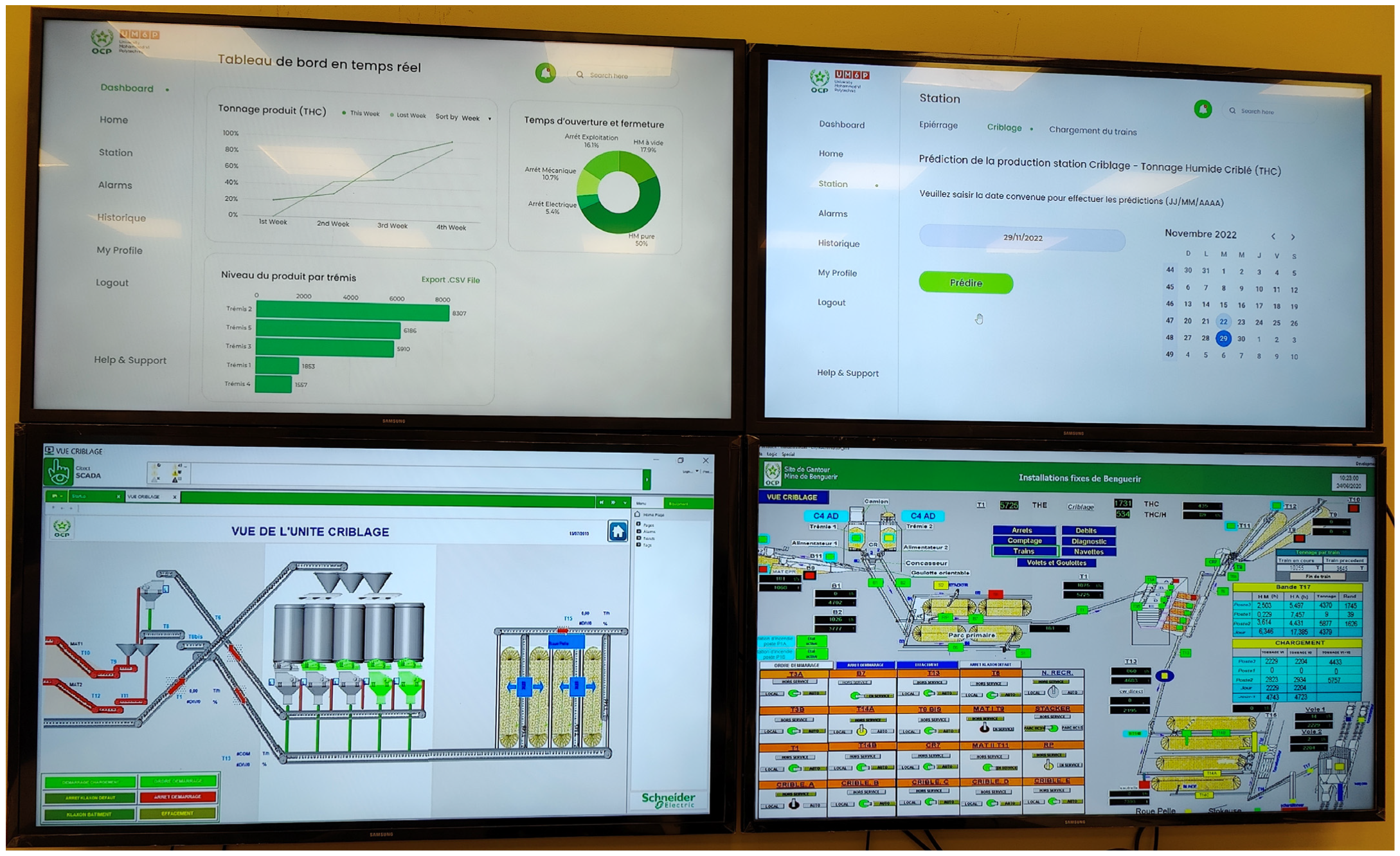

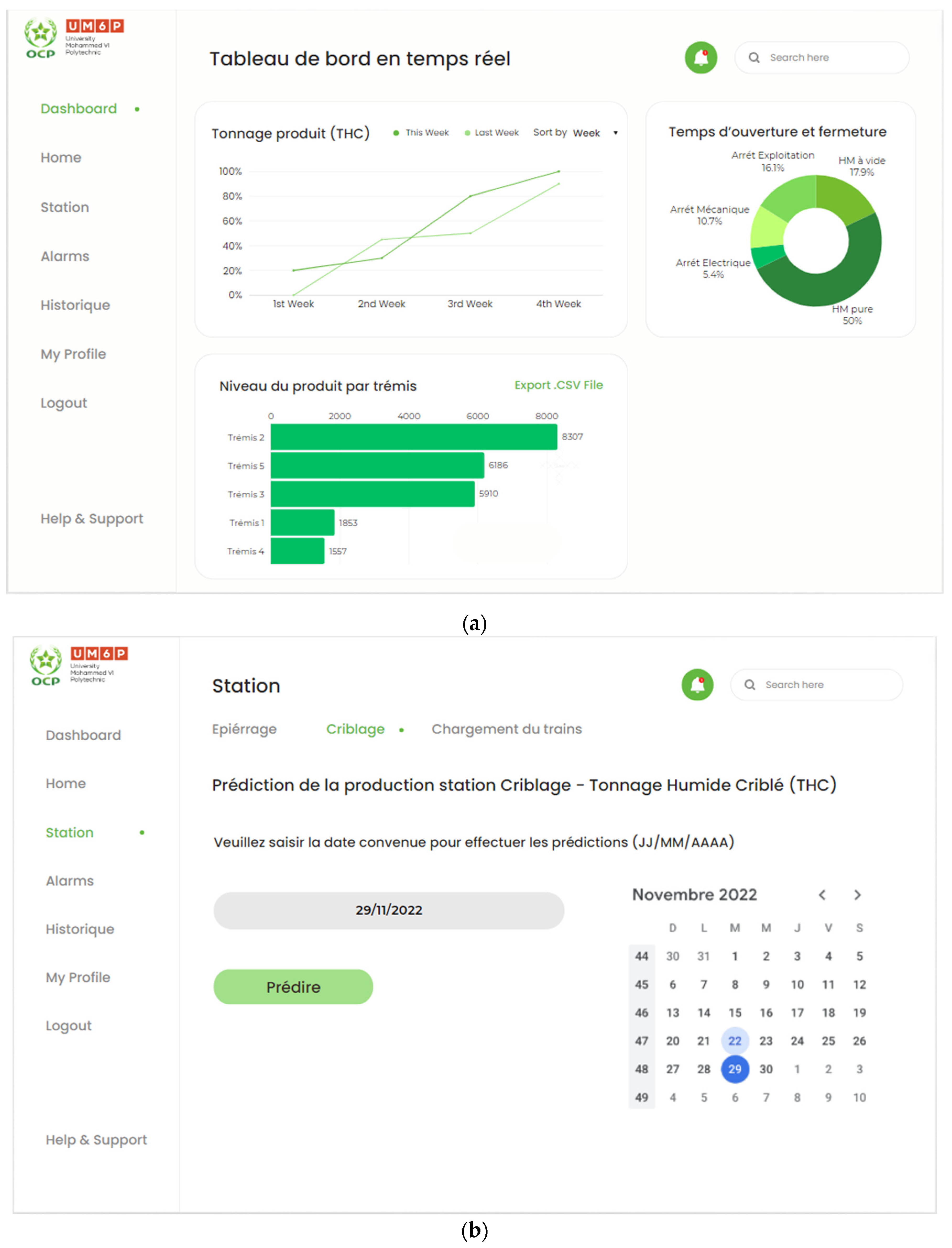

5.4. Proposed Production Management System-Based Digital Twin Implementation

5.4.1. Implementation Setup

5.4.2. Production Management System Digital Dashboard

6. Conclusions and Outlook

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wang, K.-J.; Lee, Y.-H.; Angelica, S. Digital Twin Design for Real-Time Monitoring—A Case Study of Die Cutting Machine. Int. J. Prod. Res. 2021, 59, 6471–6485.

- Shangguan, D.; Chen, L.; Ding, J. A Digital Twin-Based Approach for the Fault Diagnosis and Health Monitoring of a Complex Satellite System. Symmetry 2020, 12, 1307.

- Elbazi, N.; Tigami, A.; Laayati, O.; Maghraoui, A.E.; Chebak, A.; Mabrouki, M. Digital Twin-Enabled Monitoring of Mining Haul Trucks with Expert System Integration: A Case Study in an Experimental Open-Pit Mine. In Proceedings of the 2023 5th Global Power, Energy and Communication Conference (GPECOM), Cappadocia, Turkiye, 14 June 2023; IEEE: Nevsehir, Turkiye; pp. 168–174.

- Slob, N.; Hurst, W. Digital Twins and Industry 4.0 Technologies for Agricultural Greenhouses. Smart Cities 2022, 5, 1179–1192.

- El Bazi, N.; Mabrouki, M.; Laayati, O.; Ouhabi, N.; El Hadraoui, H.; Hammouch, F.-E.; Chebak, A. Generic Multi-Layered Digital-Twin-Framework-Enabled Asset Lifecycle Management for the Sustainable Mining Industry. Sustainability 2023, 15, 3470.

- Elbazi, N.; Mabrouki, M.; Chebak, A.; Hammouch, F. Digital Twin Architecture for Mining Industry: Case Study of a Stacker Machine in an Experimental Open-Pit Mine. In Proceedings of the 2022 4th Global Power, Energy and Communication Conference (GPECOM), Cappadocia, Turkiye, 14 June 2022; IEEE: Nevsehir, Turkiye; pp. 232–237.

- Popescu, D.; Dragomir, M.; Popescu, S.; Dragomir, D. Building Better Digital Twins for Production Systems by Incorporating Environmental Related Functions—Literature Analysis and Determining Alternatives. Appl. Sci. 2022, 12, 8657.

- Vodyaho, A.I.; Zhukova, N.A.; Shichkina, Y.A.; Anaam, F.; Abbas, S. About One Approach to Using Dynamic Models to Build Digital Twins. Designs 2022, 6, 25.

- De Benedictis, A.; Flammini, F.; Mazzocca, N.; Somma, A.; Vitale, F. Digital Twins for Anomaly Detection in the Industrial Internet of Things: Conceptual Architecture and Proof-of-Concept. IEEE Trans. Ind. Inform. 2023, 19, 11553–11563.

- Torzoni, M.; Tezzele, M.; Mariani, S.; Manzoni, A.; Willcox, K.E. A Digital Twin Framework for Civil Engineering Structures. Comput. Methods Appl. Mech. Eng. 2024, 418, 116584.

- Kibira, D.; Shao, G.; Venketesh, R. Building a Digital Twin of an Automated Robot Workcell. In Proceedings of the 2023 Annual Modeling and Simulation Conference (ANNSIM), Hamilton, ON, Canada, 23–26 May 2023; IEEE: New York, NY, USA, 2023; pp. 196–207.

- Vilarinho, S.; Lopes, I.; Sousa, S. Design Procedure to Develop Dashboards Aimed at Improving the Performance of Productive Equipment and Processes. Procedia Manuf. 2017, 11, 1634–1641.

- Fathy, Y.; Jaber, M.; Nadeem, Z. Digital Twin-Driven Decision Making and Planning for Energy Consumption. J. Sens. Actuator Netw. 2021, 10, 37.

- Piras, G.; Agostinelli, S.; Muzi, F. Digital Twin Framework for Built Environment: A Review of Key Enablers. Energies 2024, 17, 436.

- Kandavalli, S.R.; Khan, A.M.; Iqbal, A.; Jamil, M.; Abbas, S.; Laghari, R.A.; Cheok, Q. Application of Sophisticated Sensors to Advance the Monitoring of Machining Processes: Analysis and Holistic Review. Int. J. Adv. Manuf. Technol. 2023, 125, 989–1014.

- Balogh, M.; Földvári, A.; Varga, P. Digital Twins in Industry 5.0: Challenges in Modeling and Communication. In Proceedings of the NOMS 2023-2023 IEEE/IFIP Network Operations and Management Symposium, Miami, FL, USA, 8 May 2023; IEEE: Miami, FL, USA; pp. 1–6.

- Zhao, Y.; Yan, L.; Wu, J.; Song, X. Design and Implementation of a Digital Twin System for Log Rotary Cutting Optimization. Future Internet 2024, 16, 7.

- Peng, A.; Ma, Y.; Huang, K.; Wang, L. Digital Twin-Driven Framework for Fatigue Life Prediction of Welded Structures Considering Residual Stress. Int. J. Fatigue 2024, 181, 108144.

- Sifat, M.M.H.; Choudhury, S.M.; Das, S.K.; Ahamed, M.H.; Muyeen, S.M.; Hasan, M.M.; Ali, M.F.; Tasneem, Z.; Islam, M.M.; Islam, M.R.; et al. Towards Electric Digital Twin Grid: Technology and Framework Review. Energy AI 2023, 11, 100213.

- Singh, S.K.; Kumar, M.; Tanwar, S.; Park, J.H. GRU-Based Digital Twin Framework for Data Allocation and Storage in IoT-Enabled Smart Home Networks. Future Gener. Comput. Syst. 2024, 153, 391–402.

- El Hadraoui, H.; Laayati, O.; Guennouni, N.; Chebak, A.; Zegrari, M. A Data-Driven Model for Fault Diagnosis of Induction Motor for Electric Powertrain. In Proceedings of the 2022 IEEE 21st Mediterranean Electrotechnical Conference (MELECON), Palermo, Italy, 14–16 June 2022; pp. 336–341.

- Onaji, I.; Tiwari, D.; Soulatiantork, P.; Song, B.; Tiwari, A. Digital Twin in Manufacturing: Conceptual Framework and Case Studies. Int. J. Comput. Integr. Manuf. 2022, 35, 831–858.

- Allam, Z.; Sharifi, A.; Bibri, S.E.; Jones, D.S.; Krogstie, J. The Metaverse as a Virtual Form of Smart Cities: Opportunities and Challenges for Environmental, Economic, and Social Sustainability in Urban Futures. Smart Cities 2022, 5, 771–801.

- Asad, U.; Khan, M.; Khalid, A.; Lughmani, W.A. Human-Centric Digital Twins in Industry: A Comprehensive Review of Enabling Technologies and Implementation Strategies. Sensors 2023, 23, 3938.

- Zhu, Z.; Liu, C.; Xu, X. Visualisation of the Digital Twin Data in Manufacturing by Using Augmented Reality. Procedia CIRP 2019, 81, 898–903.

- Elbazi, N.; Hadraoui, H.E.; Laayati, O.; Maghraoui, A.E.; Chebak, A.; Mabrouki, M. Digital Twin in Mining Industry: A Study on Automation Commissioning Efficiency and Safety Implementation of a Stacker Machine in an Open-Pit Mine. In Proceedings of the 2023 5th Global Power, Energy and Communication Conference (GPECOM), Cappadocia, Turkiye, 14 June 2023; pp. 548–553.

- Mohammed, K.; Abdelhafid, M.; Kamal, K.; Ismail, N.; Ilias, A. Intelligent Driver Monitoring System: An Internet of Things-Based System for Tracking and Identifying the Driving Behavior. Comput. Stand. Interfaces 2023, 84, 103704.

- Choi, S.; Woo, J.; Kim, J.; Lee, J.Y. Digital Twin-Based Integrated Monitoring System: Korean Application Cases. Sensors 2022, 22, 5450.

- Bendaouia, A.; Abdelwahed, E.H.; Qassimi, S.; Boussetta, A.; Benzakour, I.; Amar, O.; Hasidi, O. Artificial Intelligence for Enhanced Flotation Monitoring in the Mining Industry: A ConvLSTM-Based Approach. Comput. Chem. Eng. 2024, 180, 108476.

- Choi, S.; Kang, G.; Jung, K.; Kulvatunyou, B.; Morris, K. Applications of the Factory Design and Improvement Reference Activity Model. In Advances in Production Management Systems. Initiatives for a Sustainable World; Nääs, I., Vendrametto, O., Mendes Reis, J., Gonçalves, R.F., Silva, M.T., Von Cieminski, G., Kiritsis, D., Eds.; IFIP Advances in Information and Communication Technology; Springer International Publishing: Cham, Switzerland, 2016; Volume 488, pp. 697–704. ISBN 978-3-319-51132-0.

- Saihi, A.; Awad, M.; Ben-Daya, M. Quality 4.0: Leveraging Industry 4.0 Technologies to Improve Quality Management Practices—A Systematic Review. Int. J. Qual. Reliab. Manag. 2021, 40, 628–650.

- Alexopoulos, K.; Tsoukaladelis, T.; Dimitrakopoulou, C.; Nikolakis, N.; Eytan, A. An Approach towards Zero Defect Manufacturing by Combining IIoT Data with Industrial Social Networking. Procedia Comput. Sci. 2023, 217, 403–412.

- Foit, K. Agent-Based Modelling of Manufacturing Systems in the Context of “Industry 4.0”. J. Phys. Conf. Ser. 2022, 2198, 012064.

- Jung, K.; Choi, S.; Kulvatunyou, B.; Cho, H.; Morris, K.C. A Reference Activity Model for Smart Factory Design and Improvement. Prod. Plan. Control 2017, 28, 108–122.

- Zayed, S.M.; Attiya, G.M.; El-Sayed, A.; Hemdan, E.E.-D. A Review Study on Digital Twins with Artificial Intelligence and Internet of Things: Concepts, Opportunities, Challenges, Tools and Future Scope. Multimed. Tools Appl. 2023, 82, 47081–47107.

- Choi, S.; Jun, C.; Zhao, W.B.; Do Noh, S. Digital Manufacturing in Smart Manufacturing Systems: Contribution, Barriers, and Future Directions. In Advances in Production Management Systems: Innovative Production Management Towards Sustainable Growth; Umeda, S., Nakano, M., Mizuyama, H., Hibino, H., Kiritsis, D., Von Cieminski, G., Eds.; IFIP Advances in Information and Communication Technology; Springer International Publishing: Cham, Switzerland, 2015; Volume 460, pp. 21–29. ISBN 978-3-319-22758-0.

- Choi, S.; Kim, B.H.; Do Noh, S. A Diagnosis and Evaluation Method for Strategic Planning and Systematic Design of a Virtual Factory in Smart Manufacturing Systems. Int. J. Precis. Eng. Manuf. 2015, 16, 1107–1115.

- Liu, J.; Ji, Q.; Zhang, X.; Chen, Y.; Zhang, Y.; Liu, X.; Tang, M. Digital Twin Model-Driven Capacity Evaluation and Scheduling Optimization for Ship Welding Production Line. J. Intell. Manuf. 2023, 34.

- Yadav, R.S.; Mehta, V.; Tiwari, A. An Application of Time Series ARIMA Forecasting Model for Predicting Nutri Cereals Area in India. 2022. Available online: https://www.thepharmajournal.com/archives/2022/vol11issue3S/PartQ/S-11-3-85-221.pdf (accessed on 27 January 2024).

- Ning, Y.; Kazemi, H.; Tahmasebi, P. A Comparative Machine Learning Study for Time Series Oil Production Forecasting: ARIMA, LSTM, and Prophet. Comput. Geosci. 2022, 164, 105126.

- Fan, D.; Sun, H.; Yao, J.; Zhang, K.; Yan, X.; Sun, Z. Well Production Forecasting Based on ARIMA-LSTM Model Considering Manual Operations. Energy 2021, 220, 119708.

- Implementation of Time Series Forecasting with Box Jenkins ARIMA Method on Wood Production of Indonesian Forests. AIP Conf. Proc. Available online: https://pubs.aip.org/aip/acp/article-abstract/2738/1/060004/2894351/Implementation-of-time-series-forecasting-with-Box?redirectedFrom=fulltext (accessed on 29 January 2024).

- El Maghraoui, A.; Ledmaoui, Y.; Laayati, O.; El Hadraoui, H.; Chebak, A. Smart Energy Management: A Comparative Study of Energy Consumption Forecasting Algorithms for an Experimental Open-Pit Mine. Energies 2022, 15, 4569.

- Pajpach, M.; Pribiš, R.; Drahoš, P.; Kučera, E.; Haffner, O. Design of an Educational-Development Platform for Digital Twins Using the Interoperability of the OPC UA Standard and Industry 4.0 Components. In Proceedings of the 2023 3rd International Conference on Electrical, Computer, Communications and Mechatronics Engineering (ICECCME), Tenerife, Spain, 19 July 2023; IEEE: Tenerife, Spain; pp. 1–6.

- Mufid, M.R.; Basofi, A.; Al Rasyid, M.U.H.; Rochimansyah, I.F.; Rokhim, A. Design an MVC Model Using Python for Flask Framework Development. In Proceedings of the 2019 International Electronics Symposium (IES), Surabaya, Indonesia, 27–28 September 2019; pp. 214–219.

- Srikanth, G.; Reddy, M.S.K.; Sharma, S.; Sindhu, S.; Reddy, R. Designing a Flask Web Application for Academic Forum and Faculty Rating Using Sentiment Analysis. AIP Conf. Proc. 2023, 2477, 030035.

- Schulze, A.; Brand, F.; Geppert, J.; Böl, G.-F. Digital Dashboards Visualizing Public Health Data: A Systematic Review. Front. Public Health 2023, 11, 999958.

- Gonçalves, C.T.; Gonçalves, M.J.A.; Campante, M.I. Developing Integrated Performance Dashboards Visualisations Using Power BI as a Platform. Information 2023, 14, 614.

- Laayati, O.; El Hadraoui, H.; El Magharaoui, A.; El-Bazi, N.; Bouzi, M.; Chebak, A.; Guerrero, J.M. An AI-Layered with Multi-Agent Systems Architecture for Prognostics Health Management of Smart Transformers: A Novel Approach for Smart Grid-Ready Energy Management Systems. Energies 2022, 15, 7217.

- Islam, M.A.; Sufian, M.A. Employing AI and ML for Data Analytics on Key Indicators: Enhancing Smart City Urban Services and Dashboard-Driven Leadership and Decision-Making. In Technology and Talent Strategies for Sustainable Smart Cities; Singh Dadwal, S., Jahankhani, H., Bowen, G., Yasir Nawaz, I., Eds.; Emerald Publishing Limited: Leeds, UK, 2023; pp. 275–325. ISBN 978-1-83753-023-6.

- Jwo, J.-S.; Lin, C.-S.; Lee, C.-H. An Interactive Dashboard Using a Virtual Assistant for Visualizing Smart Manufacturing. Mob. Inf. Syst. 2021, 2021, e5578239.

- Honghong, S.; Gang, Y.; Haijiang, L.; Tian, Z.; Annan, J. Digital Twin Enhanced BIM to Shape Full Life Cycle Digital Transformation for Bridge Engineering. Autom. Constr. 2023, 147, 104736.

- Li, W.; Li, Y.; Garg, A.; Gao, L. Enhancing Real-Time Degradation Prediction of Lithium-Ion Battery: A Digital Twin Framework with CNN-LSTM-Attention Model. Energy 2024, 286, 129681.

| ICOM | Factors | Description |
|---|---|---|
| Input | Information | Product Information, Market Information, Resource Information, Production Schedule, Labor Information, Equipment Information |
| Output | Key performance indicators (KPIs) | Cycle Time, Lead Time, Production Output, Work-In-Process (WIP), Return-On-Capital-Employed (ROCE) |
| Control | Work process | Product Lifecycle Management (PLM) |
| | Methodology | Operational Excellence (OpEx), PDCA |
| | People | Process Operators, Process Designer, Process Engineers, Process Managers |
| | Technology | Statistical method, stochastic method, simulation, co-simulation |
| Mechanism | Tools/system functions | PLM, Computer-Integrated Manufacturing (CIM) pyramid, SCADA, OEE |

| ADF Statistic | p-Value | Critical Value (1%) | Critical Value (5%) | Critical Value (10%) |
|---|---|---|---|---|
| −19.273189906 | 0.0 | −3.4415777 | −2.8664932 | −2.569407 |
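The Augmented Dickey-Fuller (ADF) figures above are conventionally read by comparing the test statistic with each critical value: when the statistic is more negative than the critical value, the null hypothesis of a unit root is rejected at that significance level and the series is treated as stationary. The sketch below applies that rule to the reported numbers; the function name `adf_decision` is hypothetical and the snippet only interprets the values, it does not recompute the test.

```python
def adf_decision(statistic, critical_values):
    """Map each significance level to True when the unit-root null is rejected.

    The null hypothesis is rejected when the ADF statistic lies below
    (is more negative than) the critical value for that level.
    """
    return {level: statistic < value for level, value in critical_values.items()}


# Values reported in the ADF table.
ADF_STATISTIC = -19.273189906
CRITICAL_VALUES = {"1%": -3.4415777, "5%": -2.8664932, "10%": -2.569407}

decision = adf_decision(ADF_STATISTIC, CRITICAL_VALUES)
print(decision)  # {'1%': True, '5%': True, '10%': True}
```

With these values the unit-root null is rejected at all three levels, which agrees with the reported p-value of 0.0.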

| | Analysis Based on Visual Observations | Akaike Information Criterion (AIC) | Bayesian Information Criterion (BIC) |
|---|---|---|---|
| (p, d, q) | (1, 2, 1) | (1, 2, 2) | (1, 1, 1) |
| Related criterion value | - | 11,436.483 | 11,451.199 |
| Mean absolute error (MAE) | 3553.32 | 3628.66 | 3547.81 |
| Mean absolute percentage error (MAPE) | 0.35 | 0.59 | 0.60 |
| Root mean squared error (RMSE) | 4386.69 | 4386.69 | 4383.29 |

| Percentage of Dataset Used per Split | Iteration (Split #) | Time Series Cross-Validator: Training Set (# of Rows Used) | Time Series Cross-Validator: Testing Set (# of Rows Used) |
|---|---|---|---|
| 29.18% | 1st | 88 | 83 |
| 43.34% | 2nd | 171 | 83 |
| 57.51% | 3rd | 254 | 83 |
| 71.67% | 4th | 337 | 83 |
| 85.84% | 5th | 420 | 83 |
| 100% | 6th | 503 | 83 |
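The split sizes in the table above are consistent with an expanding-window time series cross-validation, of the kind implemented by scikit-learn's `TimeSeriesSplit`, in which every split keeps a fixed-size test block and trains on all earlier observations. The following dependency-free sketch reproduces the arithmetic; the function name is hypothetical, and the total of 586 observations is an assumption inferred from the last row (503 + 83), not a figure stated in this section.

```python
def expanding_window_splits(n_samples, n_splits):
    """Yield (train_indices, test_indices) for an expanding-window scheme.

    Each test block has n_samples // (n_splits + 1) rows, and each
    training set contains every row that precedes its test block.
    """
    test_size = n_samples // (n_splits + 1)
    first_test_start = n_samples - n_splits * test_size
    for test_start in range(first_test_start, n_samples, test_size):
        yield range(0, test_start), range(test_start, test_start + test_size)


N_SAMPLES = 586  # assumption: inferred from the final split (503 + 83)
N_SPLITS = 6

sizes = []
percentages = []
for train, test in expanding_window_splits(N_SAMPLES, N_SPLITS):
    sizes.append((len(train), len(test)))
    # Share of the dataset touched by this split (training + testing rows).
    percentages.append(round(100 * (len(train) + len(test)) / N_SAMPLES, 2))

print(sizes)
print(percentages)
```

Because the training window only ever grows forward in time, no split trains on observations that come after its test block, which is the property that makes this scheme suitable for forecasting models.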

| Maximum Value | Minimum Value | Mean Value | Standard Deviation Value |
|---|---|---|---|
| 22,092 | 220 | 11,178.58362 | 4314.32453 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


El Bazi, N.; Laayati, O.; Darkaoui, N.; El Maghraoui, A.; Guennouni, N.; Chebak, A.; Mabrouki, M. Scalable Compositional Digital Twin-Based Monitoring System for Production Management: Design and Development in an Experimental Open-Pit Mine. Designs 2024, 8, 40. https://doi.org/10.3390/designs8030040
