Deep Learning Approaches for Long-Term Global Horizontal Irradiance Forecasting for Microgrids Planning

Abstract

1. Introduction

2. Background Framework

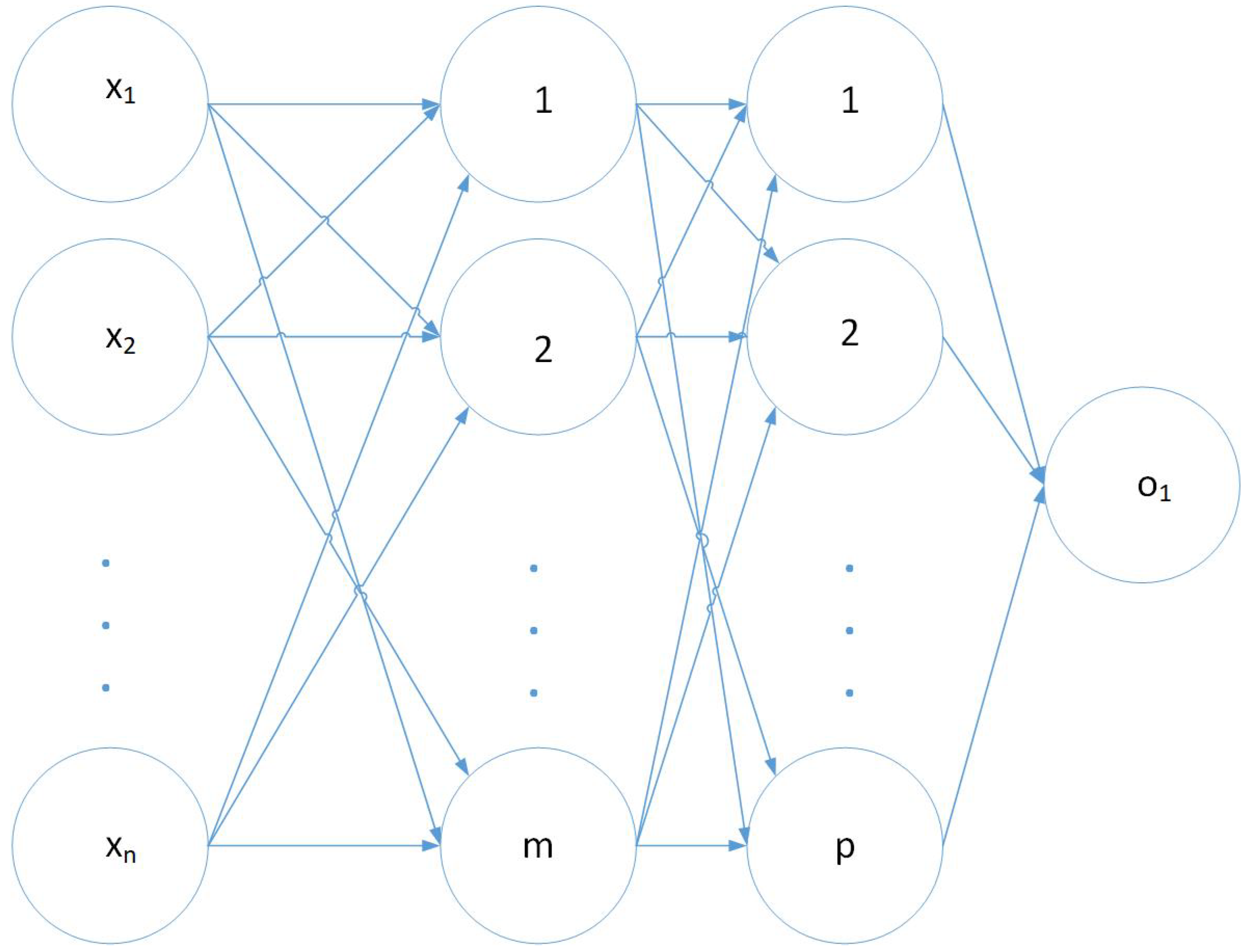

2.1. Feed Forward Neural Networks

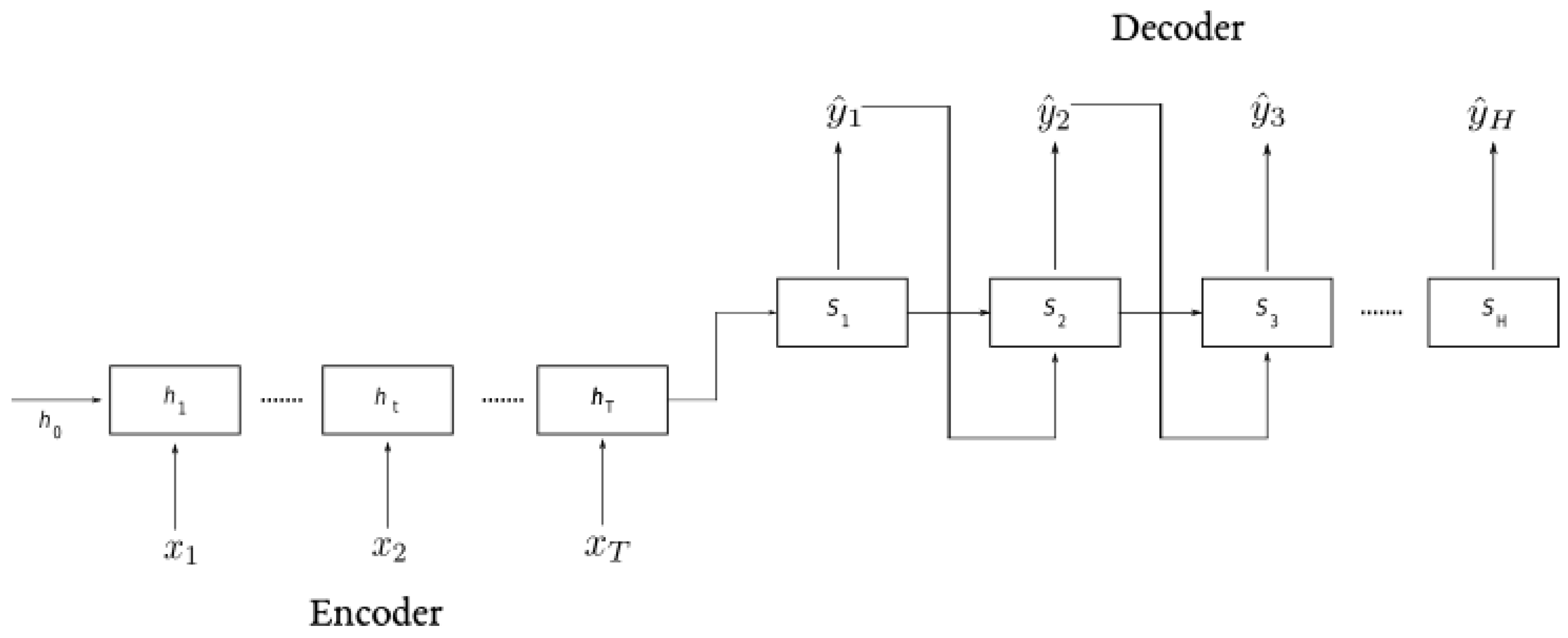

2.2. Recurrent Neural Networks

2.3. Benchmark Methods

3. Case Study

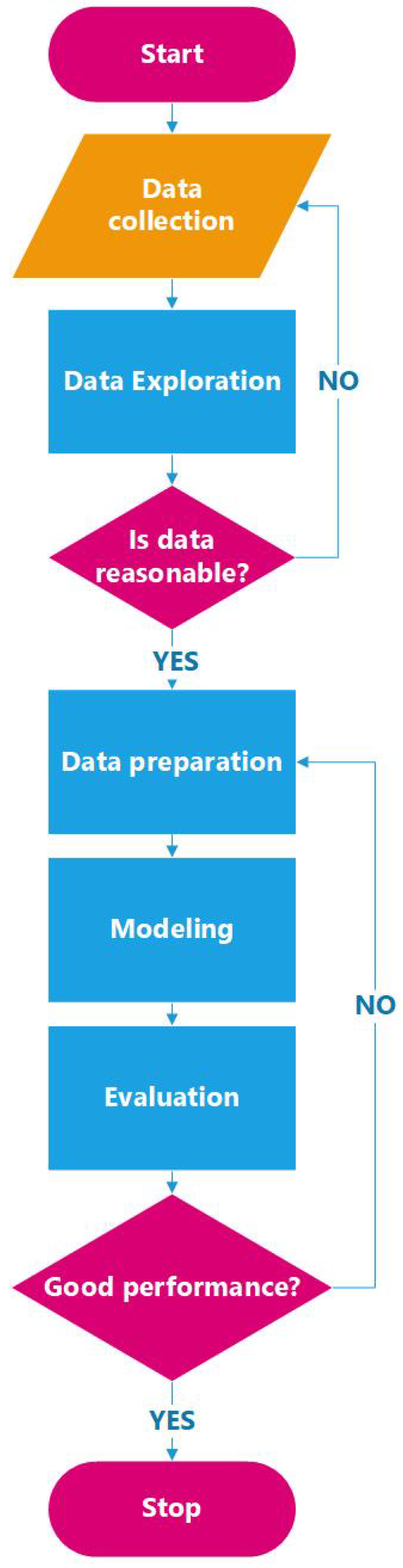

4. Methodology

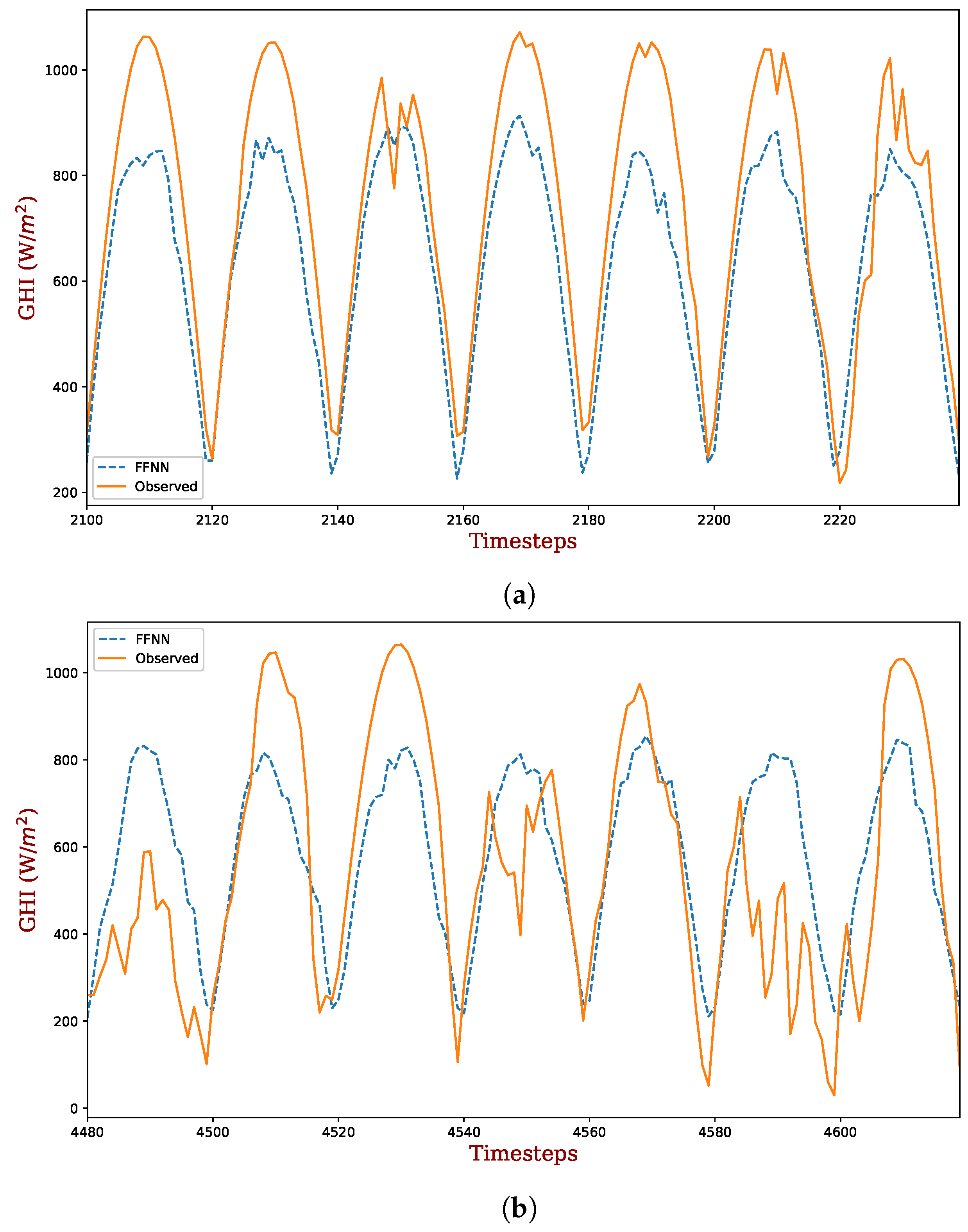

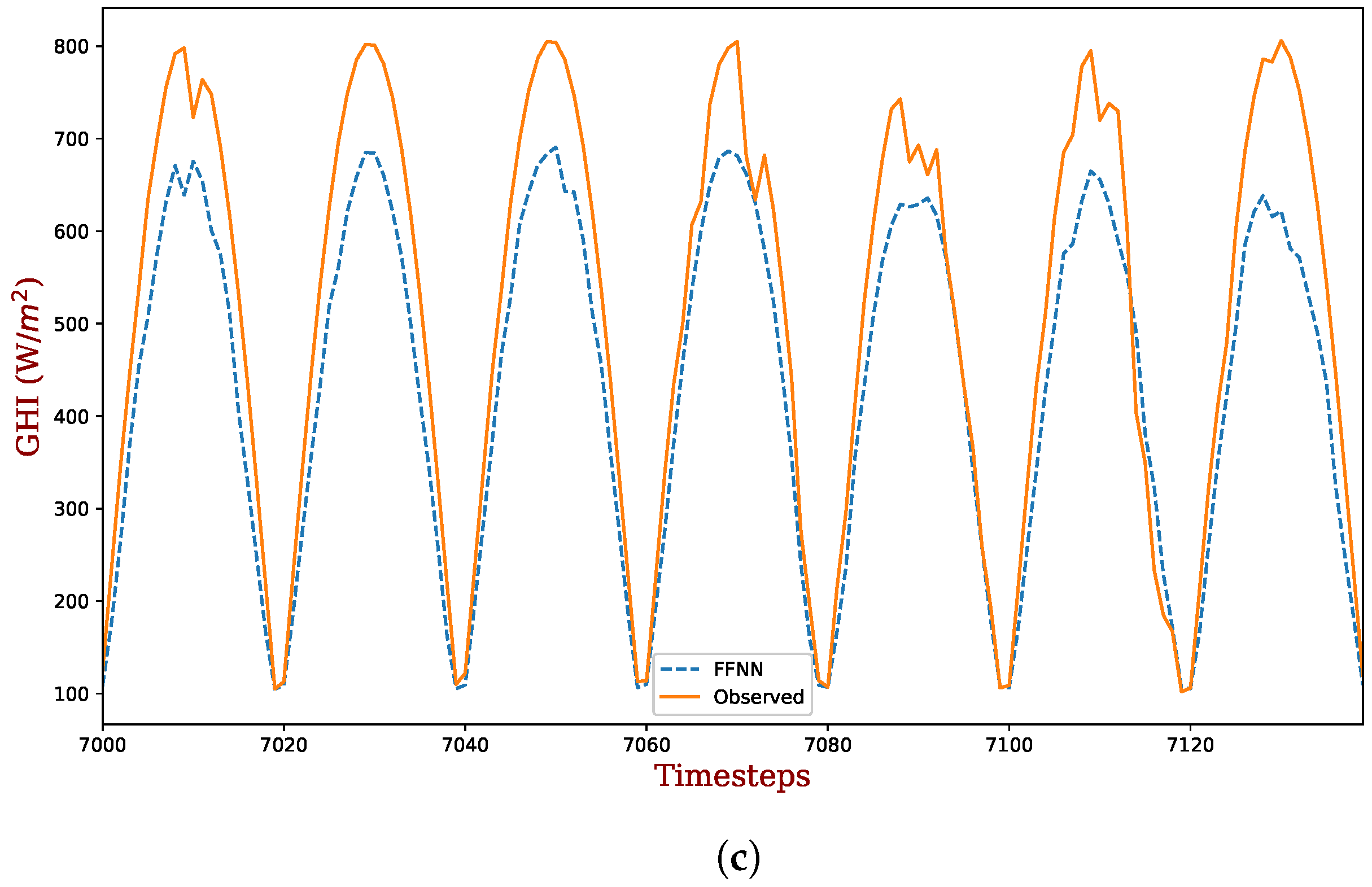

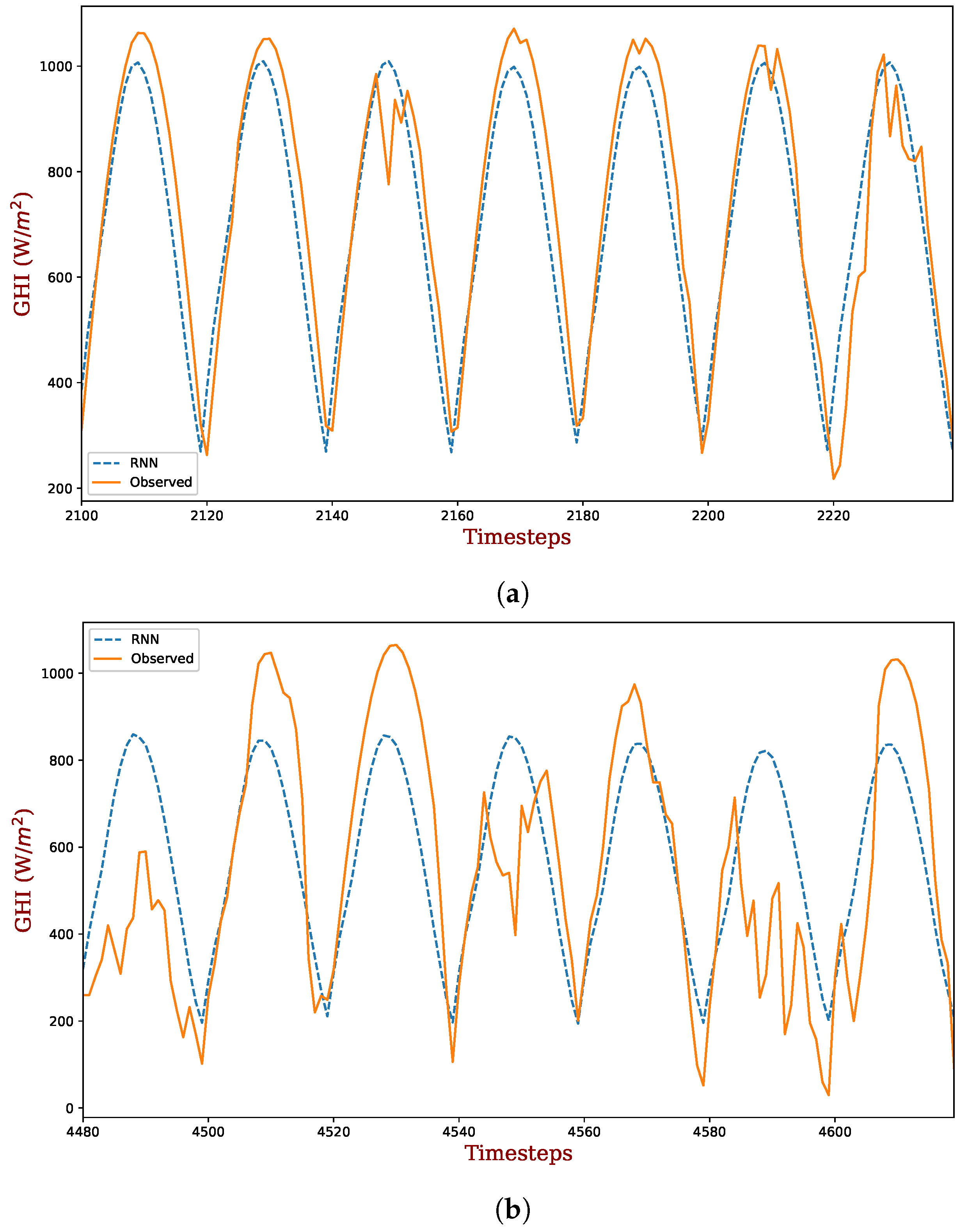

5. Analysis of Results

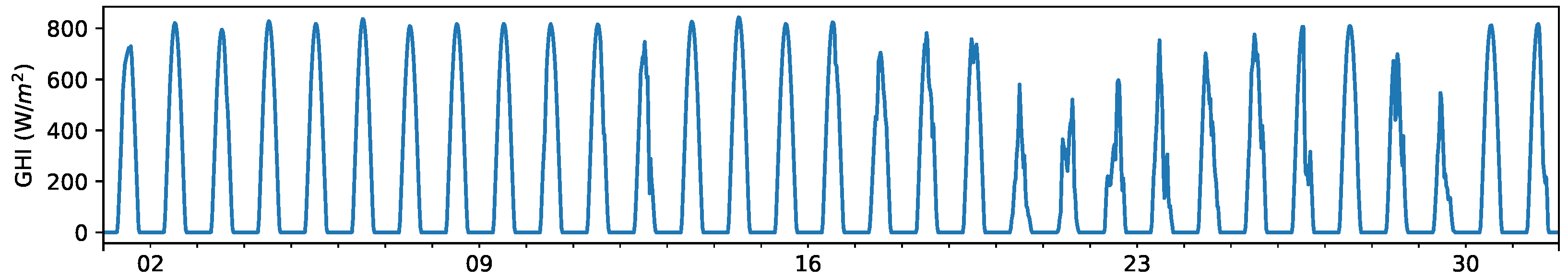

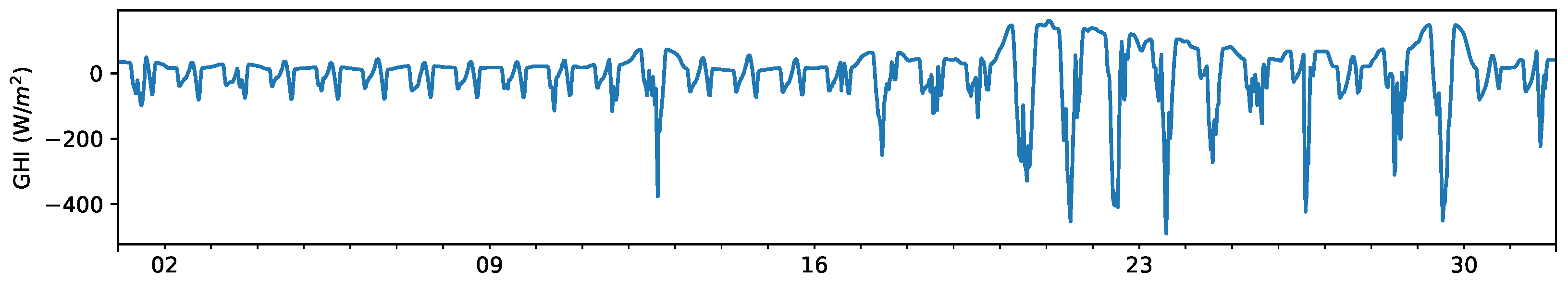

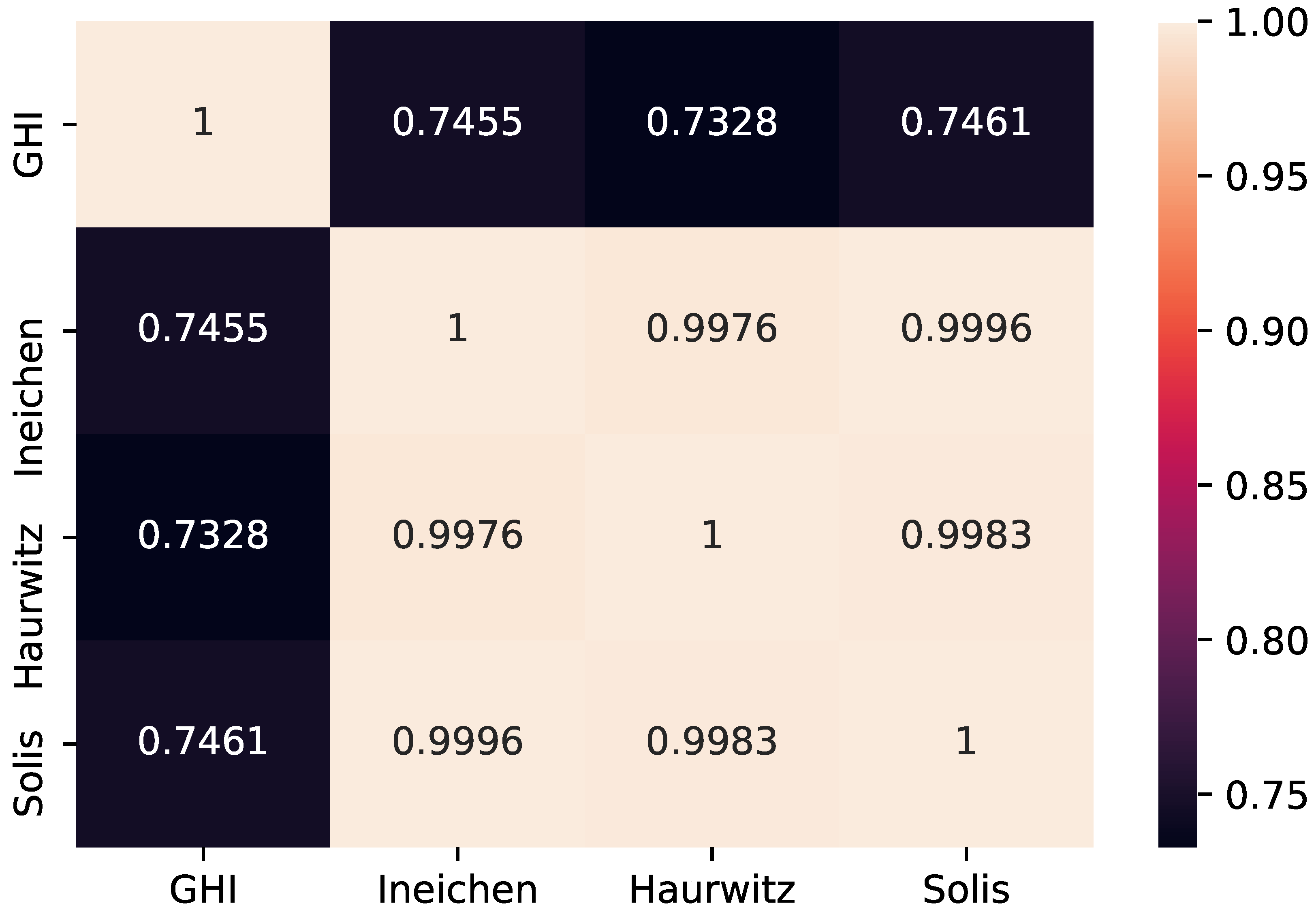

5.1. Data Exploration

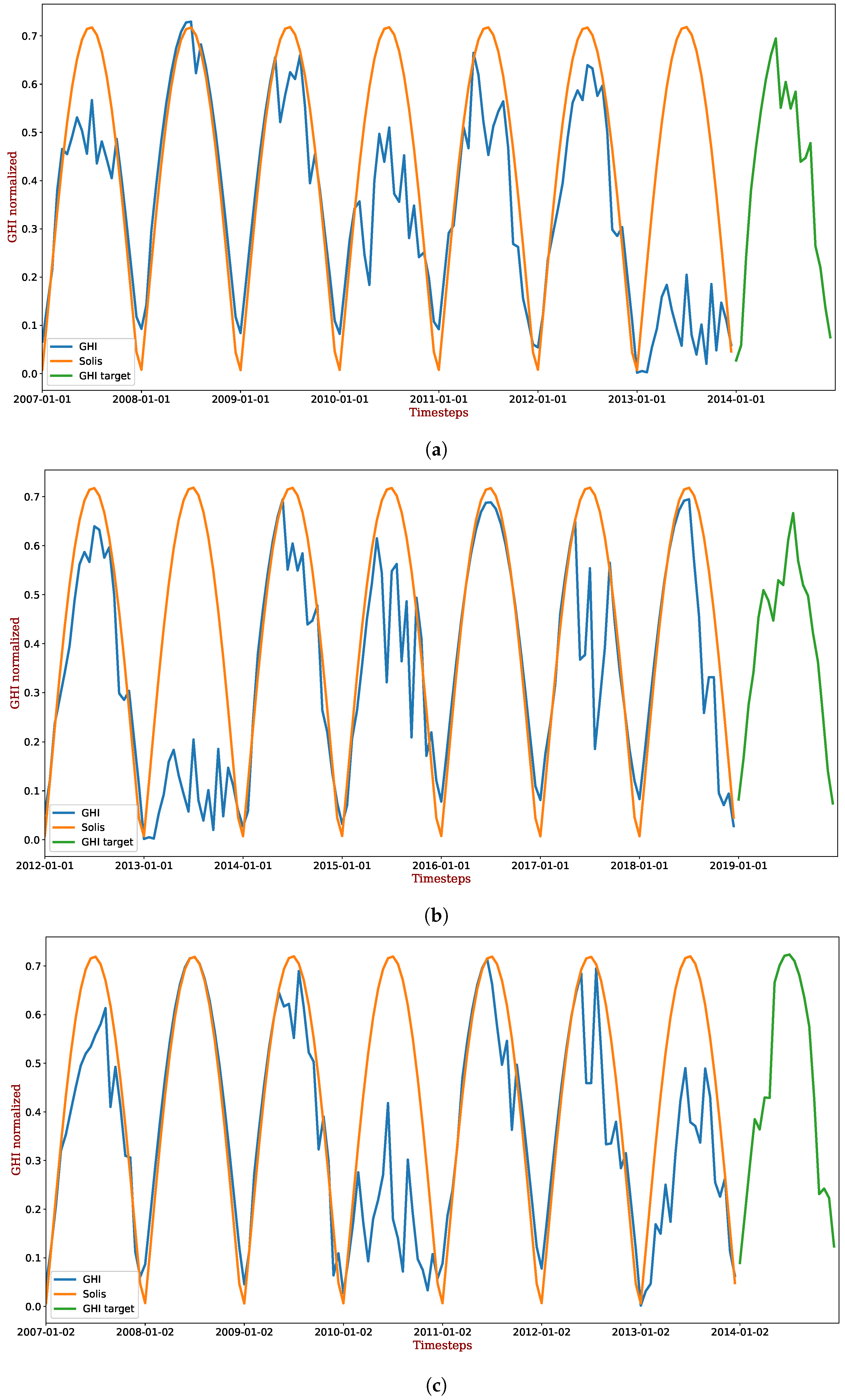

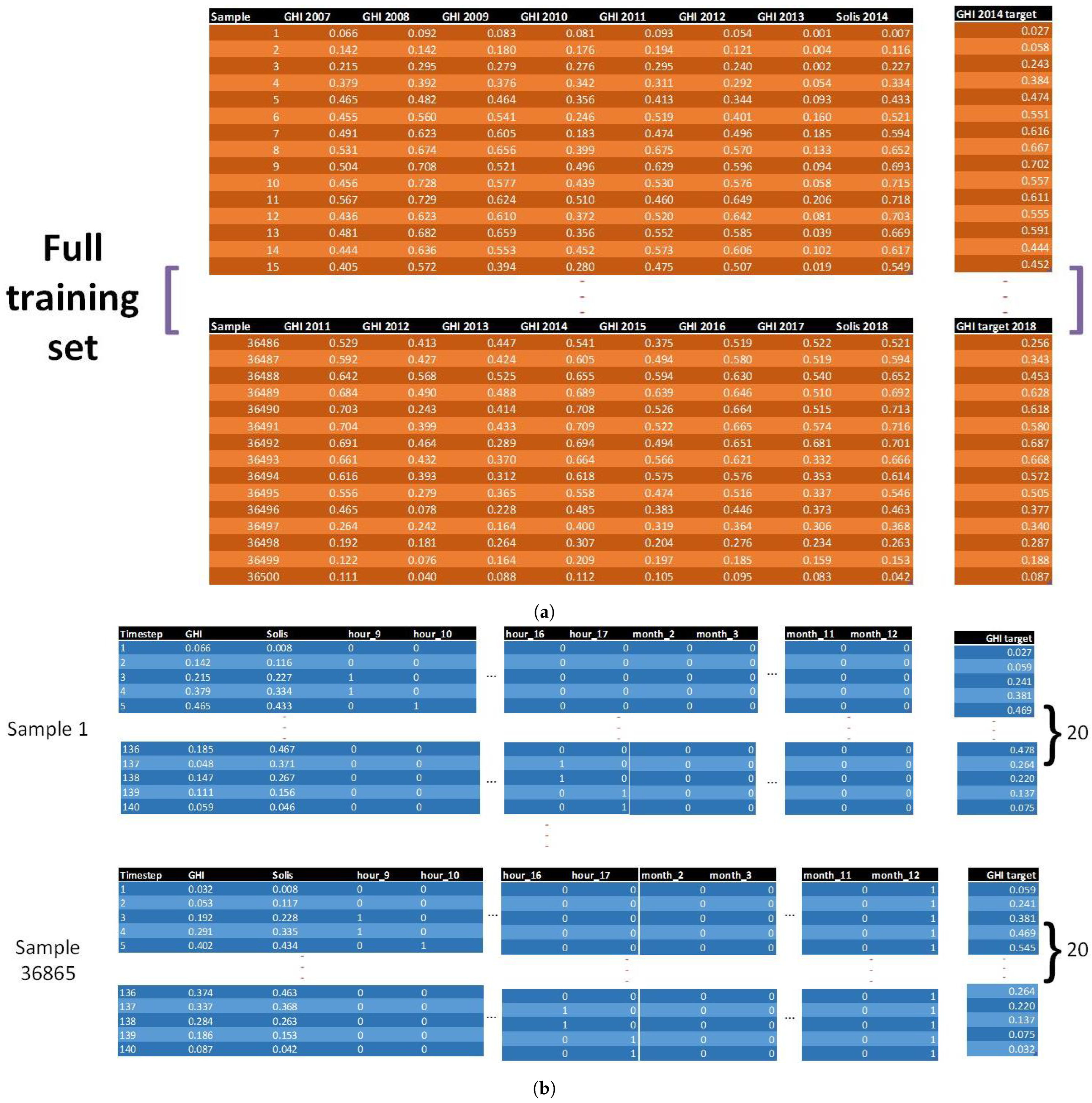

5.2. Data Preparation

5.3. Evaluation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| FFNN | Feed Forward Neural Network |
| RNN | Recurrent Neural Network |
| HRES | Hybrid Renewable Energy System |


| Parameter | Value |
|---|---|
| ID | 571501 |
| Latitude | 19.65 |
| Longitude | −101.66 |

| Model | Epochs | Batch Size | Units Hidden 1 | Units Hidden 2 | Units Encoder | Units Decoder | Learning Rate |
|---|---|---|---|---|---|---|---|
| FFNN | 20, 30, 40 | 256, 512, 1024 | 128, 256, 512 | 16, 32, 64 | - | - | 0.0005, 0.001, 0.01 |
| RNN | 40, 80, 120 | 256, 512, 1024 | - | - | 16, 32, 64 | 16, 32, 64 | 0.0001, 0.001, 0.01 |
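
The candidate values in the hyperparameter table can be expanded into concrete configurations. The sketch below is illustrative only: the source does not say whether the search was exhaustive, random, or driven by another tuner, so the full Cartesian enumeration is an assumption, and the names `FFNN_SPACE`, `RNN_SPACE` and `iter_configs` are hypothetical.

```python
from itertools import product

# Candidate values copied from the hyperparameter table.
# The dictionary keys are hypothetical names chosen for this sketch.
FFNN_SPACE = {
    "epochs": [20, 30, 40],
    "batch_size": [256, 512, 1024],
    "units_hidden_1": [128, 256, 512],
    "units_hidden_2": [16, 32, 64],
    "learning_rate": [0.0005, 0.001, 0.01],
}

RNN_SPACE = {
    "epochs": [40, 80, 120],
    "batch_size": [256, 512, 1024],
    "units_encoder": [16, 32, 64],
    "units_decoder": [16, 32, 64],
    "learning_rate": [0.0001, 0.001, 0.01],
}


def iter_configs(space):
    """Yield one dict per combination of candidate values (assumed full grid)."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


ffnn_configs = list(iter_configs(FFNN_SPACE))
rnn_configs = list(iter_configs(RNN_SPACE))

# Five hyperparameters with three candidates each: 3**5 combinations per model.
print(len(ffnn_configs), len(rnn_configs))  # prints "243 243"
```

Under this assumption each model has 243 candidate configurations, which is why a random or budgeted search over the same space may be preferable when training time is a concern.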

| Metric | Naive | Exp. Smoothing | ARIMA | FFNN | RNN |
|---|---|---|---|---|---|
| RMSE | 225.817 | 231.580 | 218.461 | 174.728 | 170.033 |
| WAPE | 0.258 | 0.290 | 0.308 | 0.241 | 0.224 |
| MASE | 1.000 | 1.053 | 1.117 | 0.876 | 0.815 |
| MAE | 150.650 | 169.543 | 179.851 | 141.052 | 131.144 |
| APB | 3.067 | −15.399 | 20.470 | 8.051 | 2.795 |
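
For readers who want to compute comparable error measures on their own forecasts, the following is a minimal sketch of four of the metrics in the table, using their common textbook definitions. The exact formulas, aggregation across forecast windows, and the scaling series used for MASE are not given in this section, so all of these are assumptions; APB is omitted because its definition cannot be confirmed here. Function names and the toy data are hypothetical.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean squared error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def mae(y_true, y_pred):
    """Mean absolute error."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.mean(np.abs(y_true - y_pred)))


def wape(y_true, y_pred):
    """Weighted absolute percentage error: sum|error| / sum|actual|."""
    y_true, y_pred = np.asarray(y_true, float), np.asarray(y_pred, float)
    return float(np.sum(np.abs(y_true - y_pred)) / np.sum(np.abs(y_true)))


def mase(y_true, y_pred, y_train, m=1):
    """MAE scaled by the in-sample MAE of a lag-m naive forecast (assumed scaling)."""
    y_train = np.asarray(y_train, float)
    scale = np.mean(np.abs(y_train[m:] - y_train[:-m]))
    return mae(y_true, y_pred) / float(scale)


# Toy values, not taken from the paper.
y_train = [0.0, 100.0, 200.0, 300.0]
y_true = [100.0, 200.0, 300.0, 400.0]
y_pred = [110.0, 190.0, 330.0, 380.0]

print(mae(y_true, y_pred))             # 17.5
print(wape(y_true, y_pred))            # 0.07
print(mase(y_true, y_pred, y_train))   # 0.175
print(round(rmse(y_true, y_pred), 3))  # 19.365
```

WAPE and MASE are scale-free, which makes them easier to compare across sites than RMSE and MAE, which carry the units of the irradiance series.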

| | RMSE | WAPE | MASE | APB | MAE |
|---|---|---|---|---|---|
| statistic | 34 | 25 | 25 | 25 | 25 |
| p-value | 0.121 | 0.032 | 0.032 | 0.032 | 0.032 |

| Computational Time | Naive | Exp. Smoothing | ARIMA | FFNN * | RNN * |
|---|---|---|---|---|---|
| Training | 0.68 | 12.09 | 18,421.12 | 6.02 | 118.42 |
| Testing | 0.01 | 0.07 | 3.18 | 1.05 | 2.03 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Medina-Santana, A.A.; Hewamalage, H.; Cárdenas-Barrón, L.E. Deep Learning Approaches for Long-Term Global Horizontal Irradiance Forecasting for Microgrids Planning. Designs 2022, 6, 83. https://doi.org/10.3390/designs6050083
