Abstract

The buzzword “smart home” promises an intelligent, helpful environment in which technology makes life easier, simpler or safer for its inhabitants. On a technical level, this is currently achieved by many networked devices interacting with each other, working on shared protocols and standards. From a user experience (UX) perspective, however, the interaction with such a collection of devices has become so complex that it currently stands in the way of widespread adoption and use. So far, it does not seem likely that a common user interface (UI) concept will emerge as a quasi-standard, as the desktop interface did for graphical UIs. Therefore, our research follows a different approach. Instead of many singular intelligent devices, we envision a UI concept for smart environments that integrates diverse pieces of technology into a coherent mental model of an embodied “room intelligence” (RI). RI will combine smart machinery, mobile robotic arms and mundane physical objects, thereby blurring the line between the physical and the digital world. The present paper describes our vision and emerging research questions and presents the initial steps of technical realization.

1. Introduction

The other day I had a visitor: she was a time traveler from the year 2068. For the moment, the reason for her visit is less important. More important is our discussion about the way people will be living in 2068 and the role of technology in their daily life. “I guess it will all look very modern and futuristic, huh? Flying cars, robots serving you breakfast, connected devices and technology everywhere you look around?”—“Oh no”, she laughed, “thank God it is pretty much the opposite. In fact, you hardly even see technology anymore,” and, with a more nostalgic look in her face, “but you are right, there was a time when technology was all over the place. New devices and zillions of platforms just to manage everyday life. It felt like becoming a slave to it. This was when I was in my 20s, I still remember that horrible time. Then, luckily, things changed. Now we found alternative ways to connect the many devices in a smart way and to integrate them smoothly in a comfortable environment. Officially, we call it a ‘room intelligence’, but I just say ‘roomie’. It is there when you need it, but it does not dominate your home. And it respects the situation: for example, when I am having visitors in my office, the room intelligence brings coffee and cake in a decent and effective way. I can focus on welcoming the guests and on the conversation.” I was stunned by her descriptions: “Wow, interesting! Tell me more about this room intelligence! How do you communicate with it? What does it look like? How do you actually know it is there if you do not see it?”—“Good questions!” she smiled, “I see you got the point.”

2. Vision

Our research picks up the vision of a room intelligence (RI) as described by the visitor from the future. The room organizes connected devices in a smart and aesthetic way and serves as an assistant in the background. On a functional level, this is not a novel idea, of course. For example, the vision of “Ubiquitous Computing” formulated in 1991 by Mark Weiser describes a world in which many things possess computing power and interact with each other to provide new functionality. More recently, the buzzword “smart home” has been frequently used. It promises an intelligent, helpful environment in which technology makes life easier, simpler or safer for its inhabitants. On a technical level, this is currently achieved by many networked devices interacting with each other, working on shared protocols and standards. From a user experience (UX) perspective, however, configuring and interacting with such a collection of devices has become so complex that it currently stands in the way of widespread adoption and use. Mark Weiser himself also claimed later that a common and understandable interaction paradigm will be one of the key challenges for Ubiquitous Computing. With more and more companies competing in the smart home market and no real “killer application” in sight, it currently does not seem likely that a common user interface (UI) concept will emerge as a quasi-standard, as the desktop interface did for graphical UIs. Furthermore, it seems unlikely that many manufacturers will actually agree on such a unified concept, since this would put their own interaction designs and brand images in the background.

Therefore, we follow a different approach: instead of many singular but interacting intelligent devices, we propose an overarching interaction concept for the environment as a whole. The smart home environment will convey to its users the mental model of a central, omnipresent, and embodied room intelligence. Going beyond other approaches, in which a central communication device controls several connected smart devices, the room intelligence can control existing UIs and less smart home devices and will also be able to deal with “legacy”, i.e., non-smart, machinery or generally any physical object. For this purpose, the room intelligence will be able to manipulate the objects within the room using mobile robotic arms. The intelligence is thus embodied and becomes a part of the physical environment, blurring the line between the physical and the digital world. Our approach therefore aims for an inherently different UX: interaction inside a surrounding, embodied intelligence. Our aim is to provide a comprehensive way of dealing with the increasing complexity of diverse devices assisting us in daily life tasks by delegating details to the room intelligence. The interaction will always be situated and context specific. Examples of environments with such an omnipresent intelligence have been presented in science fiction movies, such as Star Trek or 2001: A Space Odyssey, where the intelligence mostly manifests itself as an omnipresent voice. The 2009 movie Moon even shows a physical manifestation as robotic arms acting as “helping hands” and communicating through small displays.

From a UI design view, there are many ways to operationalize this idea, and the quality of the emerging interaction in terms of efficiency, overall experience and well-being will be shaped by the “personality” of such a room intelligence. From a UX point of view, the following characteristics are central to our vision of such a room intelligence:

- Invisibility: The room intelligence is acting “behind the scenes”. It is not an additional element in the room but it essentially is the room.

- Peripheral interaction: Interaction with the RI is a natural, peripheral action, like talking to someone en passant.

- Smooth integration: Blurring the line between the physical and digital world, technology becomes an integral part of the environment.

- Cozy atmosphere: The ambience is that of a living room, not a factory floor.

- Transparency: A coherent and understandable mental model for the room’s users.

- Coherence: The RI is perceived as one entity, not a collection of singular elements.

- Situational awareness: A sense for the current social situation and adequate actions.

- Personality: The RI can express different personalities.

- Adaptability: The RI shall learn from past interactions and optimize its behavior accordingly.

Note that the listed characteristics of the RI represent ideals, and a central task of our research is to integrate these into the technical infrastructure in the best possible way. For example, combining robotic arms with the design ideal of a cozy atmosphere appears challenging. It is therefore all the more important to deliberately address each of these potential contradictions. For example, our first experiments with different types of robotic arms already revealed that the UX and acceptance of robotic arms in the living room depend heavily on their look and feel (e.g., organic shape, metallic look).

3. Related Work

Mark Weiser’s vision of Ubiquitous Computing [1] inspired a comprehensive body of research on how to implement environments in which digital and physical elements mix. The idea of Tangible Interaction [2] is one important strand in this field, as it directly addresses the combination of physical and digital elements in the real world at an interaction level. Embodiment has also been recognized as an important quality, and it is directly addressed in our embodied room intelligence. The field of Mixed or Augmented Reality [3] takes a complementary approach, also mixing physical and digital elements, but by means of see-through devices, such as handheld or head-mounted displays. The special variation of Spatial Augmented Reality [4] uses room-scale displays as described in [5] or [6] to implement this mix without forcing the human to wear or hold any special equipment. Finally, the concept of ambient displays [7] or ambient UIs makes technology blend into the environment in such a way that it can be perceived and interacted with in a peripheral way.

On a conceptual level, existing work in human-computer interaction (HCI) and psychology already proposes some concepts of relevance to our research. For example, Beaudouin-Lafon postulates the transition from the computer as a tool [8] to the computer as a partner [9]. If we take this seriously, we will have to communicate with the computer at eye level, as we do with humans. Indeed, it is well known that people apply rules of social interaction between humans also when interacting with computers or other technical entities (e.g., [10]). For example, they might literally talk to their smartphone (“Why are you taking so long?”), attribute humanlike characteristics to it (“Are you stubborn today?”), and even apply norms of courtesy as if they could hurt the computer’s feelings [11]. One central factor that influences people’s behavior towards technology seems to be its personality. Depending on how the technology presents itself, i.e., which personality it expresses, humans interact with the technology in a different way and may experience the interaction as more or less satisfying (e.g., [10]). Altogether, the design of a technology crucially impacts how a user perceives and interacts with it. Creating this impression becomes even more complex in pervasive environments where multiple devices form a unified impression of a “room personality”. Current voice assistants such as Amazon Alexa and Google Home are already trying to unite many smart home technologies under the umbrella of their voice-based UI. However, this integration only happens at the surface level: it is implemented as separate skills and lacks a common operating principle or even personality. Existing work in HCI still lacks insights into how different design cues can be integrated to control the overall perception of system intelligence, entity, and personality, and thus also shape how users perceive their own role within such an intelligent environment.

4. Initial Implementation Ideas and Next Research Steps

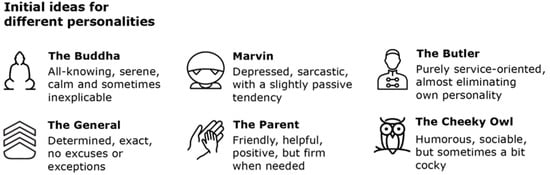

Our goal is to develop a scalable interaction paradigm for private smart spaces by designing a room intelligence with a consistent personality and a physical embodiment which provides a coherent and understandable mental model for its users. Besides a concept of room intelligence, the mockup we will build will also provide a testbed and evaluation environment for the systematic variation of personality design factors and their effects on user perceptions. In particular, we aim to investigate how a personality of the environment can be conveyed in a credible, authentic way and which cues such an environment has to provide. Figure 1 displays first ideas of different types of room personality.

Figure 1. An incomplete list of possible personalities for the room intelligence.

Besides an adequate expression of the room intelligence’s personality, another central question is how the room intelligence can actively attract and guide its users’ attention in the environment.

Regarding the technical operationalization, the room intelligence might combine the following elements:

- Visual elements providing peripheral stimuli to initially attract and guide attention, e.g., an omnidirectional room scale projective display which can create output in any location such as on the walls and the floor;

- Visual design elements to support focused interaction, e.g., smaller displays which can provide high resolution and brightness in limited areas;

- Acoustic elements for spatial sounds and the “voice” of the room intelligence through distributed speakers;

- “Helping hands”, e.g., robotic arms at a ceiling rail system that can move to any position in the room;

- A schematic representation of a “face” of the room intelligence, realized through fixed or mobile screens or also through a grid of movable cylinders as a shape display integrated in the wall that may form a 3D representation of the face (also known as “intraFace”, see Figure 2).

Figure 2. Conceptual sketches of the internal physical room interface (also known as intraFace).
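The combination of elements listed above could be modeled in software as one entity that addresses several output channels. The following is only a minimal sketch under stated assumptions, not the authors' implementation: the class names, the element names, the `express` method, and the mapping of each element to peripheral or focused interaction are all hypothetical and chosen for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Attention(Enum):
    PERIPHERAL = "peripheral"  # attract and guide attention
    FOCUSED = "focused"        # support focused interaction


@dataclass
class Element:
    """One hypothetical output element of the room (names are illustrative)."""
    name: str
    supports: frozenset  # Attention levels this element is assumed to serve


@dataclass
class RoomIntelligence:
    """Presents many elements to the user as a single entity."""
    personality: str
    elements: list = field(default_factory=list)

    def register(self, element: Element) -> None:
        self.elements.append(element)

    def express(self, message: str, attention: Attention) -> list:
        """Return (element name, message) pairs for every suitable element."""
        return [(e.name, message) for e in self.elements
                if attention in e.supports]


# "Marvin" is one personality named in the text; the rest is assumed.
room = RoomIntelligence(personality="Marvin")
both = frozenset({Attention.PERIPHERAL, Attention.FOCUSED})
room.register(Element("room_scale_projection", frozenset({Attention.PERIPHERAL})))
room.register(Element("small_display", frozenset({Attention.FOCUSED})))
room.register(Element("distributed_speakers", both))
room.register(Element("ceiling_rail_arm", frozenset({Attention.FOCUSED})))
room.register(Element("intraFace", both))

plan = room.express("Coffee is ready", Attention.PERIPHERAL)
```

In this sketch, the user-facing call is always made on the single `room` object, which mirrors the coherence ideal of one perceived entity; which elements actually serve which kind of attention is an open design question.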


These are only initial ideas, and the concrete design and technical operationalization will be a result of future research. Some first important questions will be: What are adequate ways (modalities) of communication for this room intelligence? How can communication between user and system be initiated? How can we attract and guide the user’s attention? In addition, there are many psychological questions regarding the user experience. As one of the reviewers of this paper remarked, some users might be disturbed by a central yet omnipresent intelligence that intrudes on their privacy and might wish for a physical switch that can be used to turn the room intelligence on and off. After performing the first, more conceptual studies and combining suitable design elements into a prototypical test bed, our next steps will focus on experimentation with different types of personality and the question of an adequate personality of the intelligence behind the scenes. This may also include evolutionary elements and changes of the room personality over time, e.g., turning from Marvin to Buddha after ten years, gaining “wisdom” over time. Finally, once the general concept of the room intelligence is established and prototyped, it will be interesting to explore the market potential. For example, how much would such a system cost? Will there be a need for upgrades and maintenance? How many people may buy into such a service? What are relevant choice criteria from a consumer perspective?

Author Contributions

Conceptualization, S.D., A.B. and D.U.; writing—original draft preparation, S.D.; writing—review and editing, A.B. and D.U.; visualization, D.U.; funding acquisition, S.D. and A.B. All authors have read and agreed to the published version of the manuscript.

Funding

Part of this research was funded by the DFG-project PerforM—Personalities for Machinery in Personal Pervasive Smart Spaces (DI 2436/2-1) in context of the Priority Program “Scalable Interaction Paradigms for Pervasive Computing Environments” (SPP 2199).

Acknowledgments

Thanks to Carl Oechsner, Lara Christoforakos and Corinna Hormes for their support in inspiring brainstorming sessions and first practical realizations of the described vision of room intelligence.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- Xu, X.; Sar, S. Do We See Machines the Same Way as We See Humans? A Survey on Mind Perception of Machines and Human Beings. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27 August 2018; pp. 472–475.

- Martini, M.C.; Gonzalez, C.A.; Wiese, E. Seeing minds in others—Can agents with robotic appearance have human-like preferences? PLoS ONE 2016, 11, e0146310.

- Go, E.; Sundar, S.S. Humanizing chatbots: The effects of visual, identity and conversational cues on humanness perceptions. Comput. Hum. Behav. 2019, 97, 304–316.

- Bimber, O.; Raskar, R. Spatial Augmented Reality: Merging Real and Virtual Worlds; AK Peters/CRC Press: Boca Raton, FL, USA, 2005.

- Benko, H.; Wilson, A.D.; Zannier, F. Dyadic projected spatial augmented reality. In Proceedings of the 27th Annual ACM Symposium on User Interface Software and Technology, Honolulu, HI, USA, 5 October 2014; pp. 645–655.

- Fender, A.R.; Benko, H.; Wilson, A. Meetalive: Room-scale omni-directional display system for multi-user content and control sharing. In Proceedings of the 2017 ACM International Conference on Interactive Surfaces and Spaces, Brighton, UK, 17 October 2017; pp. 106–115.

- Wisneski, C.; Ishii, H.; Dahley, A.; Gorbet, M.; Brave, S.; Ullmer, B.; Yarin, P. Ambient displays: Turning architectural space into an interface between people and digital information. In Proceedings of the International Workshop on Cooperative Buildings, Darmstadt, Germany, 25 February 1998; pp. 22–32.

- Beaudouin-Lafon, M. Instrumental interaction: An interaction model for designing post-WIMP user interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, The Hague, The Netherlands, 1–6 April 2000; pp. 446–453.

- Beaudouin-Lafon, M. Designing interaction, not interfaces. In Proceedings of the Working Conference on Advanced Visual Interfaces, Gallipoli, Italy, 25–28 May 2004; pp. 15–22.

- Nass, C.; Moon, Y.; Fogg, B.J.; Reeves, B.; Dryer, C. Can computer personalities be human personalities? Int. J. Hum. Comput. Stud. 1995, 43, 223–239.

- Nass, C.; Moon, Y. Machines and mindlessness: Social responses to computers. J. Soc. Issues 2000, 56, 81–103.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).