Author Contributions

Conceptualization, S.H.; methodology, S.H., S.S., and J.F.; software, J.G., J.V., and S.S.; validation, S.S., S.H., and J.F.; formal analysis, S.S.; investigation, S.S.; resources, J.G., J.V., and S.S.; data curation, S.S.; writing—original draft preparation, S.S. and S.H.; writing—review and editing, J.F.; visualization, S.S.; supervision, S.H.; project administration, S.S., J.G., and J.V. All authors have read and agreed to the published version of the manuscript.

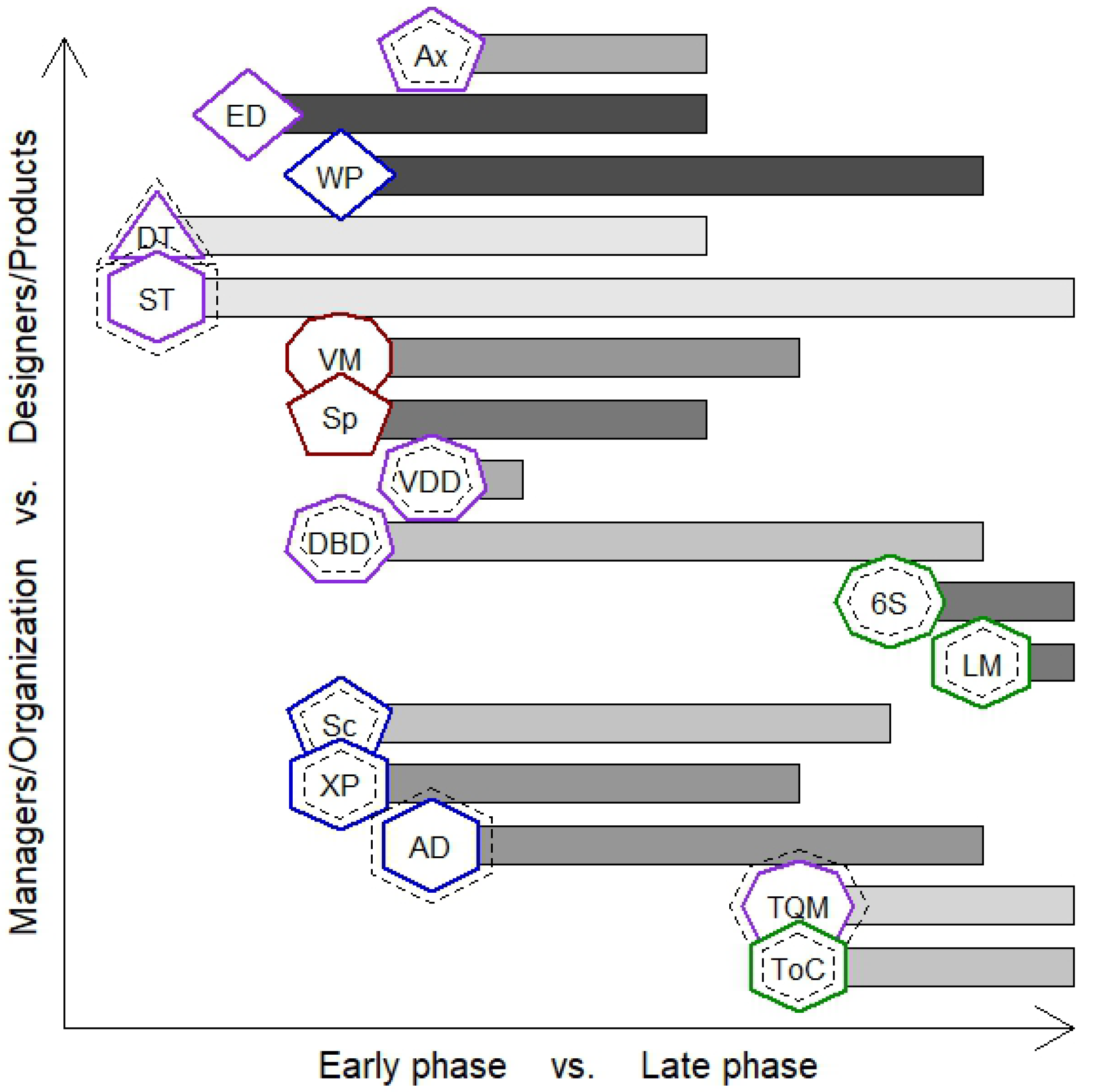

Figure 1.

Graphical representation of approaches and criteria; phase represented by horizontal position, users by vertical position, complexity by number of polygon sides, guidance by darkness of bar shading, values by color of polygon outline (blue = time, green = cost, red = risk, purple = marketability), category by dashed outline (inner = method, none = process, outer = environment); hard/soft is not depicted.

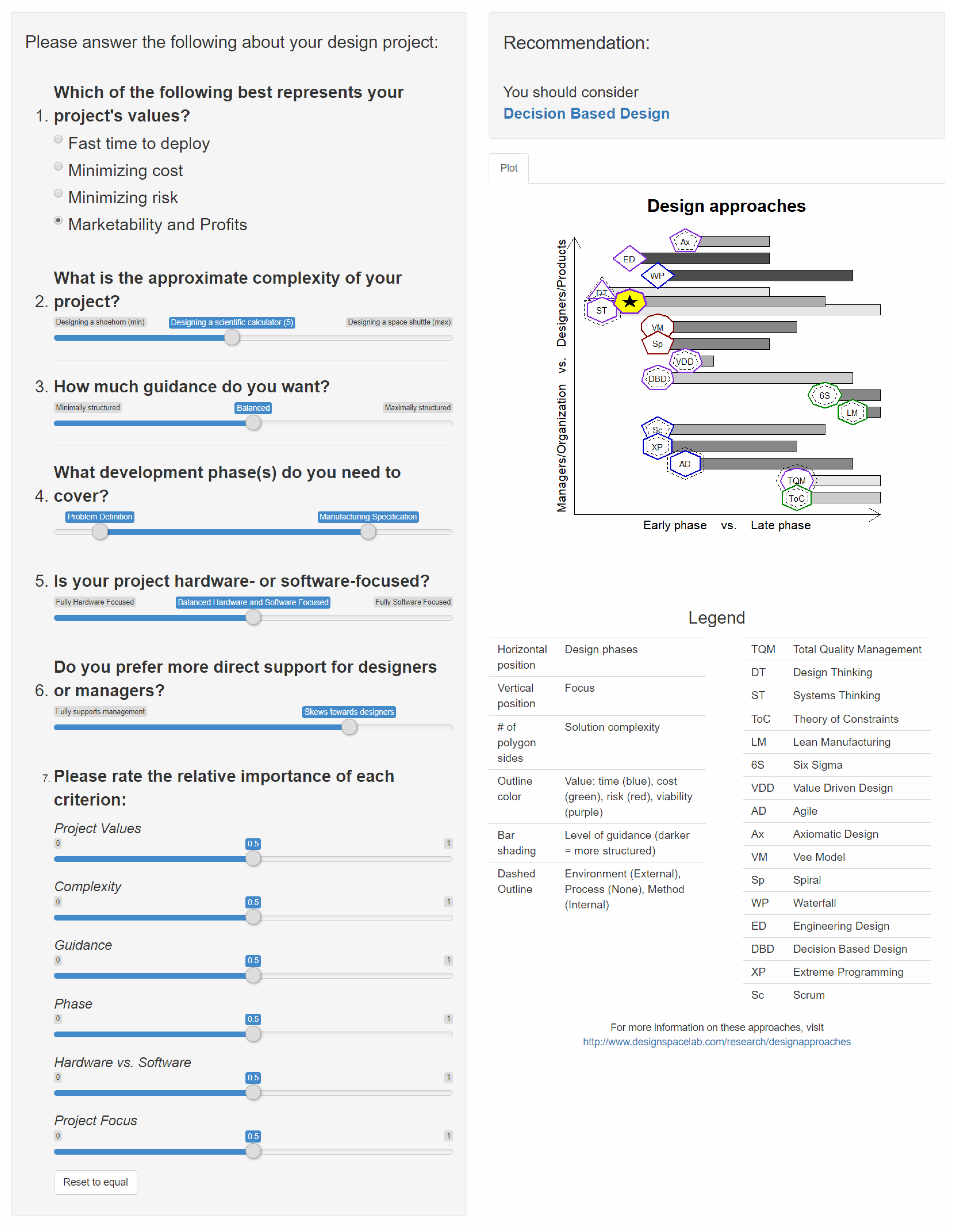

Figure 2.

User interface for prototype PD Advisor.

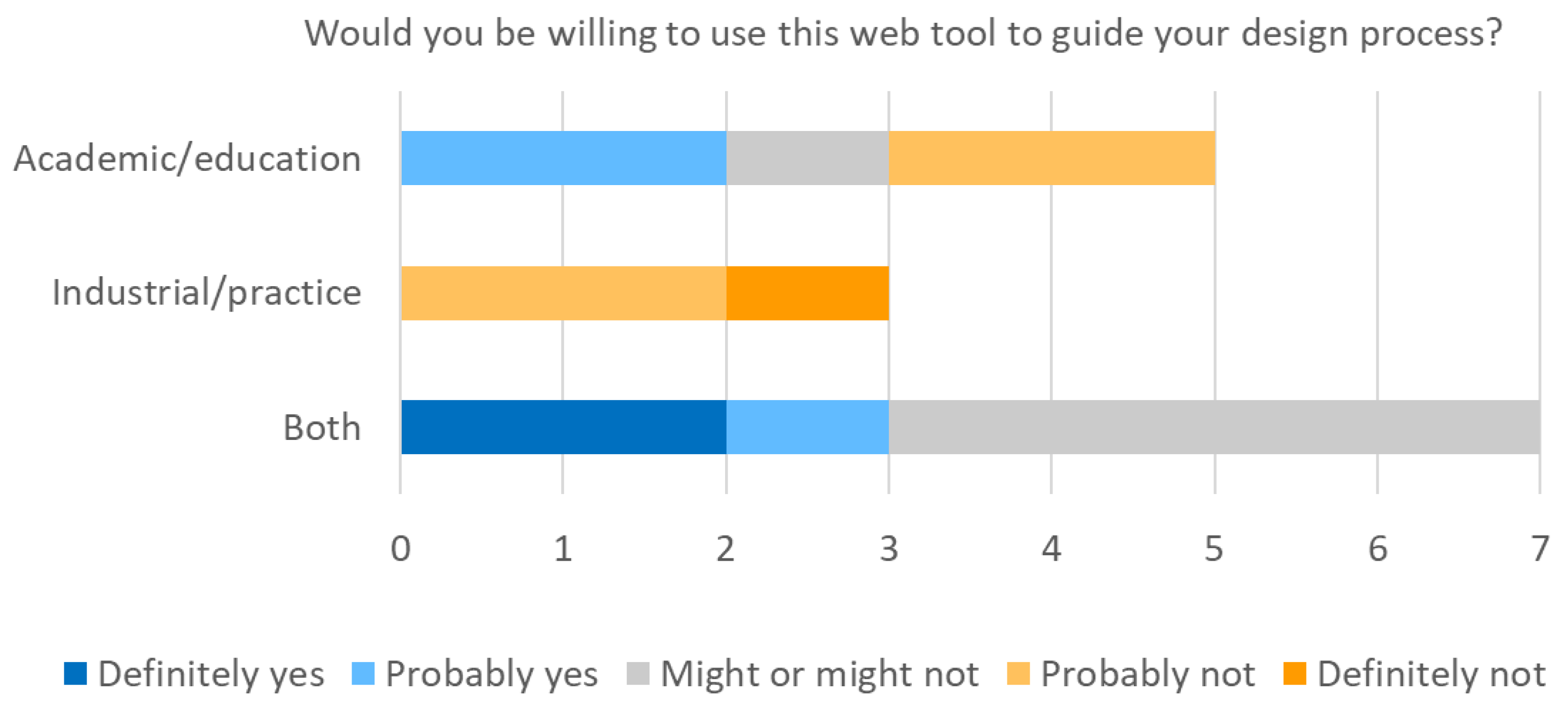

Figure 3.

Perceived willingness to use PD Advisor.

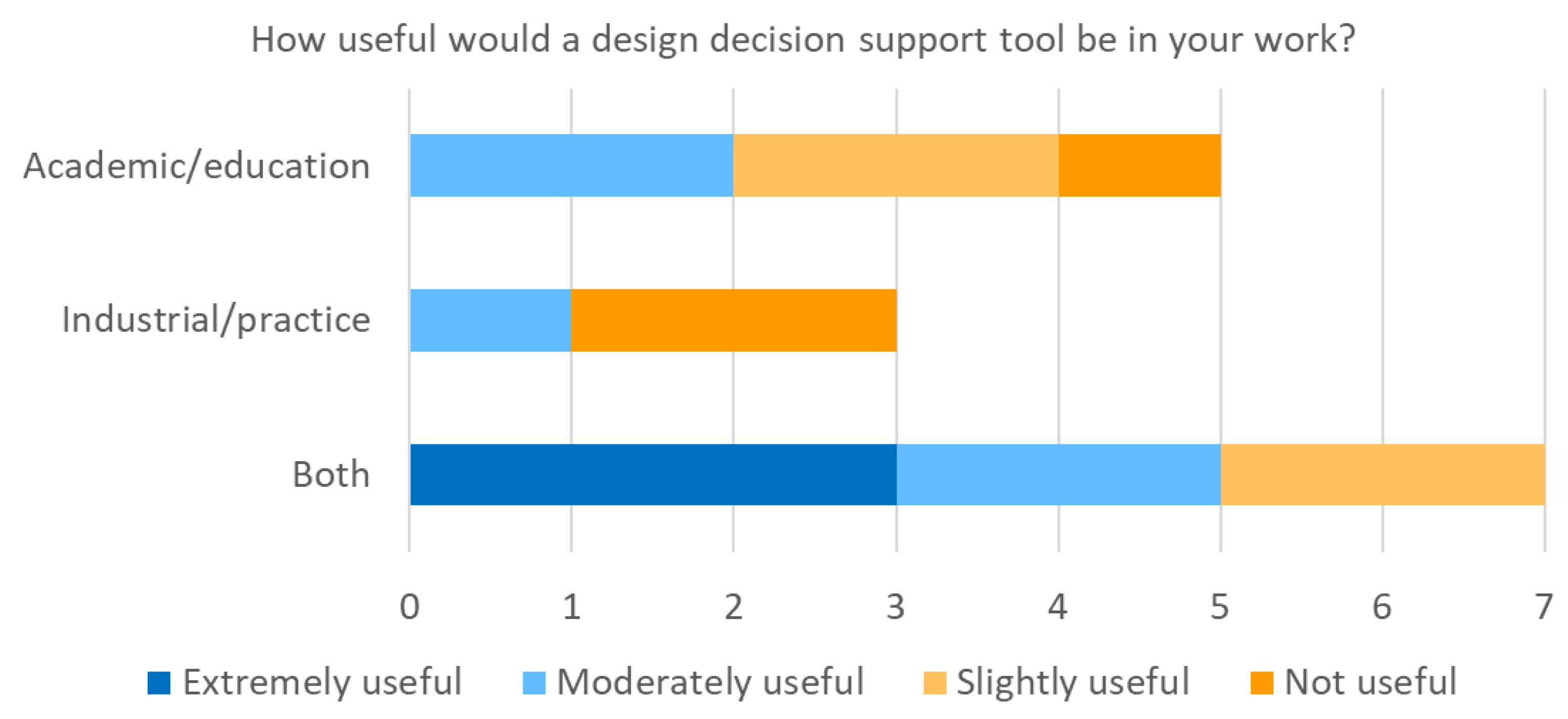

Figure 4.

Perceived usefulness of PD Advisor.

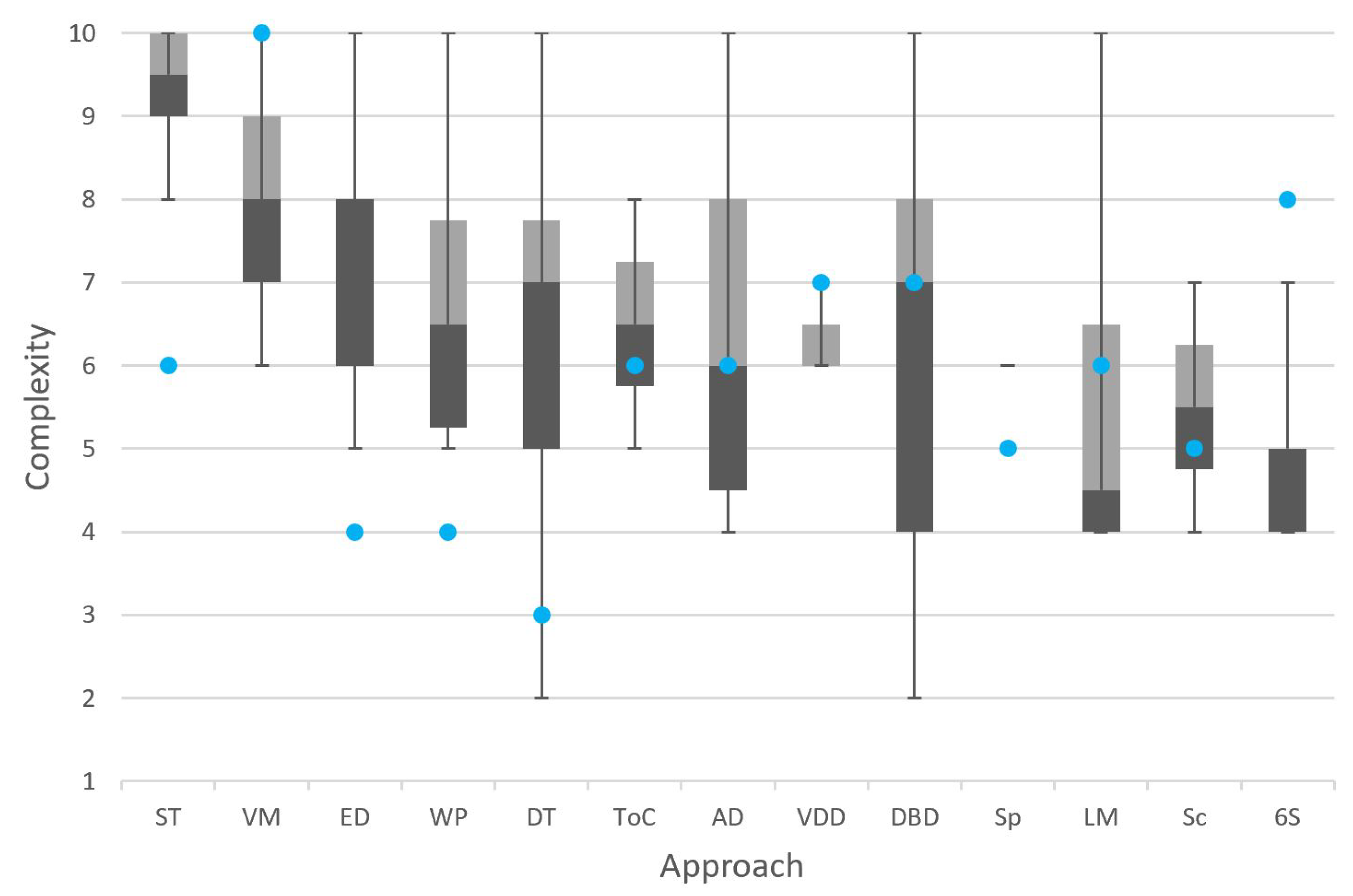

Figure 5.

Complexity level of problem that each approach is relevant to. Shaded boxes span the interquartile range, separated by the median. Error bars represent upper and lower extremes. Blue dots represent the authors’ initial classification. Approaches are ordered by decreasing mean.
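
The box-plot elements named in this caption can be computed from raw ratings as in the following minimal sketch. The ratings are hypothetical, not survey data, and the quartile convention (Python's "inclusive" method) is an assumption, since the caption does not state which convention was used.

```python
from statistics import quantiles


def box_summary(ratings):
    """Return the five values a box plot of `ratings` would display."""
    # The quartile method is an assumption; other conventions give
    # slightly different box edges for small samples.
    q1, median, q3 = quantiles(ratings, n=4, method="inclusive")
    return {
        "lower_extreme": min(ratings),  # bottom error bar
        "q1": q1,                       # lower edge of the shaded box
        "median": median,               # line separating the box
        "q3": q3,                       # upper edge of the shaded box
        "upper_extreme": max(ratings),  # top error bar
    }


# Hypothetical complexity ratings (1-10 scale) for a single approach.
example = [3, 5, 6, 6, 8]
summary = box_summary(example)
print(summary)
```

With only a handful of ratings per approach, the interquartile range depends noticeably on the quartile convention, so boxes for rarely selected approaches are best read with that in mind.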

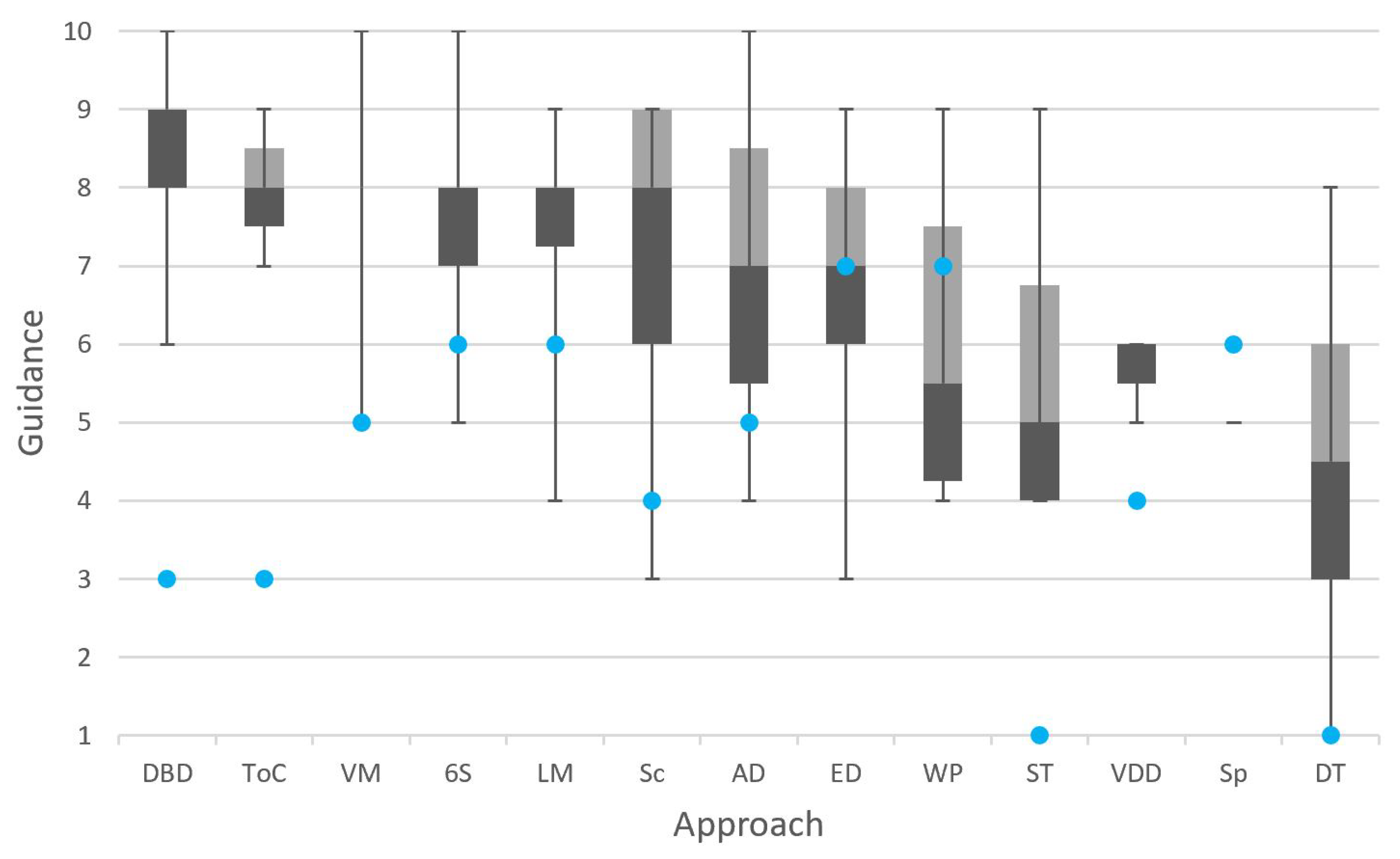

Figure 6.

Level of guidance each approach provides. Shaded boxes span the interquartile range, separated by the median. Error bars represent upper and lower extremes. Blue dots represent the authors’ initial classification. Approaches ordered by decreasing mean, with ties broken by increasing standard deviation.
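
The ordering rule in this caption (decreasing mean, ties broken by increasing standard deviation) amounts to a two-part sort key. The sketch below is illustrative only: the approach names and ratings are hypothetical, and the use of the sample standard deviation is an assumption, since the caption does not say which estimator was used.

```python
from statistics import mean, stdev


def order_approaches(ratings_by_approach):
    """Sort approach names by decreasing mean, then increasing std. dev."""
    def sort_key(name):
        ratings = ratings_by_approach[name]
        # Negating the mean makes an ascending sort yield decreasing means;
        # the standard deviation then breaks ties in increasing order.
        # stdev() is the sample estimator and needs at least two ratings.
        return (-mean(ratings), stdev(ratings))

    return sorted(ratings_by_approach, key=sort_key)


# Hypothetical guidance ratings (1-5 scale); not the survey data.
ratings = {
    "A": [2, 4],  # mean 3, larger spread
    "B": [3, 3],  # mean 3, zero spread
    "C": [4, 5],  # mean 4.5
}
ordering = order_approaches(ratings)
print(ordering)  # ['C', 'B', 'A']
```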

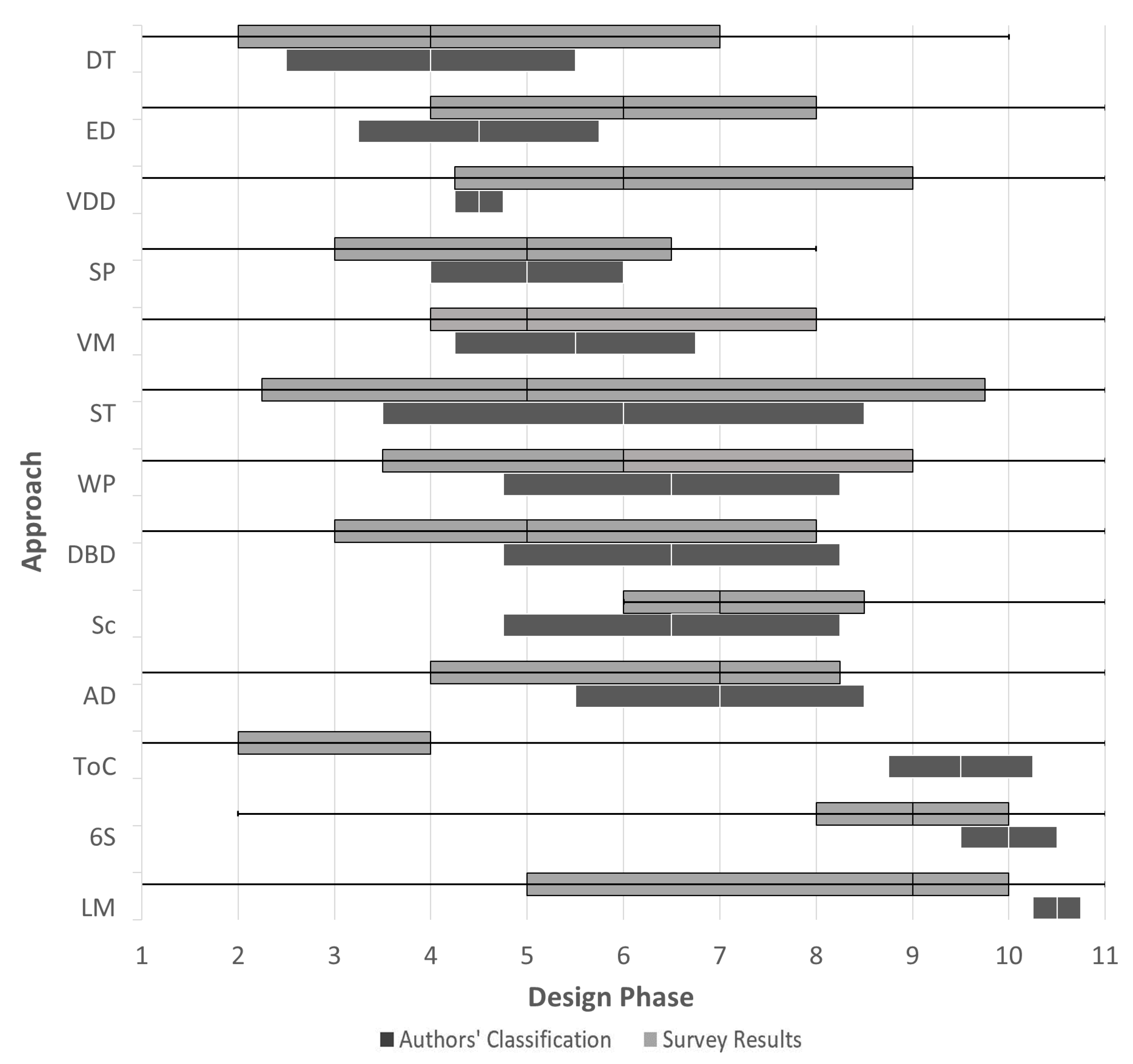

Figure 7.

Design phases covered by each approach, based on survey responses and authors’ original classifications. Shaded boxes span the interquartile range, separated by the median. Error bars represent upper and lower extremes. Approaches are ordered by increasing mean of the midpoint of the authors’ initial classification.

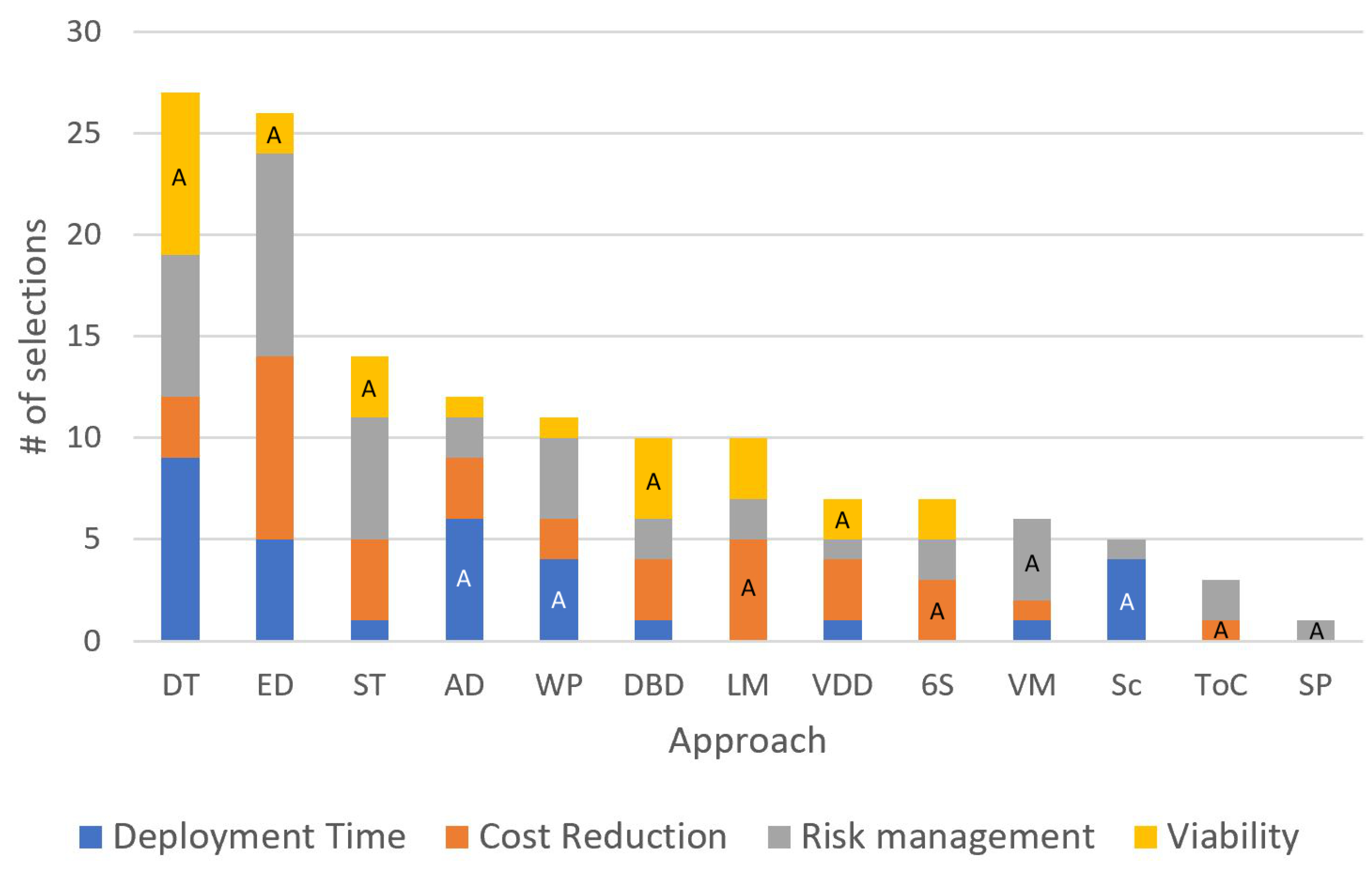

Figure 8.

Values associated with each approach, according to respondents. Approaches ordered by decreasing total selections. “A” signifies authors’ original classification.

Figure 9.

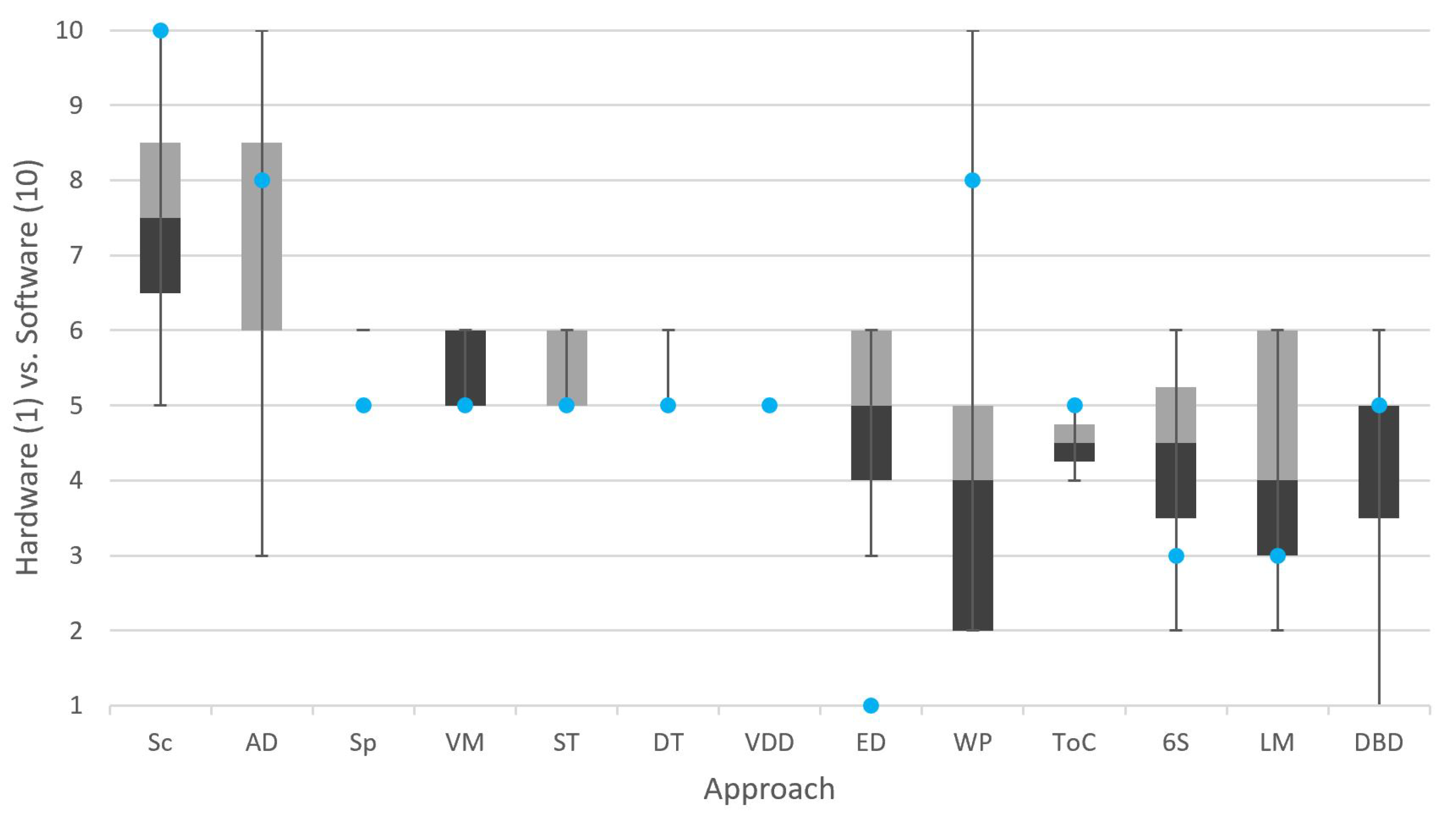

Perceived approach suitability to hardware vs. software. Shaded boxes span the interquartile range, separated by the median. Error bars represent upper and lower extremes. Blue dots represent the authors’ initial classification. Approaches ordered by decreasing mean.

Figure 10.

Focus of approach on managers vs. designers, based on survey responses and authors’ classifications. Error bars on survey results represent one standard deviation above and below the average response value. Approaches are ordered by decreasing rank in the authors’ initial classification.

Figure 11.

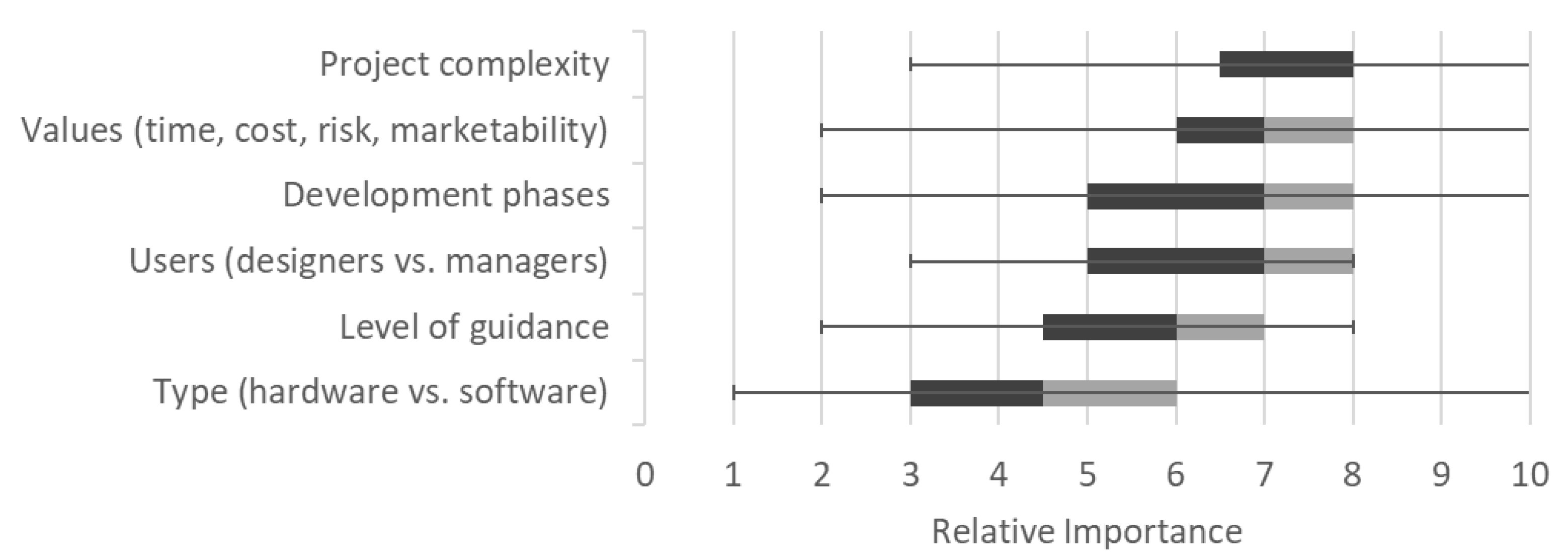

Perceived relative importance of criteria based on survey responses. Shaded boxes span the interquartile range, separated by the median. Error bars represent upper and lower extremes. Approaches are ordered by decreasing mean.

Figure 12.

Respondent satisfaction with the way they approach design on a 7-point scale, broken down by responses to why they approach design the way they do.

Figure 13.

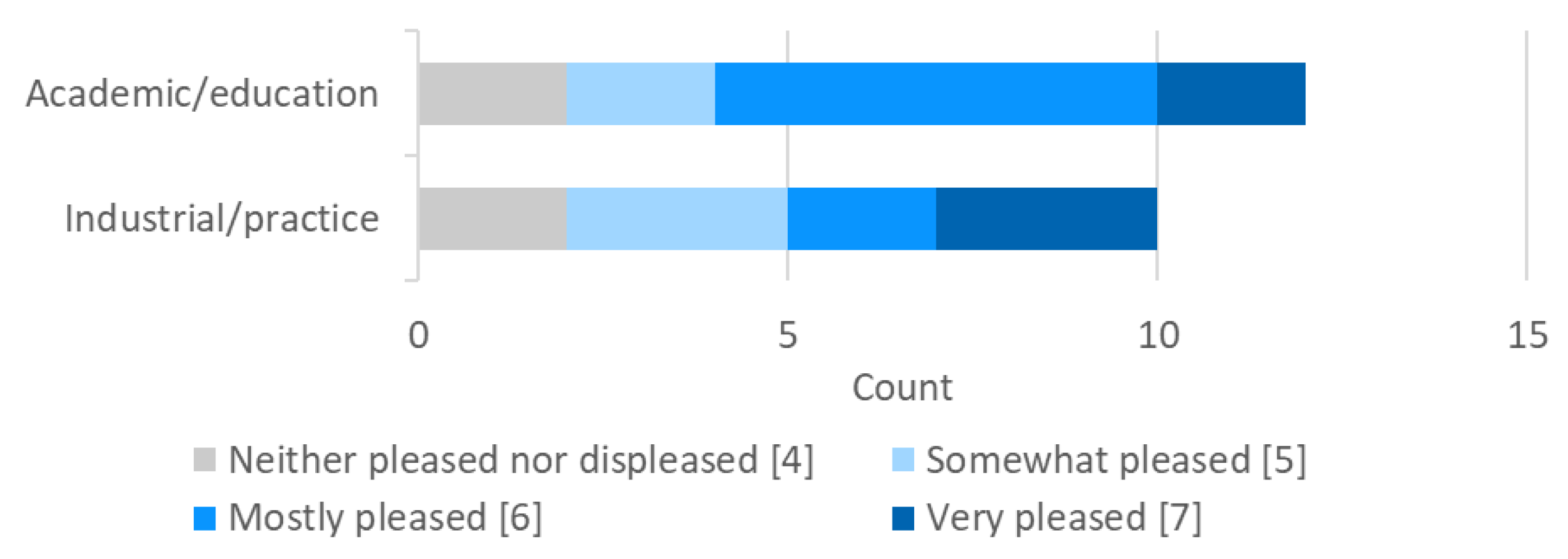

Respondent satisfaction with the way they approach design on a 7-point scale, broken down by industry.

Figure 14.

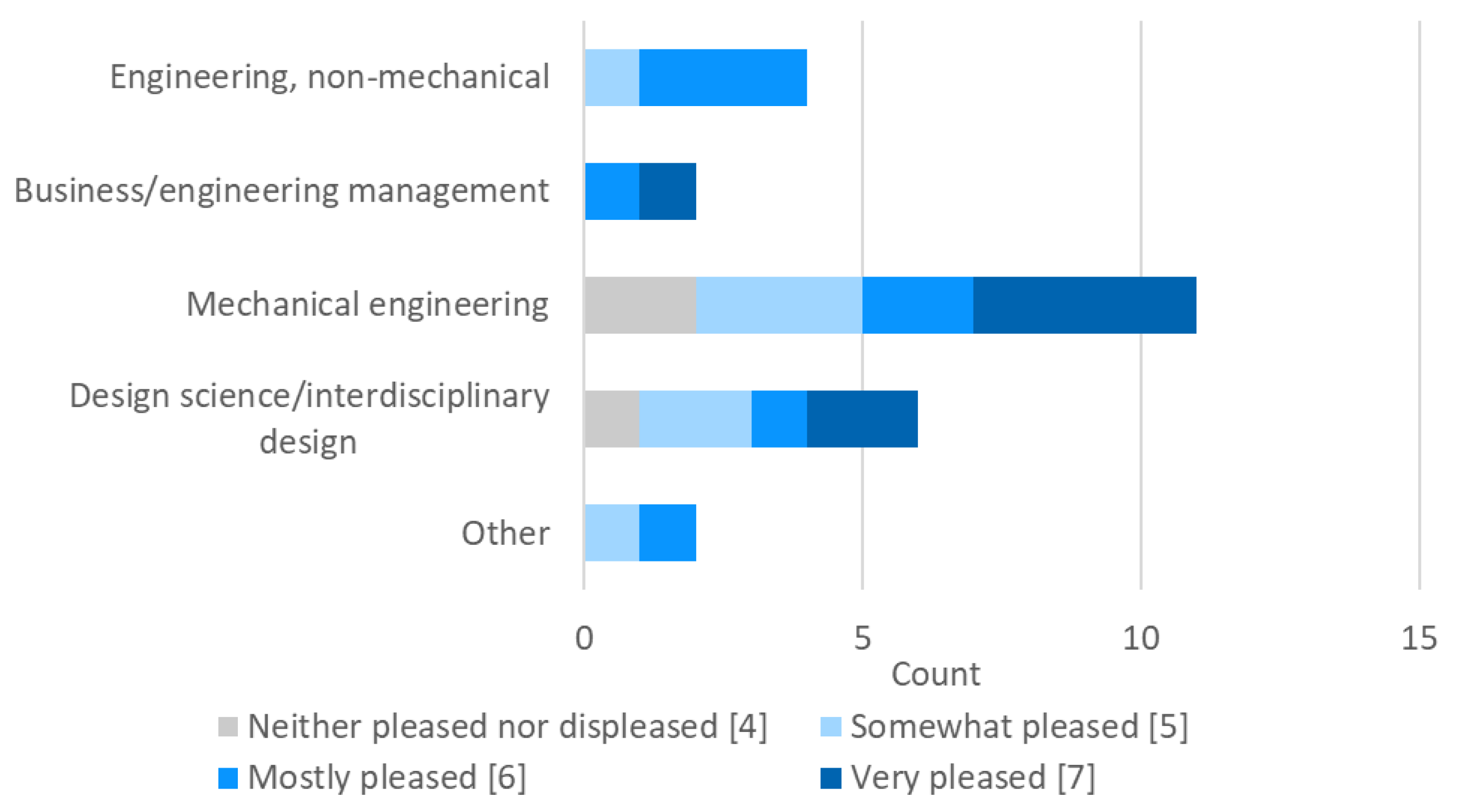

Respondent satisfaction with the way they approach design on a 7-point scale, broken down by background. Electrical, industrial, systems, and aerospace engineering are categorized as “engineering, non-mechanical”; business/management and engineering management are grouped together.

Table 1.

Well-established design approaches included in the review and PD Advisor.

| Approach | Abbreviation | Type | Disciplinary Origin |
|---|---|---|---|
| Design thinking | DT | Environment | Mechanical Engineering |
| Systems thinking | ST | Environment | Systems Engineering |
| Total Quality Management | TQM | Environment | Industrial Engineering |
| Agile development | AD | Environment | Software Engineering |
| Waterfall process | WP | Process | Software Engineering |
| Engineering design | ED | Process | Software Engineering |
| Spiral | Sp | Process | Software Engineering |
| Vee model | VM | Process | Systems Engineering |
| Axiomatic design | Ax | Method | Systems Engineering |
| Value-driven design | VDD | Method | Systems Engineering |
| Decision-based design | DBD | Method | Mechanical Engineering |
| Lean manufacturing | LM | Method | Industrial Engineering |
| Six Sigma | 6S | Method | Industrial Engineering |
| Theory of Constraints | ToC | Method | Industrial Engineering |
| Scrum | Sc | Method | Software Engineering |
| Extreme programming | XP | Method | Software Engineering |

Table 2.

Engineering design approach reviews.

| Reference | Scope | Key Takeaways |
|---|---|---|
| Otto and Wood (2003) [11] | Describes design tools categorized into 4 design phases | Design tools support other environments, processes, and methods; user journey maps can support DT, House of Quality can support ED |
| Van Boeijen et al. (2014) [35] | Classifies design tools by phases of the design process | Design tools directly support specific design phases, while processes and approaches, including scrum, can be applied to many phases |
| Design Exchange [6] | Lists tools with instructions for implementation and examples for each | A large number of design tools have been developed, and each has a specific place and value in its implementation |
| Camburn et al. (2017) [4], Foo et al. (2018) [5] | Reviews methods and tools common to design thinking methodologies categorized by stages of discover, define, develop, and deliver | Design tools are specifically applied at different points in the design process and provide valuable information to users |
| Howard (2008) [36] | Proposes a new model blending engineering design and creative thinking | Creativity is often overlooked in ED, though it presents a different problem-solving lens; integrating DT and DBD can better incorporate creativity and human factors through design |
| Chakrabarti and Blessing (2016) [3] | Reviews models and theory of design research | Many descriptive and prescriptive design models have been proposed, which vary substantially in definition and implementation |
| Evbuomwan et al. (1996) [1] | Reviews common definitions of design and the nature of the design process, connecting to specific design models | Provides a basis for how to review and categorize design approaches |
| Gericke and Blessing (2011) [2] | Compares specific aspects of design processes (e.g., stages, characteristics between disciplines, and criticisms) | The comparative categories provide a reference for common characteristics to aid in a new categorization process |

Table 3.

Software development reviews.

| Reference | Scope | Key Takeaways |
|---|---|---|
| Munassar and Govardhan (2010) [7] | Compares 5 models of software engineering in detail, discussing advantages, disadvantages, and alterations of each model | The different models in software engineering introduce specific aspects that can apply to projects differently based on their needs |
| Bhuvaneswari and Prabaharan (2013) [8] | Introduces 17 common SDLC models, and provides a description, advantages, and disadvantages of each | Each model has unique characteristics that are important to consider; many newer models attempt to address the disadvantages of previous models |
| Arora and Arora (2016) [9] | Introduces 9 common SDLC models, and provides a description, advantages, disadvantages, and when to use each | Each model is best used in certain scenarios, which relate to, but are separate from, its advantages and disadvantages |

Table 4.

Design practitioner surveys.

| Reference | Scope | Key Takeaways |
|---|---|---|
| Vredenburg et al. (2002) [38] | Surveys design practitioners on user-centered design, identifying common approaches, success factors, and trade-offs | Commonly cited measures and design processes differ from those that are applied in practice |
| Yang (2007) [37] | Surveys design practitioners and engineering students to understand the approaches they employ | When designers are familiar with a model, they typically find it useful; provides a basis for creating a survey on design methods, and areas for improvement |

Table 5.

Criteria for differentiating design approaches.

| Criterion | Description | Question Type | Levels |
|---|---|---|---|
| Complexity | Complexity of intended problems or solutions | Slider | 1: Designing a shoehorn |
| | | | 2: Designing a water cup |
| | | | 3: Designing a ballpoint pen |
| | | | 4: Designing a toy car |
| | | | 5: Designing a scientific calculator |
| | | | 6: Designing a cell phone |
| | | | 7: Designing a small robot |
| | | | 8: Designing a laptop computer |
| | | | 9: Designing a new car |
| | | | 10: Designing a space shuttle |
| Guidance | Level of guidance provided by approach | Slider | 1: Minimally Structured |
| | | | 5: Maximally Structured |
| Phase | Development phases covered by approach | Double-ended slider | 1: Problem identification |
| | | | 2: Problem definition |
| | | | 3: Market research |
| | | | 4: Concept generation |
| | | | 5: Alternative evaluation |
| | | | 6: Detailed design |
| | | | 7: Prototyping |
| | | | 8: Verification and validation |
| | | | 9: Manufacturing specification |
| | | | 10: Business planning |
| | | | 11: Supply chain and logistics |
| Hard/Soft | Suitability for hardware or software projects | Slider | 1: Fully hardware |
| | | | 5/6: Mixed |
| | | | 10: Fully software |
| Values | Values the approach seeks to improve | Multiple choice | 1: Deployment time |
| | | | 2: Cost reduction |
| | | | 3: Risk management |
| | | | 4: Market viability |
| Users | Whether the approach supports designers or managers | Slider | 1: Managers/Organization |
| | | | 9: Designers/Product |

Table 6.

Authors’ classifications of approaches from Table 1 within criteria from Table 5.

| Approach | Complexity | Guidance | Phases | Hard/Soft | Values | Users |
|---|---|---|---|---|---|---|
| DT | 3 | 1 | 0-6 | 5 | 4 | 7.3 |
| ST | 6 | 1 | 0-10 | 5 | 4 | 6.8 |
| TQM | 8 | 2 | 7-10 | 5 | 4 | 1.1 |
| AD | 6 | 5 | 3-9 | 8 | 1 | 1.7 |
| WP | 4 | 7 | 2-9 | 8 | 1 | 7.9 |
| ED | 4 | 7 | 1-6 | 1 | 4 | 8.4 |
| Sp | 5 | 6 | 2-6 | 5 | 3 | 5.6 |
| VM | 10 | 5 | 2-7 | 5 | 3 | 6.2 |
| Ax | 5 | 4 | 3-6 | 5 | 4 | 9 |
| VDD | 7 | 4 | 3-4 | 5 | 4 | 5.1 |
| DBD | 7 | 3 | 2-9 | 5 | 4 | 4.5 |
| LM | 6 | 6 | 9-10 | 3 | 2 | 3.4 |
| 6S | 8 | 6 | 8-10 | 3 | 2 | 3.9 |
| ToC | 6 | 3 | 7-10 | 5 | 2 | 0.6 |
| Sc | 5 | 4 | 2-9 | 10 | 1 | 2.8 |
| XP | 5 | 5 | 2-9 | 10 | 1 | 2.3 |
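
One way to make classifications like those in Table 6 queryable is to store each row as a record and filter on the criteria. The sketch below is illustrative only and is not the PD Advisor implementation: the `Approach` class and the `matches` function are hypothetical, and only three rows of Table 6 are transcribed. Value codes follow Table 5 (1 = deployment time, 2 = cost reduction, 3 = risk management, 4 = market viability).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Approach:
    """One row of Table 6 (hypothetical record layout)."""
    name: str
    complexity: int
    guidance: int
    phases: tuple  # (first phase, last phase), inclusive
    hard_soft: int
    value: int
    users: float


# Three rows transcribed from Table 6.
APPROACHES = [
    Approach("DT", 3, 1, (0, 6), 5, 4, 7.3),
    Approach("LM", 6, 6, (9, 10), 3, 2, 3.4),
    Approach("Sc", 5, 4, (2, 9), 10, 1, 2.8),
]


def matches(approach, phase, value):
    """True if the approach covers `phase` and targets `value`."""
    first, last = approach.phases
    return first <= phase <= last and approach.value == value


# Which transcribed approaches cover phase 4 (concept generation)
# and target market viability (value code 4)?
selected = [a.name for a in APPROACHES if matches(a, phase=4, value=4)]
print(selected)  # ['DT']
```

An exact-match filter is the simplest possible choice; a tool that ranks approaches would more plausibly score each criterion by distance from the user's answer, which this sketch does not attempt.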

Table 7.

Respondents’ experience and backgrounds ().

| Question | Response | # of Responses |
|---|---|---|
| Setting of experience | Academic | 12 |
| | Industrial | 10 |
| Job role | Professor (any level) | 6 |
| | Consultant | 2 |
| | Other | 3 |
| Years of experience | 1–5 | 1 |
| | 5–15 | 9 |
| | 15+ | 5 |
| Educational background | Mechanical engineering | 11 |
| | Design | 7 |
| | Systems engineering | 2 |
| | Aerospace engineering | 1 |
| | Business/management | 1 |
| | Electrical engineering | 1 |
| | Engineering management | 1 |
| | Industrial engineering | 1 |
| | Chemical engineering | 1 |

Table 8.

Number of respondents selecting and rating each approach, ordered by decreasing count.

| Approach | Count |
|---|---|
| DT | 14 |
| ED | 13 |
| ST | 8 |
| AD | 7 |
| DBD | 7 |
| WP | 6 |
| LM | 6 |
| VM | 5 |
| 6S | 5 |
| Sc | 4 |
| VDD | 3 |
| ToC | 2 |
| Sp | 1 |

Table 9.

Combinations of approaches cited by respondents.

| Combinations of Approaches | Mentions |
|---|---|
| ST & ToC | 1 |
| DT & TQM | 1 |
| ST & All | 1 |
| DT & AD | 1 |
| VM & All | 1 |
| DT & LM | 1 |
| ED & LM | 1 |
| All | 3 |

Table 10.

Why respondents approach design the way they do.

| Category | # of Responses |
|---|---|
| I do research specific to the given problem to approach it uniquely | 7 |
| I approach design the way I learned in school | 3 |
| Other: I approach design based on my own experiences | 2 |
| I follow company/industry standards for design | 1 |
| Other: I approach design based on research and the way I learned in school | 1 |
| Other: I approach design based on the customers’ needs | 1 |
| I follow the recommendations of my managers | 0 |