Brain Functional Connectivity During First- and Third-Person Visual Imagery

Abstract

1. Introduction

1.1. Perspective Taking and First-Person Versus Third-Person Perspectives

1.2. Brain Underpinnings of First-Person and Third-Person Perspectives

2. Materials and Methods

2.1. Participants

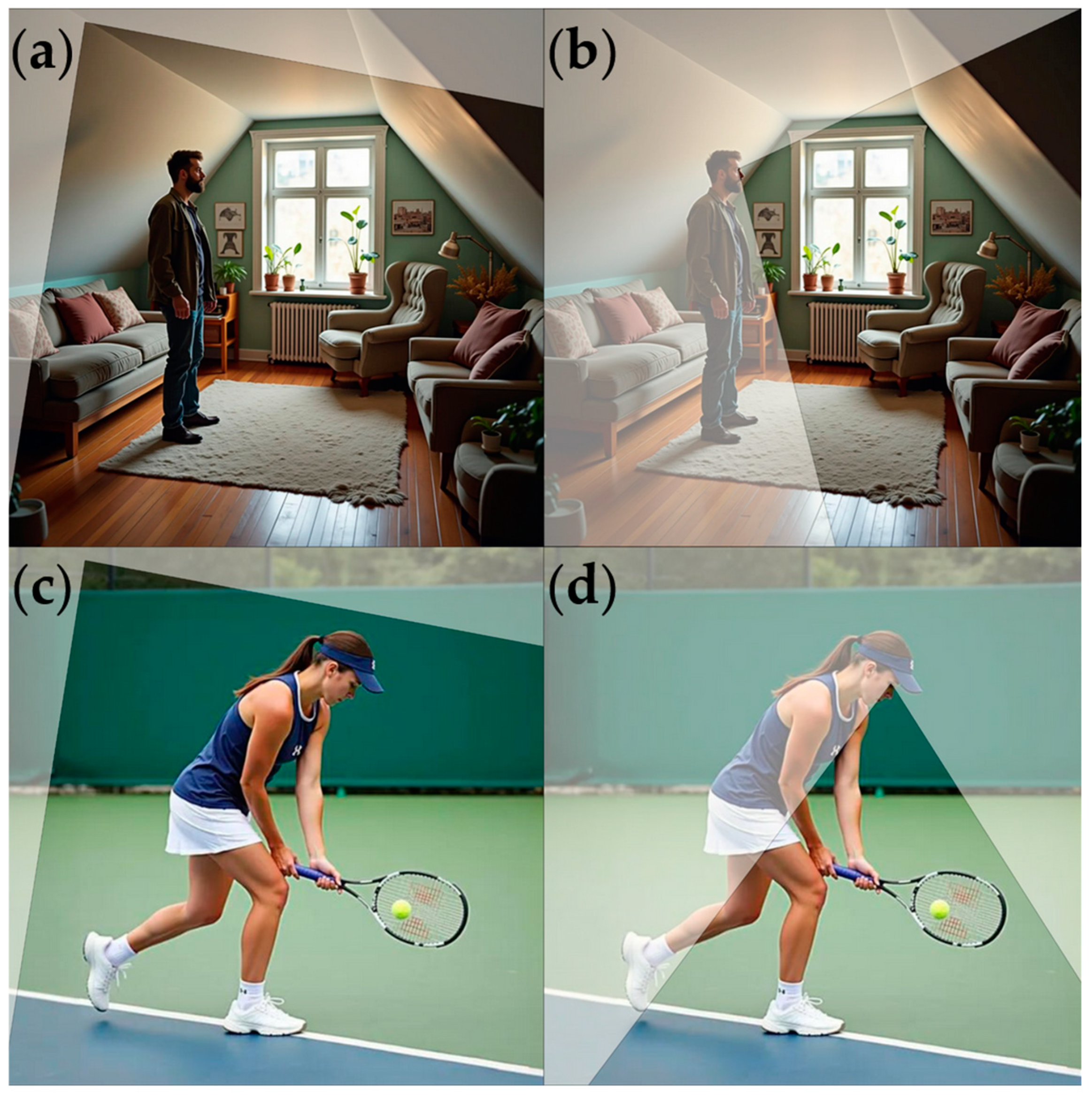

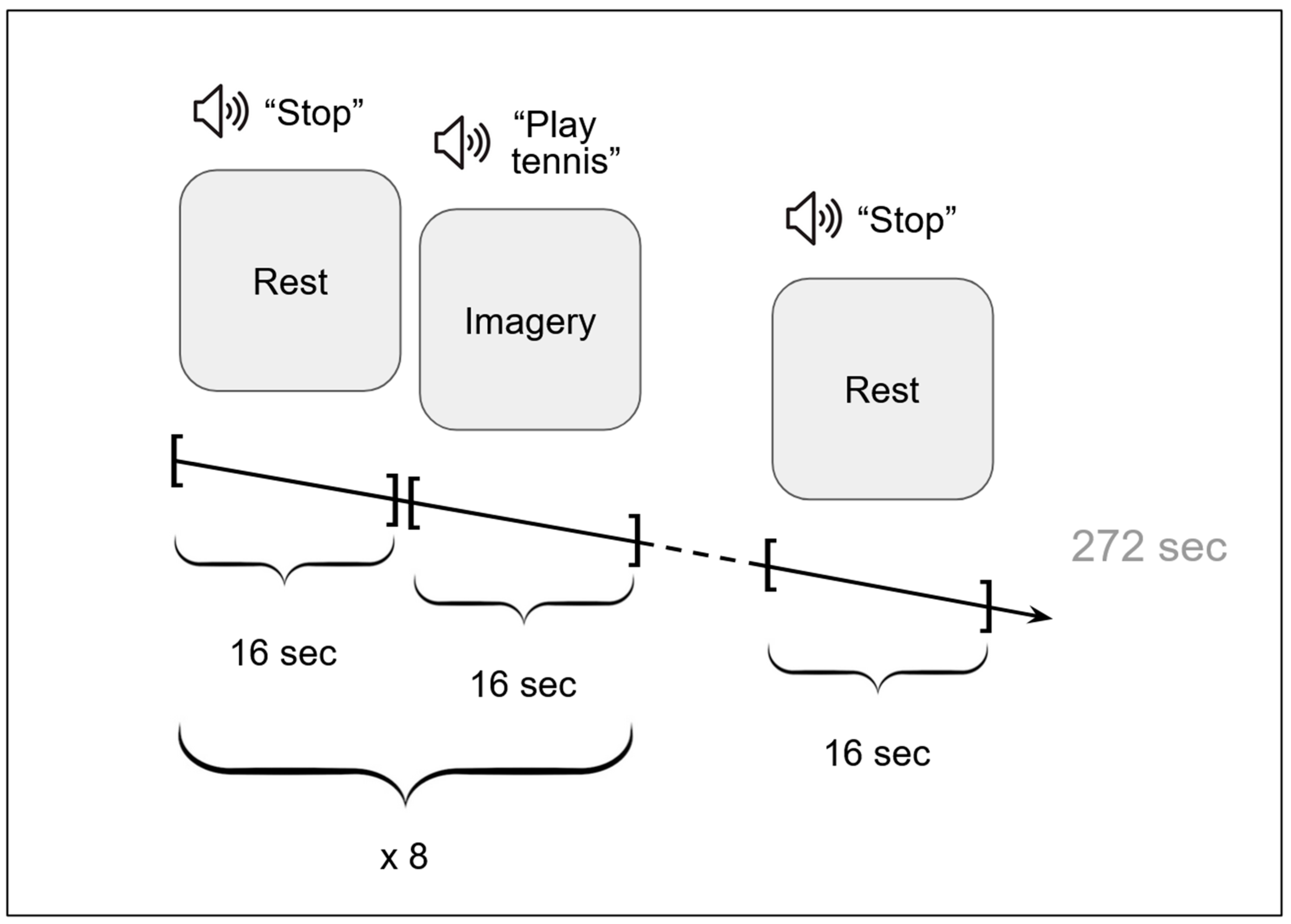

2.2. Procedure

2.3. Functional MRI Data Acquisition and Analysis

2.3.1. Functional MRI Data Acquisition

2.3.2. Functional MRI Data Analysis: Activation

2.3.3. Functional MRI Data Analysis: Functional Connectivity

3. Results

3.1. Subjective Reports

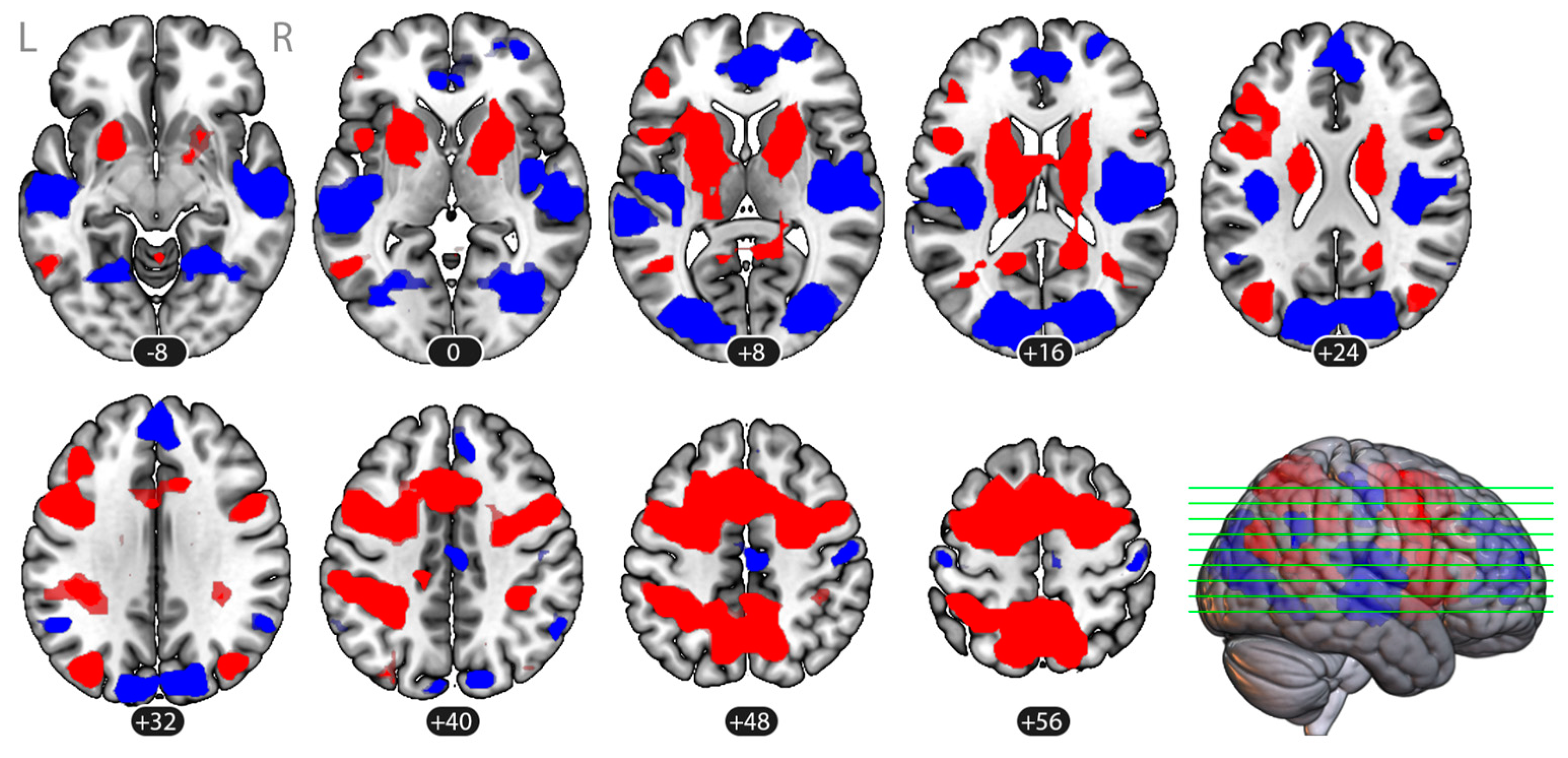

3.2. Brain Activation

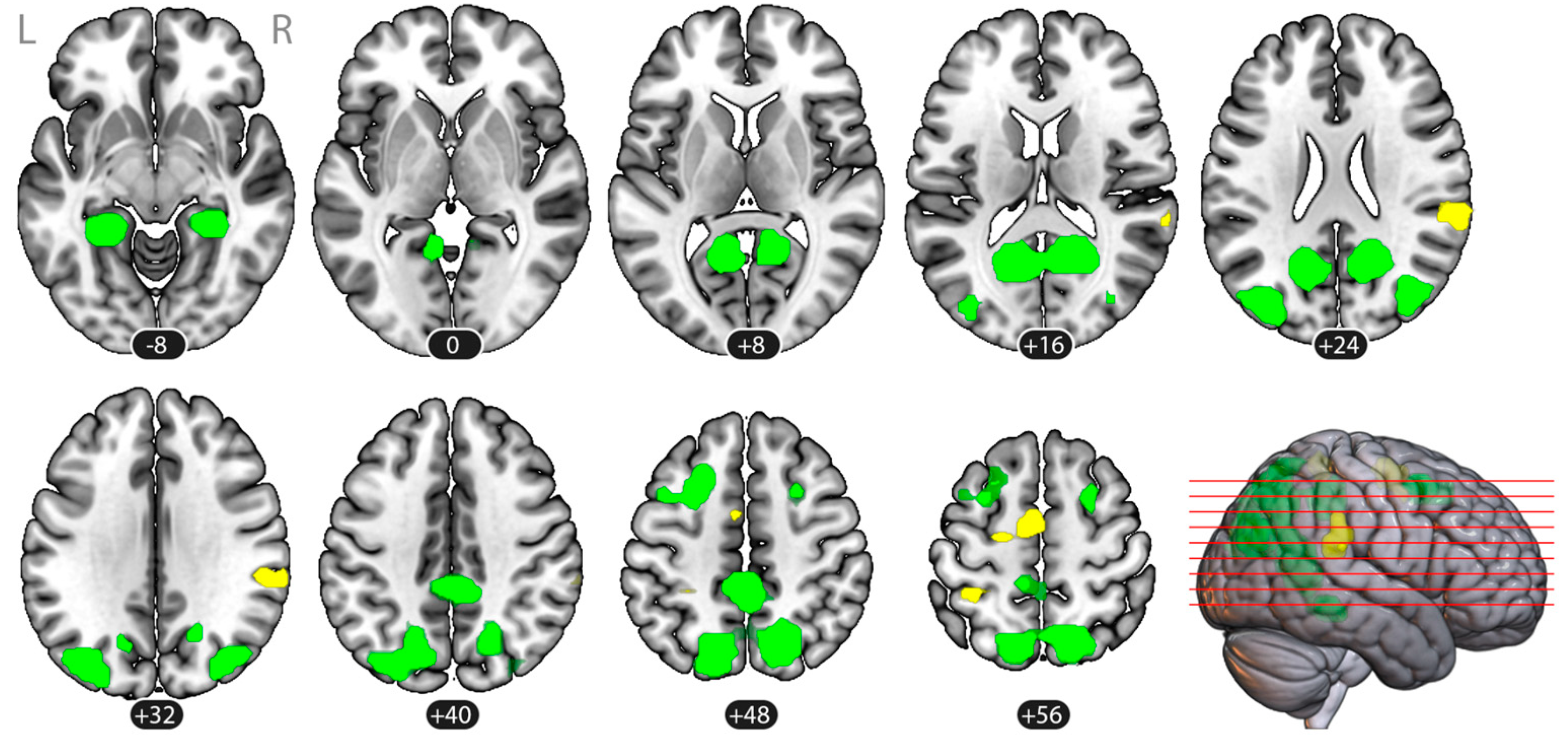

3.3. Task-Based Functional Connectivity

3.3.1. Voxel-to-Voxel Connectivity: Intrinsic Connectivity Contrast

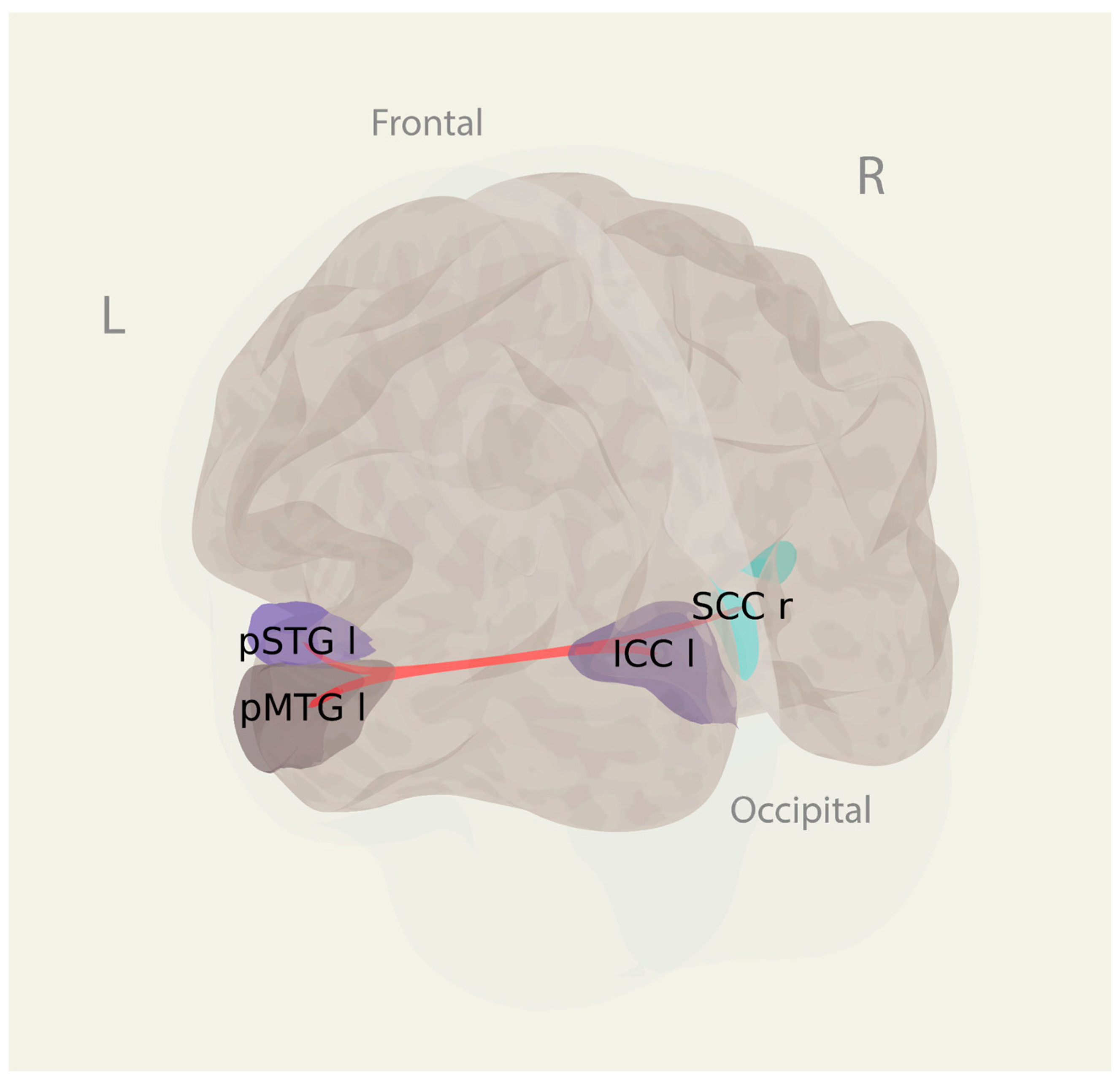
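
The intrinsic connectivity contrast (ICC) summarizes, for each voxel, how strongly its time course correlates with the rest of the brain. Below is a minimal sketch of one common formulation: the root mean square of a voxel's Pearson correlations with all other voxels. This is how the CONN toolbox documentation describes its ICC measure. This section does not state which exact variant produced the results above. The helper names and toy time series are hypothetical, and a real analysis would run on denoised, condition-specific data across all gray-matter voxels.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length time series (undefined if either is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def intrinsic_connectivity(series):
    """ICC per voxel: root mean square of its correlations with every other voxel.

    `series` holds one time series (list of floats) per voxel; at least two voxels are needed.
    """
    icc = []
    for i, si in enumerate(series):
        r_squared = [pearson(si, sj) ** 2 for j, sj in enumerate(series) if j != i]
        icc.append(math.sqrt(sum(r_squared) / len(r_squared)))
    return icc


# Hypothetical toy data: voxels 0 and 1 are perfectly correlated, voxel 2 only weakly.
voxels = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 4.0, 6.0, 8.0],
    [1.0, -1.0, 1.0, -1.0],
]
icc = intrinsic_connectivity(voxels)
```

Voxels whose signal tracks much of the brain receive high ICC values without any seed or threshold being chosen. A whole-brain version would use matrix operations rather than these nested loops.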

3.3.2. ROI-to-ROI Connectivity
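
ROI-to-ROI connectivity is commonly estimated by correlating region-averaged time series, Fisher-transforming the correlations, and comparing conditions across participants with a paired t test. That test has n − 1 degrees of freedom, so a label such as t(24) corresponds to 25 paired observations. The sketch below works under those assumptions. It leaves out preprocessing, denoising, and the extraction of condition-specific signals from a task run. Function names and data are hypothetical.

```python
import math
import statistics


def pearson(a, b):
    """Pearson correlation of two equal-length time series."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def fisher_z(r):
    """Fisher r-to-z transform (atanh); undefined for |r| = 1."""
    return math.atanh(r)


def roi_to_roi_z(roi_a, roi_b):
    """Connectivity between two ROI-averaged time series, as a Fisher z value."""
    return fisher_z(pearson(roi_a, roi_b))


def paired_t(cond1, cond2):
    """Paired t statistic across participants and its degrees of freedom (n - 1)."""
    diffs = [x - y for x, y in zip(cond1, cond2)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Hypothetical per-participant z values for one connection in two imagery conditions
first_person = [0.42, 0.35, 0.51, 0.30, 0.47]
third_person = [0.20, 0.28, 0.33, 0.25, 0.31]
t, df = paired_t(first_person, third_person)
```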

4. Discussion

4.1. Brain Activity and Connectivity Specific for the First-Person and Third-Person Perspectives in Imagery

4.2. Study Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Cluster | Volume, Voxels (mm³) | pFDR | MNI x (Center of Mass) | MNI y | MNI z | Region Labels ¹ |
|---|---|---|---|---|---|---|
| Imagery > Rest (Baseline) | | | | | | |
| (1) Frontal Bilateral | 1961 (125,504) | <0.001 | −7 | 2 | 32 | Middle Frontal Gyrus (MidFG), LR; Precentral Gyrus (PreCG), LR; Superior Frontal Gyrus (SFG), LR; Supplementary Motor Cortex (SMA), LR; Thalamus, LR; Putamen, LR |
| (2) Parietal Bilateral | 665 (42,560) | <0.001 | −14 | −52 | 52 | Precuneous Cortex; Superior Parietal Lobule (SPL), LR; Lateral Occipital Cortex, superior division (sLOC), LR; Postcentral Gyrus (PostCG), L; Supramarginal Gyrus, posterior division (pSMG), L |
| (3) Occipito-temporal Left | 127 (8128) | <0.001 | −42 | −68 | 19 | Lateral Occipital Cortex, superior division (sLOC), L; Middle Temporal Gyrus, temporooccipital part (toMTG), L |
| (4) Occipital Right | 59 (3776) | 0.002 | 40 | −69 | 25 | Lateral Occipital Cortex, superior division (sLOC), R |
| (5) Parietal Right | 33 (2112) | 0.020 | 36 | −39 | 39 | Supramarginal Gyrus, posterior division (pSMG), R; Superior Parietal Lobule (SPL), R |
| Rest (Baseline) > Imagery | | | | | | |
| (1) Right Operculum | 658 (28,032) | <0.001 | 48 | −17 | 8 | Central Opercular Cortex (CO), R; Parietal Operculum Cortex (PO), R; Insular Cortex (IC), R; Middle Temporal Gyrus, posterior division (pMTG), R; Superior Temporal Gyrus, posterior division (pSTG), R; Heschl’s Gyrus (HG), R; Planum Temporale (PT), R |
| (2) Left Operculum | 354 (42,112) | <0.001 | −49 | −23 | 7 | Central Opercular Cortex (CO), L; Parietal Operculum Cortex (PO), L; Insular Cortex (IC), L; Middle Temporal Gyrus, posterior division (pMTG), L; Superior Temporal Gyrus, posterior division (pSTG), L; Heschl’s Gyrus (HG), L; Planum Temporale (PT), L; Planum Operculum (PO), L |
| (3) Occipital Bilateral | 438 (22,656) | <0.001 | 5 | −79 | 14 | Lateral Occipital Cortex, superior division (sLOC), LR; Lateral Occipital Cortex, inferior division (iLOC), LR; Cuneal Cortex, LR; Occipital Pole (OP), LR; Lingual Gyrus (LG), LR |
| (4) Medial Frontal | 281 (17,984) | <0.001 | 7 | 48 | 16 | Paracingulate Gyrus (PaCiG), LR; Cingulate Gyrus, anterior division (AC); Frontal Pole (FP), R; Superior Frontal Gyrus (SFG), LR |
| (5) Left Angular Gyrus | 25 (1600) | 0.028 | −53 | −54 | 31 | Angular Gyrus (AG), L |
| (6) Right Angular Gyrus | 24 (1536) | 0.028 | 55 | −54 | 35 | Angular Gyrus (AG), R |
| (7) Posterior Cingulate | 36 (2304) | 0.014 | 4 | −19 | 46 | Cingulate Gyrus, posterior division (PC); Precentral Gyrus (PreCG), R |
| (8) Right Postcentral | 32 (2048) | 0.017 | 49 | −20 | 52 | Postcentral Gyrus (PostCG), R |
| (9) Left Postcentral | 24 (1536) | 0.028 | −44 | −22 | 61 | Postcentral Gyrus (PostCG), L |
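
In the volume column above, most rows satisfy volume (mm³) = voxels × 64, for example 1961 × 64 = 125,504. This is consistent with 64 mm³ voxels, such as a 4 × 4 × 4 mm grid. That voxel size is an inference from the numbers and is not stated in this section. The small check below, with hypothetical helper names, converts voxel counts to volumes under that assumption and lists rows where the two printed numbers disagree.

```python
# Assumption: 64 mm^3 per voxel, inferred from rows such as 1961 voxels -> 125,504 mm^3;
# the voxel size itself is not stated in this section.
VOXEL_MM3 = 64


def volume_mm3(n_voxels, voxel_mm3=VOXEL_MM3):
    """Cluster volume in mm^3 from its voxel count."""
    return n_voxels * voxel_mm3


def inconsistent_rows(rows, voxel_mm3=VOXEL_MM3):
    """Labels of rows whose printed mm^3 value differs from voxels * voxel_mm3."""
    return [label for label, voxels, mm3 in rows if volume_mm3(voxels, voxel_mm3) != mm3]


# (label, voxels, mm^3) copied from the Imagery/Rest table above
ROWS = [
    ("Imagery > Rest (1) Frontal Bilateral", 1961, 125504),
    ("Imagery > Rest (2) Parietal Bilateral", 665, 42560),
    ("Rest > Imagery (1) Right Operculum", 658, 28032),
    ("Rest > Imagery (2) Left Operculum", 354, 42112),
    ("Rest > Imagery (3) Occipital Bilateral", 438, 22656),
    ("Rest > Imagery (4) Medial Frontal", 281, 17984),
]

flagged = inconsistent_rows(ROWS)
```

When applied to the values as printed, the check flags the first three Rest > Imagery clusters. Each of their mm³ values equals 64 times the voxel count of one of the other two flagged rows, so it is unclear which number is correct for these rows.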

| Cluster | Volume, Voxels (mm³) | pFDR | MNI x (Center of Mass) | MNI y | MNI z | Region Labels ¹ |
|---|---|---|---|---|---|---|
| Tennis > Navigation | | | | | | |
| (1) SMA | 56 (3584) | 0.001 | −9 | −7 | 59 | Supplementary Motor Cortex (SMA), LR; Precentral Gyrus (PreCG), L |
| (2) Right Supramarginal Gyrus | 51 (3264) | 0.001 | 61 | −34 | 27 | Supramarginal Gyrus, posterior division (pSMG), R; Supramarginal Gyrus, anterior division (aSMG), R; Parietal Operculum Cortex (PO), R |
| (3) Left Superior Parietal | 32 (2048) | 0.008 | −35 | −43 | 60 | Superior Parietal Lobule (SPL), L |
| Navigation > Tennis | | | | | | |
| (1) Left Parahippocampal | 51 (3264) | 0.003 | −26 | −41 | −10 | Parahippocampal Gyrus, posterior division (pPaHC), L; Lingual Gyrus (LG), L; Hippocampus, L |
| (2) Right Parahippocampal | 36 (2304) | 0.010 | 27 | −38 | −10 | Parahippocampal Gyrus, posterior division (pPaHC), R; Lingual Gyrus (LG), R; Hippocampus, R |
| (3) Bilateral Occipito-temporal | 766 (49,024) | <0.001 | −4 | −62 | 34 | Precuneous Cortex; Lateral Occipital Cortex, superior division (sLOC), LR; Cingulate Gyrus, posterior division (PC) |
| (4) Right Lateral Occipital Cortex | 74 (4736) | 0.001 | 38 | −76 | 28 | Lateral Occipital Cortex, superior division (sLOC), R |
| (5) Left Frontal | 66 (4224) | 0.001 | −28 | 13 | 50 | Middle Frontal Gyrus (MidFG), L; Superior Frontal Gyrus (SFG), L |
| (6) Right Frontal | 22 (1408) | 0.035 | 26 | 9 | 53 | Middle Frontal Gyrus (MidFG), R; Superior Frontal Gyrus (SFG), R |
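
The coordinate columns give each cluster's center of mass in MNI space. For an unweighted center of mass, this is the average MNI position of the cluster's voxels, obtained by mapping voxel indices through the image's affine. Some tools weight voxels by their statistic instead, and the tables do not say which convention was used. The sketch below assumes the unweighted form. The 4 mm affine and the three-voxel cluster are hypothetical; a real analysis reads the affine from the image header.

```python
# Hypothetical voxel-to-MNI affine for a 4 mm grid (rows: x, y, z).
AFFINE = [
    [-4.0, 0.0, 0.0, 90.0],
    [0.0, 4.0, 0.0, -126.0],
    [0.0, 0.0, 4.0, -72.0],
]


def voxel_to_mni(ijk, affine=AFFINE):
    """Map voxel indices (i, j, k) to MNI millimetres with a 3 x 4 affine."""
    i, j, k = ijk
    return tuple(r[0] * i + r[1] * j + r[2] * k + r[3] for r in affine)


def center_of_mass(cluster_voxels, affine=AFFINE):
    """Unweighted mean MNI position (x, y, z) of all voxels in a cluster."""
    points = [voxel_to_mni(v, affine) for v in cluster_voxels]
    n = len(points)
    return tuple(sum(p[axis] for p in points) / n for axis in range(3))


# Toy cluster of three hypothetical voxels
com = center_of_mass([(10, 20, 30), (11, 20, 30), (12, 21, 30)])
```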

| Analysis Unit | Mass | t(24) | p-unc. | pFDR |
|---|---|---|---|---|
| Network 1/2 | 108.87 | | 0.022553 | 0.045 |
| Connection ICCl–pSTGl | | 4.76 | 0.000077 | 0.421 |
| Connection pMTGl–ICCl | | 4.19 | 0.000327 | 0.800 |
| Connection pMTGl–SCCr | | 3.78 | 0.000926 | 0.999 |
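
The pFDR columns report false discovery rate corrected p-values, but the tables do not name the FDR procedure. For illustration only, the sketch below implements the Benjamini-Hochberg adjustment. One reading of "Network 1/2" (not stated in the source) is that it is the first of two networks. Under that reading, an uncorrected p of 0.022553 becomes 0.022553 × 2 / 1 ≈ 0.045 under Benjamini-Hochberg, provided the other network's p is at least that large. This agrees with the reported network pFDR. Because the second network's p is not reported, the value 0.5 below is a placeholder.

```python
def bh_adjust(pvals):
    """Benjamini-Hochberg FDR-adjusted p-values, returned in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # indices from smallest to largest p
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p down so adjusted values never decrease with rank.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvals[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# First value: the uncorrected network p from the table; second: a placeholder,
# since the other network's p is not reported here.
network_p = [0.022553, 0.5]
network_pfdr = bh_adjust(network_p)
```

Connection-level pFDR values depend on the full set of tested connections, which this table does not list. They therefore cannot be recomputed from the three rows shown.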

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pechenkova, E.; Rachinskaya, M.; Vasilenko, V.; Blazhenkova, O.; Mershina, E. Brain Functional Connectivity During First- and Third-Person Visual Imagery. Vision 2025, 9, 30. https://doi.org/10.3390/vision9020030
