Systematic Review of the Longitudinal Sensitivity of Precision Tasks in Visual Working Memory

Abstract

1. Introduction

2. Methodology

2.1. Eligibility Criteria

2.2. Information Sources and Search

2.3. Study Selection

2.4. Data Collection Processes

2.5. Data Items

2.6. Risk of Bias in Individual Studies

2.7. Summary Measures

2.8. Synthesis of Results

2.9. Risk of Bias across Studies

3. Results

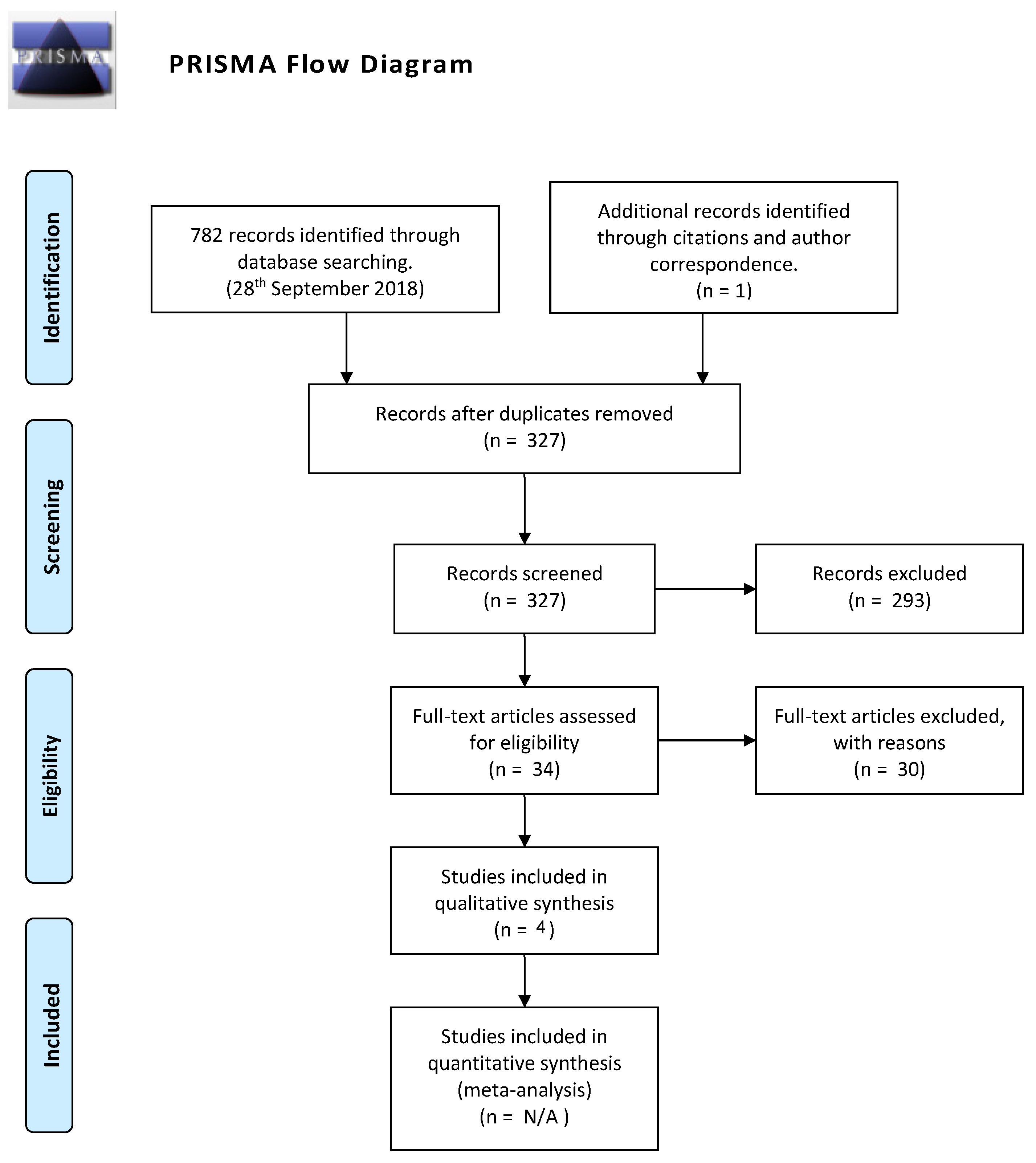

3.1. Study Selection

3.2. Study Characteristics

3.3. Results of Individual Studies

3.4. Synthesis of Results

3.5. Risk of Bias across Studies

3.6. Risk of Bias within Studies

3.7. Additional Analysis

4. Discussion

4.1. Summary of Evidence

4.2. Limitations

4.3. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Baddeley, A. Working Memory: Looking Back and Looking Forward. Nat. Rev. Neurosci. 2003, 4, 829–839.
2. Fukuda, K.; Vogel, E.; Mayr, U.; Awh, E. Quantity, Not Quality: The Relationship between Fluid Intelligence and Working Memory Capacity. Psychon. Bull. Rev. 2010, 17, 673–679.
3. Unsworth, N.; Fukuda, K.; Awh, E.; Vogel, E.K. Working Memory and Fluid Intelligence: Capacity, Attention Control, and Secondary Memory Retrieval. Cognit. Psychol. 2014, 71, 1–26.
4. Pertzov, Y.; Manohar, S.; Husain, M. Rapid Forgetting Results from Competition over Time between Items in Visual Working Memory. J. Exp. Psychol. Learn. Mem. Cogn. 2017, 43, 528–536.
5. Lee, E.-Y.; Cowan, N.; Vogel, E.K.; Rolan, T.; Valle-Inclan, F.; Hackley, S.A. Visual Working Memory Deficits in Patients with Parkinson’s Disease Are Due to Both Reduced Storage Capacity and Impaired Ability to Filter out Irrelevant Information. Brain 2010, 133, 2677–2689.
6. Lenartowicz, A.; Delorme, A.; Walshaw, P.D.; Cho, A.L.; Bilder, R.M.; McGough, J.J.; McCracken, J.T.; Makeig, S.; Loo, S.K. Electroencephalography Correlates of Spatial Working Memory Deficits in Attention-Deficit/Hyperactivity Disorder: Vigilance, Encoding, and Maintenance. J. Neurosci. 2014, 34, 1171–1182.
7. Vogel, E.K.; Woodman, G.F.; Luck, S.J. The Time Course of Consolidation in Visual Working Memory. J. Exp. Psychol. Hum. Percept. Perform. 2006, 32, 1436–1451.
8. Zokaei, N.; Heider, M.; Husain, M. Attention Is Required for Maintenance of Feature Binding in Visual Working Memory. Q. J. Exp. Psychol. 2014, 67, 1191–1213.
9. Zokaei, N.; Burnett Heyes, S.; Gorgoraptis, N.; Budhdeo, S.; Husain, M. Working Memory Recall Precision Is a More Sensitive Index than Span. J. Neuropsychol. 2015, 9, 319–329.
10. Burnett Heyes, S.; Zokaei, N.; Husain, M. Longitudinal Development of Visual Working Memory Precision in Childhood and Early Adolescence. Cogn. Dev. 2016, 39, 36–44.
11. Peich, M.-C.; Husain, M.; Bays, P.M. Age-Related Decline of Precision and Binding in Visual Working Memory. Psychol. Aging 2013, 28, 729–743.
12. Moher, D.; Liberati, A.; Tetzlaff, J.; Altman, D.G. Preferred Reporting Items for Systematic Reviews and Meta-Analyses: The PRISMA Statement. PLoS Med. 2009, 6, e1000097.
13. Jongbloed-Pereboom, M.; Janssen, A.J.W.M.; Steenbergen, B.; Nijhuis-van der Sanden, M.W.G. Motor Learning and Working Memory in Children Born Preterm: A Systematic Review. Neurosci. Biobehav. Rev. 2012, 36, 1314–1330.
14. Trevethan, R. Intraclass Correlation Coefficients: Clearing the Air, Extending Some Cautions, and Making Some Requests. Health Serv. Outcomes Res. Methodol. 2017, 17, 127–143.
15. Adam, K.C.S.; Vogel, E.K. Improvements to Visual Working Memory Performance with Practice and Feedback. PLoS ONE 2018, 13, e0203279.
16. Younger, J.W.; Laughlin, K.D.O.; Anguera, J.A.; Bunge, S.A.; Ferrer, E.; Hoeft, F.; McCandliss, B.D.; Mishra, J.; Rosenberg-Lee, M.; Gazzaley, A.; et al. More Alike than Different: Novel Methods for Measuring and Modeling Executive Function Development. 2021; preprint.
17. Fallon, S.J.; Mattiesing, R.M.; Muhammed, K.; Manohar, S.; Husain, M. Fractionating the Neurocognitive Mechanisms Underlying Working Memory: Independent Effects of Dopamine and Parkinson’s Disease. Cereb. Cortex 2017, 27, 5727–5738.
18. Bays, P.M.; Catalao, R.F.G.; Husain, M. The Precision of Visual Working Memory Is Set by Allocation of a Shared Resource. J. Vis. 2009, 9, 7.
19. Coe, R. It’s the Effect Size, Stupid: What Effect Size Is and Why It Is Important. In Proceedings of the Annual Conference of the British Educational Research Association, Exeter, UK, 12–14 September 2002.
20. Lakens, D. Calculating and Reporting Effect Sizes to Facilitate Cumulative Science: A Practical Primer for t-Tests and ANOVAs. Front. Psychol. 2013, 4, 863.
21. Isbell, E.; Fukuda, K.; Neville, H.J.; Vogel, E.K. Visual Working Memory Continues to Develop through Adolescence. Front. Psychol. 2015, 6, 696.
22. Eisinga, R.; Grotenhuis, M.; Pelzer, B. The Reliability of a Two-Item Scale: Pearson, Cronbach, or Spearman-Brown? Int. J. Public Health 2013, 58, 637–642.
23. Schatz, P.; Ferris, C.S. One-Month Test–Retest Reliability of the ImPACT Test Battery. Arch. Clin. Neuropsychol. 2013, 28, 499–504.
24. Tavakol, M.; Dennick, R. Making Sense of Cronbach’s Alpha. Int. J. Med. Educ. 2011, 2, 53–55.
25. Brady, T.F.; Störmer, V.S.; Alvarez, G.A. Working Memory Is Not Fixed-Capacity: More Active Storage Capacity for Real-World Objects than for Simple Stimuli. Proc. Natl. Acad. Sci. USA 2016, 113, 7459–7464.
26. Brady, T.F.; Alvarez, G.A. Contextual Effects in Visual Working Memory Reveal Hierarchically Structured Memory Representations. J. Vis. 2015, 15, 6.
27. Hardman, K.O.; Cowan, N. Remembering Complex Objects in Visual Working Memory: Do Capacity Limits Restrict Objects or Features? J. Exp. Psychol. Learn. Mem. Cogn. 2015, 41, 325–347.
28. Lew, T.F.; Vul, E. Ensemble Clustering in Visual Working Memory Biases Location Memories and Reduces the Weber Noise of Relative Positions. J. Vis. 2015, 15, 10.
29. Rerko, L.; Oberauer, K.; Lin, H.-Y. Spatial Transposition Gradients in Visual Working Memory. Q. J. Exp. Psychol. 2014, 67, 3–15.
30. Rideaux, R.; Apthorp, D.; Edwards, M. Evidence for Parallel Consolidation of Motion Direction and Orientation into Visual Short-Term Memory. J. Vis. 2015, 15.
31. Awh, E.; Barton, B.; Vogel, E.K. Visual Working Memory Represents a Fixed Number of Items Regardless of Complexity. Psychol. Sci. 2007, 18, 622–628.
32. Schurgin, M.W.; Wixted, J.T.; Brady, T.F. Psychophysical Scaling Reveals a Unified Theory of Visual Memory Strength. Nat. Hum. Behav. 2020, 4, 1156–1172.
33. Cowan, N. The Magical Number 4 in Short-Term Memory: A Reconsideration of Mental Storage Capacity. Behav. Brain Sci. 2001, 24, 87–114; discussion 114.
34. Luck, S.J.; Vogel, E.K. The Capacity of Visual Working Memory for Features and Conjunctions. Nature 1997, 390, 279–281.
35. Todd, J.J.; Marois, R. Capacity Limit of Visual Short-Term Memory in Human Posterior Parietal Cortex. Nature 2004, 428, 751–754.
36. Bays, P.M.; Husain, M. Dynamic Shifts of Limited Working Memory Resources in Human Vision. Science 2008, 321, 851–854.
37. Gao, Z.; Li, J.; Liang, J.; Chen, H.; Yin, J.; Shen, M. Storing Fine Detailed Information in Visual Working Memory--Evidence from Event-Related Potentials. J. Vis. 2009, 9, 17.
38. Gao, Z.; Yin, J.; Xu, H.; Shui, R.; Shen, M. Tracking Object Number or Information Load in Visual Working Memory: Revisiting the Cognitive Implication of Contralateral Delay Activity. Biol. Psychol. 2011, 87, 296–302.
39. Matsuyoshi, D.; Osaka, M.; Osaka, N. Age and Individual Differences in Visual Working Memory Deficit Induced by Overload. Front. Psychol. 2014, 5, 384.
40. Oberauer, K.; Eichenberger, S. Visual Working Memory Declines When More Features Must Be Remembered for Each Object. Mem. Cognit. 2013, 41, 1212–1227.
41. Bae, G.Y.; Flombaum, J.I. Two Items Remembered as Precisely as One: How Integral Features Can Improve Visual Working Memory. Psychol. Sci. 2013, 24, 2038–2047.
42. Donkin, C.; Kary, A.; Tahir, F.; Taylor, R. Resources Masquerading as Slots: Flexible Allocation of Visual Working Memory. Cognit. Psychol. 2016, 85, 30–42.
43. He, X.; Zhang, W.; Li, C.; Guo, C. Precision Requirements Do Not Affect the Allocation of Visual Working Memory Capacity. Brain Res. 2015, 1602, 136–143.
44. Ikkai, A.; McCollough, A.W.; Vogel, E.K. Contralateral Delay Activity Provides a Neural Measure of the Number of Representations in Visual Working Memory. J. Neurophysiol. 2010, 103, 1963–1968.
45. Jonides, J.; Lewis, R.L.; Nee, D.E.; Lustig, C.A.; Berman, M.G.; Moore, K.S. The Mind and Brain of Short-Term Memory. Annu. Rev. Psychol. 2008, 59, 193–224.
46. Machizawa, M.G.; Goh, C.C.W.; Driver, J. Human Visual Short-Term Memory Precision Can Be Varied at Will When the Number of Retained Items Is Low. Psychol. Sci. 2012, 23, 554–559.
47. Ye, C.; Zhang, L.; Liu, T.; Li, H.; Liu, Q. Visual Working Memory Capacity for Color Is Independent of Representation Resolution. PLoS ONE 2014, 9, e91681.
48. Allen, R.J.; Baddeley, A.D.; Hitch, G.J. Evidence for Two Attentional Components in Visual Working Memory. J. Exp. Psychol. Learn. Mem. Cogn. 2014, 40, 1499–1509.
49. Berry, E.D.J.; Waterman, A.H.; Baddeley, A.D.; Hitch, G.J.; Allen, R.J. The Limits of Visual Working Memory in Children: Exploring Prioritization and Recency Effects with Sequential Presentation. Dev. Psychol. 2018, 54, 240–253.
50. Hu, Y.; Hitch, G.J.; Baddeley, A.D.; Zhang, M.; Allen, R.J. Executive and Perceptual Attention Play Different Roles in Visual Working Memory: Evidence from Suffix and Strategy Effects. J. Exp. Psychol. Hum. Percept. Perform. 2014, 40, 1665–1678.
51. Luciana, M.; Conklin, H.M.; Hooper, C.J.; Yarger, R.S. The Development of Nonverbal Working Memory and Executive Control Processes in Adolescents. Child Dev. 2005, 76, 697–712.
52. Veksler, B.Z.; Boyd, R.; Myers, C.W.; Gunzelmann, G.; Neth, H.; Gray, W.D. Visual Working Memory Resources Are Best Characterized as Dynamic, Quantifiable Mnemonic Traces. Top. Cogn. Sci. 2017, 9, 83–101.
53. Rudkin, S.J.; Pearson, D.G.; Logie, R.H. Executive Processes in Visual and Spatial Working Memory Tasks. Q. J. Exp. Psychol. 2007, 60, 79–100.
54. Simmering, V.R.; Miller, H.E.; Bohache, K. Different Developmental Trajectories across Feature Types Support a Dynamic Field Model of Visual Working Memory Development. Atten. Percept. Psychophys. 2015, 77, 1170–1188.
55. Swanson, H.L.; Kudo, M.F.; Van Horn, M.L. Does the Structure of Working Memory in EL Children Vary across Age and Two Language Systems? Memory 2019, 27, 174–191.
56. Brocki, K.C.; Bohlin, G. Executive Functions in Children Aged 6 to 13: A Dimensional and Developmental Study. Dev. Neuropsychol. 2004, 26, 571–593.
57. Luna, B.; Garver, K.E.; Urban, T.A.; Lazar, N.A.; Sweeney, J.A. Maturation of Cognitive Processes from Late Childhood to Adulthood. Child Dev. 2004, 75, 1357–1372.
58. Guillory, S.B.; Gliga, T.; Kaldy, Z. Quantifying Attentional Effects on the Fidelity and Biases of Visual Working Memory in Young Children. J. Exp. Child Psychol. 2018, 167, 146–161.
59. Simmering, V.R.; Miller, H.E. Developmental Improvements in the Resolution and Capacity of Visual Working Memory Share a Common Source. Atten. Percept. Psychophys. 2016, 78, 1538–1555.

| Database | Number of Abstracts Returned | Search Terminology |
|---|---|---|
| Embase | 184 | (EmTREE): these terms were largely unnecessary, as the search was narrowed by topic. |
| PubMed | 151 | (Visual working memory[Title]) AND ((resolution OR fidelity OR precis*) OR (* OR recall*)). Medical subject heading (MeSH) [short-term] was used (there were no narrower terms). |
| PsycINFO | 217 | (Visual working memory) AND (resolution OR fidelity OR precis*) OR (* OR recall*) |
| Cochrane Register of Controlled Trials | 90 | We determined the broadest search of relevant articles to be “visual working memory” or VWM. |
| Web of Science | 140 | (“Visual working memory”) Refined by topic: (resolution OR fidelity OR precis*) |

| Authors | Sample Size | Ages | Healthy/Clinical | Duration between Timepoints | Tasks (Trials) | Sequential/Whole Report | Control/Ancillary Tasks (Trials) | Attrition | Primary Outcomes |
|---|---|---|---|---|---|---|---|---|---|
| Zokaei et al. [9] | 126 (12 for Parkinson’s longitudinal component) | 51–79 | Both | 3 months | 3-item (90); 4-item (200); PD (100–200) | Sequential | Pre-cueing (200; PD patients 100–200); Sensorimotor (25; only completed by 10 healthy older participants); 1-item (200; PD patients 100–200) | None (only 12 PD patients) | Recall: Precision/Performance |
| Burnett Heyes et al. [10] | 40 | 7–13 | Healthy | 2 years | 3-item (90) | Sequential | Sensorimotor (25); 1-item (30) | 50 * | Recall: Precision/Performance |
| Fallon et al. [17] | 37 | Patient: mean 65; Healthy: mean 68 | Both (20 PD/17 healthy) | 1 week to 1 month | Healthy/PD: 2-item, 3 s (64/32); 2-item, 6 s (64/32) | Whole report | Healthy/PD: Update (64/32); Ignore (64/32) | Not reported, presumably none | Recall: Precision (kappa)/Performance |
| Adam and Vogel [15] | 79 (+35 later) | 18–35 | Healthy | 4 months | Orientation (2 × 30) | Whole report | Color-change detection (5 × 30); Visual search (5 × 48); Anti-saccade (4 × 36); Raven’s Advanced Progressive Matrices (10 min for 18 questions) | 7 (and 6 from the added 35-member group) | Mean performance (average correct); Change in poor performance |

| Author (Year) | Precision Performance | Recall Performance | One-Item Condition |
|---|---|---|---|
| Zokaei [9] | After three months of dopaminergic treatment, precision significantly increased: t(11) = 3.01, p = 0.012 | Significant improvement in performance across all positions: F(1,11) = 9.08, p = 0.012 | No significant difference |
| Burnett Heyes [10] | T1: 2.33 (1.08); T2: 2.80 (1.19). Student improvement from T1 to T2 (Z = 2.39, p = 0.017) | Variability around the probed target orientation improved significantly with age, without other sources of error changing: t(39) = 3.3, p = 0.002 | Z = 2.87, p = 0.004 (one outlier > 2.5 SD above the mean excluded) |
| Fallon [17] | Trials collapsed across participants on/off medication; no significant difference | Trials collapsed; no significant difference | N/A |

Others:

| Author (Year) | Performance Measure | Task Condition |
|---|---|---|
| Adam and Vogel [15] | Mean performance (average correct) | No improvement in group receiving training: t(47) = −1.68, p = 0.1. Improvement in group receiving no training: t(52) = −2.01, p = 0.05 |
| | Change in poor performance | Not calculated |

| Authors (Year) | Effect Sizes (Standardized) | Both Timepoints Reported | Sequential? | Analyses Used | Other |
|---|---|---|---|---|---|
| Zokaei et al. [9] | SD not provided; unknown if normally distributed | No | Individual target values not reported. F(3,33) = 2.5, p = 0.07. | t-test; mixture model | Only 12 PD patients were measured at two timepoints. 72 of 126 participants come from the Burnett Heyes study. Individual T1/T2 measurements not reported. |
| Burnett Heyes et al. [10] | 0.454 | Yes | Yes, improvement on items 1 and 2 but not 3. | Wilcoxon signed-rank test; t-test; mixture model | All-male, prep-school population. Large age range (7–13) for children at a key developmental period, with a sample too small to separate further by age. We calculated effect size using $r = Z/\sqrt{N}$, where Z is the Z test statistic and N is the sample size. |
| Fallon et al. [17] | Fallon determined that the difference was not statistically significant for PD patients on/off medication. | Graphed | N/A (whole report) | Mixed-effects model; mixed ANOVA; Wilcoxon signed-rank test; t-test | Very short period between timepoints (1–4 weeks). One participant could conceivably have 4× as much time between testing as other participants. |
| Adam and Vogel [15] | No improvement (see the preceding results table) | Graphed | N/A (whole report) | Mixed ANOVA; two-tailed t-tests | Focused primarily on motivational factors and effects of feedback on performance. |
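The effect size listed for Burnett Heyes et al. [10] can be checked with a short calculation. The sketch below assumes the common conversion r = Z/√N for a Wilcoxon signed-rank Z statistic; the function name `wilcoxon_effect_size` is hypothetical, and the Z and N values are taken from the tables above. Under that assumption, the reported 0.454 is reproduced by Z = 2.87 with N = 40, while Z = 2.39 gives a smaller value. This is an arithmetic observation only, not a statement about which statistic the review intended.

```python
import math


def wilcoxon_effect_size(z: float, n: int) -> float:
    """Return r = Z / sqrt(N), an assumed effect-size conversion for a Wilcoxon Z."""
    if n <= 0:
        raise ValueError("n must be a positive sample size")
    return z / math.sqrt(n)


# N = 40 participants in Burnett Heyes et al. [10], per the study table.
r_from_z_287 = wilcoxon_effect_size(2.87, 40)  # Z listed in the one-item column
r_from_z_239 = wilcoxon_effect_size(2.39, 40)  # Z listed in the precision column

print(round(r_from_z_287, 3))  # 0.454
print(round(r_from_z_239, 3))  # 0.378
```

By Cohen's conventional benchmarks for r (0.1 small, 0.3 medium, 0.5 large), both values fall in the medium range.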

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ades, J.; Mishra, J. Systematic Review of the Longitudinal Sensitivity of Precision Tasks in Visual Working Memory. Vision 2022, 6, 7. https://doi.org/10.3390/vision6010007
