1. Introduction

Optimal control of a dynamic system is a usual task in process engineering. If the process has mathematical properties sufficient to apply theoretical control laws, this task can sometimes be achieved without significant computational complexity. Other times the computational effort is important, or we do not dispose of known control techniques. In this case, metaheuristic algorithms integrated within appropriate control structures constitute a realistic solution. This kind of algorithms (see [

1,

2,

3]) have been used intensively in control engineering (see [

4,

5,

6,

7]) because of their robustness and capacity to cope with big complexity problems.

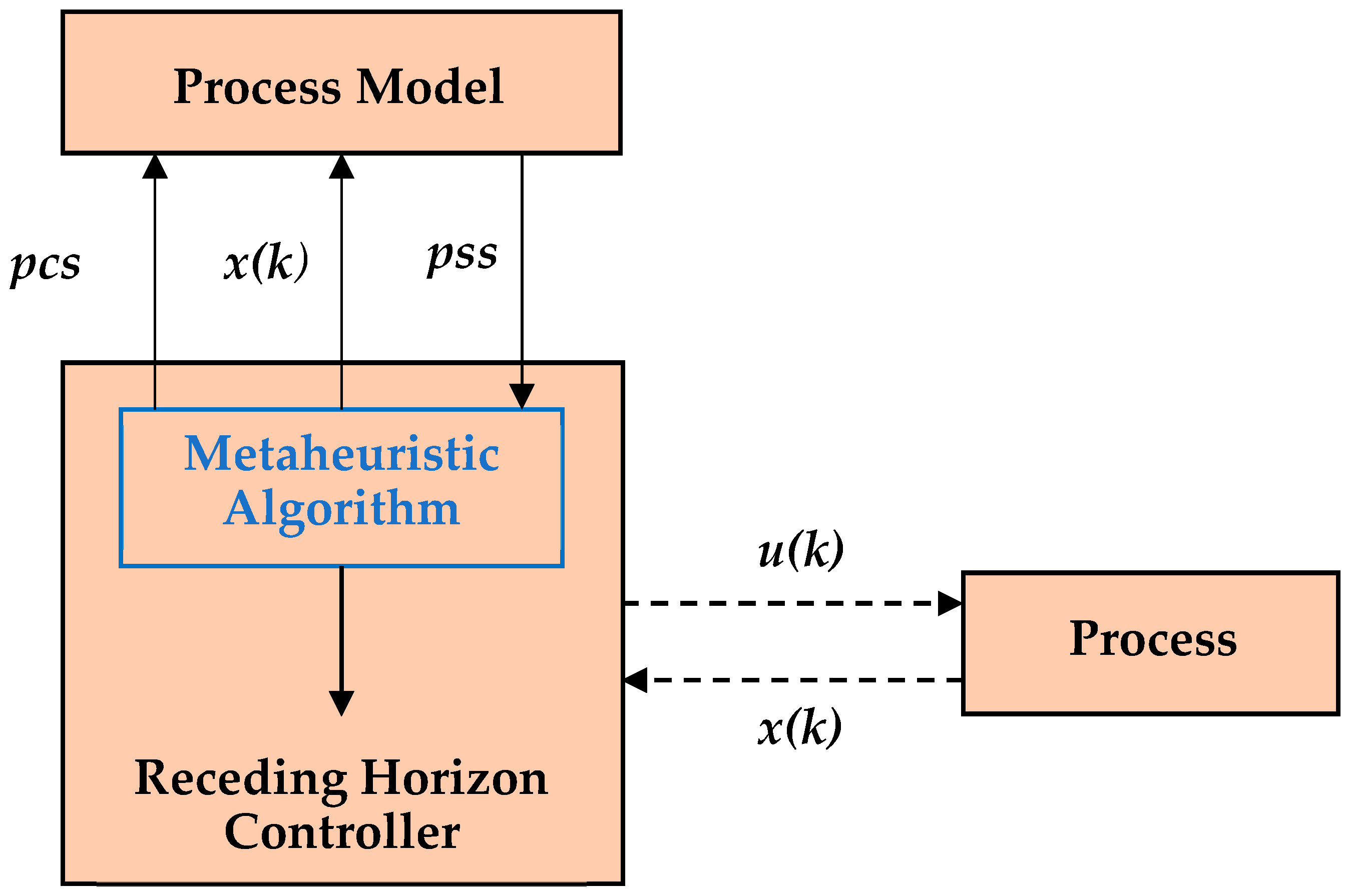

A metaheuristic algorithm (MA), like simulated annealing, genetic algorithm, evolutionary algorithm (AE), ant colony systems, particle swarm optimization, etc., is not by itself prepared to achieve a closed-loop control structure. That is why it needs to be integrated into a structure like Receding Horizon Control (RHC) (see [

8,

9,

10]) or Model Predictive Control ([

11,

12]). Both structures use prediction techniques that could involve MAs because prediction is usually optimization.

This work has rather practical relevance because it is first addressed to a practicing professional engineer who wants to implement an optimal control structure using an Evolutionary Algorithm (see [

11,

13,

14,

15]). More precisely, there are several requests and given data that define our work:

A real process has to be controlled over a given control horizon;

We dispose of a process model (usually nonlinear), usually a set of all algebraic and differential constraints imposed by the dynamic environment;

A closed-loop control structure has to solve an Optimal Control Problem (OCP). Its Controller should optimize an objective function and meet some constraints over the control horizon. The objective function contains a terminal penalty term, which renders the prediction of the optimal control sequence a complex task;

A basic version of the EA can find the optimal value of the objective function over the control horizon.

The RHC structure is a general and simple control structure involving a prediction technique that can fit with an evolutionary algorithm. For this reason, the solution proposed by this paper is based on the RHC structure whose Controller makes optimal predictions using an EA. The implementation aspects emphasized in our presentation mainly refer to two topics:

Section 2 briefly presents the elements that define an OCP and introduces the notations used in the next sections.

After recalling the RHC structure,

Section 3 proposes the first version of the Controller. This is organized as a function and includes the slightly modified basic version of the EA. At each sampling moment, the EA is executed to determine the optimal control sequence covering the prediction horizon. The Controller takes the first value of this sequence and makes it its current control output. After that, this value is applied to the process. At each call, the prediction horizon decreases and keeps the final moment of the control horizon (see for comparison [

9,

10,

11]).

In this work, we propose two versions of the Controller. The EA calls for a numerical integration function using a discretization step equal to the closed-loop sampling period. Consequently, the encoding of a control sequence uses a number of genes proportional to the prediction horizon’s length. The greater computation effort of the Controller is sustained in the first sampling period when the prediction horizon is the largest. Using simulations, the implementer has to decide whether the Controller’s execution time is less than the sampling period; that is, the Controller can be implemented.

The second version of the Controller, proposed in

Section 4, improves the computational complexity such that the execution time would be lower than a sampling period. Regardless of the prediction horizon’s length, the discretization steps’ number is constant. In other words, the encoding of a control sequence always uses the same number of genes. If the increase of the discretization steps does not significantly affect the computation accuracy, this version can improve the computational complexity and assure the feasibility of the Controller.

Section 5 presents a case study concerning a particular OCP and the controller implementation using an adequate EA. The performance index is a terminal penalty. The main objective is to show how a peculiar OCP can be solved and implemented using such a controller. Notice that we do not aim to solve a particular OCP and compare our solution with other approaches but, rather, highlight some implementation aspects. The resulting RHC structure will be simulated to validate the quasi-optimal behaviour of the closed loop.

The addressed problem deals with the

optimal control of a fed-batch reactor for

ethanol production (OCEP). First of all, an EA is chosen to solve this problem and yield an open-loop solution.

Section 5.1 describes an evolutionary algorithm (EAv0) that solves the OCEP problem. Although EAv0 does not refer to a closed-loop implementation, it will prove its capacity to solve the open-loop problem. It also allows us to assess the computational complexity. A simulation series is conducted to evaluate the performances of a typical solution and its state evolution.

The implementation of the Receding Horizon Controller for the OCEP problem is presented in

Section 5.2. EAv0 is slightly modified to obtain EAv1, which is a constituent of the Controller. The control sequence is encoded by a vector of real values whose dimension is proportional to the prediction horizon’s length. The closed-loop evolution (Controller’s outputs and the process’ states) is simulated, considering the real process, which is identical to the process model. The computation complexity is evaluated on the overall control horizon, using simplifying hypothesis.

A Controller’s second version—used to solve the OCEP problem—which can improve the computation complexity, is presented in

Section 5.3. Algorithm EAv0 is modified to generate EAv2, such that a control sequence is encoded by a constant number of real values, regardless of the prediction horizon’s length. The evolution of the closed loop, including the new Controller, is simulated. The computation complexity of EAv2 is analyzed in contrast with EAv1 to prove that the first one is smaller, at least in the first sampling periods.

The results of the simulation series have proved that the RHC structure with the Controller that is able to integrate an EA is feasible with good quasi-optimal behaviour.

2. Defining Elements of an Optimal Control Problem

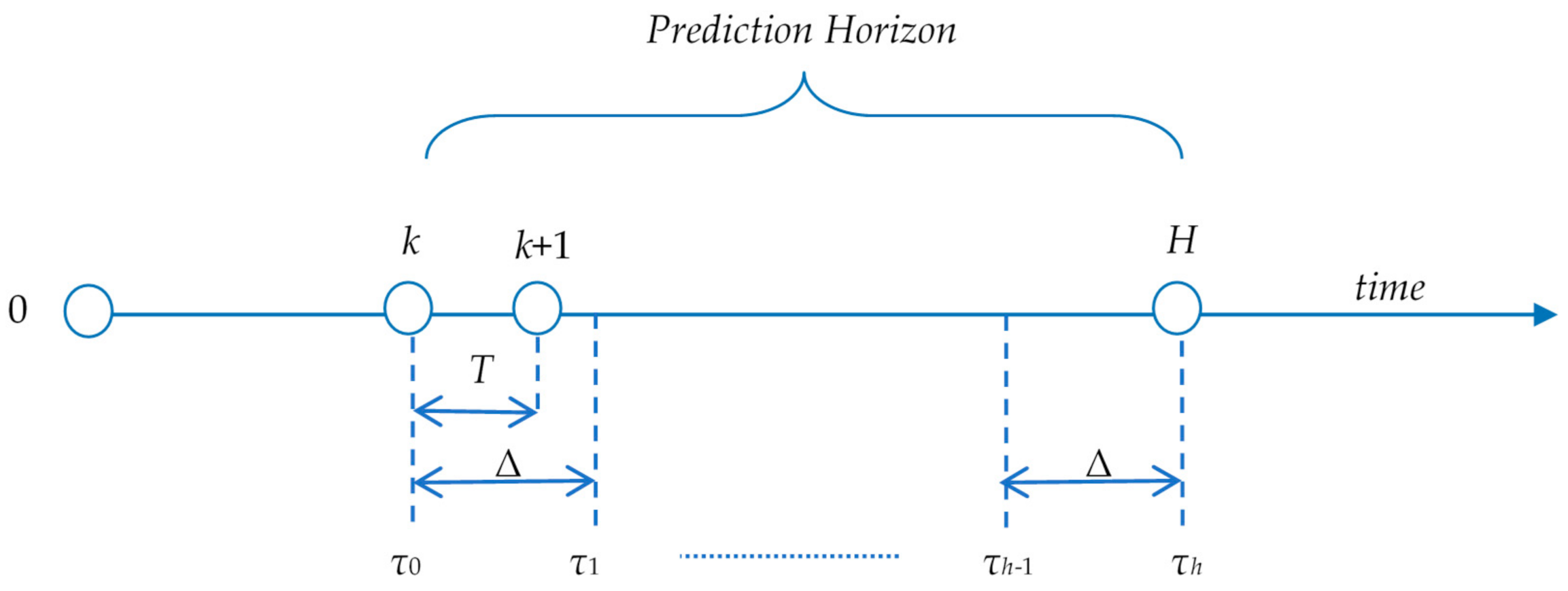

In the upcoming sections, we briefly recall the defining elements of an optimal control problem (OCP) and their usual notations, but we do so in a way that is adapted to the presentation of the next sections. Let us consider an OCP whose control horizon is finite, for example , where H is a positive integer and T is the sampling period. The discrete moments will be specified simply by .

We suppose that a discrete process model, used to simplify the presentation, is available and given in Equation (1). An eventual continuous model can always be converted into a discrete one.

The vectors , have m and n elements (), respectively, and f is an n-dimensional vector function with all regularity properties demanded by the calculations.

Any control sequence

, defined as below, meets certain constraints and determines the system evolution over the control horizon:

This system evolution has to be optimal under a performance index. The latter minimizes a specific objective function, which has the discrete form presented in Equation (3).

Equation (3) refers to the prediction horizon

and the state trajectory that starts with

. The system evolution is generated by a control sequence

having the structure presented in Equation (4). The resulting state trajectory can be denoted by

The first part of the sum presented in Equation (3) is a Lagrange-type term that measures quality along the trajectory of the dynamic system. The scalar value is the contribution of the interval to the quality measure J. The second part is a Mayer-type term that measures the quality of the trajectory in its final extremity. This term will be called the terminal penalty in the upcoming sections. The function TP(x) penalizes the final state x.

The performance index

of an optimal solution over the control horizon minimizes the objective function

J as below:

Obviously, J’s minimization (or maximization) can be subjected to constraints regarding the control inputs and the state variables.

5. Case Study

This section will present an example of implementing a metaheuristic-based controller that integrates an RHC structure.

We have considered a well-known problem that has been described in many articles, among which we recall [

16,

17]. This problem is known as the optimal control of a fed-batch reactor for ethanol production (OCEP) and concerns a nonlinear dynamic system. Different sub-cases are presented in these papers, which differ in control horizon and control bounds. We have considered the OCEP problem stated in

Appendix A, which presents all of the basic elements. Because our main objective is to construct the Controller using an AE, we have considered a fixed final time in contrast with other versions of the problem. Keeping notations, it holds:

The process model has

n state variables

representing the cell mass, substrate, product concentration (g/L), and volume (L). The control variable is the feed rate (L/h) that meets the following constraint:

The state variable

x4 has to meet the following constraint:

The objective function given below has to be maximized:

Although this one is a terminal penalty and the integral component is missing, the implementation maintains its difficulty: the process evolution of the entire control horizon must be determined when a pcs is adopted.

5.1. Open-Loop Solution Using an Evolutionary Algorithm

In this section, we present preliminary work before constructing the Controller for the closed-loop control structure. We have implemented and conducted an evolutionary algorithm (see [

1,

2,

3]) that solves the OCEP problem. It will be denoted by EAv0 (EA version 0) in the upcoming sections. The implementation of the EAv0 and the simulations were conducted using the MATLAB language and system. We have employed special functions devoted to integrating the differential equations for the simulation of the process evolution.

Let us recall that EAv0 determines the following quasi-optimal control input because our problem looks for the maximum value of

J:

This means that the control horizon is [0, H], and the initial state is x0.

This algorithm is the basis for the two versions of EA used inside the Controller. EAv0 is modified and adapted to become part of the optimal Controller.

As mentioned previously, EAv0 uses direct encoding and has the usual characteristics listed below:

For each generation, the population has μ individuals;

The children population has λ individuals ();

The population evolves over NGen generations;

The selection strategy uses stochastic universal sampling and the rank of individuals. The latter is scaled linearly using selection pressure (

s); see [

3] (pp. 205–221);

A BLX-α crossover operator;

Inside the mutation operator, the mutation step is subjected to a global variance adaptation. This one is conducted according to the “1/5 success rule”; see [

1] (pp. 245–274);

The replacement strategy involves that the children replace the λ worst individuals of the generation.

The most important parameters are given in

Table 3.

This algorithm yields a solution that is actually a quasi-optimal sequence of control inputs. It can be only used within an open-loop control structure. For the reasons mentioned before, this sequence is useless for a closed-loop control structure. Nevertheless, this phase is mandatory because it can validate the aptitude of the EA to solve the problem and evaluate the computational complexity.

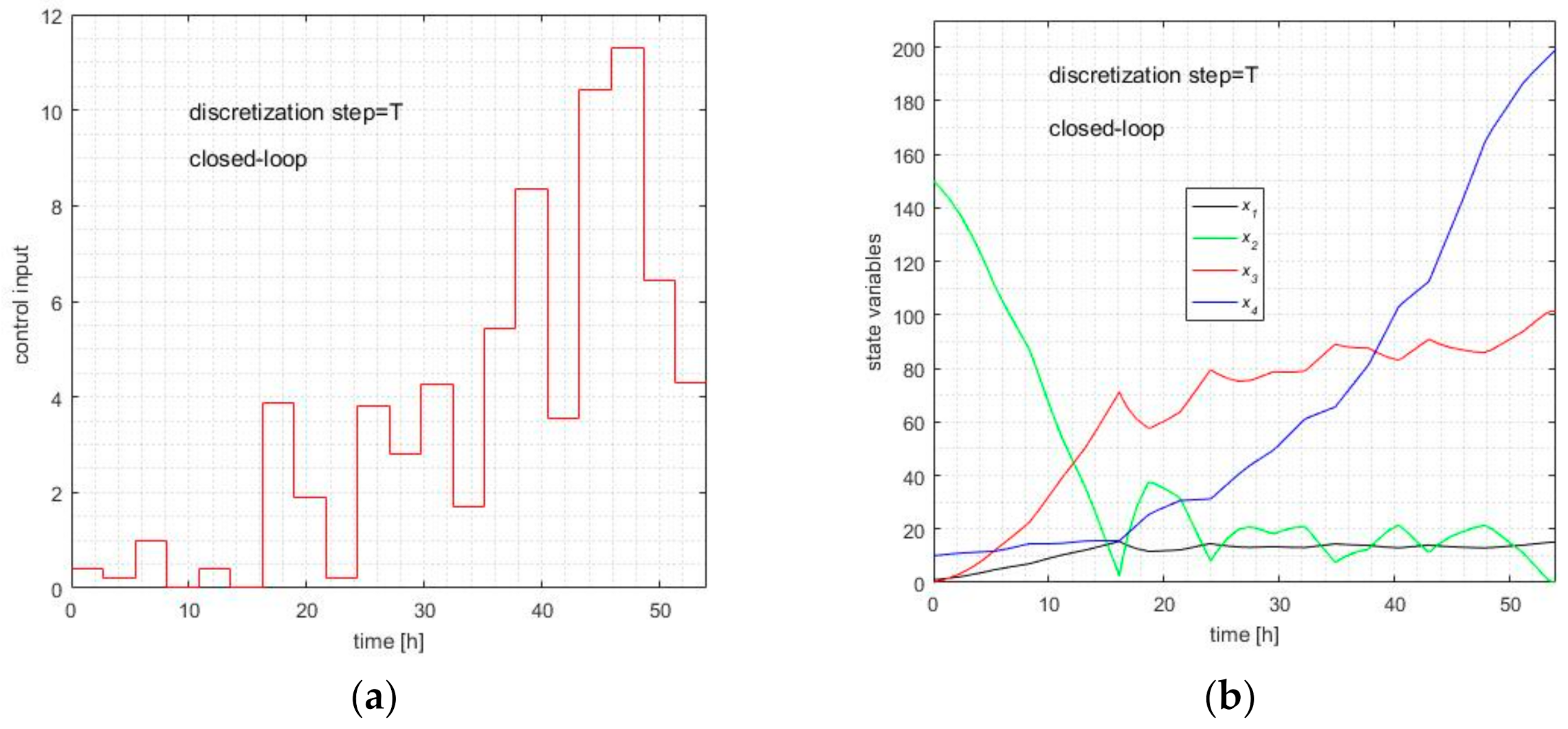

The resulting simulation program has a stochastic character; therefore, we cannot conclude after a single execution. We must repeat the execution more times to confer consistency to statistical parameters. That is why we have conducted a simulation series that has repeated times the execution of EAv0 30 times. The results, namely a performance index statistic, the quasi-optimal control input

u(t), and state evolution, are presented in

Table 4 and

Figure 3.

Table 4 shows some statistical parameters characterizing the 30 executions of EAv0. The minimum, average, and maximum values and standard deviation are indicated for the performance index. We consider typical execution as the particular simulation among the 30 that produce the values that are the closest to the average performance index. In our simulation series, there is an execution that yields

Jtypical = 19885.7. The quasi-optimal control input for the typical execution is depicted in

Figure 3a, and its state evolution in

Figure 3b.

Further details concerning EAv0 are given in

Appendix B.

5.2. Closed-Loop Solution Using an EA with Constant Discretization Step

This section aims to achieve the closed-loop control of the dynamic system involved in the OCEP problem using the Controller based on EAv1. We have implemented the Controller and a program that simulates how the closed loop works. The process model has been used to predict the ocs. We have also considered the same model to simulate the real process.

The Controller has the same structure as the one presented in

Table 1, except that it includes EAv1 (does not call a function). It is organized as a function called for each sampling period (see

Appendix B).

The Controller has the following characteristics:

Input parameters: k and x(k);

Output parameter: (the first element of the best solution, i.e., );

Control horizon: , with ; H = 20;

Number of genes: (see (8));

Discretization step: T;

Other parameters such as those in

Table 3.

EAv1—which is included not called by the Controller—has the same structure and characteristics as EAv0 (given in

Section 5.1).

The program that simulates the closed-loop optimal control has the outline presented in

Table 5. Essentially, the closed loop is achieved by a loop that simulates the Controller’s call for each sampling period and the feedback from the real process.

The Controller’s call is done in Line #3. It uses as an input parameter, which is the initial state that is stored in the global variable . The numerical integration of the process model is accomplished in Line #4. This algorithm is implemented as a MATLAB script.

We conducted a simulation series that executed the script “Closed-loop.m” 30 times. In our simulation series, there was an execution that yielded

Jtypical = 20,153.5. The performance index statistic, the quasi-optimal control input

u(t), and the state evolution are presented for typical execution in

Table 6,

Figure 4a,b, respectively.

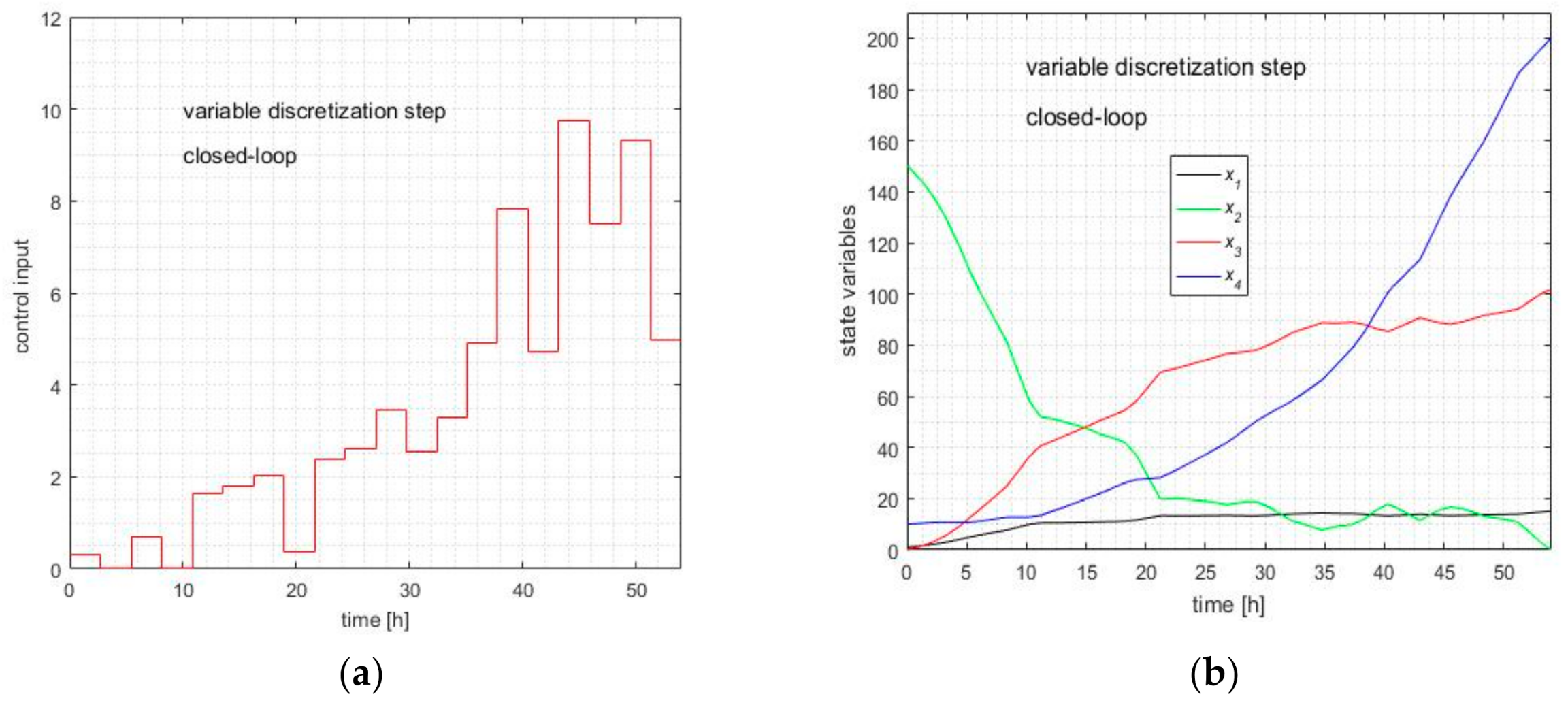

5.3. Closed-Loop Solution Using an EA with Improved Computational Complexity

This section aims to implement the closed-loop control of the dynamic system under consideration using the Controller based on EAv2. We have implemented the Controller and a program that simulates the closed-loop working (see “Closed-loop2.m”, inside the folder “EA_Etanol_loop2”). The latter is basically the same as that described in

Table 5, except for a few initializations.

The new Controller has the structure that was presented in

Table 1, except that it includes EAv2. It is also organized as a function called for each sampling period (see the script “Controller_EA2.m”). It has the following characteristics:

Input parameters: k and x(k);

Output parameter: (the first element of the best solution, i.e., );

Control horizon: , with ; ;

Number of genes: , for the first sampling periods, and for the last sampling periods (see (13)); ;

Discretization step: variable (see (15));

Other parameters such as those in

Table 3.

The EAv2 algorithm —which is included and not called by the Controller—has the same structure and characteristics as EAv0 (given in

Section 5.1)

Because OCEP maximizes the objective function, the best solution is as below:

We conducted a simulation series that executes the script “Closed-loop2.m” 30 times. In our simulation series, there was an execution that yielded

Jtypical = 20319.0. A statistic of the performance index achieved by the closed loop is presented in

Table 7. The quasi-optimal control input calculated by the Controller for typical execution is depicted in

Figure 5a, and its state evolution is depicted in

Figure 5b.

6. Discussion

We can now verify the statement made in

Section 4.2, which was that the Controller based on EAv2 has a smaller computational complexity than the one based on EAv1.

Table 8 shows the call number of the objective function recorded at the time of the closed-loop simulations. This number refers to the call of only one Controller, determining the optimal control input for the current sampling period. Its value is independent of the prediction horizon’s length. The first three columns represent the minimum, average, and maximum recorded values. The last column presents the theoretical value calculated with Equation (14) and

Table 3. The adopted offspring’s probability of undergoing mutation is

p = 0.9.

Hence the call number is the same for the two Controller versions.

For the same prediction horizon [k, H], the process model’s integration works over the same time interval and has similar input functions u(t), which are step functions. The step number of u(t) is not so important for the integration time. Hence, under these circumstances, the integration durations are similar.

Considering the two aspects mentioned above, we can conclude that the computational complexity regarding the objective function evaluation is similar for the two Controllers.

The aspect that makes the difference between the two controllers is related to encoding a pcs, namely the gene number. The Controller based on EAv1 uses a variable number of genes: . Constraint #3 can be prohibitive, especially in the first sampling period.

The Controller based on EAv2 only has

genes and

. Hence, EAv2 obviously has a smaller computational complexity for all genetic operators (selection, crossover, mutation, replacement) and initializations. The confirmation of this statement is given by

Table 9, which gives the execution time for the two controllers. These durations have been recorded at the time of the closed-loop simulations for each sampling period. The columns labelled by

k give the sampling period rank within the control horizon. The columns labelled by

tv1 and

tv2 give the execution times (in seconds) for the Controller based on EAv1 and EAv2, respectively.

The durations indicated in the two columns show that the Controller based on EAv2 has undoubtedly smaller computational complexity than EAv1.

In our case, because the durations tv1 are less than

T, even the first Controller can be used in a closed-loop control structure. Generally speaking, constraint #3 can be prohibitive for other OCPs, and the Controller based on EAv2 could be the solution. The price to pay for this Controller’s computational complexity is underlined by Remark #5. The simulations should prove that the optimal accuracy of the control sequence is not seriously affected. In our case, the values of the performance indexes shown in

Table 7 prove that the best solution accuracy is more than acceptable.

The third line of

Table 10 proves that EAv2 works very well in a closed loop with only ten genes.

The curves depicted in

Figure 3,

Figure 4 and

Figure 5 have similar aspects. Moreover,

Figure 4 and

Figure 5 show that the control action is present and the closed-loops work. To facilitate the comparison among the performance indexes for the three situations,

Table 9 unites

Table 4,

Table 5 and

Table 6. We recall that each simulation series has 30 program executions. Some explanations concerning the differences among the three lines are necessary.

One can remark that the values of J from in the first line are less than those accomplished by the two controllers. This explanation is related to the extent of the search operation. EAv0 searches for the best solution using only 2690 calls (Ncalls) of the objective function, while the controllers use 20 times more calls (2690 calls for each sampling period). In other words, the execution of EAv0 is equivalent to the Controller’s call for the first sampling period.

EAv1 finds the best performance index in the closed loop J = 21,014.9, which is greater than the corresponding value of EAv2, J = 20,982. This time, the explanation is related to the bigger number of genes that involve a finer representation of the pcs. From this point of view, EAv1 is superior to EAv2. More accurate representation leads to better optimization.

At the same time, the bigger number of genes involves a greater explorative character and a greater representation power for u(t). That is why EAv1 has a standard deviation greater than the corresponding value of EAv2. In comparison with EAv0, EAv1 has 20 times more chances to strengthen its explorative character. This fact explains the smaller value of the standard deviation in the open loop.

Our closed-loop simulations had two entities: the Controller based on EA and the controlled process. The Controller already uses a process model to implement the RHC structure (to make predictions). In this work, the real process is also simulated using the process model. That is a simplification because the real process is almost always affected by unmodeled dynamics and noises. One may ask: at what extent could the real process degrade the closed-loop functioning? The answer can always be found through simulation using a real process model. For example, a real process model could be obtained from the process model to which a simulated “noise” is added. We have simulated closed-loop functioning using this technique, but quantitative results are not included in this paper to avoid a presentation that is too complex.

We can notice a degradation of quasi-optimal behaviour according to the amplitude of the noise. The increase of the noise implies the decrease of the performance index. The closed loop does or does not work well depending on whether the performance index value is acceptable.

Generally speaking, it is relatively easy to deal with the constraints regarding the control input . This can be done when generating new pcs. Finally, each component belongs to a certain domain, , and the construction of a new pcs could meet this constraint. What is more difficult is the handling of the constraints regarding the state variables . A valid pcs will yield the resulting state trajectory defined by Equation (5). For a specific OCP, state path constraints involving a trajectory that is a pss could be imposed. The most general constraint could have a form that involves an admissible domain for each k: . In our case study, Constraint (18) limits the produced ethanol’s volume in the final state to the reactor’s volume.

The pss is calculated through the integration procedure. A good strategy is to verify if the component of the pss meet the path constraints just after the calculation of the pss within the objective function. If at least one constraint is not fulfilled, the cause associated with this effect, namely the pcs, must be moved away. A correct approach is to assign an infinite value (for minimization) to its performance index. In this way, the MA will replace the considered pcs, and the stochastic character of the population evolution will not be corrupted.

At successive time moments, the

pcs values are randomly set. Thus, the difference between two successive values could be quite big in one sense or another. This fact does not fit with the certain and desired smoothness of the control profile. Actually, this is the price to pay for the stochastic nature of the EA. The latter can integrate the so-called

step control technique, which implements the following differential constraint:

The value is the maximal accepted slope. If the step control technique is implemented, it will involve a certain smoothness at the level of the state trajectory. Starting from this remark and assimilating gradient-based optimization with evolutionary optimization, in principle, we could facilitate the state evolution toward the optimal pcs. Within a future work, this idea could help to make the control action of the proposed receding horizon controller more effective.

Besides the EA, other metaheuristic algorithms have been used by the authors to implement receding horizon controllers. Both population-based metaheuristic methods (firefly algorithm, harmony search, Gaussian adaptation, memetic algorithm, etc.) and Swarm algorithms (Ant Colony Optimization, the Artificial Bee Colony Algorithm, the Bees Algorithm, Cuckoo search, Lévy flights for global optimization, particle swarm optimization, etc.) have been used to solve OCPs. The communication skills among agents are not making swarm algorithms always more effective than the population-based algorithms. Our first perception is that the effectiveness of the Controller mainly depends on the dynamic process nature. A systematic comparison can be made within a future investigation, and more implementation aspects could be revealed.