Human Gender Classification Using Transfer Learning via Pareto Frontier CNN Networks

Abstract

1. Introduction

2. Background and Related Work

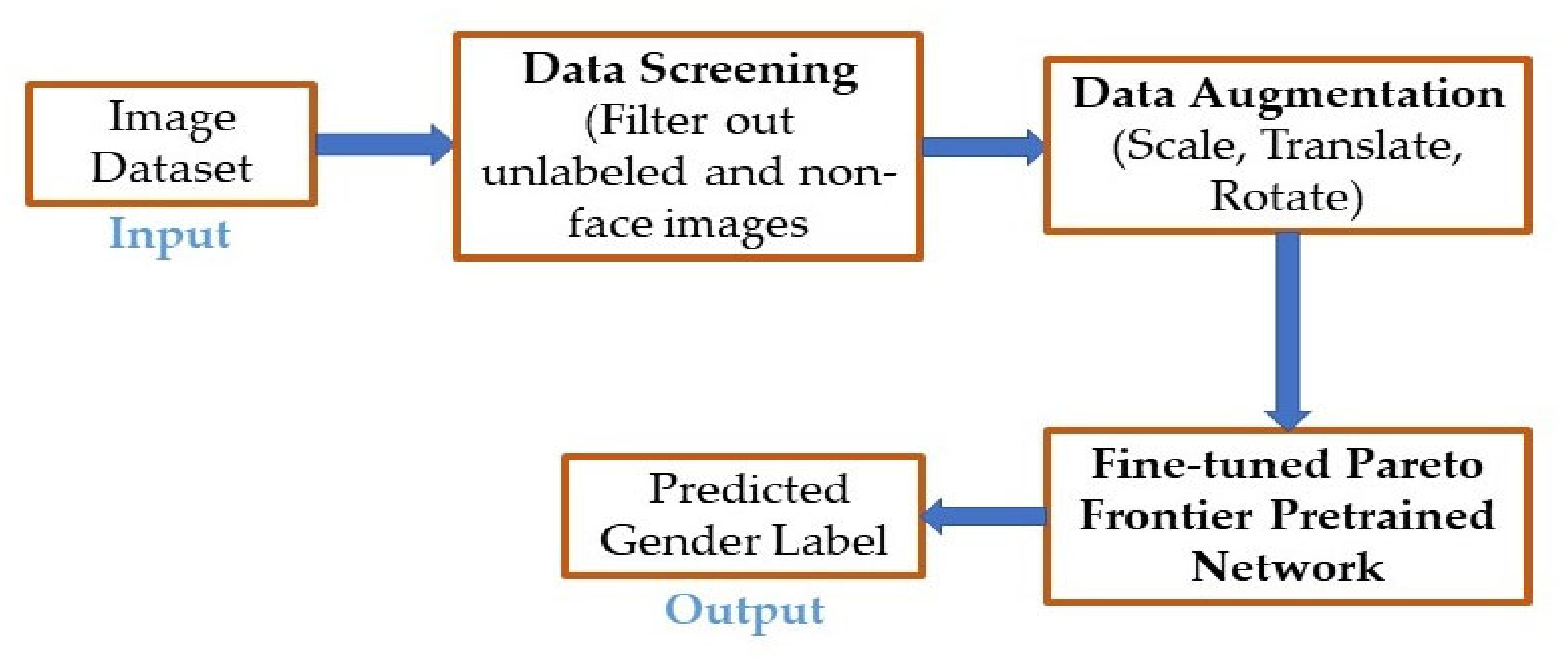

3. Methods

3.1. Convolutional Neural Networks

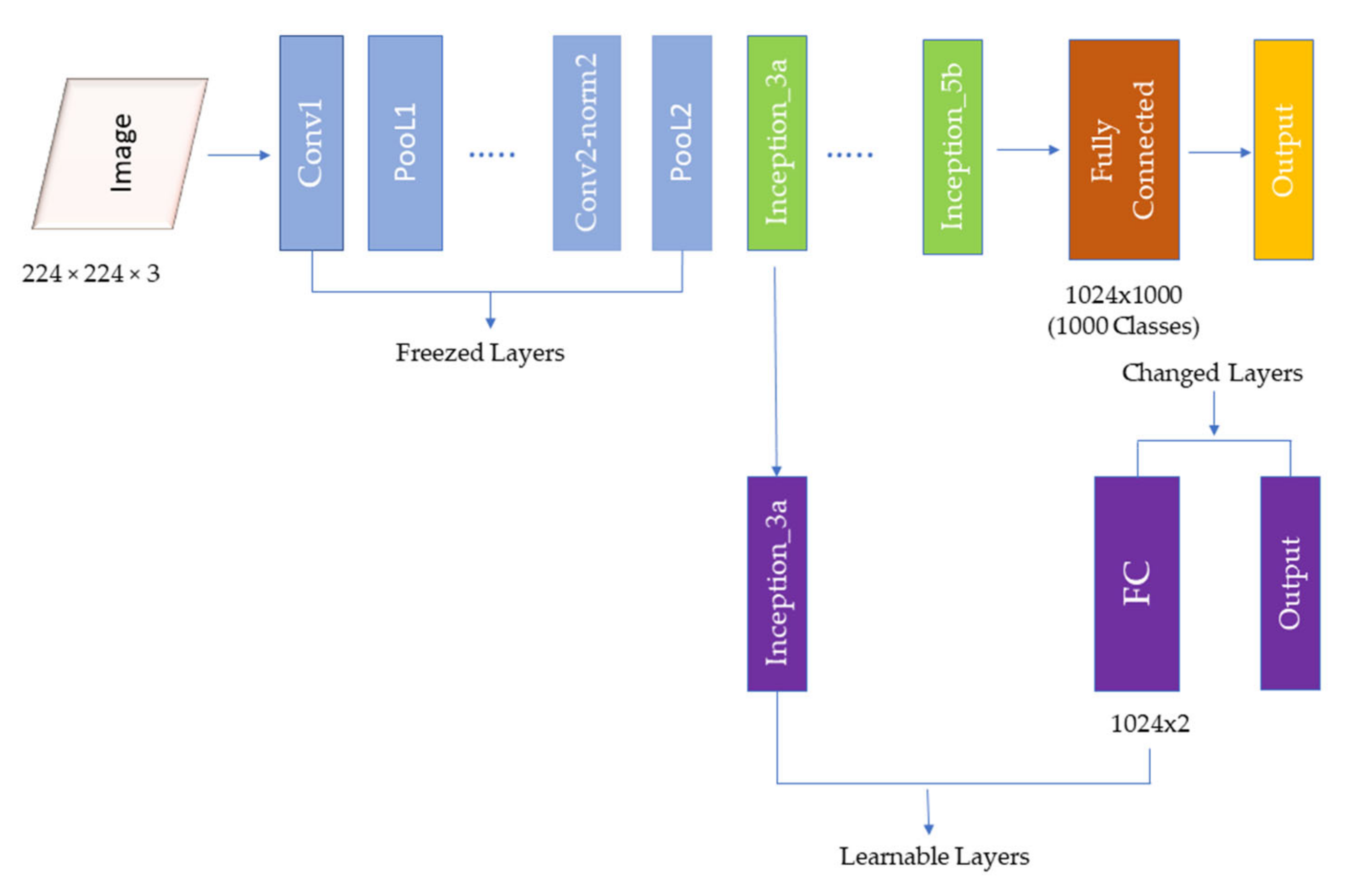

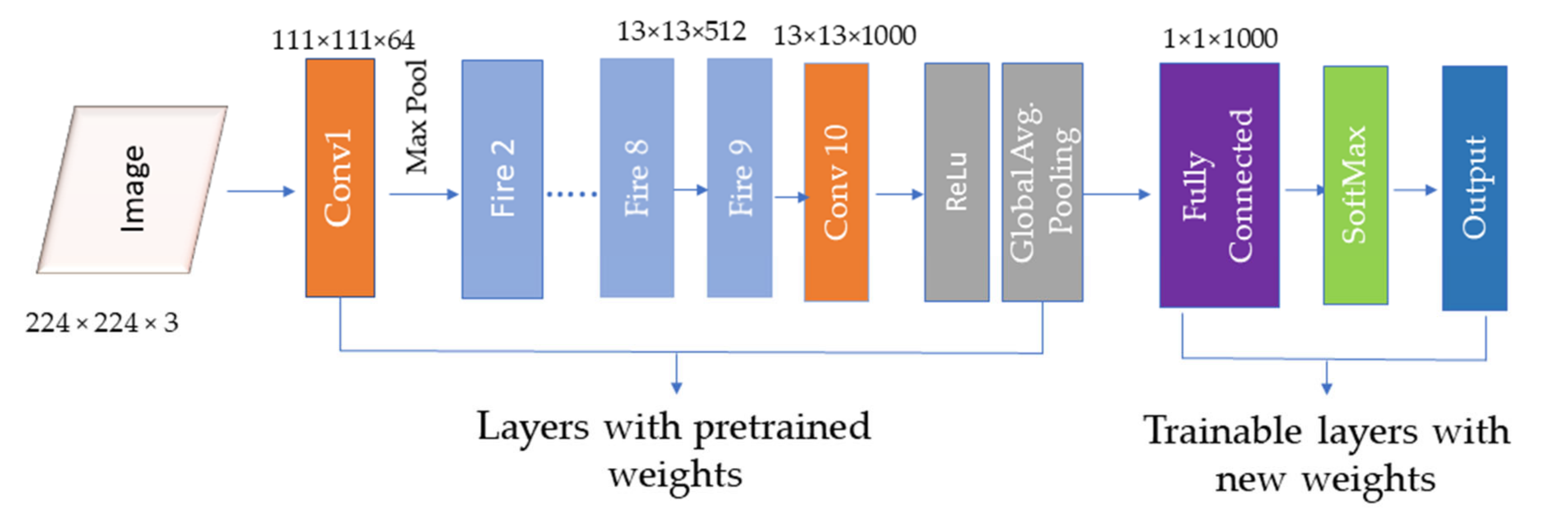

3.2. Transfer Learning

3.3. Pareto Frontier Networks

3.4. Pre-Trained Deep Learning Networks

4. Experiments and Results

4.1. Training Dataset

4.2. Experiments

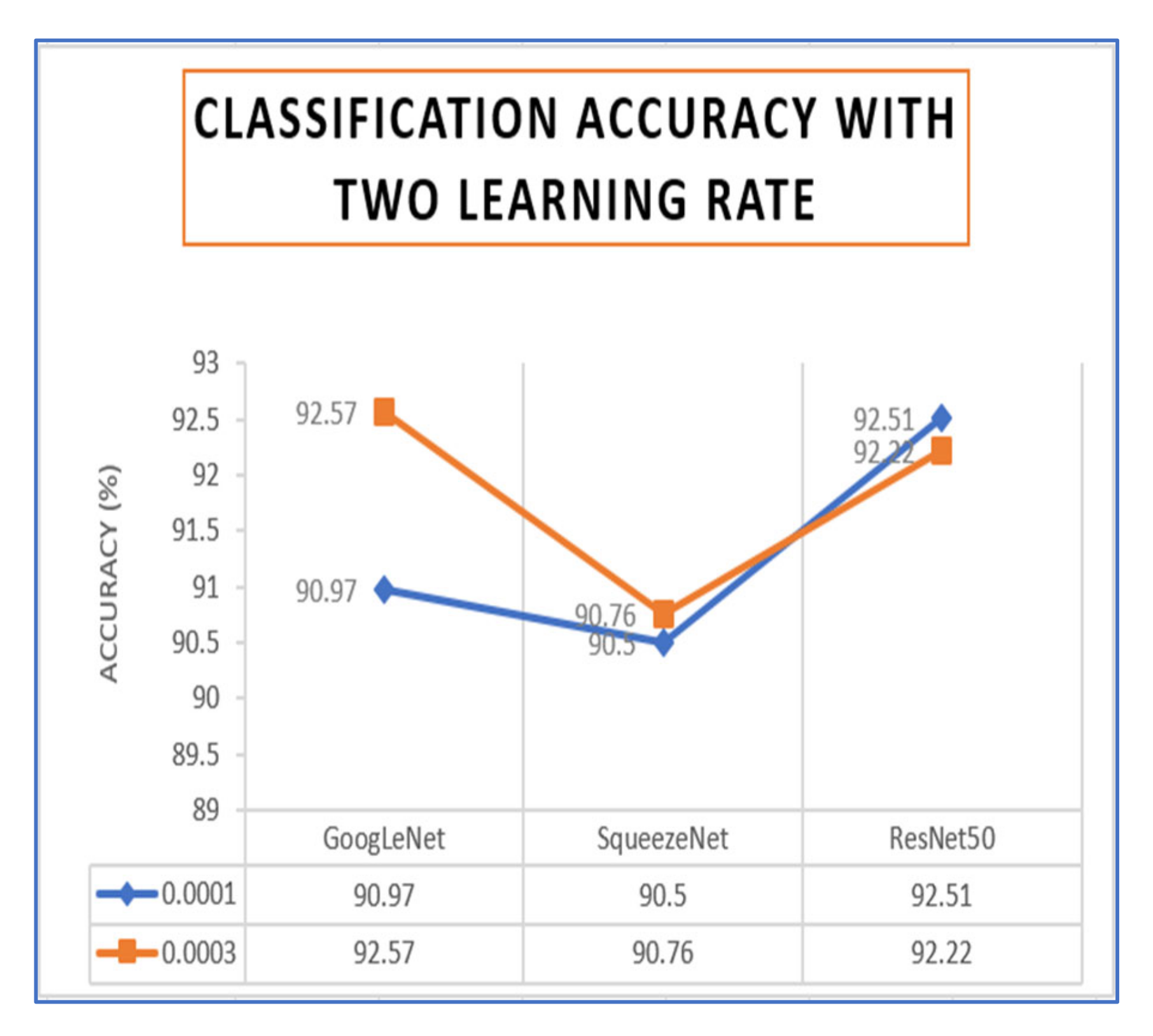

4.3. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Network | Accuracy (%), LR = 0.0001 | Accuracy (%), LR = 0.0003 |
|---|---|---|
| GoogLeNet | 90.97 | 92.57 |
| SqueezeNet | 90.50 | 90.76 |
| ResNet50 | 92.51 | 92.22 |

| Network | Accuracy (%) | |

|---|---|---|

| LR = 0.0001 | LR= 0.0003 | |

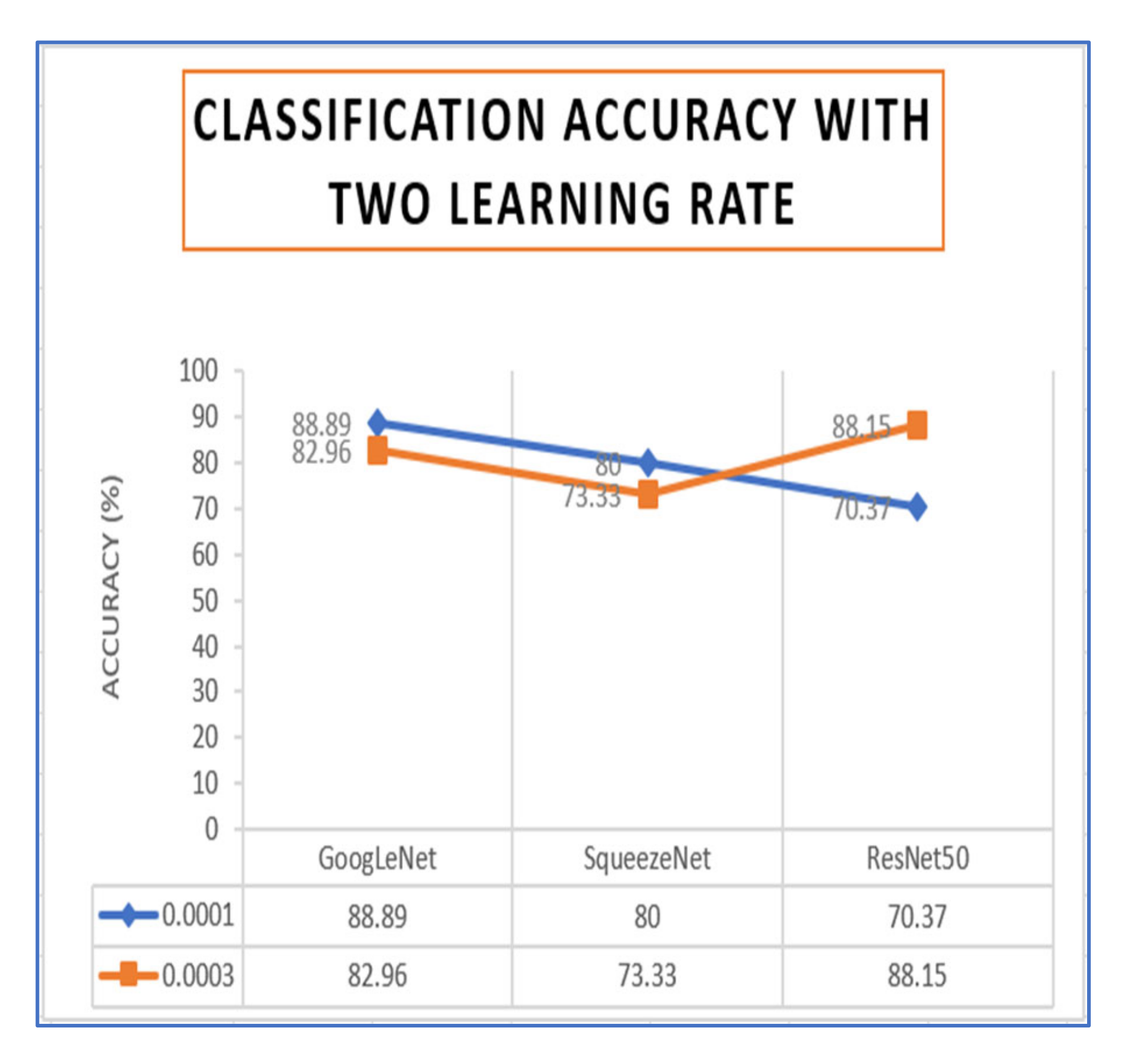

| GoogLeNet | 88.89 | 82.96 |

| SqueezeNet | 80 | 73.33 |

| ResNet50 | 70.37 | 88.15 |

| Network | Runtime (min:s), LR = 0.0001 | Runtime (min:s), LR = 0.0003 |
|---|---|---|
| GoogLeNet | 39:15 | 40:12 |
| SqueezeNet | 25:48 | 46:58 |
| ResNet50 | 89:11 | 158:47 |
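The accuracy and runtime figures above can be read together as a two-objective trade-off. The following is a minimal sketch of a Pareto-dominance check over those numbers, not the authors' selection procedure. It rests on two assumptions: the runtimes are in minutes:seconds format, and the first accuracy table is the one that pairs with the runtime table (the source does not state which accuracy table corresponds). The names `Run`, `to_seconds`, `dominates`, and `pareto_front` are hypothetical and introduced only for illustration.

```python
from typing import List, NamedTuple


class Run(NamedTuple):
    network: str
    lr: float
    accuracy: float  # percent, copied from the first accuracy table (assumed pairing)
    runtime: str     # copied from the runtime table, assumed to be "MM:SS"


RUNS = [
    Run("GoogLeNet", 0.0001, 90.97, "39:15"),
    Run("GoogLeNet", 0.0003, 92.57, "40:12"),
    Run("SqueezeNet", 0.0001, 90.50, "25:48"),
    Run("SqueezeNet", 0.0003, 90.76, "46:58"),
    Run("ResNet50", 0.0001, 92.51, "89:11"),
    Run("ResNet50", 0.0003, 92.22, "158:47"),
]


def to_seconds(mmss: str) -> int:
    """Convert an 'MM:SS' string to seconds (minutes may exceed 59)."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def dominates(a: Run, b: Run) -> bool:
    """True if a is no worse than b on both criteria and strictly better on one.

    Criteria: higher accuracy is better, lower runtime is better.
    """
    ta, tb = to_seconds(a.runtime), to_seconds(b.runtime)
    no_worse = a.accuracy >= b.accuracy and ta <= tb
    strictly_better = a.accuracy > b.accuracy or ta < tb
    return no_worse and strictly_better


def pareto_front(runs: List[Run]) -> List[Run]:
    """Keep the runs that no other run dominates, in their original order."""
    return [r for r in runs if not any(dominates(o, r) for o in runs)]


front = pareto_front(RUNS)
for r in front:
    print(f"{r.network} (LR = {r.lr}): {r.accuracy}% in {r.runtime}")
```

Under these assumptions, a configuration stays on the front only if no other configuration is at least as accurate and at least as fast. Pairing the runtimes with the second accuracy table instead would only require changing the `accuracy` values in `RUNS`.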

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Islam, M.M.; Tasnim, N.; Baek, J.-H. Human Gender Classification Using Transfer Learning via Pareto Frontier CNN Networks. Inventions 2020, 5, 16. https://doi.org/10.3390/inventions5020016
